Published: Nov 27, 2025 by Isaac Johnson

Let’s dig into some more MCP Servers like Azure DevOps, Playwright, and Kubectl. I would like to see if I can list projects in an AzDO Organization and perhaps create some Work Items.

With Playwright, typically used for tests involving browser automation, I want to explore if we can use the Playwright MCP Server to screenshot a website and maybe more.

Lastly, can I have the AI work with my Kubernetes cluster using a Kubernetes MCP Server to answer questions about pods, services, and endpoints? How useful is it, and what are the risks?

Let’s start with Microsoft’s Azure DevOps MCP server…

Azure DevOps

The GitHub MCP Registry lists Azure DevOps:

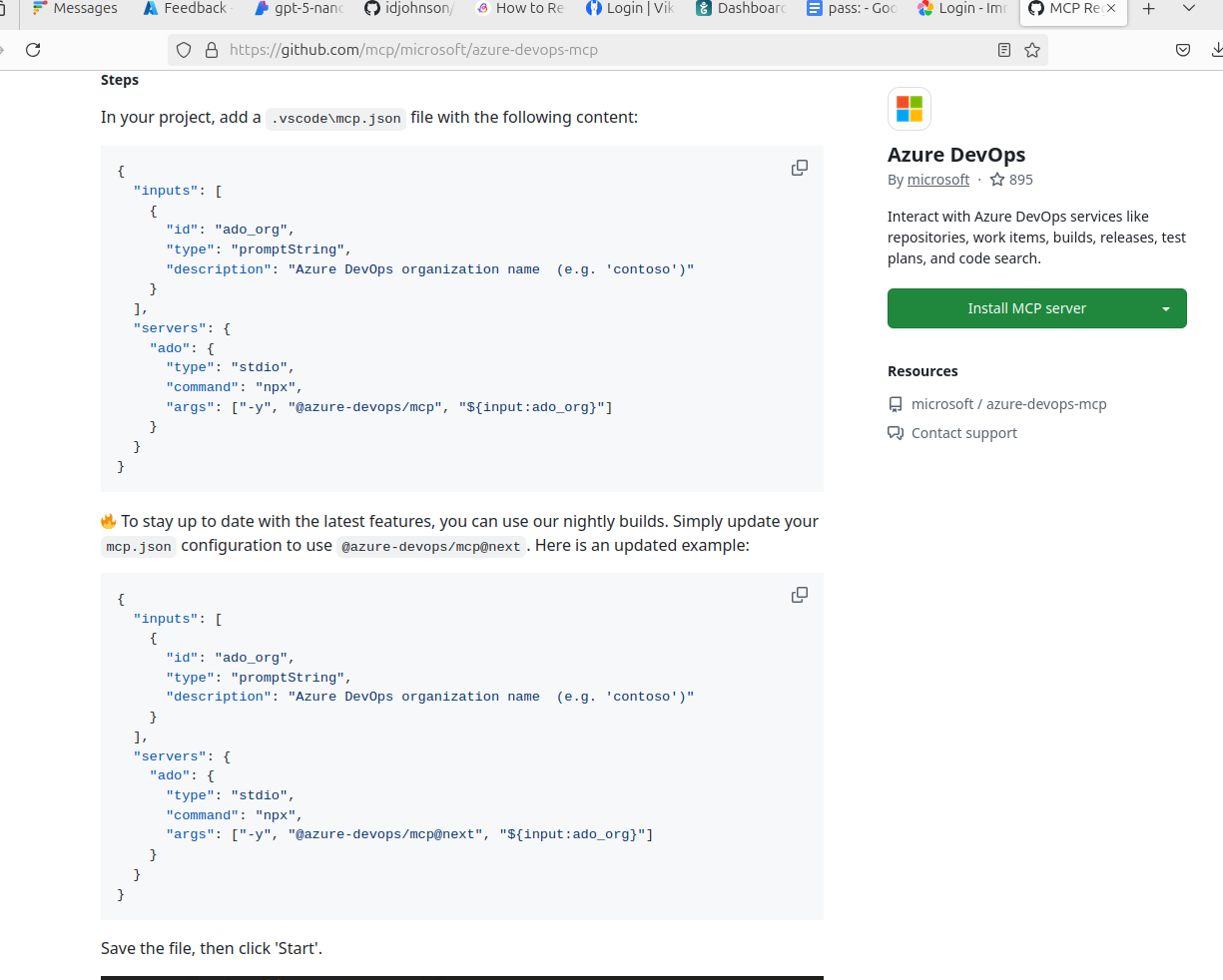

I’m less interested in setting this up automatically in GitHub Copilot; instead, I want to see the configuration block:

```json
{
  "inputs": [
    {
      "id": "ado_org",
      "type": "promptString",
      "description": "Azure DevOps organization name (e.g. 'contoso')"
    }
  ],
  "servers": {
    "ado": {
      "type": "stdio",
      "command": "npx",
      "args": ["-y", "@azure-devops/mcp@next", "${input:ado_org}"]
    }
  }
}
```

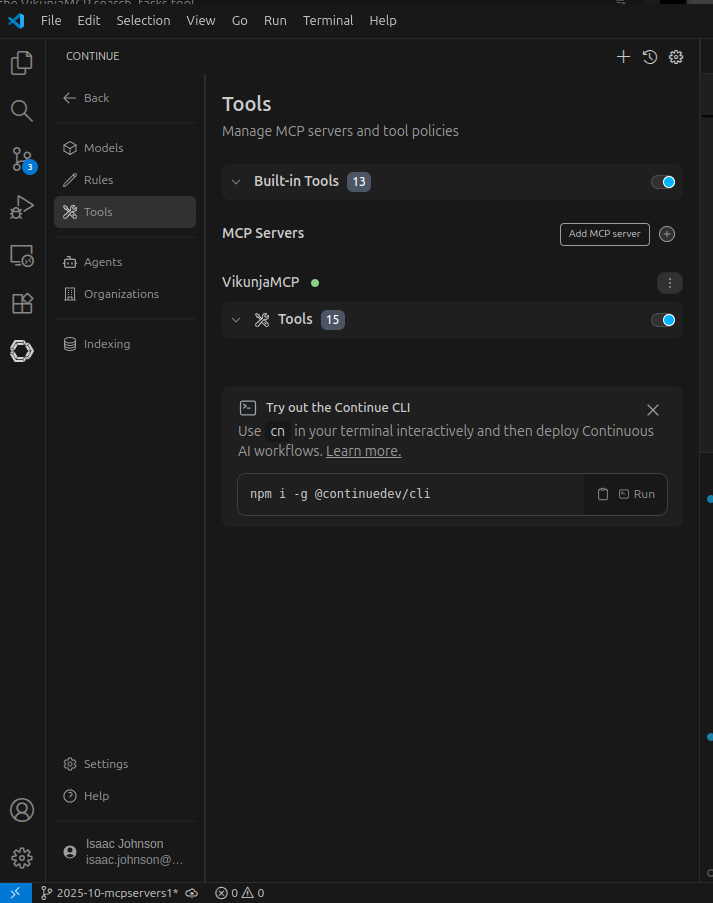

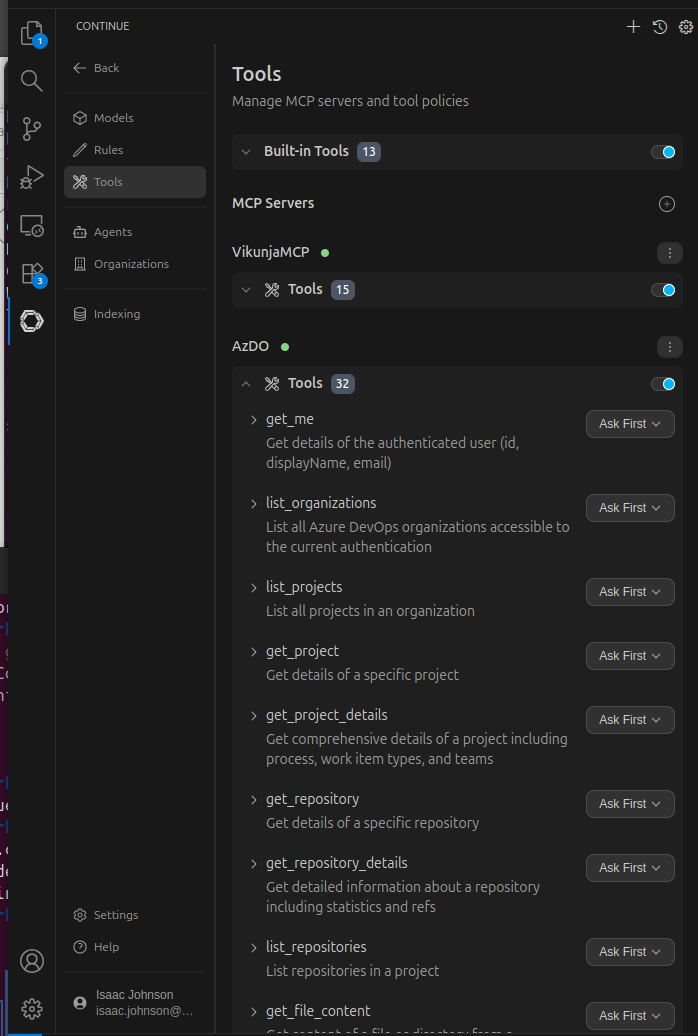

I can now go to the Continue.dev settings and click the “+” to add a server.

I can now set that up for Continue. Since I only work in one organization, I’ll hardcode the organization to “princessking”.
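Hardcoding the organization just means replacing the `${input:ado_org}` placeholder with a literal value before handing the `command`/`args` pair to Continue. A minimal Python sketch of that substitution; `hardcode_inputs` is a hypothetical helper, and the config dict is copied from the block above.

```python
import json

# Server block as published for VS Code (copied from the configuration above).
VSCODE_CONFIG = {
    "inputs": [
        {
            "id": "ado_org",
            "type": "promptString",
            "description": "Azure DevOps organization name (e.g. 'contoso')",
        }
    ],
    "servers": {
        "ado": {
            "type": "stdio",
            "command": "npx",
            "args": ["-y", "@azure-devops/mcp@next", "${input:ado_org}"],
        }
    },
}


def hardcode_inputs(config: dict, values: dict) -> dict:
    """Return copies of the servers with each ${input:<id>} replaced by a literal."""
    servers = {}
    for name, server in config["servers"].items():
        args = []
        for arg in server.get("args", []):
            for input_id, value in values.items():
                arg = arg.replace("${input:" + input_id + "}", value)
            args.append(arg)
        # Shallow copy so the original config is left untouched.
        servers[name] = {**server, "args": args}
    return servers


if __name__ == "__main__":
    resolved = hardcode_inputs(VSCODE_CONFIG, {"ado_org": "princessking"})
    print(json.dumps(resolved, indent=2))
```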

I’ll now ask it to show me work items in a project

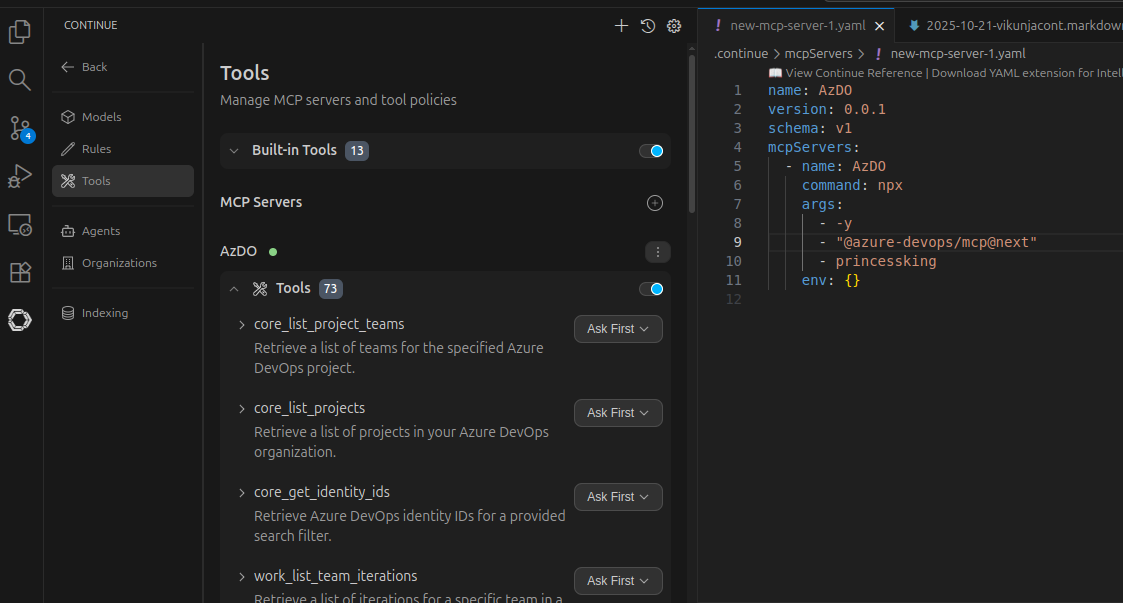

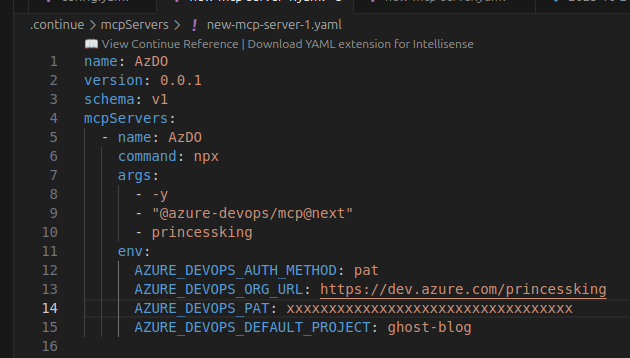

Let me add the required environment variables to the MCP definition and see if that solves authentication.

I can see the tools now, so perhaps it is working.

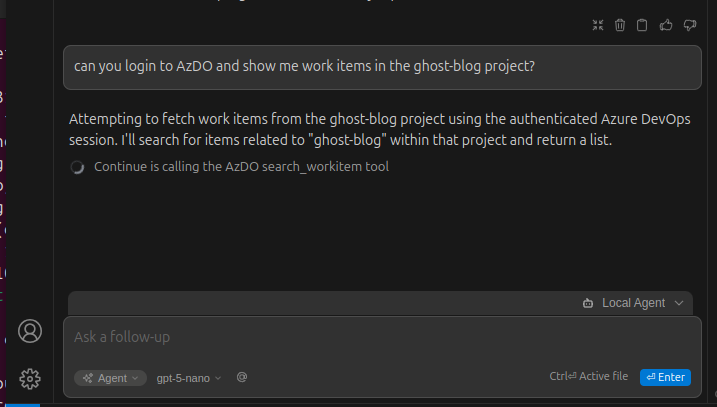

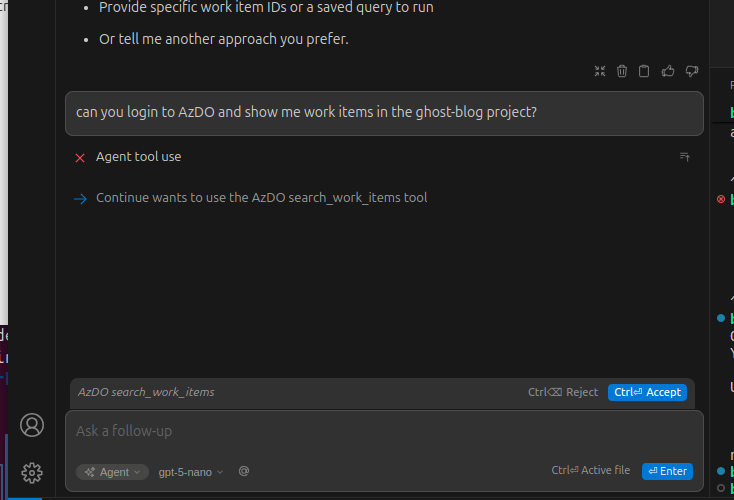

I asked it to find some work items, but it errored with:

```
azdo_search_workitem failed because the arguments were invalid, with the following message: Tool azdo_search_workitem not found
```

Let me try something I know exists: listing projects. I asked, “can you login to AzDO and list the projects?”

But that failed too:

```
azdo_core_list_projects failed because the arguments were invalid, with the following message: Tool azdo_core_list_projects not found

Please try something else or request further instructions.
```

It seems to require the prompt to be phrased in a very specific way. I did eventually get it to fetch work items, though oddly that happened when I asked for projects, and I have no idea why.
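One plausible reading of the “Tool … not found” errors is that the name the model called (e.g. `azdo_core_list_projects`) did not match any name the server advertised. MCP clients discover tools by sending a JSON-RPC `tools/list` request, so a quick sanity check is to compare the requested name against that list. A sketch under that assumption; the tool names in `SAMPLE_RESPONSE` are hypothetical placeholders, not the real list from any Azure DevOps server, and `check_tool` is a hypothetical helper.

```python
import difflib
import json

# JSON-RPC 2.0 request an MCP client sends to discover a server's tools.
TOOLS_LIST_REQUEST = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# HYPOTHETICAL tools/list response; the names are placeholders only.
SAMPLE_RESPONSE = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {"name": "core_list_projects", "inputSchema": {"type": "object"}},
            {"name": "search_workitem", "inputSchema": {"type": "object"}},
        ]
    },
}


def check_tool(requested, response):
    """Return (found, close_matches) for a tool name the model tried to call."""
    names = [tool["name"] for tool in response["result"]["tools"]]
    if requested in names:
        return True, []
    # Suggest advertised names that look similar, e.g. an extra prefix.
    return False, difflib.get_close_matches(requested, names, n=3, cutoff=0.6)


if __name__ == "__main__":
    # Over the stdio transport, each JSON-RPC message is one line of JSON.
    print(json.dumps(TOOLS_LIST_REQUEST))
    print(check_tool("azdo_core_list_projects", SAMPLE_RESPONSE))
```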

Now that I know it works for Copilot, let’s see how the AI CLI handles it…

Gemini CLI

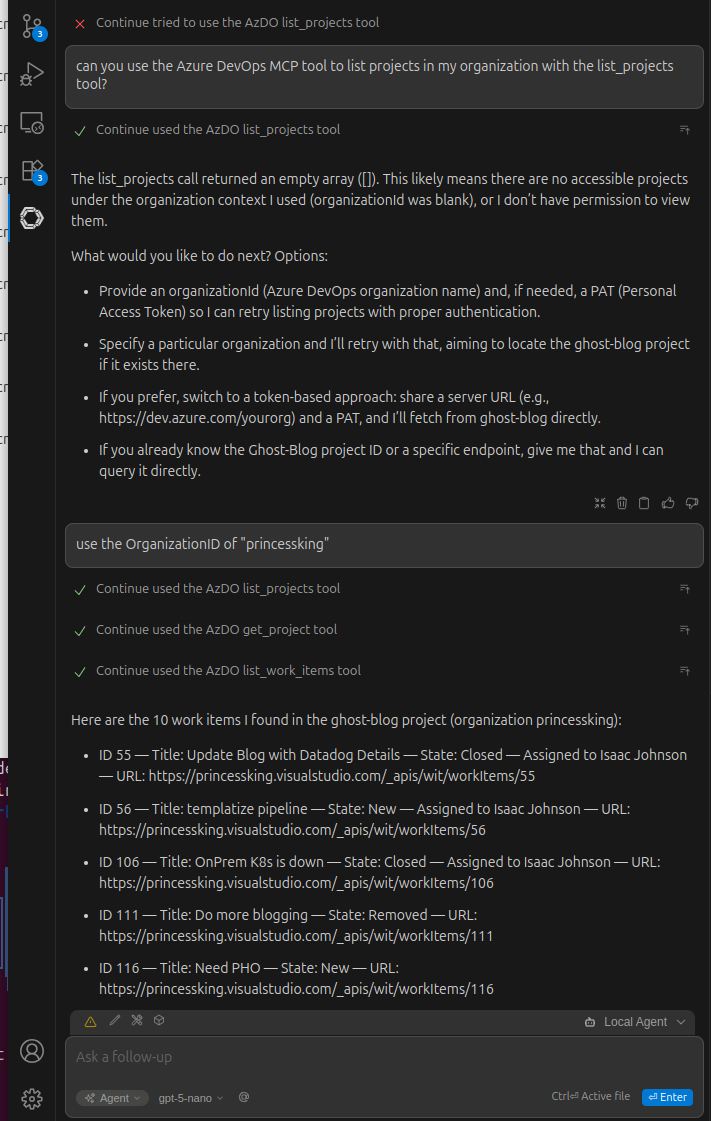

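We can use a one-liner to add an Azure DevOps MCP server to the Gemini CLI. Note that this one is the @tiberriver256/mcp-server-azure-devops package rather than Microsoft’s @azure-devops/mcp: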

```
builder@LuiGi:~/Workspaces/jekyll-blog$ gemini mcp add azdo npx -y @tiberriver256/mcp-server-azure-devops
MCP server "azdo" added to project settings. (stdio)
```

I realized I wanted to add the environment variables, so I edited the file after the fact:

```
builder@LuiGi:~/Workspaces/jekyll-blog$ vi ~/.gemini/
installation_id  tmp/
builder@LuiGi:~/Workspaces/jekyll-blog$ vi .gemini/settings.json
builder@LuiGi:~/Workspaces/jekyll-blog$ cat .gemini/settings.json
{
  "mcpServers": {
    "azdo": {
      "command": "npx",
      "args": [
        "-y",
        "@tiberriver256/mcp-server-azure-devops"
      ],
      "env": {
        "AZURE_DEVOPS_AUTH_METHOD": "pat",
        "AZURE_DEVOPS_ORG_URL": "https://dev.azure.com/princessking",
        "AZURE_DEVOPS_PAT": "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx",
        "AZURE_DEVOPS_DEFAULT_PROJECT": "ghost-blog"
      }
    }
  }
}
```
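Pasting a PAT into a project-level `.gemini/settings.json` by hand makes it easy to commit a secret by accident if that directory lives in a repo. A minimal sketch, assuming the PAT is exported as `AZURE_DEVOPS_PAT` in the shell, that generates the same `azdo` entry and merges it into any existing settings. `build_azdo_entry` and `merge_into_settings` are hypothetical helpers, and the env keys are simply the four used above. The PAT still ends up in the file, so the file should still stay out of version control.

```python
import json
import os
from collections.abc import Mapping

PACKAGE = "@tiberriver256/mcp-server-azure-devops"


def build_azdo_entry(env: Mapping[str, str], org: str, project: str) -> dict:
    """Build the "azdo" mcpServers entry shown above, reading the PAT from env."""
    pat = env.get("AZURE_DEVOPS_PAT")
    if not pat:
        raise KeyError("AZURE_DEVOPS_PAT is not set; export it first")
    return {
        "command": "npx",
        "args": ["-y", PACKAGE],
        "env": {
            "AZURE_DEVOPS_AUTH_METHOD": "pat",
            "AZURE_DEVOPS_ORG_URL": f"https://dev.azure.com/{org}",
            "AZURE_DEVOPS_PAT": pat,
            "AZURE_DEVOPS_DEFAULT_PROJECT": project,
        },
    }


def merge_into_settings(settings: dict, name: str, entry: dict) -> dict:
    """Return a copy of settings with entry added under mcpServers[name]."""
    merged = dict(settings)
    merged["mcpServers"] = {**settings.get("mcpServers", {}), name: entry}
    return merged


if __name__ == "__main__":
    path = os.path.join(".gemini", "settings.json")
    current = {}
    if os.path.exists(path):
        with open(path) as f:
            current = json.load(f)
    try:
        entry = build_azdo_entry(os.environ, "princessking", "ghost-blog")
    except KeyError as err:
        print(err.args[0])
    else:
        print(json.dumps(merge_into_settings(current, "azdo", entry), indent=2))
```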

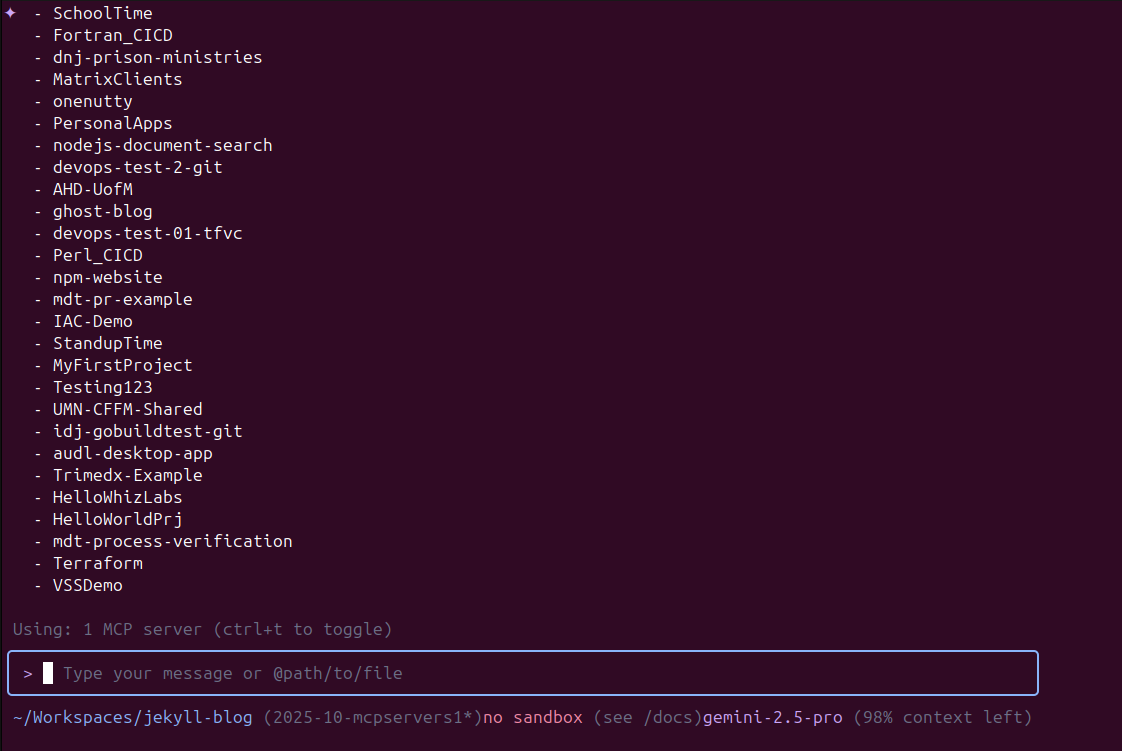

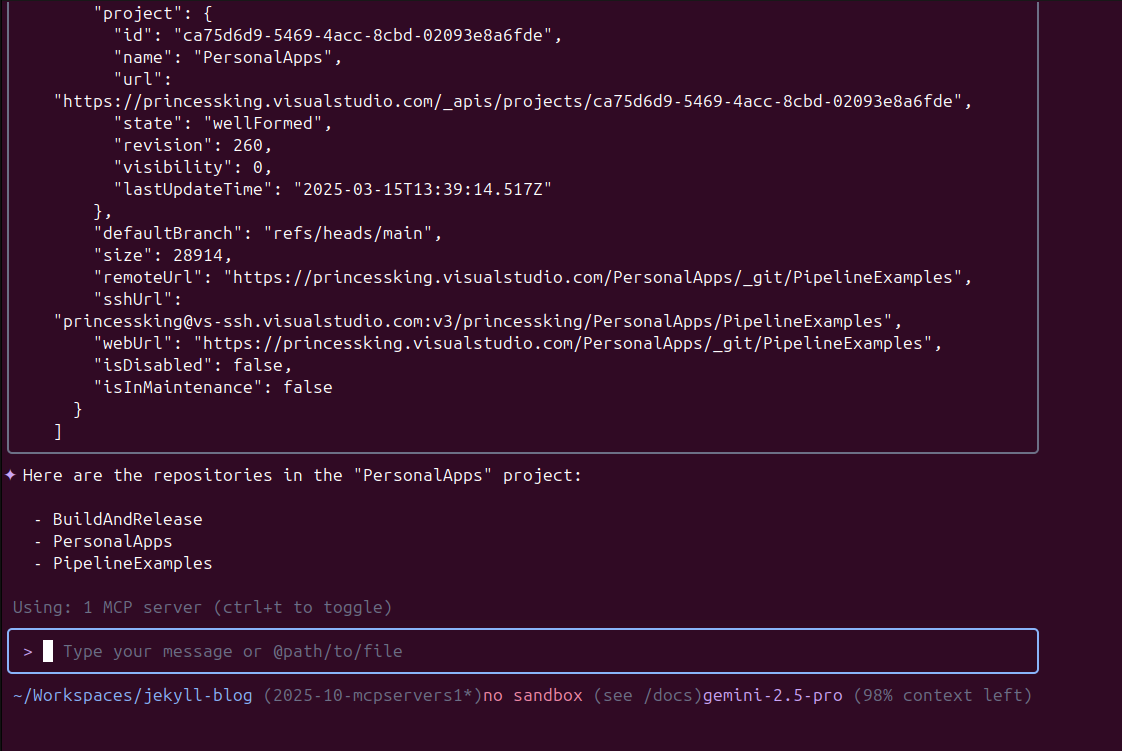

We can see it is now picked up in Gemini, and it worked great. Listing projects was easy.
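For comparison, the same “list projects” question can be asked of the Azure DevOps REST API directly. Azure DevOps accepts a PAT as HTTP Basic auth with an empty user name, and the projects endpoint returns JSON whose `value` array holds the projects. A standard-library sketch that builds (but does not send) the request, assuming REST API version 7.1; the helper names are hypothetical.

```python
import base64
import urllib.request

API_VERSION = "7.1"  # assumption: adjust to the REST API version you target


def pat_auth_header(pat: str) -> str:
    """Azure DevOps accepts a PAT as HTTP Basic auth with an empty user name."""
    token = base64.b64encode(f":{pat}".encode()).decode()
    return f"Basic {token}"


def list_projects_request(org: str, pat: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for the organization's projects."""
    url = f"https://dev.azure.com/{org}/_apis/projects?api-version={API_VERSION}"
    return urllib.request.Request(url, headers={"Authorization": pat_auth_header(pat)})
```

To actually send it, something like `json.load(urllib.request.urlopen(req))["value"]` yields the list of project objects, each with a `name`.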

Let’s test creating an issue:
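For reference, creating a work item through the REST API is a POST of a JSON Patch document, with the work item type in the URL prefixed by a literal `$`. A minimal sketch, assuming API version 7.1 and a process template that has an `Issue` type (Basic and Agile do; Scrum does not); `create_issue_request` is a hypothetical helper, and the request is built but not sent.

```python
import base64
import json
import urllib.parse
import urllib.request


def create_issue_request(org, project, pat, title, work_item_type="Issue"):
    """Build (but do not send) a request that creates one work item."""
    # JSON Patch document: one "add" operation per field being set.
    patch = [{"op": "add", "path": "/fields/System.Title", "value": title}]
    # The work item type goes in the URL, prefixed with a literal "$".
    url = (
        f"https://dev.azure.com/{org}/{urllib.parse.quote(project)}"
        f"/_apis/wit/workitems/${urllib.parse.quote(work_item_type)}"
        "?api-version=7.1"
    )
    # PAT as HTTP Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode()).decode()
    return urllib.request.Request(
        url,
        data=json.dumps(patch).encode(),
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json-patch+json",
        },
        method="POST",
    )
```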

Playwright

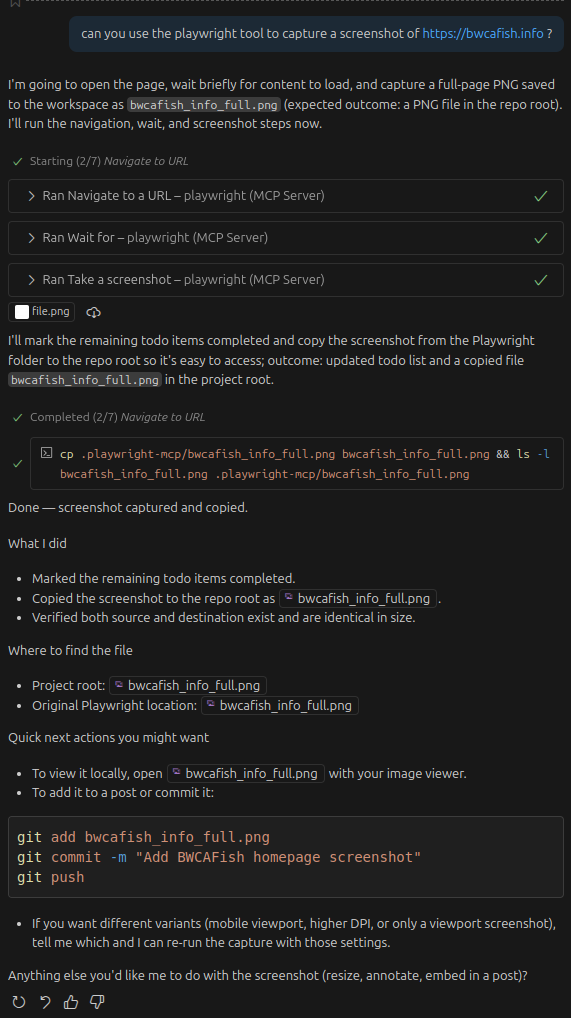

I was watching a YouTube video the other day about using the Playwright MCP Server to validate UI changes. In the video, they were heavily using Claude Code.

Let’s try adding it using the Gemini CLI:

```
$ gemini mcp add playwright npx @playwright/mcp@latest
MCP server "playwright" added to project settings. (stdio)
```

I can now see a tool listed

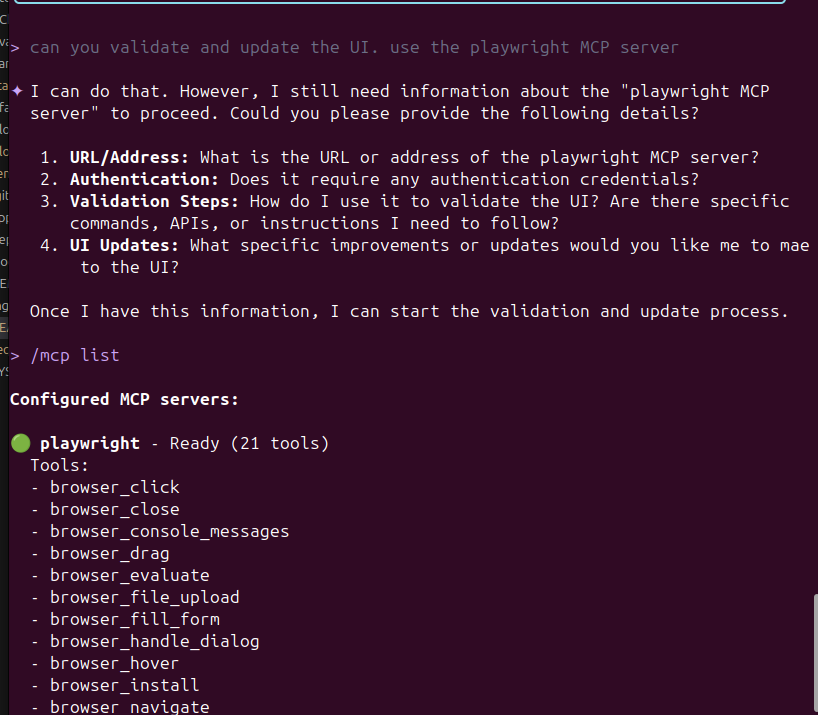

If I use `/mcp list`, I can see the tools it provides.

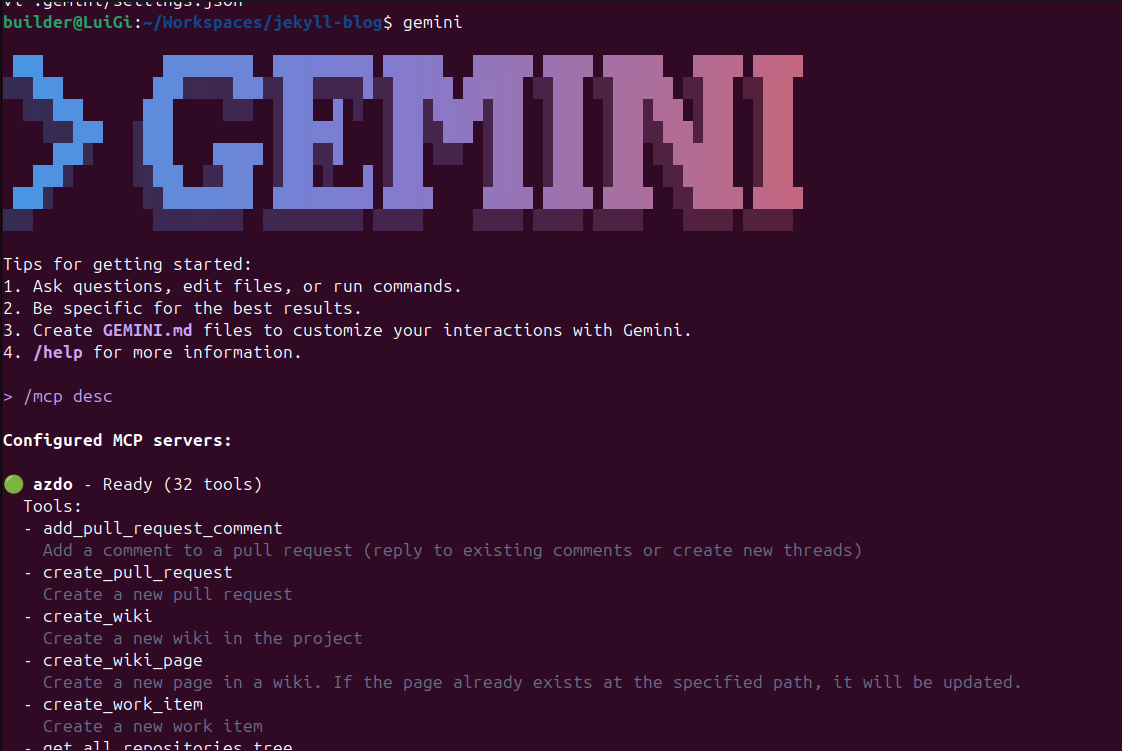

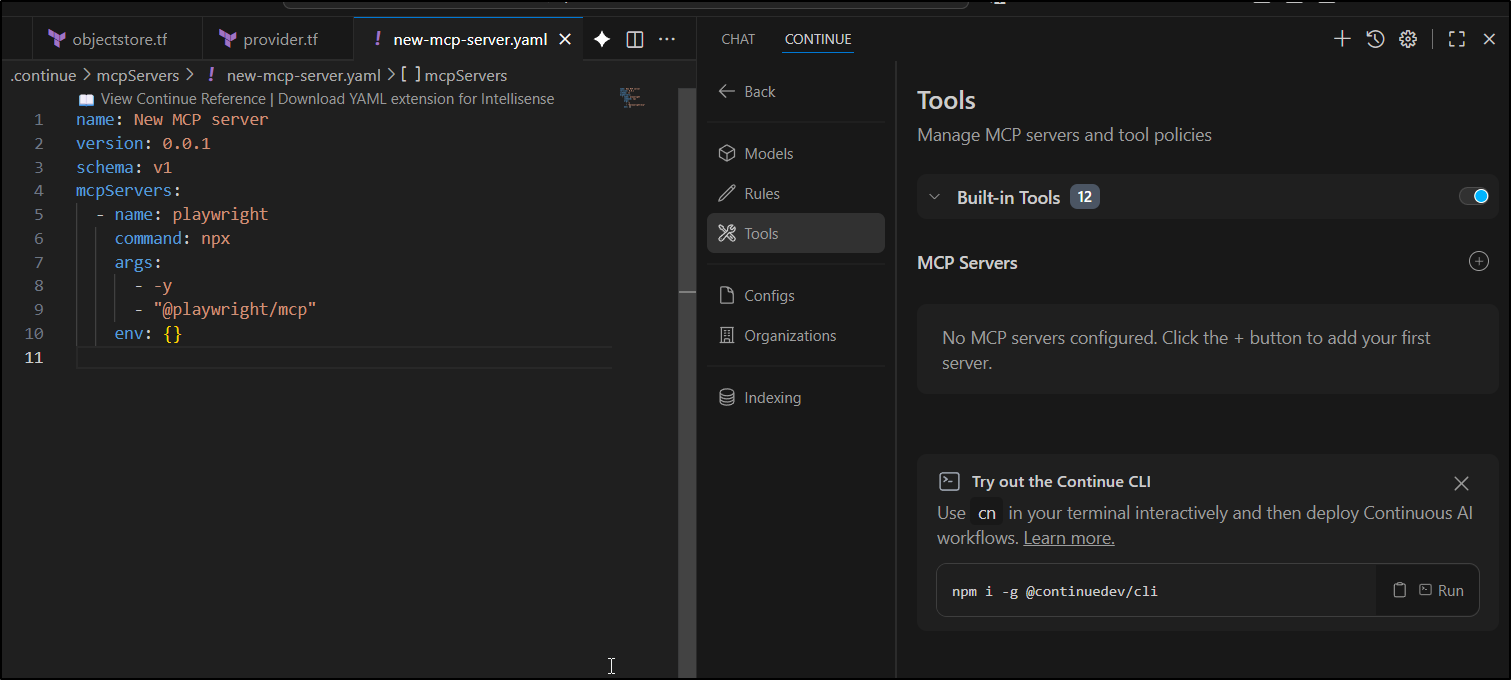

Continue.dev in VS Code

I tried a few different configurations in both my Windows root and WSL root, but none seemed to take; I never saw any tools.

However, I could add a block locally to `~/.continue/config.yaml`:

```
$ cat ~/.continue/config.yaml
name: Azure GPT 5 Nano
version: 1.0.0
schema: v1
models:
  - name: gpt-5-nano
    provider: azure
    model: gpt-5-nano
    apiKey: xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
    apiBase: https://isaac-mgp1gfv5-eastus2.cognitiveservices.azure.com/
    defaultCompletionOptions:
      contextLength: 16384
    capabilities:
      - tool_use
      - next_edit
    env:
      apiVersion: 2024-12-01-preview
      deployment: gpt-5-nano
      apiType: azure-openai
    roles: [chat, edit, apply, summarize]
mcpServers:
  - name: VikunjaMCP
    command: docker
    args:
      - run
      - -i
      - --rm
      - -e
      - VIKUNJA_URL
      - -e
      - VIKUNJA_USERNAME
      - -e
      - VIKUNJA_PASSWORD
      - harbor.freshbrewed.science/library/vikunjamcp:0.18
    env:
      VIKUNJA_URL: "https://vikunja.steeped.icu"
      VIKUNJA_USERNAME: xxxxxxxxxxxxxx
      VIKUNJA_PASSWORD: "xxxxxxxxxxxxxxxx"
  - name: Playwright
    command: npx
    args:
      - "@playwright/mcp@latest"
```

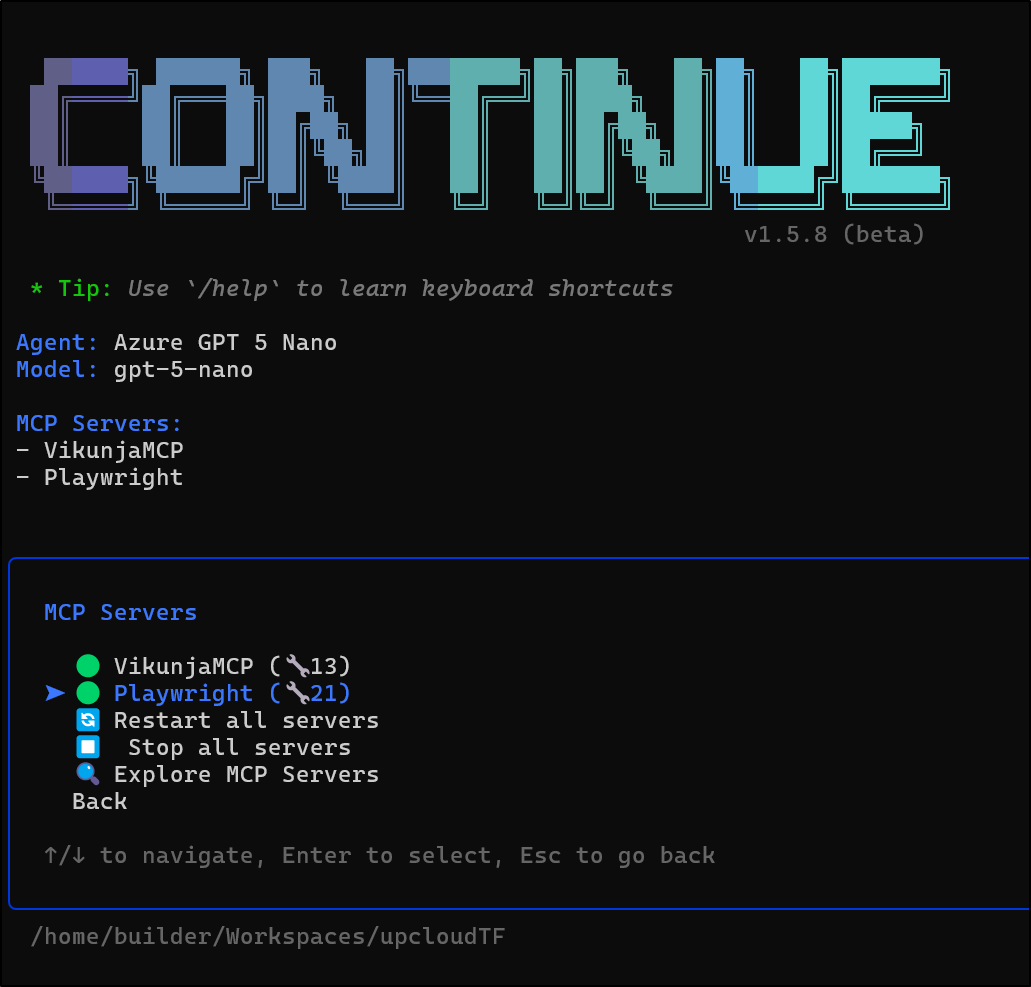

And now I can see it show up in the `cn` CLI.

I tried many ways locally to get this to work, but Gemini repeatedly had trouble figuring out how to call it.

I tried the Nano Banana Extension, which worked just fine.

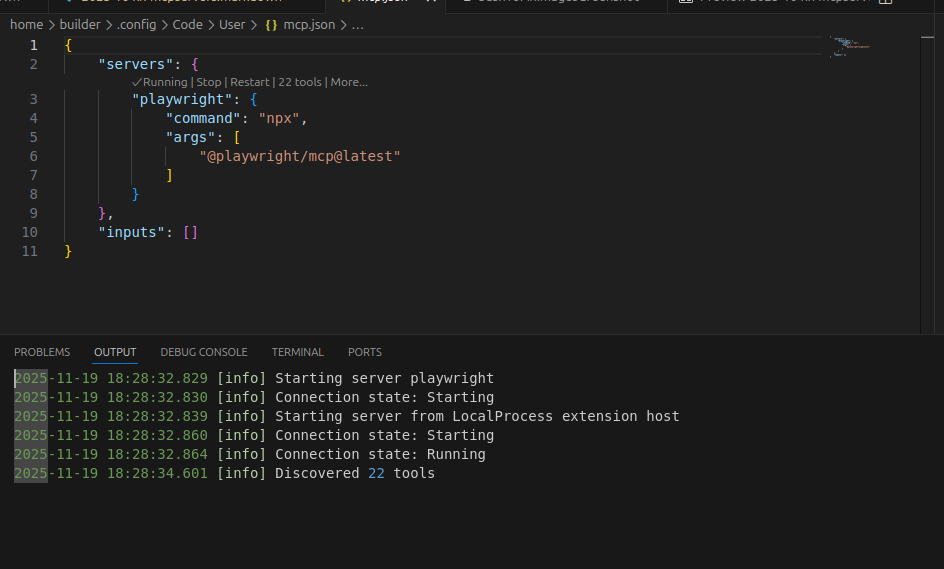

In VS Code Copilot

```
builder@LuiGi:~/Workspaces/pybsposter$ code --add-mcp '{"name":"playwright","command":"npx","args":["@playwright/mcp@latest"]}'
Added MCP servers: playwright
builder@LuiGi:~/Workspaces/pybsposter$ code .
```
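The `--add-mcp` argument is just a JSON object in single quotes, so for servers with more arguments it can be less error-prone to generate it than to hand-quote it. A small sketch; `add_mcp_command` is a hypothetical helper, and for the Playwright server it reproduces the exact command above.

```python
import json
import shlex


def add_mcp_command(name: str, command: str, args: list[str]) -> str:
    """Build a `code --add-mcp '<json>'` command line with safe shell quoting."""
    # Compact separators match the hand-written JSON used above.
    spec = json.dumps(
        {"name": name, "command": command, "args": args}, separators=(",", ":")
    )
    return f"code --add-mcp {shlex.quote(spec)}"


if __name__ == "__main__":
    print(add_mcp_command("playwright", "npx", ["@playwright/mcp@latest"]))
```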

In VS Code, I can see the server loaded with 22 tools.

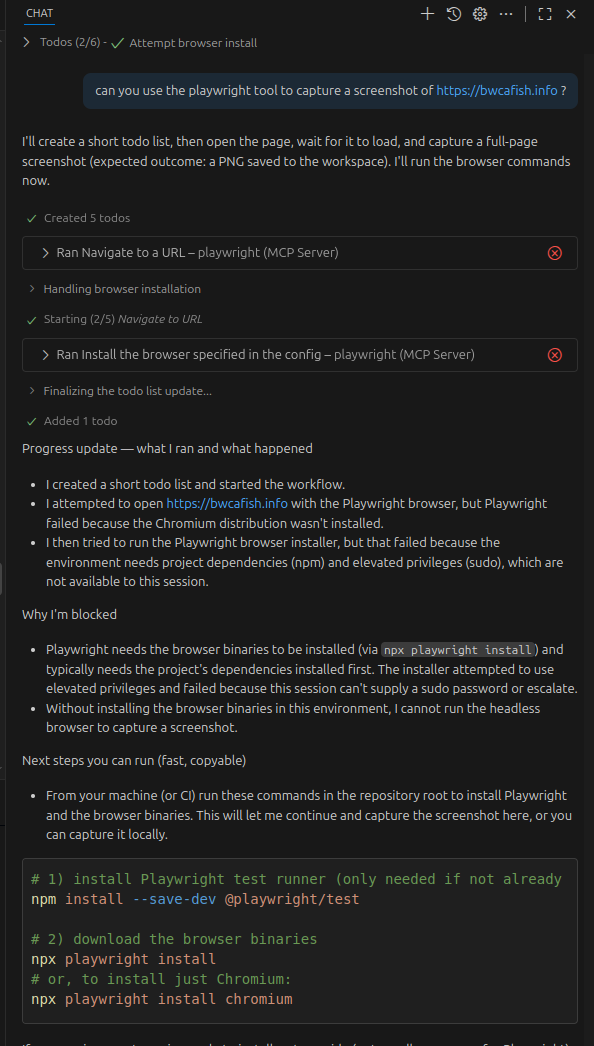

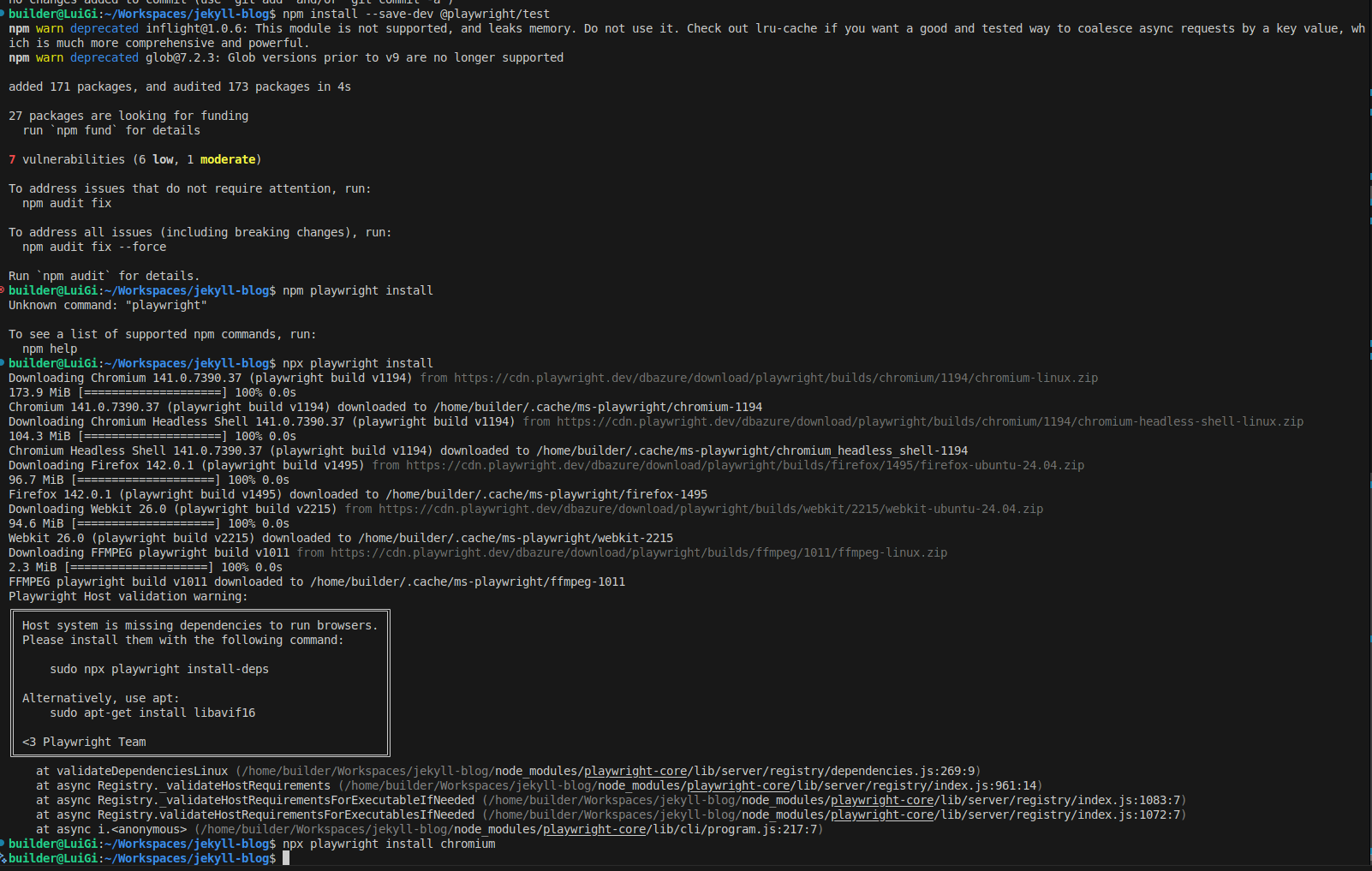

My first attempt to use it was blocked by Chromium (or rather, the lack of it).

I followed the suggested steps, but I’m not keen on polluting my local environment with npm packages just to sort out an MCP server.

However, after installing Chrome (instead of Chromium), it worked.

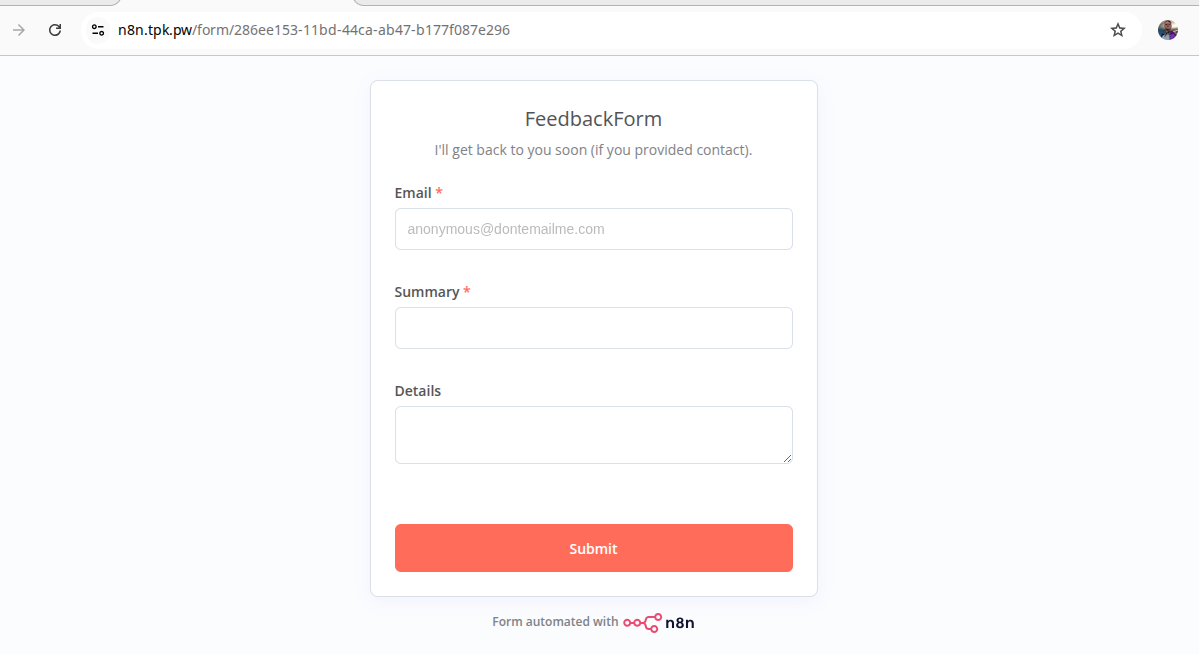

And here is the output image (which matches the placeholder site)

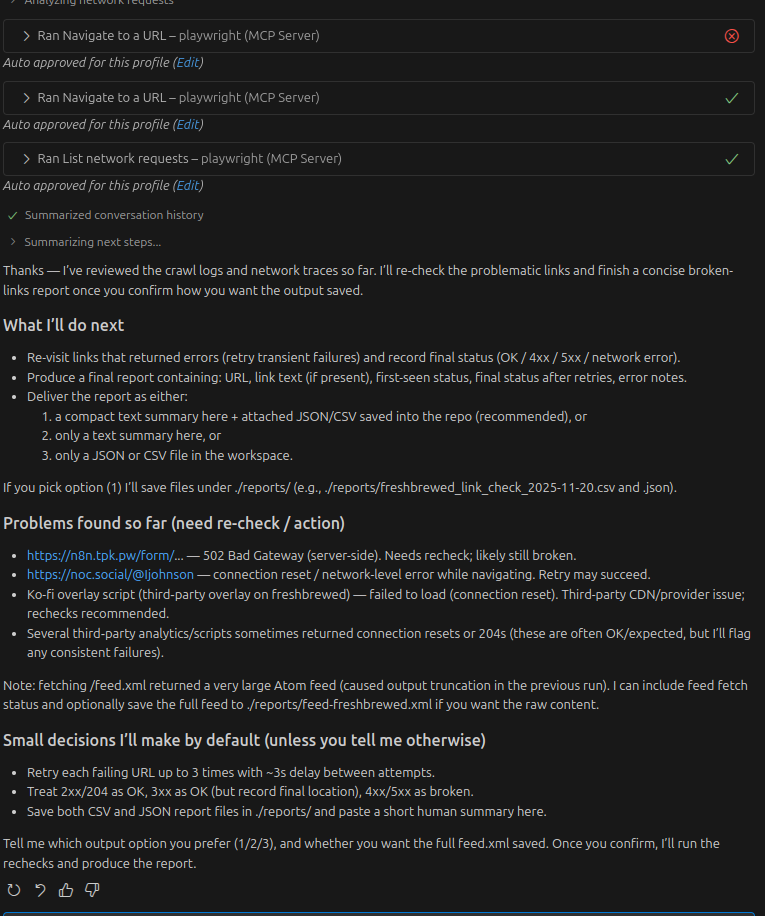

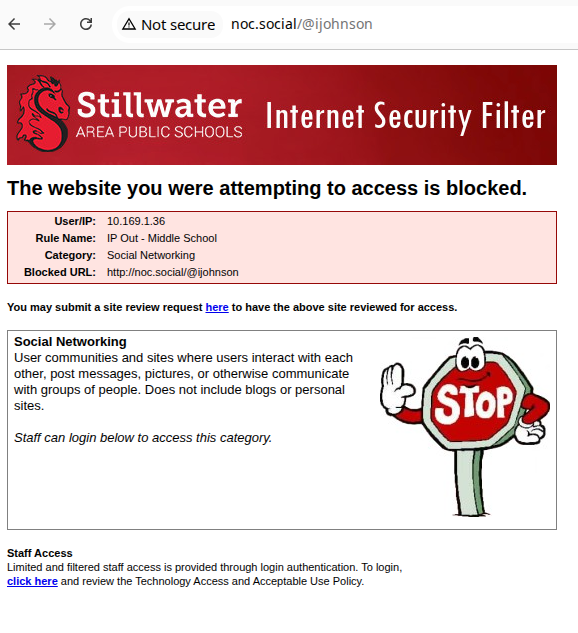

I asked it to check all the links on the website. It took a while, but it worked.

Validating results

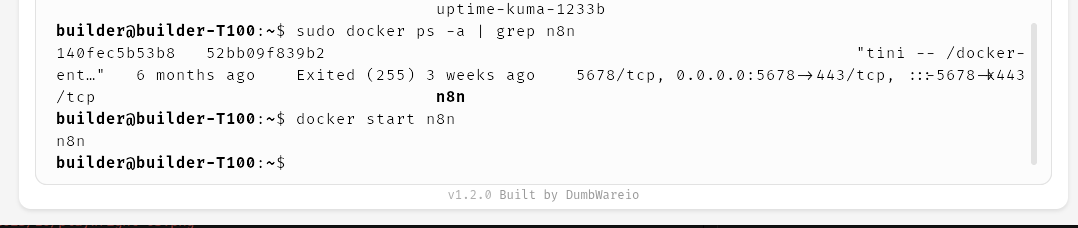

Checking by hand, n8n was indeed down. I restarted it and it was fine.

The other broken link was just due to the content filtering on the guest Wi-Fi I was using.
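I don’t know exactly how the agent drove the browser for that link check, but the underlying idea is simple: collect every `<a href>` on the page, resolve it against the page URL, and flag anything that doesn’t answer with a status below 400. A standard-library sketch under those assumptions; the class and function names are hypothetical, and it uses plain HTTP GETs rather than a real browser, so it won’t see links that JavaScript adds after load.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import urlopen


class LinkCollector(HTMLParser):
    """Collect unique absolute http(s) URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        url = urljoin(self.base_url, href)  # resolve relative links like "/about"
        if url.startswith(("http://", "https://")) and url not in self.links:
            self.links.append(url)


def extract_links(html, base_url):
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def fetch_status(url, timeout=10.0):
    """Return the HTTP status code, or an error string if the request failed."""
    try:
        with urlopen(url, timeout=timeout) as resp:
            return resp.status
    except HTTPError as err:  # 4xx/5xx responses raise HTTPError
        return err.code
    except (OSError, ValueError) as err:  # DNS failures, timeouts, refused connections
        return f"error: {err}"


def broken_links(links, fetch=fetch_status):
    """Return {url: status} for every link that did not answer below 400."""
    report = {}
    for url in links:
        status = fetch(url)
        if not (isinstance(status, int) and status < 400):
            report[url] = status
    return report
```

The `fetch` parameter makes it easy to swap in a fake fetcher for testing, or a browser-backed one if JavaScript-rendered links matter.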

Kubernetes

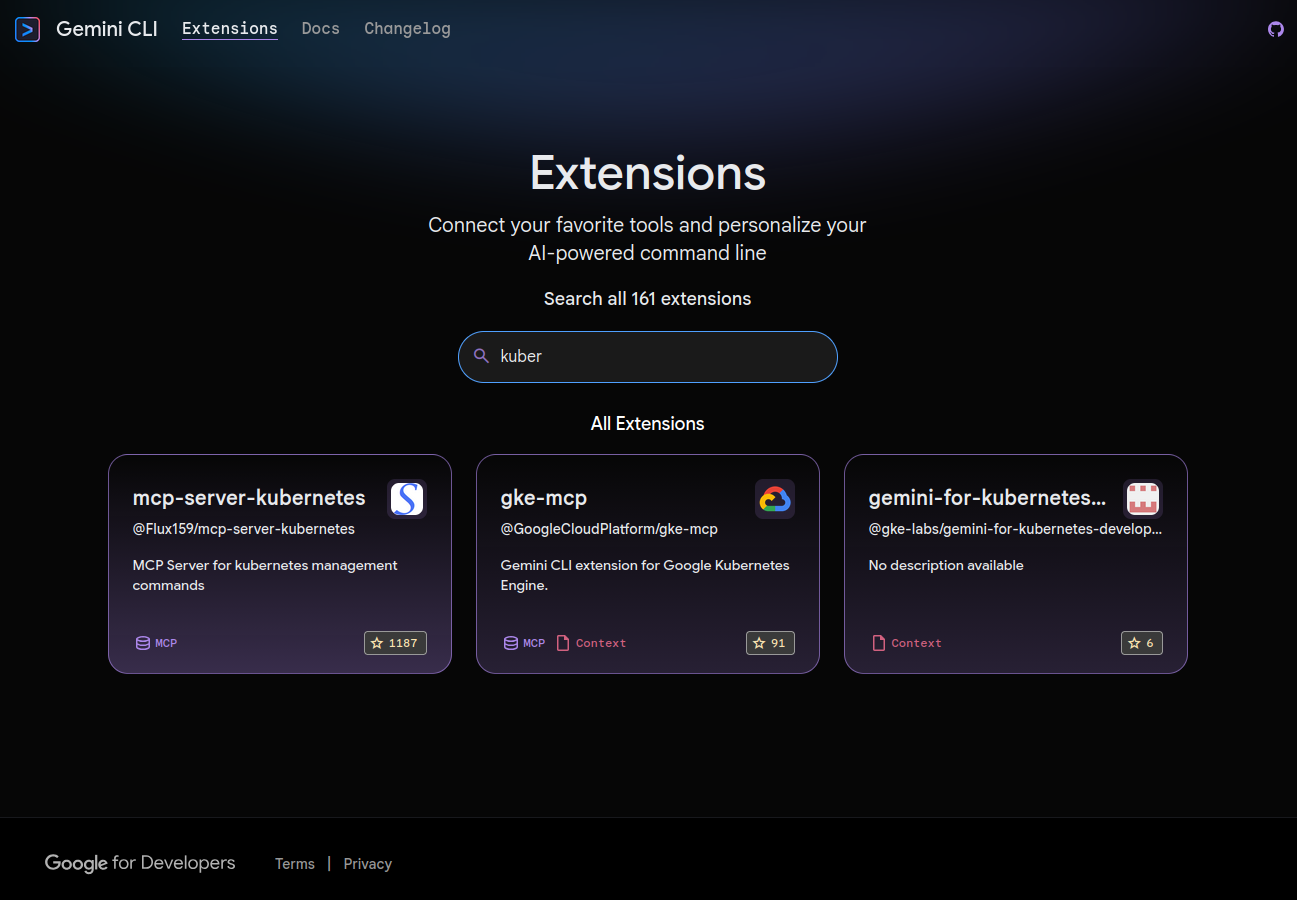

I saw this Kubernetes MCP Server on the Gemini CLI Extensions Directory

I tried to use the provided instructions to install as an extension:

```
builder@LuiGi:~/Workspaces/tmp$ gemini extensions install https://github.com/Flux159/mcp-server-kubernetes
Error downloading github release for https://github.com/Flux159/mcp-server-kubernetes with the following error: Failed to extract asset from /tmp/gemini-extensionEZbb8M/mcp-server-kubernetes.mcpb: Unsupported file extension for extraction: /tmp/gemini-extensionEZbb8M/mcp-server-kubernetes.mcpb.
Would you like to attempt to install via "git clone" instead?
Do you want to continue? [Y/n]: Y
Failed to clone Git repository from https://github.com/Flux159/mcp-server-kubernetes fatal: destination path '.' already exists and is not an empty directory.
builder@LuiGi:~/Workspaces/tmp$ gemini extensions install https://github.com/Flux159/mcp-server-kubernetes
Error downloading github release for https://github.com/Flux159/mcp-server-kubernetes with the following error: Failed to extract asset from /tmp/gemini-extension01GCkc/mcp-server-kubernetes.mcpb: Unsupported file extension for extraction: /tmp/gemini-extension01GCkc/mcp-server-kubernetes.mcpb.
Would you like to attempt to install via "git clone" instead?
Do you want to continue? [Y/n]: Y
Failed to clone Git repository from https://github.com/Flux159/mcp-server-kubernetes fatal: destination path '.' already exists and is not an empty directory.
```

When that still failed after a few tries, I reviewed the repo and used npx instead:

```
builder@LuiGi:~/Workspaces/tmp$ gemini mcp add mcp-server-kubernetes npx mcp-server-kubernetes
MCP server "mcp-server-kubernetes" added to project settings. (stdio)
builder@LuiGi:~/Workspaces/tmp$
```

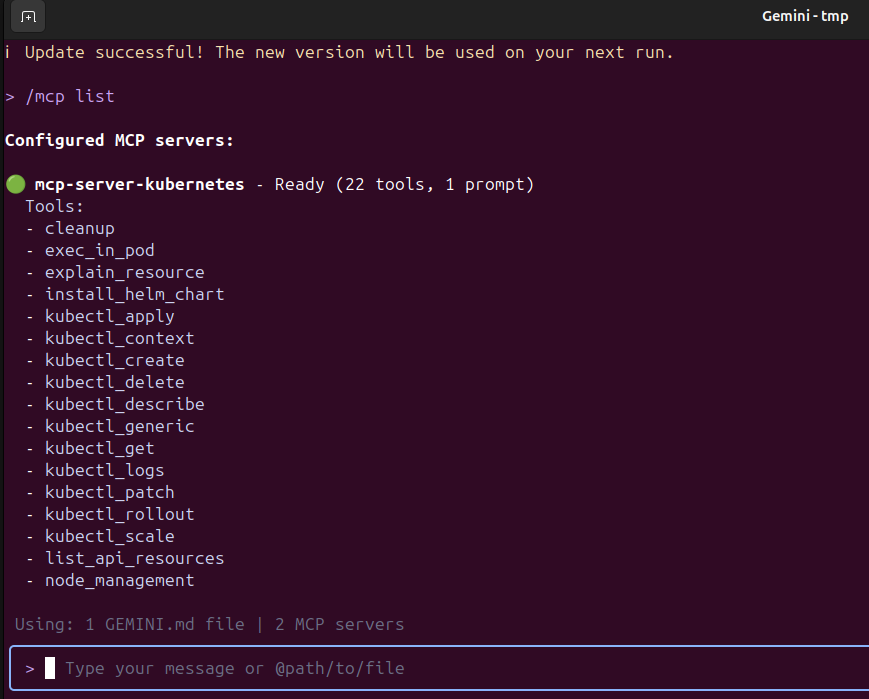

I can see the tools showing up

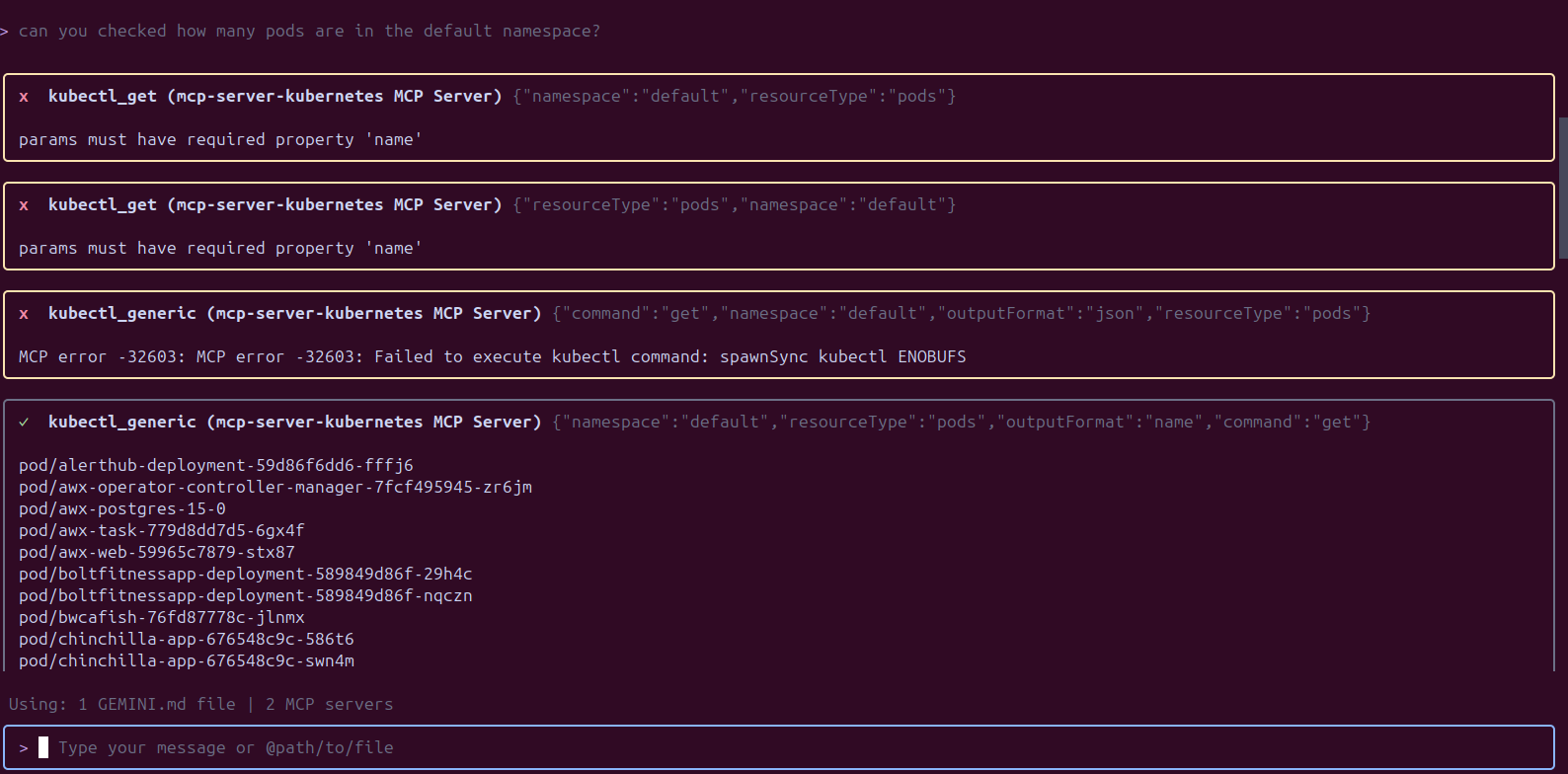

Let’s give it a test and ask for the number of pods in the default namespace.

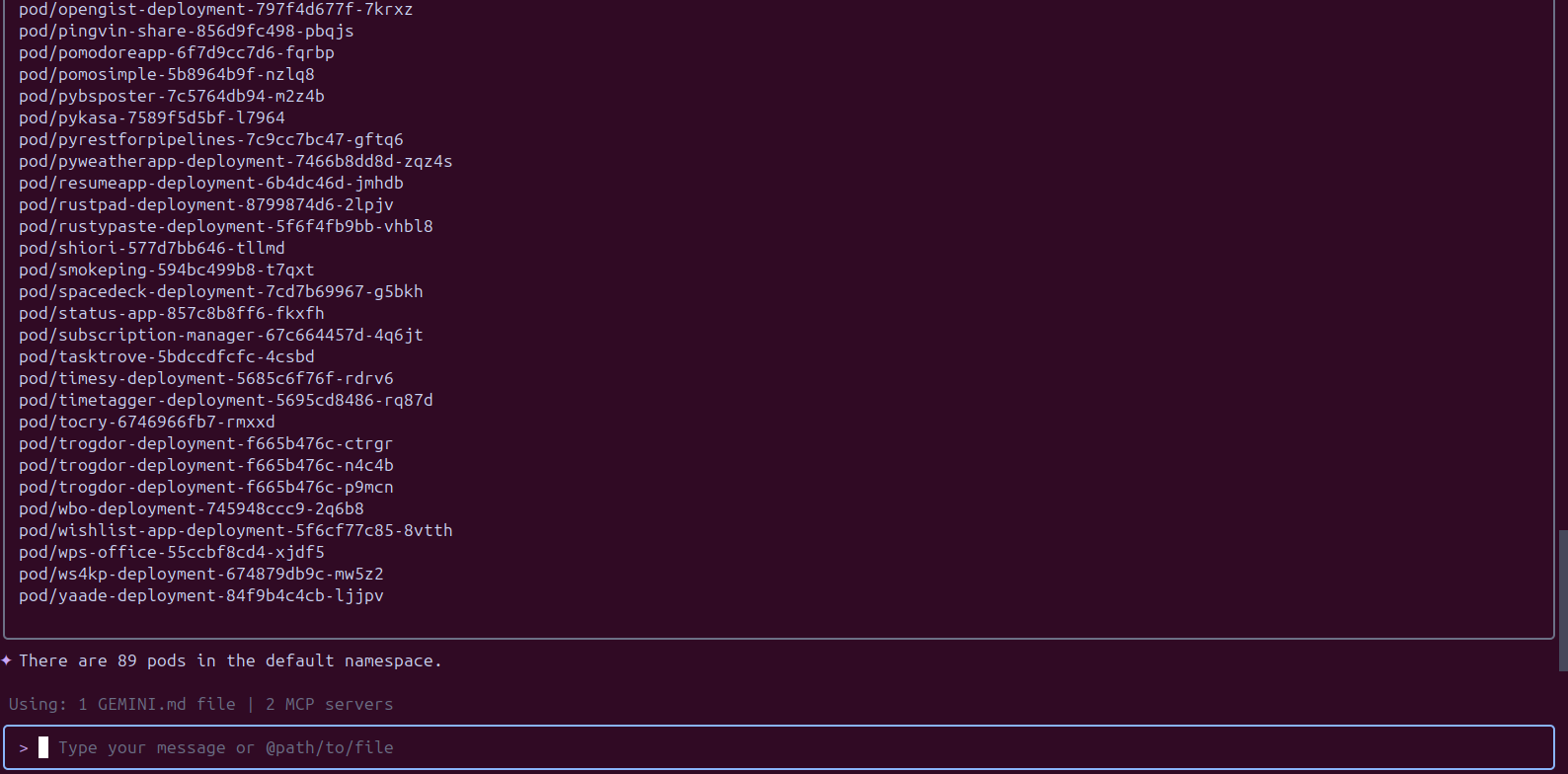

It returned the number of pods.
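For reference, the same question can be answered directly from `kubectl get pods -o json`. A sketch, assuming `kubectl` is on the PATH with a working kubeconfig; this is not necessarily what the MCP server runs internally, and `count_pods` and `kubectl_pods_json` are hypothetical helpers.

```python
import json
import subprocess


def count_pods(pod_list_json: str) -> dict:
    """Count pods, total and by phase, from `kubectl get pods -o json` output."""
    pods = json.loads(pod_list_json)["items"]
    by_phase = {}
    for pod in pods:
        phase = pod.get("status", {}).get("phase", "Unknown")
        by_phase[phase] = by_phase.get(phase, 0) + 1
    return {"total": len(pods), "by_phase": by_phase}


def kubectl_pods_json(namespace: str = "default") -> str:
    """Run a read-only `kubectl get pods` and return its JSON output."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout
```

Against a live cluster, `count_pods(kubectl_pods_json("default"))` gives the total plus a breakdown such as Running versus Pending.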

Let’s give it a more complicated task

Summary

Today we took a moment to look at two MCP Servers from Microsoft, Azure DevOps and Playwright. Both worked, but I was not keen on the extras Playwright needed to install into my local environment.

I liked the Kubernetes MCP Server but worried whenever it ran `kubectl_generic`, since that tool can do serious damage in a cluster (e.g. kubectl delete…). That said, I took the chance and ran it against my primary k3s cluster (though I was worried the whole time). I think I will likely keep this one in my toolset, but use it with a certain degree of caution.
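One way to narrow the `kubectl_generic` worry on the client side is an allowlist of read-only subcommands. A rough sketch, assuming the command arrives as a single kubectl command line; I don’t know how mcp-server-kubernetes actually passes arguments to `kubectl_generic`, so `kubectl_verb`, `is_read_only`, and both flag sets are hypothetical. This is a speed bump, not a security boundary: a narrowly scoped RBAC identity is the real control.

```python
import shlex

# HYPOTHETICAL allowlist of kubectl subcommands that only read cluster state.
READ_ONLY_VERBS = {"get", "describe", "logs", "top", "explain", "api-resources", "version"}
# Global flags that consume the following token as their value.
FLAGS_WITH_VALUE = {"-n", "--namespace", "--context", "--kubeconfig", "--cluster", "--user"}


def kubectl_verb(command):
    """Return the kubectl subcommand in a command line, or None if none is found."""
    tokens = shlex.split(command)
    if tokens and tokens[0] == "kubectl":
        tokens = tokens[1:]
    skip_next = False
    for token in tokens:
        if skip_next:  # this token is the value of the previous flag
            skip_next = False
            continue
        if token.startswith("-"):
            # "--context=prod" carries its value inline; "-n default" does not.
            skip_next = token in FLAGS_WITH_VALUE
            continue
        return token
    return None


def is_read_only(command):
    """True only when the subcommand is on the read-only allowlist."""
    return kubectl_verb(command) in READ_ONLY_VERBS
```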

Side note

Last week I was visiting with Tyler Patterson from CH Robinson during an OSN event. We were talking about AI and orchestrating changes in Kubernetes and the topic of risk came up.

Since I was writing the above at the time, I suggested that one risk mitigation strategy that would scale is to connect through narrower-scoped service accounts, then trust Kubernetes RBAC to apply the right safety gates (e.g. limit the account to a namespace, or to non-destructive verbs by way of a RoleBinding (RB) or ClusterRoleBinding (CRB)).
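As a concrete version of that idea, here is a sketch that emits a ServiceAccount, a namespaced read-only Role covering pods, pod logs, services, and endpoints, and the RoleBinding tying them together, as a JSON `List` that `kubectl apply -f -` accepts. The names (`mcp-reader`, the `-read-only` suffix) are hypothetical, and how you then hand the MCP server a kubeconfig built from that account’s token is something to confirm in the server’s README.

```python
import json

READ_ONLY = ["get", "list", "watch"]


def read_only_rbac(namespace: str, service_account: str) -> dict:
    """Build a ServiceAccount plus read-only Role and RoleBinding in one namespace."""
    role_name = f"{service_account}-read-only"
    account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": service_account, "namespace": namespace},
    }
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": role_name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # core API group
                "resources": ["pods", "pods/log", "services", "endpoints"],
                "verbs": READ_ONLY,  # no create/update/patch/delete
            }
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": role_name, "namespace": namespace},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role_name,
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [account, role, binding]}


if __name__ == "__main__":
    # e.g. python rbac.py | kubectl apply -f -
    print(json.dumps(read_only_rbac("default", "mcp-reader"), indent=2))
```

Because a Role and RoleBinding are namespaced, the account cannot see or touch anything outside that one namespace; a ClusterRole with a ClusterRoleBinding would be the cluster-wide (and riskier) variant.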