Published: Jun 19, 2025 by Isaac Johnson

This month Bolt has been hosting “The Worlds Biggest Hackathon”. It is very open-ended: the only real requirements are that a project starts with Bolt.new and that there is something to share when done.

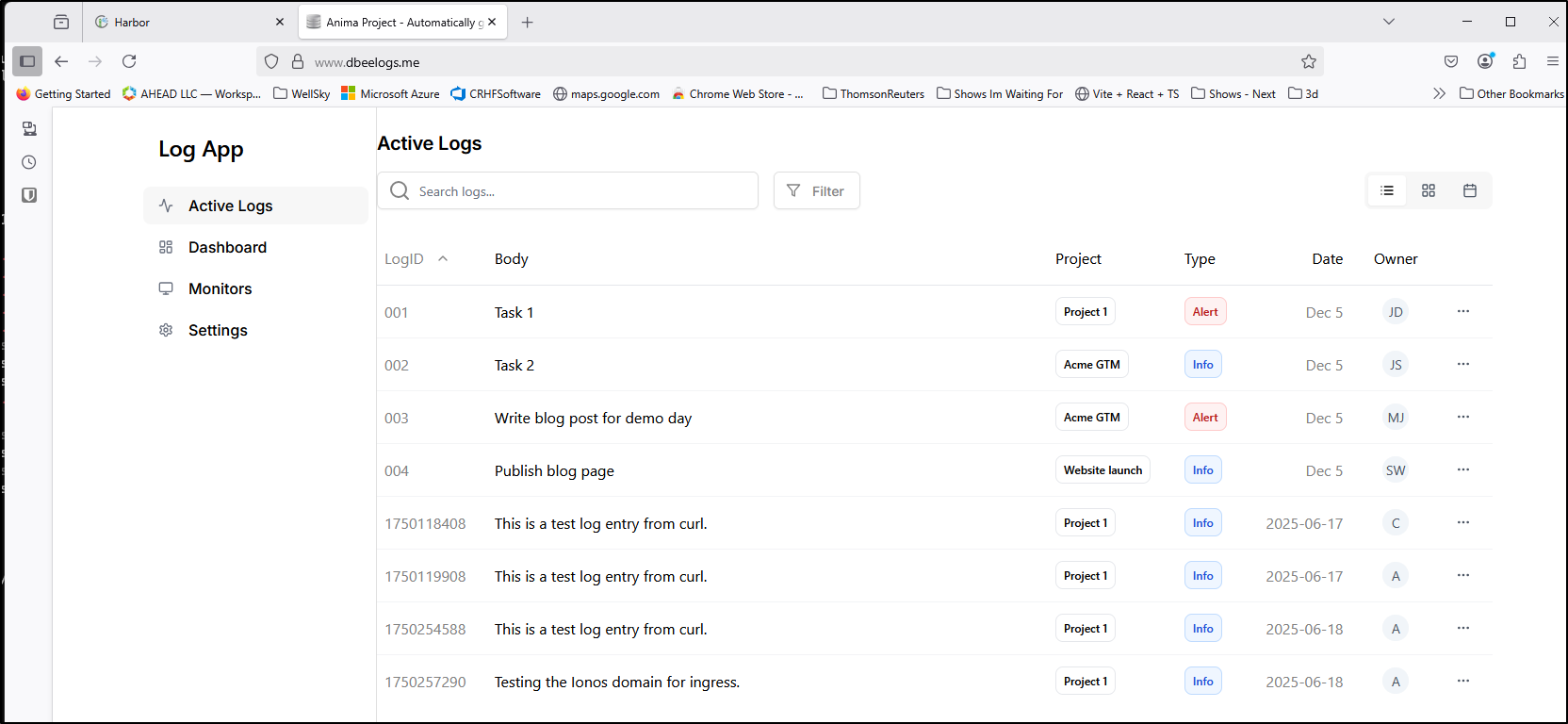

As irony would have it, the day I started, Google had a massive outage which affected Bolt and just about everyone else. That said, soon after I got going and built out a database log reporting service that I dubbed “DB Logs App” and later renamed “DBeeLogs.me”.

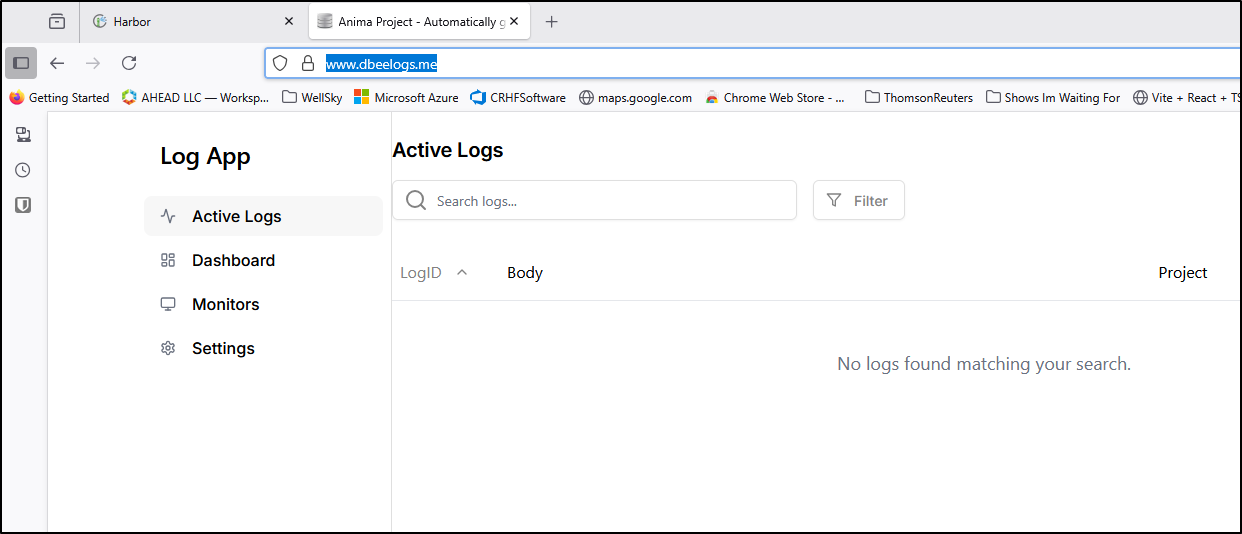

You can visit the end result at https://www.dbeelogs.me/ and use the username/pass admin/mypass.

In this article I’ll cover the start of ideation with Figma, which then drove a Bolt.new flow to build the UI. Once the UI was together, I pulled it into VS Code to refine using Gemini Code Assist and a variety of models in Copilot, from GPT-4.1 to Sonnet 3.5. I packaged it into containers for Docker, then moved to Kubernetes. While Bolt likes to use Supabase for databases, I like PostgreSQL, so there was some work to move back ends.

I built out the backend API service and tied it into the Ingress, and lastly worked through a LogIngest service, once again starting with Bolt, to build something in JavaScript that exposes a REST interface externally to pull in logs.

Let’s dig in and see how we hack something together using Bolt.new.

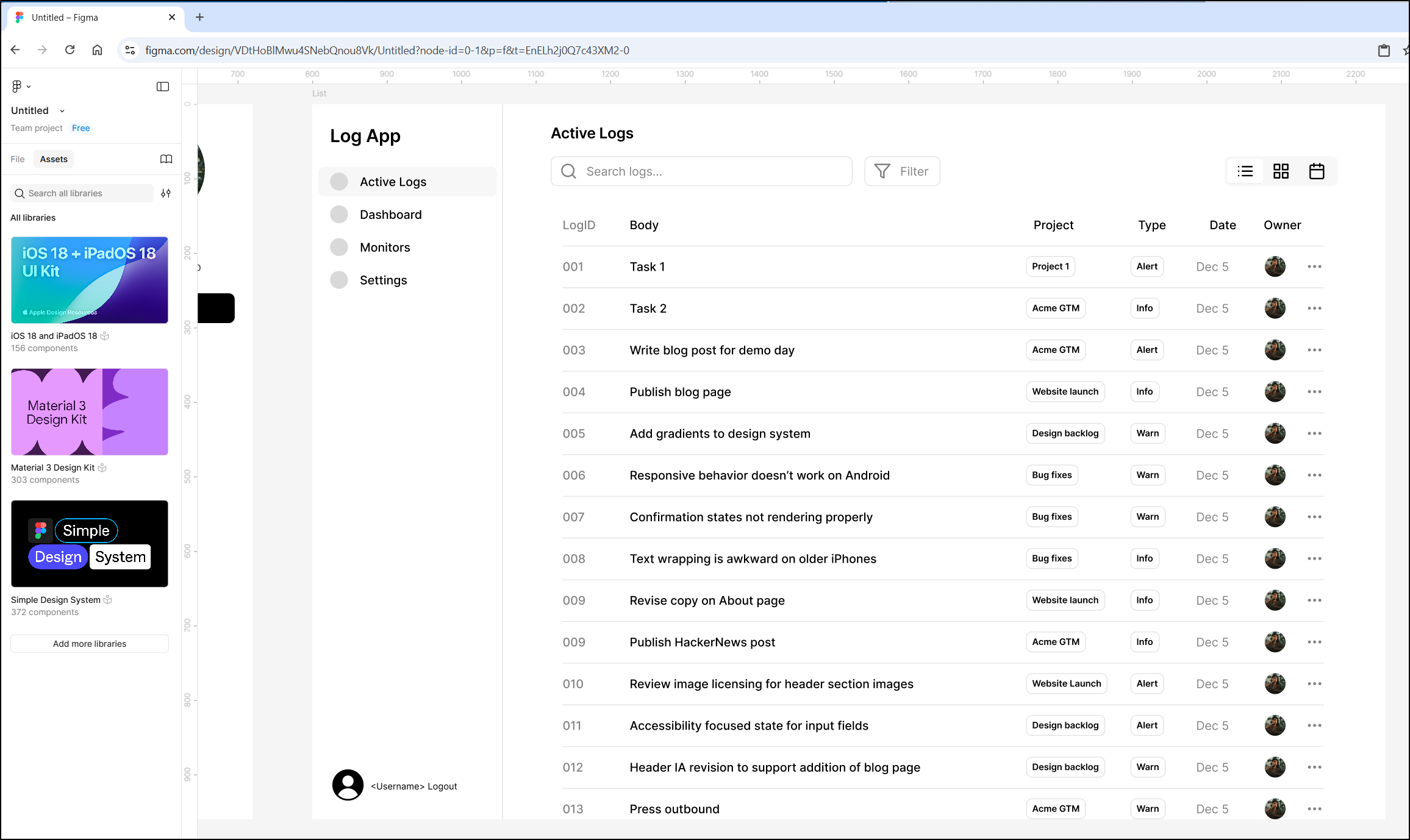

Figma

Before I go into Bolt, I need to get some designs put together in Figma.

I started with a List Web style and customized it to focus on logs

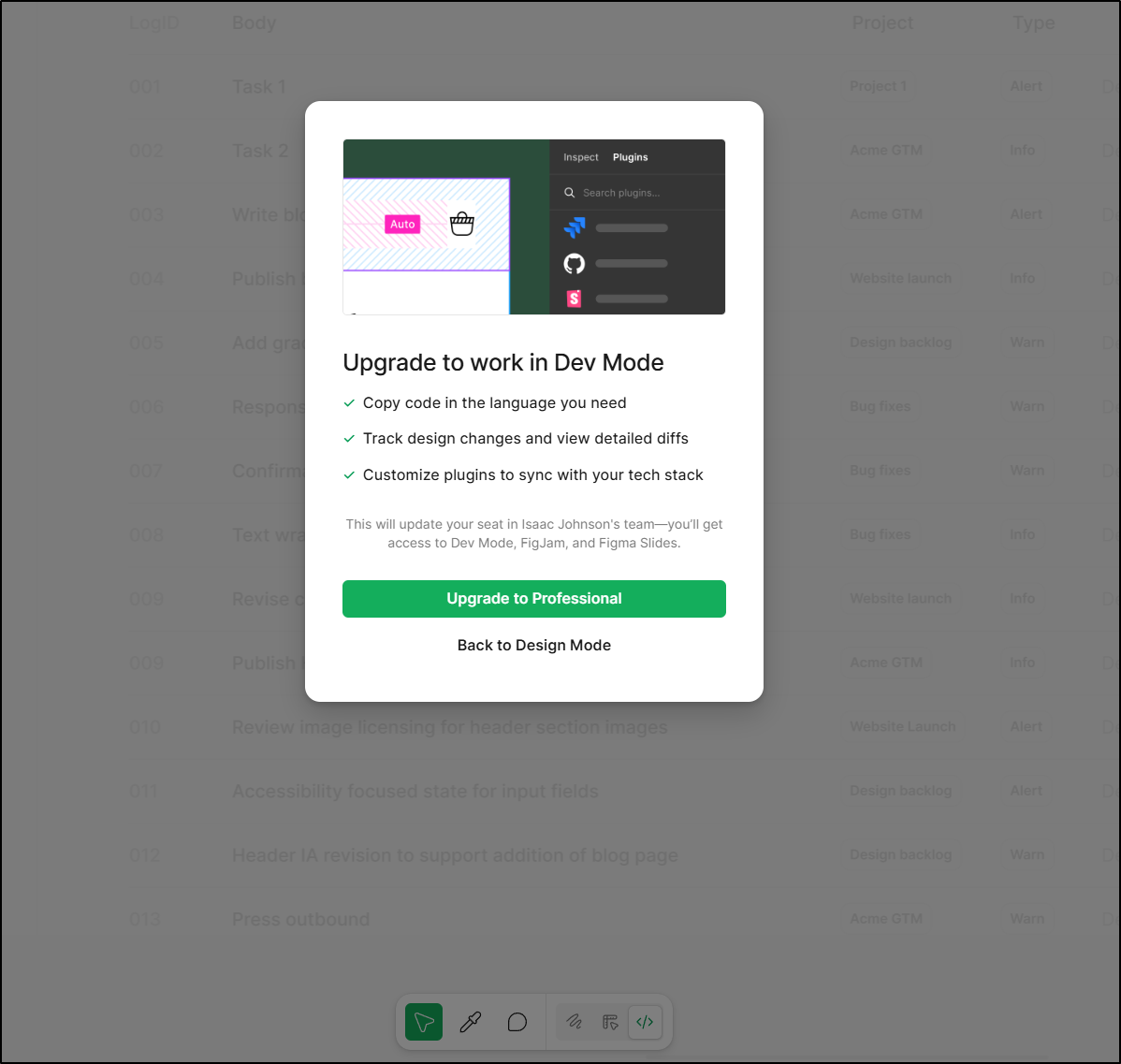

While I would need to pay to export code

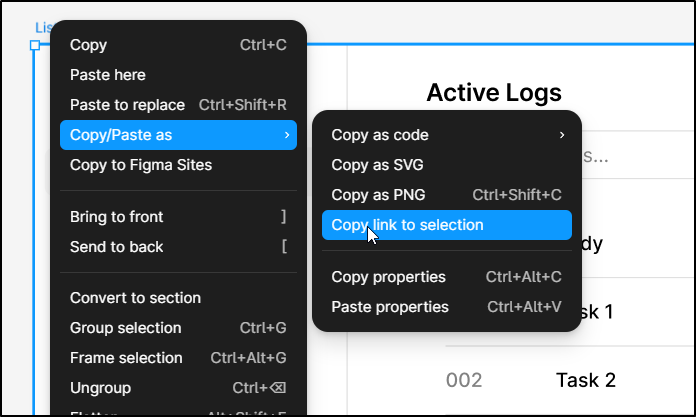

I can screenshot the page and go to Bolt.new. However, this time I plan to copy a link by right-clicking the frame and using “Copy/Paste”

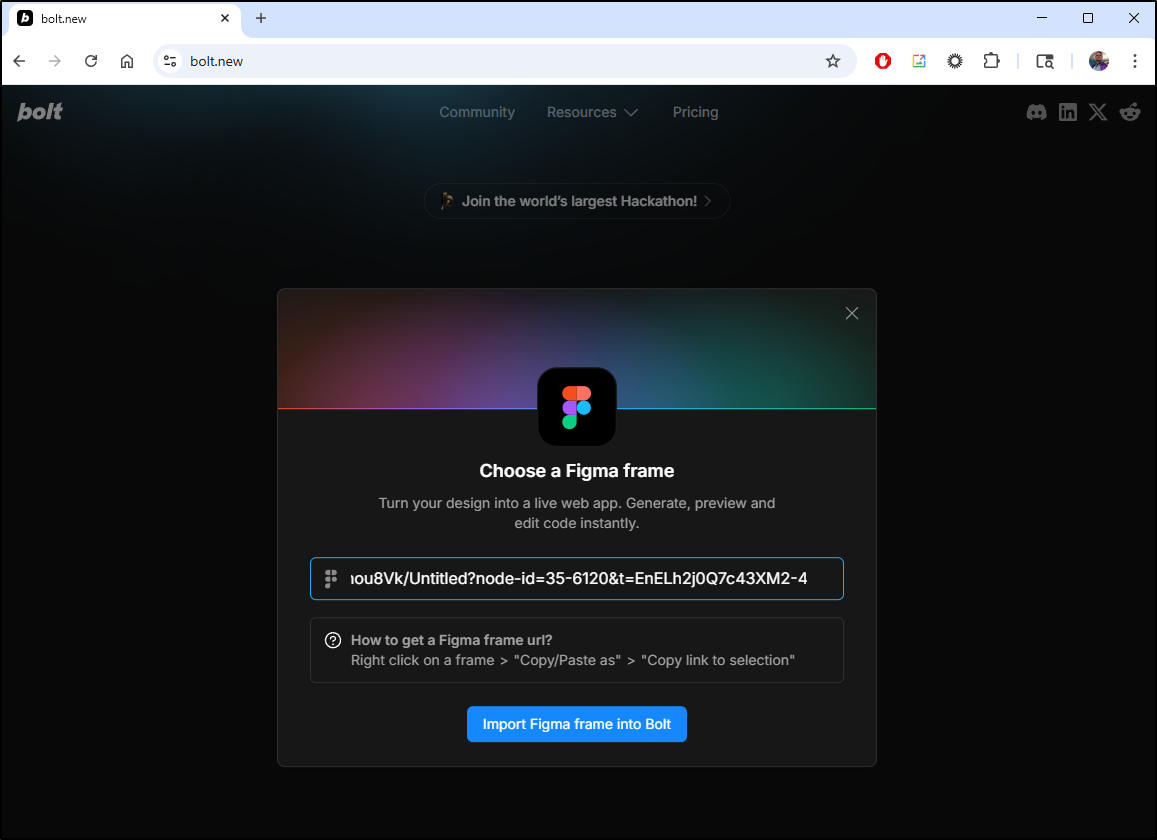

Back in Bolt, I can use that with the Figma connector

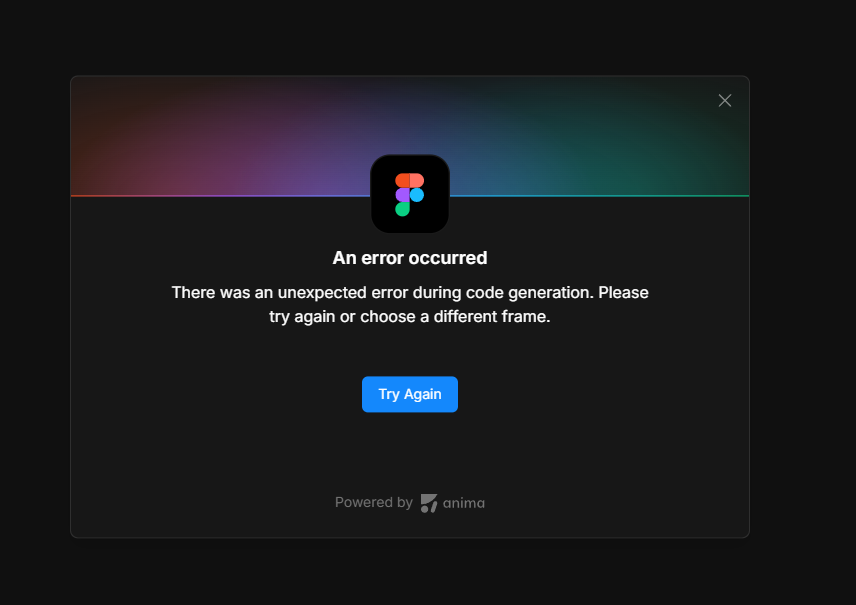

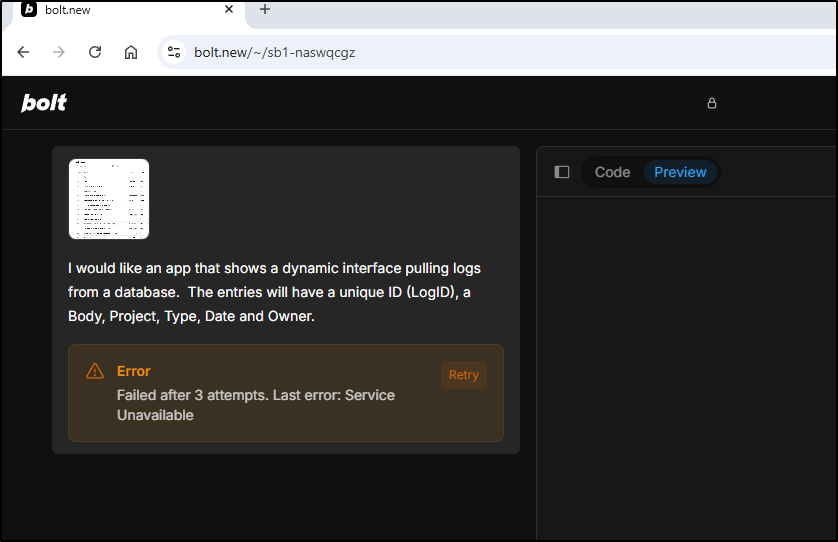

However, each time I tried, it failed

(note: I would later find that both Figma and Bolt were affected by a Google global outage and I just happened to start during that time)

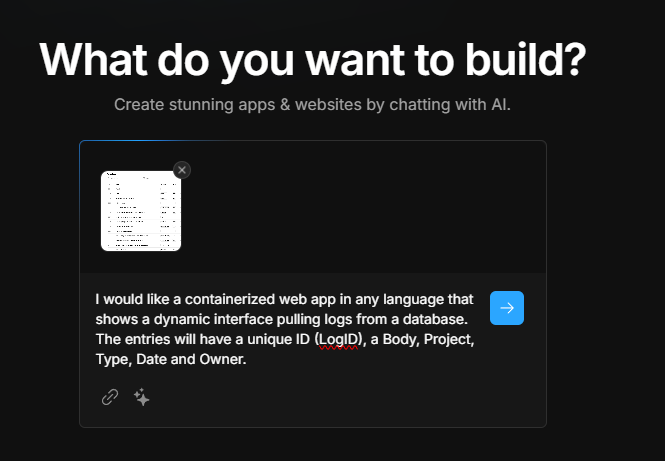

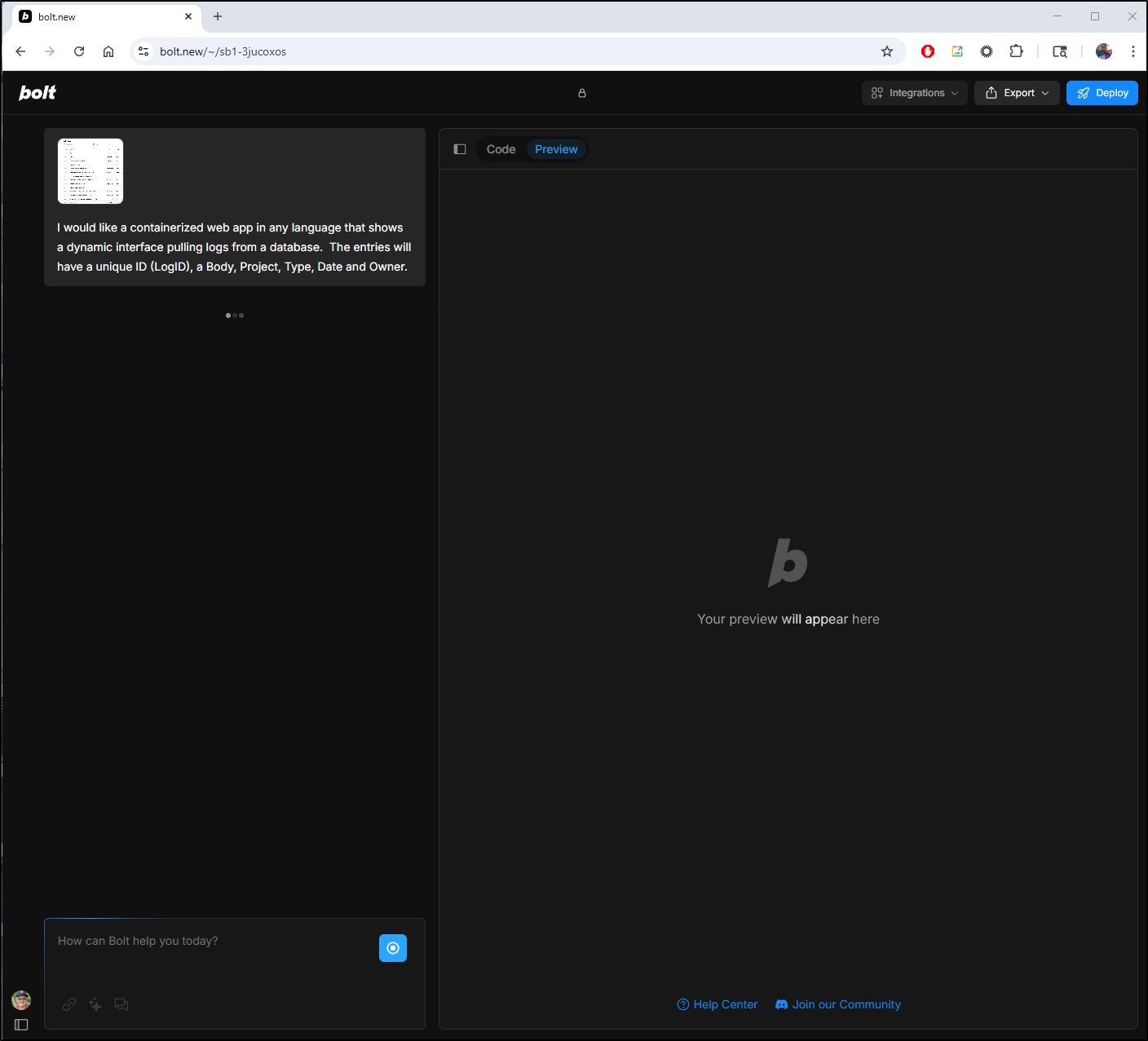

After a few failed tries, I pivoted to using a screenshot and attaching as an image

Bolt now begins to build the app

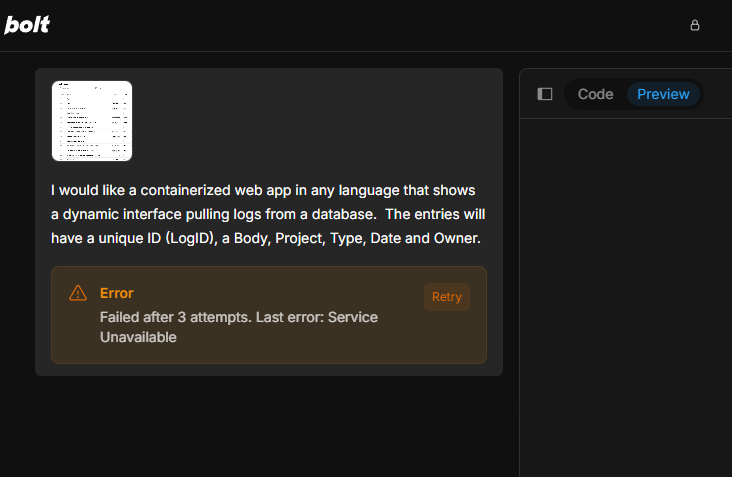

The first time I got an error

I reduced it further and still got an error (it could be under load). I’ll try back later

Wild as it may be, the one day I chose to work on this was the one day there was a Cloudflare and Google outage that was affecting everyone, including Bolt.

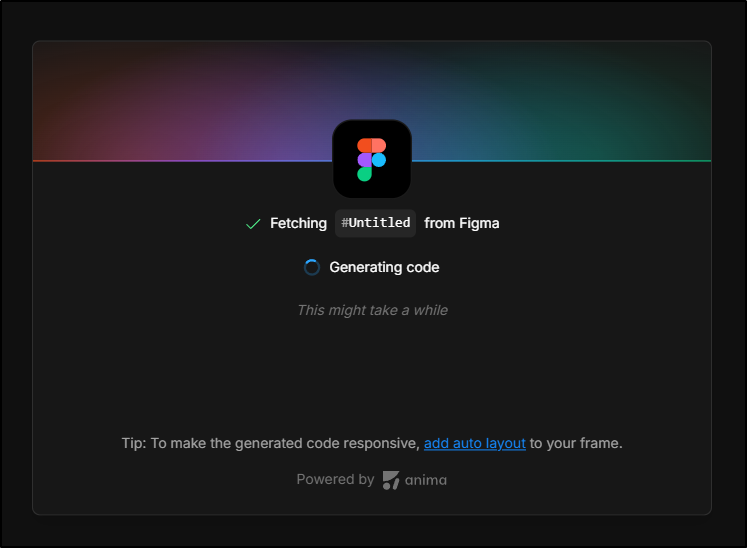

I tried again the next day and the Figma import went smoothly

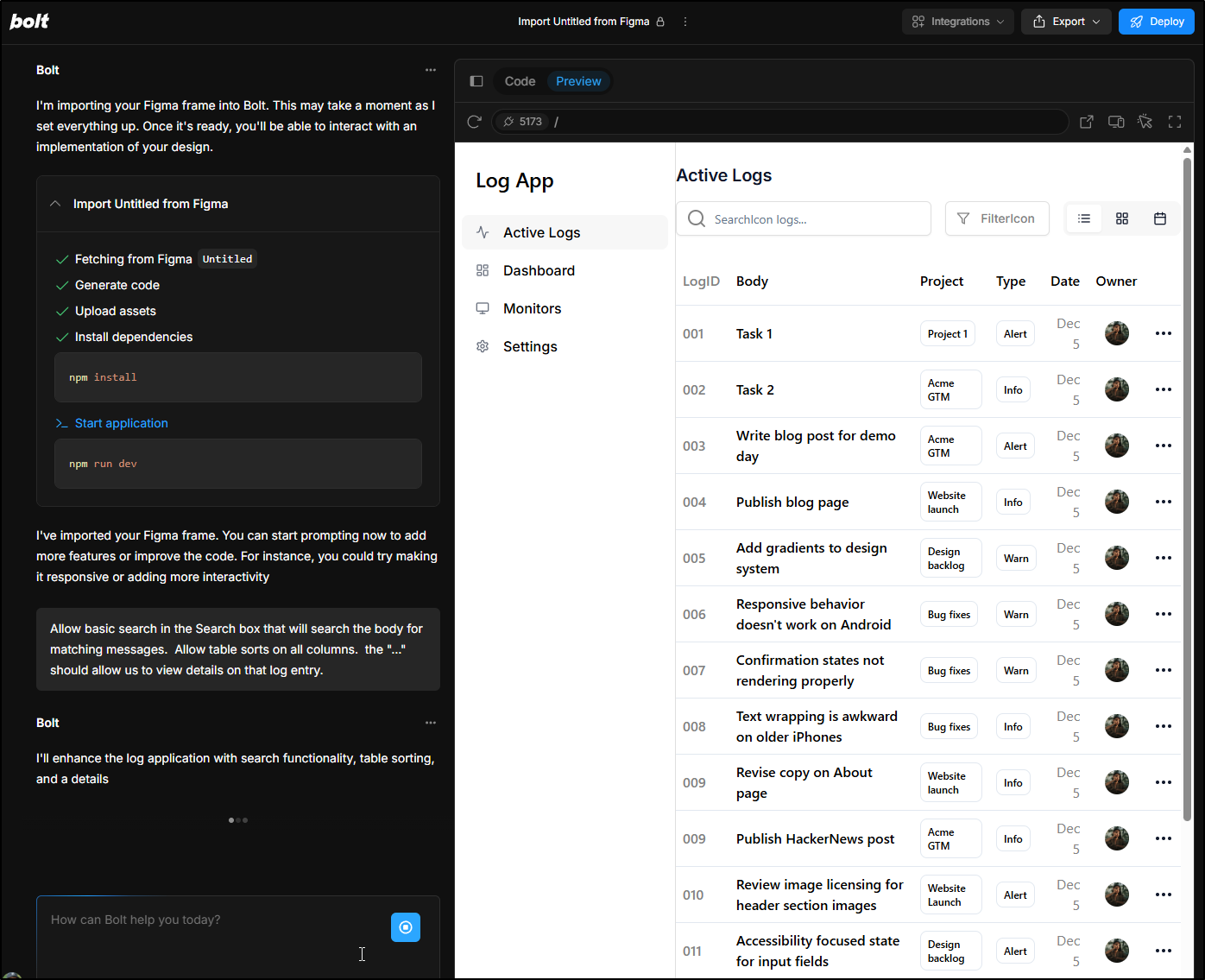

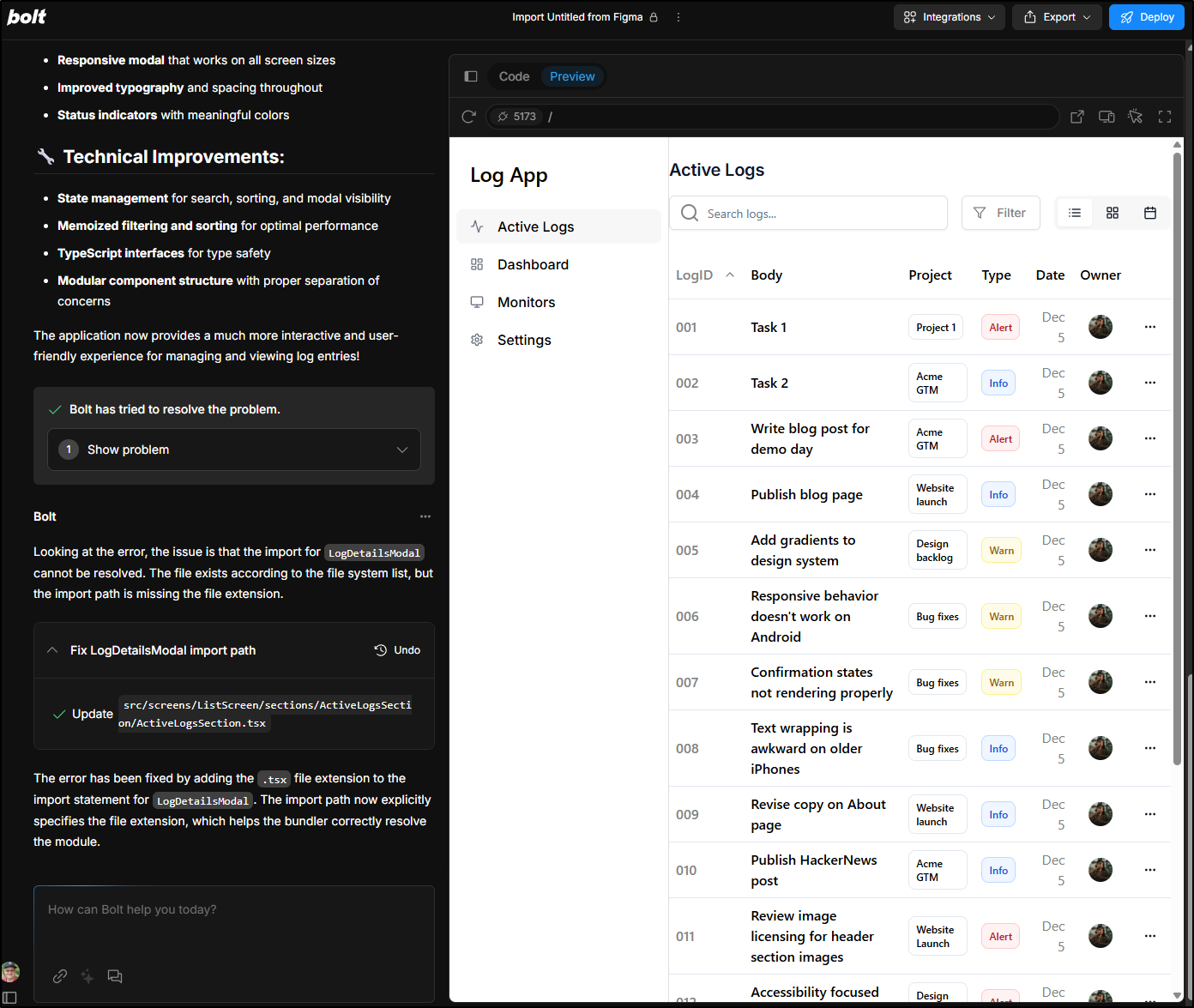

And once imported, Bolt did a fantastic job of building an app around it

Bolt worked through a couple of issues, but was able to correct them

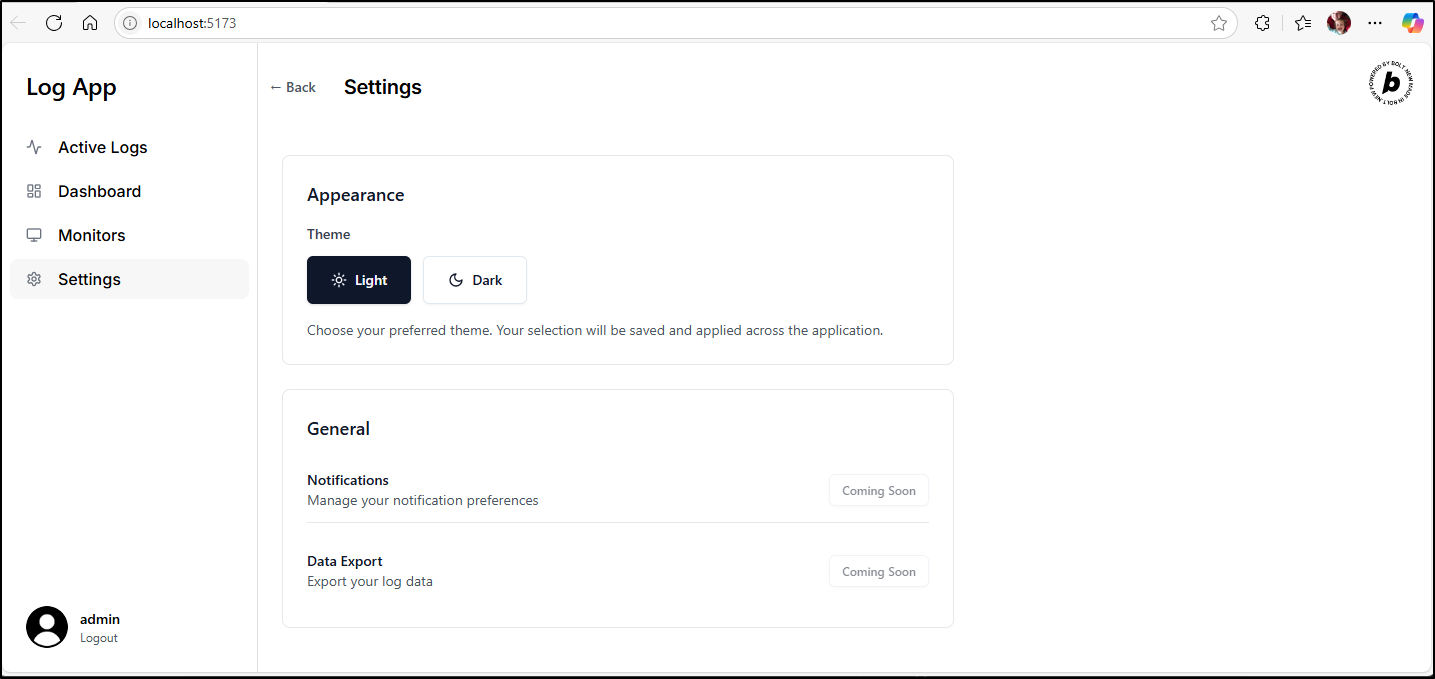

It looks pretty good

As we go along it’s worth occasionally downloading the project in case we take a misstep

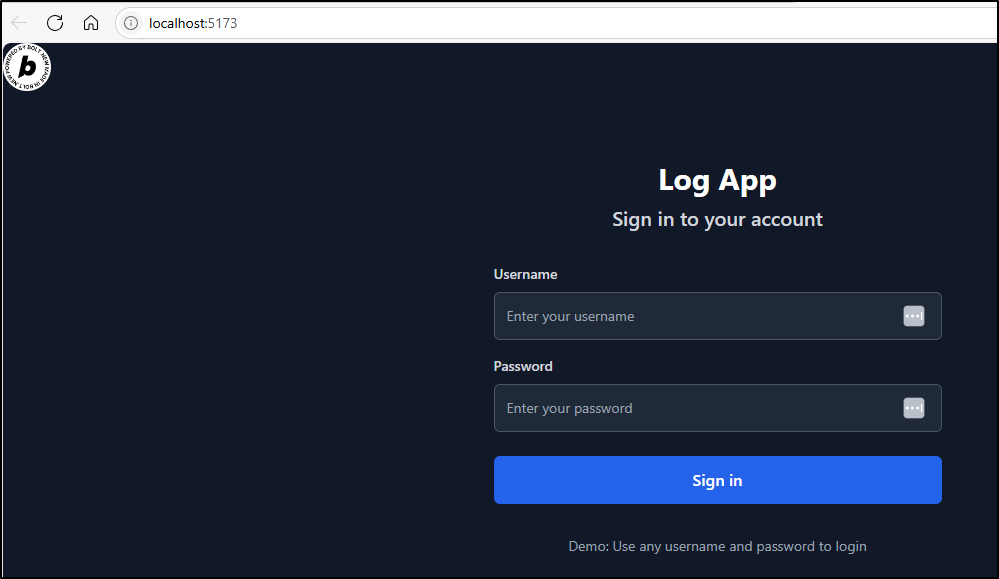

I also used Bolt to create a proper light/dark mode

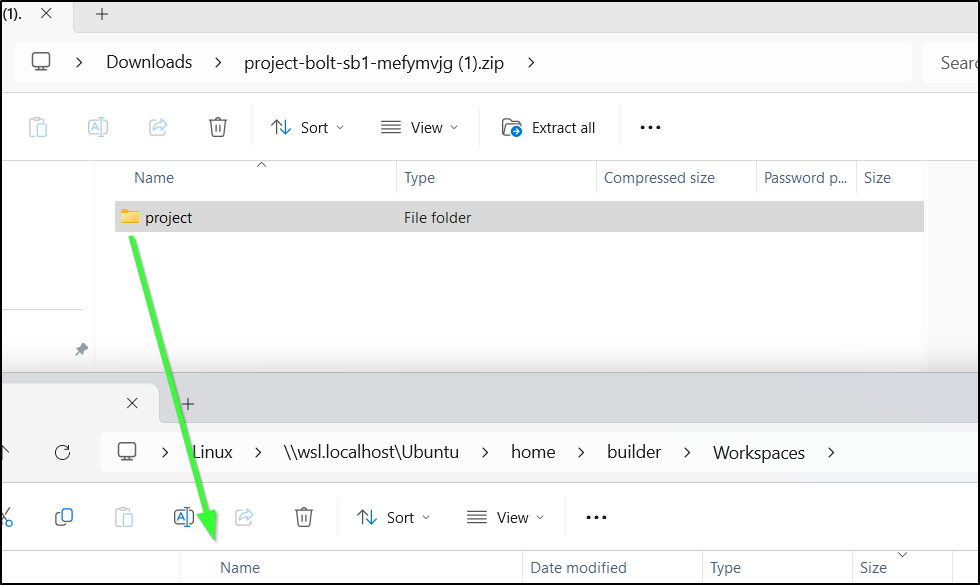

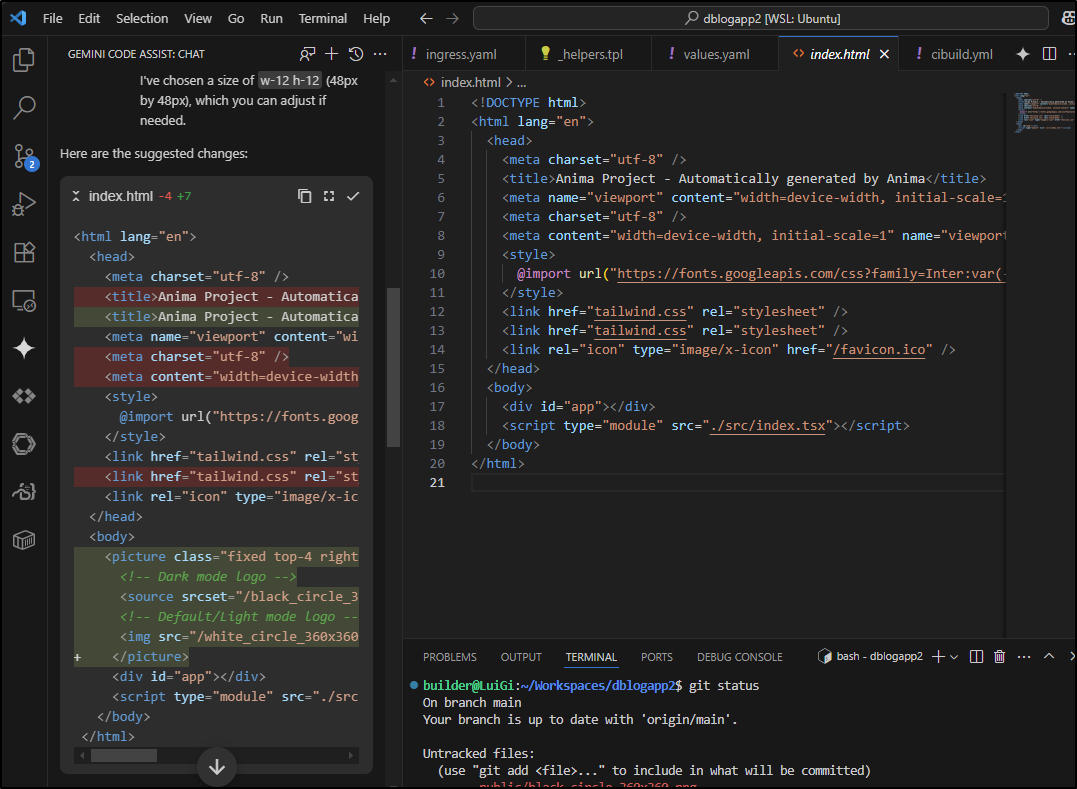

My usual flow is to then pull the project from Bolt into WSL, where I can do further refinements

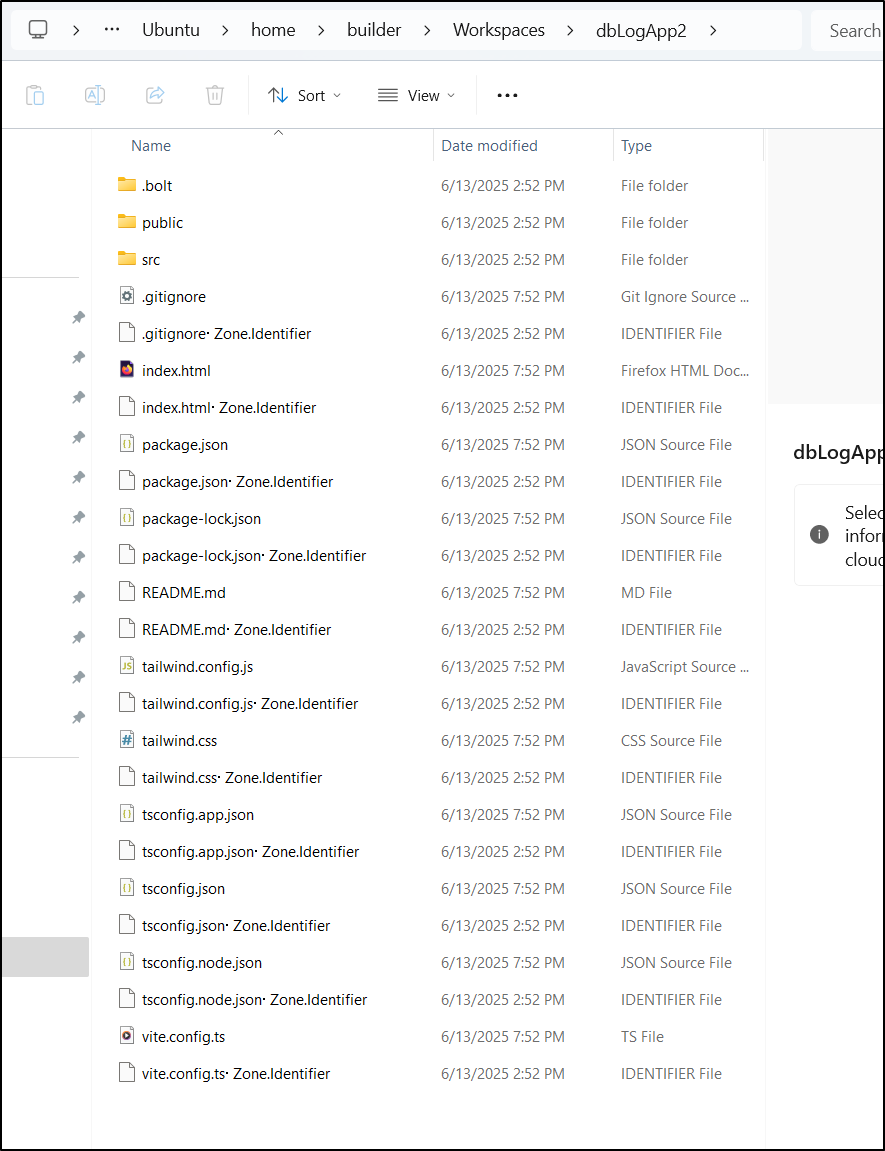

I rename it to dbLogApp2 and make sure I can see the files

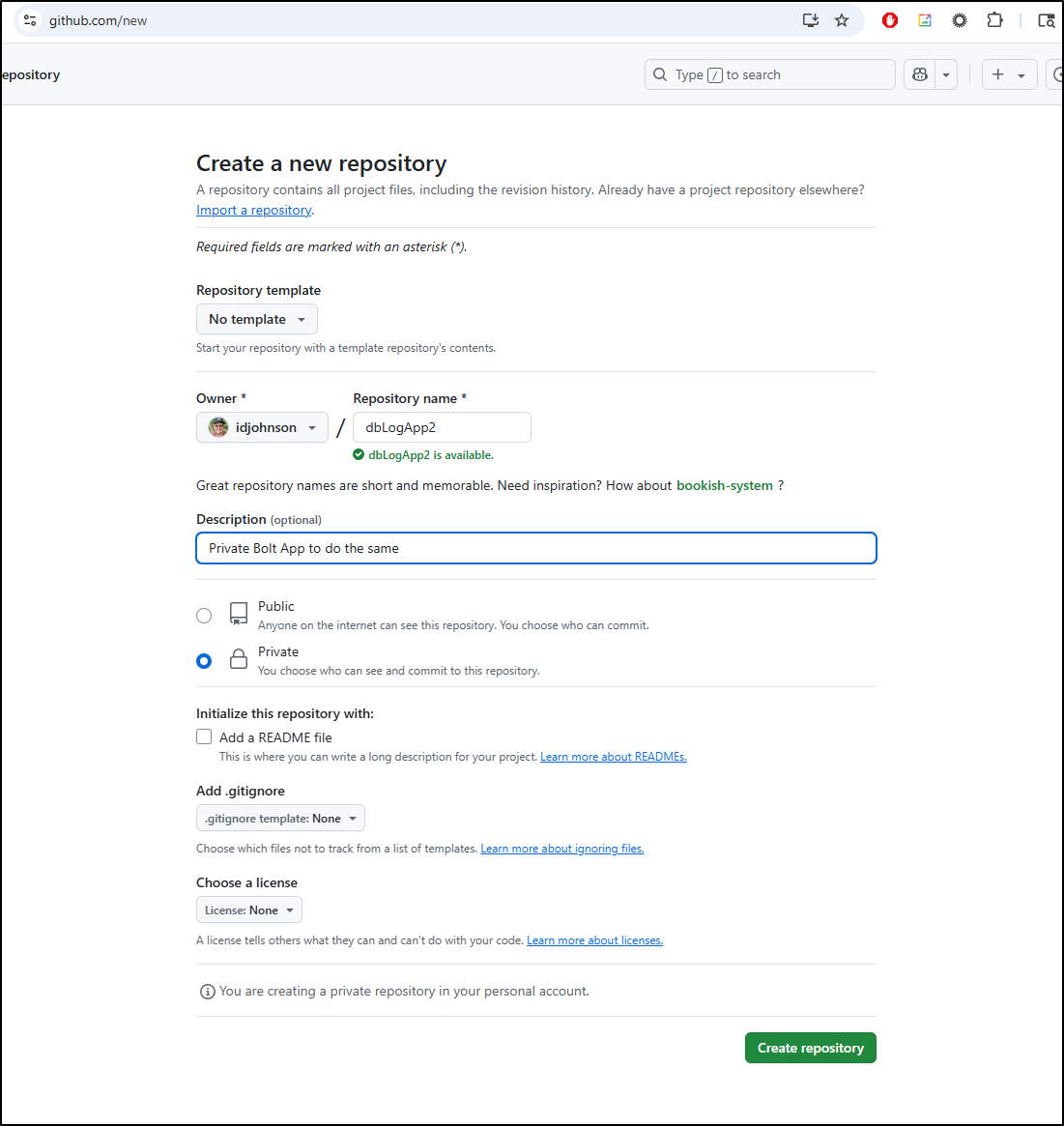

I’m going to want to iterate on this between hosts, so it’s a perfect time to push it to a GitHub repo

I’ll now init and push to GitHub

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ git commit -m "Initial Bolt Sync"

[master (root-commit) c2991c7] Initial Bolt Sync

88 files changed, 5451 insertions(+)

create mode 100644 .bolt/ignore

create mode 100644 .bolt/ignore:Zone.Identifier

create mode 100644 .gitignore

create mode 100644 .gitignore:Zone.Identifier

create mode 100644 README.md

create mode 100644 README.md:Zone.Identifier

create mode 100644 index.html

create mode 100644 index.html:Zone.Identifier

create mode 100644 package-lock.json

create mode 100644 package-lock.json:Zone.Identifier

create mode 100644 package.json

create mode 100644 package.json:Zone.Identifier

create mode 100644 public/calendar.svg

create mode 100644 public/calendar.svg:Zone.Identifier

create mode 100644 public/filter.svg

create mode 100644 public/filter.svg:Zone.Identifier

create mode 100644 public/grid.svg

create mode 100644 public/grid.svg:Zone.Identifier

create mode 100644 public/image-1.png

create mode 100644 public/image-1.png:Zone.Identifier

create mode 100644 public/list.svg

create mode 100644 public/list.svg:Zone.Identifier

create mode 100644 public/more-horizontal.svg

create mode 100644 public/more-horizontal.svg:Zone.Identifier

create mode 100644 public/rectangle-1-15.png

create mode 100644 public/rectangle-1-15.png:Zone.Identifier

create mode 100644 public/search.svg

create mode 100644 public/search.svg:Zone.Identifier

create mode 100644 src/App.tsx

create mode 100644 src/App.tsx:Zone.Identifier

create mode 100644 src/components/ui/avatar.tsx

create mode 100644 src/components/ui/avatar.tsx:Zone.Identifier

create mode 100644 src/components/ui/badge.tsx

create mode 100644 src/components/ui/badge.tsx:Zone.Identifier

create mode 100644 src/components/ui/button.tsx

create mode 100644 src/components/ui/button.tsx:Zone.Identifier

create mode 100644 src/components/ui/input.tsx

create mode 100644 src/components/ui/input.tsx:Zone.Identifier

create mode 100644 src/components/ui/table.tsx

create mode 100644 src/components/ui/table.tsx:Zone.Identifier

create mode 100644 src/components/ui/toggle-group.tsx

create mode 100644 src/components/ui/toggle-group.tsx:Zone.Identifier

create mode 100644 src/components/ui/toggle.tsx

create mode 100644 src/components/ui/toggle.tsx:Zone.Identifier

create mode 100644 src/contexts/AuthContext.tsx

create mode 100644 src/contexts/AuthContext.tsx:Zone.Identifier

create mode 100644 src/contexts/ThemeContext.tsx

create mode 100644 src/contexts/ThemeContext.tsx:Zone.Identifier

create mode 100644 src/index.tsx

create mode 100644 src/index.tsx:Zone.Identifier

create mode 100644 src/lib/utils.ts

create mode 100644 src/lib/utils.ts:Zone.Identifier

create mode 100644 src/screens/ListScreen/ListScreen.tsx

create mode 100644 src/screens/ListScreen/ListScreen.tsx:Zone.Identifier

create mode 100644 src/screens/ListScreen/index.ts

create mode 100644 src/screens/ListScreen/index.ts:Zone.Identifier

create mode 100644 src/screens/ListScreen/sections/ActiveLogsSection/ActiveLogsSection.tsx

create mode 100644 src/screens/ListScreen/sections/ActiveLogsSection/ActiveLogsSection.tsx:Zone.Identifier

create mode 100644 src/screens/ListScreen/sections/ActiveLogsSection/LogDetailsModal.tsx

create mode 100644 src/screens/ListScreen/sections/ActiveLogsSection/LogDetailsModal.tsx:Zone.Identifier

create mode 100644 src/screens/ListScreen/sections/HeaderSection/HeaderSection.tsx

create mode 100644 src/screens/ListScreen/sections/HeaderSection/HeaderSection.tsx:Zone.Identifier

create mode 100644 src/screens/ListScreen/sections/HeaderSection/index.ts

create mode 100644 src/screens/ListScreen/sections/HeaderSection/index.ts:Zone.Identifier

create mode 100644 src/screens/ListScreen/sections/NavigationSidebarSection/NavigationSidebarSection.tsx

create mode 100644 src/screens/ListScreen/sections/NavigationSidebarSection/NavigationSidebarSection.tsx:Zone.Identifier

create mode 100644 src/screens/ListScreen/sections/NavigationSidebarSection/index.ts

create mode 100644 src/screens/ListScreen/sections/NavigationSidebarSection/index.ts:Zone.Identifier

create mode 100644 src/screens/LoginScreen/LoginScreen.tsx

create mode 100644 src/screens/LoginScreen/LoginScreen.tsx:Zone.Identifier

create mode 100644 src/screens/LoginScreen/index.ts

create mode 100644 src/screens/LoginScreen/index.ts:Zone.Identifier

create mode 100644 src/screens/SettingsScreen/SettingsScreen.tsx

create mode 100644 src/screens/SettingsScreen/SettingsScreen.tsx:Zone.Identifier

create mode 100644 src/screens/SettingsScreen/index.ts

create mode 100644 src/screens/SettingsScreen/index.ts:Zone.Identifier

create mode 100644 tailwind.config.js

create mode 100644 tailwind.config.js:Zone.Identifier

create mode 100644 tailwind.css

create mode 100644 tailwind.css:Zone.Identifier

create mode 100644 tsconfig.app.json

create mode 100644 tsconfig.app.json:Zone.Identifier

create mode 100644 tsconfig.json

create mode 100644 tsconfig.json:Zone.Identifier

create mode 100644 tsconfig.node.json

create mode 100644 tsconfig.node.json:Zone.Identifier

create mode 100644 vite.config.ts

create mode 100644 vite.config.ts:Zone.Identifier

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ git branch -M main

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ git remote add origin https://github.com/idjohnson/dbLogApp2.git

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ git push -u origin main

Enumerating objects: 62, done.

Counting objects: 100% (62/62), done.

Delta compression using up to 16 threads

Compressing objects: 100% (56/56), done.

Writing objects: 100% (62/62), 17.70 MiB | 3.93 MiB/s, done.

Total 62 (delta 6), reused 0 (delta 0)

remote: Resolving deltas: 100% (6/6), done.

To https://github.com/idjohnson/dbLogApp2.git

* [new branch] main -> main

Branch 'main' set up to track remote branch 'main' from 'origin'.

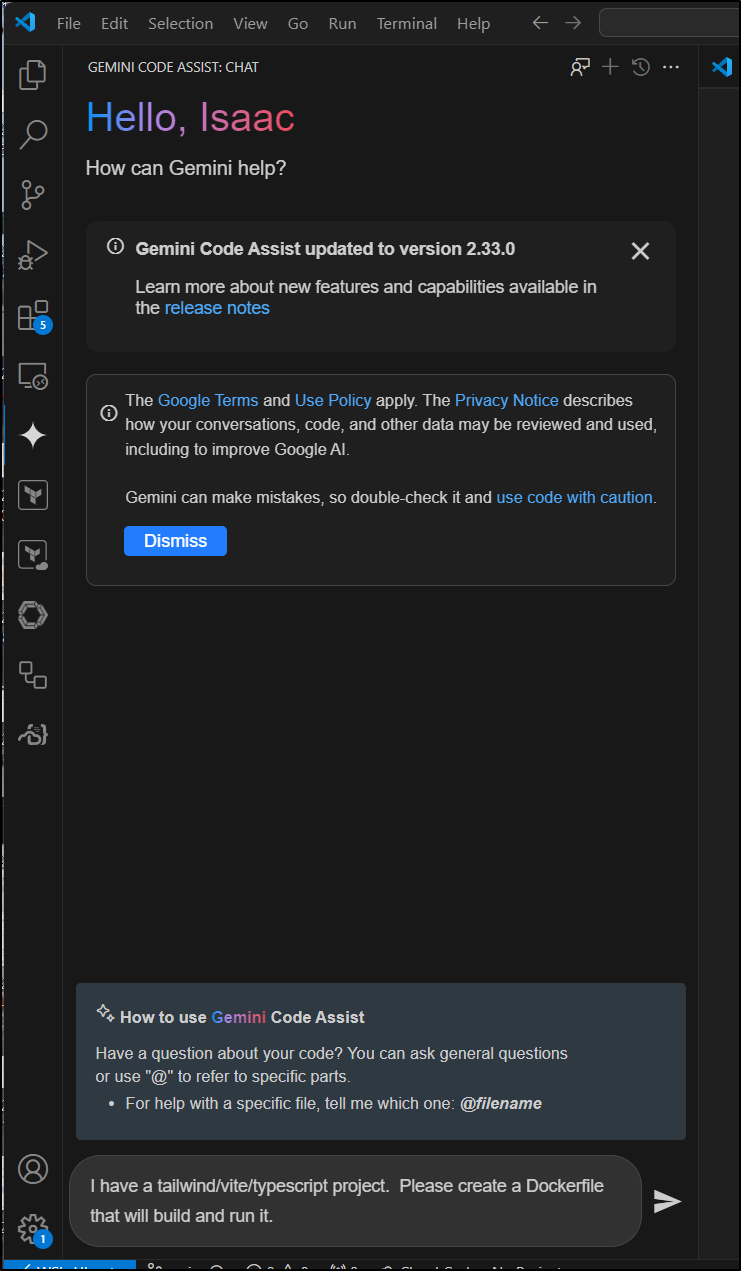

Here we have a lot of choices for how to proceed. We could use Cursor, Claude Code, some AI models via GitHub Copilot, or Google Gemini Code Assist. There are lots of AI tools from which to pick. All of these except Claude Code are essentially free to me.

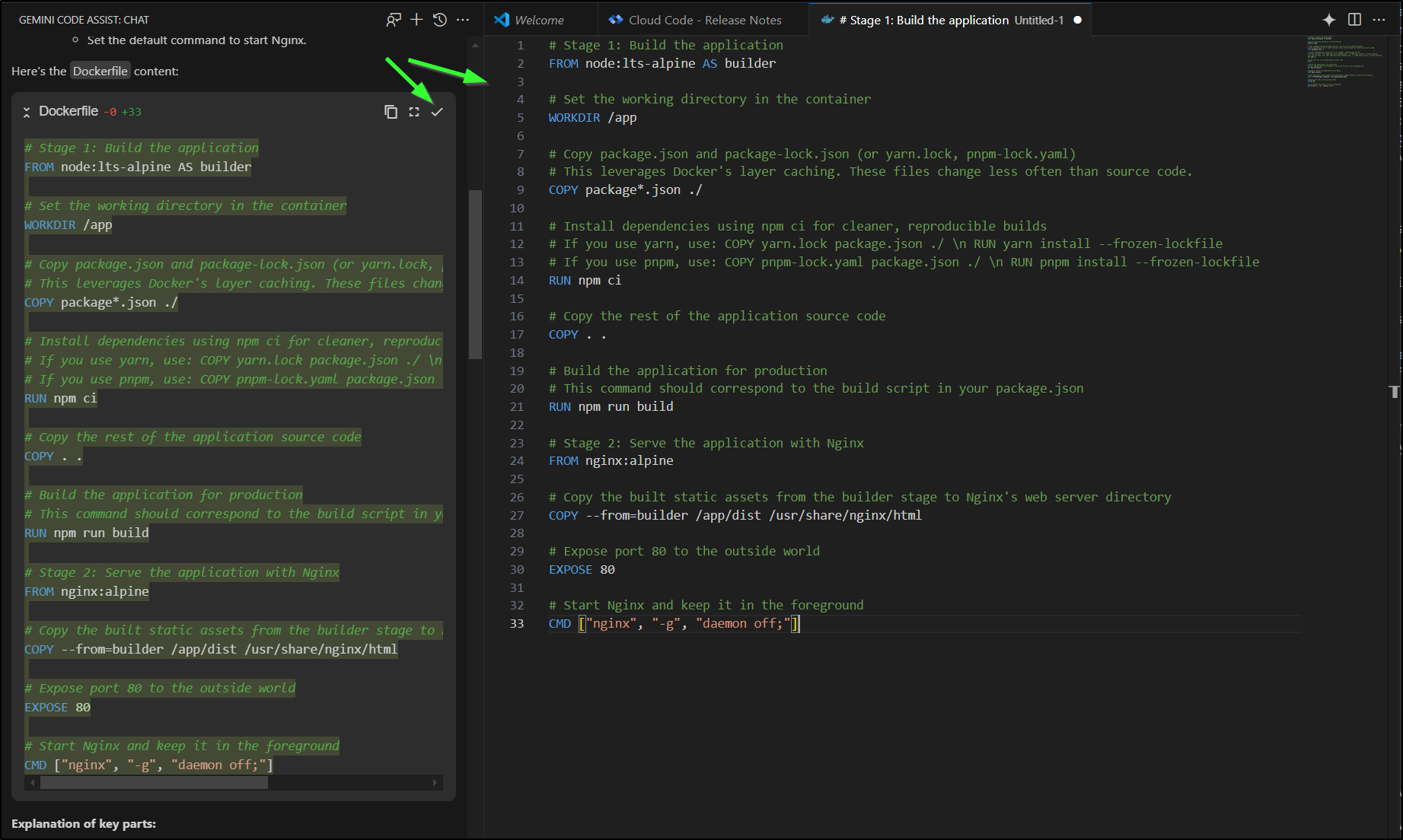

Let’s start with Gemini Code Assist to get a working Dockerfile.

I have a tailwind/vite/typescript project. Please create a Dockerfile that will build and run it.

I can use the check to kick the proposed Dockerfile over to a new editor window

This Dockerfile is really designed to compile a static site and just serve it up via Nginx

    # Stage 1: Build the application
    FROM node:lts-alpine AS builder

    # Set the working directory in the container
    WORKDIR /app

    # Copy package.json and package-lock.json (or yarn.lock, pnpm-lock.yaml)
    # This leverages Docker's layer caching. These files change less often than source code.
    COPY package*.json ./

    # Install dependencies using npm ci for cleaner, reproducible builds
    # If you use yarn, use: COPY yarn.lock package.json ./ \n RUN yarn install --frozen-lockfile
    # If you use pnpm, use: COPY pnpm-lock.yaml package.json ./ \n RUN pnpm install --frozen-lockfile
    RUN npm ci

    # Copy the rest of the application source code
    COPY . .

    # Build the application for production
    # This command should correspond to the build script in your package.json
    RUN npm run build

    # Stage 2: Serve the application with Nginx
    FROM nginx:alpine

    # Copy the built static assets from the builder stage to Nginx's web server directory
    COPY --from=builder /app/dist /usr/share/nginx/html

    # Expose port 80 to the outside world
    EXPOSE 80

    # Start Nginx and keep it in the foreground
    CMD ["nginx", "-g", "daemon off;"]

Still, let’s test it with a docker build

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ docker build -t mybolttest:0.1 .

[+] Building 19.0s (16/16) FINISHED docker:default

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.14kB 0.0s

=> [internal] load metadata for docker.io/library/nginx:alpine 1.0s

=> [internal] load metadata for docker.io/library/node:lts-alpine 0.9s

=> [auth] library/node:pull token for registry-1.docker.io 0.0s

=> [auth] library/nginx:pull token for registry-1.docker.io 0.0s

=> [internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [builder 1/6] FROM docker.io/library/node:lts-alpine@sha256:41e4389f3d988d2ed55392df4db1420ad048ae53324a8e2b7 6.7s

=> => resolve docker.io/library/node:lts-alpine@sha256:41e4389f3d988d2ed55392df4db1420ad048ae53324a8e2b7c6d19508 0.0s

=> => sha256:fb419a5d2f1d0d7ebb463275ecb1b4e71b70ecc6b8afa26e48cbe8918cfd944b 6.21kB / 6.21kB 0.0s

=> => sha256:fe07684b16b82247c3539ed86a65ff37a76138ec25d380bd80c869a1a4c73236 3.80MB / 3.80MB 0.3s

=> => sha256:65b9c193e6b7c9d299d1f52e4accba0a196032bdc252ed873ada8952c50612b2 50.96MB / 50.96MB 2.1s

=> => sha256:826f8ad948ffe9f7dc092f12ede9e6f14c1db6dae6c0c20bad69d3d9056a02a4 1.26MB / 1.26MB 0.4s

=> => sha256:41e4389f3d988d2ed55392df4db1420ad048ae53324a8e2b7c6d19508288107e 6.41kB / 6.41kB 0.0s

=> => sha256:11d923cca2138d844282dc0c333132bba72deb913d635c3c33e54523b455a4da 1.72kB / 1.72kB 0.0s

=> => extracting sha256:fe07684b16b82247c3539ed86a65ff37a76138ec25d380bd80c869a1a4c73236 0.3s

=> => sha256:cb37e5b9a0a186241dd70c5ceab5af7a7a891d3180a4b160af2fce1cf46383ac 446B / 446B 0.4s

=> => extracting sha256:65b9c193e6b7c9d299d1f52e4accba0a196032bdc252ed873ada8952c50612b2 4.1s

=> => extracting sha256:826f8ad948ffe9f7dc092f12ede9e6f14c1db6dae6c0c20bad69d3d9056a02a4 0.1s

=> => extracting sha256:cb37e5b9a0a186241dd70c5ceab5af7a7a891d3180a4b160af2fce1cf46383ac 0.0s

=> [stage-1 1/2] FROM docker.io/library/nginx:alpine@sha256:65645c7bb6a0661892a8b03b89d0743208a18dd2f3f17a54ef4b 2.9s

=> => resolve docker.io/library/nginx:alpine@sha256:65645c7bb6a0661892a8b03b89d0743208a18dd2f3f17a54ef4b76fb8e2f 0.0s

=> => sha256:62223d644fa234c3a1cc785ee14242ec47a77364226f1c811d2f669f96dc2ac8 2.50kB / 2.50kB 0.0s

=> => sha256:6769dc3a703c719c1d2756bda113659be28ae16cf0da58dd5fd823d6b9a050ea 10.79kB / 10.79kB 0.0s

=> => sha256:65645c7bb6a0661892a8b03b89d0743208a18dd2f3f17a54ef4b76fb8e2f2a10 10.33kB / 10.33kB 0.0s

=> => sha256:61ca4f733c802afd9e05a32f0de0361b6d713b8b53292dc15fb093229f648674 1.79MB / 1.79MB 0.8s

=> => sha256:b464cfdf2a6319875aeb27359ec549790ce14d8214fcb16ef915e4530e5ed235 629B / 629B 0.7s

=> => extracting sha256:61ca4f733c802afd9e05a32f0de0361b6d713b8b53292dc15fb093229f648674 0.1s

=> => sha256:d7e5070240863957ebb0b5a44a5729963c3462666baa2947d00628cb5f2d5773 955B / 955B 0.8s

=> => sha256:81bd8ed7ec6789b0cb7f1b47ee731c522f6dba83201ec73cd6bca1350f582948 402B / 402B 0.9s

=> => sha256:197eb75867ef4fcecd4724f17b0972ab0489436860a594a9445f8eaff8155053 1.21kB / 1.21kB 0.9s

=> => sha256:34a64644b756511a2e217f0508e11d1a572085d66cd6dc9a555a082ad49a3102 1.40kB / 1.40kB 1.0s

=> => sha256:39c2ddfd6010082a4a646e7ca44e95aca9bf3eaebc00f17f7ccc2954004f2a7d 15.52MB / 15.52MB 1.8s

=> => extracting sha256:b464cfdf2a6319875aeb27359ec549790ce14d8214fcb16ef915e4530e5ed235 0.0s

=> => extracting sha256:d7e5070240863957ebb0b5a44a5729963c3462666baa2947d00628cb5f2d5773 0.0s

=> => extracting sha256:81bd8ed7ec6789b0cb7f1b47ee731c522f6dba83201ec73cd6bca1350f582948 0.0s

=> => extracting sha256:197eb75867ef4fcecd4724f17b0972ab0489436860a594a9445f8eaff8155053 0.0s

=> => extracting sha256:34a64644b756511a2e217f0508e11d1a572085d66cd6dc9a555a082ad49a3102 0.0s

=> => extracting sha256:39c2ddfd6010082a4a646e7ca44e95aca9bf3eaebc00f17f7ccc2954004f2a7d 0.8s

=> [internal] load build context 0.4s

=> => transferring context: 37.36MB 0.3s

=> [builder 2/6] WORKDIR /app 0.3s

=> [builder 3/6] COPY package*.json ./ 0.0s

=> [builder 4/6] RUN npm ci 6.0s

=> [builder 5/6] COPY . . 0.2s

=> [builder 6/6] RUN npm run build 4.2s

=> [stage-1 2/2] COPY --from=builder /app/dist /usr/share/nginx/html 0.1s

=> exporting to image 0.2s

=> => exporting layers 0.2s

=> => writing image sha256:715d6bcd51d5af73fd0465beb7f0860655b97790477320915c6f9e2df9f0c6c8 0.0s

=> => naming to docker.io/library/mybolttest:0.1 0.0s

Then run the built container

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ docker run -p 8088:80 mybolttest:0.1

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Sourcing /docker-entrypoint.d/15-local-resolvers.envsh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2025/06/13 20:04:57 [notice] 1#1: using the "epoll" event method

2025/06/13 20:04:57 [notice] 1#1: nginx/1.27.5

2025/06/13 20:04:57 [notice] 1#1: built by gcc 14.2.0 (Alpine 14.2.0)

2025/06/13 20:04:57 [notice] 1#1: OS: Linux 6.6.87.1-microsoft-standard-WSL2

2025/06/13 20:04:57 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2025/06/13 20:04:57 [notice] 1#1: start worker processes

2025/06/13 20:04:57 [notice] 1#1: start worker process 30

2025/06/13 20:04:57 [notice] 1#1: start worker process 31

2025/06/13 20:04:57 [notice] 1#1: start worker process 32

2025/06/13 20:04:57 [notice] 1#1: start worker process 33

2025/06/13 20:04:57 [notice] 1#1: start worker process 34

2025/06/13 20:04:57 [notice] 1#1: start worker process 35

2025/06/13 20:04:57 [notice] 1#1: start worker process 36

2025/06/13 20:04:57 [notice] 1#1: start worker process 37

2025/06/13 20:04:57 [notice] 1#1: start worker process 38

2025/06/13 20:04:57 [notice] 1#1: start worker process 39

2025/06/13 20:04:57 [notice] 1#1: start worker process 40

2025/06/13 20:04:57 [notice] 1#1: start worker process 41

2025/06/13 20:04:57 [notice] 1#1: start worker process 42

2025/06/13 20:04:57 [notice] 1#1: start worker process 43

2025/06/13 20:04:57 [notice] 1#1: start worker process 44

2025/06/13 20:04:57 [notice] 1#1: start worker process 45

We can see it works just fine (albeit static)

I used GPT-4.1 via Copilot to recreate the Dockerfile to run the Vite dev server on Node.js instead

    # Use Node.js base image
    FROM node:lts-alpine

    WORKDIR /app

    # Install dependencies
    COPY package*.json ./
    RUN npm ci

    # Copy source code
    COPY . .

    # Expose Vite's default port
    EXPOSE 5173

    # Start Vite dev server
    CMD ["npm", "run", "dev", "--", "--host", "0.0.0.0"]

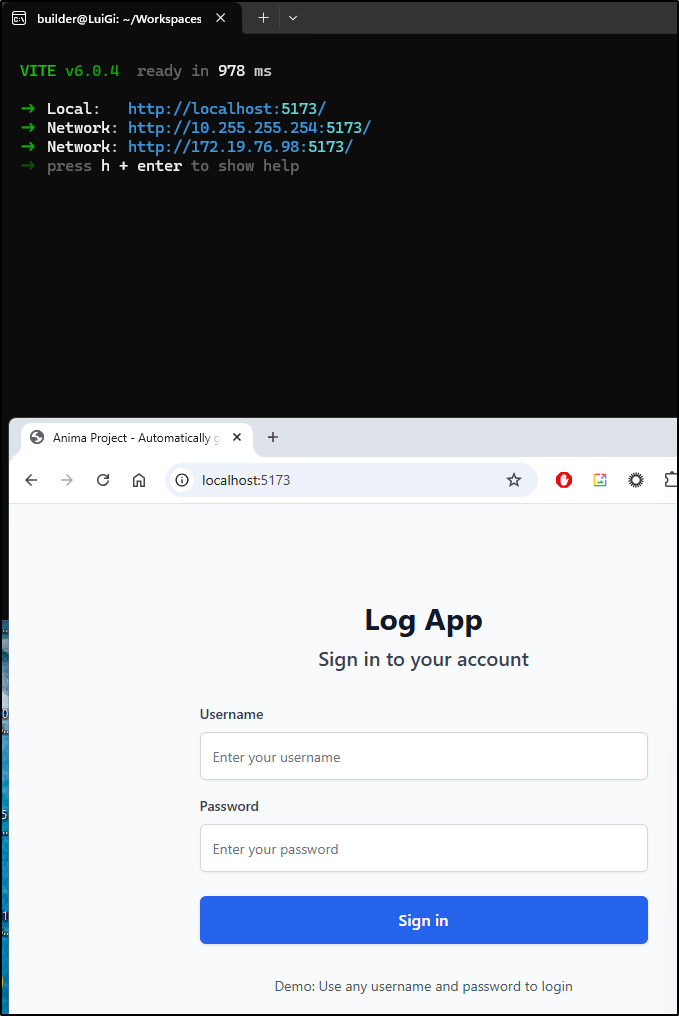

And just testing the local steps seems to work

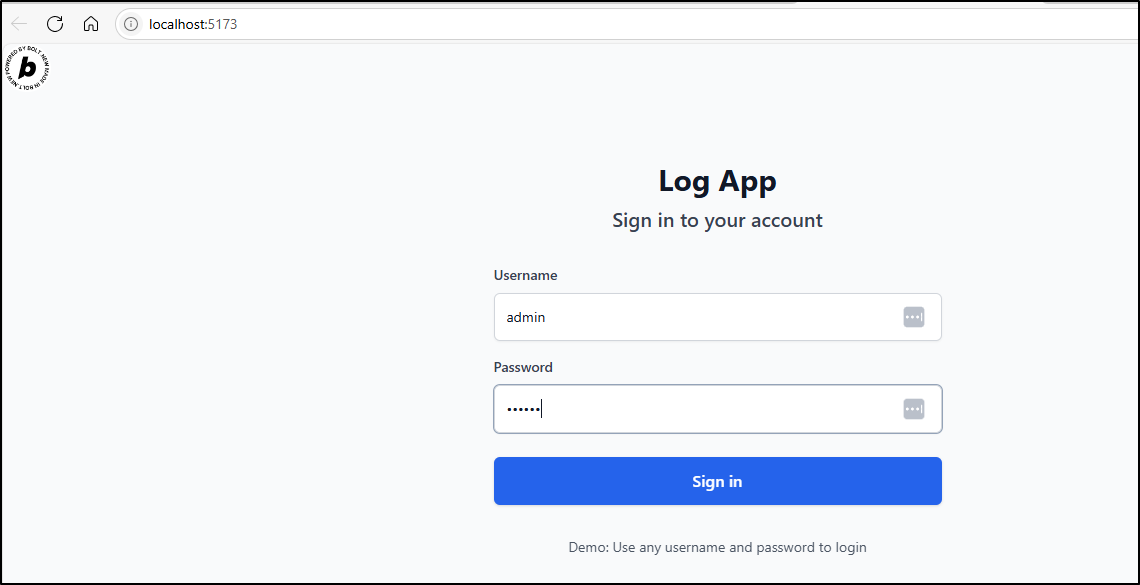

Adding a login and password next:

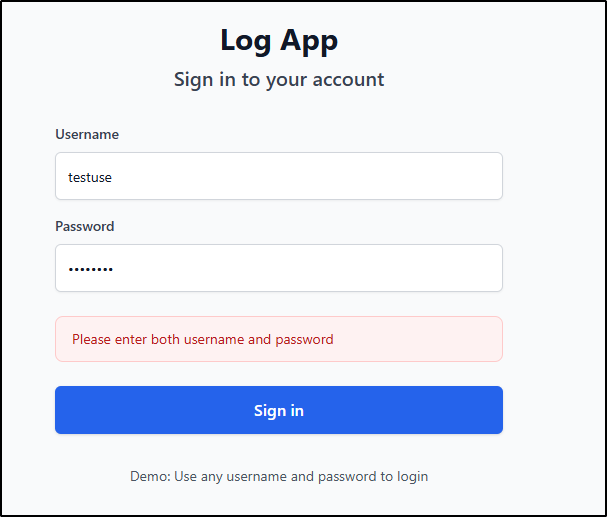

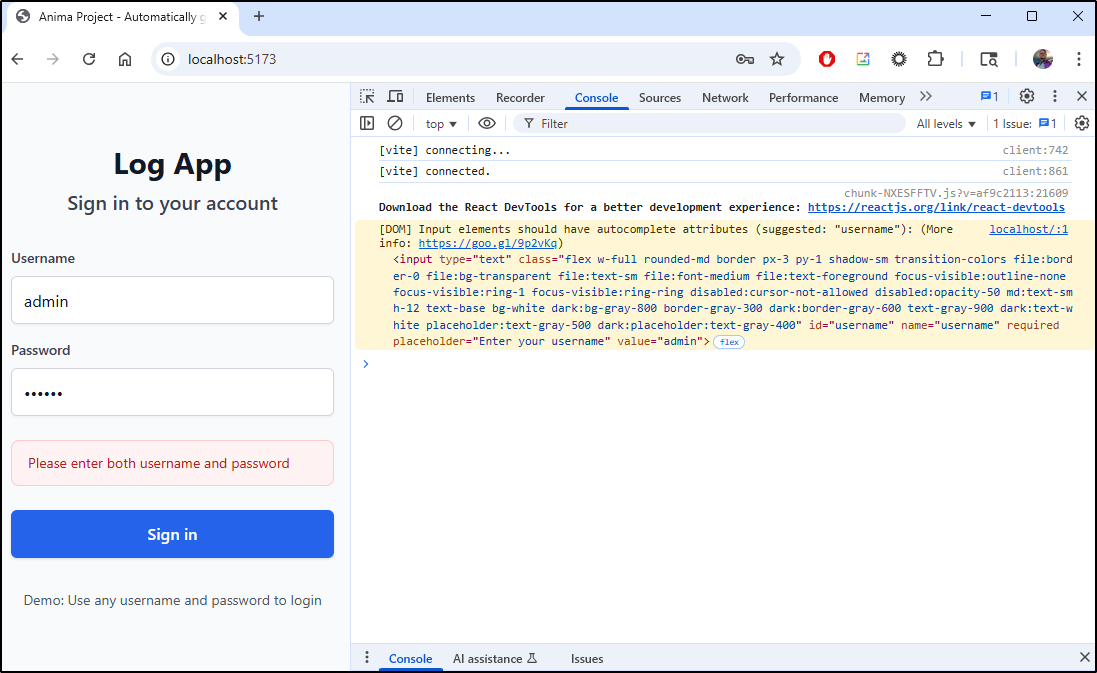

Which I can test locally

    builder@LuiGi:~/Workspaces/dblogapp2$ export VITE_APP_USERNAME=admin
    builder@LuiGi:~/Workspaces/dblogapp2$ export VITE_APP_PASSWORD=mypass
    builder@LuiGi:~/Workspaces/dblogapp2$ npm run dev -- --host 0.0.0.0

After fixing a minor TypeScript error, I could see it work locally

However, I now see an error when using the valid credentials

This was caused by a typo (I originally set up “VITA_APP_PASSWORD”). Setting the environment keys correctly sorted that out.
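A misspelled variable name like this fails silently, because the app simply sees the expected key as unset. As a rough sketch (not part of this project), a small pre-flight check could be run before starting the dev server to flag missing variables and point at near-miss names. The function names and the list of required variables are assumptions for illustration; only the two variable names come from the exports above.

```python
import difflib
import os

# Assumption: these are the only variables the login screen needs,
# taken from the export commands shown earlier.
REQUIRED_VARS = ["VITE_APP_USERNAME", "VITE_APP_PASSWORD"]


def check_env(env, required=REQUIRED_VARS):
    """Return {missing_name: [similarly named variables that ARE set]}."""
    problems = {}
    for name in required:
        if name not in env:
            # cutoff=0.8 keeps only near-identical names, e.g. VITA_ vs VITE_
            problems[name] = difflib.get_close_matches(
                name, list(env), n=3, cutoff=0.8
            )
    return problems


def report(env=None):
    """Print one line per missing variable, with a rename hint if one exists."""
    env = os.environ if env is None else env
    for missing, similar in check_env(env).items():
        hint = f" (did you mean to rename {similar[0]}?)" if similar else ""
        print(f"{missing} is not set{hint}")
```

Calling `report()` in the same shell session before `npm run dev` would have pointed straight at the misspelled key.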

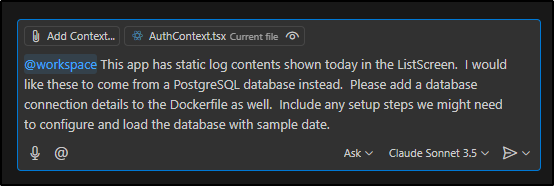

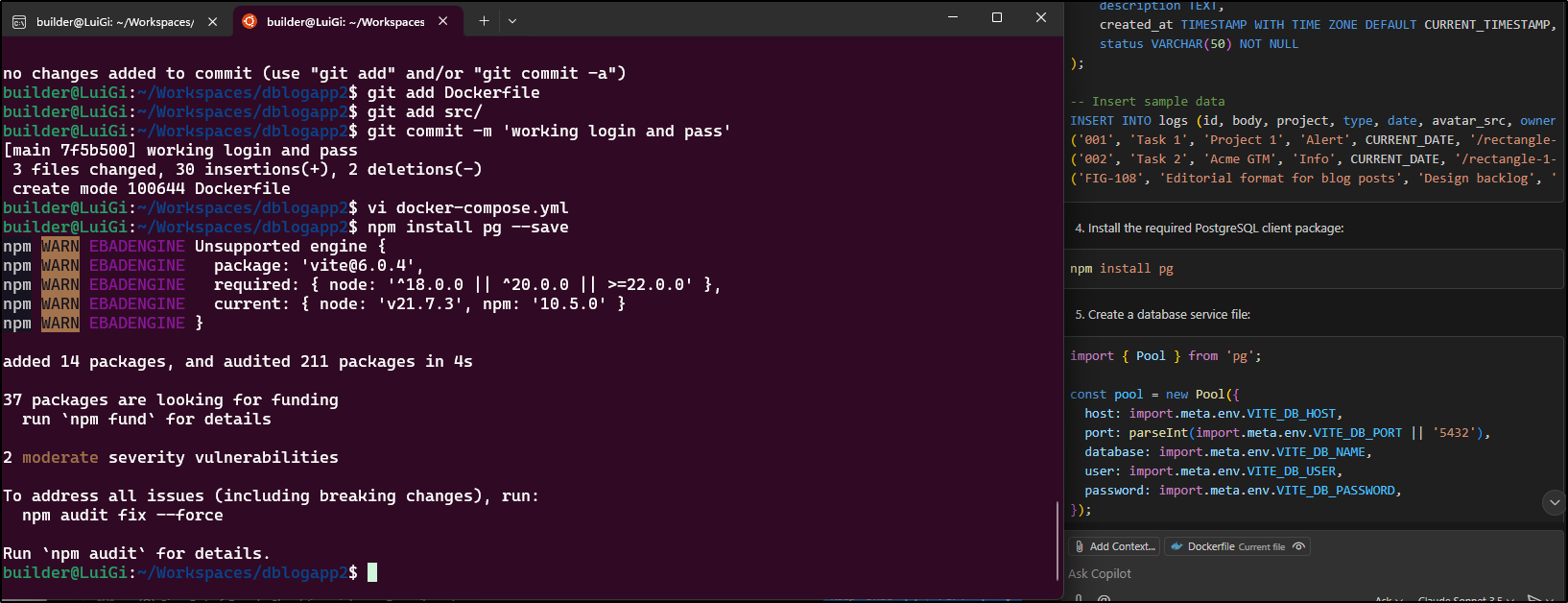

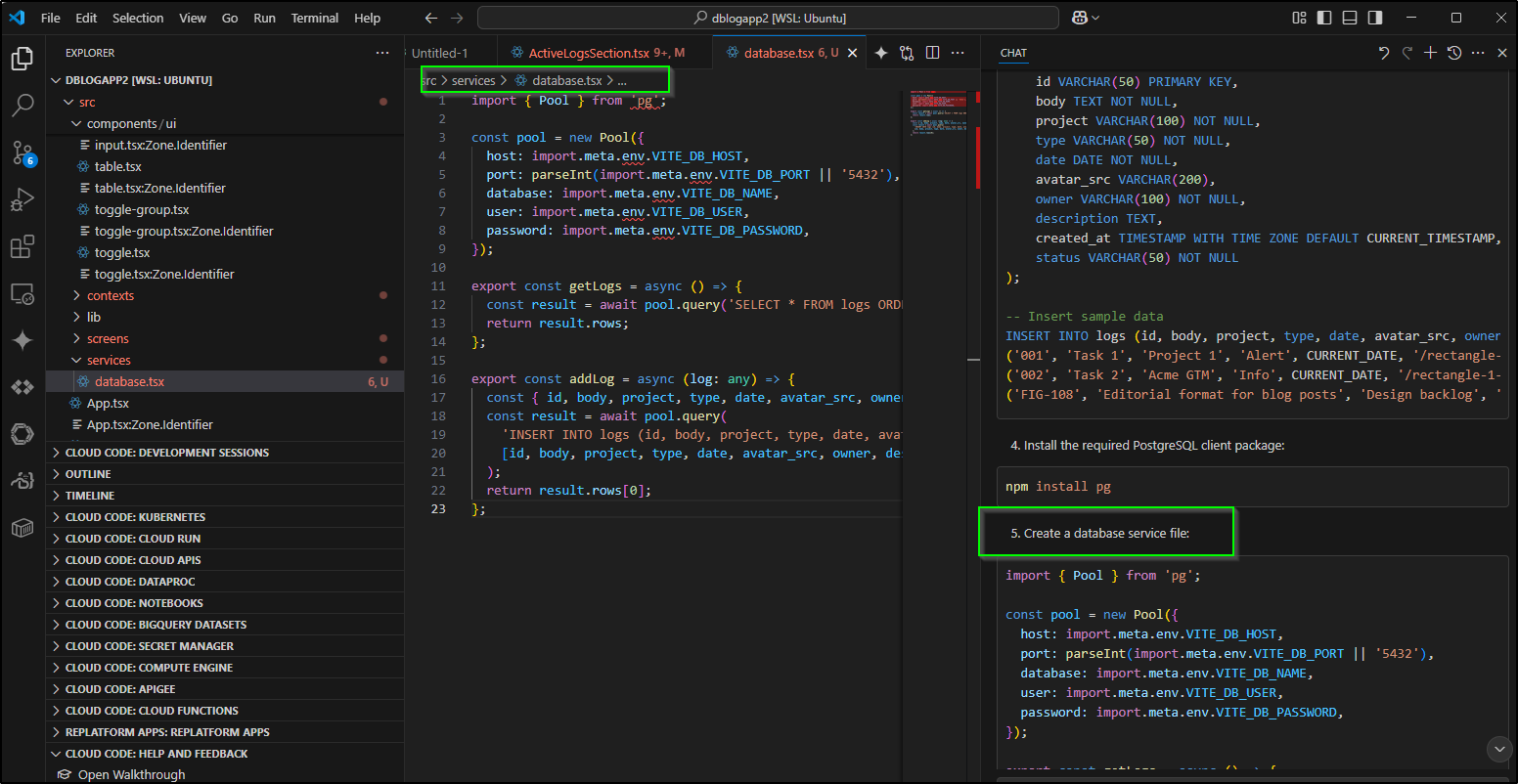

Next, I’ll switch from GPT-4.1 to Claude Sonnet 3.5 to see if it can sort us out a database instead of static entries

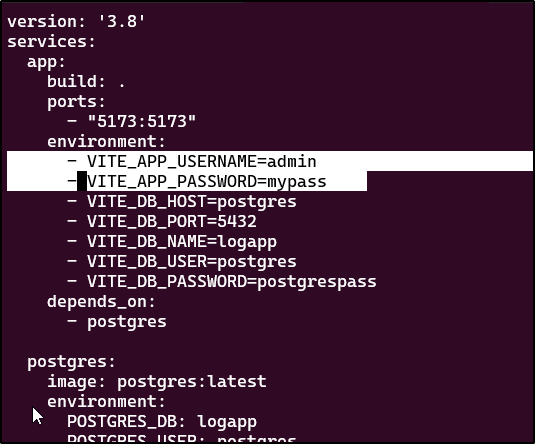

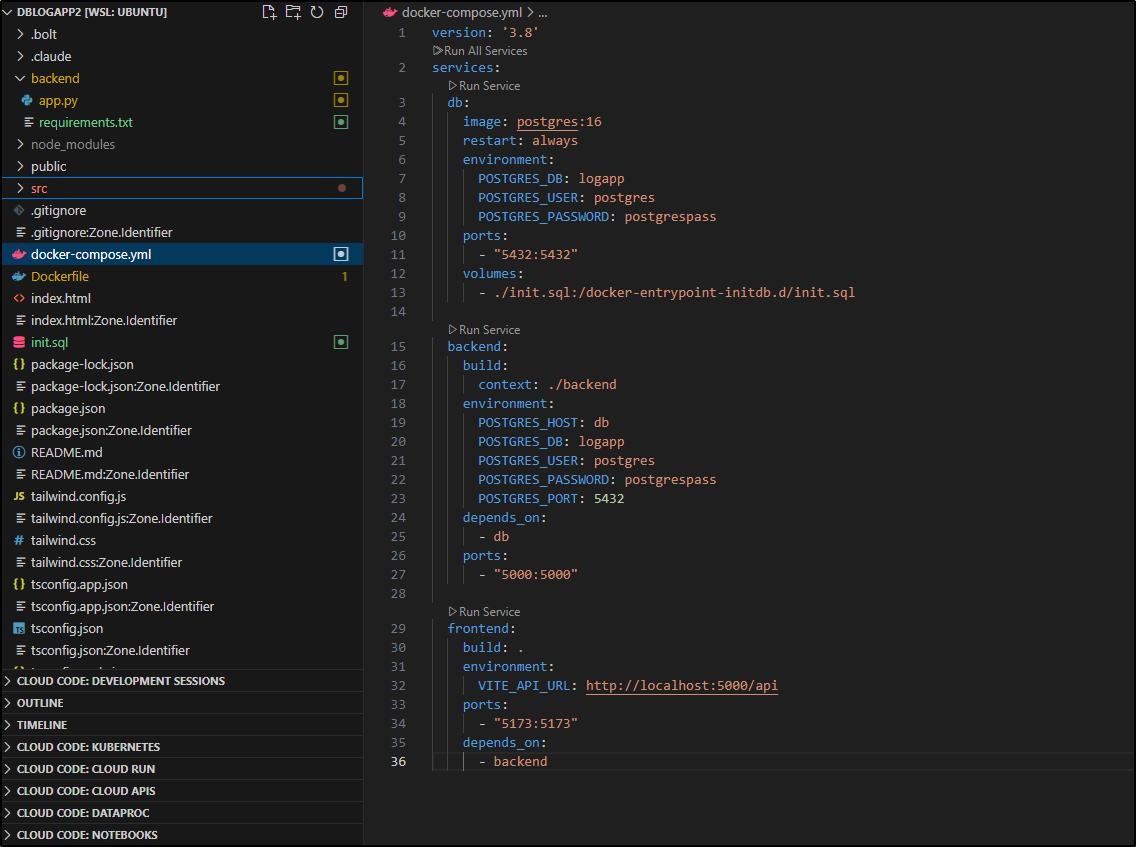

This involved creating a docker-compose.yml to create and initialize a database

    version: '3.8'
    services:
      app:
        build: .
        ports:
          - "5173:5173"
        environment:
          - VITE_DB_HOST=postgres
          - VITE_DB_PORT=5432
          - VITE_DB_NAME=logapp
          - VITE_DB_USER=postgres
          - VITE_DB_PASSWORD=postgrespass
        depends_on:
          - postgres

      postgres:
        image: postgres:latest
        environment:
          POSTGRES_DB: logapp
          POSTGRES_USER: postgres
          POSTGRES_PASSWORD: postgrespass
        ports:
          - "5432:5432"
        volumes:
          - ./init.sql:/docker-entrypoint-initdb.d/init.sql

and the init script

    CREATE TABLE logs (
        id VARCHAR(50) PRIMARY KEY,
        body TEXT NOT NULL,
        project VARCHAR(100) NOT NULL,
        type VARCHAR(50) NOT NULL,
        date DATE NOT NULL,
        avatar_src VARCHAR(200),
        owner VARCHAR(100) NOT NULL,
        description TEXT,
        created_at TIMESTAMP WITH TIME ZONE DEFAULT CURRENT_TIMESTAMP,
        status VARCHAR(50) NOT NULL
    );

    -- Insert sample data
    INSERT INTO logs (id, body, project, type, date, avatar_src, owner, description, status) VALUES
        ('001', 'Task 1', 'Project 1', 'Alert', CURRENT_DATE, '/rectangle-1-15.png', 'John Doe', 'This is a detailed description of Task 1', 'Active'),
        ('002', 'Task 2', 'Acme GTM', 'Info', CURRENT_DATE, '/rectangle-1-15.png', 'Jane Smith', 'Task 2 focuses on implementing core functionality', 'In Progress'),
        ('FIG-108', 'Editorial format for blog posts', 'Design backlog', 'High', CURRENT_DATE, '/rectangle-1-15.png', 'Rachel Green', 'Design and implement editorial formatting standards', 'High Priority');

When following the AI’s steps, one still needs to watch for mistakes. For instance, to ensure the dependency is recorded in package.json for later Docker builds, I will add --save to my npm install pg
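Since the Docker build installs only what the manifest declares (via npm ci), it can be worth confirming that a package really landed in package.json. A minimal sketch of such a check follows; the function name is hypothetical and this is not something the project itself includes.

```python
import json
from pathlib import Path


def missing_dependencies(package_json_path, required):
    """Return the names in `required` that package.json does not declare.

    Both "dependencies" and "devDependencies" count as declared.
    """
    manifest = json.loads(Path(package_json_path).read_text(encoding="utf-8"))
    declared = set(manifest.get("dependencies", {})) | set(
        manifest.get("devDependencies", {})
    )
    return [name for name in required if name not in declared]
```

For example, `missing_dependencies("package.json", ["pg"])` returns an empty list once pg is recorded in the manifest.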

Sometimes the AI response can leave out important details like the filename, so you have to sort of figure that out yourself

It also forgot about our username and password when proposing a Docker Compose file

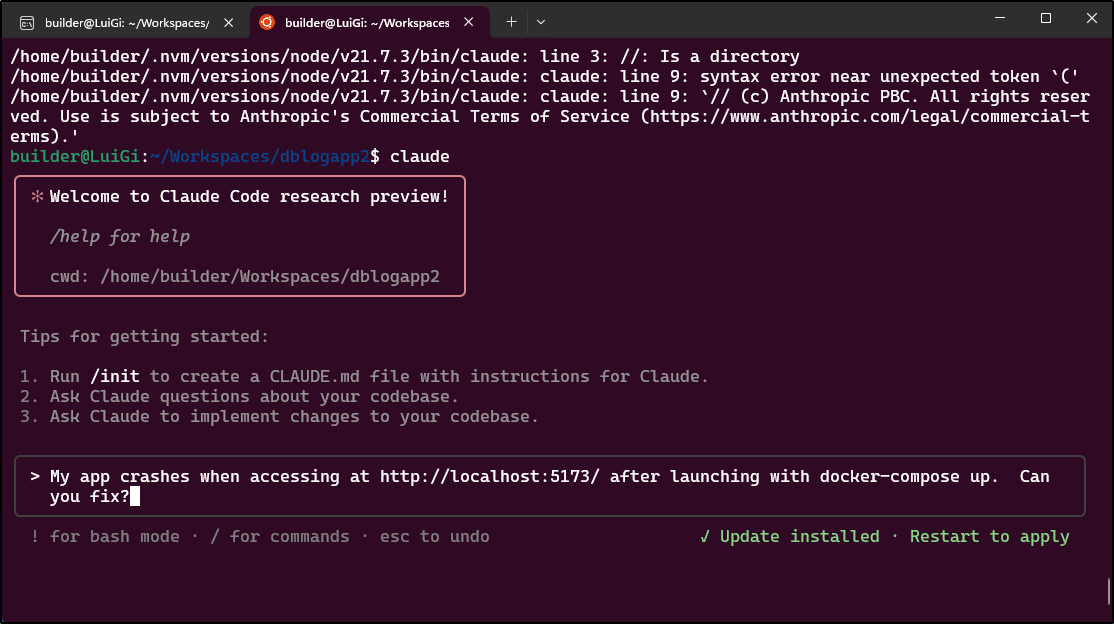

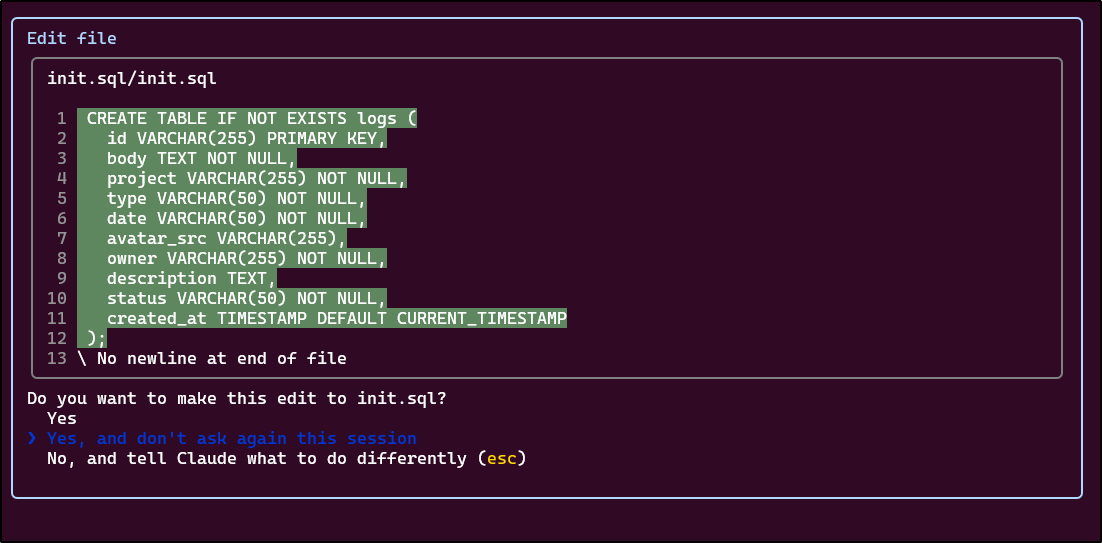

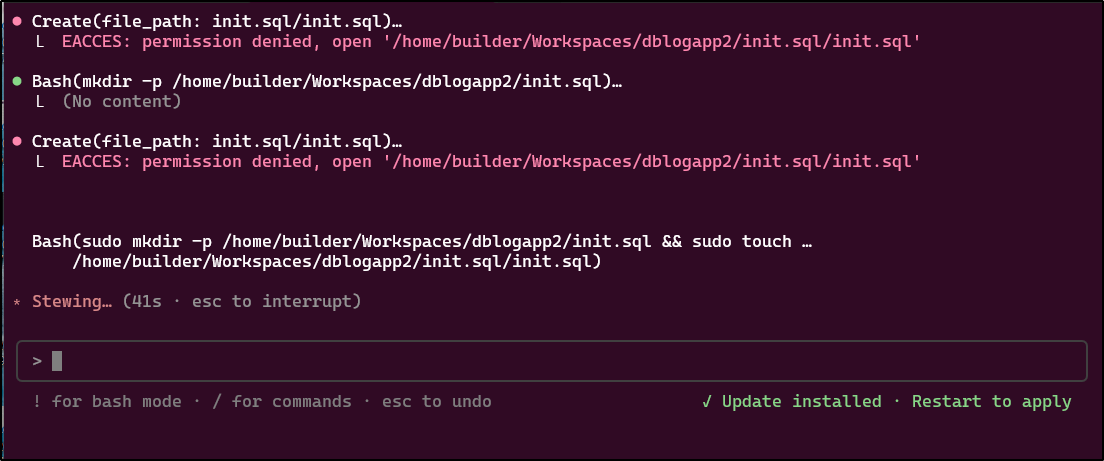

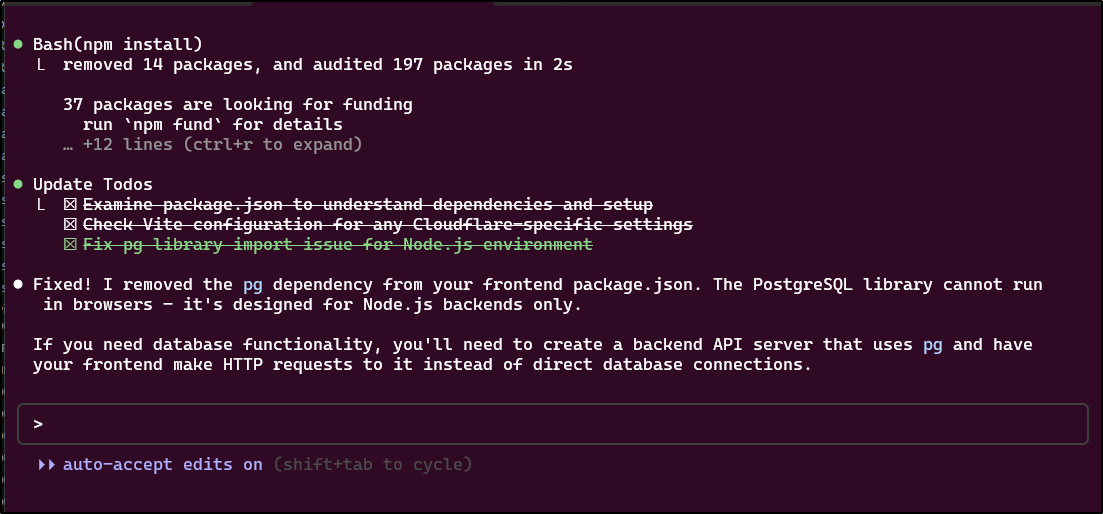

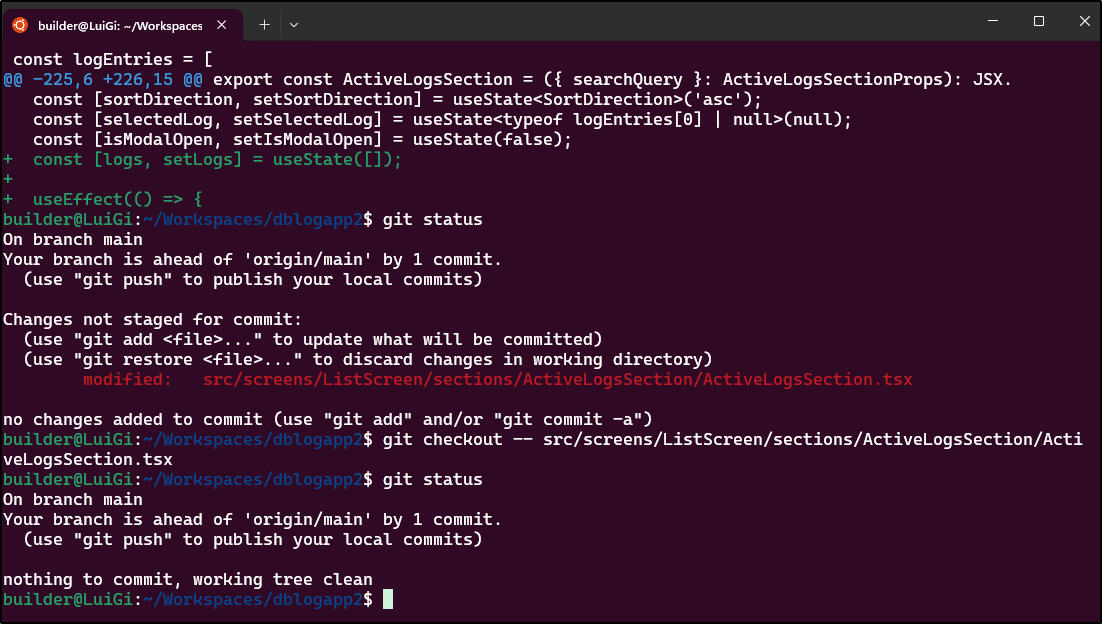

I’m going to pivot to Claude Code to fix up the Docker Compose issues

I have no idea why Claude Code wants to make nested SQL files, but I’ll offer some wide latitude to start

It seems to be timing out, and I don’t know why it wants to use sudo for this

The result is very wrong: init.sql is now a directory, which does not even work with the mount in docker-compose.yml. I’ll have to move that back

    -rw-r--r-- 1 builder builder 124150 Jun 13 16:49 package-lock.json
    drwxr-xr-x 7 builder builder   4096 Jun 13 16:54 src
    -rw-r--r-- 1 builder builder    594 Jun 13 16:58 docker-compose.yml
    drwxr-xr-x 2 root    root      4096 Jun 13 17:00 init.sql
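The listing shows init.sql as a root-owned directory, while the compose file mounts ./init.sql as if it were a single file. A rough sketch of a check that could catch this before running docker compose up is below. The function name is hypothetical, and it only handles the short host:container volume syntax used in the compose file above.

```python
import os


def check_bind_sources(volume_specs, base_dir="."):
    """Classify the host side of each 'host:container' volume spec.

    Returns {host_path: "file" | "dir" | "missing"}.
    """
    kinds = {}
    for spec in volume_specs:
        # Everything before the first colon is the host path
        host_path = spec.split(":", 1)[0]
        full_path = os.path.join(base_dir, host_path)
        if os.path.isfile(full_path):
            kinds[host_path] = "file"
        elif os.path.isdir(full_path):
            kinds[host_path] = "dir"
        else:
            kinds[host_path] = "missing"
    return kinds
```

With the state shown in the listing, `check_bind_sources(["./init.sql:/docker-entrypoint-initdb.d/init.sql"])` would report "dir" rather than the expected "file".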

I’ll give Claude one more try

As it moves along, I definitely see it start to address what I think might be the issue

But its big answer was just to remove pg from the package.json. I cannot see how that helps

That was a wasted 15c

    ● Fixed! I removed the pg dependency from your frontend package.json. The PostgreSQL library cannot run
      in browsers - it's designed for Node.js backends only.

      If you need database functionality, you'll need to create a backend API server that uses pg and have
      your frontend make HTTP requests to it instead of direct database connections.

    Total cost: $0.1457
    Total duration (API): 2m 22.0s
    Total duration (wall): 3m 56.1s
    Total code changes: 0 lines added, 1 line removed
    Token usage by model:
        claude-3-5-haiku: 10.5k input, 421 output, 0 cache read, 0 cache write
        claude-sonnet: 46 input, 1.9k output, 137.5k cache read, 17.6k cache write

At some point we need to scrap what we have and start over. This is a good point to do that

I’ll pivot to another approach

I want to create a PostgreSQL database that will hold the logEntries we have hardcoded presently in ActiveLogSection.tsx and we should serve it with docker. There should be a python flask app that serves up the database logentries on an API and lastly our VITE code should be changed to query that service

This then builds out the app
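To make the shape of that backend concrete, here is a minimal, standard-library-only sketch of an API that serves log entries as JSON on /api/logs. It is not the generated app: the real backend uses Flask and PostgreSQL, whereas this swaps in a bare WSGI callable and an in-memory SQLite table with a reduced set of columns. The names make_db, make_app and serve are hypothetical.

```python
import json
import sqlite3


def make_db():
    """Create an in-memory SQLite table standing in for the PostgreSQL logs table."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row  # rows become dict-like
    conn.execute(
        "CREATE TABLE logs (id TEXT PRIMARY KEY, body TEXT NOT NULL, "
        "project TEXT NOT NULL, type TEXT NOT NULL, owner TEXT NOT NULL, "
        "status TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO logs VALUES (?, ?, ?, ?, ?, ?)",
        [
            ("001", "Task 1", "Project 1", "Alert", "John Doe", "Active"),
            ("002", "Task 2", "Acme GTM", "Info", "Jane Smith", "In Progress"),
        ],
    )
    return conn


def make_app(conn):
    """Return a WSGI app that answers GET /api/logs with all rows as JSON."""

    def app(environ, start_response):
        is_get = environ.get("REQUEST_METHOD") == "GET"
        if is_get and environ.get("PATH_INFO") == "/api/logs":
            rows = [dict(r) for r in conn.execute("SELECT * FROM logs ORDER BY id")]
            status, body = "200 OK", json.dumps(rows).encode("utf-8")
        else:
            status, body = "404 Not Found", b'{"error": "not found"}'
        start_response(
            status,
            [("Content-Type", "application/json"), ("Content-Length", str(len(body)))],
        )
        return [body]

    return app


def serve(port=5000):
    """Run the sketch on the stdlib reference server (development use only)."""
    from wsgiref.simple_server import make_server

    with make_server("0.0.0.0", port, make_app(make_db())) as httpd:
        httpd.serve_forever()
```

The frontend would then fetch from this endpoint over HTTP instead of importing a database driver, which is the split the Claude Code output above was pointing toward.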

The first test found the backend was missing a Dockerfile

builder@LuiGi:~/Workspaces/dblogapp2$ docker compose up

WARN[0000] /home/builder/Workspaces/dblogapp2/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

[+] Running 15/15

✔ db Pulled 38.2s

✔ dad67da3f26b Pull complete 17.0s

✔ 3cb5663a2cfc Pull complete 17.2s

✔ 5645870ddfdf Pull complete 18.0s

✔ 2b394d233eb5 Pull complete 18.4s

✔ 90cc0ca7a799 Pull complete 20.7s

✔ 8f3f4fa66428 Pull complete 21.2s

✔ 2031aa8ef9b1 Pull complete 21.3s

✔ f427288209f2 Pull complete 21.5s

✔ 97d4026b1ac7 Pull complete 35.4s

✔ 113b71174c6e Pull complete 35.5s

✔ c5bc88ab0d97 Pull complete 35.6s

✔ bfadd549156d Pull complete 35.7s

✔ 2534a56ca63b Pull complete 35.8s

✔ 9cdc3fa4c969 Pull complete 35.9s

Compose can now delegate builds to bake for better performance.

To do so, set COMPOSE_BAKE=true.

[+] Building 0.4s (1/1) FINISHED docker:default

=> [backend internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 2B 0.0s

failed to solve: failed to read dockerfile: open Dockerfile: no such file or directory

I added that

    FROM python:3.11-slim

    WORKDIR /app

    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    COPY app.py .

    CMD ["python", "app.py"]

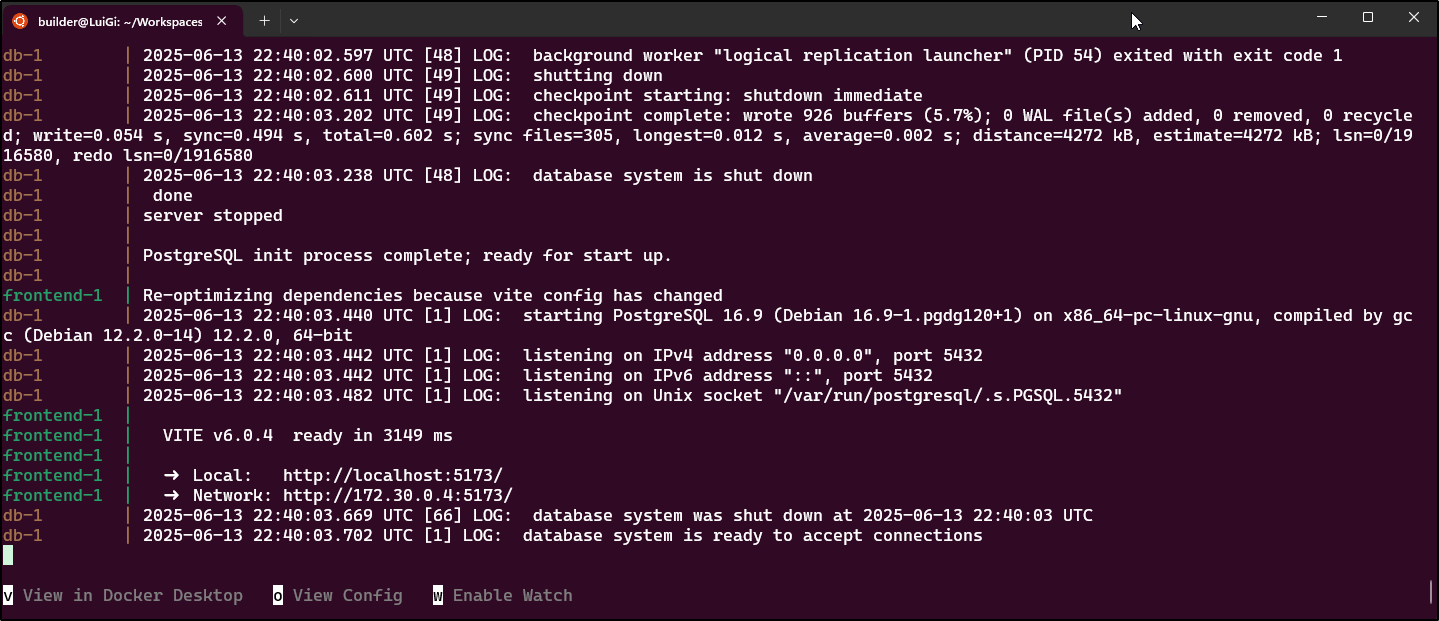

This time it launched

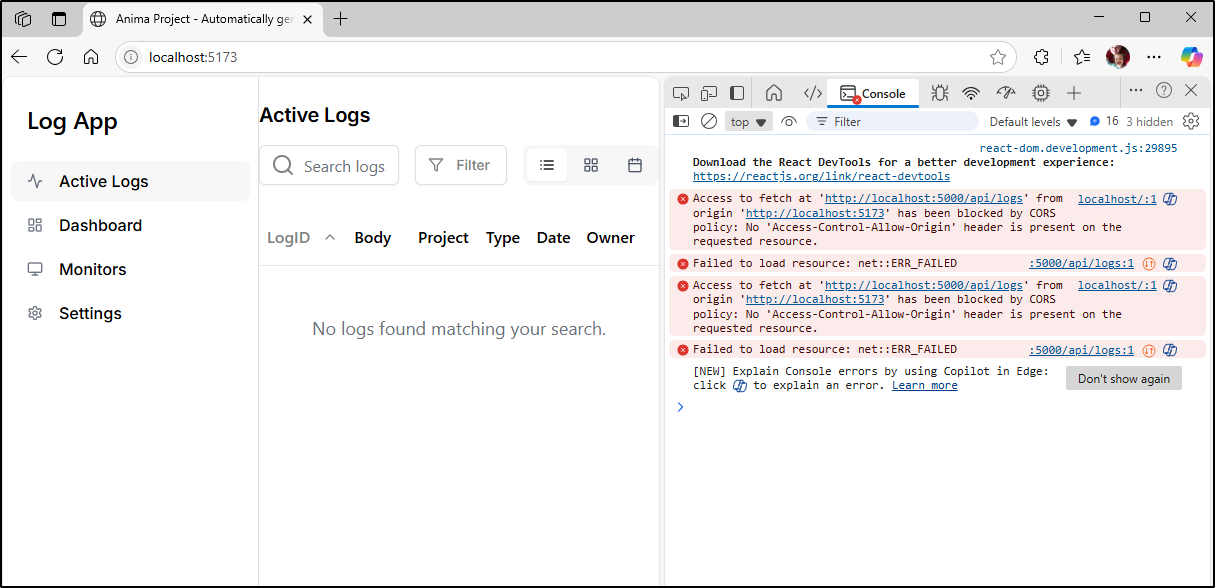

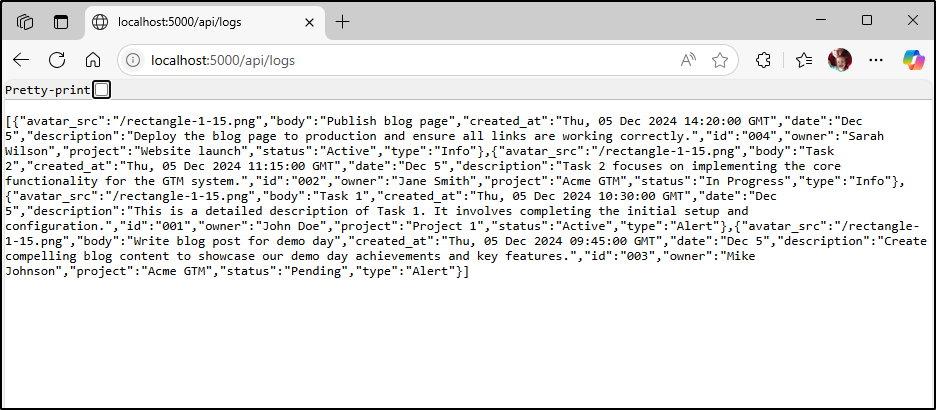

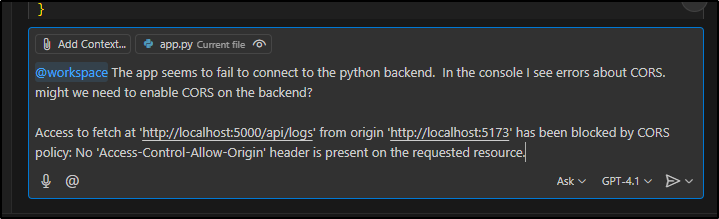

The new issue seems CORS-related: no logs are shown

However, I can see logs being returned by the Python REST API

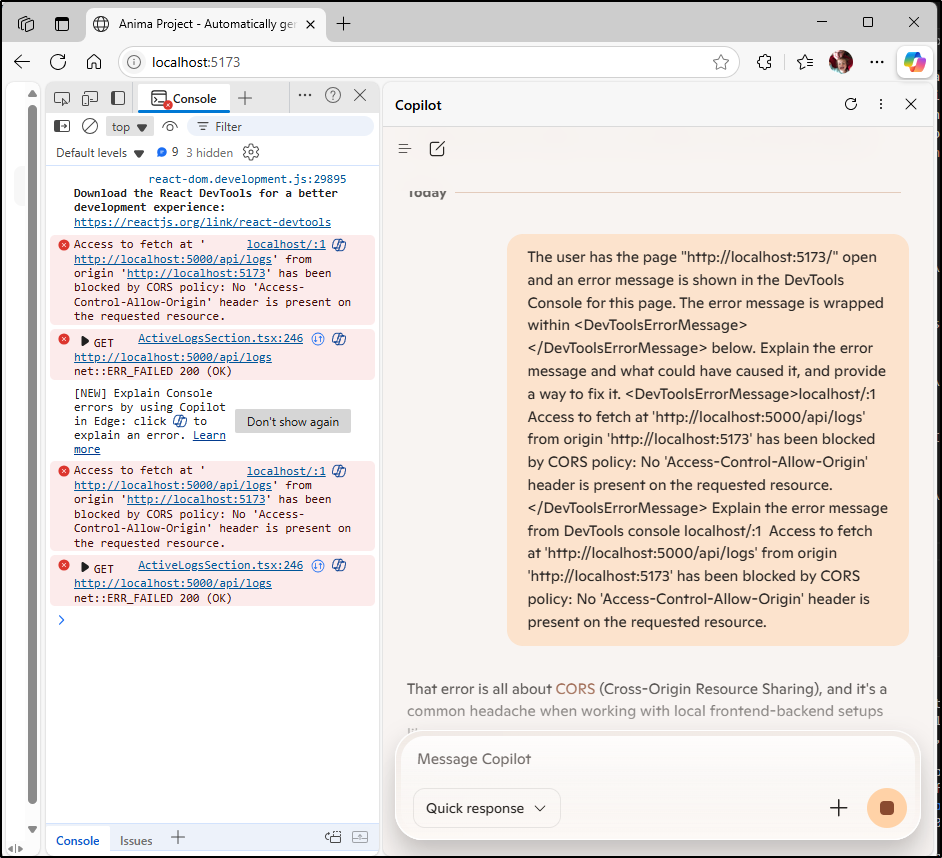

I’ll use the Developer Tools in Edge to kick the error to Microsoft Copilot (distinct from GitHub Copilot)

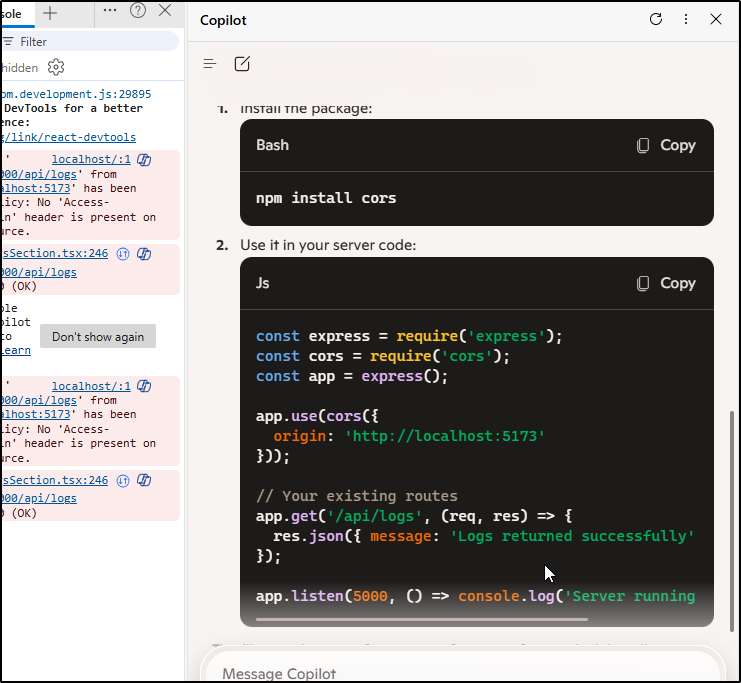

However, the suggested fix is for Express, not Vite, so we can’t use it directly, but it is good to feed to (GitHub) Copilot next
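For context, a browser blocks a page served from one origin (here the Vite dev server on port 5173) from reading a response from another origin (the API on port 5000) unless the response carries a matching Access-Control-Allow-Origin header. A framework-neutral sketch of that decision is below. The function name is hypothetical, and a real Flask or Express app would normally delegate this to its CORS middleware.

```python
def cors_headers(request_origin, allowed_origins):
    """Return CORS response headers for an allowed origin, or [] otherwise.

    `request_origin` is the value of the request's Origin header.
    """
    if request_origin in allowed_origins:
        return [
            # Echo back the specific origin rather than using a wildcard
            ("Access-Control-Allow-Origin", request_origin),
            # Tell caches that the response varies by requesting origin
            ("Vary", "Origin"),
        ]
    return []
```

In this setup the allowed set would contain something like `http://localhost:5173`, which is an assumption based on the Vite port used above.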

Used in GitHub Copilot with GPT-4.1

Sometimes I find it handy to dance between assistants. This is why having Gemini on the left, even though it blocks my file picker, works out on this smaller laptop screen.

PEBKAC ERROR

Oh man, so much work (and money) spent fighting that CORS issue when the real issue was me.

If you use docker compose up and docker compose down back and forth it will NOT rebuild the images if they exist.

So my app changes were not reflected between up and downs.

You need to use docker compose build to force a new build. Or, had I wanted to rebuild just the backend service, I could have used docker compose up -d --no-deps --build backend.

Once I realized that, debugging went a lot faster!
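One way to avoid repeating this mistake is to wrap the rebuild in a tiny helper so that "up" always means "rebuild, then up". The sketch below only assembles and runs the same docker compose flags mentioned above. The helper names are hypothetical, and it assumes the Docker Compose v2 CLI is on the PATH.

```python
import shlex
import subprocess


def rebuild_command(service=None, detach=True):
    """Build the argv that rebuilds images before starting containers."""
    cmd = ["docker", "compose", "up"]
    if detach:
        cmd.append("-d")
    # --build forces an image build even when an image already exists
    cmd.append("--build")
    if service:
        # --no-deps leaves the services this one depends on untouched
        cmd += ["--no-deps", service]
    return cmd


def run(cmd):
    """Echo the command, then execute it, raising if it exits non-zero."""
    print("+", shlex.join(cmd))
    subprocess.run(cmd, check=True)
```

For example, `run(rebuild_command("backend"))` rebuilds and restarts only the backend service.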

builder@LuiGi:~/Workspaces/dblogapp2$ docker compose up

WARN[0000] /home/builder/Workspaces/dblogapp2/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

WARN[0000] Found orphan containers ([dblogapp2-app-1 dblogapp2-postgres-1]) for this project. If you removed or renamed this service in your compose file, you can run this command with the --remove-orphans flag to clean it up.

[+] Running 3/3

✔ Container dblogapp2-db-1 Created 0.0s

✔ Container dblogapp2-backend-1 Recreated 0.4s

✔ Container dblogapp2-frontend-1 Recreated 0.4s

Attaching to backend-1, db-1, frontend-1

db-1 |

db-1 | PostgreSQL Database directory appears to contain a database; Skipping initialization

db-1 |

db-1 | 2025-06-14 12:57:35.427 UTC [1] LOG: starting PostgreSQL 16.9 (Debian 16.9-1.pgdg120+1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 12.2.0-14) 12.2.0, 64-bit

db-1 | 2025-06-14 12:57:35.429 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432

db-1 | 2025-06-14 12:57:35.429 UTC [1] LOG: listening on IPv6 address "::", port 5432

db-1 | 2025-06-14 12:57:35.444 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"

db-1 | 2025-06-14 12:57:35.465 UTC [30] LOG: database system was shut down at 2025-06-14 12:55:54 UTC

db-1 | 2025-06-14 12:57:35.524 UTC [1] LOG: database system is ready to accept connections

backend-1 | * Serving Flask app 'app'

backend-1 | * Debug mode: off

backend-1 | WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

backend-1 | * Running on all addresses (0.0.0.0)

backend-1 | * Running on http://127.0.0.1:5000

backend-1 | * Running on http://172.30.0.3:5000

backend-1 | Press CTRL+C to quit

frontend-1 |

frontend-1 | > anima-project@1.0.0 dev

frontend-1 | > vite --host 0.0.0.0

frontend-1 |

frontend-1 | Re-optimizing dependencies because vite config has changed

frontend-1 |

frontend-1 | VITE v6.0.4 ready in 1867 ms

frontend-1 |

frontend-1 | ➜ Local: http://localhost:5173/

frontend-1 | ➜ Network: http://172.30.0.4:5173/

backend-1 | 172.30.0.1 - - [14/Jun/2025 12:57:53] "GET /api/logs HTTP/1.1" 200 -

backend-1 | 172.30.0.1 - - [14/Jun/2025 12:57:53] "GET /api/logs HTTP/1.1" 200 -

Gracefully stopping... (press Ctrl+C again to force)

[+] Stopping 3/3

✔ Container dblogapp2-frontend-1 Stopped 1.0s

✔ Container dblogapp2-backend-1 Stopped 10.6s

✔ Container dblogapp2-db-1 Stopped 0.6s

builder@LuiGi:~/Workspaces/dblogapp2$ docker compose build

WARN[0000] /home/builder/Workspaces/dblogapp2/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

Compose can now delegate builds to bake for better performance.

To do so, set COMPOSE_BAKE=true.

[+] Building 12.7s (22/22) FINISHED docker:default

=> [backend internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 194B 0.0s

=> [backend internal] load metadata for docker.io/library/python:3.11-slim 0.6s

=> [backend internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [backend 1/5] FROM docker.io/library/python:3.11-slim@sha256:9e1912aab0a30bbd9488eb79063f68f42a68ab0946cbe98fecf197fe5b085506 0.0s

=> [backend internal] load build context 0.0s

=> => transferring context: 1.55kB 0.0s

=> CACHED [backend 2/5] WORKDIR /app 0.0s

=> CACHED [backend 3/5] COPY requirements.txt . 0.0s

=> CACHED [backend 4/5] RUN pip install --no-cache-dir -r requirements.txt 0.0s

=> [backend 5/5] COPY app.py . 0.1s

=> [backend] exporting to image 0.2s

=> => exporting layers 0.1s

=> => writing image sha256:f0dd86adca26330cb8f6478103f0a472b55c7bf86dba3ecde41df46d7bfae7d6 0.0s

=> => naming to docker.io/library/dblogapp2-backend 0.0s

=> [backend] resolving provenance for metadata file 0.0s

=> [frontend internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 422B 0.0s

=> [frontend internal] load metadata for docker.io/library/node:lts-alpine 0.5s

=> [frontend internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [frontend 1/5] FROM docker.io/library/node:lts-alpine@sha256:41e4389f3d988d2ed55392df4db1420ad048ae53324a8e2b7c6d19508288107e 0.0s

=> [frontend internal] load build context 0.6s

=> => transferring context: 611.55kB 0.6s

=> CACHED [frontend 2/5] WORKDIR /app 0.0s

=> CACHED [frontend 3/5] COPY package*.json ./ 0.0s

=> CACHED [frontend 4/5] RUN npm ci 0.0s

=> [frontend 5/5] COPY . . 7.2s

=> [frontend] exporting to image 2.7s

=> => exporting layers 2.6s

=> => writing image sha256:bca43e5ab5fcbfd511c79dae607707be8cf0bc65d3fda384b2246ccbdb44d0f7 0.0s

=> => naming to docker.io/library/dblogapp2-frontend 0.0s

=> [frontend] resolving provenance for metadata file 0.0s

[+] Building 2/2

✔ backend Built 0.0s

✔ frontend Built 0.0s

builder@LuiGi:~/Workspaces/dblogapp2$ docker compose up

WARN[0000] /home/builder/Workspaces/dblogapp2/docker-compose.yml: the attribute `version` is obsolete, it will be ignored, please remove it to avoid potential confusion

WARN[0000] Found orphan containers ([dblogapp2-app-1 dblogapp2-postgres-1]) for this project. If you removed or renamed this service in your compose file, you can run this command with the --remove-orphans flag to clean it up.

[+] Running 3/3

✔ Container dblogapp2-db-1 Created 0.0s

✔ Container dblogapp2-backend-1 Recreated 0.3s

✔ Container dblogapp2-frontend-1 Recreated 0.3s

Attaching to backend-1, db-1, frontend-1

db-1 |

db-1 | PostgreSQL Database directory appears to contain a database; Skipping initialization

db-1 |

db-1 | 2025-06-14 12:59:16.458 UTC [1] LOG: starting PostgreSQL 16.9 (Debian 16.9-1.pgdg120+1) on x86_64-pc-linux-gnu, compiled by gcc (Debian 12.2.0-14) 12.2.0, 64-bit

db-1 | 2025-06-14 12:59:16.460 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432

db-1 | 2025-06-14 12:59:16.460 UTC [1] LOG: listening on IPv6 address "::", port 5432

db-1 | 2025-06-14 12:59:16.483 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"

db-1 | 2025-06-14 12:59:16.509 UTC [29] LOG: database system was shut down at 2025-06-14 12:58:42 UTC

db-1 | 2025-06-14 12:59:16.544 UTC [1] LOG: database system is ready to accept connections

backend-1 | * Serving Flask app 'app'

backend-1 | * Debug mode: off

backend-1 | WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

backend-1 | * Running on all addresses (0.0.0.0)

backend-1 | * Running on http://127.0.0.1:5000

backend-1 | * Running on http://172.30.0.3:5000

backend-1 | Press CTRL+C to quit

frontend-1 |

frontend-1 | > anima-project@1.0.0 dev

frontend-1 | > vite --host 0.0.0.0

frontend-1 |

frontend-1 | Re-optimizing dependencies because vite config has changed

frontend-1 |

frontend-1 | VITE v6.0.4 ready in 1183 ms

frontend-1 |

frontend-1 | ➜ Local: http://localhost:5173/

frontend-1 | ➜ Network: http://172.30.0.4:5173/

backend-1 | 172.30.0.1 - - [14/Jun/2025 12:59:34] "GET /api/logs HTTP/1.1" 200 -

backend-1 | 172.30.0.1 - - [14/Jun/2025 12:59:34] "GET /api/logs HTTP/1.1" 200 -

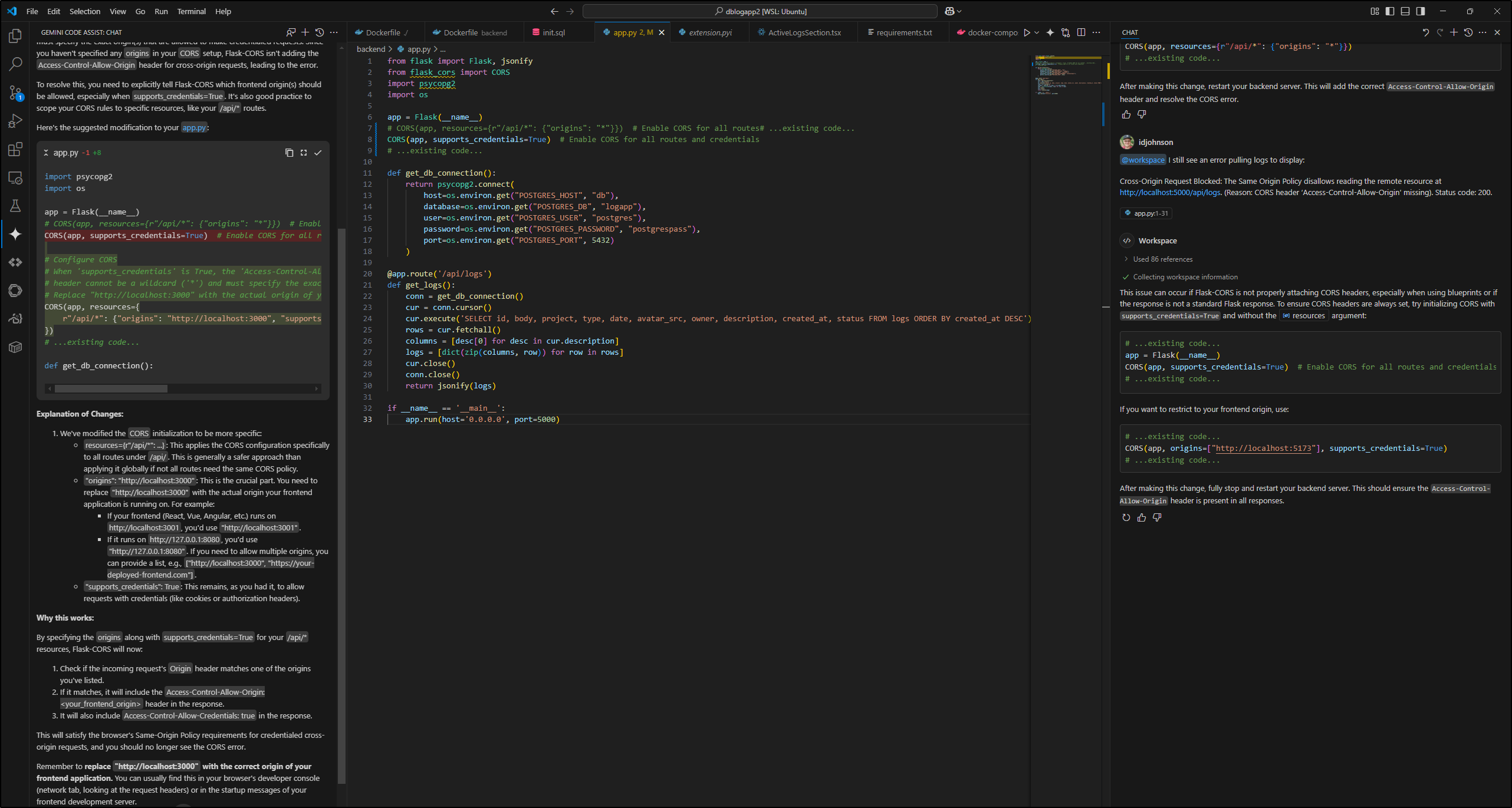

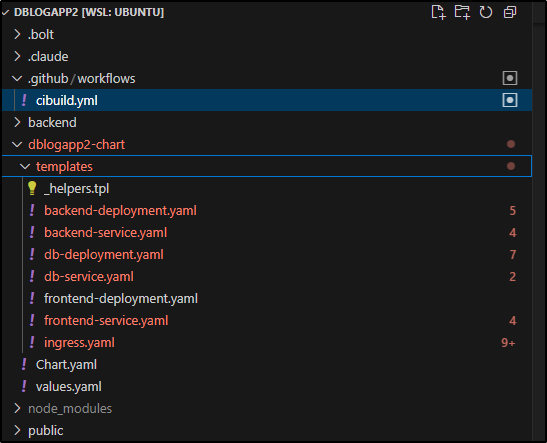

CICD

I moved on from Docker Hub to creating a full chart. It is just an MVP, as it has its own DB deployment and service; of course, it would make more sense to source those from Bitnami instead

I asked Copilot (GPT-4.1) to help build out a Github Action

name: Build and Push Docker Images and Helm Chart

on:

push:

branches:

- main

env:

REGISTRY: docker.io

DOCKERHUB_REPO_BACKEND: idjohnson/dblogapp2-backend

DOCKERHUB_REPO_FRONTEND: idjohnson/dblogapp2-frontend

HELM_CHART_REPO: idjohnson

jobs:

build-and-push:

runs-on: ubuntu-latest

steps:

- name: Checkout code

uses: actions/checkout@v4

- name: Set up Docker Buildx

uses: docker/setup-buildx-action@v3

- name: Log in to Docker Hub

uses: docker/login-action@v3

with:

username: $

password: $

# Build and push backend image

- name: Build and push backend image

uses: docker/build-push-action@v5

with:

context: ./backend

file: ./backend/Dockerfile

push: true

tags: $:latest

# Build and push frontend image

- name: Build and push frontend image

uses: docker/build-push-action@v5

with:

context: .

file: ./Dockerfile

push: true

tags: $:latest

# Set up Helm

- name: Set up Helm

uses: azure/setup-helm@v4

# Package Helm chart

- name: Package Helm chart

run: |

helm package dblogapp2-chart --destination packaged-chart

# Log in to Helm OCI registry (Docker Hub)

- name: Helm OCI login

run: |

echo $ | helm registry login $ -u $ --password-stdin

# Push Helm chart to Docker Hub as OCI

- name: Push Helm chart to Docker Hub OCI

run: |

CHART_VERSION=$(helm show chart dblogapp2-chart | grep '^version:' | awk '{print $2}')

helm push packaged-chart/dblogapp2-${CHART_VERSION}.tgz oci://$/$
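The last step of the workflow reads the chart version with grep and awk and uses it to build the package file name. As a rough sketch of that same logic in Python (the sample Chart.yaml text and the function names are my own illustration, not part of the repo):

```python
def chart_version(chart_yaml: str) -> str:
    """Return the value of the top-level `version:` key from Chart.yaml text."""
    for line in chart_yaml.splitlines():
        # Only lines that START with "version:" count, like grep '^version:'.
        # This skips "appVersion:", which merely contains the word.
        if line.startswith("version:"):
            # Second whitespace-separated field, like awk '{print $2}'
            return line.split()[1]
    raise ValueError("no 'version:' line found")


def packaged_chart_name(chart_name: str, chart_yaml: str) -> str:
    """Build the file name that `helm package` would be expected to produce."""
    return f"{chart_name}-{chart_version(chart_yaml)}.tgz"


# Hypothetical Chart.yaml content, for illustration only
sample = """apiVersion: v2
name: dblogapp2
appVersion: "1.0.0"
version: 0.1.0
"""

print(packaged_chart_name("dblogapp2", sample))
```

Anchoring the match to the start of the line matters here: a looser match on the word "version" could pick up appVersion first if it appears earlier in the file.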

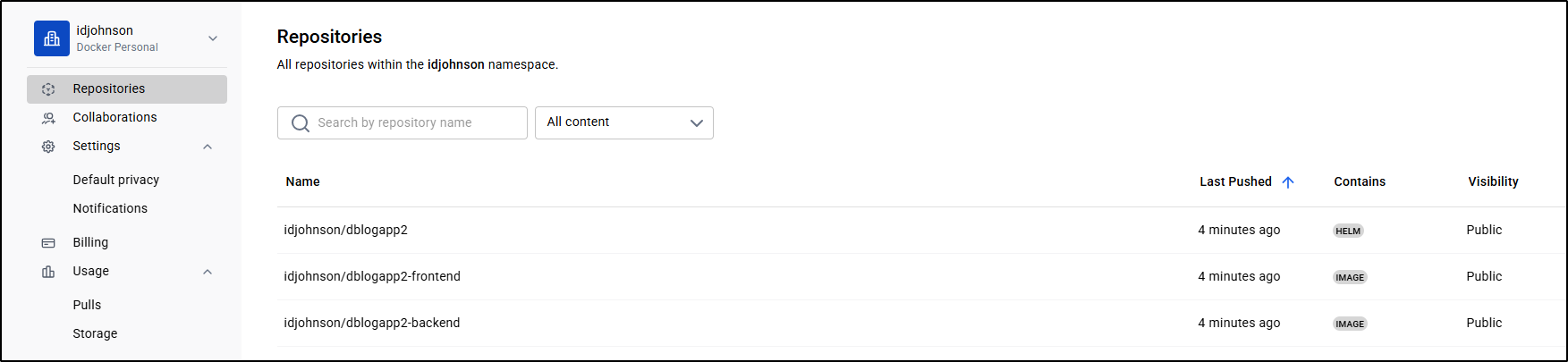

I can now see them in Docker Hub as public images

I can view the details page to see the commands we can use with the chart

e.g.

$ helm install <my-release> oci://registry-1.docker.io/idjohnson/dblogapp2 --version 0.1.0

Putting that together, we should be able to create a namespace and deploy our latest build into it.

$ helm install -n dblogapp2 --create-namespace dblogapp2 \

--set frontend.image=idjohnson/dblogapp2-frontend:latest \

--set backend.image=idjohnson/dblogapp2-backend:latest \

oci://registry-1.docker.io/idjohnson/dblogapp2 --version 0.1.0

Obviously, for the ingress to work I’ll need an A Record first

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n dbapp

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "c785f761-626f-4bf2-982f-0ba76422d12b",

"fqdn": "dbapp.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/dbapp",

"name": "dbapp",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

The test invocation seemed to work

$ helm install -n dblogapp2 --create-namespace dblogapp2 --set frontend.image=idjohnson/dblogapp2-frontend:latest --set backend.image=idjohnson/dblogapp2-backend:latest oci://registry-1.docker.io/idjohnson/dblogapp2 --version 0.1.0

Pulled: registry-1.docker.io/idjohnson/dblogapp2:0.1.0

Digest: sha256:eb14f44e79ecf389048d3e81e6eecde9efaea074c8f3a39c4b3e70a63f00288f

NAME: dblogapp2

LAST DEPLOYED: Sat Jun 14 10:42:14 2025

NAMESPACE: dblogapp2

STATUS: deployed

REVISION: 1

TEST SUITE: None

I really was not expecting them to come up on the first try, but they launched right away (I should probably add some readiness probes next)

$ kubectl get po -n dblogapp2

NAME READY STATUS RESTARTS AGE

backend-dc6c4c656-2qwvt 1/1 Running 0 25s

frontend-7dd57fdcf6-5k42z 1/1 Running 0 25s

db-57464c6f55-d94bk 1/1 Running 0 25s

The ingress looks right

$ kubectl get ingress -n dblogapp2

NAME CLASS HOSTS ADDRESS PORTS AGE

dbappingress nginx dbapp.tpk.pw 80, 443 86s

$ kubectl get cert -n dblogapp2

NAME READY SECRET AGE

dbapp-tls True dbapp-tls 99s

Logging in worked, but there was that damnable CORS issue again

Of course, the issue here is that our frontend, which is now served externally over HTTPS, cannot call back (from your browser) to the backend HTTP service (“backend”). We only exposed the frontend on the Ingress.
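To make the failure mode concrete, here is a small sketch of the check a browser effectively applies when an HTTPS page calls a plain-HTTP endpoint. It is a simplification (real browsers have exceptions such as localhost), and the backend URL below is a hypothetical stand-in, since the exact value the frontend used is not shown here:

```python
from urllib.parse import urlparse


def is_blocked_mixed_content(page_url: str, request_url: str) -> bool:
    """True when an HTTPS page tries to call a plain-HTTP endpoint.

    Simplified: real browsers apply extra rules (for example, localhost
    is usually treated as trustworthy).
    """
    page = urlparse(page_url)
    request = urlparse(request_url)
    return page.scheme == "https" and request.scheme == "http"


# The page is served through the Ingress; the API URL is an assumed example
page = "https://dbapp.tpk.pw/"
api = "http://backend:5000/api/logs"

print(is_blocked_mixed_content(page, api))
print(is_blocked_mixed_content(page, "https://dbapp.tpk.pw/api/logs"))
```

Serving the API from the same HTTPS host as the frontend avoids both this check and the cross-origin one.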

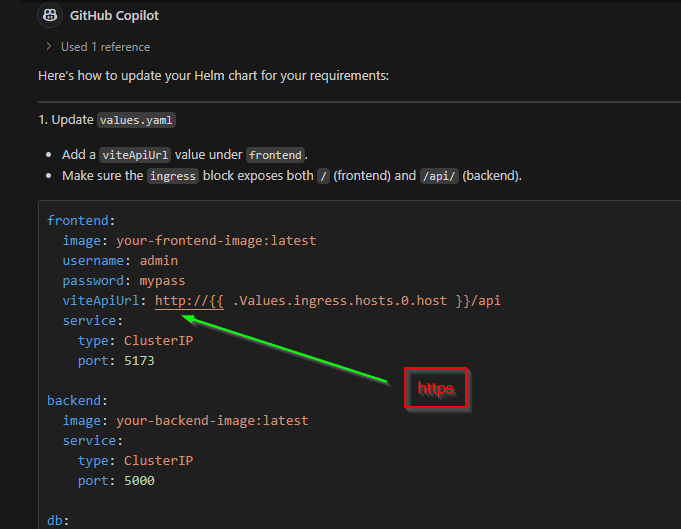

I’ll ask Copilot for some help

| I need to update my helm chart ingress to expose the front end service as it does on http 80, but any routes that start with /api/ need to route to the backend service. I’ll want to update the helm chart version accordingly and the VITE_API_URL wil need to be exposed in the chart values now (which matches this /api endpoint) |

A quick note: Always make sure to read through the replies and not just take them blindly. For instance, right off the bat I saw it forgot about the protocol and was going to insert “http” where it should be “https”. It also wanted to remove all my necessary annotations.
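The routing I asked for boils down to a prefix rule. As a minimal sketch of the intended behaviour (the service names match the chart's deployments, but the function itself is just an illustration, not how the ingress controller is implemented):

```python
def pick_service(path: str) -> str:
    """Send /api and anything under /api/ to the backend, the rest to the frontend."""
    # Match on a path-segment boundary so "/apidocs" does not count as "/api"
    if path == "/api" or path.startswith("/api/"):
        return "backend"
    return "frontend"


for p in ["/", "/login", "/api/logs", "/apidocs"]:
    print(p, "->", pick_service(p))
```

With a rule like this, the frontend can call a relative /api path on its own host, so the browser never has to reach the backend service directly.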

With the changes applied, as this did not affect anything other than the chart, we can test locally first

$ helm upgrade --install -n dblogapp2 --create-namespace \

dblogapp2 --set frontend.image=idjohnson/dblogapp2-frontend:latest \

--set backend.image=idjohnson/dblogapp2-backend:latest \

./dblogapp2-chart/

Release "dblogapp2" has been upgraded. Happy Helming!

NAME: dblogapp2

LAST DEPLOYED: Sat Jun 14 10:57:59 2025

NAMESPACE: dblogapp2

STATUS: deployed

REVISION: 2

TEST SUITE: None

I did a quick redo, as I recalled my cluster sometimes doesn’t recreate ingress definitions unless I whack them first

builder@LuiGi:~/Workspaces/dblogapp2$ kubectl delete ingress dbappingress -n dblogapp2

ingress.networking.k8s.io "dbappingress" deleted

builder@LuiGi:~/Workspaces/dblogapp2$ helm upgrade --install -n dblogapp2 --create-namespace dblogapp2 --set frontend.image=idjohnson/dblogapp2-frontend:latest --set backend.image=idjohnson/dblogapp2-backend:latest ./dblogapp2-chart/

Release "dblogapp2" has been upgraded. Happy Helming!

NAME: dblogapp2

LAST DEPLOYED: Sat Jun 14 10:59:02 2025

NAMESPACE: dblogapp2

STATUS: deployed

REVISION: 3

TEST SUITE: None

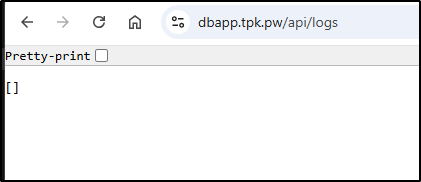

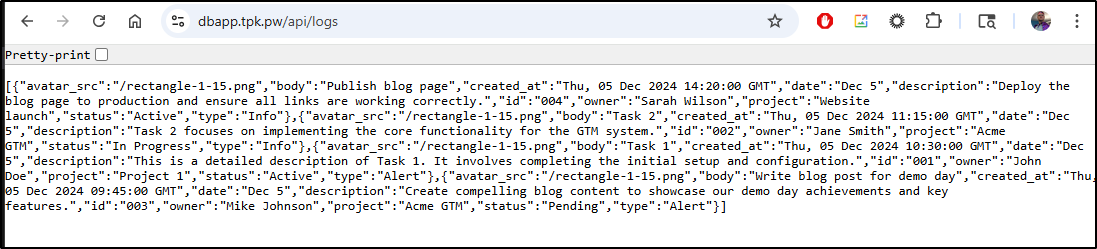

Now I saw an error in the browser console that suggests our helm chart is still not right

ActiveLogsSection.tsx:51

GET https://{{%20.values.ingress.hosts.0.host%20}}/api/logs net::ERR_NAME_NOT_RESOLVED
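Decoding that URL makes the problem easier to spot: the hostname is a literal Helm template expression that was never rendered. Two things are worth checking in a case like this: Helm does not run templates inside values.yaml, and the built-in object is spelled `.Values` with a capital V. Which of those applied here is not shown, so treat this as a diagnostic sketch only:

```python
from urllib.parse import unquote

bad_url = "https://{{%20.values.ingress.hosts.0.host%20}}/api/logs"


def looks_unrendered(url: str) -> bool:
    """True if the URL still contains Go-template delimiters after decoding."""
    decoded = unquote(url)
    return "{{" in decoded and "}}" in decoded


print(unquote(bad_url))  # https://{{ .values.ingress.hosts.0.host }}/api/logs
print(looks_unrendered(bad_url))
```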

I worked through the CORS and ingress issues only to find 500s from the backend. It seems the database was not initialized

File "/usr/local/lib/python3.11/site-packages/flask/app.py", line 902, in dispatch_request

return self.ensure_sync(self.view_functions[rule.endpoint])(**view_args) # type: ignore[no-any-return]

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/app/app.py", line 31, in get_logs

cur.execute('SELECT id, body, project, type, date, avatar_src, owner, description, created_at, status FROM logs ORDER BY created_at DESC'

)

psycopg2.errors.UndefinedTable: relation "logs" does not exist

LINE 1: ..._src, owner, description, created_at, status FROM logs ORDER...

Checking shows no tables

builder@LuiGi:~$ kubectl exec -it db-57464c6f55-d94bk -n dblogapp2 -- /bin/bash

root@db-57464c6f55-d94bk:/# psql

root@db-57464c6f55-d94bk:/# su - postgres

postgres@db-57464c6f55-d94bk:~$ psql

psql (16.9 (Debian 16.9-1.pgdg120+1))

Type "help" for help.

postgres=#

postgres=# \dt

Did not find any relations.

I also don’t see the init script

postgres@db-57464c6f55-d94bk:~$ cat /docker-entrypoint-initdb.d/init.sql

postgres@db-57464c6f55-d94bk:~$

I will fix the init script, but for now, let’s just see if that corrects the issue by updating the database directly

postgres@db-57464c6f55-d94bk:~$ psql -d logapp

psql (16.9 (Debian 16.9-1.pgdg120+1))

Type "help" for help.

logapp=# \dt;

Did not find any relations.

logapp=# CREATE TABLE IF NOT EXISTS logs (

id VARCHAR(20) PRIMARY KEY,

body TEXT NOT NULL,

project TEXT NOT NULL,

type TEXT NOT NULL,

date TEXT NOT NULL,

avatar_src TEXT,

owner TEXT NOT NULL,

description TEXT,

created_at TIMESTAMP,

status TEXT NOT NULL

);

CREATE TABLE

logapp=# \dt;

List of relations

Schema | Name | Type | Owner

--------+------+-------+----------

public | logs | table | postgres

(1 row)

Now I don’t get an error, but I also have no sample data

I can now insert the sample data

logapp=# INSERT INTO logs (id, body, project, type, date, avatar_src, owner, description, created_at, status) VALUES

('001', 'Task 1', 'Project 1', 'Alert', 'Dec 5', '/rectangle-1-15.png', 'John Doe', 'This is a detailed description of Task 1. It involves completing the initial setup and configuration.', '2024-12-05T10:30:00Z', 'Active'),

('002', 'Task 2', 'Acme GTM', 'Info', 'Dec 5', '/rectangle-1-15.png', 'Jane Smith', 'Task 2 focuses on implementing the core functionality for the GTM system.', '2024-12-05T11:15:00Z', 'In Progress'),

('003', 'Write blog post for demo day', 'Acme GTM', 'Alert', 'Dec 5', '/rectangle-1-15.png', 'Mike Johnson', 'Create compelling blog content to showcase our demo day achievements and key features.', '2024-12-05T09:45:00Z', 'Pending'),

('004', 'Publish blog page', 'Website launch', 'Info', 'Dec 5', '/rectangle-1-15.png', 'Sarah Wilson', 'Deploy the blog page to production and ensure all links are working correctly.', '2024-12-05T14:20:00Z', 'Active');

INSERT 0 4

logapp=# select count(*) from logs;

count

-------

4

(1 row)
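The manual fix above could also be made repeatable by having the app ensure the table exists at startup. Below is a minimal sketch of that idea. It takes any DB-API connection; I use an in-memory SQLite database here only so the example runs on its own, and in the real backend the connection would come from psycopg2 instead. The function name is hypothetical:

```python
import sqlite3

# Same columns as the CREATE TABLE statement used in the psql session above
CREATE_LOGS_TABLE = """
CREATE TABLE IF NOT EXISTS logs (
    id VARCHAR(20) PRIMARY KEY,
    body TEXT NOT NULL,
    project TEXT NOT NULL,
    type TEXT NOT NULL,
    date TEXT NOT NULL,
    avatar_src TEXT,
    owner TEXT NOT NULL,
    description TEXT,
    created_at TIMESTAMP,
    status TEXT NOT NULL
)
"""


def ensure_logs_table(conn) -> None:
    """Create the logs table if it is missing; safe to call repeatedly."""
    cur = conn.cursor()
    cur.execute(CREATE_LOGS_TABLE)
    conn.commit()


# Stand-in connection for the demo (assumption: swap for psycopg2.connect(...))
conn = sqlite3.connect(":memory:")
ensure_logs_table(conn)
ensure_logs_table(conn)  # second call is a no-op thanks to IF NOT EXISTS

tables = [row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table'")]
print(tables)
```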

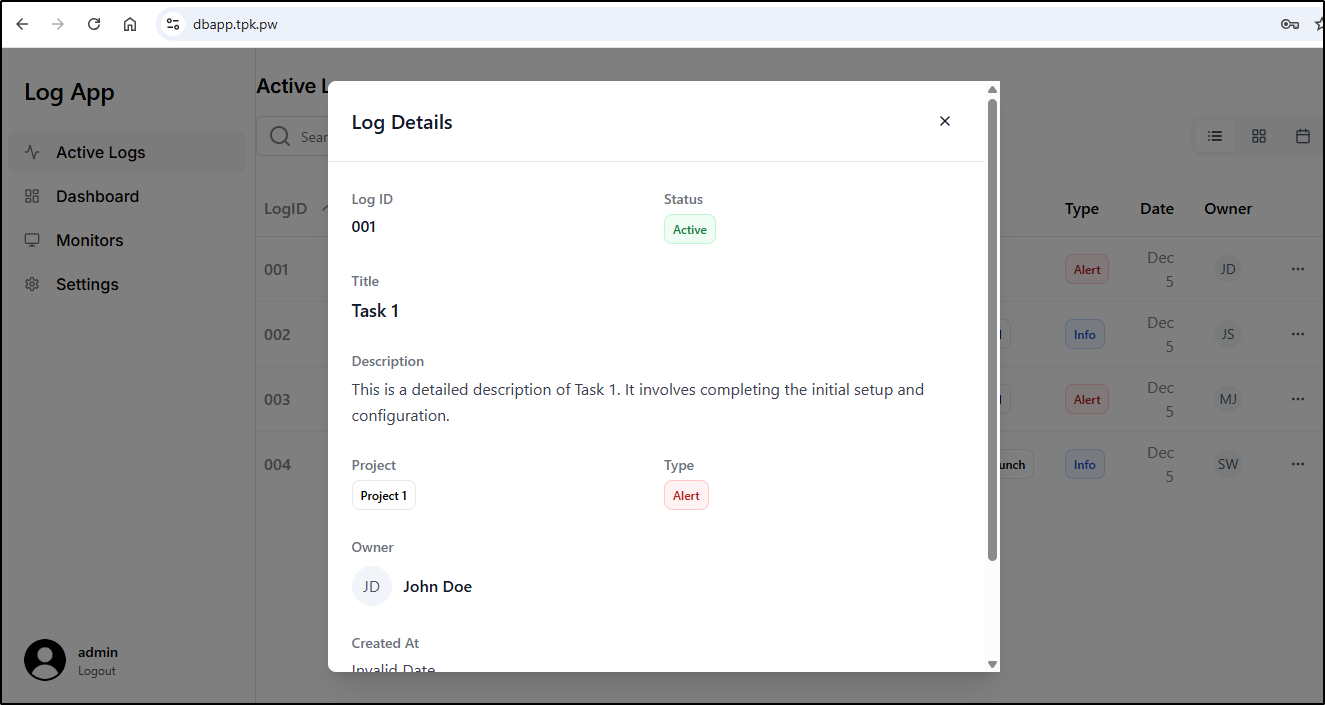

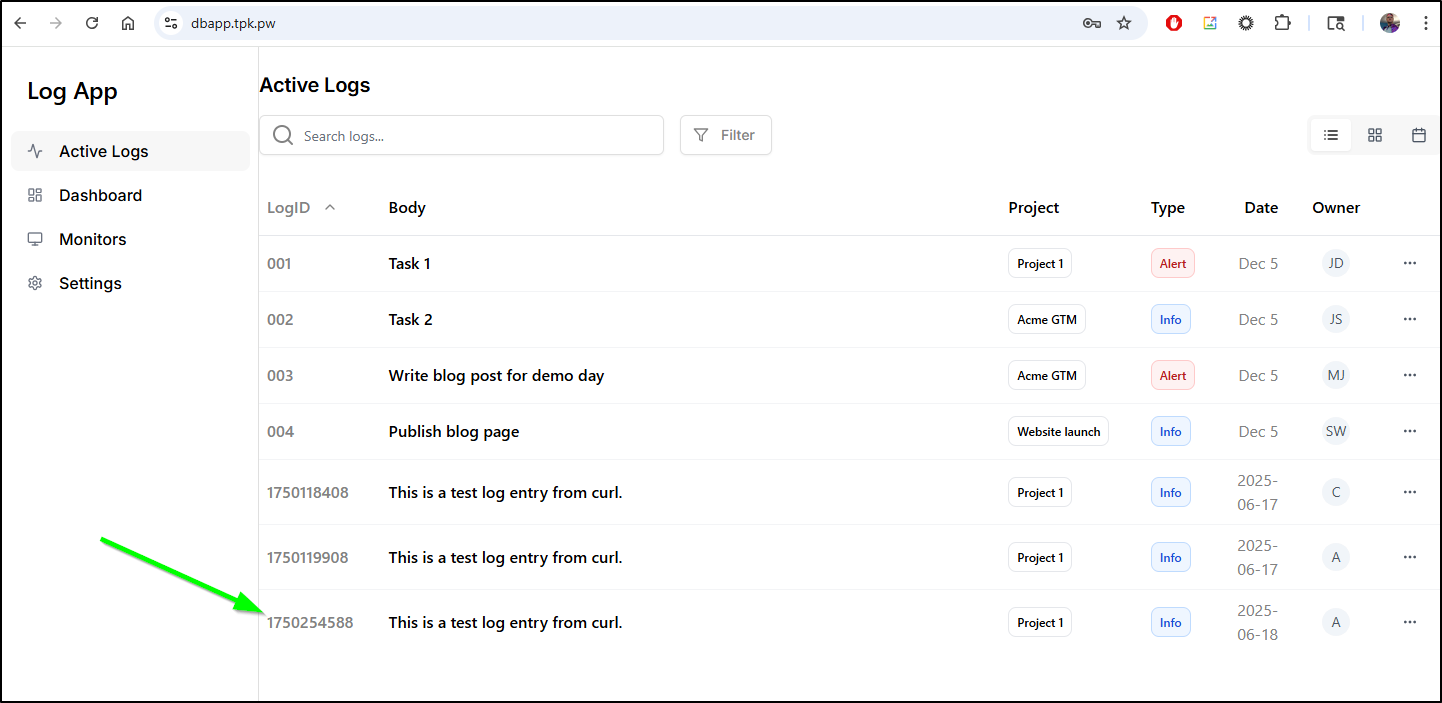

and we can now see the data in the browser

The app now works!

Ingestion

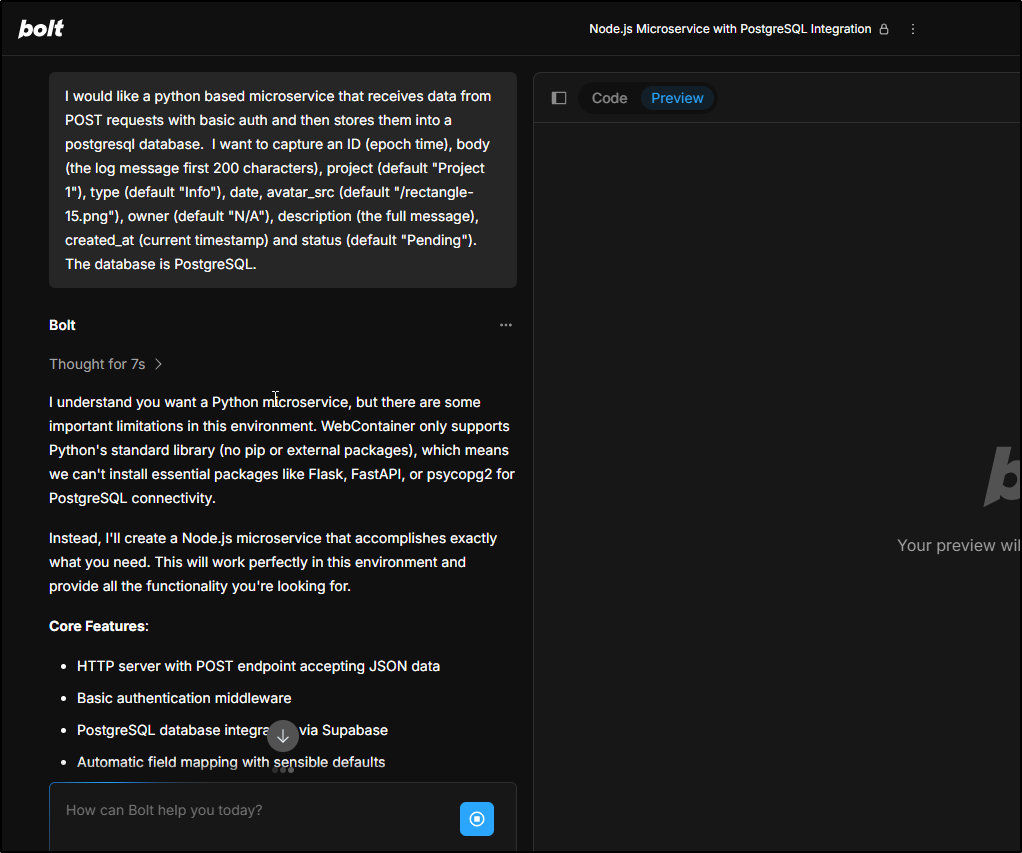

I’ll come back to Bolt.new now with a new ask

I would like a python based microservice that receives data from POST requests with basic auth and then stores them into a postgresql database. I want to capture an ID (epoch time), body (the log message first 200 characters), project (default “Project 1”), type (default “Info”), date, avatar_src (default “/rectangle-15.png”), owner (default “N/A”), description (the full message), created_at (current timestamp) and status (default “Pending”). The database is PostgreSQL.

Interestingly, Bolt.new responded with a “yeah… but no..” and pivoted to NodeJS

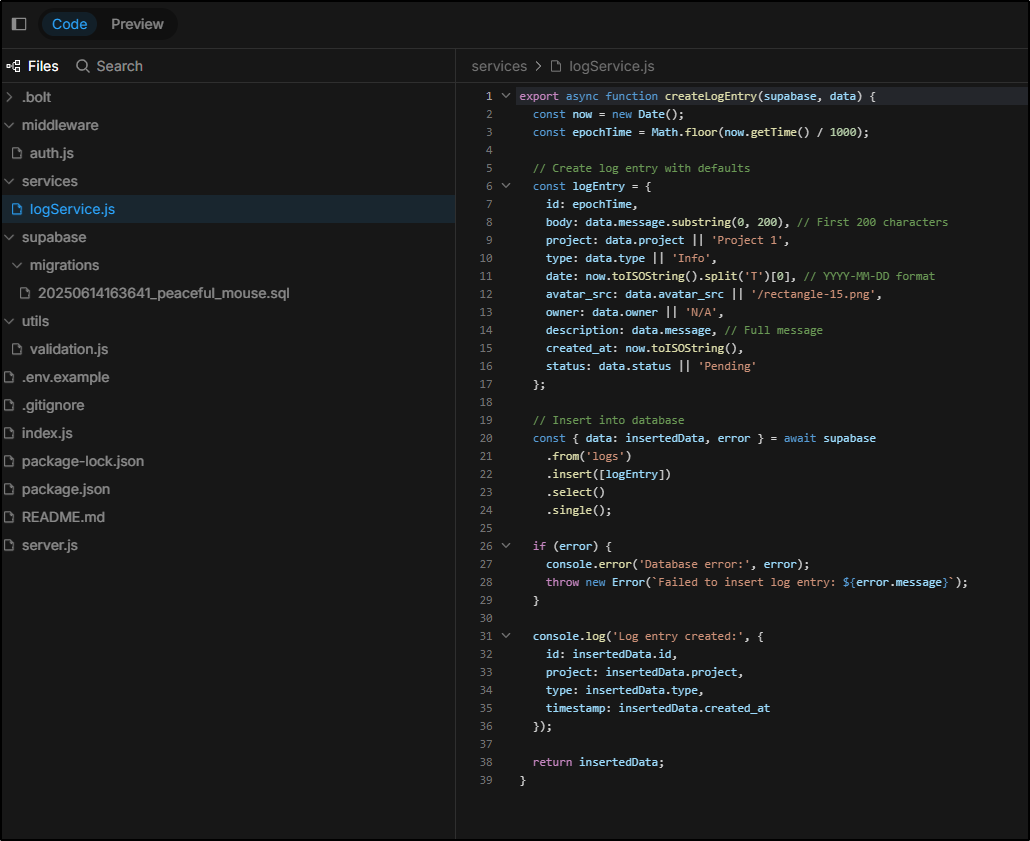

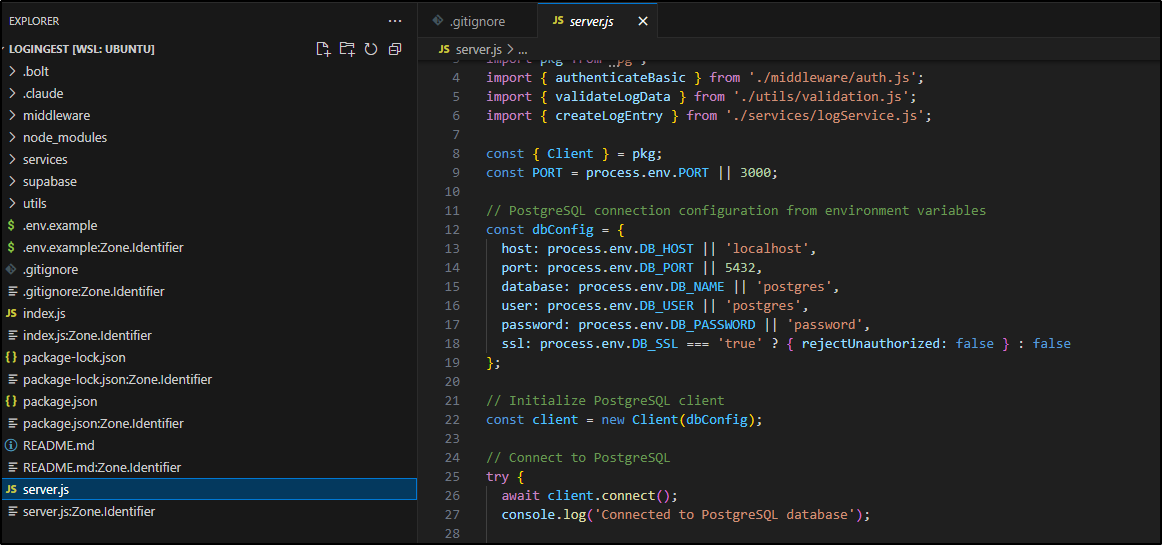

I can live with NodeJS, but I did not desire to have it switch to Supabase. I have a PostgreSQL db already

I’ll use Claude Code to try and change it over

I was worried about costs with how many tokens got used above, but when I exited, this operation just cost US$0.25

1. Dependencies: Replaced @supabase/supabase-js with pg in package.json

2. Database Connection: Updated server.js to use PostgreSQL with environment variables:

- DB_HOST (default: localhost)

- DB_PORT (default: 5432)

- DB_NAME (default: postgres)

- DB_USER (default: postgres)

- DB_PASSWORD (default: password)

- DB_SSL (set to 'true' for SSL connections)

3. Database Queries: Updated logService.js to use raw PostgreSQL queries instead of Supabase client

4. Table Creation: Added automatic table creation on startup

Usage:

Launch with PostgreSQL connection info:

DB_HOST=your-host DB_PORT=5432 DB_NAME=your-db DB_USER=your-user DB_PASSWORD=your-pass npm start

The app will automatically create the logs table if it doesn't exist and connect to your PostgreSQL instance at launch time.
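The environment-variable handling in that summary is a common pattern. The real service does this in NodeJS; as a sketch of the same defaults in Python (the function name and the shape of the returned dictionary are my own assumptions):

```python
import os


def db_config(env=None) -> dict:
    """Read PostgreSQL settings from the environment, using the listed defaults."""
    env = os.environ if env is None else env
    return {
        "host": env.get("DB_HOST", "localhost"),
        "port": int(env.get("DB_PORT", "5432")),
        "database": env.get("DB_NAME", "postgres"),
        "user": env.get("DB_USER", "postgres"),
        "password": env.get("DB_PASSWORD", "password"),
        # SSL is only enabled when DB_SSL is exactly the string 'true'
        "ssl": env.get("DB_SSL") == "true",
    }


# With nothing set, every value falls back to its default
print(db_config({}))
```

Passing the environment in as a parameter keeps the function easy to test without touching the real process environment.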

I can see it left some supabase stuff around like settings in the .env.example and supabase folder. However, the NodeJS code does look correct

I can now set some env vars and test

builder@LuiGi:~/Workspaces/logingest$ export DB_HOST=pg-295c4a9c-isaac-1040.aivencloud.com

builder@LuiGi:~/Workspaces/logingest$ export DB_PORT=11996

builder@LuiGi:~/Workspaces/logingest$ export DB_NAME=defaultdb

builder@LuiGi:~/Workspaces/logingest$ export DB_USER=avnadmin

builder@LuiGi:~/Workspaces/logingest$ export DB_PASSWORD=xxxxxxxxxxxxxxxxxxx

builder@LuiGi:~/Workspaces/logingest$ export DB_SSL=true

builder@LuiGi:~/Workspaces/logingest$ export PORT=3033

builder@LuiGi:~/Workspaces/logingest$ npm run start

> log-microservice@1.0.0 start

> node server.js

Connected to PostgreSQL database

Logs table ready

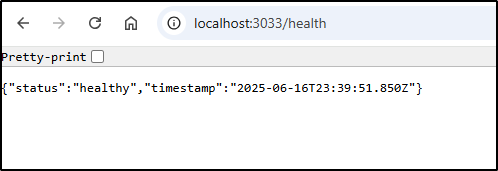

Log microservice running on port 3033

Health check available at: http://localhost:3033/health

Log endpoint available at: http://localhost:3033/logs

which worked

Let’s test POSTing a message

$ curl -X POST http://localhost:3033/logs \

-H "Content-Type: application/json" \

-u admin:password123 \

-d '{

"message": "This is a test log entry from curl.",

"project": "Project 1",

"type": "Info",

"avatar_src": "/rectangle-15.png",

"owner": "Chuck",

"status": "Pending"

}'

{"error":"Internal server error"}

After some work that involved me confusing “message” and “body” and then using the wrong database (I used the one for my other dblogapp), I got it working

$ curl -X POST http://localhost:3033/logs -H "Content-Type: application/json" -u admin:password123 -d '{

"body": "This is a test log entry from curl.",

"project": "Project 1",

"type": "Info",

"avatar_src": "/rectangle-15.png",

"owner": "Alice",

"status": "Pending"

}'

{"success":true,"message":"Log entry created successfully","id":"1750119908"}
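The returned id is an epoch timestamp, as requested in the original prompt. To illustrate how the field rules from that prompt fit together, here is a sketch in Python. It follows the prompt's wording rather than the actual NodeJS code, and the ISO date format is my assumption since the prompt only says "date":

```python
import time


def build_log_record(message: str, *, project: str = "Project 1",
                     log_type: str = "Info",
                     avatar_src: str = "/rectangle-15.png",
                     owner: str = "N/A", status: str = "Pending",
                     now: float = None) -> dict:
    """Build a log row using the defaults described in the prompt."""
    now = time.time() if now is None else now
    utc = time.gmtime(now)
    return {
        "id": str(int(now)),                      # epoch seconds
        "body": message[:200],                    # first 200 characters
        "project": project,
        "type": log_type,
        "date": time.strftime("%Y-%m-%d", utc),   # assumed format
        "avatar_src": avatar_src,
        "owner": owner,
        "description": message,                   # the full message
        "created_at": time.strftime("%Y-%m-%dT%H:%M:%SZ", utc),
        "status": status,
    }


record = build_log_record("This is a test log entry from curl.", owner="Alice")
print(record["id"], record["owner"], record["status"])
```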

I needed to port-forward to the db service, of course

$ kubectl port-forward svc/db -n dblogapp2 5434:5432

Forwarding from 127.0.0.1:5434 -> 5432

Forwarding from [::1]:5434 -> 5432

and relaunch the server with

builder@LuiGi:~/Workspaces/logingest$ export DB_HOST=localhost

builder@LuiGi:~/Workspaces/logingest$ export DB_PORT=5434

builder@LuiGi:~/Workspaces/logingest$ export DB_NAME=logapp

builder@LuiGi:~/Workspaces/logingest$ export DB_USER=postgres

builder@LuiGi:~/Workspaces/logingest$ export DB_PASSWORD=postgrespass

builder@LuiGi:~/Workspaces/logingest$ export DB_SSL=false

builder@LuiGi:~/Workspaces/logingest$ npm run start

> log-microservice@1.0.0 start

> node server.js

Connected to PostgreSQL database

Logs table ready

Log microservice running on port 3033

Health check available at: http://localhost:3033/health

Log endpoint available at: http://localhost:3033/logs

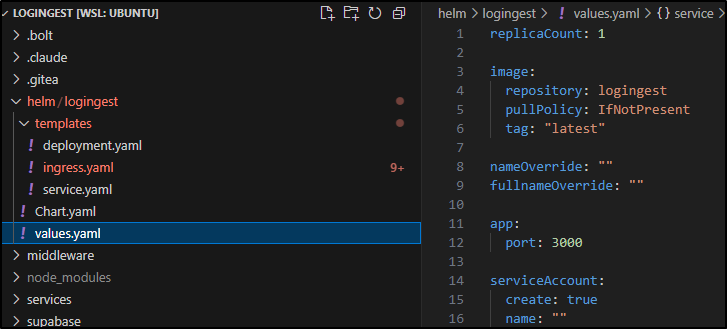

My next steps involved setting up Docker and Helm.

The Dockerfile was pretty straightforward:

FROM node:20-slim

WORKDIR /app

# Copy package files

COPY package*.json ./

# Install dependencies

RUN npm install --production

# Copy application code

COPY . .

# Environment variables with defaults

ENV PORT=3000 \

DB_HOST=postgres-svc \

DB_PORT=5432 \

DB_NAME=logapp \

DB_USER=postgres \

DB_PASSWORD=postgrespass \

DB_SSL=false \

AUTH_USERNAME=admin \

AUTH_PASSWORD=password123

EXPOSE 3000

CMD ["node", "server.js"]

And the Helm charts included just a basic service, deployment, and ingress

My thought here was that my “Ingest Service” really could be hosted somewhere else and I might not want to bundle it with the app layer.

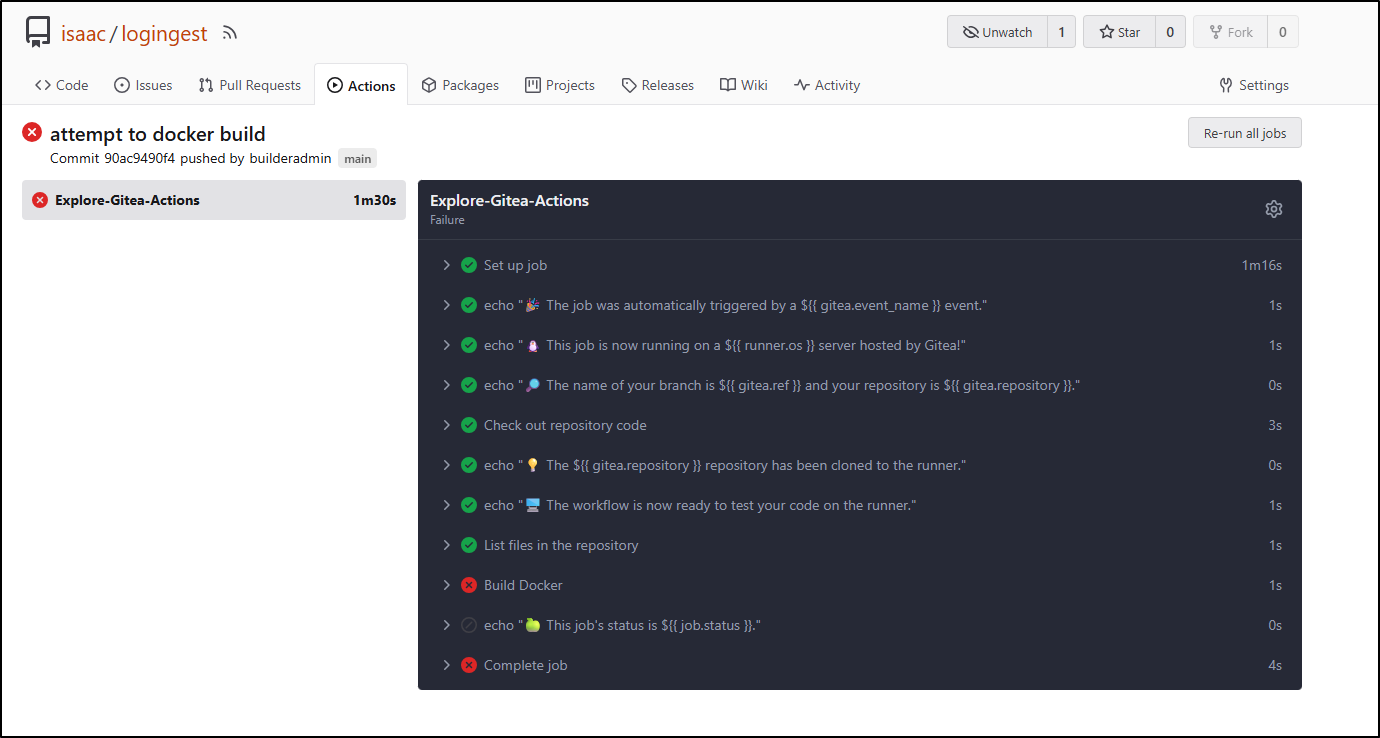

I then realized a minor issue. My Gitea runners don’t include Docker

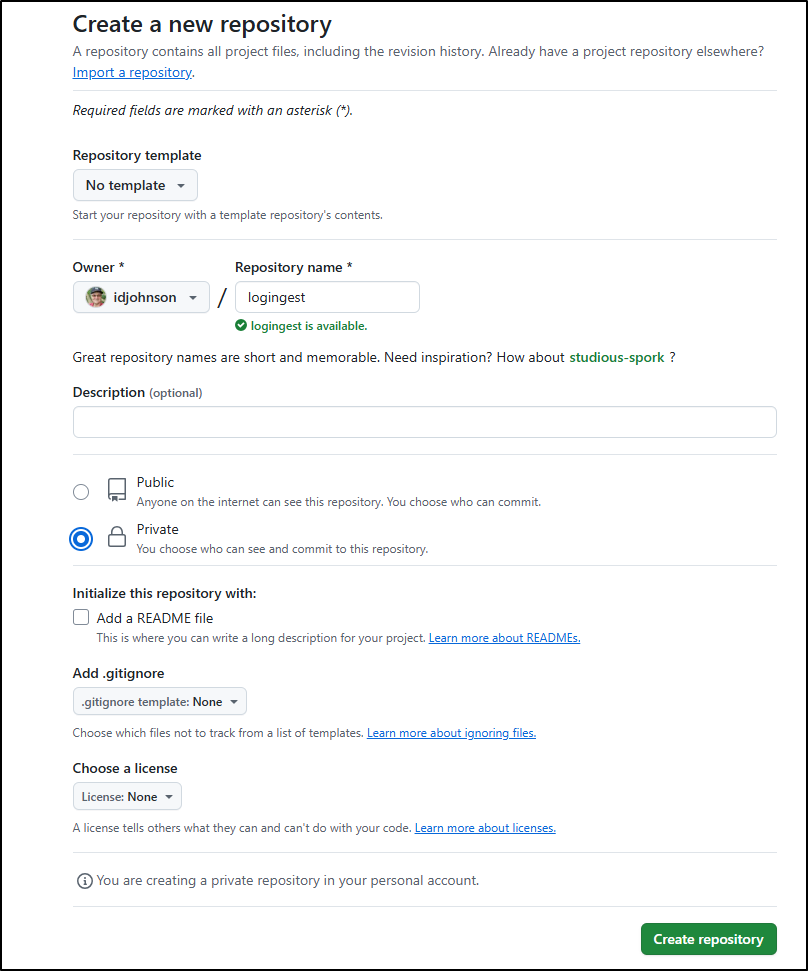

Since this is for a Hackathon, primarily, let’s sync over to Github where this won’t be an issue.

The key step in pushing to a second Git repository service is to use a unique origin. Here, I’ll use origin2 for Github

builder@LuiGi:~/Workspaces/logingest$ git remote add origin2 https://github.com/idjohnson/logingest.git

builder@LuiGi:~/Workspaces/logingest$ git push -u origin2 main

Enumerating objects: 44, done.

Counting objects: 100% (44/44), done.

Delta compression using up to 16 threads

Compressing objects: 100% (31/31), done.

Writing objects: 100% (44/44), 11.90 KiB | 2.97 MiB/s, done.

Total 44 (delta 6), reused 0 (delta 0), pack-reused 0 (from 0)

remote: Resolving deltas: 100% (6/6), done.

To https://github.com/idjohnson/logingest.git

* [new branch] main -> main

branch 'main' set up to track 'origin2/main'.

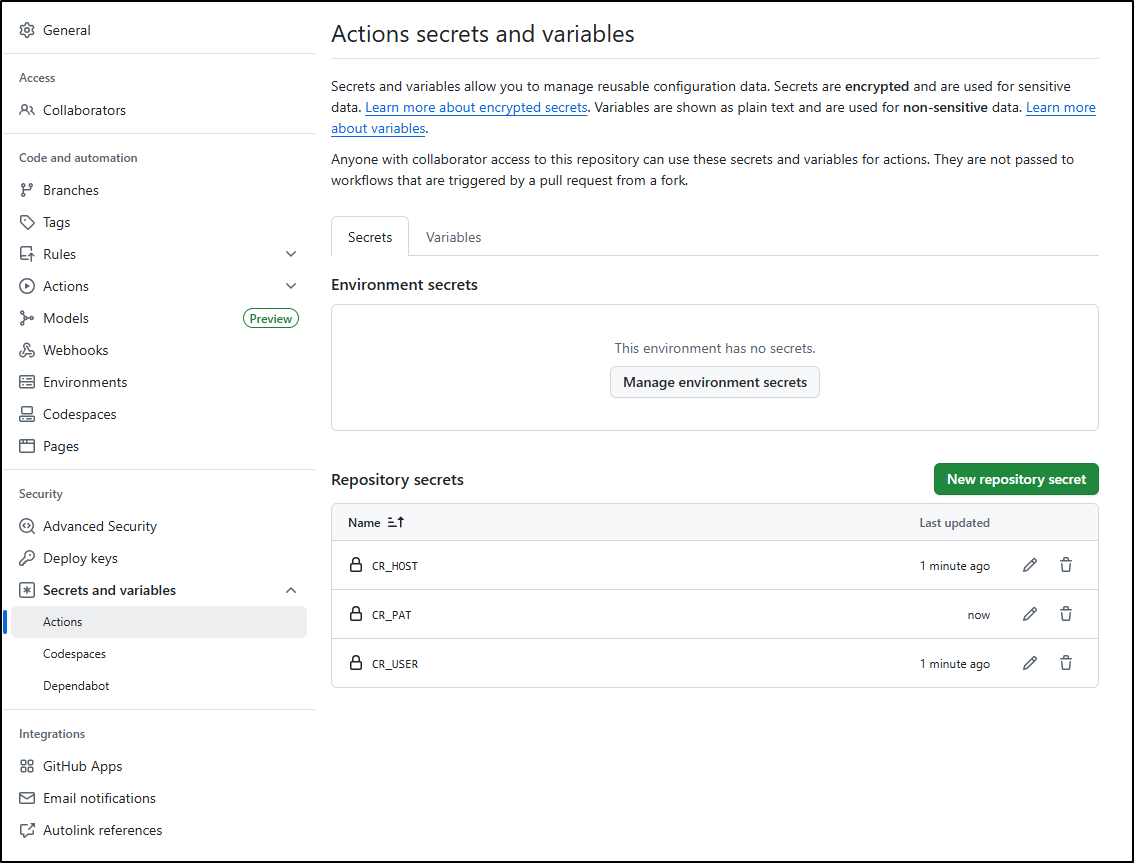

I’ll need to set some secrets for the workflow to be able to login and push a built container to my Harbor CR

With a bit of debugging sorted, I now have a working Github workflow

name: PR And Main Build

on:

push:

branches:

- main

pull_request:

jobs:

build_deploy:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- name: Build Dockerfile

run: |

export BUILDIMGTAG="`cat Dockerfile | tail -n1 | sed 's/^.*\///g'`"

docker build -t $BUILDIMGTAG .

docker images

- name: Tag and Push

run: |

export BUILDIMGTAG="`cat Dockerfile | tail -n1 | sed 's/^.*\///g'`"

export FINALBUILDTAG="`cat Dockerfile | tail -n1 | sed 's/^#//g'`"

docker tag $BUILDIMGTAG $FINALBUILDTAG

docker images

echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin

docker push $FINALBUILDTAG

env: # Or as an environment variable

CR_PAT: $

CR_USER: $

- name: Helm Install

run: |

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

helm version

- name: Helm Package

run: |

export CHARTVERSION="`cat helm/logingest/Chart.yaml | grep version | head -n1 | sed 's/^version: //g'`"

helm package helm/logingest

helm push logingest-$CHARTVERSION.tgz oci://harbor.freshbrewed.science/chartrepo
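The two sed expressions in that workflow derive the image tags from the last line of the Dockerfile, which suggests the file ends with a comment holding the full image reference. That trailing comment is not shown in the Dockerfile above, so the sample line below is a guess on my part. A sketch of the same string handling in Python:

```python
import re


def build_image_tag(last_line: str) -> str:
    """Drop everything up to and including the last slash (the first sed)."""
    return re.sub(r"^.*/", "", last_line)


def final_build_tag(last_line: str) -> str:
    """Drop a single leading '#' (the second sed)."""
    return re.sub(r"^#", "", last_line)


# Hypothetical last line of the Dockerfile
last_line = "#harbor.freshbrewed.science/library/logingest:0.0.1"

print(build_image_tag(last_line))
print(final_build_tag(last_line))
```

If the last line were the CMD instruction instead, neither expression would change it and the build step would be handed an invalid tag, so the convention is fragile.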

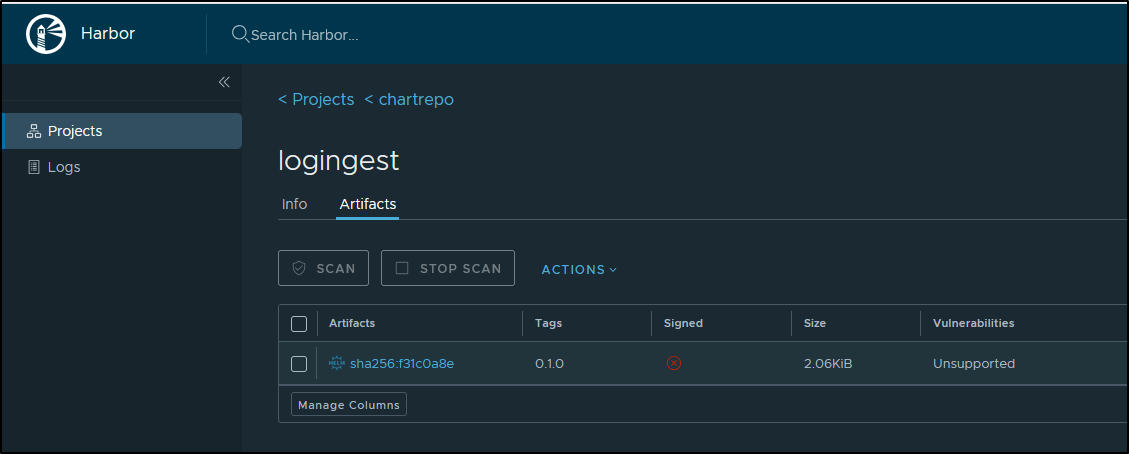

This includes an OCI Chart

In a departure from my past, I decided to publish the chart and container in public libraries.

So anyone can pull the 0.0.1 tag

$ docker pull harbor.freshbrewed.science/library/logingest@sha256:2f17a0f350835b202c749bbe686738c8a10a5591358cabbc44f55e73d3b0bd4d

As well as the chart for 0.1.0 (which is the Chart version)

$ docker pull harbor.freshbrewed.science/library/logingest@sha256:2f17a0f350835b202c749bbe686738c8a10a5591358cabbc44f55e73d3b0bd4d

This means we should be able to install locally with

$ helm install logingest -n dblogapp2 --set image.repository=harbor.freshbrewed.science/library/logingest --set image.tag=0.0.1 --set db.host=db --set db.port=5432 --set db.name=logapp --set db.user=postgres --set db.password=postgrespass --set db.ssl=false oci://harbor.freshbrewed.science/library/logingest --version 0.1.0

Error: INSTALLATION FAILED: failed to perform "FetchReference" on source: harbor.freshbrewed.science/library/logingest:0.1.0: not found

I’m a bit confused by the error as pull works just fine

builder@LuiGi:/tmp/test$ helm pull oci://harbor.freshbrewed.science/chartrepo/logingest --version 0.1.0

Pulled: harbor.freshbrewed.science/chartrepo/logingest:0.1.0

Digest: sha256:e55ec954f6e86c15ec2575c844a930f5a6353f2cb6ee303d1dbf3132eff420f7
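Putting the two references side by side may help explain the difference: the failed install points at the library project, while the pull that works points at chartrepo, which is where the workflow pushed the chart. A small sketch that splits the references apart (the helper name is hypothetical):

```python
from urllib.parse import urlparse


def split_oci_ref(ref: str) -> dict:
    """Split an oci:// reference into registry, project, and chart name."""
    parsed = urlparse(ref)
    project, _, name = parsed.path.lstrip("/").rpartition("/")
    return {"registry": parsed.netloc, "project": project, "chart": name}


failed = split_oci_ref("oci://harbor.freshbrewed.science/library/logingest")
pulled = split_oci_ref("oci://harbor.freshbrewed.science/chartrepo/logingest")

# Print only the parts that differ between the two references
for key in failed:
    if failed[key] != pulled[key]:
        print(key, ":", failed[key], "vs", pulled[key])
```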

I’ll try using the tgz pulled from the helm pull

$ helm install logingest -n dblogapp2 --set image.repository=harbor.freshbrewed.science/library/logingest --set image.tag=0.0.1 --set db.host=db --set db.port=5432 --set db.name=logapp --set db.user=postgres --set db.password=postgrespass --set db.ssl=false ./logingest-0.1.0.tgz

Error: INSTALLATION FAILED: template: logingest/templates/service.yaml:4:11: executing "logingest/templates/service.yaml" at <include "logingest.fullname" .>: error calling include: template: no template "logingest.fullname" associated with template "gotpl"

I did a quick fix to add the missing _helpers.tpl and tried again

builder@LuiGi:/tmp/test$ helm pull oci://harbor.freshbrewed.science/chartrepo/logingest --version 0.1.3

Pulled: harbor.freshbrewed.science/chartrepo/logingest:0.1.3

Digest: sha256:b70549c36d4f40d120ad21fc0a58d89ae14ac2400f1f7935527d3eaedc6cd3be

builder@LuiGi:/tmp/test$ helm install logingest -n dblogapp2 --set image.repository=harbor.freshbrewed.science/library/logingest --set image.tag=0.0.1 --set db.host=db --set db.port=5432 --set db.name=logapp --set db.user=postgres --set db.password=postgrespass --set db.ssl=false ./logingest-0.1.3.tgz

NAME: logingest

LAST DEPLOYED: Wed Jun 18 08:19:33 2025

NAMESPACE: dblogapp2

STATUS: deployed

REVISION: 1

TEST SUITE: None

If we want to upgrade, we can do that as well with an OCI chart:

builder@LuiGi:/tmp/test$ helm pull oci://harbor.freshbrewed.science/chartrepo/logingest --version 0.1.4

Pulled: harbor.freshbrewed.science/chartrepo/logingest:0.1.4

Digest: sha256:63de55b4eacc008280acf83b520799f239bf4e8d5435cdf1a5040d80d3696771

builder@LuiGi:/tmp/test$ helm upgrade logingest -n dblogapp2 --set image.repository=harbor.freshbrewed.science/library/logingest --set image.tag=0.0.1 --set db.host=db --set db.port=5432 --set db.name=logapp --set db.user=postgres --set db.password=postgrespass --set db.ssl=false ./logingest-0.1.4.tgz

Release "logingest" has been upgraded. Happy Helming!

NAME: logingest

LAST DEPLOYED: Wed Jun 18 08:24:34 2025

NAMESPACE: dblogapp2

STATUS: deployed

REVISION: 2

TEST SUITE: None

Now that I see the pod running

$ kubectl get po -n dblogapp2

NAME READY STATUS RESTARTS AGE

backend-dc6c4c656-2qwvt 1/1 Running 0 3d21h

frontend-68478c959f-bbdhn 1/1 Running 0 3d21h

db-57464c6f55-d94bk 1/1 Running 0 3d21h

logingest-logingest-87b969469-4mtck 1/1 Running 0 12s

I’ll make an A Record so we can sort out Ingress next

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n logingest

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "cac0ae56-a113-4f19-89ee-0a57a961b9f6",

"fqdn": "logingest.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/logingest",

"name": "logingest",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

I’ll pull the values and add the ingress settings

builder@LuiGi:/tmp/test$ helm get values logingest -n dblogapp2 -o yaml > my-ingest.values.yaml

builder@LuiGi:/tmp/test$ helm get values logingest -n dblogapp2 -o yaml > my-ingest.values.yaml.bak

builder@LuiGi:/tmp/test$ helm get values logingest --all -n dblogapp2 -o yaml > my-ingest.values.yaml.all

builder@LuiGi:/tmp/test$ code .

builder@LuiGi:/tmp/test$ diff my-ingest.values.yaml my-ingest.values.yaml.bak

11,28d10

< ingress:

< annotations:

< cert-manager.io/cluster-issuer: azuredns-tpkpw

< ingress.kubernetes.io/ssl-redirect: "true"

< kubernetes.io/tls-acme: "true"

< nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

< nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

< className: nginx

< enabled: true

< hosts:

< - host: logingest.tpk.pw

< paths:

< - path: /

< pathType: Prefix

< tls:

< - hosts:

< - logingest.tpk.pw

< secretName: logingest-tls

I had another bug to sort out, but could then upgrade with these values

builder@LuiGi:/tmp/test$ helm pull oci://harbor.freshbrewed.science/chartrepo/logingest --version 0.1.5

Pulled: harbor.freshbrewed.science/chartrepo/logingest:0.1.5

Digest: sha256:1edaba40fdda34d348793e6c59b2181fa7600d022e88706243c15fd686900dc1

builder@LuiGi:/tmp/test$ helm upgrade logingest -f ./my-ingest.values.yaml -n dblogapp2 ./logingest-0.1.5.tgz

Release "logingest" has been upgraded. Happy Helming!

NAME: logingest

LAST DEPLOYED: Wed Jun 18 08:46:14 2025

NAMESPACE: dblogapp2

STATUS: deployed

REVISION: 2

TEST SUITE: None

I now have two ingresses

$ kubectl get ingress -n dblogapp2

NAME CLASS HOSTS ADDRESS PORTS AGE

dbappingress nginx dbapp.tpk.pw 80, 443 3d21h

logingest-logingest nginx logingest.tpk.pw 80, 443 75s

The pod seems good

$ kubectl logs -n dblogapp2 logingest-logingest-87b969469-bxhgx

Connected to PostgreSQL database

Logs table ready

Log microservice running on port 3000

Health check available at: http://localhost:3000/health

Log endpoint available at: http://localhost:3000/logs

So let’s push a payload

$ curl -X POST https://logingest.tpk.pw/logs -H "Content-Type: application/json" -u admin:password123 -d '{

"body": "This is a test log entry from curl.",

"project": "Project 1",

"type": "Info",

"avatar_src": "/rectangle-15.png",

"owner": "Alice",

"status": "Pending"

}'

{"success":true,"message":"Log entry created successfully","id":"1750254588"}
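For anything that needs to send logs from code rather than curl, the same request can be built with the Python standard library. This is a sketch that only constructs the request; the helper name is my own, and the credentials are the same test values used in the curl call:

```python
import base64
import json
from urllib.request import Request


def build_log_request(url: str, username: str, password: str, entry: dict) -> Request:
    """Build a POST with a JSON body and an HTTP Basic auth header."""
    # Basic auth is base64("username:password"), which is what curl -u sends
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return Request(
        url,
        data=json.dumps(entry).encode(),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


req = build_log_request(
    "https://logingest.tpk.pw/logs", "admin", "password123",
    {"body": "This is a test log entry from Python.", "owner": "Alice"},
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would actually send it; I left that out so the example has no network dependency.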

We can then login to the frontend to see the log entry reflected

IONOS DNS

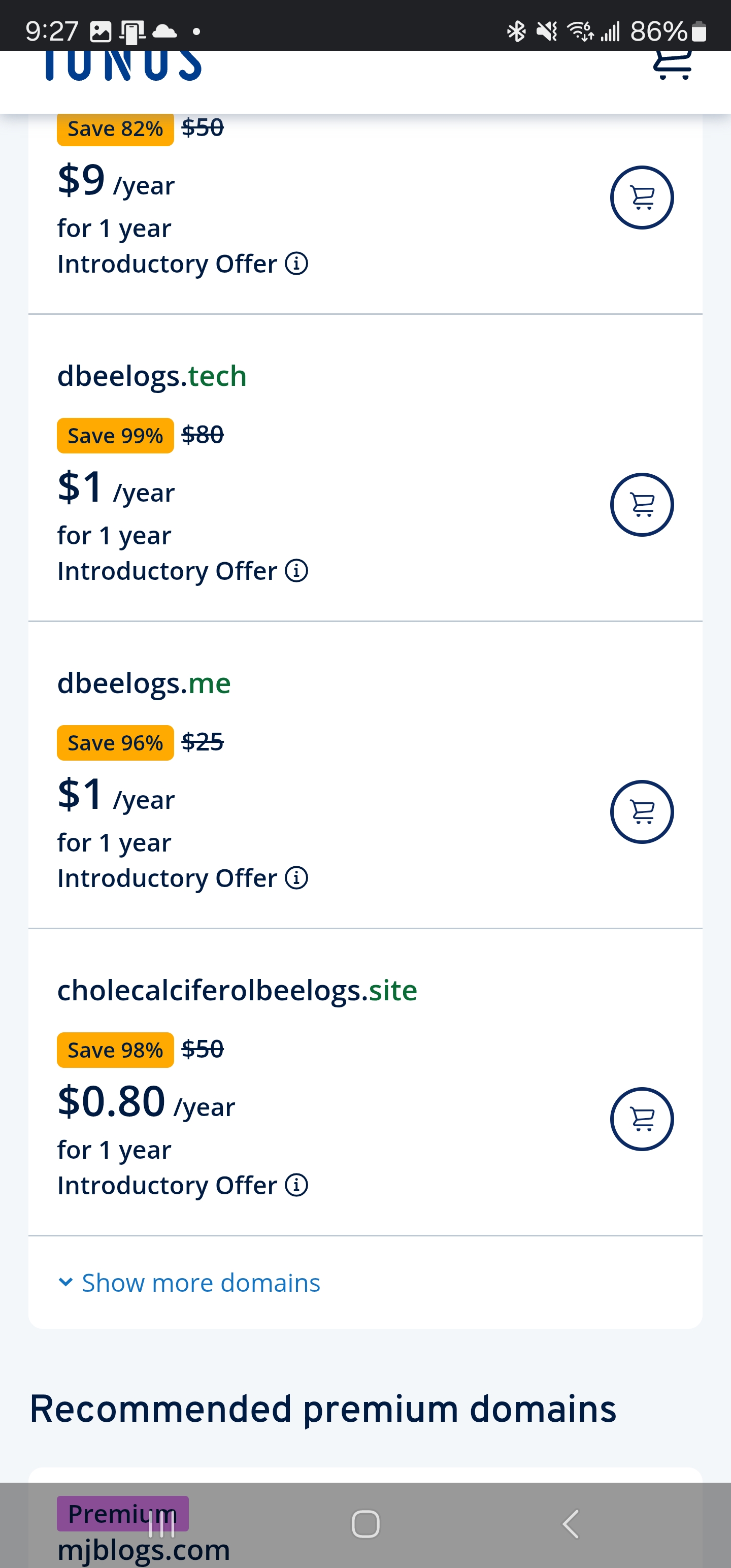

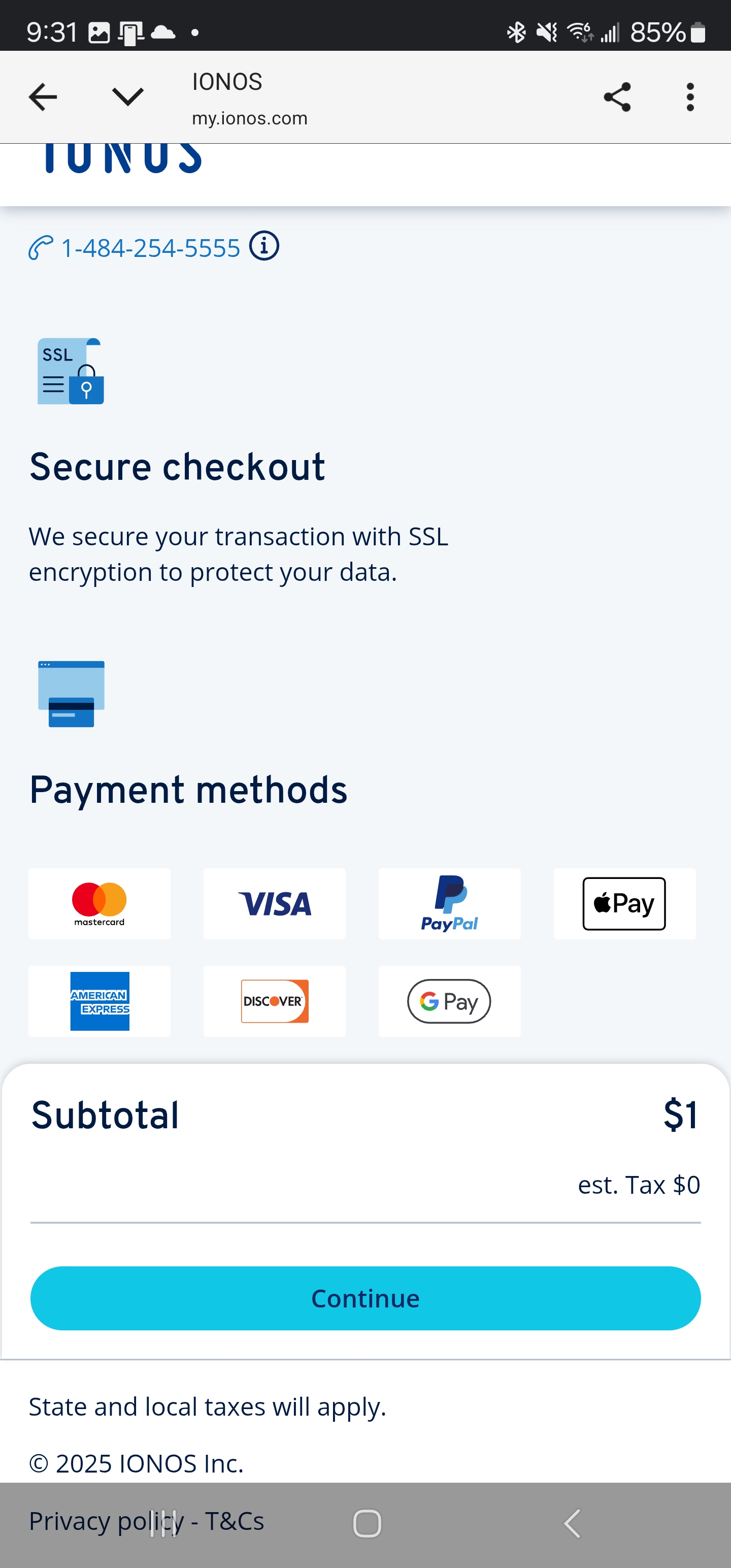

One of the challenges in this Hackathon was to use Ionos for a cheap or free domain.

I’ve essentially been using Gandi, a French registrar, for nearly 20 years, so I figured it was a good idea to try someone new anyhow.

I searched for domains on my phone

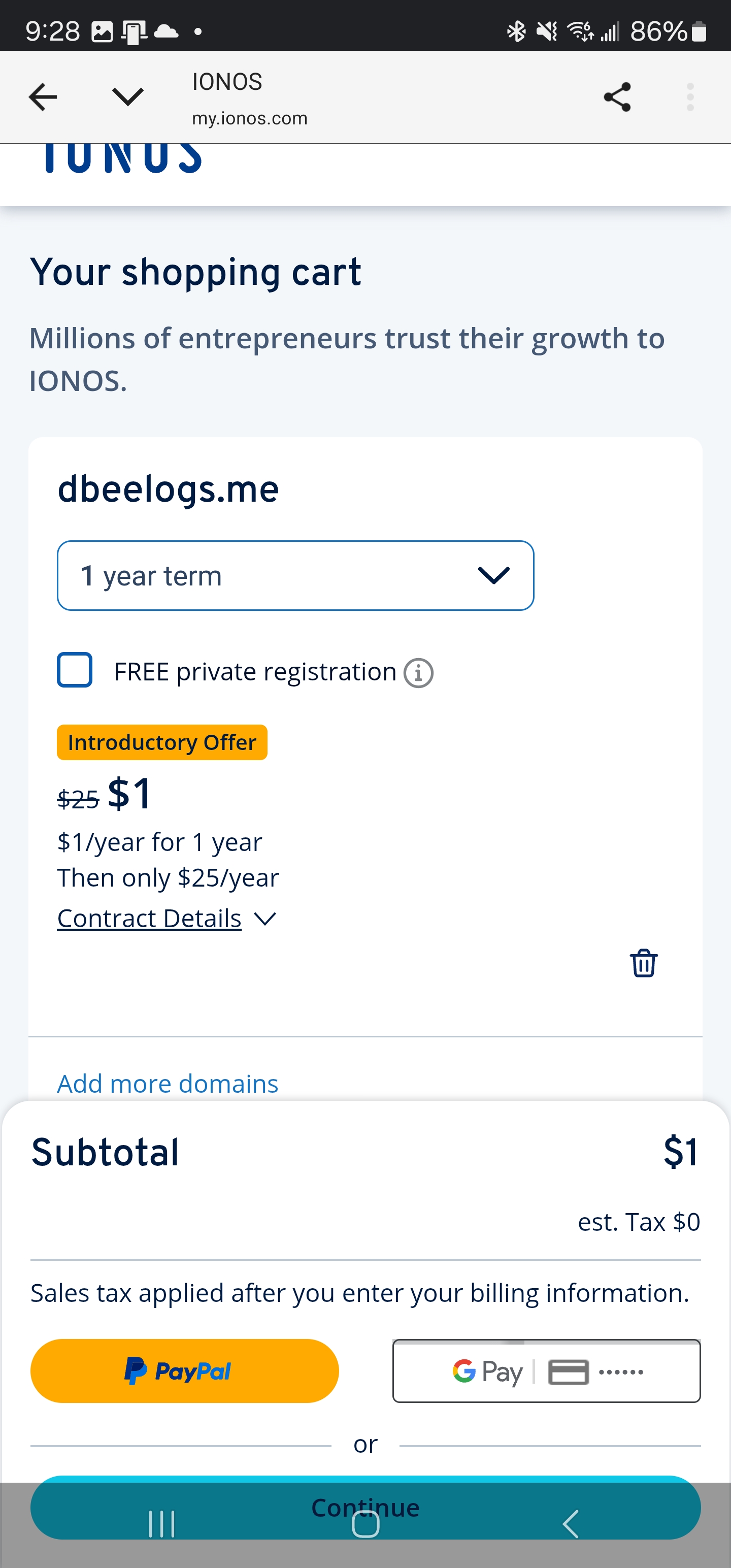

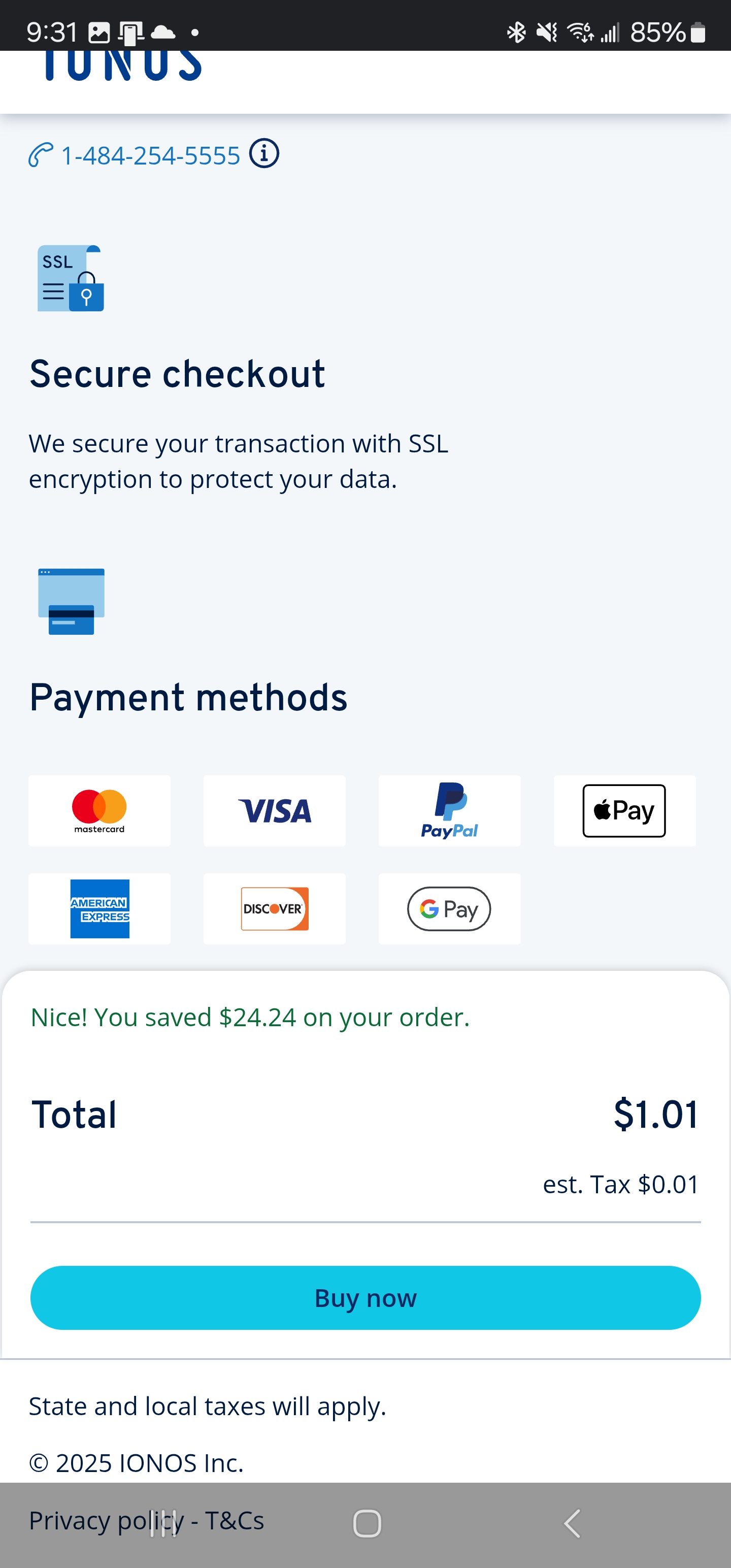

Indeed, it would be cheap - just US$1

They had lots of payment methods including Google Pay, which for online purchases is the one I prefer

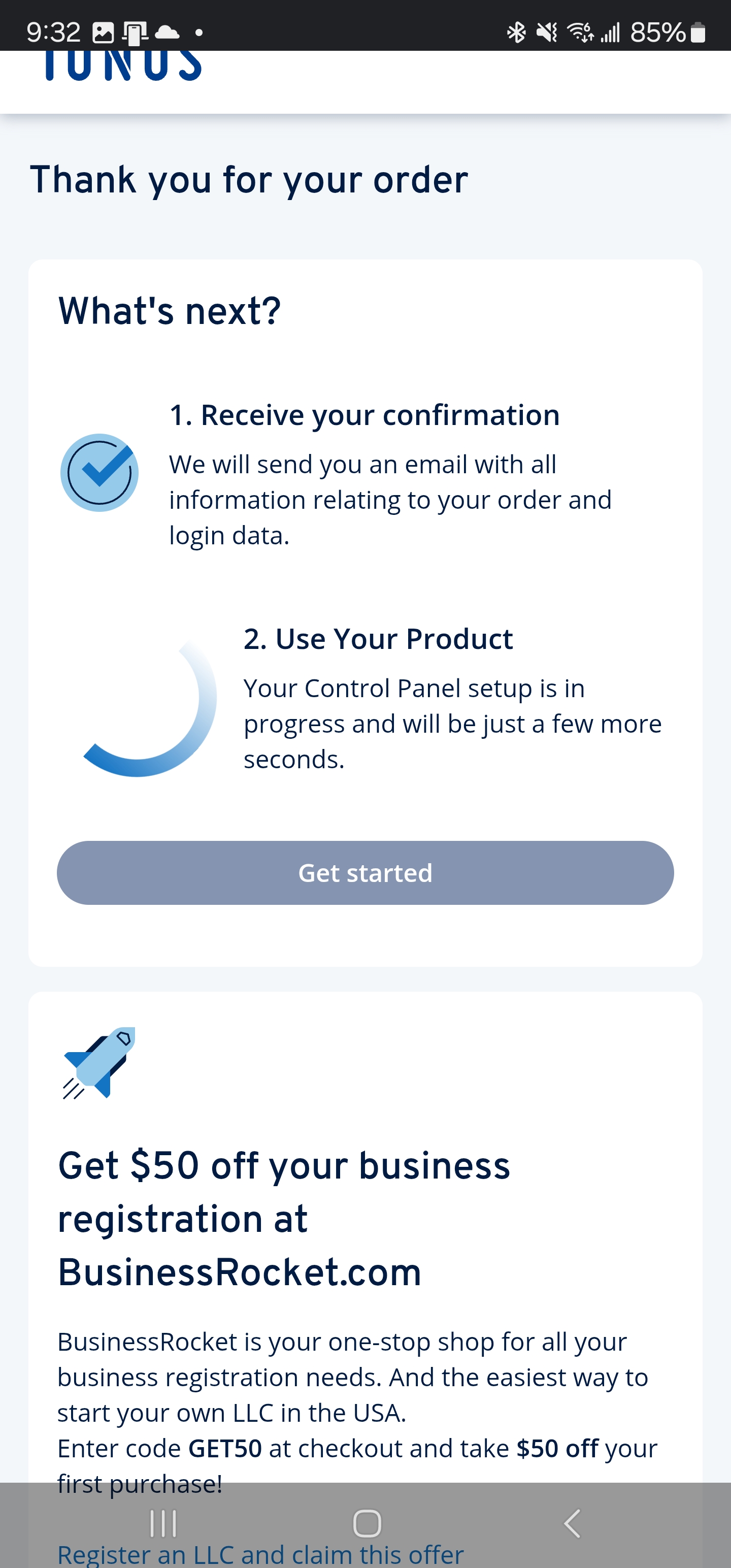

I entered my address and clicked Buy Now

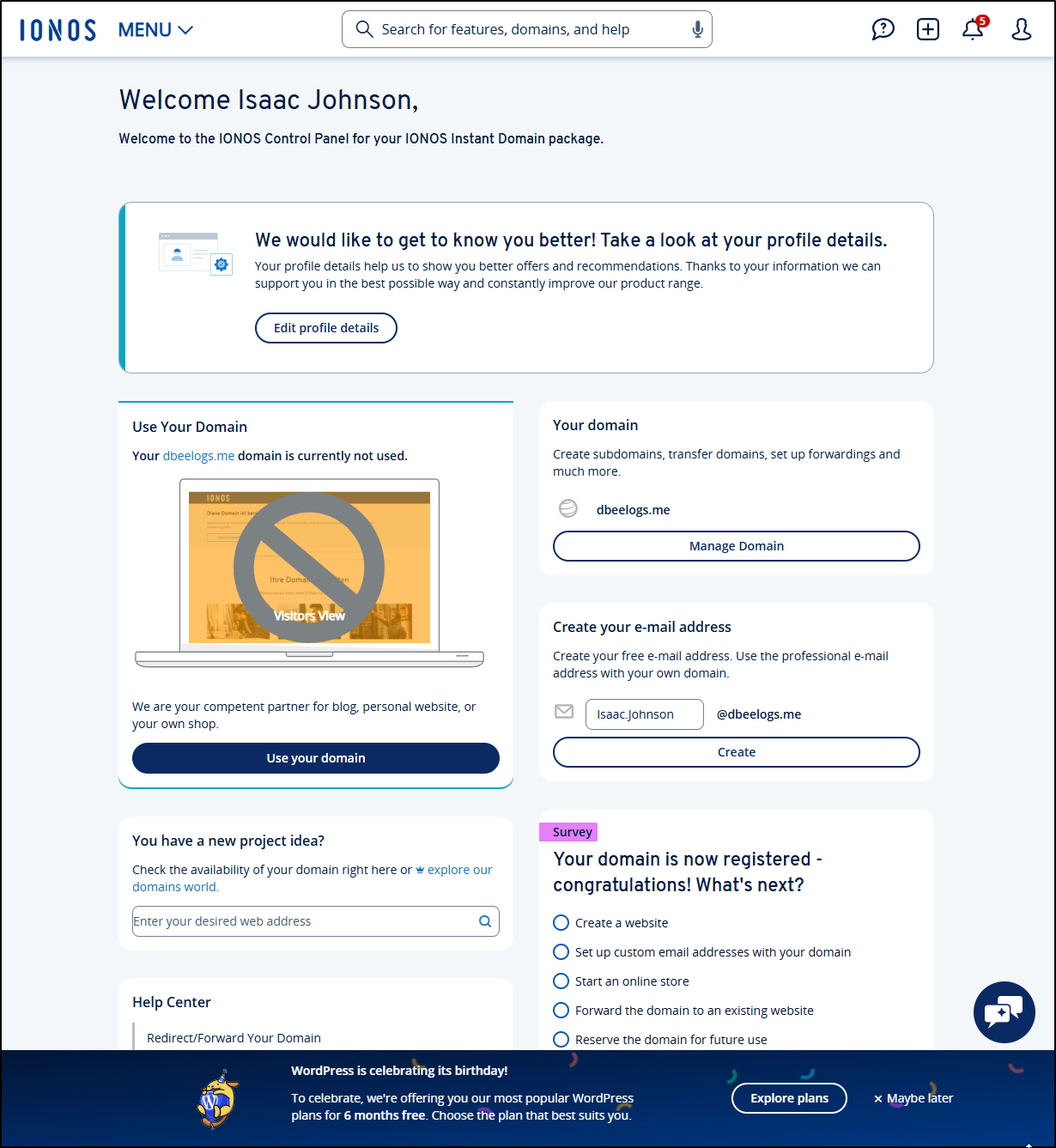

And it then took about 5 minutes to complete and build a dashboard. Since I needed to confirm emails, I switched to my PC for the rest

The dashboard is meant for the novice and will show you at least three ways to use them for hosting

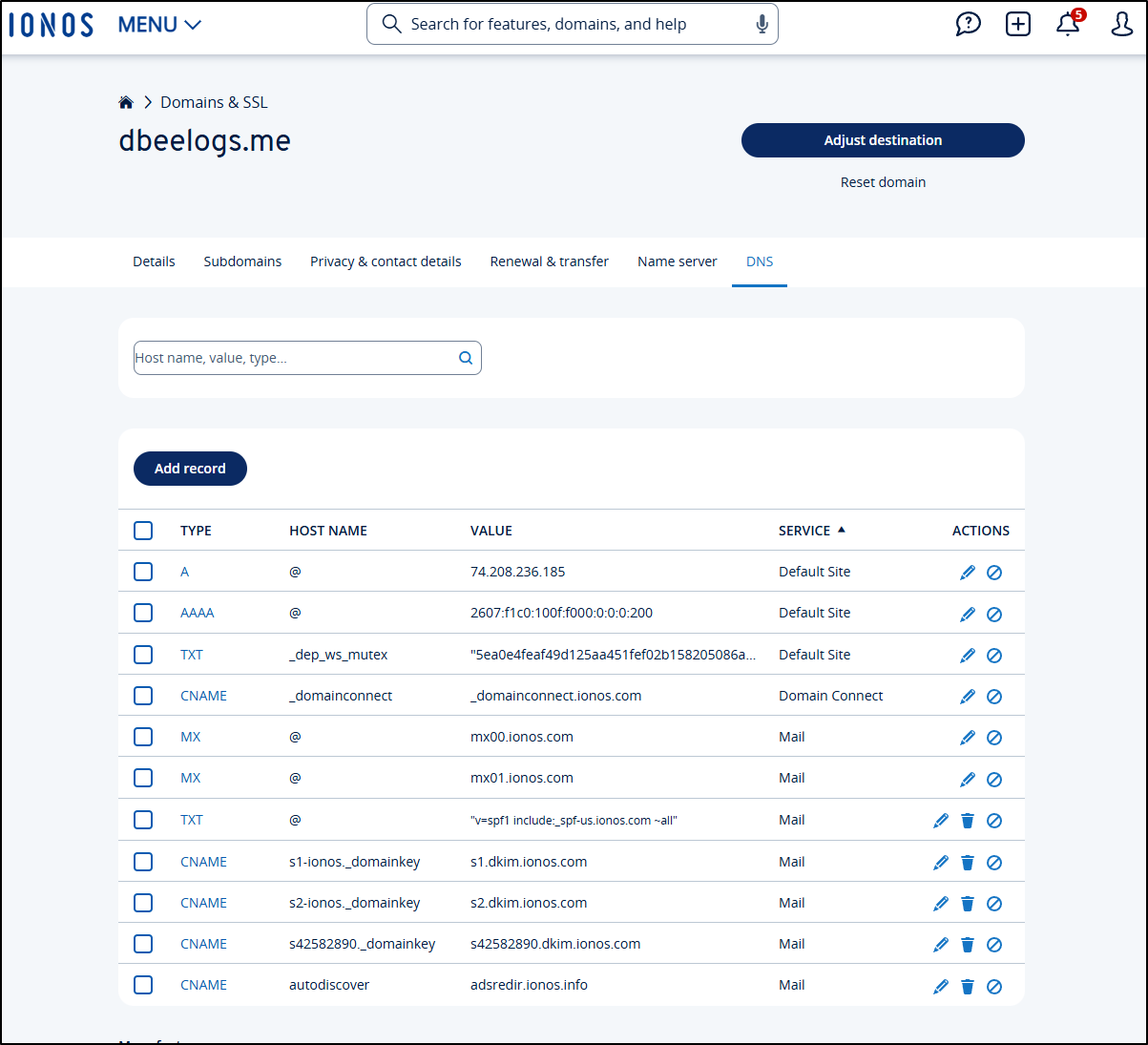

We can click “Manage the Domain” then move to “DNS” to just get right into DNS management

However, we are not ClickOps people, are we? Of course not!

So, let’s follow the Ionos documentation for a Cert Manager Webhook instead.

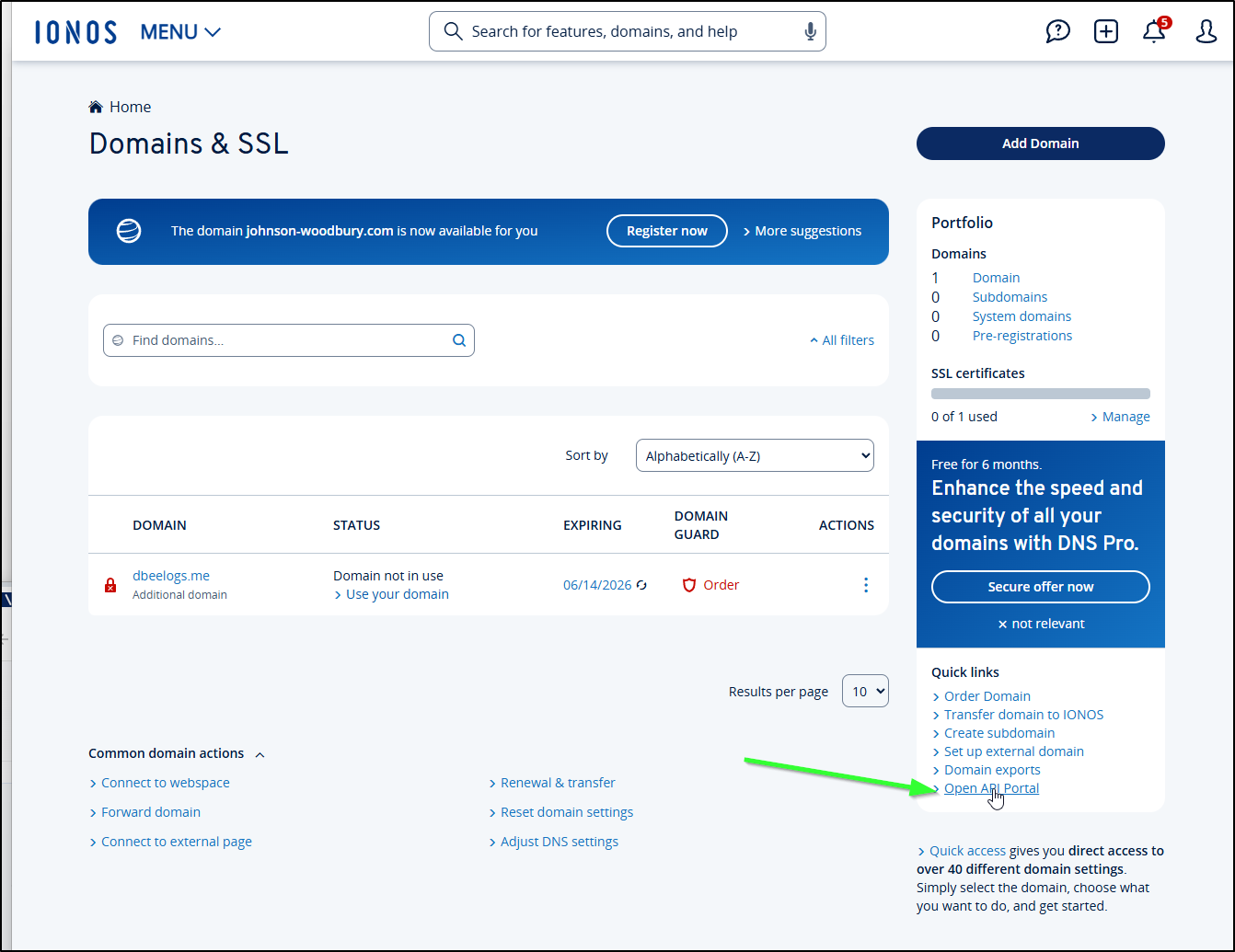

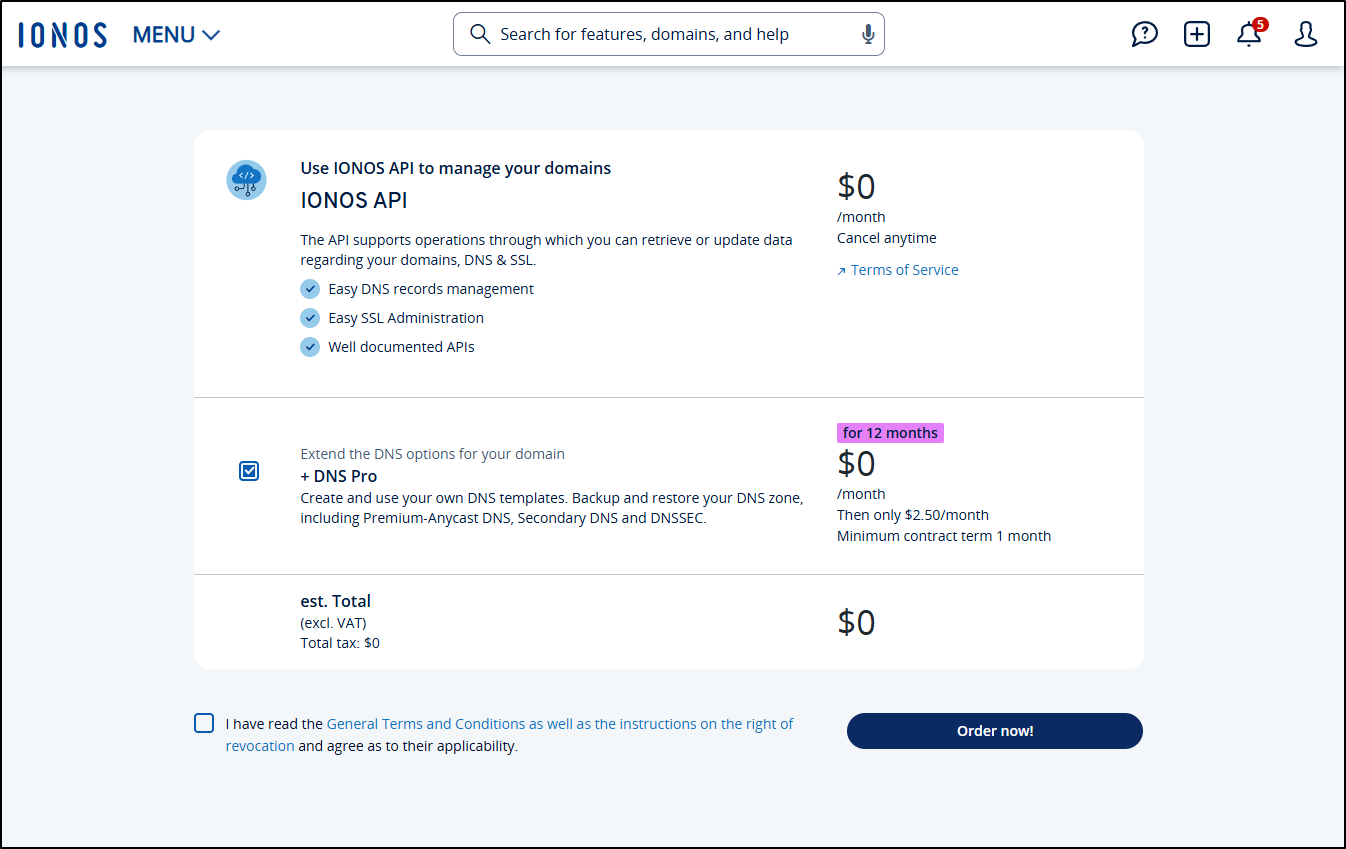

Finding the tokens was a bit of a challenge. And I find a subtle upsell here a bit annoying.

First, find a link to “Open the API Portal”

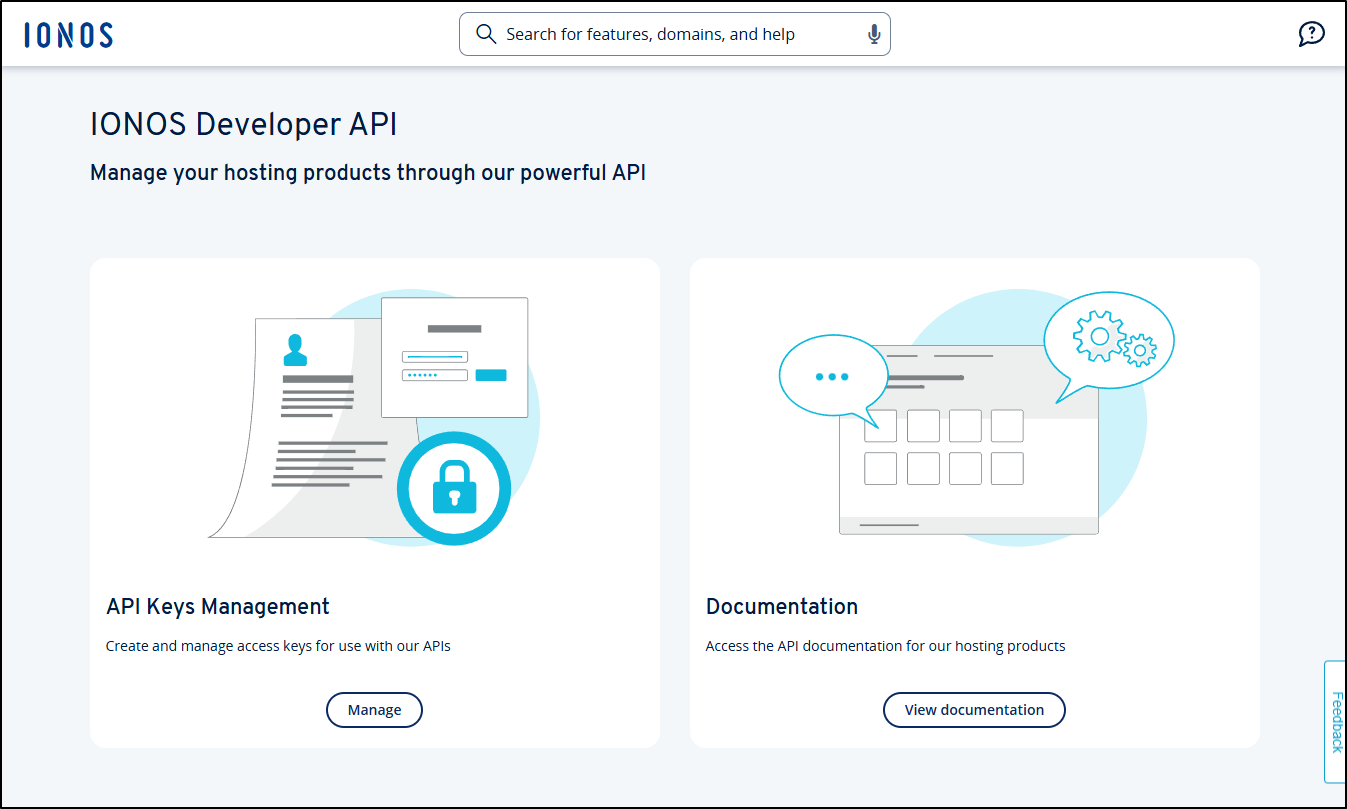

Ah, yes, here I can click to get at my API keys

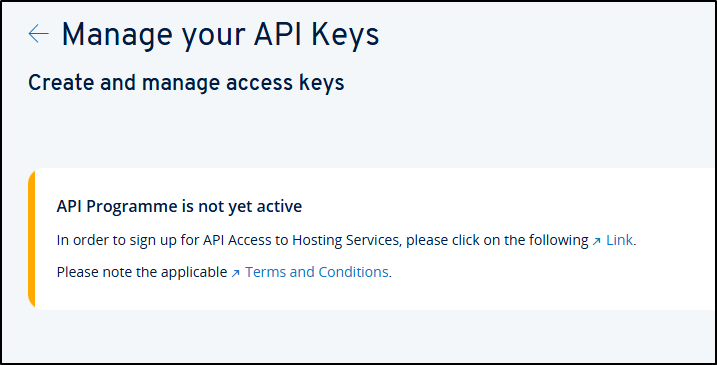

Then it notes you are not signed up for API access, but just follow this link to do so

Here is the part that irritates me: you can get API access for free, but you cannot avoid signing up for “DNS Pro”, which after a year will cost $25/year. I think that is kind of shady.

I signed up, so I’ll have to put a note in my calendar 11 months out to find out how to undo all this

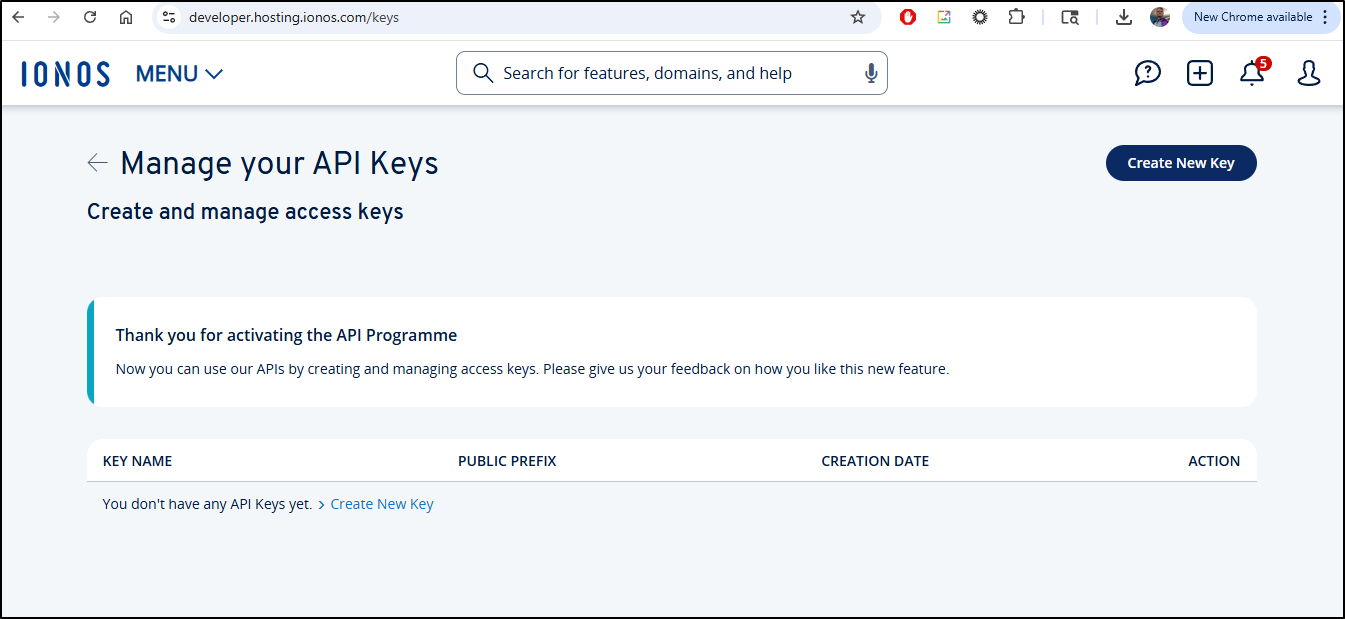

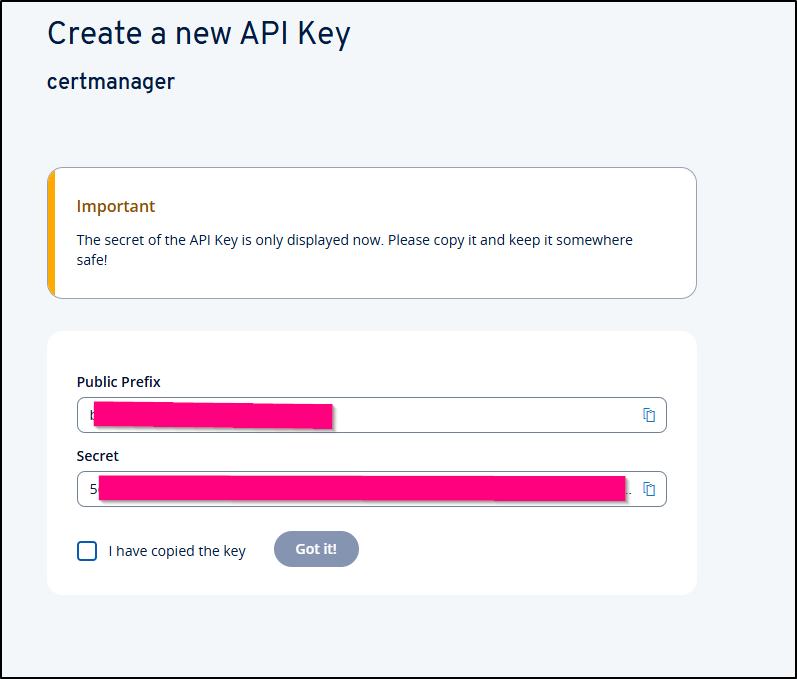

Once done, I can go to API Keys to “Create New Key”

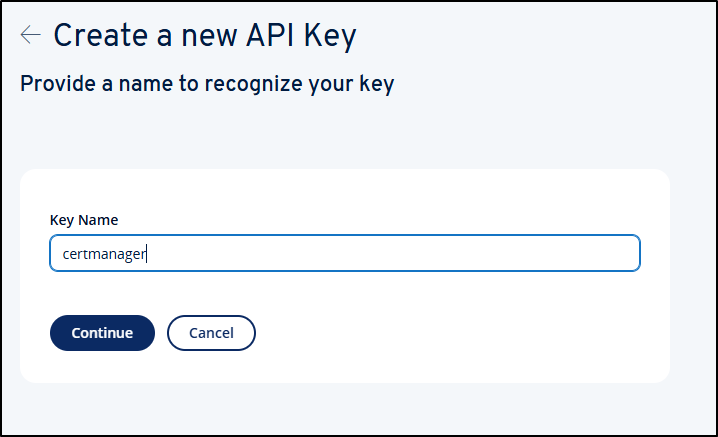

Give it a name

I’ll then note the details

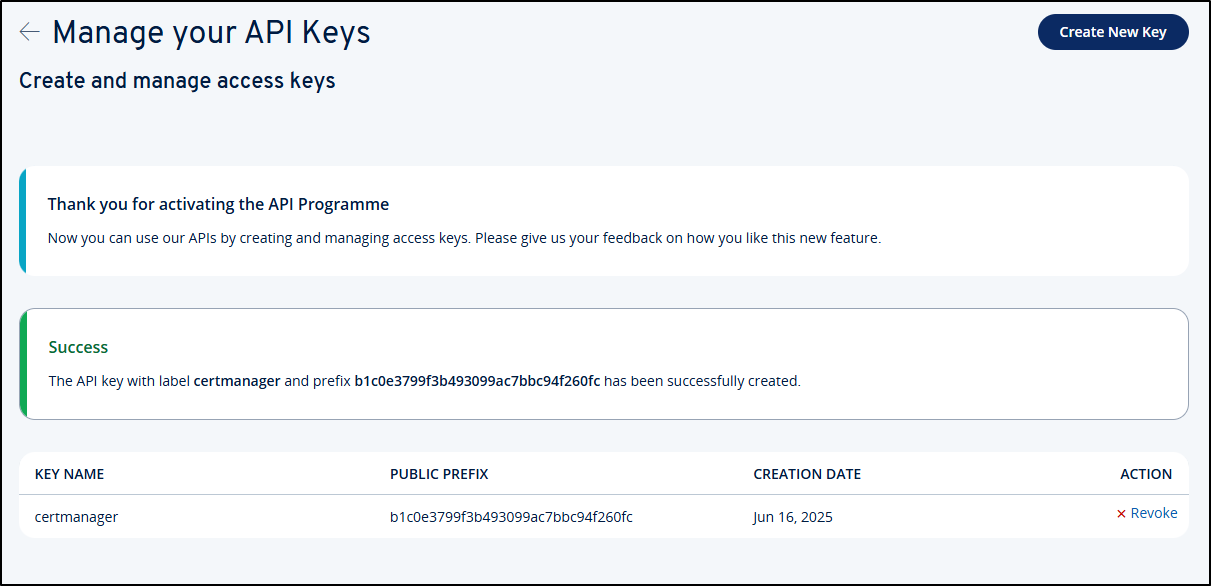

I can see it is live and revoke it at any point

Our next step is back in Kubernetes, where we will add the secret. I already set up cert-manager years ago.

$ kubectl create secret generic cert-manager-webhook-ionos-cloud \

-n cert-manager \

--from-literal=auth-token=<IONOS Cloud Token>

secret/cert-manager-webhook-ionos-cloud created

I’ll next need to install the IONOS Cloud Cert Manager webhook

$ helm repo add cert-manager-webhook-ionos-cloud https://ionos-cloud.github.io/cert-manager-webhook-ionos-cloud

"cert-manager-webhook-ionos-cloud" has been added to your repositories

$ helm repo update

$ helm upgrade cert-manager-webhook-ionos-cloud \

--namespace cert-manager \

--install cert-manager-webhook-ionos-cloud/cert-manager-webhook-ionos-cloud

Release "cert-manager-webhook-ionos-cloud" does not exist. Installing it now.

NAME: cert-manager-webhook-ionos-cloud

LAST DEPLOYED: Mon Jun 16 06:02:24 2025

NAMESPACE: cert-manager

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Congrats! you have installed the cert-manager-webhook-ionos-cloud chart.

The first step is creating an Issuer or ClusterIssuer.

For more details, check out the official repository: https://github.com/ionos-cloud/cert-manager-webhook-ionos-cloud

I already have ClusterIssuers for the big three:

$ kubectl get clusterissuer

NAME READY AGE

letsencrypt-prod True 470d

azuredns-tpkpw True 469d

gcp-le-prod True 327d

gcpleprod2 True 327d

I want to point out some differences between my ClusterIssuer and the one in the docs

$ cat ./clusterissuer.yaml

apiVersion: cert-manager.io/v1

kind: ClusterIssuer

metadata:

name: ionos-cloud-issuer

spec:

acme:

server: https://acme-v02.api.letsencrypt.org/directory

email: isaac.johnson@gmail.com

privateKeySecretRef:

name: letsencrypt-ionos-prod

solvers:

- dns01:

webhook:

groupName: acme.ionos.com

solverName: ionos-cloud

I did not want the same privateKeySecretRef, as their suggested letsencrypt-prod is already in use. I set my own email, of course, but the rest is what they suggested.

I’ll apply

$ kubectl apply -f ./clusterissuer.yaml

clusterissuer.cert-manager.io/ionos-cloud-issuer created

Now we can see it added when we list

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl get clusterissuer

NAME READY AGE

letsencrypt-prod True 470d

azuredns-tpkpw True 469d

gcp-le-prod True 327d

gcpleprod2 True 327d

ionos-cloud-issuer True 3s

We could try just applying a cert object without a resolver.

$ cat certtest.yaml

apiVersion: cert-manager.io/v1

kind: Certificate

metadata:

name: test-dblogs-me

namespace: default

spec:

secretName: test-dblogsme-tls

issuerRef:

name: ionos-cloud-issuer

kind: ClusterIssuer

commonName: 'test.dbeelogs.me'

duration: 8h0m0s

dnsNames:

- test.dbeelogs.me

To use the API keys with IONOS, be aware the format is “publicprefix.secret”. It took me a bit to figure that out.
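As a tiny sketch of that format, this is how the two halves combine into the value sent in the X-API-KEY header. The prefix and secret below are obvious placeholders and the function names are hypothetical:

```python
def ionos_api_key(public_prefix: str, secret: str) -> str:
    """Join the two parts of an IONOS API key as 'publicprefix.secret'."""
    if not public_prefix or not secret:
        raise ValueError("both the public prefix and the secret are required")
    return f"{public_prefix}.{secret}"


def ionos_headers(api_key: str) -> dict:
    """Headers for a JSON request to the DNS API, as in the curl calls."""
    return {"accept": "application/json", "X-API-KEY": api_key}


# Placeholder values, not real credentials
key = ionos_api_key("myprefix", "mysecret")
print(ionos_headers(key))
```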

I’ll get my Zone

$ curl -X GET -H 'accept: application/json' -H "X-API-KEY: $IONOSAPI" 'https://api.hosting.ionos.com/dns/v1/zones'

[{"name":"dbeelogs.me","id":"71d97303-492c-11f0-b1c9-0a5864440c57","type":"NATIVE"}]

Now I can use that to create an A-Record

curl -X 'POST' \

'https://api.hosting.ionos.com/dns/v1/zones/71d97303-492c-11f0-b1c9-0a5864440c57/records' \

-H 'accept: application/json' \

-H "X-API-KEY: $IONOSAPI" \

-H 'Content-Type: application/json' \

-d '[

{

"name": "test.dbeelogs.me",

"type": "A",

"content": "75.73.224.240",

"ttl": 3600,

"prio": 0,

"disabled": false

}

]'
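If more records are needed later, the JSON body is easy to generate rather than hand-edit. A sketch that mirrors the fields in the curl call above (the helper names are my own; the URL shape and field names are copied from that call):

```python
import json


def a_record(name: str, ip: str, ttl: int = 3600) -> dict:
    """One A record in the shape used by the curl request."""
    return {"name": name, "type": "A", "content": ip,
            "ttl": ttl, "prio": 0, "disabled": False}


def records_url(zone_id: str) -> str:
    """Records endpoint for a zone id returned by the zones listing."""
    return f"https://api.hosting.ionos.com/dns/v1/zones/{zone_id}/records"


# The API takes a list of records, so several can go in one call
payload = json.dumps([a_record("test.dbeelogs.me", "75.73.224.240")], indent=2)
print(records_url("71d97303-492c-11f0-b1c9-0a5864440c57"))
print(payload)
```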

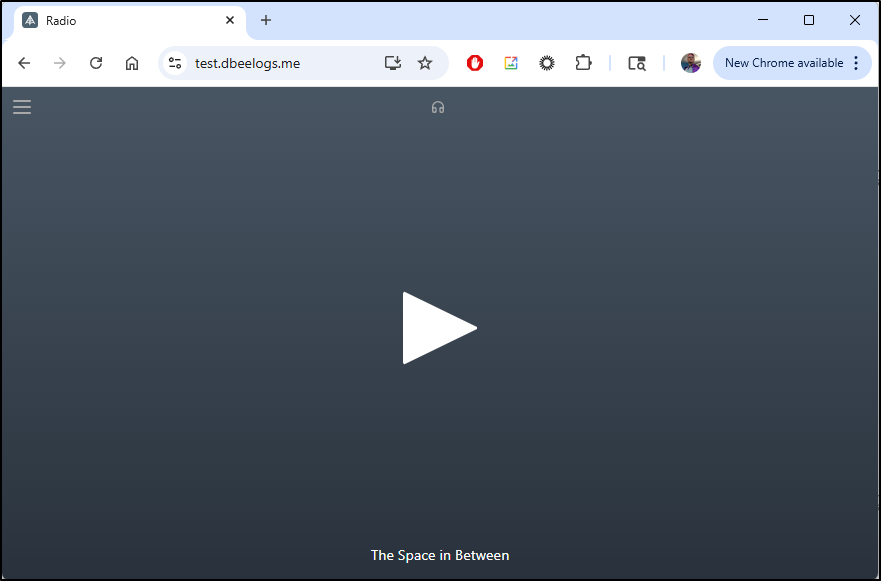

Before I move any further, I’ll do a quick test

$ cat ./test.ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: ionos-cloud-issuer

ingress.kubernetes.io/ssl-redirect: "true"

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

nginx.org/websocket-services: airstation-external-ip

name: testdbeelogsme

spec:

rules:

- host: test.dbeelogs.me

http:

paths:

- backend:

service:

name: airstation-external-ip

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- test.dbeelogs.me

secretName: testdblogsme-tls

$ kubectl apply -f ./test.ingress.yaml

ingress.networking.k8s.io/testdbeelogsme created

I can see the cert object created

$ kubectl get cert testdblogsme-tls

NAME READY SECRET AGE

testdblogsme-tls False testdblogsme-tls 18s

I gave it a while - but didn’t see it resolve.

I saw an error in the logs of the IONOS cert-manager webhook

$ kubectl logs cert-manager-webhook-ionos-cloud-79c495bb98-6jpzl -n cert-manager | tail -n1

{"level":"error","ts":1750073758.1446373,"caller":"resolver/resolver.go:137","msg":"Error fetching zone","error":"401 Unauthorized: {\"httpStatus\":401,\"messages\":[{\"errorCode\":\"paas-auth-1\",\"message\":\"Unauthorized, wrong or no api key provided to process this request\"}]}\n","stacktrace":"github.com/ionos-cloud/cert-manager-webhook-ionos-cloud/internal/resolver.(*ionosCloudDnsProviderResolver).findZone\n\t/home/runner/work/cert-manager-webhook-ionos-cloud/cert-manager-webhook-ionos-cloud/internal/resolver/resolver.go:137\ngithub.com/ionos-cloud/cert-manager-webhook-ionos-cloud/internal/resolver.(*ionosCloudDnsProviderResolver).Present\n\t/home/runner/work/cert-manager-webhook-ionos-cloud/cert-manager-webhook-ionos-cloud/internal/resolver/resolver.go:82\ngithub.com/cert-manager/cert-manager/pkg/acme/webhook/registry/challengepayload.(*REST).callSolver\n\t/home/runner/go/pkg/mod/github.com/cert-manager/cert-manager@v1.17.1/pkg/acme/webhook/registry/challengepayload/challenge_payload.go:90\ngithub.com/cert-manager/cert-manager/pkg/acme/webhook/registry/challengepayload.(*REST).Create\n\t/home/runner/go/pkg/mod/github.com/cert-manager/cert-manager@v1.17.1/pkg/acme/webhook/registry/challengepayload/challenge_payload.go:70\nk8s.io/apiserver/pkg/endpoints/handlers.(*namedCreaterAdapter).Create\n\t/home/runner/go/pkg/mod/k8s.io/apiserver@v0.32.2/pkg/endpoints/handlers/create.go:254\nk8s.io/apiserver/pkg/endpoints/handlers.CreateResource.createHandler.func1.1\n\t/home/runner/go/pkg/mod/k8s.io/apiserver@v0.32.2/pkg/endpoints/handlers/create.go:184\nk8s.io/apiserver/pkg/endpoints/handlers.CreateResource.createHandler.func1.2\n\t/home/runner/go/pkg/mod/k8s.io/apiserver@v0.32.2/pkg/endpoints/handlers/create.go:209\nk8s.io/apiserver/pkg/endpoints/handlers/finisher.finishRequest.func1\n\t/home/runner/go/pkg/mod/k8s.io/apiserver@v0.32.2/pkg/endpoints/handlers/finisher/finisher.go:117"}
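That log line is JSON with a second JSON document embedded in the error field, so the useful part is easy to miss behind the stack trace. A sketch of pulling out just the essentials (the sample below is a shortened copy of the line above with the stacktrace field dropped, and the function name is hypothetical):

```python
import json


def summarize_webhook_error(log_line: str) -> dict:
    """Extract the level, message, HTTP status, and API messages from a log line."""
    entry = json.loads(log_line)
    # The error looks like "401 Unauthorized: {json...}"
    status, _, detail = entry.get("error", "").partition(": ")
    try:
        messages = [m["message"] for m in json.loads(detail).get("messages", [])]
    except (ValueError, AttributeError, KeyError, TypeError):
        messages = []  # detail was not the JSON shape we expected
    return {"level": entry.get("level"), "msg": entry.get("msg"),
            "status": status, "messages": messages}


# Shortened sample built with json.dumps to avoid hand-escaping quotes
sample_line = json.dumps({
    "level": "error",
    "msg": "Error fetching zone",
    "error": '401 Unauthorized: {"httpStatus":401,"messages":[{"errorCode":"paas-auth-1",'
             '"message":"Unauthorized, wrong or no api key provided to process this request"}]}\n',
})

print(summarize_webhook_error(sample_line))
```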

Could it be that we need the same public.secret key format for the token?

I’ll delete the ingress

$ kubectl delete -f ./test.ingress.yaml

ingress.networking.k8s.io "testdbeelogsme" deleted

I’ll swap out secrets

$ kubectl delete secret cert-manager-webhook-ionos-cloud -n cert-manager && kubectl create secret generic cert-manager-webhook-ionos-cloud -n cert-manager --from-literal=auth-token=$IONOSAPI
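One thing worth ruling out after a swap like this is that the stored token is empty or padded, for instance if `$IONOSAPI` was not set in the current shell. The sketch below assumes the secret was dumped with `kubectl get secret cert-manager-webhook-ionos-cloud -n cert-manager -o json` and loaded into a dict. The helper names and the sample value are hypothetical.

```python
from __future__ import annotations

import base64


def decode_secret(secret: dict) -> dict[str, str]:
    """Decode the base64 values under .data of a Secret dumped as JSON."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret.get("data", {}).items()
    }


def token_problems(token: str) -> list[str]:
    """List obvious problems with a token; an empty list means none were found."""
    problems = []
    if not token:
        problems.append("token is empty (was the shell variable set?)")
    if token != token.strip():
        problems.append("token has leading or trailing whitespace")
    return problems


# Hypothetical Secret with a placeholder token that ends in a newline.
example = {
    "data": {
        "auth-token": base64.b64encode(b"example-token\n").decode("ascii"),
    }
}

token = decode_secret(example)["auth-token"]
print(token_problems(token))
```

A clean result only rules out formatting problems. It does not say whether the API accepts the token.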

Then rotate the cert-manager-webhook-ionos-cloud pod to ensure it picks up the latest secret

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl get po -n cert-manager

NAME READY STATUS RESTARTS AGE

cert-manager-webhook-6866f7bff4-rhn9k 1/1 Running 2 (74d ago) 471d

cert-manager-cainjector-59d8d796bb-5vzjd 1/1 Running 0 219d

cert-manager-5b65c54cc8-wkwkc 1/1 Running 0 53d

cert-manager-webhook-ionos-cloud-79c495bb98-6jpzl 1/1 Running 0 38m

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl delete po cert-manager-webhook-ionos-cloud-79c495bb98-6jpzl -n cert-manager

pod "cert-manager-webhook-ionos-cloud-79c495bb98-6jpzl" deleted

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl get po -n cert-manager

NAME READY STATUS RESTARTS AGE

cert-manager-webhook-6866f7bff4-rhn9k 1/1 Running 2 (74d ago) 471d

cert-manager-cainjector-59d8d796bb-5vzjd 1/1 Running 0 219d

cert-manager-5b65c54cc8-wkwkc 1/1 Running 0 53d

cert-manager-webhook-ionos-cloud-79c495bb98-ztfmz 0/1 Running 0 5s

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl get po -n cert-manager

NAME READY STATUS RESTARTS AGE

cert-manager-webhook-6866f7bff4-rhn9k 1/1 Running 2 (74d ago) 471d

cert-manager-cainjector-59d8d796bb-5vzjd 1/1 Running 0 219d

cert-manager-5b65c54cc8-wkwkc 1/1 Running 0 53d

cert-manager-webhook-ionos-cloud-79c495bb98-ztfmz 1/1 Running 0 7s
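The two `kubectl get po` calls above are a manual poll for READY to flip from 0/1 to 1/1. As a rough sketch, the same check can be done by parsing that output. The function name `pods_ready` is made up for illustration, and the two sample rows are copied from the listings above.

```python
def pods_ready(get_po_output: str, name_prefix: str) -> bool:
    """True when every pod whose name starts with name_prefix shows READY as n/n."""
    matches = []
    for line in get_po_output.splitlines():
        fields = line.split()
        # Skip blank lines, the header row, and pods we are not interested in.
        if len(fields) < 2 or not fields[0].startswith(name_prefix):
            continue
        ready, sep, total = fields[1].partition("/")
        if not sep:
            continue
        matches.append(ready == total)
    # No matching pod at all counts as "not ready".
    return bool(matches) and all(matches)


header = "NAME READY STATUS RESTARTS AGE"
starting = header + "\ncert-manager-webhook-ionos-cloud-79c495bb98-ztfmz 0/1 Running 0 5s"
settled = header + "\ncert-manager-webhook-ionos-cloud-79c495bb98-ztfmz 1/1 Running 0 7s"

print(pods_ready(starting, "cert-manager-webhook-ionos-cloud"))
print(pods_ready(settled, "cert-manager-webhook-ionos-cloud"))
```

If a label selector for the pod is known, `kubectl wait --for=condition=Ready` can block until it is ready, which avoids parsing text at all.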

This looks good

$ kubectl logs cert-manager-webhook-ionos-cloud-79c495bb98-ztfmz -n cert-manager

{"level":"info","ts":1750074073.3924236,"caller":"webhook/main.go:31","msg":"Starting webhook server"}

I0616 11:41:14.403213 1 handler.go:286] Adding GroupVersion acme.ionos.com v1alpha1 to ResourceManager

I0616 11:41:14.415755 1 requestheader_controller.go:180] Starting RequestHeaderAuthRequestController

I0616 11:41:14.415804 1 shared_informer.go:313] Waiting for caches to sync for RequestHeaderAuthRequestController

I0616 11:41:14.415820 1 configmap_cafile_content.go:205] "Starting controller" name="client-ca::kube-system::extension-apiserver-authentication::client-ca-file"

I0616 11:41:14.415854 1 shared_informer.go:313] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0616 11:41:14.415858 1 configmap_cafile_content.go:205] "Starting controller" name="client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file"

I0616 11:41:14.415871 1 shared_informer.go:313] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0616 11:41:14.416329 1 dynamic_serving_content.go:135] "Starting controller" name="serving-cert::/tls/tls.crt::/tls/tls.key"

I0616 11:41:14.416813 1 secure_serving.go:213] Serving securely on [::]:8443

I0616 11:41:14.416918 1 tlsconfig.go:243] "Starting DynamicServingCertificateController"

{"level":"info","ts":1750074074.4169164,"caller":"resolver/resolver.go:122","msg":"IONOS Cloud resolver initialized"}

I0616 11:41:14.516253 1 shared_informer.go:320] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0616 11:41:14.516257 1 shared_informer.go:320] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0616 11:41:14.516288 1 shared_informer.go:320] Caches are synced for RequestHeaderAuthRequestController

Still an error

{"level":"error","ts":1750074136.5479589,"caller":"resolver/resolver.go:137","msg":"Error fetching zone","error":"401 Unauthorized: {\"httpStatus\":401,\"messages\":[{\"errorCode\":\"paas-auth-1\",\"message\":\"Unauthorized, wrong or no api key provided to process this request\"}]}\n","stacktrace":"github.com/ionos-cloud/cert-manager-webhook-ionos-cloud/internal/resolver.(*ionosCloudDnsProviderResolver).findZone\n\t/home/runner/work/cert-manager-webhook-ionos-cloud/cert-manager-webhook-ionos-cloud/internal/resolver/resolver.go:137\ngithub.com/ionos-cloud/cert-manager-webhook-ionos-cloud/internal/resolver.(*ionosCloudDnsProviderResolver).Present\n\t/home/runner/work/cert-manager-webhook-ionos-cloud/cert-manager-webhook-ionos-cloud/internal/resolver/resolver.go:82\ngithub.com/cert-manager/cert-manager/pkg/acme/webhook/registry/challengepayload.(*REST).callSolver\n\t/home/runner/go/pkg/mod/github.com/cert-manager/cert-manager@v1.17.1/pkg/acme/webhook/registry/challengepayload/challenge_payload.go:90\ngithub.com/cert-manager/cert-manager/pkg/acme/webhook/registry/challengepayload.(*REST).Create\n\t/home/runner/go/pkg/mod/github.com/cert-manager/cert-manager@v1.17.1/pkg/acme/webhook/registry/challengepayload/challenge_payload.go:70\nk8s.io/apiserver/pkg/endpoints/handlers.(*namedCreaterAdapter).Create\n\t/home/runner/go/pkg/mod/k8s.io/apiserver@v0.32.2/pkg/endpoints/handlers/create.go:254\nk8s.io/apiserver/pkg/endpoints/handlers.CreateResource.createHandler.func1.1\n\t/home/runner/go/pkg/mod/k8s.io/apiserver@v0.32.2/pkg/endpoints/handlers/create.go:184\nk8s.io/apiserver/pkg/endpoints/handlers.CreateResource.createHandler.func1.2\n\t/home/runner/go/pkg/mod/k8s.io/apiserver@v0.32.2/pkg/endpoints/handlers/create.go:209\nk8s.io/apiserver/pkg/endpoints/handlers/finisher.finishRequest.func1\n\t/home/runner/go/pkg/mod/k8s.io/apiserver@v0.32.2/pkg/endpoints/handlers/finisher/finisher.go:117"}

And, of course, no cert

$ kubectl get cert testdblogsme-tls

NAME READY SECRET AGE

testdblogsme-tls False testdblogsme-tls 3m7s
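The READY column only says the certificate is not issued. The reason usually sits in the `status.conditions` list of the Certificate. Here is a small sketch that pulls out the Ready condition, assuming the object was fetched with `kubectl get cert testdblogsme-tls -o json`. The sample status below is illustrative and was not captured from this cluster.

```python
def ready_condition(cert: dict) -> str:
    """Summarize the Ready condition of a cert-manager Certificate dumped as JSON."""
    for cond in cert.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            return (
                f'Ready={cond.get("status")} '
                f'reason={cond.get("reason")} '
                f'message={cond.get("message")}'
            )
    return "no Ready condition reported yet"


# Illustrative sample only; real reason and message values will differ.
example_cert = {
    "status": {
        "conditions": [
            {
                "type": "Ready",
                "status": "False",
                "reason": "DoesNotExist",
                "message": "Issuing certificate as Secret does not exist",
            }
        ]
    }
}

print(ready_condition(example_cert))
```

For DNS01 failures like this one, the Challenge resources tend to carry the more specific error, so `kubectl describe challenge` is worth a look as well.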

I shouldn’t need to, but let’s specify the secret to use

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl get clusterissuer ionos-cloud-issuer -o yaml > ionos.cluster.issuer.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl get clusterissuer ionos-cloud-issuer -o yaml > ionos.cluster.issuer.yaml.bak

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ vi ionos.cluster.issuer.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ diff ionos.cluster.issuer.yaml ionos.cluster.issuer.yaml.bak

23,25d22

< config:

< secretRef: cert-manager-webhook-ionos-cloud

< authTokenSecretKey: auth-token

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl delete -f ./ionos.cluster.issuer.yaml

clusterissuer.cert-manager.io "ionos-cloud-issuer" deleted

builder@DESKTOP-QADGF36:~/Workspaces/dbLogApp2$ kubectl apply -f ./ionos.cluster.issuer.yaml

clusterissuer.cert-manager.io/ionos-cloud-issuer created

Fabmade cert manager

Let’s ditch the one from Ionos and try this one from Fabmade.

The two can be installed side by side, since the Ionos release is named cert-manager-webhook-ionos-cloud and this one is cert-manager-webhook-ionos.

$ helm repo add cert-manager-webhook-ionos https://fabmade.github.io/cert-manager-webhook-ionos

"cert-manager-webhook-ionos" has been added to your repositories

$ helm install cert-manager-webhook-ionos cert-manager-webhook-ionos/cert-manager-webhook-ionos -ncert-manager

NAME: cert-manager-webhook-ionos

LAST DEPLOYED: Mon Jun 16 06:53:24 2025

NAMESPACE: cert-manager

STATUS: deployed

REVISION: 1

TEST SUITE: None

I’ll now create the secret for the Fabmade issuer

$ cat ./fabmade.secret.yaml

apiVersion: v1

stringData:

  IONOS_PUBLIC_PREFIX: b1c0e3799f3b493099ac7bbc94f260fc

  IONOS_SECRET: asdfsadfasdfasdf

kind: Secret

metadata:

  name: ionos-secret

  namespace: cert-manager

type: Opaque

$ kubectl apply -f ./fabmade.secret.yaml

secret/ionos-secret created

Then the ClusterIssuer (using a unique name for the ACME account key secret)

$ cat ./fabmade.prod.clusterissuer.yaml

apiVersion: cert-manager.io/v1

kind: ClusterIssuer

metadata:

  name: letsencrypt-ionos-prod

spec:

  acme:

    # The ACME server URL

    server: https://acme-v02.api.letsencrypt.org/directory

    # Email address used for ACME registration

    email: isaac.johnson@gmail.com

    # Name of a secret used to store the ACME account private key

    privateKeySecretRef:

      name: letsencrypt-ionos-prod-fabmade

    # Enable the dns01 challenge provider

    solvers:

      - dns01:

          webhook:

            groupName: acme.fabmade.de

            solverName: ionos

            config:

              apiUrl: https://api.hosting.ionos.com/dns/v1

              publicKeySecretRef:

                key: IONOS_PUBLIC_PREFIX

                name: ionos-secret

              secretKeySecretRef:

                key: IONOS_SECRET

                name: ionos-secret

$ kubectl apply -f ./fabmade.prod.clusterissuer.yaml

clusterissuer.cert-manager.io/letsencrypt-ionos-prod created
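The ClusterIssuer above points at the IONOS hosting DNS API and references the key as two halves, a public prefix and a secret. As far as I understand that API, it expects the halves joined as `prefix.secret` in an `X-API-Key` header, so a key pair can be sanity-checked outside the cluster. This is a sketch under that assumption. The helper names are hypothetical and the credentials are placeholders.

```python
from __future__ import annotations

import json
import urllib.request

API_URL = "https://api.hosting.ionos.com/dns/v1"  # same apiUrl as the ClusterIssuer


def hosting_api_request(prefix: str, secret: str, api_url: str = API_URL) -> tuple[str, dict[str, str]]:
    """Build the URL and headers for listing zones (assumed X-API-Key scheme)."""
    url = api_url.rstrip("/") + "/zones"
    headers = {
        "X-API-Key": f"{prefix}.{secret}",
        "Accept": "application/json",
    }
    return url, headers


def list_zones(prefix: str, secret: str) -> list:
    """Call the API. urllib raises HTTPError (for example on a 401) if the key is rejected."""
    url, headers = hosting_api_request(prefix, secret)
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


# Placeholders only; no network call is made here.
url, headers = hosting_api_request("myprefix", "mysecret")
print(url)
print(headers["X-API-Key"])
```

Calling `list_zones` with the real prefix and secret should either return the zones for the account or fail with the same kind of 401 seen in the webhook logs.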

This time, when I applied my ingress, I didn’t see the webhook fall over in the logs

$ kubectl logs cert-manager-webhook-ionos-6b46dcdb5c-d4fw4 -n cert-manager

I0616 11:53:44.442312 1 handler.go:286] Adding GroupVersion acme.fabmade.de v1alpha1 to ResourceManager

I0616 11:53:44.453074 1 configmap_cafile_content.go:202] "Starting controller" name="client-ca::kube-system::extension-apiserver-authentication::client-ca-file"

I0616 11:53:44.453098 1 configmap_cafile_content.go:202] "Starting controller" name="client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file"

I0616 11:53:44.453073 1 requestheader_controller.go:169] Starting RequestHeaderAuthRequestController

I0616 11:53:44.453357 1 shared_informer.go:313] Waiting for caches to sync for RequestHeaderAuthRequestController

I0616 11:53:44.453102 1 shared_informer.go:313] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0616 11:53:44.453119 1 shared_informer.go:313] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file