Published: May 1, 2025 by Isaac Johnson

Fiona Apple famously got on stage and declared to everyone watching “This world is Bullshit!”, then went on to give a rather crazy awards speech. This isn’t really about Fiona Apple, or any other famous person, questioning the reality of the media. It’s about recognizing that there are times when we must say “the emperor has no clothes” and “this (AI hype) is Bullshit”: the media is wrong, and what you see is not real. I feel that with AI we’re reaching a point where some of us need to decide whether to take the red pill or the blue pill. To be fair, AI has good parts and AI has value, but we are chasing it as a magic elixir that will solve all our problems.

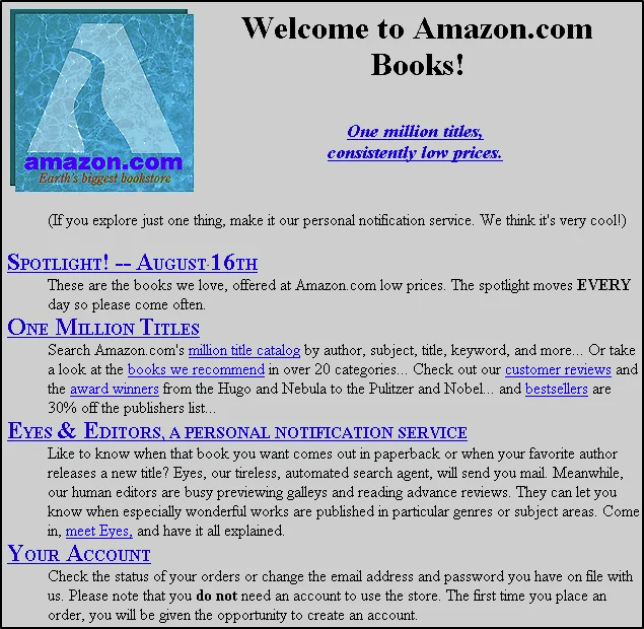

This isn’t the first time. Turn back the clock to the 1990s, when everybody had to have a website, and you find people being paid enormous salaries to move to Silicon Valley and build websites for things that made no sense whatsoever. We had websites for logging parking tickets, on the theory that logging them could somehow become a business (something about fixing parking tickets). Of course, that company shut down, and hundreds of other companies failed in the dot-com bust of the early 2000s, because a website isn’t UBIK: it doesn’t solve business problems in and of itself.

That’s not to say the need for websites didn’t exist. It did, and in many cases a lot of industry now lives on the web (e.g., a bookseller turned global retailer). However, the ones that survived are the ones that provided some entertainment, utility, or purpose. We also found we didn’t need a huge development team for every company’s website.

Turn the clock a little further forward, to the late 2000s and around 2010: the iPhone gets popular and everybody needs an “app”. Every company started developing an app, and you would hear the slogan “there’s an app for that”. Everywhere, people started to build apps: gaming apps, company apps, apps on apps. Some of them survived and some apps are really good; I’m sure there are apps on your phone you use daily. But not every company needs a development team just to build “apps”, and eventually the hype began to subside… then we got to the cloud.

“We all need to be on the cloud”. We started to hear “cloud this… cloud that…”. Around 2016, companies built up shops to “do cloud” and “devops to the cloud”. There was so much cloud hype that didn’t make sense. Sometimes the migration to OpEx over CapEx makes sense. Sometimes managing a NOC isn’t a core competency, and I’m sure there are many companies still on their “cloud journey”. That said, the cloud can help shift you from a capital to an operational expense model, but in and of itself it doesn’t solve business problems and it isn’t the magic bullet. If you can see where I’m going with this, there’s a pattern: a tech trend gets really popular and everybody believes it’s the solution to all problems.

Never has that been more true than with AI. AI, or more specifically large language models (LLMs), are basically really powerful math equations and weights that seem like magic to most people. You ask the AI a question and it comes up with a very reasonable answer. People start to say “well, we could use AI for everything”: we can use AI to assist us in writing our code, we can use AI as a chatbot to answer customers. We could build dashboards and apps and tie AI into every single thing, yet no one stops to ask “does this help?” and “does this make sense?”. In many cases AI will not help. AI hallucinates and is expensive. AI is just a tool, and you’re going to find that some AI solutions ultimately cost you far more money than a bespoke, dynamically coded solution would have.

Personally, I find AI has tremendous value as a code assistant: it can spot patterns, and with most code projects we can point it at some code we wrote and extend from it. AI coding assistants can get me started when I have the blank-page problem and don’t know how to get going. They can help solve a complicated bug when I’m stuck, especially tools like Claude Code, for a price, of course. It is just like hiring more staff or an intern, or using TaskRabbit, Fiverr, or any other small consulting firm: there’s a price to be paid, and there are times when it makes more sense to solve it yourself than to try to “vibe code” your way out of it with AI.

The other problem people aren’t really calling out with AI coding is that the assistants are trained on old data and regurgitate the same patterns. The tools aren’t making things better in a new and novel way. So, very often for me, they end up proposing software solutions built on dated models, dated code, deprecated APIs, and antipatterns. I’m not sure that makes our code, in a global sense, better. It just makes it more homogeneous.

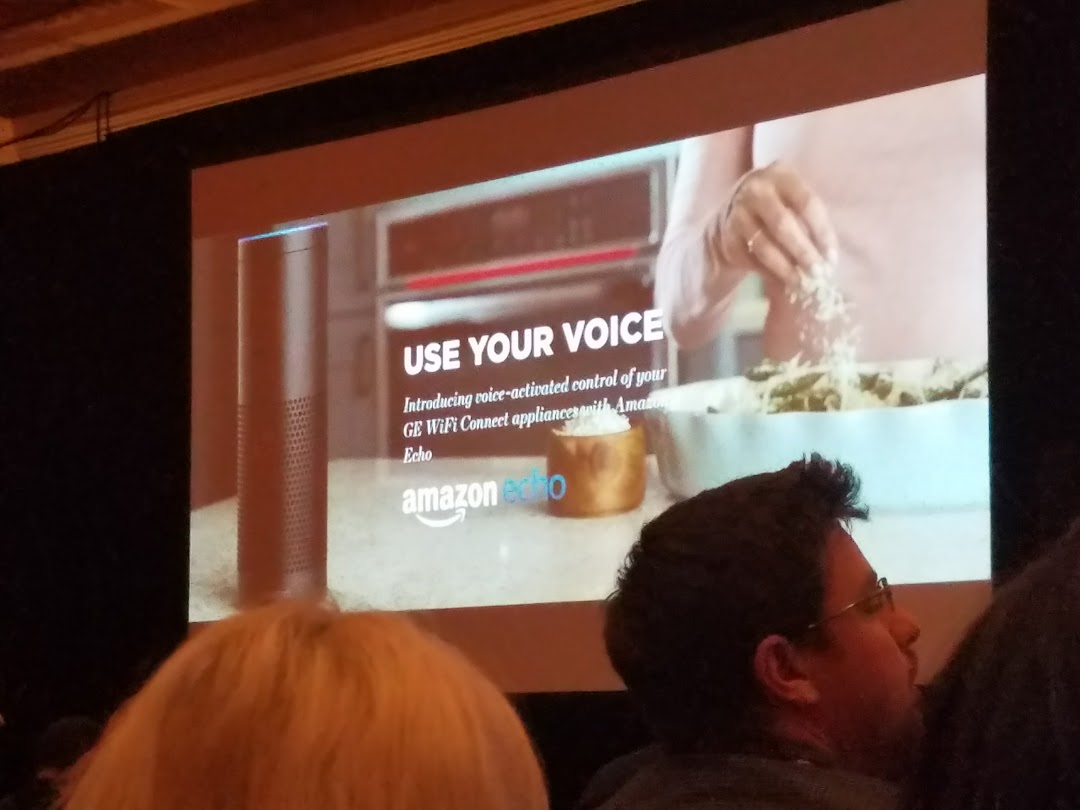

I can distinctly remember my first tech conference in Las Vegas. It was AWS re:Invent in 2016. Amazon rolled a big semi truck up on stage to announce the Snowmobile service. In our swag bags we were all given an Alexa device, which was going to change the world. Werner Vogels got on stage and told us the world would be voice: everything would be voice, and all our tools, utilities, and apps would be called by talking to our Alexa devices.

It seemed like everything would be “assistant” driven. Microsoft came out with Cortana (named after the AI character in its popular Halo franchise), Google was rolling out its own devices (later folding them into the Nest brand it had acquired), and voice was going to be king.

The “assistant era” really didn’t last that long, and part of the reason is that no one could figure out how to pay for it. Who is going to pay for an Alexa app? Who’s going to monetize a utility that searches for you from your Cortana-enabled headphones? Soon we saw Cortana wind down altogether, and just two years ago Google stopped allowing third-party extensions to its assistant (though you can still do it through an Android app). More than anything, it came down to no one being able to pay for these services.

To that end, who’s going to pay for all these AI tools, especially agentic AI? If you farm out your requests to a bunch of services running in the cloud, who is going to pay for that? If those services have to stay running and listening, it gets expensive. If you have an expensive model on GPU-enabled compute, someone’s going to pay that bill. I have a feeling that once all the VC funding runs out, we’re going to see a dot-com-style crash in which a whole lot of AI apps and companies wind down or get bought up, with only the most valuable pieces kept.

With all things AI, ask yourself “who’s going to pay for it?” and, more importantly, “will the cost be less than or equal to that of a non-AI version?”

Another aspect of this whole MCP and agentic AI world that I don’t see given enough consideration is credentials/secrets and PHI/PII. Are you comfortable giving your Google account login to some random agent running in a place you don’t know? Do you just trust that they only use it for good? Do you trust that an agent calling an agent calling an agent has security in mind all along the chain? With all these agentic workers, are we certain the developers don’t have leaky code or use insecure storage? How would you even know if they do? And how would they contact you if they did make a mistake, unless they were logging your credentials?

More importantly, if you work in a regulated market like finance or healthcare, you very much need to know where data has gone, who sees it, and what level of authorization they have to see it. If you’re in Europe, with its data sovereignty rules, it can become a very big deal if that data leaves its country of origin. Can you control where the agents are actually running?

However, I am not a Luddite. I’m not here to take your AI and trash it. I do think we need to take a chill pill: when we look at our problems, we should start with the problem rather than trying to figure out how to jerry-rig a predefined AI solution into it.

With all things, ask yourself “does adding AI actually improve things, or does it just cause more problems?” Will AI save us money or ultimately cost us more? Lastly, ask “what do I really need?”, not “what do I want?”. Focus not on “what the developers want” and certainly not on “what’s hip” just to be hip on socials. Keep the north star on “need”, and the real question becomes “what skills and tools do I need to achieve that?”

I’ve come not to praise AI, but I’m also not here to bury it.