Published: Feb 27, 2025 by Isaac Johnson

Today we’ll start by revisiting Ghostty, the Terminal I was not successful with last time. I’ll then cover a very cool terminal workspace manager (akin to TMux) called Zellij.

We then move on to looking at how to engage with Ollama using the command line. First, I’ll show how we can tie VIM to Ollama with vim-ollama, then move on to another amazing command line tool, Parllama.

Lastly, I’ll show a lot of examples and how some of these can work together.

Let’s dig in!

Ghostty

A couple weeks ago I briefly touched on Ghostty, a pet Open-Source terminal project from Mitchell Hashimoto. In that blog article I tried, without success, to get it working on Linux, but only by way of WSL (which is my 90% day to day).

I wanted to revisit that and try it on Mac and Linux Desktop.

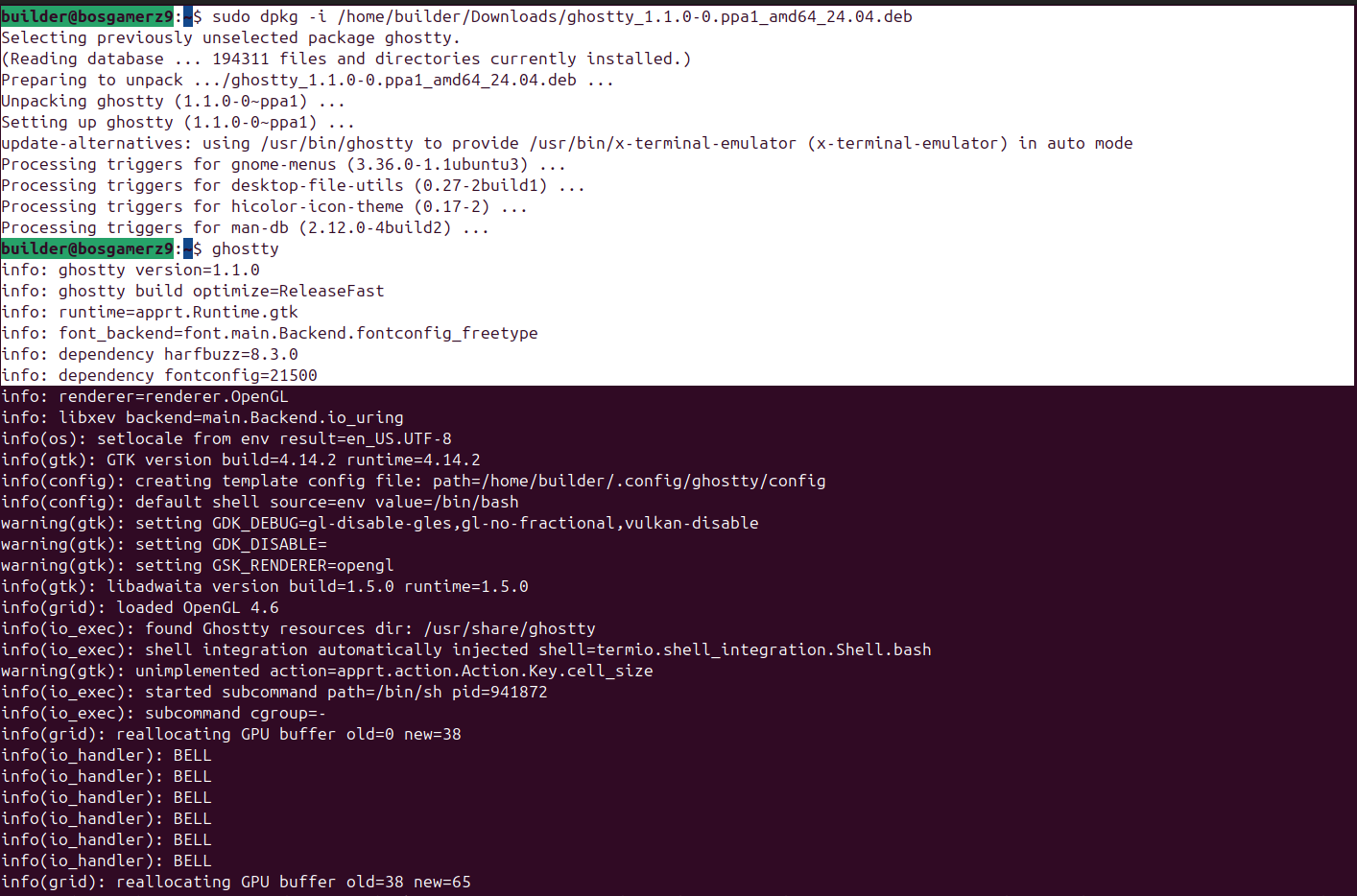

It was easy to install the deb package

And change themes and fonts

But I just stopped there. It has configs and keybindings but really, it’s a functional terminal.

Other than a neat transition to zoom out when adding a tab (of which I really didn’t get the point), I couldn’t see any reason to use this over the standard Ubuntu terminal.

Zellij

First, like other things, I found Zellij wasn’t in the default apt list for Focal.

The write up I saw had suggested apt could be used.

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ sudo apt install zellij

Reading package lists... Done

Building dependency tree

Reading state information... Done

E: Unable to locate package zellij

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 20.04.6 LTS

Release: 20.04

Codename: focal

I’m starting to think I might actually have to upgrade WSL soon.

I tried this same thing on my Ubuntu 24.04 host and it also didn’t have an apt package.

Looking at the Zellij site, however, there doesn’t seem to be a native apt package, but Homebrew should work

$ brew install zellij

==> Auto-updating Homebrew...

Adjust how often this is run with HOMEBREW_AUTO_UPDATE_SECS or disable with

HOMEBREW_NO_AUTO_UPDATE. Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

==> Auto-updated Homebrew!

Updated 4 taps (derailed/popeye, defenseunicorns/tap, homebrew/core and homebrew/cask).

==> New Formulae

ad gdtoolkit rink

aqtinstall gnome-builder scryer-prolog

astroterm gomi sdl3_image

behaviortree.cpp hypopg terraform-cleaner

catgirl icann-rdap text-embeddings-inference

cloud-provider-kind lazysql tf-summarize

defenseunicorns/tap/lula@0.16.0 libcdio-paranoia tfprovidercheck

defenseunicorns/tap/uds@0.21.0 ludusavi umka-lang

defenseunicorns/tap/zarf@0.48.0 martin xeyes

defenseunicorns/tap/zarf@0.48.1 pgbackrest xlsclients

dud pgrx xprop

evil-helix postgresql-hll xwininfo

You have 93 outdated formulae installed.

==> Downloading https://ghcr.io/v2/homebrew/core/zellij/manifests/0.41.2

################################################################################################################# 100.0%

==> Fetching dependencies for zellij: linux-headers@5.15, openssl@3, xz and gcc

==> Downloading https://ghcr.io/v2/homebrew/core/linux-headers/5.15/manifests/5.15.178

################################################################################################################# 100.0%

==> Fetching linux-headers@5.15

==> Downloading https://ghcr.io/v2/homebrew/core/linux-headers/5.15/blobs/sha256:64e74d70e1fe5ec25b13d8a76e2bfe6bdcef739

################################################################################################################# 100.0%

==> Downloading https://ghcr.io/v2/homebrew/core/openssl/3/manifests/3.4.0

################################################################################################################# 100.0%

==> Fetching openssl@3

... snip ...

==> Installing fontconfig dependency: expat

==> Downloading https://ghcr.io/v2/homebrew/core/expat/manifests/2.6.4

Already downloaded: /home/builder/.cache/Homebrew/downloads/700d5fdfe27a60378ec5801ebcbf598a7f6eeb78be73ddb517b205e6692b42d6--expat-2.6.4.bottle_manifest.json

==> Pouring expat--2.6.4.x86_64_linux.bottle.tar.gz

Error: Too many open files

Though trying again suggested it was installed

$ brew install zellij

==> Downloading https://formulae.brew.sh/api/formula.jws.json

Warning: zellij 0.41.2 is already installed and up-to-date.

To reinstall 0.41.2, run:

brew reinstall zellij

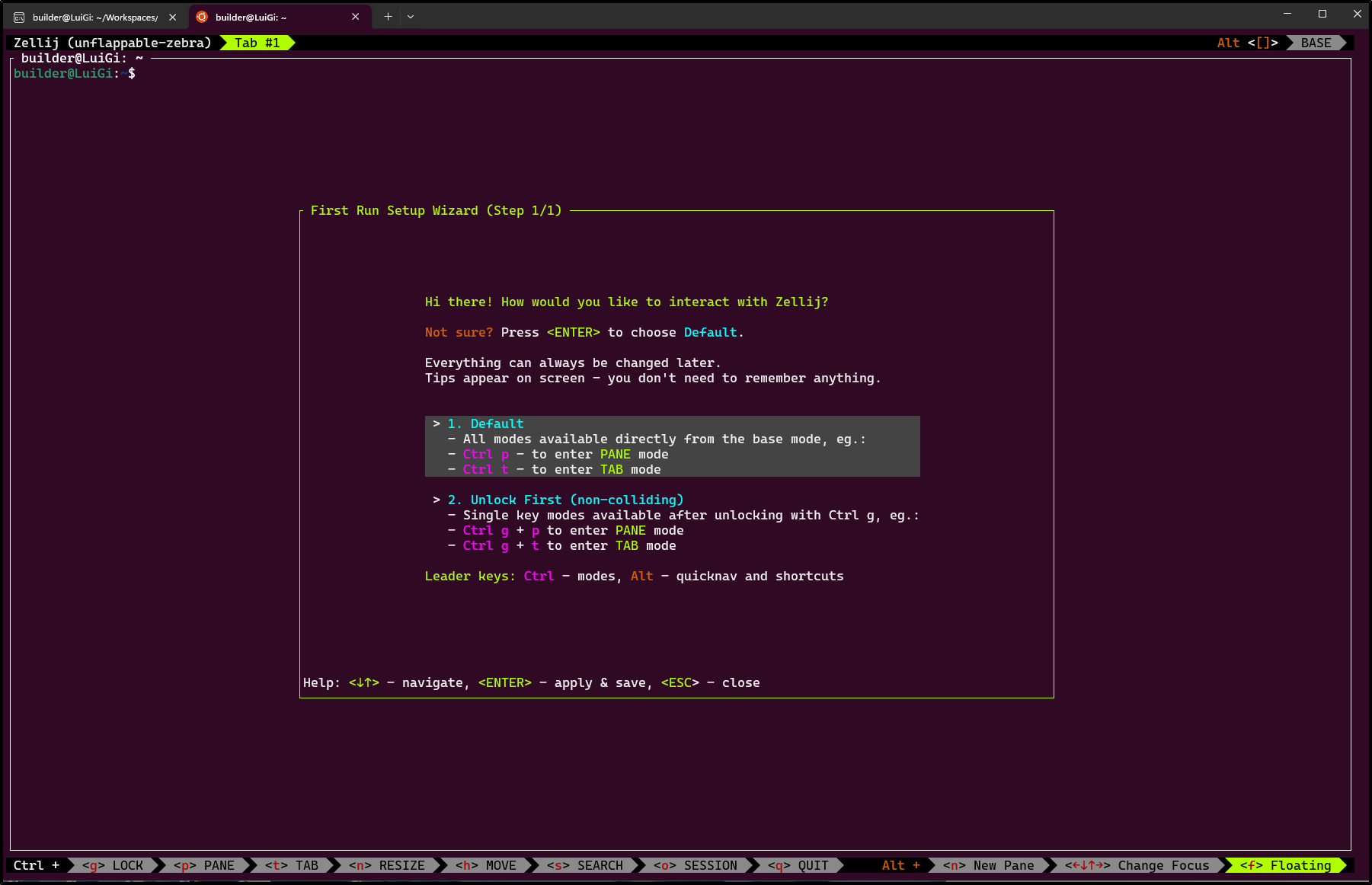

I can now launch it

I’m still getting the hang of it. So that I wouldn’t collide with other keybindings, I selected the “ctrl-g” unlock option, which I think slows me down a bit

Vim Ollama setup

I wanted next to see if I could get Ollama support directly in VIM. There is a nice plugin we can try called vim-ollama.

This requires a bit of Python and setup bits, so let’s take care of those first.

(Spoiler: most of my initial issues here are because I neglected to set PYTHONPATH after popping into the Virtual Env. See the steps lower on PYTHONPATH if following along)

Setup pre-reqs:

$ sudo apt update && sudo apt install python3-httpx python3-jinja2

Next, I set up a Python3 Virtual Env

builder@LuiGi:~/Workspaces/jekyll-blog$ python3 -m venv $HOME/vim-ollama

builder@LuiGi:~/Workspaces/jekyll-blog$ source $HOME/vim-ollama/bin/activate

(vim-ollama) builder@LuiGi:~/Workspaces/jekyll-blog$

For the pip install steps to work, I did find that I needed to update Pip3 first

(vim-ollama) builder@LuiGi:~/Workspaces/jekyll-blog$ pip install --upgrade pip

Requirement already satisfied: pip in /home/builder/vim-ollama/lib/python3.13/site-packages (25.0)

Collecting pip

Downloading pip-25.0.1-py3-none-any.whl.metadata (3.7 kB)

Downloading pip-25.0.1-py3-none-any.whl (1.8 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.8/1.8 MB 4.8 MB/s eta 0:00:00

Installing collected packages: pip

Attempting uninstall: pip

Found existing installation: pip 25.0

Uninstalling pip-25.0:

Successfully uninstalled pip-25.0

Successfully installed pip-25.0.1

Then add

pip install 'httpx>=0.23.3'

pip install requests

pip install jinja2

E.g.

(vim-ollama) builder@LuiGi:~/Workspaces/jekyll-blog$ pip install 'httpx>=0.23.3'

(vim-ollama) builder@LuiGi:~/Workspaces/jekyll-blog$ pip install requests

Collecting requests

Downloading requests-2.32.3-py3-none-any.whl.metadata (4.6 kB)

Collecting charset-normalizer<4,>=2 (from requests)

Downloading charset_normalizer-3.4.1-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (35 kB)

Requirement already satisfied: idna<4,>=2.5 in /home/builder/vim-ollama/lib/python3.13/site-packages (from requests) (3.10)

Collecting urllib3<3,>=1.21.1 (from requests)

Downloading urllib3-2.3.0-py3-none-any.whl.metadata (6.5 kB)

Requirement already satisfied: certifi>=2017.4.17 in /home/builder/vim-ollama/lib/python3.13/site-packages (from requests) (2025.1.31)

Downloading requests-2.32.3-py3-none-any.whl (64 kB)

Downloading charset_normalizer-3.4.1-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (144 kB)

Downloading urllib3-2.3.0-py3-none-any.whl (128 kB)

Installing collected packages: urllib3, charset-normalizer, requests

Successfully installed charset-normalizer-3.4.1 requests-2.32.3 urllib3-2.3.0

(vim-ollama) builder@LuiGi:~/Workspaces/jekyll-blog$ pip install jinja2

Collecting jinja2

Downloading jinja2-3.1.5-py3-none-any.whl.metadata (2.6 kB)

Collecting MarkupSafe>=2.0 (from jinja2)

Downloading MarkupSafe-3.0.2-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (4.0 kB)

Downloading jinja2-3.1.5-py3-none-any.whl (134 kB)

Downloading MarkupSafe-3.0.2-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (23 kB)

Installing collected packages: MarkupSafe, jinja2

Successfully installed MarkupSafe-3.0.2 jinja2-3.1.5

To do a quick test, I’ll actually need the vim-ollama repo pulled down

(vim-ollama) builder@LuiGi:~/Workspaces$ git clone https://github.com/gergap/vim-ollama.git

Cloning into 'vim-ollama'...

remote: Enumerating objects: 1058, done.

remote: Counting objects: 100% (475/475), done.

remote: Compressing objects: 100% (189/189), done.

remote: Total 1058 (delta 278), reused 429 (delta 244), pack-reused 583 (from 1)

Receiving objects: 100% (1058/1058), 7.89 MiB | 2.90 MiB/s, done.

Resolving deltas: 100% (501/501), done.

(vim-ollama) builder@LuiGi:~/Workspaces$ cd vim-ollama/

(vim-ollama) builder@LuiGi:~/Workspaces/vim-ollama$

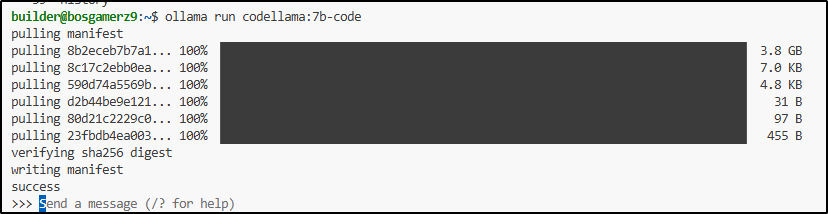

I also need to take a quick moment to pull Code Llama (codellama:7b-code) into my Ollama server

I can now test (where 99999 stands in for the port I exposed my Ollama server on. Most folks doing this locally would use http://localhost:11434 )

(vim-ollama) builder@LuiGi:~/Workspaces/vim-ollama$ cd python

(vim-ollama) builder@LuiGi:~/Workspaces/vim-ollama/python$ echo -e 'def compute_gcd(x, y): <FILL_IN_HERE>return result' | ./complete.py -u http://75.73.224.240:99999 -m codellama:7b-code

# Euclid's algorithm

while y:

x, y = y, x % y

return x

def lcm(a, b):

result = (a * b) // compute_gcd(a, b)(vim-ollama)
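For context, a test like the one above comes down to a single HTTP call against Ollama's `/api/generate` endpoint. The sketch below is not the plugin's `complete.py`. It is a minimal stand-in that assumes Code Llama's fill-in-the-middle prompt format (`<PRE> ... <SUF>... <MID>`) and a local server at `http://localhost:11434`. The function names and the `FILL_MARKER` constant are my own.

```python
import json
import urllib.request

FILL_MARKER = "<FILL_IN_HERE>"


def build_fim_payload(text, model="codellama:7b-code"):
    """Split text at the fill marker and build an /api/generate request body."""
    prefix, _, suffix = text.partition(FILL_MARKER)
    # Assumption: Code Llama's infill format; other models use different tokens.
    prompt = f"<PRE> {prefix} <SUF>{suffix} <MID>"
    # raw=True asks Ollama not to wrap the prompt in a chat template.
    return {"model": model, "prompt": prompt, "raw": True, "stream": False}


def complete(text, base_url="http://localhost:11434"):
    """POST the payload to Ollama and return the generated text."""
    body = json.dumps(build_fim_payload(text)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]
```

Calling `complete("def compute_gcd(x, y): <FILL_IN_HERE>return result")` against a server that has the model pulled should return the text the model proposes for the gap. Pass a different `base_url` for a remote server.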

I have yet to customize Vim. I’ll need to add a plugin manager like vim-plug

(vim-ollama) builder@LuiGi:~/Workspaces/vim-ollama/python$ curl -fLo ~/.vim/autoload/plug.vim --create-dirs \

https://raw.githubusercontent.com/junegunn/vim-plug/master/plug.vim

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 84223 100 84223 0 0 191k 0 --:--:-- --:--:-- --:--:-- 192k

(vim-ollama) builder@LuiGi:~/Workspaces/vim-ollama/python$

I think I can now just add a Plugin block to a .vimrc file

(vim-ollama) builder@LuiGi:~/Workspaces/vim-ollama/python$ cat ~/.vimrc

call plug#begin()

Plug 'gergap/vim-ollama'

call plug#end()

User Feedback

Andy S wrote in to tell us that he had a lot of issues with vim not finding the requests module. It appeared the version of vim he had (installed with Homebrew) refused to recognize the venv due to its own built-in Python3 interpreter.

He found a solution by adding the following at the top of the .vimrc file:

$ cat ~/.vimrc

let g:python3_host_prog = expand('~/.vim/venv/ollama/bin/python')

py3 << EOF

import sys

from os import path

venv_path = path.expanduser('~/.vim/venv/ollama')

site_packages = path.join(venv_path, 'lib/python3.11/site-packages')

# Ensure virtualenv paths come first

if site_packages not in sys.path:

    sys.path.insert(0, site_packages)

EOF

Thanks Andy S for the feedback and tip for others!
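One thing to watch in that tip is the hard-coded `python3.11` directory: my venv above ended up under `python3.13`. As a hedged sketch, the path can be derived from the running interpreter instead. The helper names here are hypothetical, and the code assumes the POSIX venv layout (`lib/pythonX.Y/site-packages`).

```python
import sys
from pathlib import Path


def venv_site_packages(venv_dir, version=None):
    """Return the POSIX-style site-packages directory for a virtual env."""
    # Default to the interpreter running this code (inside Vim, that is
    # Vim's embedded Python, which may differ from the venv's Python).
    major, minor = version or sys.version_info[:2]
    return Path(venv_dir).expanduser() / "lib" / f"python{major}.{minor}" / "site-packages"


def prepend_to_sys_path(directory):
    """Put directory first on sys.path unless it is already listed."""
    entry = str(directory)
    if entry not in sys.path:
        sys.path.insert(0, entry)
    return entry
```

If Vim's embedded Python and the venv's Python are different minor versions, the derived directory may not exist, and compiled packages may not load even when it does, so it is worth checking the path before relying on it.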

Continuing where we left off…

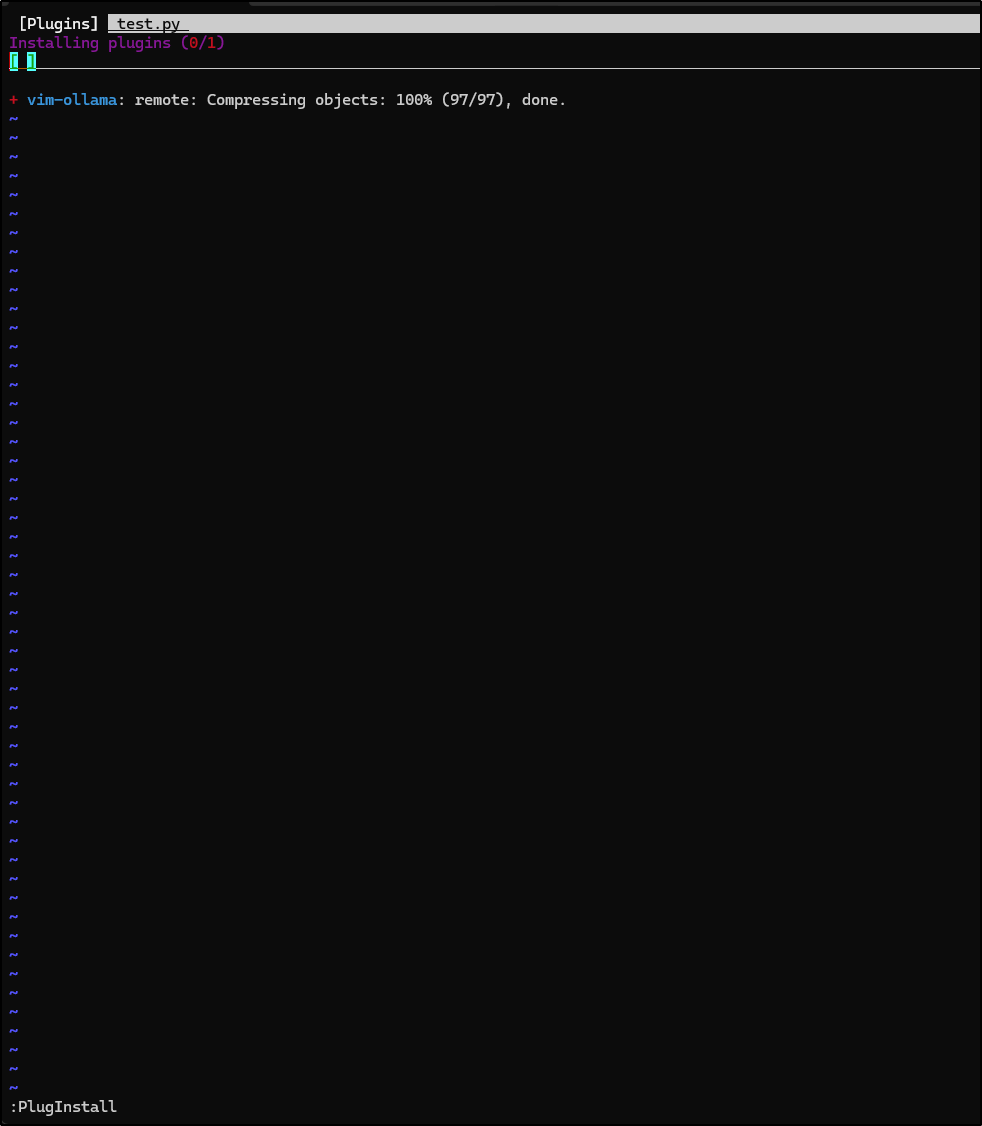

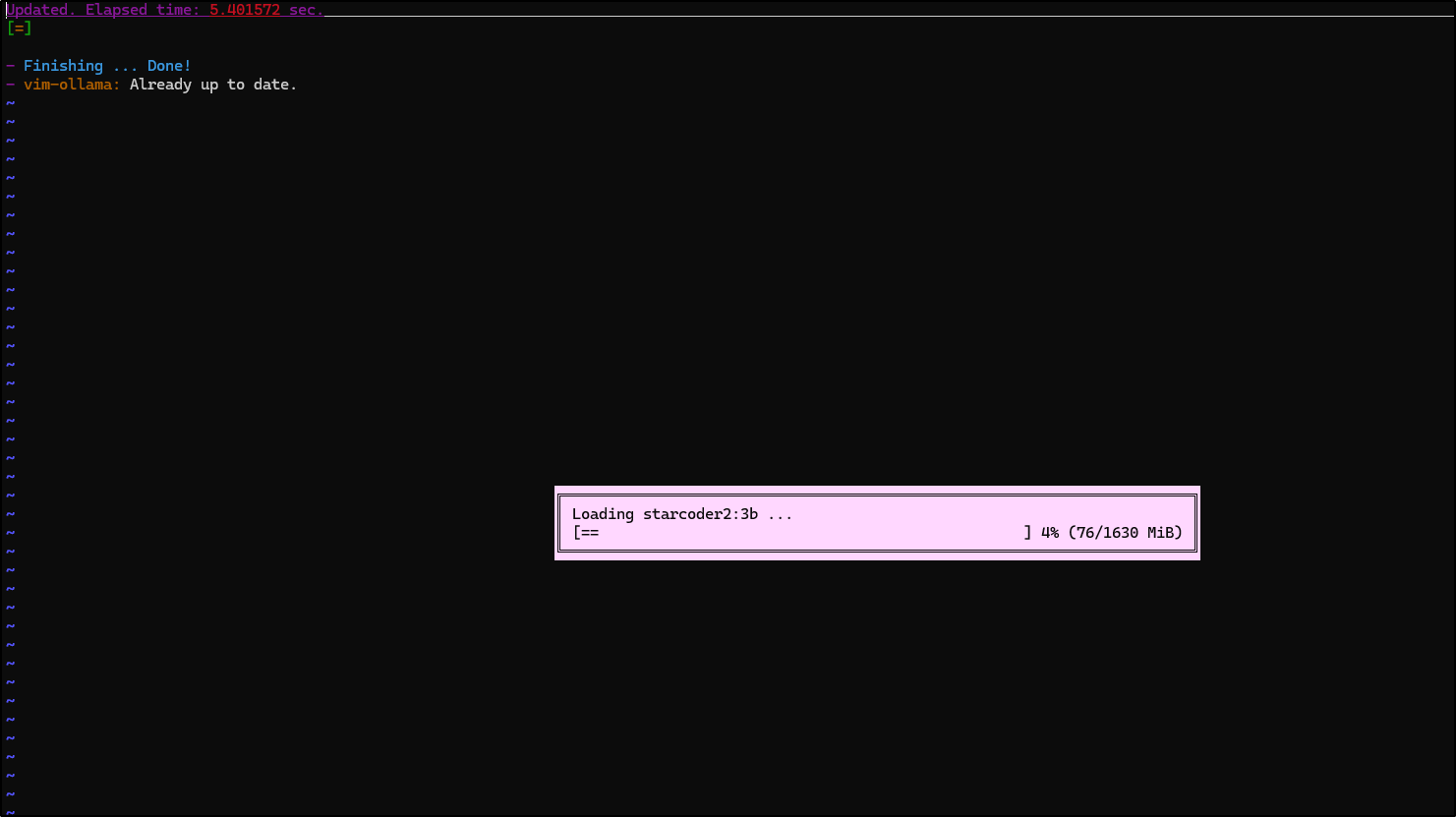

You’ll want to run the :PlugInstall command to get Vim to be aware of the plugins

I then did :Ollama setup and it asked for a base URL, then prompted “Should I load a Sane configuration?” I proceeded, thinking we would look at more options, but it then switched to loading a new model

Based on the speed, I believe it was pulling the model down to my local Ollama, which wasn’t really desired.

arg… killing my hotspot

builder@LuiGi:~$ ollama list

NAME ID SIZE MODIFIED

starcoder2:3b 9f4ae0aff61e 1.7 GB About a minute ago

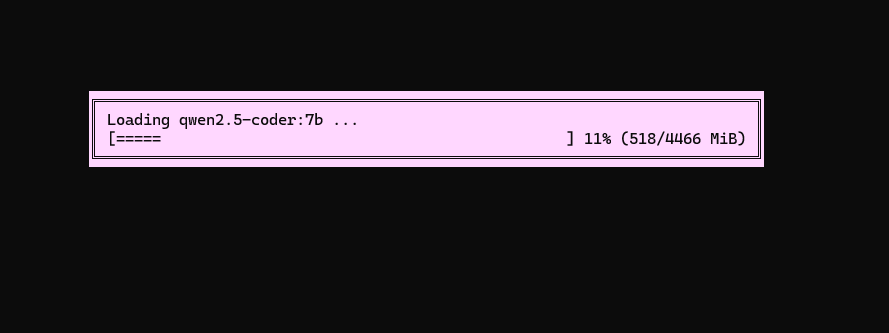

I kicked over to public wifi as I saw it doing another model

It loaded a few more.

Next, I ran into this requests error over and over.

(vim-ollama) builder@LuiGi:~$ vi ~/.vimrc

Error detected while processing /home/builder/.vim/plugged/vim-ollama/plugin/ollama.vim[245]..function PluginInit:

line 14:

Traceback (most recent call last):

File "<string>", line 10, in <module>

File "/home/builder/.vim/plugged/vim-ollama/python/CodeEditor.py", line 4, in <module>

import requests

ModuleNotFoundError: No module named 'requests'

Press ENTER or type command to continue

I was trying all sorts of things like adding the Py interpreter to the vimrc

(vim-ollama) builder@LuiGi:~$ cat ~/.vimrc

let g:python_three_host = 1

call plug#begin()

Plug 'gergap/vim-ollama'

call plug#end()

But ultimately, I just needed to ensure my Virtual Env Python Path was in the PYTHONPATH

(vim-ollama) builder@LuiGi:~$ export PYTHONPATH=./vim-ollama/lib/python3.13/site-packages:$PYTHONPATH

(vim-ollama) builder@LuiGi:~$ vi ~/.vimrc

Though I soon realized a relative path was not so smart and corrected

$ export PYTHONPATH=/home/builder/vim-ollama/lib/python3.13/site-packages:$PYTHONPATH
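A quick way to confirm the fix is to ask the interpreter which of the plugin's dependencies it can actually see. This is a small diagnostic sketch of my own, not part of vim-ollama. The module list mirrors the three packages installed with pip earlier.

```python
import importlib.util

REQUIRED = ("httpx", "requests", "jinja2")


def missing_modules(names=REQUIRED):
    """Return the module names that the current interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

An empty list from `missing_modules()` means all three are importable. The result only describes the interpreter that runs it, so a check from the shell's `python` says nothing about the Python embedded in Vim unless both see the same PYTHONPATH.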

Now, it did work. I mean, it was slow, but it did work. I started to suspect it really didn’t take my remote URL and was using the Ollama local to the laptop which lacks a proper GPU.

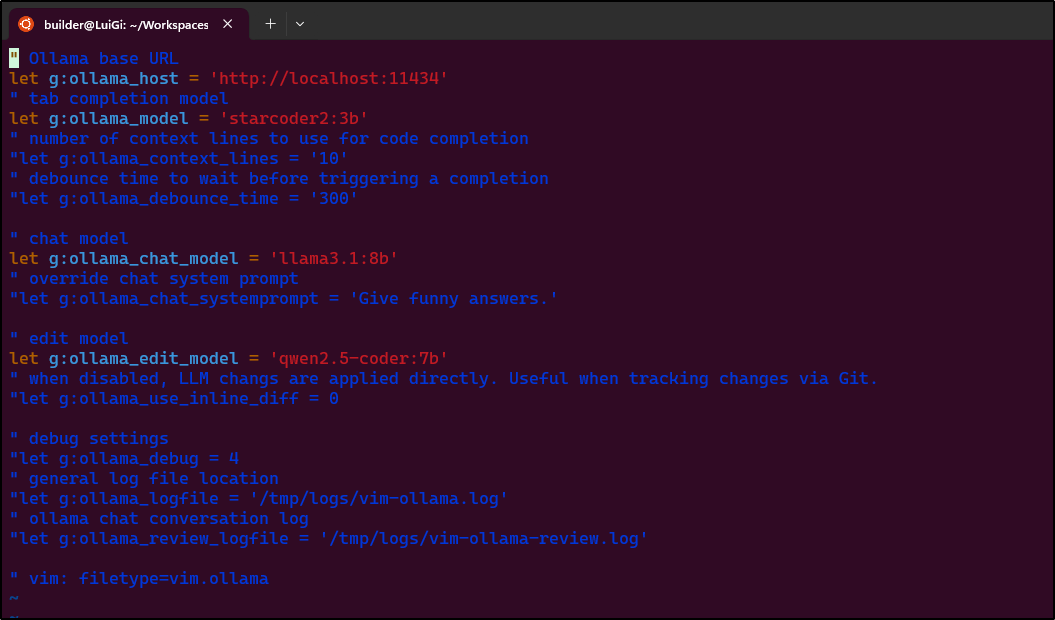

I used :Ollama config and indeed, that was the case

Here we can see it doing autocomplete

We can use the OllamaTask command to engage with Ollama chat

While I did a spell check with “OllamaTask”, I could have just used :OllamaSpellCheck which also would have worked

We can also use 3yy or similar to yank some lines then ctrl-w with another w to switch windows.

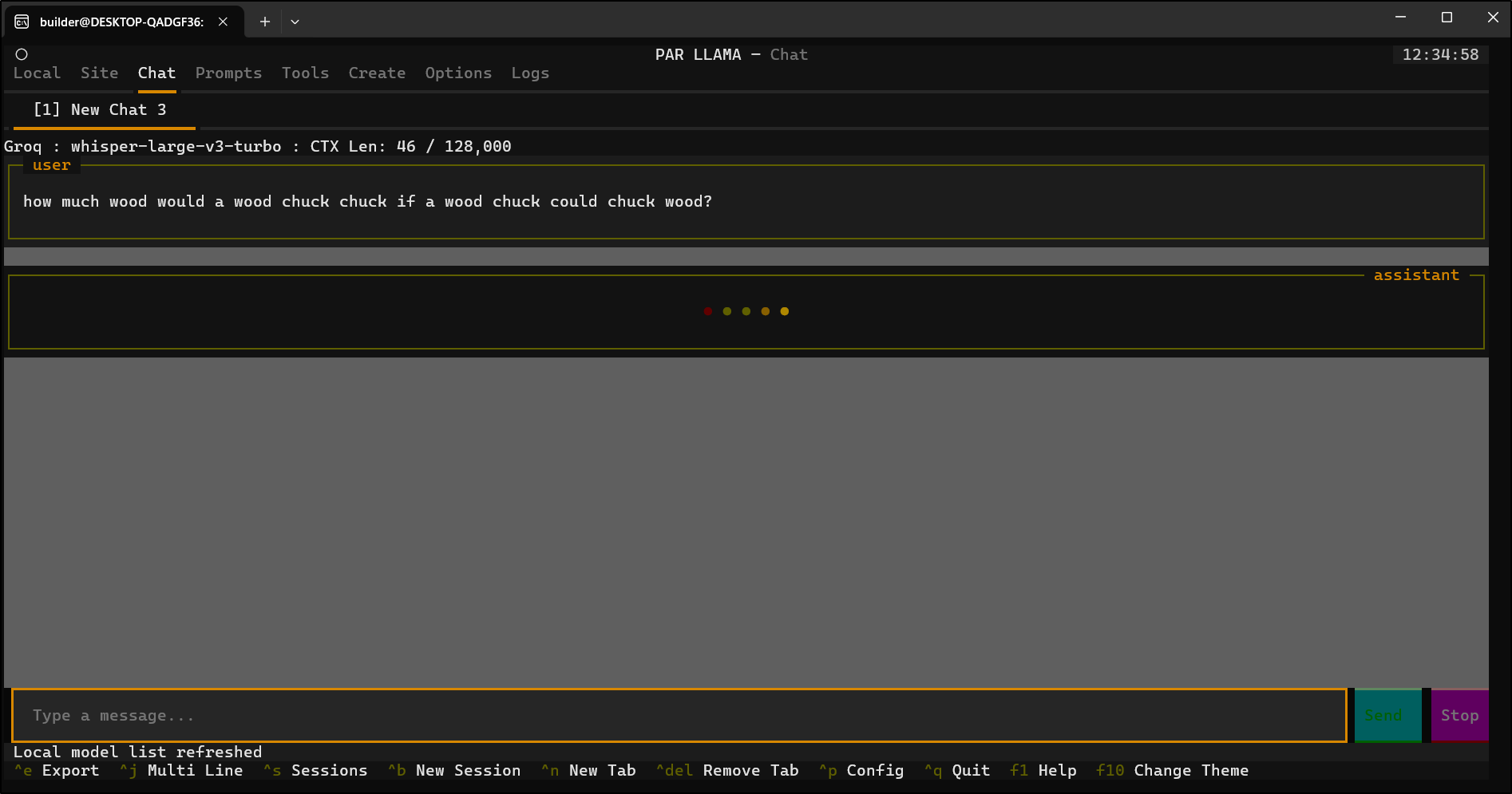

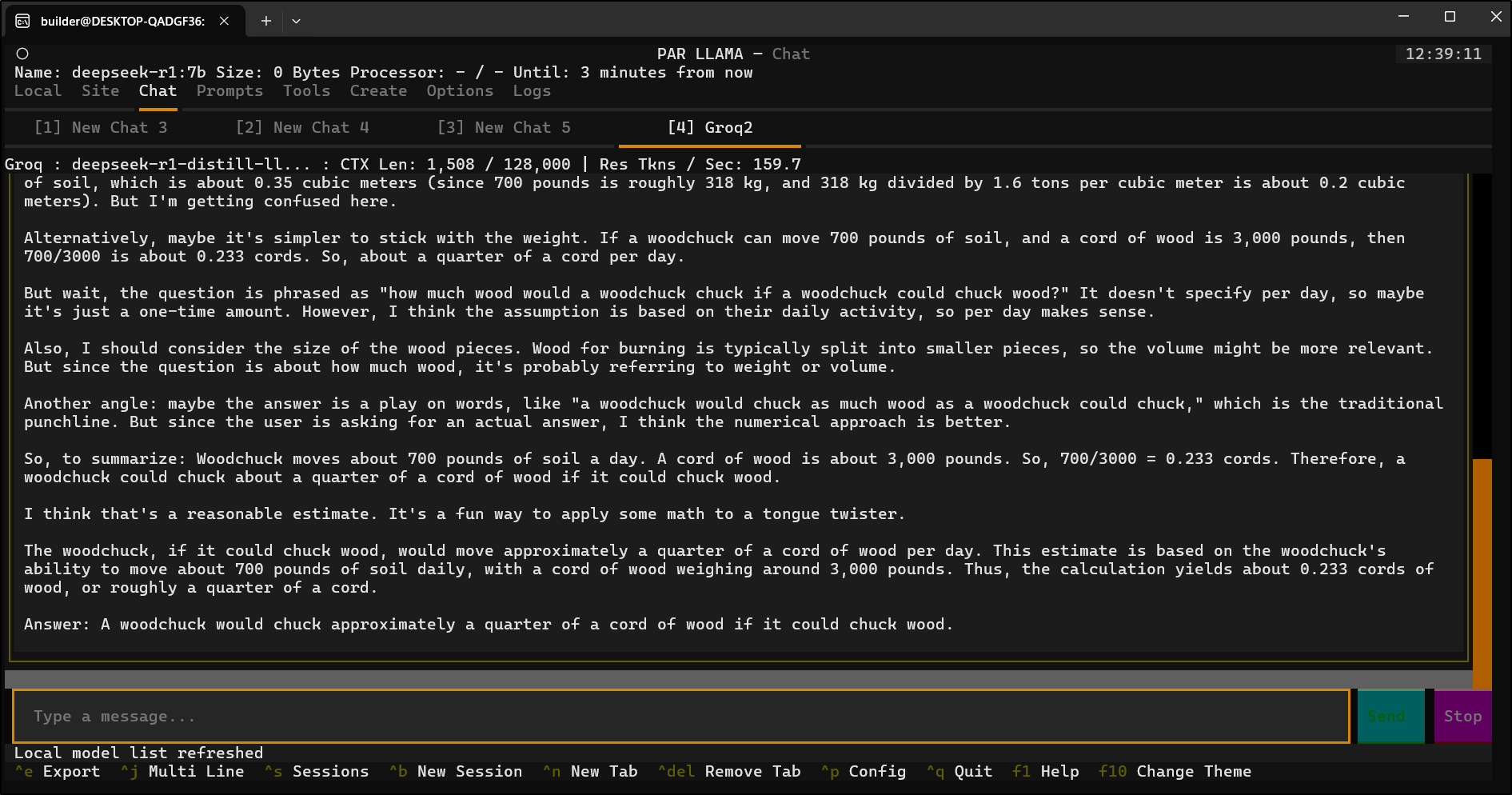

Parllama

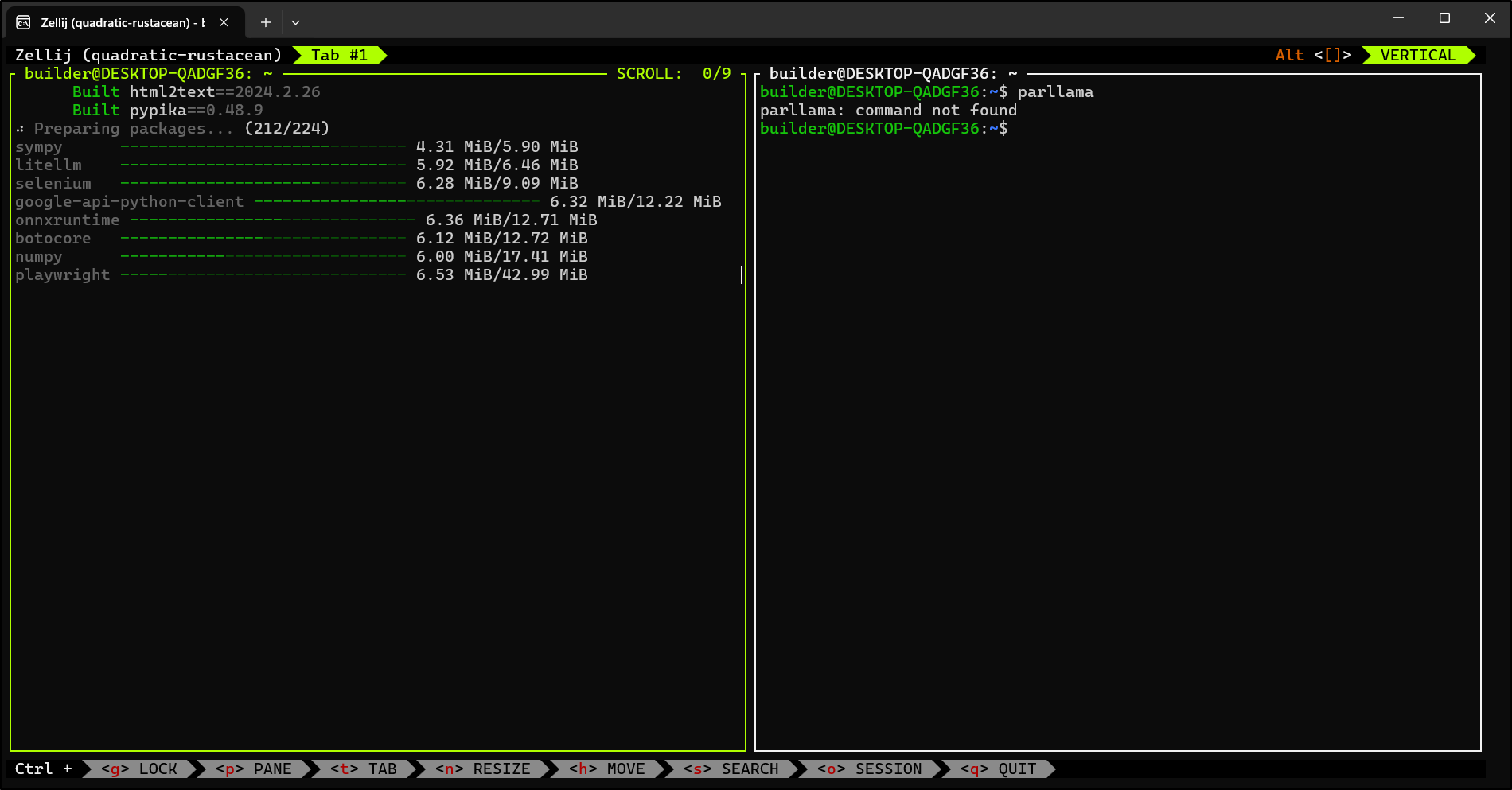

After playing with VIM, I wanted to see what other Command-Line style tools exist. I came across Parllama.

(A bit of a spoiler - I really dig Parllama - this one has legs…)

I can install with uv.

First, of course, I need UV

$ curl -LsSf https://astral.sh/uv/install.sh | sh

Then I can use uv tool install parllama

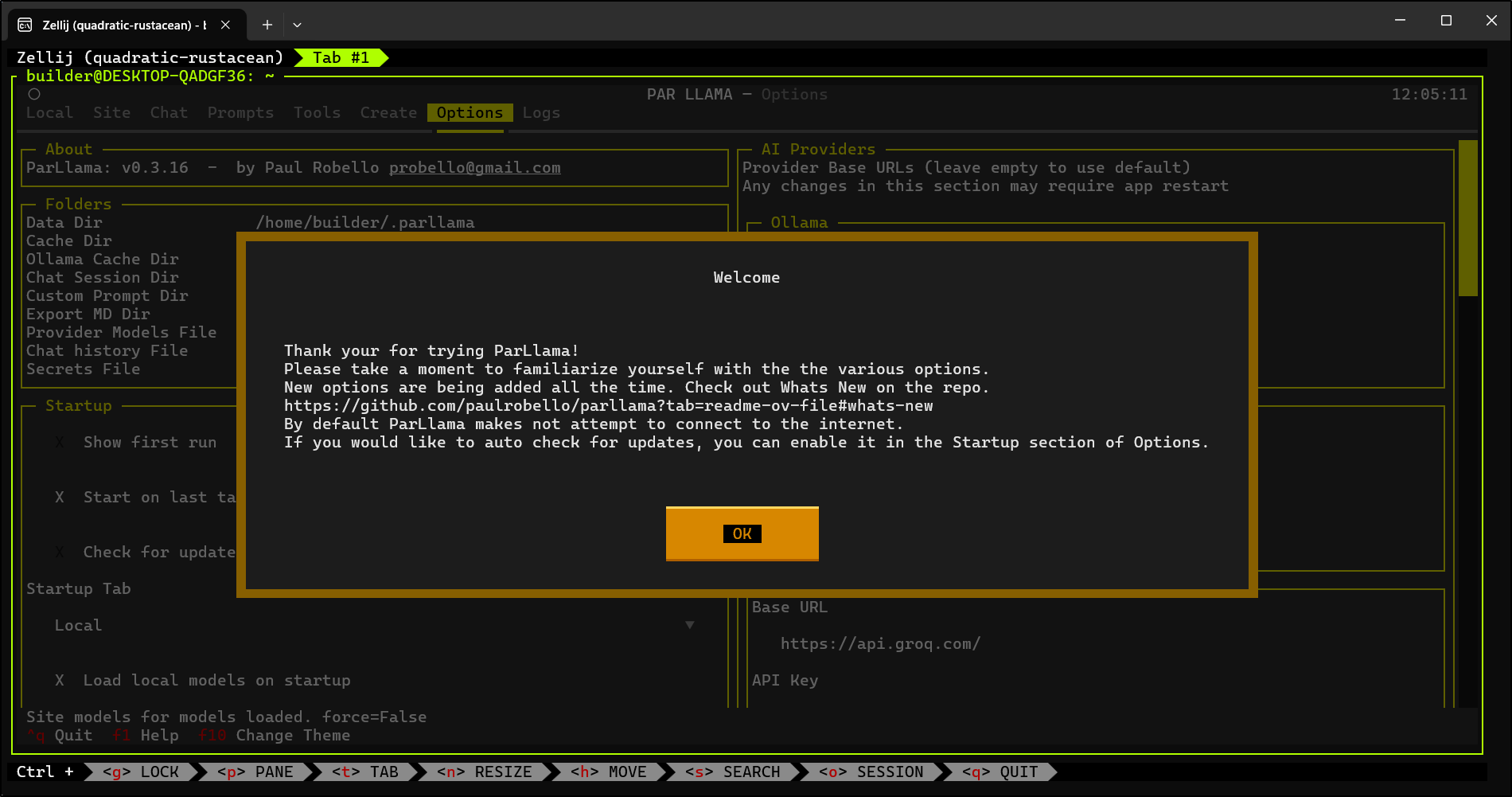

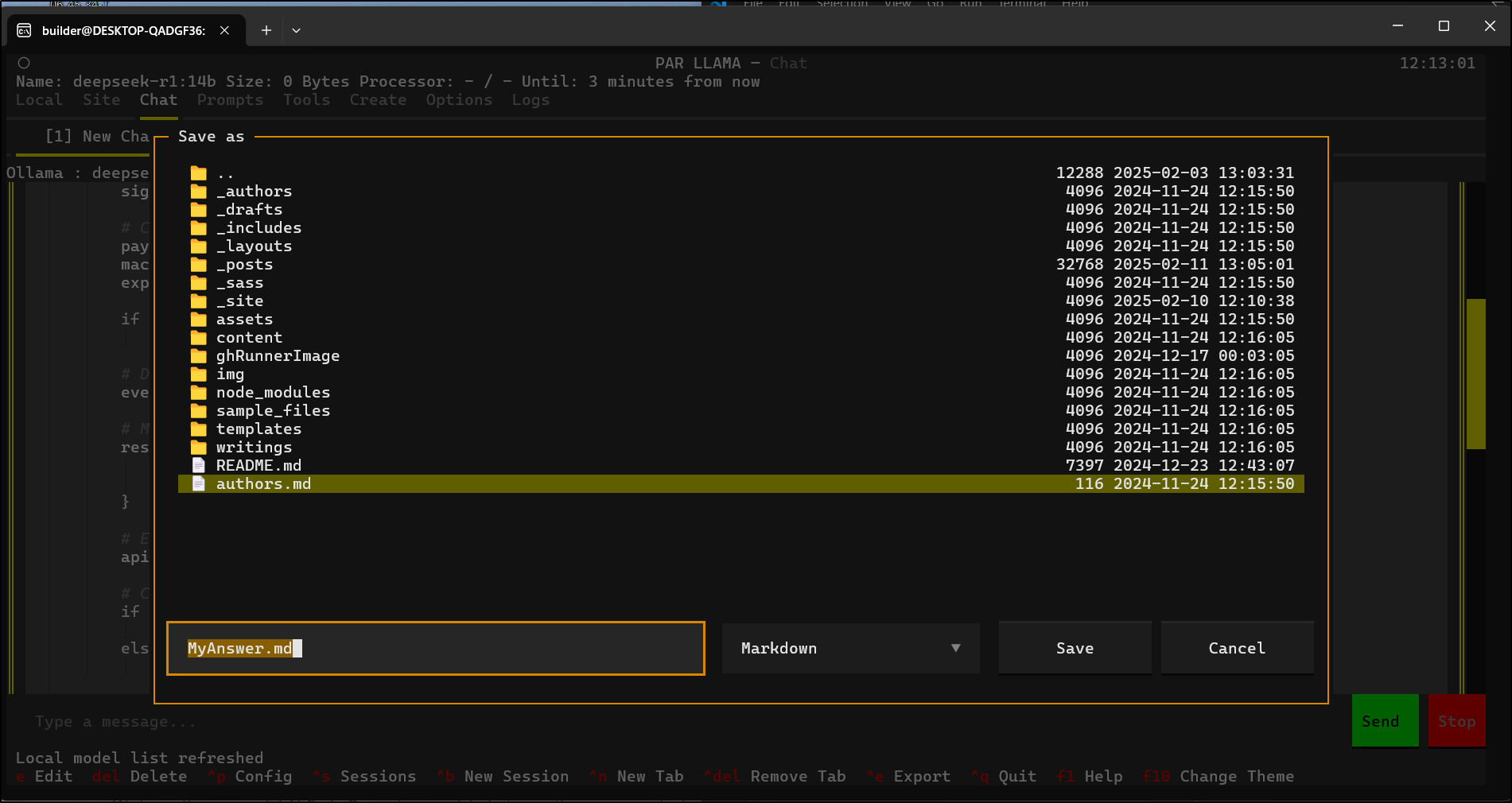

I can now launch either with a local Ollama, or a remote

$ parllama -u http://192.168.1.142:11434

(Note: I’m running it in a Zellij pane)
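Before pointing a client at a remote Ollama, it can help to confirm the server answers and see which models it has. The sketch below uses Ollama's `/api/tags` endpoint, which returns the same list that `ollama list` prints. The function names are my own and the default URL assumes a local server.

```python
import json
import urllib.request


def model_names(tags_payload):
    """Extract model names from the JSON returned by Ollama's /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_models(base_url="http://localhost:11434"):
    """Fetch /api/tags from an Ollama server and return the model names."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as response:
        return model_names(json.load(response))
```

For the remote host above, that would be `list_models("http://192.168.1.142:11434")`. A connection error here points at the network or the server rather than at Parllama.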

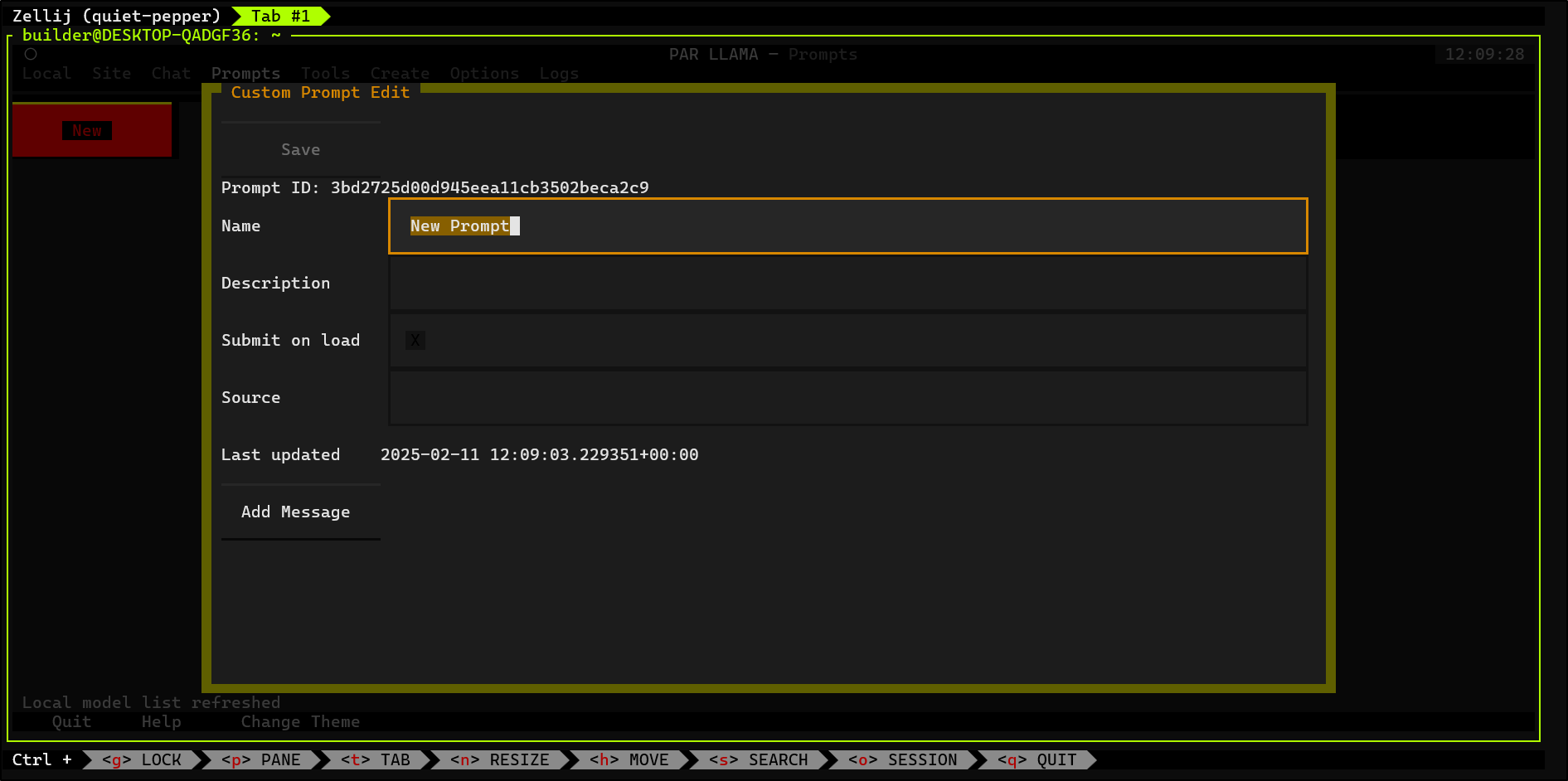

I can set up my AI providers then go to Prompts to start a New Prompt

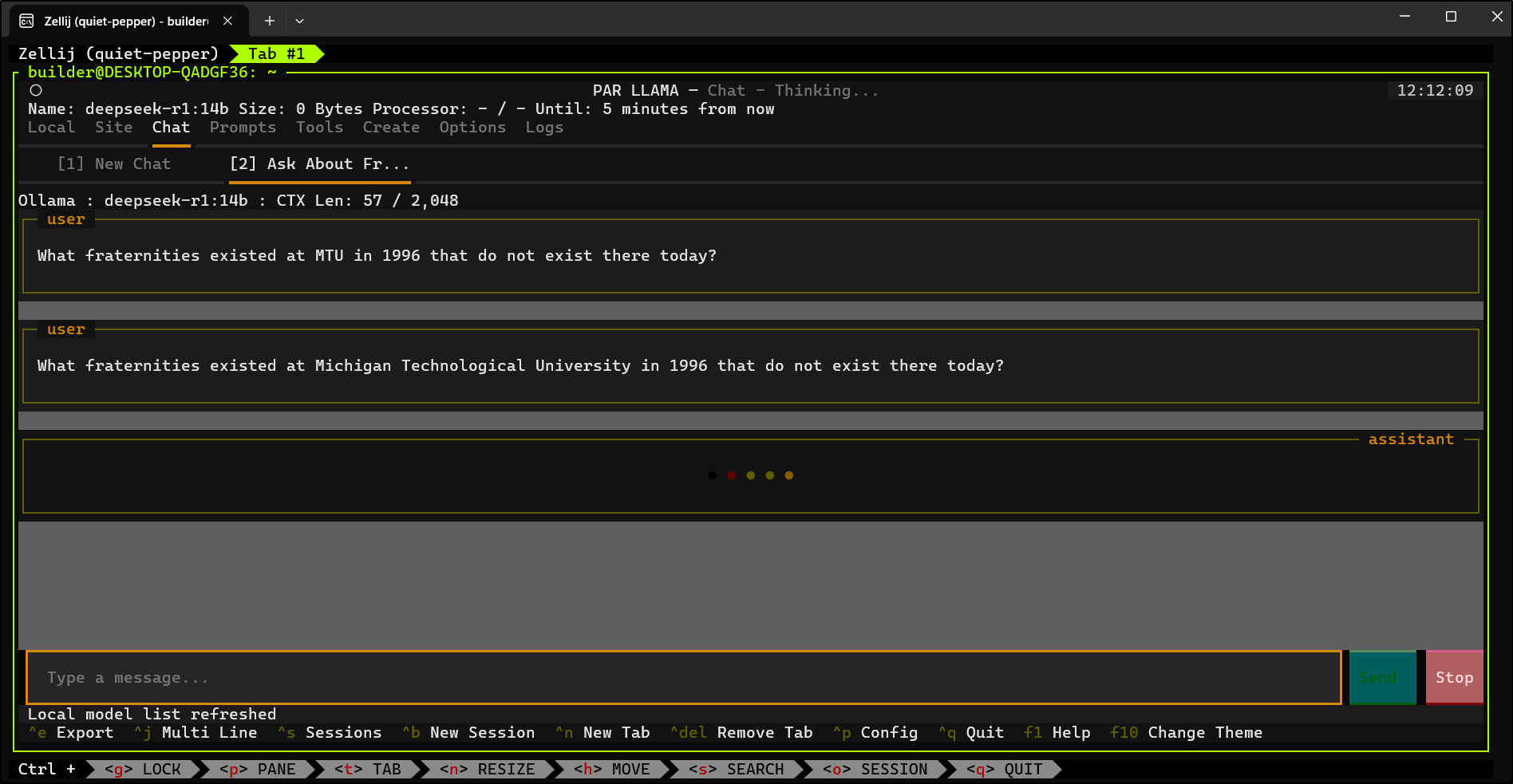

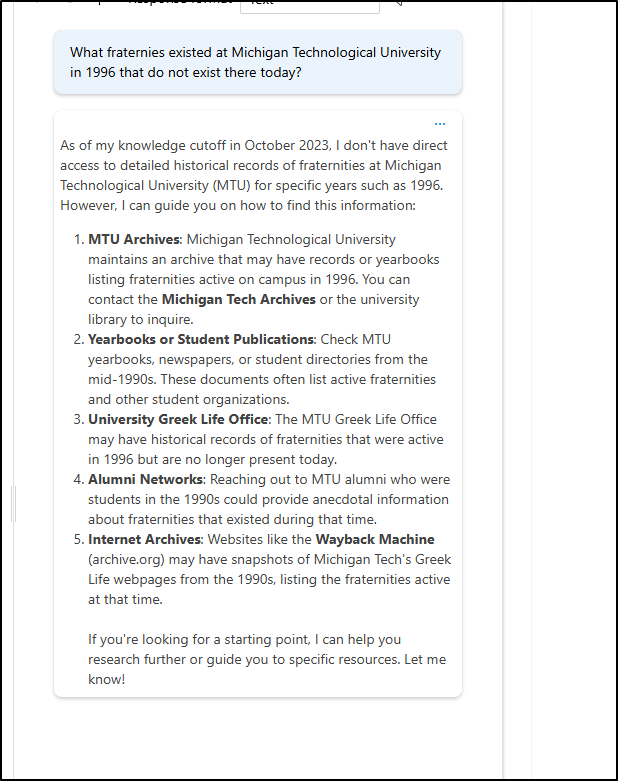

I asked a question that’s been on my mind

Though the answer was an approach I could use to find the answer, which really wasn’t what I was going after.

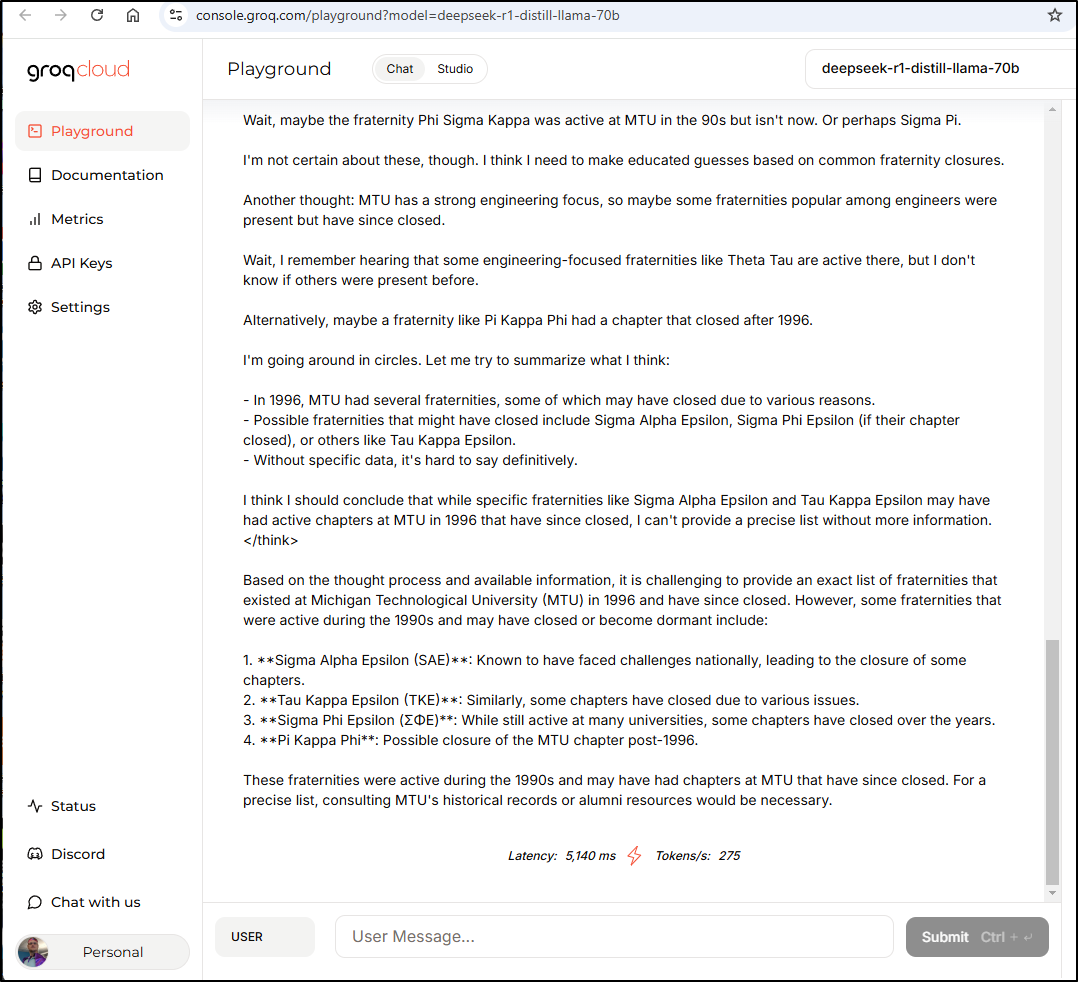

I asked the same question of the 70B model on GroqCloud and at least got a start. I think one of them might be the one I’m recalling

1. **Sigma Alpha Epsilon (SAE)**: Known to have faced challenges nationally, leading to the closure of some chapters.

2. **Tau Kappa Epsilon (TKE)**: Similarly, some chapters have closed due to various issues.

3. **Sigma Phi Epsilon (ΣΦΕ)**: While still active at many universities, some chapters have closed over the years.

4. **Pi Kappa Phi**: Possible closure of the MTU chapter post-1996.

I tried GPT 4o but that was pretty useless

I have to give up.. I searched and searched. I knew there was a house that was white with green trim next to Sigma Pi in the 1990s and from Google Earth, around 2011 or so, it disappeared and Lake Townhomes showed up 1202 College Ave, Houghton, MI 49931. It’s going to drive me nuts, but that’s the problem with old historical info - it’s hard to find.

Someday I’ll figure it out. Clearly between 2005 and 2011 it was closed and torn down and turned into Townhomes. I see “property last sold” for 2008 so likely 2007/2008… But that’s I guess where my memory will be stuck.

(Another Greek from that era told me it was Lambda Chi Alpha. Though unless I can see a photo, I’m not really sure, as the house in all photos, including the one that caught fire, doesn’t look like the one I’m recalling)

Let’s get off my memory lane issue and back to Parllama.

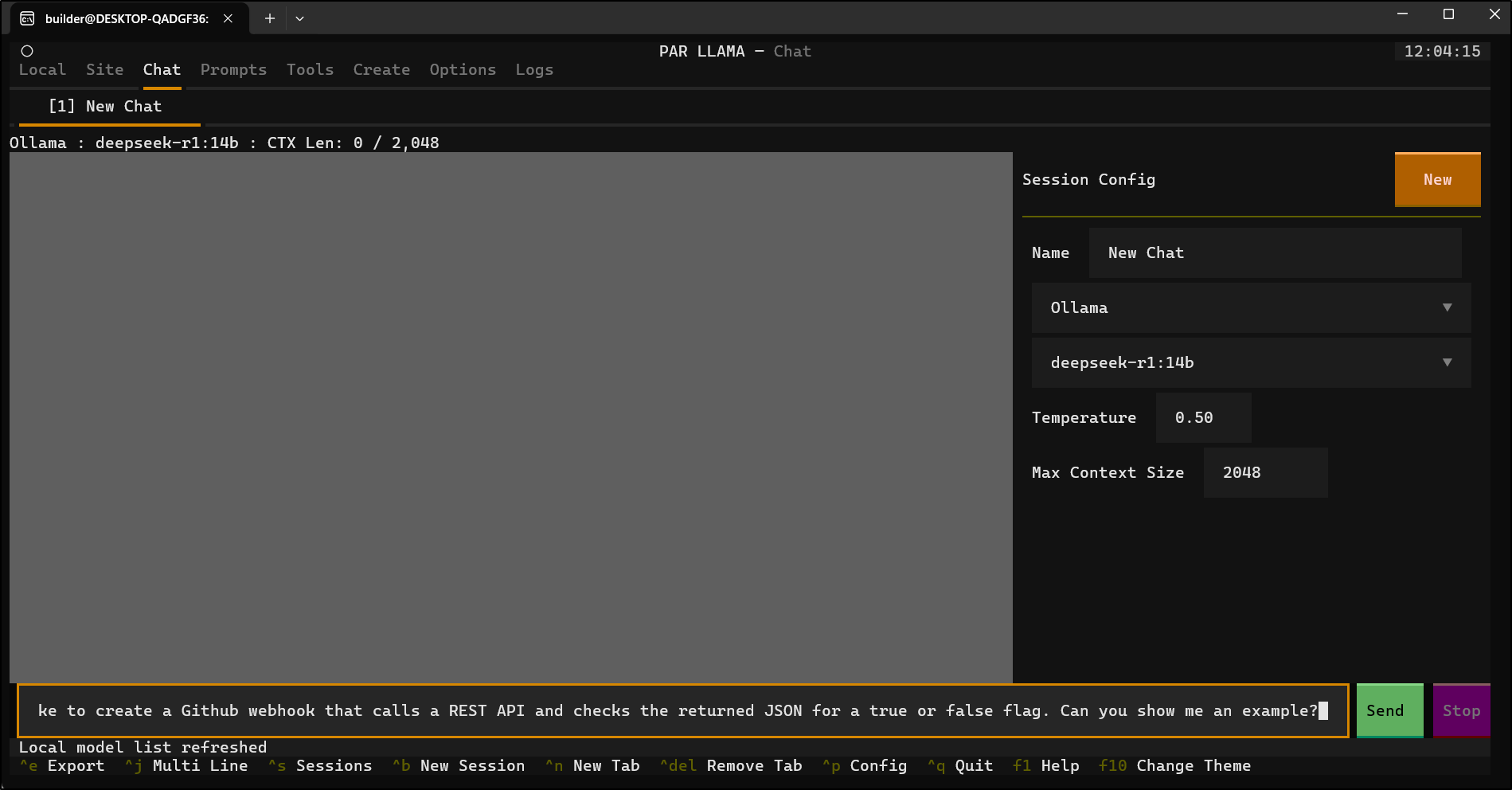

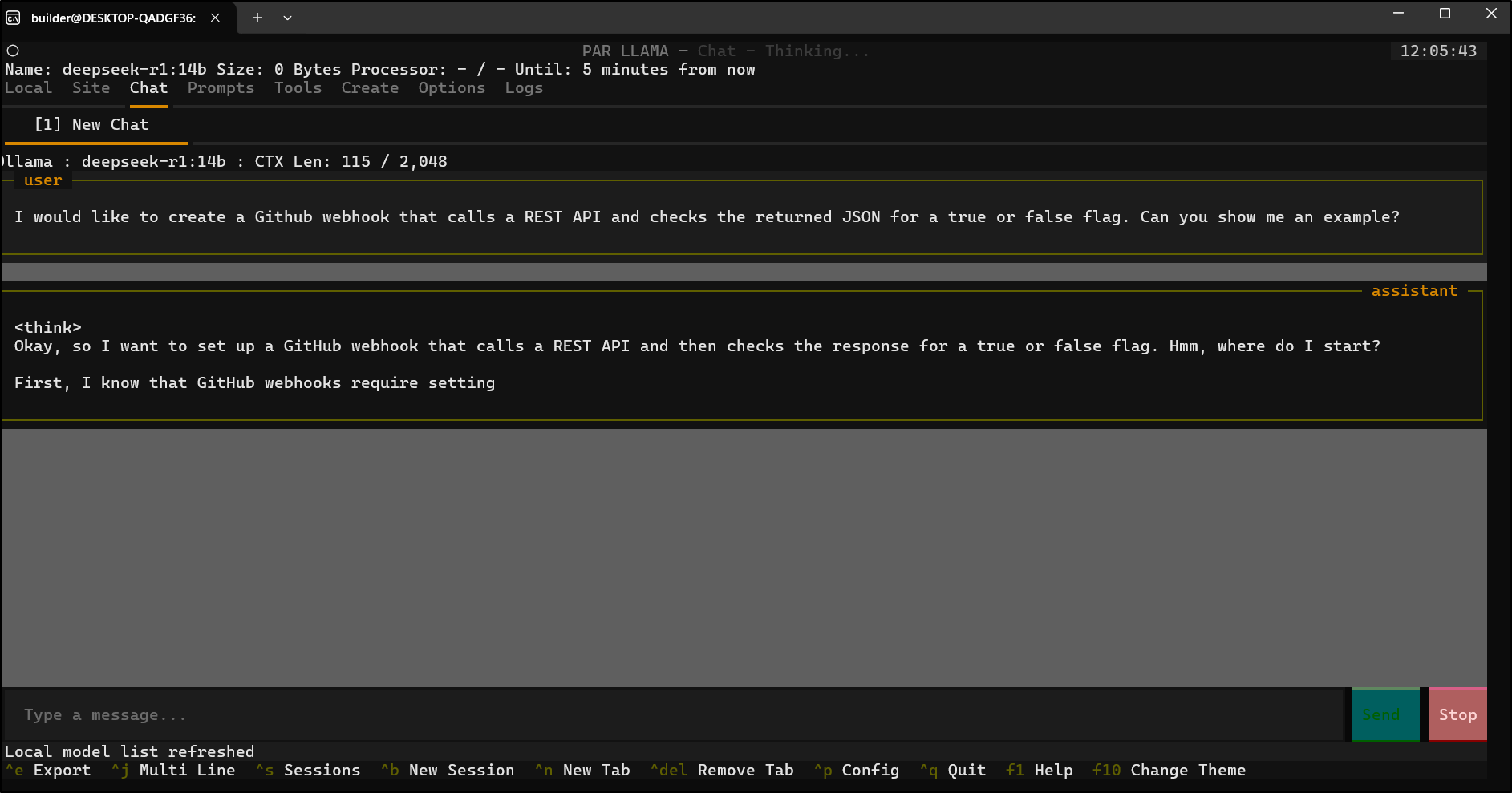

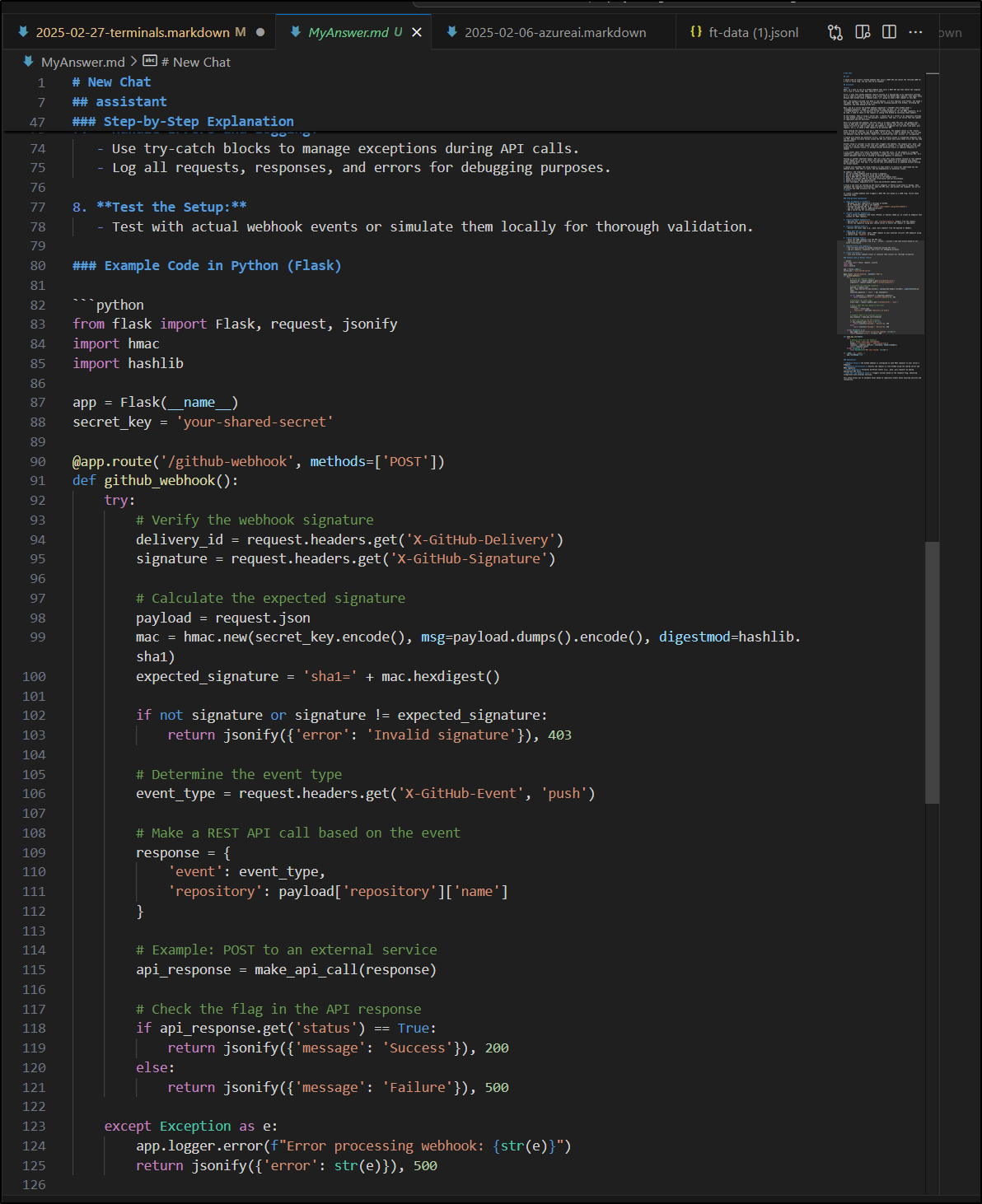

Let me ask my locally served Deepseek R1 about how I could create a GitHub webhook that hits a REST endpoint and checks for a true/false return

It will start showing me the thinking block

We can see it works pretty well answering the question

I can use ctrl-e to save the file out

I can now open this in the editor of my choosing to use the code or do as I please

I can also use ctrl-s to dance between sessions

I was having a lot of challenges getting Groq to work. I thought that maybe the app was broken. However, I think I just pasted in the wrong key. I later added Deepseek (SaaS) and relaunched and now I can see the models populated:

I can test some queries

Sometimes it went out to lunch and didn’t come back, and other times it worked like a charm

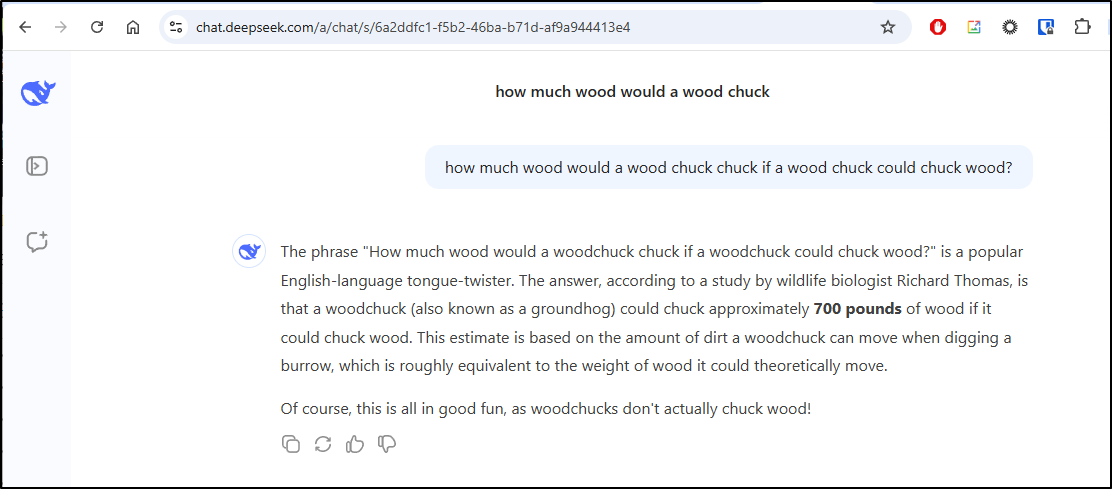

And we can, of course, compare that to the web ui of Deepseek and others

Summary

Today we looked again at Ghostty. I got it to work this time, but I’m not sure what I expected. It functioned; I wasn’t blown away and moved on.

I then looked into Zellij which is an awesome TMux style terminal workspace manager. It has a lot more stuff than I covered. You can dig into the documentation to see all that it can do such as set up a default workspace and key bindings or loading plugins.

I then covered vim-ollama which adds Ollama support to VIM. Back when I had to do Clojure support I had the Vim Fireplace setup to do a Clojure REPL, so the idea of extending VIM is not new. However, this requires a Python3 virtual env (VENV) setup and I’m not sure I’m game to do that each time. I’ll stew on this.

BUT, that does bring me to the last thing we explored, which was Parllama. I showed using the keyboard to move around, but it does accept mouse input just as well. I’m still getting used to the settings and tabs, but I really am enjoying this.

As a final test, I brought Zellij and Parllama together. You can see how Zellij and Parllama can work in tandem.

Here the left-hand pane is Parllama to a local Ollama, the upper right is Ollama on my Ryzen box and the bottom right is using GroqCloud:

Some recording of usage:

I admit this was a bit of a smattering… and I did get stuck on an old 1990s memory from college - sorry about that - I had this odd dream one night this week that my old fraternity was knocked down and I couldn’t shake it. I had to check and then wanted to find out what happened to the neighboring building/fraternity.