Published: Jan 7, 2025 by Isaac Johnson

I was recently turned on to v0 by a YouTube talk that showed how to use it to quickly turn ideas into wireframe sites, which can then be fed into tools like ChatGPT, Gemini, Copilot or CursorAI.

Today I’ll create an app with v0, then use Google Gemini Code Assist to polish it up and carry it through to a fully hosted, reachable resume app fed with JSON blocks.

Getting started with v0

I could use it without signing in if I wanted to.

However, I plan to experiment later with some features that may benefit from being logged in, so I’ll sign in using GitHub as my identity provider (IdP).

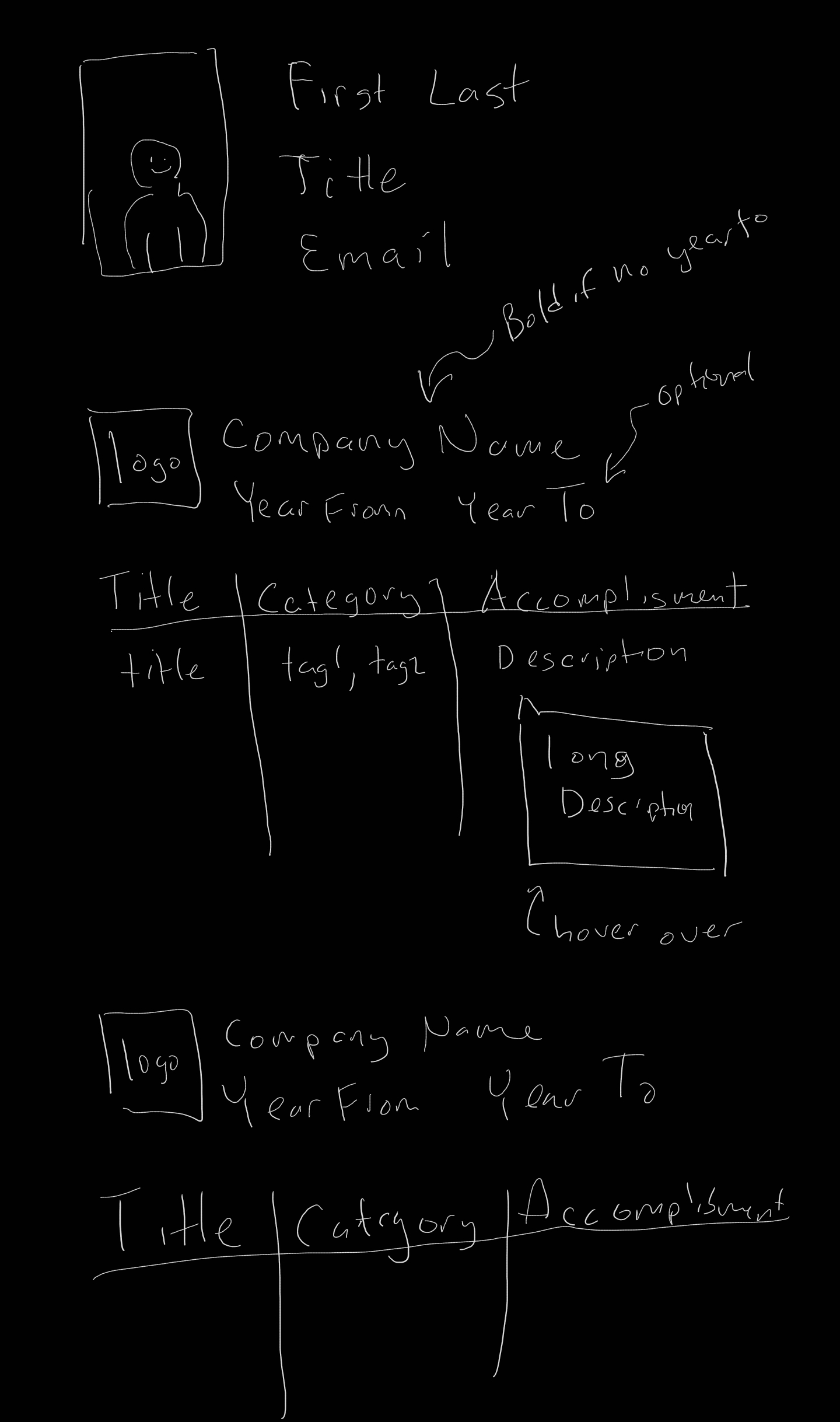

Usually it helps to have some idea of what you want to make.

For instance, I scratched out an idea of a Resume style app using my phone:

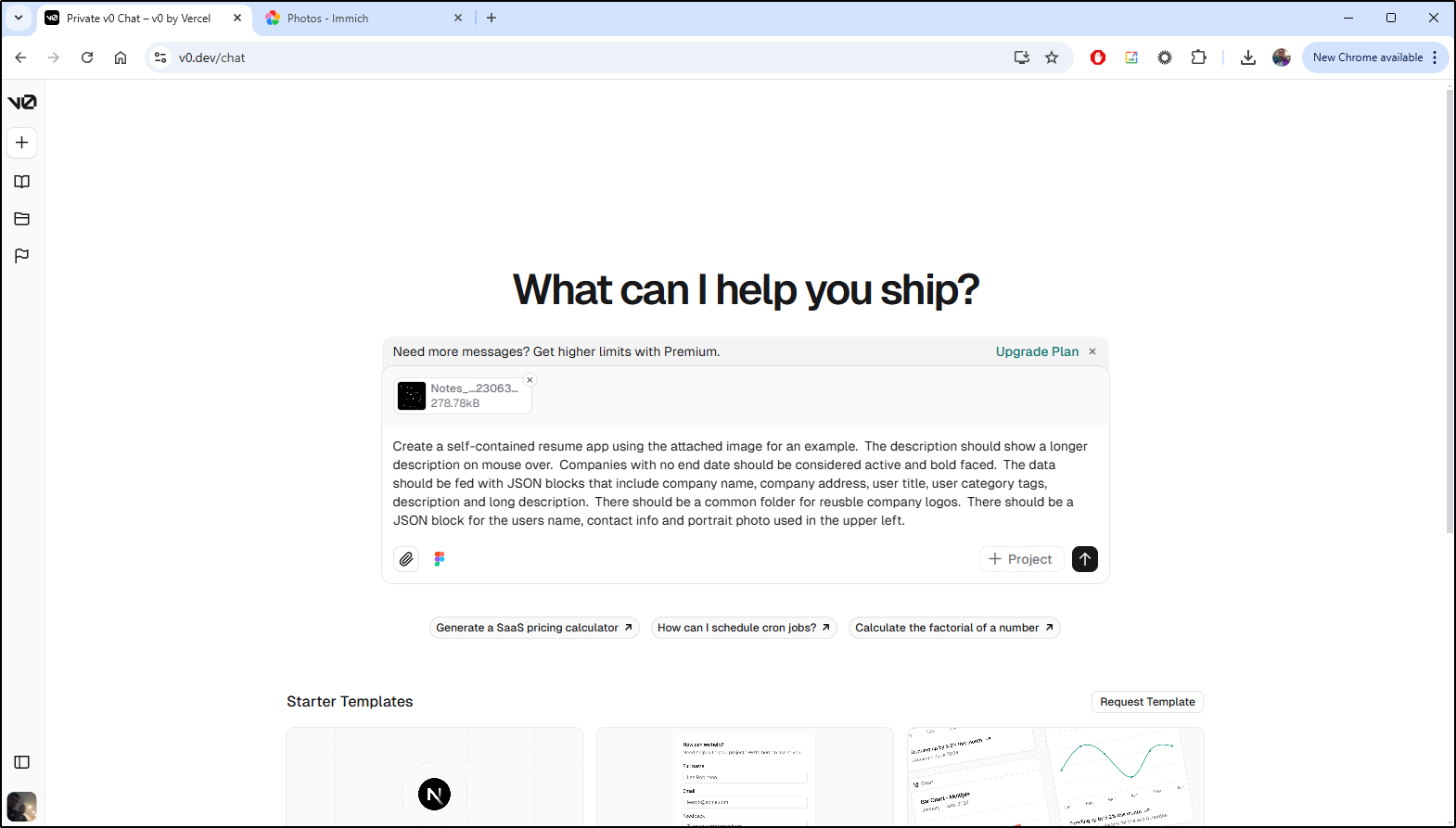

I’m not a v0 expert, but after uploading the wireframe I wanted to give it as much detail as possible:

Create a self-contained resume app using the attached image for an example. The description should show a longer description on mouse over. Companies with no end date should be considered active and bold faced. The data should be fed with JSON blocks that include company name, company address, user title, user category tags, description and long description. There should be a common folder for reusable company logos. There should be a JSON block for the users name, contact info and portrait photo used in the upper left.
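The “no end date means active, shown in bold” rule in that prompt comes down to a check on an optional end date. Here is a minimal JavaScript sketch of that logic, assuming entries with companyName, startDate and an optional endDate in "YYYY-MM" form (the field names match the experiences JSON used later in this post; the helper functions themselves are hypothetical, not v0's generated code):

```javascript
// Hypothetical helpers for the "no end date means active and bold" rule.
// Assumes entries like { companyName, startDate: "YYYY-MM", endDate?: "YYYY-MM" }.

// Escape text before inserting it into HTML (EJS's <%= %> does this for you).
function escapeHtml(text) {
  const map = { '&': '&amp;', '<': '&lt;', '>': '&gt;', '"': '&quot;', "'": '&#39;' };
  return String(text).replace(/[&<>"']/g, (ch) => map[ch]);
}

// An entry without an endDate (missing or empty string) is the current job.
function isActive(entry) {
  return !entry.endDate;
}

// Active entries first, then newest startDate first.
// Zero-padded "YYYY-MM" strings sort correctly as plain strings.
function sortExperiences(entries) {
  return [...entries].sort((a, b) => {
    if (isActive(a) !== isActive(b)) return isActive(a) ? -1 : 1;
    return b.startDate.localeCompare(a.startDate);
  });
}

// Bold the (escaped) company name for active entries.
function renderCompanyName(entry) {
  const name = escapeHtml(entry.companyName);
  return isActive(entry) ? `<strong>${name}</strong>` : name;
}
```

Keeping the rule in one isActive function means the sort order and the bold styling can never disagree about which job is current.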

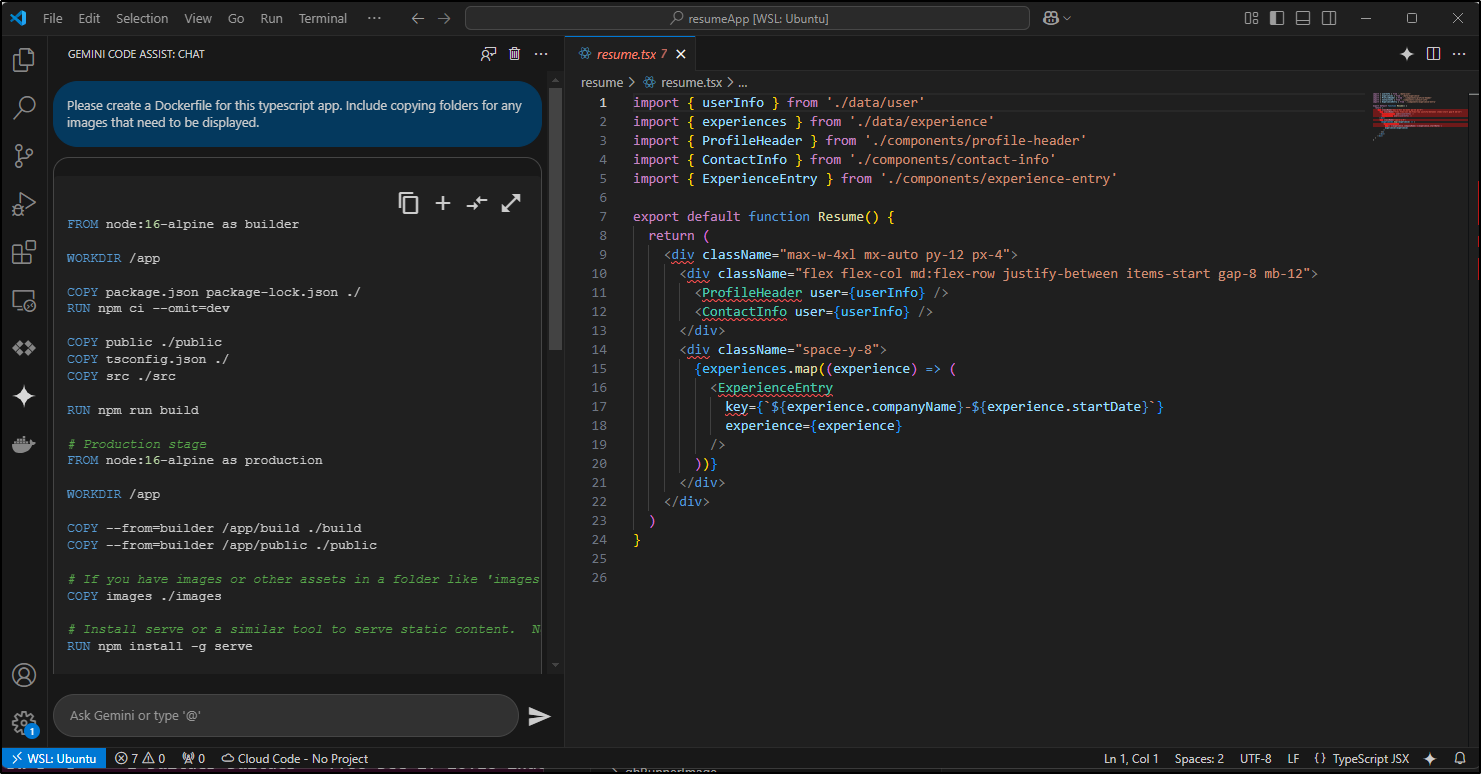

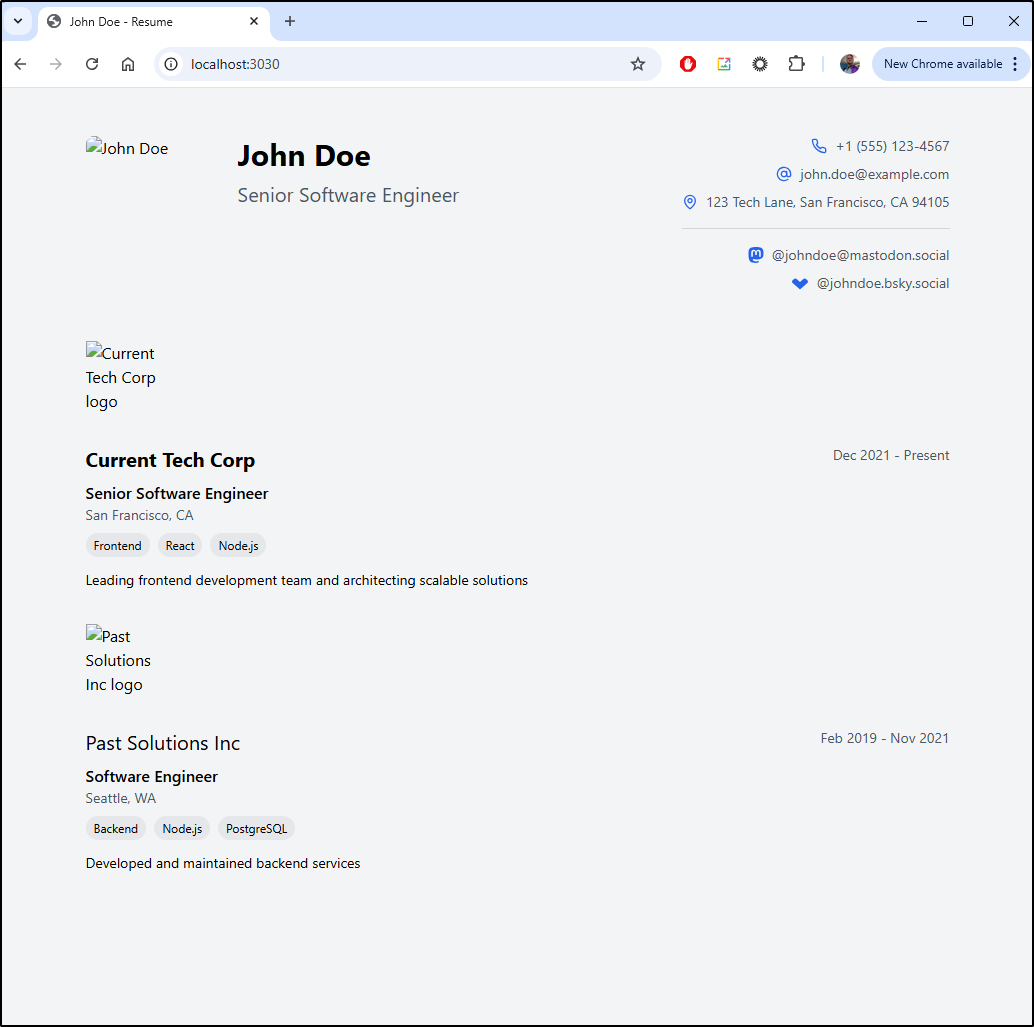

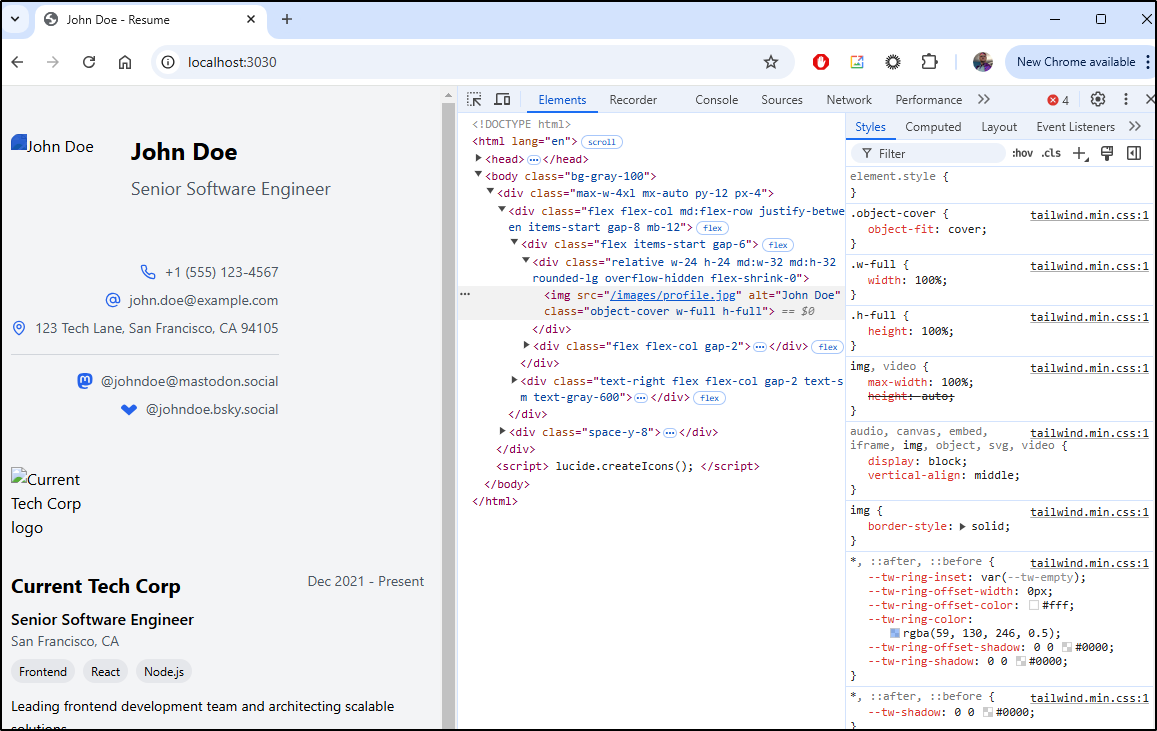

Here you can see me iterate with v0 to build it out the way I want.

At the end, I downloaded it locally.

Next, I’ll copy it into a local WSL folder and extract it so I can use Gemini Code Assist:

```
builder@LuiGi:~/Workspaces$ cd resumeApp/
builder@LuiGi:~/Workspaces/resumeApp$ cp /mnt/c/Users/isaac/Downloads/resume.zip ./
builder@LuiGi:~/Workspaces/resumeApp$ unzip resume.zip
Archive: resume.zip
 extracting: resume/data/user.ts
 extracting: resume/data/experience.ts
 extracting: resume/components/experience-entry.tsx
 extracting: resume/components/contact-info.tsx
 extracting: resume/resume.tsx
 extracting: resume/components/profile-header.tsx
builder@LuiGi:~/Workspaces/resumeApp$ rm resume.zip
```

Next, I want to ask Gemini to create a Dockerfile:

Please create a Dockerfile for this typescript app. Include copying folders for any images that need to be displayed.

I can now pose that to Gemini Code Assist in VS Code.

I expected some tweaks, but I went ahead and created an images folder, then the Dockerfile.

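My first issue in testing is that it clearly isn’t TypeScript-aware: it created a Dockerfile for an out-of-the-box Node.js 16 app instead, expecting package.json, package-lock.json, public, tsconfig.json and src, none of which exist here.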

```
builder@LuiGi:~/Workspaces/resumeApp/resume$ docker build -t resumeapp:0.1 .
[+] Building 3.9s (11/16) docker:default
 => [internal] load build definition from Dockerfile 0.1s
 => => transferring dockerfile: 609B 0.0s
 => [internal] load metadata for docker.io/library/node:16-alpine 3.3s
 => [internal] load .dockerignore 0.1s
 => => transferring context: 2B 0.0s
 => CANCELED [builder 1/8] FROM docker.io/library/node:16-alpine@sha2 0.2s
 => => resolve docker.io/library/node:16-alpine@sha256:a1f9d027912b58 0.1s
 => => sha256:2573171e0124bb95d14d128728a52a97bb917ef 6.73kB / 6.73kB 0.0s
 => => sha256:a1f9d027912b58a7c75be7716c97cfbc6d3099f 1.43kB / 1.43kB 0.0s
 => => sha256:72e89a86be58c922ed7b1475e5e6f1515376764 1.16kB / 1.16kB 0.0s
 => [internal] load build context 0.1s
 => => transferring context: 28B 0.0s
 => CACHED [builder 2/8] WORKDIR /app 0.0s
 => ERROR [builder 3/8] COPY package.json package-lock.json ./ 0.0s
 => CACHED [builder 4/8] RUN npm ci --omit=dev 0.0s
 => ERROR [builder 5/8] COPY public ./public 0.0s
 => ERROR [builder 6/8] COPY tsconfig.json ./ 0.0s
 => ERROR [builder 7/8] COPY src ./src 0.0s
------
 > [builder 3/8] COPY package.json package-lock.json ./:
------
------
 > [builder 5/8] COPY public ./public:
------
------
 > [builder 6/8] COPY tsconfig.json ./:
------
------
 > [builder 7/8] COPY src ./src:
------
Dockerfile:10
--------------------
   8 |     COPY public ./public
   9 |     COPY tsconfig.json ./
  10 | >>> COPY src ./src
  11 |
  12 |     RUN npm run build
--------------------
ERROR: failed to solve: failed to compute cache key: failed to calculate checksum of ref 301ef905-4e11-4444-b057-31af8db93ec9::pyfpfbslfj4uoxbjgxdw5dyqq: "/src": not found
```

I tried to prompt Gemini to switch to TypeScript, but it was pretty insistent that I should have a package.json (which I clearly do not).
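Rather than arguing with the assistant, a quick pre-flight check can show which COPY sources are absent from the build context. This is a hypothetical helper, not part of the app, and it only understands simple `COPY src... dest` lines: it skips `COPY --from=` stage copies and does not expand wildcards or handle the JSON-array form.

```javascript
// Hypothetical pre-flight check: list COPY sources in a Dockerfile and report
// which ones are missing from the build context directory.
const fs = require('fs');
const path = require('path');

function copySources(dockerfileText) {
  const sources = [];
  for (const rawLine of dockerfileText.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (!/^COPY\s/i.test(line)) continue; // also skips comment lines
    const args = line.split(/\s+/).slice(1);
    // COPY --from=<stage> reads from another stage, not the build context.
    if (args.some((a) => a.startsWith('--from='))) continue;
    // Drop other flags (e.g. --chown=...), then the last operand (destination).
    const operands = args.filter((a) => !a.startsWith('--'));
    sources.push(...operands.slice(0, -1));
  }
  return sources;
}

function missingSources(dockerfileText, contextDir) {
  return copySources(dockerfileText).filter(
    (src) => !fs.existsSync(path.join(contextDir, src))
  );
}
```

Running `missingSources(fs.readFileSync('Dockerfile', 'utf8'), '.')` from the project folder should flag the same kind of missing paths the failed build tripped over, before Docker even starts.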

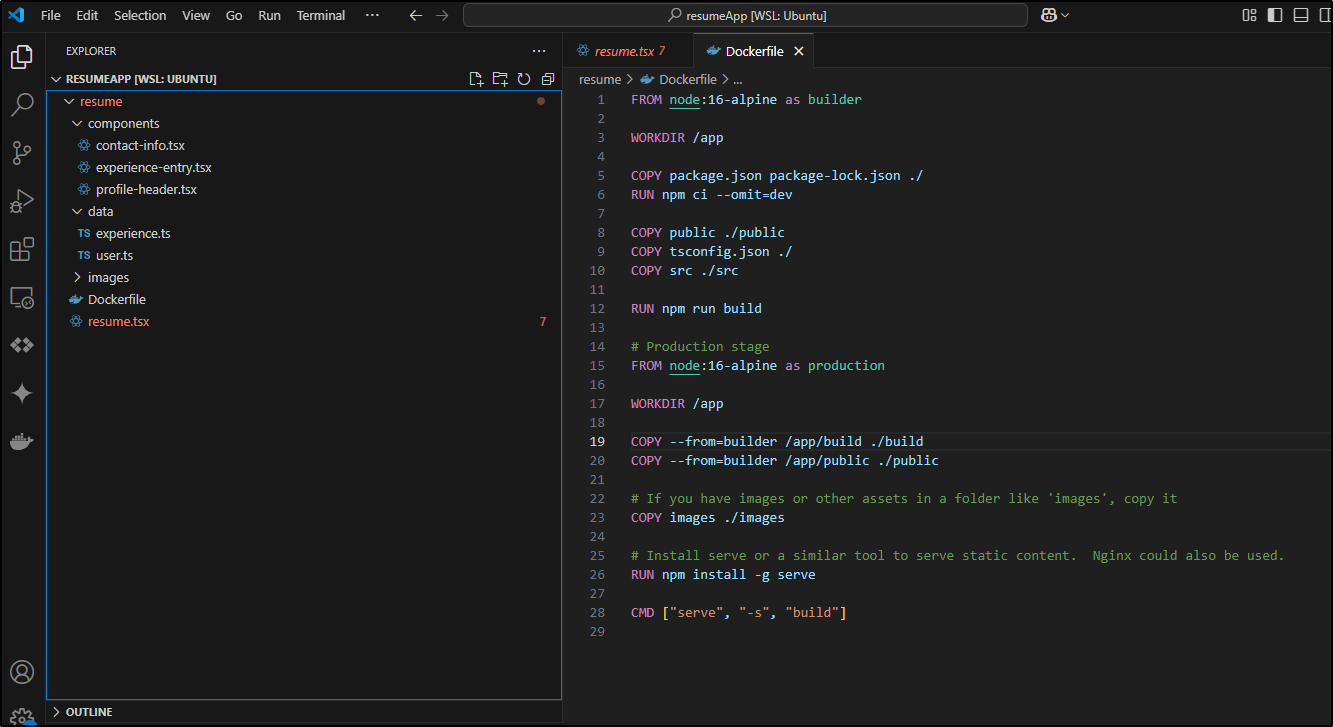

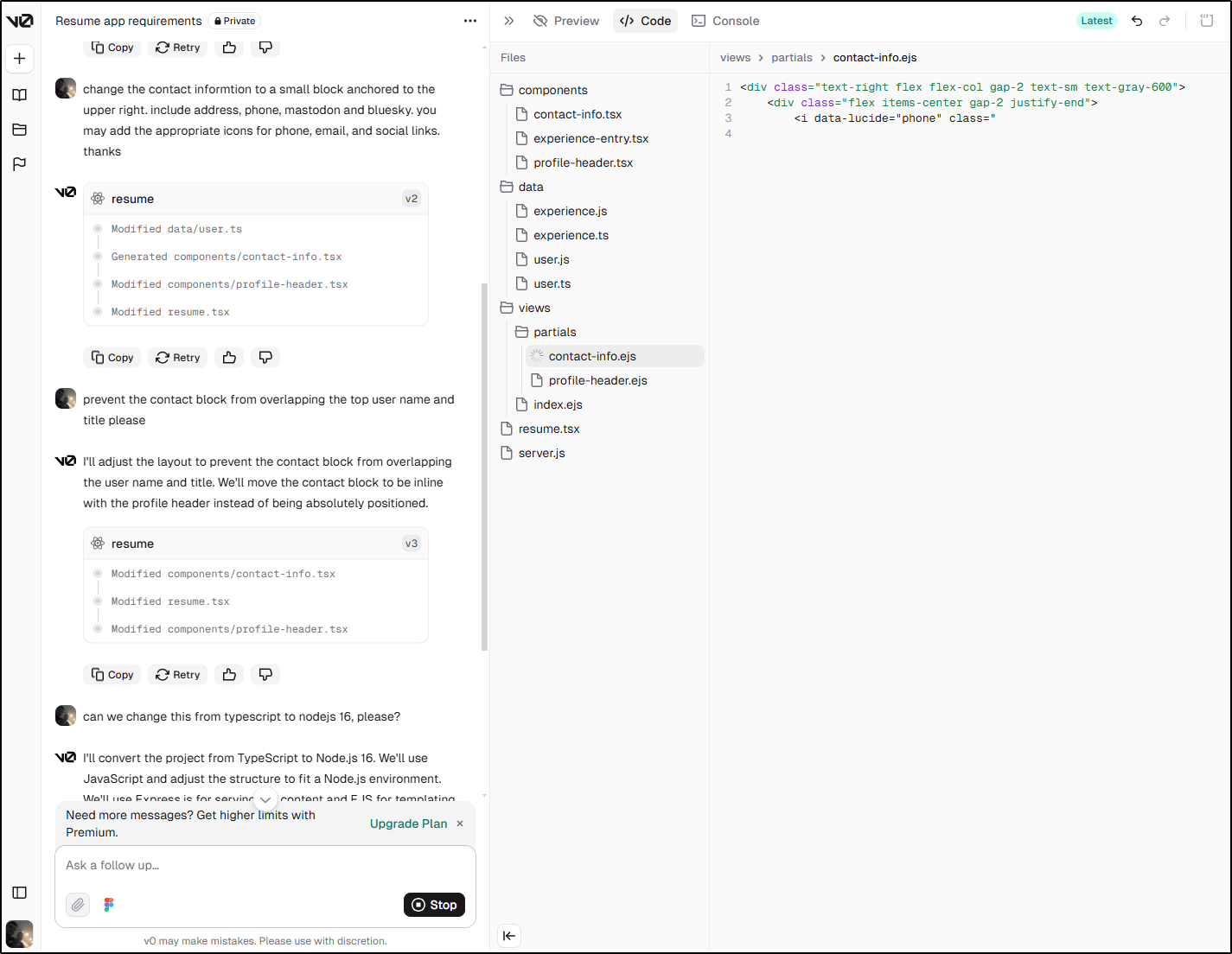

Perhaps I can get v0 to pivot to Node.js 16 instead.

It was actually pretty cool to watch v0 convert all the files over.

However, one consequence of switching to Express is that I can no longer preview the app in v0.

I downloaded the new version, moved the first draft aside, and brought its Dockerfile over:

```
builder@LuiGi:~/Workspaces/resumeApp/resume$ cd ..
builder@LuiGi:~/Workspaces/resumeApp$ ls
resume
builder@LuiGi:~/Workspaces/resumeApp$ mv resume resume_1
builder@LuiGi:~/Workspaces/resumeApp$ cp /mnt/c/Users/isaac/Downloads/resume\ \(1\).zip ./resume.zip
builder@LuiGi:~/Workspaces/resumeApp$ unzip resume.zip
Archive: resume.zip
 extracting: resume/data/user.ts
 extracting: resume/data/experience.ts
 extracting: resume/components/profile-header.tsx
 extracting: resume/components/experience-entry.tsx
 extracting: resume/resume.tsx
 extracting: resume/components/contact-info.tsx
 extracting: resume/server.js
 extracting: resume/data/user.js
 extracting: resume/data/experience.js
 extracting: resume/views/index.ejs
 extracting: resume/views/partials/profile-header.ejs
 extracting: resume/views/partials/contact-info.ejs
 extracting: resume/views/partials/experience-entry.ejs
 extracting: resume/package.json
builder@LuiGi:~/Workspaces/resumeApp$ cp resume_1/Dockerfile ./resume
builder@LuiGi:~/Workspaces/resumeApp$
```

At this point I needed to step in and guide some things.

I wanted a package-lock.json, so I ran npm install to generate one (after first trying npm build, which isn’t an npm command):

```
builder@LuiGi:~/Workspaces/resumeApp/resume$ nvm use 16.20.2
Now using node v16.20.2 (npm v8.19.4)
builder@LuiGi:~/Workspaces/resumeApp/resume$ npm build
Unknown command: "build"

To see a list of supported npm commands, run:
  npm help
builder@LuiGi:~/Workspaces/resumeApp/resume$ npm install

added 84 packages, and audited 85 packages in 4s

16 packages are looking for funding
  run `npm fund` for details

found 0 vulnerabilities
```

I also reworked the Dockerfile to account for the MVC setup:

```dockerfile
FROM node:16-alpine as builder
WORKDIR /app
COPY package.json package-lock.json ./
RUN npm ci --omit=dev
COPY components ./components
COPY data ./data
COPY images ./images
COPY views ./views
#RUN npm run build

# Production stage
FROM node:16-alpine as production
WORKDIR /app
#COPY --from=builder /app/build ./build
#COPY --from=builder /app/public ./public

# If you have images or other assets in a folder like 'images', copy it
COPY components ./components
COPY data ./data
COPY images ./images
COPY views ./views

# Install serve or a similar tool to serve static content. Nginx could also be used.
RUN npm install -g serve
CMD ["serve", "-s", "start"]
```

It’s not great. I can likely ditch the builder stage, as we don’t need it. But it does build a container:

```
builder@LuiGi:~/Workspaces/resumeApp/resume$ docker build -t resumeapp:0.1 .
[+] Building 5.0s (12/12) FINISHED docker:default
 => [internal] load build definition from Dockerfile 0.0s
 => => transferring dockerfile: 706B 0.0s
 => [internal] load metadata for docker.io/library/node:16-alpine 0.3s
 => [internal] load .dockerignore 0.0s
 => => transferring context: 2B 0.0s
 => [production 1/7] FROM docker.io/library/node:16-alpine@sha256:a1f9d027912b58a7c75be7716c97cfbc6 0.0s
 => [internal] load build context 0.0s
 => => transferring context: 622B 0.0s
 => CACHED [production 2/7] WORKDIR /app 0.0s
 => [production 3/7] COPY components ./components 0.1s
 => [production 4/7] COPY data ./data 0.1s
 => [production 5/7] COPY images ./images 0.1s
 => [production 6/7] COPY views ./views 0.1s
 => [production 7/7] RUN npm install -g serve 3.8s
 => exporting to image 0.3s
 => => exporting layers 0.3s
 => => writing image sha256:cdb7003eb6a3562cc9032a11ea2280ae4f2da2cbb2810eb5374e9df6a3f06416 0.0s
 => => naming to docker.io/library/resumeapp:0.1 0.0s

What's Next?
  View a summary of image vulnerabilities and recommendations → docker scout quickview
```

Next, I’ll try running it (knowing many images will be blank):

```
$ docker run -p 3020:3000 resumeapp:0.1
 INFO  Accepting connections at http://localhost:3000
```

Local testing

I was about to go down a rabbit hole converting the code when I decided to stop for a moment and just try it locally:

```
$ PORT=3030 npm start

> resume-nodejs@1.0.0 start
> node server.js

Server running at http://localhost:3030
```

That looks about right.
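The PORT variable is what lets `PORT=3030 npm start` move the listener, and the Kubernetes manifests later rely on the same mechanism. v0's server.js isn't shown here, so this is a hedged sketch of the common pattern, using Node's built-in http module instead of Express to stay dependency-free; the 3000 fallback is an assumption.

```javascript
// Sketch of the usual "listen on $PORT" pattern (not the actual server.js).
const http = require('http');

// Use PORT from the environment when it is a valid TCP port, else the fallback.
function resolvePort(env, fallback = 3000) {
  const n = Number.parseInt(env.PORT, 10);
  return Number.isInteger(n) && n > 0 && n < 65536 ? n : fallback;
}

// Start a trivial server on the given port and return it so callers can close it.
function startServer(port) {
  const server = http.createServer((req, res) => {
    res.writeHead(200, { 'Content-Type': 'text/plain' });
    res.end('ok\n');
  });
  server.listen(port, () => console.log(`Server running at http://localhost:${port}`));
  return server;
}
```

Calling `startServer(resolvePort(process.env))` and launching with `PORT=3030 node server.js` would then listen on 3030, while a plain `node server.js` falls back to 3000.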

Some of the missing images are things like /images/profile.png.

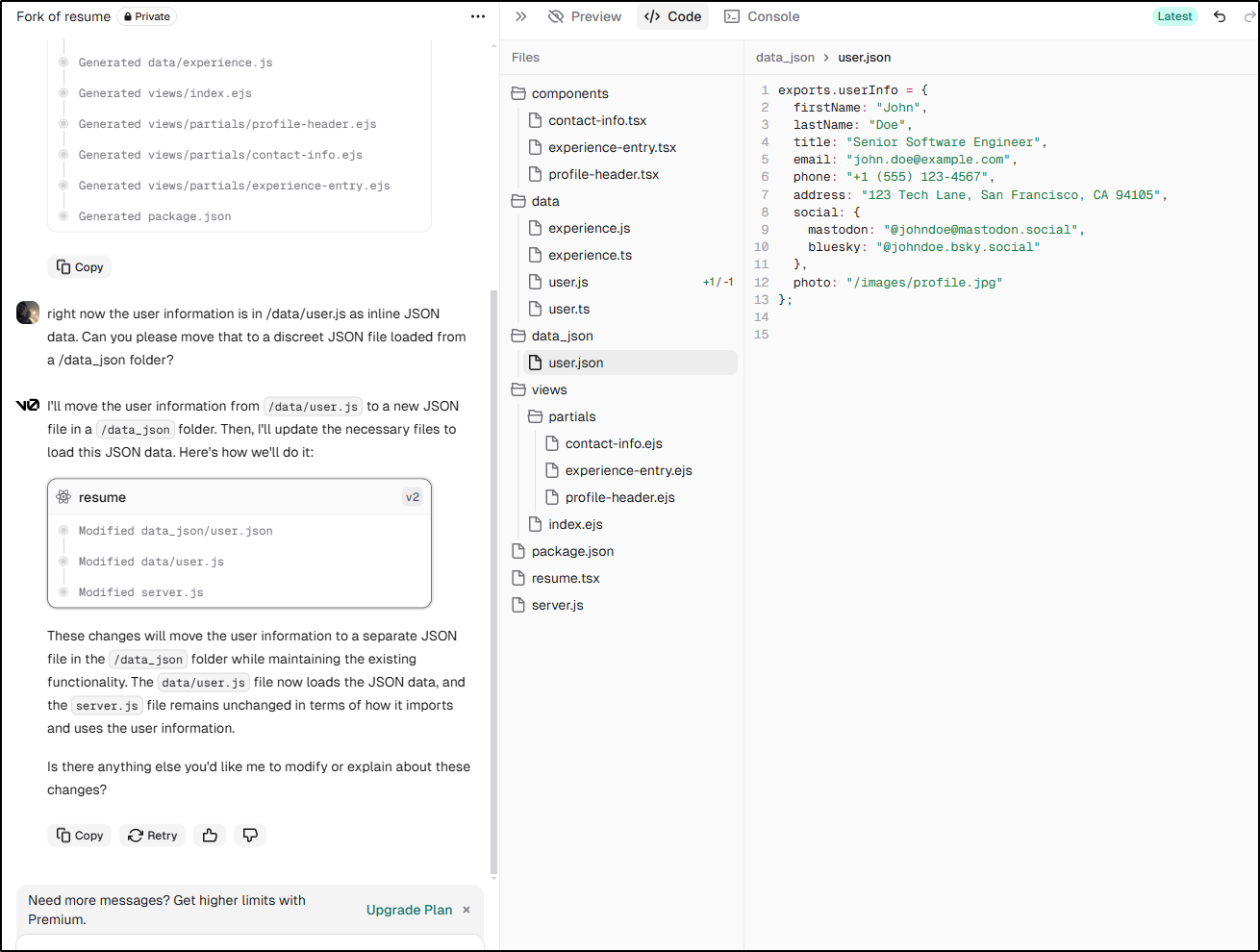

I tried to get v0 to move the user data into a JSON file, but it just put JavaScript contents into a file with a JSON name.

However, coming back to Gemini Code Assist, I got a bit farther.

I eventually settled on a user.js that looks like this:

```javascript
const fs = require('fs');
const path = require('path');

// user.json lives in data_json/, a sibling of this data/ folder
const userDataPath = path.join(__dirname, '..', 'data_json', 'user.json');

// Read and parse once, when the module is first required
const userJson = fs.readFileSync(userDataPath, 'utf8');
exports.userInfo = JSON.parse(userJson);
```

This brings up a good point: AIs can only take us so far. We still need some ability to code and debug.

With some tweaking and rework, I managed to get it close to how I wanted it to look.
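One weakness of the user.js above is that a missing or malformed user.json crashes the app with a generic stack trace. A slightly more defensive variant, purely illustrative and not the code used in this post, names the file in the error and checks a few fields; the required-field list is an assumption based on the user.json shown later in the ConfigMap.

```javascript
const fs = require('fs');
const path = require('path');

// Assumed required fields, based on the user.json in the ConfigMap example.
const REQUIRED_USER_FIELDS = ['firstName', 'lastName', 'email', 'photo'];

// Read and parse a JSON file, naming the file in any error.
function loadJsonFile(filePath) {
  let text;
  try {
    text = fs.readFileSync(filePath, 'utf8');
  } catch (err) {
    throw new Error(`Cannot read ${filePath}: ${err.message}`);
  }
  try {
    return JSON.parse(text);
  } catch (err) {
    throw new Error(`Invalid JSON in ${filePath}: ${err.message}`);
  }
}

// Load user.json from the given directory and check the expected fields exist.
function loadUserInfo(dataDir) {
  const user = loadJsonFile(path.join(dataDir, 'user.json'));
  if (user === null || typeof user !== 'object' || Array.isArray(user)) {
    throw new Error('user.json must contain a JSON object');
  }
  const missing = REQUIRED_USER_FIELDS.filter((key) => !(key in user));
  if (missing.length > 0) {
    throw new Error(`user.json is missing: ${missing.join(', ')}`);
  }
  return user;
}
```

Replacing the last line of user.js with `exports.userInfo = loadUserInfo(path.join(__dirname, '..', 'data_json'));` would keep the same file location while failing with a clearer message.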

Moving to GitHub

I want to work more on this, but first I need to push it to GitHub so we can work out a proper Docker build and push, Helm charts and the rest.

Pushing it was easy:

```
builder@LuiGi:~/Workspaces/resumeApp/resume$ git push origin main
Enumerating objects: 50, done.
Counting objects: 100% (50/50), done.
Delta compression using up to 16 threads
Compressing objects: 100% (49/49), done.
Writing objects: 100% (50/50), 2.08 MiB | 428.00 KiB/s, done.
Total 50 (delta 12), reused 0 (delta 0), pack-reused 0
remote: Resolving deltas: 100% (12/12), done.
To https://github.com/idjohnson/resumeapp.git
 * [new branch]      main -> main
```

I can now ask Gemini Code Assist to build out my pipeline:

Create a Github Actions workflow YAML file to build the Dockerfile and push to GHCR (Github Artifacts). Use the commit SHA in the container tag.

Let’s build out a working GitHub Actions workflow together:

As you can see, the default build context had to be sorted out, as did the workflow permissions for publishing; Gemini Code Assist fixed both correctly.

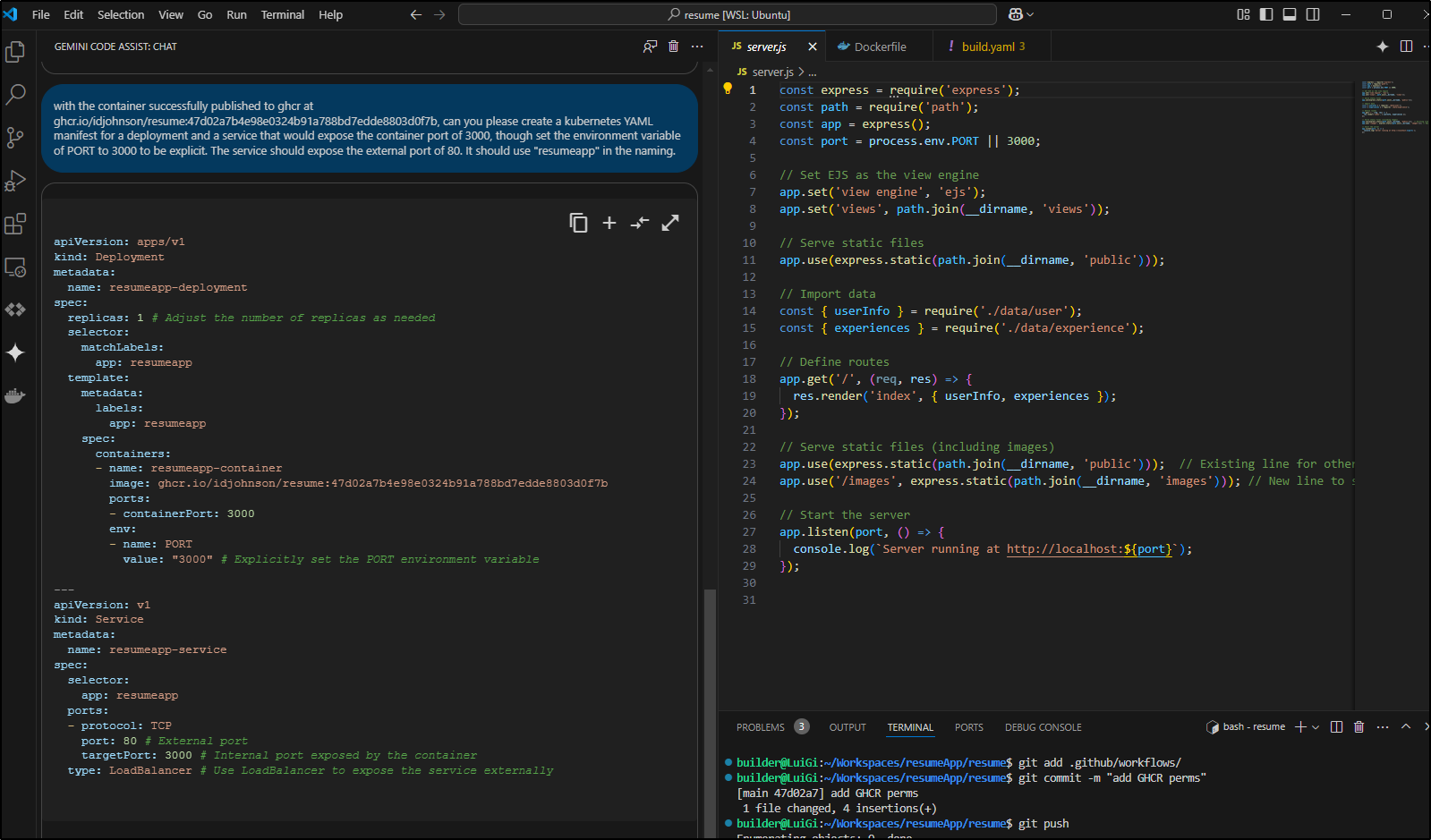
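The commit-SHA tagging the workflow performs is easy to reason about as a tiny function. This hypothetical helper builds the kind of GHCR reference the manifests below consume. GitHub Actions exposes the full commit SHA as the GITHUB_SHA environment variable; the owner and image name (resume, rather than the repo name resumeapp) are taken from this post's image and are otherwise assumptions.

```javascript
// Hypothetical helper: build a GHCR image reference tagged with a full commit SHA.
function ghcrImageRef(owner, imageName, sha) {
  // Git commit SHAs (and GITHUB_SHA) are 40 lowercase hex characters.
  if (!/^[0-9a-f]{40}$/.test(sha)) {
    throw new Error(`Expected a full 40-character commit SHA, got "${sha}"`);
  }
  // Image repository names must be lowercase, so normalize owner and name.
  return `ghcr.io/${owner.toLowerCase()}/${imageName.toLowerCase()}:${sha}`;
}
```

In a workflow step, something like `ghcrImageRef('idjohnson', 'resume', process.env.GITHUB_SHA)` would produce a tag in the same shape as the one used in the manifest below. Tagging with the full SHA makes every deployed image traceable to exactly one commit.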

I want to test it, so I’ll now ask Gemini Code Assist to help create the manifest:

with the container successfully published to ghcr at ghcr.io/idjohnson/resume:47d02a7b4e98e0324b91a788bd7edde8803d0f7b, can you please create a kubernetes YAML manifest for a deployment and a service that would expose the container port of 3000, though set the environment variable of PORT to 3000 to be explicit. The service should expose the external port of 80. It should use “resumeapp” in the naming.

It gives a usable YAML manifest. However, I do have some worries about needing to authenticate to pull an image from a private repository on GHCR.

I’ll try it as-is first:

```
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ cat k8s-manifest.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: resumeapp-deployment
spec:
  replicas: 1 # Adjust the number of replicas as needed
  selector:
    matchLabels:
      app: resumeapp
  template:
    metadata:
      labels:
        app: resumeapp
    spec:
      containers:
      - name: resumeapp-container
        image: ghcr.io/idjohnson/resume:47d02a7b4e98e0324b91a788bd7edde8803d0f7b
        ports:
        - containerPort: 3000
        env:
        - name: PORT
          value: "3000" # Explicitly set the PORT environment variable
---
apiVersion: v1
kind: Service
metadata:
  name: resumeapp-service
spec:
  selector:
    app: resumeapp
  ports:
  - protocol: TCP
    port: 80 # External port
    targetPort: 3000 # Internal port exposed by the container
  type: LoadBalancer # Use LoadBalancer to expose the service externally
```

```
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ kubectl apply -f ./k8s-manifest.yaml
deployment.apps/resumeapp-deployment created
service/resumeapp-service created
```

It seemed to work out of the box, which was surprising:

```
$ kubectl describe pod resumeapp-deployment-8697dbb8b8-f5q97 | tail -n 6
  ----    ------     ----   ----               -------
  Normal  Scheduled  3m6s   default-scheduler  Successfully assigned default/resumeapp-deployment-8697dbb8b8-f5q97 to hp-hp-elitebook-850-g2
  Normal  Pulling    3m6s   kubelet            Pulling image "ghcr.io/idjohnson/resume:47d02a7b4e98e0324b91a788bd7edde8803d0f7b"
  Normal  Pulled     2m54s  kubelet            Successfully pulled image "ghcr.io/idjohnson/resume:47d02a7b4e98e0324b91a788bd7edde8803d0f7b" in 11.372895366s (11.372901915s including waiting)
  Normal  Created    2m54s  kubelet            Created container resumeapp-container
  Normal  Started    2m54s  kubelet            Started container resumeapp-container
```

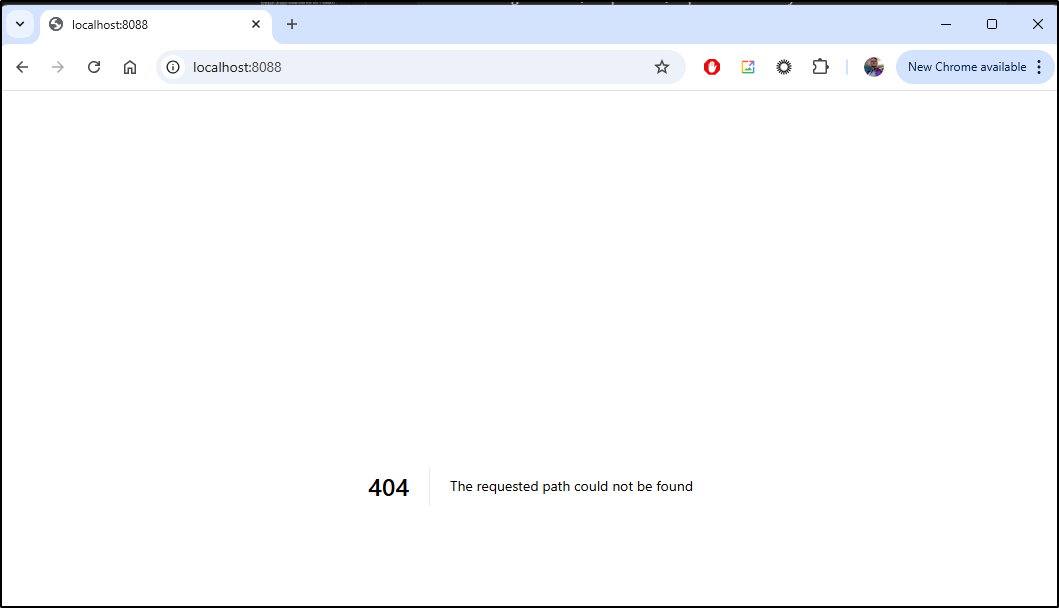

I’ll need to switch the type to ClusterIP later, but for now it should work just fine. I can do a quick port-forward to test:

```
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ kubectl get svc | grep resume
resumeapp-service   LoadBalancer   10.43.223.136   <pending>   80:32470/TCP   3m51s
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ kubectl port-forward svc/resumeapp-service 8088:80
Forwarding from 127.0.0.1:8088 -> 3000
Forwarding from [::1]:8088 -> 3000
```

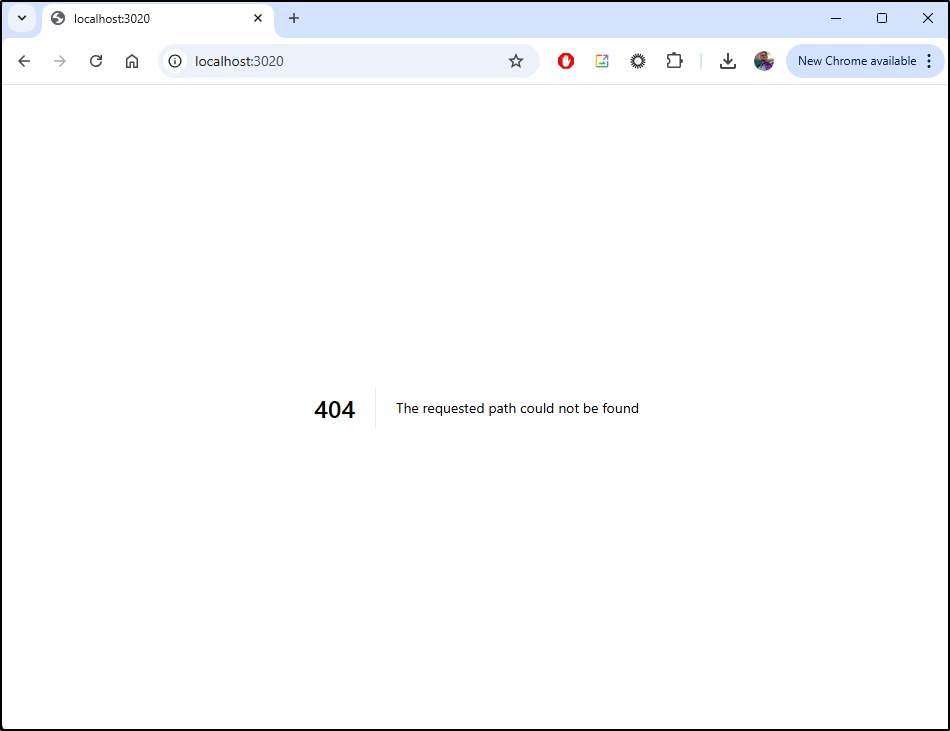

I’m getting that pesky 404 again.

I got the same result using a port-forward straight to the pod:

```
$ kubectl port-forward resumeapp-deployment-8697dbb8b8-f5q97 3000:3000
Forwarding from 127.0.0.1:3000 -> 3000
Forwarding from [::1]:3000 -> 3000
Handling connection for 3000
Handling connection for 3000
Handling connection for 3000
```

I even get it from inside the pod:

```
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ kubectl exec -it resumeapp-deployment-8697dbb8b8-f5q97 -- /bin/sh
/app # wget http://localhost:3000
Connecting to localhost:3000 ([::1]:3000)
wget: server returned error: HTTP/1.1 404 Not Found
/app # wget http://localhost:3000/
Connecting to localhost:3000 ([::1]:3000)
wget: server returned error: HTTP/1.1 404 Not Found
/app # wget http://localhost:3000/resume
Connecting to localhost:3000 ([::1]:3000)
wget: server returned error: HTTP/1.1 404 Not Found
/app # ps -sef
ps: unrecognized option: s
BusyBox v1.36.1 (2023-07-27 17:12:24 UTC) multi-call binary.

Usage: ps [-o COL1,COL2=HEADER] [-T]

Show list of processes

        -o COL1,COL2=HEADER     Select columns for display
        -T                      Show threads
/app # ps -ef
PID   USER     TIME  COMMAND
    1 root      0:00 node /usr/local/bin/serve -s start
   41 root      0:00 /bin/sh
   52 root      0:00 ps -ef
/app # exit
```

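The ps output points at the cause of the 404: PID 1 is `serve -s start`, a static file server pointed at a nonexistent start directory, rather than the Express app in server.js (which that Dockerfile never copied into the image).

After some tweaking, I fixed the Dockerfile, the Service type, the port and the image: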

```
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ kubectl apply -f ./k8s-manifest.yaml
deployment.apps/resumeapp-deployment configured
service/resumeapp-service unchanged
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ cat k8s-manifest.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: resumeapp-deployment
spec:
  replicas: 1 # Adjust the number of replicas as needed
  selector:
    matchLabels:
      app: resumeapp
  template:
    metadata:
      labels:
        app: resumeapp
    spec:
      containers:
      - name: resumeapp-container
        image: ghcr.io/idjohnson/resume:ff2f9399e4d3563b496053d1e83199cbaac1d8a1
        ports:
        - containerPort: 3030
        env:
        - name: PORT
          value: "3030" # Explicitly set the PORT environment variable
---
apiVersion: v1
kind: Service
metadata:
  name: resumeapp-service
spec:
  selector:
    app: resumeapp
  ports:
  - protocol: TCP
    port: 80 # External port
    targetPort: 3030 # Internal port exposed by the container
  type: ClusterIP
```

With the working Dockerfile:

```dockerfile
FROM node:16-alpine
WORKDIR /app
COPY server.js package.json package-lock.json ./
COPY components ./components
COPY data ./data
COPY images ./images
COPY views ./views
# I'll make this dynamic next
COPY data_json ./data_json

# Install dependencies *inside* the container
RUN npm install

# Copy the rest of your application code
COPY . .

# Expose the port your app listens on
EXPOSE 3030

# Define the command to run your app (as before)
CMD ["/bin/sh", "-c", "PORT=3030 npm start"]
```
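Because the port had to change in several places at once, a small consistency check can catch a mismatch before deploying. In Kubernetes, containerPort is mostly informational; what actually matters for traffic is that the Service targetPort equals the port the process listens on. This hypothetical sketch works on plain objects shaped like the parsed Deployment and Service (YAML parsing is left out to avoid a dependency) and assumes the listening port comes from the PORT env var. Note that the Dockerfile's CMD above hardcodes PORT=3030, which overrides the manifest's env var, so this only checks the manifest side.

```javascript
// Hypothetical consistency check for a Deployment/Service pair.
// Input: plain objects shaped like the parsed YAML (no YAML parser here).
// Assumes numeric targetPorts (named ports would need a lookup by name).
function portProblems(deployment, service) {
  const problems = [];
  const listenPorts = [];

  for (const c of deployment.spec.template.spec.containers) {
    const envPort = (c.env || []).find((e) => e.name === 'PORT');
    if (!envPort) continue;
    const listen = Number(envPort.value);
    listenPorts.push(listen);
    // containerPort is informational, but a mismatch is misleading to readers.
    const declared = (c.ports || []).map((p) => Number(p.containerPort));
    if (!declared.includes(listen)) {
      problems.push(`warning: ${c.name} listens on ${listen} but declares [${declared.join(', ')}]`);
    }
  }

  // Without a PORT env var we can't tell where the app listens, so stop here.
  if (listenPorts.length === 0) return problems;

  for (const p of service.spec.ports) {
    if (!listenPorts.includes(Number(p.targetPort))) {
      problems.push(`error: Service port ${p.port} targets ${p.targetPort}, where nothing listens`);
    }
  }
  return problems;
}
```

Against the fixed manifest (PORT, containerPort and targetPort all 3030) this returns an empty list; changing only the PORT value to 3030 while leaving the rest at 3000 would produce one warning and one error.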

Now I can port-forward:

```
$ kubectl port-forward svc/resumeapp-service 8088:80
Forwarding from 127.0.0.1:8088 -> 3030
Forwarding from [::1]:8088 -> 3030
Handling connection for 8088
Handling connection for 8088
Handling connection for 8088
```

and see that it works!

Let’s now expose this with an Ingress. First, I’ll add an A record for resume.tpk.pw in Azure DNS:

```
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n resume
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "94b025d3-ec06-4b5d-ab42-72cfb20ba289",
  "fqdn": "resume.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/resume",
  "name": "resume",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}
```

I can then create an Ingress YAML:

```
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ cat resume.ingress.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: resumeapp-service
  name: resumeappingress
  namespace: default
spec:
  rules:
  - host: resume.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: resumeapp-service
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - resume.tpk.pw
    secretName: myresumeapp-tls
```

```
builder@LuiGi:~/Workspaces/resumeApp/resume/deployment$ kubectl apply -f ./resume.ingress.yaml
ingress.networking.k8s.io/resumeappingress created
```

That worked great.

I then switched to supplying the JSON files from a ConfigMap mounted at /app/data_json:

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: resume-data
data:
  experiences.json: |
    [
      {
        "companyName": "Wellsky",
        "companyLogo": "/images/wellsky.jpeg",
        "location": "Kansas City, KS",
        "startDate": "2022-01",
        "title": "Principal SRE Architect",
        "categories": [ "SRE", "Architect", "GCP", "Kubernetes" ],
        "description": "Leading multiple BU verticals on SRE Architecture and Cloud Migration",
        "longDescription": "Principal Architect over SRE Squad (3 Scrum Teams) for Wellsky Home Health and Hospice Product lines. Focus on Azure DevOps, On-prem deployments, Ansible, Artifactory, NewRelic Monitoring (from Sumo). Pivot to Focus on GCP migration (On-prem/AWS). <br/>March 2022 - Oct 2022: Interim Software Director/Delivery Manager over Feature Squad (3 Scrum Team; US, India, and Argentina) (in addition to Architect role)<br/>March 2022 - Wellsky Home Culture Award for High Performance<br/>Handle high priority Subpoena(s) (Grand Jury request(s))<br/>Point person on full GCP Migration for Hospice BU, key resource for Home Health<br/>Part of Diversity and Inclusion working group (DEI)<br/>Point on SkyDev Studio (Learning Series) for DevOps and TechTalks (two different Studio series)<br/>Presented multiple 15m Lighting Talk (mgmt; PunchUp, LiftDown, In Defence of Open-Source), 2 Hour Azure DevOps Workshop at DevDays 2023 internal conference, 45m talk on Scalable Tracing with OpenTelemetry, NewRelic and Dotnet<br/>Help Azure-based BUs with updates (e.g. AKS)<br/>Part of Personal Care SRE, Datadog Monitor, New Relic SME, Ansible AWX configurations."
      },
      {
        "companyName": "CHRobinson",
        "companyLogo": "/images/chrobinson.png",
        "location": "Eagen, MN",
        "startDate": "2021-07",
        "endDate": "2022-01",
        "title": "Principal DevOps Engineer (Cloud Solutions Architect/Devops Architect)",
        "categories": ["Ansible", "Artifactory", "Azure"],
        "description": "Focus on North Europe Migration Effort with Artifactory Backups and Restore. Ansible Playbook support. ",
        "longDescription": "Focus on North Europe Migration Effort with Artifactory Backups and Restore. Ansible Playbook support. Support for Software Teams primarily in Jenkins using Octopus. Ansible Playbooks for Operational Updates (Timezones, etc). Created and presented a course on Kubernetes to EU and US teams. Worked on APM and Logging suite evaluations for Enterprise Architects. Supported end users in Kubernetes. Engaged and worked with Cloud Team on Terraform updates (Atlantis)."
      }
    ]
  user.json: |
    {
      "firstName": "Isaac",
      "lastName": "Johnson",
      "title": "Cloud Solutions Architect",
      "email": "isaac@freshbrewed.science",
      "phone": "+1 (612) 555-5555",
      "address": "1234 Schooner Circle, Woodbury MN 55125",
      "social": {
        "mastodon": "@ijohnson@noc.social",
        "bluesky": "@isaacj.bsky.social"
      },
      "photo": "/images/profile.png"
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: resumeapp-deployment
spec:
  replicas: 1 # Adjust the number of replicas as needed
  selector:
    matchLabels:
      app: resumeapp
  template:
    metadata:
      labels:
        app: resumeapp
    spec:
      containers:
      - name: resumeapp-container
        image: ghcr.io/idjohnson/resume:bfe57d840337a56b7920bdf2d723fc12ae6cfb09
        ports:
        - containerPort: 3030
        env:
        - name: PORT
          value: "3030" # Explicitly set the PORT environment variable
        volumeMounts:
        - name: resume-data-volume
          mountPath: /app/data_json # Mount path inside the container
      volumes:
      - name: resume-data-volume
        configMap:
          name: resume-data # Name of your ConfigMap
---
apiVersion: v1
kind: Service
metadata:
  name: resumeapp-service
spec:
  selector:
    app: resumeapp
  ports:
  - protocol: TCP
    port: 80 # External port
    targetPort: 3030 # Internal port exposed by the container
  type: ClusterIP
```

And, of course, I also needed to stop the Dockerfile from copying those files in at build time:

```dockerfile
FROM node:16-alpine
WORKDIR /app
COPY server.js package.json package-lock.json ./
COPY components ./components
COPY data ./data
COPY images ./images
COPY views ./views
# I'll make this dynamic next
#COPY data_json ./data_json

# Install dependencies *inside* the container
RUN npm install

# Copy the rest of your application code
COPY . .

# Expose the port your app listens on
EXPOSE 3030

# Define the command to run your app (as before)
CMD ["/bin/sh", "-c", "PORT=3030 npm start"]
```

Note that the trailing `COPY . .` still brings data_json into the image unless a .dockerignore excludes it; at runtime, the ConfigMap volume mounted at /app/data_json hides those baked-in copies either way.
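The user.js loader reads user.json once at startup, so ConfigMap edits only show up after a pod restart. Kubernetes does refresh files in a mounted ConfigMap volume in place (eventually, and not for subPath mounts), so re-reading with a short cache would let the app pick up changes without restarting. This is a hedged sketch; createJsonCache, the DATA_DIR variable and the 30-second TTL are hypothetical choices, not part of the app.

```javascript
const fs = require('fs');
const path = require('path');

// Return a loader that re-reads JSON files from dataDir at most once per ttlMs.
// `now` is injectable so the cache can be tested without waiting.
function createJsonCache(dataDir, ttlMs = 30000, now = Date.now) {
  const cache = new Map(); // file name -> { value, loadedAt }
  return function load(fileName) {
    const hit = cache.get(fileName);
    if (hit && now() - hit.loadedAt < ttlMs) return hit.value;
    try {
      const text = fs.readFileSync(path.join(dataDir, fileName), 'utf8');
      const value = JSON.parse(text);
      cache.set(fileName, { value, loadedAt: now() });
      return value;
    } catch (err) {
      // Keep serving the last good copy if a refresh fails (e.g. a bad edit).
      if (hit) return hit.value;
      throw err;
    }
  };
}
```

For example, `const load = createJsonCache(process.env.DATA_DIR || '/app/data_json');` at startup, then `load('experiences.json')` inside each request handler. Falling back to the last good copy means a typo in the ConfigMap degrades to stale data instead of a broken page.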

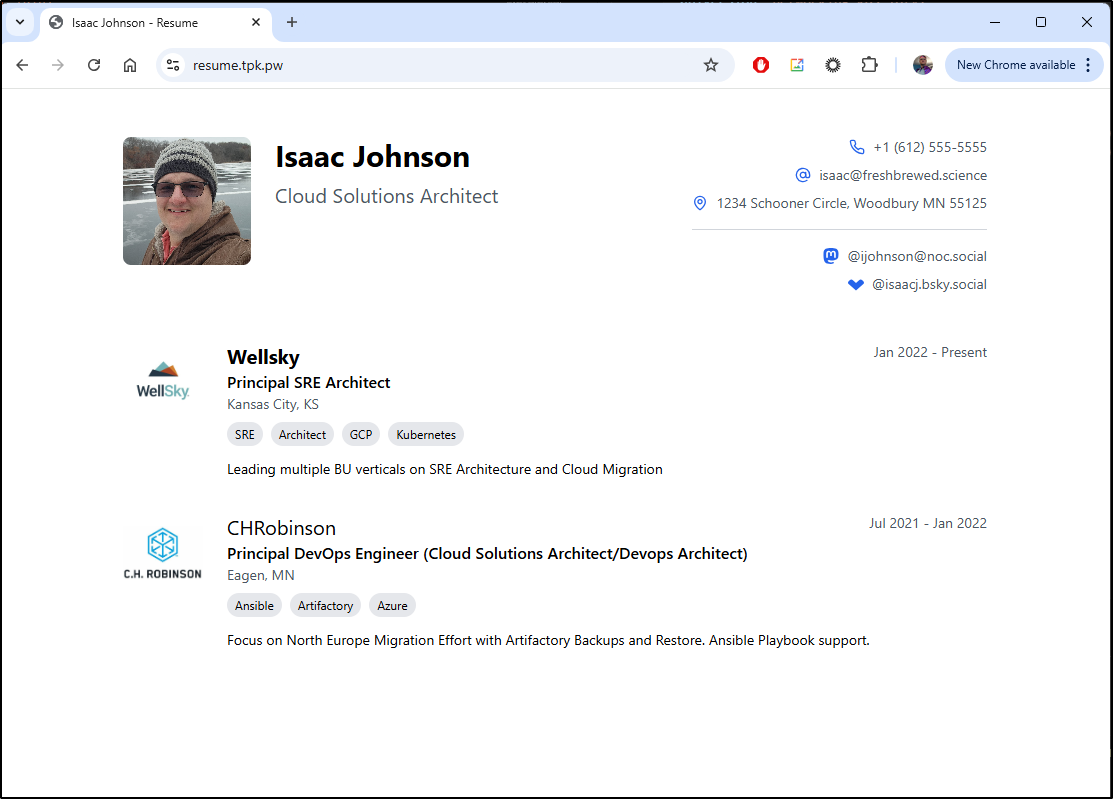

With some fixes, and with my experiences populated into the ConfigMap, I now have a fully working resume app, live at https://resume.tpk.pw/.

If I expose this for others as a next step and open-source it, I’ll note that “companyLogo” works with a URL just as well as with a local file, e.g.:

```json
{
  "startDate": "2019-09",
  "endDate": "2021-07",
  "enabled": "true",
  "title": "Principal Software Engineer (Azure DevOps)",
  "companyLogo": "https://www.eng.ufl.edu/nimet/wp-content/uploads/sites/52/2023/09/Medtronic-Careers.png",
  "companyName": "Medtronic",
  "categories": [
    "Azure",
    "SCM",
    "AWS",
    "AzureDevops",
    "Kubernetes"
  ],
  "location": "Mounds View, MN",
  "description": "Support Azure DevOps for all Carelink R2 (Cloud) activities with a focus on “right side” containerization, deployment, secrets propagation, builds for .NET and Java, user support.",
  "longDescription": "Support Azure DevOps for all Carelink R2 (Cloud) activities with a focus on “right side” containerization, deployment, secrets propagation, builds for .NET and Java, user support. Assist Architecture team on a variety of projects (Metrics, Dashboards). Work with other CRHF teams in their projects (Reports and Metrics for Security, Sonarqube for OneApp, builds for Rhythm). As team reduced to effectively one, sole supporter for all builds/build setup for CL R2 while liaised in with MLife Infrastructure team to support Infrastructure both on-prem and in the clouds (AWS, Azure)."
},
{
  "startDate": "2018-06",
  "endDate": "2019-09",
  "enabled": "true",
  "title": "Cloud Solutions Architect and Delivery Manager",
  "categories": [
    "Architect",
    "Azure",
    "Cloud",
    "SCM",
    "AWS"
  ],
  "companyLogo": "https://mms.businesswire.com/media/20200728005145/en/808429/23/AHEAD_logo.jpg",
  "companyName": "Ahead",
  "location": "Chicago, IL",
  "description": "Brought in as Cloud Solutions Architect with DevOps and AWS Experience to focus on Azure DevOps and Azure microservices implementation at Medtronic.",
  "longDescription": "Brought in as Cloud Solutions Architect with DevOps and AWS Experience to focus on Azure DevOps and Azure microservices implementation at Medtronic.<br/><br/>For first year focused on Medtronic CareLink future release including setting up Azure DevOps, TFS Migration, Kubernetes implementation and Terraform IaC support. Additionally, during this duration sought and got Azure Cloud (70-535) and Hashi Vault Intermediate certifications. <br/><br/>From May 2019 on, also served as Delivery Manager focusing on delivering automation solutions to Midwest region which included resource planning, contract write ups (SOW, WBS), and workshops. <br/><br/>This included an AKS Foundation implementation at Medline with AAD RBAC <br/><br/>and a similar AKS and DevOps workshop with the University of Minnesota Center for Farm Finance Management.<br/><br/>I’ve also lead an Azure Inno Days at Microsoft campus in the Twin Cities and been at the center of devops calls and scoping discussions with many other potential customers ranging from automotive to beauty supply companies.<br/><br/><br/><br/>"
},
```
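Both logo styles work because an img src accepts either a site-relative path or an absolute URL. If the data is ever consumed elsewhere (say, a JSON export), it helps to normalize the values. In this hypothetical sketch, resolveLogo resolves relative paths against the site's base URL using the standard URL class and rejects non-http(s) schemes; treating any "enabled" value other than false or "false" as enabled is an assumption about how that field is meant to work.

```javascript
// Hypothetical normalizer for experience entries like the ones above.

// Resolve a logo against the site's base URL; absolute http(s) URLs pass
// through unchanged, anything else (e.g. "javascript:") is rejected.
function resolveLogo(companyLogo, baseUrl) {
  if (!companyLogo) return null;
  const url = new URL(companyLogo, baseUrl);
  if (url.protocol !== 'http:' && url.protocol !== 'https:') return null;
  return url.href;
}

// "enabled" arrives as a string in the sample; missing means enabled.
function isEnabled(entry) {
  return !(entry.enabled === false || entry.enabled === 'false');
}

// Drop disabled entries and make every logo an absolute URL (or null).
function visibleExperiences(entries, baseUrl) {
  return entries
    .filter(isEnabled)
    .map((e) => ({ ...e, companyLogo: resolveLogo(e.companyLogo, baseUrl) }));
}
```

With a base of https://resume.tpk.pw/, "/images/wellsky.jpeg" becomes https://resume.tpk.pw/images/wellsky.jpeg, while the Ahead logo URL is returned as-is.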

Costs

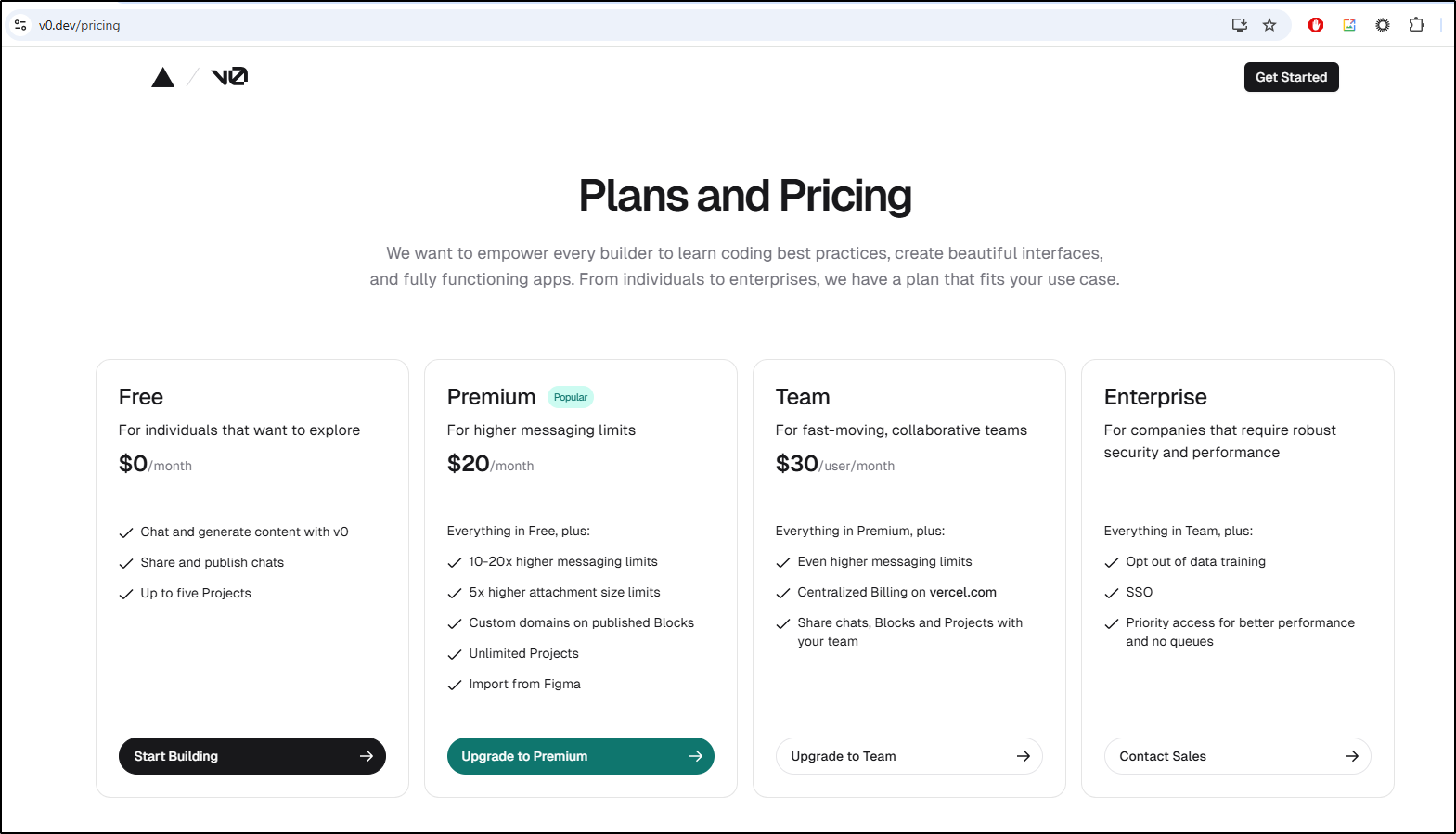

Let’s look at Vercel’s v0 and Google’s Gemini Code Assist in terms of cost.

v0

With v0 we now have a free plan that gives us a generous 5 projects. So far, I’m quite impressed with what I can get done within those limits.

The next level up, as shown above, is Premium at $20 a month. This kind of bums me out: I would be very open to something around $15 a month, but $20 somehow seems like a lot, considering I would pair it with other AI costs.

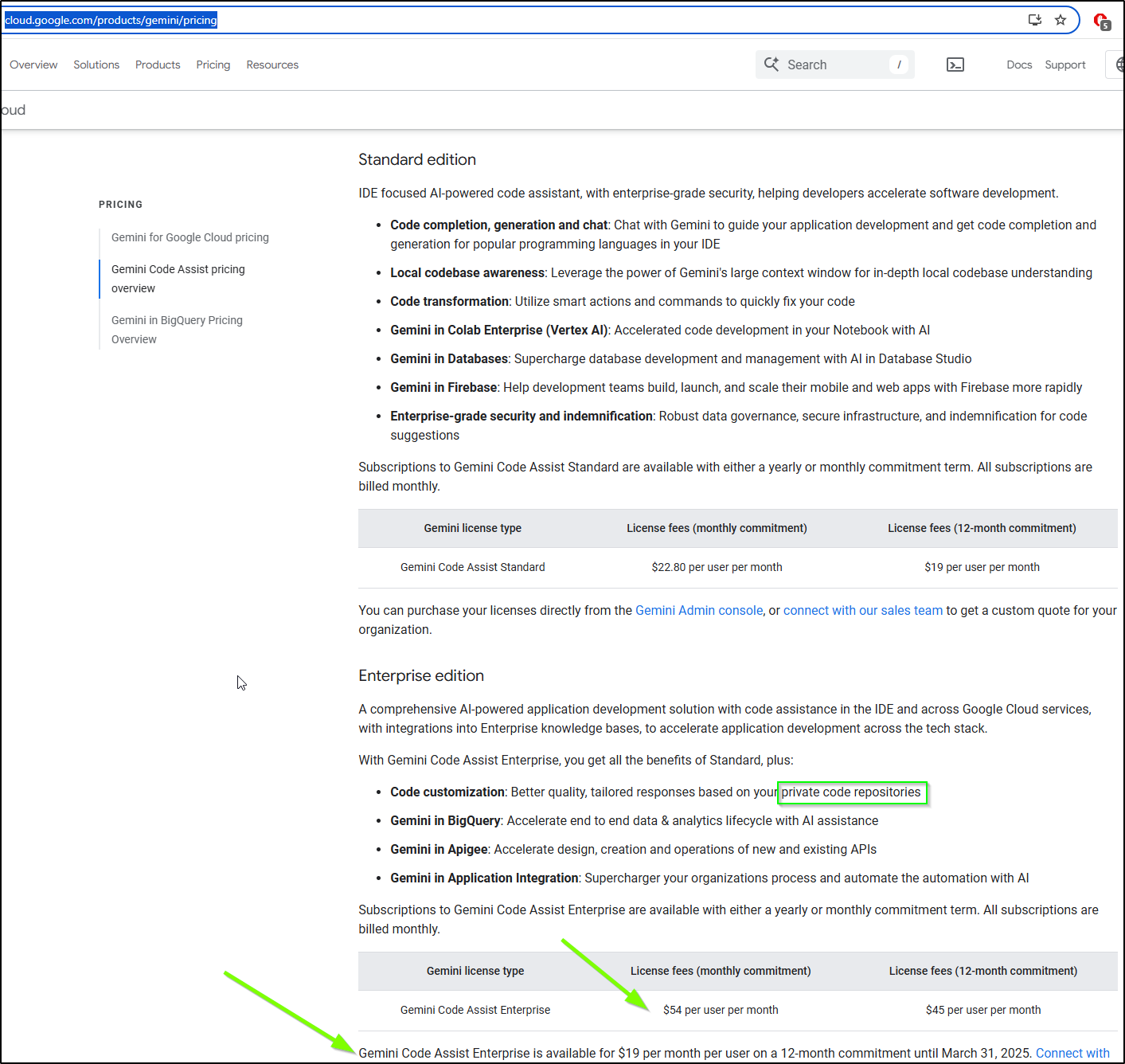

Gemini Code Assist

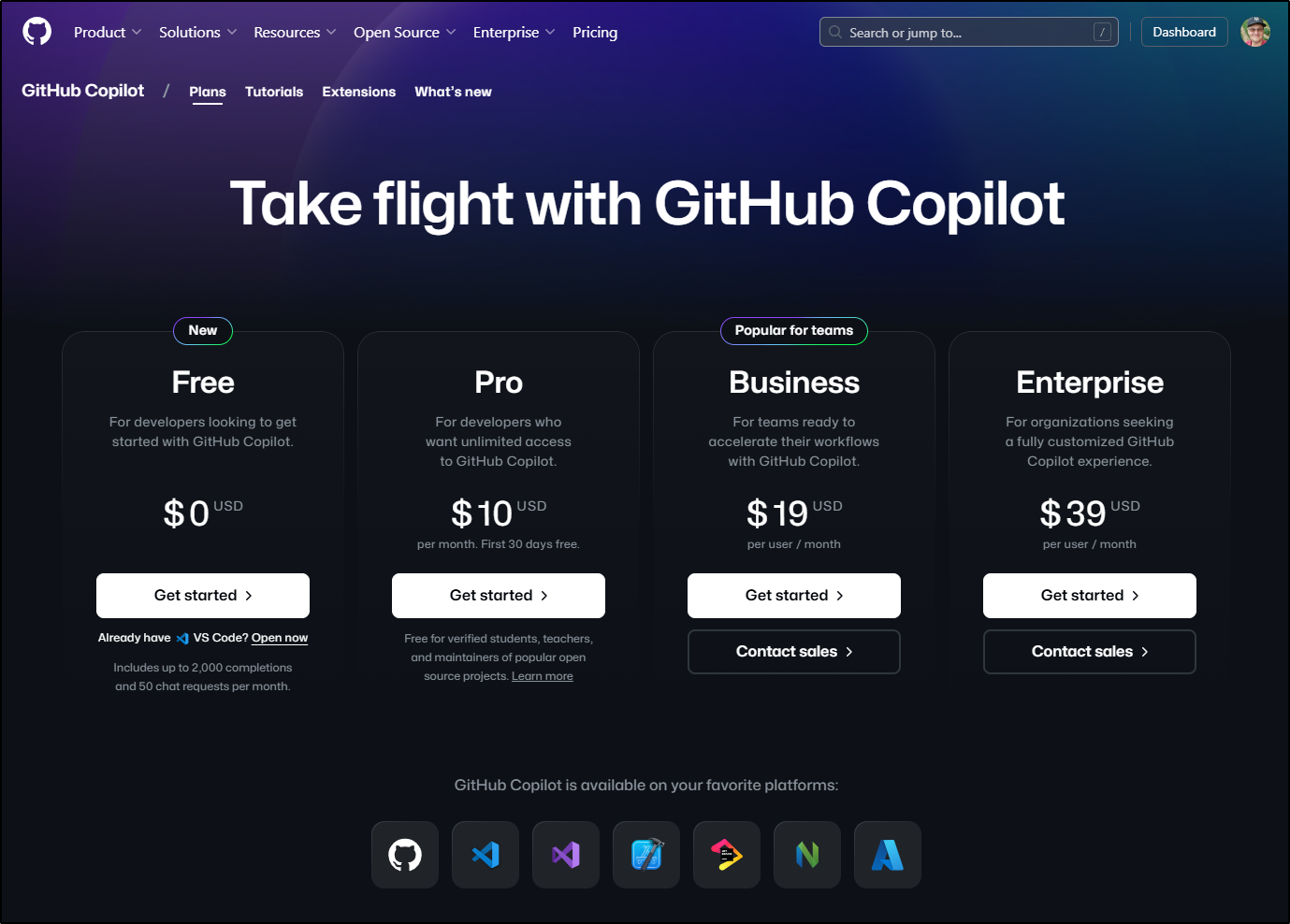

Gemini Code Assist was basically free for the longest time, and in side-by-side comparisons on most coding projects I found results very similar to Copilot’s.

However, that free period wraps up in the next month, after which it will cost between $19/mo and $54/mo (though there is a promo for the pro account if you’re willing to pay a year’s $230 up front).

I’m not really sure why it’s so expensive when the comparable GitHub Copilot is about half that, at $10/month.
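Annualizing the monthly list prices quoted in this section makes the comparison more concrete (prices as stated at the time of writing; they may change):

$$
\begin{aligned}
\text{v0 Premium:}\quad & 12 \times \$20 = \$240 \text{ per year}\\
\text{Gemini Code Assist:}\quad & 12 \times \$19 = \$228 \text{ to } 12 \times \$54 = \$648 \text{ per year}\\
\text{GitHub Copilot:}\quad & 12 \times \$10 = \$120 \text{ per year}\\
\text{v0 Premium + lowest Gemini tier:}\quad & \$240 + \$228 = \$468 \text{ per year}\\
\text{\$230 up-front promo:}\quad & \$230 / 12 \approx \$19.17 \text{ per month}
\end{aligned}
$$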

Oddly, there is still no updated pricing for Project IDX, which includes Gemini Code Assist, so one could always use it for free Gemini Code Assist access.

Summary

Today we took an idea (a resume app) and used Vercel’s v0 paired with Google Gemini Code Assist to work through a full solution, deployed to a local Kubernetes cluster and live at https://resume.tpk.pw/.

Apart from using Copilot to solve one challenging sed/jq substitution, I did all of the work and rework with Google’s Gemini Code Assist. I spent maybe 3 hours total building this out, which is pretty awesome.

I’m debating the cost of v0 even as I write this. Perhaps if they had a discounted pay-for-a-year-upfront option I could sell it to myself. So far the free tier is quite excellent and I highly recommend it.