Published: Nov 26, 2024 by Isaac Johnson

In our last post, we checked out OneDev, setting it up locally with its Helm chart and adding an HTTPS ingress with TLS.

Today, we will expand on that by setting up a proper HA database backend and configuring full CI/CD pipelines. We only glossed over artifacts last time, so let’s dig into those as well.

Managed HA Database

According to the chart values, we can use MySQL, MariaDB, PostgreSQL, or MSSQL.

My newer NAS runs MariaDB natively, so I’ll use that.

ijohnson@sirnasilot:~$ mysql -u root -p

Enter password:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 69293

Server version: 10.11.6-MariaDB Source distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE onedev CHARACTER SET 'utf8mb4' COLLATE 'utf8mb4_unicode_ci';

Query OK, 1 row affected (0.001 sec)

MariaDB [(none)]> create user 'onedev'@'%' identified by 'asdfasdsadf';

Query OK, 0 rows affected (1.049 sec)

MariaDB [(none)]> grant all privileges on onedev.* to 'onedev'@'%';

Query OK, 0 rows affected (0.085 sec)

MariaDB [(none)]> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> \q

Bye

I’m going to try an upgrade, but I don’t expect it to actually migrate the existing data over to the external database. My guess is this will reset the instance.

$ helm upgrade onedev --install -n onedev --create-namespace --set database.external=true --set database.type=mariadb --set database.host=192.168.1.116 --set database.port=3306 --set database.name=onedev --set database.user=onedev --set database.password=asdfasfasdf onedev/onedev

Release "onedev" has been upgraded. Happy Helming!

NAME: onedev

LAST DEPLOYED: Sat Nov 16 16:35:48 2024

NAMESPACE: onedev

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

CHART NAME: onedev

CHART VERSION: 11.5.2

APP VERSION: 11.5.2

###################################################################

#

# CAUTION: If you are upgrading from version <= 9.0.0, please make

# sure to follow https://docs.onedev.io/upgrade-guide/deploy-to-k8s

# to migrate your data

#

###################################################################

** Please be patient while the chart is being deployed **

Get the OneDev URL by running:

kubectl port-forward --namespace onedev svc/onedev 6610:80 &

URL: http://127.0.0.1:6610

The pod errored, but I’m not sure why:

$ kubectl logs onedev-0 -n onedev

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

INFO - Launching application from '/app'...

INFO - Starting application...

ERROR - Error booting application

com.google.inject.CreationException: Unable to create injector, see the following errors:

1) [Guice/MissingImplementation]: No implementation for Set<ServerConfigurator> was bound.

Requested by:

1 : DefaultJettyLauncher.<init>(DefaultJettyLauncher.java:52)

\_ for 3rd parameter

at CoreModule.configure(CoreModule.java:176)

\_ installed by: Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> CoreModule

Learn more:

https://github.com/google/guice/wiki/MISSING_IMPLEMENTATION

2) [Guice/MissingImplementation]: No implementation for Set<ProjectNameReservation> was bound.

Requested by:

1 : DefaultProjectManager.<init>(DefaultProjectManager.java:208)

\_ for 24th parameter

at CoreModule.configure(CoreModule.java:212)

\_ installed by: Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> CoreModule

Learn more:

https://github.com/google/guice/wiki/MISSING_IMPLEMENTATION

3) An exception was caught and reported. Message: For input string: "%!s(int64=3306)"

at Modules$OverrideModule.configure(Modules.java:236)

4) [Guice/MissingConstructor]: No injectable constructor for type ServerConfig.

class ServerConfig does not have a @Inject annotated constructor or a no-arg constructor.

Requested by:

1 : ServerConfig.class(ServerConfig.java:23)

at ProductModule.configure(ProductModule.java:23)

\_ installed by: Modules$OverrideModule -> Modules$OverrideModule -> ProductModule

Learn more:

https://github.com/google/guice/wiki/MISSING_CONSTRUCTOR

4 errors

======================

Full classname legend:

======================

CoreModule: "io.onedev.server.CoreModule"

DefaultJettyLauncher: "io.onedev.server.jetty.DefaultJettyLauncher"

DefaultProjectManager: "io.onedev.server.entitymanager.impl.DefaultProjectManager"

Modules$OverrideModule: "com.google.inject.util.Modules$OverrideModule"

ProductModule: "io.onedev.server.product.ProductModule"

ProjectNameReservation: "io.onedev.server.util.ProjectNameReservation"

ServerConfig: "io.onedev.server.ServerConfig"

ServerConfigurator: "io.onedev.server.jetty.ServerConfigurator"

========================

End of classname legend:

========================

at com.google.inject.internal.Errors.throwCreationExceptionIfErrorsExist(Errors.java:576)

at com.google.inject.internal.InternalInjectorCreator.initializeStatically(InternalInjectorCreator.java:163)

at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:110)

at com.google.inject.Guice.createInjector(Guice.java:87)

at com.google.inject.Guice.createInjector(Guice.java:69)

at com.google.inject.Guice.createInjector(Guice.java:59)

at io.onedev.commons.loader.AppLoader.start(AppLoader.java:52)

at io.onedev.commons.bootstrap.Bootstrap.main(Bootstrap.java:200)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.tanukisoftware.wrapper.WrapperSimpleApp.run(WrapperSimpleApp.java:349)

at java.base/java.lang.Thread.run(Thread.java:829)

Caused by: java.lang.NumberFormatException: For input string: "%!s(int64=3306)"

at java.base/java.lang.NumberFormatException.forInputString(NumberFormatException.java:65)

at java.base/java.lang.Integer.parseInt(Integer.java:638)

at java.base/java.lang.Integer.parseInt(Integer.java:770)

at io.onedev.server.ServerConfig.<init>(ServerConfig.java:98)

at io.onedev.server.product.ProductModule.configure(ProductModule.java:23)

at com.google.inject.AbstractModule.configure(AbstractModule.java:66)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:409)

at com.google.inject.spi.Elements.getElements(Elements.java:108)

at com.google.inject.util.Modules$OverrideModule.configure(Modules.java:236)

at com.google.inject.AbstractModule.configure(AbstractModule.java:66)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:409)

at com.google.inject.spi.Elements.getElements(Elements.java:108)

at com.google.inject.util.Modules$OverrideModule.configure(Modules.java:213)

at com.google.inject.AbstractModule.configure(AbstractModule.java:66)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:409)

at com.google.inject.spi.Elements.getElements(Elements.java:108)

at com.google.inject.internal.InjectorShell$Builder.build(InjectorShell.java:160)

at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:107)

... 11 common frames omitted

INFO - Stopping application...

<-- Wrapper Stopped

I deleted the pod and it came back up, but only briefly before erroring again:

$ kubectl delete pod onedev-0 -n onedev

pod "onedev-0" deleted

$ kubectl get pods -n onedev

NAME       READY   STATUS    RESTARTS   AGE
onedev-0   1/1     Running   0          5s

$ kubectl get pods -n onedev

NAME       READY   STATUS   RESTARTS      AGE
onedev-0   0/1     Error    1 (22s ago)   31s

I’m okay starting over at this point, so I’ll uninstall the release:

$ helm delete onedev -n onedev

release "onedev" uninstalled

Then I’ll delete the PVC manually:

$ kubectl get pvc -n onedev

NAME            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
data-onedev-0   Bound    pvc-a86b22bc-f10f-4faa-8dd5-28554eefd2c4   100Gi      RWO            local-path     8h

$ kubectl delete pvc data-onedev-0 -n onedev

persistentvolumeclaim "data-onedev-0" deleted

$ kubectl get pvc -n onedev

No resources found in onedev namespace.

Now to try fresh

$ helm upgrade onedev --install -n onedev --create-namespace --set database.external=true --set database.type=mariadb --set database.host=192.168.1.116 --set database.port=3306 --set database.name=onedev --set database.user=onedev --set database.password=OneDev_88432211 onedev/onedev

Release "onedev" does not exist. Installing it now.

NAME: onedev

LAST DEPLOYED: Sat Nov 16 16:43:26 2024

NAMESPACE: onedev

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: onedev

CHART VERSION: 11.5.2

APP VERSION: 11.5.2

###################################################################

#

# CAUTION: If you are upgrading from version <= 9.0.0, please make

# sure to follow https://docs.onedev.io/upgrade-guide/deploy-to-k8s

# to migrate your data

#

###################################################################

** Please be patient while the chart is being deployed **

Get the OneDev URL by running:

kubectl port-forward --namespace onedev svc/onedev 6610:80 &

URL: http://127.0.0.1:6610

Same error again. I’ll try with “mysql” instead of “mariadb”

$ helm upgrade onedev --install -n onedev --create-namespace --set database.external=true --set database.type=mysql --set database.host=192.168.1.116 --set database.port=3306 --set database.name=onedev --set database.user=onedev --set database.password=OneDev_88432211 onedev/onedev

Release "onedev" does not exist. Installing it now.

NAME: onedev

LAST DEPLOYED: Sat Nov 16 16:44:57 2024

NAMESPACE: onedev

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: onedev

CHART VERSION: 11.5.2

APP VERSION: 11.5.2

###################################################################

#

# CAUTION: If you are upgrading from version <= 9.0.0, please make

# sure to follow https://docs.onedev.io/upgrade-guide/deploy-to-k8s

# to migrate your data

#

###################################################################

** Please be patient while the chart is being deployed **

Get the OneDev URL by running:

kubectl port-forward --namespace onedev svc/onedev 6610:80 &

URL: http://127.0.0.1:6610

Still no luck.

Let’s try a PostgreSQL database instead.

builder@isaac-MacBookAir:~$ sudo su - postgres

postgres@isaac-MacBookAir:~$ psql

psql (14.13 (Ubuntu 14.13-0ubuntu0.22.04.1))

Type "help" for help.

postgres=# create user onedev with password 'one@dev1234555';

CREATE ROLE

postgres=# create database onedev;

CREATE DATABASE

postgres=# grant all privileges on database onedev to onedev;

GRANT

postgres=# \q

Now I’ll point the chart at PostgreSQL:

$ helm upgrade onedev --install -n onedev --create-namespace --set database.external=true --set database.type=postgresql --set database.host=192.168.1.78 --set database.port=5432 --set database.name=onedev --set database.user=onedev --set database.password=one@dev1234555 onedev/onedev

Release "onedev" does not exist. Installing it now.

NAME: onedev

LAST DEPLOYED: Sat Nov 16 16:54:01 2024

NAMESPACE: onedev

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: onedev

CHART VERSION: 11.5.2

APP VERSION: 11.5.2

###################################################################

#

# CAUTION: If you are upgrading from version <= 9.0.0, please make

# sure to follow https://docs.onedev.io/upgrade-guide/deploy-to-k8s

# to migrate your data

#

###################################################################

** Please be patient while the chart is being deployed **

Get the OneDev URL by running:

kubectl port-forward --namespace onedev svc/onedev 6610:80 &

URL: http://127.0.0.1:6610

But that, too, ends up in a crash loop:

builder@DESKTOP-QADGF36:~$ kubectl logs onedev-0 -n onedev

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

INFO - Launching application from '/app'...

INFO - Starting application...

ERROR - Error booting application

com.google.inject.CreationException: Unable to create injector, see the following errors:

1) [Guice/MissingImplementation]: No implementation for Set<ServerConfigurator> was bound.

Requested by:

1 : DefaultJettyLauncher.<init>(DefaultJettyLauncher.java:52)

\_ for 3rd parameter

at CoreModule.configure(CoreModule.java:176)

\_ installed by: Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> CoreModule

Learn more:

https://github.com/google/guice/wiki/MISSING_IMPLEMENTATION

2) [Guice/MissingImplementation]: No implementation for Set<ProjectNameReservation> was bound.

Requested by:

1 : DefaultProjectManager.<init>(DefaultProjectManager.java:208)

\_ for 24th parameter

at CoreModule.configure(CoreModule.java:212)

\_ installed by: Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> Modules$OverrideModule -> CoreModule

Learn more:

https://github.com/google/guice/wiki/MISSING_IMPLEMENTATION

3) An exception was caught and reported. Message: For input string: "%!s(int64=5432)"

at Modules$OverrideModule.configure(Modules.java:236)

4) [Guice/MissingConstructor]: No injectable constructor for type ServerConfig.

class ServerConfig does not have a @Inject annotated constructor or a no-arg constructor.

Requested by:

1 : ServerConfig.class(ServerConfig.java:23)

at ProductModule.configure(ProductModule.java:23)

\_ installed by: Modules$OverrideModule -> Modules$OverrideModule -> ProductModule

Learn more:

https://github.com/google/guice/wiki/MISSING_CONSTRUCTOR

4 errors

======================

Full classname legend:

======================

CoreModule: "io.onedev.server.CoreModule"

DefaultJettyLauncher: "io.onedev.server.jetty.DefaultJettyLauncher"

DefaultProjectManager: "io.onedev.server.entitymanager.impl.DefaultProjectManager"

Modules$OverrideModule: "com.google.inject.util.Modules$OverrideModule"

ProductModule: "io.onedev.server.product.ProductModule"

ProjectNameReservation: "io.onedev.server.util.ProjectNameReservation"

ServerConfig: "io.onedev.server.ServerConfig"

ServerConfigurator: "io.onedev.server.jetty.ServerConfigurator"

========================

End of classname legend:

========================

at com.google.inject.internal.Errors.throwCreationExceptionIfErrorsExist(Errors.java:576)

at com.google.inject.internal.InternalInjectorCreator.initializeStatically(InternalInjectorCreator.java:163)

at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:110)

at com.google.inject.Guice.createInjector(Guice.java:87)

at com.google.inject.Guice.createInjector(Guice.java:69)

at com.google.inject.Guice.createInjector(Guice.java:59)

at io.onedev.commons.loader.AppLoader.start(AppLoader.java:52)

at io.onedev.commons.bootstrap.Bootstrap.main(Bootstrap.java:200)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.tanukisoftware.wrapper.WrapperSimpleApp.run(WrapperSimpleApp.java:349)

at java.base/java.lang.Thread.run(Thread.java:829)

Caused by: java.lang.NumberFormatException: For input string: "%!s(int64=5432)"

at java.base/java.lang.NumberFormatException.forInputString(NumberFormatException.java:65)

at java.base/java.lang.Integer.parseInt(Integer.java:638)

at java.base/java.lang.Integer.parseInt(Integer.java:770)

at io.onedev.server.ServerConfig.<init>(ServerConfig.java:118)

at io.onedev.server.product.ProductModule.configure(ProductModule.java:23)

at com.google.inject.AbstractModule.configure(AbstractModule.java:66)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:409)

at com.google.inject.spi.Elements.getElements(Elements.java:108)

at com.google.inject.util.Modules$OverrideModule.configure(Modules.java:236)

at com.google.inject.AbstractModule.configure(AbstractModule.java:66)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:409)

at com.google.inject.spi.Elements.getElements(Elements.java:108)

at com.google.inject.util.Modules$OverrideModule.configure(Modules.java:213)

at com.google.inject.AbstractModule.configure(AbstractModule.java:66)

at com.google.inject.spi.Elements$RecordingBinder.install(Elements.java:409)

at com.google.inject.spi.Elements.getElements(Elements.java:108)

at com.google.inject.internal.InjectorShell$Builder.build(InjectorShell.java:160)

at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:107)

... 11 common frames omitted

INFO - Stopping application...

<-- Wrapper Stopped

builder@DESKTOP-QADGF36:~$ kubectl get pods -n onedev

NAME       READY   STATUS             RESTARTS      AGE
onedev-0   0/1     CrashLoopBackOff   4 (37s ago)   2m56s

I’m stumped. I use this database for other K8s workloads, so it’s not pg_hba.conf or anything like that.

I tested the connection from my workstation, and it’s fine:

builder@DESKTOP-QADGF36:~$ psql -h 192.168.1.78 -U onedev

Password for user onedev:

psql (12.20 (Ubuntu 12.20-0ubuntu0.20.04.1), server 14.13 (Ubuntu 14.13-0ubuntu0.22.04.1))

WARNING: psql major version 12, server major version 14.

Some psql features might not work.

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, bits: 256, compression: off)

Type "help" for help.

onedev=> \dt;

Did not find any relations.

onedev=>

I’m going to make one last attempt, this time deleting the namespace and starting with a unique release name and namespace (in case there are leftover local-path files):

$ kubectl delete pvc data-onedev-0 -n onedev

persistentvolumeclaim "data-onedev-0" deleted

$ kubectl delete ns onedev

namespace "onedev" deleted

$ helm install onedevprd -n onedevprd --create-namespace --set database.external=true --set database.type=postgresql --set database.host=192.168.1.78 --set database.port=5432 --set database.name=onedev --set database.user=onedev --set database.password=one@dev1234555 onedev/onedev

NAME: onedevprd

LAST DEPLOYED: Sat Nov 16 17:01:10 2024

NAMESPACE: onedevprd

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: onedev

CHART VERSION: 11.5.2

APP VERSION: 11.5.2

###################################################################

#

# CAUTION: If you are upgrading from version <= 9.0.0, please make

# sure to follow https://docs.onedev.io/upgrade-guide/deploy-to-k8s

# to migrate your data

#

###################################################################

** Please be patient while the chart is being deployed **

Get the OneDev URL by running:

kubectl port-forward --namespace onedevprd svc/onedevprd 6610:80 &

URL: http://127.0.0.1:6610

I’m going to try the Multiple Cluster option. It requires a license, but it uses a different database syntax:
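Here the connection is not given as the chart’s `database.*` values. Instead, it is passed as Hibernate settings in environment variables on the `docker run` command.

To make the mapping between the two explicit, here is a small Go helper. The `DBSettings` type and `hibernateEnv` function are hypothetical; they are mine, not OneDev’s. The helper builds the PostgreSQL variables from the same host, port, database, user and password used in the Helm attempts.

The dialect and driver class strings are copied from the command in this post. Other database types would need different values, which I have not verified.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// DBSettings mirrors the database.* values passed to the Helm chart earlier
// (hypothetical type, for illustration only).
type DBSettings struct {
	Host     string
	Port     int
	Name     string
	User     string
	Password string
}

// hibernateEnv returns the PostgreSQL hibernate_* variables in the same
// form as the docker run command in this post.
func hibernateEnv(db DBSettings) []string {
	jdbcURL := "jdbc:postgresql://" + net.JoinHostPort(db.Host, strconv.Itoa(db.Port)) + "/" + db.Name
	return []string{
		"hibernate_dialect=io.onedev.server.persistence.PostgreSQLDialect",
		"hibernate_connection_driver_class=org.postgresql.Driver",
		"hibernate_connection_url=" + jdbcURL,
		"hibernate_connection_username=" + db.User,
		"hibernate_connection_password=" + db.Password,
	}
}

func main() {
	db := DBSettings{Host: "192.168.1.78", Port: 5432, Name: "onedev", User: "onedev", Password: "one@dev1234555"}
	// Print each variable as a docker run "-e" flag with a line continuation.
	for _, kv := range hibernateEnv(db) {
		fmt.Printf("-e %s \\\n", kv)
	}
}
```

Building the JDBC URL from the same fields keeps the host, port and database name in one place instead of repeating them by hand.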

builder@builder-T100:~$ docker run -h builder-T100 -it --rm -v $(pwd)/onedev:/opt/onedev -v /var/run/docker.sock:/var/run/docker.sock -e hibernate_dialect=io.onedev.server.persistence.PostgreSQLDialect -e hibernate_connection_driver_class=org.postgresql.Driver -e hibernate_connection_url=jdbc:postgresql://192.168.1.78:5432/onedev -e hibernate_connection_username=onedev -e hibernate_connection_password=one@dev1234555 -e cluster_ip=192.168.1.100 -p 6610:6610 -p 6611:6611 -p 5710:5710 1dev/server

Unable to find image '1dev/server:latest' locally

latest: Pulling from 1dev/server

ff65ddf9395b: Already exists

6e8b471a48b7: Pull complete

613cee5b4025: Pull complete

8f5c9b4e2d9d: Pull complete

f65a5d829bbe: Pull complete

e29167235ec0: Pull complete

9142d3ec8237: Pull complete

2644f6e4afcf: Pull complete

Digest: sha256:e37c8bf9902cb643bbb2f6bda698290204f8297149d49f8630b749c63fa89aee

Status: Downloaded newer image for 1dev/server:latest

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

INFO - Launching application from '/app'...

INFO - Starting application...

INFO - Successfully checked /opt/onedev

INFO - Stopping application...

<-- Wrapper Stopped

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

12:07:42 INFO i.onedev.commons.bootstrap.Bootstrap - Launching application from '/opt/onedev'...

12:07:42 INFO i.onedev.commons.bootstrap.Bootstrap - Cleaning temp directory...

12:07:44 INFO io.onedev.commons.loader.AppLoader - Starting application...

That seemed to go nowhere, so I tried on a larger host. (Note: coming back, I found it had crashed the Docker host.)

builder@isaac-MacBookAir:~$ sudo docker run -h isaac-MacBookAir -d -v /home/builder/onedev:/opt/onedev -v /var/run/docker.sock:/var/run/docker.sock -e hibernate_dialect=io.onedev.server.persistence.PostgreSQLDialect -e hibernate_connection_driver_class=org.postgresql.Driver -e hibernate_connection_url=jdbc:postgresql://192.168.1.78:5432/onedev -e hibernate_connection_username=onedev -e hibernate_connection_password=one@dev1234555 -e cluster_ip=192.168.1.100 -p 6610:6610 -p 6611:6611 -p 5710:5710 1dev/server

Unable to find image '1dev/server:latest' locally

latest: Pulling from 1dev/server

ff65ddf9395b: Pull complete

6e8b471a48b7: Pull complete

613cee5b4025: Pull complete

8f5c9b4e2d9d: Pull complete

f65a5d829bbe: Pull complete

e29167235ec0: Pull complete

9142d3ec8237: Pull complete

2644f6e4afcf: Pull complete

Digest: sha256:e37c8bf9902cb643bbb2f6bda698290204f8297149d49f8630b749c63fa89aee

Status: Downloaded newer image for 1dev/server:latest

42c42805716d415c13b9827007526d60cbce125990d41447c644739626607263

That seemed to be going better, but the logs then suggested the Docker network was blocked from reaching Postgres:

builder@isaac-MacBookAir:~$ sudo docker logs unruffled_blackburn

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

INFO - Launching application from '/app'...

INFO - Starting application...

INFO - Populating /opt/onedev...

INFO - Successfully populated /opt/onedev

INFO - Stopping application...

<-- Wrapper Stopped

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

12:15:06 INFO i.onedev.commons.bootstrap.Bootstrap - Launching application from '/opt/onedev'...

12:15:07 INFO io.onedev.commons.loader.AppLoader - Starting application...

12:15:12 ERROR i.onedev.commons.bootstrap.Bootstrap - Error booting application

java.lang.RuntimeException: org.postgresql.util.PSQLException: FATAL: no pg_hba.conf entry for host "172.17.0.2", user "onedev", database "onedev", SSL encryption

at io.onedev.commons.bootstrap.Bootstrap.unchecked(Bootstrap.java:319)

at io.onedev.commons.utils.ExceptionUtils.unchecked(ExceptionUtils.java:31)

at io.onedev.server.persistence.PersistenceUtils.openConnection(PersistenceUtils.java:38)

at io.onedev.server.data.DefaultDataManager.openConnection(DefaultDataManager.java:241)

at io.onedev.server.ee.clustering.DefaultClusterManager.start(DefaultClusterManager.java:102)

at io.onedev.server.OneDev.start(OneDev.java:140)

at io.onedev.commons.loader.DefaultPluginManager.start(DefaultPluginManager.java:44)

at io.onedev.commons.loader.AppLoader.start(AppLoader.java:60)

at io.onedev.commons.bootstrap.Bootstrap.main(Bootstrap.java:200)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.tanukisoftware.wrapper.WrapperSimpleApp.run(WrapperSimpleApp.java:349)

at java.base/java.lang.Thread.run(Thread.java:829)

Caused by: org.postgresql.util.PSQLException: FATAL: no pg_hba.conf entry for host "172.17.0.2", user "onedev", database "onedev", SSL encryption

at org.postgresql.core.v3.ConnectionFactoryImpl.doAuthentication(ConnectionFactoryImpl.java:698)

at org.postgresql.core.v3.ConnectionFactoryImpl.tryConnect(ConnectionFactoryImpl.java:207)

at org.postgresql.core.v3.ConnectionFactoryImpl.openConnectionImpl(ConnectionFactoryImpl.java:262)

at org.postgresql.core.ConnectionFactory.openConnection(ConnectionFactory.java:54)

at org.postgresql.jdbc.PgConnection.<init>(PgConnection.java:273)

at org.postgresql.Driver.makeConnection(Driver.java:446)

at org.postgresql.Driver.connect(Driver.java:298)

at io.onedev.server.persistence.PersistenceUtils.openConnection(PersistenceUtils.java:36)

... 12 common frames omitted

Suppressed: org.postgresql.util.PSQLException: FATAL: no pg_hba.conf entry for host "172.17.0.2", user "onedev", database "onedev", no encryption

at org.postgresql.core.v3.ConnectionFactoryImpl.doAuthentication(ConnectionFactoryImpl.java:698)

at org.postgresql.core.v3.ConnectionFactoryImpl.tryConnect(ConnectionFactoryImpl.java:207)

at org.postgresql.core.v3.ConnectionFactoryImpl.openConnectionImpl(ConnectionFactoryImpl.java:271)

... 17 common frames omitted

12:15:12 INFO io.onedev.commons.loader.AppLoader - Stopping application...

<-- Wrapper Stopped

I updated pg_hba.conf:

$ sudo cat /var/lib/postgresql/14/main/pg_hba.conf

hostssl onedev onedev 172.17.0.1/24 md5

host onedev onedev 172.17.0.1/24 md5
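First, a quick sanity check on the range itself. PostgreSQL compares the client address against each entry with the mask applied, so `172.17.0.1/24` covers 172.17.0.0 through 172.17.0.255, and the container's `172.17.0.2` should match. The sketch below confirms that with Python's standard `ipaddress` module; the helper name is hypothetical. The error text ("no pg_hba.conf entry") means no line matched at all. Since the range looks fine, a likelier suspect is the file location, though this is an assumption and is not verified in this post. On Ubuntu's packaged PostgreSQL, the active file is normally `/etc/postgresql/14/main/pg_hba.conf` rather than a copy in the data directory, and running `SHOW hba_file;` in psql shows which file the server actually reads.

```python
import ipaddress


# Hypothetical helper: does a client address fall inside a pg_hba.conf address range?
def in_hba_range(client_ip: str, hba_cidr: str) -> bool:
    # strict=False accepts host bits in the address ("172.17.0.1/24") and masks
    # them off, giving 172.17.0.0/24, which is how the range is compared.
    network = ipaddress.ip_network(hba_cidr, strict=False)
    return ipaddress.ip_address(client_ip) in network


if __name__ == "__main__":
    # Client address from the error message, range from the pg_hba.conf lines above.
    print(in_hba_range("172.17.0.2", "172.17.0.1/24"))     # True
    print(in_hba_range("192.168.1.100", "172.17.0.1/24"))  # False
```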

Then I reloaded the configuration:

postgres@isaac-MacBookAir:~$ psql

psql (14.13 (Ubuntu 14.13-0ubuntu0.22.04.1))

Type "help" for help.

postgres=# SELECT pg_reload_conf();

pg_reload_conf

----------------

t

(1 row)

postgres=# \q

postgres@isaac-MacBookAir:~$ exit

logout

## Restored

And tried again

builder@isaac-MacBookAir:~$ sudo docker stop unruffled_blackburn

unruffled_blackburn

builder@isaac-MacBookAir:~$ sudo docker start unruffled_blackburn

unruffled_blackburn

I still saw the error, so I’ll delete the container and retry:

builder@isaac-MacBookAir:~$ sudo docker stop unruffled_blackburn

unruffled_blackburn

builder@isaac-MacBookAir:~$ sudo docker rm unruffled_blackburn

unruffled_blackburn

builder@isaac-MacBookAir:~$ sudo docker run -h isaac-MacBookAir -d -v /home/builder/onedev:/opt/onedev -v /var/run/docker.sock:/var/run/docker.sock -e hibernate_dialect=io.onedev.server.persistence.PostgreSQLDialect -e hibernate_connection_driver_class=org.postgresql.Driver -e hibernate_connection_url=jdbc:postgresql://192.168.1.78:5432/onedev -e hibernate_connection_username=onedev -e hibernate_connection_password=one@dev1234555 -e cluster_ip=192.168.1.100 -p 6610:6610 -p 6611:6611 -p 5710:5710 1dev/server

ec0964b8c3f4e53eb03412cc3d65e63255f872f14b8d223dad01ea1a30389bc6

But still no go

builder@isaac-MacBookAir:~$ sudo docker logs jovial_jennings

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

INFO - Launching application from '/app'...

INFO - Starting application...

INFO - Successfully checked /opt/onedev

INFO - Stopping application...

<-- Wrapper Stopped

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

12:26:19 INFO i.onedev.commons.bootstrap.Bootstrap - Launching application from '/opt/onedev'...

12:26:19 INFO i.onedev.commons.bootstrap.Bootstrap - Cleaning temp directory...

12:26:19 INFO io.onedev.commons.loader.AppLoader - Starting application...

12:26:25 ERROR i.onedev.commons.bootstrap.Bootstrap - Error booting application

java.lang.RuntimeException: org.postgresql.util.PSQLException: FATAL: no pg_hba.conf entry for host "172.17.0.2", user "onedev", database "onedev", SSL encryption

at io.onedev.commons.bootstrap.Bootstrap.unchecked(Bootstrap.java:319)

at io.onedev.commons.utils.ExceptionUtils.unchecked(ExceptionUtils.java:31)

at io.onedev.server.persistence.PersistenceUtils.openConnection(PersistenceUtils.java:38)

at io.onedev.server.data.DefaultDataManager.openConnection(DefaultDataManager.java:241)

at io.onedev.server.ee.clustering.DefaultClusterManager.start(DefaultClusterManager.java:102)

at io.onedev.server.OneDev.start(OneDev.java:140)

at io.onedev.commons.loader.DefaultPluginManager.start(DefaultPluginManager.java:44)

at io.onedev.commons.loader.AppLoader.start(AppLoader.java:60)

at io.onedev.commons.bootstrap.Bootstrap.main(Bootstrap.java:200)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.tanukisoftware.wrapper.WrapperSimpleApp.run(WrapperSimpleApp.java:349)

at java.base/java.lang.Thread.run(Thread.java:829)

Caused by: org.postgresql.util.PSQLException: FATAL: no pg_hba.conf entry for host "172.17.0.2", user "onedev", database "onedev", SSL encryption

at org.postgresql.core.v3.ConnectionFactoryImpl.doAuthentication(ConnectionFactoryImpl.java:698)

at org.postgresql.core.v3.ConnectionFactoryImpl.tryConnect(ConnectionFactoryImpl.java:207)

at org.postgresql.core.v3.ConnectionFactoryImpl.openConnectionImpl(ConnectionFactoryImpl.java:262)

at org.postgresql.core.ConnectionFactory.openConnection(ConnectionFactory.java:54)

at org.postgresql.jdbc.PgConnection.<init>(PgConnection.java:273)

at org.postgresql.Driver.makeConnection(Driver.java:446)

at org.postgresql.Driver.connect(Driver.java:298)

at io.onedev.server.persistence.PersistenceUtils.openConnection(PersistenceUtils.java:36)

... 12 common frames omitted

Suppressed: org.postgresql.util.PSQLException: FATAL: no pg_hba.conf entry for host "172.17.0.2", user "onedev", database "onedev", no encryption

at org.postgresql.core.v3.ConnectionFactoryImpl.doAuthentication(ConnectionFactoryImpl.java:698)

at org.postgresql.core.v3.ConnectionFactoryImpl.tryConnect(ConnectionFactoryImpl.java:207)

at org.postgresql.core.v3.ConnectionFactoryImpl.openConnectionImpl(ConnectionFactoryImpl.java:271)

... 17 common frames omitted

12:26:25 INFO io.onedev.commons.loader.AppLoader - Stopping application...

<-- Wrapper Stopped

Alerts

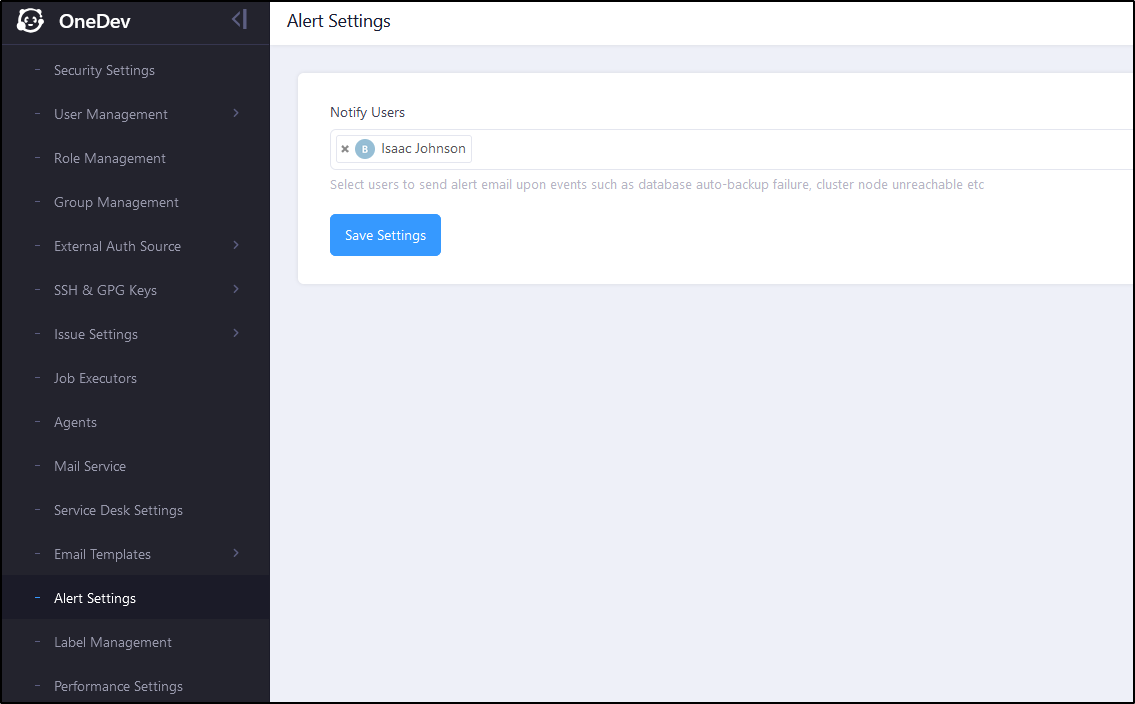

At the very least, I can ensure I get alerted if there are systemic failures.

I added a user to notify (after re-entering the SendGrid SMTP settings).

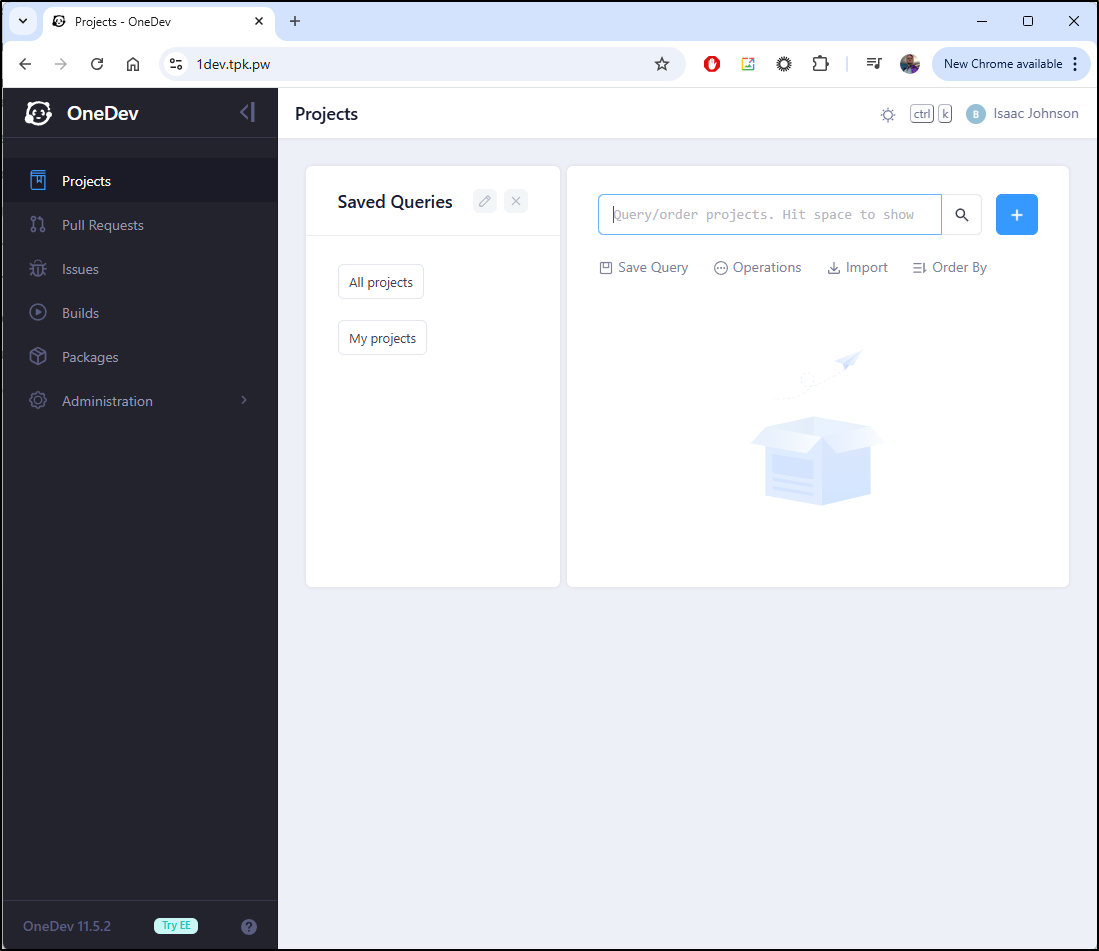

Restoring

I’ll just assume something is busted since I’ve tried everything I can think of at this point.

I’ll restore the self-contained system and see if it comes back up

$ helm install onedev -n onedev --create-namespace onedev/onedev

NAME: onedev

LAST DEPLOYED: Sat Nov 16 17:05:44 2024

NAMESPACE: onedev

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: onedev

CHART VERSION: 11.5.2

APP VERSION: 11.5.2

###################################################################

#

# CAUTION: If you are upgrading from version <= 9.0.0, please make

# sure to follow https://docs.onedev.io/upgrade-guide/deploy-to-k8s

# to migrate your data

#

###################################################################

** Please be patient while the chart is being deployed **

Get the OneDev URL by running:

kubectl port-forward --namespace onedev svc/onedev 6610:80 &

URL: http://127.0.0.1:6610

Indeed it came up without issue. I’ll also add back the ingress

$ kubectl get pods -n onedev

NAME READY STATUS RESTARTS AGE

onedev-0 1/1 Running 0 27s

$ kubectl apply -f 1dev.ingress.yaml

ingress.networking.k8s.io/1devingress created

I had to set it up again, but it did come back.

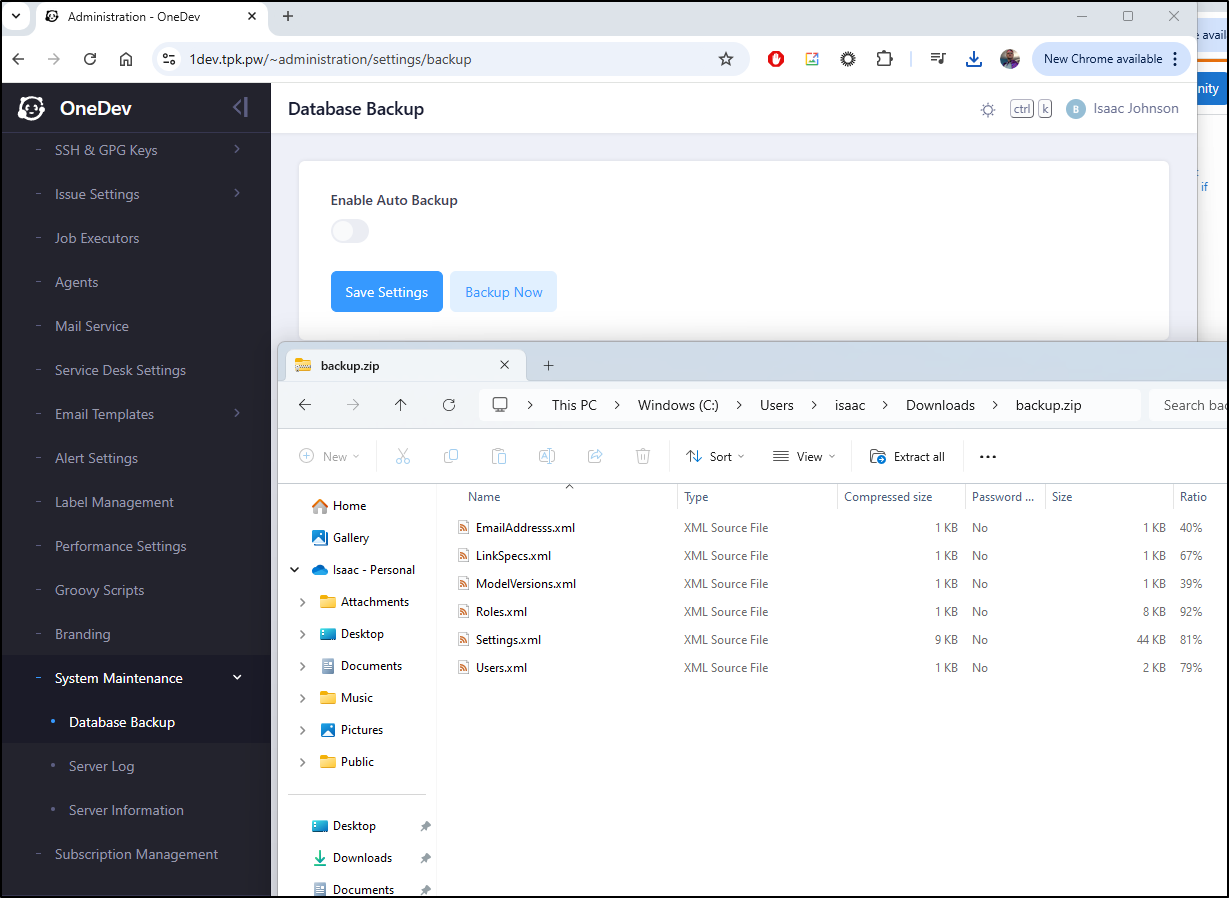

Now, OneDev does have database backups, but they seem to be more of a flat-file export.

To use a backup, we would have to transfer it to the pod and restore it using the command line:

builder@DESKTOP-QADGF36:~$ kubectl cp /mnt/c/Users/isaac/Downloads/backup.zip onedev-0:/opt/onedev/bin -n onedev

builder@DESKTOP-QADGF36:~$ kubectl exec -it onedev-0 -n onedev -- /bin/bash

root@onedev-0:/# cd /opt/onedev/bin

root@onedev-0:/opt/onedev/bin# ./restore-db.sh /opt/onedev/bin/backup.zip

Running OneDev Restore Database...

--> Wrapper Started as Console

Java Service Wrapper Standard Edition 64-bit 3.5.51

Copyright (C) 1999-2022 Tanuki Software, Ltd. All Rights Reserved.

http://wrapper.tanukisoftware.com

Licensed to OneDev for Service Wrapping

Launching a JVM...

WrapperManager: Initializing...

INFO - Launching application from '/opt/onedev'...

INFO - Starting application...

INFO - Restoring database from /opt/onedev/bin/backup.zip...

INFO - Waiting for server to stop...

INFO - Cleaning database...

INFO - Creating tables...

INFO - Importing data into database...

INFO - Importing from data file 'ModelVersions.xml'...

INFO - Importing from data file 'Roles.xml'...

INFO - Importing from data file 'Users.xml'...

INFO - Importing from data file 'LinkSpecs.xml'...

INFO - Importing from data file 'EmailAddresss.xml'...

INFO - Importing from data file 'Settings.xml'...

INFO - Applying foreign key constraints...

INFO - Database is successfully restored from /opt/onedev/bin/backup.zip

INFO - Stopping application...

<-- Wrapper Stopped
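The restore log shows that a backup is a zip of per-entity XML data files (ModelVersions.xml, Users.xml, Settings.xml, and so on), so it can be worth a quick look inside one before copying it into the pod. Below is a minimal sketch using Python's standard `zipfile` module. The function names are hypothetical. Treating ModelVersions.xml as the marker of a OneDev backup is an assumption based only on that log.

```python
import sys
import zipfile
from typing import List


def list_backup_xml(path: str) -> List[str]:
    """Return the XML data file names inside a backup zip, sorted."""
    with zipfile.ZipFile(path) as zf:
        return sorted(n for n in zf.namelist() if n.endswith(".xml"))


def looks_like_onedev_backup(names: List[str]) -> bool:
    # Assumption: the restore log imports ModelVersions.xml first, so treat it as a marker.
    return any(n.rsplit("/", 1)[-1] == "ModelVersions.xml" for n in names)


if __name__ == "__main__" and len(sys.argv) > 1:
    entries = list_backup_xml(sys.argv[1])
    for name in entries:
        print(name)
    print("looks like a OneDev backup:", looks_like_onedev_backup(entries))
```

Saved as a script (the name is up to you), it takes the zip path as its only argument, for example the `backup.zip` downloaded above.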

This briefly took the service down while it restarted internally, but it did come back and I could log back in.

From a DR perspective, this suffices. Ideally, I would use an external database, but if I took periodic backups I would be okay with it.
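If periodic backups are the DR plan, old ones will pile up. Here is a minimal retention sketch. It assumes the backup zips are collected in a single directory. The directory, the `*.zip` pattern, and the keep count are all hypothetical, and files are ordered by modification time.

```python
from pathlib import Path
from typing import List


def prune_backups(backup_dir: str, keep: int = 7, pattern: str = "*.zip") -> List[Path]:
    """Delete all but the `keep` newest matching files; return what was deleted."""
    if keep < 0:
        raise ValueError("keep must be >= 0")
    # Newest first, by modification time.
    files = sorted(Path(backup_dir).glob(pattern),
                   key=lambda p: p.stat().st_mtime, reverse=True)
    doomed = files[keep:]
    for path in doomed:
        path.unlink()
    return doomed
```

Called from a daily cron job, something like `prune_backups("/home/builder/onedev-backups", keep=14)` (hypothetical path) keeps two weeks of copies.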

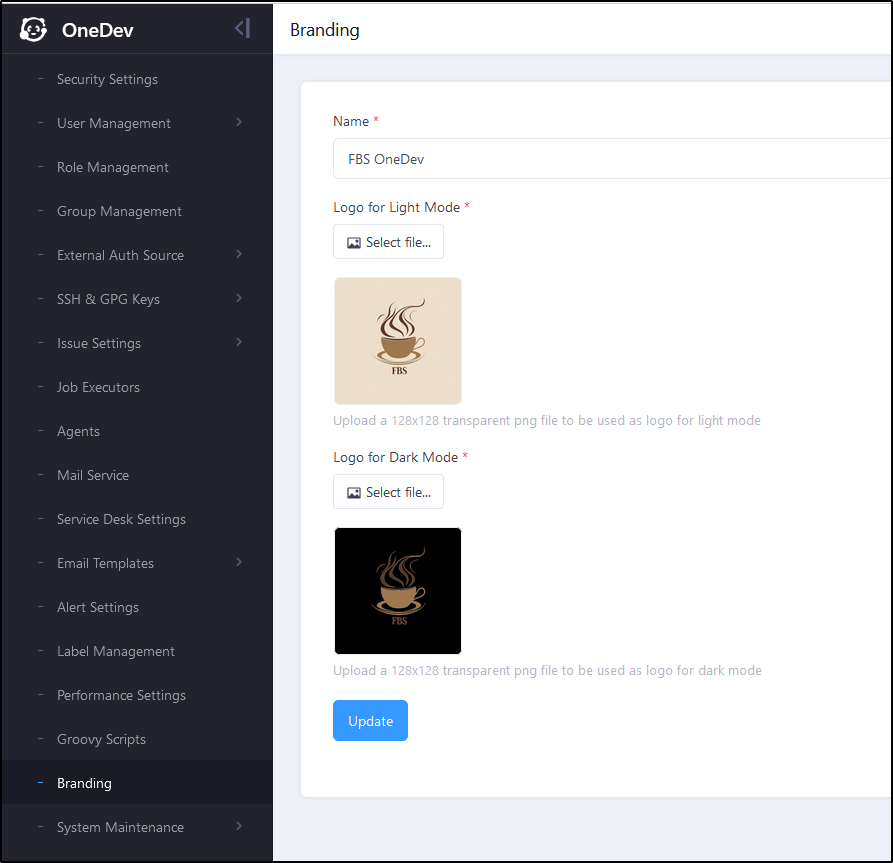

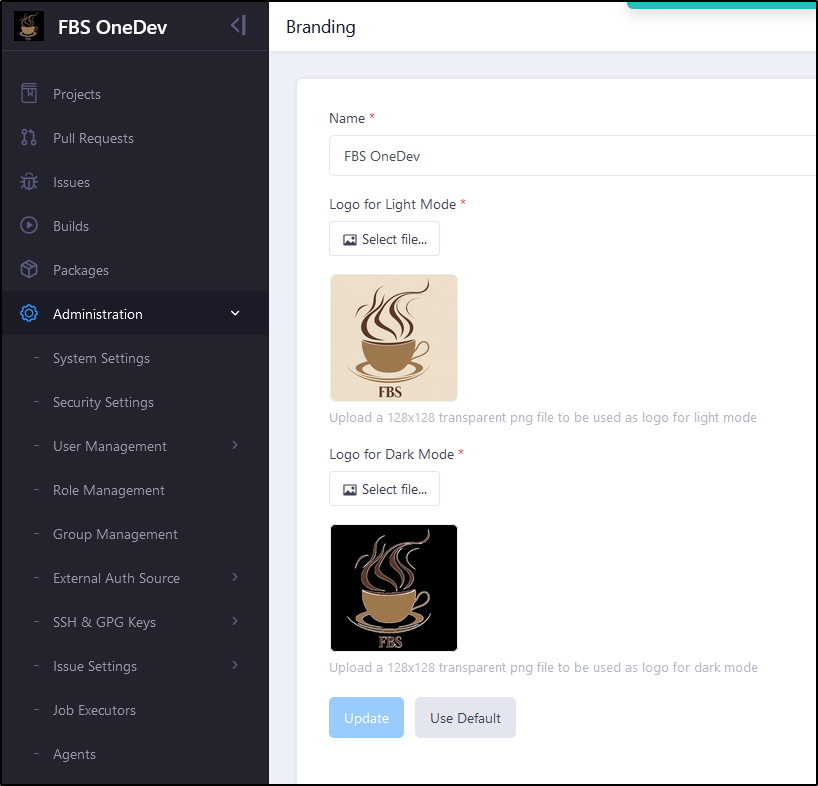

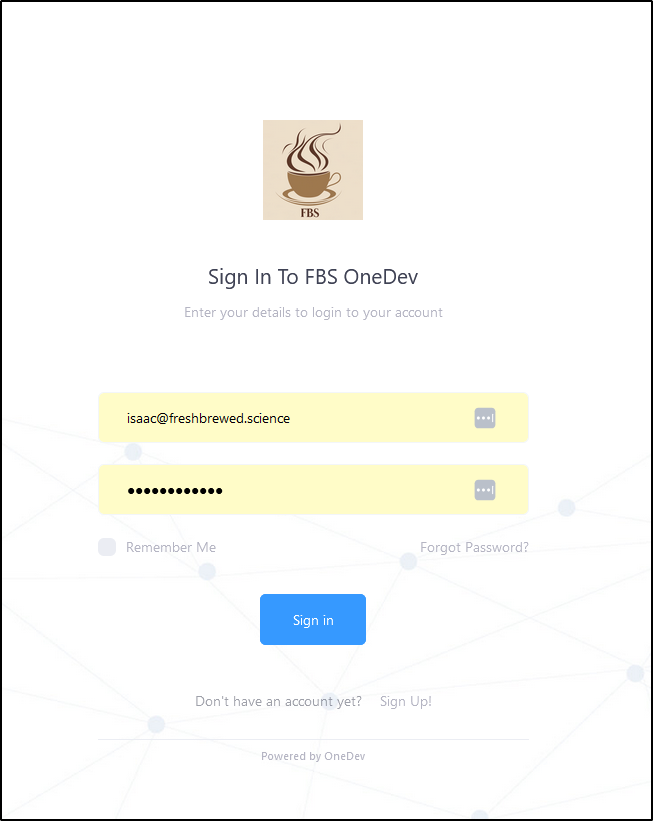

Branding

The Branding area lets us update the branding of our OneDev portal if desired.

For me, the icons are quite small, so they are hard to read.

I switched to a more zoomed-in logo, which improved it slightly.

That said, it does look nice on the login page.

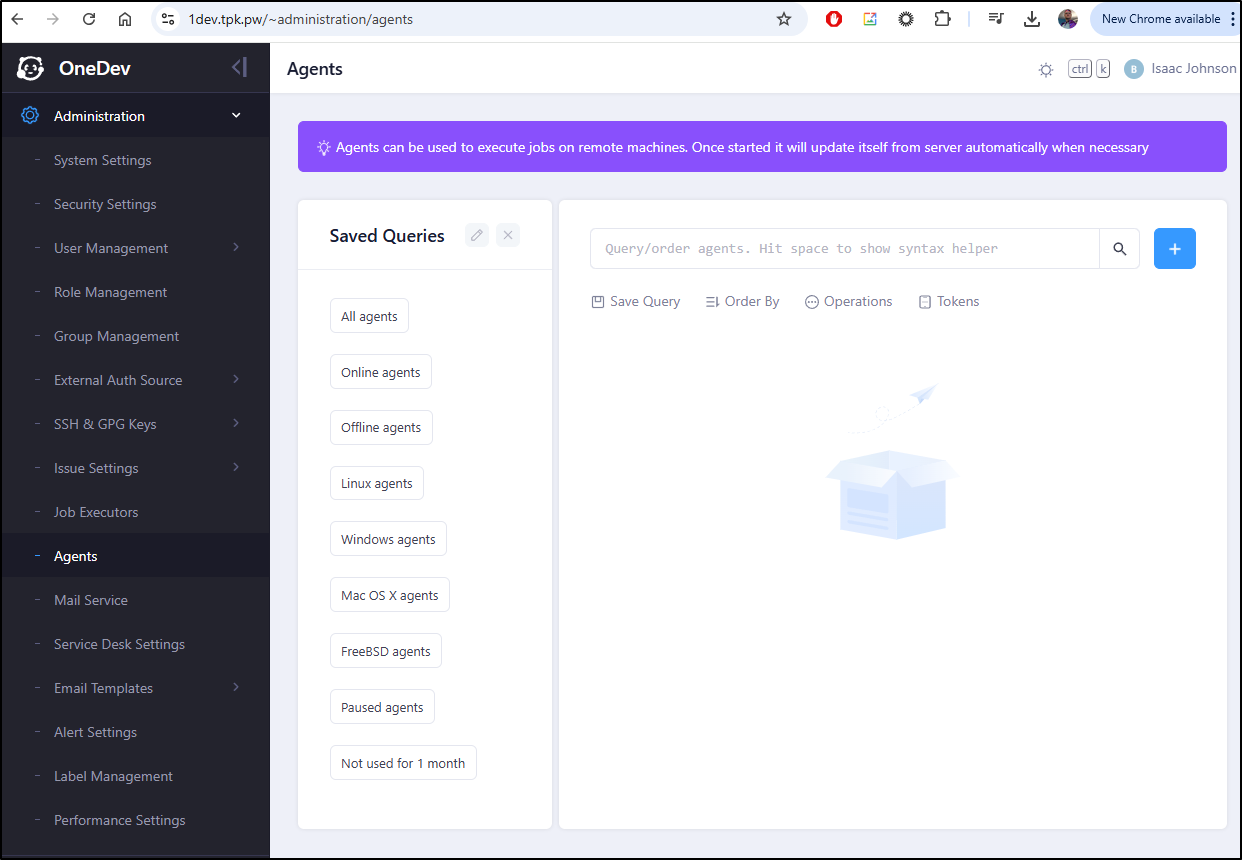

Build Agents

When we covered pipelines last time, we focused only on the self-contained setup. But in many systems, including GitHub Actions, Jenkins, and Azure DevOps, there are occasions when we want to push the build and release work out to controlled agents.

OneDev can use similar agents.

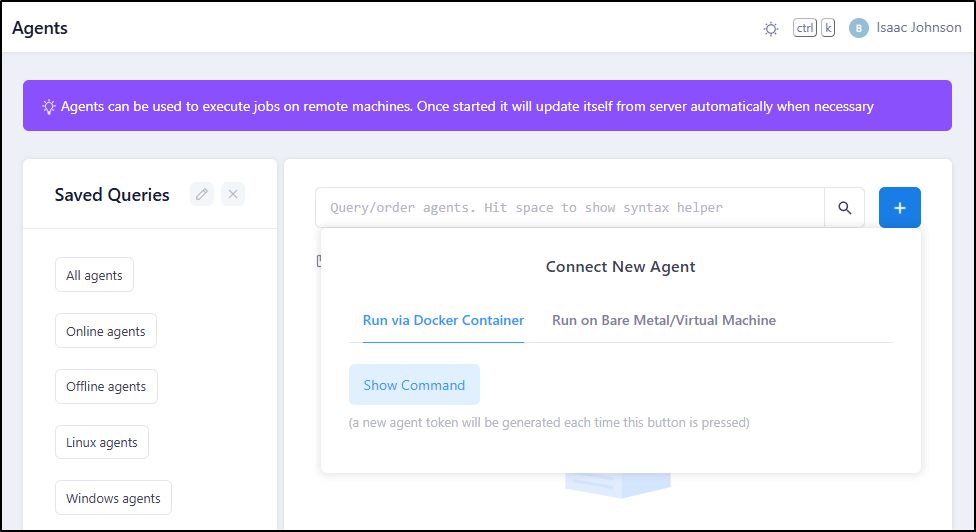

We go to “Administration/Agents”:

I can run via a VM command or in Docker

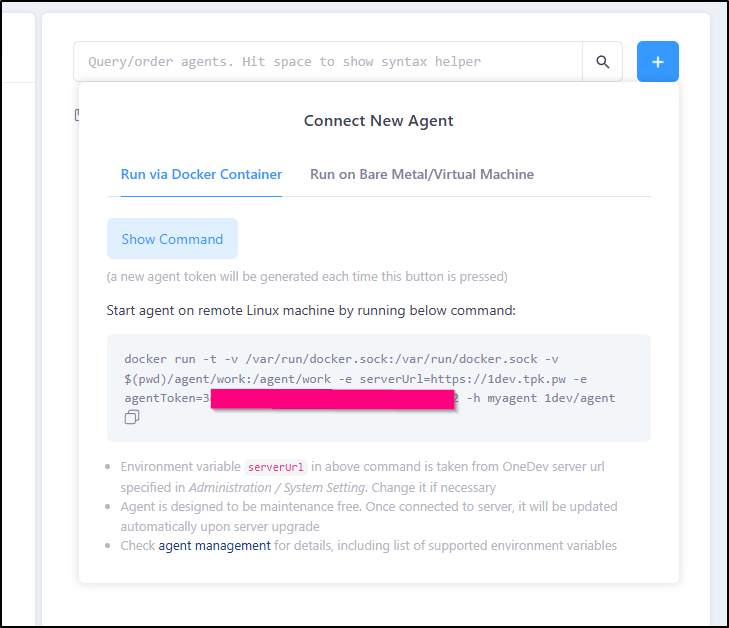

I can now get a command that includes a valid token to add an agent to my Dockerhost

The only difference in my invocation is that I added “-d” to run it detached (as a daemon) rather than interactively:

$ docker run -d -t -v /var/run/docker.sock:/var/run/docker.sock -v $(pwd)/agent/work:/agent/work -e serverUrl=https://1dev.tpk.pw -e agentToken=asdfasdfasdfasdfasdfasdfasdfasdfasdf -h myagent 1dev/agent

Unable to find image '1dev/agent:latest' locally

latest: Pulling from 1dev/agent

ff65ddf9395b: Pull complete

ab529e37c023: Pull complete

0025b48f968d: Pull complete

c044fda955bc: Pull complete

8b74244b298f: Pull complete

1a38640cb6ab: Pull complete

6385d7c63af8: Pull complete

4f4fb700ef54: Pull complete

015ae69ebf6b: Pull complete

Digest: sha256:d5ec01c5dc816958927dfa0805fa0dd3853e5967f7857e15549c8bc60663c27a

Status: Downloaded newer image for 1dev/agent:latest

bc2246061a2cd19aee254ccdb55e7afd224eb0fd922eb16da0c31db0894040ef

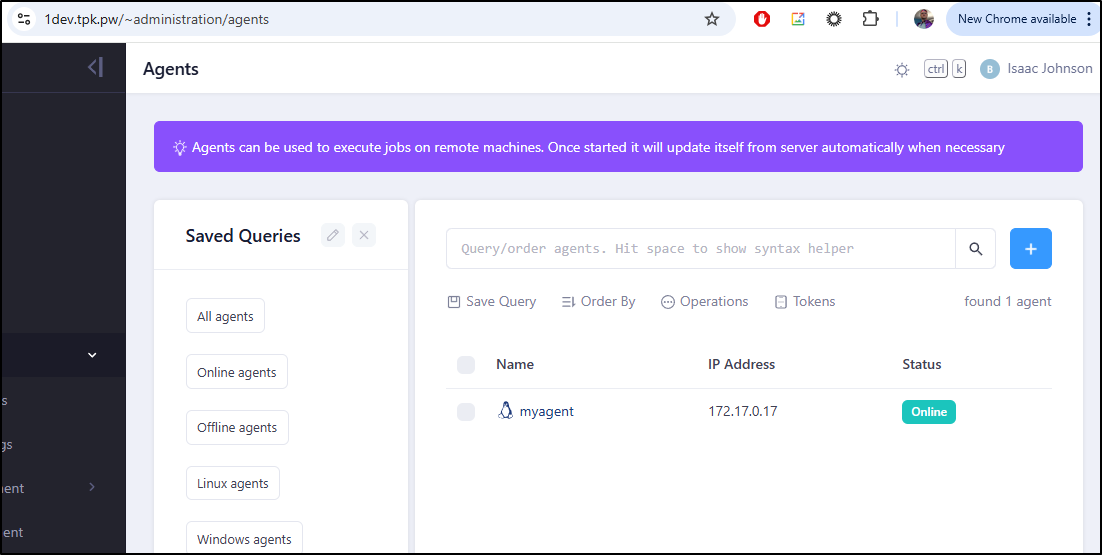

Back in OneDev, we can now see that an agent has come online.

I thought it would be a lot easier if I gave it a better name than “myagent”. Let’s fix that:

$ docker ps | head -n2

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bc2246061a2c 1dev/agent "/root/bin/entrypoin…" 13 hours ago Up 13 hours friendly_tu

I’ll now stop and remove it, then re-add it with the right name:

builder@builder-T100:~$ docker stop bc2246061a2c

bc2246061a2c

builder@builder-T100:~$ docker rm bc2246061a2c

bc2246061a2c

builder@builder-T100:~$ docker run -d -t -v /var/run/docker.sock:/var/run/docker.sock -v $(pwd)/agent/work:/agent/work -e serverUrl=https://1dev.tpk.pw -e agentToken=asdfasdasdfsadsdaf -h dockerhost 1dev/agent

f20ac60caa40fc28e1c6ee72933c7e0147f84600bf2f62c1f67d45de35b5281f
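The agent container is configured entirely through the `serverUrl` and `agentToken` environment variables, with `-h` setting the hostname that shows up as the agent name. If you script agent creation, building the command as an argument list avoids quoting and spacing mistakes in long one-liners. Below is a minimal sketch. The function name, work directory, and token placeholder are hypothetical. It only prints the command unless you uncomment the `subprocess.run` line.

```python
import shlex
from typing import List


def agent_run_args(server_url: str, token: str, hostname: str, work_dir: str) -> List[str]:
    """Assemble the `docker run` arguments used above for a OneDev agent."""
    return [
        "docker", "run", "-d", "-t",
        "-v", "/var/run/docker.sock:/var/run/docker.sock",  # lets the agent drive the host's Docker
        "-v", f"{work_dir}:/agent/work",
        "-e", f"serverUrl={server_url}",
        "-e", f"agentToken={token}",
        "-h", hostname,                                      # shows up as the agent name in OneDev
        "1dev/agent",
    ]


if __name__ == "__main__":
    args = agent_run_args("https://1dev.tpk.pw", "<agent-token>", "dockerhost",
                          "/home/builder/agent/work")
    print(shlex.join(args))
    # import subprocess; subprocess.run(args, check=True)
```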

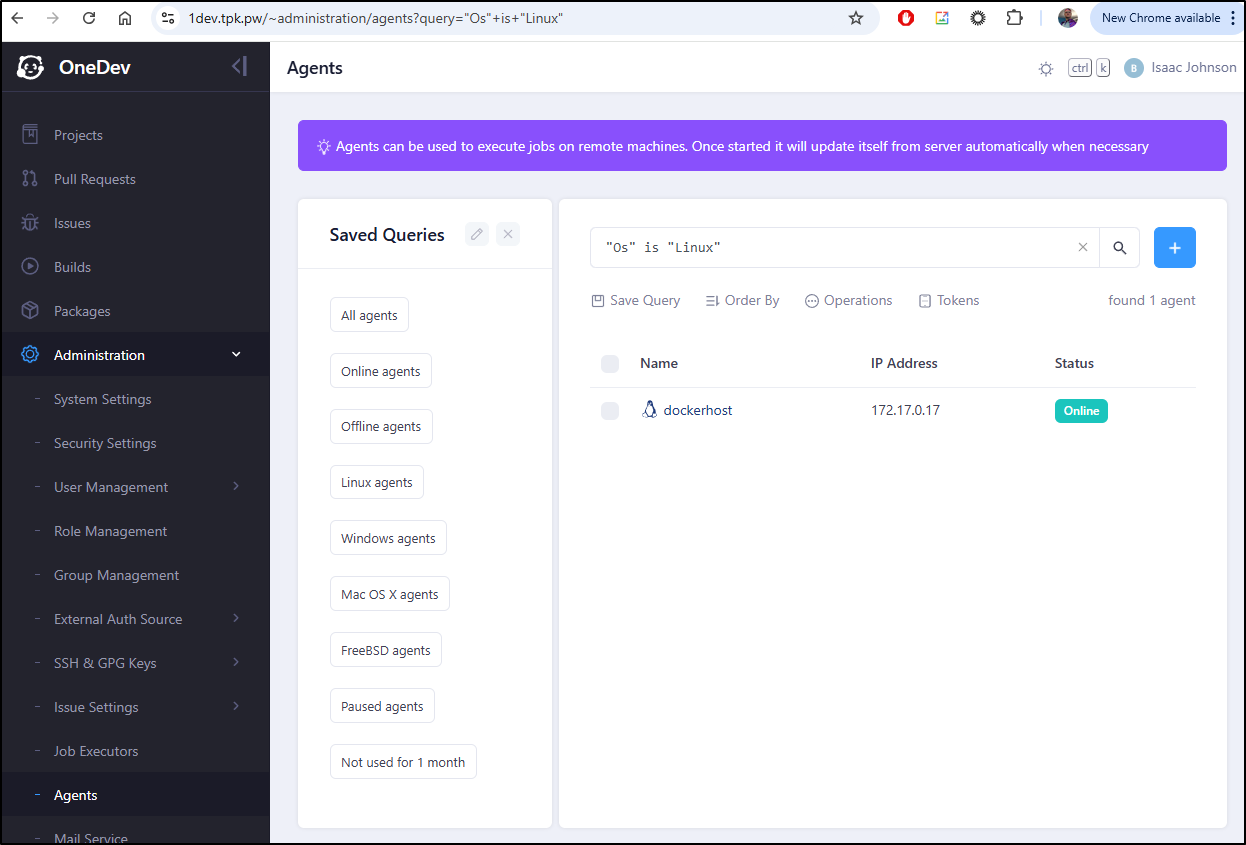

I had hoped to see things like tags or Capabilities (to use Azure DevOps parlance), but unfortunately there is not much extra we can configure (see properties/environments in the docs here)

That said, we do see the proper named agent and it does know that it’s of type Linux

I can see similar, as far as environment variables go, on the container itself

builder@builder-T100:~$ docker exec -it keen_gates /bin/bash

root@dockerhost:/agent/bin# export

declare -x HOME="/root"

declare -x HOSTNAME="dockerhost"

declare -x LANG="en_US.UTF-8"

declare -x LANGUAGE="en_US:en"

declare -x LC_ALL="en_US.UTF-8"

declare -x LESSCLOSE="/usr/bin/lesspipe %s %s"

declare -x LESSOPEN="| /usr/bin/lesspipe %s"

declare -x LS_COLORS="rs=0:di=01;34:ln=01;36:mh=00:pi=40;33:so=01;35:do=01;35:bd=40;33;01:cd=40;33;01:or=40;31;01:mi=00:su=37;41:sg=30;43:ca=00:tw=30;42:ow=34;42:st=37;44:ex=01;32:*.tar=01;31:*.tgz=01;31:*.arc=01;31:*.arj=01;31:*.taz=01;31:*.lha=01;31:*.lz4=01;31:*.lzh=01;31:*.lzma=01;31:*.tlz=01;31:*.txz=01;31:*.tzo=01;31:*.t7z=01;31:*.zip=01;31:*.z=01;31:*.dz=01;31:*.gz=01;31:*.lrz=01;31:*.lz=01;31:*.lzo=01;31:*.xz=01;31:*.zst=01;31:*.tzst=01;31:*.bz2=01;31:*.bz=01;31:*.tbz=01;31:*.tbz2=01;31:*.tz=01;31:*.deb=01;31:*.rpm=01;31:*.jar=01;31:*.war=01;31:*.ear=01;31:*.sar=01;31:*.rar=01;31:*.alz=01;31:*.ace=01;31:*.zoo=01;31:*.cpio=01;31:*.7z=01;31:*.rz=01;31:*.cab=01;31:*.wim=01;31:*.swm=01;31:*.dwm=01;31:*.esd=01;31:*.avif=01;35:*.jpg=01;35:*.jpeg=01;35:*.mjpg=01;35:*.mjpeg=01;35:*.gif=01;35:*.bmp=01;35:*.pbm=01;35:*.pgm=01;35:*.ppm=01;35:*.tga=01;35:*.xbm=01;35:*.xpm=01;35:*.tif=01;35:*.tiff=01;35:*.png=01;35:*.svg=01;35:*.svgz=01;35:*.mng=01;35:*.pcx=01;35:*.mov=01;35:*.mpg=01;35:*.mpeg=01;35:*.m2v=01;35:*.mkv=01;35:*.webm=01;35:*.webp=01;35:*.ogm=01;35:*.mp4=01;35:*.m4v=01;35:*.mp4v=01;35:*.vob=01;35:*.qt=01;35:*.nuv=01;35:*.wmv=01;35:*.asf=01;35:*.rm=01;35:*.rmvb=01;35:*.flc=01;35:*.avi=01;35:*.fli=01;35:*.flv=01;35:*.gl=01;35:*.dl=01;35:*.xcf=01;35:*.xwd=01;35:*.yuv=01;35:*.cgm=01;35:*.emf=01;35:*.ogv=01;35:*.ogx=01;35:*.aac=00;36:*.au=00;36:*.flac=00;36:*.m4a=00;36:*.mid=00;36:*.midi=00;36:*.mka=00;36:*.mp3=00;36:*.mpc=00;36:*.ogg=00;36:*.ra=00;36:*.wav=00;36:*.oga=00;36:*.opus=00;36:*.spx=00;36:*.xspf=00;36:*~=00;90:*#=00;90:*.bak=00;90:*.crdownload=00;90:*.dpkg-dist=00;90:*.dpkg-new=00;90:*.dpkg-old=00;90:*.dpkg-tmp=00;90:*.old=00;90:*.orig=00;90:*.part=00;90:*.rej=00;90:*.rpmnew=00;90:*.rpmorig=00;90:*.rpmsave=00;90:*.swp=00;90:*.tmp=00;90:*.ucf-dist=00;90:*.ucf-new=00;90:*.ucf-old=00;90:"

declare -x OLDPWD

declare -x PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"

declare -x PWD="/agent/bin"

declare -x SHLVL="1"

declare -x TERM="xterm"

declare -x agentToken="3asdfasdfsadfasdfasfdasfdsadfsadfsadf"

declare -x serverUrl="https://1dev.tpk.pw"

Usage

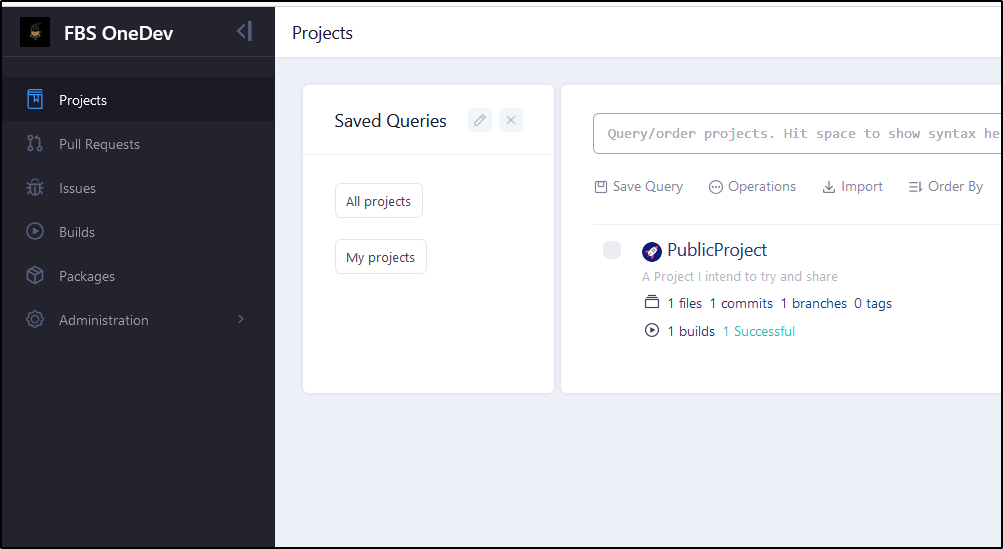

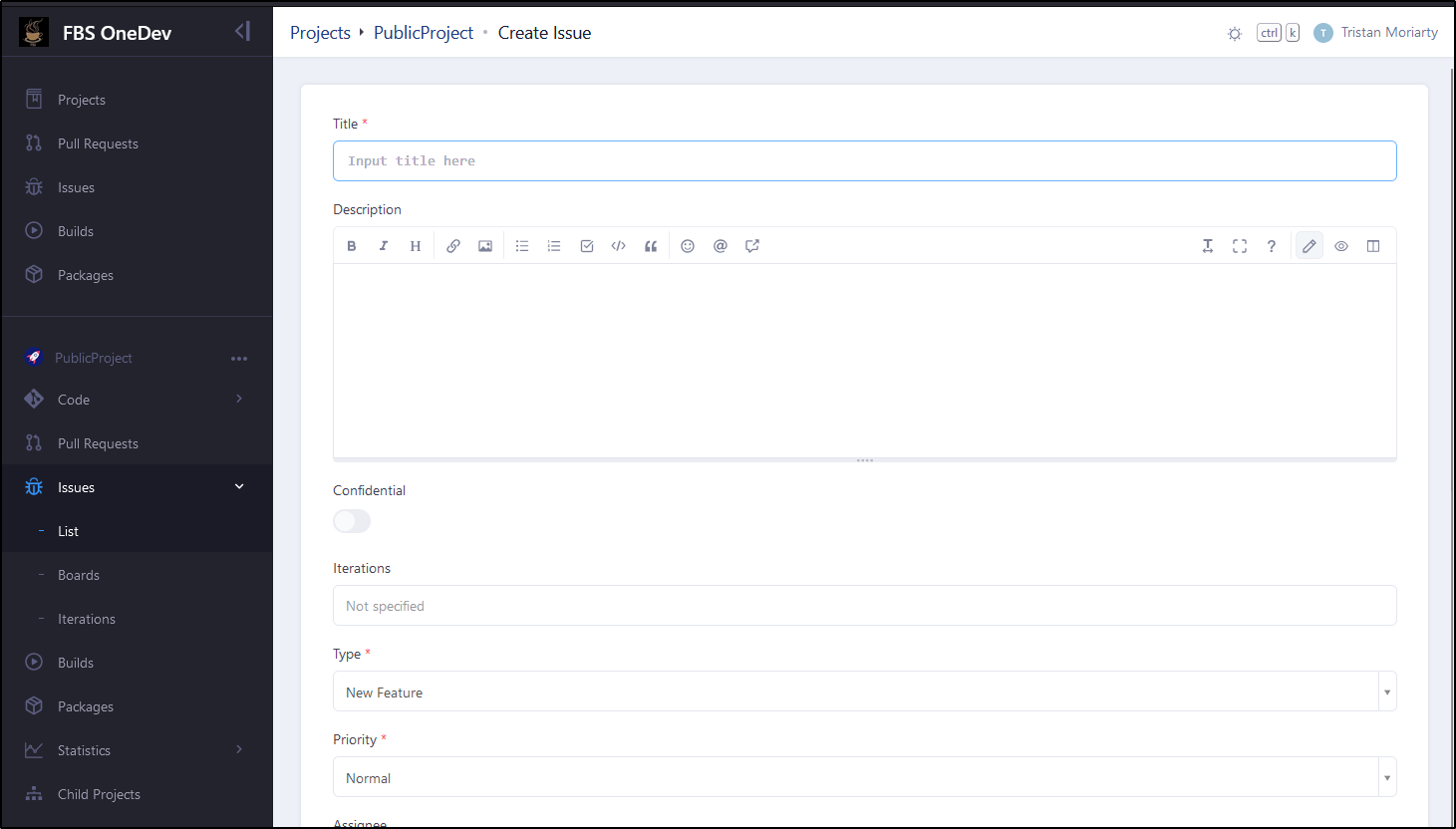

Since we reset everything, let’s go ahead and create a project and an initial set of files in a repo.

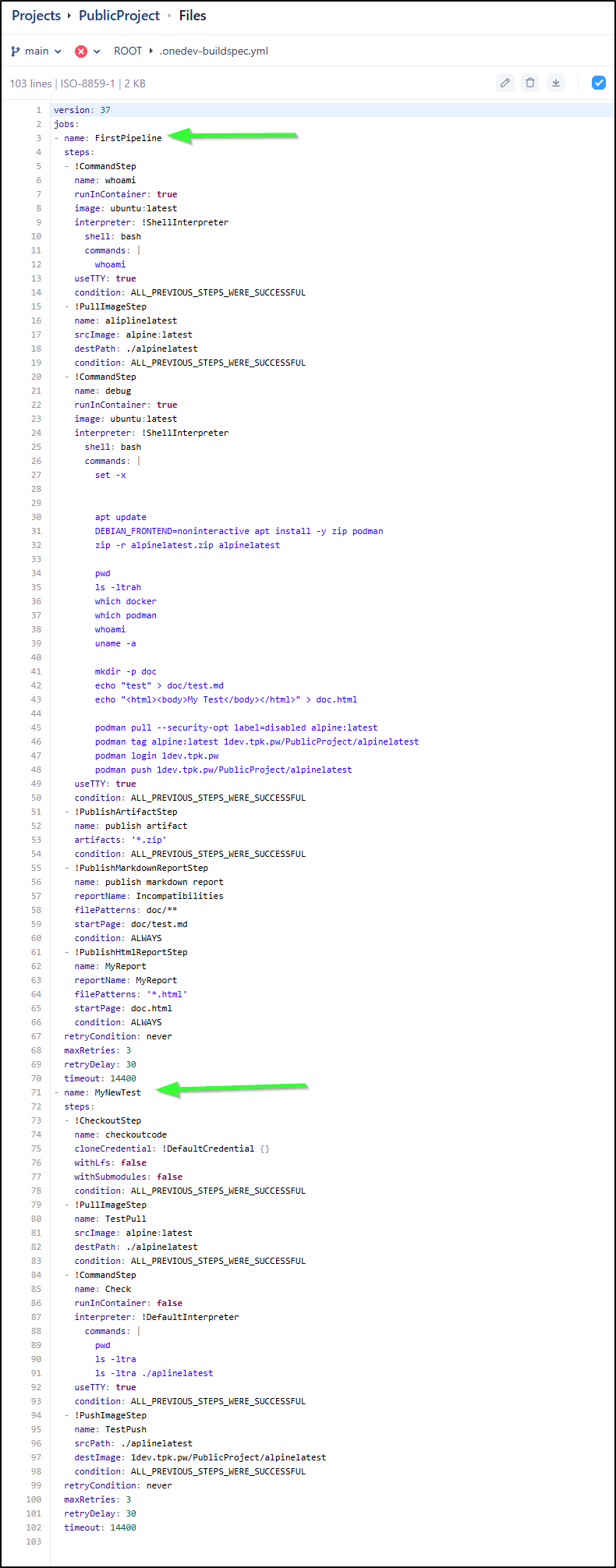

As you saw I created a basic pipeline file

```yaml
version: 37
jobs:
- name: FirstPipeline
  steps:
  - !CommandStep
    name: whoami
    runInContainer: true
    image: ubuntu:latest
    interpreter: !ShellInterpreter
      shell: bash
      commands: |
        whoami
    useTTY: true
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  retryCondition: never
  maxRetries: 3
  retryDelay: 30
  timeout: 14400
```

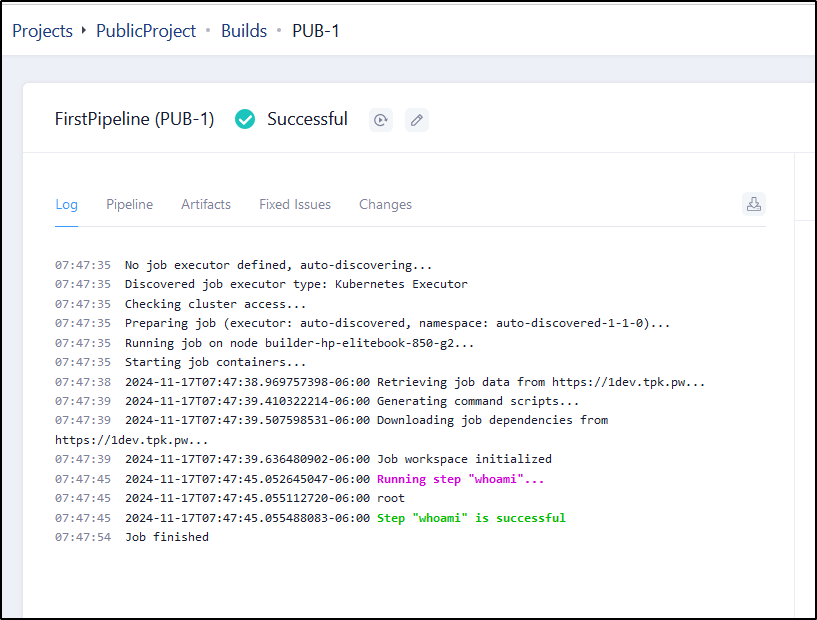

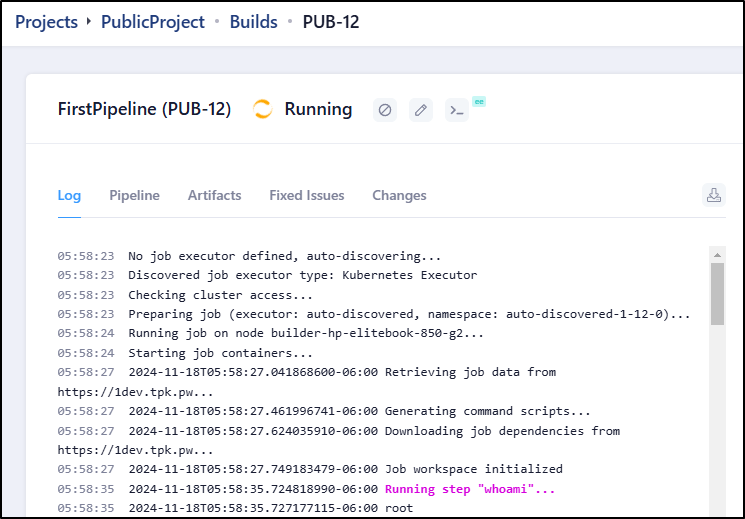

When run, it’s clear that it uses the default (auto-discovered) Kubernetes executor. I was surprised it even called out the actual node it runs on:

07:47:35 No job executor defined, auto-discovering...

07:47:35 Discovered job executor type: Kubernetes Executor

07:47:35 Checking cluster access...

07:47:35 Preparing job (executor: auto-discovered, namespace: auto-discovered-1-1-0)...

07:47:35 Running job on node builder-hp-elitebook-850-g2...

07:47:35 Starting job containers...

07:47:38 2024-11-17T07:47:38.969757398-06:00 Retrieving job data from https://1dev.tpk.pw...

07:47:39 2024-11-17T07:47:39.410322214-06:00 Generating command scripts...

07:47:39 2024-11-17T07:47:39.507598531-06:00 Downloading job dependencies from https://1dev.tpk.pw...

07:47:39 2024-11-17T07:47:39.636480902-06:00 Job workspace initialized

07:47:45 2024-11-17T07:47:45.052645047-06:00 Running step "whoami"...

07:47:45 2024-11-17T07:47:45.055112720-06:00 root

07:47:45 2024-11-17T07:47:45.055488083-06:00 Step "whoami" is successful

07:47:54 Job finished

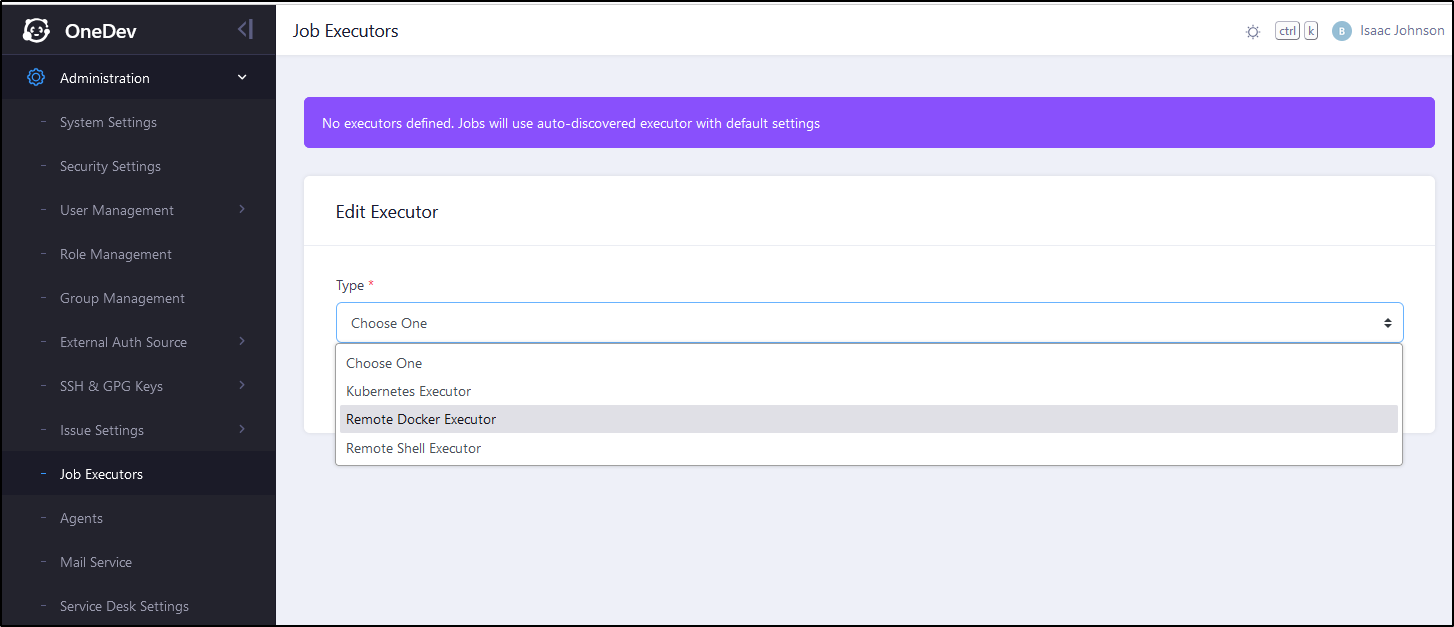

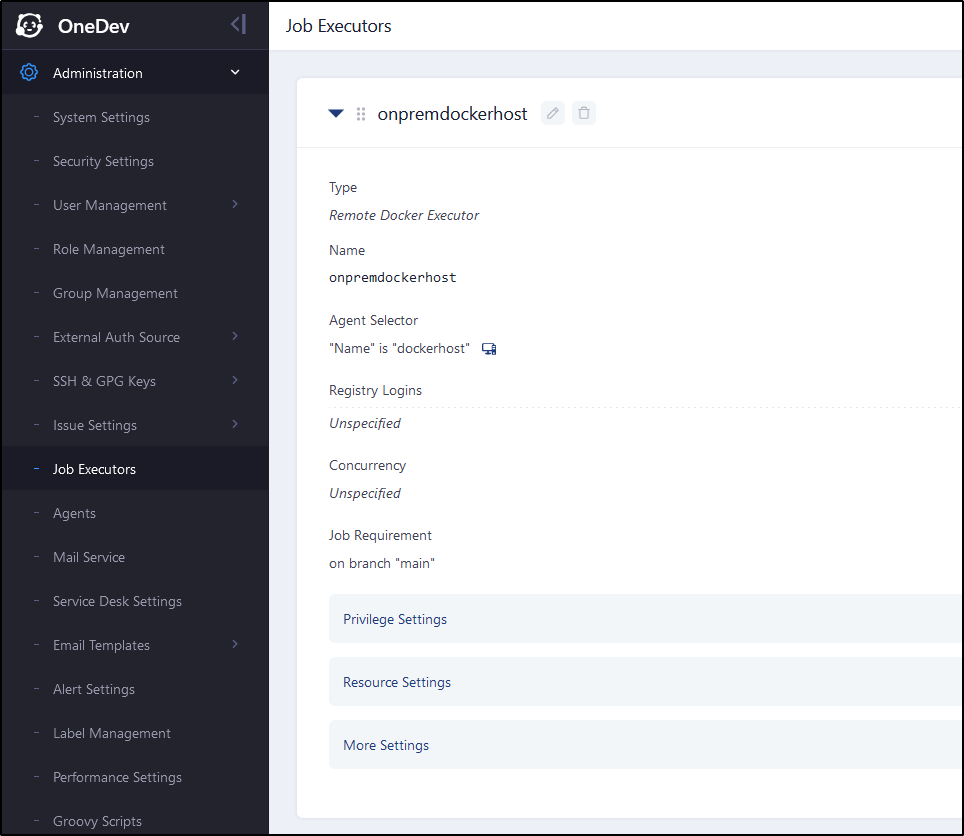

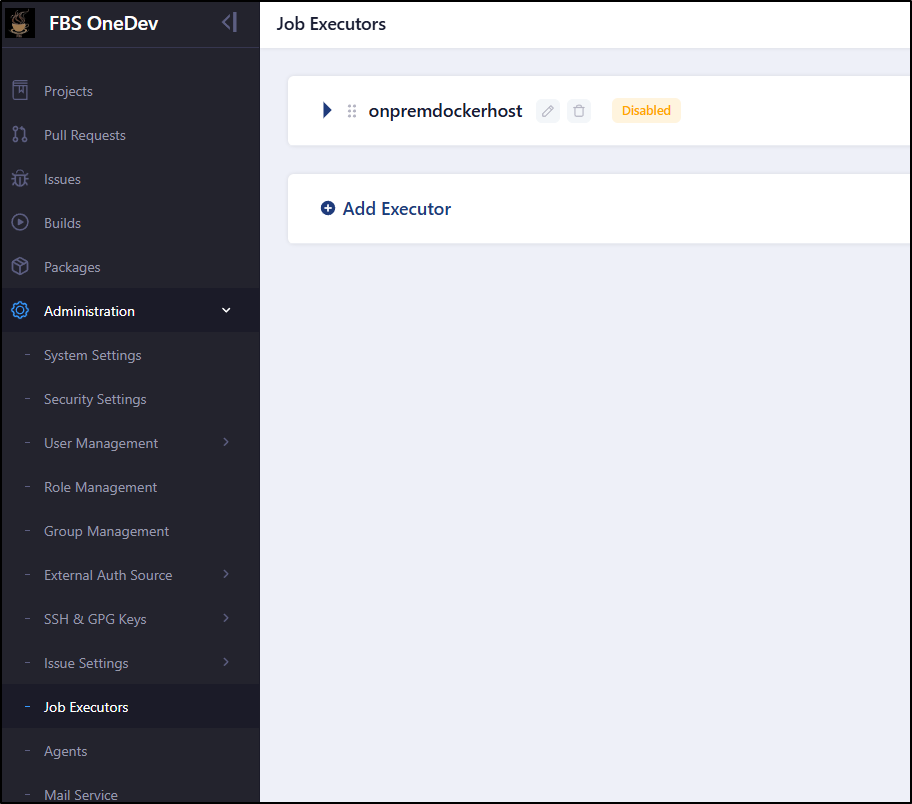

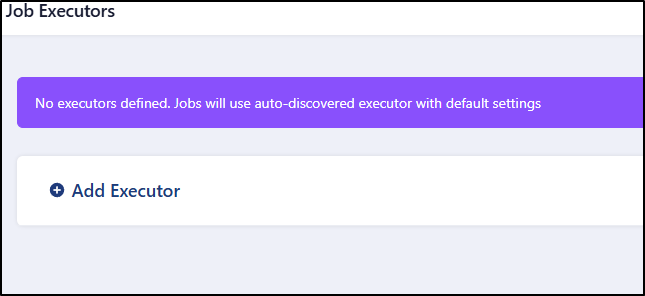

This is because, by default, OneDev will pick any agent that might meet the needs. To control where our pipeline steps run, we need to adjust the Job Executor settings under Administration.

Here I’ll add a remote Docker executor.

For example, I want a job executor called “onpredockerhost” that will use only the dockerhost agent when building the main branch.

With that saved, let’s re-run our pipeline, which is on main in PublicProject.

I ran it a few times and never saw it create a container directly, so it seems to be doing some kind of Docker-in-Docker.

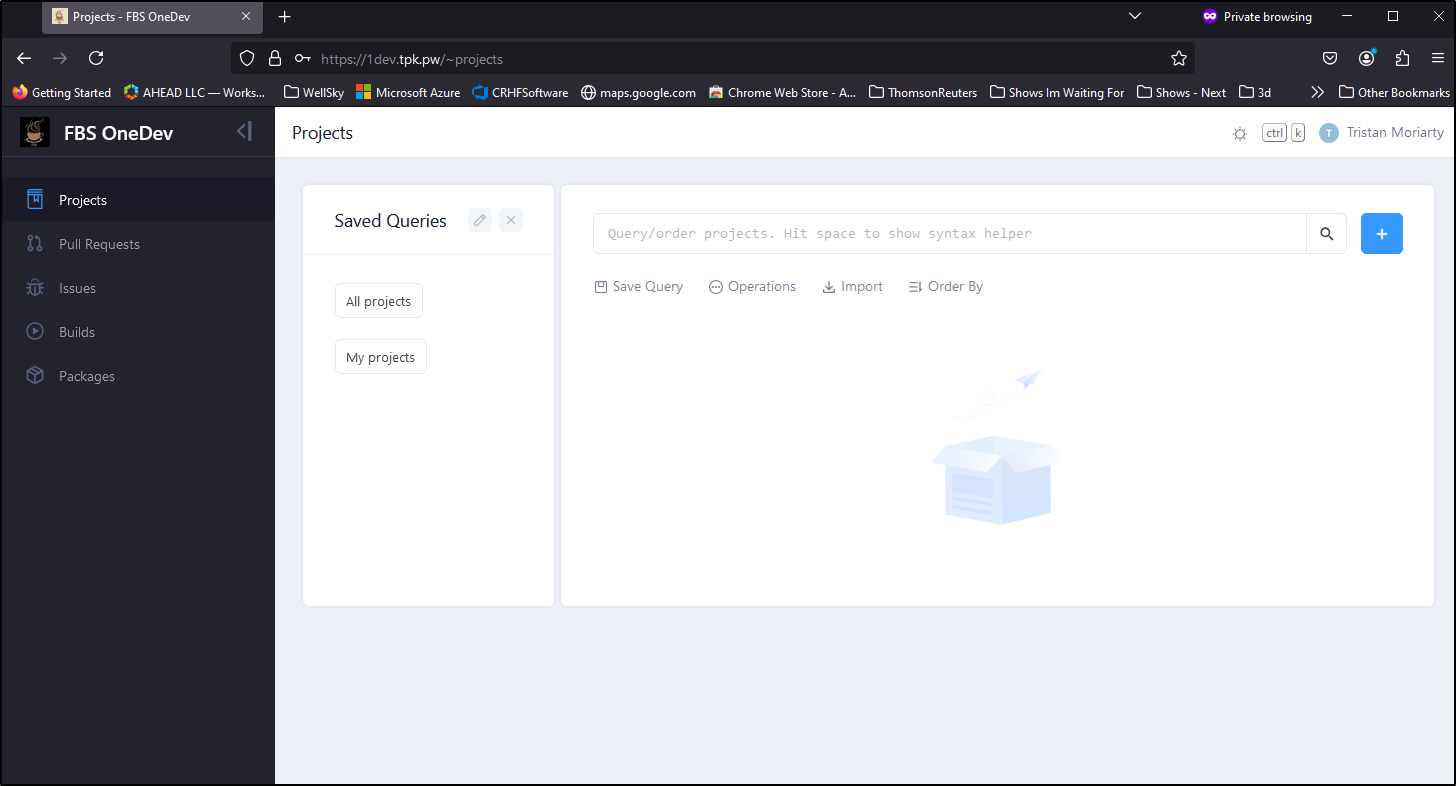

User Access

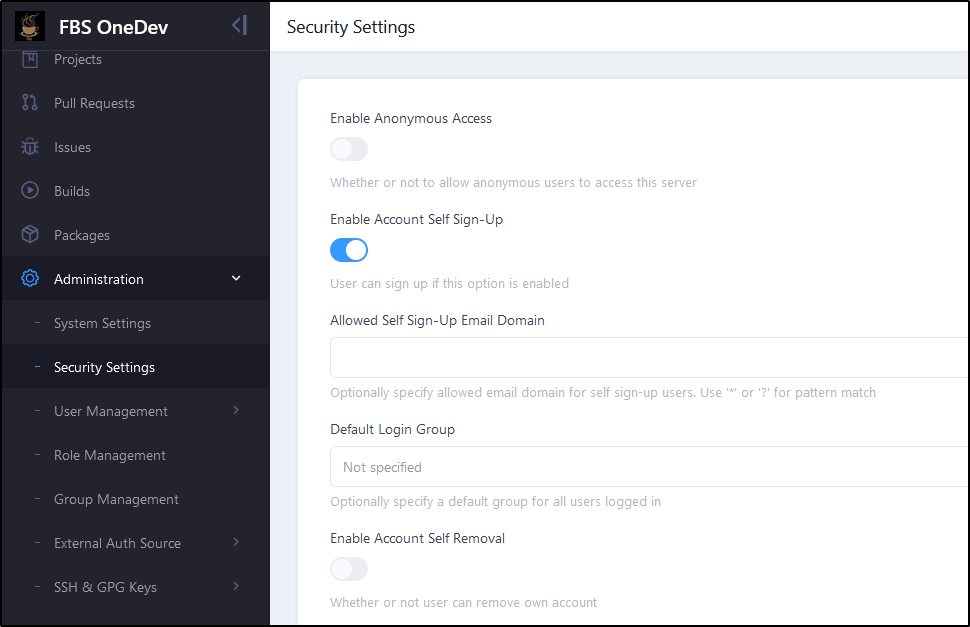

I did not block sign-up, but anonymous access is not enabled.

So new users will see just an empty list of projects at the moment.

That said, I can enable anonymous access as well as disable user sign-up in settings.

I did see some docs on enabling public artifact access, but not really about opening up an entire project.

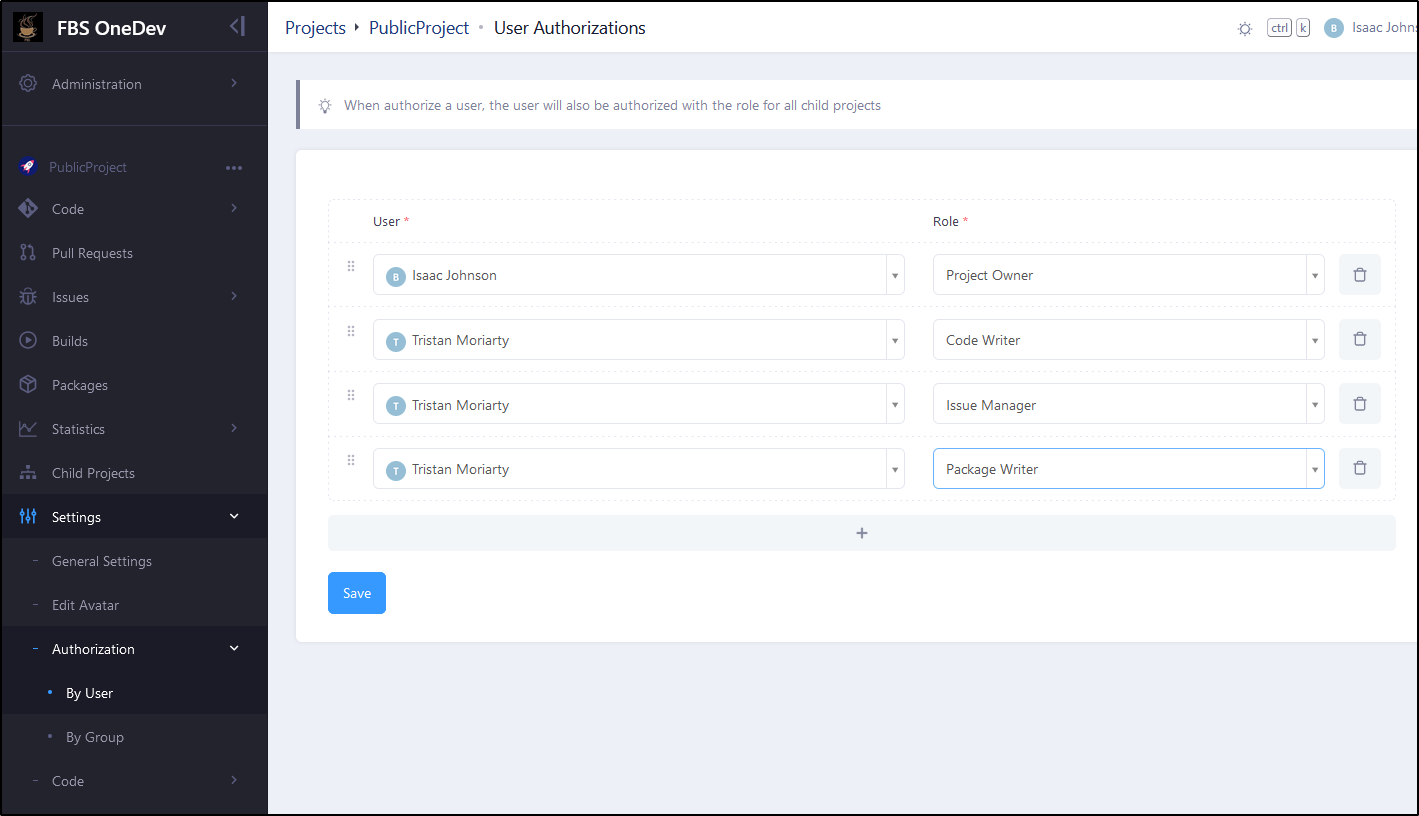

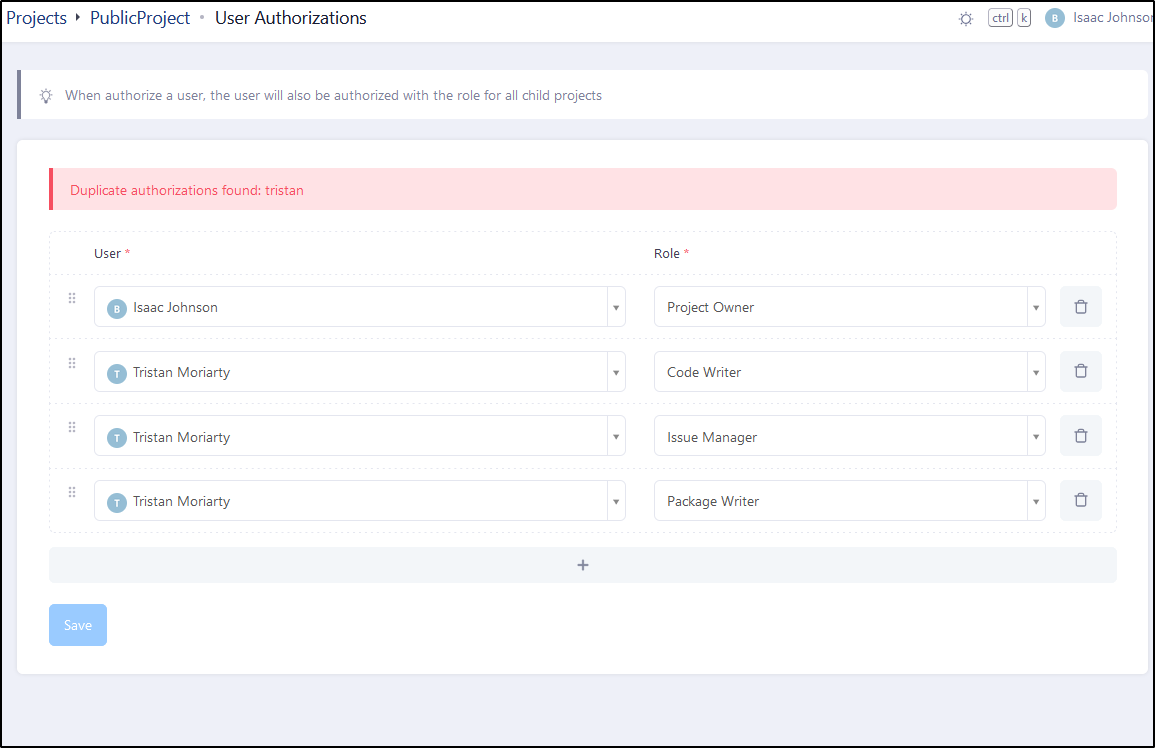

In the case of my new user, I could add him to the project with contributor permissions on the three main types.

Perhaps we have to just pick one?

The roles must be inclusive, since I granted him “Code Writer” and I can see that he can also update issues.

Replication

In our first iteration I lost the Git repo because I wiped everything. That said, I of course have the local clones from which to rebuild.

One way I minimize the risk with my Gitea and Forgejo systems is to replicate out between themselves and Codeberg.

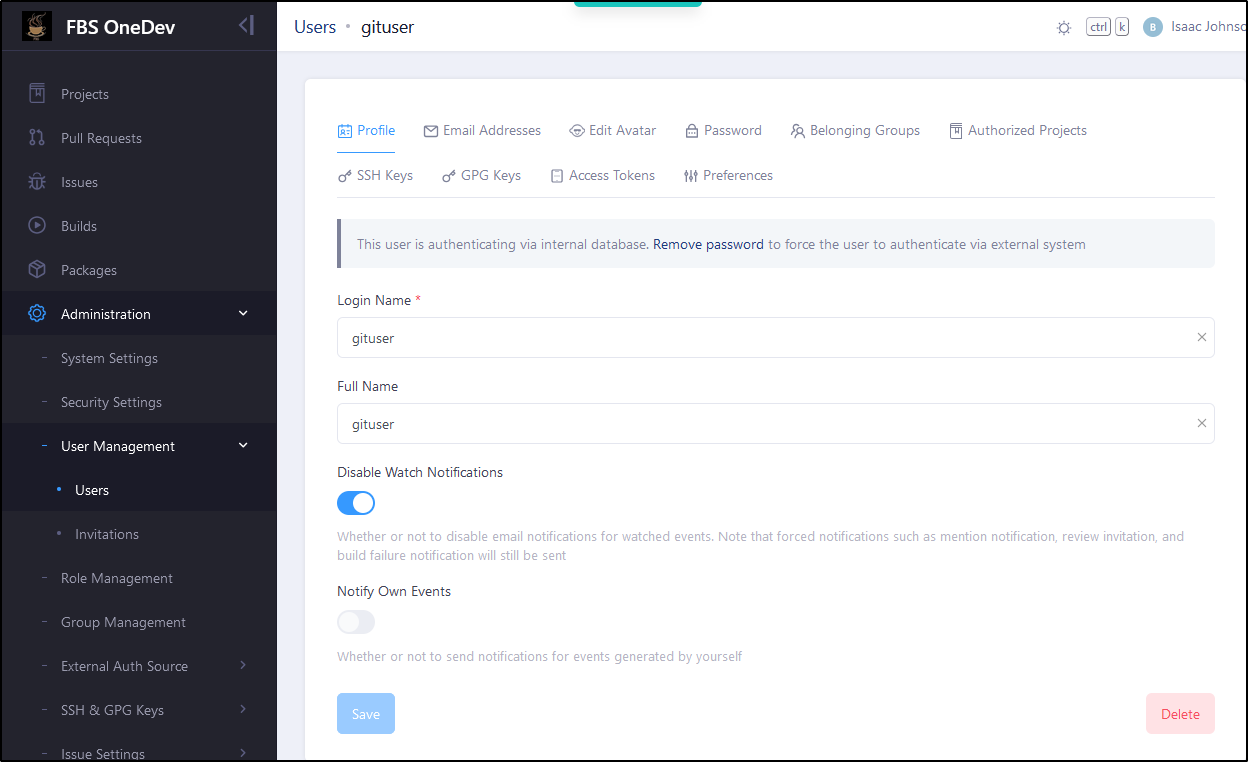

For OneDev, I’ll create a gituser account and disable its notifications.

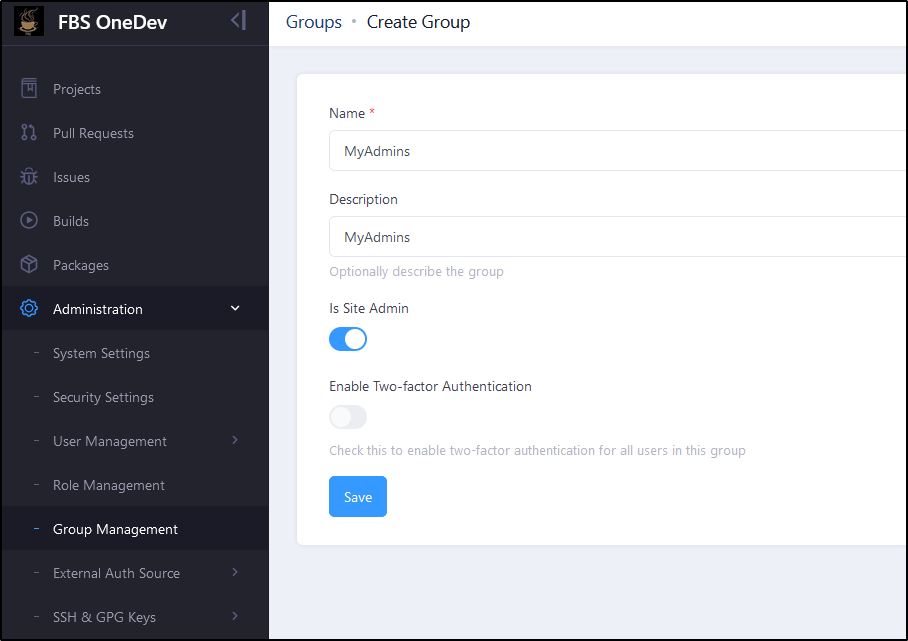

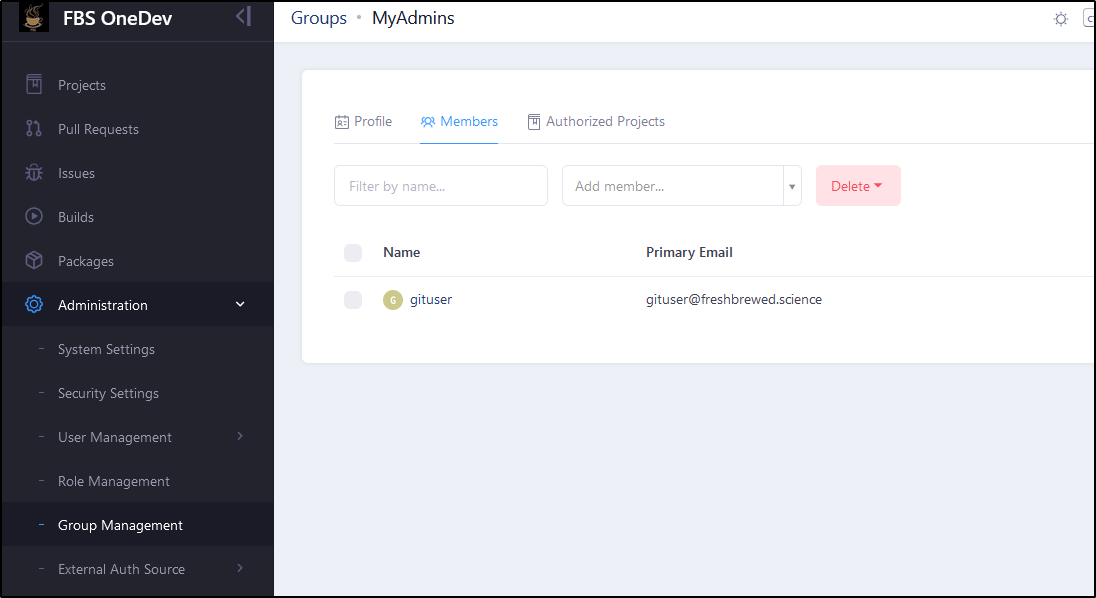

Because I didn’t want to add our user to each project by hand, I went ahead and made a site admins group and added gituser to it.

Just to be sure, I did a quick spot check on the user in a different browser

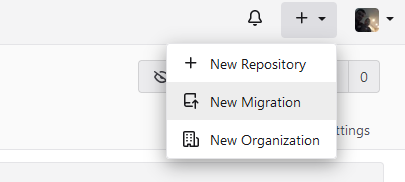

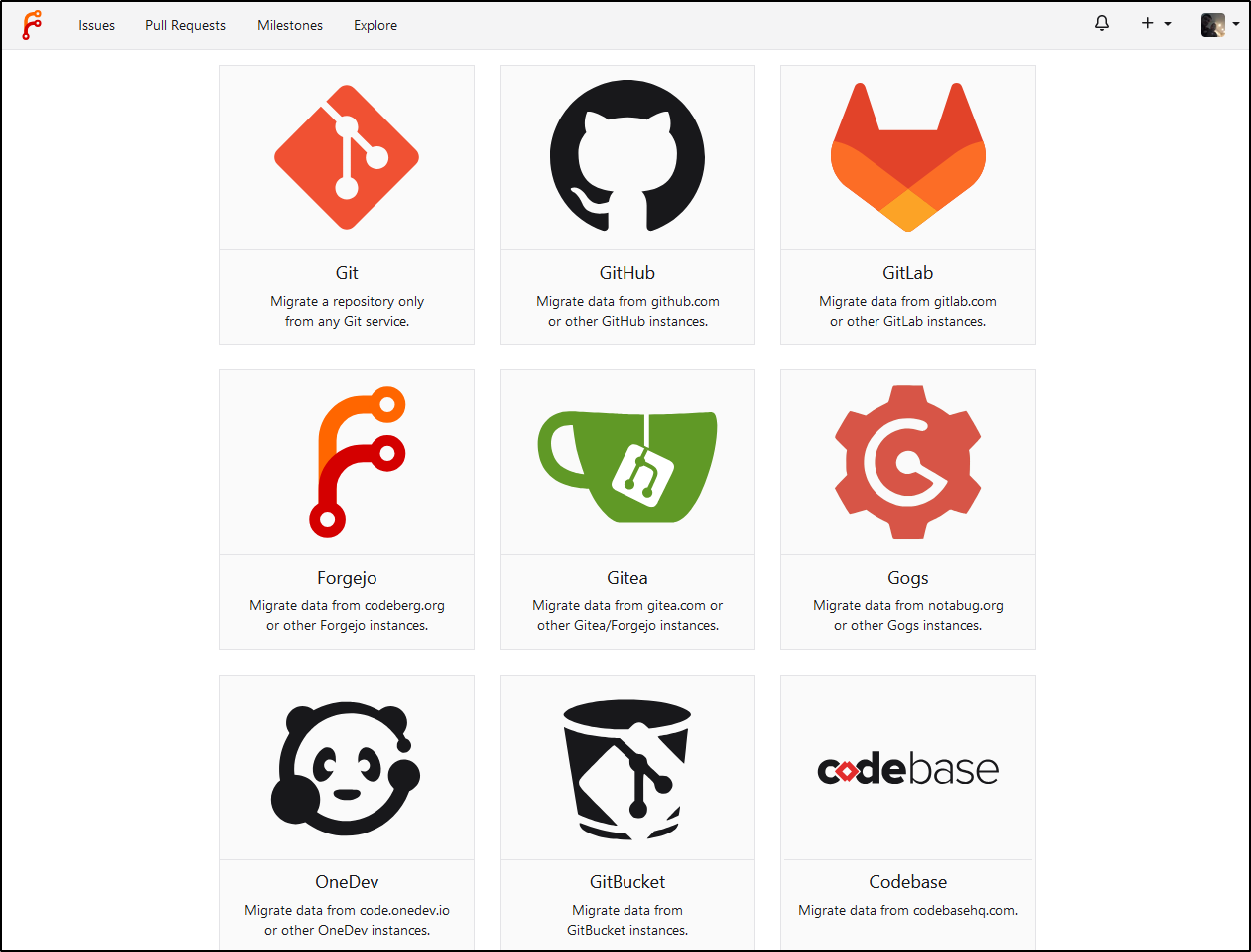

I’ll then go to Forgejo (or Gitea) to create a new repository.

Then I pick Git as the source (even though OneDev is also listed).

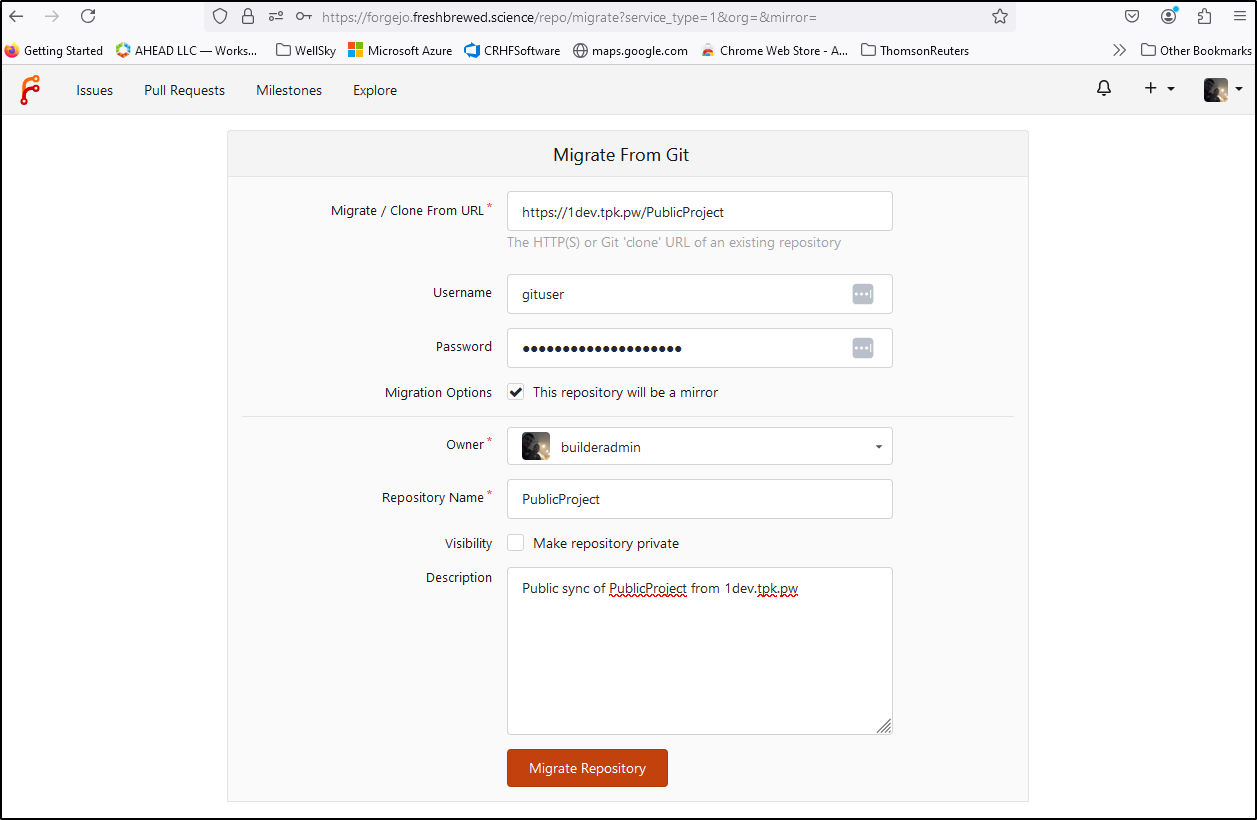

I’ll add details

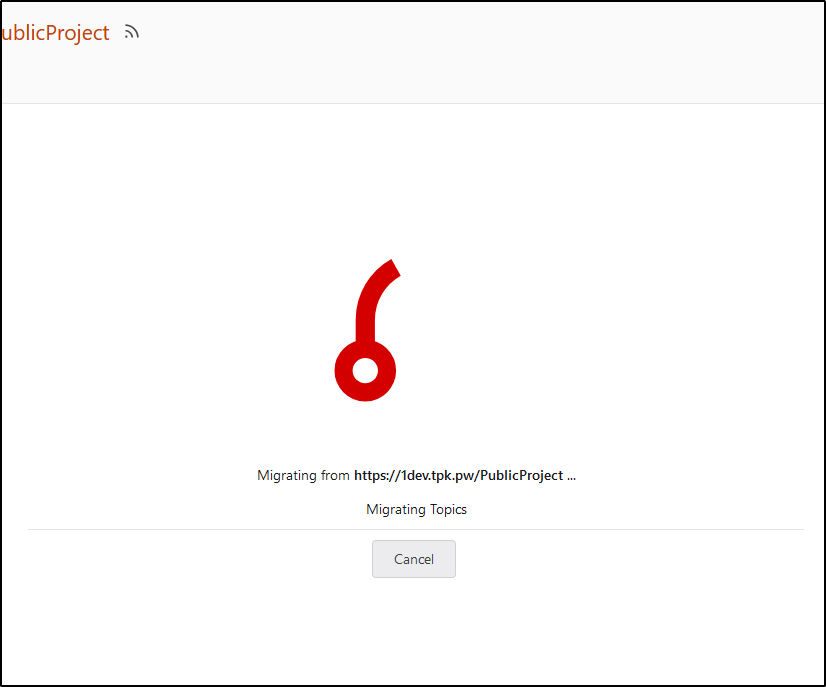

I see a nice animation

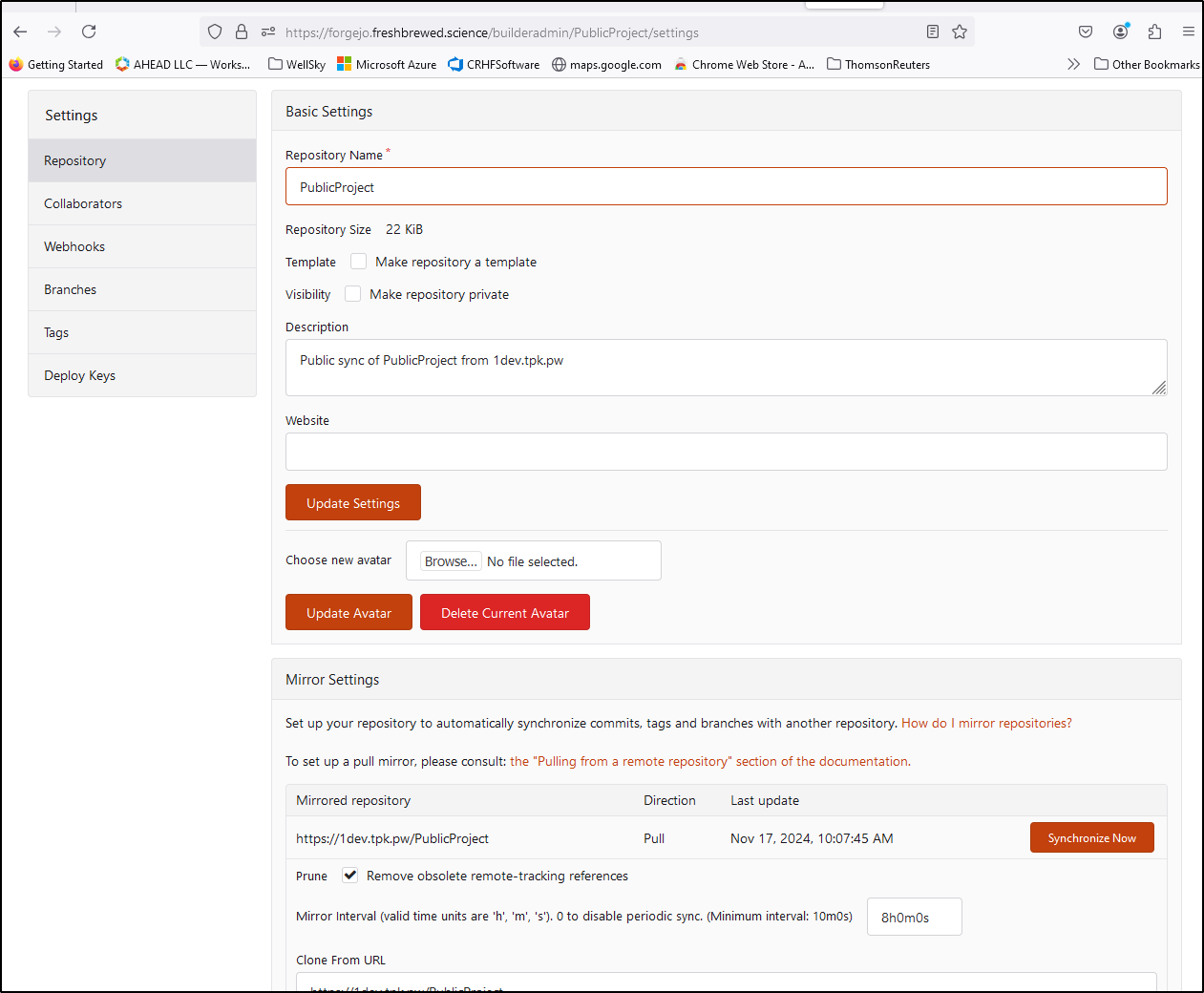

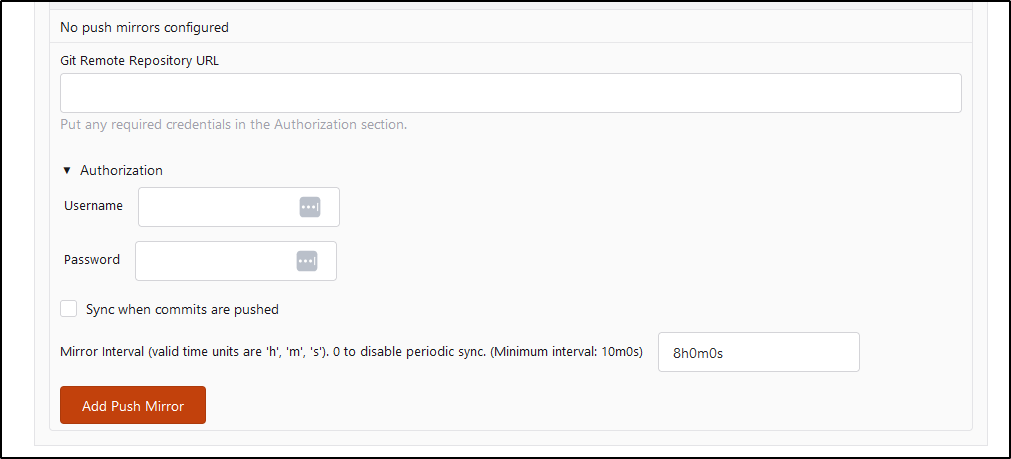

I can see it’s set to pull every 8 hours which should be a good interval for now

Sadly, unlike Forgejo (and Gitea and Codeberg), OneDev has no push-mirror option for the reverse direction (push on change).
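One workaround is to run the push mirror yourself on a schedule, with cron or similar. Keep a bare mirror clone of the OneDev repository, refresh it, and `git push --mirror` it to the other server. Below is a minimal sketch with hypothetical URLs and paths. Credentials are left to your Git credential setup. The destination should be an ordinary repository rather than one Forgejo already manages as a pull mirror.

```python
import os
import subprocess
from typing import List


def mirror_commands(source_url: str, dest_url: str, mirror_dir: str) -> List[List[str]]:
    """Git commands to refresh a bare mirror of source_url and push it to dest_url."""
    cmds = []
    if not os.path.isdir(mirror_dir):
        # First run: a --mirror clone copies all refs (branches, tags, notes).
        cmds.append(["git", "clone", "--mirror", source_url, mirror_dir])
    # Fetch new refs and drop ones deleted upstream.
    cmds.append(["git", "-C", mirror_dir, "remote", "update", "--prune"])
    # Make the destination's refs match the mirror exactly (this also deletes extra refs there).
    cmds.append(["git", "-C", mirror_dir, "push", "--mirror", dest_url])
    return cmds


def sync(source_url: str, dest_url: str, mirror_dir: str) -> None:
    for cmd in mirror_commands(source_url, dest_url, mirror_dir):
        subprocess.run(cmd, check=True)
```

For example, `sync("https://1dev.tpk.pw/PublicProject", "https://forgejo.example.com/isaac/PublicProject.git", "/var/mirrors/PublicProject.git")`. All three values are placeholders.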

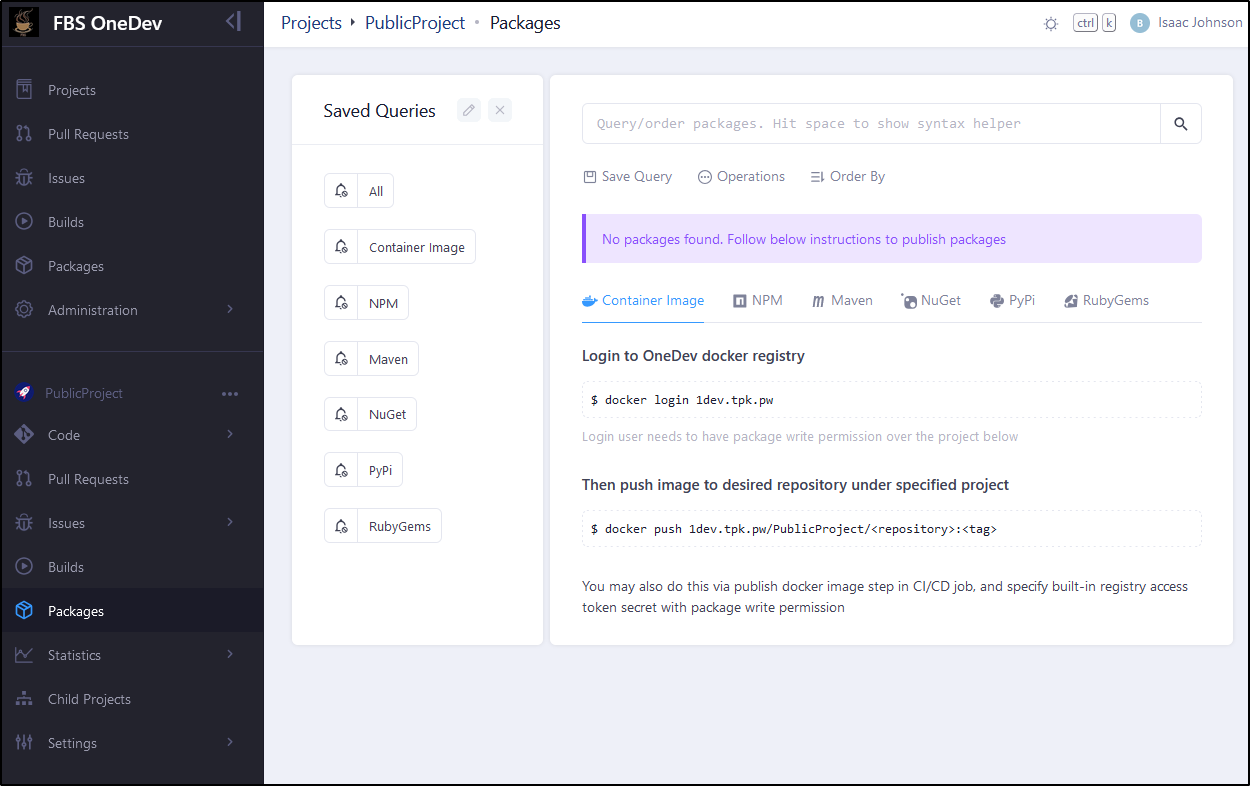

We should be able to push packages using the steps provided in Packages under our Project

I could not get the docker step to work.

I tried building some zip files to push, but even a small 27 MB file was rejected:

17:02:50 /onedev-build/workspace

17:02:50 + ls -ltrah

17:02:50 total 27M

17:02:50 drwxr-xr-x 5 root root 4.0K Nov 17 23:02 ..

17:02:50 drwxr-xr-x 3 root root 4.0K Nov 17 23:02 alpinelatest

17:02:50 -rw-r--r-- 1 root root 27M Nov 17 23:02 alpinelatest.zip

17:02:50 drwxr-xr-x 3 root root 4.0K Nov 17 23:02 .

17:02:50 + which docker

17:02:50 + which podman

17:02:50 /usr/bin/podman

17:02:50 + whoami

17:02:50 root

17:02:50 + uname -a

17:02:50 Linux 0049a63c2eb6 6.8.0-48-generic #48~22.04.1-Ubuntu SMP PREEMPT_DYNAMIC Mon Oct 7 11:24:13 UTC 2 x86_64 x86_64 x86_64 GNU/Linux

17:02:50 + mkdir -p doc

17:02:50 + echo test

17:02:50 + echo '<html><body>My Test</body></html>'

17:02:51 Step "debug" is successful (25 seconds)

17:02:51 Running step "publish artifact"...

17:02:52 java.lang.RuntimeException: Http request failed (status code: 413, error message: <html>

<head><title>413 Request Entity Too Large</title></head>

<body>

<center><h1>413 Request Entity Too Large</h1></center>

<hr><center>nginx/1.25.4</center>

</body>

</html>

)

at io.onedev.k8shelper.KubernetesHelper.checkStatus(KubernetesHelper.java:342)

at io.onedev.k8shelper.KubernetesHelper.runServerStep(KubernetesHelper.java:853)

at io.onedev.k8shelper.KubernetesHelper.runServerStep(KubernetesHelper.java:812)

at io.onedev.agent.AgentSocket$4.doExecute(AgentSocket.java:797)

at io.onedev.agent.AgentSocket$4.lambda$execute$1(AgentSocket.java:673)

at io.onedev.agent.ExecutorUtils.runStep(ExecutorUtils.java:100)

at io.onedev.agent.AgentSocket$4.execute(AgentSocket.java:670)

at io.onedev.k8shelper.LeafFacade.execute(LeafFacade.java:12)

at io.onedev.k8shelper.CompositeFacade.execute(CompositeFacade.java:35)

at io.onedev.agent.AgentSocket.executeDockerJob(AgentSocket.java:585)

at io.onedev.agent.AgentSocket.service(AgentSocket.java:973)

at io.onedev.agent.AgentSocket.lambda$onMessage$2(AgentSocket.java:212)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

at java.base/java.lang.Thread.run(Thread.java:829)


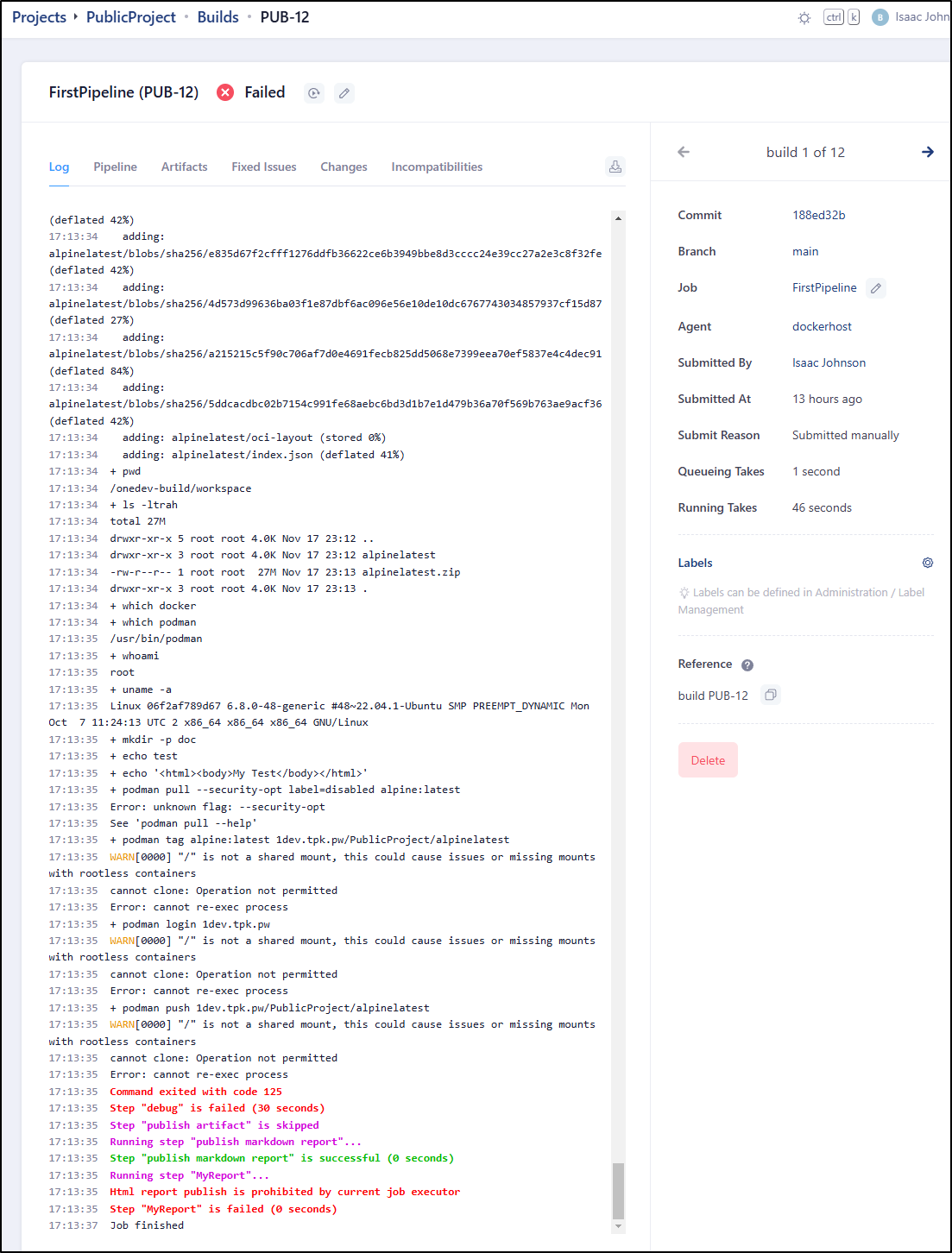

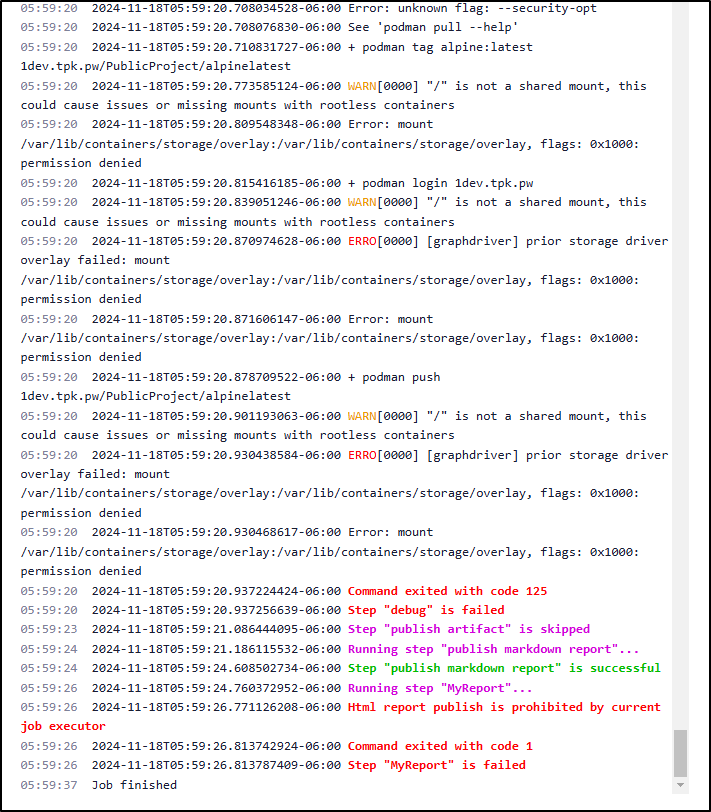

As you can see, I tried a few things but could not get the Docker or Podman steps to work, nor the artifact upload:

```yaml
version: 37
jobs:
- name: FirstPipeline
  steps:
  - !CommandStep
    name: whoami
    runInContainer: true
    image: ubuntu:latest
    interpreter: !ShellInterpreter
      shell: bash
      commands: |
        whoami
    useTTY: true
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !PullImageStep
    name: aliplinelatest
    srcImage: alpine:latest
    destPath: ./alpinelatest
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !CommandStep
    name: debug
    runInContainer: true
    image: ubuntu:latest
    interpreter: !ShellInterpreter
      shell: bash
      commands: |
        set -x
        apt update
        DEBIAN_FRONTEND=noninteractive apt install -y zip podman
        zip -r alpinelatest.zip alpinelatest
        pwd
        ls -ltrah
        which docker
        which podman
        whoami
        uname -a
        mkdir -p doc
        echo "test" > doc/test.md
        echo "<html><body>My Test</body></html>" > doc.html
        podman pull --security-opt label=disabled alpine:latest
        podman tag alpine:latest 1dev.tpk.pw/PublicProject/alpinelatest
        podman login 1dev.tpk.pw
        podman push 1dev.tpk.pw/PublicProject/alpinelatest
    useTTY: true
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !PublishArtifactStep
    name: publish artifact
    artifacts: '*.zip'
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !PublishMarkdownReportStep
    name: publish markdown report
    reportName: Incompatibilities
    filePatterns: doc/**
    startPage: doc/test.md
    condition: ALWAYS
  - !PublishHtmlReportStep
    name: MyReport
    reportName: MyReport
    filePatterns: '*.html'
    startPage: doc.html
    condition: ALWAYS
  retryCondition: never
  maxRetries: 3
  retryDelay: 30
  timeout: 14400
```

I decided to disable my job executor and turn off its branch requirement; perhaps this works better in OneDev itself than on an agent.

I found that it just sat there waiting for an executor.

I removed the dockerhost executor, and it then seemed to fall back on the Kubernetes one, though that too has storage overlay issues.

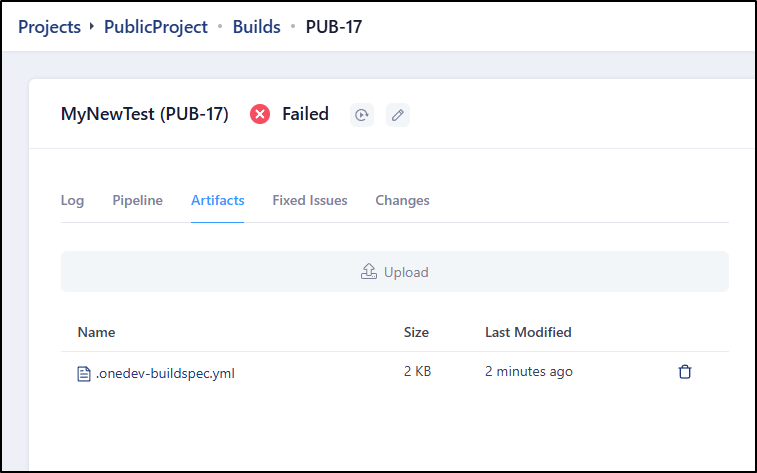

However, if I push just a small file, like a YAML file, it works with no issue:

06:29:25 Step "TestPush" is skipped

06:29:25 Running step "TestArtifacts"...

06:29:25 Step "TestArtifacts" is successful (0 seconds)

And I can see the file there

Multiple Jobs in a pipeline

Say that we want more than one manual job in a pipeline, perhaps a specific QA test or a secondary manual publish.

Our build spec can contain any number of jobs, all within the same file (this differs from Azure DevOps or Jenkins, where a file is a pipeline):

```yaml
version: 37
jobs:
- name: FirstPipeline
  steps:
  - !CommandStep
    name: whoami
    runInContainer: true
    image: ubuntu:latest
    interpreter: !ShellInterpreter
      shell: bash
      commands: |
        whoami
    useTTY: true
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !PullImageStep
    name: aliplinelatest
    srcImage: alpine:latest
    destPath: ./alpinelatest
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !CommandStep
    name: debug
    runInContainer: true
    image: ubuntu:latest
    interpreter: !ShellInterpreter
      shell: bash
      commands: |
        set -x
        apt update
        DEBIAN_FRONTEND=noninteractive apt install -y zip podman
        zip -r alpinelatest.zip alpinelatest
        pwd
        ls -ltrah
        which docker
        which podman
        whoami
        uname -a
        mkdir -p doc
        echo "test" > doc/test.md
        echo "<html><body>My Test</body></html>" > doc.html
        podman pull --security-opt label=disabled alpine:latest
        podman tag alpine:latest 1dev.tpk.pw/PublicProject/alpinelatest
        podman login 1dev.tpk.pw
        podman push 1dev.tpk.pw/PublicProject/alpinelatest
    useTTY: true
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !PublishArtifactStep
    name: publish artifact
    artifacts: '*.zip'
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !PublishMarkdownReportStep
    name: publish markdown report
    reportName: Incompatibilities
    filePatterns: doc/**
    startPage: doc/test.md
    condition: ALWAYS
  - !PublishHtmlReportStep
    name: MyReport
    reportName: MyReport
    filePatterns: '*.html'
    startPage: doc.html
    condition: ALWAYS
  retryCondition: never
  maxRetries: 3
  retryDelay: 30
  timeout: 14400
- name: MyNewTest
  steps:
  - !CheckoutStep
    name: checkoutcode
    cloneCredential: !DefaultCredential {}
    withLfs: false
    withSubmodules: false
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !PullImageStep
    name: TestPull
    srcImage: alpine:latest
    destPath: ./alpinelatest
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !CommandStep
    name: Check
    runInContainer: false
    interpreter: !DefaultInterpreter
      commands: |
        pwd
        ls -ltra
        ls -ltra ./alpinelatest
    useTTY: true
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  - !PushImageStep
    name: TestPush
    srcPath: ./alpinelatest  # must match destPath of the TestPull step
    destImage: 1dev.tpk.pw/PublicProject/alpinelatest
    condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL
  retryCondition: never
  maxRetries: 3
  retryDelay: 30
  timeout: 14400
```

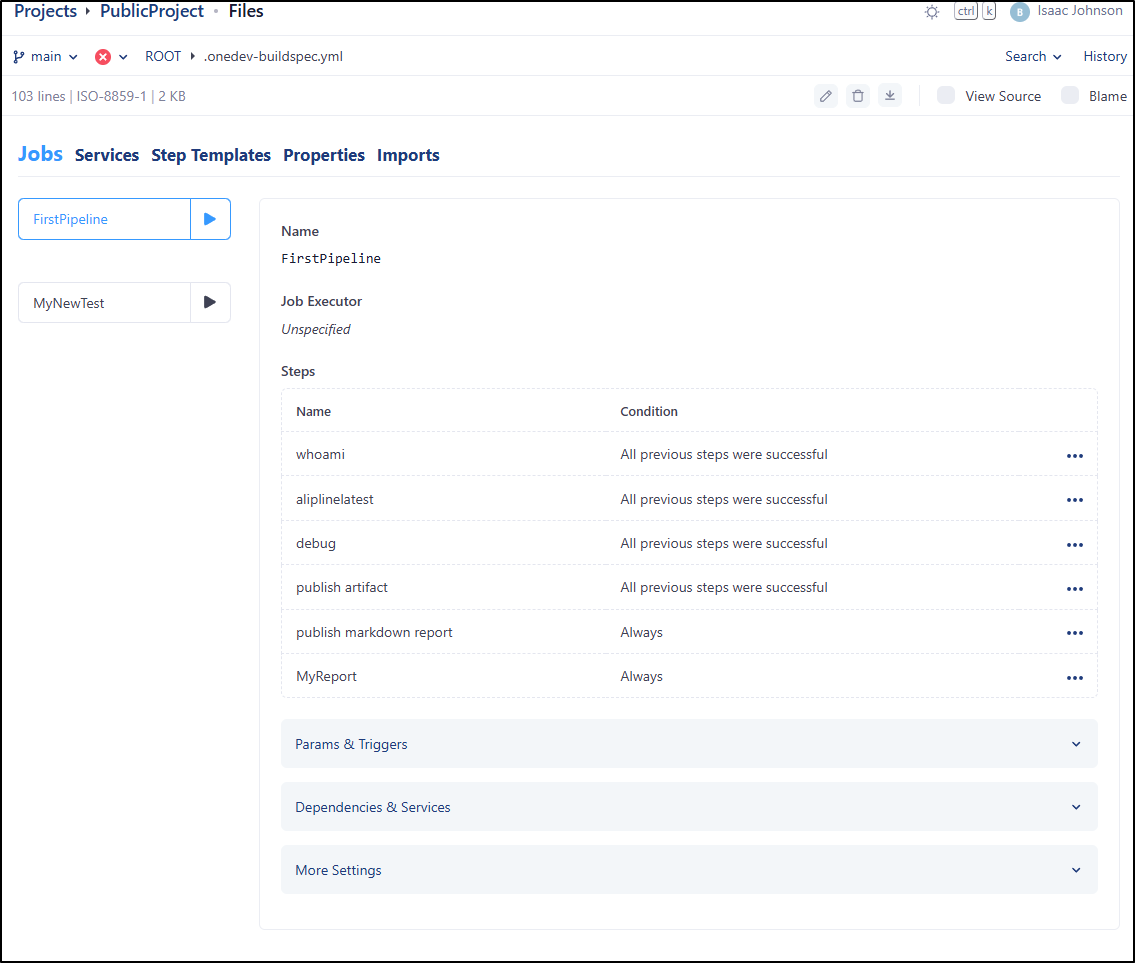

Now when I go to build, I can choose “FirstPipeline” or “MyNewTest” using the above example
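Because every job lives in the one build spec file, a quick way to see which jobs a file defines is to pick out the top-level `- name:` entries. In the specs above, steps start with `- !SomeStep`, and their `name:` line has no leading dash, so only jobs match. Below is a minimal sketch with a hypothetical helper. It relies on that layout rather than on a real YAML parser, since OneDev's custom tags such as `!CommandStep` would need extra handling in one.

```python
import re
import sys
from typing import List

# Assumption: jobs are list items at column 0 ("- name: X") under "jobs:",
# while steps are "- !StepType" items whose "name:" line has no dash.
JOB_LINE = re.compile(r"^- name: (.+)$", re.MULTILINE)


def job_names(buildspec_text: str) -> List[str]:
    return [name.strip() for name in JOB_LINE.findall(buildspec_text)]


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as fh:
        print(job_names(fh.read()))
```

Pointed at a repo’s `.onedev-buildspec.yml` containing the spec above, it should list FirstPipeline and MyNewTest, matching the choices in the build dialog.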

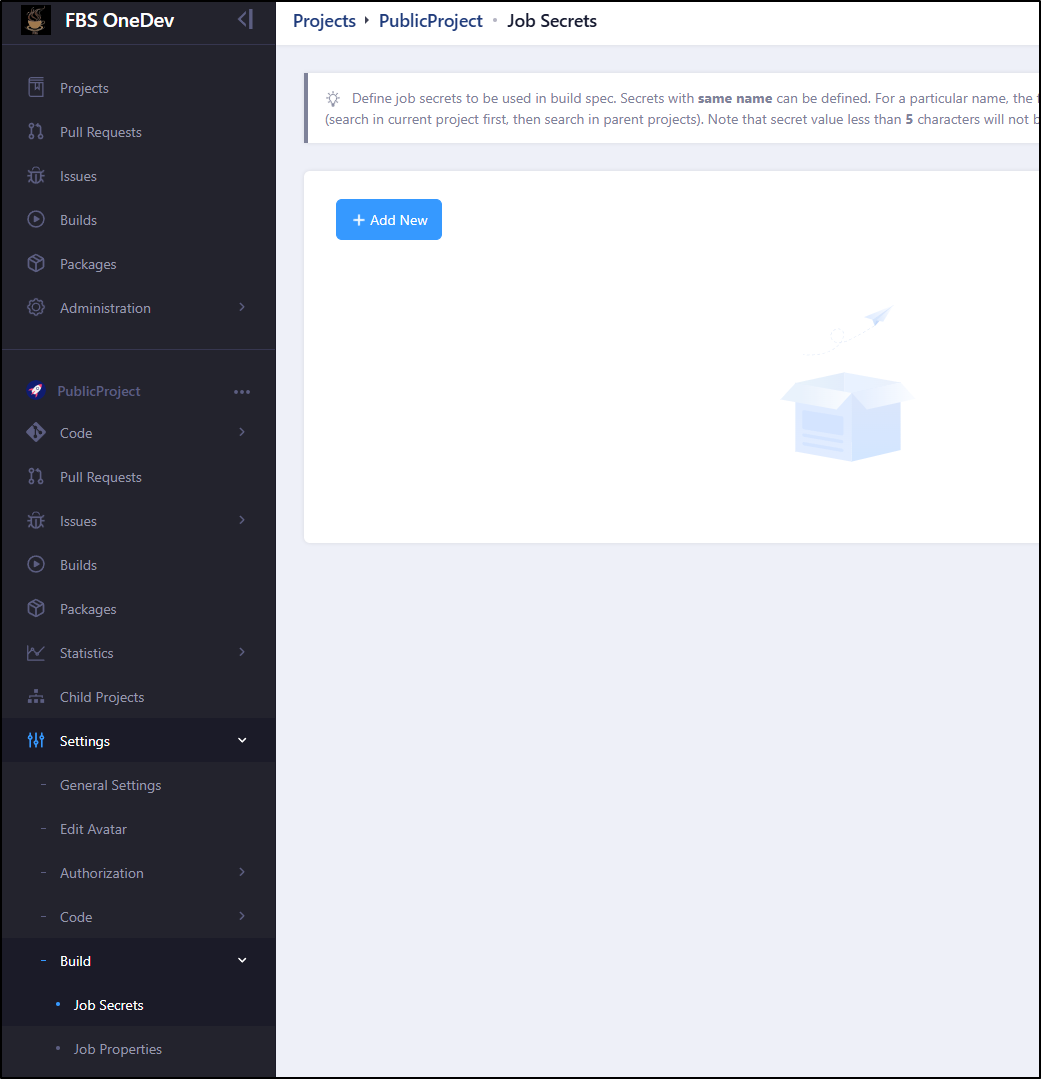

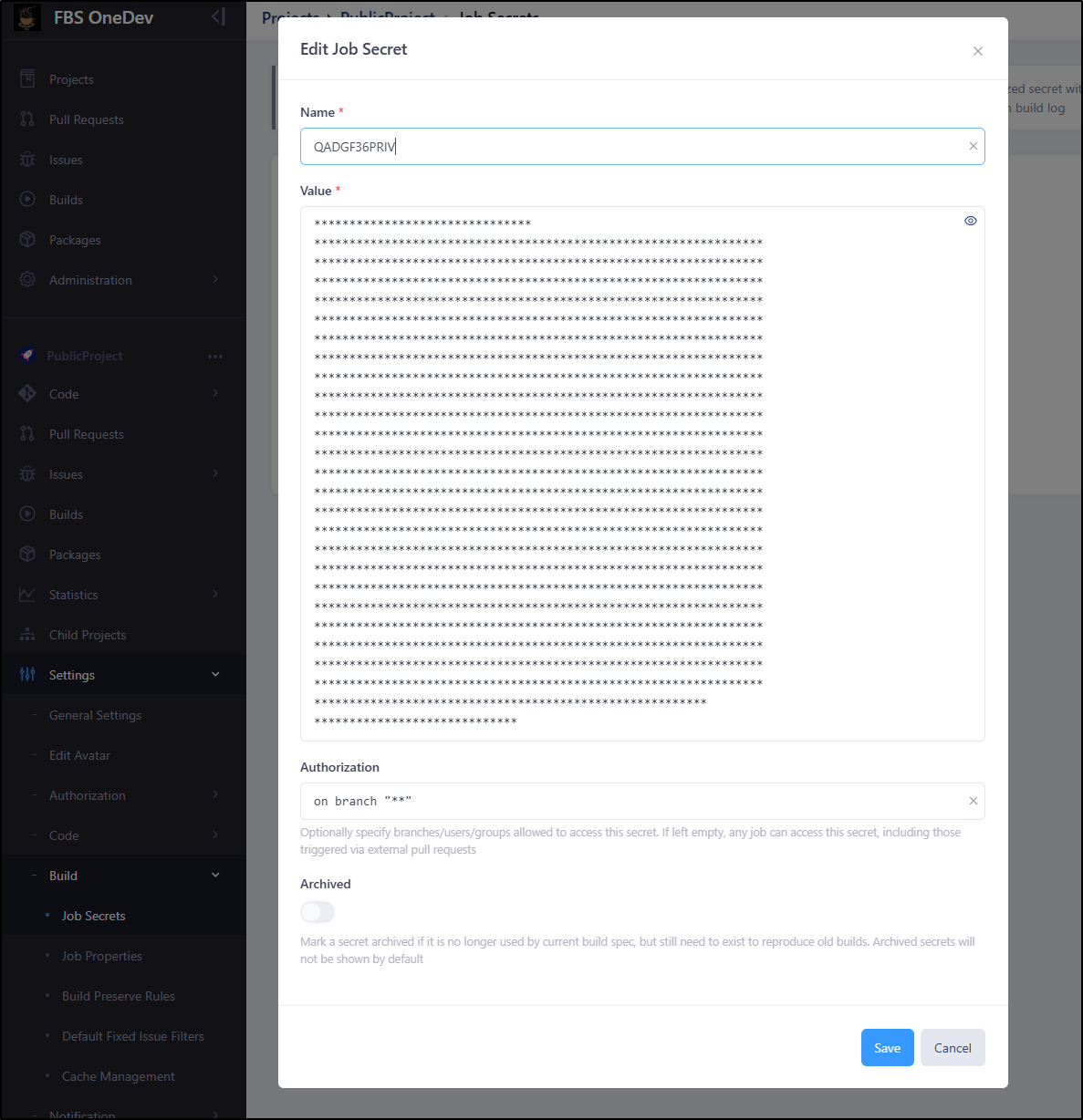

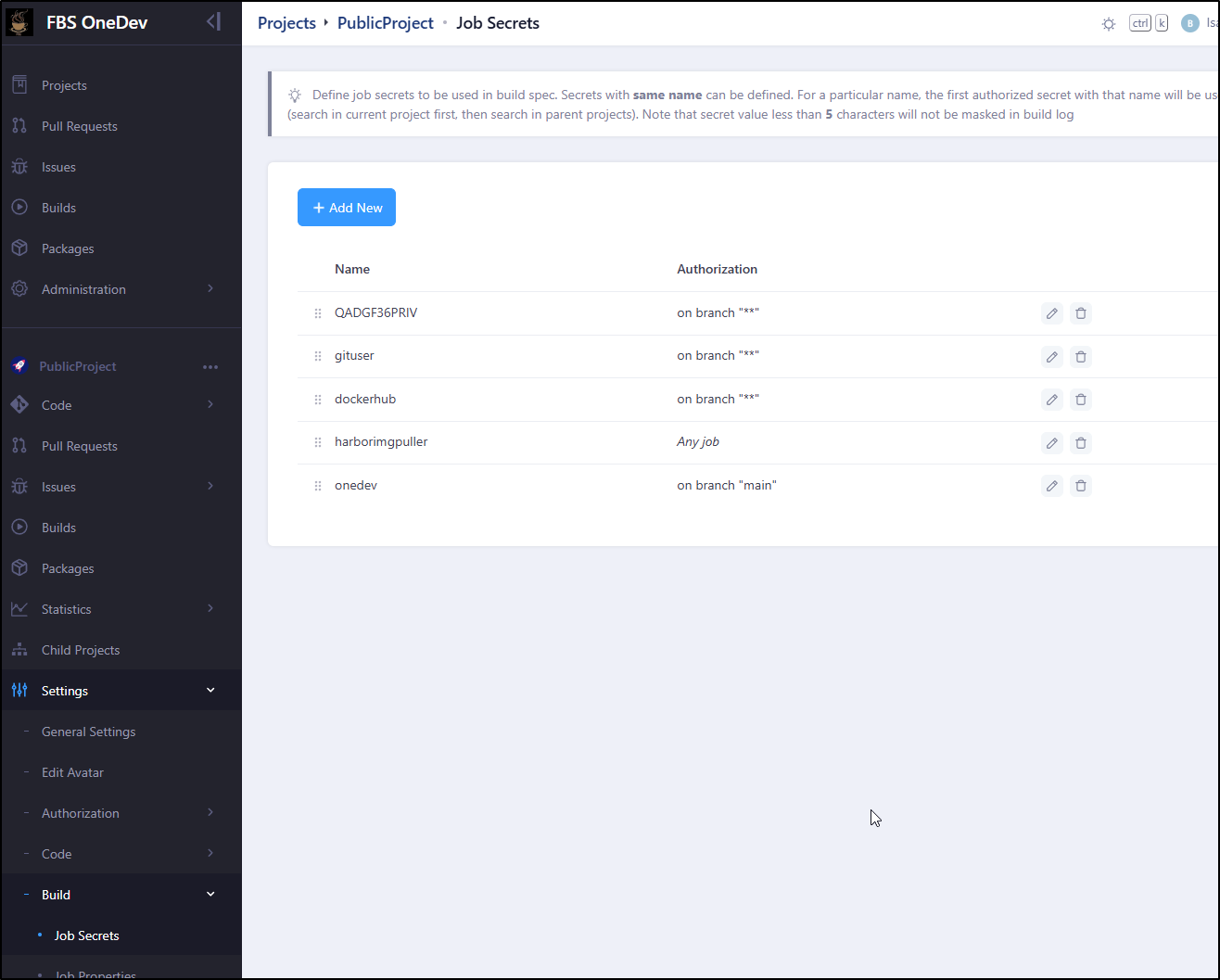

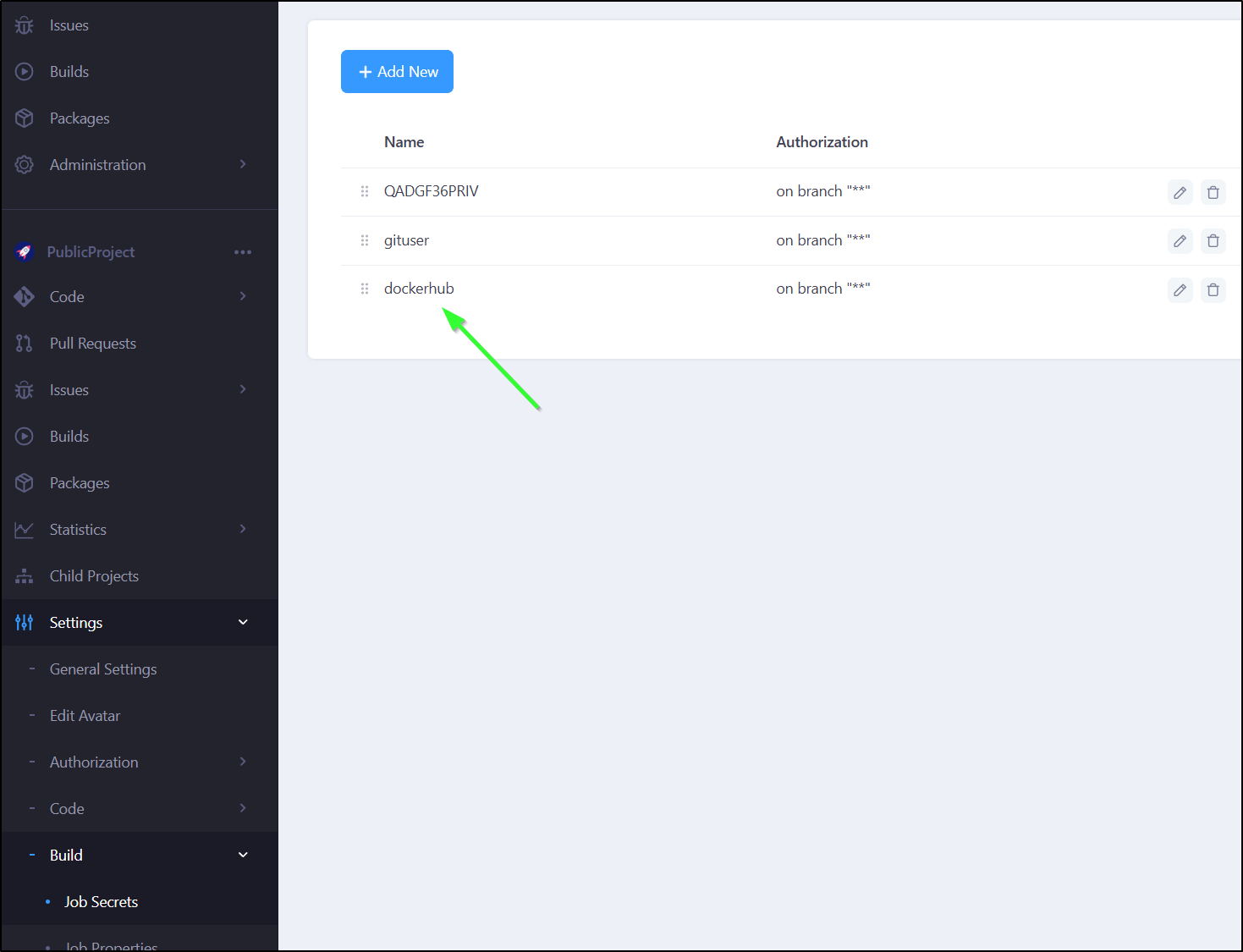

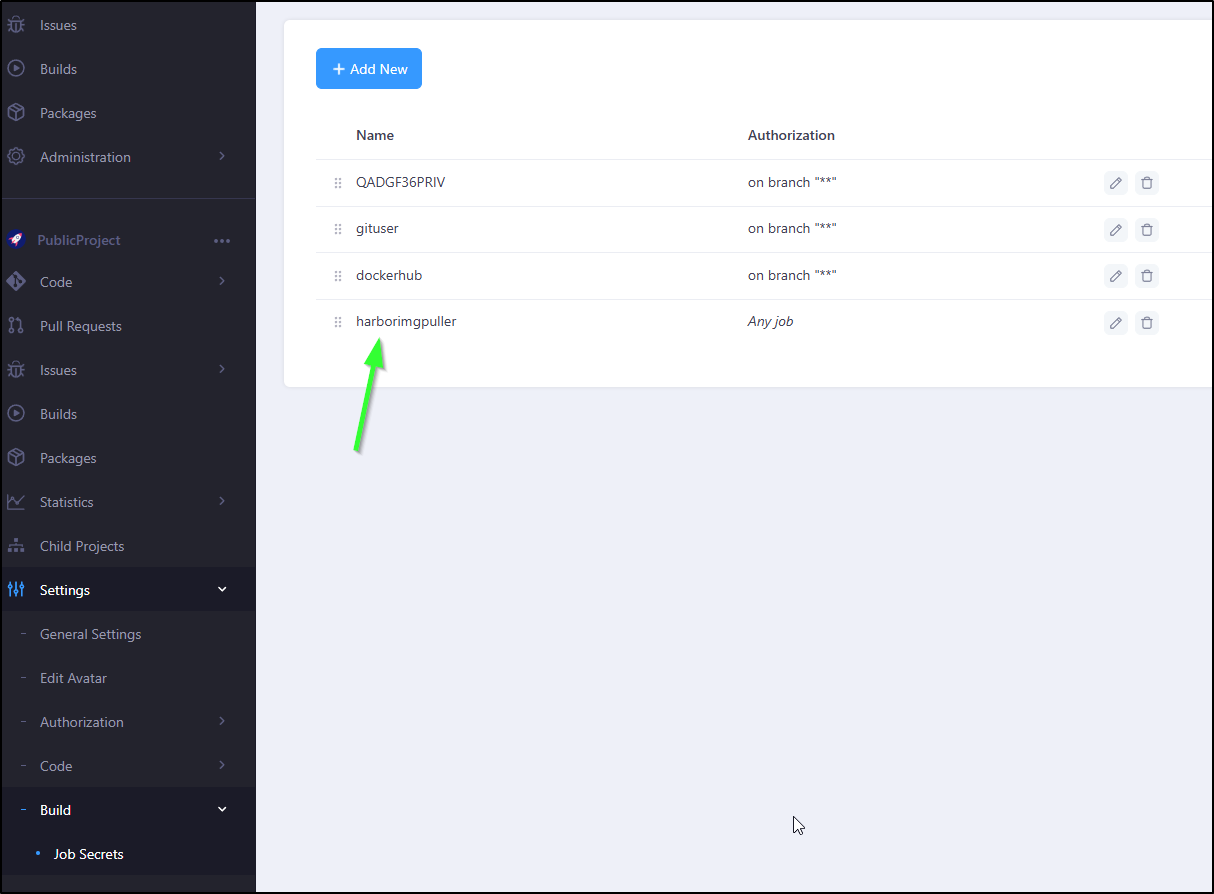

Job Secrets

Let’s say you want to have a secret in a pipeline. You certainly would not commit that to code.

Like other CI/CD tools, OneDev has job secrets, which we can find under “Settings/Build/Job Secrets”:

I like that it masks the value by default, which would be helpful for pairing sessions (a little eye icon in the upper right shows the value in plain text).

Solving Upload Issues

Even with proper settings, I was still seeing the 413 Request Entity Too Large error:

06:45:38 INFO[0002] Taking snapshot of full filesystem...

06:45:38 INFO[0002] Pushing image to 1dev.tpk.pw/publicproject/mytest:0.0.1

06:45:38 error pushing image: failed to push to destination 1dev.tpk.pw/publicproject/mytest:0.0.1: PATCH https://1dev.tpk.pw/v2/publicproject/mytest/blobs/uploads/23706bcc-0b22-4332-b059-114c4f1e8f9c: unexpected status code 413 Request Entity Too Large: <html>

06:45:38 <head><title>413 Request Entity Too Large</title></head>

06:45:38 <body>

06:45:38 <center><h1>413 Request Entity Too Large</h1></center>

06:45:38 <hr><center>nginx/1.25.4</center>

06:45:38 </body>

06:45:38 </html>

06:45:38

06:45:39 Command exited with code 1

06:45:39 Step "TestBuildNPush" is failed (5 seconds)

An issue thread suggested the problem was not OneDev’s service (container), but rather the NGINX front door.

I updated the NGINX annotations on our ingress accordingly, from:

$ kubectl get ingress -n onedev -o yaml

```yaml
apiVersion: v1
items:
- apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    annotations:
      cert-manager.io/cluster-issuer: azuredns-tpkpw
      kubectl.kubernetes.io/last-applied-configuration: |
        {"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"annotations":{"cert-manager.io/cluster-issuer":"azuredns-tpkpw","kubernetes.io/ingress.class":"nginx","kubernetes.io/tls-acme":"true","nginx.ingress.kubernetes.io/proxy-read-timeout":"3600","nginx.ingress.kubernetes.io/proxy-send-timeout":"3600","nginx.org/websocket-services":"onedev"},"creationTimestamp":"2024-11-16T14:24:42Z","generation":1,"name":"1devingress","namespace":"onedev","resourceVersion":"41739849","uid":"1168daf8-c006-47d3-82e3-05d30cb39c2a"},"spec":{"rules":[{"host":"1dev.tpk.pw","http":{"paths":[{"backend":{"service":{"name":"onedev","port":{"number":80}}},"path":"/","pathType":"ImplementationSpecific"}]}}],"tls":[{"hosts":["1dev.tpk.pw"],"secretName":"1dev-tls"}]},"status":{"loadBalancer":{}}}
      kubernetes.io/ingress.class: nginx
      kubernetes.io/tls-acme: "true"
      nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
      nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
      nginx.org/websocket-services: onedev
    creationTimestamp: "2024-11-16T23:06:19Z"
    generation: 1
    name: 1devingress
    namespace: onedev
    resourceVersion: "41804597"
    uid: 974ae410-9a7d-41b8-bf5e-84c7ed82c966
  spec:
    rules:
    - host: 1dev.tpk.pw
      http:
        paths:
        - backend:
            service:
              name: onedev
              port:
                number: 80
          path: /
          pathType: ImplementationSpecific
    tls:
    - hosts:
      - 1dev.tpk.pw
      secretName: 1dev-tls
  status:
    loadBalancer: {}
kind: List
metadata:
  resourceVersion: ""
```

to a version with a body size of “0” (no limit), something I have to do for Harbor as well:

builder@DESKTOP-QADGF36:~$ cat updated.ondevingress.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/proxy-body-size: "0"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/proxy-connect-timeout: "3600"
    nginx.org/proxy-read-timeout: "3600"
    nginx.org/websocket-services: onedev
  name: 1devingress
  namespace: onedev
spec:
  rules:
  - host: 1dev.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: onedev
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - 1dev.tpk.pw
    secretName: 1dev-tls
```

I then deleted and re-added it to force the change to take effect:

builder@DESKTOP-QADGF36:~$ kubectl delete -f ./updated.ondevingress.yaml

ingress.networking.k8s.io "1devingress" deleted

builder@DESKTOP-QADGF36:~$ kubectl apply -f ./updated.ondevingress.yaml

ingress.networking.k8s.io/1devingress created

builder@DESKTOP-QADGF36:~$ kubectl get ingress -n onedev

NAME CLASS HOSTS ADDRESS PORTS AGE

1devingress <none> 1dev.tpk.pw 80, 443 6s

However, even with the body size limit raised, the push still fails:

07:22:34 INFO[0002] Pushing image to 1dev.tpk.pw/publicproject/mytest:0.0.1

07:22:34 error pushing image: failed to push to destination 1dev.tpk.pw/publicproject/mytest:0.0.1: PATCH https://1dev.tpk.pw/v2/publicproject/mytest/blobs/uploads/d9e9b98e-def1-41e2-832e-9e6eba93c4e7: unexpected status code 413 Request Entity Too Large: <html>

07:22:34 <head><title>413 Request Entity Too Large</title></head>

07:22:34 <body>

07:22:34 <center><h1>413 Request Entity Too Large</h1></center>

07:22:34 <hr><center>nginx/1.25.4</center>

07:22:34 </body>

07:22:34 </html>
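This hypothesis is not verified here, but it is worth checking which ingress controller actually serves this Ingress. The `nginx.ingress.kubernetes.io/*` annotations are read by the community ingress-nginx controller. The `nginx.org/*` annotations, such as `nginx.org/websocket-services`, belong to NGINX Inc’s controller, which takes its body-size limit from `nginx.org/client-max-body-size` and ignores `proxy-body-size`. nginx’s own default `client_max_body_size` is 1m, which would be consistent with even the 27 MB artifact being rejected. Another nginx in front of the cluster would produce the same error, though. `kubectl get ingressclass` shows which controllers are installed. The minimal sketch below reports which body-size value each of the two controllers would see; the mapping covers only these two.

```python
from typing import Dict, Optional

# Annotation each controller reads for the request body size limit.
BODY_SIZE_ANNOTATION = {
    "ingress-nginx (kubernetes/ingress-nginx)": "nginx.ingress.kubernetes.io/proxy-body-size",
    "NGINX Inc (nginxinc/kubernetes-ingress)": "nginx.org/client-max-body-size",
}


def body_size_by_controller(annotations: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Map each controller to the body-size value it would see (None = not set)."""
    return {ctrl: annotations.get(key) for ctrl, key in BODY_SIZE_ANNOTATION.items()}


if __name__ == "__main__":
    # Annotations from the updated ingress manifest above (timeouts omitted).
    annotations = {
        "ingress.kubernetes.io/proxy-body-size": "0",
        "nginx.ingress.kubernetes.io/proxy-body-size": "0",
        "nginx.org/websocket-services": "onedev",
    }
    for ctrl, value in body_size_by_controller(annotations).items():
        print(f"{ctrl}: {value if value is not None else 'not set'}")
```

If the controller turns out to be NGINX Inc’s, adding `nginx.org/client-max-body-size: "0"` to the annotations would be the equivalent setting. This has not been tested here.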

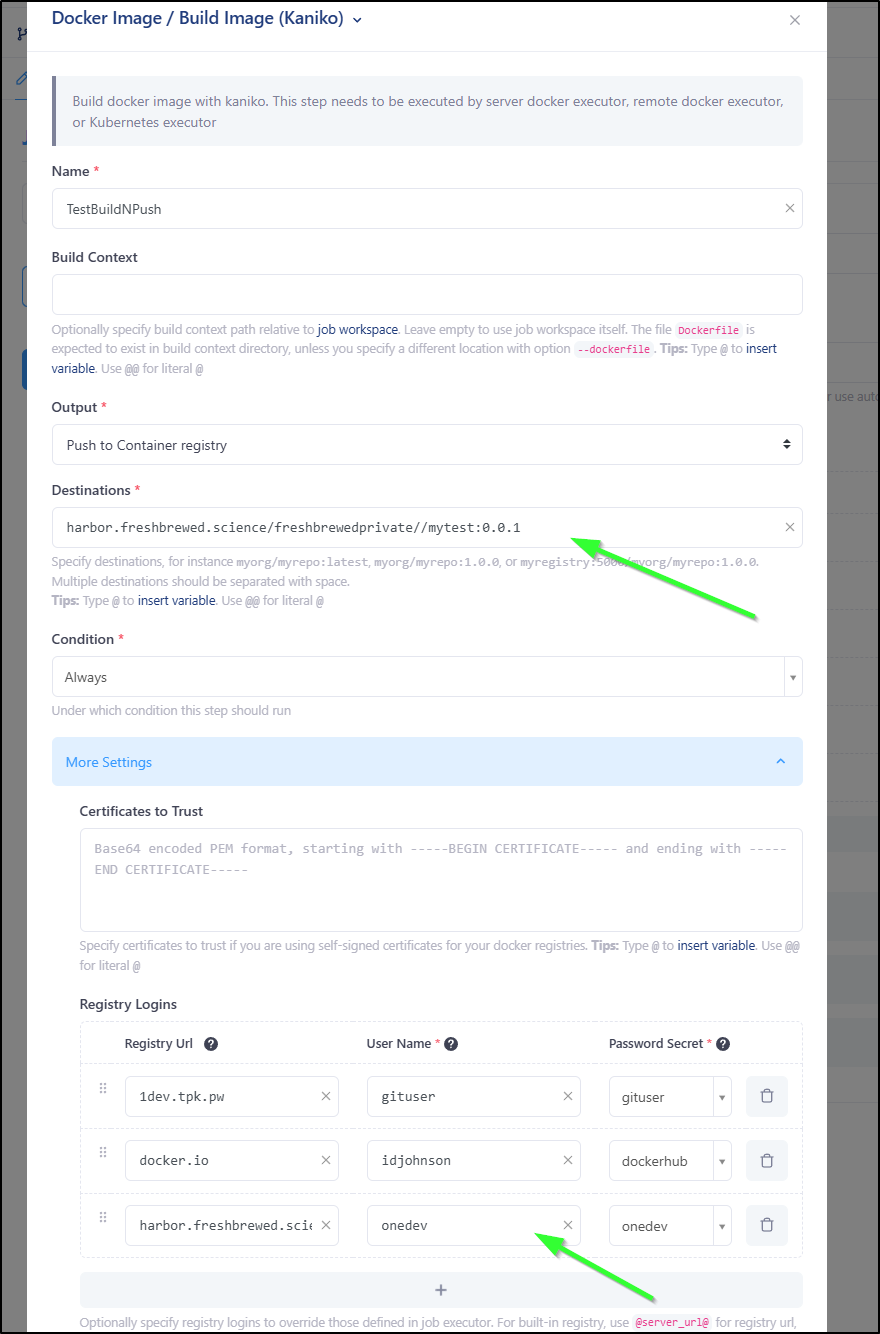

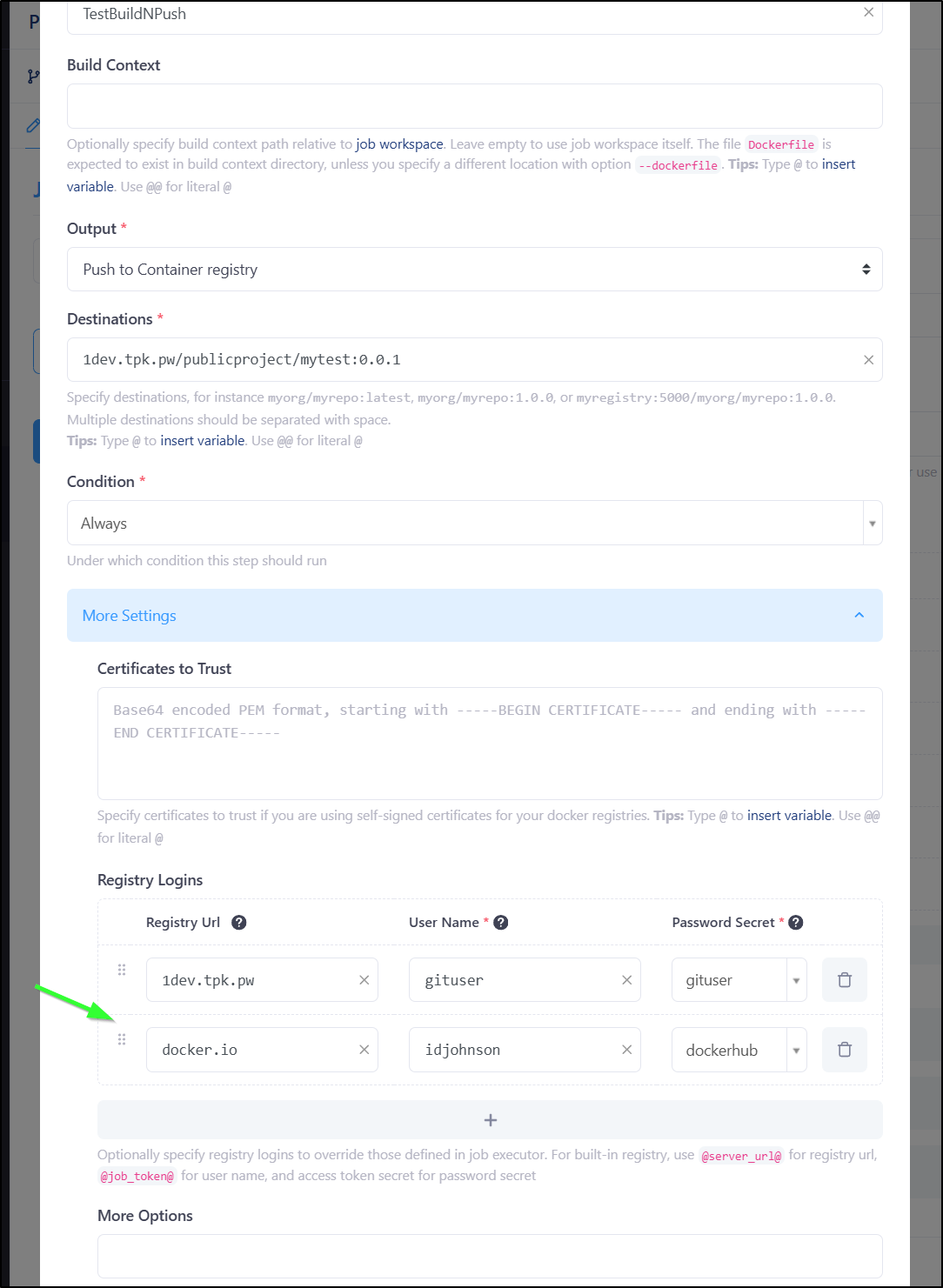

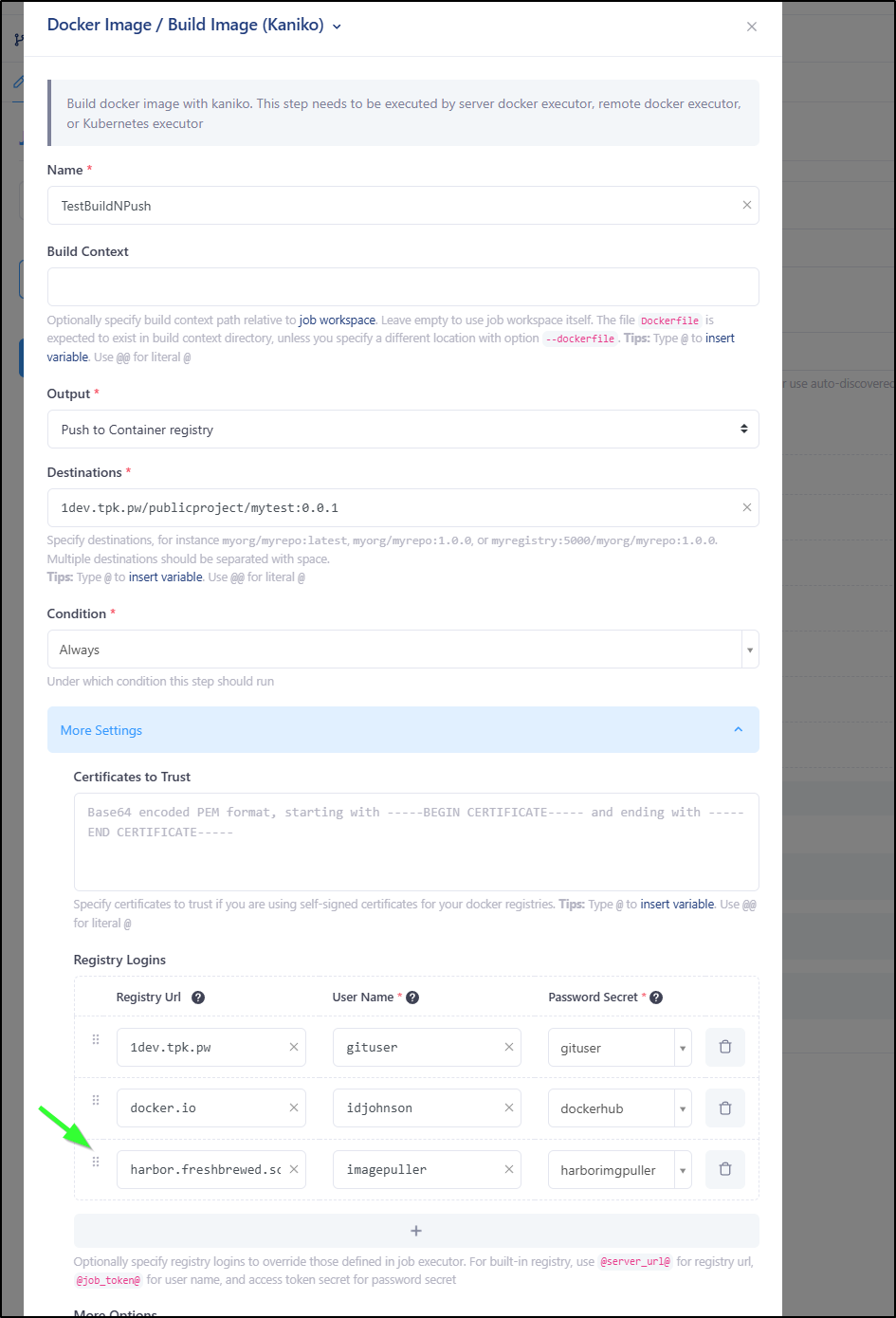

I’m going to try and push to my Harbor CR instead

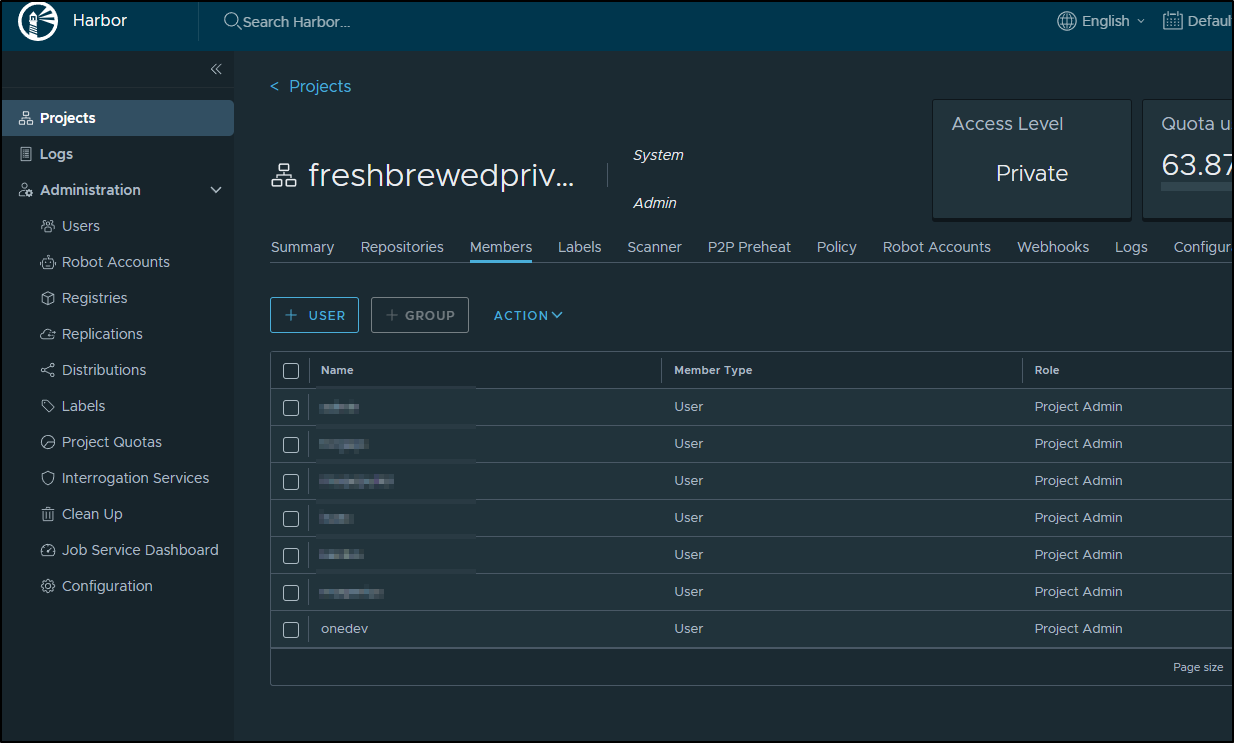

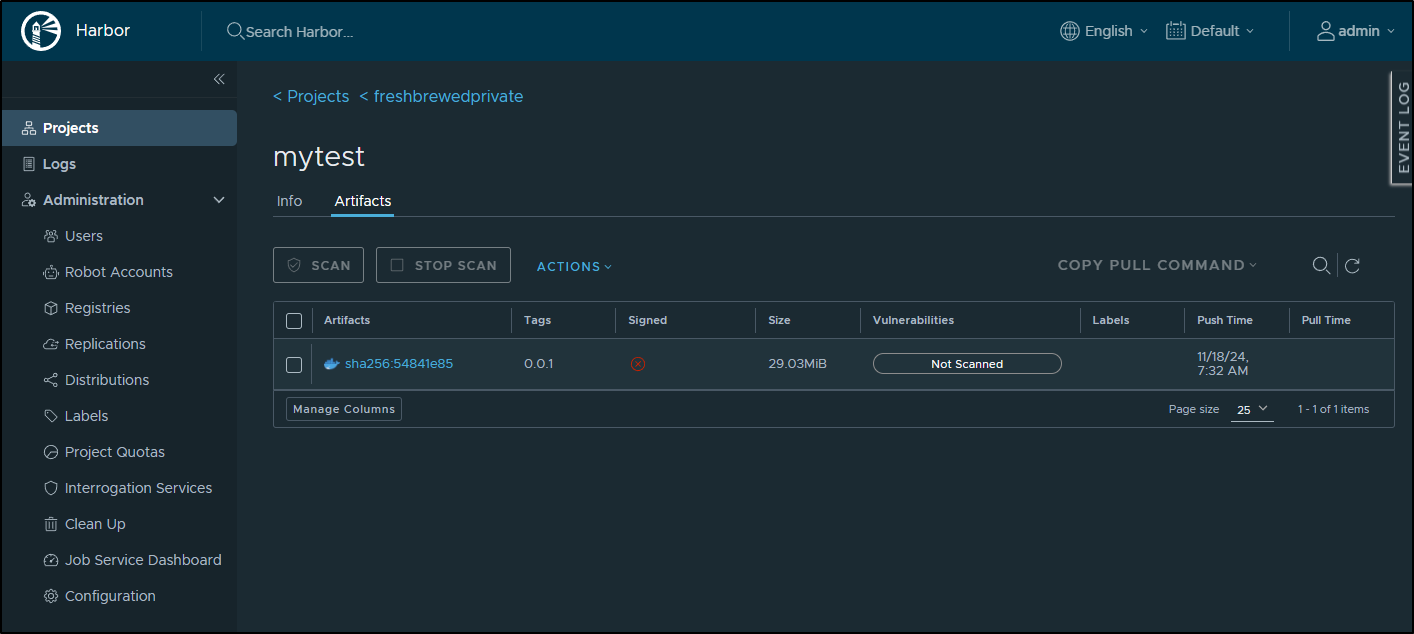

I need to create a user in Harbor that OneDev can use, and add it to the private registry with push permissions.

I’ll add the password to our job secrets (this one is limited to main).

I’ll now update my destination to use Harbor and set (or replace) the existing harbor registry login

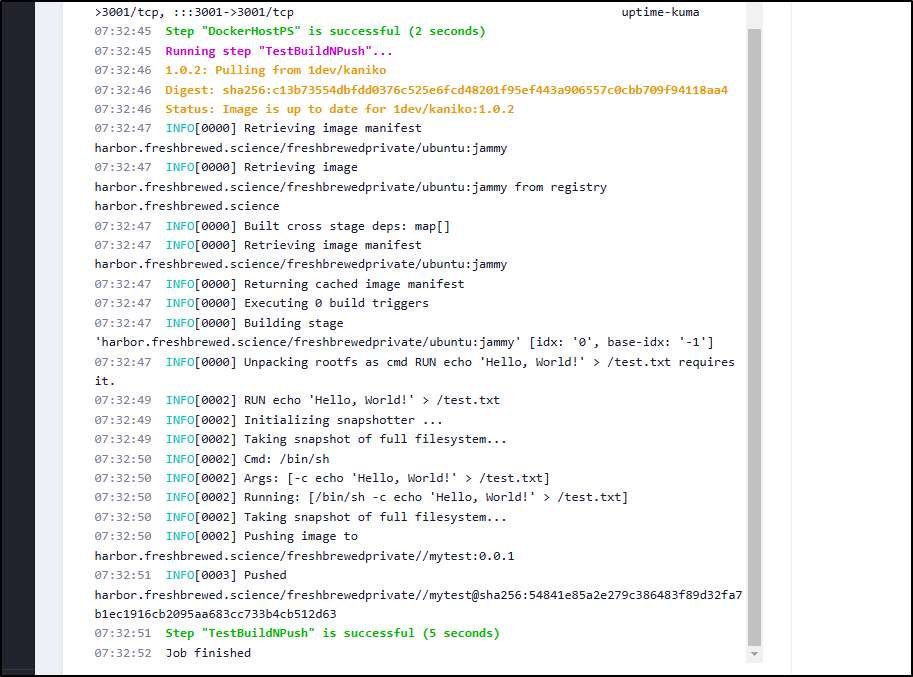

This worked, and I can see “mytest” in Harbor.

Using a local registry

You may find, rather quickly, that you bump up against the Docker Hub anonymous pull rate limit:

07:04:07 Running step "TestBuildNPush"...

07:04:08 1.0.2: Pulling from 1dev/kaniko

07:04:08 Digest: sha256:c13b73554dbfdd0376c525e6fcd48201f95ef443a906557c0cbb709f94118aa4

07:04:08 Status: Image is up to date for 1dev/kaniko:1.0.2

07:04:09 INFO[0000] Retrieving image manifest ubuntu:20.04

07:04:09 INFO[0000] Retrieving image ubuntu:20.04 from registry index.docker.io

07:04:09 error building image: unable to complete operation after 0 attempts, last error: GET https://index.docker.io/v2/library/ubuntu/manifests/20.04: TOOMANYREQUESTS: You have reached your pull rate limit. You may increase the limit by authenticating and upgrading: https://www.docker.com/increase-rate-limit
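Anonymous Docker Hub limits are counted per source IP, and pods in a cluster typically leave through the same node or NAT address, so a few builds can use up the allowance quickly. Docker documents a way to see where you stand: fetch a token for the `ratelimitpreview/test` repository, send a HEAD request for its manifest, and read the `ratelimit-limit` and `ratelimit-remaining` headers. The sketch below follows that procedure with the stdlib. The headers may be absent for accounts without a limit, so both values are optional.

```python
import base64
import json
import urllib.request
from typing import Optional, Tuple

TOKEN_URL = ("https://auth.docker.io/token?service=registry.docker.io"
             "&scope=repository:ratelimitpreview/test:pull")
MANIFEST_URL = "https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest"

Limit = Tuple[int, int]  # (count, window in seconds)


def parse_ratelimit(value: str) -> Limit:
    """Split a header such as '100;w=21600' into (100, 21600)."""
    count, _, window = value.partition(";w=")
    return int(count), (int(window) if window else 0)


def docker_hub_pull_limit(user: Optional[str] = None,
                          password: Optional[str] = None) -> Tuple[Optional[Limit], Optional[Limit]]:
    """Return (limit, remaining); pass credentials to check an authenticated account."""
    token_req = urllib.request.Request(TOKEN_URL)
    if user and password:
        creds = base64.b64encode(f"{user}:{password}".encode()).decode()
        token_req.add_header("Authorization", f"Basic {creds}")
    with urllib.request.urlopen(token_req, timeout=30) as resp:
        token = json.load(resp)["token"]
    # A HEAD request, which Docker's docs describe as not counting as a pull.
    head = urllib.request.Request(MANIFEST_URL, method="HEAD")
    head.add_header("Authorization", f"Bearer {token}")
    with urllib.request.urlopen(head, timeout=30) as resp:
        limit = resp.headers.get("ratelimit-limit")
        remaining = resp.headers.get("ratelimit-remaining")
    return (parse_ratelimit(limit) if limit else None,
            parse_ratelimit(remaining) if remaining else None)
```

Running it from the cluster nodes (rather than your laptop) shows the budget that Kaniko pulls actually draw from.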

One way to solve this is to log in to Docker Hub. I’ll create a password for my Docker Hub user.

Then I’ll add a Docker Hub registry login to the Build and Push step.

However, at least for me, I was still blocked, even after changing the Dockerfile to use docker.io/ubuntu:20.04.
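One possible explanation, which I have not verified against OneDev’s Kaniko step, is the registry key. Kaniko reads credentials from a Docker-style config.json (its image sets DOCKER_CONFIG to /kaniko/.docker). The go-containerregistry keychain it relies on looks up Docker Hub credentials under the legacy key `https://index.docker.io/v1/`, and the log above does say “from registry index.docker.io”. If a login ends up stored only under `docker.io`, Kaniko may still pull anonymously. If you assemble the config yourself, a hedged workaround is to write the same credential under both keys:

```python
import base64
import json

# Assumed lookup keys: the legacy Docker Hub key used by go-containerregistry,
# plus the short form a UI might store the login under.
DOCKER_HUB_KEYS = ("https://index.docker.io/v1/", "docker.io")


def docker_config(user: str, password: str) -> str:
    """Return config.json text holding one Docker Hub login under both keys."""
    auth = base64.b64encode(f"{user}:{password}".encode()).decode()
    return json.dumps({"auths": {key: {"auth": auth} for key in DOCKER_HUB_KEYS}}, indent=2)
```

The output would go to /kaniko/.docker/config.json when running Kaniko directly. Whether OneDev lets you influence that file is a separate question.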

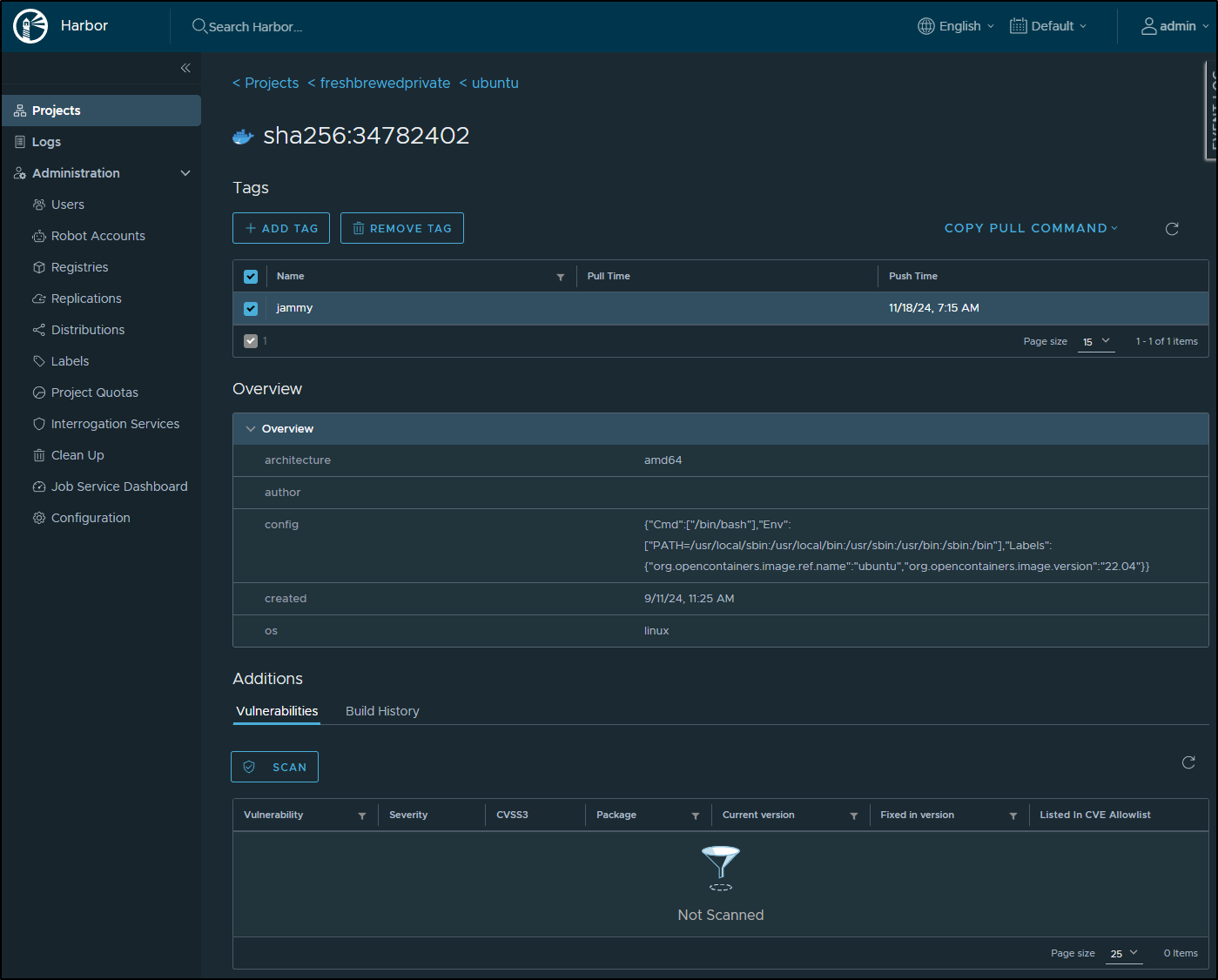

Next, I’ll push a base image to Harbor, my private CR, to see if I can work around this.

builder@DESKTOP-QADGF36:~$ docker tag ubuntu:jammy harbor.freshbrewed.science/freshbrewedprivate/ubuntu:jammy

builder@DESKTOP-QADGF36:~$ docker push harbor.freshbrewed.science/freshbrewedprivate/ubuntu:jammy

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/ubuntu]

2573e0d81582: Pushed

jammy: digest: sha256:34782402df238275b0bd100009b1d31c512d96392872bae234e1800f3452e33d size: 529

I can now see it in Harbor.
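With the base image mirrored, Dockerfiles have to reference it. For a repo with several Dockerfiles, a small script can rewrite Docker Hub FROM lines to point at the Harbor project. This is a rough sketch, not a Dockerfile parser. It assumes mirrored images keep only their final name:tag under one Harbor project, as ubuntu:jammy did above. It skips `scratch`, earlier build-stage names, and images that already name a registry host, and it ignores line continuations and ARG substitution.

```python
from typing import List, Set


def is_docker_hub(ref: str) -> bool:
    """True if an image reference resolves to Docker Hub rather than another registry."""
    first, sep, _ = ref.partition("/")
    if not sep:
        return True  # e.g. "ubuntu:jammy"
    if first in ("docker.io", "index.docker.io"):
        return True
    # A first component containing '.' or ':', or 'localhost', is a registry host.
    return not ("." in first or ":" in first or first == "localhost")


def rewrite_from_lines(dockerfile: str, mirror: str) -> str:
    """Point Docker Hub FROM images at `mirror` (e.g. a Harbor project path)."""
    stages: Set[str] = set()
    out: List[str] = []
    for line in dockerfile.splitlines():
        tokens = line.split()
        if tokens and tokens[0].upper() == "FROM":
            idx = 1
            while idx < len(tokens) and tokens[idx].startswith("--"):
                idx += 1  # skip flags such as --platform=linux/amd64
            if idx < len(tokens):
                image = tokens[idx]
                if image != "scratch" and image not in stages and is_docker_hub(image):
                    # docker.io/library/ubuntu:20.04 -> <mirror>/ubuntu:20.04
                    tokens[idx] = f"{mirror}/{image.rsplit('/', 1)[-1]}"
                    line = " ".join(tokens)
                if idx + 2 < len(tokens) and tokens[idx + 1].upper() == "AS":
                    stages.add(tokens[idx + 2])  # later FROMs may refer to this stage
        out.append(line)
    return "\n".join(out)
```

Because only the last path component is kept, two Docker Hub images with the same name in different namespaces would collide in the mirror. That is fine for official images like ubuntu but worth keeping in mind.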

I’ll create an imagepuller password secret. Because this account is limited to pulling, I set the secret to be available to any branch.

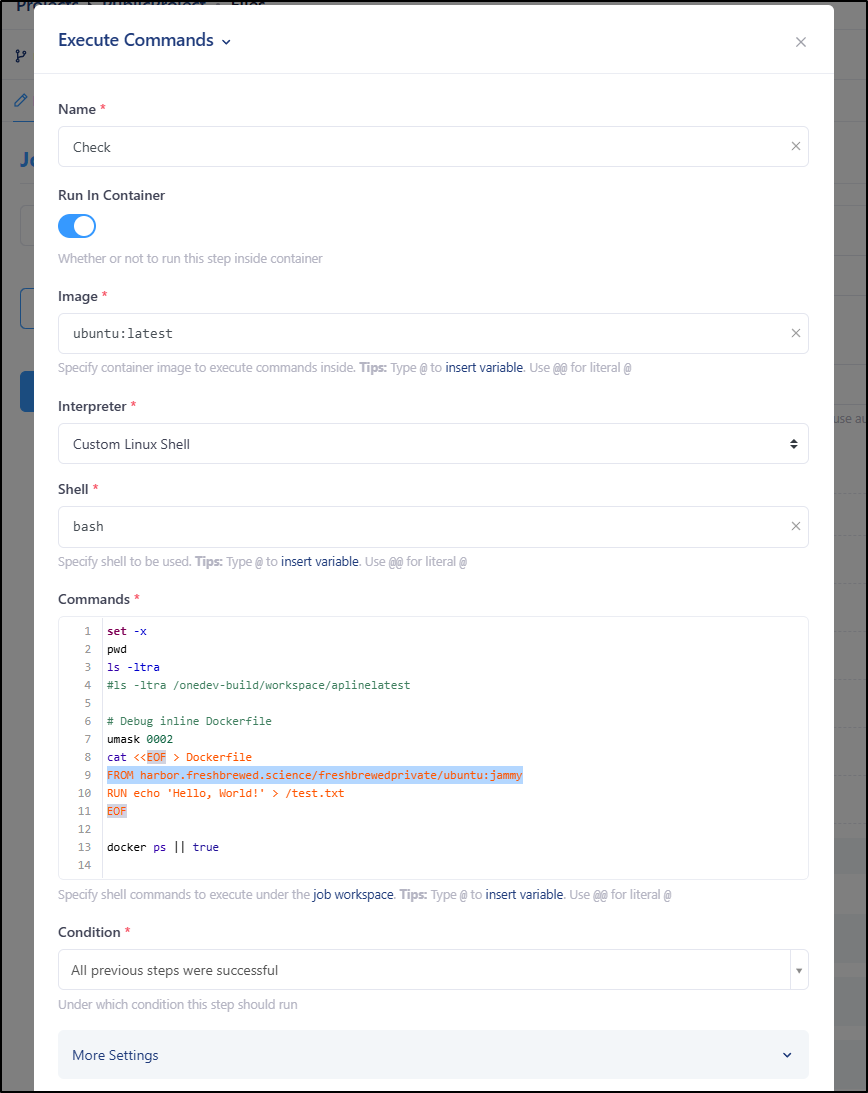

I’ll now change my Dockerfile to use either

FROM harbor.freshbrewed.science/freshbrewedprivate/ubuntu:jammy

or

FROM harbor.freshbrewed.science/freshbrewedprivate/ubuntu@sha256:34782402df238275b0bd100009b1d31c512d96392872bae234e1800f3452e33d

Then I add the Harbor credential to the Build and Push step.
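A sha256 digest is exactly 64 lowercase hex characters, and a single `@` separates it from the repository. A copy and paste slip, such as a dropped final character or a doubled `@`, only shows up when the build or pull fails. A quick check before committing a pinned reference can catch it. This is a small sketch using a regular expression; it validates the shape only, not whether the digest exists in the registry.

```python
import re
from typing import Tuple

# repository@sha256:<64 lowercase hex characters>, with exactly one '@'
DIGEST_REF = re.compile(r"(?P<repo>[^@\s]+)@sha256:(?P<hex>[0-9a-f]{64})")


def is_digest_ref(ref: str) -> bool:
    """True if `ref` is a repository pinned to a well-formed sha256 digest."""
    return DIGEST_REF.fullmatch(ref) is not None


def split_digest_ref(ref: str) -> Tuple[str, str]:
    """Return (repository, hex digest) or raise ValueError for a malformed reference."""
    m = DIGEST_REF.fullmatch(ref)
    if m is None:
        raise ValueError(f"not a valid sha256 digest reference: {ref!r}")
    return m.group("repo"), m.group("hex")
```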

Bringing it together

I covered a lot of Build Pipeline steps.

Let’s look at the relevant section of the OneDev pipeline I ended up with:

version: 37

jobs:

- name: MyNewTest

steps:

- !CheckoutStep

name: checkoutcode

cloneCredential: !DefaultCredential {}

withLfs: false

withSubmodules: false

condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL

- !CommandStep

name: Check

runInContainer: true

image: ubuntu:latest

interpreter: !ShellInterpreter

shell: bash

commands: |

set -x

pwd

ls -ltra

#ls -ltra /onedev-build/workspace/aplinelatest

# Debug inline Dockerfile

umask 0002

cat <<EOF > Dockerfile

FROM harbor.freshbrewed.science/freshbrewedprivate/ubuntu:jammy

RUN echo 'Hello, World!' > /test.txt

EOF

docker ps || true

useTTY: true

condition: ALL_PREVIOUS_STEPS_WERE_SUCCESSFUL

- !PublishArtifactStep

name: TestArtifacts

artifacts: '*.yml'

condition: ALWAYS

- !BuildImageWithKanikoStep

name: TestBuildNPush

output: !RegistryOutput

destinations: harbor.freshbrewed.science/freshbrewedprivate/mytest:0.0.1

registryLogins:

- registryUrl: 1dev.tpk.pw

userName: gituser

passwordSecret: gituser

- registryUrl: docker.io

userName: idjohnson

passwordSecret: dockerhub

- registryUrl: harbor.freshbrewed.science

userName: onedev

passwordSecret: onedev

condition: ALWAYS

- !SSHCommandStep

name: DockerHostPS

remoteMachine: 192.168.1.100

userName: builder

privateKeySecret: QADGF36PRIV

commands: |

docker images

docker pull harbor.freshbrewed.science/freshbrewedprivate/mytest@sha256:54841e85a2e279c386483f89d32fa7b1ec1916cb2095aa683cc733b4cb512d63

docker images

condition: ALWAYS

retryCondition: never

maxRetries: 3

retryDelay: 30

timeout: 14400

What we have here are steps that:

- Check out the code

- Create a Dockerfile inline

- Publish any *.yml files as build artifacts

- Build the image with Kaniko, using both a base image and a destination stored in a controlled private container registry (Harbor)

- Use an SSH step to connect to a Docker host (192.168.1.100) and pull the image, showing we can get it

In a real QA pipeline, we would then run the image and gather results.
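As a sketch of that next step: the inline Dockerfile writes Hello, World! to /test.txt, so a minimal check on the Docker host could run the image and compare that file. The image reference is an assumption, taken from the Kaniko destination tag with a single slash. Assuming the tag is overwritten on each run, running by tag with `--pull=always` picks up what this run pushed, whereas a hardcoded digest always fetches the same build. The `run` parameter is injectable so the logic can be exercised without Docker.

```python
import subprocess
from typing import Callable

# Assumed reference: the Kaniko destination tag from the pipeline above.
IMAGE = "harbor.freshbrewed.science/freshbrewedprivate/mytest:0.0.1"


def smoke_test(image: str = IMAGE, run: Callable = subprocess.run) -> bool:
    """Run the image and check the file written by the inline Dockerfile."""
    result = run(
        ["docker", "run", "--pull=always", "--rm", image, "cat", "/test.txt"],
        capture_output=True,
        text=True,
        check=True,  # a failed pull or run raises CalledProcessError
    )
    return result.stdout.strip() == "Hello, World!"
```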

In the build output, I could see the Kaniko build pull and push

07:36:14 Running step "TestBuildNPush"...

07:36:15 1.0.2: Pulling from 1dev/kaniko

07:36:15 Digest: sha256:c13b73554dbfdd0376c525e6fcd48201f95ef443a906557c0cbb709f94118aa4

07:36:15 Status: Image is up to date for 1dev/kaniko:1.0.2

07:36:16 INFO[0000] Retrieving image manifest harbor.freshbrewed.science/freshbrewedprivate/ubuntu:jammy

07:36:16 INFO[0000] Retrieving image harbor.freshbrewed.science/freshbrewedprivate/ubuntu:jammy from registry harbor.freshbrewed.science

07:36:16 INFO[0000] Built cross stage deps: map[]

07:36:16 INFO[0000] Retrieving image manifest harbor.freshbrewed.science/freshbrewedprivate/ubuntu:jammy

07:36:16 INFO[0000] Returning cached image manifest

07:36:16 INFO[0000] Executing 0 build triggers

07:36:16 INFO[0000] Building stage 'harbor.freshbrewed.science/freshbrewedprivate/ubuntu:jammy' [idx: '0', base-idx: '-1']

07:36:16 INFO[0000] Unpacking rootfs as cmd RUN echo 'Hello, World!' > /test.txt requires it.

07:36:17 INFO[0001] RUN echo 'Hello, World!' > /test.txt

07:36:17 INFO[0001] Initializing snapshotter ...

07:36:17 INFO[0001] Taking snapshot of full filesystem...

07:36:18 INFO[0002] Cmd: /bin/sh

07:36:18 INFO[0002] Args: [-c echo 'Hello, World!' > /test.txt]

07:36:18 INFO[0002] Running: [/bin/sh -c echo 'Hello, World!' > /test.txt]

07:36:18 INFO[0002] Taking snapshot of full filesystem...

07:36:18 INFO[0002] Pushing image to harbor.freshbrewed.science/freshbrewedprivate//mytest:0.0.1

07:36:19 INFO[0003] Pushed harbor.freshbrewed.science/freshbrewedprivate//mytest@sha256:ea99a91f1e9ed835b49bcd6b04c0d575c76ad454c0c743eb5e348ad1424da4b1

07:36:19 Step "TestBuildNPush" is successful (5 seconds)
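The “Pushed” line records the digest of the image this run produced. If a later step should use exactly that build, one option is to capture it from the log. This is a sketch whose pattern is based on the Kaniko output above. How you hand the value from one OneDev step to the next is left open here.

```python
import re
from typing import Optional

# Matches e.g. "INFO[0003] Pushed <repo>@sha256:<64 hex>"
PUSHED_RE = re.compile(r"Pushed (?P<repo>\S+)@(?P<digest>sha256:[0-9a-f]{64})")


def pushed_digest(log_text: str) -> Optional[str]:
    """Return 'repo@sha256:...' from the last Kaniko 'Pushed' line, or None."""
    matches = list(PUSHED_RE.finditer(log_text))
    if not matches:
        return None
    last = matches[-1]
    return f"{last.group('repo')}@{last.group('digest')}"
```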

Then, in the SSH step, the first docker images listing showed no mytest image:

07:36:22 REPOSITORY TAG IMAGE ID CREATED SIZE

07:36:22 1dev/server latest e06e8f319a1f 5 days ago 832MB

07:36:22 1dev/agent latest 9dd74c067048 8 days ago 621MB

07:36:22 henrygd/beszel

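The docker pull worked (that host is already authenticated to Harbor). Note that the step pulls a hardcoded digest (starting 54841e85), not the one this run just pushed (starting ea99a91f), so it fetched an earlier build of mytest: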

07:36:22 harbor.freshbrewed.science/freshbrewedprivate/mytest@sha256:54841e85a2e279c386483f89d32fa7b1ec1916cb2095aa683cc733b4cb512d63: Pulling from freshbrewedprivate/mytest

07:36:22 7478e0ac0f23: Pulling fs layer

07:36:22 46b3050c0303: Pulling fs layer

07:36:22 46b3050c0303: Verifying Checksum

07:36:22 46b3050c0303: Download complete

07:36:24 7478e0ac0f23: Verifying Checksum

07:36:24 7478e0ac0f23: Download complete

07:36:25 7478e0ac0f23: Pull complete

07:36:25 46b3050c0303: Pull complete

07:36:25 Digest: sha256:54841e85a2e279c386483f89d32fa7b1ec1916cb2095aa683cc733b4cb512d63

07:36:25 Status: Downloaded newer image for harbor.freshbrewed.science/freshbrewedprivate/mytest@sha256:54841e85a2e279c386483f89d32fa7b1ec1916cb2095aa683cc733b4cb512d63

07:36:25 harbor.freshbrewed.science/freshbrewedprivate/mytest@sha256:54841e85a2e279c386483f89d32fa7b1ec1916cb2095aa683cc733b4cb512d63

And lastly, mytest now shows up in the image list:

07:36:25 REPOSITORY TAG IMAGE ID CREATED SIZE

07:36:25 harbor.freshbrewed.science/freshbrewedprivate/mytest <none> 1c561921fd9c 3 minutes ago 77.9MB

07:36:25 1dev/server latest e06e8f319a1f 5 days ago 832MB

07:36:25 1dev/agent latest 9dd74c067048 8 days ago 621MB

07:36:25 henrygd/beszel latest fa658a68e719 3 weeks ago 38.1MB

07:36:25 henrygd/beszel-agent

Summary

I realize now that we’ll have to do a part three since there is still more to cover on Issues and Reporting.

Today we tried, albeit unsuccessfully, to set up a proper externalized database in either MySQL/MariaDB or PostgreSQL. I really did try everything I could think of. I suspect there is more to that puzzle, perhaps an assumed plugin or a database configuration setting, since the error appeared to be a NumberFormatException.

We explored setting up build agents and how to direct work to them. Digging into the pipelines, we found success in building and pushing Docker images to a private CR using Kaniko in Kubernetes, publishing small file artifacts (a YAML file) and shelling out to hosts for additional tests and work.

Where I ran into issues was with larger artifacts (which I’m convinced comes down to some bundled Tomcat setting or something else in their container) and with the self-contained Docker steps.

At this point, I find OneDev interesting, but I’m not so wooed as to give up on other systems. For instance, try as I might, I found no easy way to selectively expose projects. There is an ‘anonymous’ role I can enable, but then I’m setting permissions across the whole OneDev instance. I really just want to make some projects public-read like I do in other systems.

I also didn’t care for the pipeline flow. I can view my build logs easily enough, but to trigger a build I keep having to click the YAML file so it refreshes to the pipeline invocation view. From pipeline results, there is no “trigger again” or “show me the editor” type option; there are just build results with a query-language-style search.

There is a lot to like overall, so we’ll move on to a dive into Issue flows, templates and reporting in our next post.