Published: Jul 16, 2024 by Isaac Johnson

I’ve been meaning to get back to some Open-Source apps that would allow me to remotely upload and download files. With an eye for simplicity, I decided I would look at SFTPGo and Filegator as both seemed reasonably up to date and active.

SFTPGo

Like others, I originally found this one in a MariusHosting post.

The app is developed on GitHub at github.com/drakkan/sftpgo.

I also found an actively maintained Helm chart repo that can deliver it to Kubernetes for us.

Let’s add the Helm repo:

```bash
$ helm repo add skm https://charts.sagikazarmark.dev
"skm" has been added to your repositories
$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Unable to get an update from the "freshbrewed" chart repository (https://harbor.freshbrewed.science/chartrepo/library):
	failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found
...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library):
	failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found
...Successfully got an update from the "nfs" chart repository
...Successfully got an update from the "bitwarden" chart repository
...Successfully got an update from the "kube-state-metrics" chart repository
...Successfully got an update from the "ngrok" chart repository
...Successfully got an update from the "jfelten" chart repository
...Successfully got an update from the "actions-runner-controller" chart repository
...Successfully got an update from the "akomljen-charts" chart repository
...Successfully got an update from the "backstage" chart repository
...Successfully got an update from the "openfunction" chart repository
...Successfully got an update from the "ingress-nginx" chart repository
...Successfully got an update from the "portainer" chart repository
...Successfully got an update from the "zabbix-community" chart repository
...Successfully got an update from the "btungut" chart repository
...Successfully got an update from the "jetstack" chart repository
...Successfully got an update from the "sonarqube" chart repository
...Successfully got an update from the "kubecost" chart repository
...Successfully got an update from the "gitea-charts" chart repository
...Successfully got an update from the "castai-helm" chart repository
...Successfully got an update from the "harbor" chart repository
...Successfully got an update from the "openproject" chart repository
...Successfully got an update from the "nginx-stable" chart repository
...Successfully got an update from the "uptime-kuma" chart repository
...Successfully got an update from the "incubator" chart repository
...Successfully got an update from the "ananace-charts" chart repository
...Successfully got an update from the "gitlab" chart repository
...Successfully got an update from the "makeplane" chart repository
...Successfully got an update from the "prometheus-community" chart repository
...Successfully got an update from the "rhcharts" chart repository
...Successfully got an update from the "opencost" chart repository
...Successfully got an update from the "opencost-charts" chart repository
...Successfully got an update from the "adwerx" chart repository
...Successfully got an update from the "novum-rgi-helm" chart repository
...Successfully got an update from the "skm" chart repository
...Successfully got an update from the "azure-samples" chart repository
...Successfully got an update from the "dapr" chart repository
...Successfully got an update from the "confluentinc" chart repository
...Successfully got an update from the "kuma" chart repository
...Successfully got an update from the "lifen-charts" chart repository
...Successfully got an update from the "kiwigrid" chart repository
...Successfully got an update from the "hashicorp" chart repository
...Successfully got an update from the "elastic" chart repository
...Successfully got an update from the "longhorn" chart repository
...Successfully got an update from the "open-telemetry" chart repository
...Successfully got an update from the "sumologic" chart repository
...Successfully got an update from the "spacelift" chart repository
...Successfully got an update from the "datadog" chart repository
...Successfully got an update from the "rook-release" chart repository
...Successfully got an update from the "signoz" chart repository
...Successfully got an update from the "argo-cd" chart repository
...Successfully got an update from the "openzipkin" chart repository
...Successfully got an update from the "rancher-latest" chart repository
...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):
	Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 10.255.255.254:53: server misbehaving
...Successfully got an update from the "crossplane-stable" chart repository
...Successfully got an update from the "grafana" chart repository
...Successfully got an update from the "newrelic" chart repository
...Successfully got an update from the "bitnami" chart repository
Update Complete. ⎈Happy Helming!⎈
```

Let’s try a chart with no extra values:

```bash
$ helm install sftpgo -n sftpgo --create-namespace skm/sftpgo
NAME: sftpgo
LAST DEPLOYED: Wed Jul 10 08:50:04 2024
NAMESPACE: sftpgo
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace sftpgo -l "app.kubernetes.io/name=sftpgo,app.kubernetes.io/instance=sftpgo" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace sftpgo $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace sftpgo port-forward $POD_NAME 8080:$CONTAINER_PORT
```

Let’s try a port-forward to the service:

```bash
$ kubectl port-forward svc/sftpgo -n sftpgo 8888:80
Forwarding from 127.0.0.1:8888 -> 8080
Forwarding from [::1]:8888 -> 8080
Handling connection for 8888
Handling connection for 8888
Handling connection for 8888
```

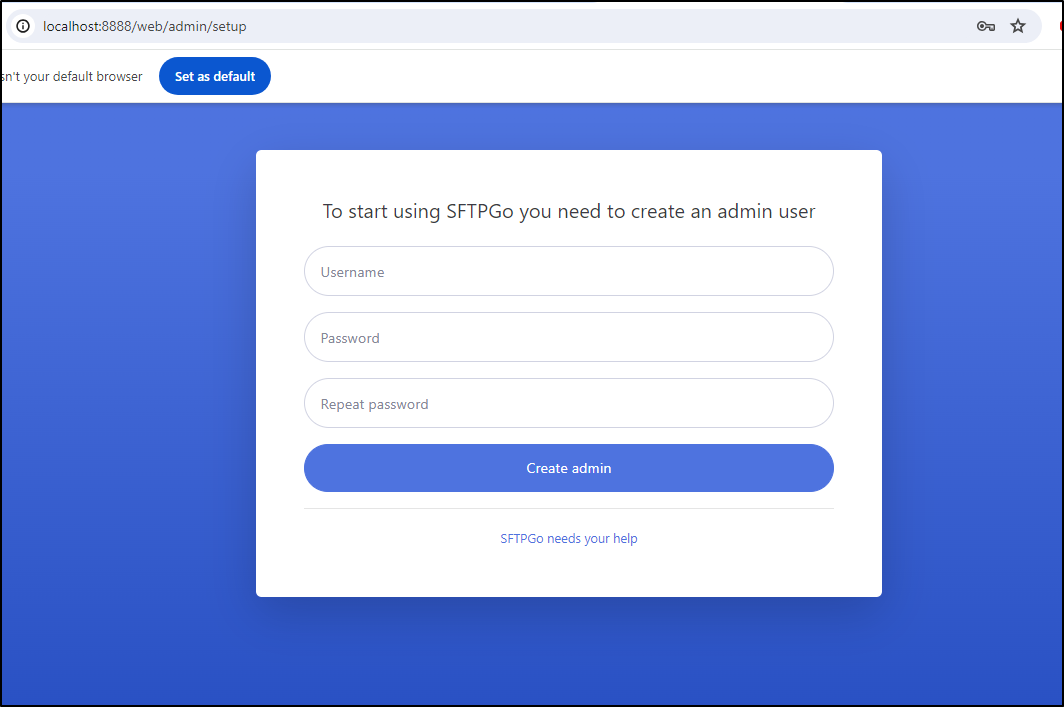

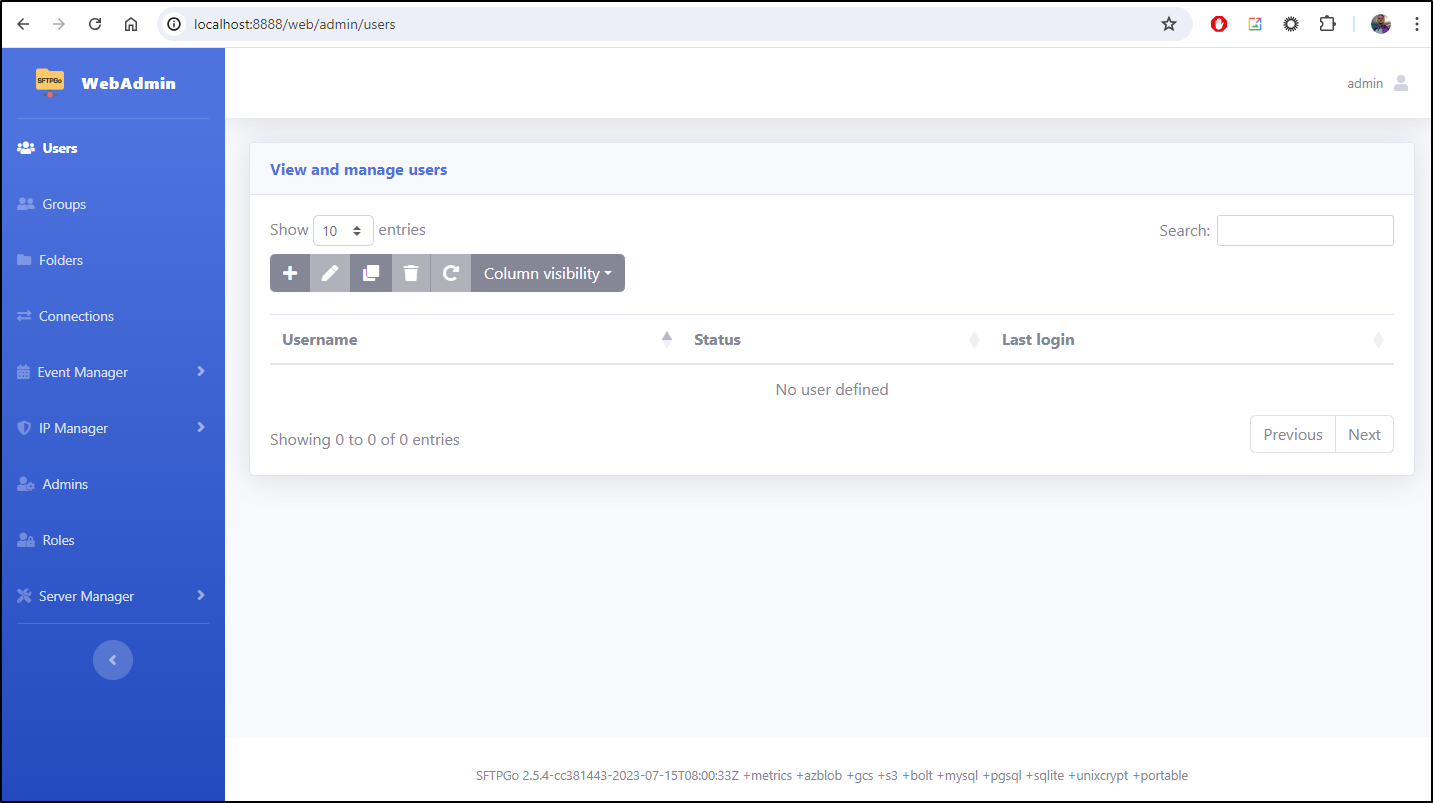

The system is empty at this stage, but we do have an admin user.

If I log out and come back, we can see that the admin account has persisted (so the first one in becomes the admin).

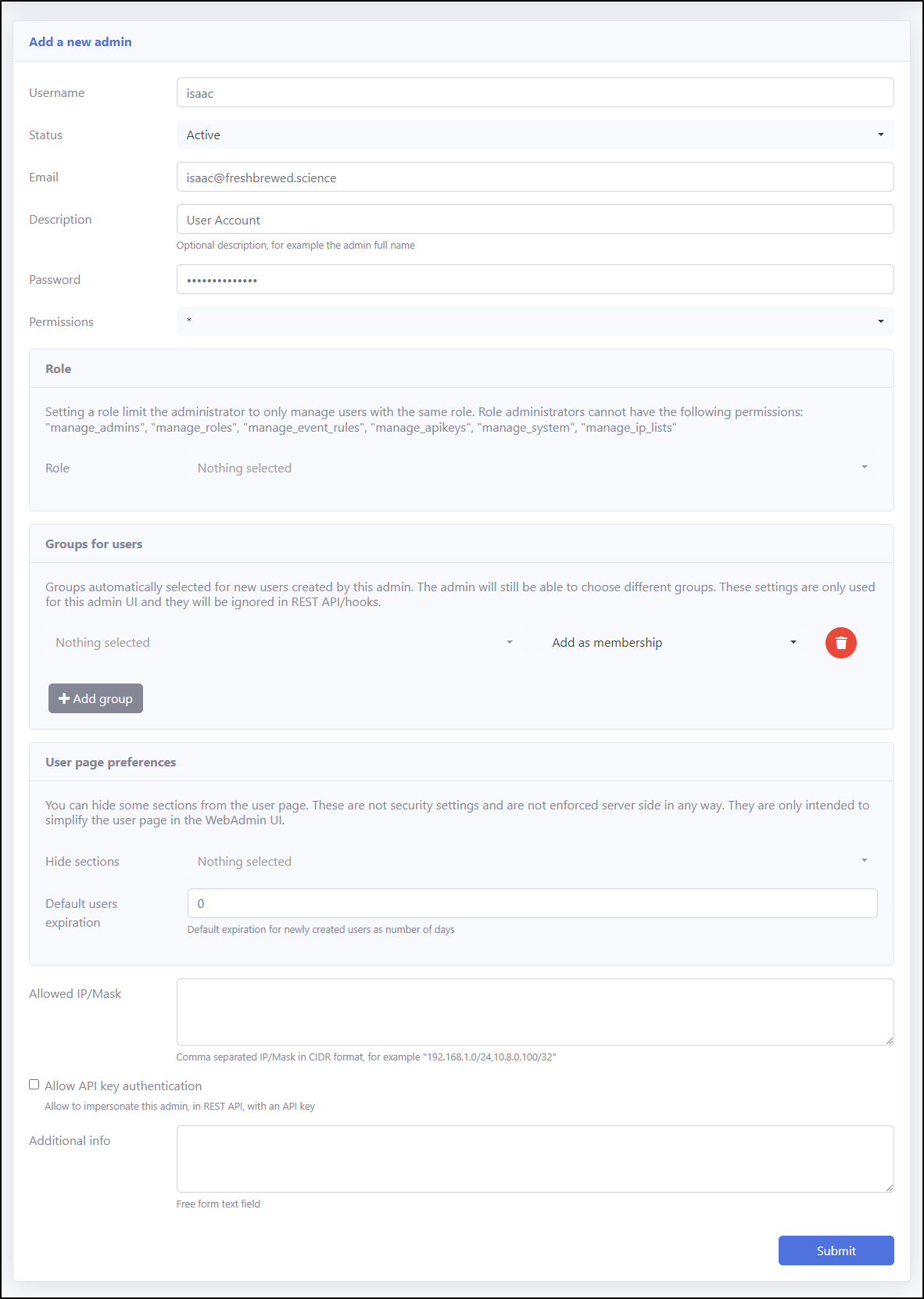

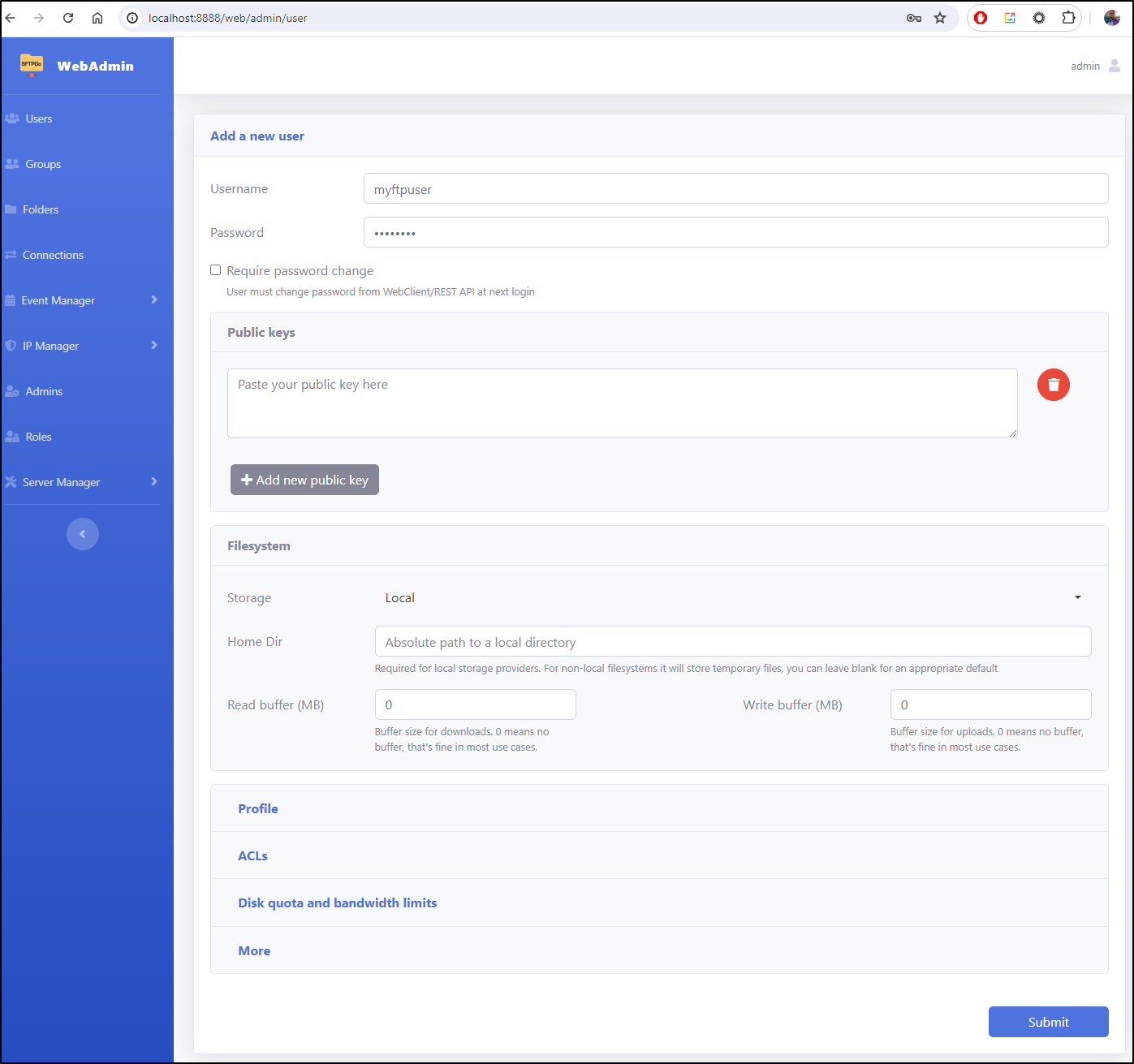

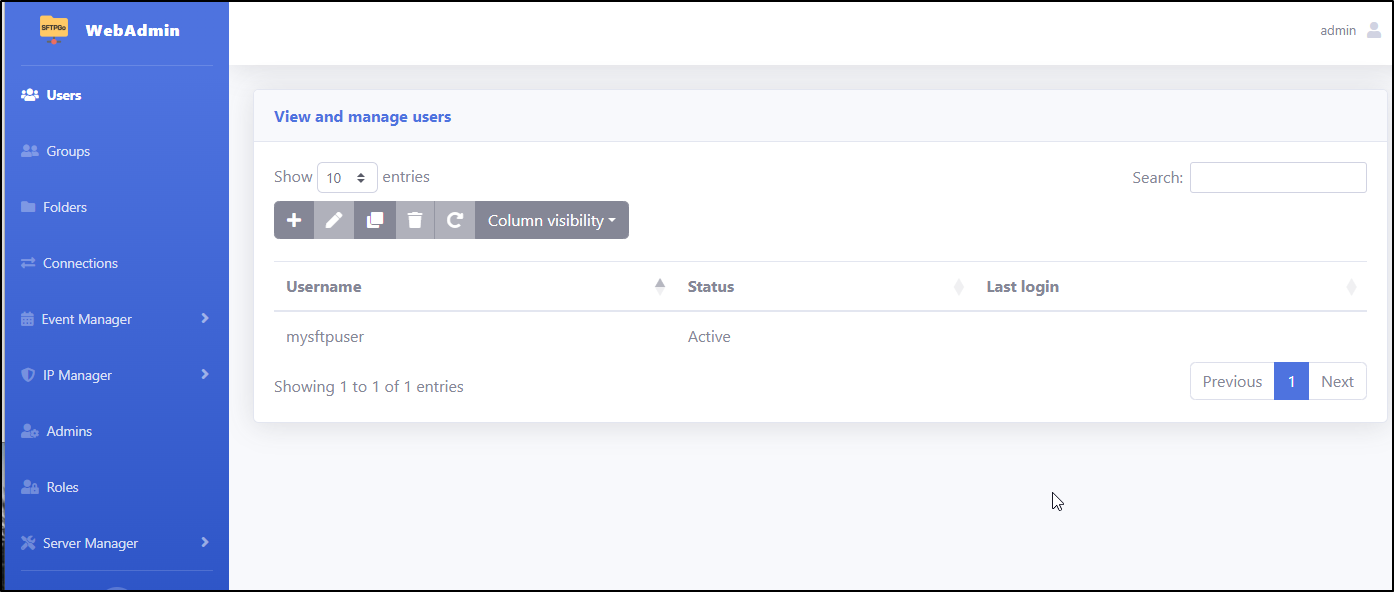

In users, I can add a new user

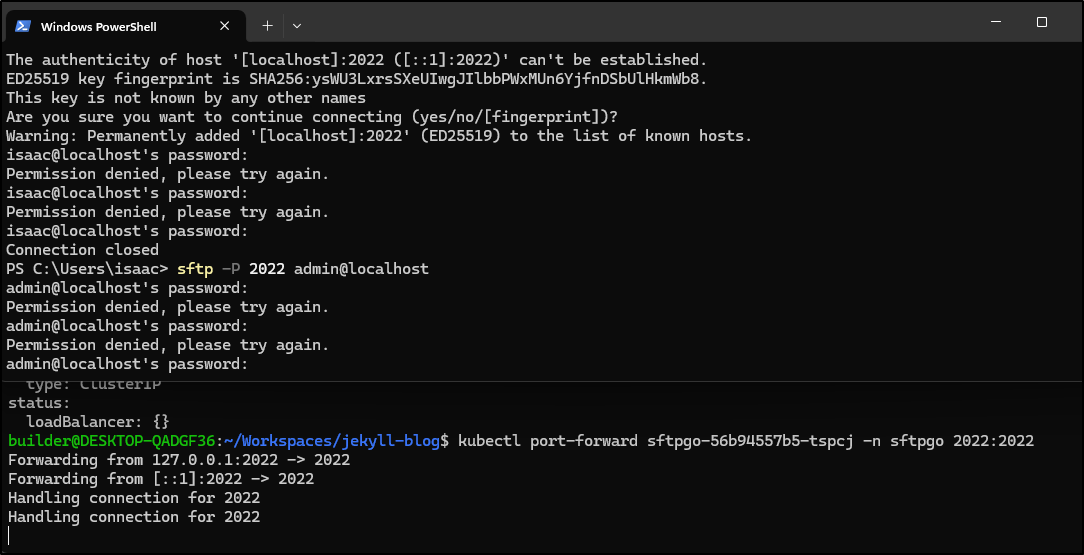

I thought I might be able to port-forward to 2022 on the pod

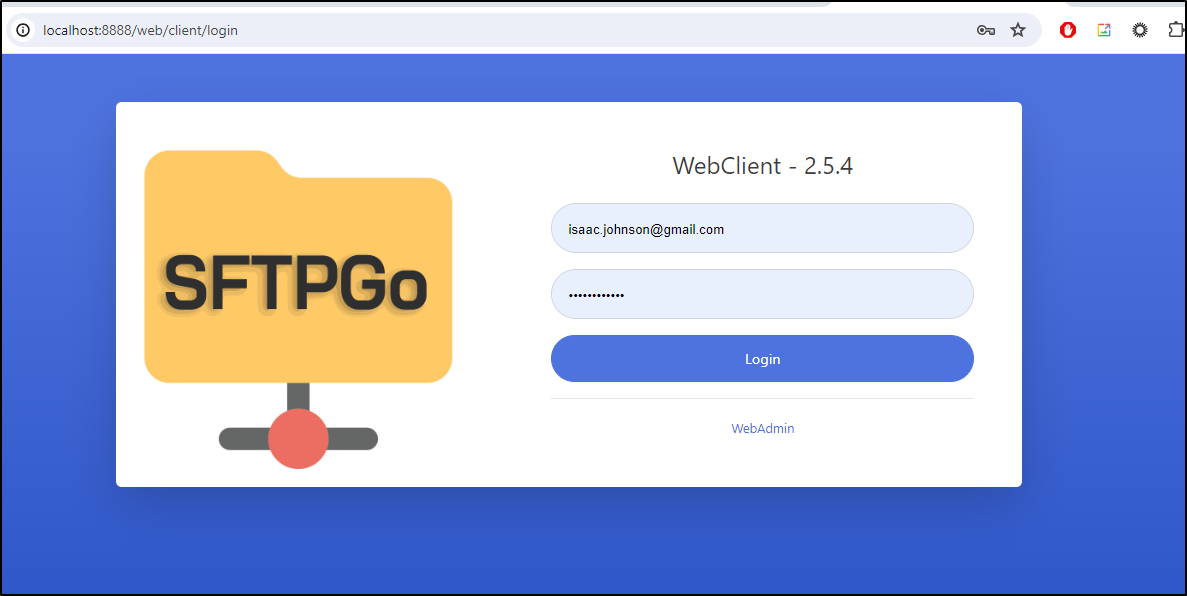

But my admin and “isaac” user does not accept the pass

That’s when I realized the “Users” for FTP need to be defined in “Users” not “Admins”.

Let’s add an ftp user

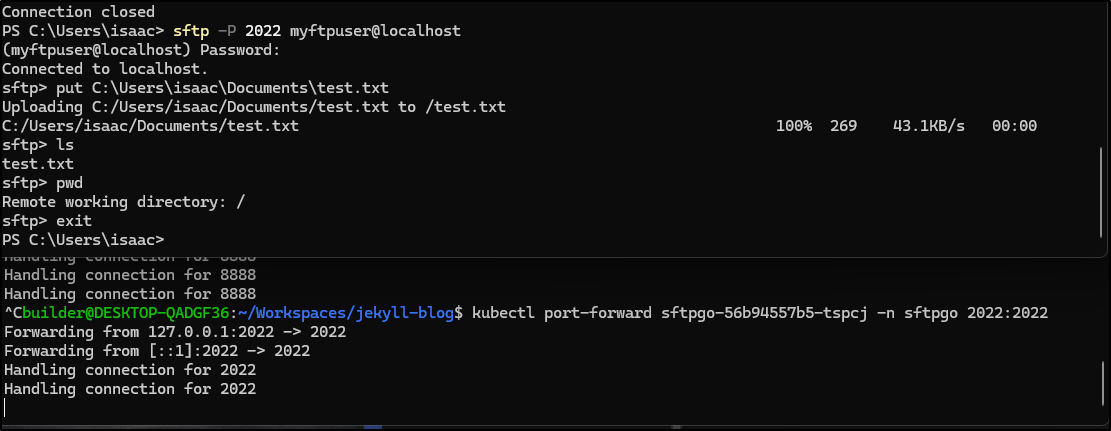

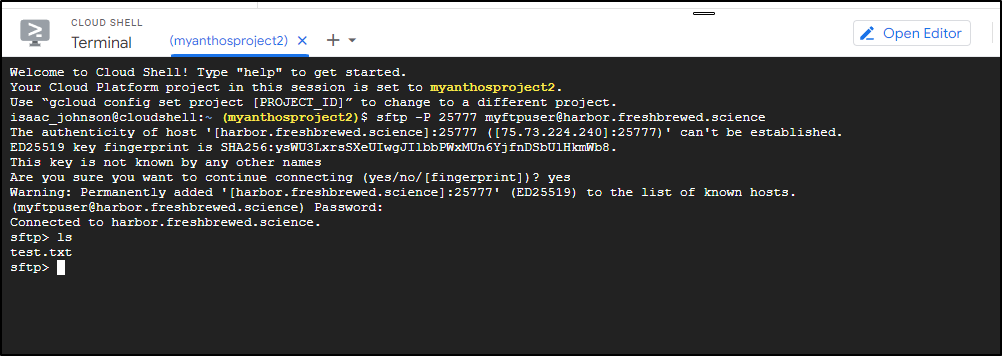

That worked just fine. I could push and list a file

Since my load balancing is done with NGinx, I likely will have some challenges with SFTP.

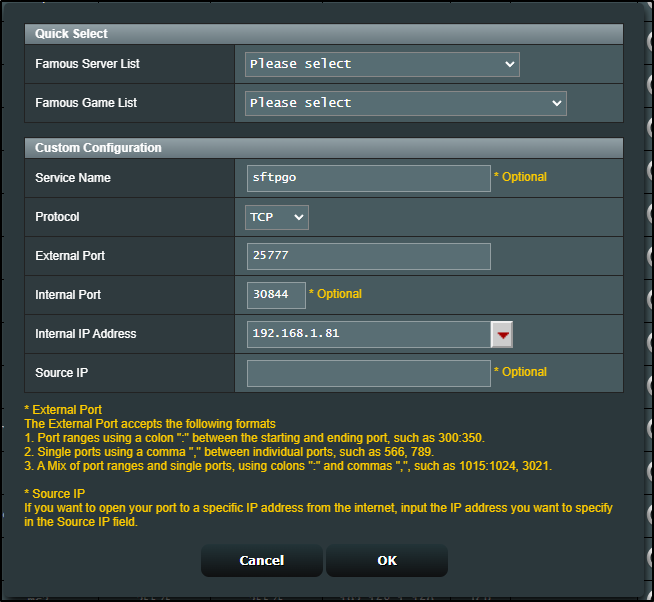

I figured that using a NodePort service might solve this.

I created a new NodePort service with the same selectors to send TCP over to 2022.

```bash
$ kubectl get svc -n sftpgo my-sftpgo-service -o yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2024-07-10T14:20:49Z"
  labels:
    app: my-sftpgo-service
  name: my-sftpgo-service
  namespace: sftpgo
  resourceVersion: "5201202"
  uid: 73a95eda-5fd7-49b5-8516-26c758a1c769
spec:
  clusterIP: 10.43.82.171
  clusterIPs:
  - 10.43.82.171
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: 2022-2022
    nodePort: 30844
    port: 2022
    protocol: TCP
    targetPort: 2022
  selector:
    app.kubernetes.io/instance: sftpgo
    app.kubernetes.io/name: sftpgo
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}
```

Once created, it shows up next to the chart’s own service:

```bash
$ kubectl get svc -n sftpgo
NAME                TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                   AGE
sftpgo              ClusterIP   10.43.102.30   <none>        22/TCP,80/TCP,10000/TCP   31m
my-sftpgo-service   NodePort    10.43.82.171   <none>        2022:30844/TCP            59s
```
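Because `kubectl get -o yaml` includes fields the API server fills in (clusterIP, uid, resourceVersion, status), that output isn’t ideal to keep around as a manifest. Below is a minimal sketch of an equivalent service, wrapped in a small shell function so the namespace, release name and an optional fixed nodePort can vary. The function name and the `sftp` port name are my own assumptions, and it assumes the chart labels its pods with `app.kubernetes.io/name: sftpgo` and `app.kubernetes.io/instance: <release>`, as the selectors above do.

```bash
# Hypothetical helper (not part of the chart): print a minimal NodePort
# Service that sends TCP to the SFTP port (2022) of the SFTPGo pods.
# Server-populated fields (clusterIP, uid, resourceVersion, status) are omitted.
sftpgo_nodeport_manifest() {
  ns="${1:-sftpgo}"        # namespace the chart was installed into
  release="${2:-sftpgo}"   # Helm release name, matched via app.kubernetes.io/instance
  node_port="${3:-}"       # optional fixed nodePort; empty lets Kubernetes choose

  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: my-sftpgo-service
  namespace: ${ns}
spec:
  type: NodePort
  selector:
    app.kubernetes.io/instance: ${release}
    app.kubernetes.io/name: sftpgo
  ports:
  - name: sftp
    protocol: TCP
    port: 2022
    targetPort: 2022
EOF

  # Pin the nodePort only when asked; it must fall inside the cluster's
  # NodePort range (30000-32767 unless the API server is configured otherwise).
  if [ -n "$node_port" ]; then
    echo "    nodePort: ${node_port}"
  fi
}
```

Something like `sftpgo_nodeport_manifest sftpgo sftpgo 30844 | kubectl apply -f -` should then recreate the service; leaving off the third argument lets Kubernetes pick a free port from its NodePort range.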

I can now test it against a node’s IP on the assigned NodePort:

```bash
$ sftp -P 30844 myftpuser@192.168.1.81
The authenticity of host '[192.168.1.81]:30844 ([192.168.1.81]:30844)' can't be established.
ECDSA key fingerprint is SHA256:gIon3V2MTrSjuKOFIaml3i01hfYW3jZAm+yMTVptQwo.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added '[192.168.1.81]:30844' (ECDSA) to the list of known hosts.
Password:
Connected to 192.168.1.81.
sftp> ls
test.txt
```

This means I don’t even need to move it to my production cluster.

Since it’s a NodePort, I could forward a port on my firewall to a node in that test cluster.

I’ll now use a GCP Cloud Shell (just to get an external Linux instance) to test SFTP back to my pod in the test cluster.

Then I’ll remove that profile from my firewall.

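One thing you’ll note is that there are no PVCs. My first check used the wrong resource name; the short name is `pvc` (or spell out `persistentvolumeclaims`):

```bash
$ kubectl get pvcs -n sftpgo
error: the server doesn't have a resource type "pvcs"
```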

One quick and easy solution is to mount a directory from the host (a hostPath volume).

We can do that with these values:

```bash
$ cat sftp-values.yaml
```

```yaml
volumes:
- name: tmpdir
  hostPath:
    path: /tmp/
volumeMounts:
- name: tmpdir
  mountPath: /tmp/tmpdir
```

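Using those in a fresh Helm deploy (`helm install` won’t reuse the name of a release that is still installed, so either remove the first release or use `helm upgrade` with the same arguments):

```bash
$ helm install sftpgo -n sftpgo -f ./sftp-values.yaml skm/sftpgo
```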

I added a user with “/tmp” as its root directory, which lets me see the hostPath mount (at /tmp/tmpdir in the container) when I connect over SFTP:

```bash
$ sftp -P 30844 mysftpuser@192.168.1.81
Password:
Connected to 192.168.1.81.
sftp> ls
tmpdir
sftp> cd tmpdir
sftp> put sftp-values.yaml
Uploading sftp-values.yaml to /tmpdir/sftp-values.yaml
sftp-values.yaml                              100%  111    60.5KB/s   00:00
sftp> exit
```

Hopping onto the Kubernetes node, I can see the delivered file in its /tmp:

```bash
builder@anna-MacBookAir:/tmp$ ls -ltra | tail -n3
drwxrwxr-x 18 builder builder  4096 Jul 10 03:00 jekyll
-rw-r--r--  1 builder builder   111 Jul 10 09:42 sftp-values.yaml
drwxrwxrwt 29 root    root    12288 Jul 10 09:42 .
```

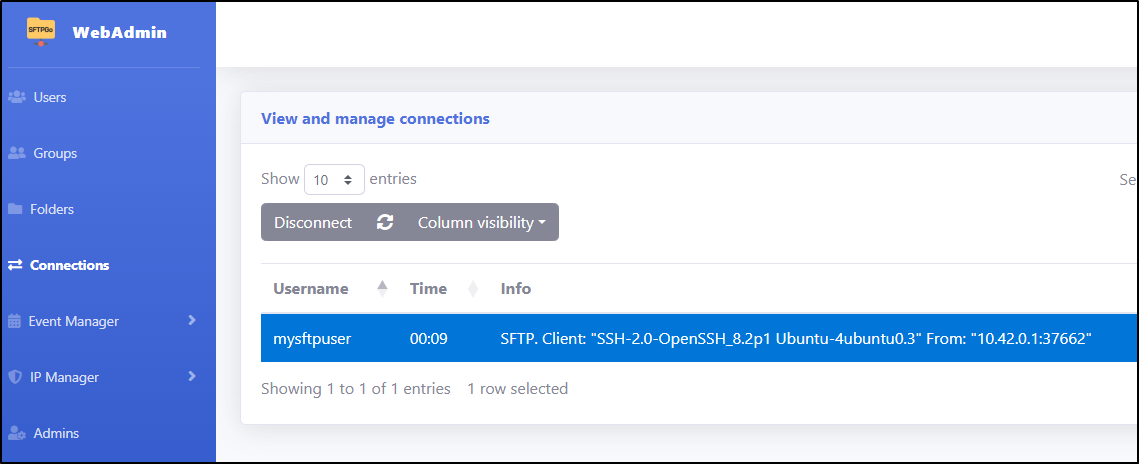

Connections

We can also view active connections in the WebAdmin UI.

Here you can see how a disconnect works.

FileGator

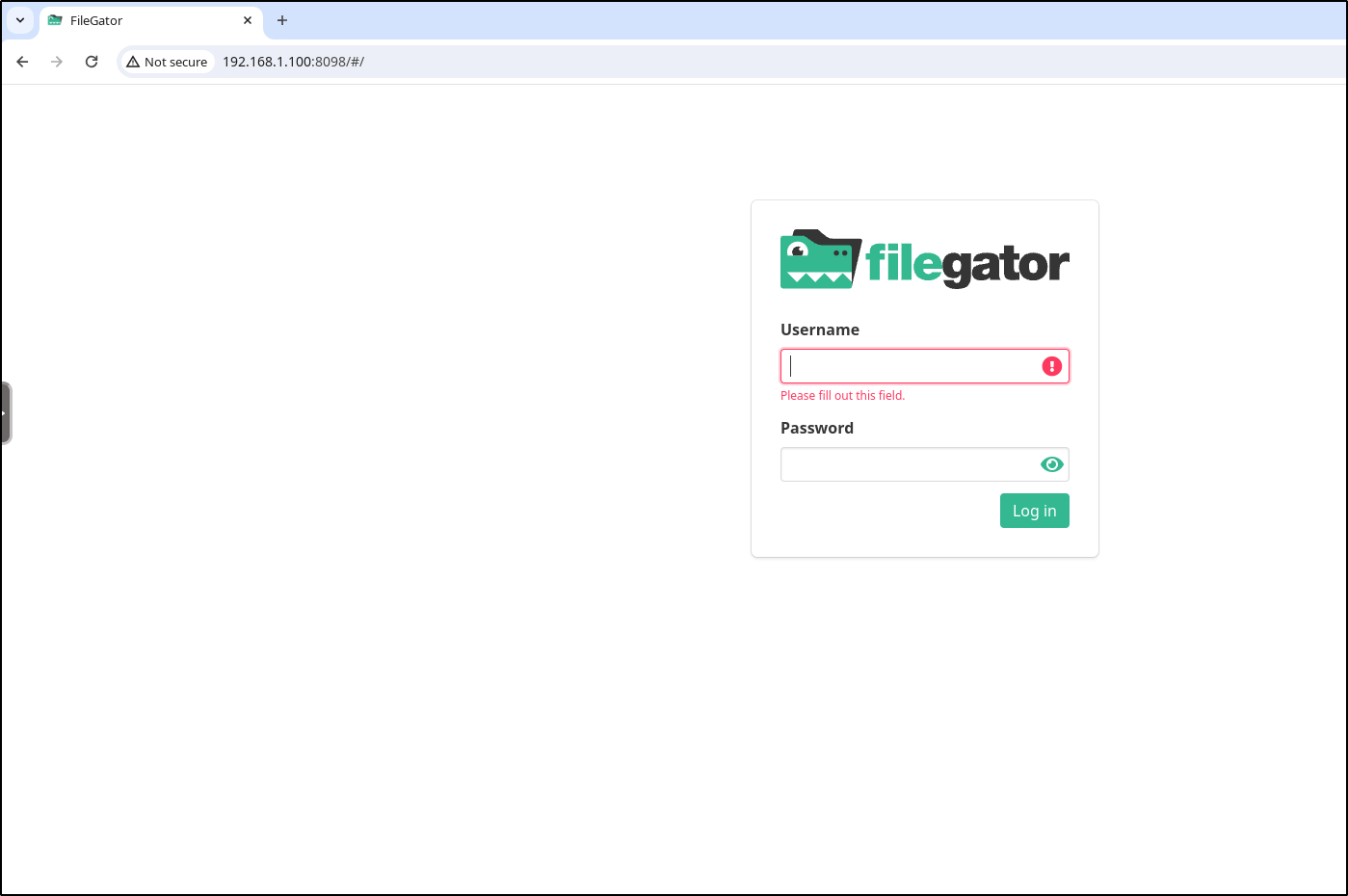

Another tool I was turned on to by a MariusHosting post is Filegator. Its install guide has a few Docker options.

So let’s start with Docker, publishing the container’s port 8080 on host port 8098:

```bash
builder@builder-T100:~/filegator$ docker run -p 8098:8080 -d filegator/filegator
Unable to find image 'filegator/filegator:latest' locally
latest: Pulling from filegator/filegator
6177a7f9989f: Pull complete
bb4ea0ade393: Pull complete
064c8ff4a89f: Pull complete
835cacc3785c: Pull complete
e34a344384e1: Pull complete
be9df37f9cbb: Pull complete
c0a5847d4744: Pull complete
d60c0388eeb2: Pull complete
c7c72fbfe2e5: Pull complete
671bb7cbb446: Pull complete
82a30a053cc6: Pull complete
20f5530e4bb7: Pull complete
4b774ba9951f: Pull complete
d31e195367d5: Pull complete
d635b61874c0: Pull complete
c2beedeaa9c6: Pull complete
15d7ea74f7fc: Pull complete
4f4fb700ef54: Pull complete
a310975d10d2: Pull complete
6e1b9db332e4: Pull complete
7c5a8edf8f5a: Pull complete
ca5584a87aae: Pull complete
65285fb793ce: Pull complete
1f86e896400f: Pull complete
17726cdda671: Pull complete
d7fde4f92d1a: Pull complete
4f2d7d3472ea: Pull complete
Digest: sha256:9d40cebbeec0c7a8ee605deb6d8b8d44b08621e2158b8b54388e4dffafabd0cb
Status: Downloaded newer image for filegator/filegator:latest
516ac31e2554809419131375d806e8f08e8410433c74a8c66b5b28fae14dbc5c
```

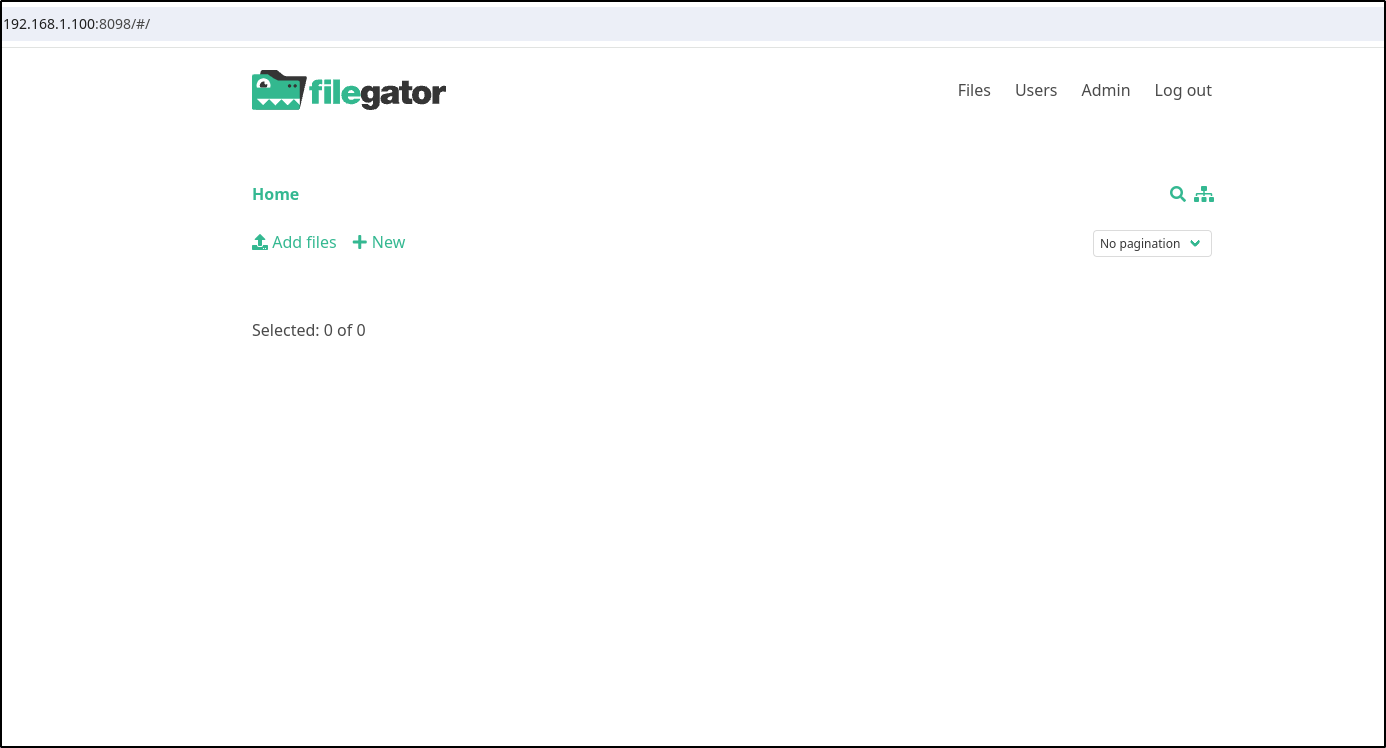

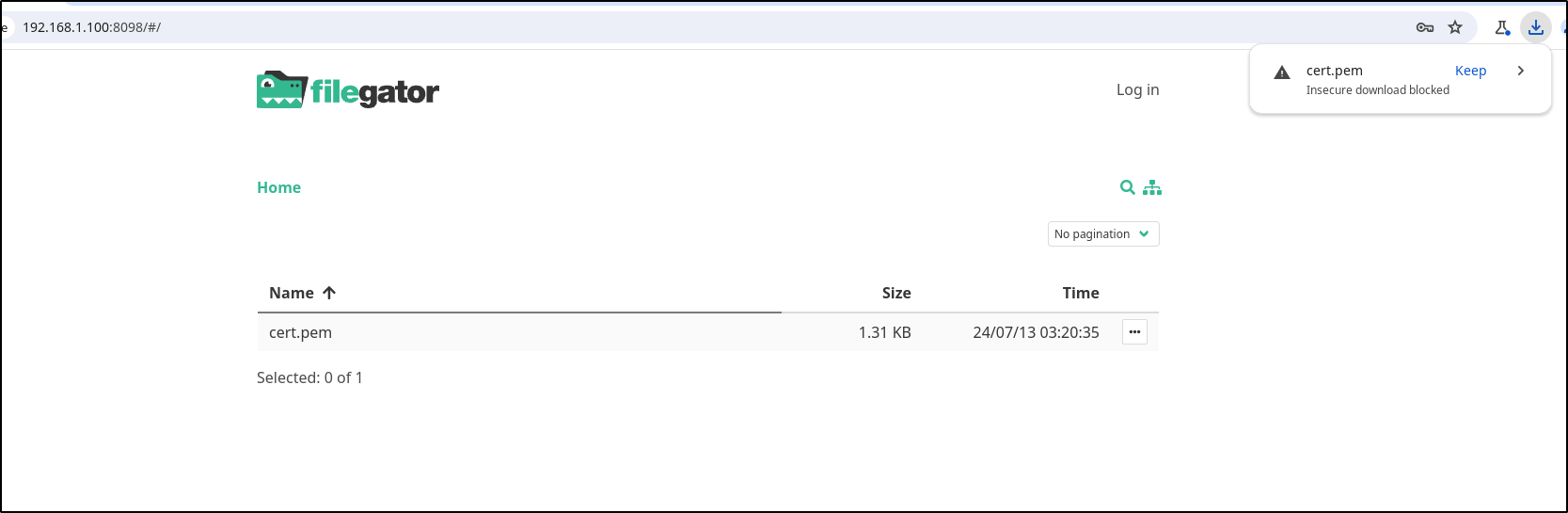

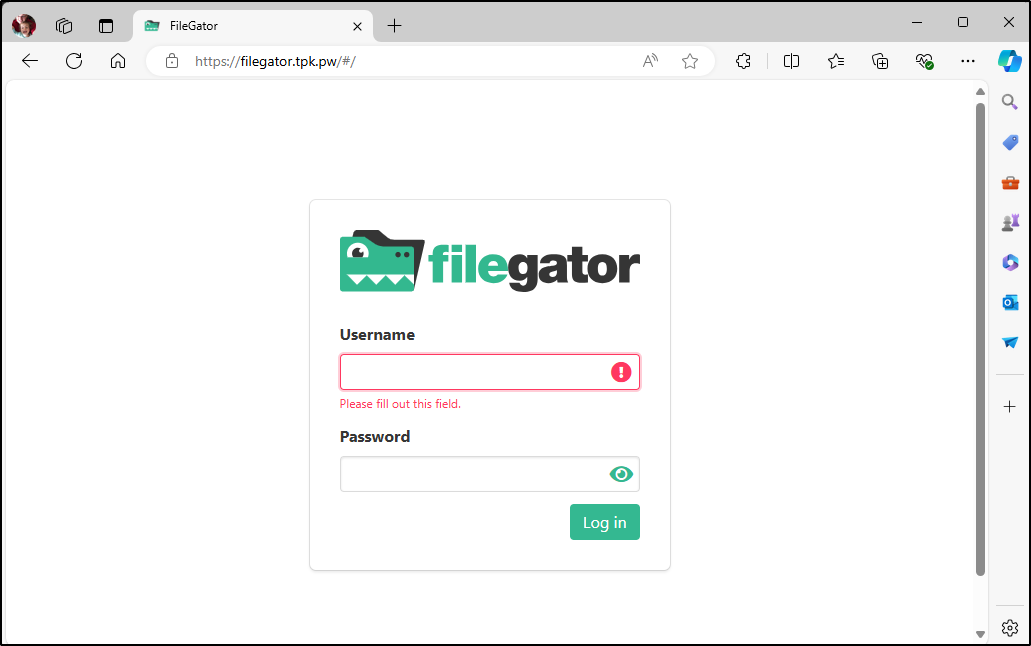

I can then login with the local IP

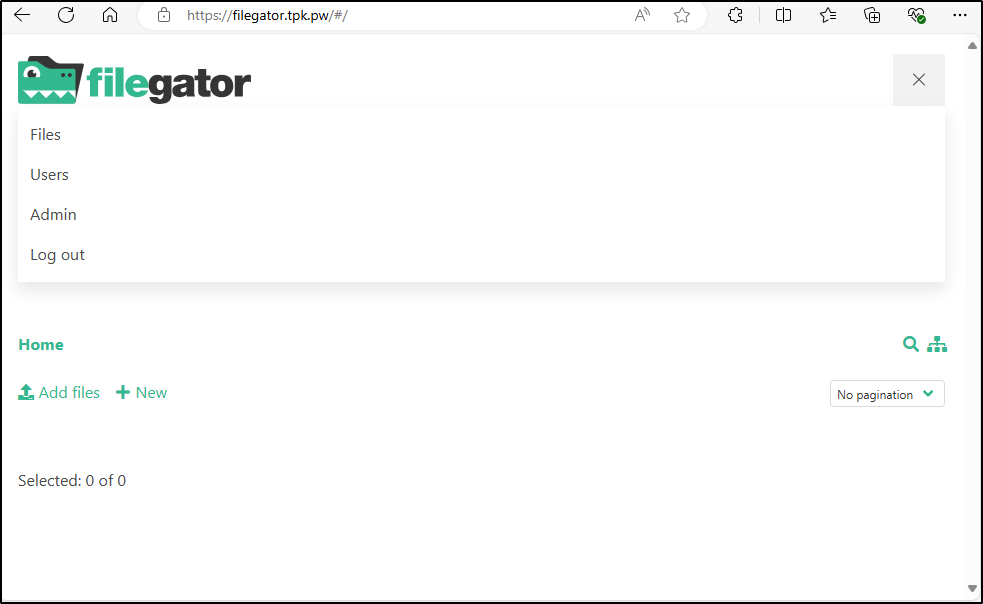

Logging in with admin and password admin123 shows us the main page

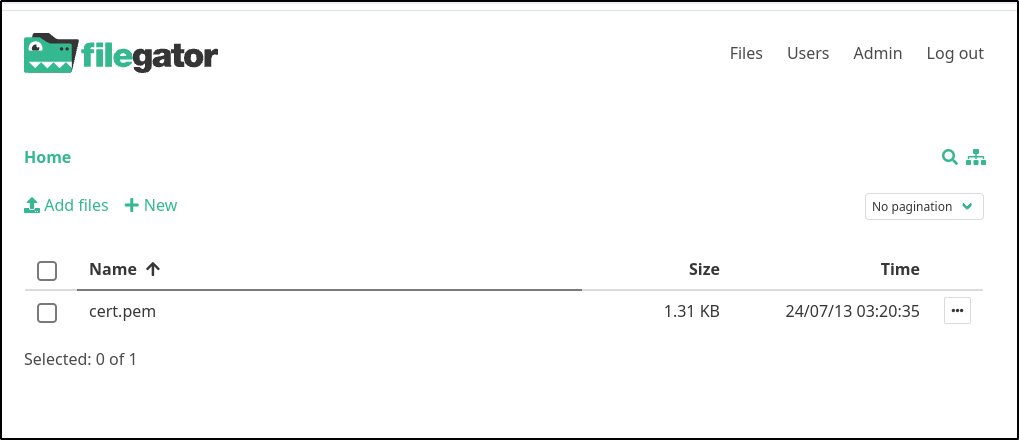

I can upload a file

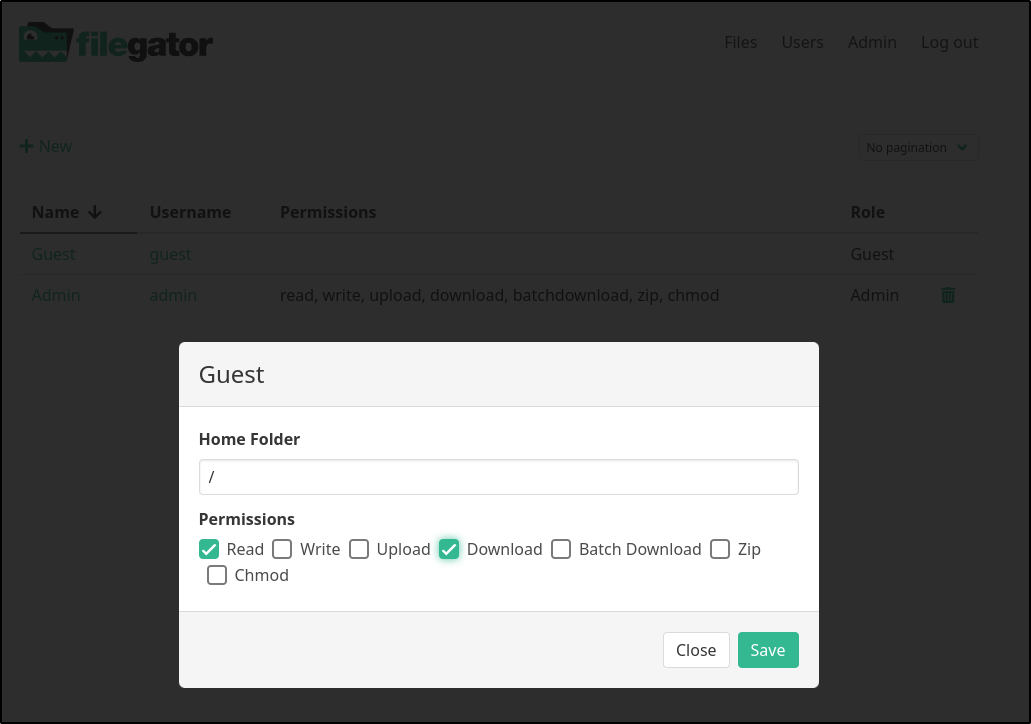

I can add users or change their settings. For instance, I gave the “guest” user Read and Download permissions (by default it has none).

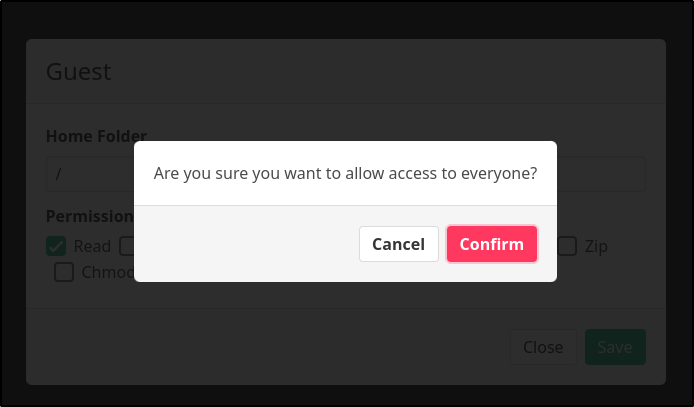

Saving that confirms I’m okay opening this up to the world.

I can now verify that anonymous users can view and download files.

I also tried stopping and starting the container, and verified that the files persist across a stop/start:

```bash
builder@builder-T100:~/filegator$ docker ps | grep gator
516ac31e2554   filegator/filegator   "docker-php-entrypoi…"   3 hours ago   Up 3 hours   80/tcp, 0.0.0.0:8098->8080/tcp, :::8098->8080/tcp   thirsty_hawking
builder@builder-T100:~/filegator$ docker stop 516ac31e2554
516ac31e2554
builder@builder-T100:~/filegator$ docker start 516ac31e2554
516ac31e2554
```

Jumping onto the container, it’s clear it uses two folders under /var/www/filegator to store its data: private (users, sessions, logs) and repository (the uploaded files):

```bash
-rw-r--r-- 1 www-data www-data 12214 Apr 24 12:25 .phpunit.result.cache
drwxr-xr-x 1 root     root      4096 Apr 24 12:26 ..
drwxr-xr-x 1 www-data www-data  4096 Apr 24 12:26 .
drwxrwxr-x 5 www-data www-data  4096 Jul 13 15:17 private
drwxrwxr-x 2 www-data www-data  4096 Jul 13 15:20 repository
www-data@516ac31e2554:~/filegator$ cd repository/
www-data@516ac31e2554:~/filegator/repository$ ls
cert.pem
www-data@516ac31e2554:~/filegator/repository$ pwd
/var/www/filegator/repository
www-data@516ac31e2554:~/filegator/repository$ ls -lra ../private/
total 36
-rwxrwxr-x 1 www-data www-data  314 Apr 24 12:23 users.json.blank
-rw-r--r-- 1 www-data www-data  326 Jul 13 17:47 users.json
drwxr-xr-x 2 www-data www-data 4096 Jul 13 15:20 tmp
drwxrwxr-x 2 www-data www-data 4096 Jul 13 15:17 sessions
drwxrwxr-x 2 www-data www-data 4096 Jul 13 15:19 logs
-rwxrwxr-x 1 www-data www-data   14 Apr 24 12:23 .htaccess
-rwxrwxr-x 1 www-data www-data   17 Apr 24 12:23 .gitignore
drwxr-xr-x 1 www-data www-data 4096 Apr 24 12:26 ..
drwxrwxr-x 5 www-data www-data 4096 Jul 13 15:17 .
```

I stopped and removed that container, then launched a new one with some volume mounts:

```bash
$ mkdir ./repository2 && chmod 777 ./repository2
$ mkdir ./private2 && chmod 777 ./private2
$ docker run -p 8098:8080 -d \
    -v /home/builder/filegator/repository2:/var/www/filegator/repository \
    -v /home/builder/filegator/private2:/var/www/filegator/private \
    filegator/filegator
```

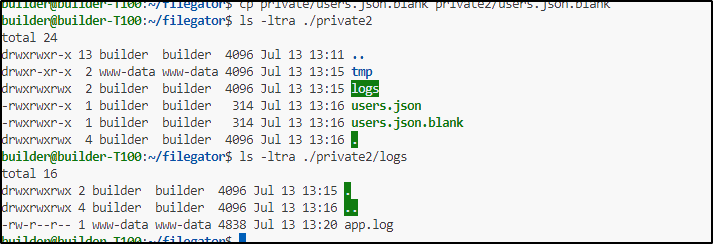

I did need to copy some files over; it seems to need a logs folder and a blank users.json:

```bash
builder@builder-T100:~/filegator$ mkdir private2/logs
builder@builder-T100:~/filegator$ chmod 777 private2/logs
builder@builder-T100:~/filegator$ cp private/users.json.blank private2/users.json
builder@builder-T100:~/filegator$ cp private/users.json.blank private2/users.json.blank
```

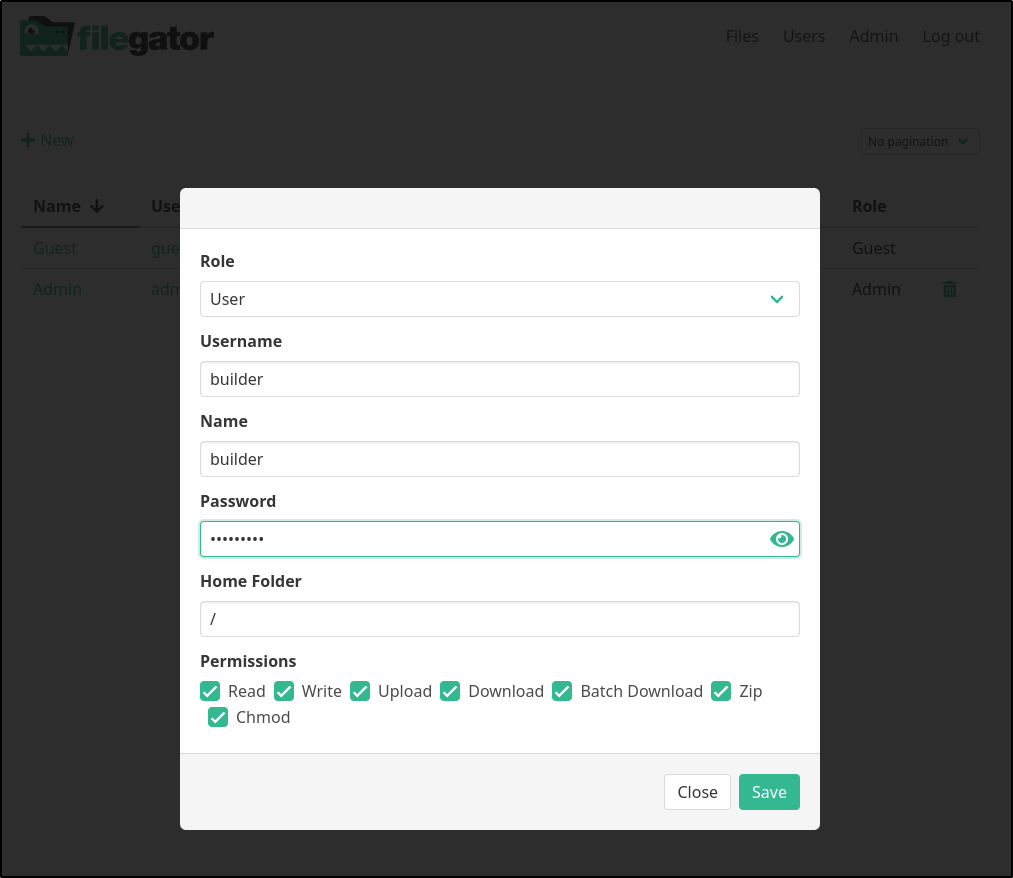

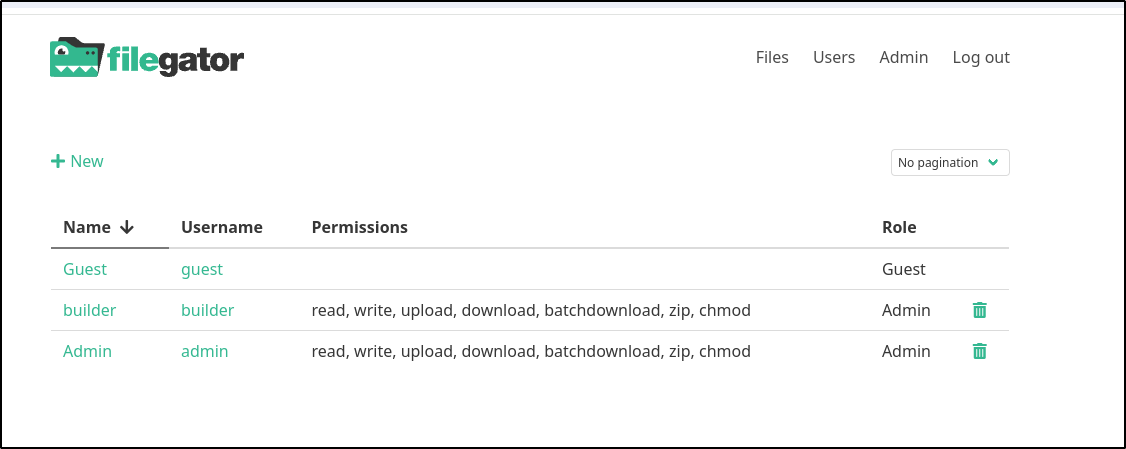

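I’m now going to log in as admin and add a new user.

I noticed the users did not persist, which I guessed was permissions-related. The listing below bears that out: users.json is owned by builder with mode rwxrwxr-x, so the container’s www-data user can read it but not write to it.

So I set the perms: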

```bash
builder@builder-T100:~/filegator$ ls -ltra ./private2
total 24
drwxrwxr-x 13 builder  builder  4096 Jul 13 13:11 ..
drwxr-xr-x  2 www-data www-data 4096 Jul 13 13:15 tmp
drwxrwxrwx  2 builder  builder  4096 Jul 13 13:15 logs
-rwxrwxr-x  1 builder  builder   314 Jul 13 13:16 users.json
-rwxrwxr-x  1 builder  builder   314 Jul 13 13:16 users.json.blank
drwxrwxrwx  4 builder  builder  4096 Jul 13 13:16 .
builder@builder-T100:~/filegator$ ls -ltra ./private2/logs
total 16
drwxrwxrwx 2 builder  builder  4096 Jul 13 13:15 .
drwxrwxrwx 4 builder  builder  4096 Jul 13 13:16 ..
-rw-r--r-- 1 www-data www-data 4838 Jul 13 13:20 app.log
builder@builder-T100:~/filegator$ chmod 777 ./private2/users.json
builder@builder-T100:~/filegator$ chmod 777 ./private2/users.json.blank
```

That fixed it.

I tested stopping and removing the container, then recreating it, and found the users persisted. So now I have a solution that should be a bit more durable.
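Pulling those FileGator steps together (folders, a blank users.json, a logs directory, permissions), a small helper like the one below could prepare the bind-mount directories in one go. It’s a sketch under a few assumptions: the `repository2`/`private2` layout mirrors my commands above, `sessions` and `tmp` are created up front even though the app appeared to create `tmp` itself, and `users.json.blank` has already been copied out of the image (for example with `docker cp <container>:/var/www/filegator/private/users.json.blank .`). The function name is hypothetical, and it keeps the same permissive world-writable approach I used rather than chowning to the container’s www-data UID.

```bash
# Hypothetical helper: prepare the host folders bind-mounted into FileGator
# at /var/www/filegator/repository and /var/www/filegator/private.
prepare_filegator_dirs() {
  base="$1"          # e.g. /home/builder/filegator
  blank_users="$2"   # users.json.blank copied out of the image beforehand

  [ -f "$blank_users" ] || { echo "missing $blank_users" >&2; return 1; }

  # FileGator wanted a logs folder up front; sessions and tmp are created
  # here too, which should be harmless if the app would create them itself.
  mkdir -p "$base/repository2" "$base/private2/logs" \
           "$base/private2/sessions" "$base/private2/tmp" || return 1

  # Seed users.json only once, so re-running never wipes users added in the UI.
  if [ ! -f "$base/private2/users.json" ]; then
    cp "$blank_users" "$base/private2/users.json" || return 1
  fi
  cp "$blank_users" "$base/private2/users.json.blank" || return 1

  # The container's www-data user is neither owner nor group member here,
  # so it needs read/write through the "other" bits. On a re-run, files the
  # container already owns can't be chmodded by us, but it can write them.
  chmod -R a+rwX "$base/repository2" "$base/private2" 2>/dev/null || \
    echo "note: skipped files already owned by the container" >&2
  return 0
}
```

With that in place, the same `docker run` with the two `-v` mounts should start cleanly and keep users across container recreation.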

External ingress

Let’s expose this through the production cluster using an external endpoint.

First, I’ll need an A record:

```bash
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n filegator
```

```json
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "7967af16-b75d-4114-924e-158157ed572f",
  "fqdn": "filegator.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/filegator",
  "name": "filegator",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}
```

We can then apply an Endpoints object pointing at the Docker host, a Service in front of it, and an Ingress:

```bash
$ cat filegator.yaml
```

```yaml
---
apiVersion: v1
kind: Endpoints
metadata:
  name: filegator-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: filegatorint
    port: 8098
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: filegator-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: filegator
    port: 80
    protocol: TCP
    targetPort: 8098
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: filegator-external-ip
  generation: 1
  labels:
    app.kubernetes.io/instance: filegatoringress
  name: filegatoringress
spec:
  rules:
  - host: filegator.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: filegator-external-ip
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - filegator.tpk.pw
    secretName: filegator-tls
```

```bash
$ kubectl apply -f ./filegator.yaml
endpoints/filegator-external-ip created
service/filegator-external-ip created
ingress.networking.k8s.io/filegatoringress created
```
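Since this Endpoints plus selector-less Service pattern works for any service running outside the cluster, it can be handy to template it. Below is a rough sketch as a shell function; the name `external_backend_manifest` and the `http` port name are hypothetical. It differs from my manifest above in two deliberate ways: the Service gets a normal ClusterIP with the cluster’s default IP family instead of being headless and dual-stack, and the Endpoints port uses the same name as the Service port, which is how kube-proxy pairs the two for a Service without a selector.

```bash
# Hypothetical helper: render an Endpoints object plus a selector-less
# Service for a backend that lives outside the cluster (e.g. a Docker host).
external_backend_manifest() {
  name="$1"   # shared by the Service and the Endpoints; the names must match
  ip="$2"     # IP of the machine running the container
  port="$3"   # host port the container is published on

  # The Endpoints port name ("http") matches the Service port name so the
  # two are paired; with no selector, Kubernetes leaves these Endpoints
  # alone instead of managing them itself.
  cat <<EOF
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
subsets:
- addresses:
  - ip: ${ip}
  ports:
  - name: http
    port: ${port}
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: ${port}
EOF
}
```

For example, `external_backend_manifest filegator-external-ip 192.168.1.100 8098 | kubectl apply -f -` would produce the backend half, leaving the Ingress as it is.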

Once I see the certificate is ready:

```bash
$ kubectl get cert filegator-tls
NAME            READY   SECRET          AGE
filegator-tls   True    filegator-tls   2m32s
```

I can now reach it externally with proper TLS

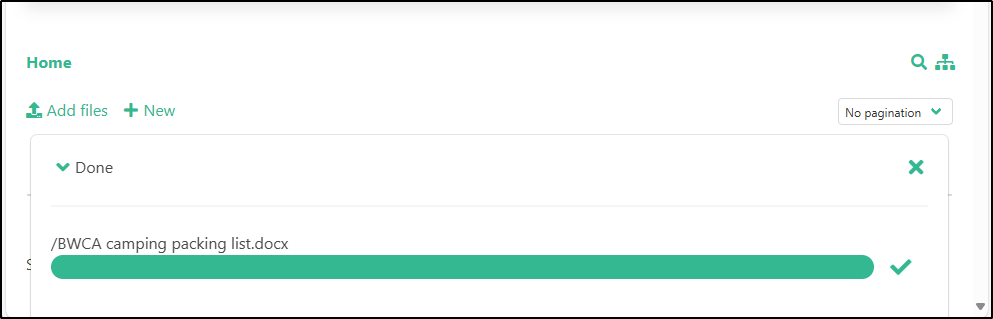

I logged in and changed the admin user password first

I tested uploading a file

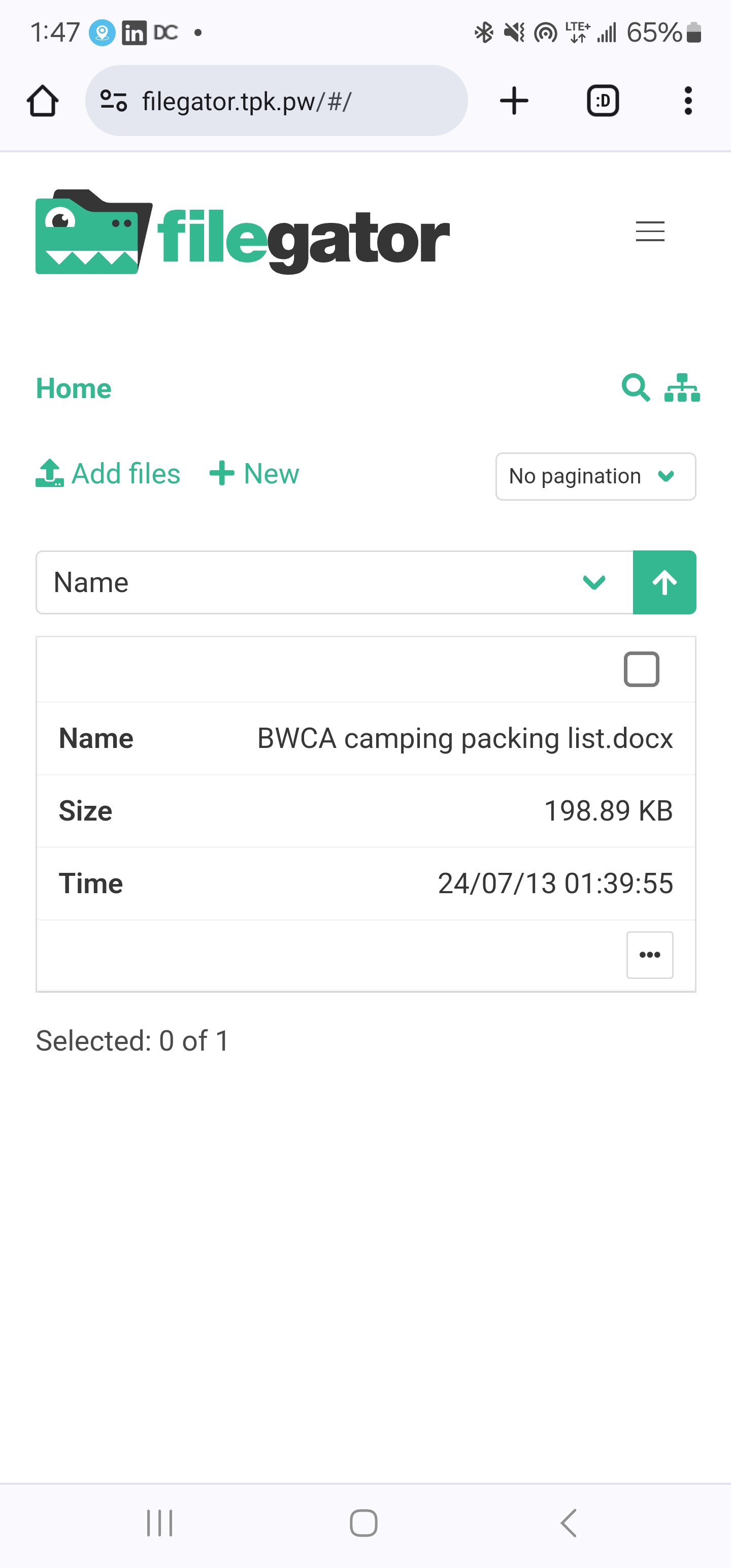

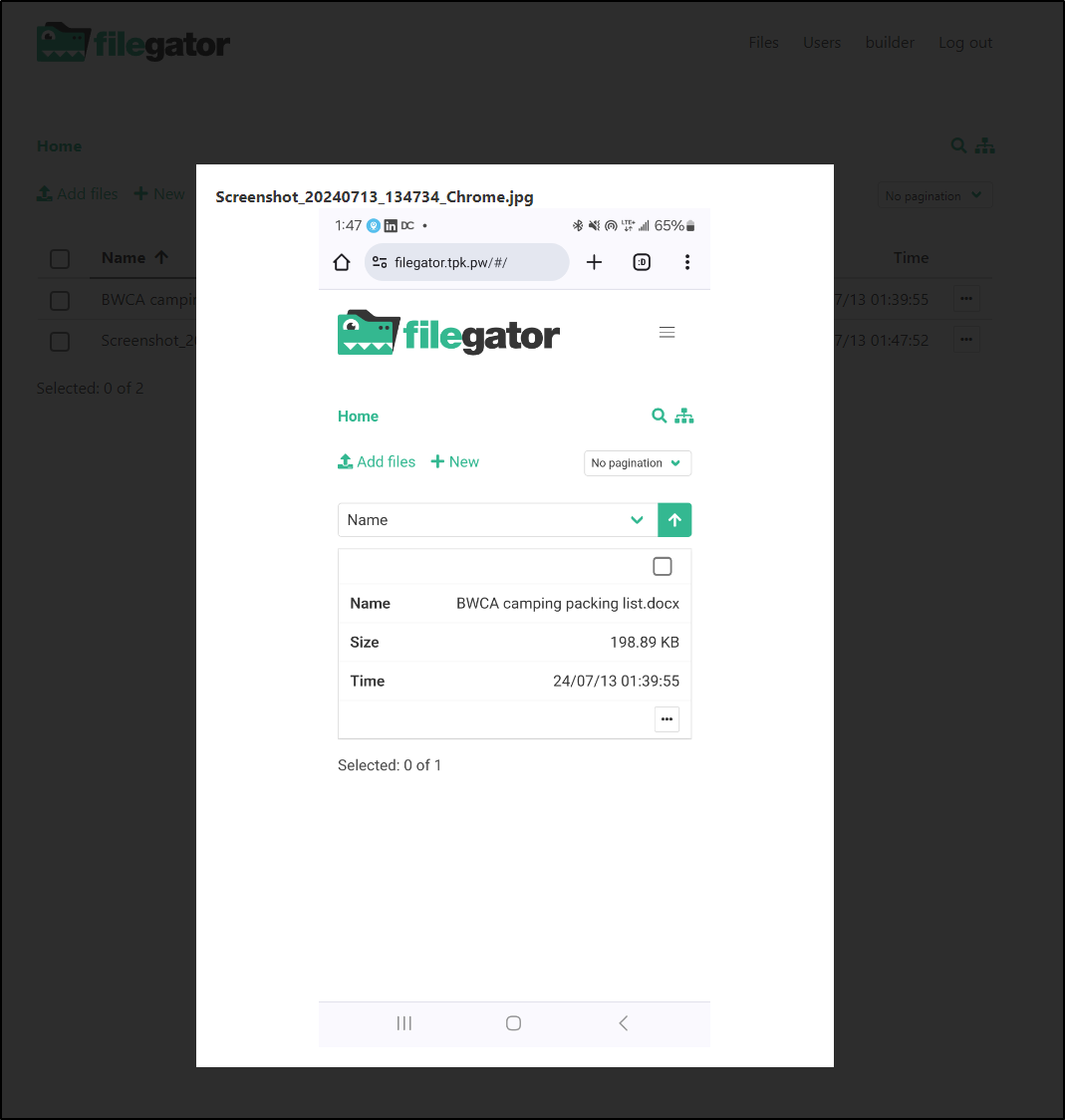

I also went on my phone and uploaded from there.

Back on the PC, we can see that small images get an inline preview.

The files end up in the volume mount on my Docker host:

```bash
builder@builder-T100:~/filegator$ ls -ltra repository2/
total 484
drwxrwxr-x 13 builder  builder    4096 Jul 13 13:11 ..
-rw-r--r--  1 www-data www-data 203659 Jul 13 13:39 'BWCA camping packing list.docx'
-rw-r--r--  1 www-data www-data 282301 Jul 13 13:47 Screenshot_20240713_134734_Chrome.jpg
drwxrwxrwx  2 builder  builder    4096 Jul 13 13:47 .
```

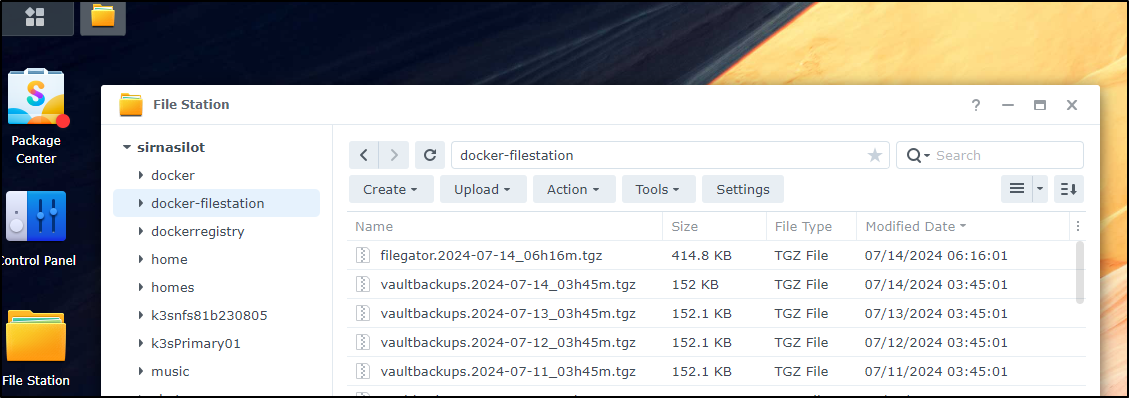

I could stop here and just swap out the mounts, but instead I’ll follow the pattern I used with Vaultwarden backups and use a cron job to back up periodically to a mounted fileshare on one of the NASes.

In our /etc/fstab, we can see the NFS mount to the NAS:

```bash
builder@builder-T100:~/filegator$ cat /etc/fstab | grep filestation
192.168.1.116:/volume1/docker-filestation /mnt/filestation nfs auto,nofail,noatime,nolock,intr,tcp,actimeo=1800 0 0
```

In my crontab, I’ll add a new backup job:

```bash
builder@builder-T100:~/filegator/repository2$ crontab -l
@reboot /sbin/swapoff -a
45 3 * * * tar -zcvf /mnt/filestation/vaultbackups.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/vaultwarden/data
55 3 * * * tar -zcvf /mnt/filestation/filegator.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/filegator/repository2
```
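Cron treats an unescaped `%` in a crontab line as a newline, which is why the `date` format above is written with `\%`. One way to sidestep that escaping, and to keep the NAS from filling up over time, is to move the work into a small script that cron calls. This is a sketch only: the `backup_dir` name and the 30-day retention window are my assumptions, not part of the setup above.

```bash
# Hypothetical backup helper for cron: tar one directory into a timestamped
# .tgz and prune that prefix's archives older than a retention window.
backup_dir() {
  src="$1"              # e.g. /home/builder/filegator/repository2
  dest="$2"             # e.g. /mnt/filestation (the NFS mount)
  prefix="$3"           # e.g. filegator
  keep_days="${4:-30}"  # assumed retention; not part of the original setup

  # Inside a script, % needs no escaping; \% is only required in crontab lines.
  archive="$dest/$prefix.$(date '+%Y-%m-%d_%Hh%Mm').tgz"

  # -C stores paths relative to the parent directory, so tar doesn't warn
  # about stripping the leading / on every run.
  tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1

  # Remove only this prefix's archives, and only those older than keep_days.
  find "$dest" -maxdepth 1 -type f -name "$prefix.*.tgz" \
    -mtime +"$keep_days" -exec rm -f {} + || return 1

  echo "$archive"
}
```

A crontab entry would then only need to call a script (for example a hypothetical `/home/builder/bin/filegator-backup.sh` that defines this function and runs `backup_dir /home/builder/filegator/repository2 /mnt/filestation filegator`), with no `\%` escaping in the crontab itself.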

I’ll do a quick test to see if it works by temporarily scheduling the job a minute or so out:

```bash
builder@builder-T100:~/filegator/repository2$ date
Sun Jul 14 06:14:59 AM CDT 2024
builder@builder-T100:~/filegator/repository2$ crontab -e
crontab: installing new crontab
builder@builder-T100:~/filegator/repository2$ crontab -l | grep filegator
16 6 * * * tar -zcvf /mnt/filestation/filegator.$(date '+\%Y-\%m-\%d_\%Hh\%Mm').tgz /home/builder/filegator/repository2
```

And I can confirm I now have a daily backup to the NAS
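Seeing an archive land on the NAS is good; knowing it can be read back is better. Here is a quick sketch of a check (the function name is hypothetical) that picks the newest archive for a prefix, relying on the zero-padded timestamp in the file name sorting chronologically, and lists its contents so a truncated or corrupt archive fails loudly.

```bash
# Hypothetical check: find the newest archive for a prefix and confirm
# tar can read it end to end (a damaged .tgz fails the gzip/tar listing).
verify_latest_backup() {
  dest="$1"     # e.g. /mnt/filestation
  prefix="$2"   # e.g. filegator

  # Names look like prefix.YYYY-MM-DD_HHhMMm.tgz, so a plain sort is chronological.
  latest="$(ls -1 "$dest"/"$prefix".*.tgz 2>/dev/null | sort | tail -n 1)"
  if [ -z "$latest" ]; then
    echo "no $prefix archives in $dest" >&2
    return 1
  fi

  tar -tzf "$latest" > /dev/null || return 1
  echo "$latest"
}
```

Running `verify_latest_backup /mnt/filestation filegator` after the job fires should print the newest archive’s path, or return non-zero if there is nothing there or the archive can’t be read.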

Summary

We tested out two different but quite nice Open-Source file transfer tools: SFTPGo and Filegator. SFTPGo was easy to set up, and because it primarily serves SFTP over a plain TCP port, we were able to expose it from a test cluster through the firewall using a NodePort.

With Filegator, we quickly set up a Docker instance on a Docker host and tested locally. We moved its data onto host volume mounts, then exposed it through the production Kubernetes cluster to get TLS ingress with a proper domain. Lastly, we added a daily backup to durable storage to provide proper DR in case of a container crash or Docker host failure.

SFTPGo is far more configurable and could be used with groups and teams; there were a lot of user features I didn’t explore. Filegator, while simple, is very easy to understand and use. Of the two, I’ll likely keep Filegator in the mix as a simple, fast upload tool for on-the-go screenshots and sharing.