Published: Jun 13, 2024 by Isaac Johnson

I had a couple of bookmarked apps I wanted to explore. The first is Obsidian, which I became aware of from this MariusHosting post. The second is Timesy, which I also saw in my feed from another MariusHosting post.

Obsidian is a note-taking app akin to Trilium, which we explored in April, or Siyuan, which we also looked at.

Timesy is just a simple timer app, akin to some of the timer apps we looked at in March.

We’ll launch both in Kubernetes and expose them with TLS. I’ll use AWS Route53 for one and Azure DNS for the other.

Let’s dig in!

Obsidian

Obsidian is a note-taking app; the container image we’ll use comes from LinuxServer.io, who also brought us the code-server and Darktable images.

In their docs, they suggest firing it up in Docker:

```bash
docker run -d \
  --name=obsidian \
  --security-opt seccomp=unconfined `#optional` \
  -e PUID=1000 \
  -e PGID=1000 \
  -e TZ=Etc/UTC \
  -p 3000:3000 \
  -p 3001:3001 \
  -v /path/to/config:/config \
  --device /dev/dri:/dev/dri `#optional` \
  --shm-size="1gb" \
  --restart unless-stopped \
  lscr.io/linuxserver/obsidian:latest
```
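To make the Docker-to-Kubernetes translation a bit more mechanical, here is a minimal Python sketch that maps the `-e`, `-p`, and `--name` flags and the image of a `docker run` command onto the container fields a Deployment uses. The function `docker_run_to_container` is hypothetical (not part of any linuxserver or Kubernetes tooling), and it only understands the handful of flags used above; everything else is reported back as untranslated.

```python
import shlex

# docker run flags that take their value as the next token (subset used above).
VALUE_FLAGS = {"-e", "-p", "-v", "--name", "--device", "--security-opt", "--restart", "--shm-size"}


def docker_run_to_container(cmd: str) -> tuple[dict, list[str]]:
    """Hypothetical helper: map -e/-p/--name and the image of a `docker run`
    command onto a Kubernetes container spec; return untranslated flags too."""
    tokens = shlex.split(cmd)
    if tokens[:2] != ["docker", "run"]:
        raise ValueError("expected a 'docker run' command")
    container: dict = {"env": [], "ports": []}
    unmapped: list[str] = []
    i = 2
    while i < len(tokens):
        tok = tokens[i]
        if tok.startswith("--") and "=" in tok:  # --flag=value form
            tok, value = tok.split("=", 1)
            i += 1
        elif tok in VALUE_FLAGS:  # -f value form
            value = tokens[i + 1]
            i += 2
        elif tok.startswith("-"):  # boolean flag such as -d
            i += 1
            continue
        else:  # the first positional argument is the image
            container["image"] = tok
            break
        if tok == "-e":
            key, _, val = value.partition("=")
            # Kubernetes env values must be strings, hence "1000" rather than 1000.
            container["env"].append({"name": key, "value": val})
        elif tok == "-p":
            # HOST:CONTAINER; a Deployment only declares the container side.
            container["ports"].append({"containerPort": int(value.split(":")[-1])})
        elif tok == "--name":
            container["name"] = value
        else:
            unmapped.append(f"{tok} {value}")
    return container, unmapped


cmd = """docker run -d --name=obsidian -e PUID=1000 -e PGID=1000 -e TZ=Etc/UTC
  -p 3000:3000 -p 3001:3001 -v /path/to/config:/config --shm-size="1gb"
  --restart unless-stopped lscr.io/linuxserver/obsidian:latest"""
container, unmapped = docker_run_to_container(cmd)
print(container)
print(unmapped)
```

The untranslated flags are the interesting part: the volume becomes a PersistentVolumeClaim, restarts are handled by the Deployment controller, and the shared-memory size has no direct container field (a memory-backed `emptyDir` mounted at `/dev/shm` is the usual workaround, which the manifest below does not include). The manifest also only declares port 3000.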

I’ll create the rough equivalent in Kubernetes:

```yaml
# Persistent Volume Claim
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: obsidian-config-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
# Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: obsidian
spec:
  replicas: 1
  selector:
    matchLabels:
      app: obsidian
  template:
    metadata:
      labels:
        app: obsidian
    spec:
      containers:
        - name: obsidian
          image: lscr.io/linuxserver/obsidian:latest
          env:
            - name: PUID
              value: "1000"
            - name: PGID
              value: "1000"
            - name: TZ
              value: "Etc/UTC"
          volumeMounts:
            - name: config-volume
              mountPath: /config
          ports:
            - containerPort: 3000
              name: http
          resources:
            requests:
              memory: "250Mi"
            limits:
              memory: "1Gi"
      restartPolicy: Always # Deployments only allow Always; Docker's unless-stopped has no equivalent
      volumes:
        - name: config-volume
          persistentVolumeClaim:
            claimName: obsidian-config-pvc
---
# Service
apiVersion: v1
kind: Service
metadata:
  name: obsidian
spec:
  selector:
    app: obsidian
  ports:
    - port: 3000
      targetPort: 3000
      protocol: TCP
      name: http
  type: ClusterIP
```

And apply it:

```
$ kubectl apply -f ./obsidian.yaml
persistentvolumeclaim/obsidian-config-pvc unchanged
deployment.apps/obsidian created
service/obsidian unchanged
```

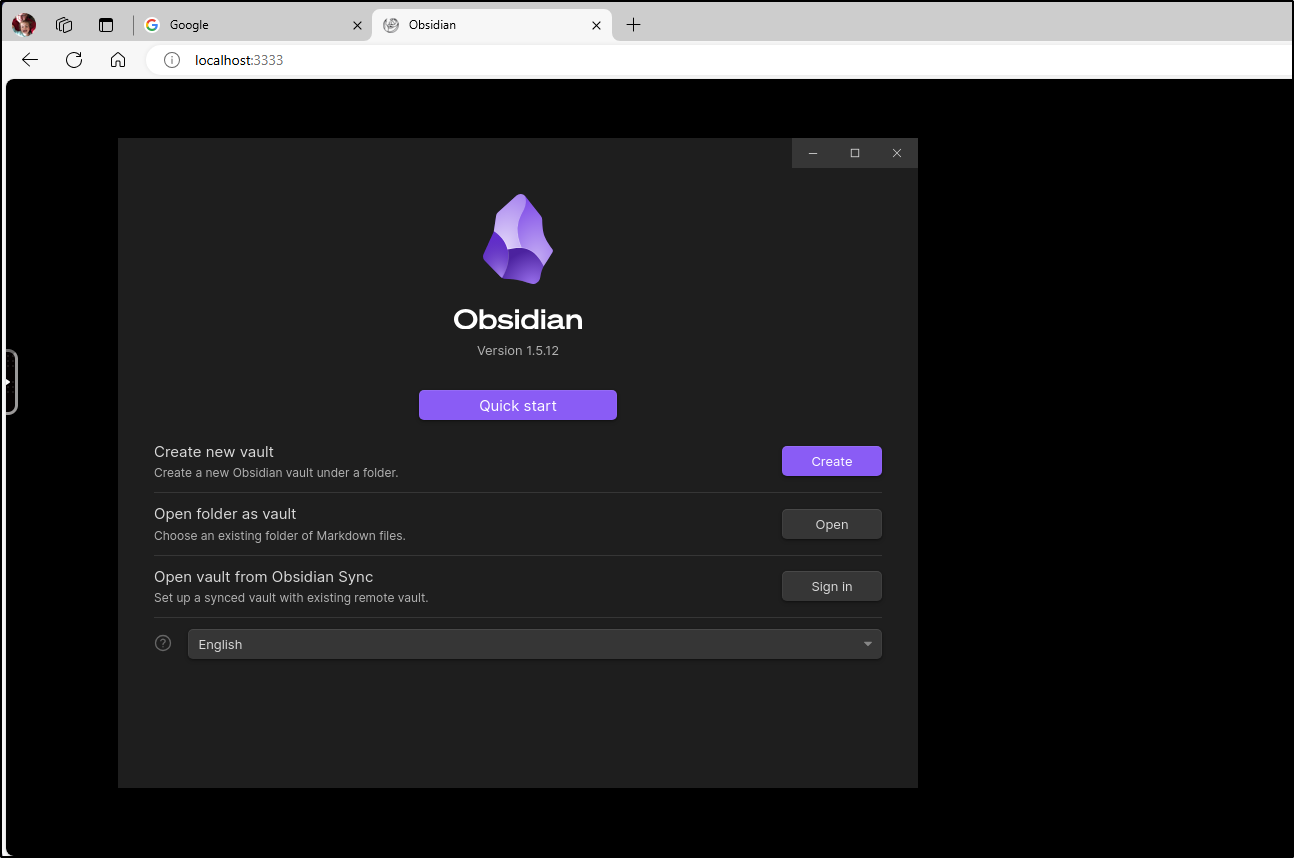

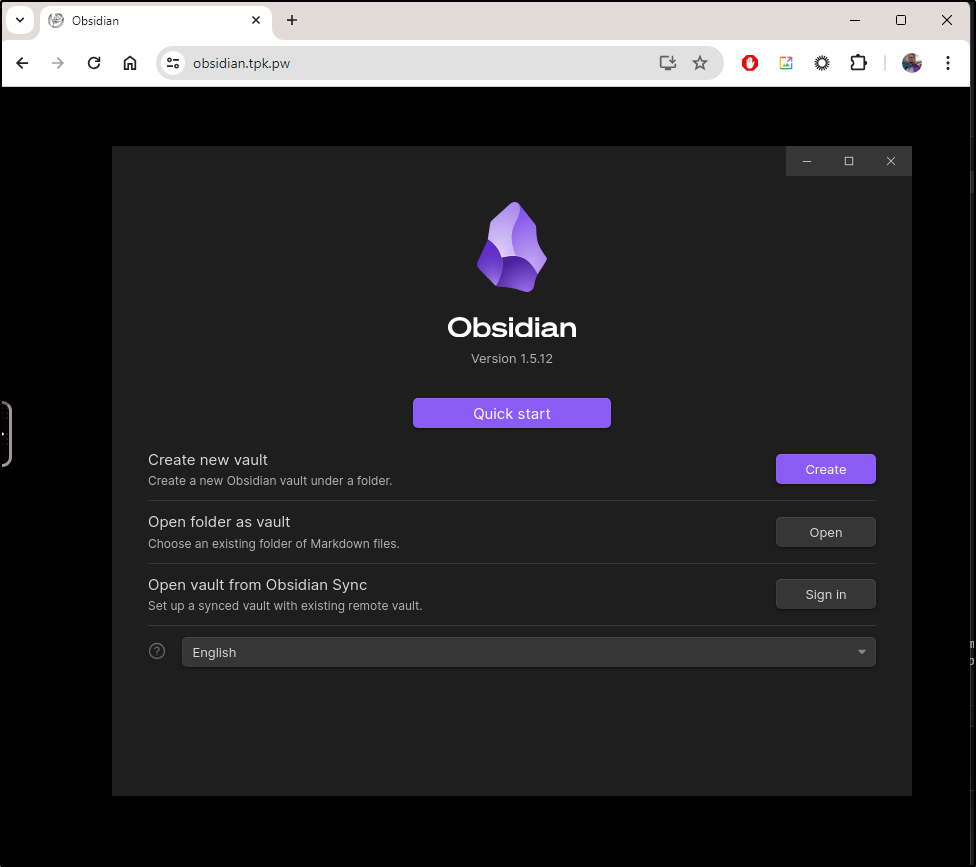

I can then port-forward to test:

```
$ kubectl port-forward svc/obsidian 3333:3000
Forwarding from 127.0.0.1:3333 -> 3000
Forwarding from [::1]:3333 -> 3000
```

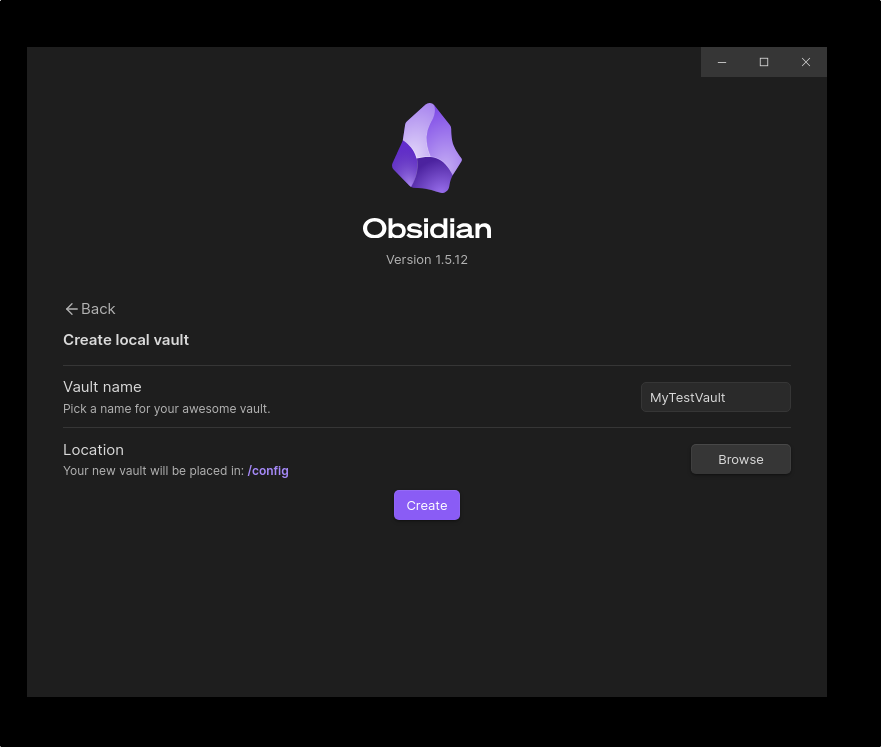

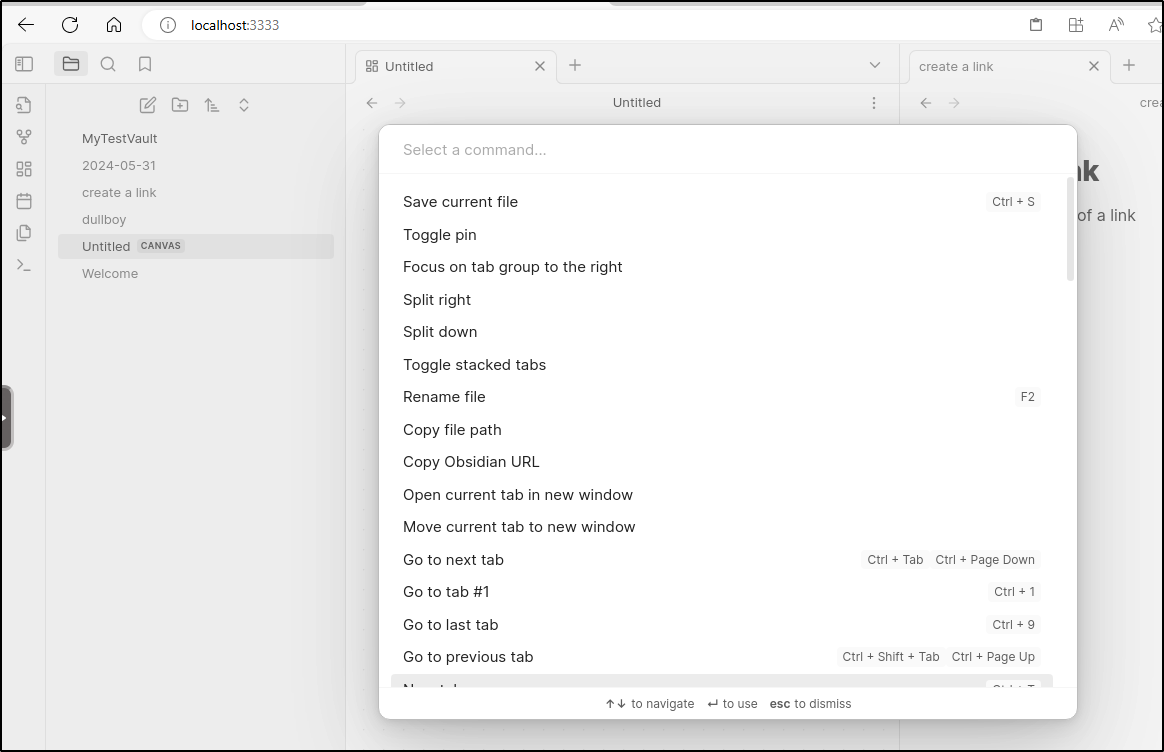

I can then create a new local vault

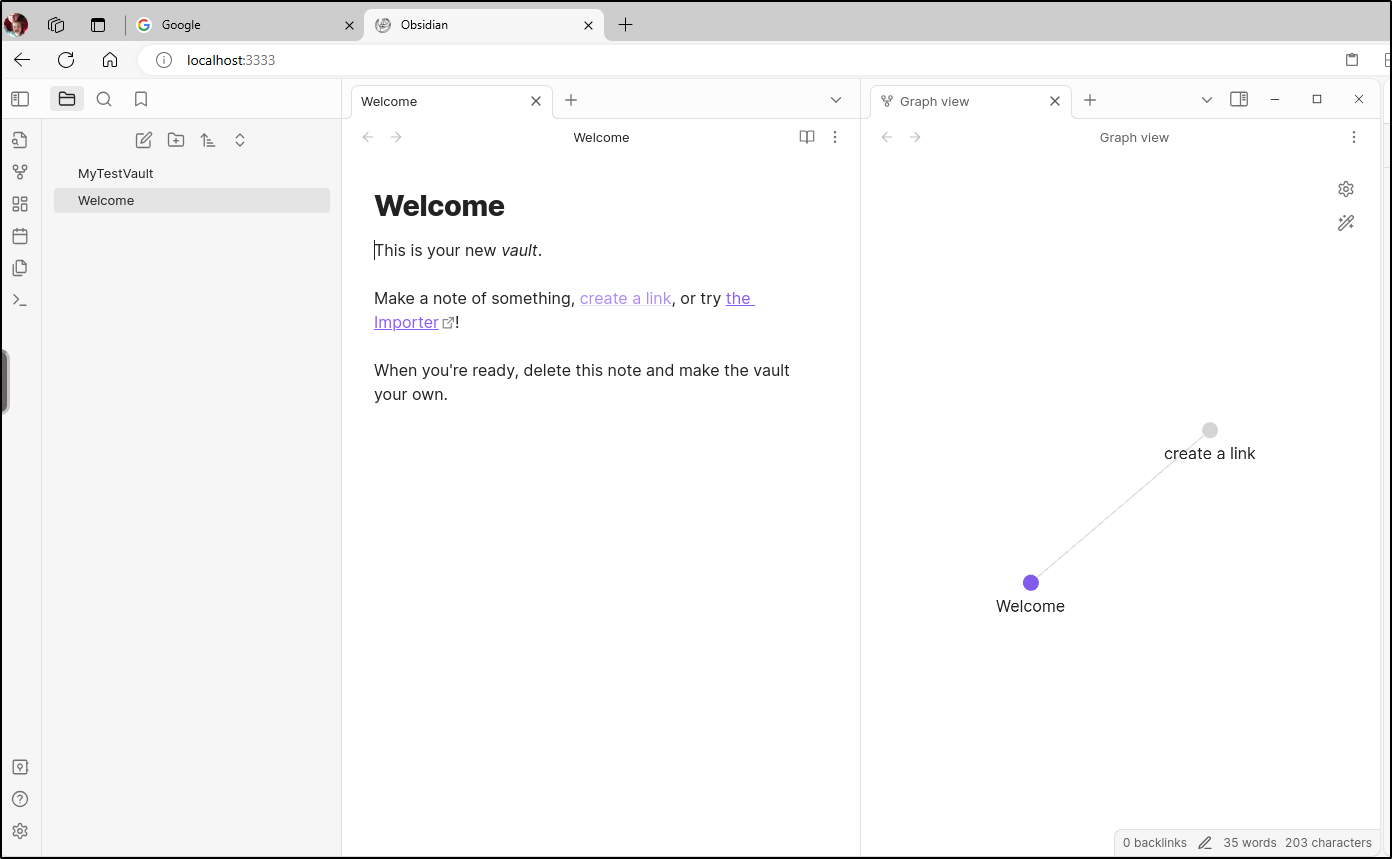

I now have the first page in the vault set up: just a simple welcome page.

Here we can see adding links, tables and editing in general:

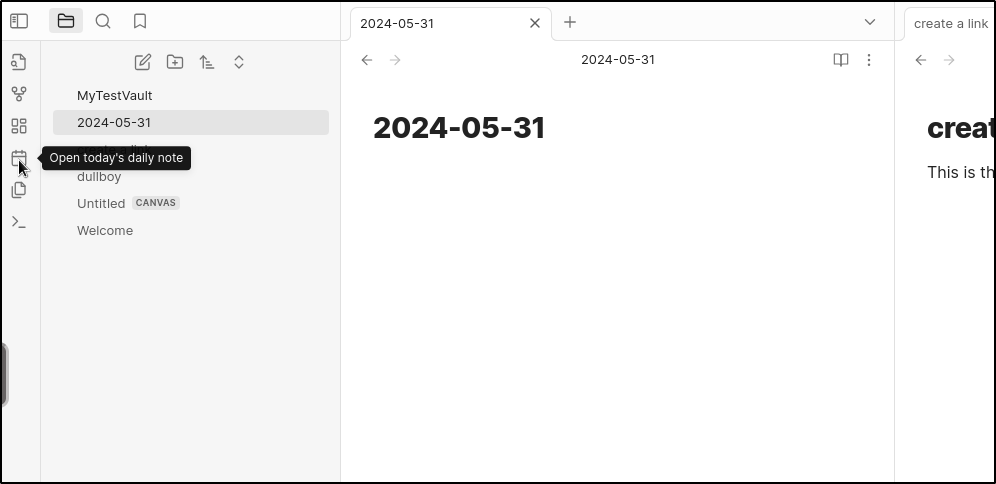

There are a few handy shortcuts, like a “daily note” and a command palette.

I can exec into the pod and see that, like the other linuxserver apps, it’s just a desktop running in the container and served over VNC. The config is stored in the user’s home directory, /config:

```
$ kubectl exec -it obsidian-67f5484bd9-ff7b4 -- /bin/bash
root@obsidian-67f5484bd9-ff7b4:/# ls -ltra /config
total 48
drwxr-xr-x  1 root root 4096 May 30 23:52 ..
drwxr-xr-x  2 abc  abc  4096 May 30 23:52 ssl
-rw-r--r--  1 abc  abc    37 May 30 23:52 .bashrc
drwx------  3 abc  abc  4096 May 30 23:52 .dbus
drwxr-xr-x  2 abc  abc  4096 May 30 23:52 .fontconfig
drwx------  3 abc  abc  4096 May 30 23:52 .pki
drwxr-xr-x  5 abc  abc  4096 May 30 23:52 .cache
drwxr-xr-x  4 abc  abc  4096 May 30 23:55 .local
drwxr-xr-x  6 abc  abc  4096 May 30 23:55 .config
drwxr-xr-x  4 abc  abc  4096 May 30 23:55 .XDG
drwxrwxrwx 11 abc  abc  4096 May 30 23:56 .
drwxr-xr-x  3 abc  abc  4096 May 31 00:05 MyTestVault
root@obsidian-67f5484bd9-ff7b4:/# du -chs /config/MyTestVault/
52K     /config/MyTestVault/
52K     total
```

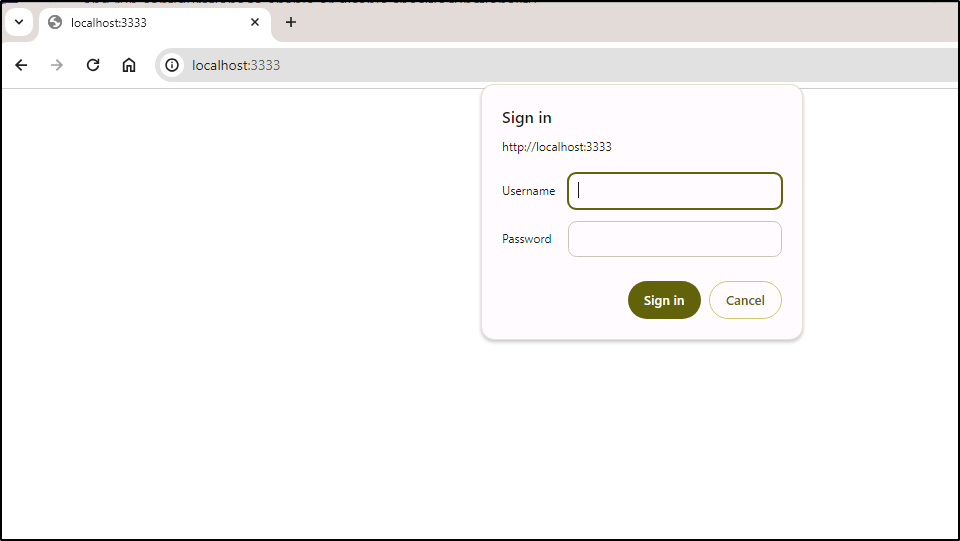

The linuxserver image supports CUSTOM_USER and PASSWORD environment variables, which enable HTTP basic auth, so I’ll add those to the Deployment.
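For context, HTTP Basic auth (RFC 7617) has the browser send an `Authorization` header containing the base64 encoding of `user:password` on every request. Base64 is an encoding, not encryption, so anyone who sees the header can recover the password; that is why it should only travel over TLS. A minimal Python sketch, assuming the image uses standard Basic auth as its docs describe, and using the example credentials from the manifest below:

```python
import base64


def basic_auth_header(user: str, password: str) -> str:
    """Build the value of an HTTP Basic Authorization header (RFC 7617)."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def decode_basic_auth(header: str) -> tuple[str, str]:
    """Reverse the encoding: no key is needed to read the credentials back."""
    scheme, _, token = header.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic auth header")
    user, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return user, password


header = basic_auth_header("builder", "asdfasdf")
print(header)
print(decode_basic_auth(header))
```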

```
$ cat obsidian.yaml
# Persistent Volume Claim
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: obsidian-config-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
# Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: obsidian
spec:
  replicas: 1
  selector:
    matchLabels:
      app: obsidian
  template:
    metadata:
      labels:
        app: obsidian
    spec:
      containers:
        - name: obsidian
          image: lscr.io/linuxserver/obsidian:latest
          env:
            - name: PUID
              value: "1000"
            - name: PGID
              value: "1000"
            - name: TZ
              value: "Etc/UTC"
            - name: CUSTOM_USER
              value: "builder"
            - name: PASSWORD
              value: "asdfasdf"
          volumeMounts:
            - name: config-volume
              mountPath: /config
          ports:
            - containerPort: 3000
              name: http
          resources:
            requests:
              memory: "250Mi"
            limits:
              memory: "1Gi"
      restartPolicy: Always # Deployments only allow Always; Docker's unless-stopped has no equivalent
      volumes:
        - name: config-volume
          persistentVolumeClaim:
            claimName: obsidian-config-pvc
---
# Service
apiVersion: v1
kind: Service
metadata:
  name: obsidian
spec:
  selector:
    app: obsidian
  ports:
    - port: 3000
      targetPort: 3000
      protocol: TCP
      name: http
  type: ClusterIP
```

And apply it to update the Deployment:

```
$ kubectl apply -f ./obsidian.yaml
persistentvolumeclaim/obsidian-config-pvc unchanged
deployment.apps/obsidian configured
service/obsidian unchanged
```

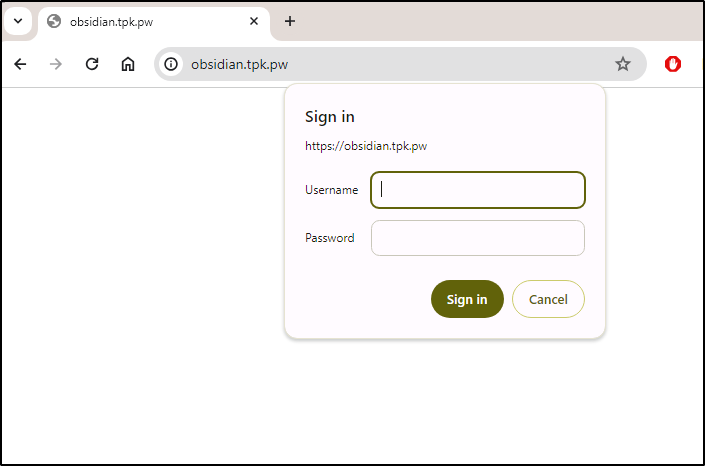

Let’s see if basic auth survives an HTTPS ingress (usually it’s limited to HTTP).

I’ll create an A record in Azure DNS:

```
$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n obsidian
{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "390f3c1d-d23d-40eb-96e5-ecbeded90ae9",
  "fqdn": "obsidian.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/obsidian",
  "name": "obsidian",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}
```

I’ll need to redeploy to my primary cluster, as thus far this has only been in my test cluster:

```
$ kubectl apply -f ./obsidian.yaml
persistentvolumeclaim/obsidian-config-pvc created
deployment.apps/obsidian created
service/obsidian created
```

Then I’ll create an Ingress:

```
$ cat obsidian.ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
  name: obsidian-ingress
spec:
  rules:
    - host: obsidian.tpk.pw
      http:
        paths:
          - backend:
              service:
                name: obsidian
                port:
                  number: 3000
            path: /
            pathType: Prefix
  tls:
    - hosts:
        - obsidian.tpk.pw
      secretName: obsidian-tls

$ kubectl apply -f ./obsidian.ingress.yaml
ingress.networking.k8s.io/obsidian-ingress created
```

Within a couple of minutes, the certificate was ready:

```
$ kubectl get cert obsidian-tls
NAME           READY   SECRET         AGE
obsidian-tls   True    obsidian-tls   2m4s
```

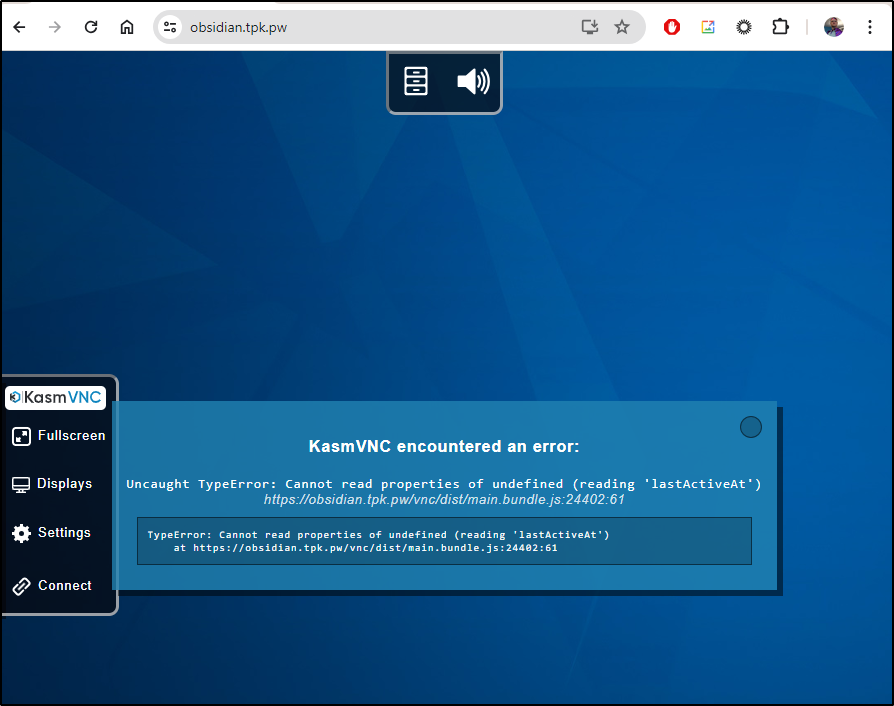

But I get a crash when I log in.

The errors in the log show that this is a WebSocket app:

```
[ls.io-init] done.
Obt-Message: Xinerama extension is not present on the server
2024-05-31 00:25:01,333 [INFO] websocket 0: got client connection from 127.0.0.1
2024-05-31 00:25:01,333 [INFO] websocket 0: /websockify request failed websocket checks, not a GET request, or missing Upgrade header
2024-05-31 00:25:01,333 [INFO] websocket 0: 127.0.0.1 198.176.160.161 - "GET /websockify HTTP/1.1" 404 180
2024-05-31 00:25:45,154 [INFO] websocket 1: got client connection from 127.0.0.1
2024-05-31 00:25:45,154 [INFO] websocket 1: /websockify request failed websocket checks, not a GET request, or missing Upgrade header
2024-05-31 00:25:45,155 [INFO] websocket 1: 127.0.0.1 198.176.160.161 - "GET /websockify HTTP/1.1" 404 180
```
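For context on that log line: a WebSocket client opens its connection with an HTTP/1.1 `GET` carrying `Upgrade: websocket`, `Connection: Upgrade`, and a random `Sec-WebSocket-Key`, and the server answers with `Sec-WebSocket-Accept`, the base64 SHA-1 of the key plus a fixed GUID (RFC 6455). `Upgrade` and `Connection` are hop-by-hop headers, so a proxy that does not explicitly forward them drops them, which matches the failure above. A minimal Python sketch of the server-side check; the function names are illustrative and this is not websockify’s actual code:

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def is_websocket_upgrade(method: str, headers: dict[str, str]) -> bool:
    """Illustrative approximation of the check reported in the log:
    the request must be a GET that asks to upgrade to websocket."""
    h = {k.lower(): v for k, v in headers.items()}
    connection_tokens = [t.strip().lower() for t in h.get("connection", "").split(",")]
    return (
        method == "GET"
        and h.get("upgrade", "").lower() == "websocket"
        and "upgrade" in connection_tokens
        and "sec-websocket-key" in h
    )


def accept_key(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept value for a Sec-WebSocket-Key."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


# What the pod sees if the proxy drops the hop-by-hop headers:
stripped = {"Host": "obsidian.tpk.pw"}
# What it should see when the proxy passes the upgrade through:
upgraded = {
    "Host": "obsidian.tpk.pw",
    "Upgrade": "websocket",
    "Connection": "keep-alive, Upgrade",
    "Sec-WebSocket-Key": "dGhlIHNhbXBsZSBub25jZQ==",  # sample key from RFC 6455
}
print(is_websocket_upgrade("GET", stripped))
print(is_websocket_upgrade("GET", upgraded))
print(accept_key(upgraded["Sec-WebSocket-Key"]))
```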

I’ll add the “websocket” annotation

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: obsidian
  name: obsidian-ingress
spec:
  rules:
    - host: obsidian.tpk.pw
      http:
        paths:
          - backend:
              service:
                name: obsidian
                port:
                  number: 3000
            path: /
            pathType: Prefix
  tls:
    - hosts:
        - obsidian.tpk.pw
      secretName: obsidian-tls
```

Then I delete and re-apply the Ingress:

```
builder@LuiGi:~/Workspaces/miscapps-06$ kubectl delete -f obsidian.ingress.yaml
ingress.networking.k8s.io "obsidian-ingress" deleted
builder@LuiGi:~/Workspaces/miscapps-06$ kubectl apply -f ./obsidian.ingress.yaml
ingress.networking.k8s.io/obsidian-ingress created
```

This time it works.

If we forget our password, we can also fetch it from Kubernetes

```
$ kubectl get deployments obsidian -o json | jq '.spec.template.spec.containers[] | .env[] | select(.name == "CUSTOM_USER") | .value'
"builder"
$ kubectl get deployments obsidian -o json | jq '.spec.template.spec.containers[] | .env[] | select(.name == "PASSWORD") | .value'
"asdfasdfasdf"
```
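The same lookup as the `jq` filter, as a small Python sketch that works on the parsed output of `kubectl get deployment obsidian -o json`. It also makes the point above concrete: a plain `env` value is readable by anyone who can read the Deployment. The function name and the trimmed sample JSON are illustrative.

```python
import json
from typing import Optional


def env_value(deployment: dict, name: str) -> Optional[str]:
    """Return the first inline `value` of env var `name` in the pod template's
    containers, like the jq filter above (jq would print every match)."""
    for container in deployment["spec"]["template"]["spec"]["containers"]:
        for var in container.get("env", []):
            if var.get("name") == name:
                # Vars sourced via valueFrom (e.g. a Secret) have no inline value.
                return var.get("value")
    return None


# Trimmed stand-in for `kubectl get deployment obsidian -o json` output.
raw = """{"spec": {"template": {"spec": {"containers": [{"name": "obsidian",
  "env": [{"name": "PUID", "value": "1000"}, {"name": "CUSTOM_USER", "value": "builder"}]}]}}}}"""
deployment = json.loads(raw)
print(env_value(deployment, "CUSTOM_USER"))
print(env_value(deployment, "PASSWORD"))
```

Against the real cluster, `raw` would be the stdout of the kubectl command instead of the sample string.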

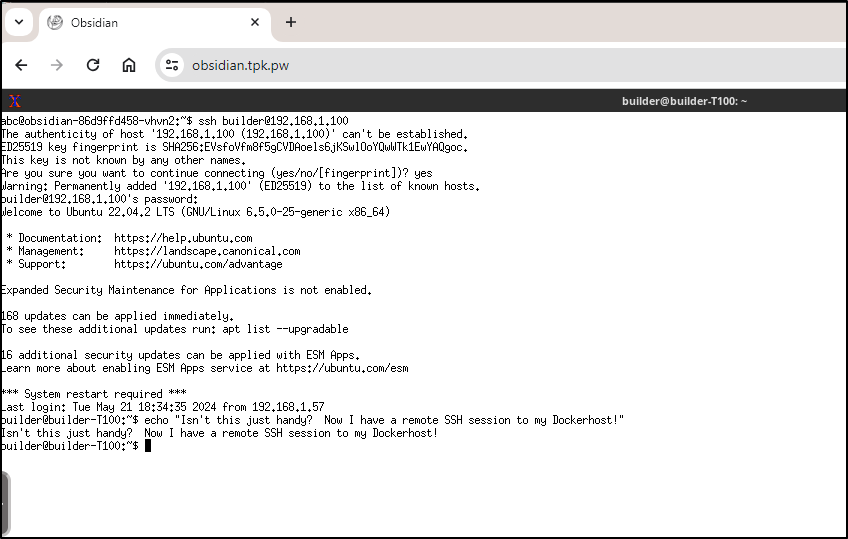

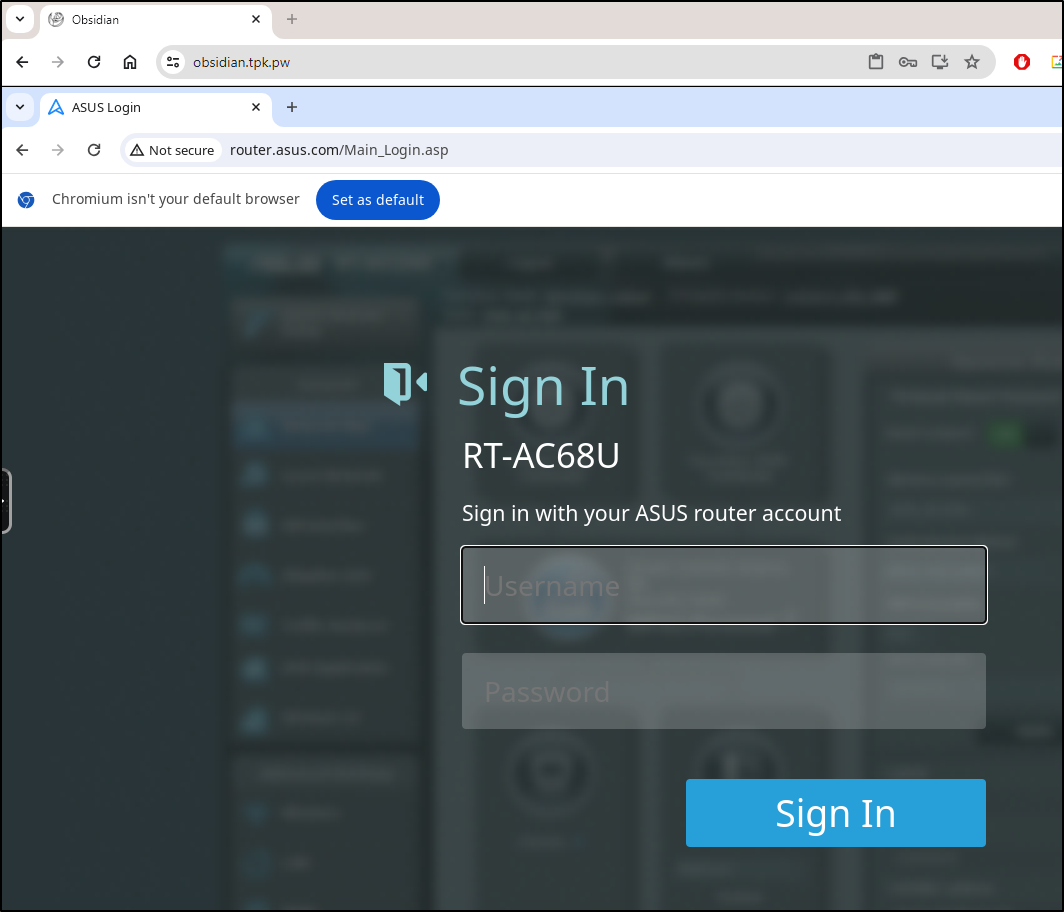

We can easily use it for notes, but we can also close the app and launch a browser and/or an xterm inside the desktop to act as a remote shell:

XTerm shell:

The browser is even handier: I now have an easy way to manage my router, whose web interface is restricted to local traffic.

If it isn’t clear how I launch the Obsidian app or Chromium/Xterm, it’s just a right-click in the middle of the screen, which brings up the pop-up launcher menu.

Timesy

Another app I bookmarked from a MariusHosting blog was Timesy.

The Marius article suggests using docker compose:

```yaml
version: "3.9"
services:
  timesy:
    container_name: Timesy
    image: ghcr.io/remvze/timesy:latest
    shm_size: 5g
    security_opt:
      - no-new-privileges:false
    ports:
      - 3439:8080
    restart: on-failure:5
```
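The conversion from compose to Kubernetes is mostly mechanical, so here is a minimal Python sketch of it, assuming the compose service has already been parsed into a dict (parsing the YAML file itself is not shown). The converter `compose_service_to_k8s` is hypothetical; it only translates `image` and simple `HOST:CONTAINER` port entries, and it leaves out `shm_size`, `security_opt`, and `restart`, just as the hand-written manifest below does. The Service port of 80 mirrors that manifest.

```python
import json


def compose_service_to_k8s(name: str, svc: dict, service_port: int = 80) -> list[dict]:
    """Hypothetical converter: one compose service -> Deployment + ClusterIP Service.
    Only `image` and simple "HOST:CONTAINER" `ports` entries are translated."""
    # Kubernetes only needs the container side of each HOST:CONTAINER mapping.
    container_ports = [int(str(p).split(":")[-1]) for p in svc.get("ports", [])]
    if not container_ports:
        raise ValueError("a Service needs at least one port")
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{name}-deployment"},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": svc["image"],
                    "ports": [{"containerPort": p} for p in container_ports],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{name}-service"},
        "spec": {
            "selector": labels,
            "ports": [{"protocol": "TCP", "port": service_port, "targetPort": container_ports[0]}],
            "type": "ClusterIP",
        },
    }
    return [deployment, service]


timesy = {
    "image": "ghcr.io/remvze/timesy:latest",
    "shm_size": "5g",
    "ports": ["3439:8080"],
    "restart": "on-failure:5",
}
manifests = compose_service_to_k8s("timesy", timesy)
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Because JSON is valid YAML, the printed `List` could be piped to `kubectl apply -f -` instead of writing the manifest by hand.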

Let’s turn that into a basic Kubernetes manifest:

```
$ cat timsy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: timesy-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: timesy
  template:
    metadata:
      labels:
        app: timesy
    spec:
      containers:
        - name: timesy
          image: ghcr.io/remvze/timesy:latest
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: timesy-service
spec:
  selector:
    app: timesy
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: ClusterIP
```

We can then apply it:

```
$ kubectl apply -f ./timsy.yaml
deployment.apps/timesy-deployment created
service/timesy-service created
```

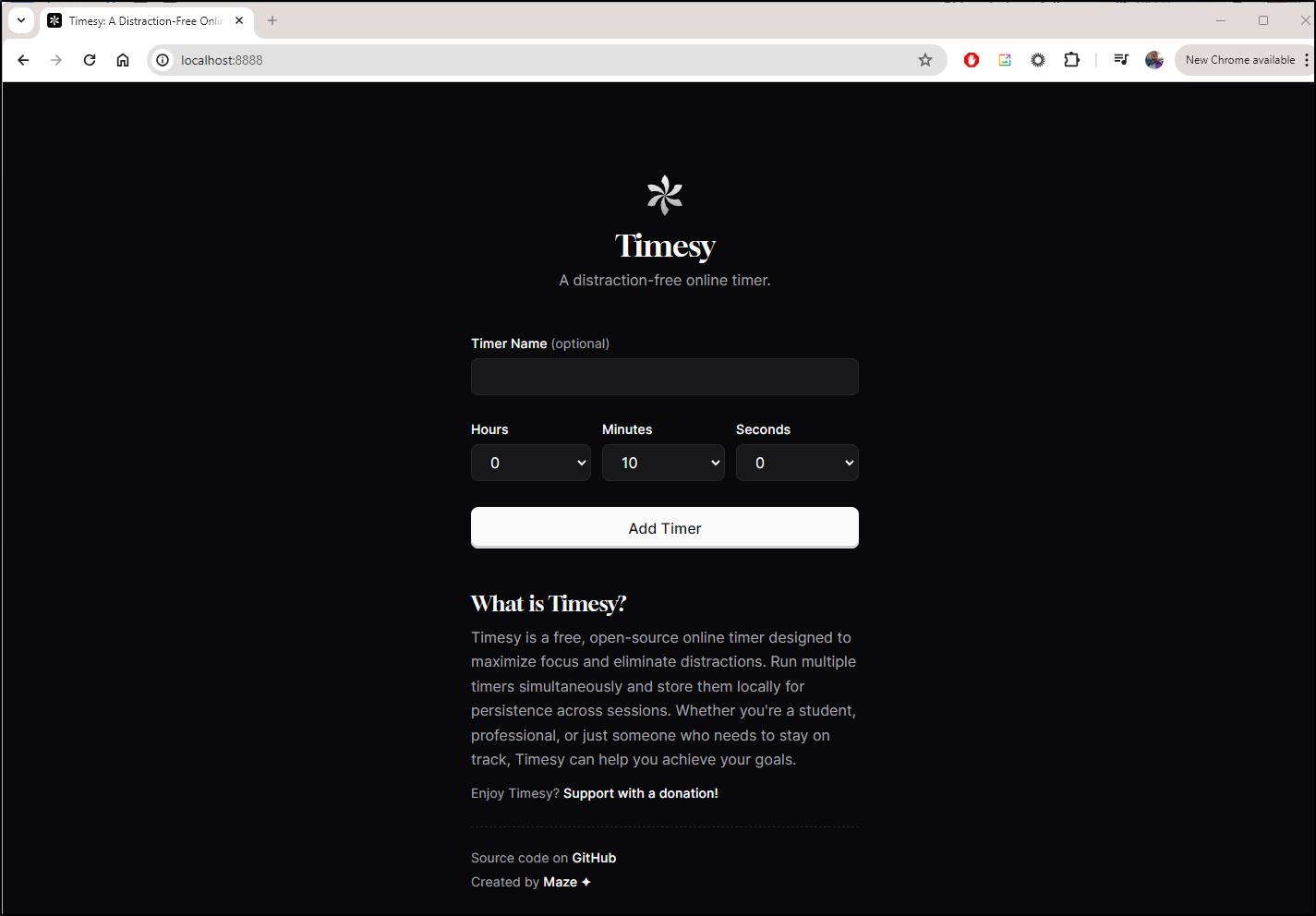

I’ll port-forward

```
$ kubectl port-forward svc/timesy-service 8888:80
Forwarding from 127.0.0.1:8888 -> 8080
Forwarding from [::1]:8888 -> 8080
Handling connection for 8888
Handling connection for 8888
```

And I can see it running without issue.

Let’s skip right to routing traffic.

I’ll use AWS Route53 this time

```
$ cat r53-timesy.json
{
  "Comment": "CREATE timesy fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "timesy.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "75.73.224.240"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-timesy.json
{
  "ChangeInfo": {
    "Id": "/change/C07953101QG3ZGV6NULX8",
    "Status": "PENDING",
    "SubmittedAt": "2024-05-31T11:17:28.562Z",
    "Comment": "CREATE timesy fb.s A record "
  }
}
```
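The change batch is plain JSON, so it is easy to template for the next app. Here is a minimal Python sketch that builds the same structure as `r53-timesy.json`; the helper `a_record_change` is hypothetical. Route53 also accepts `UPSERT`, which creates the record or updates it if it already exists, whereas `CREATE` fails when a record with that name and type is already there.

```python
import json


def a_record_change(name: str, ip: str, ttl: int = 300,
                    action: str = "CREATE", comment: str = "") -> dict:
    """Hypothetical helper: build a Route53 ChangeBatch with one A record change."""
    if action not in {"CREATE", "UPSERT", "DELETE"}:
        raise ValueError(f"unsupported action: {action}")
    batch: dict = {
        "Changes": [{
            "Action": action,
            "ResourceRecordSet": {
                "Name": name,
                "Type": "A",
                "TTL": ttl,
                "ResourceRecords": [{"Value": ip}],
            },
        }],
    }
    if comment:  # Comment is optional in the API
        batch["Comment"] = comment
    return batch


batch = a_record_change("timesy.freshbrewed.science", "75.73.224.240",
                        comment="CREATE timesy fb.s A record")
print(json.dumps(batch, indent=2))
```

Saving that output as a file gives an equivalent input for `--change-batch file://...`; with boto3, the same dict can be passed as `ChangeBatch` (along with `HostedZoneId`) to the Route53 client’s `change_resource_record_sets`.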

Even though I doubt it uses WebSockets, I’ll leave the annotation in the Ingress definition:

```
$ cat timesy.ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: timesy-service
  name: timesy
spec:
  rules:
    - host: timesy.freshbrewed.science
      http:
        paths:
          - backend:
              service:
                name: timesy-service
                port:
                  number: 80
            path: /
            pathType: ImplementationSpecific
  tls:
    - hosts:
        - timesy.freshbrewed.science
      secretName: timesy-tls
```

Like before, I’ll watch for the cert to be satisfied:

```
$ kubectl get cert timesy-tls
NAME         READY   SECRET       AGE
timesy-tls   False   timesy-tls   36s
$ kubectl get cert timesy-tls
NAME         READY   SECRET       AGE
timesy-tls   False   timesy-tls   86s
$ kubectl get cert timesy-tls
NAME         READY   SECRET       AGE
timesy-tls   True    timesy-tls   110s
```
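Rather than re-running `kubectl get cert` by hand, `kubectl wait --for=condition=Ready certificate/timesy-tls --timeout=300s` blocks until cert-manager marks the Certificate ready. The READY column reflects the Certificate’s `Ready` status condition, so the same poll-until-ready loop is easy to sketch in Python. The fetch function is injected so the loop can be driven either by `kubectl get certificate ... -o json` or by test data; all function names here are illustrative.

```python
import json
import subprocess
import time
from typing import Callable


def is_ready(cert: dict) -> bool:
    """True when the Certificate's status has a Ready condition with status "True"."""
    for cond in cert.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            return cond.get("status") == "True"
    return False


def kubectl_fetch(name: str) -> dict:
    """Fetch a cert-manager Certificate as JSON via kubectl (needs cluster access)."""
    out = subprocess.run(
        ["kubectl", "get", "certificate", name, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)


def wait_until_ready(fetch: Callable[[], dict], timeout: float = 300, interval: float = 10) -> bool:
    """Poll `fetch` until the certificate is ready or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if is_ready(fetch()):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Against the cluster (not run here):
#   wait_until_ready(lambda: kubectl_fetch("timesy-tls"))
```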

We can now reach Timesy at https://timesy.freshbrewed.science.

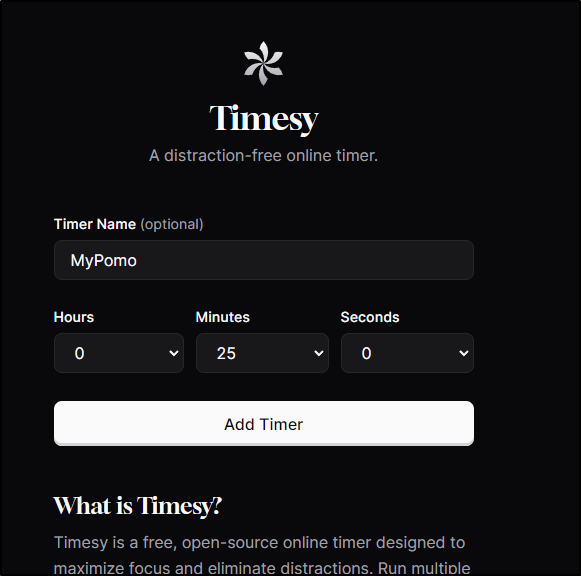

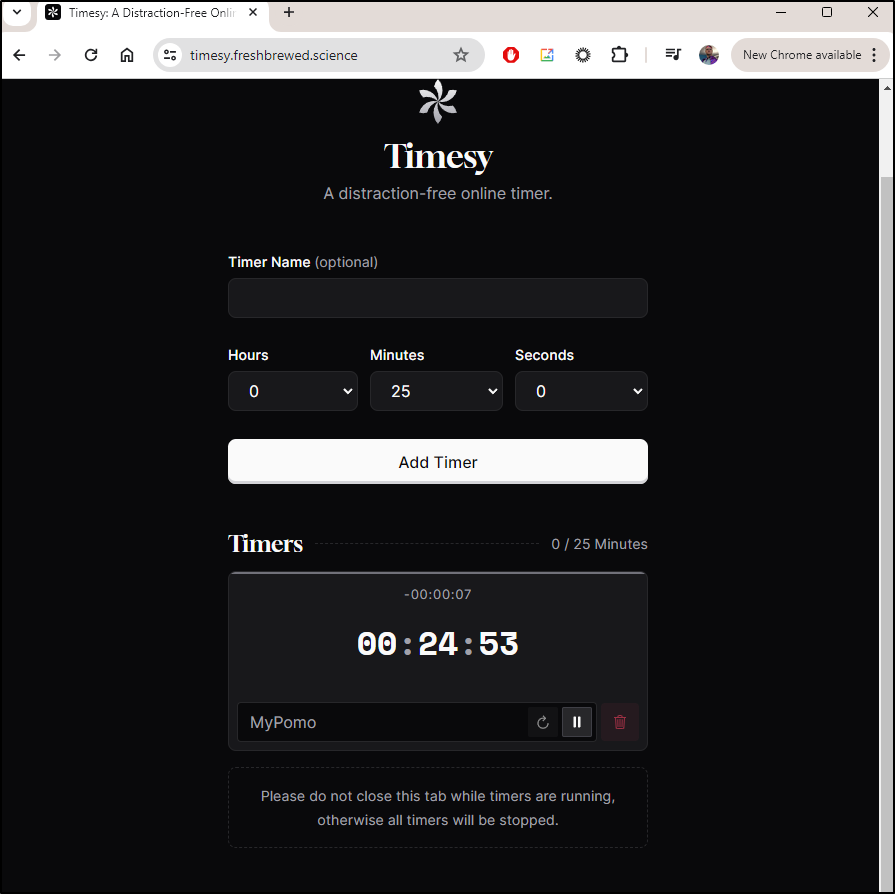

I can add a Pomodoro timer

I now have a timer I can run and reset

I can confirm the timer persists between browser launches, but switching to a different browser or using an incognito window resets it.

I don’t capture audio in ShareX, so you can’t hear it, but when the timer reaches zero it lets out a loud, high-pitched watch/alarm-clock sound with four repeating pulses, then stops.

Summary

We started with Obsidian, packaged by LinuxServer.io, and tested it locally before exposing it externally. We added password authentication and saw how the VNC desktop can double as a general-purpose jump box.

We then launched Timesy into the cluster and exposed it with TLS/Ingress. We tested a few timers and, while I didn’t show it above, Timesy worked just as well on a mobile interface.

I hope you found one or both of these apps useful. Here are the links (although the Obsidian one is password-restricted).