Published: May 9, 2024 by Isaac Johnson

I love Rundeck. It’s a great utility system for providing webhooks for invoking commands. I was thinking on it recently for addressing a business need at work when I saw an email hit my inbox announcing version 5.2 was just released.

Today, let’s see if we can set up Rundeck in Kubernetes (I’ve always had to fall back to Docker). We’ll explore the latest features and focus primarily on the free/community offering.

Installation

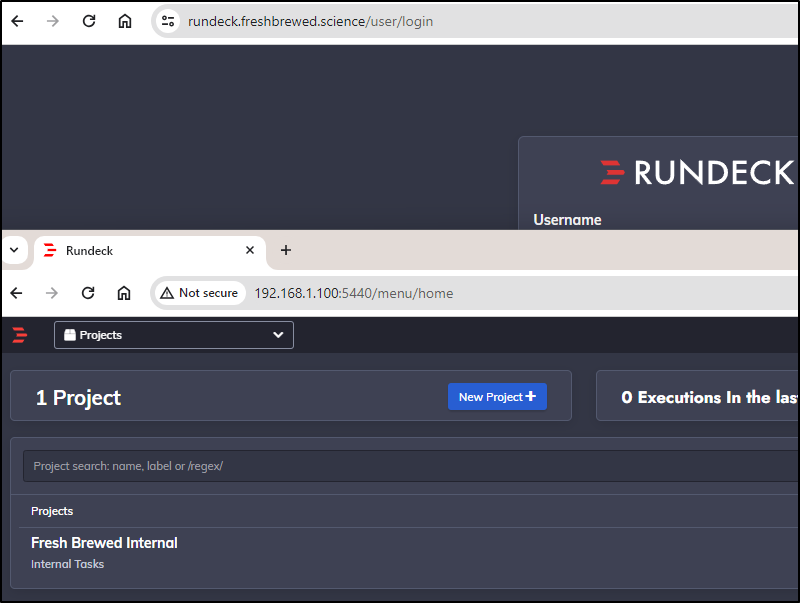

Up till now, the way I’ve run Rundeck is to just expose a Docker instance through Kubernetes.

This is essentially done via an Endpoints object in Kubernetes (one could use Endpoints or EndpointSlice for this), fronted by a selector-less Service and an Ingress:

builder@DESKTOP-QADGF36:~$ kubectl get ingress rundeckingress

NAME CLASS HOSTS ADDRESS PORTS AGE

rundeckingress <none> rundeck.freshbrewed.science 80, 443 55d

builder@DESKTOP-QADGF36:~$ kubectl get service rundeck-external-ip

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rundeck-external-ip ClusterIP None <none> 80/TCP 55d

builder@DESKTOP-QADGF36:~$ kubectl get endpoints rundeck-external-ip

NAME ENDPOINTS AGE

rundeck-external-ip 192.168.1.100:5440 55d
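As a rough illustration of that pattern, the sketch below builds a selector-less headless Service plus a matching Endpoints object and prints them as JSON, which `kubectl apply -f -` accepts. The name, IP, and ports come from the output above; the port name `http` and the overall shape are assumptions, not a copy of the manifest actually running in the cluster.

```python
import json

# Values taken from the kubectl output above.
SERVICE_NAME = "rundeck-external-ip"
DOCKER_HOST_IP = "192.168.1.100"
DOCKER_HOST_PORT = 5440


def external_service_manifests(name, ip, port):
    """Build a headless Service (no selector) and an Endpoints object.

    The Endpoints object shares the Service name, which is how Kubernetes
    associates manually managed endpoints with a selector-less Service.
    The port name "http" is an assumption made for this sketch.
    """
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "clusterIP": "None",
            "ports": [{"name": "http", "port": 80, "protocol": "TCP"}],
        },
    }
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        "metadata": {"name": name},
        "subsets": [
            {
                "addresses": [{"ip": ip}],
                "ports": [{"name": "http", "port": port, "protocol": "TCP"}],
            }
        ],
    }
    return {"apiVersion": "v1", "kind": "List", "items": [service, endpoints]}


if __name__ == "__main__":
    manifests = external_service_manifests(SERVICE_NAME, DOCKER_HOST_IP, DOCKER_HOST_PORT)
    print(json.dumps(manifests, indent=2))
```

Saved under a hypothetical name such as `external_svc.py`, the output could be piped straight into `kubectl apply -f -`.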

I won’t really dig into the specifics of that but you are welcome to review my last writeup that covers upgrading it in Docker.

I don’t want “Enterprise”, but I can use their docs to get an idea how we might run standard using the same constructs.

For instance, this Compose file shows running Rundeck with PostgreSQL:

version: '3'
services:
  rundeck:
    image: ${RUNDECK_IMAGE:-rundeck/rundeck:SNAPSHOT}
    links:
      - postgres
    environment:
      RUNDECK_DATABASE_DRIVER: org.postgresql.Driver
      RUNDECK_DATABASE_USERNAME: rundeck
      RUNDECK_DATABASE_PASSWORD: rundeck
      RUNDECK_DATABASE_URL: jdbc:postgresql://postgres/rundeck?autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true
      RUNDECK_GRAILS_URL: http://localhost:4440
    volumes:
      - ${RUNDECK_LICENSE_FILE:-/dev/null}:/home/rundeck/etc/rundeckpro-license.key
    ports:
      - 4440:4440
  postgres:
    image: postgres
    expose:
      - 5432
    environment:
      - POSTGRES_DB=rundeck
      - POSTGRES_USER=rundeck
      - POSTGRES_PASSWORD=rundeck
    volumes:
      - dbdata:/var/lib/postgresql/data
volumes:
  dbdata:

Converting that to a Kubernetes manifest with the adjusted image and removing the license key:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: rundeck
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rundeck
  template:
    metadata:
      labels:
        app: rundeck
    spec:
      containers:
        - name: rundeck
          image: rundeck/rundeck:5.2.0
          env:
            - name: RUNDECK_DATABASE_DRIVER
              value: org.postgresql.Driver
            - name: RUNDECK_DATABASE_USERNAME
              value: rundeck
            - name: RUNDECK_DATABASE_PASSWORD
              value: rundeck
            - name: RUNDECK_DATABASE_URL
              value: jdbc:postgresql://postgres/rundeck?autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true
            - name: RUNDECK_GRAILS_URL
              value: http://localhost:4440
          ports:
            - containerPort: 4440
              protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: rundeck-service
spec:
  selector:
    app: rundeck
  ports:
    - protocol: TCP
      port: 4440
      targetPort: 4440
  type: ClusterIP # Adjust the type as needed (e.g. NodePort or LoadBalancer)
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres
          env:
            - name: POSTGRES_DB
              value: rundeck
            - name: POSTGRES_USER
              value: rundeck
            - name: POSTGRES_PASSWORD
              value: rundeck
          volumeMounts:
            - name: postgres-data
              mountPath: /var/lib/postgresql/data
      volumes:
        - name: postgres-data
          persistentVolumeClaim:
            claimName: postgres-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi # Adjust storage size as needed
  storageClassName: managed-nfs-storage # Replace with your storage class
---
apiVersion: v1
kind: Service
metadata:
  name: postgres
spec:
  selector:
    app: postgres
  ports:
    - port: 5432
      targetPort: 5432

I can now create a namespace and apply it

$ kubectl create ns rundeck

namespace/rundeck created

$ kubectl apply -n rundeck -f ./manifest.yaml

deployment.apps/rundeck created

service/rundeck-service created

deployment.apps/postgres created

persistentvolumeclaim/postgres-pvc created

service/postgres created

I found the NFS provisioner didn’t jibe with PostgreSQL: the container could not chown its data directory on the NFS-backed volume

Warning BackOff 1s (x4 over 31s) kubelet Back-off restarting failed container postgres in pod postgres-6b984b548d-6vb9w_rundeck(93e755ea-cf66-4f23-95a9-82ce4d898378)

builder@DESKTOP-QADGF36:~/Workspaces/rundeck$ kubectl logs postgres-6b984b548d-6vb9w -n rundeck

chown: changing ownership of '/var/lib/postgresql/data': Operation not permitted

I waited a bit to see if it might clear up

$ kubectl get pods -n rundeck

NAME READY STATUS RESTARTS AGE

postgres-6b984b548d-6vb9w 0/1 CrashLoopBackOff 13 (3m54s ago) 46m

rundeck-6df9fcbbd-vlvw5 0/1 CrashLoopBackOff 12 (75s ago) 46m

I switched to local-path which worked:

$ kubectl get pods -n rundeck

NAME READY STATUS RESTARTS AGE

rundeck-6df9fcbbd-vmfg2 1/1 Running 0 66s

postgres-6b984b548d-9rb85 1/1 Running 0 66s

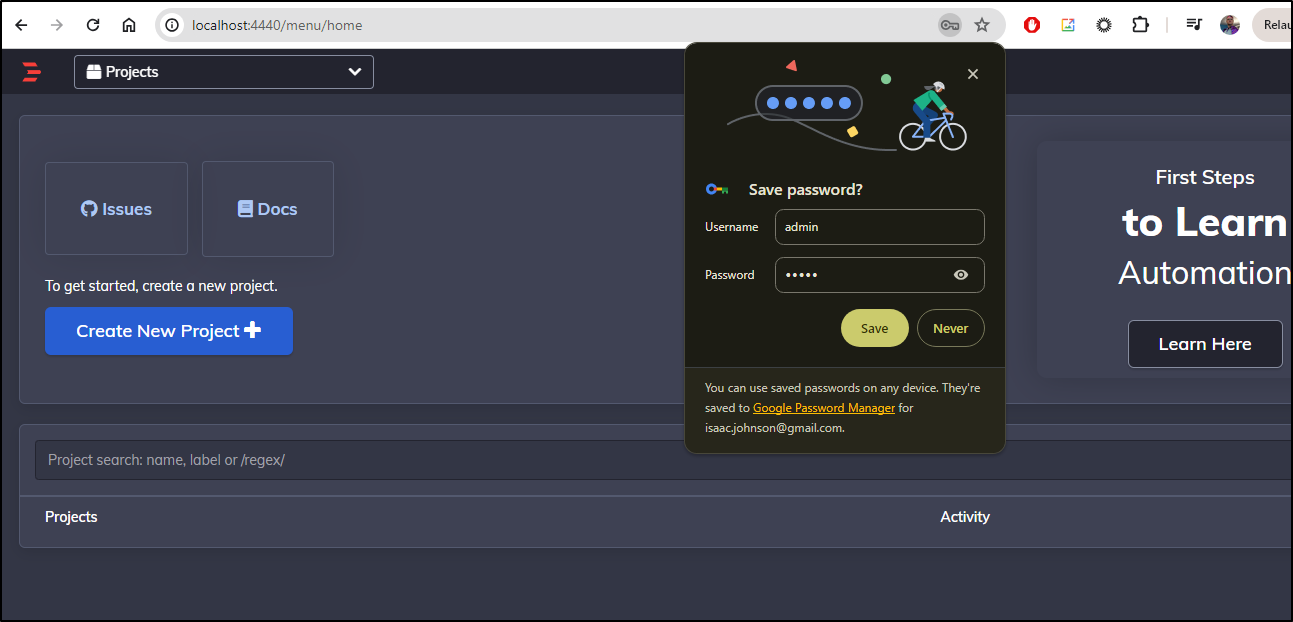

The first step should be to change the admin password (which defaults to “admin”)

I’ll port-forward to the service

$ kubectl port-forward svc/rundeck-service -n rundeck 4440:4440

Forwarding from 127.0.0.1:4440 -> 4440

Forwarding from [::1]:4440 -> 4440

Handling connection for 4440

Handling connection for 4440

Handling connection for 4440

Then log in

In looking, I found that the user/pass is hardcoded in a file, unfortunately.

They do support SSO and LDAP auth via Env vars (defined here)

But looking, we can see it’s ‘admin:admin’ and ‘user:user’:

builder@DESKTOP-QADGF36:~/Workspaces/rundeck$ kubectl exec -it rundeck-6df9fcbbd-vmfg2 -n rundeck -- /bin/bash

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

rundeck@rundeck-6df9fcbbd-vmfg2:~$ cat /home/rundeck/server/config/realm.properties

#

# This file defines users passwords and roles for a HashUserRealm

#

# The format is

# <username>: <password>[,<rolename> ...]

#

# Passwords may be clear text, obfuscated or checksummed. The class

# org.mortbay.util.Password should be used to generate obfuscated

# passwords or password checksums

#

# This sets the temporary user accounts for the Rundeck app

#

admin:admin,user,admin

user:user,user

#

# example users matching the example aclpolicy template roles

#

#project-admin:admin,user,project_admin

#job-runner:admin,user,job_runner

#job-writer:admin,user,job_writer

#job-reader:admin,user,job_reader

#job-viewer:admin,user,job_viewer
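The comments in that file mention that passwords may be checksummed rather than stored in clear text. As a minimal sketch, assuming the Jetty-style `MD5:` prefix (a hex MD5 digest of the password) is what this realm accepts, an entry could be generated like this. The helper name `realm_entry` is hypothetical, and the prefix format should be checked against the Rundeck documentation for the version in use.

```python
import hashlib


def realm_entry(username, password, roles):
    """Return a realm.properties line with an MD5-checksummed password.

    Assumption: the login module accepts the Jetty "MD5:<hex digest>" form.
    The line follows the "<username>: <password>[,<rolename> ...]" layout
    described in the file's own comments.
    """
    digest = hashlib.md5(password.encode("iso-8859-1")).hexdigest()
    return f"{username}: MD5:{digest},{','.join(roles)}"


if __name__ == "__main__":
    # "newpassword" is a placeholder; substitute a real secret.
    print(realm_entry("admin", "newpassword", ["user", "admin"]))
```

MD5 is not a strong hash, so this mostly avoids having the clear-text password sitting in the file; SSO or LDAP remains the sturdier option.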

Let’s enter some new territory and solve this using Kubernetes objects.

I’ll first create a ConfigMap

apiVersion: v1

kind: ConfigMap

metadata:

name: realm-properties-configmap

data:

realm.properties: |

#

# This file defines users passwords and roles for a HashUserRealm

#

# The format is

# <username>: <password>[,<rolename> ...]

#

# Passwords may be clear text, obfuscated or checksummed. The class

# org.mortbay.util.Password should be used to generate obfuscated

# passwords or password checksums

#

# This sets the temporary user accounts for the Rundeck app

#

admin:admin,user,admin

user:user,user

#

# example users matching the example aclpolicy template roles

#

#project-admin:admin,user,project_admin

#job-runner:admin,user,job_runner

#job-writer:admin,user,job_writer

#job-reader:admin,user,job_reader

#job-viewer:admin,user,job_viewer

I edited the manifest

apiVersion: v1

kind: ConfigMap

metadata:

name: realm-properties-configmap

data:

realm.properties: |

#

# This file defines users passwords and roles for a HashUserRealm

#

# The format is

# <username>: <password>[,<rolename> ...]

#

# Passwords may be clear text, obfuscated or checksummed. The class

# org.mortbay.util.Password should be used to generate obfuscated

# passwords or password checksums

#

# This sets the temporary user accounts for the Rundeck app

#

admin:newpassword,user,admin

user:newpassword,user

#

# example users matching the example aclpolicy template roles

#

#project-admin:admin,user,project_admin

#job-runner:admin,user,job_runner

#job-writer:admin,user,job_writer

#job-reader:admin,user,job_reader

#job-viewer:admin,user,job_viewer

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: rundeck

spec:

replicas: 1

selector:

matchLabels:

app: rundeck

template:

metadata:

labels:

app: rundeck

spec:

containers:

- name: rundeck

image: rundeck/rundeck:5.2.0

volumeMounts:

- name: config-volume

mountPath: /home/rundeck/server/config/

subPath: realm.properties

env:

- name: RUNDECK_DATABASE_DRIVER

value: org.postgresql.Driver

- name: RUNDECK_DATABASE_USERNAME

value: rundeck

- name: RUNDECK_DATABASE_PASSWORD

value: rundeck

- name: RUNDECK_DATABASE_URL

value: jdbc:postgresql://postgres/rundeck?autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true

- name: RUNDECK_GRAILS_URL

value: http://localhost:4440

ports:

- containerPort: 4440

protocol: TCP

volumes:

- name: config-volume

configMap:

name: realm-properties-configmap

---

apiVersion: v1

kind: Service

metadata:

name: rundeck-service

spec:

selector:

app: rundeck

ports:

- protocol: TCP

port: 4440

targetPort: 4440

type: ClusterIP # Adjust the type as needed (e.g. NodePort or LoadBalancer)

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: postgres

spec:

replicas: 1

selector:

matchLabels:

app: postgres

template:

metadata:

labels:

app: postgres

spec:

containers:

- name: postgres

image: postgres

env:

- name: POSTGRES_DB

value: rundeck

- name: POSTGRES_USER

value: rundeck

- name: POSTGRES_PASSWORD

value: rundeck

volumeMounts:

- name: postgres-data

mountPath: /var/lib/postgresql/data

volumes:

- name: postgres-data

persistentVolumeClaim:

claimName: postgres-pvc

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: postgres-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 2Gi # Adjust storage size as needed

storageClassName: local-path

---

apiVersion: v1

kind: Service

metadata:

name: postgres

spec:

selector:

app: postgres

ports:

- port: 5432

targetPort: 5432

Which I applied

$ kubectl apply -n rundeck -f ./manifest.yaml

configmap/realm-properties-configmap created

deployment.apps/rundeck configured

service/rundeck-service unchanged

deployment.apps/postgres unchanged

persistentvolumeclaim/postgres-pvc unchanged

service/postgres unchanged

Unfortunately, it didn’t work

$ kubectl get pods -n rundeck

NAME READY STATUS RESTARTS AGE

rundeck-6df9fcbbd-vmfg2 1/1 Running 0 33m

postgres-6b984b548d-9rb85 1/1 Running 0 33m

rundeck-7c6c8995cc-wp82k 0/1 CrashLoopBackOff 4 (36s ago) 2m4s

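This failed because the subPath points at a single file (realm.properties) while the mountPath is a directory, so the runtime reports “not a directory”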

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m24s default-scheduler Successfully assigned rundeck/rundeck-7c6c8995cc-wp82k to builder-hp-elitebook-745-g5

Normal Pulled 57s (x5 over 2m24s) kubelet Container image "rundeck/rundeck:5.2.0" already present on machine

Normal Created 57s (x5 over 2m24s) kubelet Created container rundeck

Warning Failed 57s (x5 over 2m24s) kubelet Error: failed to create containerd task: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error mounting "/var/lib/kubelet/pods/02c9840e-9880-4f72-aa1b-c7fe99ec1195/volume-subpaths/config-volume/rundeck/0" to rootfs at "/home/rundeck/server/config/": mount /var/lib/kubelet/pods/02c9840e-9880-4f72-aa1b-c7fe99ec1195/volume-subpaths/config-volume/rundeck/0:/home/rundeck/server/config/ (via /proc/self/fd/6), flags: 0x5001: not a directory: unknown

Warning BackOff 31s (x10 over 2m22s) kubelet Back-off restarting failed container rundeck in pod rundeck-7c6c8995cc-wp82k_rundeck(02c9840e-9880-4f72-aa1b-c7fe99ec1195)

Let’s switch to mounting it under /mnt and copying it into place at startup

apiVersion: v1

kind: ConfigMap

metadata:

name: realm-properties-configmap

data:

realm.properties: |

#

# This file defines users passwords and roles for a HashUserRealm

#

# The format is

# <username>: <password>[,<rolename> ...]

#

# Passwords may be clear text, obfuscated or checksummed. The class

# org.mortbay.util.Password should be used to generate obfuscated

# passwords or password checksums

#

# This sets the temporary user accounts for the Rundeck app

#

admin:newpassword,user,admin

user:newpassword,user

#

# example users matching the example aclpolicy template roles

#

#project-admin:admin,user,project_admin

#job-runner:admin,user,job_runner

#job-writer:admin,user,job_writer

#job-reader:admin,user,job_reader

#job-viewer:admin,user,job_viewer

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: rundeck

spec:

replicas: 1

selector:

matchLabels:

app: rundeck

template:

metadata:

labels:

app: rundeck

spec:

containers:

- name: rundeck

image: rundeck/rundeck:5.2.0

volumeMounts:

- name: config-volume

mountPath: /mnt/

subPath: realm.properties

command:

- "/bin/sh"

- "-c"

- "cp -f /mnt/realm.properties /home/rundeck/server/config/realm.properties && java -XX:+UnlockExperimentalVMOptions -XX:MaxRAMPercentage=75 -Dlog4j.configurationFile=/home/rundeck/server/config/log4j2.properties -Dlogging.config=file:/home/rundeck/server/config/log4j2.properties -Dloginmodule.conf.name=jaas-loginmodule.conf -Dloginmodule.name=rundeck -Drundeck.jaaslogin=true -Drundeck.jetty.connector.forwarded=false -jar rundeck.war"

env:

- name: RUNDECK_DATABASE_DRIVER

value: org.postgresql.Driver

- name: RUNDECK_DATABASE_USERNAME

value: rundeck

- name: RUNDECK_DATABASE_PASSWORD

value: rundeck

- name: RUNDECK_DATABASE_URL

value: jdbc:postgresql://postgres/rundeck?autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true

- name: RUNDECK_GRAILS_URL

value: http://localhost:4440

ports:

- containerPort: 4440

protocol: TCP

volumes:

- name: config-volume

configMap:

name: realm-properties-configmap

---

apiVersion: v1

kind: Service

metadata:

name: rundeck-service

spec:

selector:

app: rundeck

ports:

- protocol: TCP

port: 4440

targetPort: 4440

type: ClusterIP # Adjust the type as needed (e.g. NodePort or LoadBalancer)

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: postgres

spec:

replicas: 1

selector:

matchLabels:

app: postgres

template:

metadata:

labels:

app: postgres

spec:

containers:

- name: postgres

image: postgres

env:

- name: POSTGRES_DB

value: rundeck

- name: POSTGRES_USER

value: rundeck

- name: POSTGRES_PASSWORD

value: rundeck

volumeMounts:

- name: postgres-data

mountPath: /var/lib/postgresql/data

volumes:

- name: postgres-data

persistentVolumeClaim:

claimName: postgres-pvc

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: postgres-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 2Gi # Adjust storage size as needed

storageClassName: local-path

---

apiVersion: v1

kind: Service

metadata:

name: postgres

spec:

selector:

app: postgres

ports:

- port: 5432

targetPort: 5432

Let’s test that

$ kubectl apply -n rundeck -f ./manifest.yaml

configmap/realm-properties-configmap unchanged

deployment.apps/rundeck configured

service/rundeck-service unchanged

deployment.apps/postgres unchanged

persistentvolumeclaim/postgres-pvc unchanged

service/postgres unchanged

That failed too, with the same “not a directory” mount error, since /mnt/ is still a directory

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 34s default-scheduler Successfully assigned rundeck/rundeck-87cbcb44c-4ps2b to builder-hp-elitebook-745-g5

Normal Pulled 19s (x3 over 34s) kubelet Container image "rundeck/rundeck:5.2.0" already present on machine

Normal Created 19s (x3 over 34s) kubelet Created container rundeck

Warning Failed 19s (x3 over 34s) kubelet Error: failed to create containerd task: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error mounting "/var/lib/kubelet/pods/9b0b0143-4593-4feb-851c-fa3ba24e69eb/volume-subpaths/config-volume/rundeck/0" to rootfs at "/mnt/": mount /var/lib/kubelet/pods/9b0b0143-4593-4feb-851c-fa3ba24e69eb/volume-subpaths/config-volume/rundeck/0:/mnt/ (via /proc/self/fd/6), flags: 0x5001: not a directory: unknown

Warning BackOff 8s (x4 over 33s) kubelet Back-off restarting failed container rundeck in pod rundeck-87cbcb44c-4ps2b_rundeck(9b0b0143-4593-4feb-851c-fa3ba24e69eb)

However, this last form seemed to jibe with my cluster. The mountPath now names the file itself, paired with the subPath and an explicit item key:

$ cat manifest.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: realm-properties-configmap

data:

realm.properties: |

#

# This file defines users passwords and roles for a HashUserRealm

#

# The format is

# <username>: <password>[,<rolename> ...]

#

# Passwords may be clear text, obfuscated or checksummed. The class

# org.mortbay.util.Password should be used to generate obfuscated

# passwords or password checksums

#

# This sets the temporary user accounts for the Rundeck app

#

admin:newpassword,user,admin

user:newpassword,user

#

# example users matching the example aclpolicy template roles

#

#project-admin:admin,user,project_admin

#job-runner:admin,user,job_runner

#job-writer:admin,user,job_writer

#job-reader:admin,user,job_reader

#job-viewer:admin,user,job_viewer

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: rundeck

spec:

replicas: 1

selector:

matchLabels:

app: rundeck

template:

metadata:

labels:

app: rundeck

spec:

containers:

- name: rundeck

image: rundeck/rundeck:5.2.0

volumeMounts:

- name: config-volume

mountPath: /mnt/realm.properties

subPath: realm.properties

command:

- "/bin/sh"

- "-c"

- "cp -f /mnt/realm.properties /home/rundeck/server/config/realm.properties && java -XX:+UnlockExperimentalVMOptions -XX:MaxRAMPercentage=75 -Dlog4j.configurationFile=/home/rundeck/server/config/log4j2.properties -Dlogging.config=file:/home/rundeck/server/config/log4j2.properties -Dloginmodule.conf.name=jaas-loginmodule.conf -Dloginmodule.name=rundeck -Drundeck.jaaslogin=true -Drundeck.jetty.connector.forwarded=false -jar rundeck.war"

env:

- name: RUNDECK_DATABASE_DRIVER

value: org.postgresql.Driver

- name: RUNDECK_DATABASE_USERNAME

value: rundeck

- name: RUNDECK_DATABASE_PASSWORD

value: rundeck

- name: RUNDECK_DATABASE_URL

value: jdbc:postgresql://postgres/rundeck?autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true

- name: RUNDECK_GRAILS_URL

value: http://localhost:4440

ports:

- containerPort: 4440

protocol: TCP

volumes:

- name: config-volume

configMap:

name: realm-properties-configmap

items:

- key: realm.properties

path: realm.properties

---

apiVersion: v1

kind: Service

metadata:

name: rundeck-service

spec:

selector:

app: rundeck

ports:

- protocol: TCP

port: 4440

targetPort: 4440

type: ClusterIP # Adjust the type as needed (e.g. NodePort or LoadBalancer)

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: postgres

spec:

replicas: 1

selector:

matchLabels:

app: postgres

template:

metadata:

labels:

app: postgres

spec:

containers:

- name: postgres

image: postgres

env:

- name: POSTGRES_DB

value: rundeck

- name: POSTGRES_USER

value: rundeck

- name: POSTGRES_PASSWORD

value: rundeck

volumeMounts:

- name: postgres-data

mountPath: /var/lib/postgresql/data

volumes:

- name: postgres-data

persistentVolumeClaim:

claimName: postgres-pvc

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: postgres-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 2Gi # Adjust storage size as needed

storageClassName: local-path

---

apiVersion: v1

kind: Service

metadata:

name: postgres

spec:

selector:

app: postgres

ports:

- port: 5432

targetPort: 5432

One issue that is bothering me is that without a readiness probe or health check we show a “live” container well before it’s actually started.

Once the logs show

Grails application running at http://0.0.0.0:4440/ in environment: production

Then I know it’s good. So let’s fix that too. I also pivoted back to just a direct mount of the properties file

$ cat manifest.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: realm-properties-configmap
data:
  realm.properties: |
    #
    # This file defines users passwords and roles for a HashUserRealm
    #
    # The format is
    # <username>: <password>[,<rolename> ...]
    #
    # Passwords may be clear text, obfuscated or checksummed. The class
    # org.mortbay.util.Password should be used to generate obfuscated
    # passwords or password checksums
    #
    # This sets the temporary user accounts for the Rundeck app
    #
    admin:newpassword,user,admin
    user:newpassword,user
    #
    # example users matching the example aclpolicy template roles
    #
    #project-admin:admin,user,project_admin
    #job-runner:admin,user,job_runner
    #job-writer:admin,user,job_writer
    #job-reader:admin,user,job_reader
    #job-viewer:admin,user,job_viewer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rundeck
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rundeck
  template:
    metadata:
      labels:
        app: rundeck
    spec:
      containers:
        - name: rundeck
          image: rundeck/rundeck:5.2.0
          volumeMounts:
            - name: config-volume
              mountPath: /home/rundeck/server/config/realm.properties
              subPath: realm.properties
          env:
            - name: RUNDECK_DATABASE_DRIVER
              value: org.postgresql.Driver
            - name: RUNDECK_DATABASE_USERNAME
              value: rundeck
            - name: RUNDECK_DATABASE_PASSWORD
              value: rundeck
            - name: RUNDECK_DATABASE_URL
              value: jdbc:postgresql://postgres/rundeck?autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true
            - name: RUNDECK_GRAILS_URL
              value: http://localhost:4440
          ports:
            - containerPort: 4440
              protocol: TCP
          readinessProbe:
            httpGet:
              path: /health
              port: 4440
            initialDelaySeconds: 10
            periodSeconds: 5
            failureThreshold: 3
          livenessProbe:
            httpGet:
              path: /health
              port: 4440
            initialDelaySeconds: 30
            periodSeconds: 10
            failureThreshold: 5
      volumes:
        - name: config-volume
          configMap:
            name: realm-properties-configmap
            items:
              - key: realm.properties
                path: realm.properties
---
apiVersion: v1
kind: Service
metadata:
  name: rundeck-service
spec:
  selector:
    app: rundeck
  ports:
    - protocol: TCP
      port: 4440
      targetPort: 4440
  type: ClusterIP # Adjust the type as needed (e.g. NodePort or LoadBalancer)
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres
          env:
            - name: POSTGRES_DB
              value: rundeck
            - name: POSTGRES_USER
              value: rundeck
            - name: POSTGRES_PASSWORD
              value: rundeck
          volumeMounts:
            - name: postgres-data
              mountPath: /var/lib/postgresql/data
      volumes:
        - name: postgres-data
          persistentVolumeClaim:
            claimName: postgres-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: postgres-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi # Adjust storage size as needed
  storageClassName: local-path
---
apiVersion: v1
kind: Service
metadata:
  name: postgres
spec:
  selector:
    app: postgres
  ports:
    - port: 5432
      targetPort: 5432

Which I applied

$ kubectl apply -n rundeck -f ./manifest.yaml

configmap/realm-properties-configmap unchanged

deployment.apps/rundeck configured

service/rundeck-service unchanged

deployment.apps/postgres unchanged

persistentvolumeclaim/postgres-pvc unchanged

service/postgres unchanged
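To get a feel for what the readiness probe is doing, here is a small sketch that polls a health check until it passes, roughly mirroring `periodSeconds` and a retry limit. The function names are hypothetical, and treating any 2xx or 3xx response as healthy follows how Kubernetes HTTP probes judge success; it is a local debugging aid under those assumptions, not a replacement for the probe itself.

```python
import time
import urllib.error
import urllib.request


def http_ok(url, timeout=1.0):
    """Return True if the URL answers with a 2xx/3xx status, else False."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeouts, and 4xx/5xx responses all count as "not ready".
        return False


def wait_until_ready(check, period=5.0, attempts=60, sleep=time.sleep):
    """Call check() every `period` seconds until it returns True.

    Returns the 1-based attempt number that succeeded, or None if every
    attempt failed.
    """
    for attempt in range(1, attempts + 1):
        if check():
            return attempt
        sleep(period)
    return None
```

With a port-forward running, something like `wait_until_ready(lambda: http_ok("http://localhost:4440/health"))` would block until Rundeck answers on the same path the probe uses.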

Ingress

Since I already used ‘rundeck’ with my AWS/Route53 address, I’ll use Azure for this instance.

That means I first need an A record

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n rundeck

{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "96b46f0a-7b1c-4380-95df-5668b851ae61",
  "fqdn": "rundeck.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/rundeck",
  "name": "rundeck",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "type": "Microsoft.Network/dnszones/A"
}

Next I can create and apply an Ingress YAML to use it

$ cat rundeck.ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/websocket-services: rundeck-service
  name: rundeck-ingress
spec:
  rules:
    - host: rundeck.tpk.pw
      http:
        paths:
          - backend:
              service:
                name: rundeck-service
                port:
                  number: 4440
            path: /
            pathType: Prefix
  tls:
    - hosts:
        - rundeck.tpk.pw
      secretName: rundeck-tls

$ kubectl apply -f ./rundeck.ingress.yaml -n rundeck

ingress.networking.k8s.io/rundeck-ingress created

I waited until I saw the cert was good

$ kubectl get cert -n rundeck

NAME READY SECRET AGE

rundeck-tls True rundeck-tls 3m33s

While it works, I still seem to get a bit of delay redirecting after login to https://rundeck.tpk.pw/menu/home.

Usage

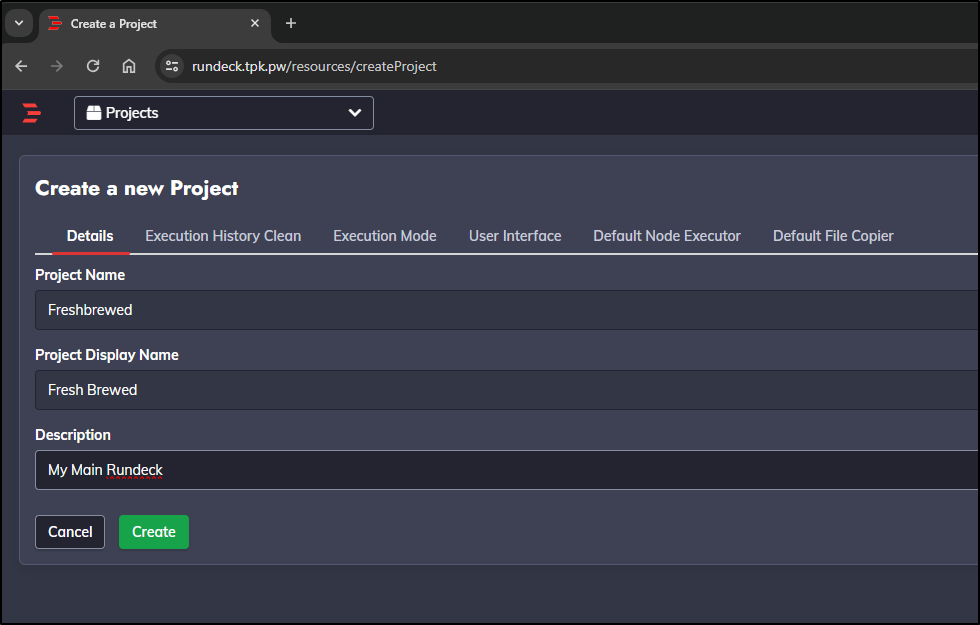

My first step is to create a project

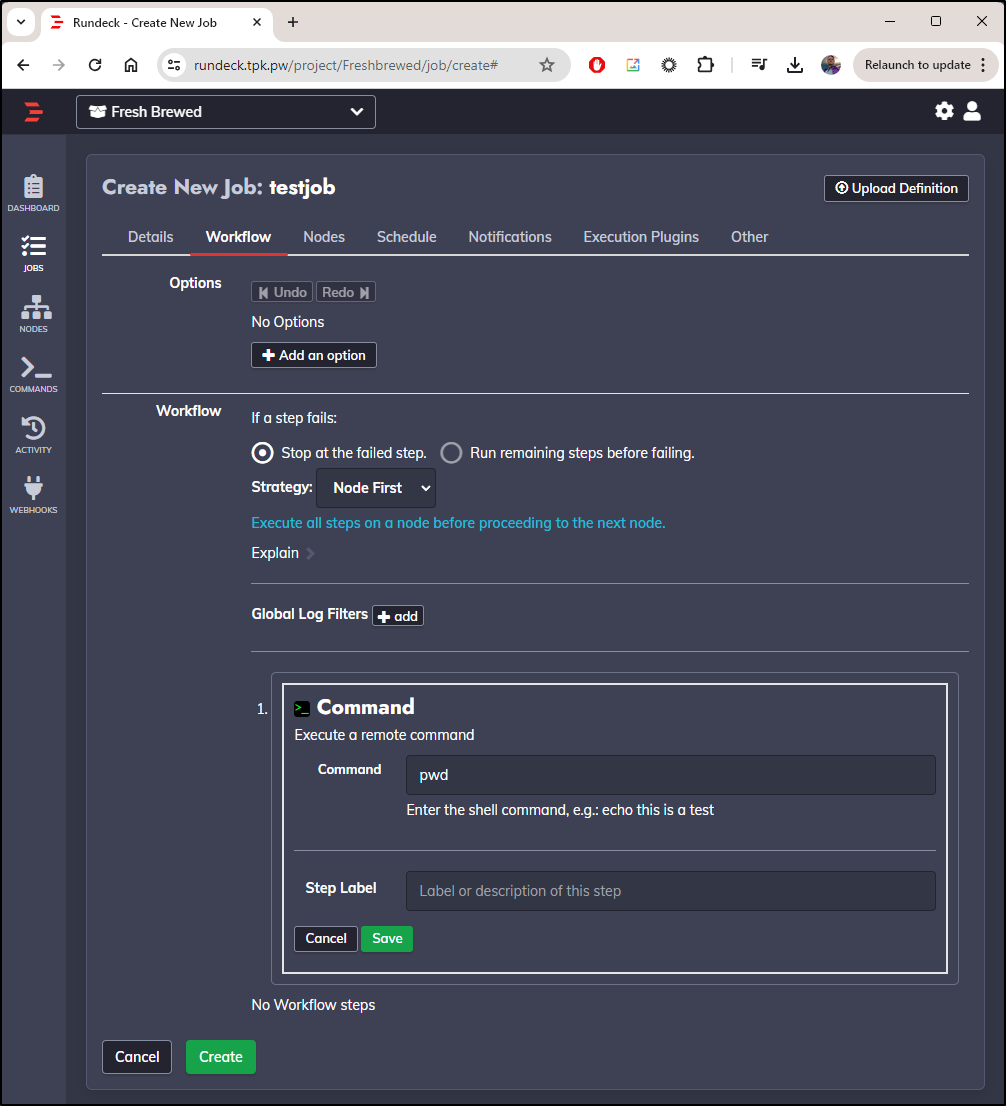

Next I’ll create a job

I’ll give it a name

In the Workflow section, I’ll do a basic test pwd command

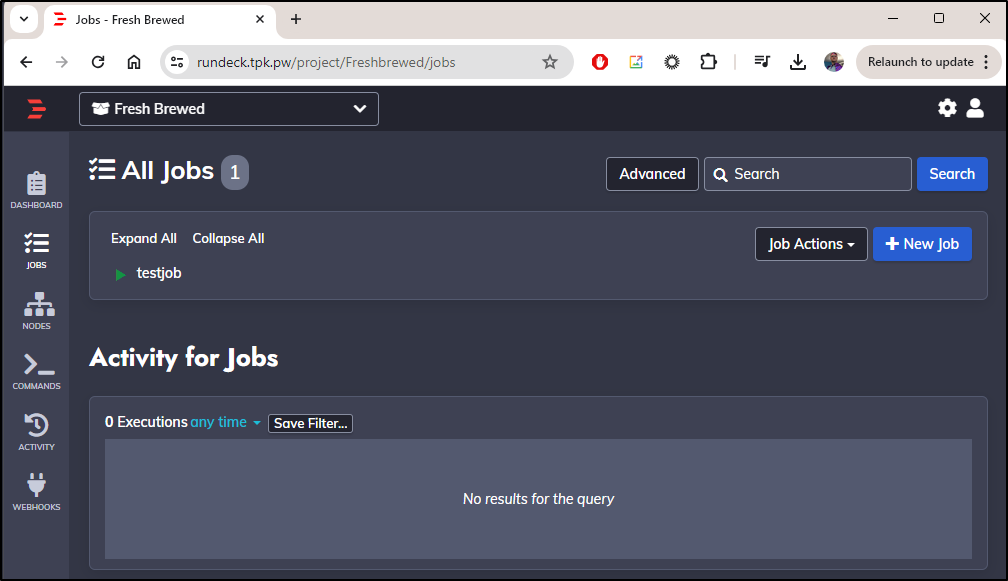

Once saved, I can see it in the All Jobs list

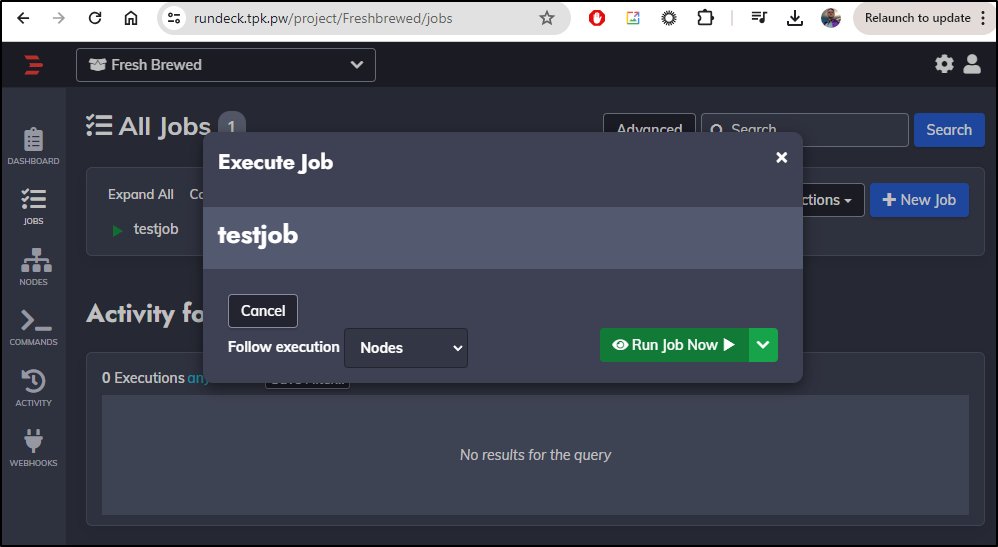

I can click “Run Job Now”

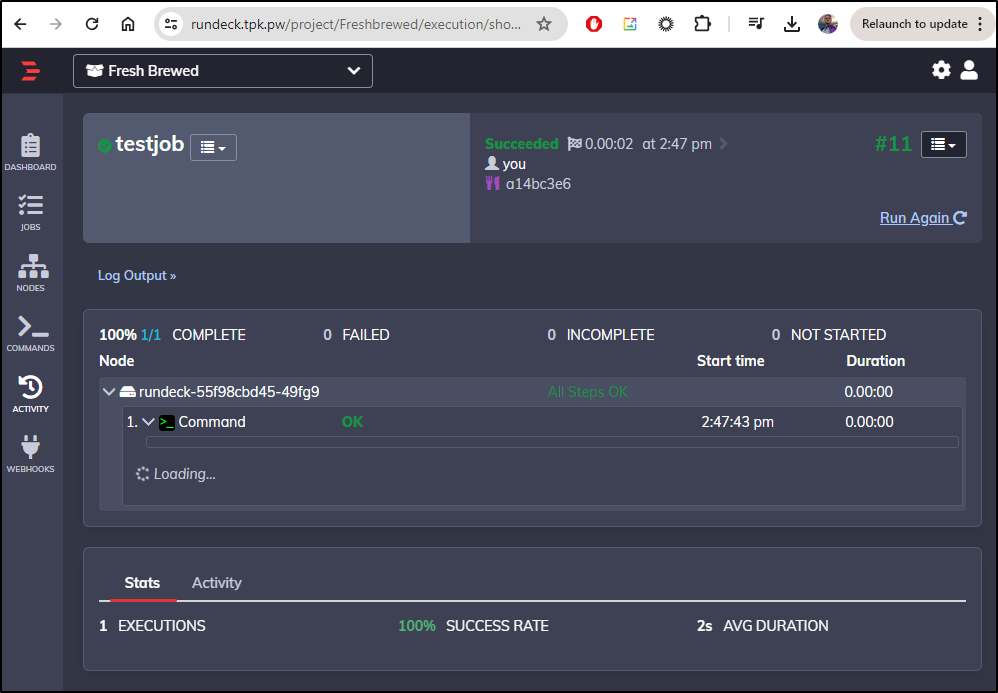

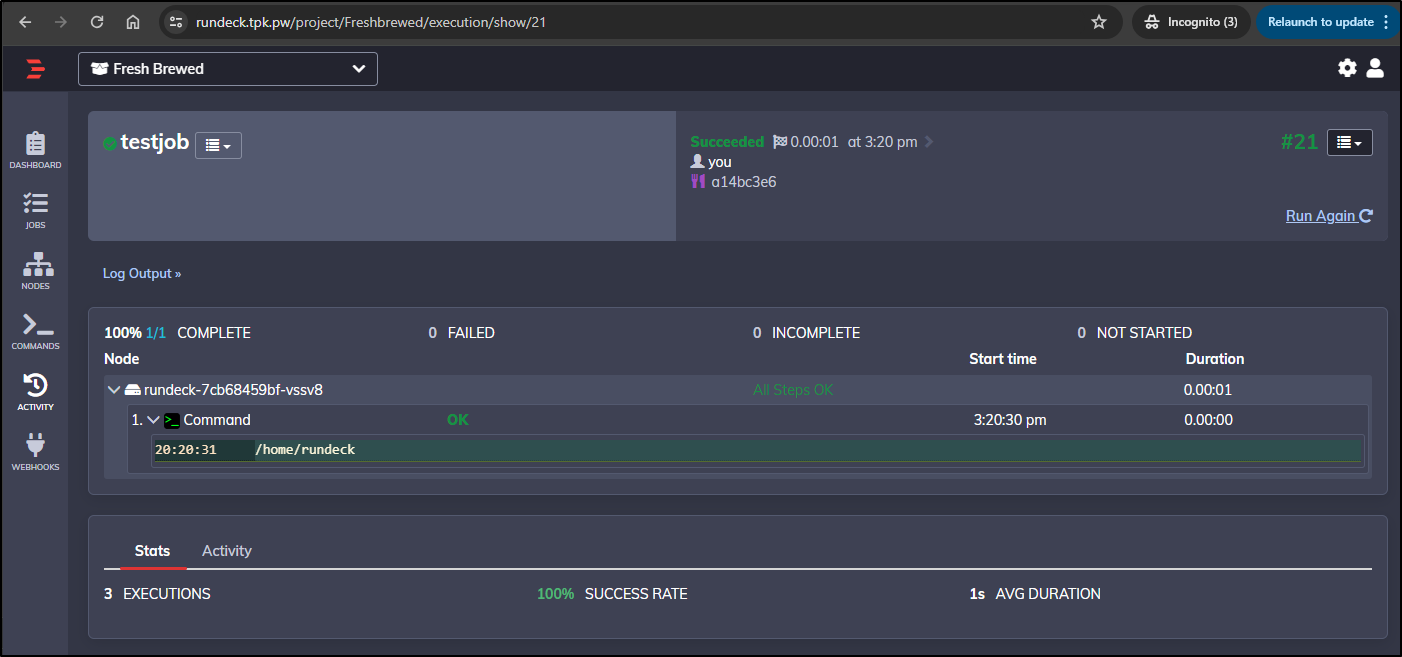

I can see it succeeded

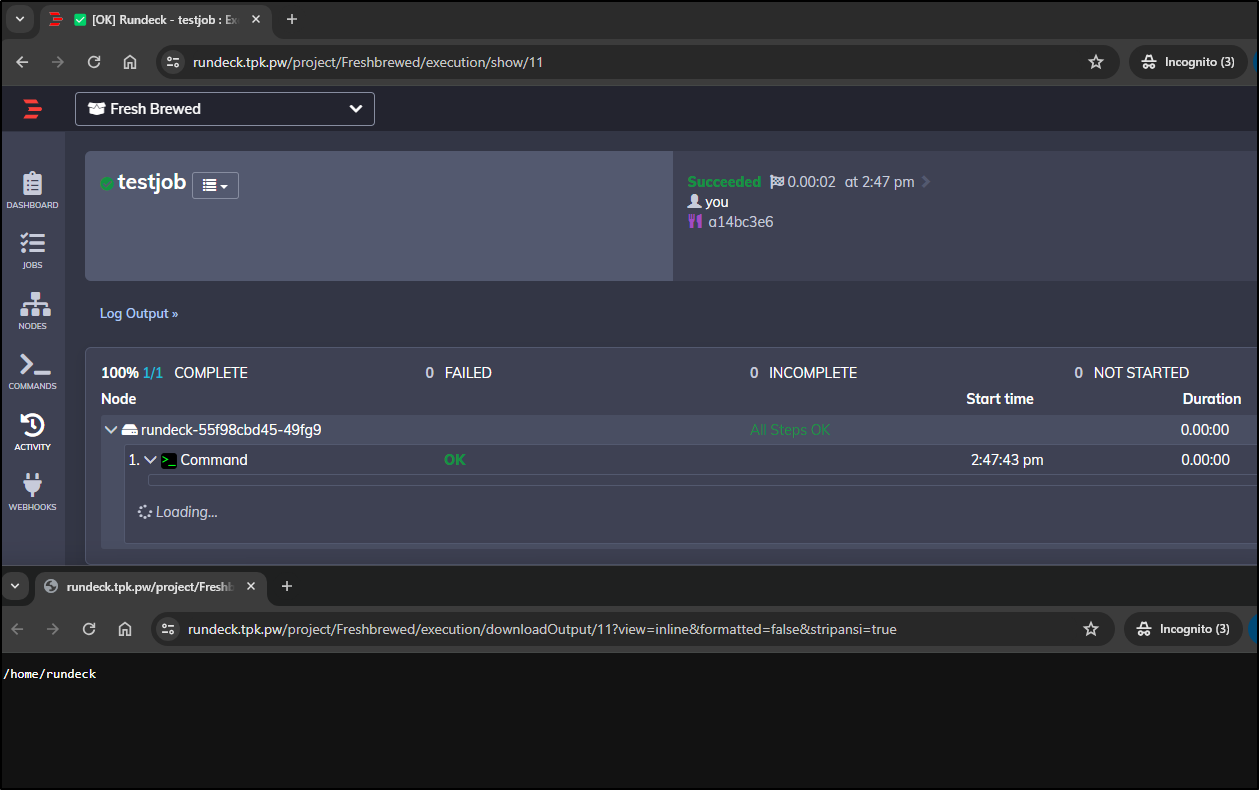

I can see it ran on the main Node

I find it still seems to hang on output in the page, but I can view it with the raw output

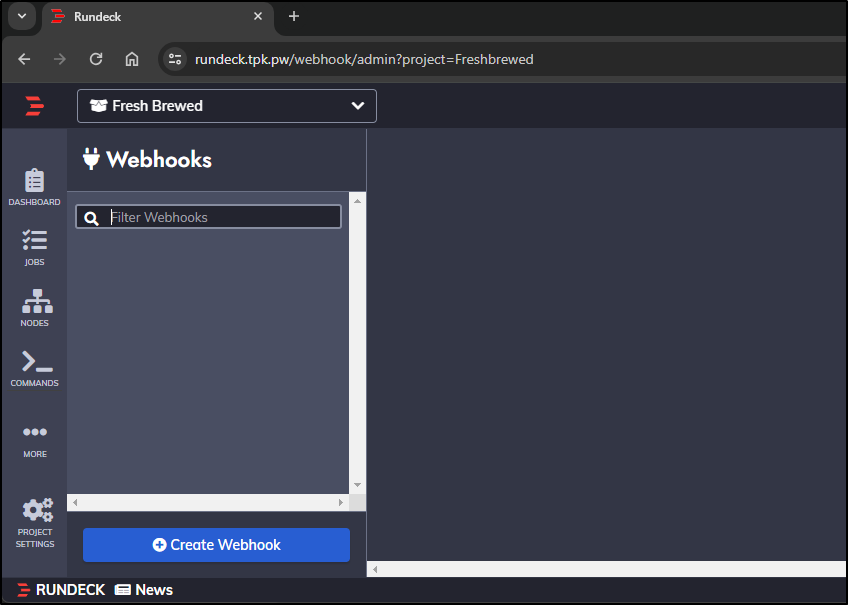

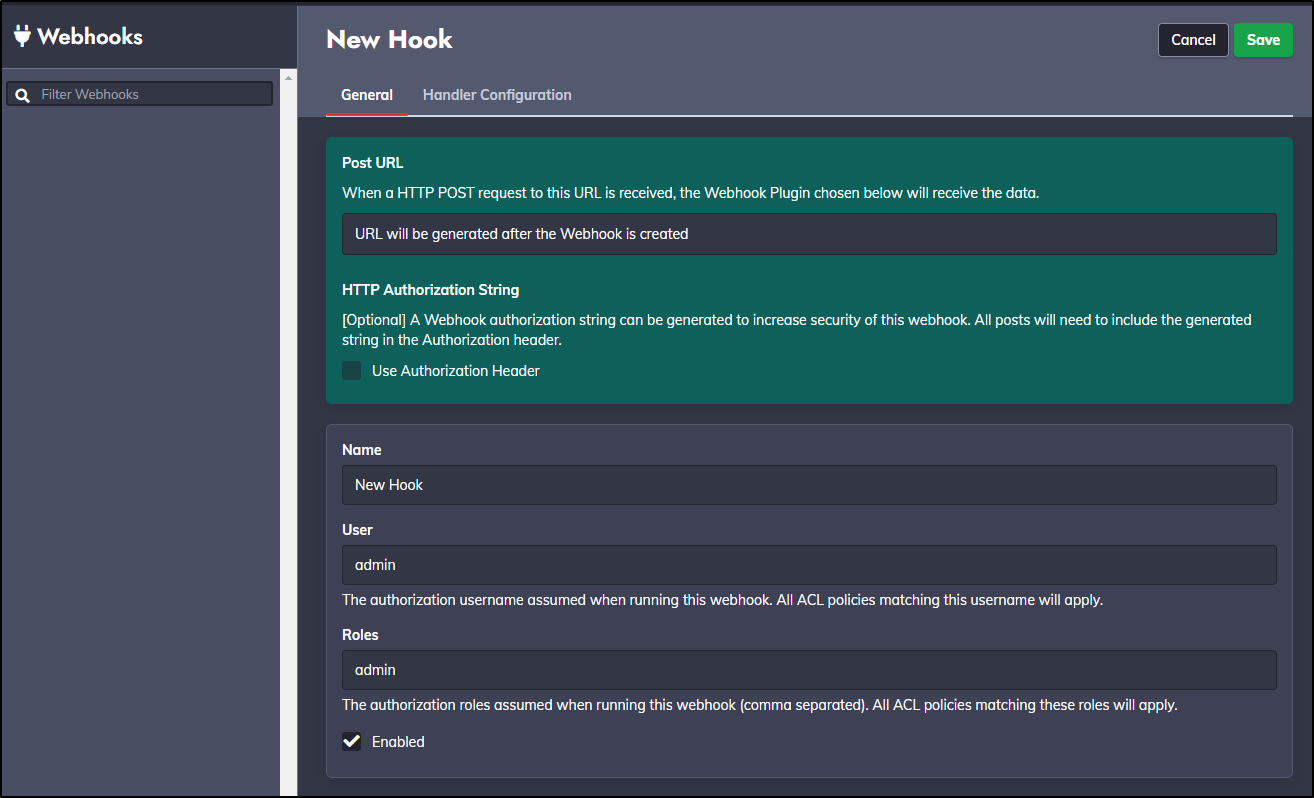

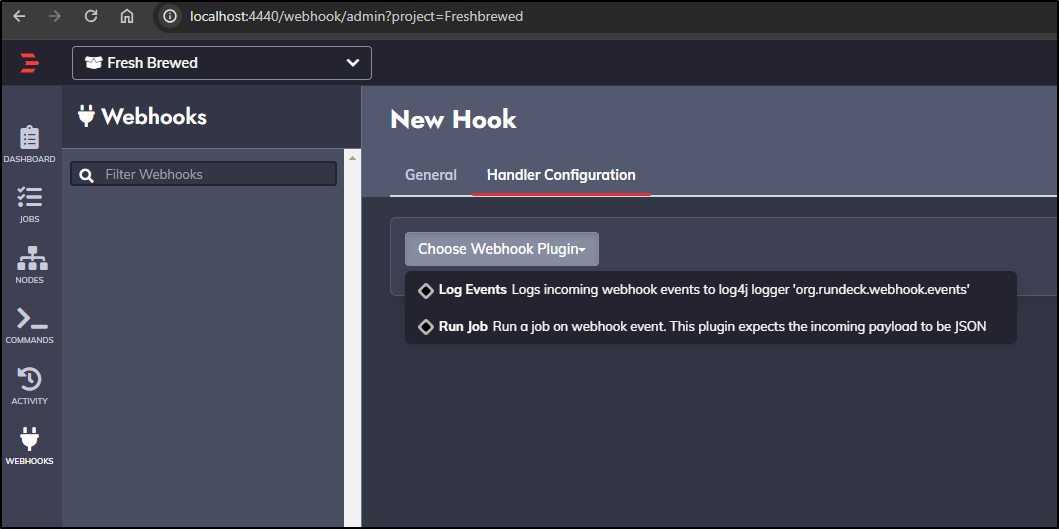

Next, I’ll create a webhook

I decided to give it the admin role

For some reason, yet again the UI doesn’t work for the Web URL, but does with a port-forward

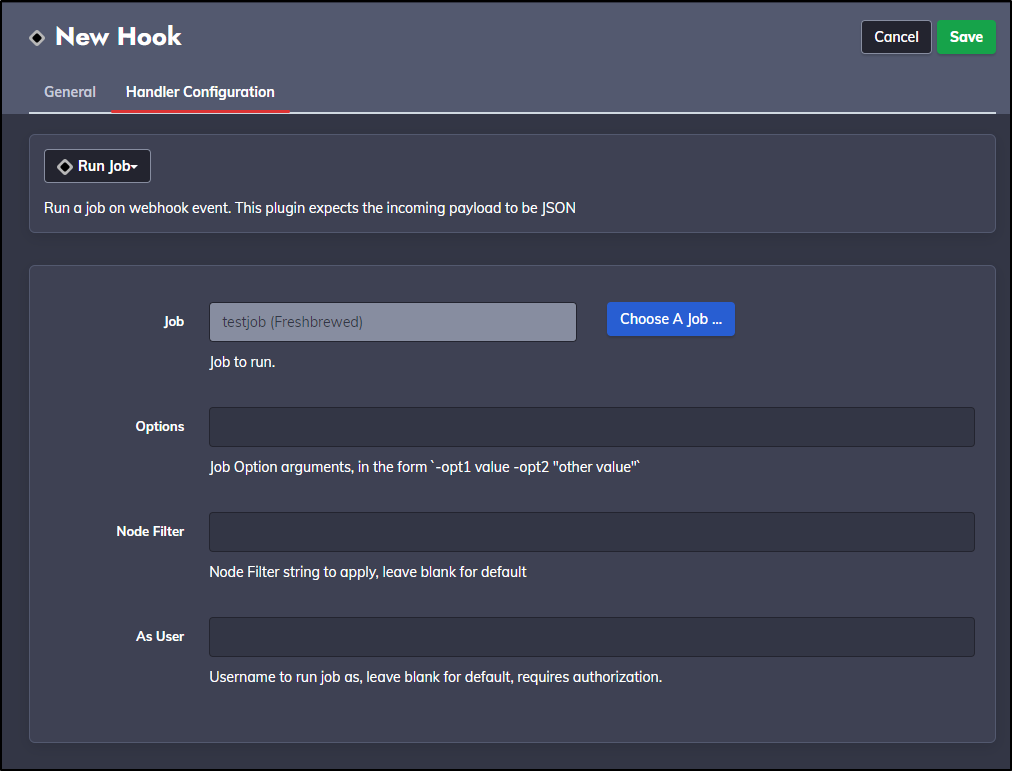

I’ll pick a test job

And I can see the URL after I save

I can then test with curl

$ curl -X POST https://rundeck.tpk.pw/api/47/webhook/pee2vJEp2I2OPY22wzAZy0is0SaRKWwO#testhook

{"jobId":"ce88f1bc-77cf-4bf7-aabe-576f4eaa5555","executionId":"17"}
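One detail worth noting about that URL: the `#testhook` suffix is a URL fragment, which HTTP clients keep to themselves rather than sending to the server, so it serves as a readable label while the token in the path does the work. The sketch below is a rough Python equivalent of the curl call that makes the split explicit. `build_webhook_request` is a hypothetical helper, and an empty POST body is assumed.

```python
import urllib.request
from urllib.parse import urldefrag

WEBHOOK_URL = (
    "https://rundeck.tpk.pw/api/47/webhook/"
    "pee2vJEp2I2OPY22wzAZy0is0SaRKWwO#testhook"
)


def build_webhook_request(url, payload=b""):
    """Split off the fragment label and build a POST request for the rest."""
    request_url, label = urldefrag(url)
    request = urllib.request.Request(request_url, data=payload, method="POST")
    return request, label


request, label = build_webhook_request(WEBHOOK_URL)
print(label)             # the human-readable webhook name
print(request.full_url)  # what is actually requested

# To fire the webhook for real (this makes a network call):
#   with urllib.request.urlopen(request) as resp:
#       print(resp.read().decode())
```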

I realized I had left the default URL in the Rundeck Deployment section

    spec:
      containers:
        - name: rundeck
          image: rundeck/rundeck:5.2.0
          volumeMounts:
            - name: config-volume
              mountPath: /home/rundeck/server/config/realm.properties
              subPath: realm.properties
          env:
            - name: RUNDECK_DATABASE_DRIVER
              value: org.postgresql.Driver
            - name: RUNDECK_DATABASE_USERNAME
              value: rundeck
            - name: RUNDECK_DATABASE_PASSWORD
              value: rundeck
            - name: RUNDECK_DATABASE_URL
              value: jdbc:postgresql://postgres/rundeck?autoReconnect=true&useSSL=false&allowPublicKeyRetrieval=true
            - name: RUNDECK_GRAILS_URL
              value: http://localhost:4440

I changed that last one to

- name: RUNDECK_GRAILS_URL

value: https://rundeck.tpk.pw

One consequence of rotating pods is that the execution output logs get lost, since nothing in the Rundeck pod is on a persistent volume.

The webhooks and URLs, however, persist

$ curl -X POST https://rundeck.tpk.pw/api/47/webhook/pee2vJEp2I2OPY22wzAZy0is0SaRKWwO#testhook

{"jobId":"ce88f1bc-77cf-4bf7-aabe-576f4eaa5555","executionId":"21"}

This time I had no troubles getting output

Nodes

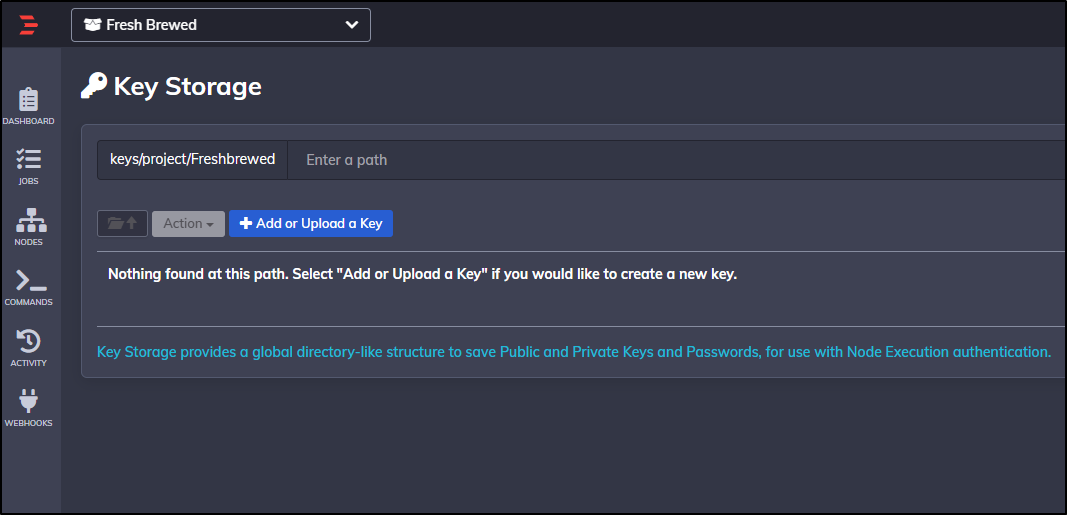

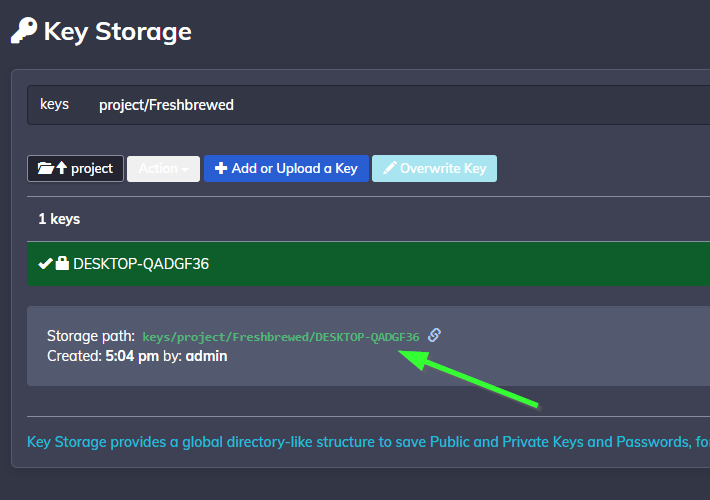

Let’s add a node. First, we need to add an SSH key that will be used to connect to the Node.

We can do this in the Project Settings under Key storage

Click “+ Add or Upload a Key”

I can enter the Private Key and set the name

We can see it now saved

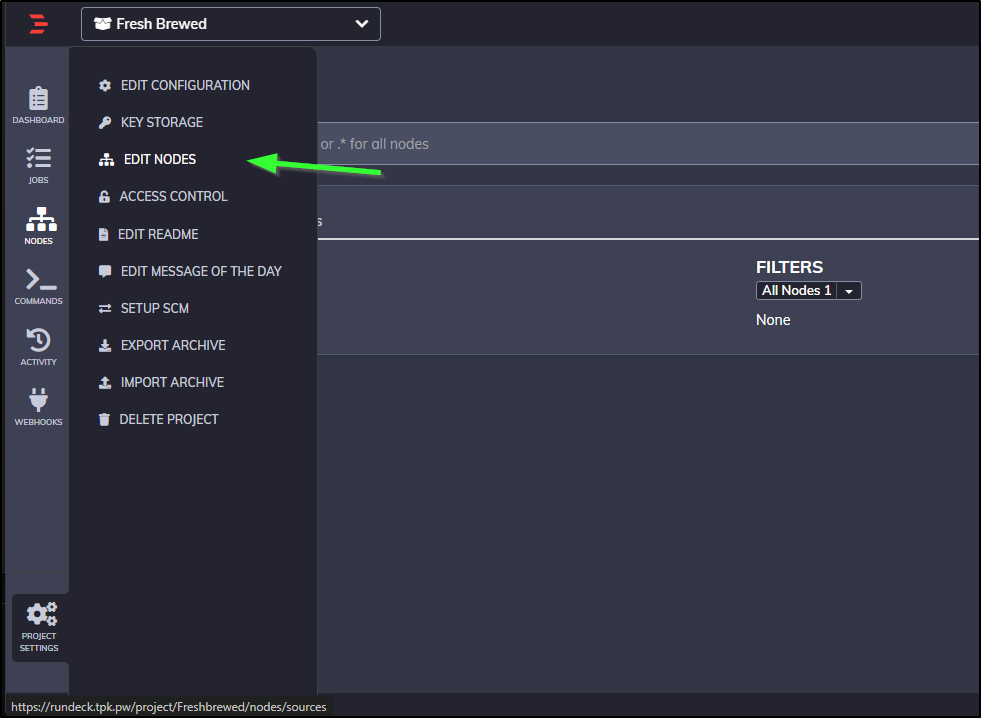

Next, we’ll choose to Edit Nodes

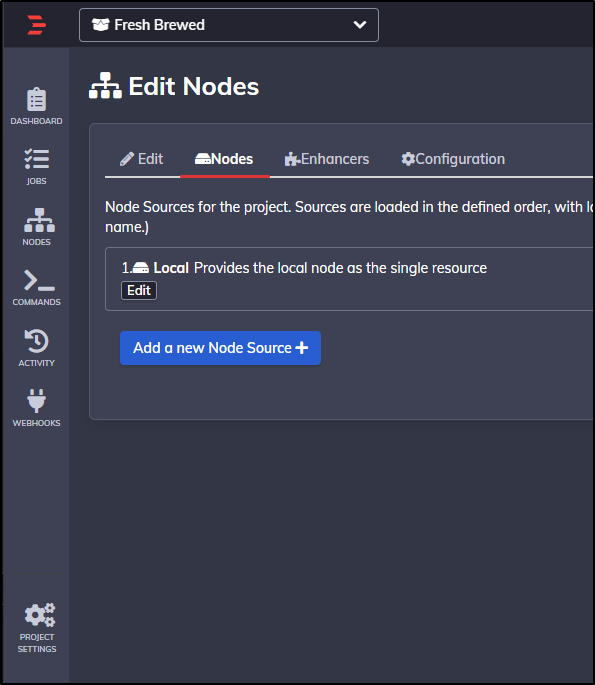

Where we can then add a Node source

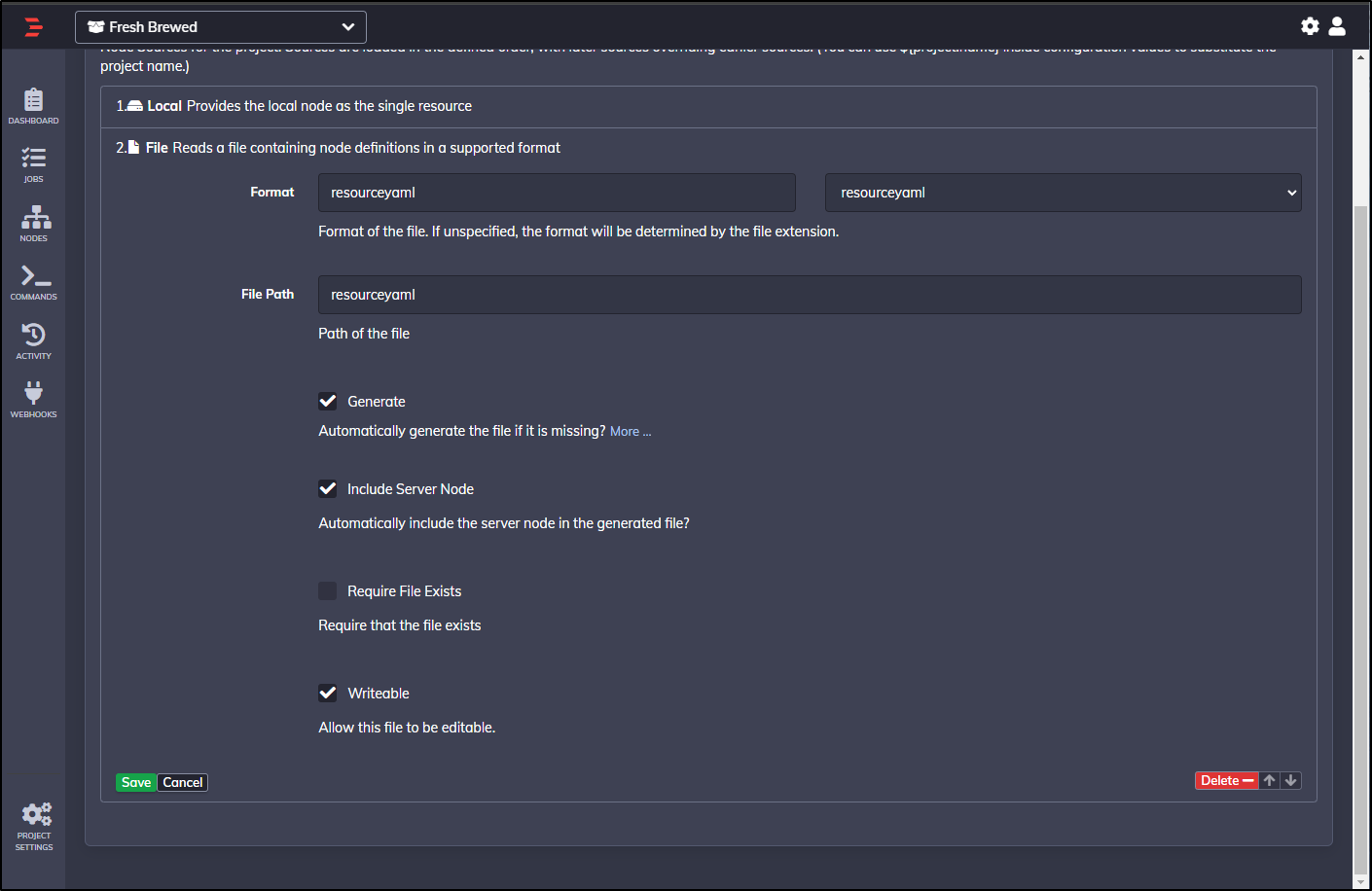

I’ll now add a file node

I’ll choose YAML format and to include localhost

I then need to save it

Which is confirmed

Now, even though I did choose to have it create the file, I found it failed to do so.

So I touched a file on the pod and gave write permissions

builder@DESKTOP-QADGF36:~/Workspaces/rundeck$ kubectl get pods -n rundeck

NAME READY STATUS RESTARTS AGE

postgres-6b984b548d-9rb85 1/1 Running 0 7h21m

rundeck-7cb68459bf-vssv8 1/1 Running 0 117m

builder@DESKTOP-QADGF36:~/Workspaces/rundeck$ kubectl exec -it rundeck-7cb68459bf-vssv8 -n rundeck -- /bin/bash

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

rundeck@rundeck-7cb68459bf-vssv8:~$ pwd

/home/rundeck

rundeck@rundeck-7cb68459bf-vssv8:~$ touch resourceyaml

rundeck@rundeck-7cb68459bf-vssv8:~$ chmod 777 ./resourceyaml

rundeck@rundeck-7cb68459bf-vssv8:~$

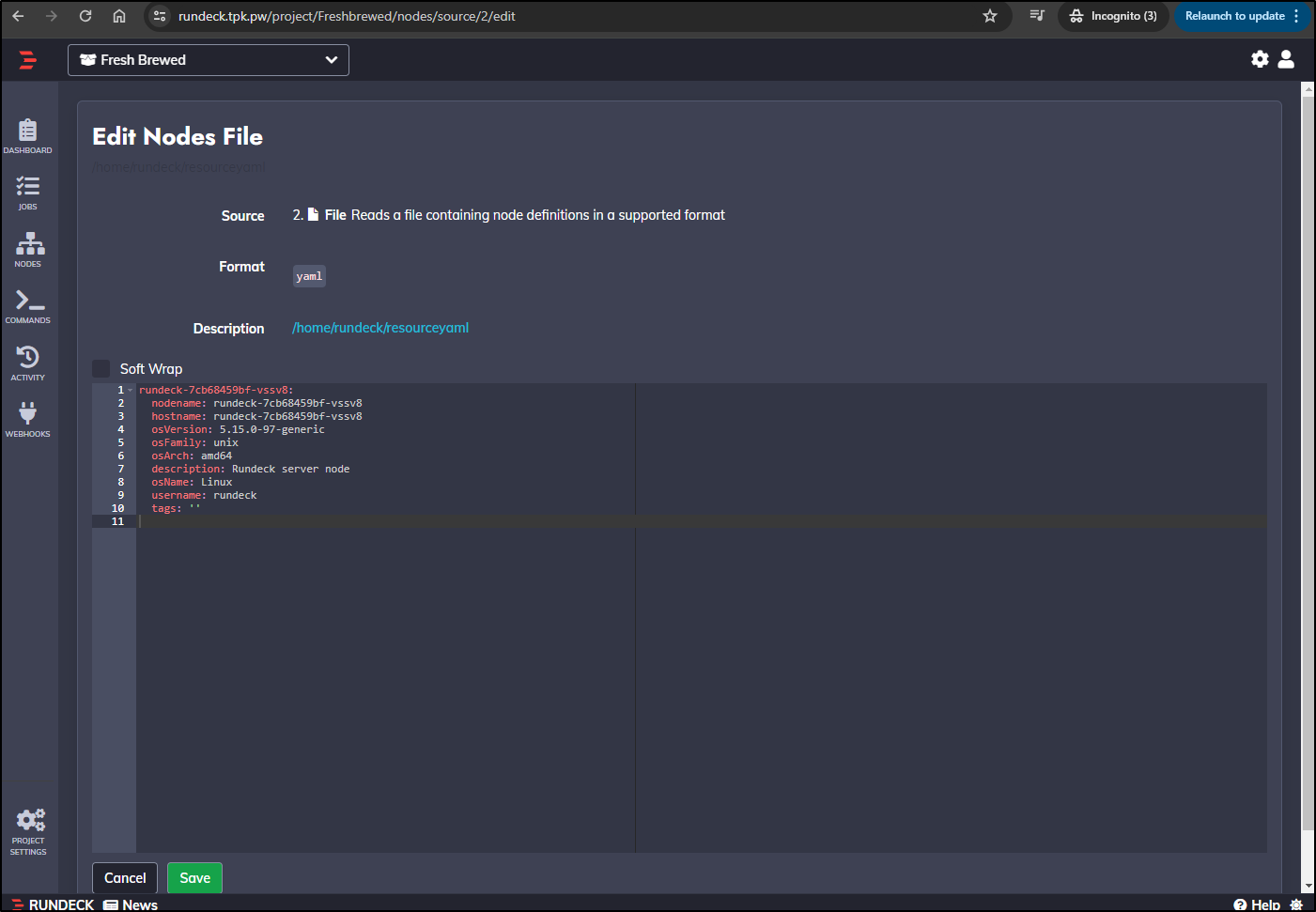

Now when I click Edit under Nodes, I can see some basic details

I clicked save. If I cat it on the pod, I can see its contents now

rundeck@rundeck-7cb68459bf-vssv8:~$ cat resourceyaml

rundeck-7cb68459bf-vssv8:
  nodename: rundeck-7cb68459bf-vssv8
  hostname: rundeck-7cb68459bf-vssv8
  osVersion: 5.15.0-97-generic
  osFamily: unix
  osArch: amd64
  description: Rundeck server node
  osName: Linux
  username: rundeck
  tags: ''

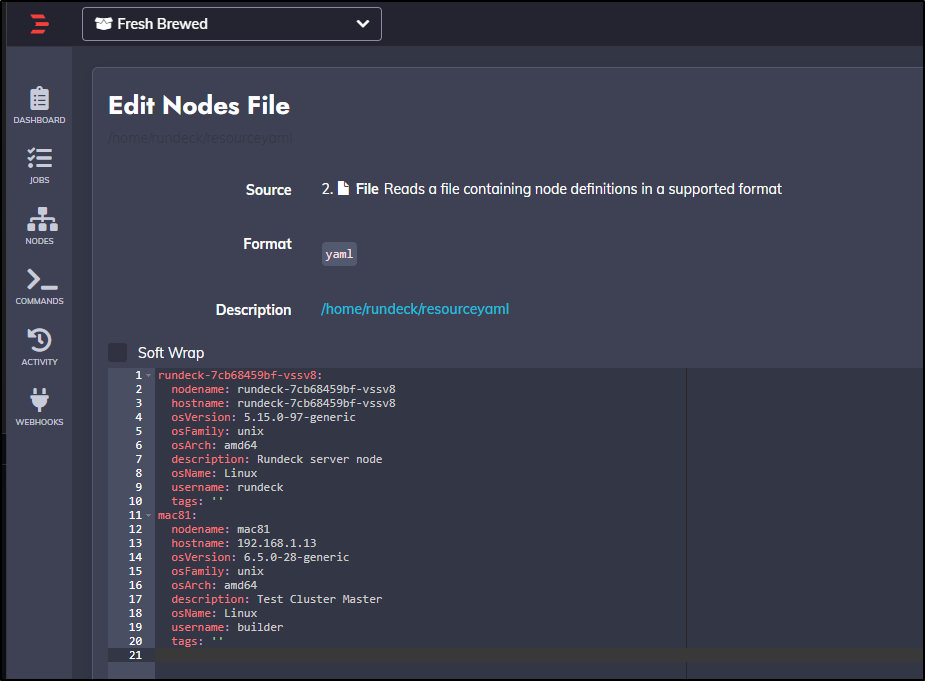

Next, I’ll add a node to the list I can use

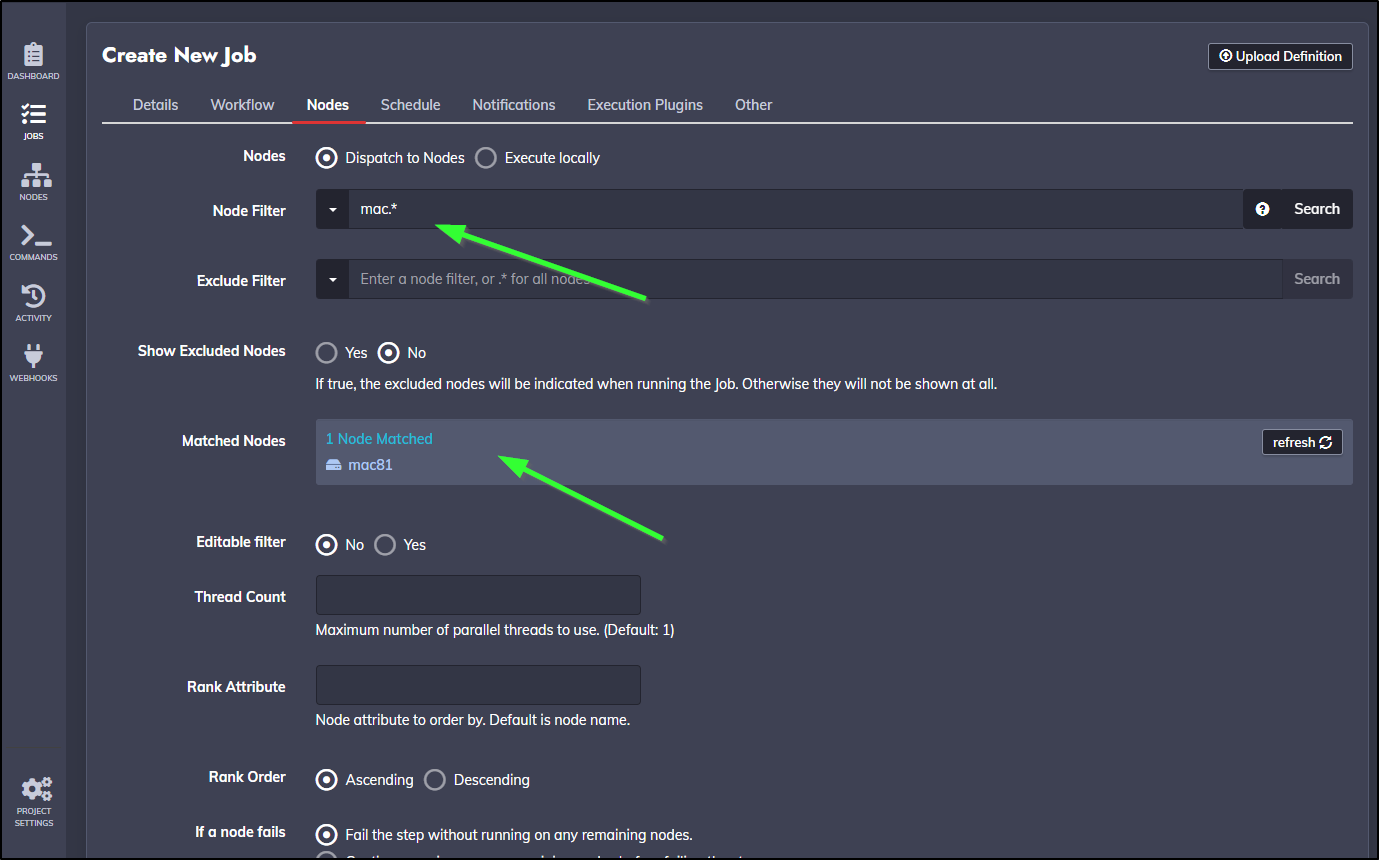

To test, I’ll create a new Job and use ‘mac.*’ in my filter. We can see ‘mac81’ was matched
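As a quick illustration of why ‘mac.*’ picks up mac81 and not the server node, here is a sketch that treats the filter as a regular expression applied to node names. Full-match semantics are an assumption about how Rundeck evaluates the filter, and `match_nodes` is a hypothetical helper, not a Rundeck API.

```python
import re


def match_nodes(pattern, node_names):
    """Return the node names that fully match the given regex pattern."""
    regex = re.compile(pattern)
    return [name for name in node_names if regex.fullmatch(name)]


# Node names as they appear in this post.
nodes = ["rundeck-7cb68459bf-vssv8", "mac81"]
print(match_nodes("mac.*", nodes))
```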

The problem is I’ll really need to fix auth before we use it.

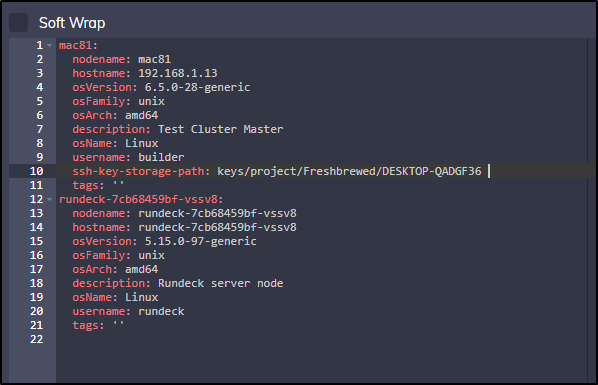

Let’s first get the path to that private key we created

We can now edit the resourceyaml and add the line

ssh-key-storage-path: keys/project/Freshbrewed/DESKTOP-QADGF36

Which we can see below at line 10

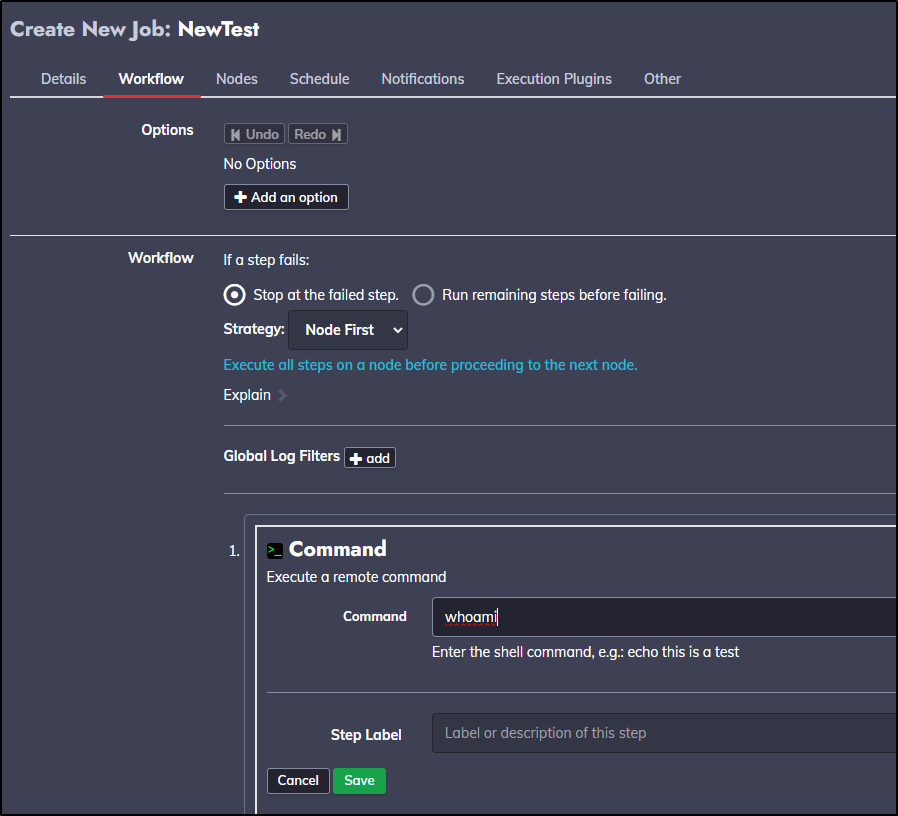
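For reference, a node entry with the key path attached could be generated along these lines. This is only a sketch: the node name, username, and key path come from this post, while the hostname `mac81.example.internal` is a made-up placeholder and `node_entry` is a hypothetical helper that emits the same YAML layout shown in the resourceyaml file.

```python
def node_entry(nodename, hostname, username, key_path):
    """Render one node in the resource YAML layout used by the File source."""
    fields = {
        "nodename": nodename,
        "hostname": hostname,
        "username": username,
        "ssh-key-storage-path": key_path,
    }
    lines = [f"{nodename}:"]
    lines += [f"  {key}: {value}" for key, value in fields.items()]
    return "\n".join(lines) + "\n"


print(
    node_entry(
        nodename="mac81",
        hostname="mac81.example.internal",  # placeholder, not the real host
        username="builder",
        key_path="keys/project/Freshbrewed/DESKTOP-QADGF36",
    )
)
```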

On this new test job, I’ll use the command ‘whoami’ to show we should be “builder” on that host

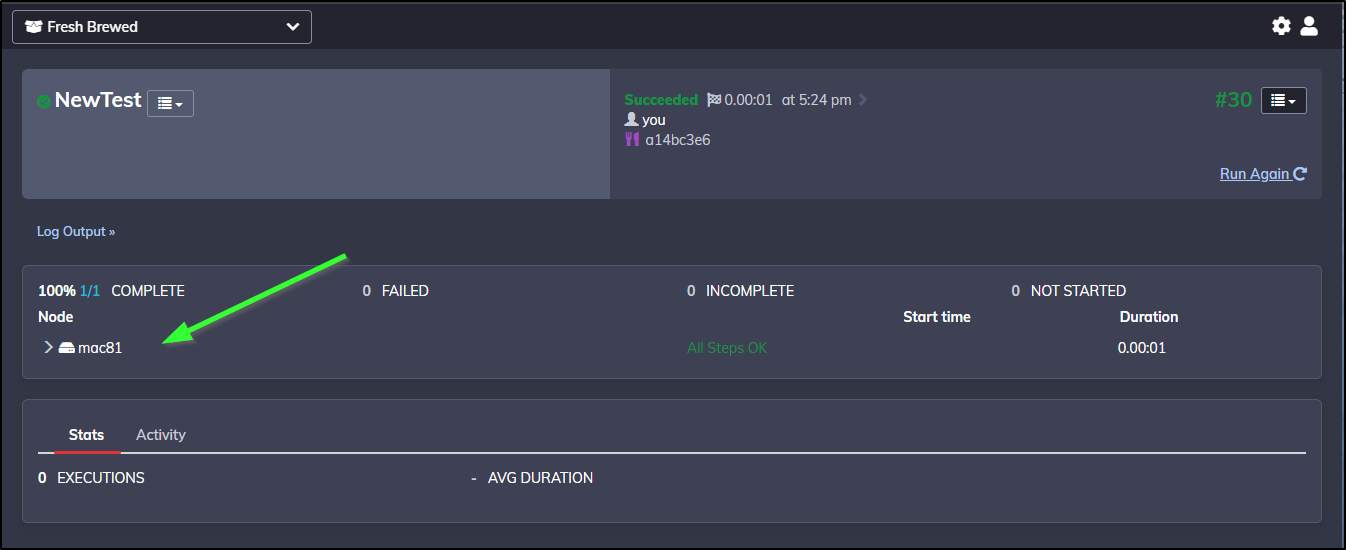

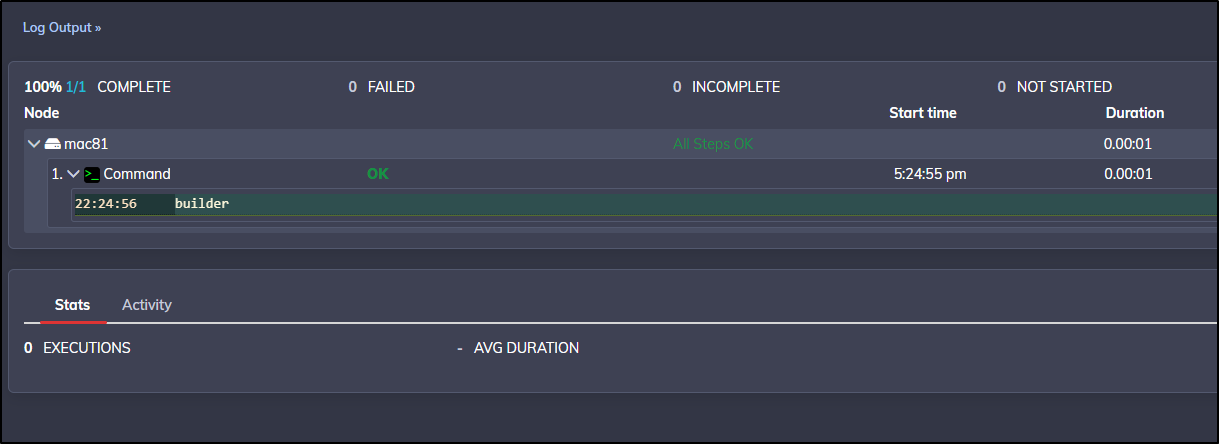

I saved and ran and we can see it ran the job on mac81

Expanding the log output shows, indeed, we are running as “builder” on that host

Summary

In this first part we set up Rundeck in Kubernetes. Since there is no official chart (of which I’m aware), we built out our own manifest, working through some ConfigMap and user/password issues along the way. Once running, we sorted out TLS and Ingress before diving into setup. In our first test, we ran a test job against the local executor.

Our goal today was getting Rundeck to use external nodes, as we’ll need that for some Ansible work coming next. We figured out SSH key storage and then how to use the “File” approach to add nodes. Lastly, we proved it worked by running a “whoami” on a destination host.

In our next post, we’ll address how to use Commands to run arbitrary steps on hosts, how the SCM connectivity works (leveraging Git with Forgejo), imports of the XML files stored in Git (using an alternate host), and Ansible two ways.