Published: Oct 26, 2023 by Isaac Johnson

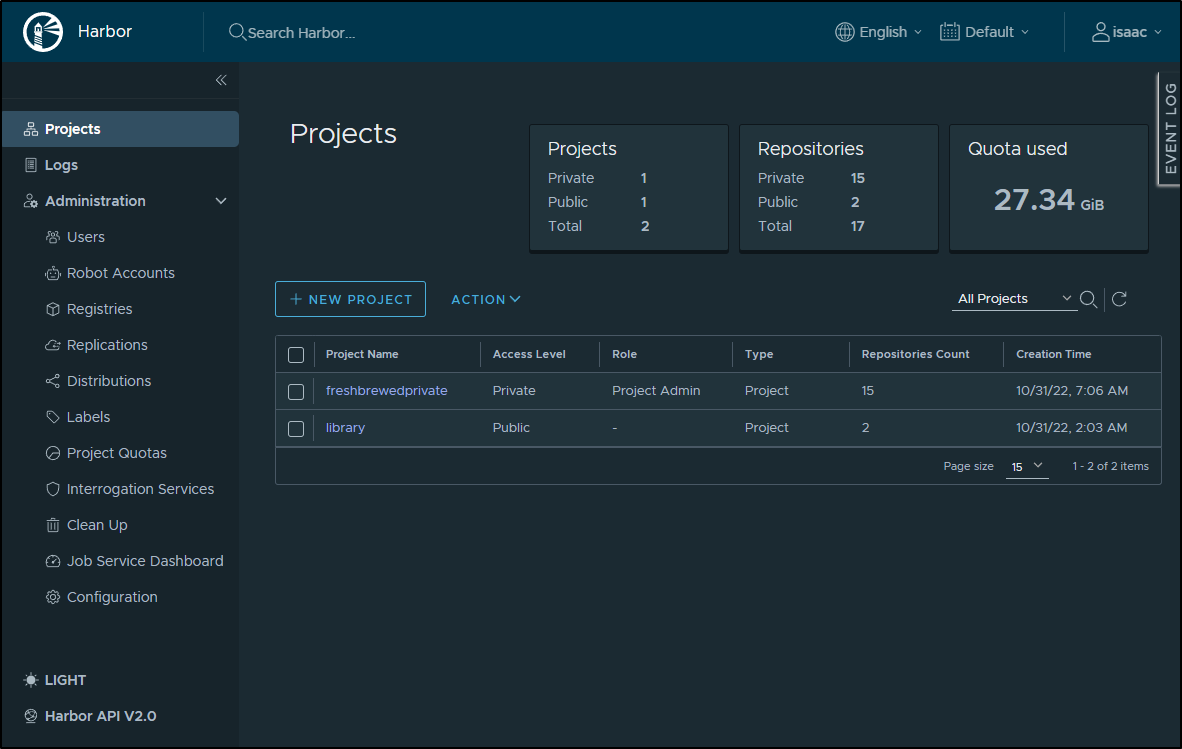

Harbor CR has moved up a lot since we last installed it nearly a year ago.

In that blog post we set up PostgreSQL as the HA backend (which is a good thing, as my clusters tend to use consumer-grade hardware that crashes).

Let’s take the plunge and upgrade Harbor CR from v2.6.1 to the latest (as of this writing), v2.9.0.

Once done, we can move on to Uptime Kuma and Pagerduty Rundeck.

Database backup

I can remind myself which PostgreSQL database I used by checking the Helm values

$ helm get values harbor-registry2

USER-SUPPLIED VALUES:
database:
  external:
    host: 192.168.1.78
    password: xxxxxxxx
    port: 5432
    username: harbor
  type: external

Hopping on the host, I can see I already have a daily cron to back up the Harbor CR databases

postgres@isaac-MacBookAir:~$ crontab -l

# Edit this file to introduce tasks to be run by cron.

#

# Each task to run has to be defined through a single line

# indicating with different fields when the task will be run

# and what command to run for the task

#

# To define the time you can provide concrete values for

# minute (m), hour (h), day of month (dom), month (mon),

# and day of week (dow) or use '*' in these fields (for 'any').

#

# Notice that tasks will be started based on the cron's system

# daemon's notion of time and timezones.

#

# Output of the crontab jobs (including errors) is sent through

# email to the user the crontab file belongs to (unless redirected).

#

# For example, you can run a backup of all your user accounts

# at 5 a.m every week with:

# 0 5 * * 1 tar -zcf /var/backups/home.tgz /home/

#

# For more information see the manual pages of crontab(5) and cron(8)

#

# m h dom mon dow command

CURRENT_DATE=date +%Y_%m_%d

0 0 * * * pg_dump -U postgres postgres > /mnt/nfs/k3snfs/postgres-backups/postgres-$(${CURRENT_DATE}).bak

5 0 * * * pg_dump -U postgres registry > /mnt/nfs/k3snfs/postgres-backups/harbor-registry-$(${CURRENT_DATE}).bak

10 0 * * * pg_dump -U postgres notary_signer > /mnt/nfs/k3snfs/postgres-backups/harbor-notary-signer-$(${CURRENT_DATE}).bak

20 0 * * * pg_dump -U postgres notary_server > /mnt/nfs/k3snfs/postgres-backups/harbor-notary-server-$(${CURRENT_DATE}).bak

And of course, I can see evidence they have been backing up

postgres@isaac-MacBookAir:~$ ls -ltrah /mnt/nfs/k3snfs/postgres-backups/ | tail -n 10

-rwxrwxrwx 1 1024 users 5.7K Oct 19 00:20 harbor-notary-server-2023_10_19.bak

-rwxrwxrwx 1 1024 users 1.3K Oct 20 00:00 postgres-2023_10_20.bak

-rwxrwxrwx 1 1024 users 1.4M Oct 20 00:05 harbor-registry-2023_10_20.bak

-rwxrwxrwx 1 1024 users 3.6K Oct 20 00:10 harbor-notary-signer-2023_10_20.bak

-rwxrwxrwx 1 1024 users 5.7K Oct 20 00:20 harbor-notary-server-2023_10_20.bak

-rwxrwxrwx 1 1024 users 1.3K Oct 21 00:01 postgres-2023_10_21.bak

-rwxrwxrwx 1 1024 users 1.4M Oct 21 00:06 harbor-registry-2023_10_21.bak

-rwxrwxrwx 1 1024 users 3.6K Oct 21 00:10 harbor-notary-signer-2023_10_21.bak

drwxrwxrwx 2 1024 users 68K Oct 21 00:20 .

-rwxrwxrwx 1 1024 users 5.7K Oct 21 00:20 harbor-notary-server-2023_10_21.bak

If you haven’t backed up your Harbor CR Database, you can use the example from the crontab shown above.
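If you are starting from scratch, the crontab entries above could be folded into one small script. This is only a sketch: the names backup_file, backup_db and BACKUP_DIR are mine for illustration, and it assumes pg_dump is on the path and can connect as the postgres user the same way my cron does.

```shell
# Hypothetical helpers; the names are illustrative, not from the crontab above.
BACKUP_DIR="${BACKUP_DIR:-/mnt/nfs/k3snfs/postgres-backups}"

# Build a date-stamped path such as <dir>/harbor-registry-2023_10_21.bak
# $1 = file prefix, $2 = optional date stamp (defaults to today)
backup_file() {
  prefix="$1"
  stamp="${2:-$(date +%Y_%m_%d)}"
  printf '%s/%s-%s.bak\n' "$BACKUP_DIR" "$prefix" "$stamp"
}

# Dump one database to its date-stamped file and fail if the dump is empty.
# $1 = database name, $2 = file prefix
backup_db() {
  out="$(backup_file "$2")"
  pg_dump -U postgres "$1" > "$out" && [ -s "$out" ]
}

# Usage (not run here):
#   backup_db registry harbor-registry
```

Checking that the file is non-empty is a cheap guard against a silent failure leaving a zero-byte backup behind.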

Next, I’ll set aside the values for my current registry Helm deploy

$ helm get values harbor-registry2 -o yaml > harbor-registry2.yaml

$ wc -l harbor-registry2.yaml

28 harbor-registry2.yaml

Next, add the Helm repo (if not already there) and update the charts

$ helm repo add harbor https://helm.goharbor.io

"harbor" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):

Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 172.22.64.1:53: no such host

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "freshbrewed" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "kiwigrid" chart repository

...Successfully got an update from the "portainer" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "gitea-charts" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "openzipkin" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "nfs" chart repository

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "btungut" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "newrelic" chart repository

Update Complete. ⎈Happy Helming!⎈

If we are at all concerned about which chart version is in the repo, we can search that repo to confirm the updated version exists

$ helm search repo harbor

NAME CHART VERSION APP VERSION DESCRIPTION

bitnami/harbor 19.0.5 2.9.0 Harbor is an open source trusted cloud-native r...

harbor/harbor 1.13.0 2.9.0 An open source trusted cloud native registry th...

myharbor/pykasa 0.1.0 1.16.0 A Helm chart for PyKasa

We can see above we have two charts that provide Harbor (in my rather ridiculously long list of chart repos):

- bitnami/harbor : chart version 19.0.5 and App version 2.9.0

- harbor/harbor : chart version 1.13.0 and App version 2.9.0

From a numbering perspective, it’s pretty evident that I used the official Harbor chart repo last time, so I will do the same

$ helm list | grep harbor

harbor-registry2 default 2 2023-01-25 08:23:10.93378476 -0600 CST deployed harbor-1.10.1

Before I upgrade from Chart version 1.10.1 (App version 2.6.1) to Chart version 1.13.0 (App version 2.9.0), I like to do a dry run first. It creates quite a lot of YAML, so I’ll just look at the kinds

$ helm upgrade --dry-run -f harbor-registry2.yaml harbor-registry2 harbor/harbor | grep -i ^kind

kind: Secret

kind: Secret

kind: Secret

kind: Secret

kind: Secret

kind: Secret

kind: Secret

kind: ConfigMap

kind: ConfigMap

kind: ConfigMap

kind: ConfigMap

kind: ConfigMap

kind: ConfigMap

kind: ConfigMap

kind: PersistentVolumeClaim

kind: PersistentVolumeClaim

kind: Service

kind: Service

kind: Service

kind: Service

kind: Service

kind: Service

kind: Service

kind: Deployment

kind: Deployment

kind: Deployment

kind: Deployment

kind: Deployment

kind: StatefulSet

kind: StatefulSet

kind: Ingress
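When the list of kinds gets this long, a count per kind is easier to eyeball. A minimal sketch, where count_kinds is a name I made up and the input is whatever the dry run prints:

```shell
# Count how many objects of each kind appear in rendered manifests read from stdin.
# Prints one "Kind count" pair per line, sorted by kind.
count_kinds() {
  grep -i '^kind:' | awk '{print $2}' | sort | uniq -c | awk '{print $2, $1}'
}

# Usage (not run here):
#   helm upgrade --dry-run -f harbor-registry2.yaml harbor-registry2 harbor/harbor | count_kinds
```

Saving that output before and after a chart bump makes it easy to see when a whole kind appears or disappears.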

I think the only part of concern is the Ingress it wants to create

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: "harbor-registry2-ingress"

labels:

heritage: Helm

release: harbor-registry2

chart: harbor

app: "harbor"

annotations:

cert-manager.io/cluster-issuer: letsencrypt-production

ingress.kubernetes.io/proxy-body-size: "0"

ingress.kubernetes.io/ssl-redirect: "true"

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/ssl-redirect: "true"

spec:

ingressClassName: nginx

tls:

- secretName: harbor.freshbrewed.science-cert

hosts:

- harbor.freshbrewed.science

rules:

- http:

paths:

- path: /api/

pathType: Prefix

backend:

service:

name: harbor-registry2-core

port:

number: 80

- path: /service/

pathType: Prefix

backend:

service:

name: harbor-registry2-core

port:

number: 80

- path: /v2/

pathType: Prefix

backend:

service:

name: harbor-registry2-core

port:

number: 80

- path: /chartrepo/

pathType: Prefix

backend:

service:

name: harbor-registry2-core

port:

number: 80

- path: /c/

pathType: Prefix

backend:

service:

name: harbor-registry2-core

port:

number: 80

- path: /

pathType: Prefix

backend:

service:

name: harbor-registry2-portal

port:

number: 80

host: harbor.freshbrewed.science

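The reason is that I added annotations to the live Ingress to allow larger payloads and longer timeouts, and the Ingress rendered by the chart does not carry all of them. Let’s set the current one aside first: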

$ kubectl get ingress harbor-registry2-ingress -o yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-production

field.cattle.io/publicEndpoints: '[{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:harbor-registry2-core","ingressName":"default:harbor-registry2-ingress","hostname":"harbor.freshbrewed.science","path":"/api/","allNodes":false},{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:harbor-registry2-core","ingressName":"default:harbor-registry2-ingress","hostname":"harbor.freshbrewed.science","path":"/service/","allNodes":false},{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:harbor-registry2-core","ingressName":"default:harbor-registry2-ingress","hostname":"harbor.freshbrewed.science","path":"/v2/","allNodes":false},{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:harbor-registry2-core","ingressName":"default:harbor-registry2-ingress","hostname":"harbor.freshbrewed.science","path":"/chartrepo/","allNodes":false},{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:harbor-registry2-core","ingressName":"default:harbor-registry2-ingress","hostname":"harbor.freshbrewed.science","path":"/c/","allNodes":false},{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:harbor-registry2-portal","ingressName":"default:harbor-registry2-ingress","hostname":"harbor.freshbrewed.science","path":"/","allNodes":false}]'

ingress.kubernetes.io/proxy-body-size: "0"

ingress.kubernetes.io/ssl-redirect: "true"

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"annotations":{"cert-manager.io/cluster-issuer":"letsencrypt-production","ingress.kubernetes.io/proxy-body-size":"0","ingress.kubernetes.io/ssl-redirect":"true","meta.helm.sh/release-name":"harbor-registry2","meta.helm.sh/release-namespace":"default","nginx.ingress.kubernetes.io/proxy-body-size":"0","nginx.ingress.kubernetes.io/proxy-read-timeout":"600","nginx.ingress.kubernetes.io/proxy-send-timeout":"600","nginx.ingress.kubernetes.io/ssl-redirect":"true","nginx.org/client-max-body-size":"0","nginx.org/proxy-connect-timeout":"600","nginx.org/proxy-read-timeout":"600"},"creationTimestamp":"2022-10-31T11:57:10Z","generation":1,"labels":{"app":"harbor","app.kubernetes.io/managed-by":"Helm","chart":"harbor","heritage":"Helm","release":"harbor-registry2"},"name":"harbor-registry2-ingress","namespace":"default","resourceVersion":"37648959","uid":"688c907f-ed71-47b3-9512-c4c9508ab5ac"},"spec":{"ingressClassName":"nginx","rules":[{"host":"harbor.freshbrewed.science","http":{"paths":[{"backend":{"service":{"name":"harbor-registry2-core","port":{"number":80}}},"path":"/api/","pathType":"Prefix"},{"backend":{"service":{"name":"harbor-registry2-core","port":{"number":80}}},"path":"/service/","pathType":"Prefix"},{"backend":{"service":{"name":"harbor-registry2-core","port":{"number":80}}},"path":"/v2/","pathType":"Prefix"},{"backend":{"service":{"name":"harbor-registry2-core","port":{"number":80}}},"path":"/chartrepo/","pathType":"Prefix"},{"backend":{"service":{"name":"harbor-registry2-core","port":{"number":80}}},"path":"/c/","pathType":"Prefix"},{"backend":{"service":{"name":"harbor-registry2-portal","port":{"number":80}}},"path":"/","pathType":"Prefix"}]}}],"tls":[{"hosts":["harbor.freshbrewed.science"],"secretName":"harbor.freshbrewed.science-cert"}]},"status":{"loadBalancer":{"ingress":[{"ip":"192.168.1.214"},{"ip":"192.168.1.38"},{"ip":"192.168.1.57"},{"ip":"192.168.1.77"}]}}}

meta.helm.sh/release-name: harbor-registry2

meta.helm.sh/release-namespace: default

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-read-timeout: "600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "600"

nginx.ingress.kubernetes.io/ssl-redirect: "true"

nginx.org/client-max-body-size: "0"

nginx.org/proxy-connect-timeout: "600"

nginx.org/proxy-read-timeout: "600"

creationTimestamp: "2022-10-31T11:57:10Z"

generation: 1

labels:

app: harbor

app.kubernetes.io/managed-by: Helm

chart: harbor

heritage: Helm

release: harbor-registry2

name: harbor-registry2-ingress

namespace: default

resourceVersion: "249214523"

uid: 688c907f-ed71-47b3-9512-c4c9508ab5ac

spec:

ingressClassName: nginx

rules:

- host: harbor.freshbrewed.science

http:

paths:

- backend:

service:

name: harbor-registry2-core

port:

number: 80

path: /api/

pathType: Prefix

- backend:

service:

name: harbor-registry2-core

port:

number: 80

path: /service/

pathType: Prefix

- backend:

service:

name: harbor-registry2-core

port:

number: 80

path: /v2/

pathType: Prefix

- backend:

service:

name: harbor-registry2-core

port:

number: 80

path: /chartrepo/

pathType: Prefix

- backend:

service:

name: harbor-registry2-core

port:

number: 80

path: /c/

pathType: Prefix

- backend:

service:

name: harbor-registry2-portal

port:

number: 80

path: /

pathType: Prefix

tls:

- hosts:

- harbor.freshbrewed.science

secretName: harbor.freshbrewed.science-cert

status:

loadBalancer:

ingress:

- ip: 192.168.1.215

- ip: 192.168.1.36

- ip: 192.168.1.57

- ip: 192.168.1.78
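One way to spot which annotations an upgrade would drop is to compare the annotation keys on the live object against the rendered one. A rough sketch, assuming each has been saved to its own file and that the rendered file holds only the Ingress; annotation_keys and dropped_annotations are hypothetical helper names, and the pattern only looks at keys containing nginx.

```shell
# Print the sorted, unique annotation keys containing "nginx" found in a YAML file.
annotation_keys() {
  grep -oE '^[[:space:]]*[A-Za-z0-9./-]*nginx[A-Za-z0-9./-]*:' "$1" \
    | tr -d ' \t:' | sort -u
}

# Print keys present in the live file ($1) but missing from the rendered file ($2).
dropped_annotations() {
  live="$(mktemp)"
  new="$(mktemp)"
  annotation_keys "$1" > "$live"
  annotation_keys "$2" > "$new"
  comm -23 "$live" "$new"
  rm -f "$live" "$new"
}

# Usage (not run here):
#   kubectl get ingress harbor-registry2-ingress -o yaml > live.yaml
#   dropped_annotations live.yaml rendered-ingress.yaml
```

Anything it prints is a candidate to add back after the upgrade, or to move into the Helm values so the chart manages it.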

I also have a Notary ingress today, but it is not listed in the Helm dry run (Notary was removed from Harbor as of v2.9.0)

$ kubectl get ingress harbor-registry2-ingress-notary -o yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-production

field.cattle.io/publicEndpoints: '[{"addresses":["192.168.1.215","192.168.1.36","192.168.1.57","192.168.1.78"],"port":443,"protocol":"HTTPS","serviceName":"default:harbor-registry2-notary-server","ingressName":"default:harbor-registry2-ingress-notary","hostname":"notary.freshbrewed.science","path":"/","allNodes":false}]'

ingress.kubernetes.io/proxy-body-size: "0"

ingress.kubernetes.io/ssl-redirect: "true"

meta.helm.sh/release-name: harbor-registry2

meta.helm.sh/release-namespace: default

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/ssl-redirect: "true"

creationTimestamp: "2022-10-31T11:57:10Z"

generation: 1

labels:

app: harbor

app.kubernetes.io/managed-by: Helm

chart: harbor

heritage: Helm

release: harbor-registry2

name: harbor-registry2-ingress-notary

namespace: default

resourceVersion: "249214548"

uid: e2b7c887-cbca-46d8-bc32-6ad38dc27b74

spec:

ingressClassName: nginx

rules:

- host: notary.freshbrewed.science

http:

paths:

- backend:

service:

name: harbor-registry2-notary-server

port:

number: 4443

path: /

pathType: Prefix

tls:

- hosts:

- notary.freshbrewed.science

secretName: notary.freshbrewed.science-cert

status:

loadBalancer:

ingress:

- ip: 192.168.1.215

- ip: 192.168.1.36

- ip: 192.168.1.57

- ip: 192.168.1.78

I can double check the image versions in the dry run

image: goharbor/harbor-core:v2.9.0

image: goharbor/harbor-exporter:v2.9.0

image: goharbor/harbor-jobservice:v2.9.0

image: goharbor/harbor-portal:v2.9.0

image: goharbor/registry-photon:v2.9.0

image: goharbor/harbor-registryctl:v2.9.0

image: goharbor/redis-photon:v2.9.0

image: goharbor/trivy-adapter-photon:v2.9.0

compared to what is currently deployed

$ helm get values harbor-registry2 --all | grep 'tag: '

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1

tag: v2.6.1
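To line the two up quickly, each can be reduced to its set of unique tags. A sketch, with image_tags as a made-up helper that reads rendered manifests on stdin and assumes every image reference carries an explicit tag:

```shell
# Print the unique image tags found on 'image:' lines read from stdin.
# Assumes each image has an explicit tag (name:tag); untagged images give odd results.
image_tags() {
  grep -E '^[[:space:]]*(- )?image:' \
    | sed -E 's/.*:([^:"]+)"?[[:space:]]*$/\1/' \
    | sort -u
}

# Usage (not run here):
#   helm upgrade --dry-run -f harbor-registry2.yaml harbor-registry2 harbor/harbor | image_tags
```

A single line of output means every component is moving to the same release.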

Upgrade

Enough stalling, time to pull the trigger and upgrade. There is no ‘undo’ due to database migrations. This means if things fail badly, I would need to restore from the PostgreSQL backups.

$ helm upgrade -f harbor-registry2.yaml harbor-registry2 harbor/harbor

Release "harbor-registry2" has been upgraded. Happy Helming!

NAME: harbor-registry2

LAST DEPLOYED: Sun Oct 22 13:19:27 2023

NAMESPACE: default

STATUS: deployed

REVISION: 3

TEST SUITE: None

NOTES:

Please wait for several minutes for Harbor deployment to complete.

Then you should be able to visit the Harbor portal at https://harbor.freshbrewed.science

For more details, please visit https://github.com/goharbor/harbor

We can see it upgraded really fast

$ kubectl get pods | grep harbor

harbor-registry2-jobservice-865f8f8b67-rflnx 1/1 Running 182 (23h ago) 81d

harbor-registry2-portal-5c45d99f69-nt6sj 1/1 Running 0 80s

harbor-registry2-redis-0 1/1 Running 0 76s

harbor-registry2-registry-6fcf6fdf49-glbg2 2/2 Running 0 80s

harbor-registry2-core-5886799cd6-58szd 1/1 Running 0 80s

harbor-registry2-trivy-0 1/1 Running 0 75s

harbor-registry2-exporter-59755fb475-trkqv 1/1 Running 0 80s

harbor-registry2-registry-d887cbd98-wlt7x 0/2 Terminating 0 156d

harbor-registry2-jobservice-6bf7d6f5d6-76khw 0/1 Running 2 (29s ago) 80s
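While the rollout settles, it helps to filter that list down to the pods that still need attention. A small sketch; not_ready_pods is a hypothetical helper that reads the kubectl get pods output on stdin:

```shell
# Read `kubectl get pods` output on stdin and print pods whose READY column
# is not n/n or whose STATUS is not Running. The header line is skipped
# because its second column does not look like a/b.
not_ready_pods() {
  awk '$2 ~ /^[0-9]+\/[0-9]+$/ {
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") print $1
       }'
}

# Usage (not run here):
#   kubectl get pods | grep harbor | not_ready_pods
```

Run against the listing above, it would flag the terminating registry pod and the new jobservice pod that is still restarting.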

I can see it listed

$ helm list | grep harbor

harbor-registry2 default 3 2023-10-22 13:19:27.905767777 -0500 CDT deployed harbor-1.13.0 2.9.0

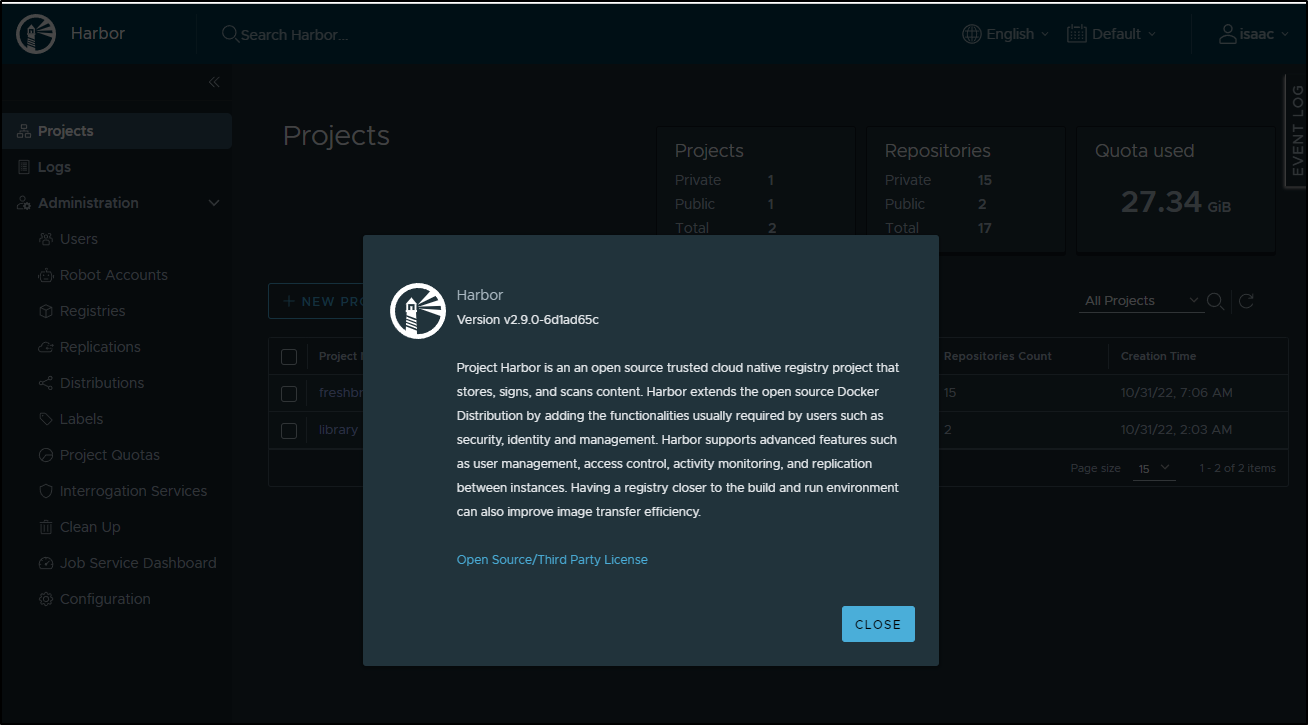

I can see the new version in the About page

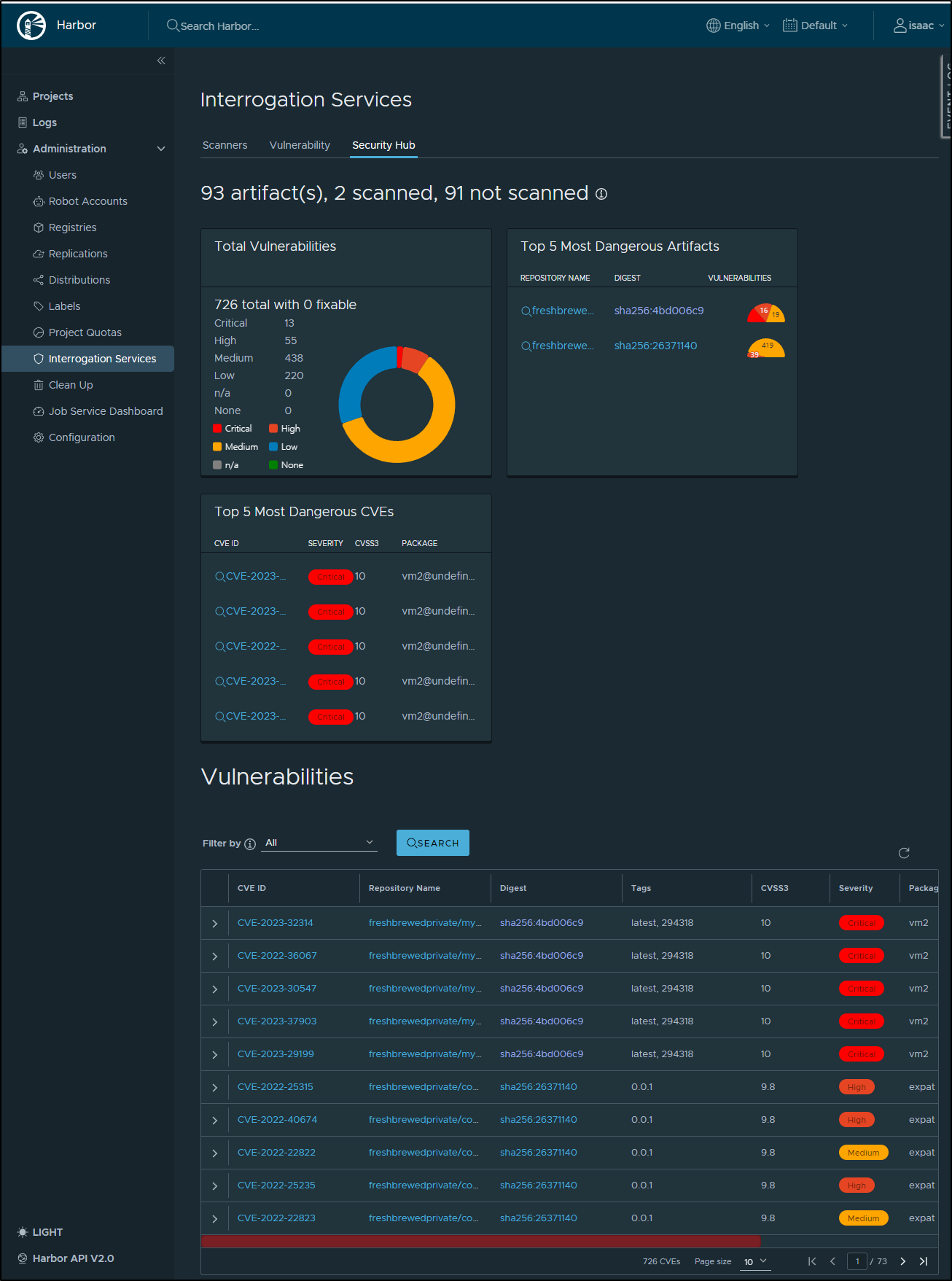

Let’s check out some of the new features such as the Security Hub

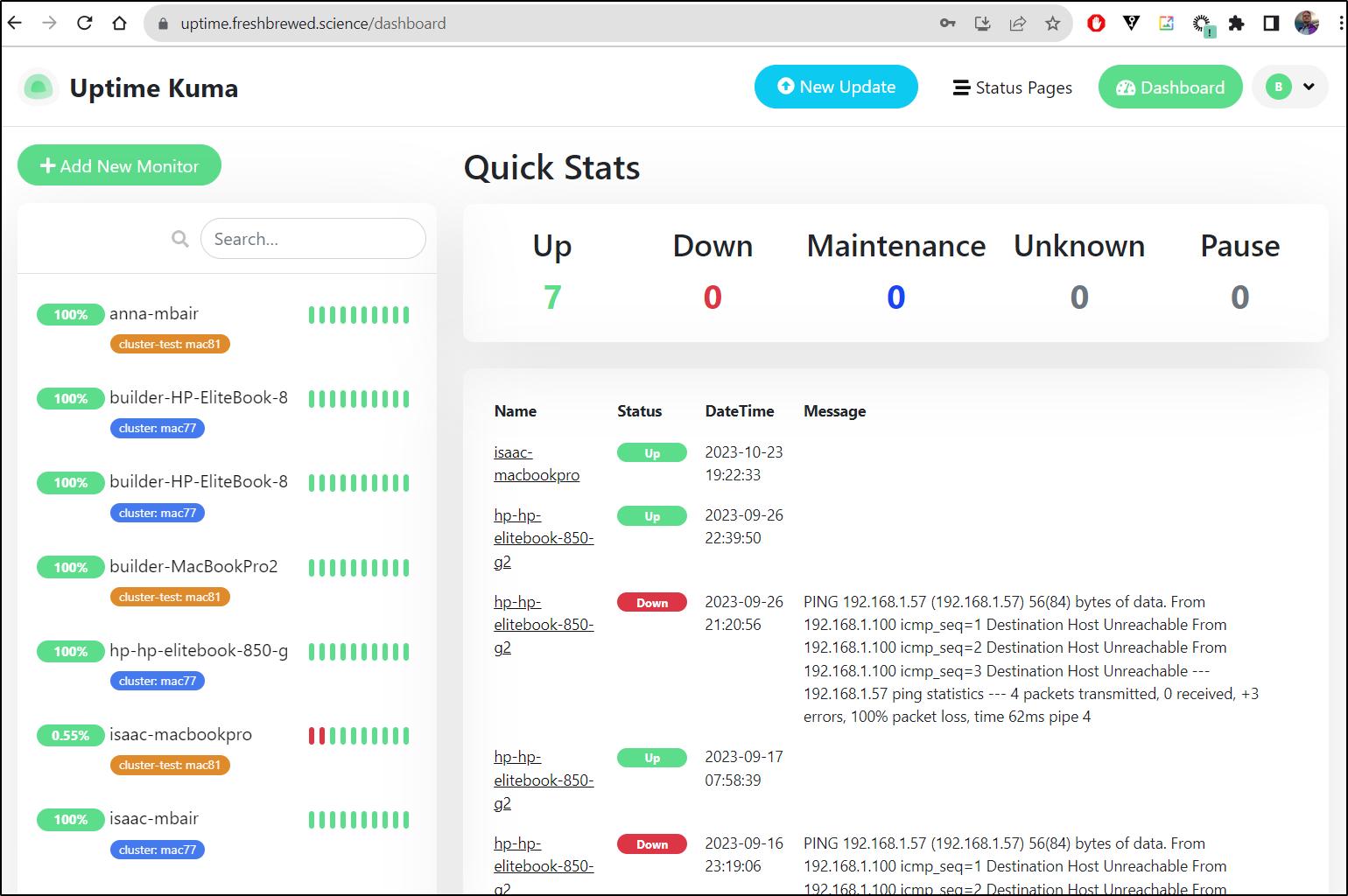

Upgrading Uptime

My Uptime Kuma actually runs in Docker on a micro PC and is fronted by K8s through an external IP endpoint.

The way that Ingress works is that we first define a Service

$ kubectl get svc uptime-external-ip -o yaml

apiVersion: v1

kind: Service

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"uptime-external-ip","namespace":"default"},"spec":{"clusterIP":"None","ports":[{"name":"utapp","port":80,"protocol":"TCP","targetPort":3001}],"type":"ClusterIP"}}

creationTimestamp: "2023-05-09T11:49:17Z"

name: uptime-external-ip

namespace: default

resourceVersion: "134542833"

uid: 1a4909ce-d5de-4b4f-ae24-9f8fb7df6f63

spec:

clusterIP: None

clusterIPs:

- None

internalTrafficPolicy: Cluster

ipFamilies:

- IPv4

- IPv6

ipFamilyPolicy: RequireDualStack

ports:

- name: utapp

port: 80

protocol: TCP

targetPort: 3001

sessionAffinity: None

type: ClusterIP

status:

loadBalancer: {}

However, you’ll notice it has no selector.

Instead, we pair it with an external IP using an Endpoints object of the same name

$ kubectl get endpoints uptime-external-ip -o yaml

apiVersion: v1

kind: Endpoints

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","kind":"Endpoints","metadata":{"annotations":{},"name":"uptime-external-ip","namespace":"default"},"subsets":[{"addresses":[{"ip":"192.168.1.100"}],"ports":[{"name":"utapp","port":3001,"protocol":"TCP"}]}]}

creationTimestamp: "2023-05-09T11:49:17Z"

name: uptime-external-ip

namespace: default

resourceVersion: "134542834"

uid: 6863a4d8-b0dc-4e07-8e2c-3fd29f0ac5f1

subsets:

- addresses:

- ip: 192.168.1.100

ports:

- name: utapp

port: 3001

protocol: TCP
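Since I end up repeating this pattern for each external host, the pair can be generated from a few parameters. A minimal sketch; external_service_manifest and its arguments are names I made up, and the Service port is fixed at 80 as in my setup. The two objects must share a name, and the port names must match.

```shell
# Emit a selector-less headless Service plus a matching Endpoints object.
# $1 = object name, $2 = external IP, $3 = external port, $4 = port name (optional)
external_service_manifest() {
  name="$1"; ip="$2"; port="$3"; portname="${4:-app}"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  clusterIP: None
  ports:
  - name: ${portname}
    port: 80
    protocol: TCP
    targetPort: ${port}
  type: ClusterIP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: ${name}
subsets:
- addresses:
  - ip: ${ip}
  ports:
  - name: ${portname}
    port: ${port}
    protocol: TCP
EOF
}

# Usage (not run here):
#   external_service_manifest uptime-external-ip 192.168.1.100 3001 utapp | kubectl apply -f -
```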

I’ll hop over to that host and see what version we are running.

From the history, I could see we literally are running version “1”

$ docker run -d --restart=always -p 3001:3001 -v uptime-kuma:/app/data --name uptime-kuma louislam/uptime-kuma:1

Try as I might, I can’t find an existing ‘1’ tag image from 5 months ago on dockerhub (perhaps it was removed)

$ docker image inspect 9a2976449ae2

[

{

"Id": "sha256:9a2976449ae2d48388c8ae920311a49b82b76667042b2007281a77f694c151dd",

"RepoTags": [

"louislam/uptime-kuma:1"

],

"RepoDigests": [

"louislam/uptime-kuma@sha256:1630eb7859c5825a1bc3fcbea9467ab3c9c2ef0d98a9f5f0ab0aec9791c027e8"

],

"Parent": "",

"Comment": "buildkit.dockerfile.v0",

"Created": "2023-05-08T16:29:53.903463708Z",

"Container": "",

This time I’ll pin a specific tag, 1.23.3

$ docker volume create uptime-kuma1233

uptime-kuma1233

$ docker run -d --restart=always -p 3101:3001 -v uptime-kuma1233:/app/data --name uptime-kuma-1233 louislam/uptime-kuma:1.23.3

Unable to find image 'louislam/uptime-kuma:1.23.3' locally

1.23.3: Pulling from louislam/uptime-kuma

91f01557fe0d: Pull complete

ecdd21ad91a4: Pull complete

72f5a0bbd6d5: Pull complete

6d65359ecb29: Pull complete

a9db6797916e: Pull complete

fba4a7c70bda: Pull complete

4eae394bbd7b: Pull complete

efe96d40ae58: Pull complete

6133283921eb: Pull complete

e4aabc99e92a: Pull complete

4f4fb700ef54: Pull complete

8bdf079e662e: Pull complete

Digest: sha256:7d8b69a280bd9fa45d21c44a4f9b133b52925212c5d243f27ef03bcad33de2c1

Status: Downloaded newer image for louislam/uptime-kuma:1.23.3

6bff0185cab4567ca08637cf06f3b7f8e56fbd7c611eb9828f1b401f6961465e

We can see it running

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1700cf832175 louislam/uptime-kuma:1.23.3 "/usr/bin/dumb-init …" 3 seconds ago Up 2 seconds (health: starting) 0.0.0.0:3101->3001/tcp, :::3101->3001/tcp uptime-kuma-1233b

767c9377f238 rundeck/rundeck:3.4.6 "/tini -- docker-lib…" 5 months ago Up 2 months 0.0.0.0:4440->4440/tcp, :::4440->4440/tcp rundeck2

0d276b305523 louislam/uptime-kuma:1 "/usr/bin/dumb-init …" 5 months ago Up 2 months (healthy) 0.0.0.0:3001->3001/tcp, :::3001->3001/tcp uptime-kuma

We can then edit the Service and Endpoints to use port 3101

spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: utapbp
    port: 80
    protocol: TCP
    targetPort: 3101
  sessionAffinity: None
  type: ClusterIP

and Endpoints

subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: utapbp
    port: 3101
    protocol: TCP

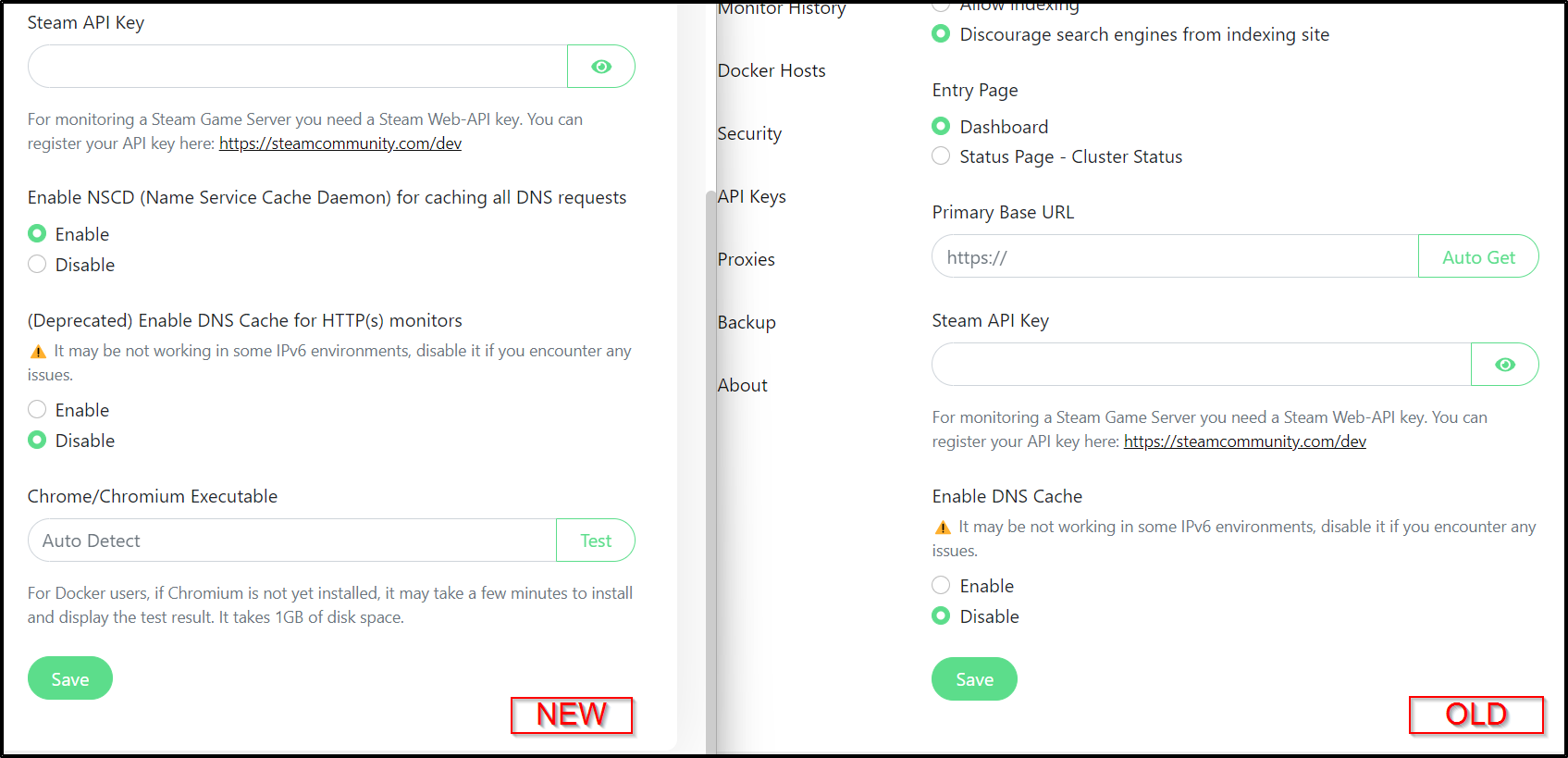

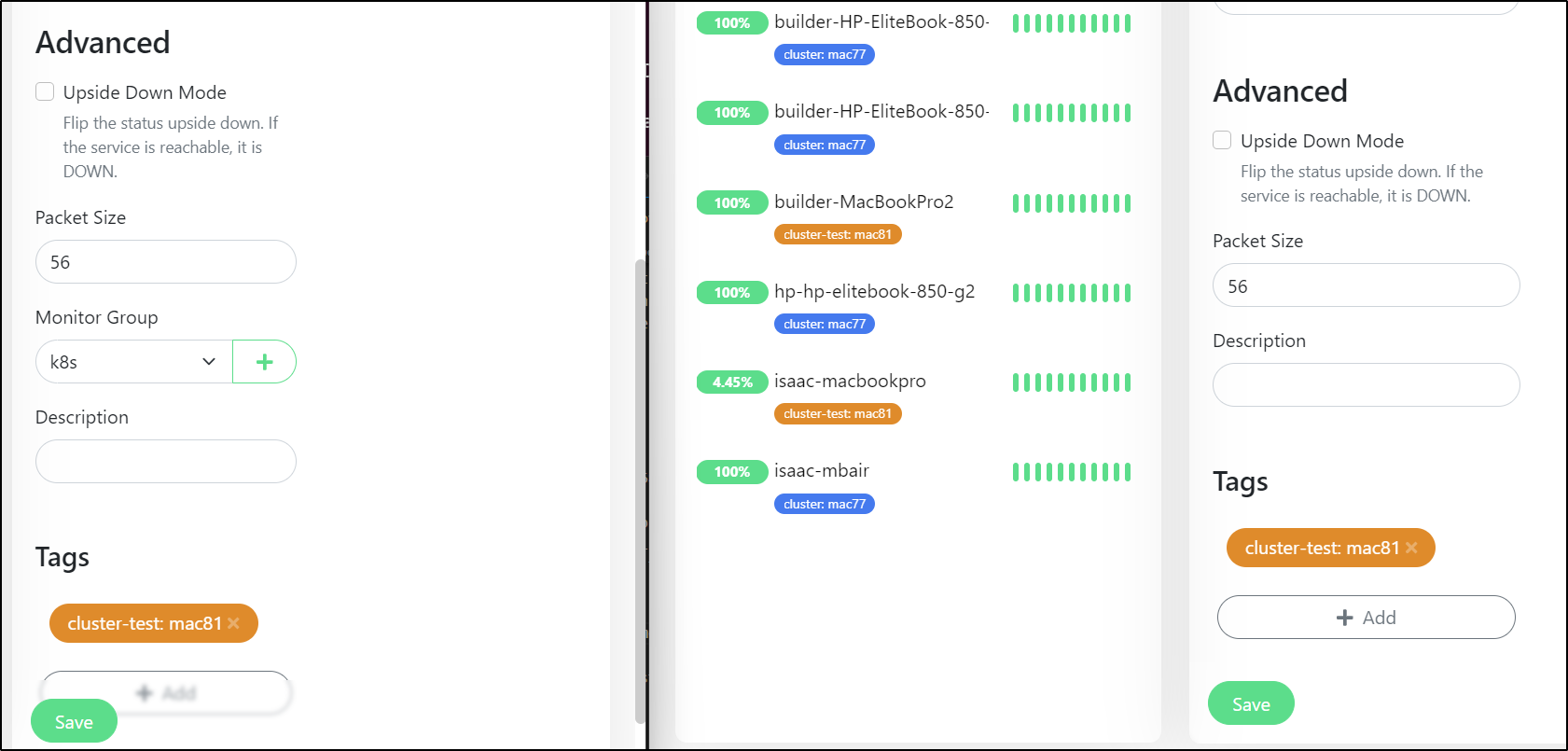

We can see some of the new features and menus, such as in settings

I can see new features like “Monitoring Groups”

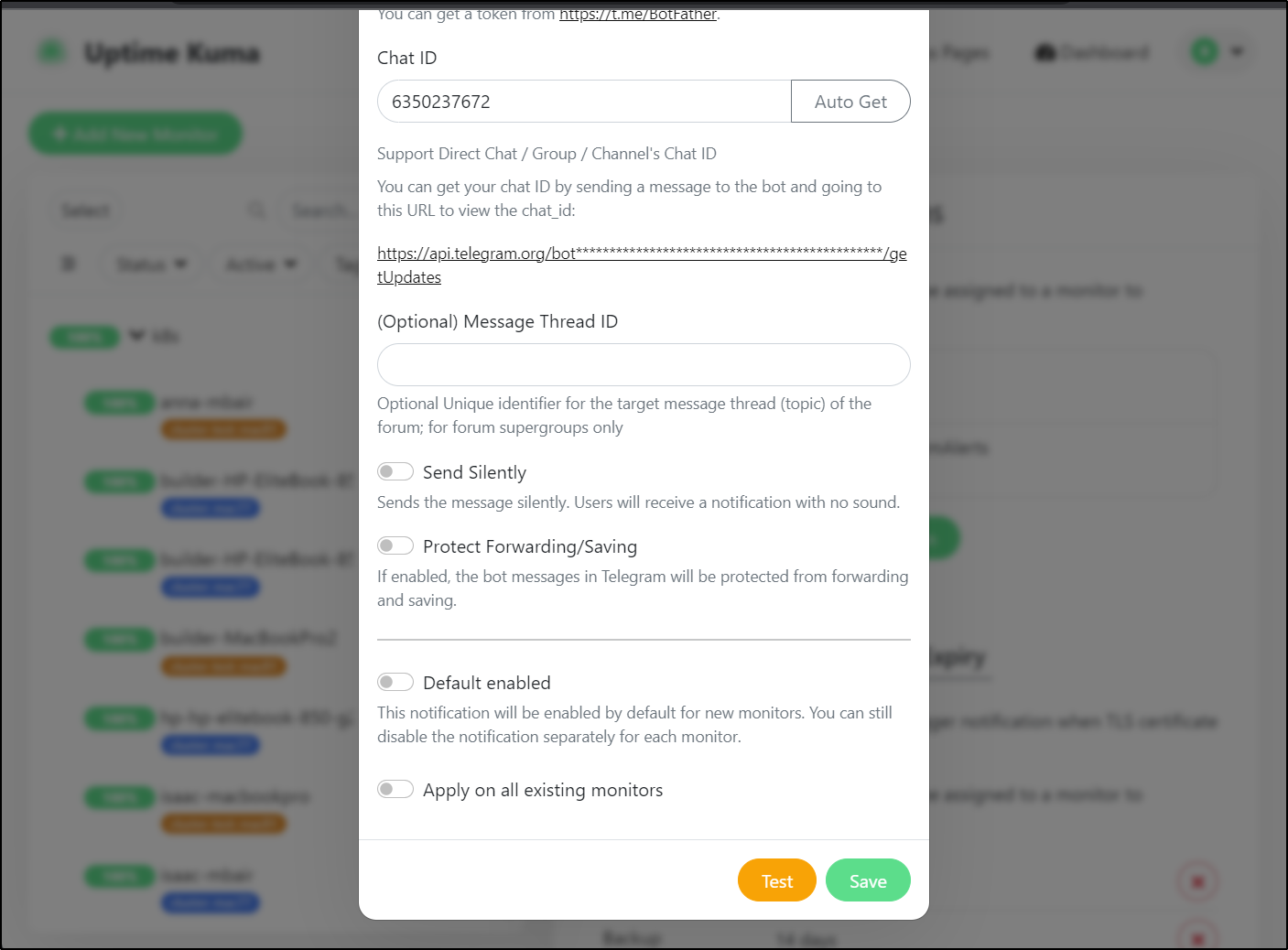

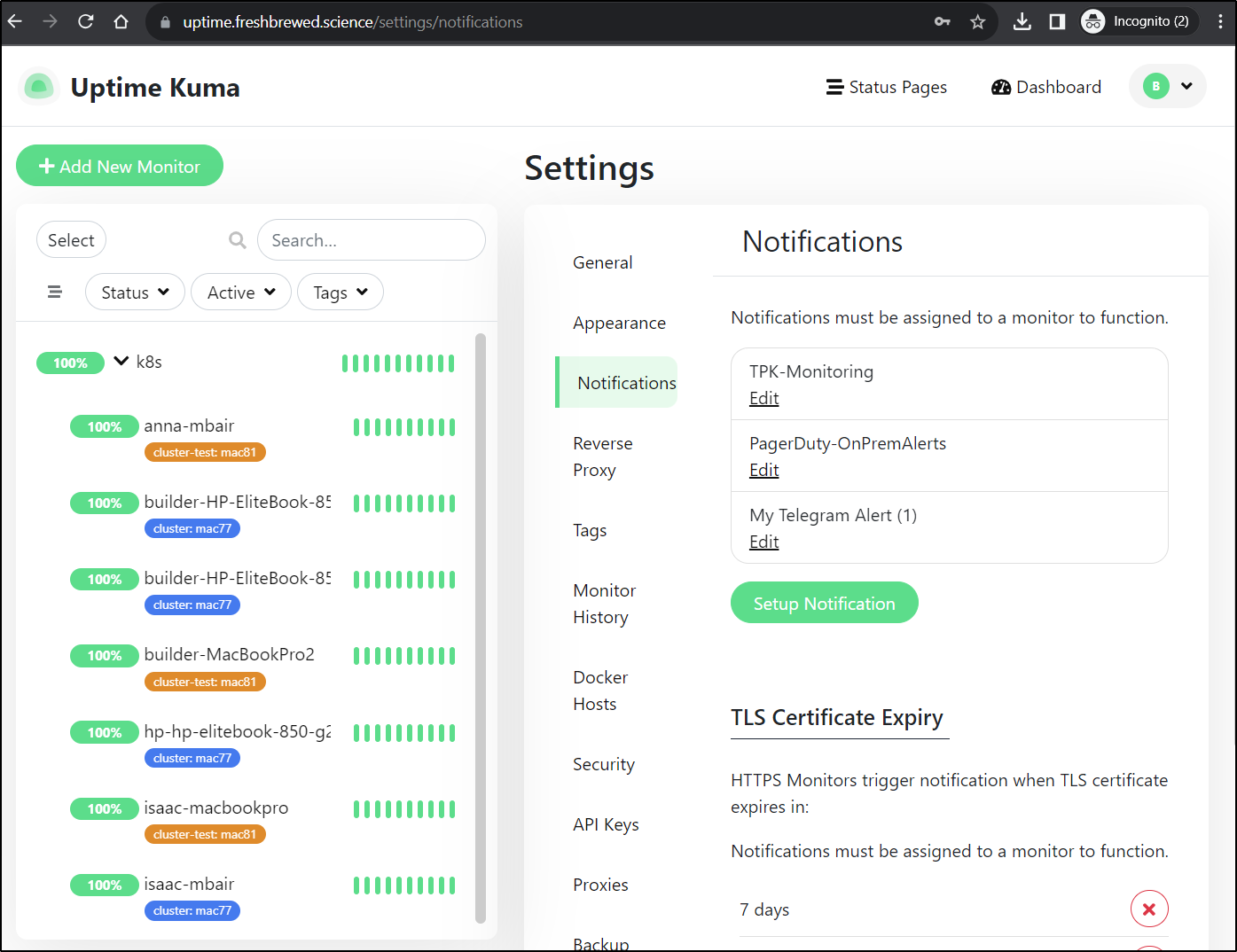

Telegram

Adding Telegram is pretty easy using the ‘BotFather’ (from the device of course)

We can now test, which sends a message to the device

We can now see the new Telegram endpoint along with a newer feature that grouped all the Kubernetes notifications into a “k8s” group

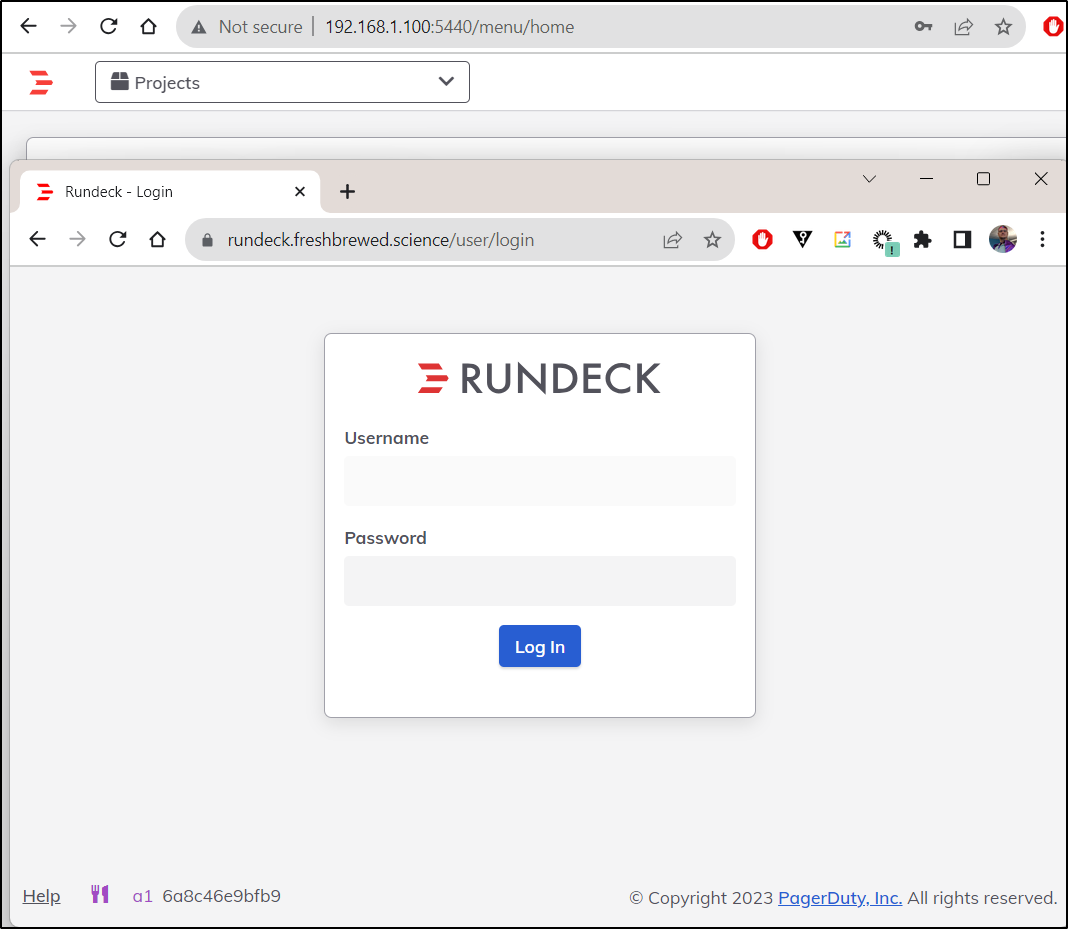

Pagerduty Rundeck

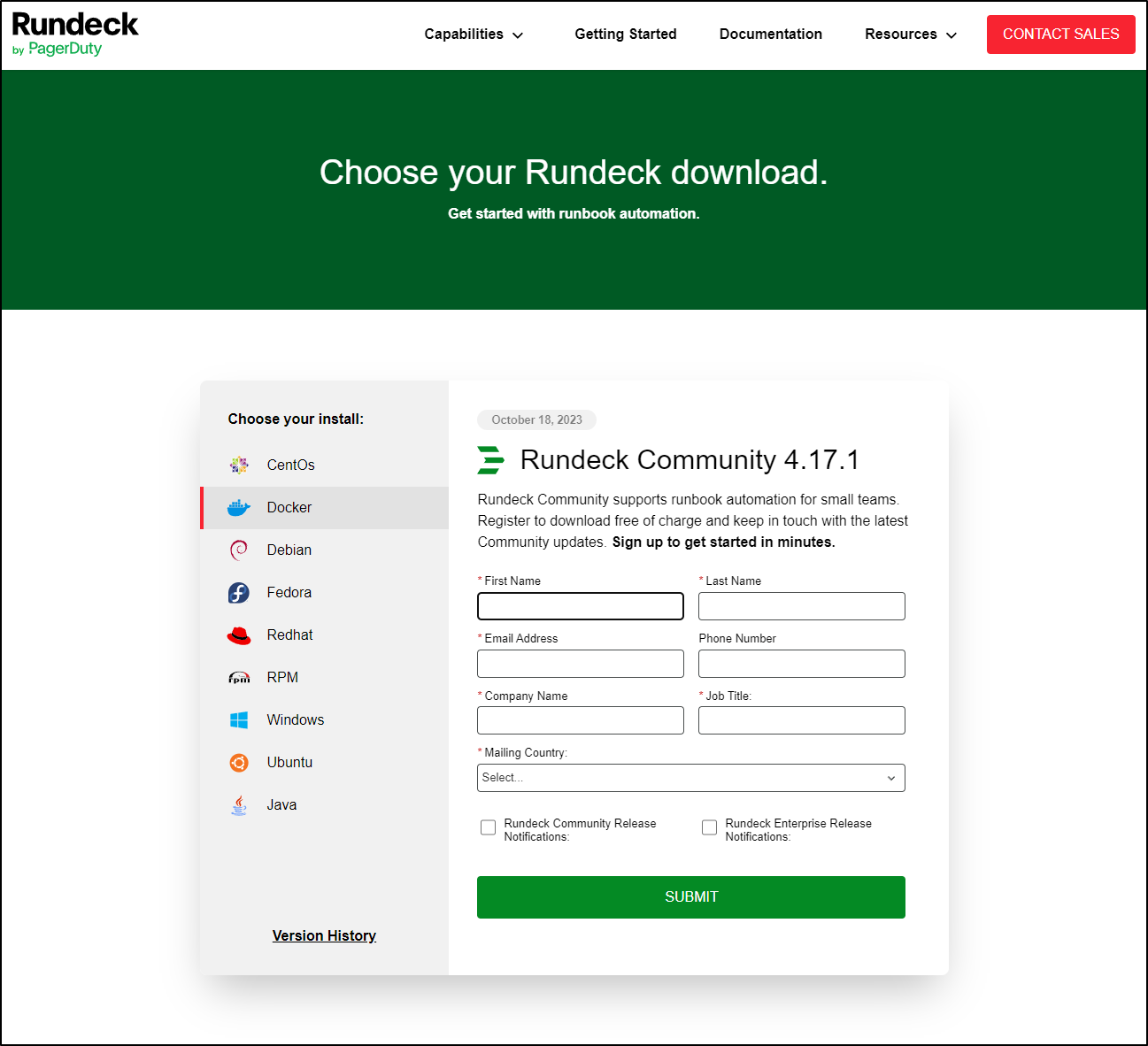

We can get different installers through a PI collection page

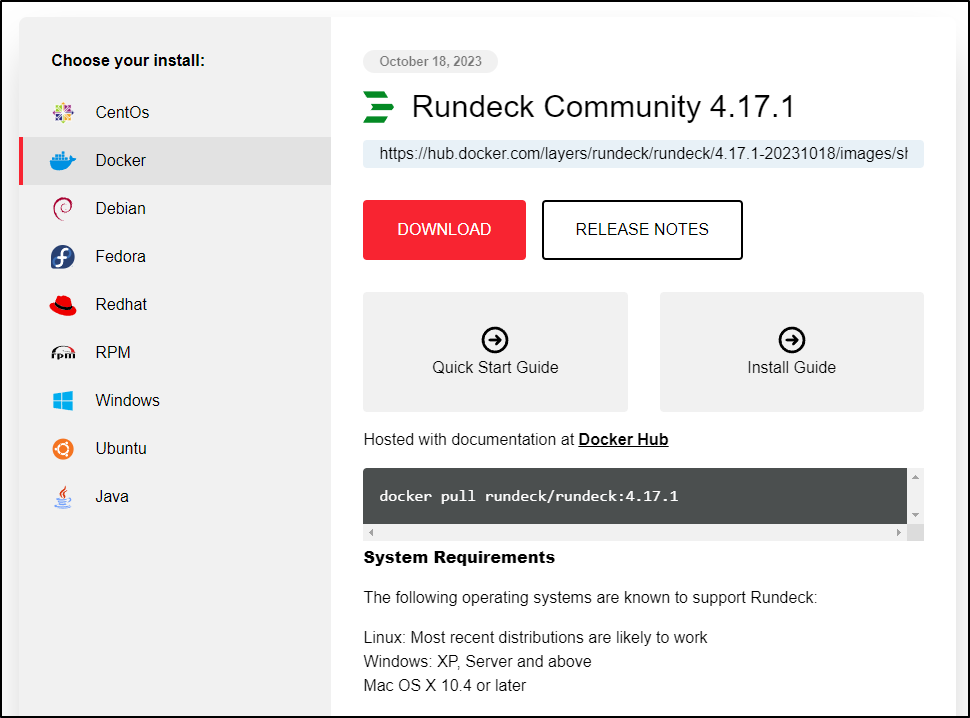

And see the latest image (though we could find it from dockerhub too)

We can see the image and pull down the new one on the Docker host

builder@builder-T100:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1700cf832175 louislam/uptime-kuma:1.23.3 "/usr/bin/dumb-init …" 20 hours ago Up 20 hours (healthy) 0.0.0.0:3101->3001/tcp, :::3101->3001/tcp uptime-kuma-1233b

767c9377f238 rundeck/rundeck:3.4.6 "/tini -- docker-lib…" 5 months ago Up 2 months 0.0.0.0:4440->4440/tcp, :::4440->4440/tcp rundeck2

builder@builder-T100:~$ docker pull rundeck/rundeck:4.17.1

4.17.1: Pulling from rundeck/rundeck

ca1778b69356: Pull complete

df27435ba8bd: Pull complete

964ef4f85b7c: Pull complete

9ae91f8e216c: Pull complete

19372f3ab2a4: Pull complete

6c0b56e3a393: Pull complete

a2fd243fb3df: Pull complete

a090b7c4c5b3: Pull complete

ba69c7ff8f7b: Pull complete

3f100cde7d05: Pull complete

092341dff557: Pull complete

e4310779d215: Pull complete

2c90e598ac90: Pull complete

Digest: sha256:8c5eac10a0bdc37549a0d90c52dd9b88720a35ad93e4bb2fe7ec775986d5a657

Status: Downloaded newer image for rundeck/rundeck:4.17.1

docker.io/rundeck/rundeck:4.17.1

I’ll then want to create a docker volume

$ docker volume create rundeck3

rundeck3

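I can now launch the container. My first attempt had a typo in the port mapping (a doubled colon in -p 5440::4440), which Docker rejected

$ docker run -d --restart=always -p 5440::4440 -v rundeck3:/home/rundeck/server/data -e RUNDECK_GRAILS_URL=http://192.168.1.100:5440 --name rundeck3 rundeck/rundeck:4.17.1

docker: Invalid ip address: 5440.

See 'docker run --help'.

Rerunning with -p 5440:4440 started the container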
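That error message makes sense once you see how a three-field publish value is read: the first field is taken as the IP to bind. The sketch below is a simplified model of that split, not Docker’s actual parser (it ignores IPv6 addresses and protocol suffixes), and describe_publish is a name I invented.

```shell
# Show how a -p value splits into fields.
# Two fields:   hostPort:containerPort
# Three fields: ip:hostPort:containerPort (hostPort may be empty)
describe_publish() {
  spec="$1"
  fields="$(printf '%s\n' "$spec" | awk -F: '{print NF}')"
  case "$fields" in
    2) printf 'hostPort=%s containerPort=%s\n' "${spec%%:*}" "${spec##*:}" ;;
    3) printf 'ip=%s hostPort=%s containerPort=%s\n' \
         "$(printf '%s\n' "$spec" | cut -d: -f1)" \
         "$(printf '%s\n' "$spec" | cut -d: -f2)" \
         "$(printf '%s\n' "$spec" | cut -d: -f3)" ;;
    *) printf 'unrecognised: %s\n' "$spec" ;;
  esac
}

describe_publish 5440:4440
describe_publish 5440::4440
```

With the doubled colon, 5440 lands in the IP slot, which is exactly what the error complains about.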

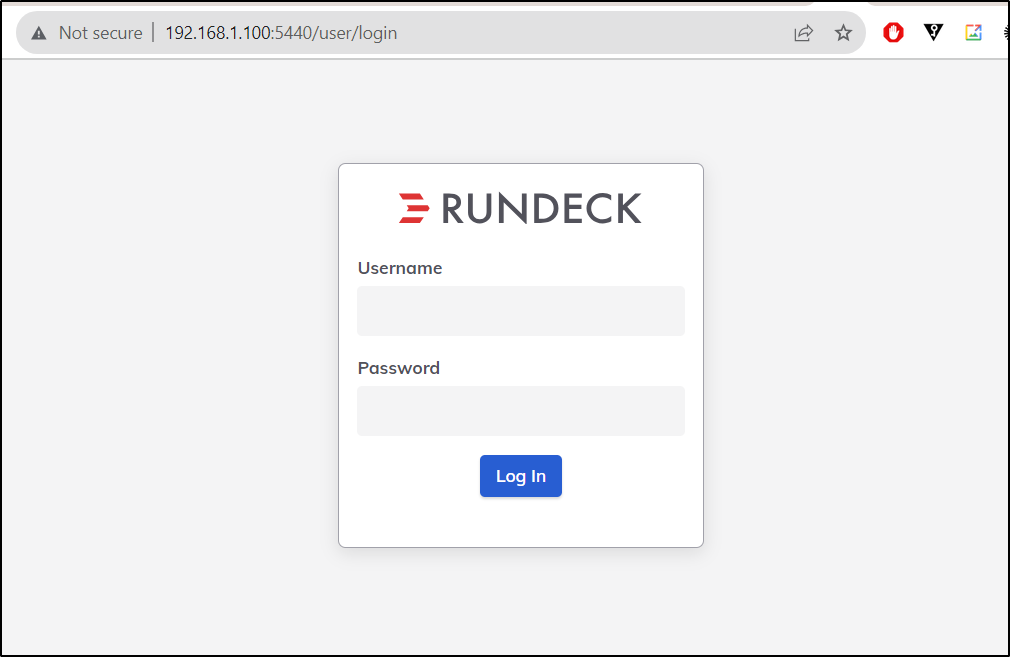

I can tell right away that Rundeck 4 looks a lot different than Rundeck 3

We cannot change the password in the UI, sadly, for admin and user. But we can if we hop into the container and change the realm.properties file

$ docker exec -it rundeck3 /bin/bash

rundeck@6a8c46e9bfb9:~$ cd server/

rundeck@6a8c46e9bfb9:~/server$ cd config/

rundeck@6a8c46e9bfb9:~/server/config$ cat realm.properties | sed 's/admin:admin/admin:mynewadminpassword/' | sed 's/user:user/user:mynewuserpassword/' > realm.properties2

rundeck@6a8c46e9bfb9:~/server/config$ mv realm.properties2 realm.properties
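The same edit can be wrapped in a small function so it only touches the password field of the user you name. This is a sketch under assumptions: set_realm_password is a hypothetical helper, it assumes each line looks like user:password,role1,role2, and it will misbehave if the new password contains / or & because those are special to sed.

```shell
# Replace the password for one user in a realm.properties-style file.
# $1 = file, $2 = user, $3 = new password (must not contain / or &)
set_realm_password() {
  file="$1"; user="$2"; newpass="$3"
  tmp="$(mktemp)"
  # Rewrite only the text between "user:" and the first comma on that user's line.
  sed "s/^${user}:[^,]*/${user}:${newpass}/" "$file" > "$tmp" \
    && cat "$tmp" > "$file"   # write back in place to keep the file's owner and mode
  rm -f "$tmp"
}

# Usage (not run here):
#   set_realm_password realm.properties admin mynewadminpassword
```

Anchoring on the start of the line avoids accidentally rewriting a role list that happens to contain the same text.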

We need to restart the Rundeck process. Back in Rundeck 3, there was an /etc/init.d script, but I don’t see anything that fits the bill in this latest version.

That said, just killing the tini process (PID 1) did the trick, since the container’s restart policy brought it right back up

rundeck@6a8c46e9bfb9:~$ ps -ef

UID PID PPID C STIME TTY TIME CMD

rundeck 1 0 0 00:03 ? 00:00:00 /tini -- docker-lib/entry.sh

rundeck 7 1 7 00:03 ? 00:02:02 java -XX:+UnlockExperimentalVMOptions -XX:MaxRAMPercentage=75 -Dlog4j.configurationFile=/home/rundeck/serv

rundeck 127 0 0 00:20 pts/0 00:00:00 /bin/bash

rundeck 176 127 0 00:30 pts/0 00:00:00 ps -ef

rundeck@6a8c46e9bfb9:~$ kill 1

rundeck@6a8c46e9bfb9:~$ builder@builder-T100:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6a8c46e9bfb9 rundeck/rundeck:4.17.1 "/tini -- docker-lib…" 26 minutes ago Up 3 seconds 0.0.0.0:5440->4440/tcp, :::5440->4440/tcp rundeck3

I knew it was using the updated passwords as the default admin:admin no longer worked.

Let’s route some traffic via Kubernetes. I already set an A record for rundeck.freshbrewed.science.

$ cat rundeck.ingress.yaml

apiVersion: v1
kind: Service
metadata:
  name: rundeck-external-ip
spec:
  clusterIP: None
  internalTrafficPolicy: Cluster
  ports:
  - name: rundeckp
    port: 80
    protocol: TCP
    targetPort: 5440
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Endpoints
metadata:
  name: rundeck-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: rundeckp
    port: 5440
    protocol: TCP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: rundeck-external-ip
  labels:
    app.kubernetes.io/instance: rundeckingress
  name: rundeckingress
spec:
  rules:
  - host: rundeck.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: rundeck-external-ip
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - rundeck.freshbrewed.science
    secretName: rundeck-tls

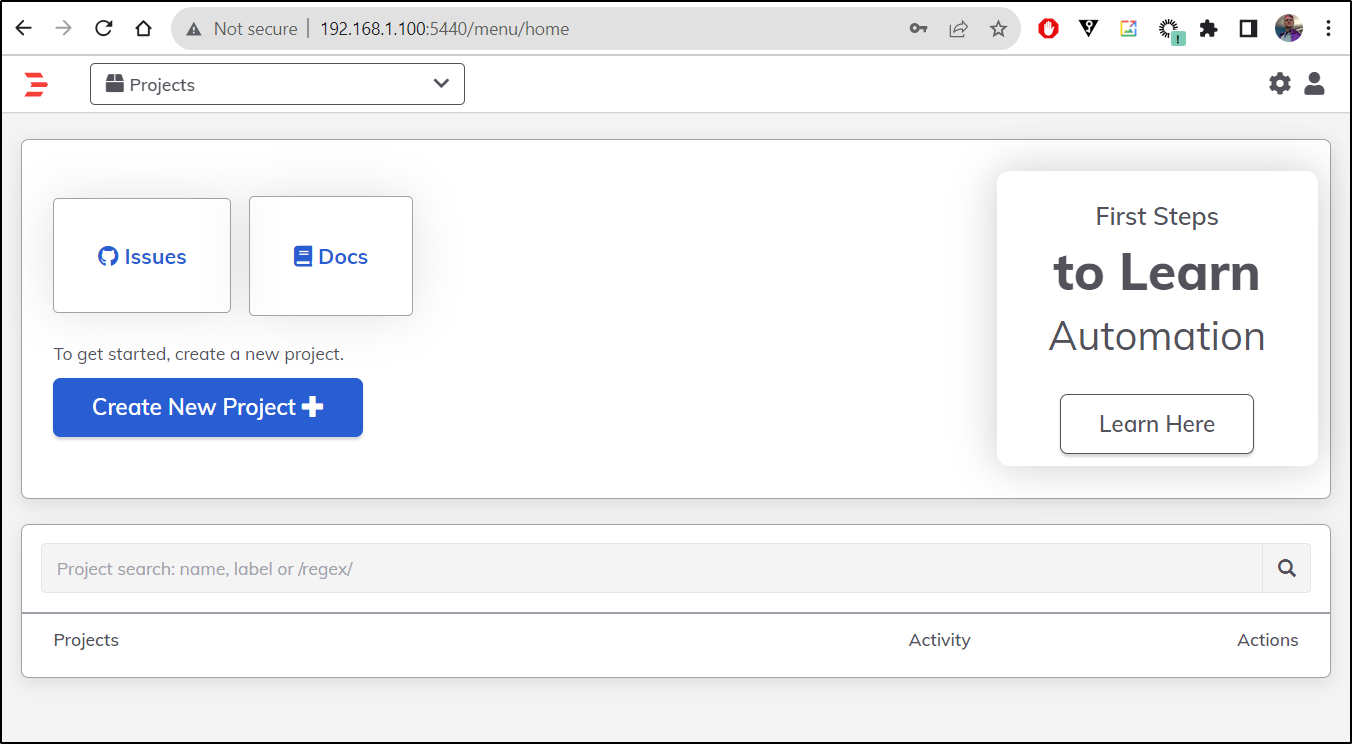

After a moment, it worked

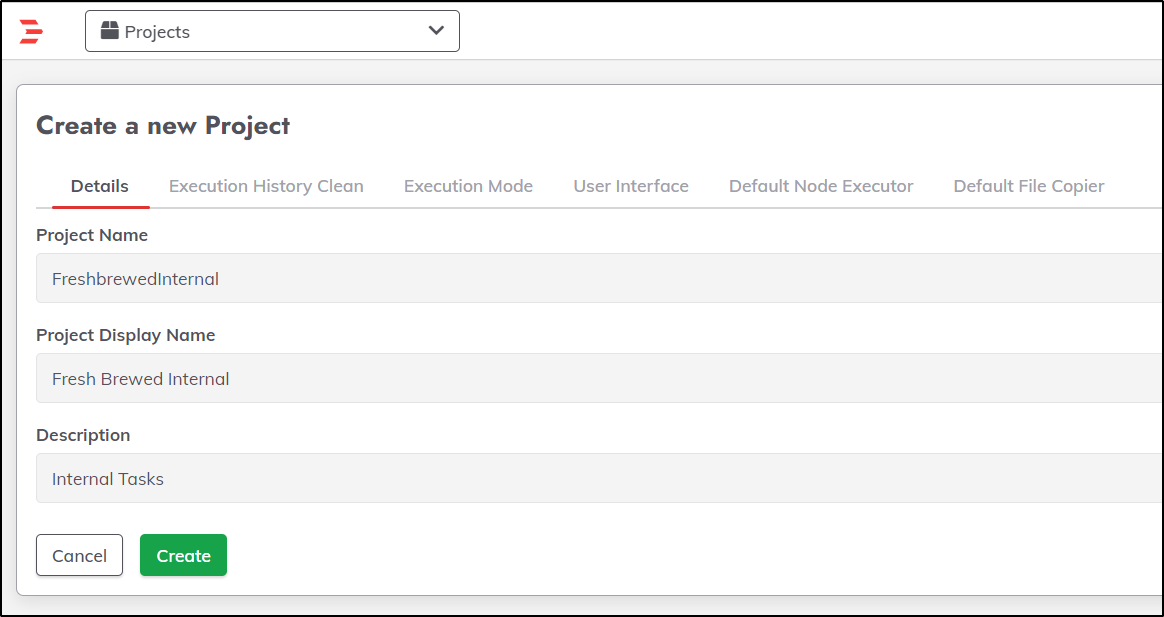

We can now create a project

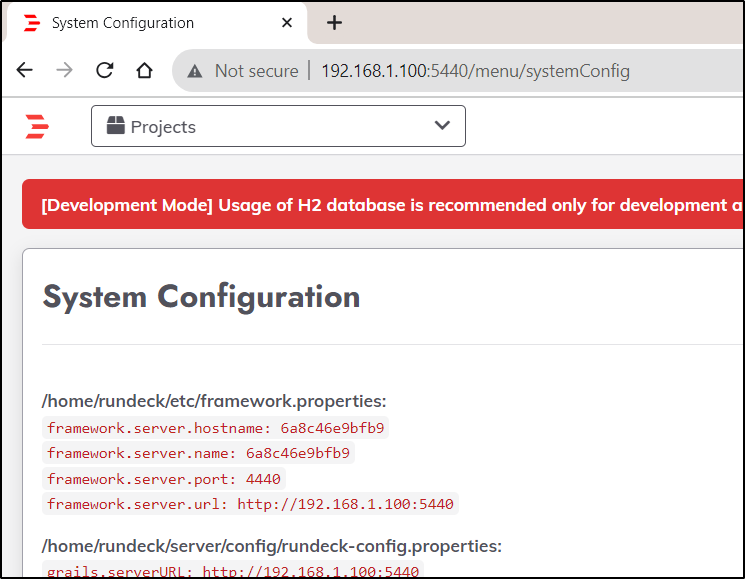

Regardless of what I do, it keeps redirecting to the internal IP over HTTP. I tried editing framework.properties in the container

rundeck@6a8c46e9bfb9:~/etc$ cat framework.properties | sed 's/http:\/\/192.168.1.100:5440/https:\/\/rundeck.freshbrewed.science/g' > framework.properties2

rundeck@6a8c46e9bfb9:~/etc$ mv framework.properties2 framework.properties

I tried a few times, but the change doesn’t stick. I needed to stop the old container and start a new one with RUNDECK_GRAILS_URL set properly

builder@builder-T100:~$ docker volume create rundeck4

rundeck4

builder@builder-T100:~$ docker run -d --restart=always -p 5440:4440 -v rundeck4:/home/rundeck/server/data -e RUNDECK_GRAILS_URL=https://rundeck.freshbrewed.science --name rundeck4 rundeck/rundeck:4.17.1

8ed93813eeca2dc17205825623fcc0fd8af07b62c771acc0fc309a4356af8ca3

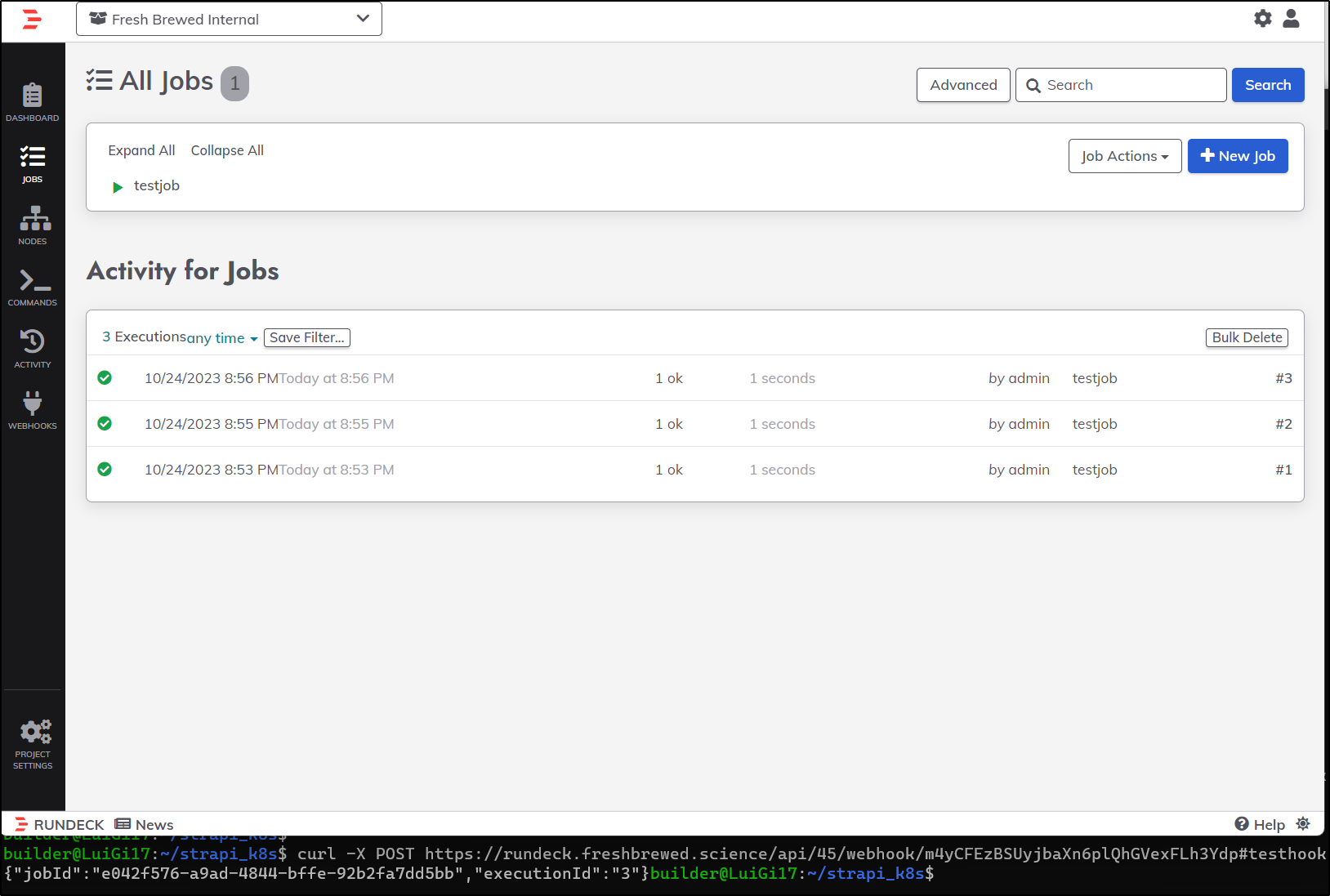

I won’t get into all the ways to use Rundeck. That is likely a project for another day. But I did create a test job that just executes pwd, then a webhook to trigger it, so I could verify that webhooks through the K8s ingress do indeed work

Summary

In today’s writeup we upgraded Harbor Container Registry in place using Helm. We reviewed how to set up regular backups of PostgreSQL in the process, then explored some of the new features in v2.9.0.

Tempting fate, we moved on to upgrading Uptime Kuma from an unknown version back in May 2023 to v1.23.3, which is less than a month old. We then explored a few features including adding Telegram notifications.

Lastly, we jumped into Pagerduty Rundeck (Community OS edition) and upgraded from v3.4.6 up to the latest v4.17.1. I covered how to set up Kubernetes Ingress/Service/Endpoints to front traffic into a containerized system. However, I only touched on using it, demoing a webhook. There are so many new features in Rundeck that I plan to save a full deep dive for a follow-up article.

Thank you for sticking through to the bottom of the post! Hopefully you found some value.