Published: Oct 10, 2023 by Isaac Johnson

Today we will focus on updating a common GitHub Runner container image with the Pulumi and OpenTofu binaries. I use this ghRunnerImage in my SummerWind-based RunnerDeployments hosted in my on-prem Kubernetes cluster. Updating this image lets all of my private runners use OpenTF and Pulumi without downloading and/or compiling them on each run.

In this post we’ll show full Terraform automation pipelines using OpenTF in GitHub workflows with GCP-backed remote state management. We’ll then pivot to Pulumi and run some basic Pulumi deployments on a private GH Runner as well. We’ll wrap up with a multi-stage GitHub workflow that creates a bucket using Pulumi, uses it, then cleans up when done.

Dockerfile updates

Our next goal is to update the GhRunner image to include Pulumi and OpenTF.

One thing I learned is that I needed to use the proper Go build command (go install ./cmd/tofu, not just go build .) for it to work in Docker, as detailed in the OpenTofu Contributing guide.

```diff
builder@LuiGi17:~/Workspaces/jekyll-blog/ghRunnerImage$ git diff
diff --git a/Gemfile b/Gemfile
index c4e16c9..a4d46c6 100644
--- a/Gemfile
+++ b/Gemfile
@@ -10,4 +10,5 @@ group :jekyll_plugins do
   gem "jekyll-sitemap"
   gem "jekyll-paginate"
   gem "jekyll-seo-tag"
-end
\ No newline at end of file
+end
+gem "webrick", "~> 1.8"
diff --git a/ghRunnerImage/Dockerfile b/ghRunnerImage/Dockerfile
index d621cdc..4497fd7 100644
--- a/ghRunnerImage/Dockerfile
+++ b/ghRunnerImage/Dockerfile
@@ -15,8 +15,28 @@ RUN sudo apt update -y \
   && umask 0002 \
   && sudo apt install -y azure-cli awscli ruby-full
+# Install Pulumi
+RUN curl -fsSL https://get.pulumi.com | sh
+
+# Install Homebrew
+RUN /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
+
+# OpenTF
+
+# Install Golang 1.19
+RUN eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)" \
+  && brew install go@1.19
+#echo 'export PATH="/home/linuxbrew/.linuxbrew/opt/go@1.19/bin:$PATH"'
+
+RUN git clone https://github.com/opentofu/opentofu.git /tmp/opentofu \
+  && cd /tmp/opentofu && export PATH="/home/linuxbrew/.linuxbrew/opt/go@1.19/bin:$PATH" \
+  && /home/linuxbrew/.linuxbrew/opt/go@1.19/bin/go install ./cmd/tofu
+
 RUN sudo chown runner /usr/local/bin
+RUN cd $(/home/linuxbrew/.linuxbrew/opt/go@1.19/bin/go env GOPATH) \
+  && cd ./bin && pwd && export && cp ./tofu /usr/local/bin/
+
 RUN sudo chmod 777 /var/lib/gems/2.7.0
 RUN sudo chown runner /var/lib/gems/2.7.0
@@ -37,4 +57,4 @@ RUN umask 0002 \
 RUN sudo rm -rf /var/lib/apt/lists/*
-#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.14
+#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.15
```

That meant my full Dockerfile looked like this:

```dockerfile
$ cat Dockerfile
FROM summerwind/actions-runner:latest

RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y ca-certificates curl apt-transport-https lsb-release gnupg

# Install MS Key
RUN curl -sL https://packages.microsoft.com/keys/microsoft.asc | gpg --dearmor | sudo tee /etc/apt/trusted.gpg.d/microsoft.gpg > /dev/null

# Add MS Apt repo
RUN umask 0002 && echo "deb [arch=amd64] https://packages.microsoft.com/repos/azure-cli/ focal main" | sudo tee /etc/apt/sources.list.d/azure-cli.list

# Install Azure CLI
RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y azure-cli awscli ruby-full

# Install Pulumi
RUN curl -fsSL https://get.pulumi.com | sh

# Install Homebrew
RUN /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

# OpenTF

# Install Golang 1.19
RUN eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)" \
  && brew install go@1.19
#echo 'export PATH="/home/linuxbrew/.linuxbrew/opt/go@1.19/bin:$PATH"'

RUN git clone https://github.com/opentofu/opentofu.git /tmp/opentofu \
  && cd /tmp/opentofu && export PATH="/home/linuxbrew/.linuxbrew/opt/go@1.19/bin:$PATH" \
  && /home/linuxbrew/.linuxbrew/opt/go@1.19/bin/go install ./cmd/tofu

RUN sudo chown runner /usr/local/bin
RUN cd $(/home/linuxbrew/.linuxbrew/opt/go@1.19/bin/go env GOPATH) \
  && cd ./bin && pwd && export && cp ./tofu /usr/local/bin/

RUN sudo chmod 777 /var/lib/gems/2.7.0
RUN sudo chown runner /var/lib/gems/2.7.0

# Install Expect and SSHPass
RUN sudo apt update -y \
  && umask 0002 \
  && sudo apt install -y sshpass expect

# save time per build
RUN umask 0002 \
  && gem install bundler

# Limitations in newer jekyll
RUN umask 0002 \
  && gem install jekyll --version="~> 4.2.0"

RUN sudo rm -rf /var/lib/apt/lists/*

#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.15
```

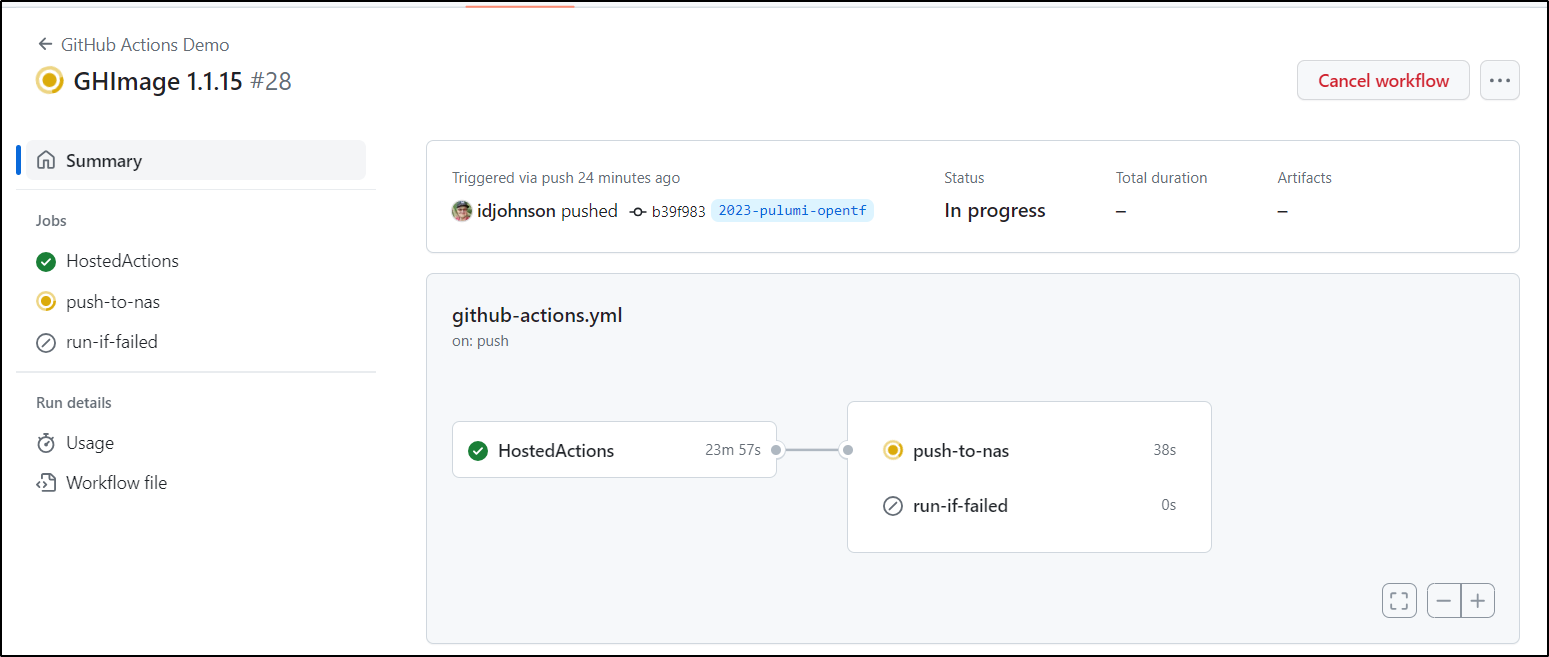

The GitHub build illustrates why this matters: the full build of my GH Runner image takes upwards of 23 minutes. Can you imagine adding that to every CI build?

Once done, I updated my RunnerDeployment to use the new image:

```
builder@LuiGi17:~/Workspaces/jekyll-blog/ghRunnerImage$ kubectl get RunnerDeployment
NAME                          DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
new-jekyllrunner-deployment   5         5         5            5           245d
builder@LuiGi17:~/Workspaces/jekyll-blog/ghRunnerImage$ kubectl edit RunnerDeployment new-jekyllrunner-deployment
runnerdeployment.actions.summerwind.dev/new-jekyllrunner-deployment edited
```

I also dropped it from 5 to 3 standby replicas:

```
new-jekyllrunner-deployment-wt5j7-vr74c   2/2   Running             0                14h
new-jekyllrunner-deployment-wt5j7-t7hfl   2/2   Running             0                12h
new-jekyllrunner-deployment-wt5j7-lhnv9   2/2   Running             0                12h
new-jekyllrunner-deployment-wt5j7-7vx8d   2/2   Running             0                11h
new-jekyllrunner-deployment-wt5j7-cd2zp   2/2   Running             0                4m15s
my-redis-release-redis-cluster-4          0/1   CrashLoopBackOff    246 (3m1s ago)   20h
new-jekyllrunner-deployment-6t44l-w9lt5   0/2   ContainerCreating   0                27s
new-jekyllrunner-deployment-6t44l-h76jl   0/2   ContainerCreating   0                27s
new-jekyllrunner-deployment-6t44l-2h8xd   0/2   ContainerCreating   0                26s
```

Then it rotated out the old pods:

```
builder@LuiGi17:~/Workspaces/jekyll-blog/ghRunnerImage$ kubectl get pods | grep new | grep jekyll
new-jekyllrunner-deployment-wt5j7-vr74c   2/2   Running             0     14h
new-jekyllrunner-deployment-wt5j7-t7hfl   2/2   Running             0     12h
new-jekyllrunner-deployment-wt5j7-lhnv9   2/2   Running             0     12h
new-jekyllrunner-deployment-wt5j7-7vx8d   2/2   Running             0     11h
new-jekyllrunner-deployment-wt5j7-cd2zp   2/2   Running             0     9m35s
new-jekyllrunner-deployment-6t44l-w9lt5   0/2   ContainerCreating   0     5m47s
new-jekyllrunner-deployment-6t44l-h76jl   0/2   ContainerCreating   0     5m47s
new-jekyllrunner-deployment-6t44l-2h8xd   0/2   ContainerCreating   0     5m46s
builder@LuiGi17:~/Workspaces/jekyll-blog/ghRunnerImage$ kubectl get pods | grep new | grep jekyll
new-jekyllrunner-deployment-6t44l-2h8xd   2/2   Running             0     6m56s
new-jekyllrunner-deployment-6t44l-h76jl   2/2   Running             0     6m57s
new-jekyllrunner-deployment-6t44l-w9lt5   2/2   Running             0     6m57s
new-jekyllrunner-deployment-wt5j7-vr74c   2/2   Running             0     14h
new-jekyllrunner-deployment-wt5j7-7vx8d   2/2   Terminating         0     11h
new-jekyllrunner-deployment-wt5j7-lhnv9   2/2   Terminating         0     12h
new-jekyllrunner-deployment-wt5j7-cd2zp   2/2   Terminating         0     10m
new-jekyllrunner-deployment-wt5j7-t7hfl   2/2   Terminating         0     12h
```

I can now see Pulumi and OpenTF on the new pods:

```
builder@LuiGi17:~/Workspaces/jekyll-blog/ghRunnerImage$ kubectl exec -it new-jekyllrunner-deployment-6t44l-w9lt5 -- /bin/bash
Defaulted container "runner" out of: runner, docker
runner@new-jekyllrunner-deployment-6t44l-w9lt5:/$ which tofu
/usr/local/bin/tofu
runner@new-jekyllrunner-deployment-6t44l-w9lt5:/$ tofu version
OpenTofu v1.6.0-dev
on linux_amd64
runner@new-jekyllrunner-deployment-6t44l-w9lt5:/$ /home/runner/.pulumi/bin/pulumi version
v3.86.0
```
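To keep a future image rebuild from silently dropping one of these tools, a smoke check could run as the first step of a job on these runners. This is a minimal sketch, not something from my setup: the function name require_tools is hypothetical, and the usage comment assumes Pulumi is still installed under /home/runner/.pulumi/bin as shown above.

```shell
#!/usr/bin/env bash
# Hypothetical smoke check: report every required CLI that is not on PATH
# and return non-zero if any are missing.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example first step on a runner pod. Pulumi lives in ~/.pulumi/bin,
# which is not on PATH by default in this image:
#   PATH="/home/runner/.pulumi/bin:$PATH"
#   require_tools tofu pulumi && tofu version && pulumi version
```

Checking every tool before returning means a single run reports all missing binaries rather than just the first one.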

Next we need to test.

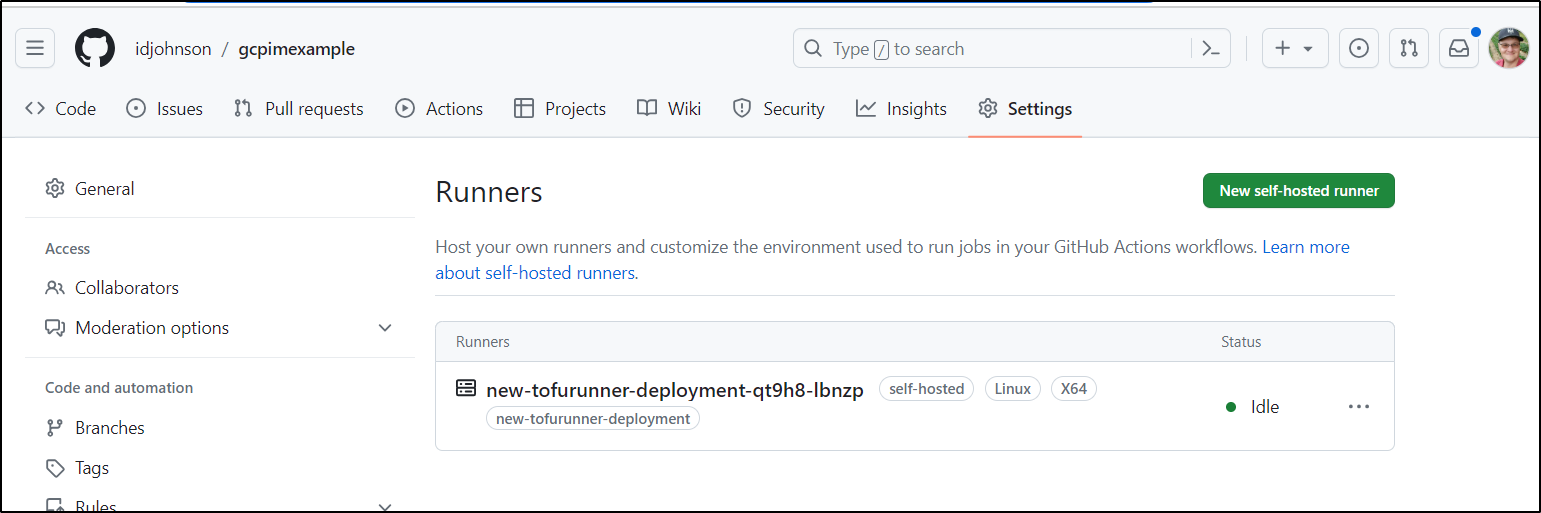

To do that, we’ll add a RunnerDeployment for the same GCP infra repo (idjohnson/gcpimexample):

```
builder@LuiGi17:~/Workspaces/jekyll-blog$ cat new-ghrunner-infra.yaml
apiVersion: v1
items:
- apiVersion: actions.summerwind.dev/v1alpha1
  kind: RunnerDeployment
  metadata:
    name: new-tofurunner-deployment
    namespace: default
  spec:
    effectiveTime: null
    replicas: 1
    selector: null
    template:
      metadata: {}
      spec:
        dockerEnabled: true
        dockerdContainerResources: {}
        env:
        - name: AWS_DEFAULT_REGION
          value: us-east-1
        - name: AWS_ACCESS_KEY_ID
          valueFrom:
            secretKeyRef:
              key: USER_NAME
              name: awsjekyll
        - name: AWS_SECRET_ACCESS_KEY
          valueFrom:
            secretKeyRef:
              key: PASSWORD
              name: awsjekyll
        - name: DATADOG_API_KEY
          valueFrom:
            secretKeyRef:
              key: DDAPIKEY
              name: ddjekyll
        image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.15
        imagePullPolicy: IfNotPresent
        imagePullSecrets:
        - name: myharborreg
        labels:
        - new-tofurunner-deployment
        repository: idjohnson/gcpimexample
        resources: {}
kind: List
metadata:
  resourceVersion: ""
builder@LuiGi17:~/Workspaces/jekyll-blog$ kubectl apply -f new-ghrunner-infra.yaml
runnerdeployment.actions.summerwind.dev/new-tofurunner-deployment created
```

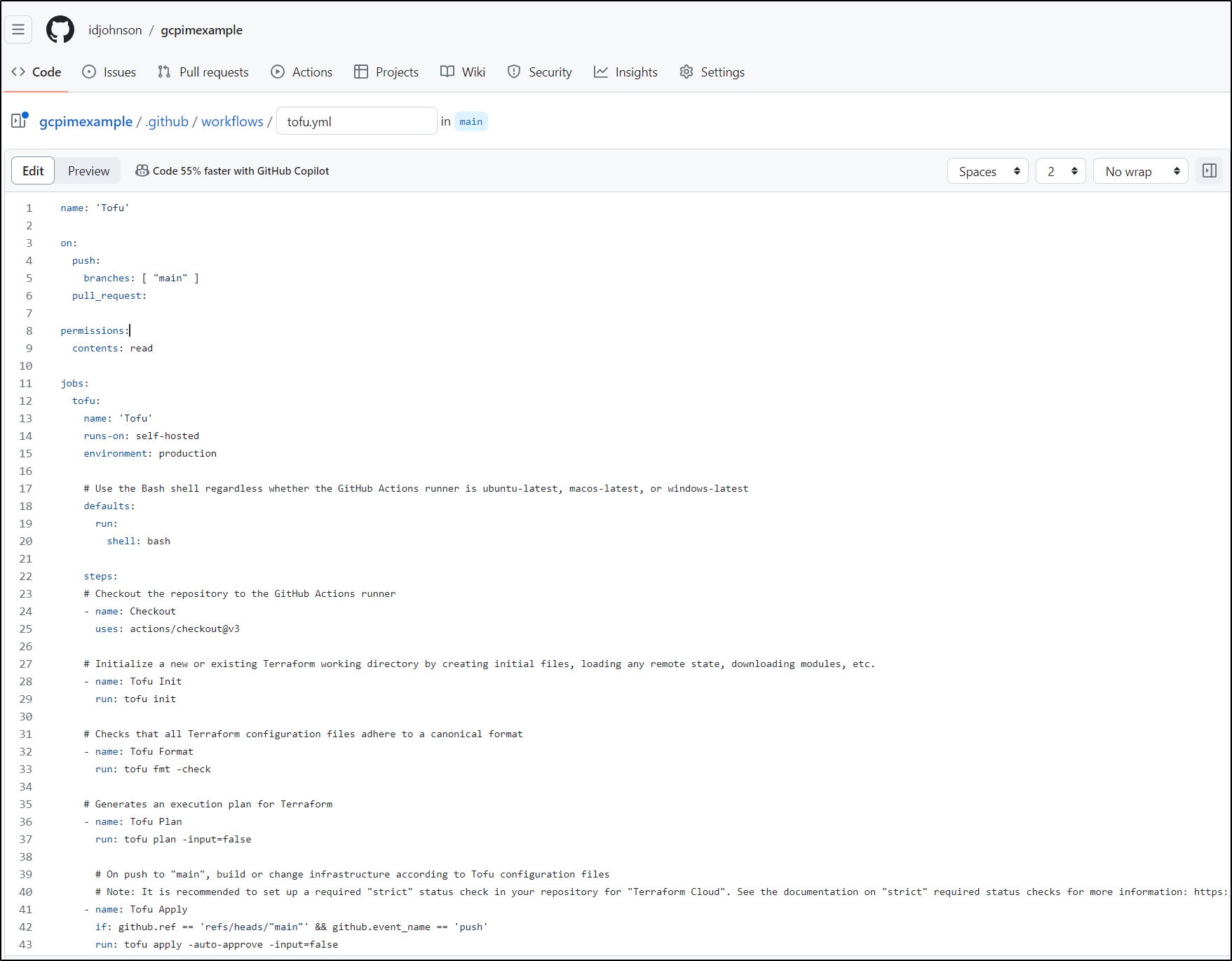

Next, I’ll create a workflow to use it. It’s largely based on the “Terraform” quick start, though I use the self-hosted pool:

```yaml
name: 'Tofu'

on:
  push:
    branches: [ "main" ]
  pull_request:

permissions:
  contents: read

jobs:
  tofu:
    name: 'Tofu'
    runs-on: self-hosted
    environment: production

    # Use the Bash shell regardless whether the GitHub Actions runner is ubuntu-latest, macos-latest, or windows-latest
    defaults:
      run:
        shell: bash

    steps:
    # Checkout the repository to the GitHub Actions runner
    - name: Checkout
      uses: actions/checkout@v3

    # Initialize a new or existing Terraform working directory by creating initial files, loading any remote state, downloading modules, etc.
    - name: Tofu Init
      run: tofu init

    # Checks that all Terraform configuration files adhere to a canonical format
    - name: Tofu Format
      run: tofu fmt -check

    # Generates an execution plan for Terraform
    - name: Tofu Plan
      run: tofu plan -input=false

    # On push to "main", build or change infrastructure according to Tofu configuration files
    # Note: It is recommended to set up a required "strict" status check in your repository for "Terraform Cloud". See the documentation on "strict" required status checks for more information: https://help.github.com/en/github/administering-a-repository/types-of-required-status-checks
    - name: Tofu Apply
      if: github.ref == 'refs/heads/main' && github.event_name == 'push'
      run: tofu apply -auto-approve -input=false
```
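The intent of the if: expression on the Tofu Apply step is to run apply only on pushes to main, so pull requests stop at plan. Note that the ref has to be compared against refs/heads/main with no embedded quotes; a value like refs/heads/"main" never matches, so apply would silently be skipped. Here is a sketch of the same gate in shell, using the GITHUB_REF and GITHUB_EVENT_NAME variables GitHub Actions sets in every step (the helper name should_apply is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the Tofu Apply gate:
#   github.ref == 'refs/heads/main' && github.event_name == 'push'
# Arguments override the GITHUB_REF / GITHUB_EVENT_NAME defaults.
should_apply() {
  local ref="${1:-$GITHUB_REF}"
  local event="${2:-$GITHUB_EVENT_NAME}"
  [ "$ref" = "refs/heads/main" ] && [ "$event" = "push" ]
}

# Example step body:
#   if should_apply; then tofu apply -auto-approve -input=false; fi
```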

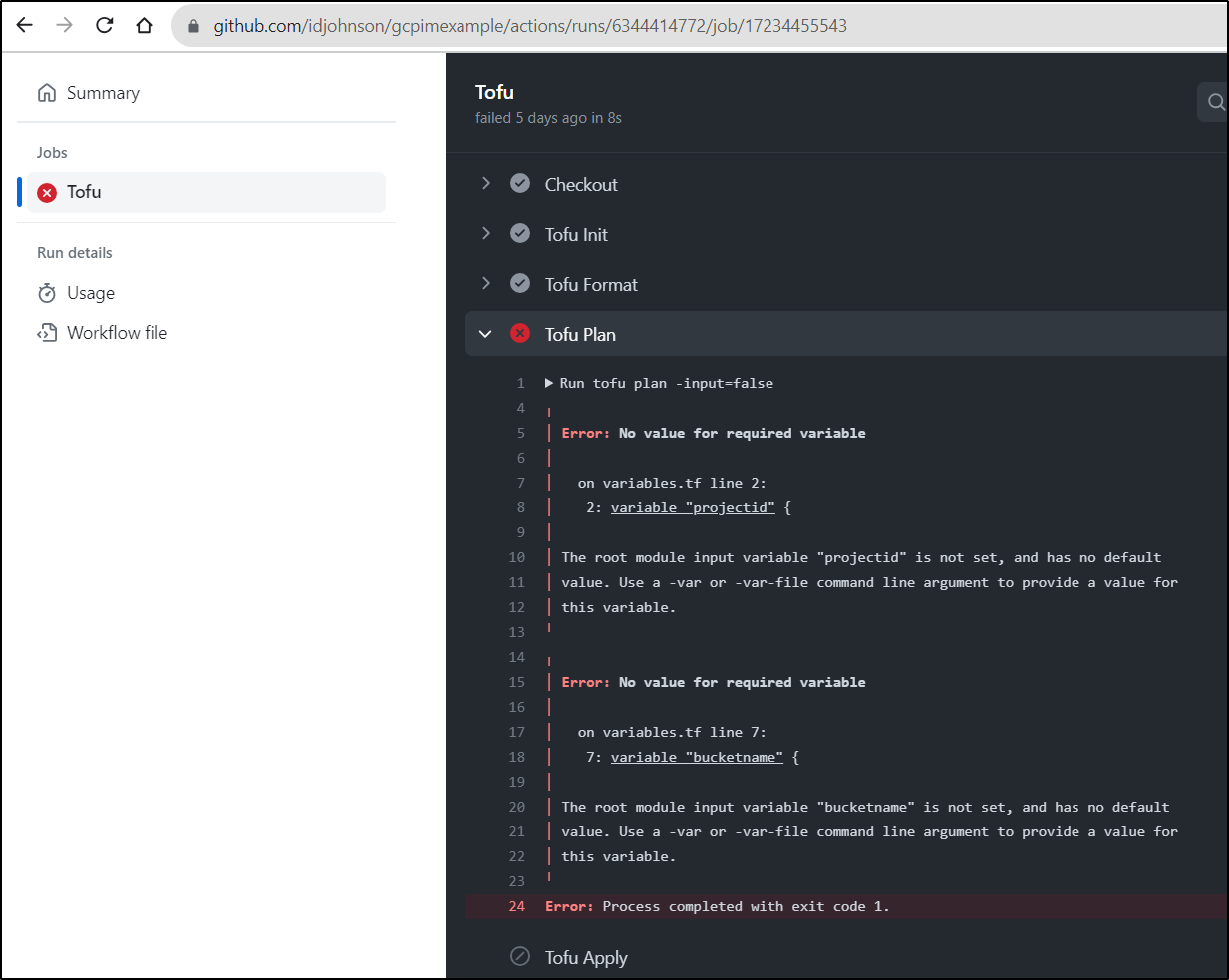

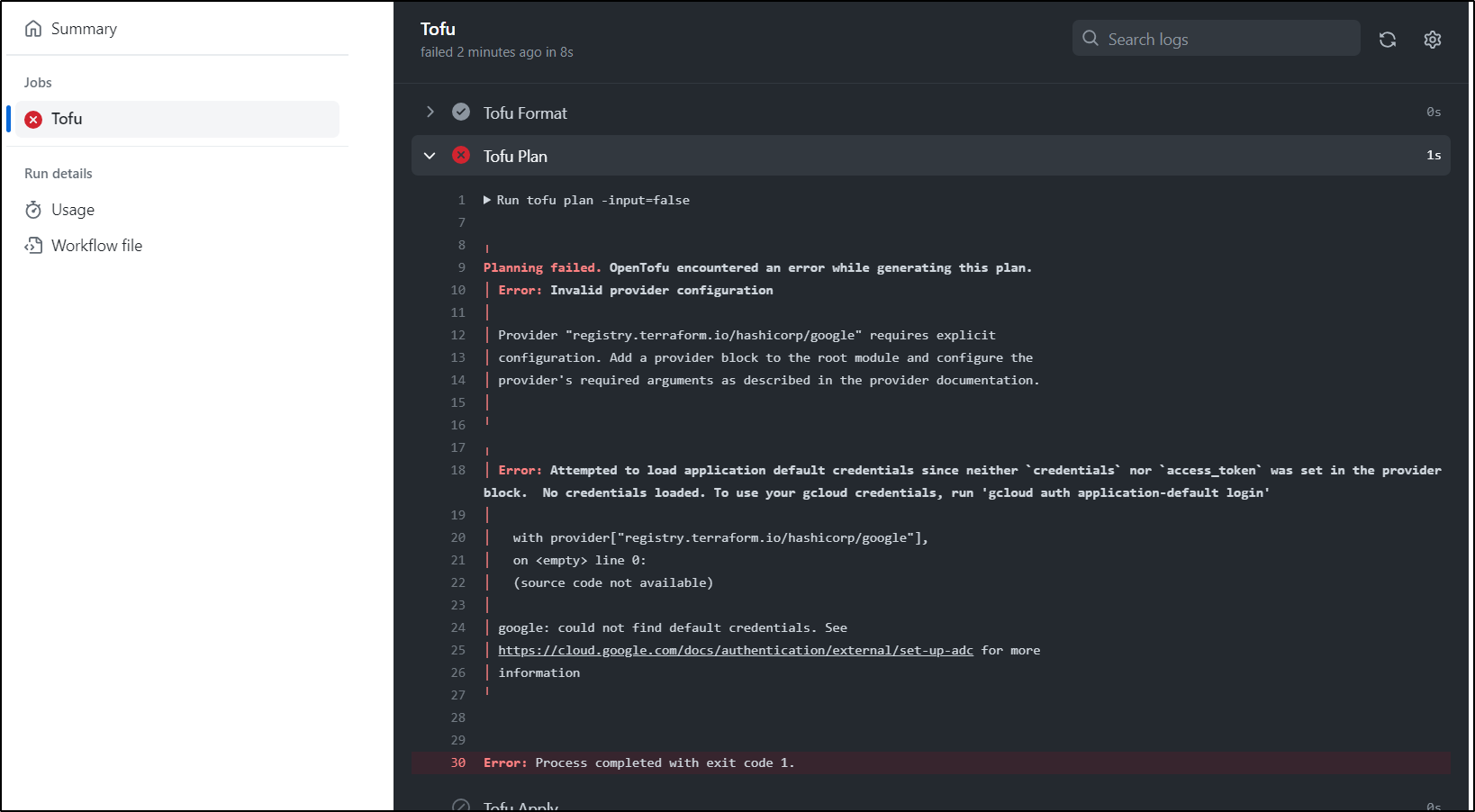

Running it as-is showed that I had neglected to set some variables.

I’ll add some env vars at the top, though I imagine I’ll need to solve GCP auth next:

```diff
$ git diff .github/workflows/tofu.yml
diff --git a/.github/workflows/tofu.yml b/.github/workflows/tofu.yml
index 47df18e..9075165 100644
--- a/.github/workflows/tofu.yml
+++ b/.github/workflows/tofu.yml
@@ -8,6 +8,10 @@ on:
 permissions:
   contents: read
 
+env:
+  TF_VAR_projectid: myanthosproject2
+  TF_VAR_bucketname: mytestbucket223344
+
 jobs:
   tofu:
     name: 'Tofu'
```
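OpenTofu, like Terraform, reads any environment variable named TF_VAR_&lt;name&gt; as the value of input variable &lt;name&gt;, so TF_VAR_projectid feeds var.projectid. A quick way to see which inputs a job will supply is sketched below (list_tf_vars is a hypothetical helper; it assumes values do not span multiple lines):

```shell
#!/usr/bin/env bash
# Print the input-variable names supplied through TF_VAR_* environment variables,
# e.g. TF_VAR_projectid=myanthosproject2 -> projectid
list_tf_vars() {
  env | sed -n 's/^TF_VAR_\([^=]*\)=.*/\1/p' | sort
}

# Example debug step before "tofu plan":
#   list_tf_vars
```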

I want to use OIDC auth so I needn’t create and manage another service account (SA) JSON key file.

To use the existing Workload Identity Federation (WIF) pool, I need to add this repo to the list of authorized principals:

```
$ gcloud iam service-accounts add-iam-policy-binding test-wif@myanthosproject2.iam.gserviceaccount.com --project=myanthosproject2 --role="roles/iam.workloadIdentityUser" --member="principalSet://iam.googleapis.com/projects/511842454269/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/idjohnson/gcpimexample"
Updated IAM policy for serviceAccount [test-wif@myanthosproject2.iam.gserviceaccount.com].
bindings:
- members:
  - principalSet://iam.googleapis.com/projects/511842454269/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/idjohnson/gcpimexample
  - principalSet://iam.googleapis.com/projects/511842454269/locations/global/workloadIdentityPools/github-wif-pool/attribute.repository/idjohnson/secretAccessor
  role: roles/iam.workloadIdentityUser
etag: BwYG2Hi9mwM=
version: 1
```
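The --member value follows a fixed pattern: the project number, the pool ID, then the attribute.repository value (owner/repo). A small sketch that assembles it makes adding the next repo less error-prone. The helper name wif_repo_principal is hypothetical; the project number, pool, and repo in the example are the ones used above.

```shell
#!/usr/bin/env bash
# Build the principalSet URI that lets one GitHub repo impersonate an SA through a WIF pool.
# Usage: wif_repo_principal PROJECT_NUMBER POOL_ID OWNER/REPO
wif_repo_principal() {
  local project_number="$1" pool_id="$2" repo="$3"
  printf 'principalSet://iam.googleapis.com/projects/%s/locations/global/workloadIdentityPools/%s/attribute.repository/%s\n' \
    "$project_number" "$pool_id" "$repo"
}

# Example:
#   gcloud iam service-accounts add-iam-policy-binding test-wif@myanthosproject2.iam.gserviceaccount.com \
#     --project=myanthosproject2 --role="roles/iam.workloadIdentityUser" \
#     --member="$(wif_repo_principal 511842454269 github-wif-pool idjohnson/gcpimexample)"
```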

After adding the id-token: write permission to the job, I could set up the pipeline to use OIDC auth:

```yaml
name: 'Tofu'

on:
  push:
    branches: [ "main" ]
  pull_request:

permissions:
  contents: read

env:
  TF_VAR_projectid: myanthosproject2
  TF_VAR_bucketname: mytestbucket223344

jobs:
  tofu:
    name: 'Tofu'
    runs-on: self-hosted
    environment: production
    permissions:
      contents: 'read'
      id-token: 'write'

    # Use the Bash shell regardless whether the GitHub Actions runner is ubuntu-latest, macos-latest, or windows-latest
    defaults:
      run:
        shell: bash

    steps:
    # Checkout the repository to the GitHub Actions runner
    - name: Checkout
      uses: actions/checkout@v3

    - id: auth
      uses: google-github-actions/auth@v1
      with:
        token_format: "access_token"
        create_credentials_file: true
        activate_credentials_file: true
        workload_identity_provider: 'projects/511842454269/locations/global/workloadIdentityPools/github-wif-pool/providers/githubwif'
        service_account: 'test-wif@myanthosproject2.iam.gserviceaccount.com'
        access_token_lifetime: '100s'

    # Initialize a new or existing Terraform working directory by creating initial files, loading any remote state, downloading modules, etc.
    - name: Tofu Init
      run: tofu init

    # Checks that all Terraform configuration files adhere to a canonical format
    - name: Tofu Format
      run: tofu fmt -check

    # TF Validate
    - name: Tofu Validate
      run: tofu validate

    # Generates an execution plan for Terraform
    - name: Tofu Plan
      run: tofu plan -input=false

    # On push to "main", build or change infrastructure according to Tofu configuration files
    # Note: It is recommended to set up a required "strict" status check in your repository for "Terraform Cloud". See the documentation on "strict" required status checks for more information: https://help.github.com/en/github/administering-a-repository/types-of-required-status-checks
    - name: Tofu Apply
      if: github.ref == 'refs/heads/main' && github.event_name == 'push'
      run: tofu apply -auto-approve -input=false
```

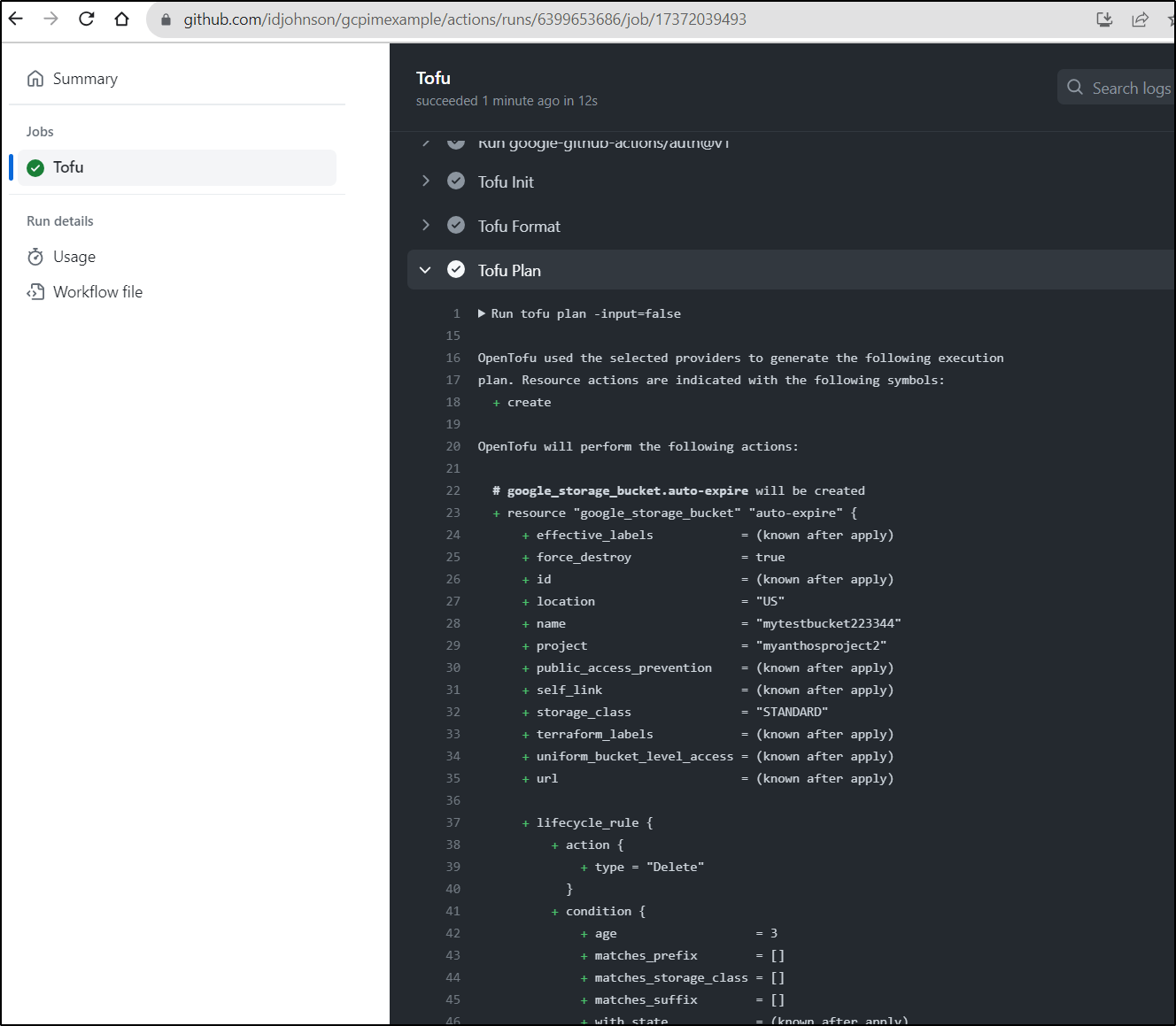

We can now see OpenTofu do a proper plan in the pipeline.

Let’s put it together.

Flow

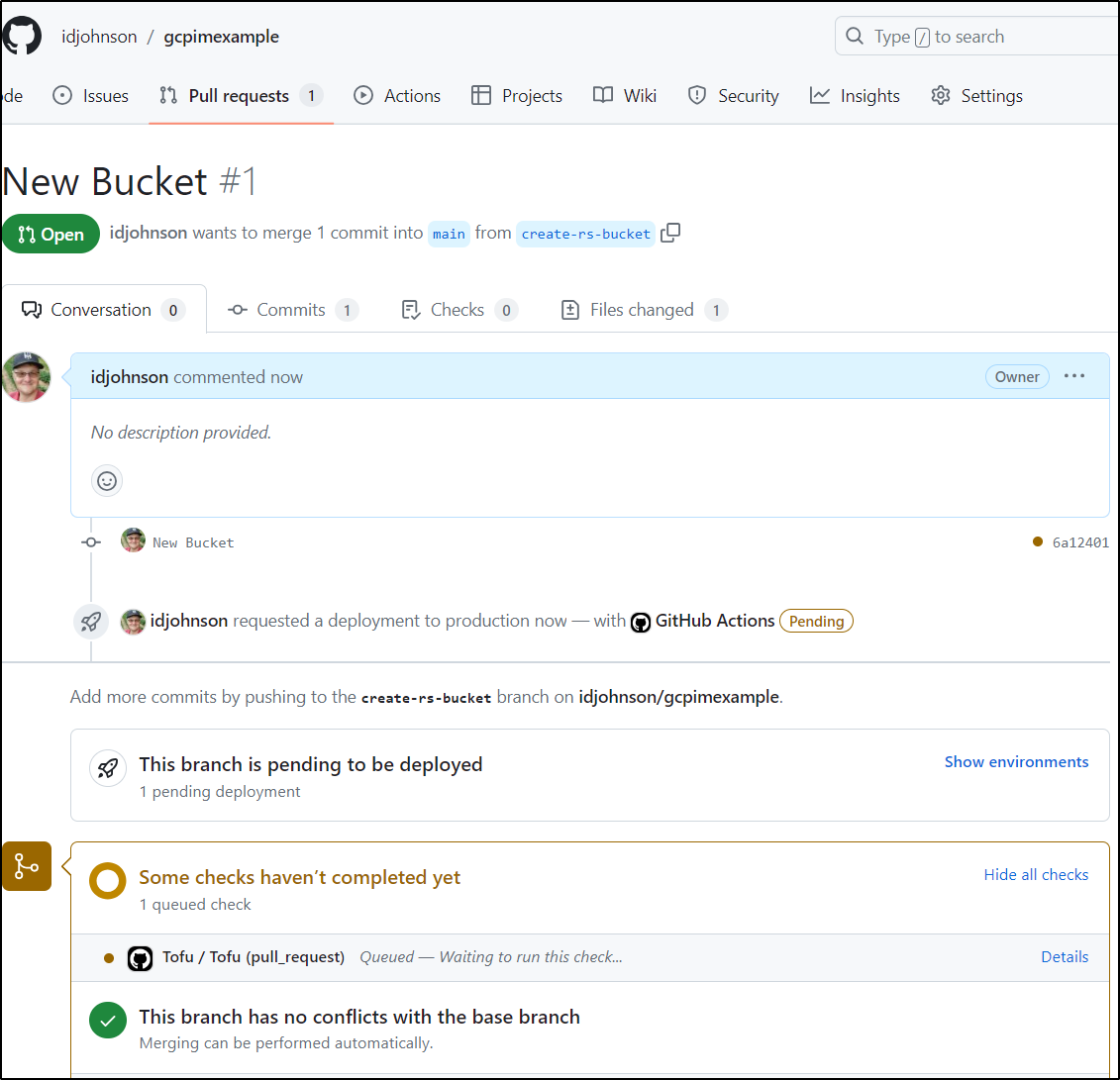

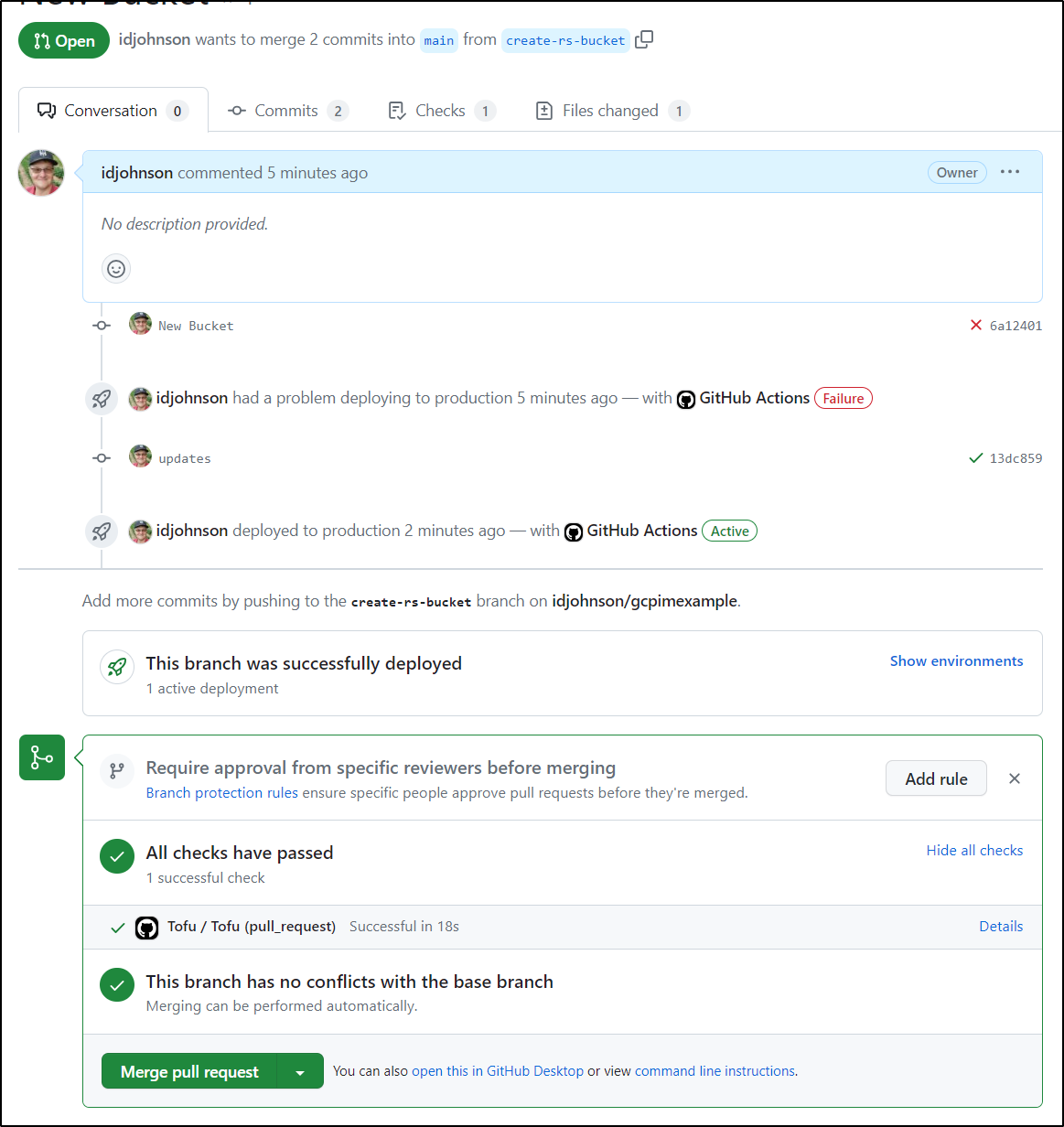

Let’s do a PR, assuming we want to add a bucket for remote state management.

I’ll add that in a new branch and push it:

```
builder@LuiGi17:~/Workspaces/gcpimexample$ git checkout -b create-rs-bucket
Switched to a new branch 'create-rs-bucket'
builder@LuiGi17:~/Workspaces/gcpimexample$ git add buckets.tf
builder@LuiGi17:~/Workspaces/gcpimexample$ git commit -m "New Bucket"
[create-rs-bucket 6a12401] New Bucket
 1 file changed, 20 insertions(+)
builder@LuiGi17:~/Workspaces/gcpimexample$ git push
fatal: The current branch create-rs-bucket has no upstream branch.
To push the current branch and set the remote as upstream, use

    git push --set-upstream origin create-rs-bucket

builder@LuiGi17:~/Workspaces/gcpimexample$ git push --set-upstream origin create-rs-bucket
Enumerating objects: 5, done.
Counting objects: 100% (5/5), done.
Delta compression using up to 16 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (3/3), 635 bytes | 635.00 KiB/s, done.
Total 3 (delta 1), reused 0 (delta 0), pack-reused 0
remote: Resolving deltas: 100% (1/1), completed with 1 local object.
remote:
remote: Create a pull request for 'create-rs-bucket' on GitHub by visiting:
remote:      https://github.com/idjohnson/gcpimexample/pull/new/create-rs-bucket
remote:
To https://github.com/idjohnson/gcpimexample.git
 * [new branch]      create-rs-bucket -> create-rs-bucket
Branch 'create-rs-bucket' set up to track remote branch 'create-rs-bucket' from 'origin'.
builder@LuiGi17:~/Workspaces/gcpimexample$
```

I can now create a PR and see the workflow kick in.

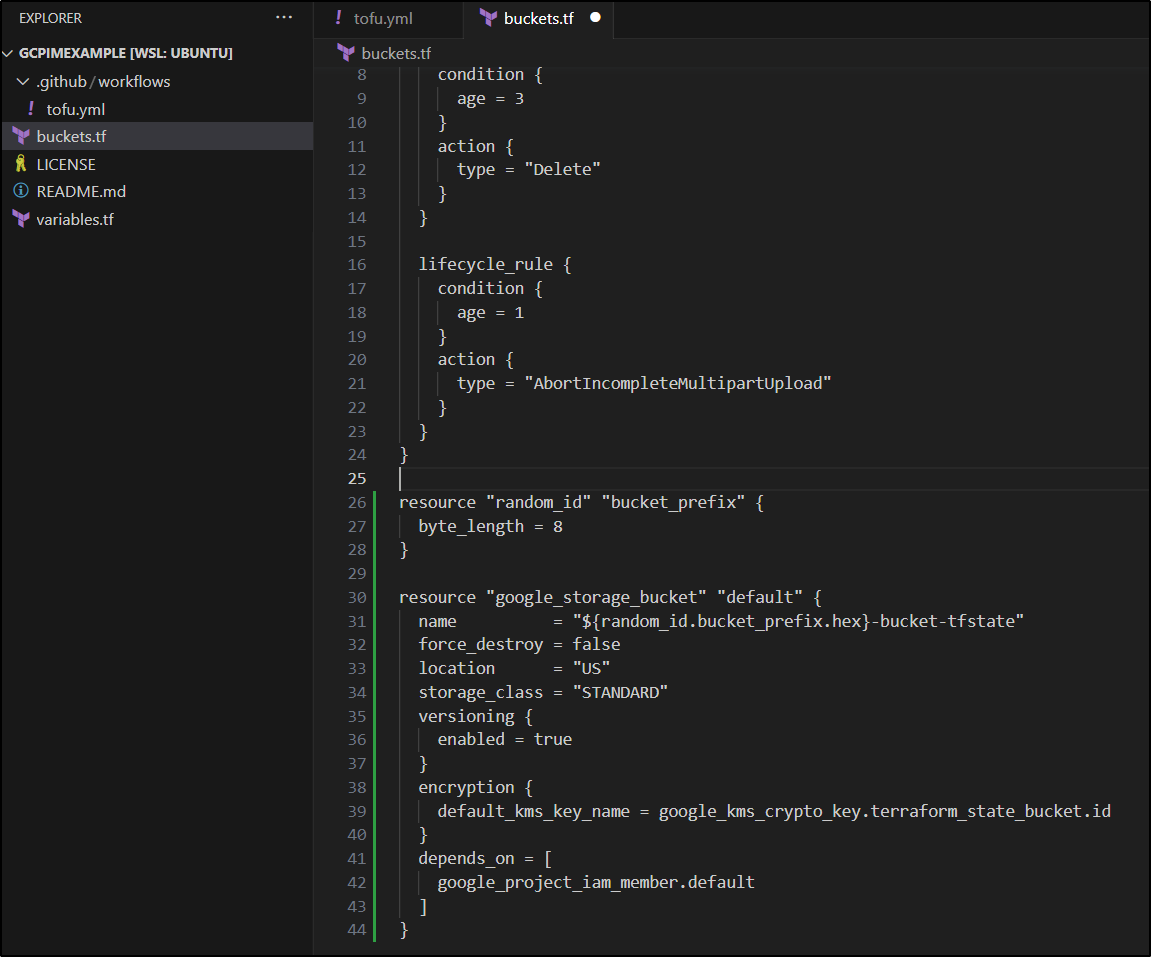

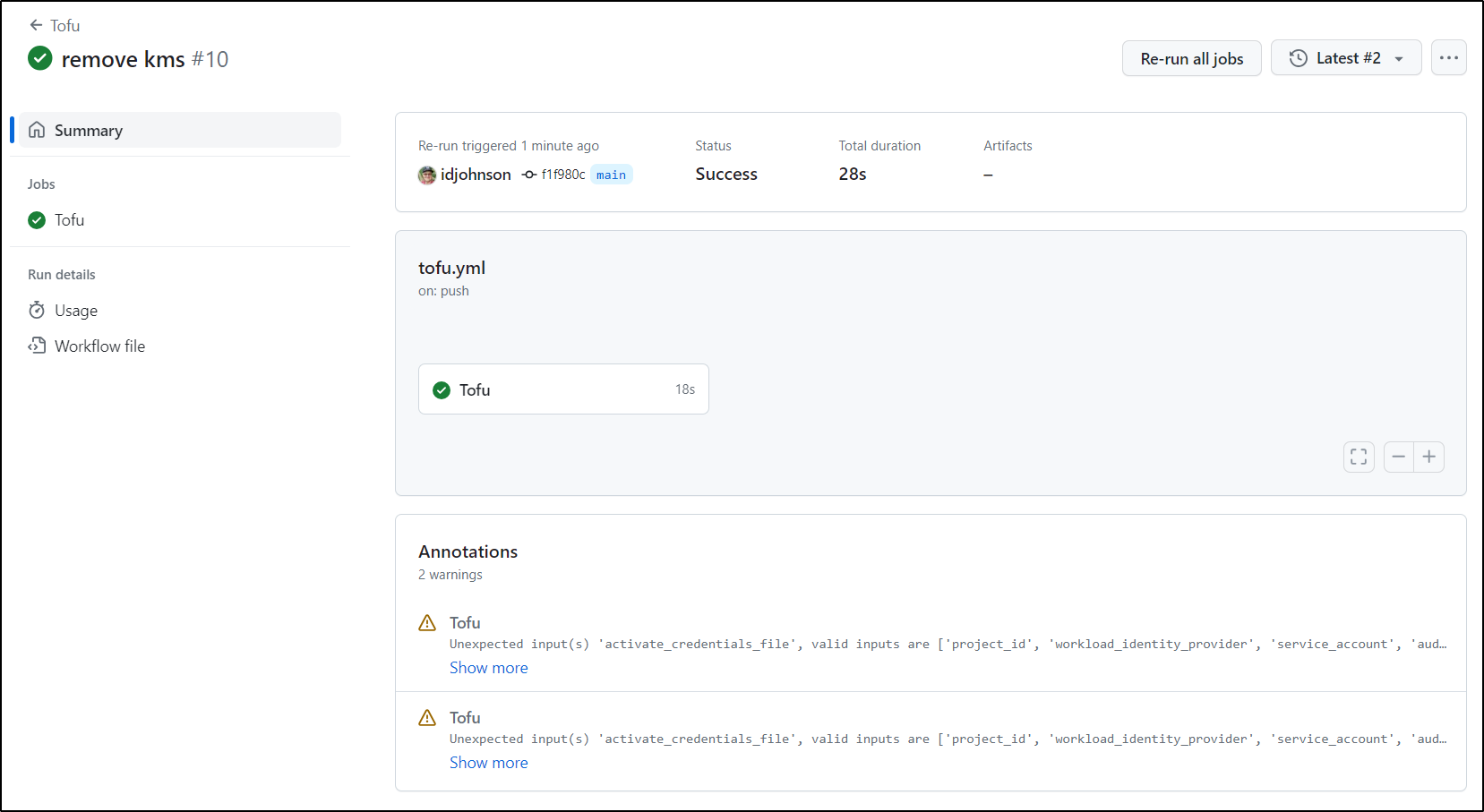

Once I fixed the TF error, the PR checks passed.

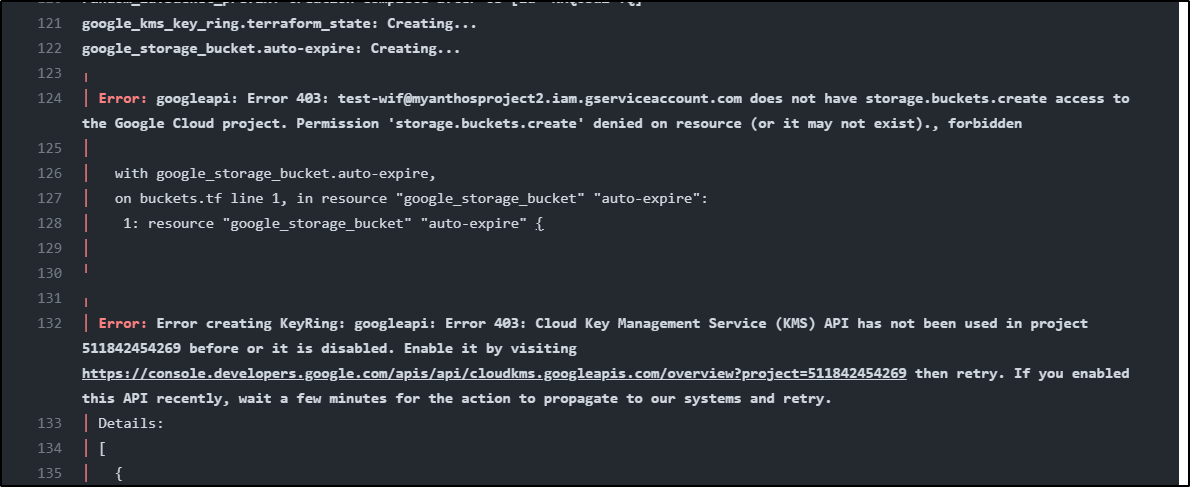

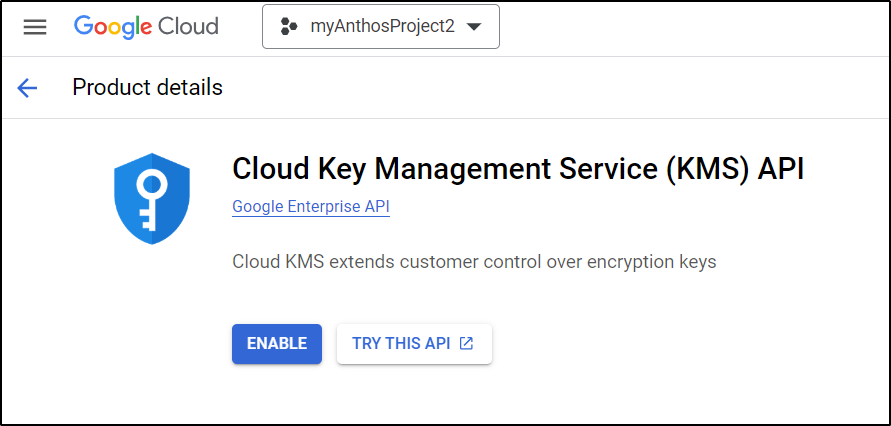

Two issues came up during the apply. The first was that I hadn’t enabled the KMS API yet. The second was that the SA did not have permission to create buckets.

I first enabled KMS.

Then I granted the SA the storage.admin role:

```
$ gcloud projects add-iam-policy-binding myanthosproject2 --member='serviceAccount:test-wif@myanthosproject2.iam.gserviceaccount.com' --role="roles/storage.admin"
Updated IAM policy for project [myanthosproject2].
bindings:
- members:
... snip...
```

The thing is, the moment I enabled KMS, I noticed it’s $3.00 a key. Why would I piss away 3 bucks on a damn key?

So I yanked that nonsense out.

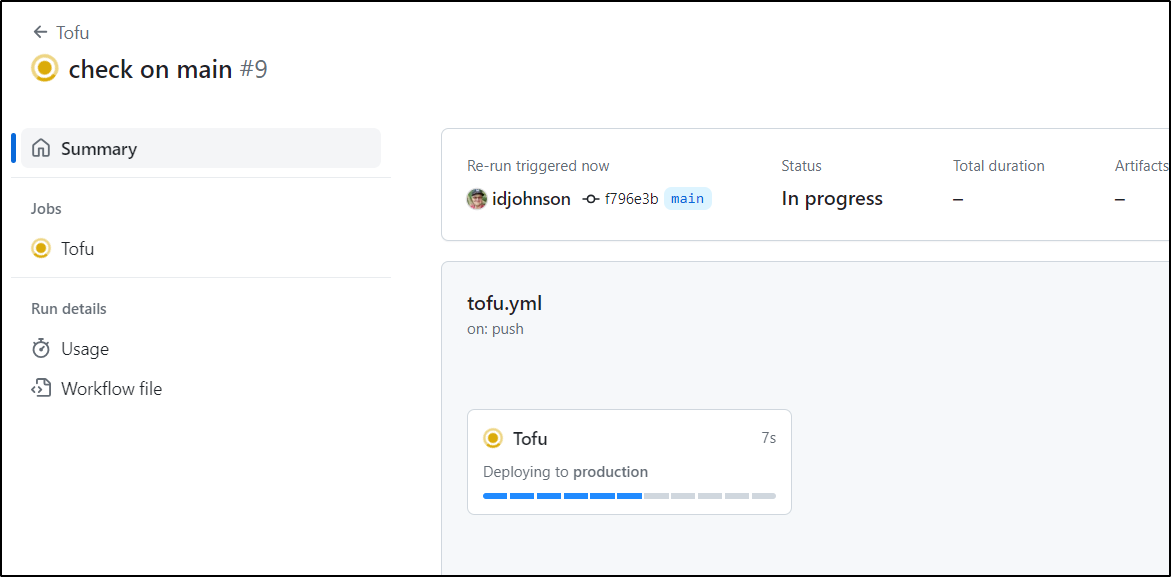

I pushed a change and saw it apply, with buckets.tf as:

```hcl
resource "google_storage_bucket" "auto-expire" {
  name          = var.bucketname
  location      = "US"
  project       = var.projectid
  force_destroy = true

  lifecycle_rule {
    condition {
      age = 3
    }
    action {
      type = "Delete"
    }
  }

  lifecycle_rule {
    condition {
      age = 1
    }
    action {
      type = "AbortIncompleteMultipartUpload"
    }
  }
}

resource "random_id" "bucket_prefix" {
  byte_length = 8
}

resource "google_storage_bucket" "default" {
  name          = "${random_id.bucket_prefix.hex}-bucket-tfstate"
  force_destroy = false
  location      = "US"
  storage_class = "STANDARD"
  versioning {
    enabled = true
  }
}
```
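The random_id resource with byte_length = 8 produces 8 random bytes, and its hex attribute renders them as 16 hex characters, which is why the state bucket ends up with a name like 89a7d01ff0ab3462-bucket-tfstate. If you ever need a similarly shaped name by hand, a rough shell equivalent is sketched below. It is an assumption-laden stand-in that reads /dev/urandom via od; it is not how OpenTofu generates the value internally, and random_state_bucket_name is a hypothetical name.

```shell
#!/usr/bin/env bash
# Generate a name shaped like "${random_id.bucket_prefix.hex}-bucket-tfstate":
# 8 random bytes rendered as 16 lowercase hex characters.
random_state_bucket_name() {
  local hex
  hex="$(od -An -N8 -tx1 /dev/urandom | tr -d ' \n')"
  printf '%s-bucket-tfstate\n' "$hex"
}
```

At 31 characters, the result sits comfortably within GCS’s 63-character bucket name limit.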

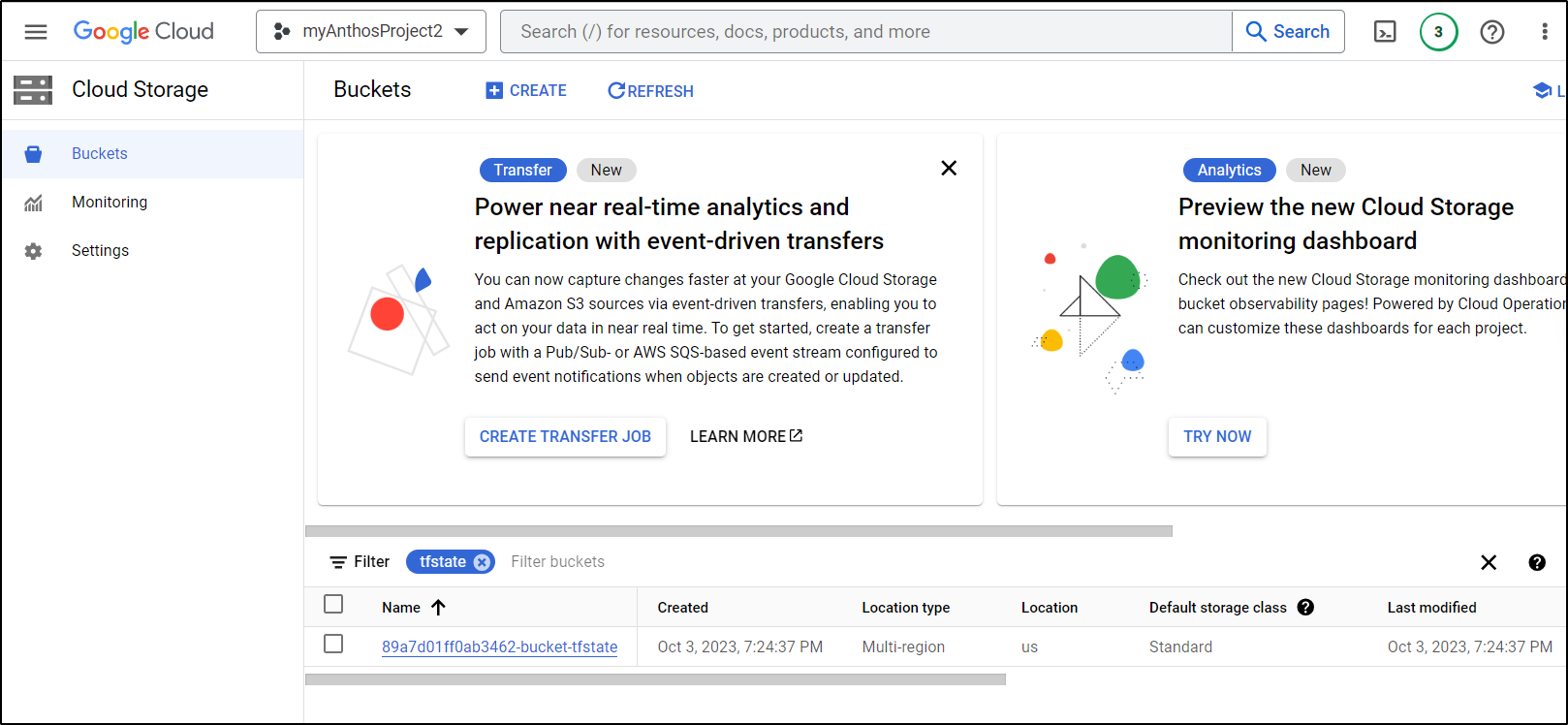

And in the Console, I can verify it was created:

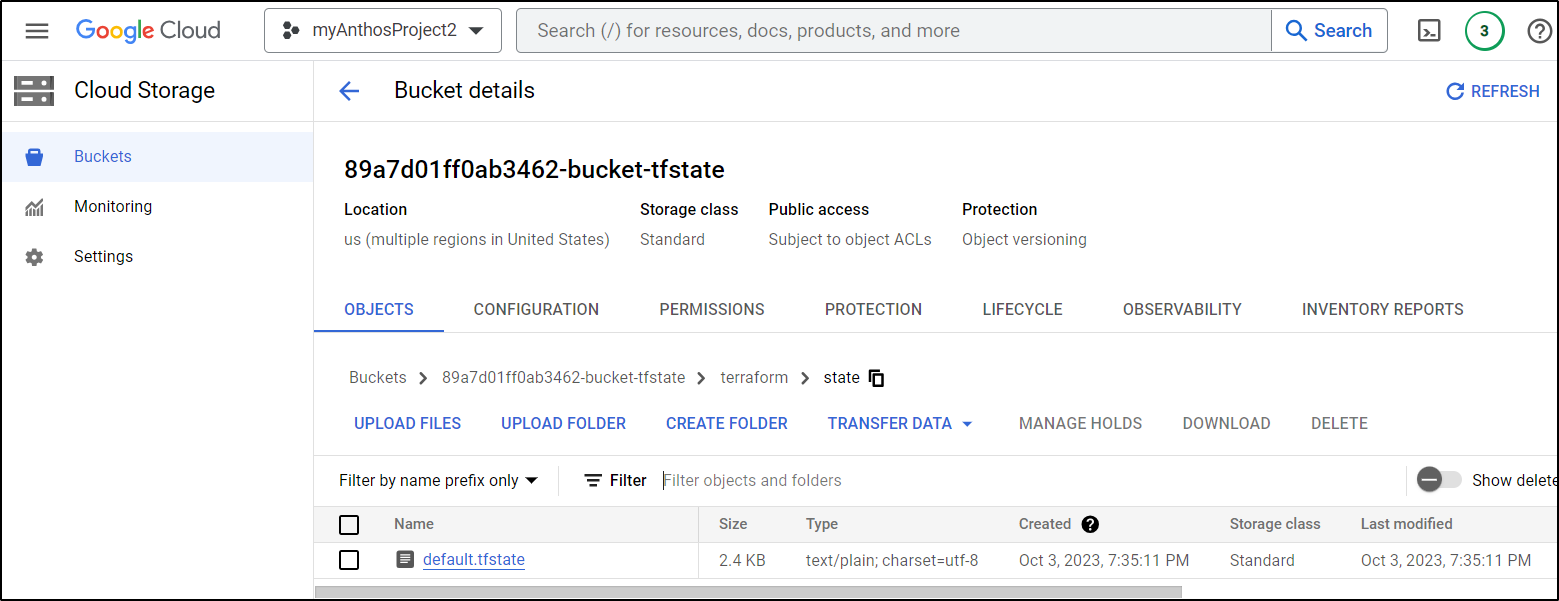

I’ll now add a backend.tf file to use the bucket:

```
builder@LuiGi17:~/Workspaces/gcpimexample$ cat backend.tf
terraform {
backend "gcs" {
bucket = "89a7d01ff0ab3462-bucket-tfstate"
prefix = "terraform/state"
}
}builder@LuiGi17:~/Workspaces/gcpimexample$ git add backend.tf
builder@LuiGi17:~/Workspaces/gcpimexample$ git commit -m "Add backend to use bucket we created"
[main e2a4497] Add backend to use bucket we created
 1 file changed, 6 insertions(+)
 create mode 100644 backend.tf
builder@LuiGi17:~/Workspaces/gcpimexample$ git push
Enumerating objects: 4, done.
Counting objects: 100% (4/4), done.
Delta compression using up to 16 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (3/3), 373 bytes | 373.00 KiB/s, done.
Total 3 (delta 1), reused 0 (delta 0), pack-reused 0
remote: Resolving deltas: 100% (1/1), completed with 1 local object.
To https://github.com/idjohnson/gcpimexample.git
   f1f980c..e2a4497  main -> main
```

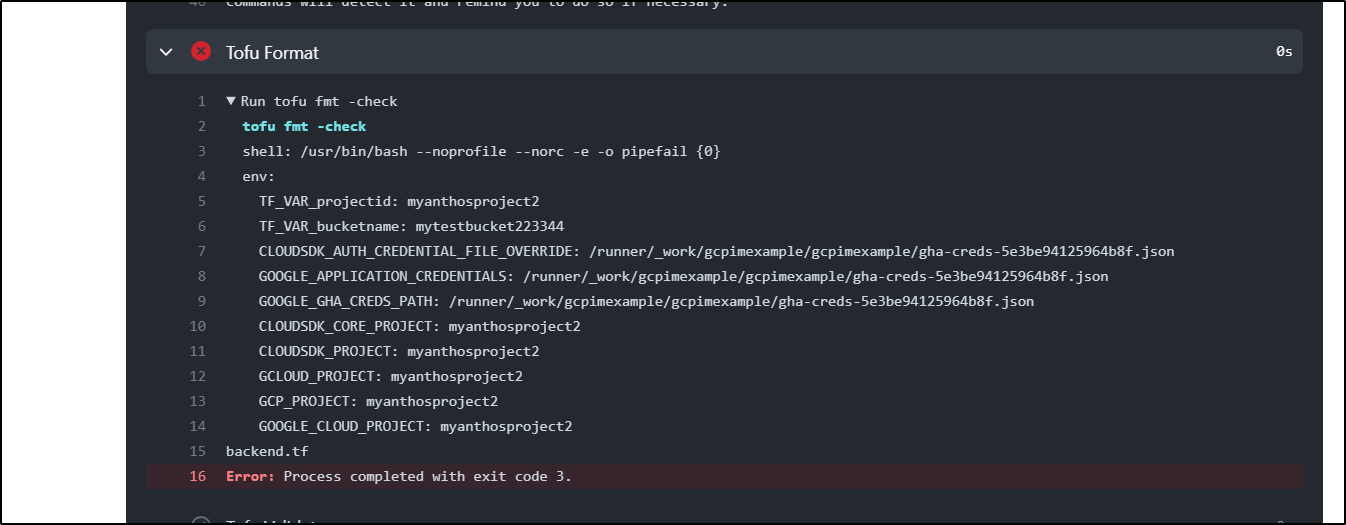

Even though I was skipping the PR process, tofu fmt -check caught improper formatting in backend.tf.

Once I corrected it:

```diff
git diff backend.tf
diff --git a/backend.tf b/backend.tf
index 993b02e..0ea3a66 100644
--- a/backend.tf
+++ b/backend.tf
@@ -1,6 +1,6 @@
 terraform {
-backend "gcs" {
-bucket = "89a7d01ff0ab3462-bucket-tfstate"
-prefix = "terraform/state"
-}
+  backend "gcs" {
+    bucket = "89a7d01ff0ab3462-bucket-tfstate"
+    prefix = "terraform/state"
+  }
 }
\ No newline at end of file
```

Then I could push, and I now have a proper state file in GCP.
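With the gcs backend, OpenTofu stores each workspace’s state as an object named &lt;prefix&gt;/&lt;workspace&gt;.tfstate inside the bucket. So with prefix = "terraform/state" and the default workspace, the state should land at the path built below. This is a sketch: state_object_url is a hypothetical helper, and the commented gsutil call assumes your local gcloud credentials can read the bucket.

```shell
#!/usr/bin/env bash
# Build the gs:// URL of the state object written by the gcs backend.
# Usage: state_object_url BUCKET PREFIX [WORKSPACE]   (WORKSPACE defaults to "default")
state_object_url() {
  local bucket="$1" prefix="${2%/}" workspace="${3:-default}"
  printf 'gs://%s/%s/%s.tfstate\n' "$bucket" "$prefix" "$workspace"
}

# Example:
#   gsutil ls "$(state_object_url 89a7d01ff0ab3462-bucket-tfstate terraform/state)"
```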

Pulumi

Now that we’ve demonstrated OpenTF, let’s do the same with Pulumi.

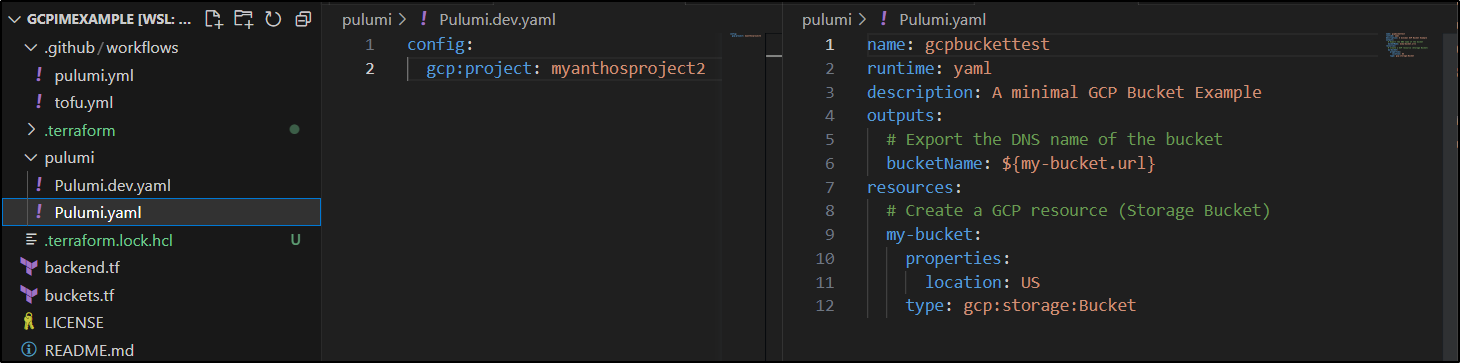

As you may recall, we built out a Pulumi YAML project that had a Pulumi.dev.yaml stack config file:

```yaml
config:
  gcp:project: myanthosproject2
```

and a Pulumi.yaml file that creates a bucket:

```yaml
name: gcpbuckettest
runtime: yaml
description: A minimal GCP Bucket Example
outputs:
  # Export the DNS name of the bucket
  bucketName: ${my-bucket.url}
resources:
  # Create a GCP resource (Storage Bucket)
  my-bucket:
    properties:
      location: US
    type: gcp:storage:Bucket
```

This would be enough to get started, but I would also like to track this in Pulumi Cloud.

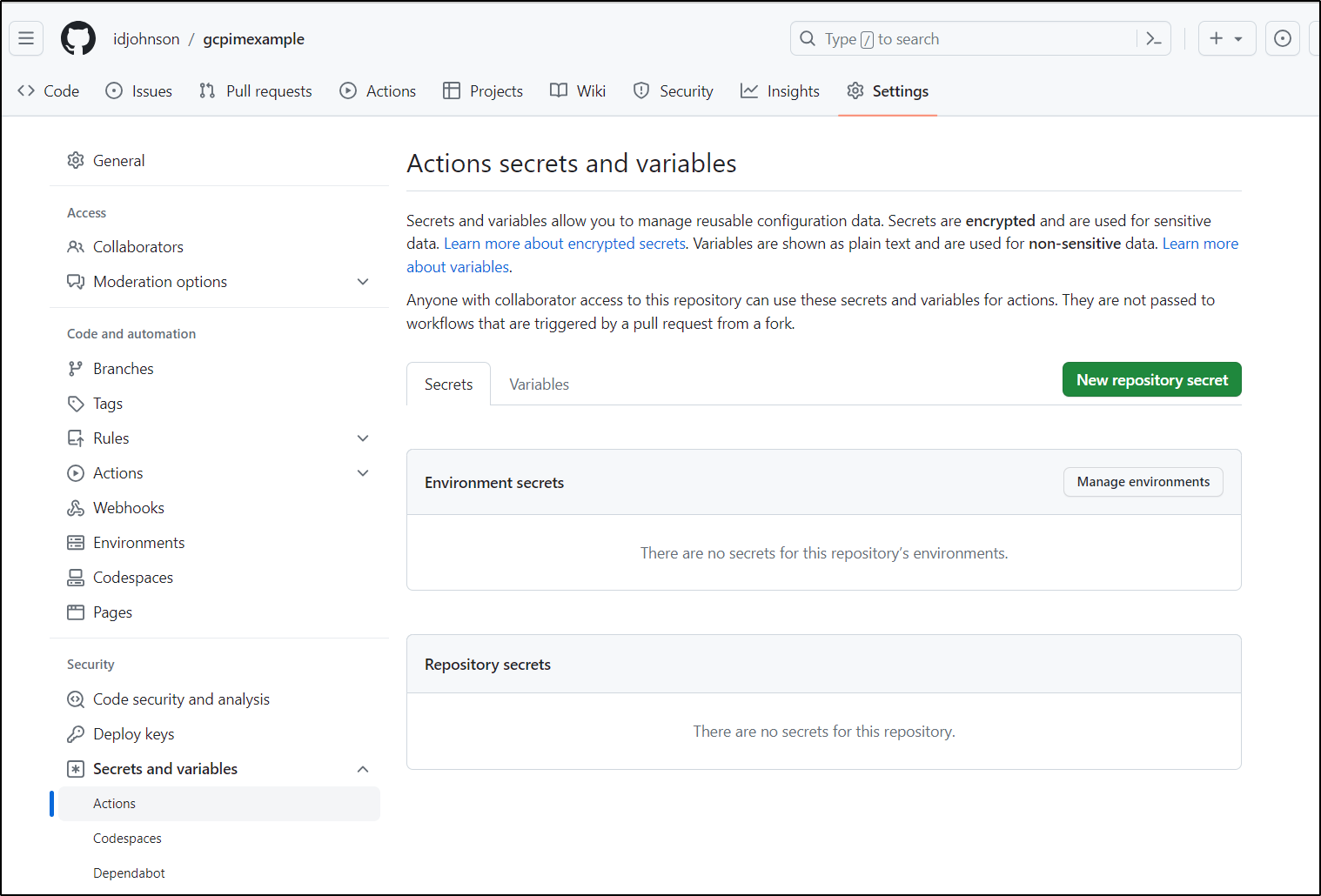

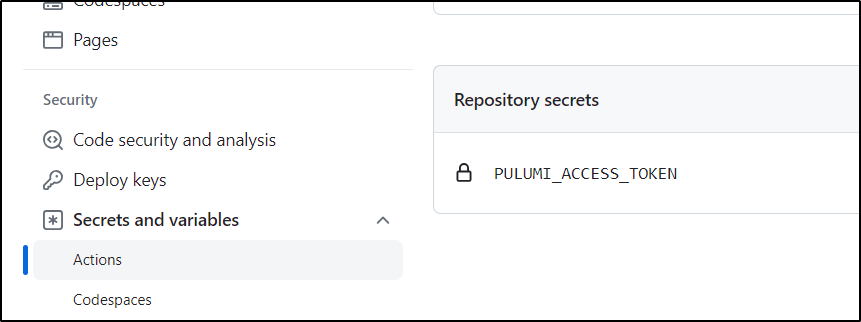

I can create an access token and pass it to the Pulumi CLI through the PULUMI_ACCESS_TOKEN env var.

I’ll save that as a “New repository secret” in GitHub.

Which I can see once set:

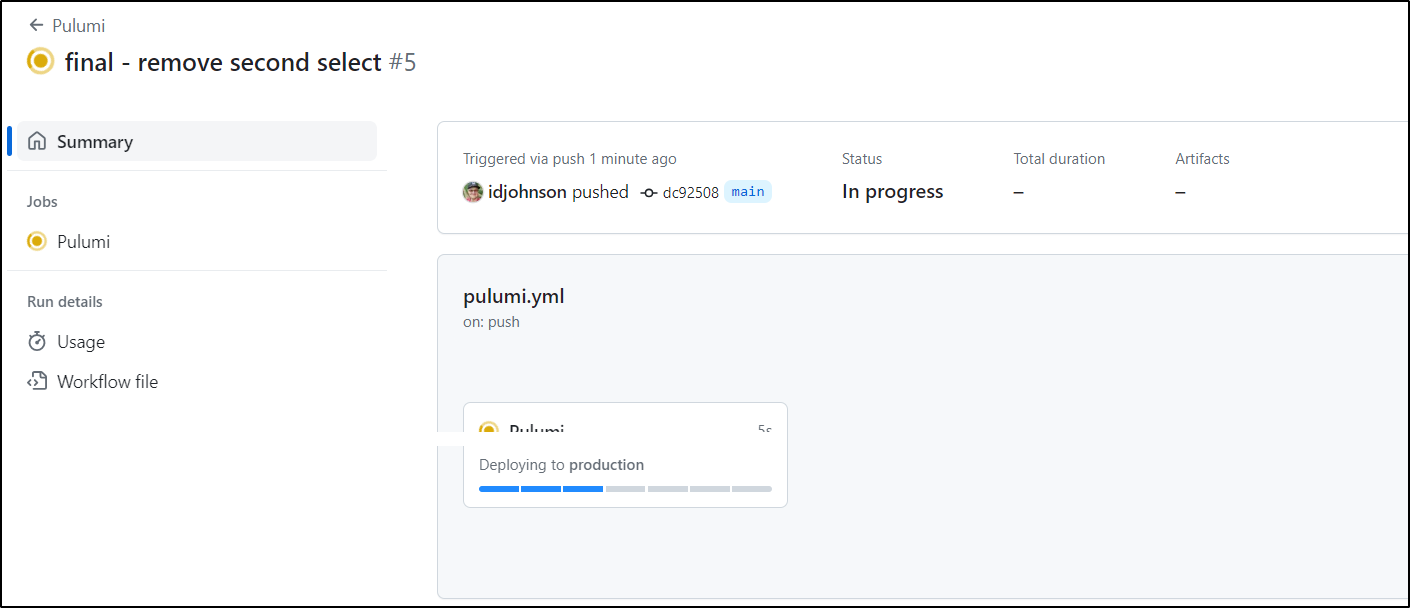
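If the secret never makes it into the step’s environment, the Pulumi CLI has no non-interactive way to log in to Pulumi Cloud, and the job fails with a less obvious error. A guard at the top of the run block makes that failure explicit. This sketch relies on standard shell ${VAR:?message} expansion; require_pulumi_token is a hypothetical name.

```shell
#!/usr/bin/env bash
# Guard for the top of a "pulumi up" / "pulumi destroy" run block.
# ${VAR:?message} prints the message and exits a non-interactive shell
# with a non-zero status when VAR is unset or empty.
require_pulumi_token() {
  : "${PULUMI_ACCESS_TOKEN:?PULUMI_ACCESS_TOKEN is not set; check the repository secret}"
}
```

Because the expansion error exits the whole shell, not just the function, the step stops right there. That is the desired behavior in a CI step.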

After tweaking the YAML a bit, I launched the workflow.

The workflow YAML looks like this:

```yaml
name: 'Pulumi'

on:
  push:
    branches: [ "main" ]
  pull_request:

permissions:
  contents: read

jobs:
  pulumi:
    name: 'Pulumi'
    runs-on: self-hosted
    environment: production
    permissions:
      contents: 'read'
      id-token: 'write'

    # Use the Bash shell regardless whether the GitHub Actions runner is ubuntu-latest, macos-latest, or windows-latest
    defaults:
      run:
        shell: bash

    steps:
    # Checkout the repository to the GitHub Actions runner
    - name: Checkout
      uses: actions/checkout@v3

    - id: auth
      uses: google-github-actions/auth@v1
      with:
        token_format: "access_token"
        create_credentials_file: true
        activate_credentials_file: true
        workload_identity_provider: 'projects/511842454269/locations/global/workloadIdentityPools/github-wif-pool/providers/githubwif'
        service_account: 'test-wif@myanthosproject2.iam.gserviceaccount.com'
        access_token_lifetime: '100s'

    # Select the dev stack and deploy it with Pulumi
    - name: pulumi up
      run: |
        PATH="/home/runner/.pulumi/bin:$PATH"
        pulumi stack select idjohnson/gcpbuckettest/dev
        pulumi up --yes
      working-directory: ./pulumi
      env:
        PULUMI_ACCESS_TOKEN: $
```

Running it with those Pulumi YAML files:

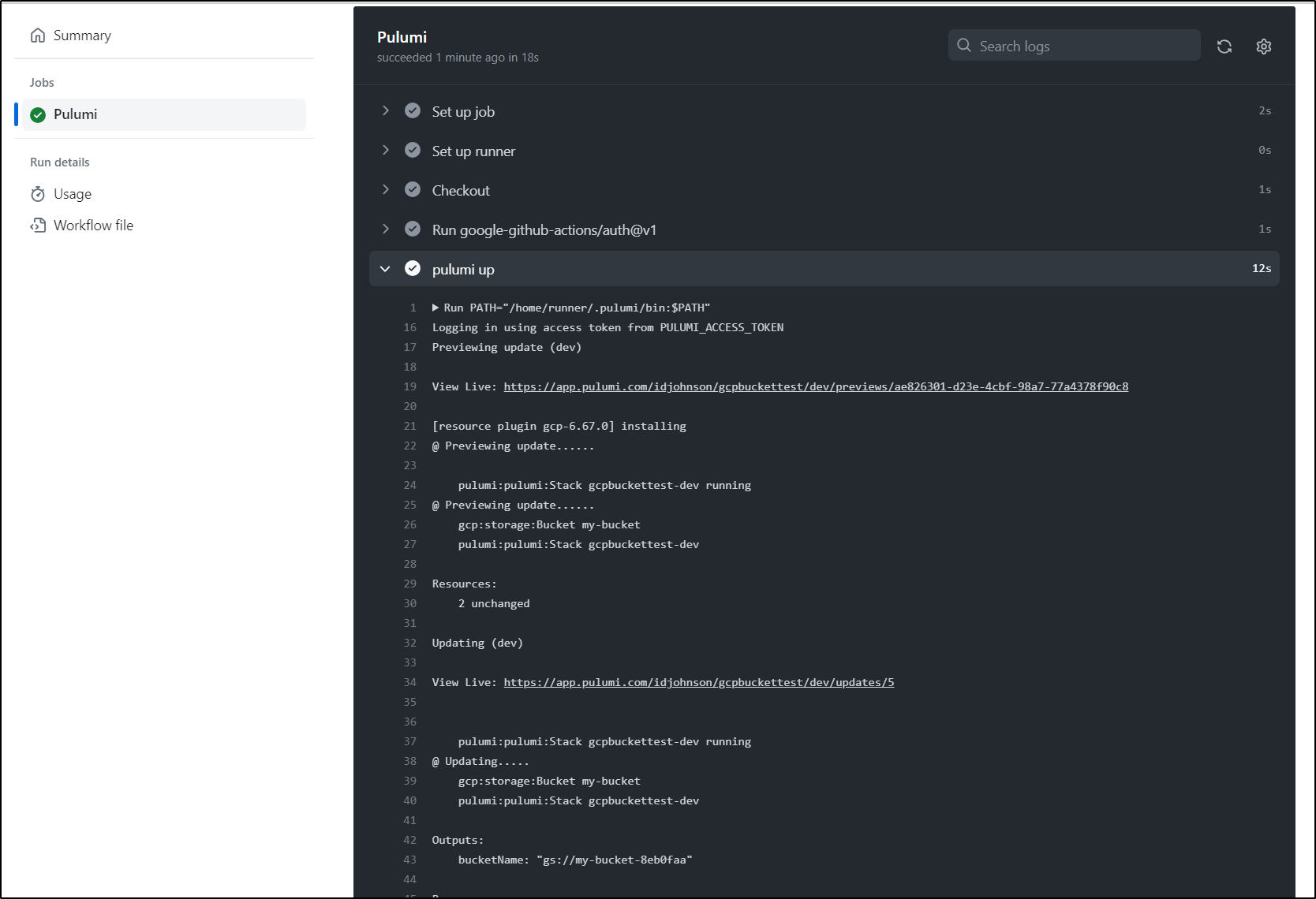

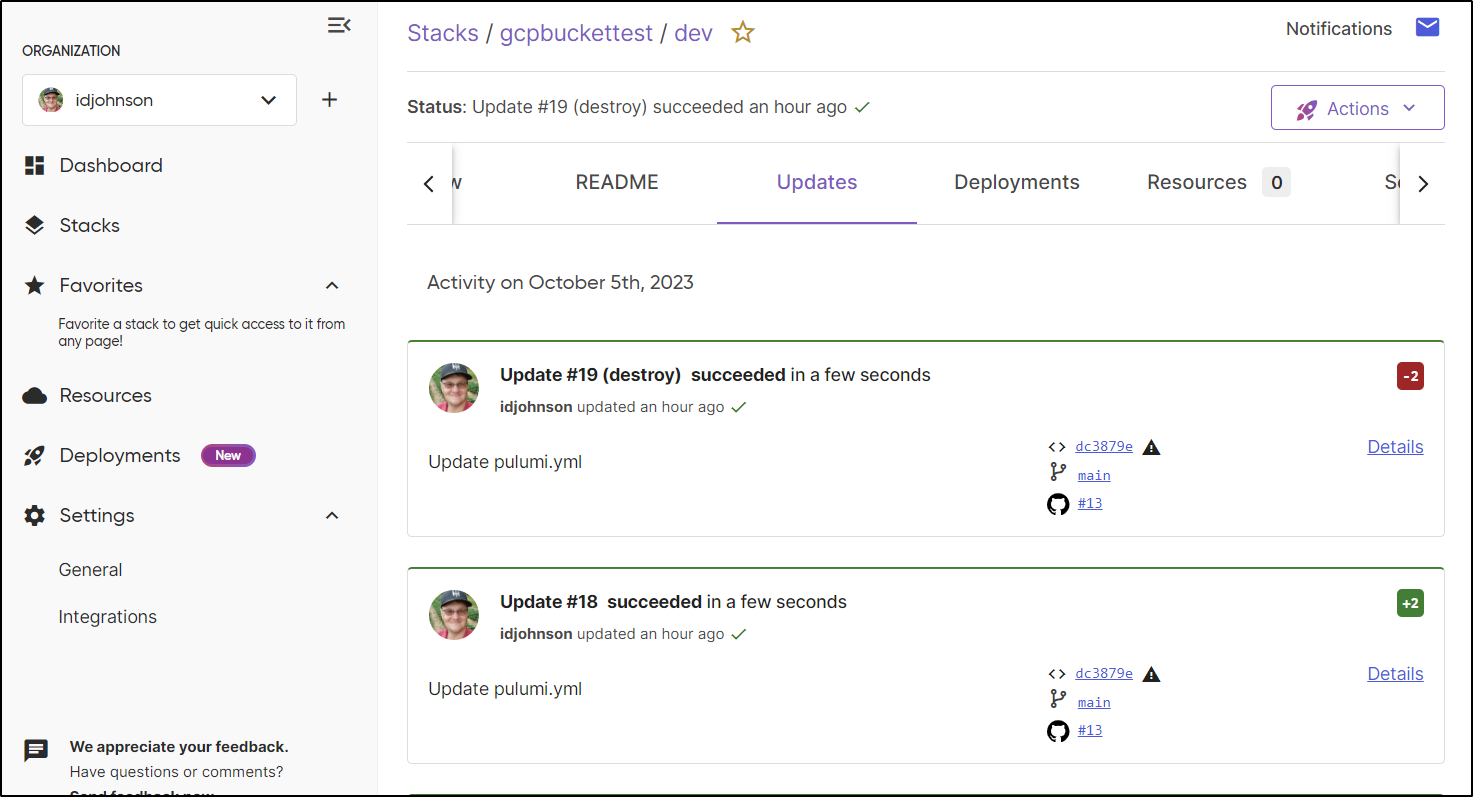

We can see the bucket was created (or updated).

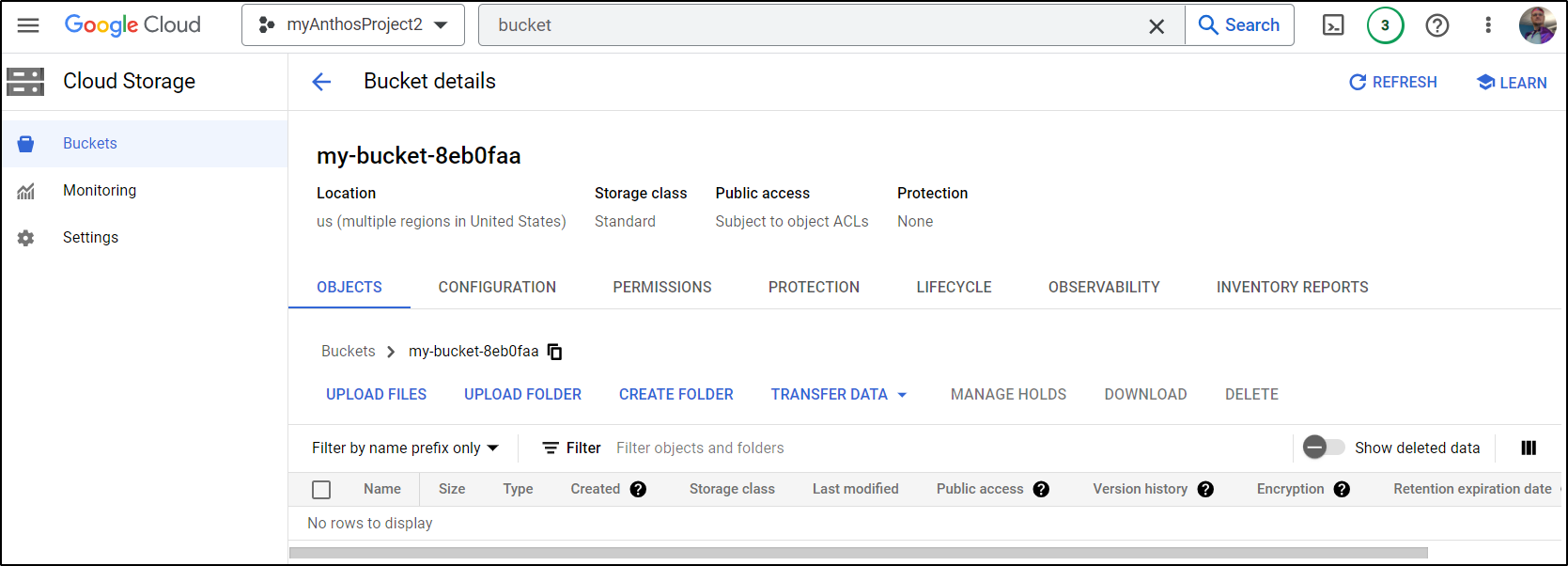

I can verify it exists, albeit without contents, using gsutil. Listing the empty bucket prints nothing, whereas a bucket that doesn’t exist returns a 404:

```
builder@LuiGi17:~/Workspaces/jekyll-blog$ gsutil ls gs://my-bucket-8eb0faa
builder@LuiGi17:~/Workspaces/jekyll-blog$ gsutil ls gs://my-bucket-8eb0faaxxx
BucketNotFoundException: 404 gs://my-bucket-8eb0faaxxx bucket does not exist.
```

as well as in the Cloud Console:

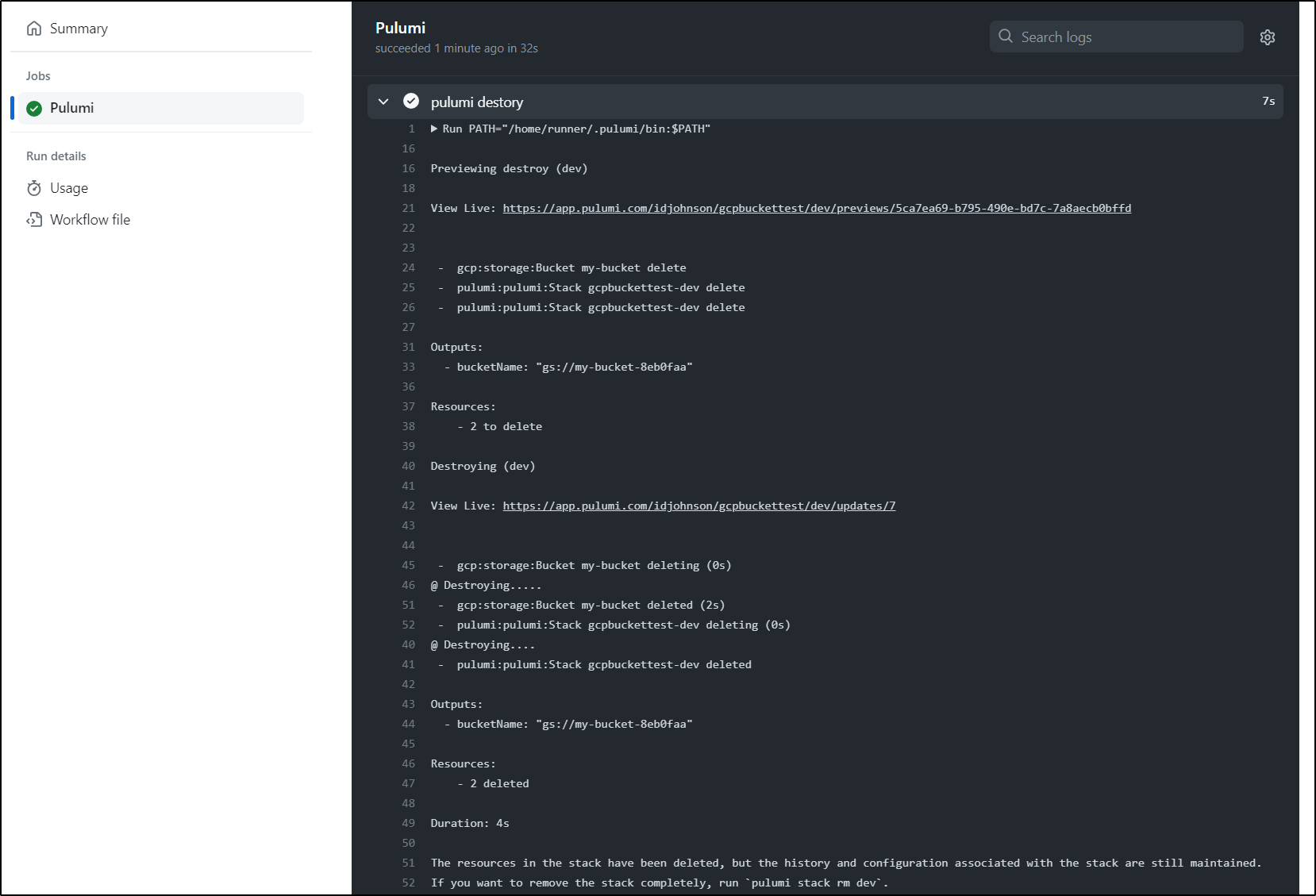

Let’s say we wanted to use a bucket for a bit, then destroy it afterwards. We can use the pulumi destroy command for that.

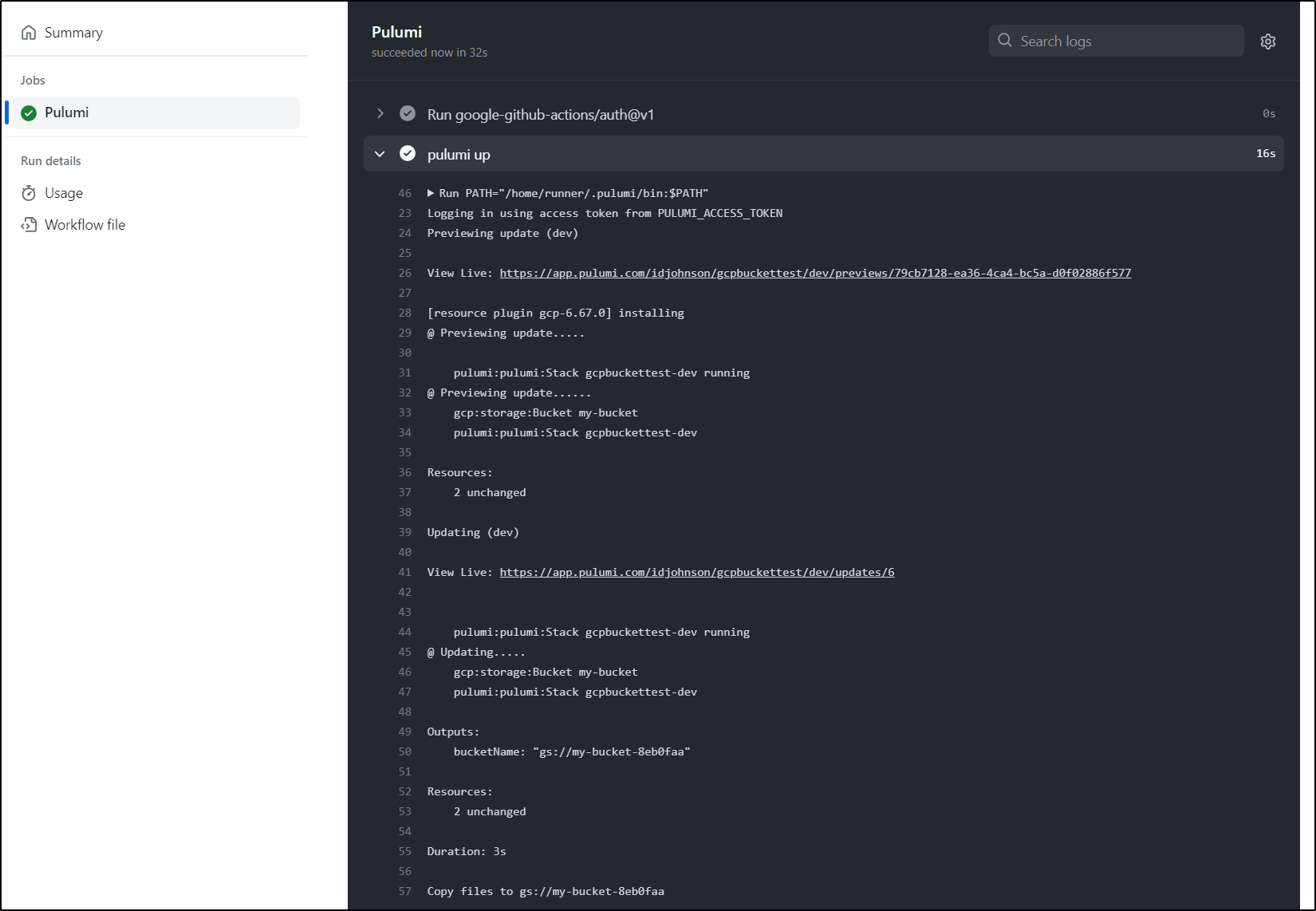

You can see it launch and create a bucket, then wait. The sleep mimics an activity such as copying files.

Then it destroys the bucket.

Temp Storage Example

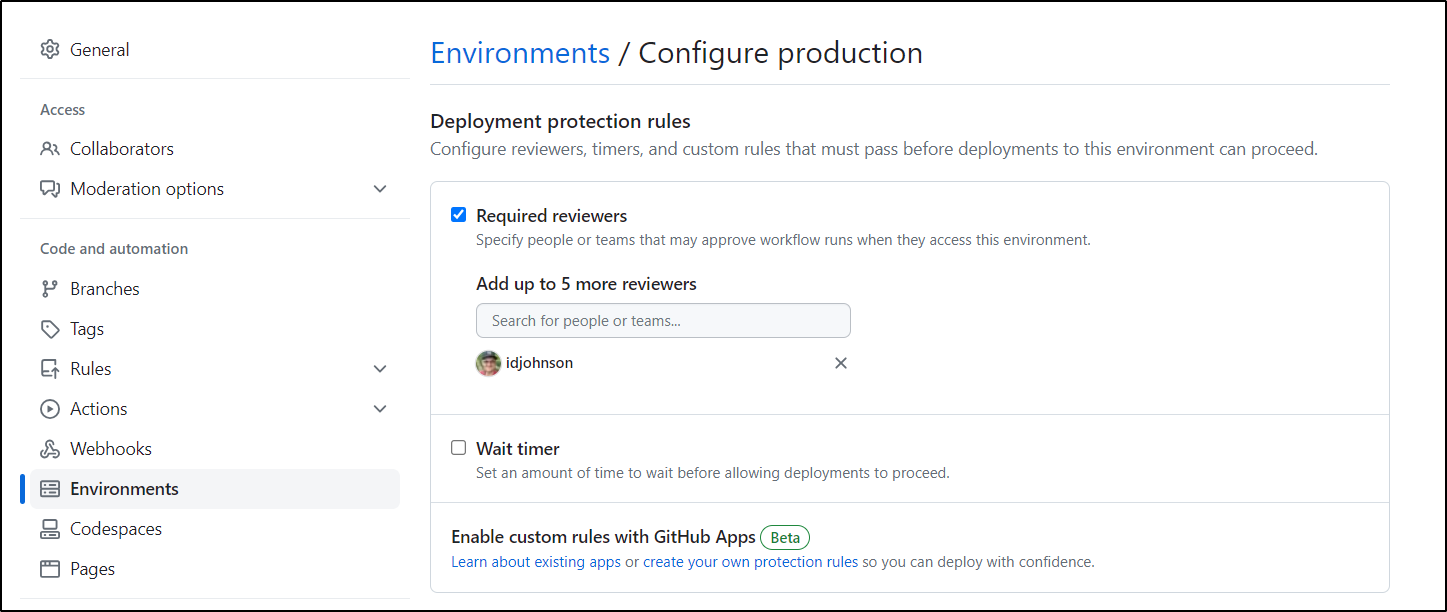

I want to create a workflow that creates a bucket, uses it, then waits for confirmation before deleting.

I will use a GitHub Environment to gate the removal.

I then split my job into two jobs, the second using the ‘production’ environment:

```yaml
name: 'Pulumi'

on:
  push:
    branches: [ "main" ]
  pull_request:

permissions:
  contents: read

jobs:
  pulumi:
    name: 'Pulumi'
    runs-on: self-hosted
    permissions:
      contents: 'read'
      id-token: 'write'

    # Use the Bash shell regardless whether the GitHub Actions runner is ubuntu-latest, macos-latest, or windows-latest
    defaults:
      run:
        shell: bash

    steps:
    # Checkout the repository to the GitHub Actions runner
    - name: Checkout
      uses: actions/checkout@v3

    - id: auth
      uses: google-github-actions/auth@v1
      with:
        token_format: "access_token"
        create_credentials_file: true
        activate_credentials_file: true
        workload_identity_provider: 'projects/511842454269/locations/global/workloadIdentityPools/github-wif-pool/providers/githubwif'
        service_account: 'test-wif@myanthosproject2.iam.gserviceaccount.com'
        access_token_lifetime: '100s'

    # Deploy the dev stack, then record the created bucket name
    - name: pulumi up
      run: |
        PATH="/home/runner/.pulumi/bin:$PATH"
        pulumi stack select idjohnson/gcpbuckettest/dev
        pulumi up --yes
        # Get the bucket
        pulumi stack output -j | jq -r '.bucketName' | tr -d '\n' | sed 's/^gs:\/\///' > bucketName
        echo "Copy files to `cat ./bucketName`"
        # set as an env var to use in other actions
        echo "CREATEDBUCKET=`cat ./bucketName`" >> $GITHUB_ENV
      working-directory: ./pulumi
      env:
        PULUMI_ACCESS_TOKEN: $

    - id: 'upload-file'
      uses: 'google-github-actions/upload-cloud-storage@v1'
      with:
        path: 'pulumi/bucketName'
        destination: "$/bucketName"

  deploy-cleanup:
    runs-on: self-hosted
    environment: production
    needs: [pulumi]
    permissions:
      contents: 'read'
      id-token: 'write'
    steps:
    # Checkout the repository to the GitHub Actions runner
    - name: Checkout
      uses: actions/checkout@v3

    - id: auth
      uses: google-github-actions/auth@v1
      with:
        token_format: "access_token"
        create_credentials_file: true
        activate_credentials_file: true
        workload_identity_provider: 'projects/511842454269/locations/global/workloadIdentityPools/github-wif-pool/providers/githubwif'
        service_account: 'test-wif@myanthosproject2.iam.gserviceaccount.com'
        access_token_lifetime: '100s'

    - name: pulumi destroy
      run: |
        PATH="/home/runner/.pulumi/bin:$PATH"
        pulumi stack select idjohnson/gcpbuckettest/dev
        pulumi destroy --yes
      working-directory: ./pulumi
      env:
        PULUMI_ACCESS_TOKEN: $
```
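The tr/sed pipeline in the pulumi up step turns the exported gs:// URL into a bare bucket name before writing it to $GITHUB_ENV. Shell parameter expansion can do the same job without the extra processes. The sketch below uses hypothetical helper names. Keep in mind that values appended to $GITHUB_ENV are only visible to later steps in the same job. That works here because the upload step lives in the pulumi job, but the deploy-cleanup job would not see CREATEDBUCKET.

```shell
#!/usr/bin/env bash
# Strip the gs:// scheme from a bucket URL (e.g. the Pulumi bucketName output).
bucket_from_url() {
  local url="$1"
  printf '%s\n' "${url#gs://}"
}

# Append NAME=VALUE to the file GitHub Actions reads to set env vars
# for the remaining steps of the current job.
export_to_github_env() {
  printf '%s=%s\n' "$1" "$2" >> "${GITHUB_ENV:?GITHUB_ENV is not set}"
}

# Example inside the "pulumi up" step:
#   url="$(pulumi stack output bucketName)"
#   export_to_github_env CREATEDBUCKET "$(bucket_from_url "$url")"
```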

I also needed to add forceDestroy to the bucket properties. Otherwise, by default, Pulumi won’t destroy a bucket that still has contents:

```yaml
name: gcpbuckettest
runtime: yaml
description: A minimal GCP Bucket Example
outputs:
  # Export the DNS name of the bucket
  bucketName: ${my-bucket.url}
resources:
  # Create a GCP resource (Storage Bucket)
  my-bucket:
    properties:
      forceDestroy: true
      location: US
    type: gcp:storage:Bucket
```

Let’s see it all in action.

And while I didn’t show it in the recording above, you can see below that the actions on the stack were tracked in Pulumi Cloud.

The GitHub repo is public, so feel free to examine the code at https://github.com/idjohnson/gcpimexample

Summary

Today we built a container image with both OpenTofu and Pulumi. We certainly could have installed them on the fly during each build job, but that would add a lot of time. Having the binaries pre-installed makes for a nice, fast infrastructure workflow.

In our first use case, we leveraged OpenTofu in a GitHub workflow to create a GCP bucket and save the state file to a GCP bucket (which we also created with OpenTofu).

In our second example, we used Pulumi in a GitHub workflow to do the same thing, including updating Pulumi Cloud. Lastly, we built a job using environments that creates a bucket in GCP, uses it, then waits for approval before cleaning up.