Published: Sep 5, 2023 by Isaac Johnson

Let’s extend the forms demo from a week ago and try to use JQL, rather than Work Item Queries, to drive an automation.

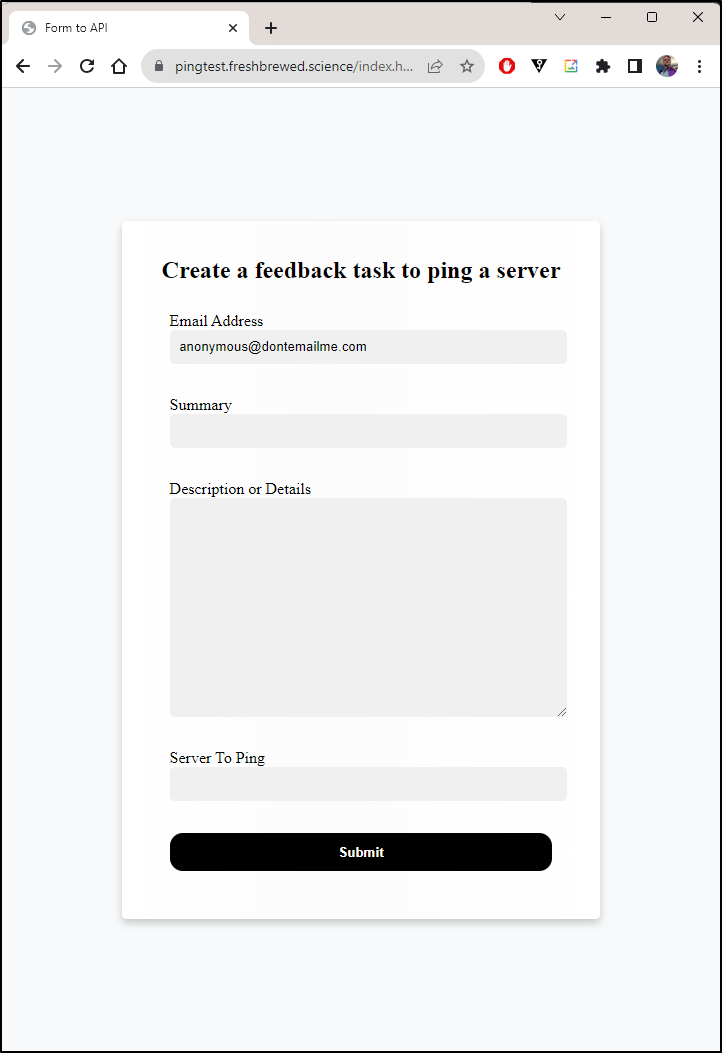

Containerizing a static web form

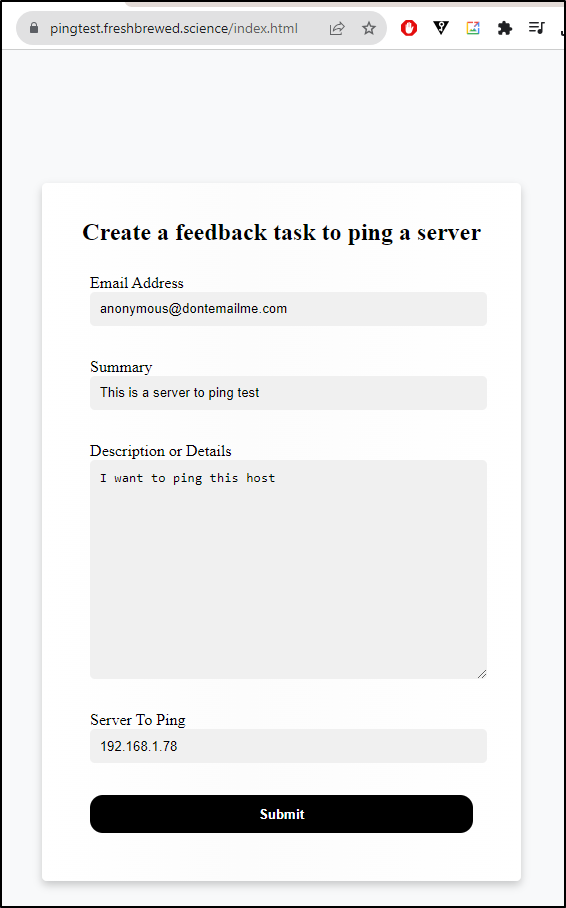

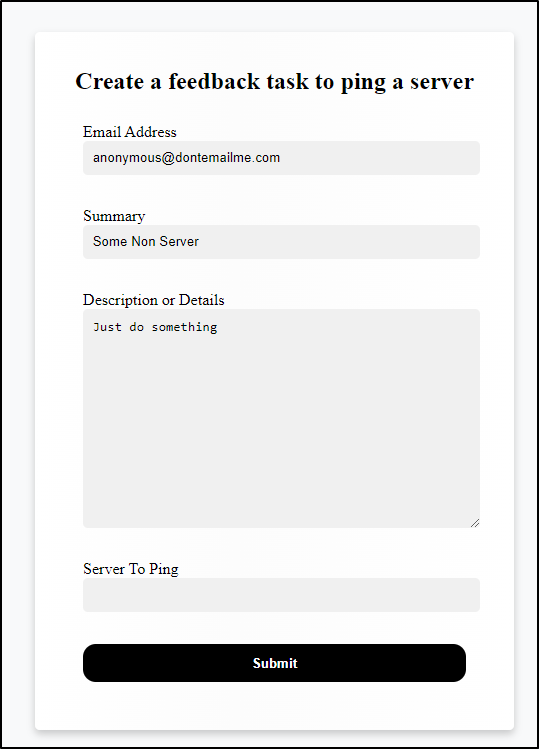

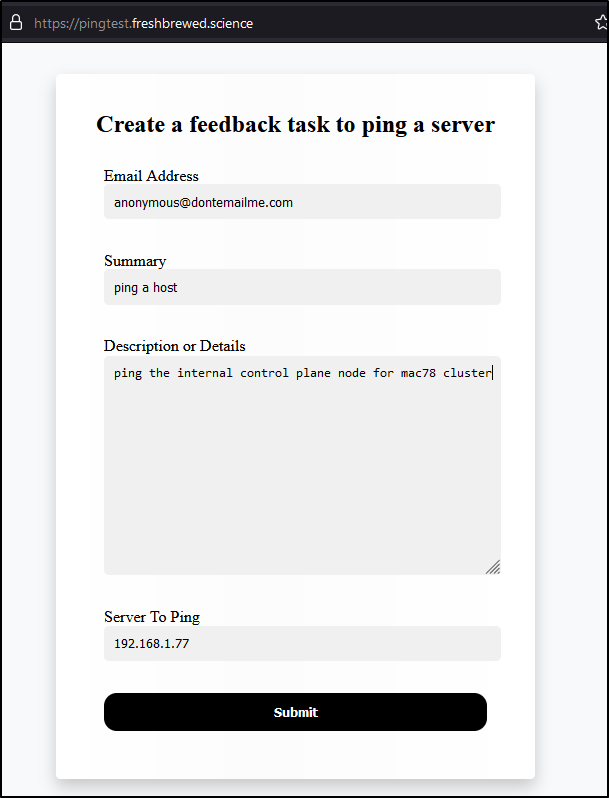

The first thing I want to do is expand my form to add a “server to ping” field.

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<link rel="stylesheet" href="css/styles.css">

<title>Form to API</title>

</head>

<body>

<div class="container card card-color">

<form action="" id="sampleForm">

<h2>Create a feedback task to ping a server</h2>

<div class="form-row">

<label for="userId">Email Address</label>

<input type="email" class="input-text input-text-block w-100" id="userId" name="userId" value="anonymous@dontemailme.com">

</div>

<div class="form-row">

<label for="summary">Summary</label>

<input type="text" class="input-text input-text-block w-100" id="summary" name="summary">

</div>

<div class="form-row">

<label for="description">Description or Details</label>

<textarea class="input-text input-text-block ta-100" id="description" name="description"></textarea>

</div>

<div class="form-row">

<label for="server">Server To Ping</label>

<input type="text" class="input-text input-text-block w-100" id="server" name="server">

</div>

<div class="form-row mx-auto">

<button type="submit" class="btn-submit" id="btnSubmit">

Submit

</button>

</div>

</form>

</div>

<script src="js/app.js"></script>

</body>

</html>

I’ll enable the standard build

trigger:

- master

pool:

vmImage: ubuntu-latest

steps:

- task: NodeTool@0

inputs:

versionSpec: '10.x'

displayName: 'Install Node.js'

- script: |

npm install

npm run build

# copy to artifact staging

cp -rf ./dist $(Build.ArtifactStagingDirectory)

displayName: 'npm install and build'

- task: PublishBuildArtifacts@1

inputs:

PathtoPublish: '$(Build.ArtifactStagingDirectory)'

ArtifactName: 'drop'

publishLocation: 'Container'

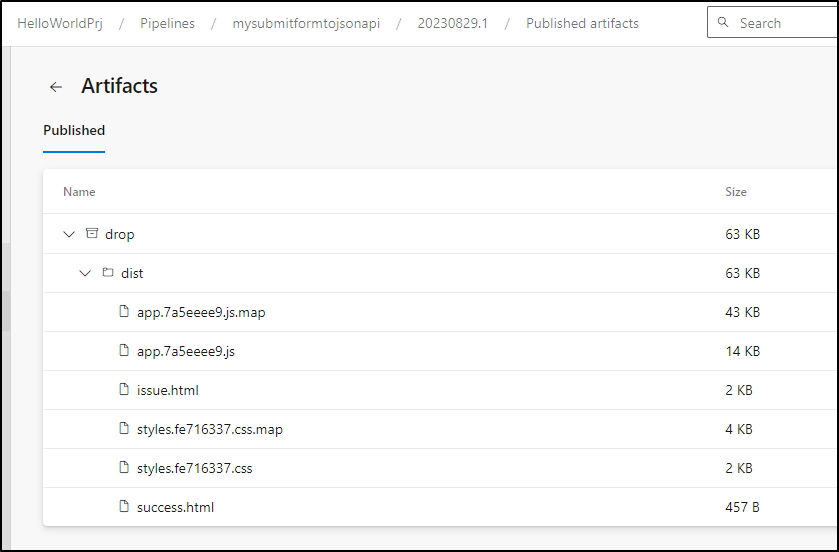

and download the built artifact

I’ll now use a Dockerfile

FROM nginx

COPY ./dist /usr/share/nginx/html

#harbor.freshbrewed.science/freshbrewedprivate/quickform:0.0.1

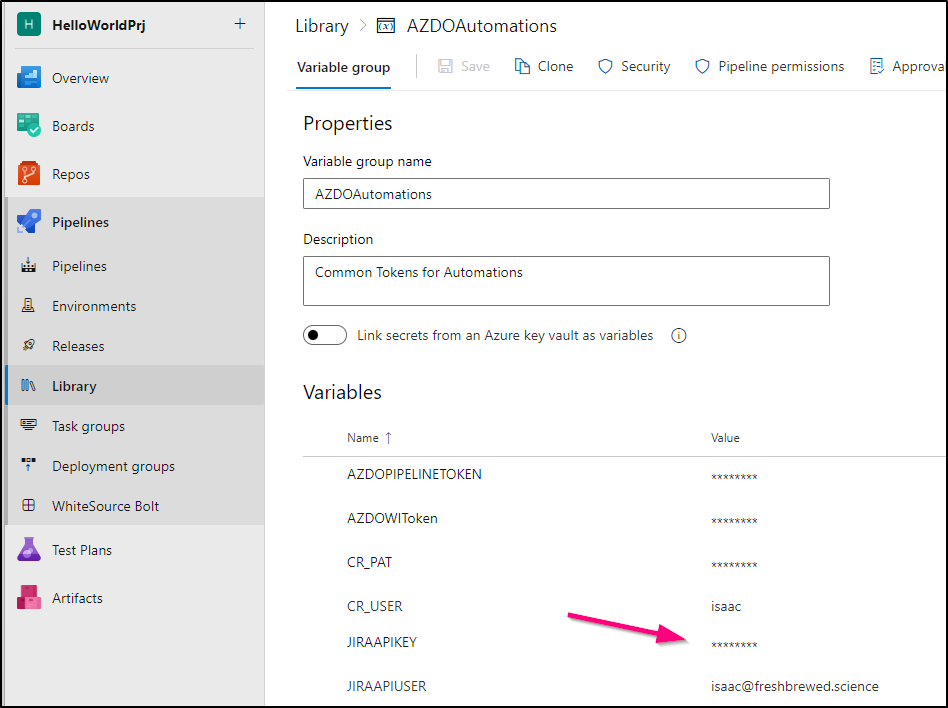

I’ll add some variables to the library, then update the pipeline to build and push to my Harbor container registry

# Node.js

# Build a general Node.js project with npm.

# Add steps that analyze code, save build artifacts, deploy, and more:

# https://docs.microsoft.com/azure/devops/pipelines/languages/javascript

trigger:

- master

pool:

vmImage: ubuntu-latest

variables:

- group: AZDOAutomations

steps:

- task: NodeTool@0

inputs:

versionSpec: '10.x'

displayName: 'Install Node.js'

- script: |

npm install

npm run build

# copy to artifact staging

cp -rf ./dist $(Build.ArtifactStagingDirectory)

displayName: 'npm install and build'

- task: PublishBuildArtifacts@1

inputs:

PathtoPublish: '$(Build.ArtifactStagingDirectory)'

ArtifactName: 'drop'

publishLocation: 'Container'

- script: |

export BUILDIMGTAG="`cat Dockerfile | tail -n1 | sed 's/^.*\///g'`"

docker build -t $BUILDIMGTAG .

docker images

displayName: 'docker build'

- script: |

export BUILDIMGTAG="`cat Dockerfile | tail -n1 | sed 's/^.*\///g'`"

export FINALBUILDTAG="`cat Dockerfile | tail -n1 | sed 's/^#//g'`"

docker tag $BUILDIMGTAG $FINALBUILDTAG

docker images

echo $(CR_PAT) | docker login harbor.freshbrewed.science -u $(CR_USER) --password-stdin

docker push $FINALBUILDTAG

displayName: 'docker login and push'

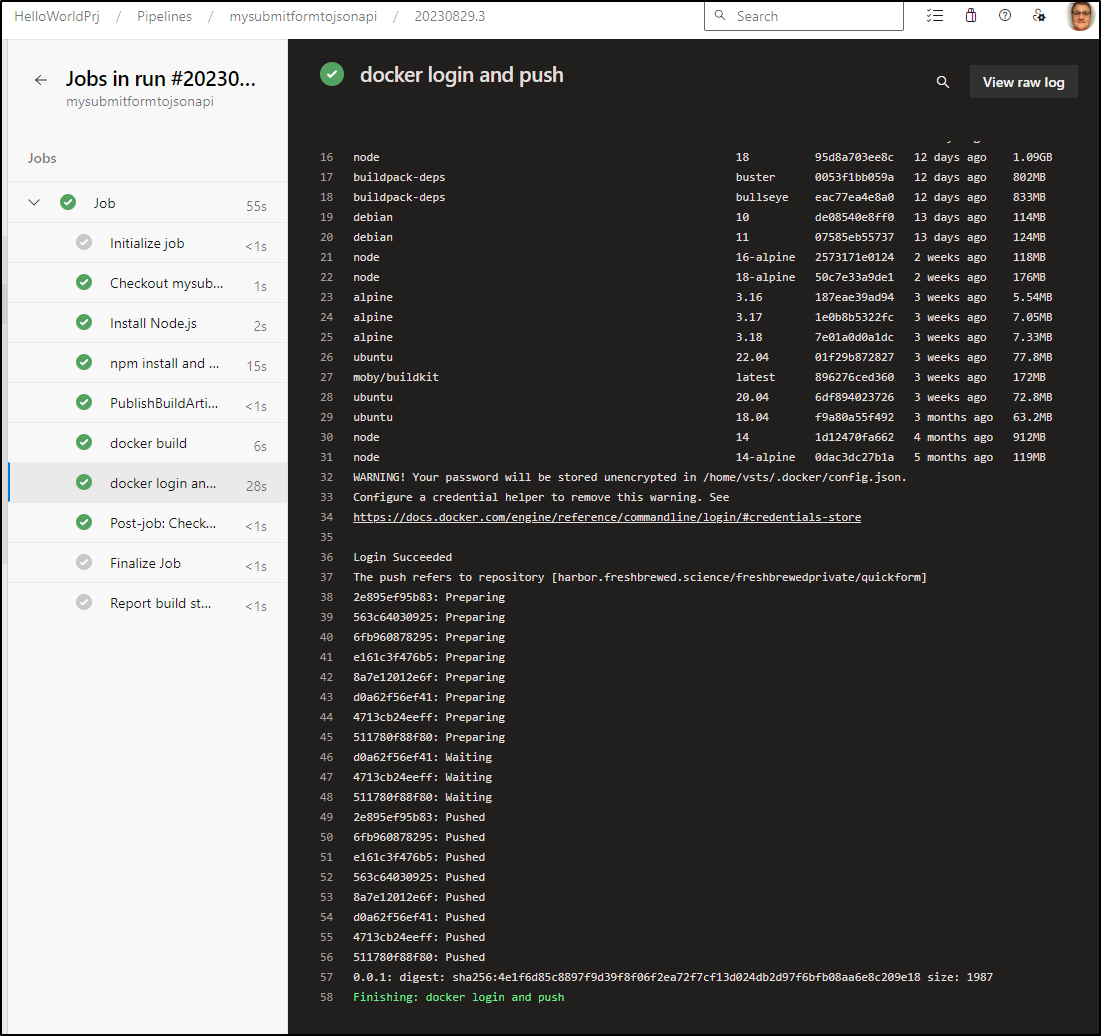

I can see it built and pushed
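The pipeline derives both image tags from the comment on the last line of the Dockerfile. As a minimal sketch of that convention in isolation (the function names `local_tag` and `final_tag` are mine, not part of the pipeline, and the temp file stands in for the real Dockerfile):

```shell
#!/usr/bin/env bash
# Sketch: derive image tags from the trailing comment line of a Dockerfile,
# mirroring the sed expressions used in the pipeline steps.

final_tag() {
  # Last line of the file with the leading '#' removed:
  # the fully qualified registry/project/image:tag.
  tail -n1 "$1" | sed 's/^#//'
}

local_tag() {
  # Last line with everything up to and including the final '/' removed:
  # just image:tag, used for the local docker build.
  tail -n1 "$1" | sed 's/^.*\///'
}

# Demo against a throwaway copy of the Dockerfile shown earlier.
demo=$(mktemp)
printf 'FROM nginx\nCOPY ./dist /usr/share/nginx/html\n#harbor.freshbrewed.science/freshbrewedprivate/quickform:0.0.1\n' > "$demo"
echo "local: $(local_tag "$demo")"   # local: quickform:0.0.1
echo "final: $(final_tag "$demo")"   # final: harbor.freshbrewed.science/freshbrewedprivate/quickform:0.0.1
rm -f "$demo"
```

The trade-off is that the tag comment must stay the very last line of the Dockerfile; a trailing blank line would leave both tags empty.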

My first test will be a manual helm deploy - basically, use the Nginx helm chart to deploy my new image

$ helm install nginxtest --set image.pullSecrets[0]=myharborreg --set image.registry=harbor.freshbrewed.science --set image.repository=freshbrewedprivate/quickform --set image.tag=0.0.1 bitnami/nginx

NAME: nginxtest

LAST DEPLOYED: Mon Aug 28 20:38:51 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: nginx

CHART VERSION: 15.1.2

APP VERSION: 1.25.1

** Please be patient while the chart is being deployed **

NGINX can be accessed through the following DNS name from within your cluster:

nginxtest.default.svc.cluster.local (port 80)

To access NGINX from outside the cluster, follow the steps below:

1. Get the NGINX URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

Watch the status with: 'kubectl get svc --namespace default -w nginxtest'

export SERVICE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].port}" services nginxtest)

export SERVICE_IP=$(kubectl get svc --namespace default nginxtest -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo "http://${SERVICE_IP}:${SERVICE_PORT}"

I can see the pod created

$ kubectl get pod -l app.kubernetes.io/instance=nginxtest

NAME READY STATUS RESTARTS AGE

nginxtest-578c869b58-qfzlg 0/1 Running 0 92s

and the service

$ kubectl get svc nginxtest

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginxtest LoadBalancer 10.43.53.163 <pending> 80:31524/TCP 39s

I’m not sure why that chart assumes routing to 8080 for liveness and readiness, but this simple service really doesn’t need health checks, so I’ll disable them

$ helm upgrade nginxtest --set image.pullSecrets[0]=myharborreg --set image.registry=harbor.freshbrewed.science --set image.repository=freshbrewedprivate/quickform --set image.tag=0.0.1 --set livenessProbe.enabled=false --set readinessProbe.enabled=false bitnami/nginx

Release "nginxtest" has been upgraded. Happy Helming!

NAME: nginxtest

LAST DEPLOYED: Mon Aug 28 20:42:24 2023

NAMESPACE: default

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

CHART NAME: nginx

CHART VERSION: 15.1.2

APP VERSION: 1.25.1

** Please be patient while the chart is being deployed **

NGINX can be accessed through the following DNS name from within your cluster:

nginxtest.default.svc.cluster.local (port 80)

To access NGINX from outside the cluster, follow the steps below:

1. Get the NGINX URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

Watch the status with: 'kubectl get svc --namespace default -w nginxtest'

export SERVICE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].port}" services nginxtest)

export SERVICE_IP=$(kubectl get svc --namespace default nginxtest -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo "http://${SERVICE_IP}:${SERVICE_PORT}"

$ kubectl get pod -l app.kubernetes.io/instance=nginxtest

NAME READY STATUS RESTARTS AGE

nginxtest-7ddc4d767c-bkfnp 1/1 Running 0 50s

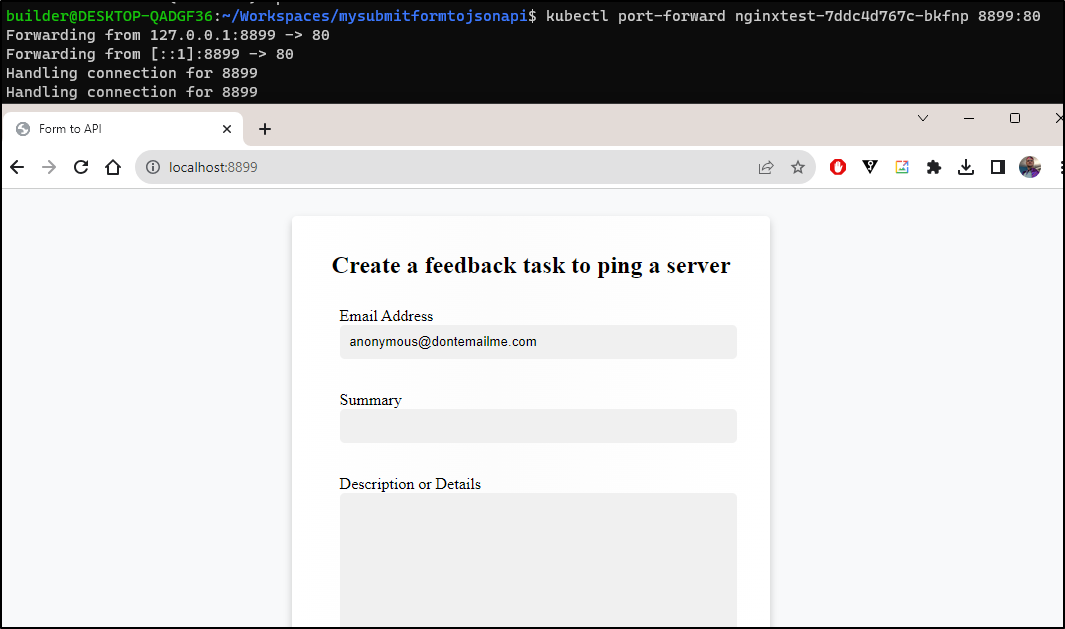

A quick port-forward shows it works

Lastly, I want to ingress to it.

I’ll quickly double-check my external IP (it has changed a bit lately)

$ curl ifconfig.me

75.73.224.240

Then use that in a DNS entry

$ cat r53-nginxtest.yaml

{

"Comment": "CREATE pingtest fb.s A record ",

"Changes": [

{

"Action": "CREATE",

"ResourceRecordSet": {

"Name": "pingtest.freshbrewed.science",

"Type": "A",

"TTL": 300,

"ResourceRecords": [

{

"Value": "75.73.224.240"

}

]

}

}

]

}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-nginxtest.yaml

{

"ChangeInfo": {

"Id": "/change/C0521963320LQPJ5M5TV0",

"Status": "PENDING",

"SubmittedAt": "2023-08-29T10:55:45.838Z",

"Comment": "CREATE pingtest fb.s A record "

}

}
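If you create records like this often, the change batch can be generated rather than hand-edited. This is only a sketch: `r53_change_batch` is a hypothetical helper of mine, and the output simply follows the same JSON shape as the file above.

```shell
#!/usr/bin/env bash
# Sketch: emit a Route53 change batch for a single A record.
# r53_change_batch is an illustrative helper, not an AWS CLI feature.

r53_change_batch() {
  local name="$1" ip="$2"
  cat <<EOF
{
  "Comment": "CREATE ${name} A record",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "${name}",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [{ "Value": "${ip}" }]
      }
    }
  ]
}
EOF
}

# Write the batch to a file that can be passed via --change-batch file://...
r53_change_batch "pingtest.freshbrewed.science" "75.73.224.240" > r53-generated.json
cat r53-generated.json
```

The resulting file can be handed to the same `aws route53 change-resource-record-sets` call used above.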

I’ll use the service to craft the ingress

$ kubectl get svc nginxtest -o yaml

apiVersion: v1

kind: Service

metadata:

annotations:

meta.helm.sh/release-name: nginxtest

meta.helm.sh/release-namespace: default

creationTimestamp: "2023-08-29T01:38:52Z"

labels:

app.kubernetes.io/instance: nginxtest

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: nginx

helm.sh/chart: nginx-15.1.2

name: nginxtest

namespace: default

resourceVersion: "211236276"

uid: 3dafba42-9793-4f3d-8733-4c1c04725f8e

spec:

allocateLoadBalancerNodePorts: true

clusterIP: 10.43.53.163

clusterIPs:

- 10.43.53.163

externalTrafficPolicy: Cluster

internalTrafficPolicy: Cluster

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- name: http

nodePort: 31524

port: 80

protocol: TCP

targetPort: http

selector:

app.kubernetes.io/instance: nginxtest

app.kubernetes.io/name: nginx

sessionAffinity: None

type: LoadBalancer

status:

loadBalancer: {}

$ cat pingtest.ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: letsencrypt-prod

ingress.kubernetes.io/proxy-body-size: "0"

ingress.kubernetes.io/ssl-redirect: "true"

kubernetes.io/ingress.class: nginx

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-read-timeout: "600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "600"

nginx.ingress.kubernetes.io/ssl-redirect: "true"

nginx.org/client-max-body-size: "0"

nginx.org/proxy-connect-timeout: "600"

nginx.org/proxy-read-timeout: "600"

labels:

app: pingtest

name: pingtest

spec:

rules:

- host: pingtest.freshbrewed.science

http:

paths:

- backend:

service:

name: nginxtest

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- pingtest.freshbrewed.science

secretName: pingtest-tls

I’ll quickly apply it

$ kubectl apply -f pingtest.ingress.yaml

ingress.networking.k8s.io/pingtest created

$ kubectl get ingress pingtest

NAME CLASS HOSTS ADDRESS PORTS AGE

pingtest <none> pingtest.freshbrewed.science 192.168.1.215,192.168.1.36,192.168.1.57,192.168.1.78 80, 443 56s

$ kubectl get cert pingtest-tls

NAME READY SECRET AGE

pingtest-tls False pingtest-tls 72s

$ kubectl get cert pingtest-tls

NAME READY SECRET AGE

pingtest-tls True pingtest-tls 2m12s

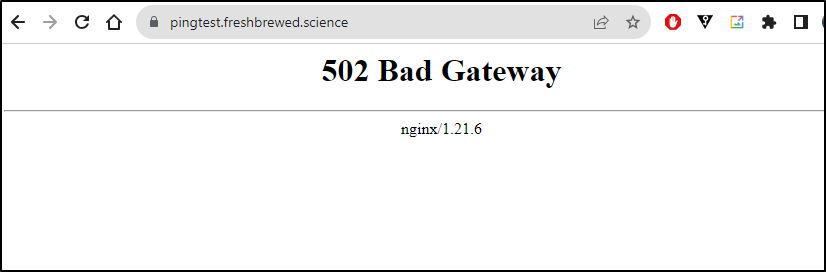

At first I got a 502

Then realized the helm chart defaulted to LoadBalancer not ClusterIP

I’ll fix with an upgrade and --set service.type=ClusterIP. I also realized the reason my health checks (readiness and liveness) failed was the container port http was set to 8080, not 80. I fixed that as well

$ helm upgrade nginxtest --set image.pullSecrets[0]=myharborreg --set image.registry=harbor.freshbrewed.science --set image.repository=freshbrewedprivate/quickform --set image.tag=0.0.1 --set service.type=ClusterIP --set containerPorts.http=80 bitnami/nginx

Release "nginxtest" has been upgraded. Happy Helming!

NAME: nginxtest

LAST DEPLOYED: Tue Aug 29 06:13:03 2023

NAMESPACE: default

STATUS: deployed

REVISION: 4

TEST SUITE: None

NOTES:

CHART NAME: nginx

CHART VERSION: 15.1.2

APP VERSION: 1.25.1

** Please be patient while the chart is being deployed **

NGINX can be accessed through the following DNS name from within your cluster:

nginxtest.default.svc.cluster.local (port 80)

To access NGINX from outside the cluster, follow the steps below:

1. Get the NGINX URL by running these commands:

export SERVICE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].port}" services nginxtest)

kubectl port-forward --namespace default svc/nginxtest ${SERVICE_PORT}:${SERVICE_PORT} &

echo "http://127.0.0.1:${SERVICE_PORT}"

$ kubectl get pods | grep nginx

nginx-ingress-release-nginx-ingress-5bb8867c98-pjdkr 1/1 Running 3 (101d ago) 397d

nginxtest-69b8c5b4cd-dzq7l 1/1 Running 0 15s

Now we have a functional, albeit rather plain, form hosted out of k8s

JIRA

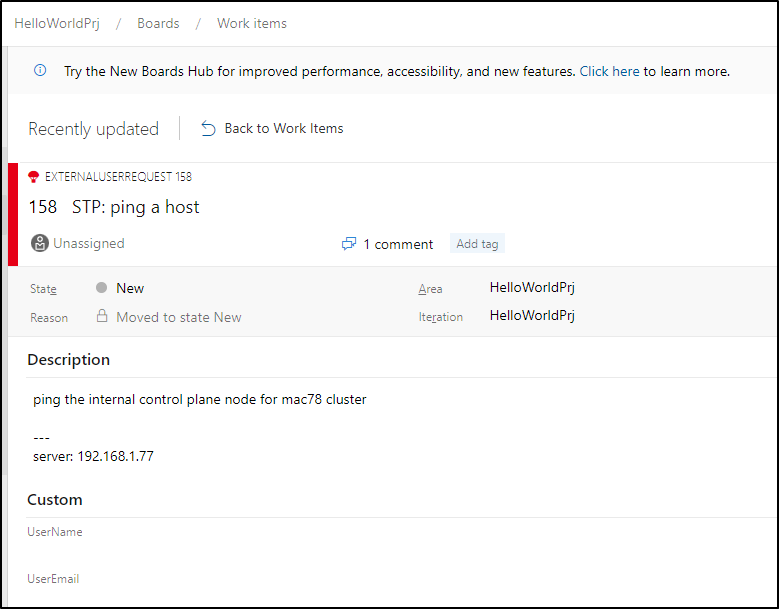

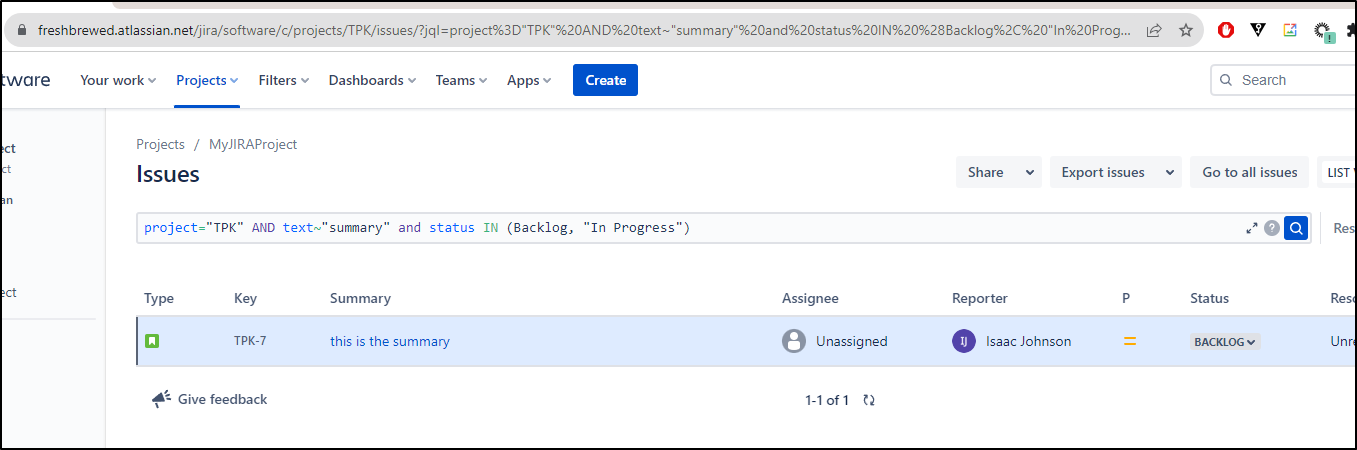

Let’s see if we can use a basic JQL to query for issue IDs

$ curl --request GET -u isaac@freshbrewed.science:c28geWVhaCwgaSBhY2NpZGVudGFsbHkgcGFzdGVkIHRoZSBrZXkgZWFybGllci4gZHVtYiB0aGluZyB0byBkby4gYWggd2VsbCwgcm90YXRlIGFuZCBtb3ZlIG9u -H "Content-Type: application/json" --url https://freshbrewed.atlassian.net/rest/api/2/search?jql=project%3D"TPK"%20AND%20text~"summary" | jq '.issues[] | .id'

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 5744 0 5744 0 0 12736 0 --:--:-- --:--:-- --:--:-- 12736

"10006"

Or KEY

$ curl --request GET -u isaac@freshbrewed.science:c28geWVhaCwgaSBhY2NpZGVudGFsbHkgcGFzdGVkIHRoZSBrZXkgZWFybGllci4gZHVtYiB0aGluZyB0byBkby4gYWggd2VsbCwgcm90YXRlIGFuZCBtb3ZlIG9u -H "Content-Type: application/json" --url https://freshbrewed.atlassian.net/rest/api/2/search?jql=project%3D"TPK"%20AND%20text~"summary" | jq '.issues[] | .key'

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 5744 0 5744 0 0 9937 0 --:--:-- --:--:-- --:--:-- 9920

"TPK-7"

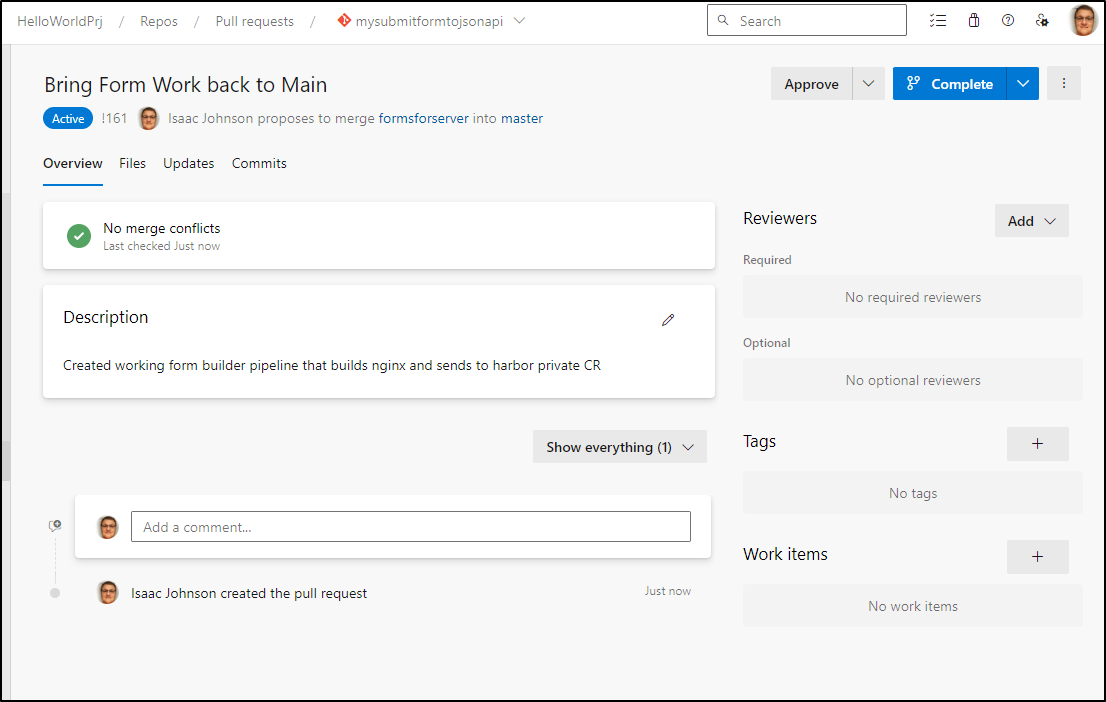

Thus far, I’ve been operating in a branch, but the form trigger will kick off from main.

I needed to PR it back to main.

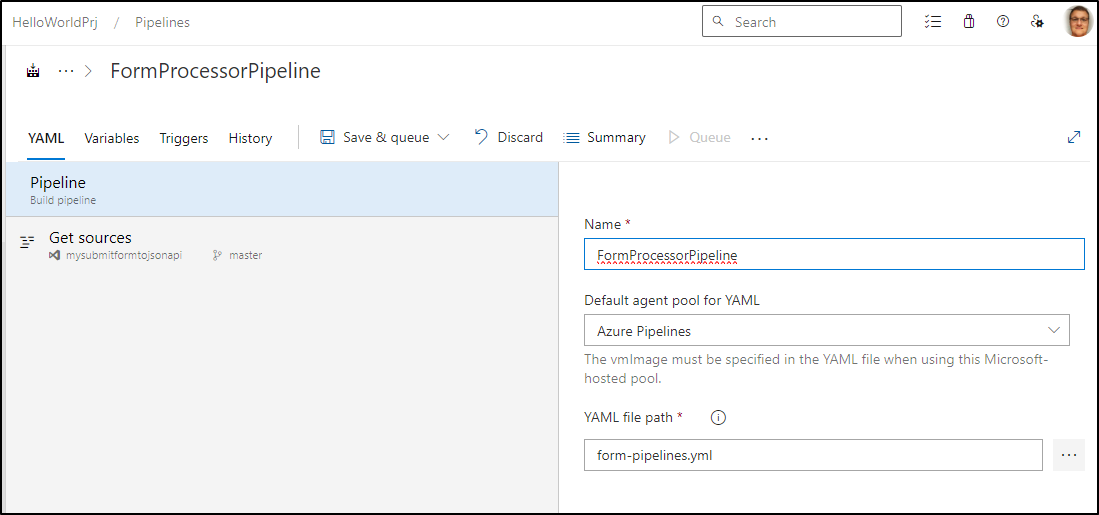

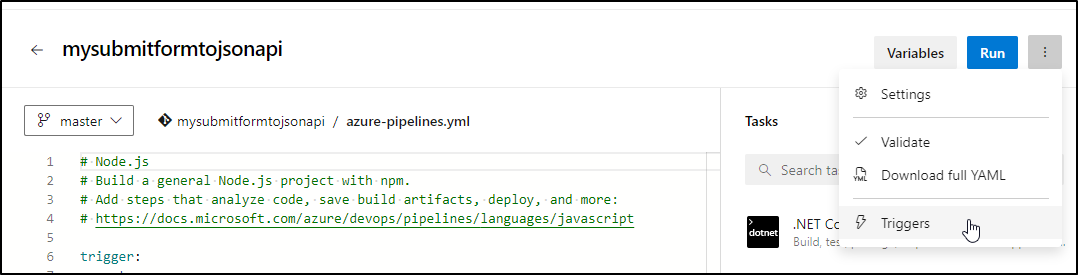

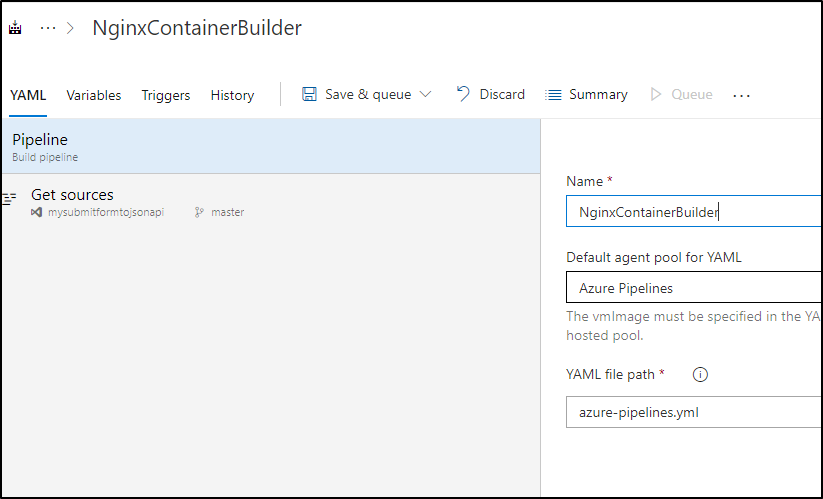

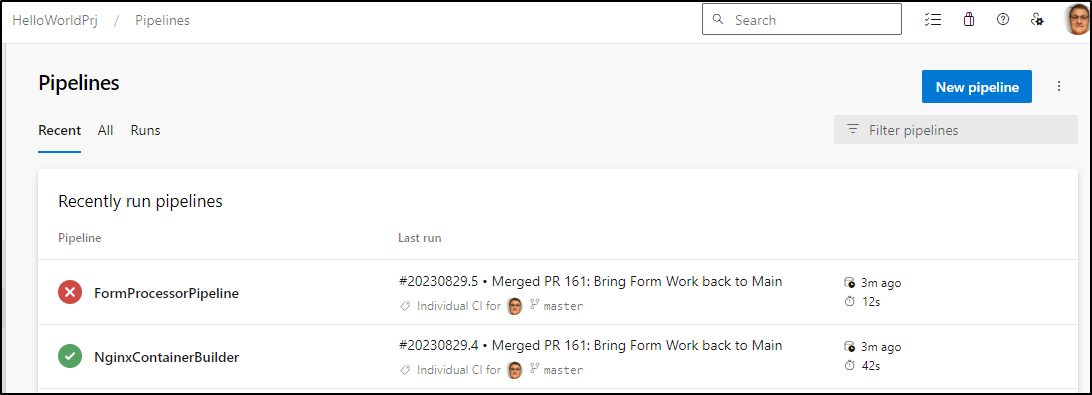

I’m also going to take a moment to name the pipelines something reasonable. We can get there via “triggers” in settings and popping over to the YAML tab

and the other

This small bit of housekeeping makes it a lot easier to read and identify which pipelines are doing what

I’ll start by experimenting with two ways to prefix and test for variables

export SERVERTOPING=`echo "$"`

# IF server to ping, this is an action type

if [ ! -z "${SERVERTOPING}" ]; then

echo -e "\n---\nserver: $SERVERTOPING\n" >> rawDescription

sed -i '1iSTP:' rawSummary

fi

# just another test

if [[ $SERVERTOPING ]]; then

echo -e "\n---\nserverToPing: $SERVERTOPING\n" >> rawDescription

sed -i '1s/^/PST: /' rawSummary

fi
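The two sed expressions above do not behave the same way, which is worth seeing on a sample summary. A small sketch, assuming GNU sed (as on ubuntu-latest); the function names are mine, and they read from stdin instead of editing rawSummary in place:

```shell
#!/usr/bin/env bash
# Sketch: compare the two prefixing styles used in the experiment.
# Assumes GNU sed, which accepts the one-line form of the 'i' command.

prefix_insert_line() {
  # '1iSTP:' inserts a NEW line containing "STP:" before line 1.
  printf '%s\n' "$1" | sed '1iSTP:'
}

prefix_same_line() {
  # '1s/^/PST: /' prepends "PST: " to the existing first line.
  printf '%s\n' "$1" | sed '1s/^/PST: /'
}

prefix_insert_line "ping my box"   # prints "STP:" then "ping my box" on two lines
prefix_same_line "ping my box"     # prints "PST: ping my box"
```

The inserted-line form only ends up as a single-line prefix if a later step joins the lines of the summary with spaces.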

Now let’s test it

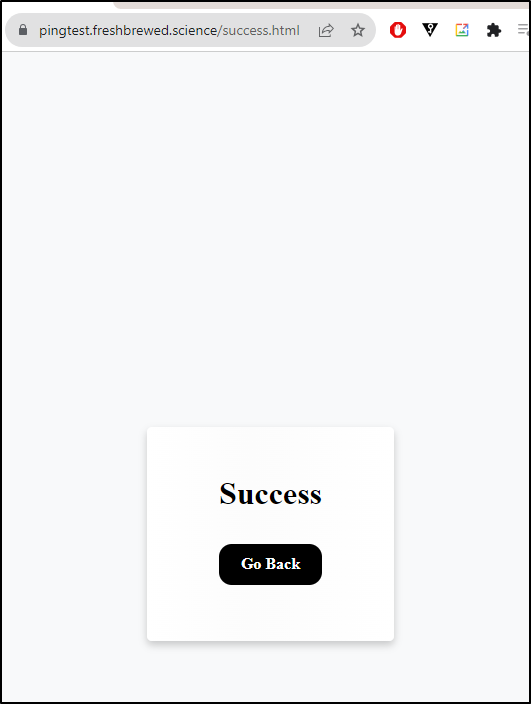

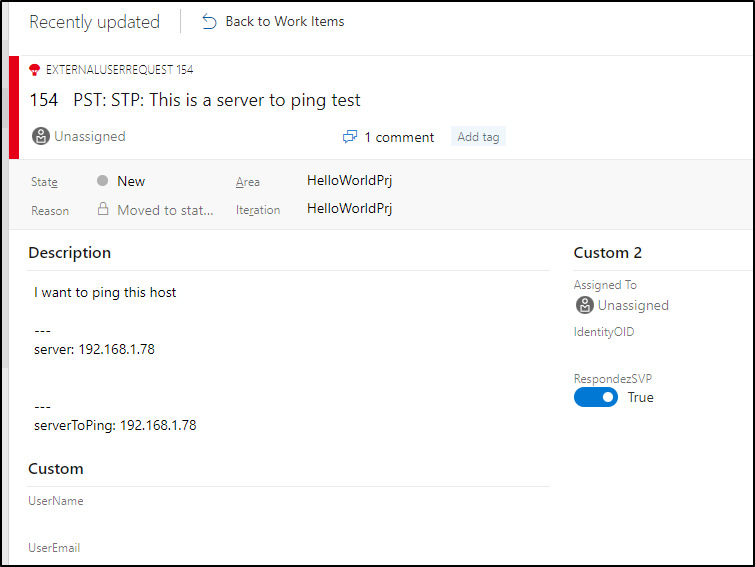

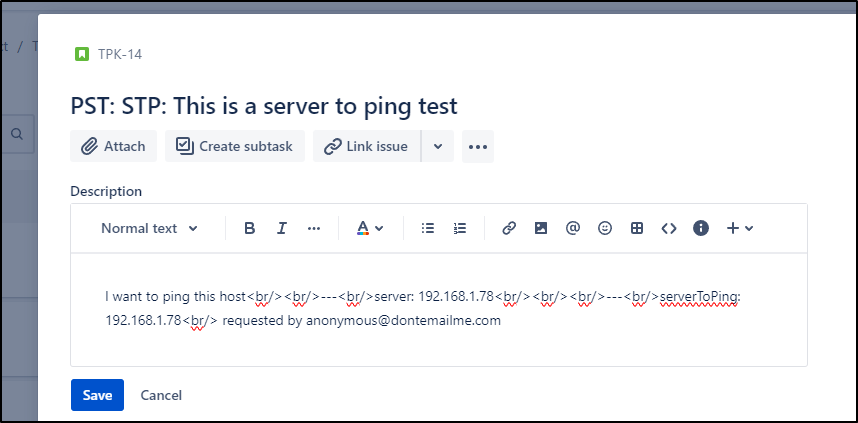

that gives me a success

which showed both checks worked

and the inverse

also does expected

The prefixing on summary worked in JIRA

but the body was a fail

I’ll spare you the lengthy debugging. The short version is that the JSON block for JIRA needs to be on one line with escaped newlines, and the backslash in the sed replacement needs to be double-escaped: sed ':a;N;$!ba;s/\n/\\\\n/g'

# Payload as sent by Web Form

resources:

webhooks:

- webhook: issuecollector

connection: issuecollector

pool:

vmImage: ubuntu-latest

variables:

- group: AZDOAutomations

steps:

- script: |

echo Add other tasks to build, test, and deploy your project.

echo "userId: $"

echo "summary: $"

echo "description: $"

cat >rawDescription <<EOOOOL

$

EOOOOL

cat >rawSummary <<EOOOOT

$

EOOOOT

export SERVERTOPING=`echo "$"`

# IF server to ping, this is an action type

if [ ! -z "${SERVERTOPING}" ]; then

echo -e "\n---\nserver: $SERVERTOPING\n" >> rawDescription

sed -i '1iSTP:' rawSummary

fi

cat rawDescription | sed ':a;N;$!ba;s/\n/<br\/>/g' | sed "s/'/\\\\'/g"> inputDescription

cat rawSummary | sed ':a;N;$!ba;s/\n/ /g' | sed "s/'/\\\\'/g" > inputSummary

echo "input summary: `cat inputSummary`"

echo "input description: `cat inputDescription`"

# if they left "dontemailme" assume they do not want RSVP

export USERTLD=`echo "" | sed 's/^.*@//'`

if [[ "$USERTLD" == "dontemailme.com" ]]; then

export RSVP="--fields Custom.RespondezSVP=false"

else

export RSVP="--fields Custom.RespondezSVP=true"

fi

cat >$(Pipeline.Workspace)/createwi.sh <<EOL

set -x

export AZURE_DEVOPS_EXT_PAT=$(AZDOWIToken)

az boards work-item create --title '`cat inputSummary`' --type ExternalUserRequest --org https://dev.azure.com/princessking --project HelloWorldPrj --discussion 'requested by $' --description '`cat inputDescription | tr -d '\n'`' $RSVP > azresp.json

EOL

chmod u+x $(Pipeline.Workspace)/createwi.sh

echo "createwi.sh:"

cat $(Pipeline.Workspace)/createwi.sh

# Create JIRA Issue

cat >$(Pipeline.Workspace)/createjira.sh <<EOT

set -x

curl --request POST \

--url 'https://freshbrewed.atlassian.net/rest/api/2/issue' \

--user '$(JIRAAPIUSER):$(JIRAAPIKEY)' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' \

--data '{

"fields": {

"components": [],

"description": "requested by $\n`cat rawDescription | sed ':a;N;$!ba;s/\n/\\\\n/g' | sed 's/\"/\\\"/g'`",

"issuetype": {

"id": "10001"

},

"labels": [

"AzDOFORM",

"$"

],

"priority": {

"name": "Medium",

"id": "3"

},

"project": {

"id": "10000"

},

"reporter": {

"id": "618742213ae5230069d074cf"

},

"summary": "`cat inputSummary`"

}

}' > jiraresp

EOT

chmod u+x $(Pipeline.Workspace)/createjira.sh

echo "have a nice day."

displayName: 'Check webhook payload'

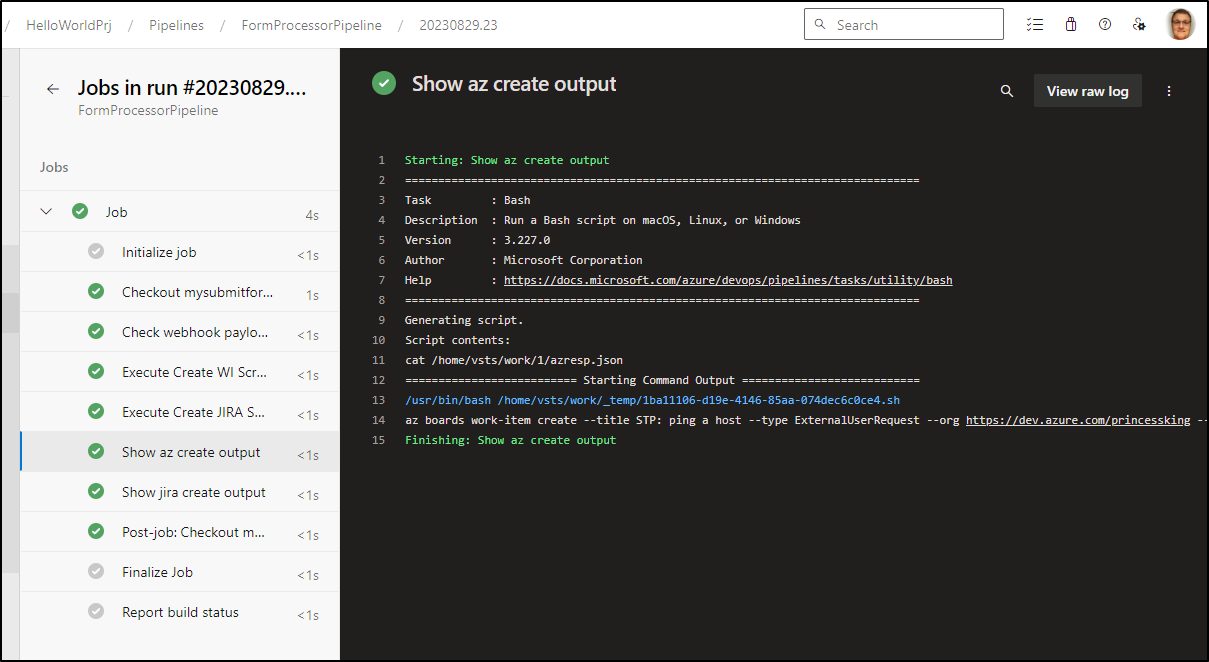

- task: Bash@3

inputs:

filePath: '$(Pipeline.Workspace)/createwi.sh'

workingDirectory: '$(Pipeline.Workspace)'

displayName: 'Execute Create WI Script'

- task: Bash@3

inputs:

filePath: '$(Pipeline.Workspace)/createjira.sh'

workingDirectory: '$(Pipeline.Workspace)'

displayName: 'Execute Create JIRA Script'

- task: Bash@3

inputs:

targetType: 'inline'

script: 'cat $(Pipeline.Workspace)/azresp.json'

workingDirectory: '$(Pipeline.Workspace)'

displayName: 'Show az create output'

- task: Bash@3

inputs:

targetType: 'inline'

script: 'cat $(Pipeline.Workspace)/jiraresp'

workingDirectory: '$(Pipeline.Workspace)'

displayName: 'Show jira create output'
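The newline escaping used for the JIRA payload above can be tried on its own, outside the heredoc (which is what forces the extra level of backslashes in the pipeline). A minimal sketch, assuming GNU sed; `json_escape` is a hypothetical helper, and it also escapes backslashes and double quotes, which goes slightly beyond what the pipeline does:

```shell
#!/usr/bin/env bash
# Sketch: turn multi-line text into a single-line JSON string body.
# json_escape is illustrative; it does not handle tabs or control characters.

json_escape() {
  printf '%s' "$1" \
    | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g' \
    | sed -e ':a' -e 'N' -e '$!ba' -e 's/\n/\\n/g'
}

desc=$'line "one"\nline two'
printf '{ "description": "%s" }\n' "$(json_escape "$desc")"
# { "description": "line \"one\"\nline two" }
```

Outside a heredoc, a single `\\n` in the sed replacement is enough; inside the unquoted heredoc in the pipeline, the shell consumes one level of escaping first, hence `\\\\n`.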

Before the debug data piles up, I recommend doing some cleanup now

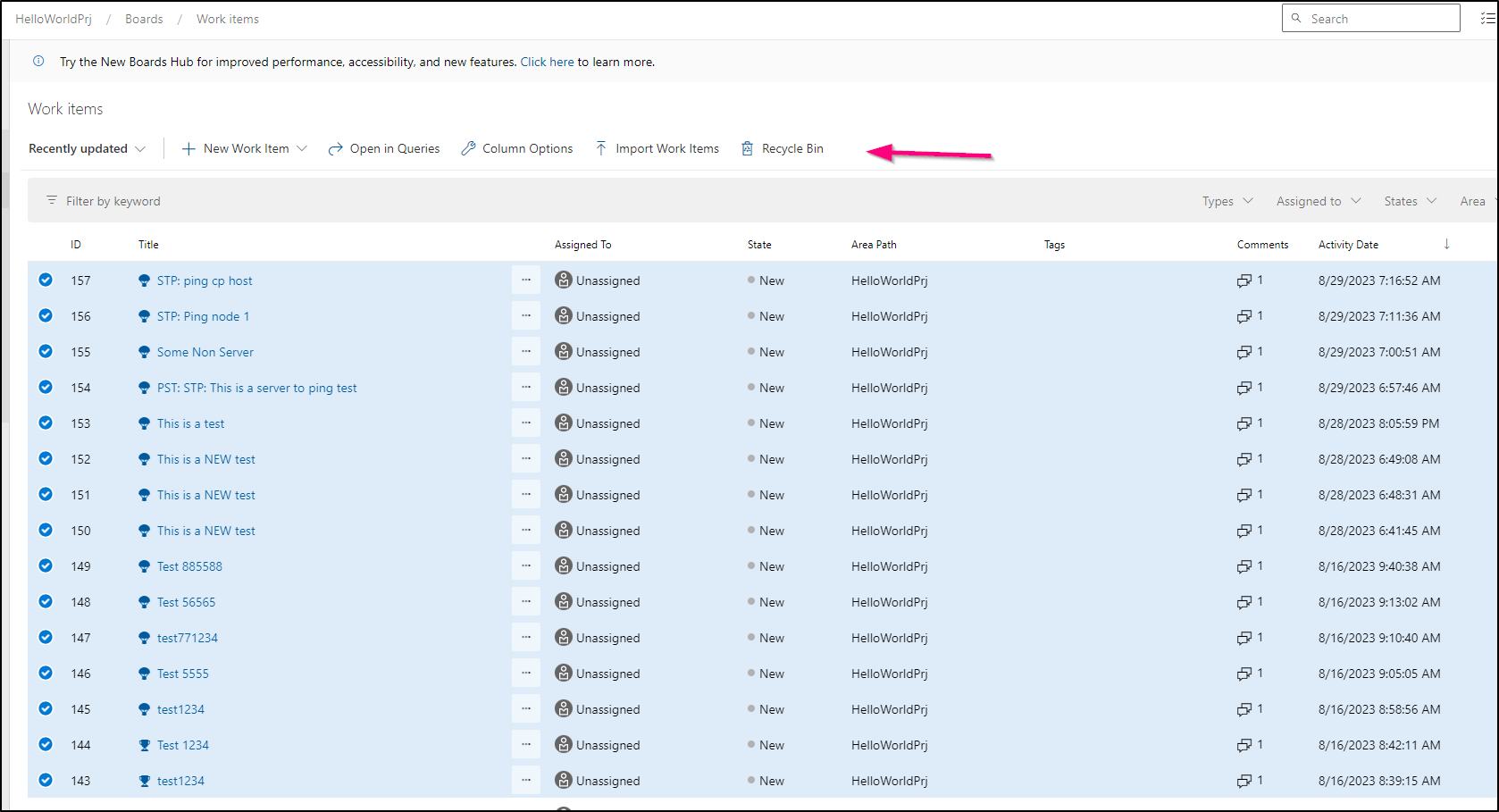

We’ll do one more test so we have an issue to action:

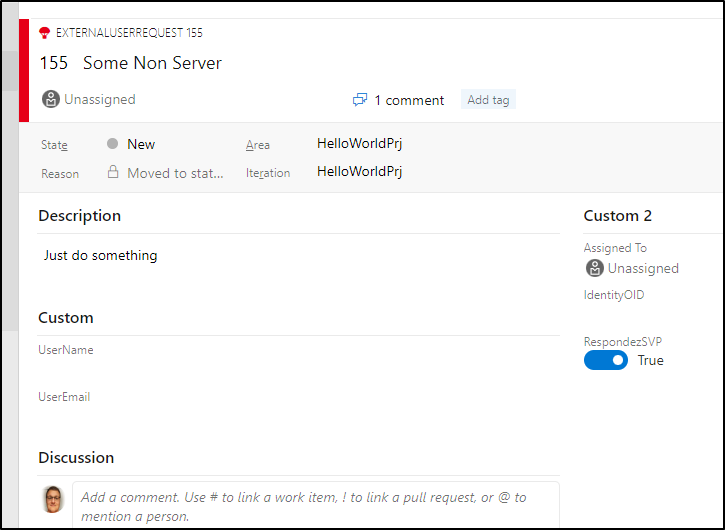

which processed

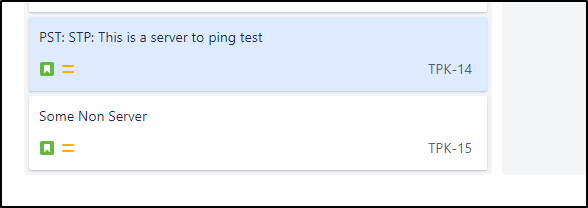

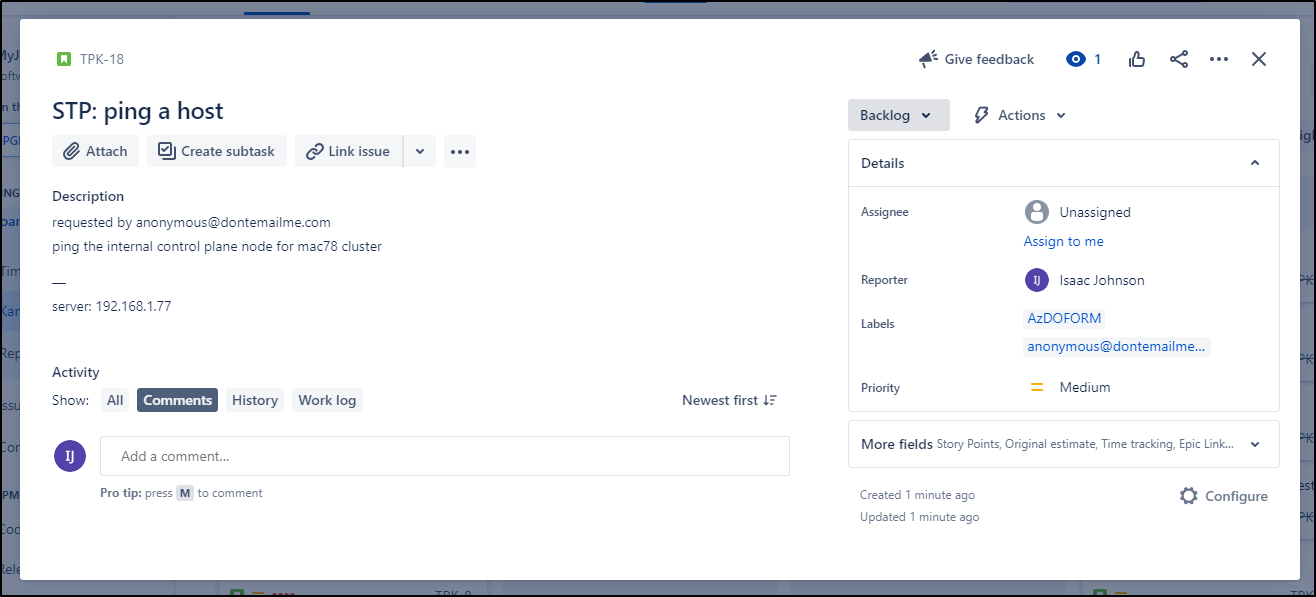

and I got a JIRA ticket

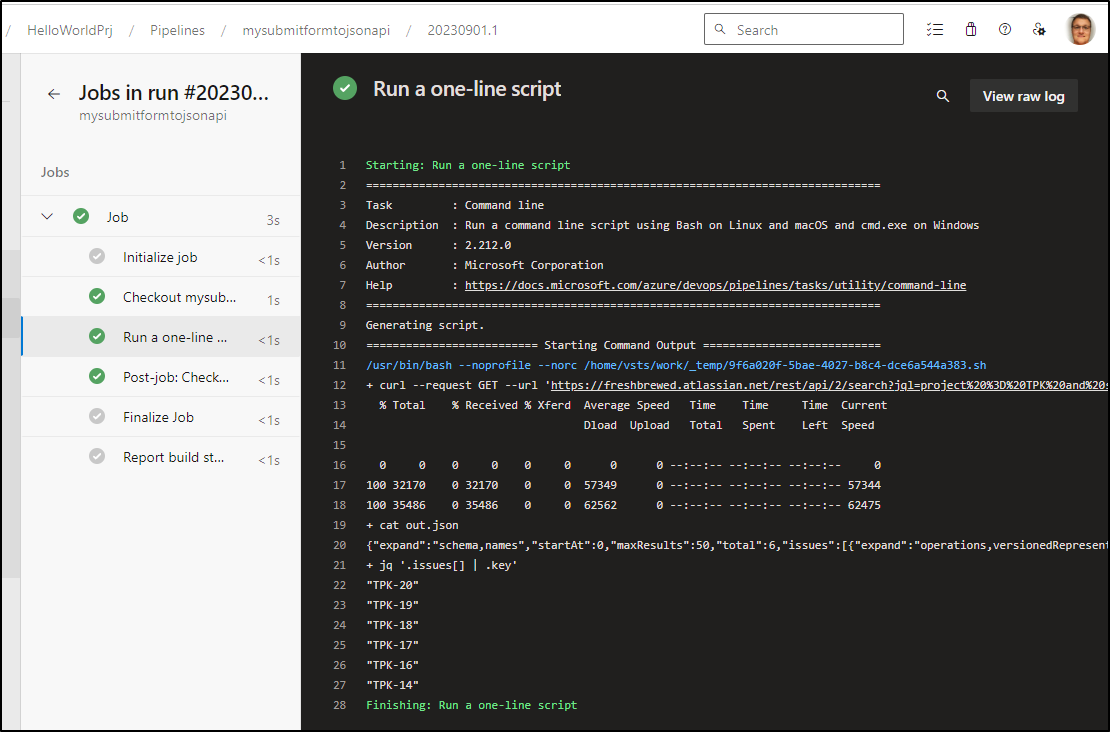

and AZ WI

Next, we need to create a scheduled WI collector that can query JIRA for results to build a matrix.

We can fetch by Issue ID or Key

$ curl --request GET --url 'https://freshbrewed.atlassian.net/rest/api/2/search?jql=project%20%3D%20TPK%20and%20summary%20~%20STP%3A' --user isaac@freshbrewed.science:c28geWVhaCwgaSBhY2NpZGVudGFsbHkgcGFzdGVkIHRoZSBrZXkgZWFybGllci4gZHVtYiB0aGluZyB0byBkby4gYWggd2VsbCwgcm90YXRlIGFuZCBtb3ZlIG9u --header 'Accept: application/json' | jq '.issues[] | .id'

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 35486 0 35486 0 0 72568 0 --:--:-- --:--:-- --:--:-- 72420

"10019"

"10018"

"10017"

"10016"

"10015"

"10013"

$ curl --request GET --url 'https://freshbrewed.atlassian.net/rest/api/2/search?jql=project%20%3D%20TPK%20and%20summary%20~%20STP%3A' --user isaac@freshbrewed.science:c28geWVhaCwgaSBhY2NpZGVudGFsbHkgcGFzdGVkIHRoZSBrZXkgZWFybGllci4gZHVtYiB0aGluZyB0byBkby4gYWggd2VsbCwgcm90YXRlIGFuZCBtb3ZlIG9u --header 'Accept: application/json' | jq '.issues[] | .key'

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 35486 0 35486 0 0 73775 0 --:--:-- --:--:-- --:--:-- 73775

"TPK-20"

"TPK-19"

"TPK-18"

"TPK-17"

"TPK-16"

"TPK-14"

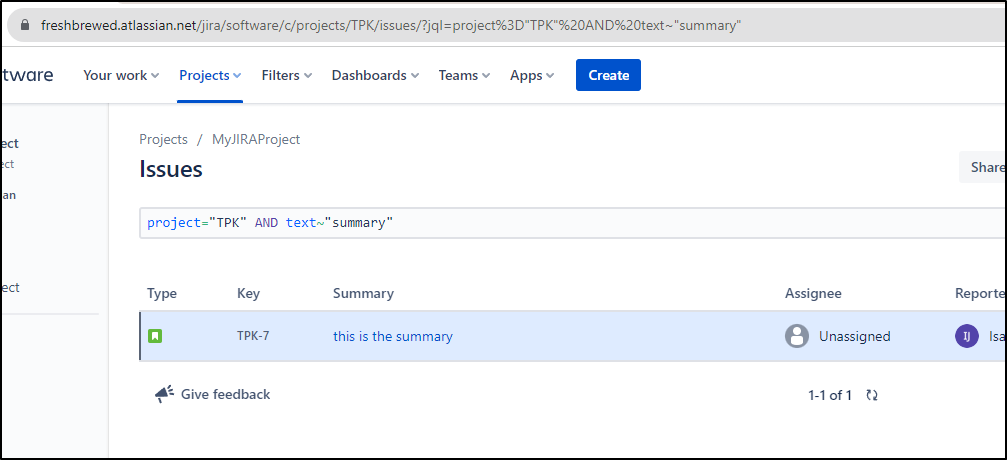

I’ll create a pipeline to also test the REST call

trigger:

- wia-jiratix

variables:

- group: AZDOAutomations

pool:

vmImage: ubuntu-latest

steps:

- script: |

set -x

curl --request GET \

--url 'https://freshbrewed.atlassian.net/rest/api/2/search?jql=project%20%3D%20TPK%20and%20summary%20~%20STP%3A' \

--user '$(JIRAAPIUSER):$(JIRAAPIKEY)' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' > out.json

cat out.json

cat out.json | jq '.issues[] | .key'

displayName: 'Run a one-line script'

That string project%3D"TPK"%20AND%20text~"summary" came from doing a quick JQL search; it’s just basic URL encoding.
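To build such a query string without copying it from the browser, a pure-bash percent-encoder is enough. This is a sketch under my own assumptions: `urlencode` is a hypothetical helper, and it is stricter than the string above in that it also encodes the double quotes as %22.

```shell
#!/usr/bin/env bash
# Sketch: percent-encode a JQL expression for use in a ?jql= query parameter.
# urlencode is illustrative; unreserved characters (letters, digits, . ~ _ -) pass through.

urlencode() {
  local s="$1" out="" c i
  local LC_ALL=C   # treat the input byte by byte
  for (( i = 0; i < ${#s}; i++ )); do
    c="${s:i:1}"
    case "$c" in
      [a-zA-Z0-9.~_-]) out+="$c" ;;
      *) printf -v c '%%%02X' "'$c"; out+="$c" ;;
    esac
  done
  printf '%s\n' "$out"
}

jql='project = TPK AND summary ~ "STP:"'
echo "https://freshbrewed.atlassian.net/rest/api/2/search?jql=$(urlencode "$jql")"
# .../search?jql=project%20%3D%20TPK%20AND%20summary%20~%20%22STP%3A%22
```

Keeping the JQL readable in a variable and encoding it at call time makes later tweaks, such as adding a status filter, much less error-prone.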

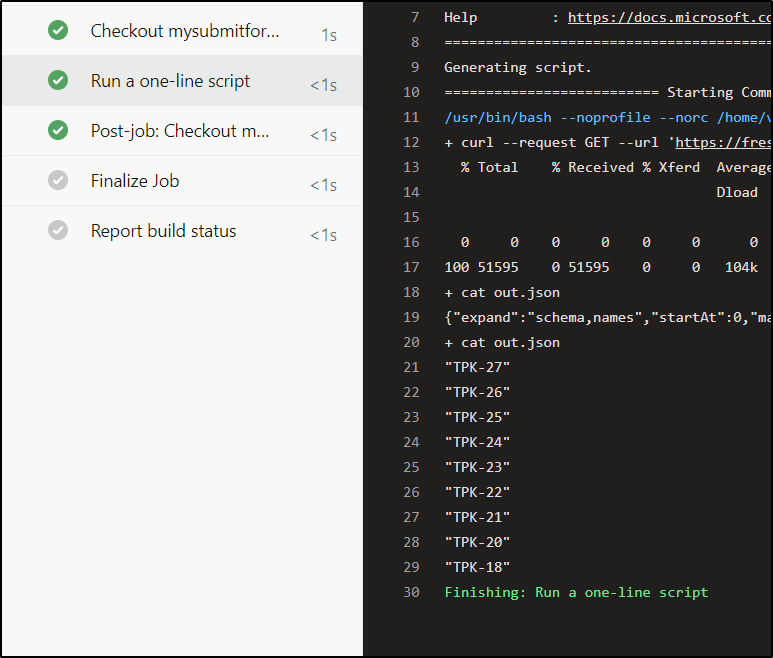

We can now test

I’ll tweak it just a bit more to avoid completed work

trigger:

- wia-jiratix

variables:

- group: AZDOAutomations

pool:

vmImage: ubuntu-latest

steps:

- script: |

set -x

curl --request GET \

--url 'https://freshbrewed.atlassian.net/rest/api/2/search?jql=project%3D"TPK"%20AND%20text~"summary"%20and%20status%20IN%20%28Backlog%2C%20"In%20Progress"%29' \

--user '$(JIRAAPIUSER):$(JIRAAPIKEY)' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' > out.json

cat out.json

cat out.json | jq '.issues[] | .key'

displayName: 'Run a one-line script'

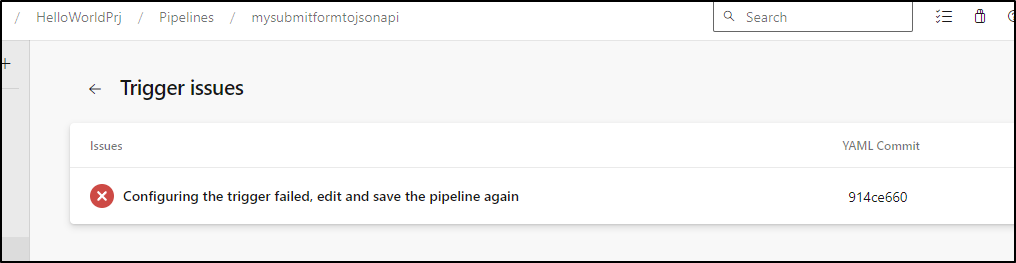

I also noted the error from before about tying to a branch

I’ll use an include syntax which is a bit more permissive

trigger:

branches:

include:

- wia-jiratix

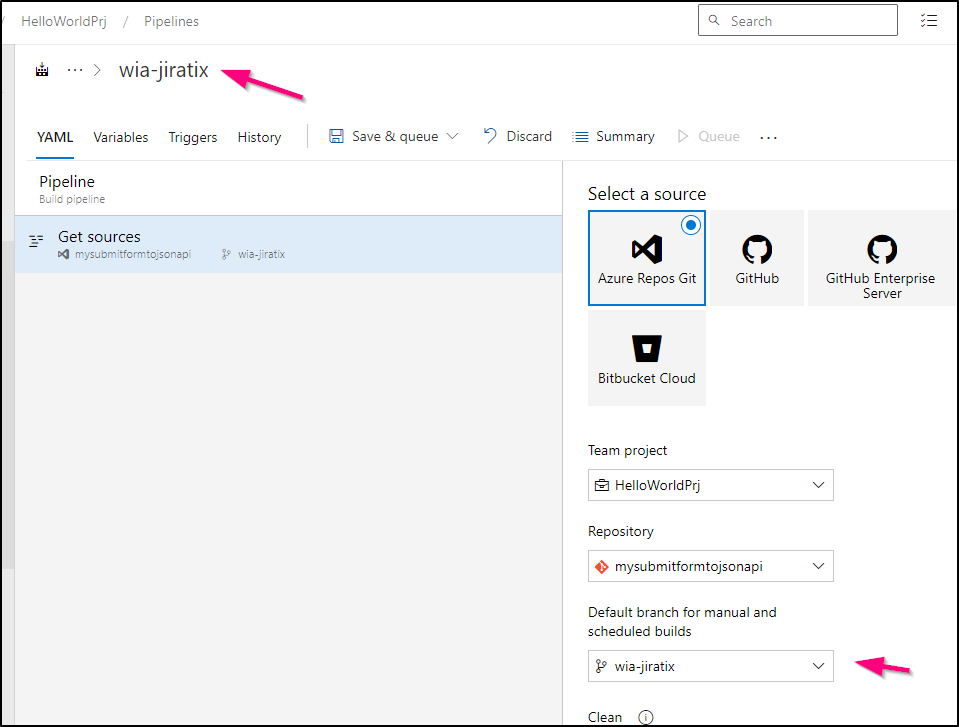

Also, just as a bit of housekeeping, I’ll set the default branch and rename the pipeline to something more reasonable

I also noted my form processor pipeline, set to trigger on webhooks, was errantly kicking off on all branches. Let’s limit that too

resources:

webhooks:

- webhook: issuecollector

connection: issuecollector

trigger:

branches:

include:

- main

- master

exclude:

- wia-*

pr:

branches:

include:

- main

- master

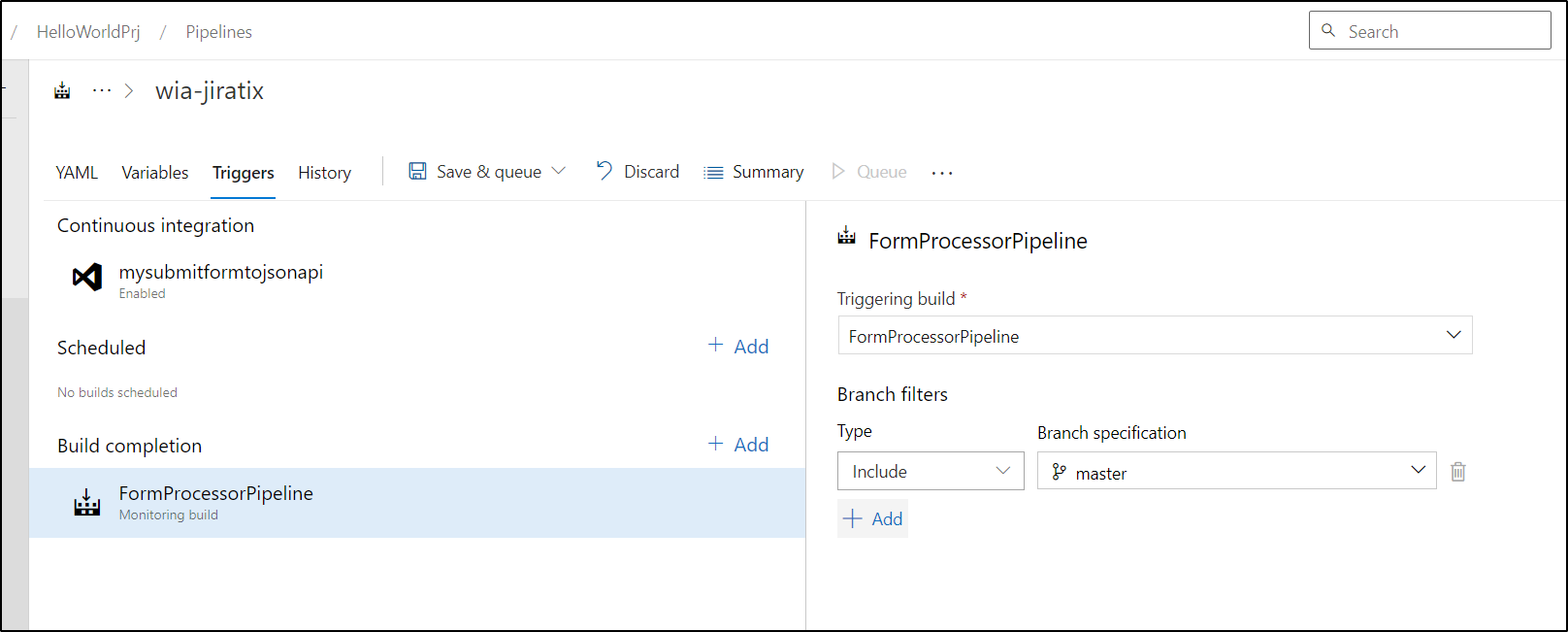

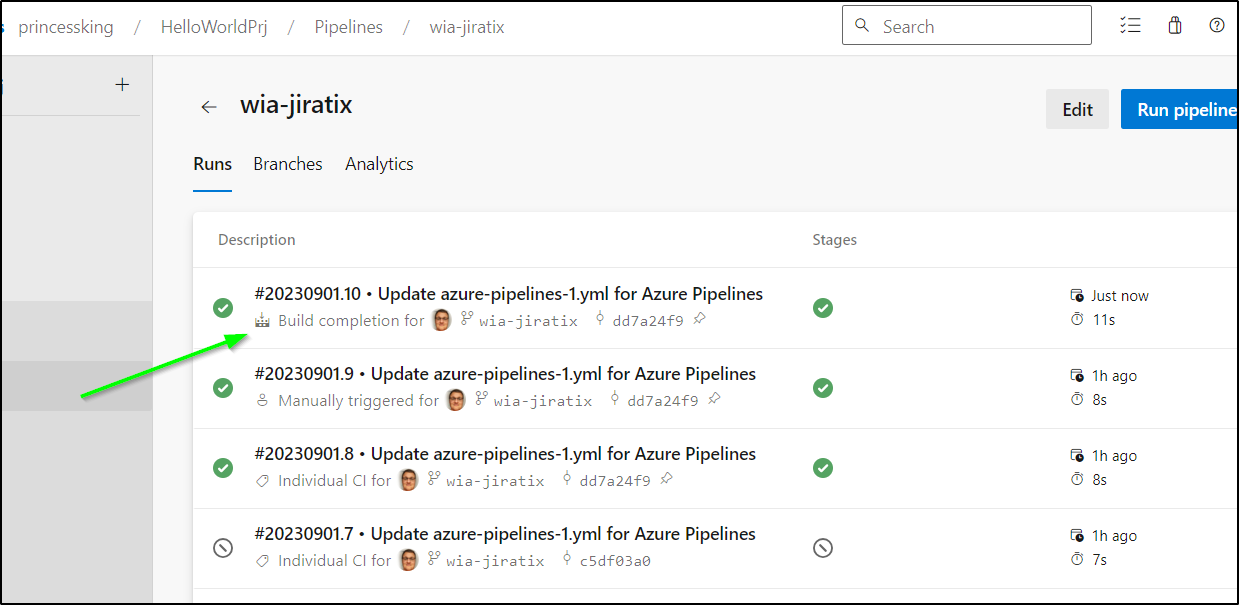

However, it would be nice if we just assumed that when the form pipeline was done, it would be a smart idea to run our WIA checks, regardless of the schedule (to be created).

We can add a resource block to kick off IF the FormProcessor pipeline ran, but only if master or main - I don’t need to run the WIA pipeline if it was a PR or a test run

resources:

pipelines:

- pipeline: FormProcessorPipeline

source: FormProcessorPipeline

project: HelloWorldPrj

trigger:

branches:

include:

- main

- master

trigger:

branches:

include:

- wia-jiratix

variables:

- group: AZDOAutomations

pool:

vmImage: ubuntu-latest

steps:

- script: |

set -x

curl --request GET \

--url 'https://freshbrewed.atlassian.net/rest/api/2/search?jql=project%3D"TPK"%20AND%20text~"summary"%20and%20status%20IN%20%28Backlog%2C%20"In%20Progress"%29' \

--user '$(JIRAAPIUSER):$(JIRAAPIKEY)' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' > out.json

cat out.json

cat out.json | jq '.issues[] | .key'

displayName: 'Run a one-line script'

However, while this should all work, in my case it just didn’t. I had to use manual triggers to exclude wia-jiratix in the FormProcessor, and the wia-jiratix pipeline never triggered on the source pipeline, even with this block

trigger:

branches:

include:

- wia-jiratix

resources:

pipelines:

- pipeline: FormProcessorPipeline

source: FormProcessorPipeline

trigger:

branches:

include:

- main

- master

- refs/heads/main

- refs/heads/master

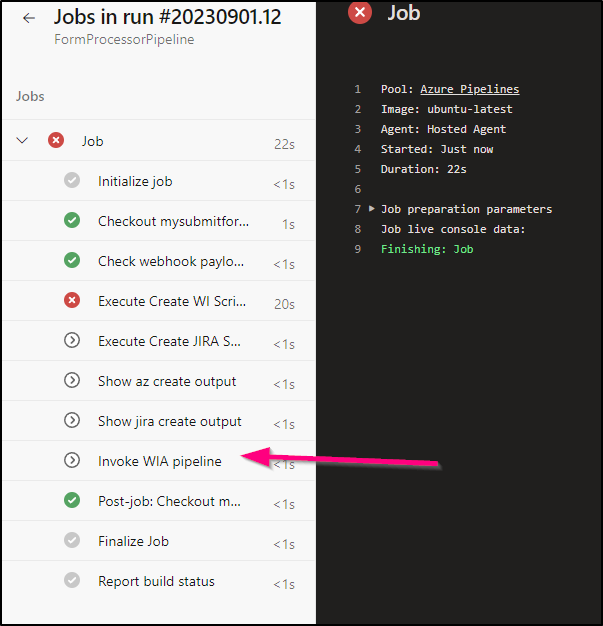

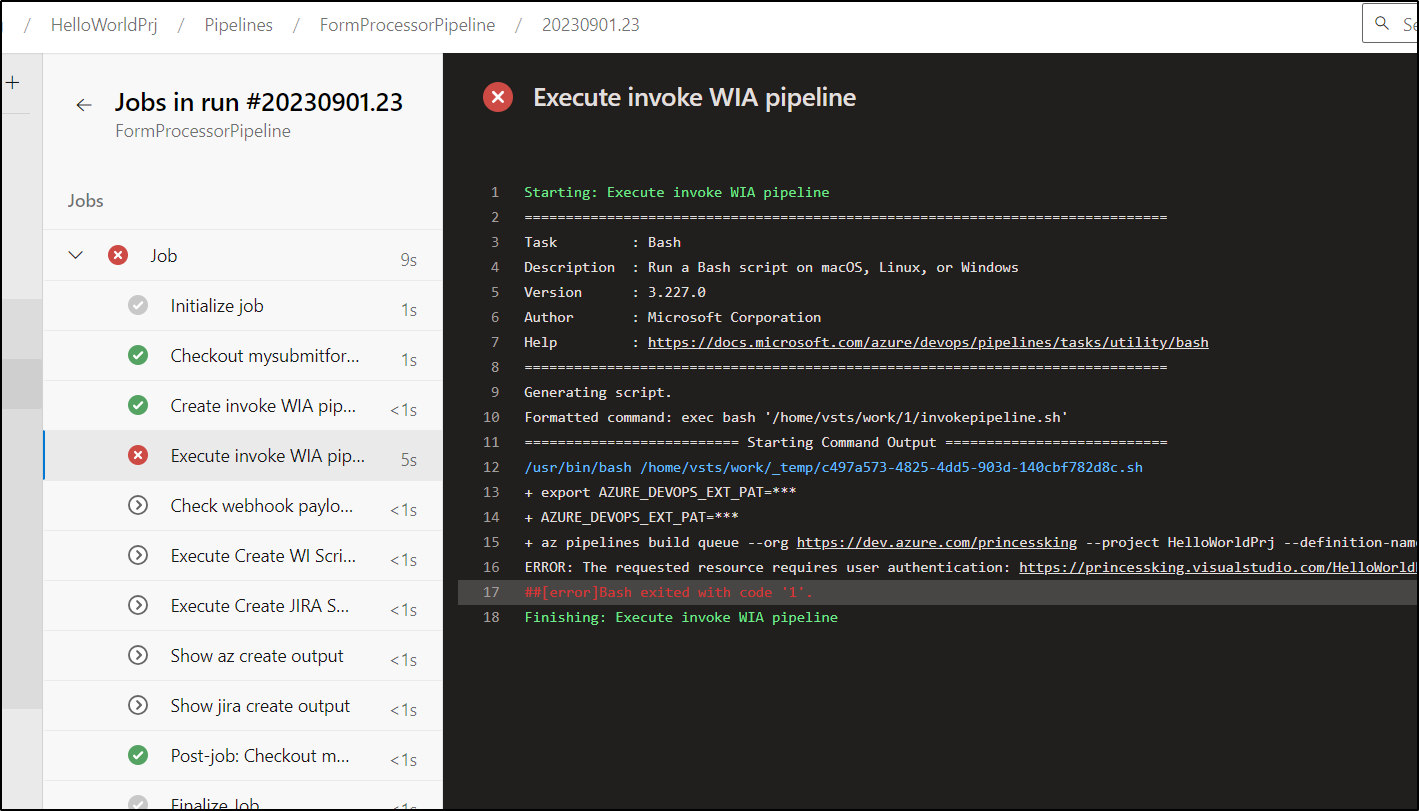

Where there is a will, there is a way. We can instead tell our Form Processor, when done, to kick off the WIA (push instead of pull) using az pipelines build queue

- task: Bash@3

inputs:

targetType: 'inline'

script: |

export AZURE_DEVOPS_EXT_PAT=$(AZDOWIToken)

az pipelines build queue --org https://dev.azure.com/princessking --project HelloWorldPrj --definition-name wia-jiratix --branch wia-jiratix

workingDirectory: '$(Pipeline.Workspace)'

displayName: 'Invoke WIA pipeline'

The nice part is that this step will only run if the earlier steps in the pipeline succeed

I’m now convinced there is a bug in their Azure CLI. I even copied the plain text key in and ran it, and it errored. The exact same command worked locally in Ubuntu

steps:

- task: Bash@3

inputs:

targetType: 'inline'

script: |

cat >$(Pipeline.Workspace)/invokepipeline.sh <<EOL

set -x

export AZURE_DEVOPS_EXT_PAT=q************was plain text************q

az pipelines build queue --org https://dev.azure.com/princessking --project HelloWorldPrj --definition-name wia-jiratix --branch wia-jiratix

EOL

chmod u+x $(Pipeline.Workspace)/invokepipeline.sh

workingDirectory: '$(Pipeline.Workspace)'

displayName: 'Create invoke WIA pipeline'

- task: Bash@3

inputs:

filePath: '$(Pipeline.Workspace)/invokepipeline.sh'

workingDirectory: '$(Pipeline.Workspace)'

displayName: 'Execute invoke WIA pipeline'

Next, I can try a trigger-on-completion override in the Classic pipeline settings

Thankfully that worked

I realized my JQL was off. It should be project="TPK" AND text~"STP:" and status IN (Backlog, "In Progress")

That looks much better

I’ll now build out a Matrix setup. However, since the az pipelines CLI seems busted, I’ll have to wait on the semaphore

# Starter pipeline

# Start with a minimal pipeline that you can customize to build and deploy your code.

# Add steps that build, run tests, deploy, and more:

# https://aka.ms/yaml

resources:

pipelines:

- pipeline: FormProcessorPipeline

source: FormProcessorPipeline

trigger:

branches:

include:

- main

- master

- refs/heads/main

- refs/heads/master

variables:

- group: AZDOAutomations

pool:

vmImage: ubuntu-latest

stages:

- stage: parse

jobs:

- job: parse_work_item

pool:

vmImage: 'ubuntu-latest'

steps:

- script: |

set -x

curl --request GET \

--url 'https://freshbrewed.atlassian.net/rest/api/2/search?jql=project%3D"TPK"%20AND%20text~"STP%3A"%20and%20status%20IN%20%28Backlog%2C%20"In%20Progress"%29' \

--user '$(JIRAAPIUSER):$(JIRAAPIKEY)' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' > out.json

# Just get IDs

cat out.json | jq -r '.issues[] | .key' | tr '\n' ',' > ids.txt

cat out.json | jq '.issues[] | .key'

displayName: 'Run a one-line script'

- bash: |

#!/bin/bash

set +x

# take comma sep list and set a var (remove trailing comma if there)

echo "##vso[task.setvariable variable=WISTOPROCESS]"`cat ids.txt | sed 's/,$//'` > t.o

set -x

cat t.o

displayName: 'Set WISTOPROCESS'

- bash: |

set -x

export

set +x

export IFS=","

read -a strarr <<< "$(WISTOPROCESS)"

# Print each value of the array by using the loop

export tval="{"

for val in "${strarr[@]}";

do

export tval="${tval}'process$val':{'wi':'$val'}, "

done

set -x

echo "... task.setvariable variable=mywis;isOutput=true]$tval" | sed 's/..$/}/'

set +x

if [[ "$(WISTOPROCESS)" == "" ]]; then

echo "##vso[task.setvariable variable=mywis;isOutput=true]{}" > ./t.o

else

echo "##vso[task.setvariable variable=mywis;isOutput=true]$tval" | sed 's/..$/}/' > ./t.o

fi

set -x

cat ./t.o

name: mtrx

displayName: 'create mywis var'

- job: processor

dependsOn: parse_work_item

pool:

name: 'k3s77-self-hosted'

strategy:

matrix: $[ dependencies.parse_work_item.outputs['mtrx.mywis']]

steps:

- bash: |

if [ "$(wi)" == "" ]; then

echo "NOTHING TO DO YO"

echo "##vso[task.setvariable variable=skipall;]yes"

exit

else

echo "PROCESS THIS WI $(wi)"

echo "##vso[task.setvariable variable=skipall;]no"

fi

echo "my item: $(wi)"
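The matrix-building step above is easier to reason about as a standalone function. This sketch mirrors the same output format (single-quoted keys, one `process<KEY>` entry per issue); `build_matrix` is my own name for it, and in a pipeline its output would still need the `##vso[task.setvariable ...]` prefix shown above.

```shell
#!/usr/bin/env bash
# Sketch: turn a comma-separated list of issue keys into the matrix object
# consumed by the processor job. build_matrix is an illustrative helper.

build_matrix() {
  local keys="$1" out="" key
  local -a arr
  # No keys means an empty matrix.
  [ -z "$keys" ] && { echo '{}'; return; }
  IFS=',' read -r -a arr <<< "$keys"
  for key in "${arr[@]}"; do
    out+="'process${key}':{'wi':'${key}'}, "
  done
  # Drop the trailing ", " and close the object.
  echo "{${out%, }}"
}

build_matrix "TPK-20,TPK-21"
# {'processTPK-20':{'wi':'TPK-20'}, 'processTPK-21':{'wi':'TPK-21'}}
build_matrix ""
# {}
```

Trimming the separator with `${out%, }` avoids the second sed pass and makes the empty case explicit.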

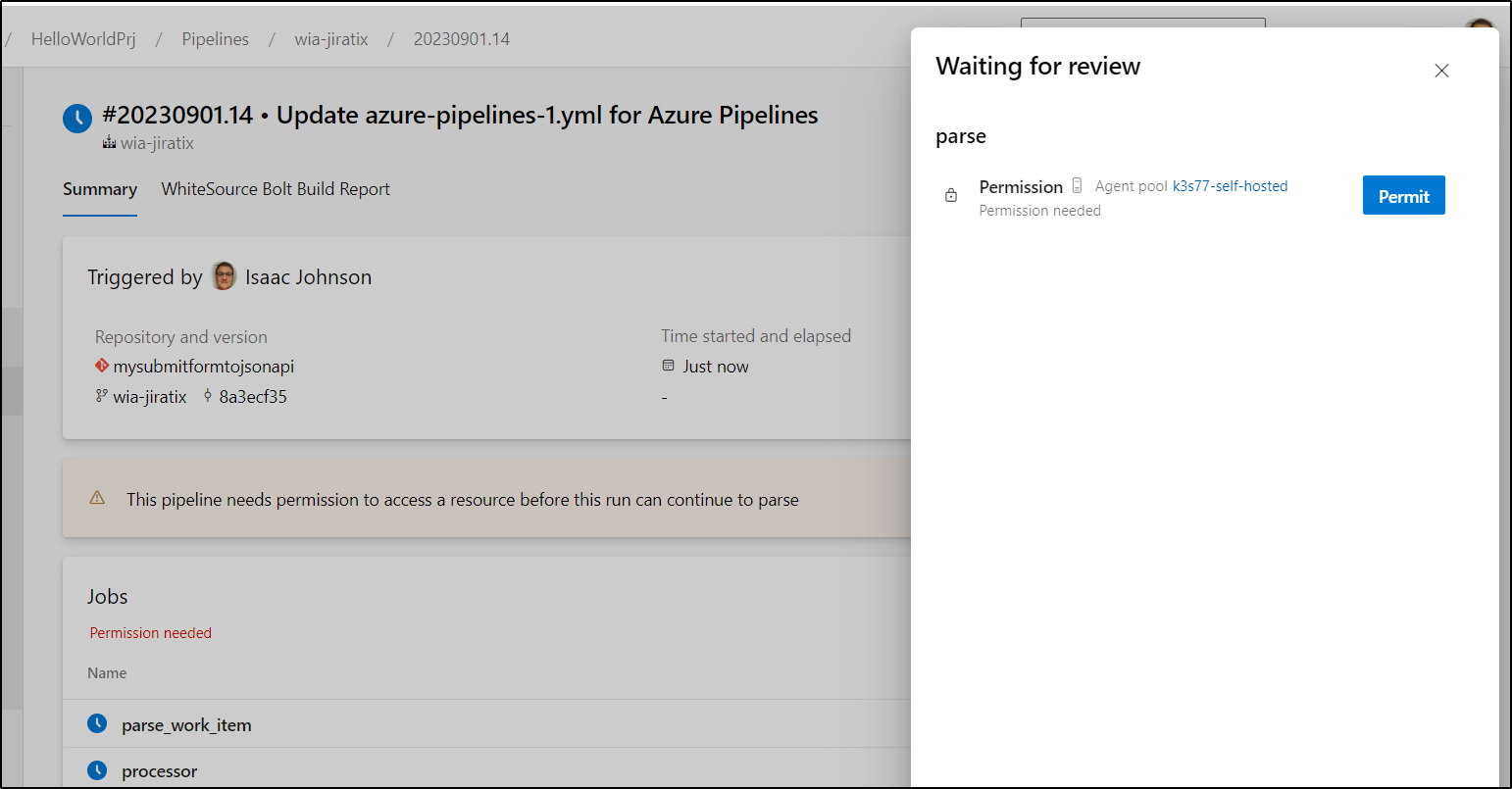

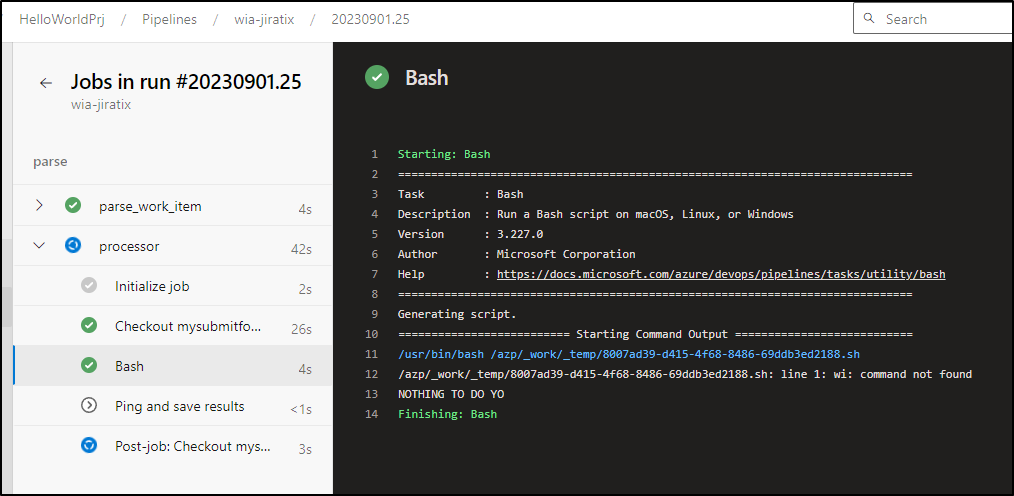

Once run, I’ll need to authorize it to use my private agent pool

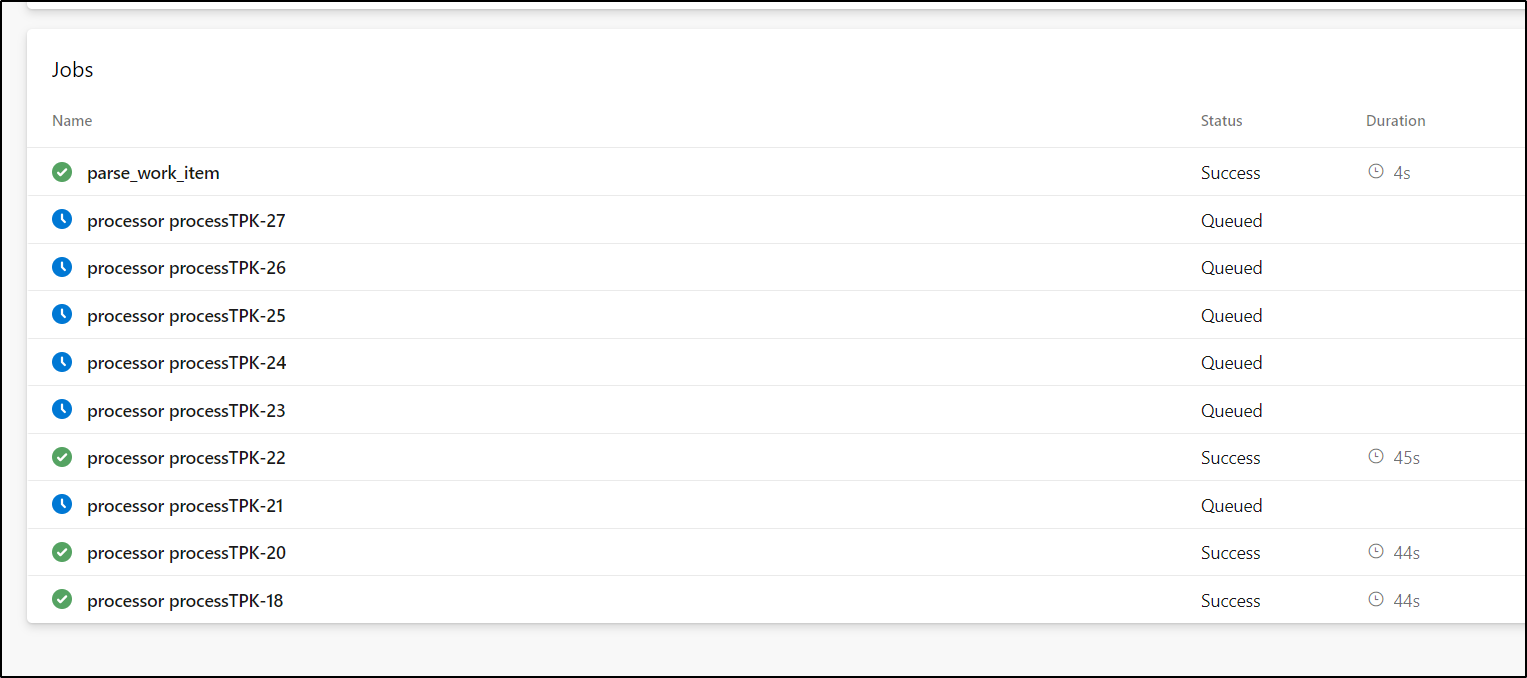

This then kicked off parallel runs (only limited by my max number of private agents in parallel)

After some iterations, I nailed down the steps to add a comment then move to In Progress:

- job: processor

dependsOn: parse_work_item

pool:

name: 'k3s77-self-hosted'

strategy:

matrix: $[ dependencies.parse_work_item.outputs['mtrx.mywis']]

steps:

- bash: |

if [ "$(wi)" == "" ]; then

echo "NOTHING TO DO YO"

echo "##vso[task.setvariable variable=skipall;]yes"

exit

else

echo "PROCESS THIS WI $(wi)"

echo "##vso[task.setvariable variable=skipall;]no"

fi

echo "my item: $(wi)"

- bash: |

set -x

curl --request GET \

--url 'https://freshbrewed.atlassian.net/rest/api/2/issue/$(wi)' \

--user '$(JIRAAPIUSER):$(JIRAAPIKEY)' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' > out.json

cat out.json | jq .fields.description | grep 'server: ' | sed 's/^.*server: \(.*\)\\.*/\1/' | tr -d '\n' > t3

export IPTOPING=`cat t3`

ping -c 1 $IPTOPING > ./t.o

echo '{ "body": "' | tr -d '\n' > ./tt && cat t.o | sed ':a;N;$!ba;s/\n/\\n/g' | tr -d '\n' >> ./tt && echo '"}' >> ./tt

cat ./tt

curl --request POST \

--url 'https://freshbrewed.atlassian.net/rest/api/2/issue/$(wi)/comment' \

--user '$(JIRAAPIUSER):$(JIRAAPIKEY)' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' \

-d @./tt

# move to in progress

curl --request POST \

--url 'https://freshbrewed.atlassian.net/rest/api/2/issue/$(wi)/transitions' \

--user '$(JIRAAPIUSER):$(JIRAAPIKEY)' \

--header 'Accept: application/json' \

--header 'Content-Type: application/json' \

--data '{

"transition": {

"id": "31"

},

"update": {

"comment": [

{

"add": {

"body": "done"

}

}

]

}

}'

displayName: 'Ping and save results'

condition: eq(variables['skipall'], 'no')

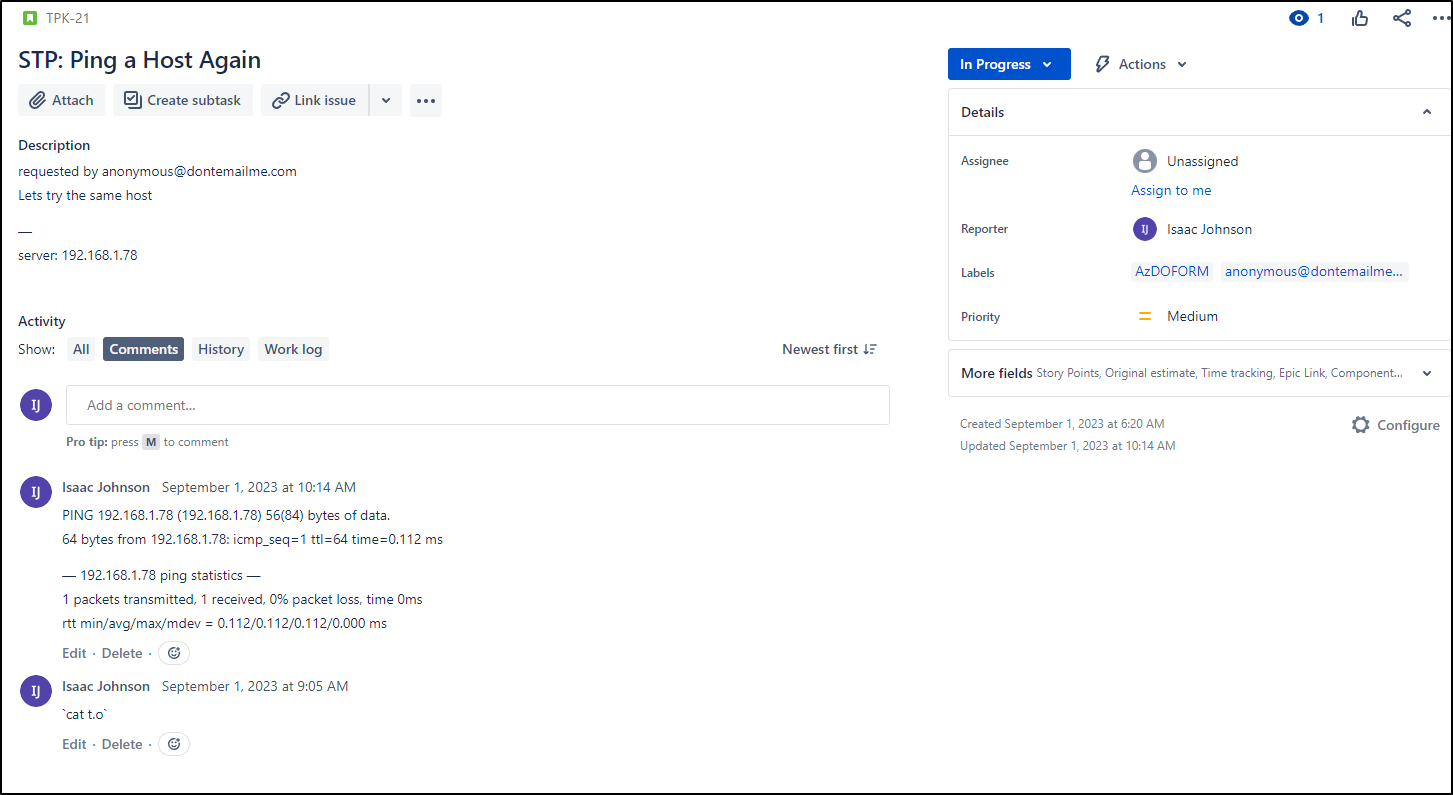

I could see an example run here
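Because the value after `server:` comes straight from a public form and ends up as an argument to `ping`, it may be worth validating it first. The following is a sketch of that idea, not what the pipeline above does: both function names are hypothetical, and the input here is the plain description text with real newlines rather than the jq-quoted JSON string.

```shell
#!/usr/bin/env bash
# Sketch: pull the "server: <host>" line out of an issue description and
# check that it looks like a hostname or IP before using it.

extract_server() {
  # Print whatever follows "server: " on the first matching line.
  printf '%s\n' "$1" | sed -n 's/^server: *//p' | head -n1
}

is_safe_host() {
  # Letters, digits, dots and hyphens only, and no leading '-'
  # (which ping would treat as an option).
  [[ "$1" =~ ^[A-Za-z0-9.-]+$ ]] && [[ "$1" != -* ]]
}

desc=$'please check this box\n---\nserver: 192.168.1.1\n'
host=$(extract_server "$desc")
if is_safe_host "$host"; then
  echo "would run: ping -c 1 $host"
else
  echo "refusing to ping: '$host'" >&2
fi
```

A rejected value could be reported back as a comment on the ticket instead of being passed to the shell.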

I want to set to Done so they finally complete and don’t re-run. We can fetch our valid states

$ curl --request GET --url https://freshbrewed.atlassian.net/rest/api/2/issue/TPK-18/transitions --user isaac@freshbrewed.science:c28geWVhaCwgaSBhY2NpZGVudGFsbHkgcGFzdGVkIHRoZSBrZXkgZWFybGllci4gZHVtYiB0aGluZyB0byBkby4gYWggd2VsbCwgcm90YXRlIGFuZCBtb3ZlIG9u --header 'Accept: application/json' --header 'Content-Type: application/json' | jq '.transitions[] | {name: .name, id: .id}'

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 2002 0 2002 0 0 6335 0 --:--:-- --:--:-- --:--:-- 6335

{

"name": "Backlog",

"id": "11"

}

{

"name": "Selected for Development",

"id": "21"

}

{

"name": "In Progress",

"id": "31"

}

{

"name": "Done",

"id": "41"

}
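With those IDs known, the transition payload can be built by name instead of hard-coding a number. A sketch assuming bash 4+ for associative arrays; the table is copied from the output above and is specific to this project’s workflow, and `transition_payload` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: map transition names to the IDs returned for this project
# and emit the JSON body for the /transitions endpoint.

declare -A TRANSITIONS=(
  ["Backlog"]=11
  ["Selected for Development"]=21
  ["In Progress"]=31
  ["Done"]=41
)

transition_payload() {
  local id="${TRANSITIONS[$1]:-}"
  [ -n "$id" ] || { echo "unknown transition: $1" >&2; return 1; }
  printf '{"transition":{"id":"%s"}}\n' "$id"
}

transition_payload "Done"
# {"transition":{"id":"41"}}
```

The output can be passed to curl with `--data`, in place of the inline JSON used in the pipeline.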

I’ll change the pipeline one last time to see it go

$ git diff azure-pipelines-1.yml

diff --git a/azure-pipelines-1.yml b/azure-pipelines-1.yml

index 87aedef..36cedbd 100644

--- a/azure-pipelines-1.yml

+++ b/azure-pipelines-1.yml

@@ -134,7 +134,7 @@ stages:

--header 'Content-Type: application/json' \

--data '{

"transition": {

- "id": "31"

+ "id": "41"

},

"update": {

"comment": [

Here we can see it in action

We can watch as they process and mark issues as done

Another way I can see progress is to check the logs on the AzDO agent in Kubernetes

$ kubectl logs azdevops-deployment-77f9c4df6b-n4rp9 | tail -n10

2023-09-01 15:23:57Z: Job processor processTPK-20 completed with result: Succeeded

2023-09-01 15:23:59Z: Running job: processor processTPK-21

2023-09-01 15:25:17Z: Job processor processTPK-21 completed with result: Succeeded

2023-09-01 15:25:19Z: Running job: processor processTPK-22

2023-09-01 15:26:39Z: Job processor processTPK-22 completed with result: Succeeded

2023-09-01 15:26:40Z: Running job: processor processTPK-26

2023-09-01 15:28:08Z: Job processor processTPK-26 completed with result: Succeeded

2023-09-01 15:28:10Z: Running job: processor processTPK-23

2023-09-01 15:29:31Z: Job processor processTPK-23 completed with result: Succeeded

2023-09-01 15:29:32Z: Running job: processor processTPK-24

End to End

Let’s do one last end to end.

We’ll ask for a server to be pinged. This should create a ticket in JIRA and then subsequently kick off a pipeline to process the ticket.

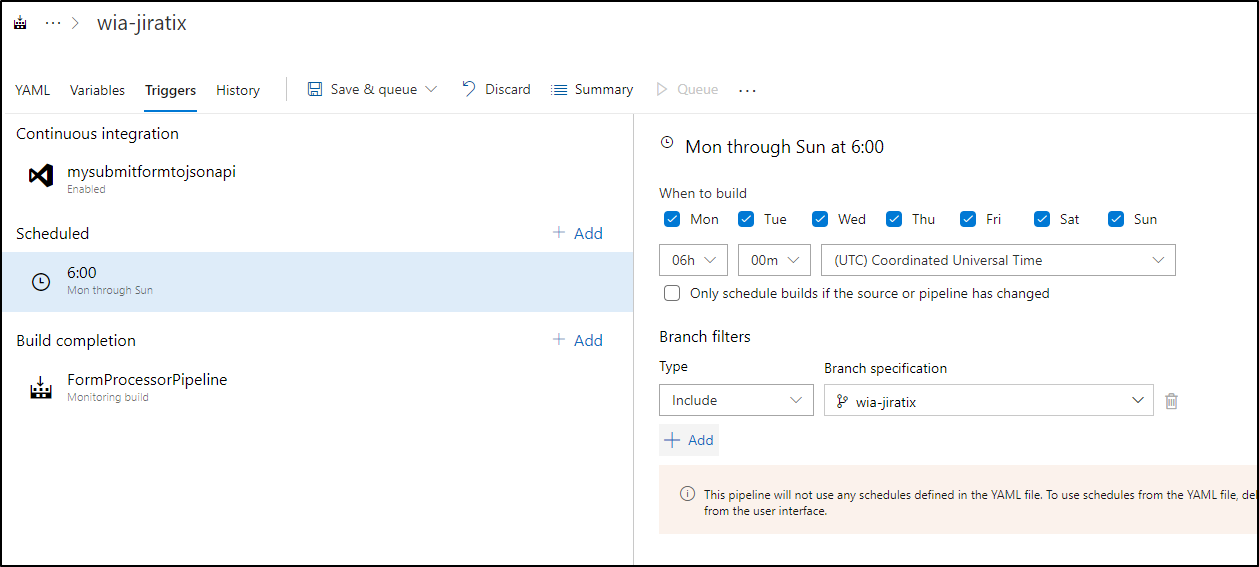

One more thing we can do is define a schedule to catch any WIA tickets that came through, perhaps from inside JIRA or a cloned ticket. Maybe things got moved back to re-process.

We can do a daily check by setting the schedule in the triggers. Since we already had to use the Classic UI approach, we can do it there as well:

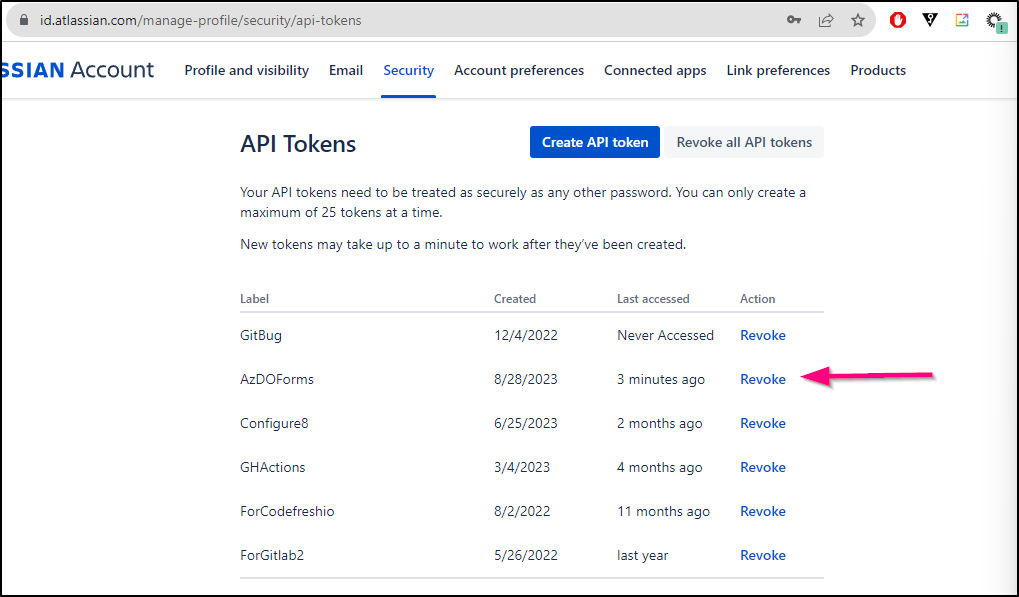

Security

Let’s say you wrote a blog and left a draft out a few days with the actual API token. That would be a pretty dumb thing to do.

So yeah, let’s reset that API Key:

You’ll want to create a new API key and update your Library

A fresh run with an updated key shows we haven’t broken anything.

Summary

I haven’t changed my mind about JIRA versus Azure Work Items. However, there are times we need to use the designated tool and quite often, that is JIRA.

I assumed that others, like myself, would be using a JIRA with limited access. I didn’t create custom flows, fields or types. I just used a standard issue, comments and transitions. I keyed off text in the summary and simply moved things to Done when completed.

I honestly think this is a pretty slick flow for taking in form-driven work in an automated way. I hope you enjoyed it and can find ways to put it to use in your environment.