Published: Aug 10, 2023 by Isaac Johnson

It has been a while since we looked into Azure DevOps. The last post was a couple years ago when we discussed work item automations.

Azure DevOps is still a fantastic product with a solid free tier. The question I wanted to answer today is whether we can use Kubernetes-based private agents as easily as we can with GitHub Actions.

Private Agents

Some of the more interesting things we often need to do require private agents.

We can run them on VMs or Kubernetes. We’ll take a moment to look at both.

Kubernetes

It is an interesting decision by Microsoft to not maintain their own Docker image. It’s not a deal breaker, as they do provide the Dockerfiles on MSDN.

I would like to build and push from a system already in my control. That can be an AzDO pipeline, GitHub or Gitea.

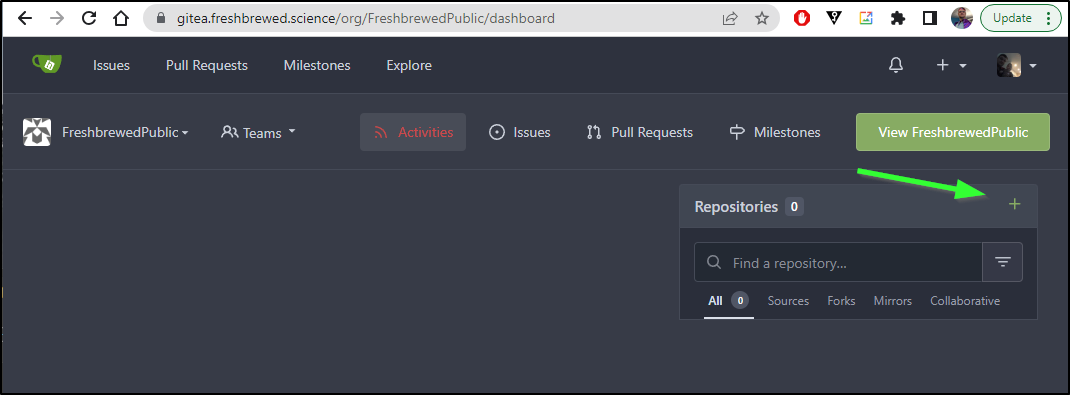

Since we recently set up Gitea, let’s start there.

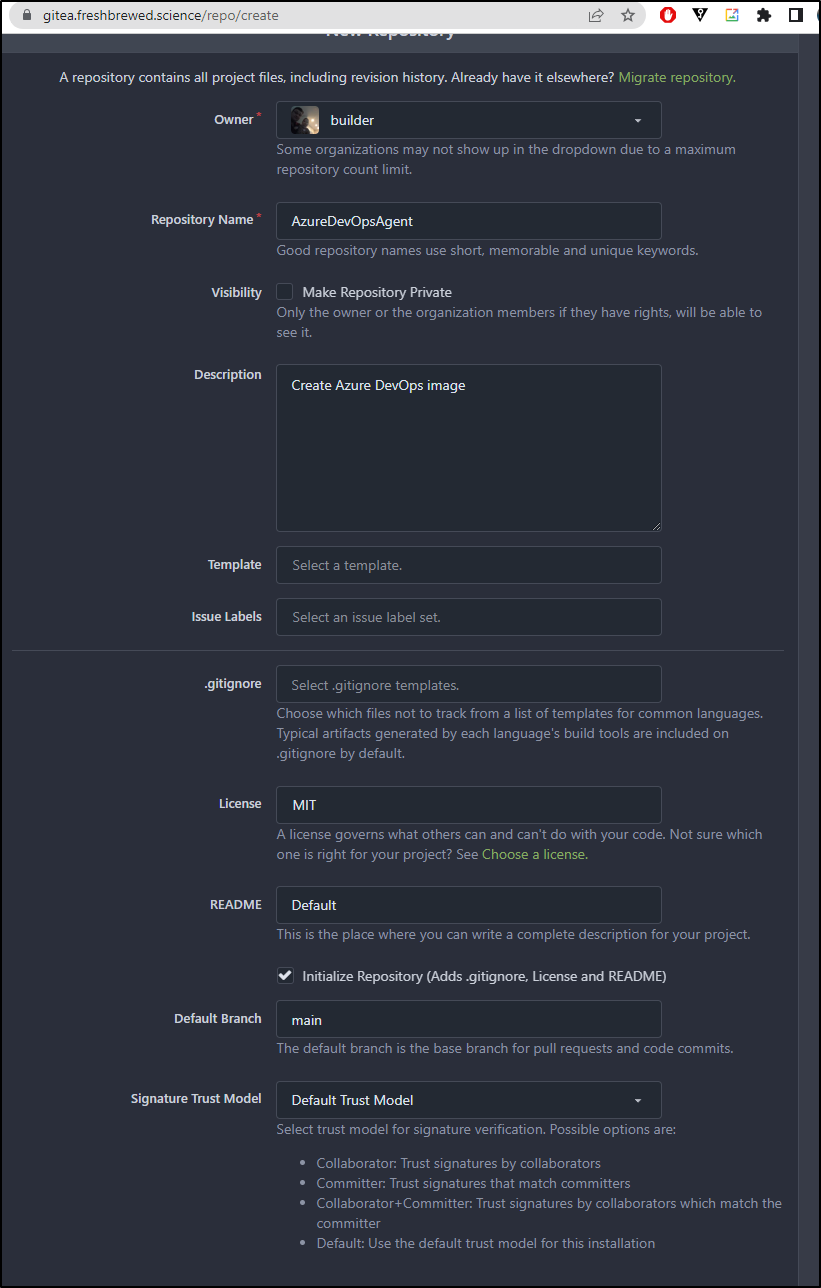

I’ll create a new repo in my Public Org

I made it public

I’m going to create a Dockerfile

FROM ubuntu:20.04

RUN DEBIAN_FRONTEND=noninteractive apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get upgrade -y

RUN DEBIAN_FRONTEND=noninteractive apt-get install -y -qq --no-install-recommends \

apt-transport-https \

apt-utils \

ca-certificates \

curl \

git \

iputils-ping \

jq \

lsb-release \

software-properties-common \

libicu66

RUN curl -sL https://aka.ms/InstallAzureCLIDeb | bash

# Can be 'linux-x64', 'linux-arm64', 'linux-arm', 'rhel.6-x64'.

ENV TARGETARCH=linux-x64

WORKDIR /azp

COPY ./start.sh .

RUN chmod +x start.sh

ENTRYPOINT [ "./start.sh" ]

And a ‘start.sh’ shell script with 755 perms

#!/bin/bash

set -e

if [ -z "$AZP_URL" ]; then

echo 1>&2 "error: missing AZP_URL environment variable"

exit 1

fi

if [ -z "$AZP_TOKEN_FILE" ]; then

if [ -z "$AZP_TOKEN" ]; then

echo 1>&2 "error: missing AZP_TOKEN environment variable"

exit 1

fi

AZP_TOKEN_FILE=/azp/.token

echo -n "$AZP_TOKEN" > "$AZP_TOKEN_FILE"

fi

unset AZP_TOKEN

if [ -n "$AZP_WORK" ]; then

mkdir -p "$AZP_WORK"

fi

export AGENT_ALLOW_RUNASROOT="1"

cleanup() {

if [ -e config.sh ]; then

print_header "Cleanup. Removing Azure Pipelines agent..."

# If the agent has some running jobs, the configuration removal process will fail.

# So, give it some time to finish the job.

while true; do

./config.sh remove --unattended --auth PAT --token "$(cat "$AZP_TOKEN_FILE")" && break

echo "Retrying in 30 seconds..."

sleep 30

done

fi

}

print_header() {

lightcyan='\033[1;36m'

nocolor='\033[0m'

echo -e "${lightcyan}$1${nocolor}"

}

# Let the agent ignore the token env variables

export VSO_AGENT_IGNORE=AZP_TOKEN,AZP_TOKEN_FILE

print_header "1. Determining matching Azure Pipelines agent..."

AZP_AGENT_PACKAGES=$(curl -LsS \

-u user:$(cat "$AZP_TOKEN_FILE") \

-H 'Accept:application/json;' \

"$AZP_URL/_apis/distributedtask/packages/agent?platform=$TARGETARCH&top=1")

AZP_AGENT_PACKAGE_LATEST_URL=$(echo "$AZP_AGENT_PACKAGES" | jq -r '.value[0].downloadUrl')

if [ -z "$AZP_AGENT_PACKAGE_LATEST_URL" -o "$AZP_AGENT_PACKAGE_LATEST_URL" == "null" ]; then

echo 1>&2 "error: could not determine a matching Azure Pipelines agent"

echo 1>&2 "check that account '$AZP_URL' is correct and the token is valid for that account"

exit 1

fi

print_header "2. Downloading and extracting Azure Pipelines agent..."

curl -LsS "$AZP_AGENT_PACKAGE_LATEST_URL" | tar -xz & wait $!

source ./env.sh

trap 'cleanup; exit 0' EXIT

trap 'cleanup; exit 130' INT

trap 'cleanup; exit 143' TERM

print_header "3. Configuring Azure Pipelines agent..."

./config.sh --unattended \

--agent "${AZP_AGENT_NAME:-$(hostname)}" \

--url "$AZP_URL" \

--auth PAT \

--token "$(cat "$AZP_TOKEN_FILE")" \

--pool "${AZP_POOL:-Default}" \

--work "${AZP_WORK:-_work}" \

--replace \

--acceptTeeEula & wait $!

print_header "4. Running Azure Pipelines agent..."

trap 'cleanup; exit 0' EXIT

trap 'cleanup; exit 130' INT

trap 'cleanup; exit 143' TERM

chmod +x ./run.sh

# To be aware of TERM and INT signals call run.sh

# Running it with the --once flag at the end will shut down the agent after the build is executed

./run.sh "$@" & wait $!
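The `& wait $!` pattern at the end of start.sh is worth a quick illustration. Bash defers a trap while it is blocked on a foreground command, but the `wait` builtin returns as soon as a trapped signal arrives, so cleanup can run promptly when the pod is stopped. The following is only a minimal sketch of that behavior: `demo_trap_with_wait` is a hypothetical helper, and `sleep 30` stands in for `./run.sh`.

```bash
#!/bin/bash
# Sketch: why start.sh runs long-lived commands as `cmd & wait $!`.
# A child shell stands in for start.sh; `sleep 30` stands in for ./run.sh.
demo_trap_with_wait() {
  bash -c '
    cleanup() { echo "cleanup ran"; }
    # On TERM: run cleanup, stop the background job, exit with 128+15.
    trap "cleanup; kill \$child 2>/dev/null; exit 143" TERM
    sleep 30 >/dev/null 2>&1 &
    child=$!
    wait "$child"
  ' &
  local shell_pid=$!
  sleep 1                     # give the child shell time to install its trap
  kill -TERM "$shell_pid"     # the signal a container receives on pod deletion
  wait "$shell_pid"
  echo "exit code: $?"
}
```

Calling `demo_trap_with_wait` should return after about a second rather than thirty, because the trap fires while the shell sits in `wait`.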

To test, I’ll build locally and push to Docker Hub first

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/AgentBuild$ docker build -t azdoagenttest:0.0.1 .

[+] Building 2.6s (13/13) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 38B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/ubuntu:20.04 0.8s

=> [1/8] FROM docker.io/library/ubuntu:20.04@sha256:c9820a44b950956a790c354700c1166a7ec648bc0d215fa438d3a339812f1d01 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 2.60kB 0.0s

=> CACHED [2/8] RUN DEBIAN_FRONTEND=noninteractive apt-get update 0.0s

=> CACHED [3/8] RUN DEBIAN_FRONTEND=noninteractive apt-get upgrade -y 0.0s

=> CACHED [4/8] RUN DEBIAN_FRONTEND=noninteractive apt-get install -y -qq --no-install-recommends apt-transport-https apt-utils 0.0s

=> CACHED [5/8] RUN curl -sL https://aka.ms/InstallAzureCLIDeb | bash 0.0s

=> CACHED [6/8] WORKDIR /azp 0.0s

=> [7/8] COPY ./start.sh . 0.0s

=> [8/8] RUN chmod +x start.sh 0.5s

=> exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:75a3ccb4c979d996bab78b8e9cad16340049be11af7ddc256daa0400b0e54940 0.0s

=> => naming to docker.io/library/azdoagenttest:0.0.1 0.0s

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/AgentBuild$ docker tag azdoagenttest:0.0.1 idjohnson/azdoagent:0.0.1

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/AgentBuild$ docker push idjohnson/azdoagent:0.0.1

The push refers to repository [docker.io/idjohnson/azdoagent]

367a82c366f1: Pushed

80f6bd16f0a4: Pushed

38be99cec070: Pushed

d2194b8953d6: Pushed

73c1584a7072: Pushed

e4a7873884f7: Pushed

f5bb4f853c84: Mounted from library/ubuntu

0.0.1: digest: sha256:2d82ba4d9ac3ace047ce13ecdd0a78ca2525d1d57ca032dca2a54a0d3b7e2912 size: 1995

I really want to see it work first before I sort out the action flow.

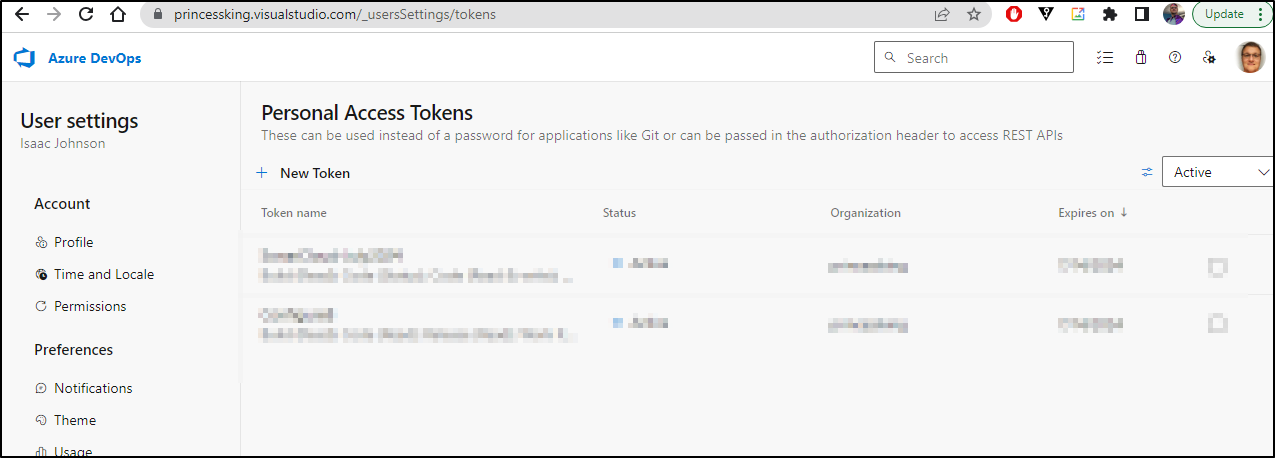

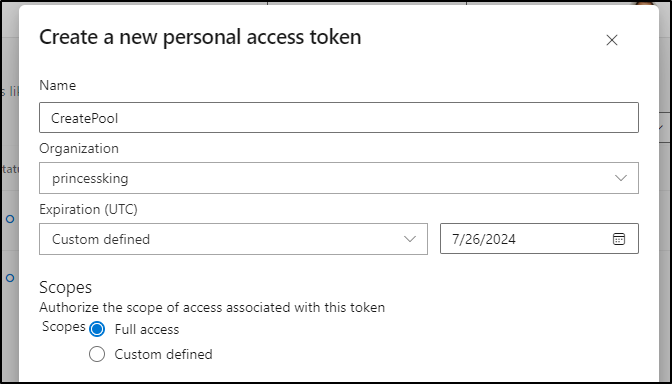

I need a PAT which I can fetch from AzDO

You cannot create a PAT that never expires. Presently the maximum lifetime is one year (it used to be two).

To test, I’ll create an .env file with the PAT

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ cat test.env

AZP_POOL=k3s77-self-hosted

AZP_URL=https://dev.azure.com/princessking

AZP_TOKEN=3p3qrxzaufxr57dndcamwvqtvaeatdxkt3zidlcoux67wwr6eria

I can now create the CM

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl create cm azure-agent-config --from-env-file=test.env

configmap/azure-agent-config created

Which we can check

$ kubectl get cm azure-agent-config -o yaml

apiVersion: v1
data:
  AZP_POOL: k3s77-self-hosted
  AZP_TOKEN: 3p3qrxzaufxr57dndcamwvqtvaeatdxkt3zidlcoux67wwr6eria
  AZP_URL: https://dev.azure.com/princessking
kind: ConfigMap
metadata:
  creationTimestamp: "2023-07-28T11:55:06Z"
  name: azure-agent-config
  namespace: default
  resourceVersion: "191068389"
  uid: 48866a50-617b-4004-ab76-5d83d70228a0

I can now use it with a basic Deployment.yaml

$ cat Deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: azure-agent-pool
  name: azure-agent-pool
spec:
  replicas: 1
  selector:
    matchLabels:
      app: azure-agent-pool
  template:
    metadata:
      labels:
        app: azure-agent-pool
    spec:
      containers:
      - image: idjohnson/azdoagent:0.0.1
        name: ado-agent-1
        env:
        - name: AZ_AGENT_NAME
          value: agent-1
        envFrom:
        - configMapRef:
            name: azure-agent-config
      - image: idjohnson/azdoagent:0.0.1
        name: ado-agent-2
        env:
        - name: AZ_AGENT_NAME
          value: agent-2
        envFrom:
        - configMapRef:
            name: azure-agent-config

Which I can apply

$ kubectl apply -f Deployment.yaml

deployment.apps/azure-agent-pool created

While this did launch, it could not find the agent pool in question

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl logs azure-agent-pool-5857c599fc-nkv6j

Defaulted container "ado-agent-1" out of: ado-agent-1, ado-agent-2

1. Determining matching Azure Pipelines agent...

2. Downloading and extracting Azure Pipelines agent...

3. Configuring Azure Pipelines agent...

___ ______ _ _ _

/ _ \ | ___ (_) | (_)

/ /_\ \_____ _ _ __ ___ | |_/ /_ _ __ ___| |_ _ __ ___ ___

| _ |_ / | | | '__/ _ \ | __/| | '_ \ / _ \ | | '_ \ / _ \/ __|

| | | |/ /| |_| | | | __/ | | | | |_) | __/ | | | | | __/\__ \

\_| |_/___|\__,_|_| \___| \_| |_| .__/ \___|_|_|_| |_|\___||___/

| |

agent v3.224.1 |_| (commit 7861f5f)

>> End User License Agreements:

Building sources from a TFVC repository requires accepting the Team Explorer Everywhere End User License Agreement. This step is not required for building sources from Git repositories.

A copy of the Team Explorer Everywhere license agreement can be found at:

/azp/license.html

>> Connect:

Connecting to server ...

>> Register Agent:

Error reported in diagnostic logs. Please examine the log for more details.

- /azp/_diag/Agent_20230728-120603-utc.log

Agent pool not found: 'k3s77-self-hosted'

Cleanup. Removing Azure Pipelines agent...

Removing agent from the server

Cannot connect to server, because config files are missing. Skipping removing agent from the server.

Removing .credentials

Does not exist. Skipping Removing .credentials

Removing .agent

Does not exist. Skipping Removing .agent

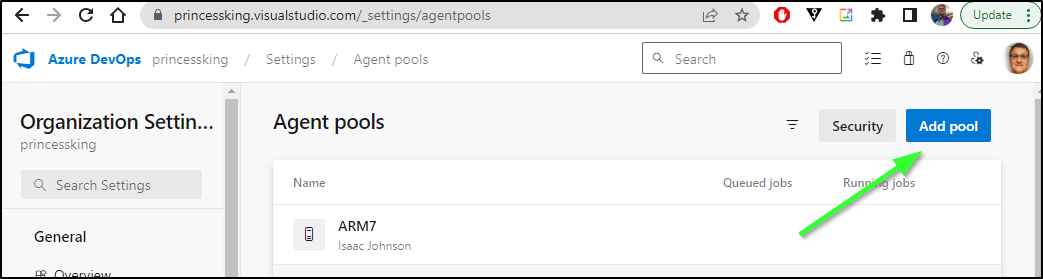

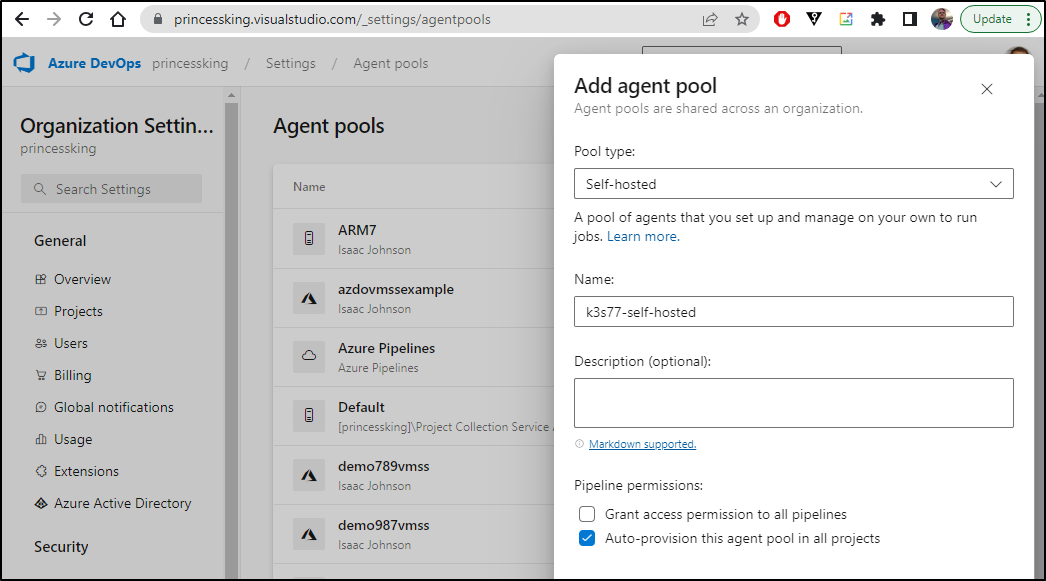

The agents can add themselves, but cannot create pools that do not exist.
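Since a missing pool only shows up once the container is already running, it can help to check for the pool before deploying. Here is a rough sketch, assuming the Azure DevOps REST API's agent pool listing accepts a `poolName` filter at `api-version=7.0` (my understanding of the API, so treat it as an assumption). `pool_lookup_url` and `pool_exists` are hypothetical helper names, and `pool_exists` reuses the same basic-auth style and `AZP_TOKEN_FILE` as start.sh.

```bash
#!/bin/bash
# Build the REST URL that lists agent pools filtered by name.
# $1 = organization URL (for example the AZP_URL value), $2 = pool name
pool_lookup_url() {
  printf '%s/_apis/distributedtask/pools?poolName=%s&api-version=7.0\n' "${1%/}" "$2"
}

# Succeeds only if the organization reports at least one pool with that name.
# Needs curl and jq, plus AZP_TOKEN_FILE pointing at a file holding the PAT.
# Assumption: the response is JSON with a top-level numeric "count" field.
pool_exists() {
  local count
  count="$(curl -fsS -u "user:$(cat "$AZP_TOKEN_FILE")" \
    "$(pool_lookup_url "$1" "$2")" | jq -r '.count')" || return 1
  [ "${count:-0}" -gt 0 ] 2>/dev/null
}
```

Something like `pool_exists "$AZP_URL" k3s77-self-hosted || echo "create the pool first"` could then run ahead of `kubectl apply`. Pool names containing spaces would need URL encoding, which this sketch does not handle.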

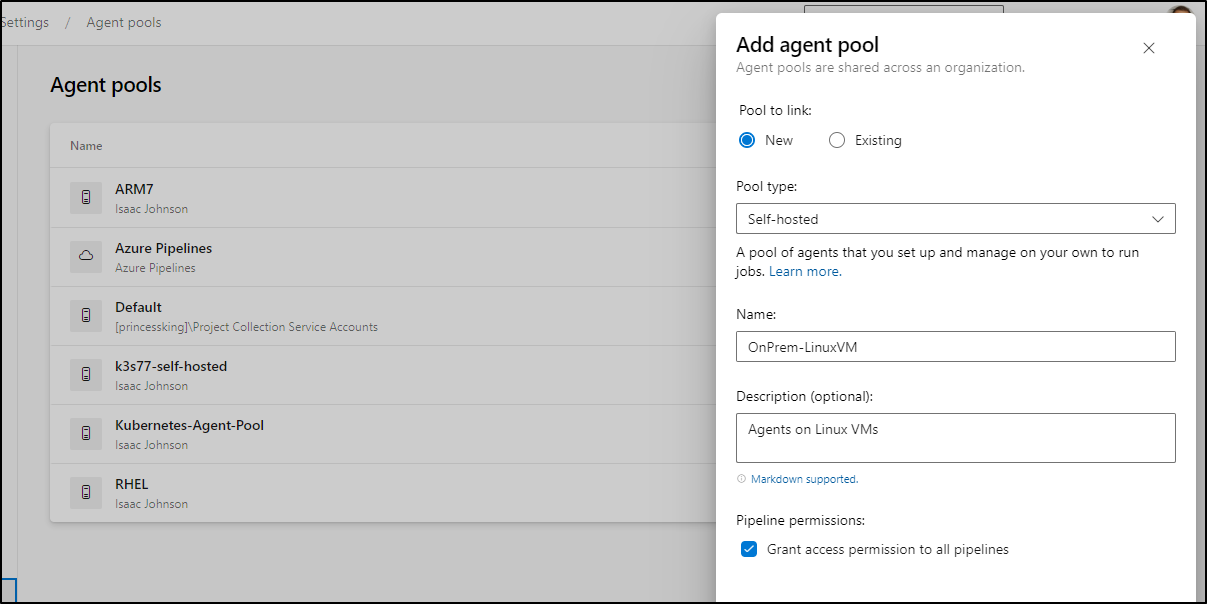

Let’s create that pool

As agent pools live at the org level, I’ll go ahead and provision this one but not grant access to all projects yet. In other cases you may not want that restriction and can instead opt to grant access to all projects.

I’ll rotate the pod

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl delete pod azure-agent-pool-5857c599fc-nkv6j

pod "azure-agent-pool-5857c599fc-nkv6j" deleted

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl get pods -l app=azure-agent-pool

NAME READY STATUS RESTARTS AGE

azure-agent-pool-5857c599fc-4zqb6 2/2 Running 0 17s

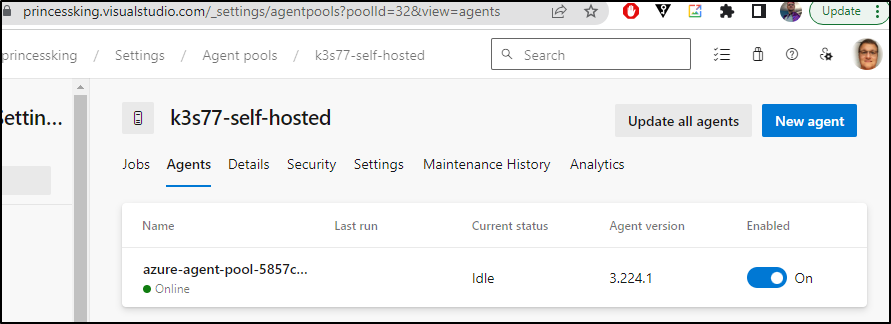

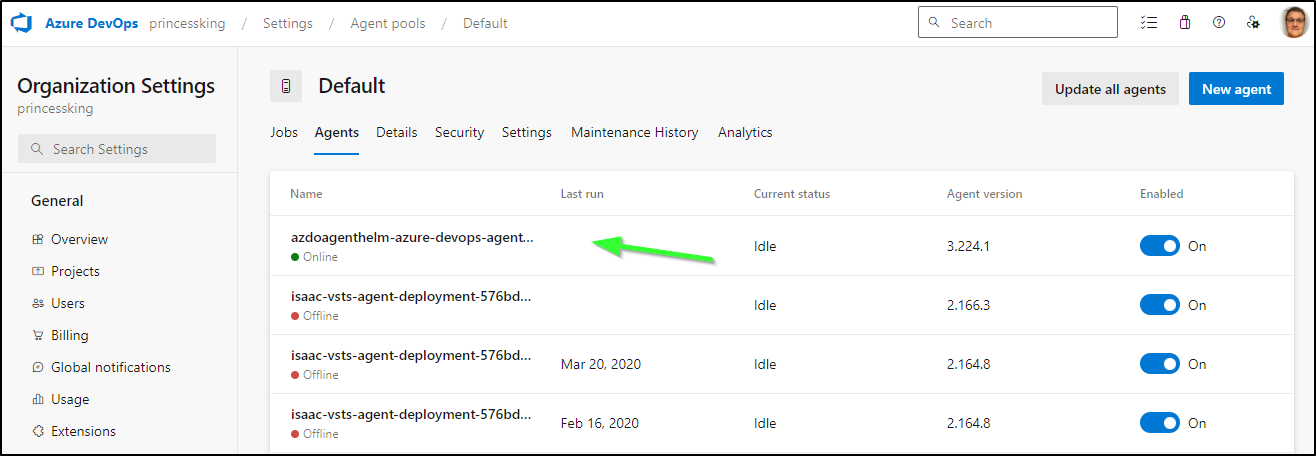

I can see one agent was added already

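We cannot have two agents with the same name, which is what blocks agent 2. The Deployment sets AZ_AGENT_NAME, but start.sh reads AZP_AGENT_NAME, so both containers fall back to the pod hostname and try to register under the same name.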

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl logs azure-agent-pool-5857c599fc-4zqb6

Defaulted container "ado-agent-1" out of: ado-agent-1, ado-agent-2

1. Determining matching Azure Pipelines agent...

2. Downloading and extracting Azure Pipelines agent...

3. Configuring Azure Pipelines agent...

___ ______ _ _ _

/ _ \ | ___ (_) | (_)

/ /_\ \_____ _ _ __ ___ | |_/ /_ _ __ ___| |_ _ __ ___ ___

| _ |_ / | | | '__/ _ \ | __/| | '_ \ / _ \ | | '_ \ / _ \/ __|

| | | |/ /| |_| | | | __/ | | | | |_) | __/ | | | | | __/\__ \

\_| |_/___|\__,_|_| \___| \_| |_| .__/ \___|_|_|_| |_|\___||___/

| |

agent v3.224.1 |_| (commit 7861f5f)

>> End User License Agreements:

Building sources from a TFVC repository requires accepting the Team Explorer Everywhere End User License Agreement. This step is not required for building sources from Git repositories.

A copy of the Team Explorer Everywhere license agreement can be found at:

/azp/license.html

>> Connect:

Connecting to server ...

>> Register Agent:

Scanning for tool capabilities.

Connecting to the server.

Pool k3s77-self-hosted already contains an agent with name azure-agent-pool-5857c599fc-4zqb6.

Successfully replaced the agent

Testing agent connection.

2023-07-28 12:10:30Z: Settings Saved.

4. Running Azure Pipelines agent...

Scanning for tool capabilities.

Connecting to the server.

2023-07-28 12:10:33Z: Listening for Jobs

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl logs azure-agent-pool-5857c599fc-4zqb6 ado-agent-2

1. Determining matching Azure Pipelines agent...

2. Downloading and extracting Azure Pipelines agent...

3. Configuring Azure Pipelines agent...

___ ______ _ _ _

/ _ \ | ___ (_) | (_)

/ /_\ \_____ _ _ __ ___ | |_/ /_ _ __ ___| |_ _ __ ___ ___

| _ |_ / | | | '__/ _ \ | __/| | '_ \ / _ \ | | '_ \ / _ \/ __|

| | | |/ /| |_| | | | __/ | | | | |_) | __/ | | | | | __/\__ \

\_| |_/___|\__,_|_| \___| \_| |_| .__/ \___|_|_|_| |_|\___||___/

| |

agent v3.224.1 |_| (commit 7861f5f)

>> End User License Agreements:

Building sources from a TFVC repository requires accepting the Team Explorer Everywhere End User License Agreement. This step is not required for building sources from Git repositories.

A copy of the Team Explorer Everywhere license agreement can be found at:

/azp/license.html

>> Connect:

Connecting to server ...

>> Register Agent:

Scanning for tool capabilities.

Connecting to the server.

Successfully added the agent

Testing agent connection.

2023-07-28 12:10:30Z: Settings Saved.

4. Running Azure Pipelines agent...

Scanning for tool capabilities.

Connecting to the server.

Error reported in diagnostic logs. Please examine the log for more details.

- /azp/_diag/Agent_20230728-121030-utc.log

2023-07-28 12:10:32Z: Agent connect error: The signature is not valid.. Retrying until reconnected.
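The name collision in the logs above comes down to one parameter expansion in start.sh. A small sketch of that fallback, using a hypothetical `resolve_agent_name` helper that wraps the same `${AZP_AGENT_NAME:-$(hostname)}` expression the script passes to `config.sh --agent`:

```bash
#!/bin/bash
# Same parameter expansion start.sh passes to `config.sh --agent`.
resolve_agent_name() {
  echo "${AZP_AGENT_NAME:-$(hostname)}"
}

# AZ_AGENT_NAME (the variable set in Deployment.yaml) is never consulted,
# so this prints the hostname, which is shared by every container in a pod:
( unset AZP_AGENT_NAME; AZ_AGENT_NAME=agent-1 resolve_agent_name )

# With the AZP_ prefix the explicit name wins:
( AZP_AGENT_NAME=agent-1 resolve_agent_name )   # prints: agent-1
```

Renaming the variable to `AZP_AGENT_NAME` in the manifest would presumably give each container its own agent name, though one container per pod sidesteps the problem entirely.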

While this worked, I don’t like the hand-created nature of the CM. Additionally, we now have more than one container in the pod. Ideally, I want one container per pod.

Let’s change this up to use a Deployment with volumes and mapped values.

First, I’ll create a secret we can use for the PAT

$ kubectl create secret generic azdopat --from-literal=azdopat=3p3qrxzaufxr57dndcamwvqtvaeatdxkt3zidlcoux67wwr6eria

secret/azdopat created
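One drawback of `--from-literal` is that the PAT ends up in shell history. As an alternative, here is a minimal sketch that renders the same Secret from a file containing only the token. `render_pat_secret` is a hypothetical helper, and the Secret name and key simply mirror the `azdopat` values used above.

```bash
#!/bin/bash
# Render a Secret manifest whose `azdopat` key holds the PAT read from a file.
# Usage: render_pat_secret <path-to-file-containing-only-the-PAT>
render_pat_secret() {
  local pat_file="$1" encoded
  [ -r "$pat_file" ] || { echo "error: cannot read $pat_file" >&2; return 1; }
  # Drop any trailing newline from the token, then base64-encode it,
  # since values under a Secret's `data` field must be base64.
  encoded="$(tr -d '\r\n' < "$pat_file" | base64 | tr -d '\n')"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: azdopat
type: Opaque
data:
  azdopat: ${encoded}
EOF
}
```

The output can be piped straight to the cluster with `render_pat_secret ./pat.txt | kubectl apply -f -`, where `./pat.txt` is a placeholder path.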

I can now use that in a Deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ cat Deployment2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: azdevops-deployment
spec:
  replicas: 2
  progressDeadlineSeconds: 1800
  selector:
    matchLabels:
      app: azdevops-agent
  template:
    metadata:
      labels:
        app: azdevops-agent
    spec:
      containers:
      - name: selfhosted-agents
        image: idjohnson/azdoagent:0.0.1
        resources:
          requests:
            cpu: "130m"
            memory: "300Mi"
          limits:
            cpu: "130m"
            memory: "300Mi"
        env:
        - name: AZP_POOL
          value: k3s77-self-hosted
        - name: AZP_URL
          value: https://dev.azure.com/princessking
        - name: AZP_TOKEN
          valueFrom:
            secretKeyRef:
              name: azdopat
              key: azdopat
              optional: false
        volumeMounts:
        - name: docker-graph-storage
          mountPath: /var/run/docker.sock
      volumes:
      - name: docker-graph-storage
        hostPath:
          path: /var/run/docker.sock

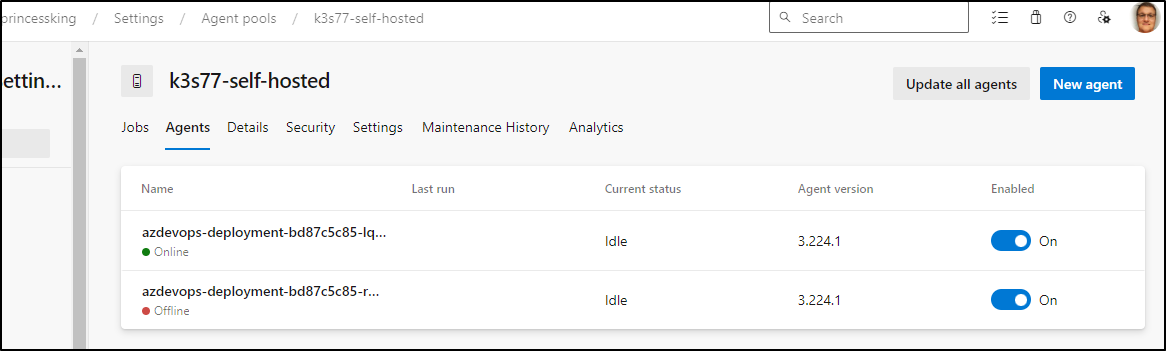

I can now deploy and see them running

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl apply -f Deployment2.yaml

deployment.apps/azdevops-deployment created

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl get pods -l app=azdevops-agent

NAME READY STATUS RESTARTS AGE

azdevops-deployment-bd87c5c85-r8q6d 1/1 Running 0 29s

azdevops-deployment-bd87c5c85-lqcd5 1/1 Running 0 29s

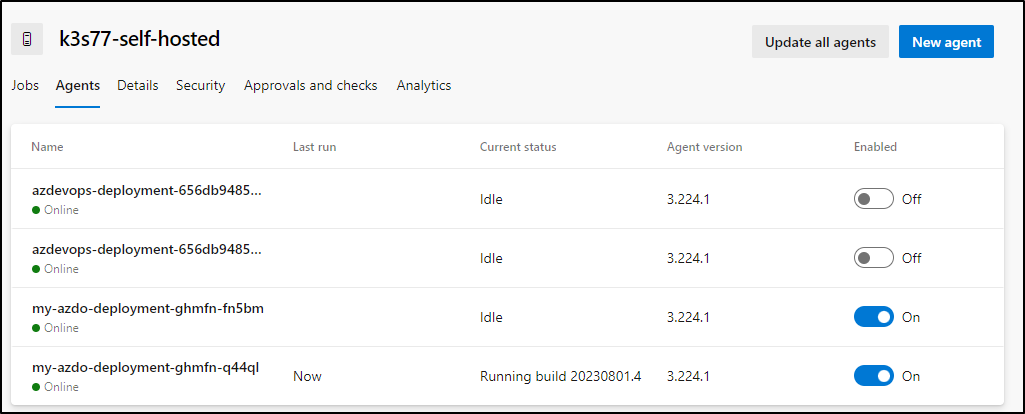

In a few moments, the agents showed up in the pool in Azure DevOps

Helm

There are Helm charts out there as well.

Looking at this one from btungut, we can first install it the default way

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ helm upgrade --install azdoagenthelm btungut/azure-devops-agent --set agent.pat=3p3qrxzaufxr57dndcamwvqtvaeatdxkt3zidlcoux67wwr6eria --set agent.organizationUrl=https://dev.azure.com/theprincessking --namespace azdoagents --create-namespace

Release "azdoagenthelm" does not exist. Installing it now.

NAME: azdoagenthelm

LAST DEPLOYED: Fri Jul 28 07:31:33 2023

NAMESPACE: azdoagents

STATUS: deployed

REVISION: 1

TEST SUITE: None

Note: this approach uses his build of the agent image hosted on Docker Hub. You have to trust that whoever built it is not shipping an AzDO agent that pipes your PAT to some nefarious end. This is why we often use our own image.

I can see his agent coming up

$ kubectl get pods -n azdoagents

NAME READY STATUS RESTARTS AGE

azdoagenthelm-azure-devops-agent-5d5b7bcb6b-d5rkh 0/1 ContainerCreating 0 24s

Warning BackOff 6s (x5 over 41s) kubelet Back-off restarting failed container

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl logs azdoagenthelm-azure-devops-agent-5d5b7bcb6b-d5rkh -n azdoagents

Configuring Azure Pipelines agent...

___ ______ _ _ _

/ _ \ | ___ (_) | (_)

/ /_\ \_____ _ _ __ ___ | |_/ /_ _ __ ___| |_ _ __ ___ ___

| _ |_ / | | | '__/ _ \ | __/| | '_ \ / _ \ | | '_ \ / _ \/ __|

| | | |/ /| |_| | | | __/ | | | | |_) | __/ | | | | | __/\__ \

\_| |_/___|\__,_|_| \___| \_| |_| .__/ \___|_|_|_| |_|\___||___/

| |

agent v2.214.1 |_| (commit 4234141)

>> End User License Agreements:

Building sources from a TFVC repository requires accepting the Team Explorer Everywhere End User License Agreement. This step is not required for building sources from Git repositories.

A copy of the Team Explorer Everywhere license agreement can be found at:

/azp/license.html

>> Connect:

Error reported in diagnostic logs. Please examine the log for more details.

- /azp/_diag/Agent_20230728-123307-utc.log

The resource cannot be found.

It seems he may not have added the start.sh, or it had bad perms. Either way, it is not my image, so let’s use our own.

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ helm upgrade --install azdoagenthelm btungut/azure-devops-agent --set agent.pat=3p3qrxzaufxr57dndcamwvqtvaeatdxkt3zidlcoux67wwr6eria --set agent.organizationUrl=https://dev.azure.com/theprincessking --set image.repository=idjohnson/azdoagent --set image.tag=0.0.1 --namespace azdoagents --create-namespace

Release "azdoagenthelm" has been upgraded. Happy Helming!

NAME: azdoagenthelm

LAST DEPLOYED: Fri Jul 28 07:34:51 2023

NAMESPACE: azdoagents

STATUS: deployed

REVISION: 2

TEST SUITE: None

I should mention I debugged (for longer than I want to admit) the following error

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl logs azdoagenthelm-azure-devops-agent-idjtest -n azdoagents

1. Determining matching Azure Pipelines agent...

parse error: Invalid numeric literal at line 1, column 4

Only to realize I typoed my AzDO instance (princessking vs theprincessking).
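That jq parse error is what you get when the lookup in step 1 of start.sh receives something other than JSON. With a wrong organization URL the server likely answers with plain text or HTML, and jq chokes on it. A small guard would have made the cause obvious. This is only a sketch: `looks_like_json_object` and `extract_download_url` are hypothetical helpers, not part of the Microsoft-provided script.

```bash
#!/bin/bash
# Returns 0 when the first non-whitespace character of the text is '{'.
looks_like_json_object() {
  local body="$1"
  body="${body#"${body%%[![:space:]]*}"}"   # trim leading whitespace
  [ "${body:0:1}" = "{" ]
}

# Hypothetical wrapper around the jq lookup in step 1 of start.sh.
# Prints the download URL, or a readable error when the response is not JSON.
extract_download_url() {
  local response="$1"
  if ! looks_like_json_object "$response"; then
    echo "error: server did not return JSON, check AZP_URL" >&2
    echo "response began: ${response:0:60}" >&2
    return 1
  fi
  echo "$response" | jq -r '.value[0].downloadUrl'
}
```

In start.sh this would be called as `extract_download_url "$AZP_AGENT_PACKAGES"` in place of the bare `jq` pipeline.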

$ helm upgrade --install azdoagenthelm btungut/azure-devops-agent --set agent.pat=3p3qrxzaufxr57dndcamwvqtvaeatdxkt3zidlcoux67wwr6eria --set agent.organizationUrl=https://dev.azure.com/princessking --set image.repository=idjohnson/azdoagent --set image.tag=0.0.1 --namespace azdoagents --create-namespace

Release "azdoagenthelm" has been upgraded. Happy Helming!

NAME: azdoagenthelm

LAST DEPLOYED: Sat Jul 29 06:22:15 2023

NAMESPACE: azdoagents

STATUS: deployed

REVISION: 4

TEST SUITE: None

And I can see it running

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl get pods -n azdoagents

NAME READY STATUS RESTARTS AGE

azdoagenthelm-azure-devops-agent-875fb4cf9-ts2dn 1/1 Running 0 36s

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/deploy$ kubectl logs azdoagenthelm-azure-devops-agent-875fb4cf9-ts2dn -n azdoagents

1. Determining matching Azure Pipelines agent...

2. Downloading and extracting Azure Pipelines agent...

3. Configuring Azure Pipelines agent...

___ ______ _ _ _

/ _ \ | ___ (_) | (_)

/ /_\ \_____ _ _ __ ___ | |_/ /_ _ __ ___| |_ _ __ ___ ___

| _ |_ / | | | '__/ _ \ | __/| | '_ \ / _ \ | | '_ \ / _ \/ __|

| | | |/ /| |_| | | | __/ | | | | |_) | __/ | | | | | __/\__ \

\_| |_/___|\__,_|_| \___| \_| |_| .__/ \___|_|_|_| |_|\___||___/

| |

agent v3.224.1 |_| (commit 7861f5f)

>> End User License Agreements:

Building sources from a TFVC repository requires accepting the Team Explorer Everywhere End User License Agreement. This step is not required for building sources from Git repositories.

A copy of the Team Explorer Everywhere license agreement can be found at:

/azp/license.html

>> Connect:

Connecting to server ...

>> Register Agent:

Scanning for tool capabilities.

Connecting to the server.

Successfully added the agent

Testing agent connection.

2023-07-29 11:22:39Z: Settings Saved.

4. Running Azure Pipelines agent...

Scanning for tool capabilities.

Connecting to the server.

2023-07-29 11:22:42Z: Listening for Jobs

I’ll check the pool it used

$ kubectl describe pod azdoagenthelm-azure-devops-agent-875fb4cf9-ts2dn -n azdoagents | grep AZP_POOL

AZP_POOL: Default

Testing

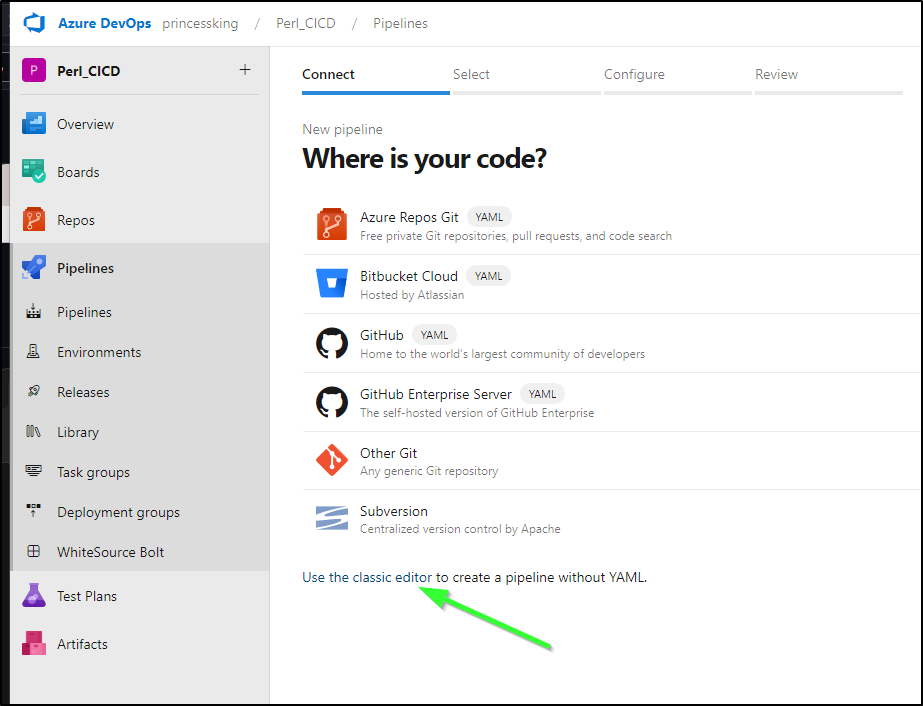

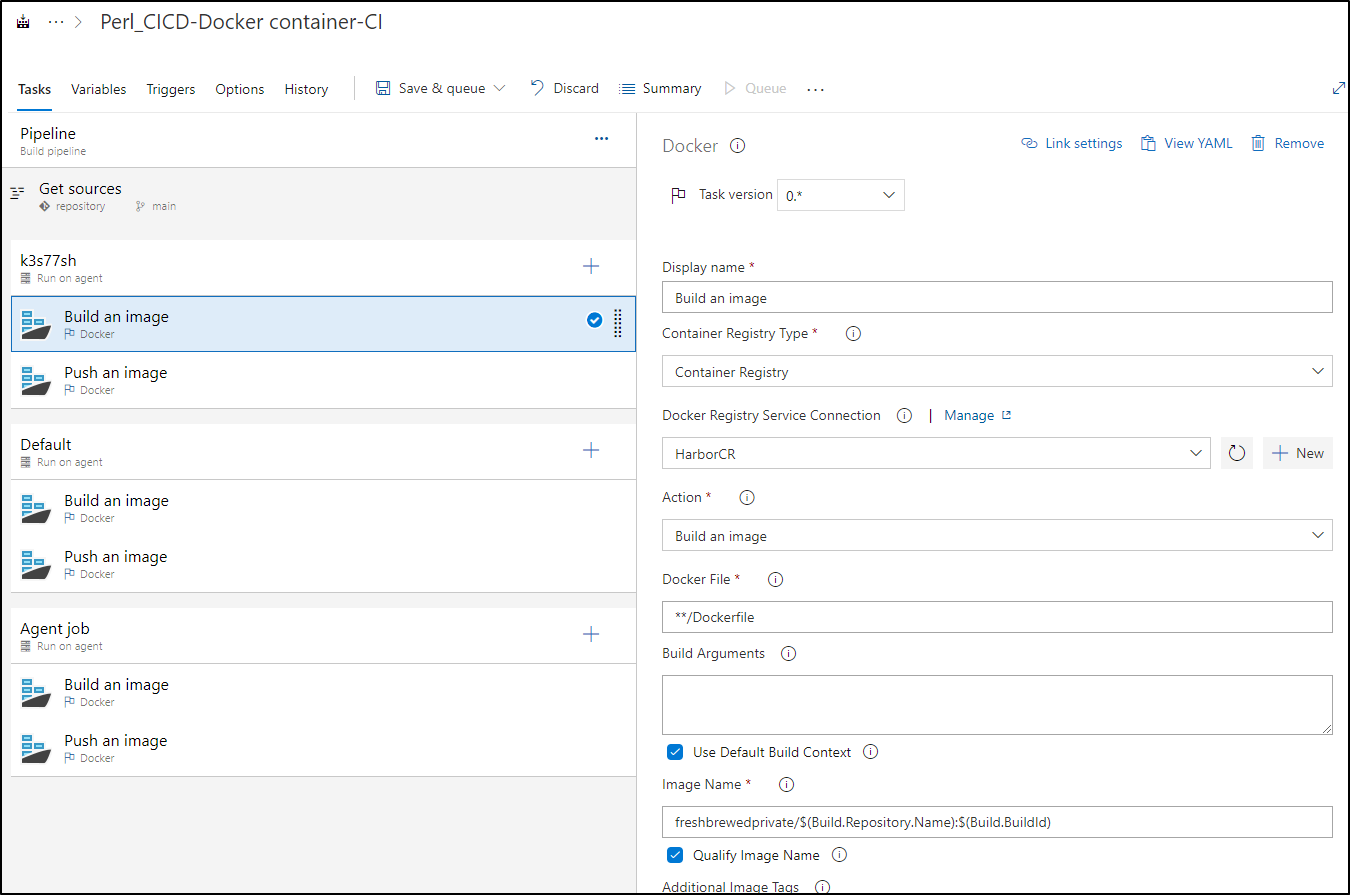

The original way to create pipelines was graphical and, interestingly enough, they still expose that “classic” method for pipeline creation.

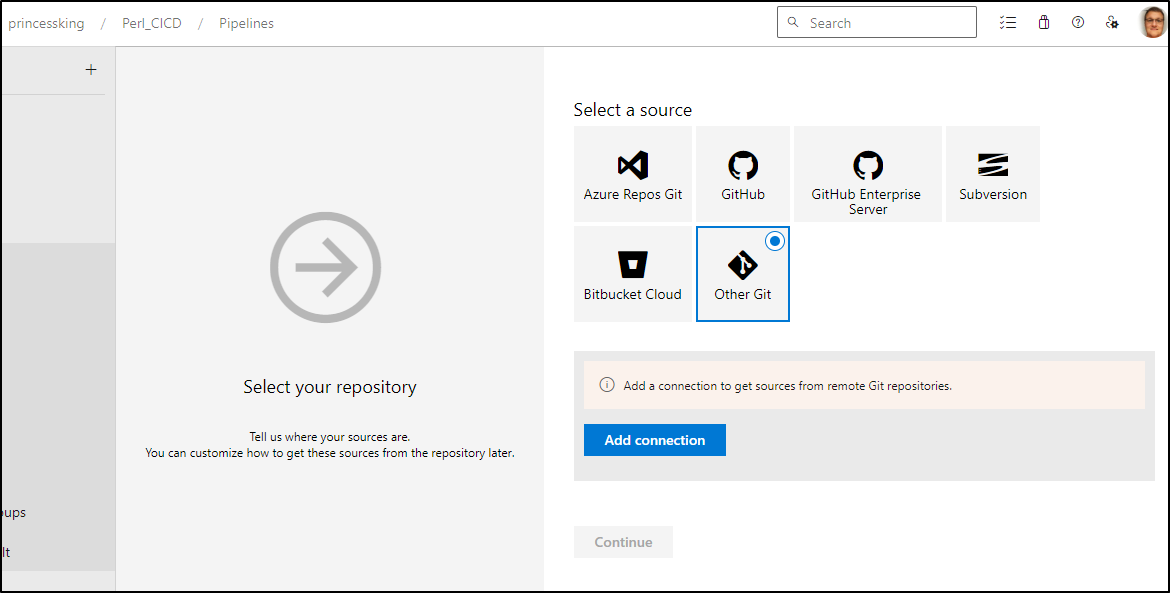

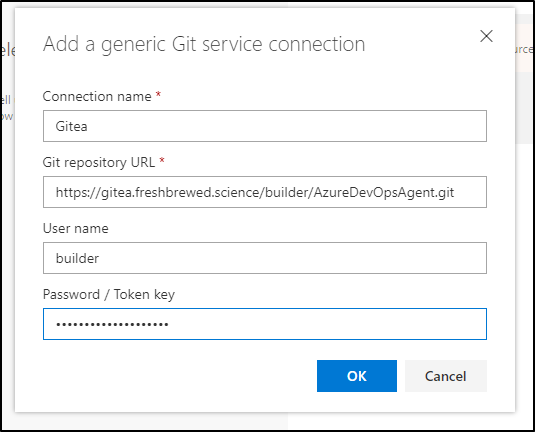

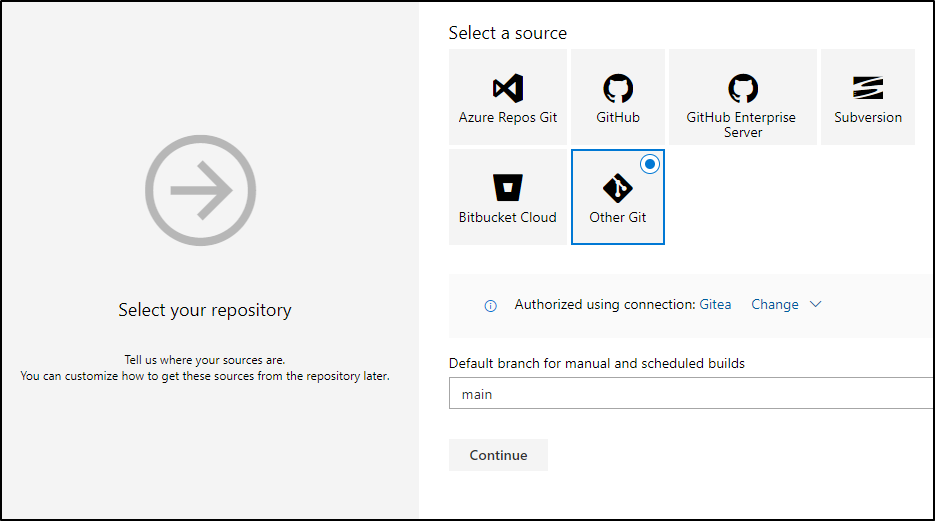

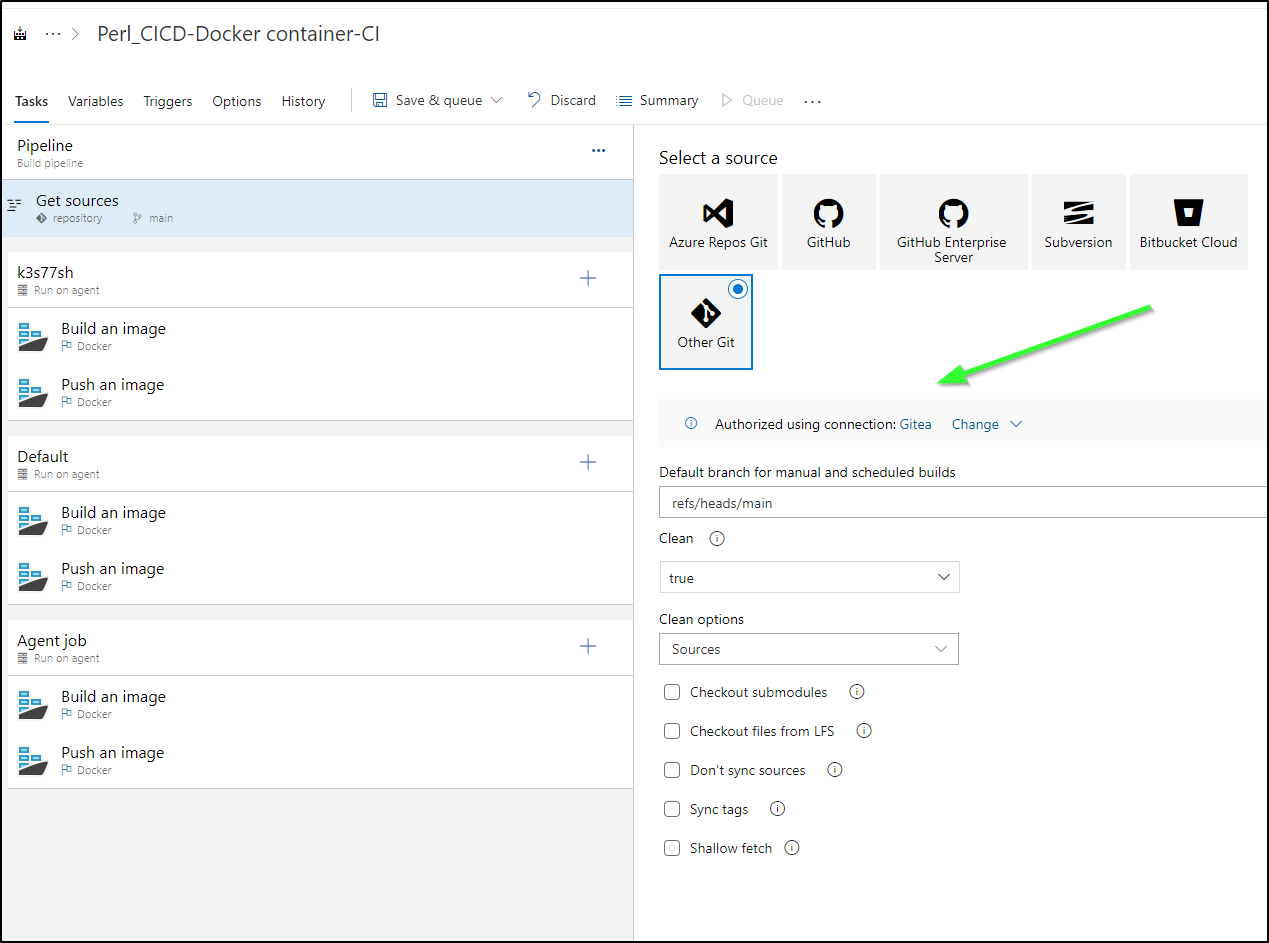

I’ll add my Gitea repo

For which I’ll need to add authentication

I’ll use main (it defaulted to master)

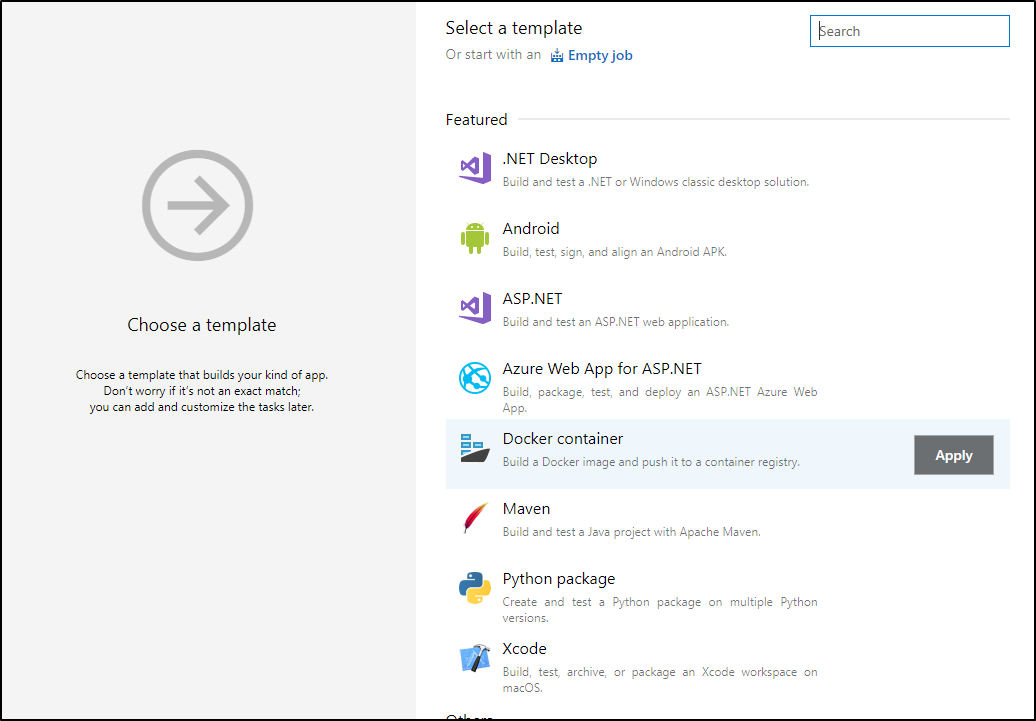

I’ll opt for the Docker build template

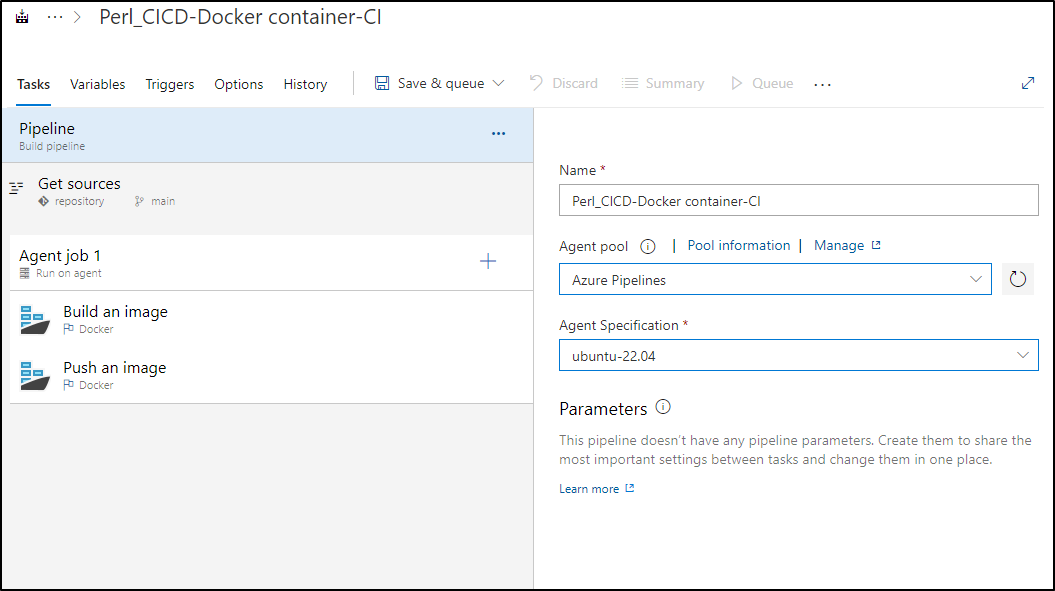

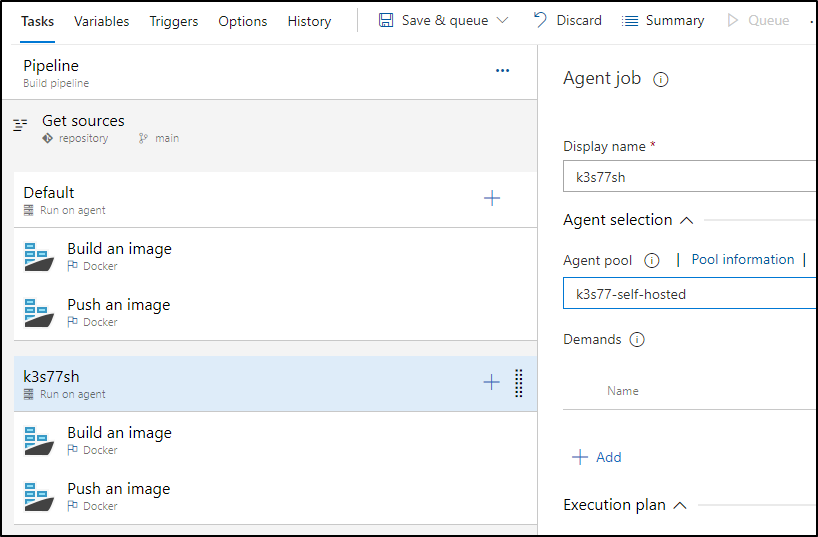

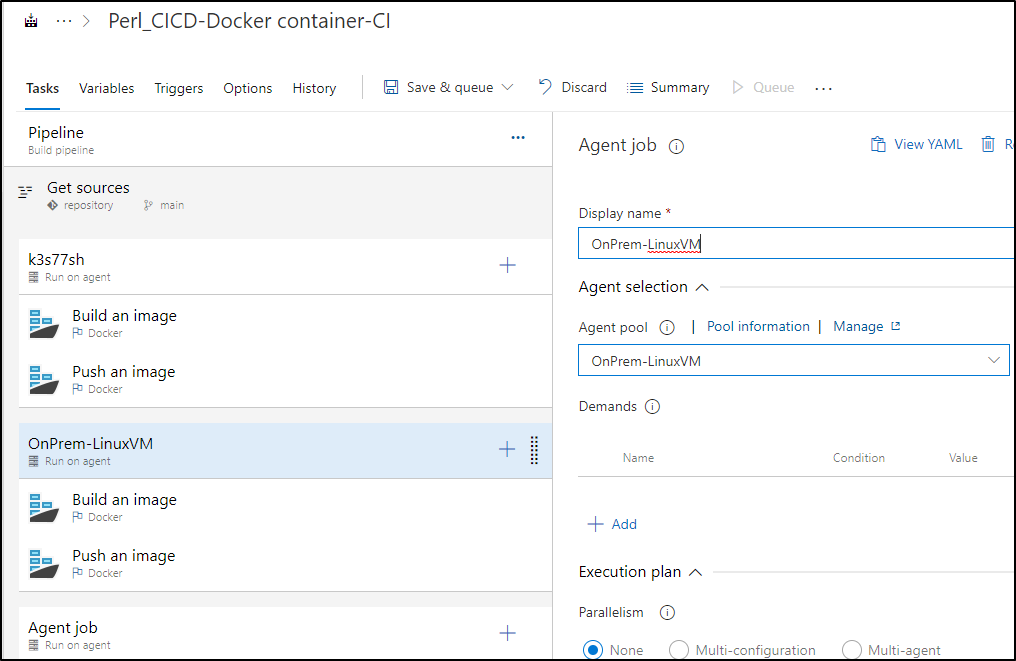

I’ll leave my main pipeline on Ubuntu 22 provided images

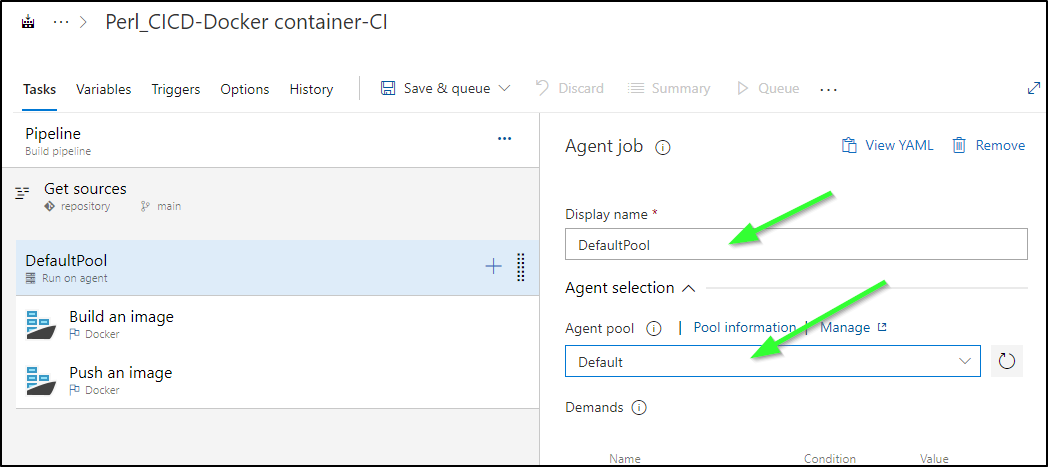

However, I’ll change up the first stage to use the “Default” pool which has my Helm based agent

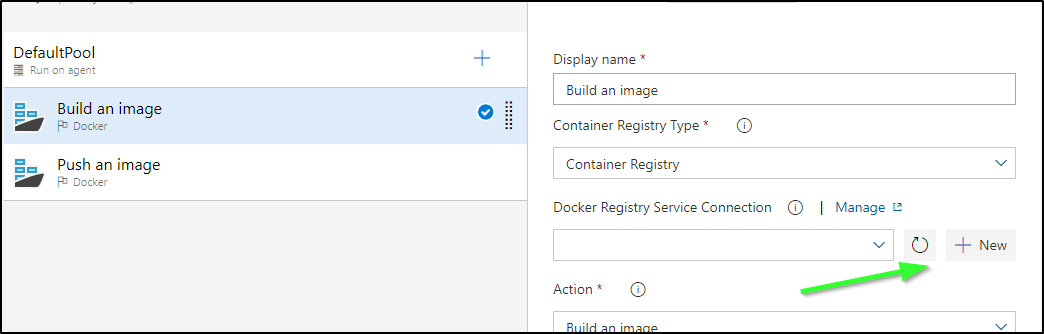

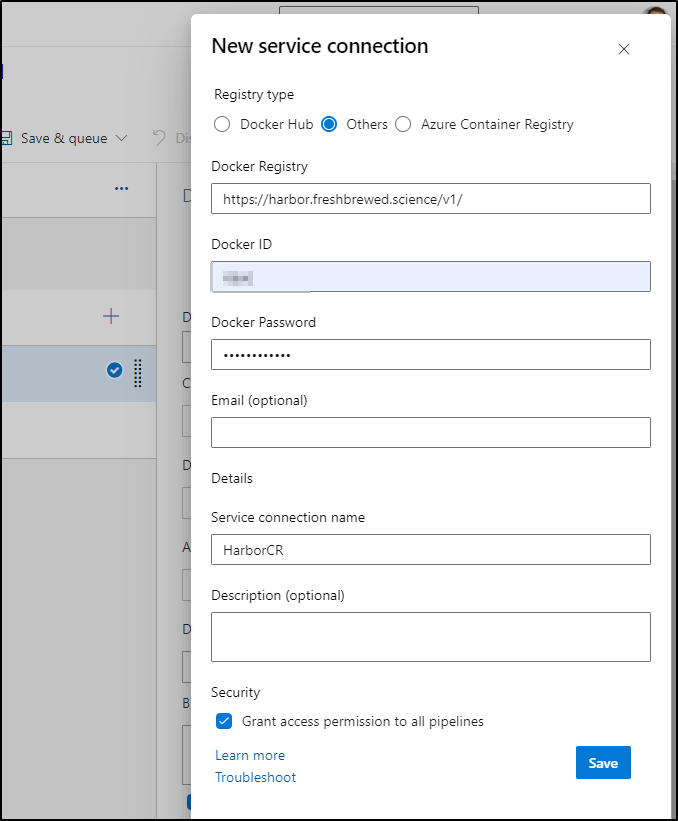

For build and push, I will need to add my CR

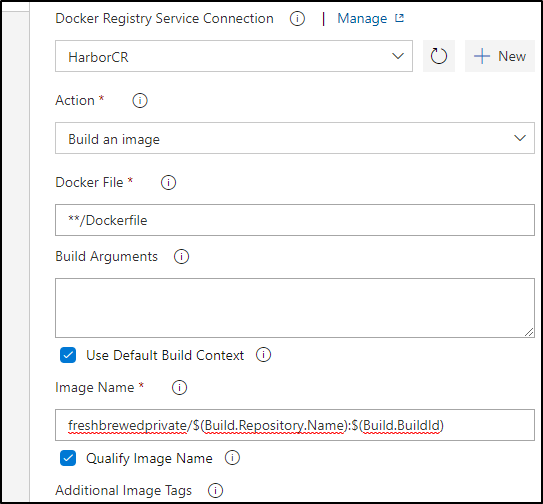

setting details

I’ll select the HarborCR connection that I created and also set the project (namespace) in the Image Name field, since Harbor, like ECR and others, usually stores images under a path.

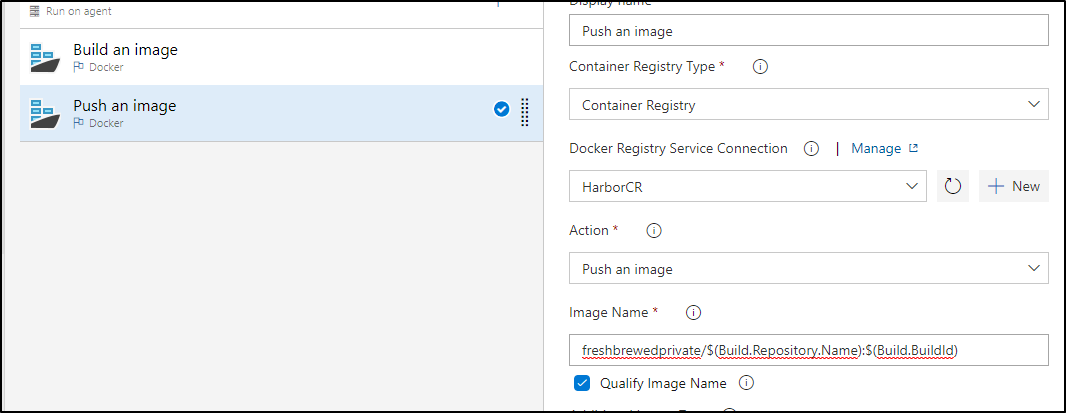

Then do the same for Push an Image

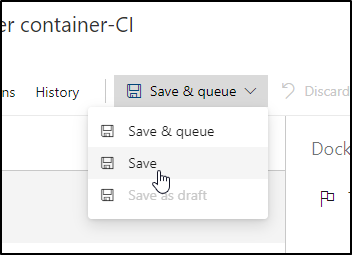

Now is a good time to save. As this is via the UI, taking a minute to save it every now and then is a good idea

I’ll copy those steps out to a new block for the first agent pool

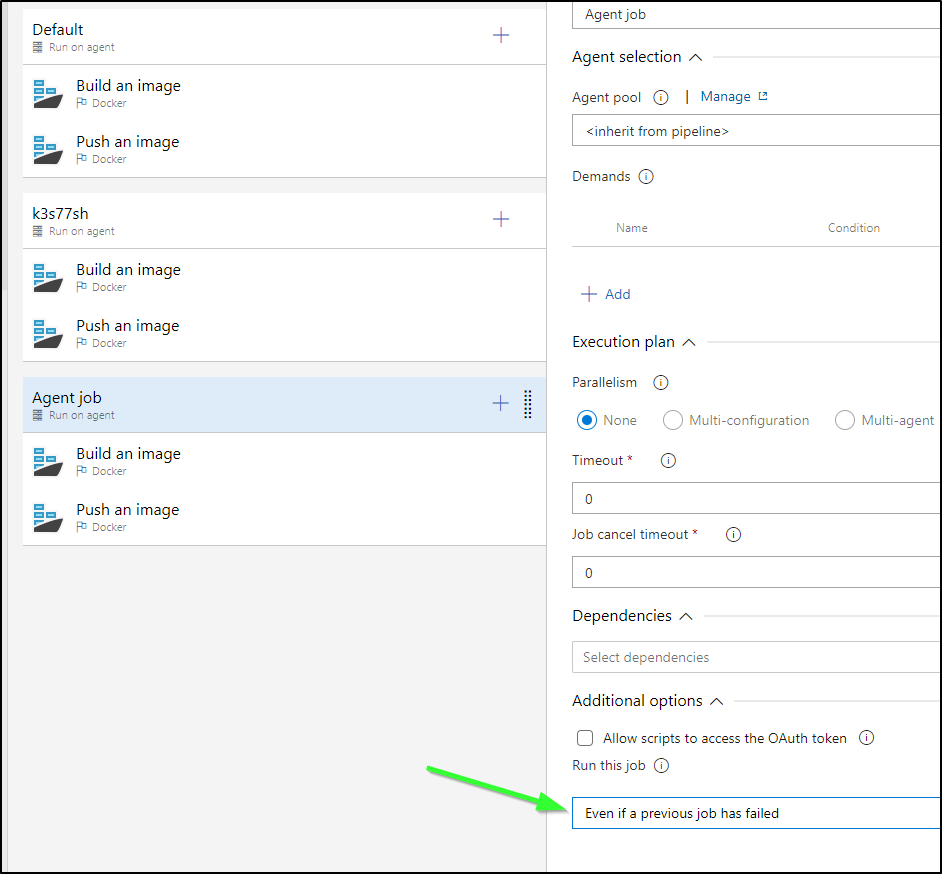

Lastly, I added a run using Azure agents. I also went to each job block and set each to run “Even if a previous job has failed”

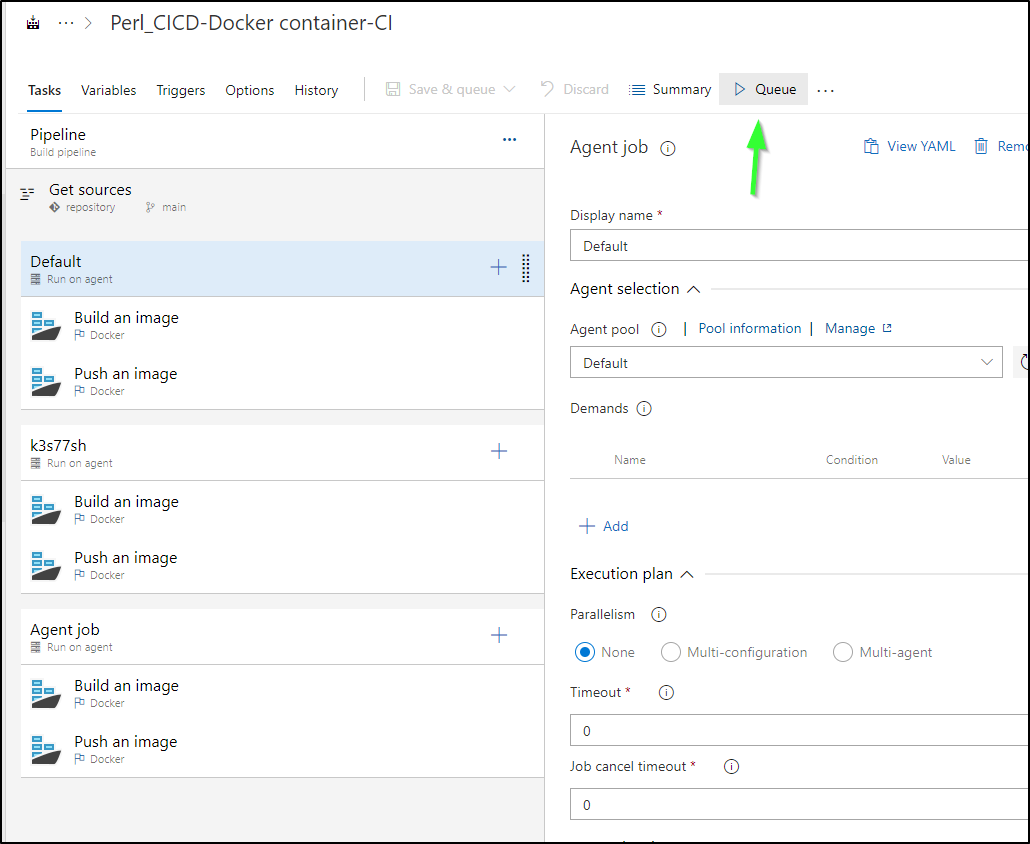

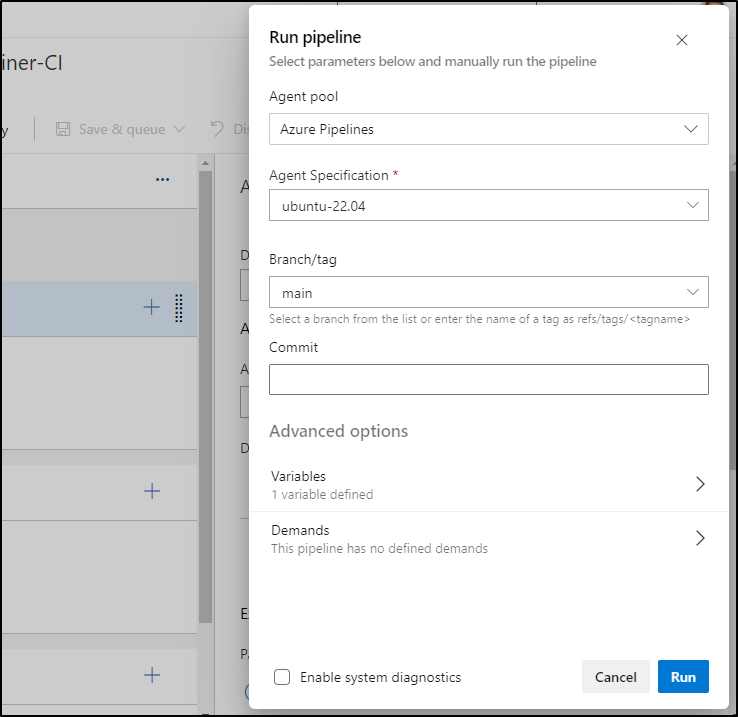

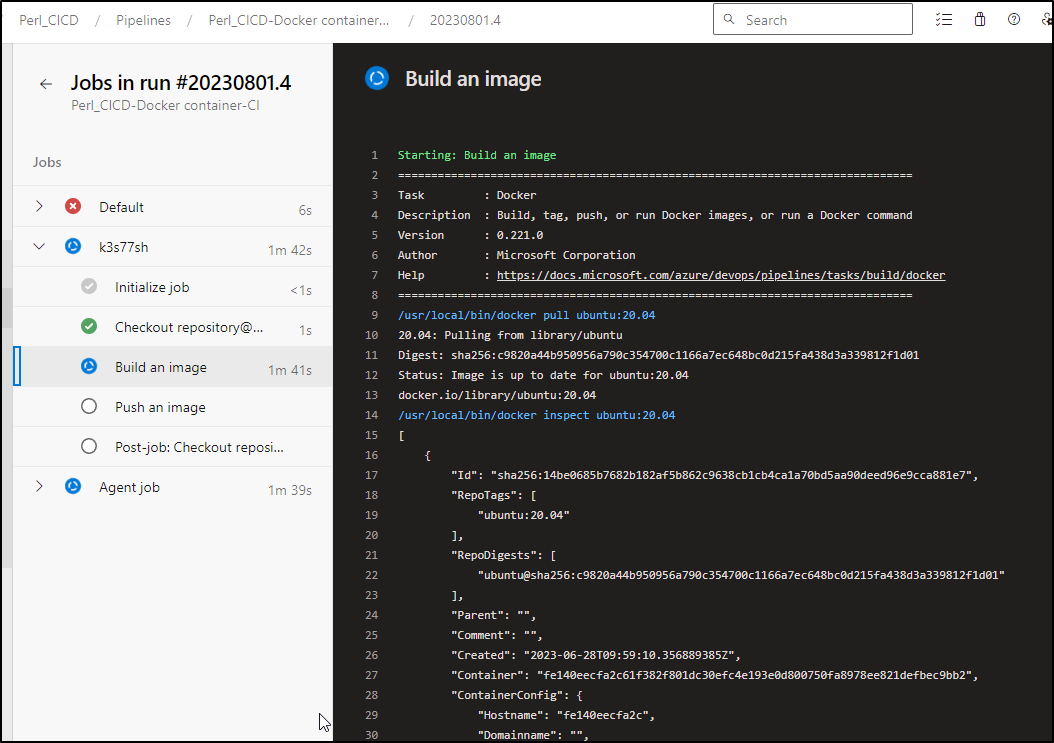

We can now Queue a run

There is no need to worry that it shows Agent Specification at the top. That applies to the pipeline as a whole and to the last job (Agent Job), which inherits it.

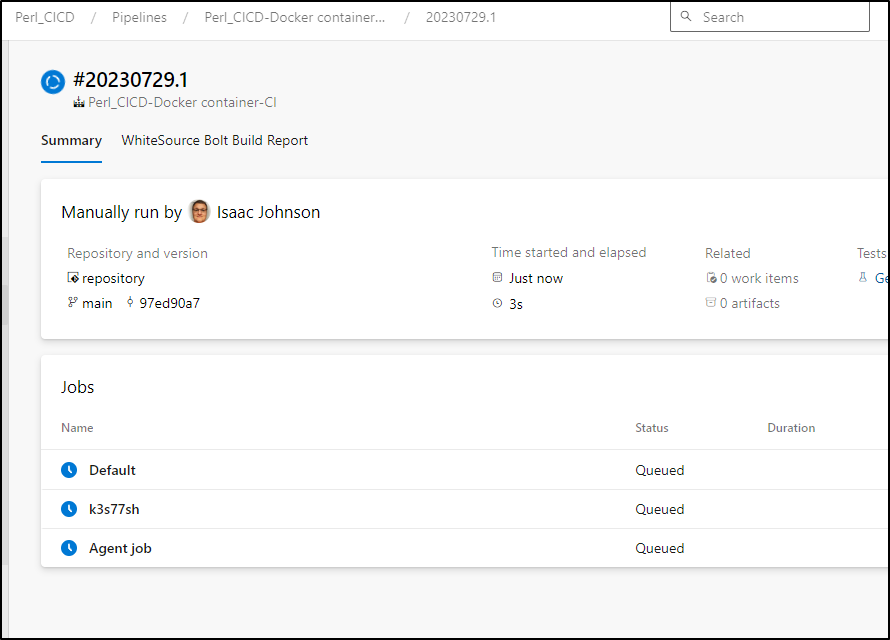

We queue things up

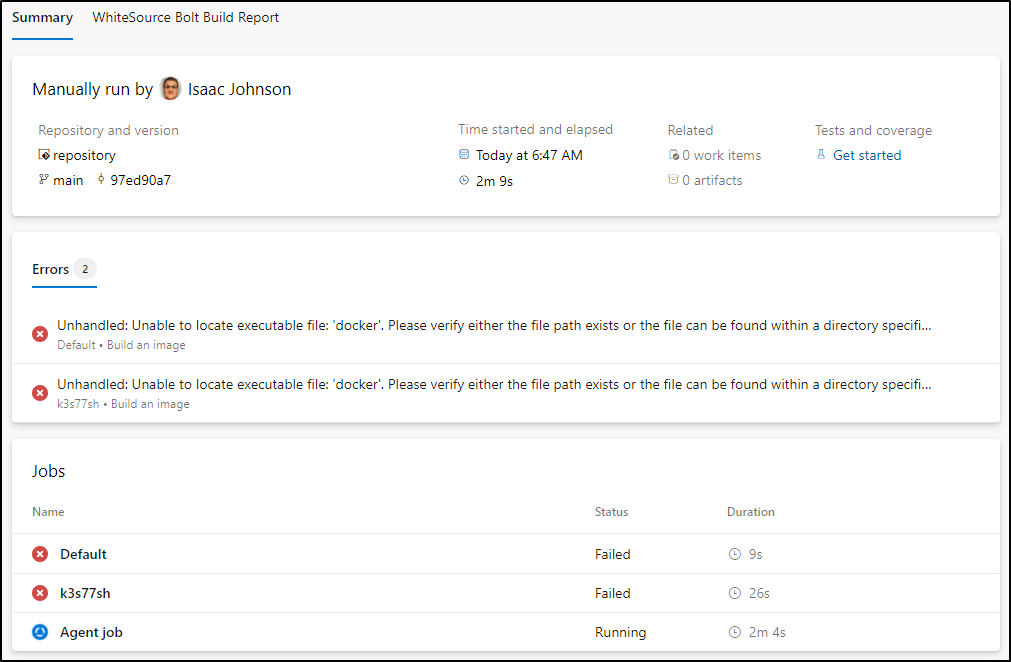

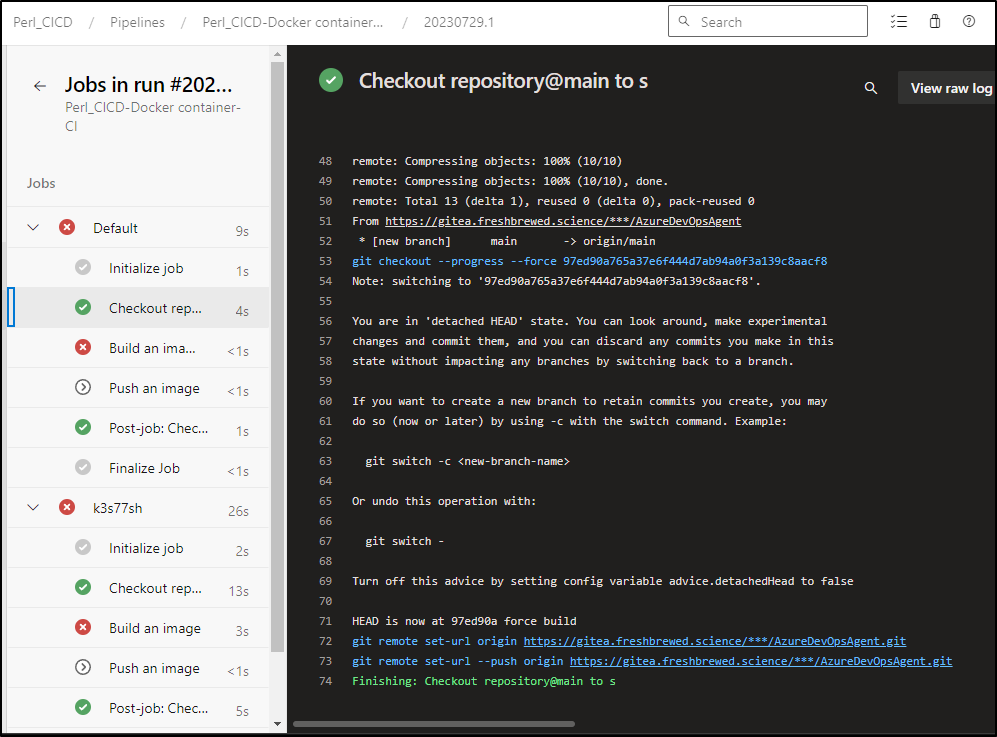

Right away we can see that our agents do not have docker installed (or usable)

Though I can confirm they pulled source
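"Not installed" and "installed but not usable" are different problems, so it can save time to check which one applies from inside the agent container. A rough diagnostic sketch follows. `check_docker_ready` is a hypothetical helper, and the default socket path is the one mounted in Deployment2.yaml.

```bash
#!/bin/bash
# Report which prerequisite for `docker build` is missing in the container.
# $1 = socket path (defaults to the path mounted in Deployment2.yaml)
check_docker_ready() {
  local sock="${1:-/var/run/docker.sock}"
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found on PATH"
    return 1
  fi
  if [ ! -S "$sock" ]; then
    echo "no Docker socket at $sock"
    return 2
  fi
  # The CLI and socket exist, so ask the daemon behind the socket to respond.
  if ! docker -H "unix://$sock" version >/dev/null 2>&1; then
    echo "socket present but daemon not reachable (permissions, or nothing listening)"
    return 3
  fi
  echo "docker is usable"
}
```

One way to run it is to paste the function into a `kubectl exec -it <pod> -- bash` session and call `check_docker_ready`. The return code tells you whether to fix the image (1) or the pod spec and node (2 or 3).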

DinD

Let’s try and add Docker in Docker so we can build containers in containers.

I’ll update my Dockerfile

$ cat Dockerfile

FROM ubuntu:20.04

RUN DEBIAN_FRONTEND=noninteractive apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get upgrade -y

RUN DEBIAN_FRONTEND=noninteractive apt-get install -y -qq --no-install-recommends \

apt-transport-https \

apt-utils \

ca-certificates \

curl \

git \

iputils-ping \

jq \

lsb-release \

software-properties-common \

libicu66

# DinD

RUN DEBIAN_FRONTEND=noninteractive apt update \

&& DEBIAN_FRONTEND=noninteractive apt install -y ca-certificates openssh-client \

wget curl iptables supervisor \

&& rm -rf /var/lib/apt/lists/*

ENV DOCKER_CHANNEL=stable \

DOCKER_VERSION=24.0.2 \

DOCKER_COMPOSE_VERSION=v2.18.1 \

BUILDX_VERSION=v0.10.4 \

DEBUG=false

# Docker and buildx installation

RUN set -eux; \

\

arch="$(uname -m)"; \

case "$arch" in \

# amd64

x86_64) dockerArch='x86_64' ; buildx_arch='linux-amd64' ;; \

# arm32v6

armhf) dockerArch='armel' ; buildx_arch='linux-arm-v6' ;; \

# arm32v7

armv7) dockerArch='armhf' ; buildx_arch='linux-arm-v7' ;; \

# arm64v8

aarch64) dockerArch='aarch64' ; buildx_arch='linux-arm64' ;; \

*) echo >&2 "error: unsupported architecture ($arch)"; exit 1 ;;\

esac; \

\

if ! wget -O docker.tgz "https://download.docker.com/linux/static/${DOCKER_CHANNEL}/${dockerArch}/docker-${DOCKER_VERSION}.tgz"; then \

echo >&2 "error: failed to download 'docker-${DOCKER_VERSION}' from '${DOCKER_CHANNEL}' for '${dockerArch}'"; \

exit 1; \

fi; \

\

tar --extract \

--file docker.tgz \

--strip-components 1 \

--directory /usr/local/bin/ \

; \

rm docker.tgz; \

if ! wget -O docker-buildx "https://github.com/docker/buildx/releases/download/${BUILDX_VERSION}/buildx-${BUILDX_VERSION}.${buildx_arch}"; then \

echo >&2 "error: failed to download 'buildx-${BUILDX_VERSION}.${buildx_arch}'"; \

exit 1; \

fi; \

mkdir -p /usr/local/lib/docker/cli-plugins; \

chmod +x docker-buildx; \

mv docker-buildx /usr/local/lib/docker/cli-plugins/docker-buildx; \

\

dockerd --version; \

docker --version; \

docker buildx version

VOLUME /var/lib/docker

# Docker compose installation

RUN curl -L "https://github.com/docker/compose/releases/download/${DOCKER_COMPOSE_VERSION}/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose \

&& chmod +x /usr/local/bin/docker-compose && docker-compose version

# Create a symlink to the docker binary in /usr/local/lib/docker/cli-plugins

# for users which uses 'docker compose' instead of 'docker-compose'

RUN ln -s /usr/local/bin/docker-compose /usr/local/lib/docker/cli-plugins/docker-compose

# AzDO

RUN curl -sL https://aka.ms/InstallAzureCLIDeb | bash

# Can be 'linux-x64', 'linux-arm64', 'linux-arm', 'rhel.6-x64'.

ENV TARGETARCH=linux-x64

WORKDIR /azp

COPY ./start.sh .

RUN chmod +x start.sh

ENTRYPOINT [ "./start.sh" ]
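The architecture `case` statement in that Dockerfile only matters if you build for something other than x86_64, but it is worth noting that 32-bit ARM kernels normally report `armv7l` or `armv6l` from `uname -m`, which the `armhf` and `armv7` patterns would not match. Below is a sketch of the same mapping as a standalone function with those values added. `map_docker_arch` and `docker_static_url` are hypothetical helpers, and the `armv6l` to `armel` pairing simply mirrors the Dockerfile's own arm32v6 choice rather than anything I have verified.

```bash
#!/bin/bash
# Map `uname -m` output to the directory name used under
# https://download.docker.com/linux/static/<channel>/
map_docker_arch() {
  case "$1" in
    x86_64)        echo "x86_64" ;;
    aarch64)       echo "aarch64" ;;
    armv7l|armv7)  echo "armhf" ;;   # 32-bit ARMv7 kernels report armv7l
    armv6l|armhf)  echo "armel" ;;   # mirrors the Dockerfile's arm32v6 mapping
    *) echo "error: unsupported architecture ($1)" >&2; return 1 ;;
  esac
}

# Build the static-binary URL the same way the Dockerfile's wget line does.
# $1 = machine architecture, $2 = Docker version (for example 24.0.2)
docker_static_url() {
  local arch
  arch="$(map_docker_arch "$1")" || return 1
  echo "https://download.docker.com/linux/static/${DOCKER_CHANNEL:-stable}/${arch}/docker-${2}.tgz"
}
```

For example, `docker_static_url "$(uname -m)" 24.0.2` prints the URL that the build would download on the current machine.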

And build a new image.

$ docker build -t azdoagenttest:0.1.0 .

[+] Building 73.6s (18/18) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 2.88kB 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/ubuntu:20.04 1.7s

=> [auth] library/ubuntu:pull token for registry-1.docker.io 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 30B 0.0s

=> [ 1/12] FROM docker.io/library/ubuntu:20.04@sha256:c9820a44b950956a790c354700c1166a7ec648bc0d215fa438d3a339812f1d01 0.0s

=> CACHED [ 2/12] RUN DEBIAN_FRONTEND=noninteractive apt-get update 0.0s

=> CACHED [ 3/12] RUN DEBIAN_FRONTEND=noninteractive apt-get upgrade -y 0.0s

=> CACHED [ 4/12] RUN DEBIAN_FRONTEND=noninteractive apt-get install -y -qq --no-install-recommends apt-transport-https apt-utils ca-certificates curl git iputils-pin 0.0s

=> [ 5/12] RUN DEBIAN_FRONTEND=noninteractive apt update && DEBIAN_FRONTEND=noninteractive apt install -y ca-certificates openssh-client wget curl iptables supervisor && rm -rf 12.7s

=> [ 6/12] RUN set -eux; arch="$(uname -m)"; case "$arch" in x86_64) dockerArch='x86_64' ; buildx_arch='linux-amd64' ;; armhf) dockerArch='armel' ; buildx_arch='linux-arm-v6' ;; ar 13.9s

=> [ 7/12] RUN curl -L "https://github.com/docker/compose/releases/download/v2.18.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose && chmod +x /usr/local/bin/docke 5.7s

=> [ 8/12] RUN ln -s /usr/local/bin/docker-compose /usr/local/lib/docker/cli-plugins/docker-compose 0.8s

=> [ 9/12] RUN curl -sL https://aka.ms/InstallAzureCLIDeb | bash 32.0s

=> [10/12] WORKDIR /azp 0.0s

=> [11/12] COPY ./start.sh . 0.0s

=> [12/12] RUN chmod +x start.sh 0.6s

=> exporting to image 4.8s

=> => exporting layers 4.8s

=> => writing image sha256:6593aa12f6c1fe56634e1fe1602bf88cbaccc9689a16868476db713844f2f5d1 0.0s

=> => naming to docker.io/library/azdoagenttest:0.1.0

That took a bit (a minute 13s).

I tagged and pushed to Dockerhub

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/AgentBuild$ docker tag azdoagenttest:0.1.0 idjohnson/azdoagent:0.1.0

builder@DESKTOP-QADGF36:~/Workspaces/AzureDevOpsAgent/AgentBuild$ docker push idjohnson/azdoagent:0.1.0

The push refers to repository [docker.io/idjohnson/azdoagent]

791df7f28c1e: Pushed

41863e3afcf7: Pushed

04f37b7b372a: Pushed

6c1a6bc2e8a6: Pushed

0488620052ad: Pushed

e5792629eab1: Pushed

a9298916d465: Pushed

d2194b8953d6: Layer already exists

73c1584a7072: Layer already exists

e4a7873884f7: Layer already exists

f5bb4f853c84: Layer already exists

0.1.0: digest: sha256:4f9c9622a2d51907258d7c5dce0c67d2e254f3d1ab75f133f5d833ed42055002 size: 2837

I’ll try on the helm-based images

$ helm upgrade --install azdoagenthelm btungut/azure-devops-agent --set agent.pat=3p3qrxzaufxr57dndcamwvqtvaeatdxkt3zidlcoux67wwr6eria --set agent.organizationUrl=https://dev.azure.com/princessking --set image.repository=idjohnson/azdoagent --set image.tag=0.1.1 --namespace azdoagents --create-namespace

Release "azdoagenthelm" has been upgraded. Happy Helming!

NAME: azdoagenthelm

LAST DEPLOYED: Sun Jul 30 11:16:53 2023

NAMESPACE: azdoagents

STATUS: deployed

REVISION: 5

TEST SUITE: None

And the deployment

$ kubectl edit deployment azdoagenthelm-azure-devops-agent -n azdoagents

These too failed to connect to the docker socket.

I tried using the GitHub Actions RunnerDeployment (from actions-runner-controller) with the AzDO agent image

apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-azdo-deployment
spec:
  template:
    spec:
      repository: idjohnson/ansible-playbooks
      image: idjohnson/azdoagent:0.1.0
      imagePullPolicy: IfNotPresent
      dockerEnabled: true
      env:
      - name: AZP_POOL
        value: k3s77-self-hosted
      - name: AZP_URL
        value: https://dev.azure.com/princessking
      - name: AZP_TOKEN
        valueFrom:
          secretKeyRef:
            name: azdopat
            key: azdopat
            optional: false
      labels:
      - my-azdo-deployment

It seemed to come up okay

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl logs my-azdo-deployment-ghmfn-q44ql

Defaulted container "runner" out of: runner, docker

1. Determining matching Azure Pipelines agent...

2. Downloading and extracting Azure Pipelines agent...

3. Configuring Azure Pipelines agent...

___ ______ _ _ _

/ _ \ | ___ (_) | (_)

/ /_\ \_____ _ _ __ ___ | |_/ /_ _ __ ___| |_ _ __ ___ ___

| _ |_ / | | | '__/ _ \ | __/| | '_ \ / _ \ | | '_ \ / _ \/ __|

| | | |/ /| |_| | | | __/ | | | | |_) | __/ | | | | | __/\__ \

\_| |_/___|\__,_|_| \___| \_| |_| .__/ \___|_|_|_| |_|\___||___/

| |

agent v3.224.1 |_| (commit 7861f5f)

>> End User License Agreements:

Building sources from a TFVC repository requires accepting the Team Explorer Everywhere End User License Agreement. This step is not required for building sources from Git repositories.

A copy of the Team Explorer Everywhere license agreement can be found at:

/azp/license.html

>> Connect:

Connecting to server ...

>> Register Agent:

Scanning for tool capabilities.

Connecting to the server.

Successfully added the agent

Testing agent connection.

2023-08-01 11:32:42Z: Settings Saved.

4. Running Azure Pipelines agent...

Scanning for tool capabilities.

Connecting to the server.

2023-08-01 11:32:44Z: Listening for Jobs

2023-08-01 11:33:18Z: Running job: k3s77sh

2023-08-01 11:39:43Z: Job k3s77sh completed with result: Failed

2023-08-01 11:46:01Z: Running job: k3s77sh

To make sure I was using these “RunnerDeployment” agents, I disabled the former in the UI for now

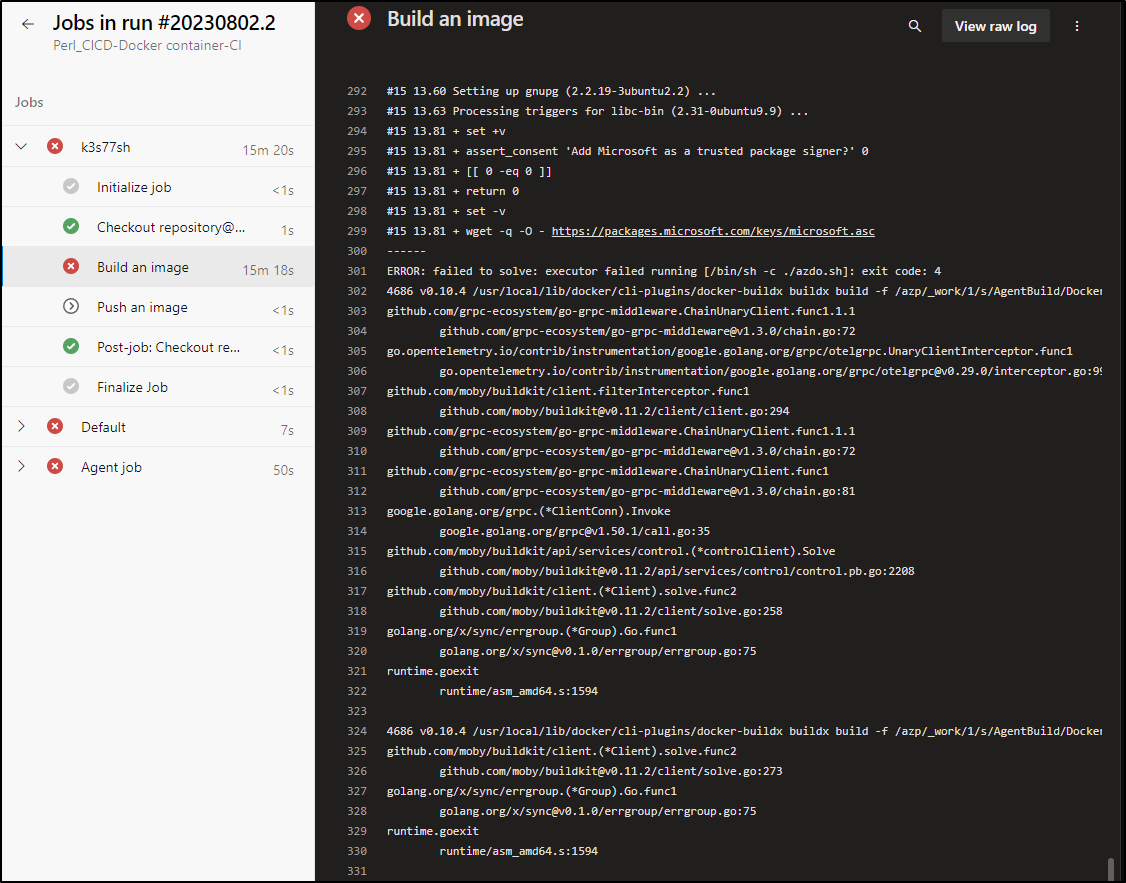

The example build made it much farther, actually starting to build a docker image, but failing on the AzDO build step near the bottom (where it tries to load from RUN curl -sL https://aka.ms/InstallAzureCLIDeb | bash)

I had one last idea: perhaps the dynamic curl-and-bash install just was not jibing here. I copied down the script behind that aka.ms URL and used it as a local bash script

That failed as well.

Note: in the next post we’ll cover some implications here, but be warned: do NOT leave this RunnerDeployment running. Delete it after you test

I thought perhaps if I forced the pods to use the main node, the one I know has a working Docker, I could isolate any of the other node issues

$ kubectl edit deployment azdevops-deployment

error: deployments.apps "azdevops-deployment" is invalid

deployment.apps/azdevops-deployment edited

$ kubectl get pods | grep az

azure-vote-back-6fcdc5cbd5-wgj8q 1/1 Running 3 (79d ago) 374d

vote-back-azure-vote-7ffdcdbb9d-fgnjn 1/1 Running 3 (79d ago) 199d

vote-front-azure-vote-7ddd5967c8-s4zvn 1/1 Running 3 (79d ago) 375d

azure-vote-front-5f4b8d498-9ddsj 1/1 Running 5 (17h ago) 34d

azdevops-deployment-77f9c4df6b-n4rp9 1/1 Running 0 7s

azdevops-deployment-656db94858-whnpr 1/1 Terminating 15 (17h ago) 5d14h

azdevops-deployment-77f9c4df6b-vh5xh 1/1 Running 0 5s

azdevops-deployment-656db94858-dhrjh 1/1 Terminating 0 5d4h

I just added nodeName to the spec

$ kubectl get deployment azdevops-deployment -o yaml | grep -C 10 nodeName

cpu: 130m

memory: 300Mi

securityContext:

privileged: true

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /var/run/docker.sock

name: docker-graph-storage

dnsPolicy: ClusterFirst

nodeName: isaac-macbookair

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

volumes:

- hostPath:

path: /var/run/docker.sock

type: ""

name: docker-graph-storage

status:

When paired with this Dockerfile (I did many iterations)

FROM ubuntu:20.04

RUN DEBIAN_FRONTEND=noninteractive apt-get update

RUN DEBIAN_FRONTEND=noninteractive apt-get upgrade -y

RUN DEBIAN_FRONTEND=noninteractive apt-get install -y -qq --no-install-recommends \

apt-transport-https \

apt-utils \

ca-certificates \

curl \

git \

iputils-ping \

jq \

lsb-release \

software-properties-common \

libicu66

# DinD

RUN DEBIAN_FRONTEND=noninteractive apt update \

&& DEBIAN_FRONTEND=noninteractive apt install -y ca-certificates openssh-client \

wget curl iptables supervisor \

&& rm -rf /var/lib/apt/lists/*

ENV DOCKER_CHANNEL=stable \

DOCKER_VERSION=24.0.2 \

DOCKER_COMPOSE_VERSION=v2.18.1 \

BUILDX_VERSION=v0.10.4 \

DEBUG=false

# Docker and buildx installation

RUN set -eux; \

\

arch="$(uname -m)"; \

case "$arch" in \

# amd64

x86_64) dockerArch='x86_64' ; buildx_arch='linux-amd64' ;; \

# arm32v6

armhf) dockerArch='armel' ; buildx_arch='linux-arm-v6' ;; \

# arm32v7

armv7) dockerArch='armhf' ; buildx_arch='linux-arm-v7' ;; \

# arm64v8

aarch64) dockerArch='aarch64' ; buildx_arch='linux-arm64' ;; \

*) echo >&2 "error: unsupported architecture ($arch)"; exit 1 ;;\

esac; \

\

if ! wget -O docker.tgz "https://download.docker.com/linux/static/${DOCKER_CHANNEL}/${dockerArch}/docker-${DOCKER_VERSION}.tgz"; then \

echo >&2 "error: failed to download 'docker-${DOCKER_VERSION}' from '${DOCKER_CHANNEL}' for '${dockerArch}'"; \

exit 1; \

fi; \

\

tar --extract \

--file docker.tgz \

--strip-components 1 \

--directory /usr/local/bin/ \

; \

rm docker.tgz; \

if ! wget -O docker-buildx "https://github.com/docker/buildx/releases/download/${BUILDX_VERSION}/buildx-${BUILDX_VERSION}.${buildx_arch}"; then \

echo >&2 "error: failed to download 'buildx-${BUILDX_VERSION}.${buildx_arch}'"; \

exit 1; \

fi; \

mkdir -p /usr/local/lib/docker/cli-plugins; \

chmod +x docker-buildx; \

mv docker-buildx /usr/local/lib/docker/cli-plugins/docker-buildx; \

\

dockerd --version; \

docker --version; \

docker buildx version

VOLUME /var/lib/docker

# Docker compose installation

RUN curl -L "https://github.com/docker/compose/releases/download/${DOCKER_COMPOSE_VERSION}/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose \

&& chmod +x /usr/local/bin/docker-compose && docker-compose version

# Create a symlink to the docker binary in /usr/local/lib/docker/cli-plugins

# for users which uses 'docker compose' instead of 'docker-compose'

RUN ln -s /usr/local/bin/docker-compose /usr/local/lib/docker/cli-plugins/docker-compose

# AzDO

RUN curl -sL https://aka.ms/InstallAzureCLIDeb | bash

# Can be 'linux-x64', 'linux-arm64', 'linux-arm', 'rhel.6-x64'.

ENV TARGETARCH=linux-x64

WORKDIR /azp

COPY ./start.sh .

RUN chmod +x start.sh

ENTRYPOINT [ "./start.sh" ]

and start.sh

#!/bin/bash

set -e

if [ -z "$AZP_URL" ]; then

echo 1>&2 "error: missing AZP_URL environment variable"

exit 1

fi

if [ -z "$AZP_TOKEN_FILE" ]; then

if [ -z "$AZP_TOKEN" ]; then

echo 1>&2 "error: missing AZP_TOKEN environment variable"

exit 1

fi

AZP_TOKEN_FILE=/azp/.token

echo -n "$AZP_TOKEN" > "$AZP_TOKEN_FILE"

fi

unset AZP_TOKEN

if [ -n "$AZP_WORK" ]; then

mkdir -p "$AZP_WORK"

fi

export AGENT_ALLOW_RUNASROOT="1"

cleanup() {

if [ -e config.sh ]; then

print_header "Cleanup. Removing Azure Pipelines agent..."

# If the agent has some running jobs, the configuration removal process will fail.

# So, give it some time to finish the job.

while true; do

./config.sh remove --unattended --auth PAT --token $(cat "$AZP_TOKEN_FILE") && break

echo "Retrying in 30 seconds..."

sleep 30

done

fi

}

print_header() {

lightcyan='\033[1;36m'

nocolor='\033[0m'

echo -e "${lightcyan}$1${nocolor}"

}

# Let the agent ignore the token env variables

export VSO_AGENT_IGNORE=AZP_TOKEN,AZP_TOKEN_FILE

print_header "1. Determining matching Azure Pipelines agent..."

AZP_AGENT_PACKAGES=$(curl -LsS \

-u user:$(cat "$AZP_TOKEN_FILE") \

-H 'Accept:application/json;' \

"$AZP_URL/_apis/distributedtask/packages/agent?platform=$TARGETARCH&top=1")

AZP_AGENT_PACKAGE_LATEST_URL=$(echo "$AZP_AGENT_PACKAGES" | jq -r '.value[0].downloadUrl')

if [ -z "$AZP_AGENT_PACKAGE_LATEST_URL" -o "$AZP_AGENT_PACKAGE_LATEST_URL" == "null" ]; then

echo 1>&2 "error: could not determine a matching Azure Pipelines agent"

echo 1>&2 "check that account '$AZP_URL' is correct and the token is valid for that account"

exit 1

fi

print_header "2. Downloading and extracting Azure Pipelines agent..."

curl -LsS $AZP_AGENT_PACKAGE_LATEST_URL | tar -xz & wait $!

source ./env.sh

trap 'cleanup; exit 0' EXIT

trap 'cleanup; exit 130' INT

trap 'cleanup; exit 143' TERM

print_header "3. Configuring Azure Pipelines agent..."

./config.sh --unattended \

--agent "${AZP_AGENT_NAME:-$(hostname)}" \

--url "$AZP_URL" \

--auth PAT \

--token $(cat "$AZP_TOKEN_FILE") \

--pool "${AZP_POOL:-Default}" \

--work "${AZP_WORK:-_work}" \

--replace \

--acceptTeeEula & wait $!

print_header "4. Running Azure Pipelines agent..."

trap 'cleanup; exit 0' EXIT

trap 'cleanup; exit 130' INT

trap 'cleanup; exit 143' TERM

chmod +x ./run.sh

# To be aware of TERM and INT signals call run.sh

# Running it with the --once flag at the end will shut down the agent after the build is executed

./run.sh "$@" & wait $!
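The `& wait $!` idiom at the end of start.sh is what lets the cleanup trap fire promptly. Bash defers a trapped signal until a foreground command finishes, but the `wait` builtin returns as soon as the signal arrives. Here is a minimal sketch of that behavior, using `sleep` as a stand-in for run.sh; the function name `demo_trap_wait` is made up for illustration, and nothing here talks to Azure DevOps.

```shell
#!/bin/bash
# Demonstrates the "run in background, then wait" idiom with a TERM trap.
# A stand-in `sleep` plays the role of run.sh (assumption for illustration).

demo_trap_wait() {
  (
    # On TERM: stop the child, report, and exit with the conventional 128+15.
    trap 'kill "$child" 2>/dev/null; echo "cleanup ran"; exit 143' TERM
    sleep 30 &
    child=$!
    # `wait` is interruptible, so the trap runs as soon as TERM arrives.
    wait "$child"
  ) &
  local pid=$!
  sleep 1              # give the subshell time to install its trap
  kill -TERM "$pid"    # simulate Kubernetes stopping the pod
  wait "$pid"
  echo "exit status: $?"
}

demo_trap_wait
```

Run as written, this should print "cleanup ran" followed by "exit status: 143" after about a second, rather than waiting out the full 30-second sleep.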

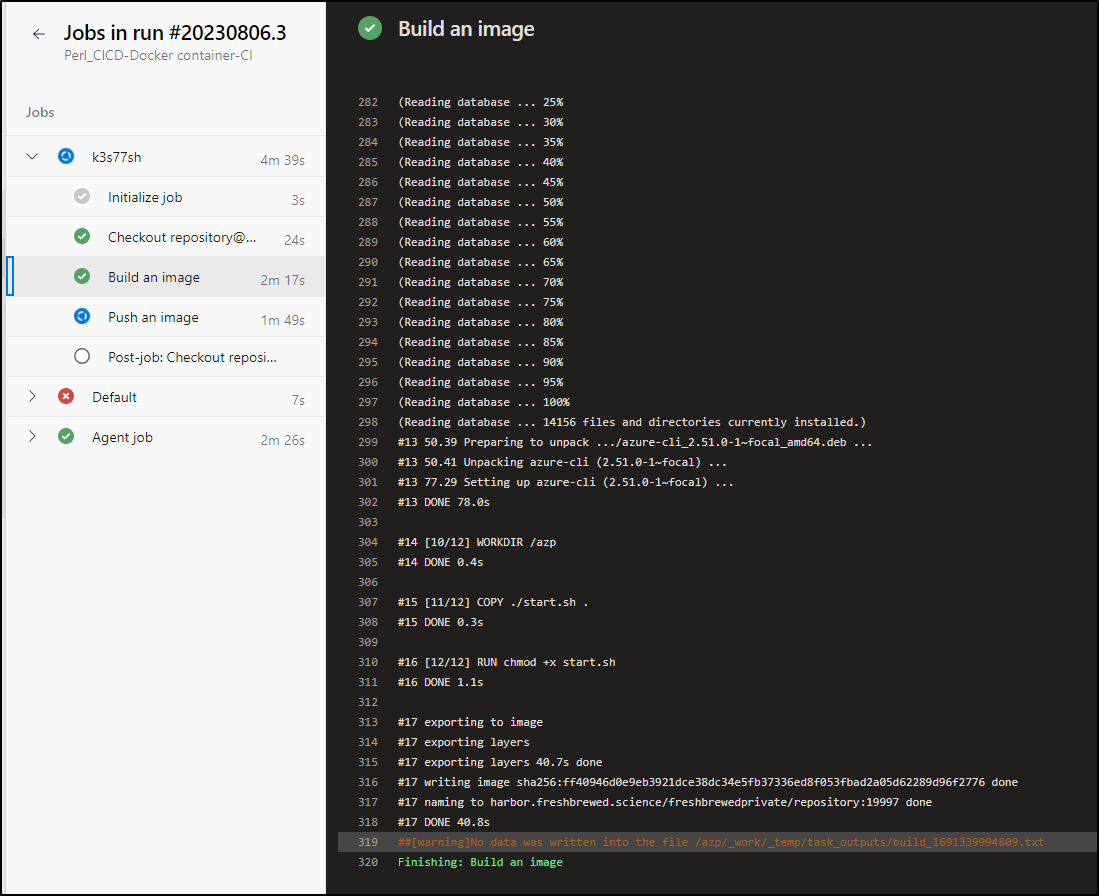

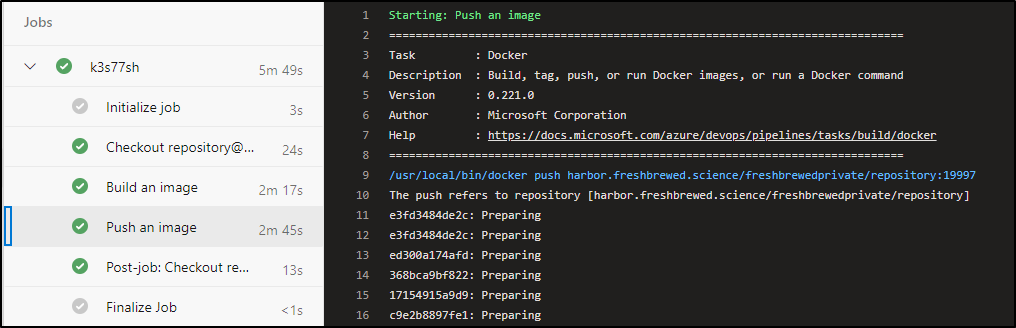

I finally got it to build
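As an aside, the nodeName change I made with kubectl edit could likely be applied non-interactively with a merge patch. This is a sketch I did not run as part of this build; the helper name `node_pin_patch` is hypothetical, and the deployment and node names are the ones used above.

```shell
#!/bin/bash
# Build a JSON merge patch that pins a Deployment's pods to a single node.
node_pin_patch() {
  local node="$1"
  printf '{"spec":{"template":{"spec":{"nodeName":"%s"}}}}' "$node"
}

# Print the patch so it can be inspected before it is applied.
node_pin_patch "isaac-macbookair"; echo

# To apply it (requires kubectl and access to the cluster):
#   kubectl patch deployment azdevops-deployment --type merge \
#     -p "$(node_pin_patch isaac-macbookair)"
```

Keep in mind that nodeName bypasses the scheduler entirely, so this is best kept as a debugging measure.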

Summary

Let’s speak about what we built out here.

We created a Docker-based Azure DevOps private agent and launched it into K8s. It associates with our Azure DevOps organization using a PAT and mounts the host’s docker.sock to provide Docker build support

apiVersion: apps/v1

kind: Deployment

metadata:

name: azdevops-deployment

spec:

replicas: 2

progressDeadlineSeconds: 1800

selector:

matchLabels:

app: azdevops-agent

template:

metadata:

labels:

app: azdevops-agent

spec:

containers:

- name: selfhosted-agents

image: idjohnson/azdoagent:0.1.0

resources:

requests:

cpu: "130m"

memory: "300Mi"

limits:

cpu: "130m"

memory: "300Mi"

env:

- name: AZP_POOL

value: k3s77-self-hosted

- name: AZP_URL

value: https://dev.azure.com/princessking

- name: AZP_TOKEN

valueFrom:

secretKeyRef:

name: azdopat

key: azdopat

optional: false

volumeMounts:

- name: docker-graph-storage

mountPath: /var/run/docker.sock

nodeName: isaac-macbookair

volumes:

- name: docker-graph-storage

hostPath:

path: /var/run/docker.sock

We then used this deployed agent to build itself and push to HarborCR

using a code-less Azure Classic Build Pipeline:

To add a bit more fun, we fetched from a Gitea repo hosted within the same Kubernetes cluster

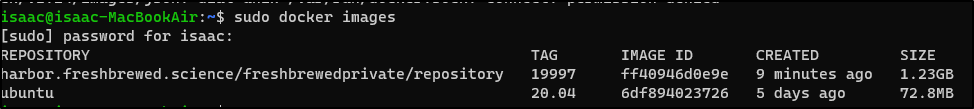

I should point out that because we used the docker.sock on the host, I can see the image on that host if I go there directly

VM (Or machine) based

This is perhaps the simpler case, but one worth reviewing.

As we just reviewed, we are on the main node of the cluster and can see it has a built image

isaac@isaac-MacBookAir:~$ sudo docker images

[sudo] password for isaac:

REPOSITORY TAG IMAGE ID CREATED SIZE

harbor.freshbrewed.science/freshbrewedprivate/repository 19997 ff40946d0e9e 9 minutes ago 1.23GB

ubuntu 20.04 6df894023726 5 days ago 72.8MB

We can host an AzDO agent here as well.

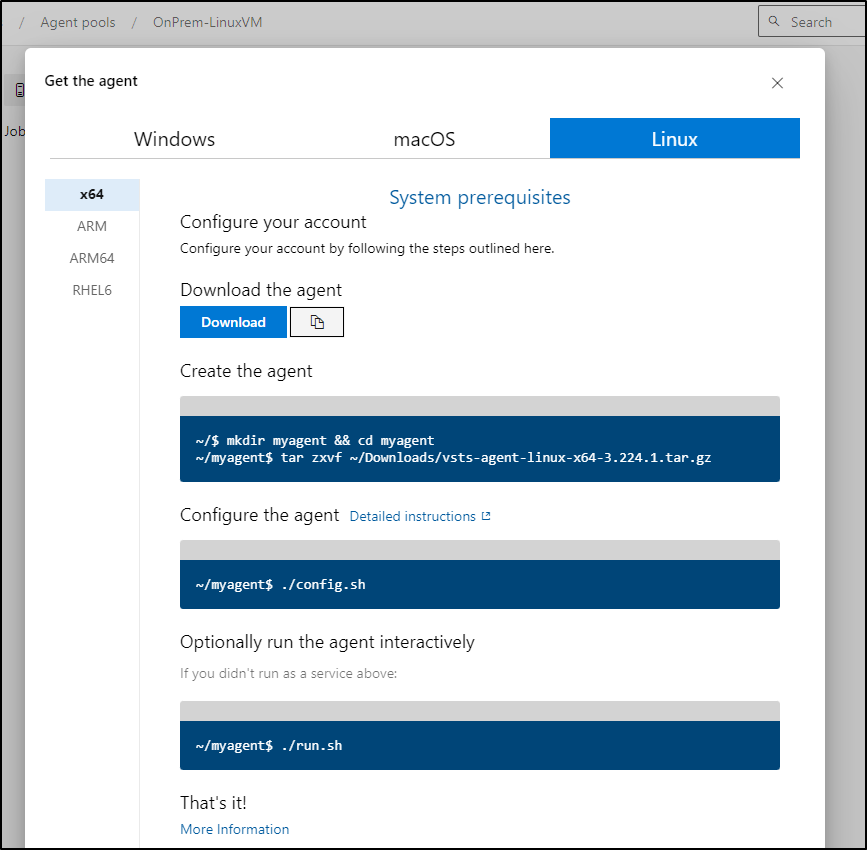

We start by adding a new agent pool

We can then add a new agent using the steps

First, we download and expand

isaac@isaac-MacBookAir:~$ wget https://vstsagentpackage.azureedge.net/agent/3.224.1/vsts-agent-linux-x64-3.224.1.tar.gz

--2023-08-06 11:53:34-- https://vstsagentpackage.azureedge.net/agent/3.224.1/vsts-agent-linux-x64-3.224.1.tar.gz

Resolving vstsagentpackage.azureedge.net (vstsagentpackage.azureedge.net)... 72.21.81.200, 2606:2800:11f:17a5:191a:18d5:537:22f9

Connecting to vstsagentpackage.azureedge.net (vstsagentpackage.azureedge.net)|72.21.81.200|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 159407977 (152M) [application/octet-stream]

Saving to: ‘vsts-agent-linux-x64-3.224.1.tar.gz’

vsts-agent-linux-x64-3.224.1.tar.gz 100%[================================================================>] 152.02M 9.83MB/s in 15s

2023-08-06 11:53:49 (10.2 MB/s) - ‘vsts-agent-linux-x64-3.224.1.tar.gz’ saved [159407977/159407977]

isaac@isaac-MacBookAir:~$ mkdir myagent

isaac@isaac-MacBookAir:~$ cd myagent/

isaac@isaac-MacBookAir:~/myagent$ tar zxf ../vsts-agent-linux-x64-3.224.1.tar.gz

Now I can configure the agent

isaac@isaac-MacBookAir:~/myagent$ ./config.sh

___ ______ _ _ _

/ _ \ | ___ (_) | (_)

/ /_\ \_____ _ _ __ ___ | |_/ /_ _ __ ___| |_ _ __ ___ ___

| _ |_ / | | | '__/ _ \ | __/| | '_ \ / _ \ | | '_ \ / _ \/ __|

| | | |/ /| |_| | | | __/ | | | | |_) | __/ | | | | | __/\__ \

\_| |_/___|\__,_|_| \___| \_| |_| .__/ \___|_|_|_| |_|\___||___/

| |

agent v3.224.1 |_| (commit 7861f5f)

>> End User License Agreements:

Building sources from a TFVC repository requires accepting the Team Explorer Everywhere End User License Agreement. This step is not required for building sources from Git repositories.

A copy of the Team Explorer Everywhere license agreement can be found at:

/home/isaac/myagent/license.html

Enter (Y/N) Accept the Team Explorer Everywhere license agreement now? (press enter for N) > Y

>> Connect:

Enter server URL > https://dev.azure.com/princessking

Enter authentication type (press enter for PAT) >

Enter personal access token > ****************************************************

Connecting to server ...

>> Register Agent:

Enter agent pool (press enter for default) > OnPrem-LinuxVM

Enter agent name (press enter for isaac-MacBookAir) >

Scanning for tool capabilities.

Connecting to the server.

Successfully added the agent

Testing agent connection.

Enter work folder (press enter for _work) >

2023-08-06 16:56:24Z: Settings Saved.
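The same prompts can be answered up front using the unattended flags that start.sh already passes to config.sh. Below is a rough sketch of that, not something I ran for this post. The helper `build_config_args` and the token file path `$HOME/.azdo_pat` are assumptions for illustration; the flags themselves are the ones shown in start.sh.

```shell
#!/bin/bash
# Assemble the arguments for an unattended ./config.sh run.
# Args: org URL, pool name, file holding the PAT, optional agent name.
build_config_args() {
  local url="$1" pool="$2" token_file="$3" name="${4:-$(hostname)}"
  CONFIG_ARGS=(
    --unattended
    --agent "$name"
    --url "$url"
    --auth PAT
    --token "$(cat "$token_file")"
    --pool "$pool"
    --work _work
    --acceptTeeEula
  )
}

# Only attempt configuration when run from the agent folder and the
# (hypothetical) token file exists.
if [ -x ./config.sh ] && [ -r "$HOME/.azdo_pat" ]; then
  build_config_args "https://dev.azure.com/princessking" "OnPrem-LinuxVM" "$HOME/.azdo_pat"
  ./config.sh "${CONFIG_ARGS[@]}"
fi
```

Reading the PAT from a file keeps it out of shell history, which is the same reason start.sh writes it to a token file.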

For reference, I grabbed the same PAT I used for the k8s agents using a quick kubectl command

$ kubectl get secrets azdopat -o json | jq -r .data.azdopat | base64 --decode

3asdfsadfasdfasdfasdfasdfasdfasdfasdfasdfasdfasfasdfasdfa

We could use ./run.sh but that isn’t very helpful as it’s interactive and would only last as long as my shell. I could use nohup ./run.sh & to background it, but neither is necessary as there is an easy way to just run it as a service.

isaac@isaac-MacBookAir:~/myagent$ sudo ./svc.sh install

Creating launch agent in /etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service

Run as user: isaac

Run as uid: 1000

gid: 1000

Created symlink /etc/systemd/system/multi-user.target.wants/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service → /etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service.

isaac@isaac-MacBookAir:~/myagent$ sudo ./svc.sh start

/etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service

● vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service - Azure Pipelines Agent (princessking.OnPrem-LinuxVM.isaac-MacBookAir)

Loaded: loaded (/etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-08-06 11:59:22 CDT; 25ms ago

Main PID: 3769840 (runsvc.sh)

Tasks: 3 (limit: 9318)

Memory: 1.2M

CGroup: /system.slice/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service

├─3769840 /bin/bash /home/isaac/myagent/runsvc.sh

└─3769843 ./externals/node16/bin/node ./bin/AgentService.js

Aug 06 11:59:22 isaac-MacBookAir systemd[1]: Started Azure Pipelines Agent (princessking.OnPrem-LinuxVM.isaac-MacBookAir).

Aug 06 11:59:22 isaac-MacBookAir runsvc.sh[3769840]: .path=/home/linuxbrew/.linuxbrew/bin:/home/linuxbrew/.linuxbrew/sbin:/usr/loca…/snap/bin

Aug 06 11:59:23 isaac-MacBookAir runsvc.sh[3769843]: Starting Agent listener with startup type: service

Aug 06 11:59:23 isaac-MacBookAir runsvc.sh[3769843]: Started listener process

Aug 06 11:59:23 isaac-MacBookAir runsvc.sh[3769843]: Started running service

Hint: Some lines were ellipsized, use -l to show in full.

One thing I will do is set up passwordless sudo, since a password prompt would likely make things hard for a build agent.

isaac@isaac-MacBookAir:~/myagent$ sudo cat /etc/sudoers | grep isaac

isaac ALL=(ALL:ALL) NOPASSWD:ALL

I’ll change up the AzDO pipeline to test using this agent

I got an error about docker permissions

/usr/bin/docker pull ubuntu:20.04

Got permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Post http://%2Fvar%2Frun%2Fdocker.sock/v1.24/images/create?fromImage=ubuntu&tag=20.04: dial unix /var/run/docker.sock: connect: permission denied

/usr/bin/docker inspect ubuntu:20.04

Got permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Get http://%2Fvar%2Frun%2Fdocker.sock/v1.24/containers/ubuntu:20.04/json: dial unix /var/run/docker.sock: connect: permission denied

[]

/usr/bin/docker build -f /home/***/myagent/_work/1/s/AgentBuild/Dockerfile -t harbor.freshbrewed.science/freshbrewedprivate/repository:19998 --label com.azure.dev.image.system.teamfoundationcollectionuri=https://dev.azure.com/princessking/ --label com.azure.dev.image.build.sourceversion=436785ec1455b0c0c406189e773395b7893b2c02 --label image.base.ref.name=ubuntu:20.04 /home/***/myagent/_work/1/s/AgentBuild

Got permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Post http://%2Fvar%2Frun%2Fdocker.sock/v1.24/build?buildargs=%7B%7D&cachefrom=%5B%5D&cgroupparent=&cpuperiod=0&cpuquota=0&cpusetcpus=&cpusetmems=&cpushares=0&dockerfile=Dockerfile&labels=%7B%22com.azure.dev.image.build.sourceversion%22%3A%22436785ec1455b0c0c406189e773395b7893b2c02%22%2C%22com.azure.dev.image.system.teamfoundationcollectionuri%22%3A%22https%3A%2F%2Fdev.azure.com%2Fprincessking%2F%22%2C%22image.base.ref.name%22%3A%22ubuntu%3A20.04%22%7D&memory=0&memswap=0&networkmode=default&rm=1&shmsize=0&t=harbor.freshbrewed.science%2Ffreshbrewedprivate%2Frepository%3A19998&target=&ulimits=null&version=1: dial unix /var/run/docker.sock: connect: permission denied

##[error]Got permission denied while trying to connect to the Docker daemon socket at unix:///var/run/docker.sock: Post http://%2Fvar%2Frun%2Fdocker.sock/v1.24/build?buildargs=%7B%7D&cachefrom=%5B%5D&cgroupparent=&cpuperiod=0&cpuquota=0&cpusetcpus=&cpusetmems=&cpushares=0&dockerfile=Dockerfile&labels=%7B%22com.azure.dev.image.build.sourceversion%22%3A%22436785ec1455b0c0c406189e773395b7893b2c02%22%2C%22com.azure.dev.image.system.teamfoundationcollectionuri%22%3A%22https%3A%2F%2Fdev.azure.com%2Fprincessking%2F%22%2C%22image.base.ref.name%22%3A%22ubuntu%3A20.04%22%7D&memory=0&memswap=0&networkmode=default&rm=1&shmsize=0&t=harbor.freshbrewed.science%2Ffreshbrewedprivate%2Frepository%3A19998&target=&ulimits=null&version=1: dial unix /var/run/docker.sock: connect: permission denied

##[error]The process '/usr/bin/docker' failed with exit code 1

Finishing: Build an image

which was easy to correct on the host by adding our user to a new docker group

isaac@isaac-MacBookAir:~/myagent$ sudo groupadd -f docker

isaac@isaac-MacBookAir:~/myagent$ sudo usermod -aG docker isaac

isaac@isaac-MacBookAir:~/myagent$ newgrp docker

isaac@isaac-MacBookAir:~/myagent$ groups | grep docker

docker adm cdrom sudo dip plugdev lpadmin lxd sambashare isaac

isaac@isaac-MacBookAir:~/myagent$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

isaac@isaac-MacBookAir:~/myagent$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

To get that to take effect, I’ll stop and start our agent.

isaac@isaac-MacBookAir:~/myagent$ sudo ./svc.sh stop

/etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service

● vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service - Azure Pipelines Agent (princessking.OnPrem-LinuxVM.isaac-MacBookAir)

Loaded: loaded (/etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service; enabled; vendor preset: enabled)

Active: inactive (dead) since Sun 2023-08-06 12:05:26 CDT; 12ms ago

Process: 3769840 ExecStart=/home/isaac/myagent/runsvc.sh (code=exited, status=0/SUCCESS)

Main PID: 3769840 (code=exited, status=0/SUCCESS)

Aug 06 12:02:15 isaac-MacBookAir runsvc.sh[3769843]: 2023-08-06 17:02:15Z: Running job: OnPrem-LinuxVM

Aug 06 12:02:31 isaac-MacBookAir runsvc.sh[3769843]: 2023-08-06 17:02:31Z: Job OnPrem-LinuxVM completed with result: Failed

Aug 06 12:05:26 isaac-MacBookAir systemd[1]: Stopping Azure Pipelines Agent (princessking.OnPrem-LinuxVM.isaac-MacBookAir)...

Aug 06 12:05:26 isaac-MacBookAir runsvc.sh[3769843]: Shutting down agent listener

Aug 06 12:05:26 isaac-MacBookAir runsvc.sh[3769843]: Sending SIGINT to agent listener to stop

Aug 06 12:05:26 isaac-MacBookAir runsvc.sh[3769843]: Exiting...

Aug 06 12:05:26 isaac-MacBookAir runsvc.sh[3769843]: Agent listener exited with error code 0

Aug 06 12:05:26 isaac-MacBookAir runsvc.sh[3769843]: Agent listener exit with 0 return code, stop the service, no retry needed.

Aug 06 12:05:26 isaac-MacBookAir systemd[1]: vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service: Succeeded.

Aug 06 12:05:26 isaac-MacBookAir systemd[1]: Stopped Azure Pipelines Agent (princessking.OnPrem-LinuxVM.isaac-MacBookAir).

isaac@isaac-MacBookAir:~/myagent$ sudo ./svc.sh start

/etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service

● vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service - Azure Pipelines Agent (princessking.OnPrem-LinuxVM.isaac-MacBookAir)

Loaded: loaded (/etc/systemd/system/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-08-06 12:05:59 CDT; 15ms ago

Main PID: 3793098 (runsvc.sh)

Tasks: 2 (limit: 9318)

Memory: 504.0K

CGroup: /system.slice/vsts.agent.princessking.OnPrem\x2dLinuxVM.isaac\x2dMacBookAir.service

├─3793098 /bin/bash /home/isaac/myagent/runsvc.sh

└─3793099 cat .path

Aug 06 12:05:59 isaac-MacBookAir systemd[1]: Started Azure Pipelines Agent (princessking.OnPrem-LinuxVM.isaac-MacBookAir).

Aug 06 12:05:59 isaac-MacBookAir runsvc.sh[3793098]: .path=/home/linuxbrew/.linuxbrew/bin:/home/linuxbrew/.linuxbrew/sbin:/usr/loca…/snap/bin

Hint: Some lines were ellipsized, use -l to show in full.
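The restart matters because newgrp only changes the current shell, while the agent service keeps the group list it started with. Before re-running the pipeline, a quick check along these lines can confirm the membership is recorded. This is a small sketch; the helper name `user_in_group` is made up for illustration.

```shell
#!/bin/bash
# Return success if the named user is listed as a member of the named group.
# `id -nG USER` reads the group database, so it reflects a fresh usermod
# even if already-running processes have not picked the change up yet.
user_in_group() {
  local user="$1" group="$2"
  id -nG "$user" 2>/dev/null | tr ' ' '\n' | grep -qx -- "$group"
}

if user_in_group "$(id -un)" docker; then
  echo "$(id -un) is in the docker group"
else
  echo "$(id -un) is not in the docker group"
fi
```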

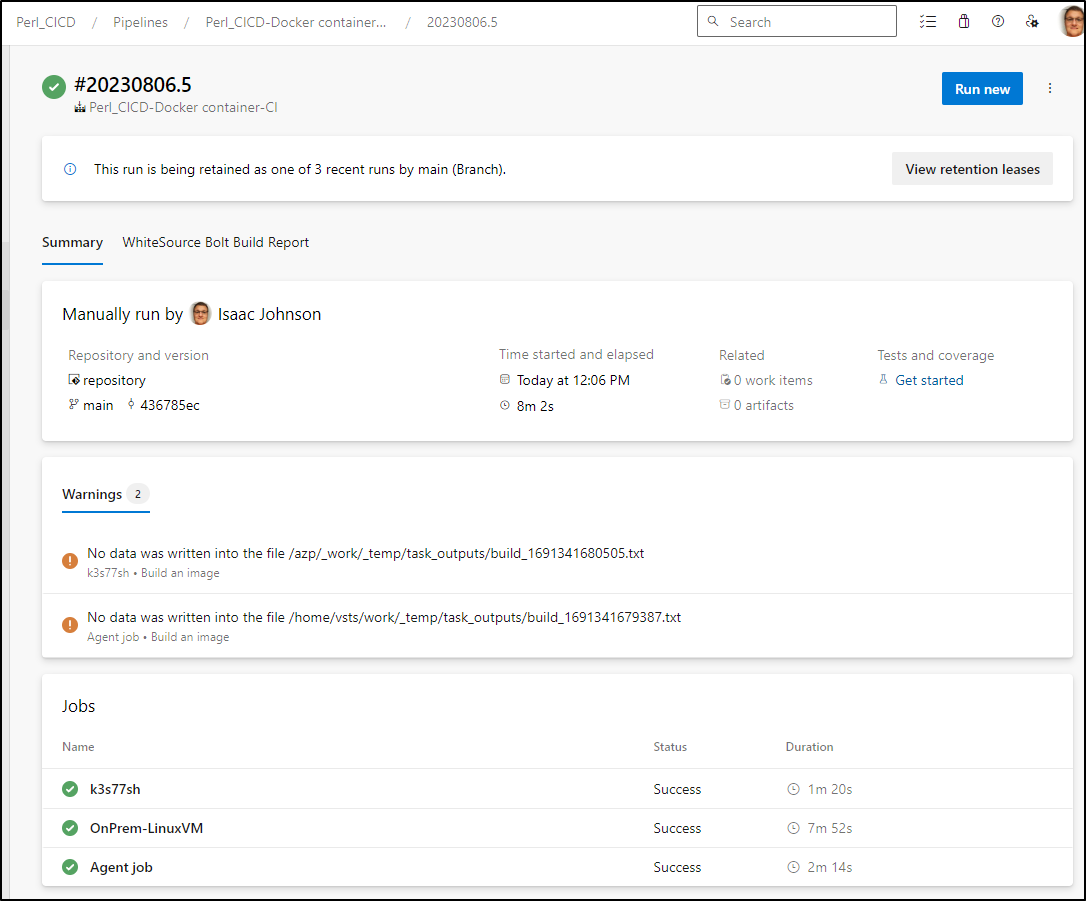

And all three worked: the K8s hosted agent, the VM agent and the Azure-provided one

Summary

This showed a few very simple patterns for launching Azure DevOps agents, containerized and otherwise, and using them to build Docker images. I find the Docker image test to be the most challenging because it requires a lot of containerization setup to be in place. However, this is a good test because if you can build using a Dockerfile, then you can really build anything.

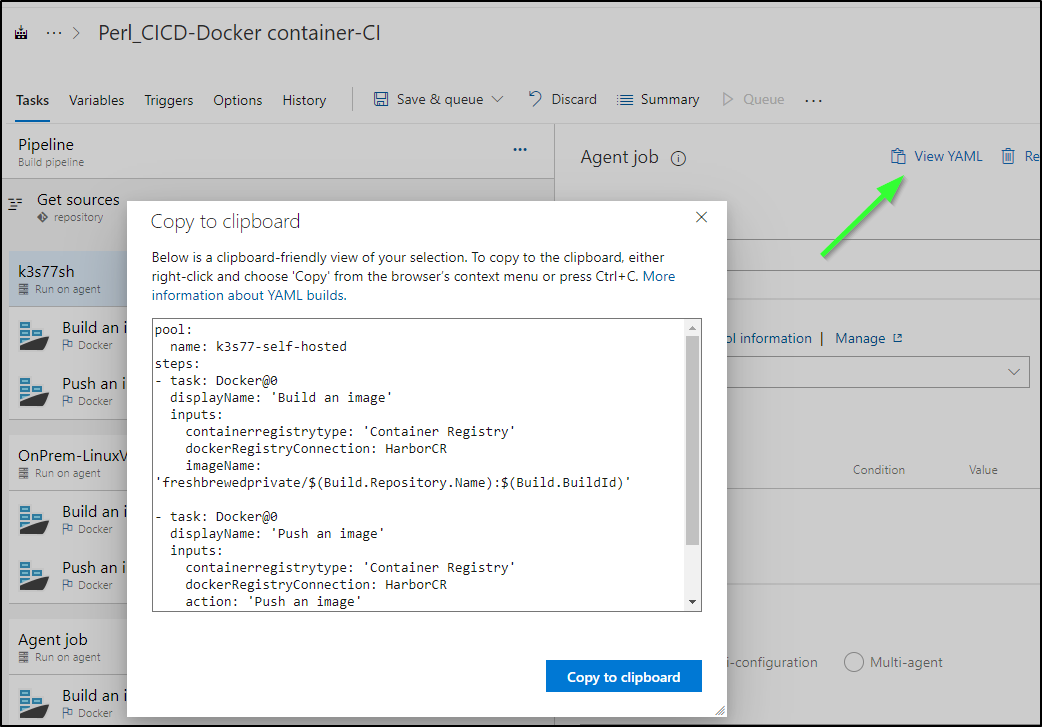

As far as next steps, I would want to switch to YAML. In the classic pipelines there exists a “View YAML” option on most stages, jobs and tasks:

We can use this to move to YAML rather easily.

I wrote another post on VMSS agents. It’s three years old, but still very valid: you can use VMSS to create very large hosts in Azure on the fly that are used for a build and then destroyed afterward. This can be a very cost-effective way to use the cloud for DevOps build purposes.

Background image

I gave MJ the Azure DevOps logo with the key word “Modern” and just let it pitch ideas. I really loved this profile it came up with. As I zoomed out, some of the options were a bit too risqué, but I really just love this “Azure DevOps” modern agent, according to the AI

I’m leaving a link to the fullsize image above in case others wish to download or use as a background.

The first image is below