Published: Aug 8, 2023 by Isaac Johnson

Homarr is an open-source dashboard for the apps and services you run. It was created by Thomas Camlong. Judging by his LinkedIn, he seems like a pretty smart guy: he knocked out his master's degree while doing internships around the world.

Now let’s check out Homarr and see what it can do.

Running in Docker

We can run Homarr in Docker with a simple `docker run` command.

First, I’ll make a directory to store the configs:

```shell
$ mkdir /home/builder/homarr
```

Then I’ll run it with Docker:

```shell
$ docker run \
  --name homarr \
  --restart unless-stopped \
  -p 7575:7575 \
  -v /home/builder/homarr/configs:/app/data/configs \
  -v /home/builder/homarr/icons:/app/public/icons \
  -d ghcr.io/ajnart/homarr:latest
```

I can now see it running:

```shell
$ docker ps
CONTAINER ID   IMAGE                          COMMAND                  CREATED          STATUS                 PORTS                    NAMES
a613dc3843bd   ghcr.io/ajnart/homarr:latest   "docker-entrypoint.s…"   44 seconds ago   Up 42 seconds          0.0.0.0:7575->7575/tcp   homarr
cead637ea0a2   louislam/uptime-kuma:1         "/usr/bin/dumb-init …"   16 months ago    Up 11 days (healthy)   0.0.0.0:3001->3001/tcp   uptime-kuma
```

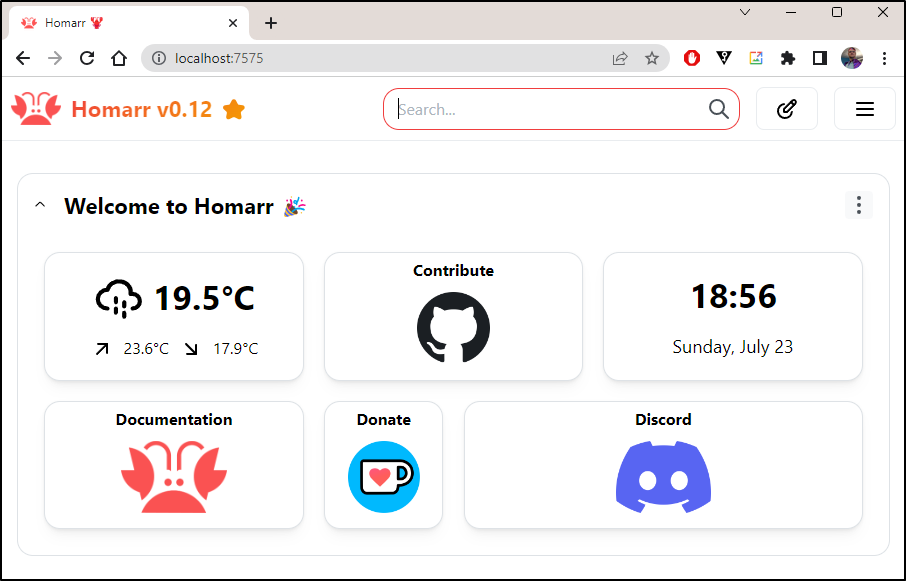

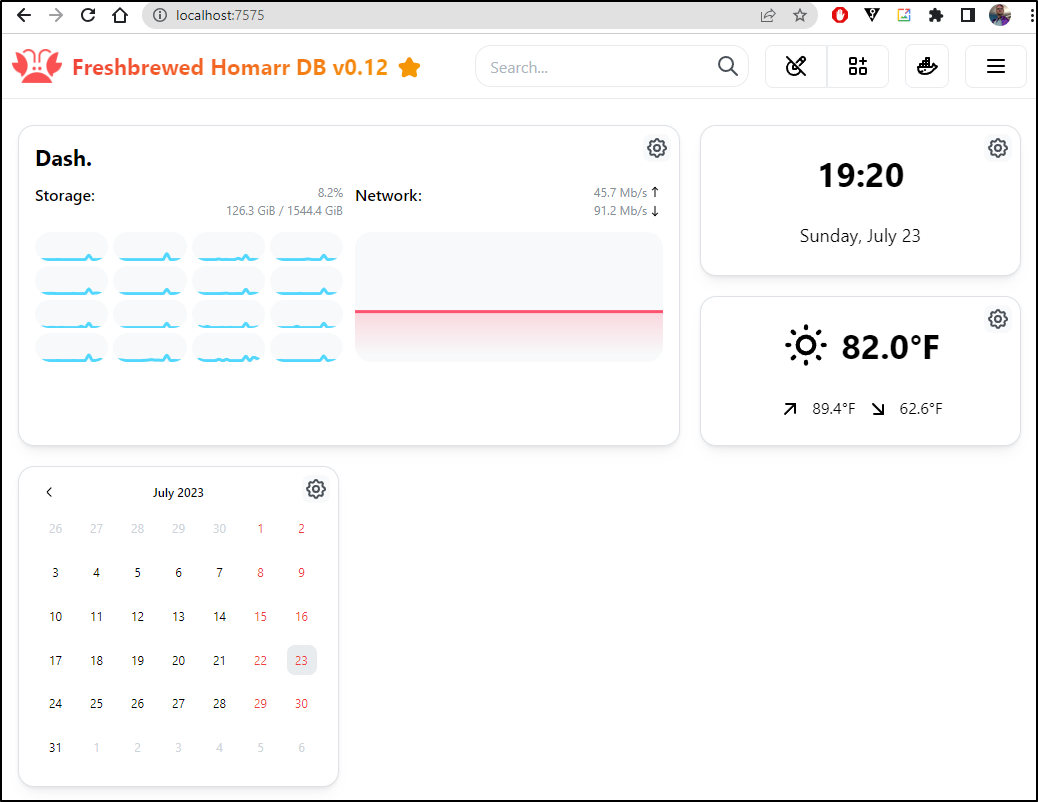

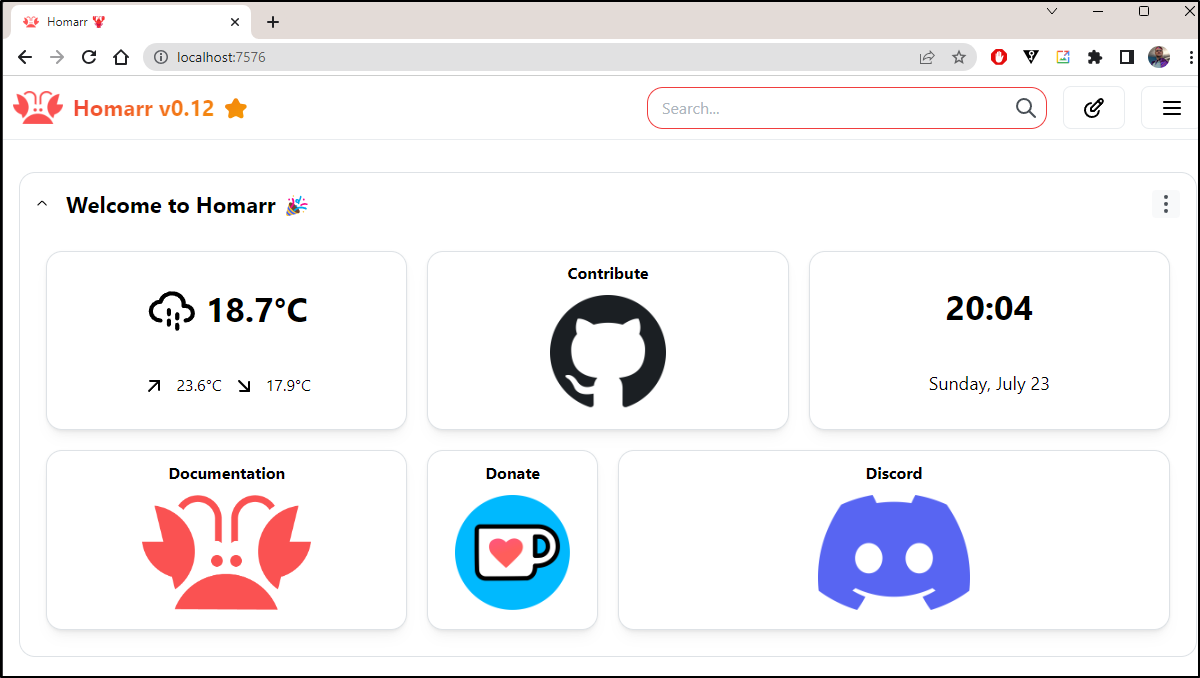

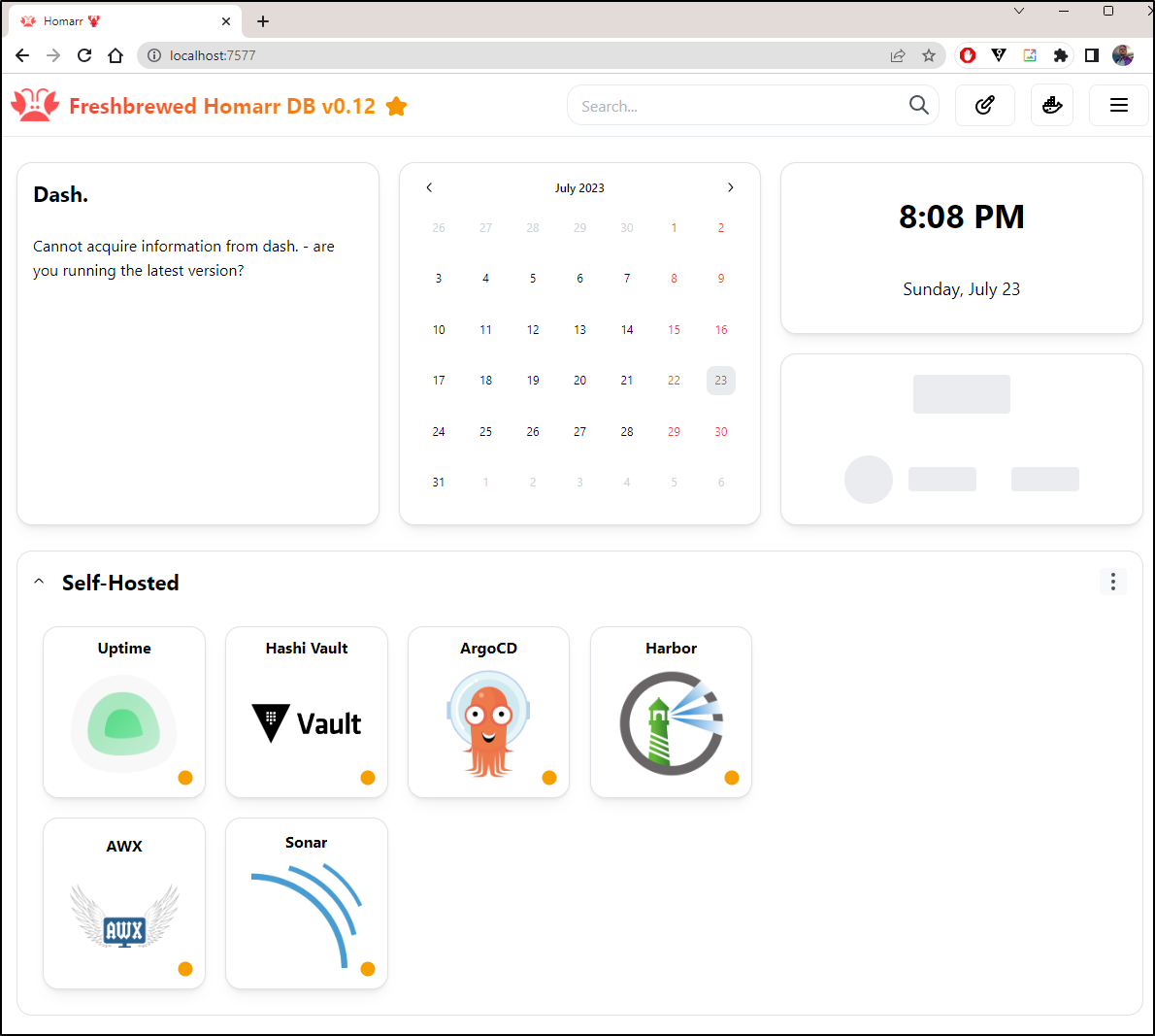

I can now go to http://localhost:7575 and see a pretty standard dashboard.
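Rather than refreshing the browser until the container finishes starting, a small polling loop can wait for it. This is a minimal sketch assuming `curl` is installed; the function name `wait_for_http` is my own, and treating any 2xx or 3xx status as "ready" is an assumption (I haven't checked whether Homarr's root path answers with a redirect).

```shell
# Poll a URL until it answers with a 2xx/3xx status, or give up.
# Usage: wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_http() {
  local url=$1 attempts=${2:-30} delay=${3:-2} i=1 code
  while [ "$i" -le "$attempts" ]; do
    # -s: no progress bar, -o /dev/null: discard body, -w: print only the status code.
    # curl prints 000 when it cannot connect yet; "|| true" keeps set -e scripts alive.
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url" || true)
    case "$code" in
      2??|3??)
        echo "$url answered $code after $i attempt(s)"
        return 0
        ;;
    esac
    sleep "$delay"
    i=$((i + 1))
  done
  echo "$url not ready after $attempts attempt(s)" >&2
  return 1
}
```

Right after `docker run`, something like `wait_for_http http://localhost:7575 30 2` would wait up to about a minute before you open the browser.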

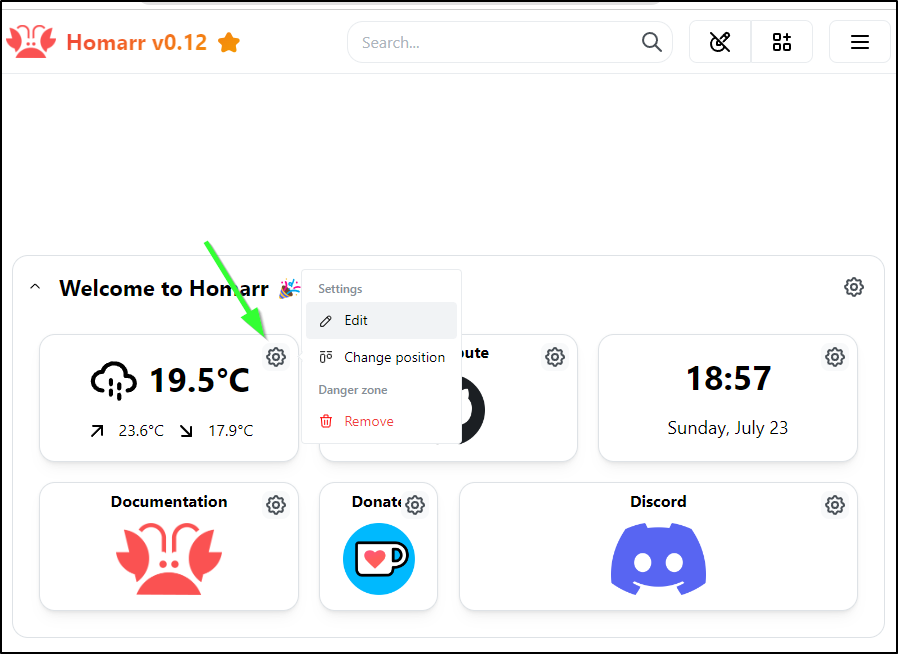

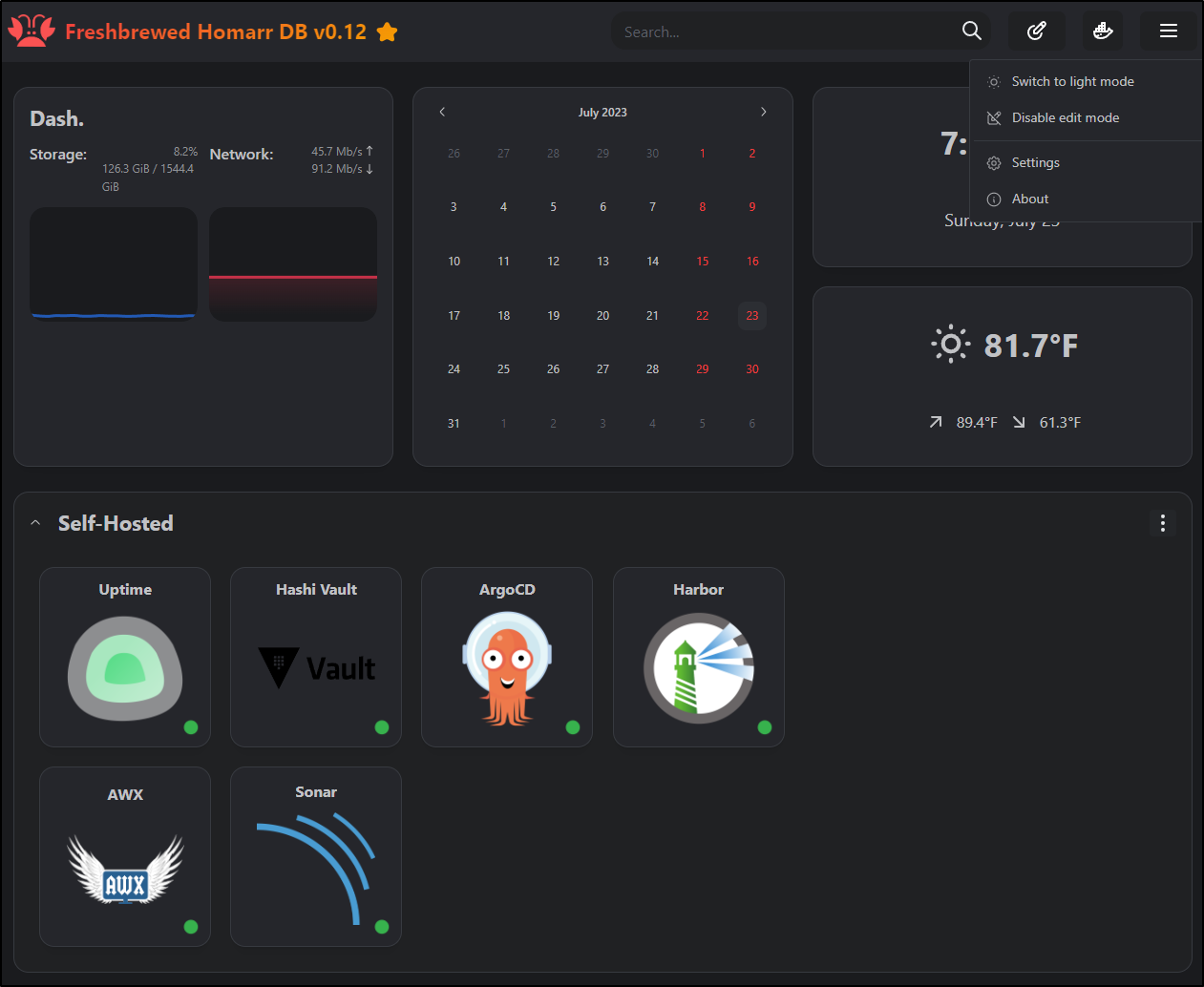

I can edit any of the widgets by clicking the gear icon.

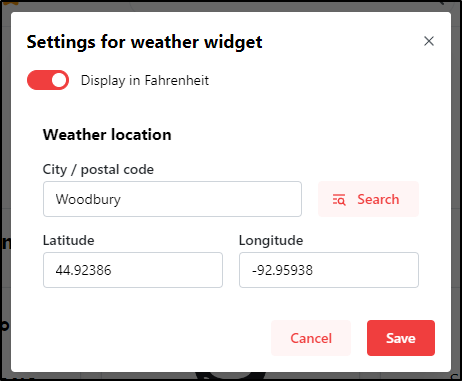

For instance, I’ll change the weather to be my town (not my actual GPS) and switch to Fahrenheit.

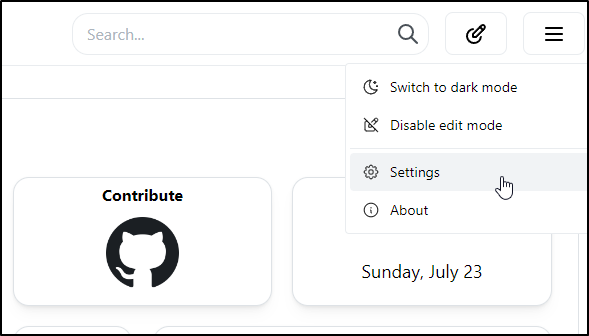

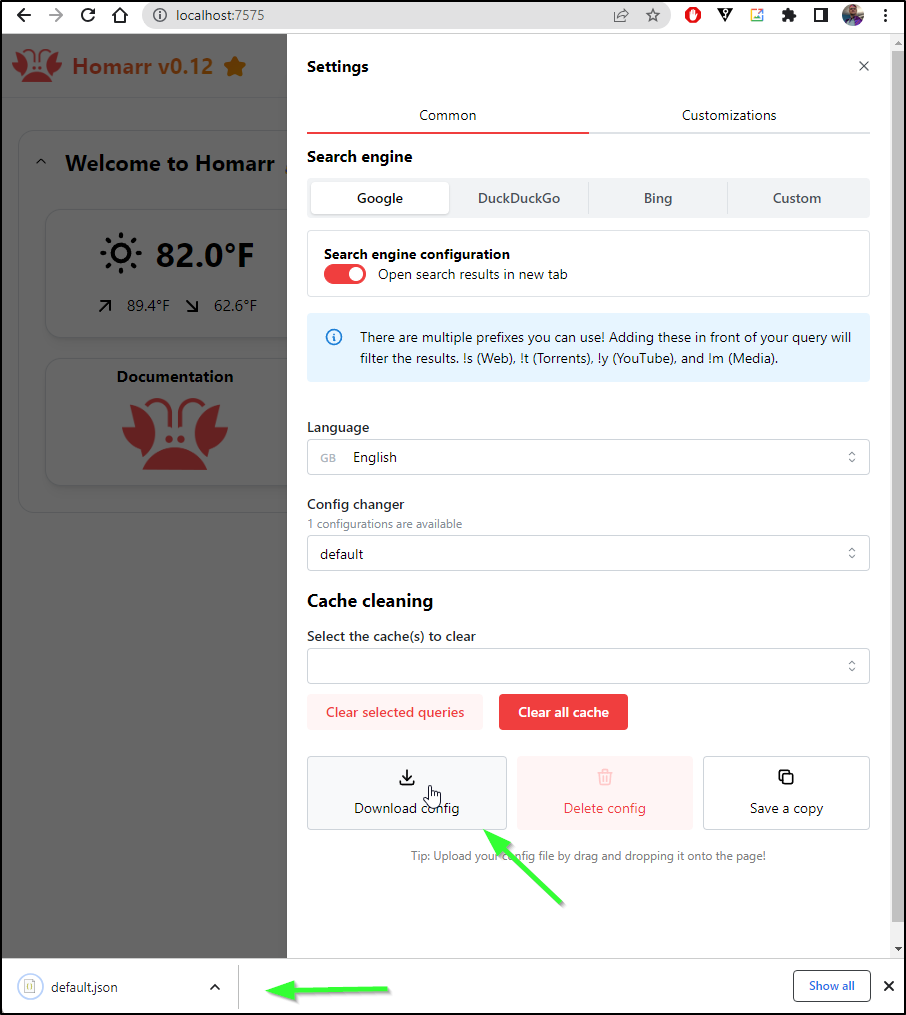

I’ll click the hamburger icon and choose “settings”. From there, I can download the config.

I can also confirm that the Docker container is updating default.json in the configs directory we created:

```shell
$ cat /home/builder/homarr/configs/default.json | jq '.widgets[] | select(.type=="weather") | .properties.location.name'
"Woodbury"
```

Widgets

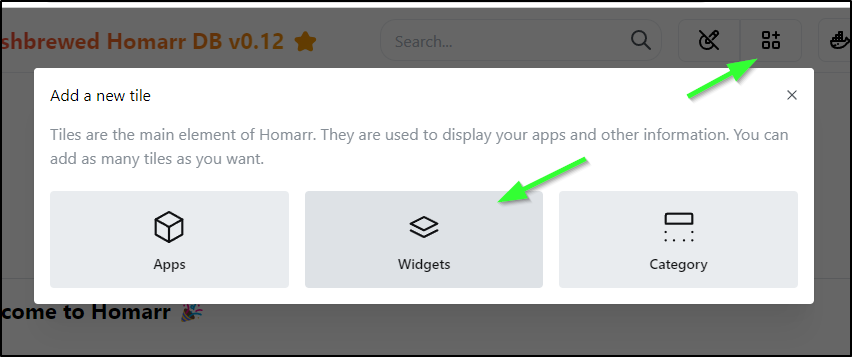

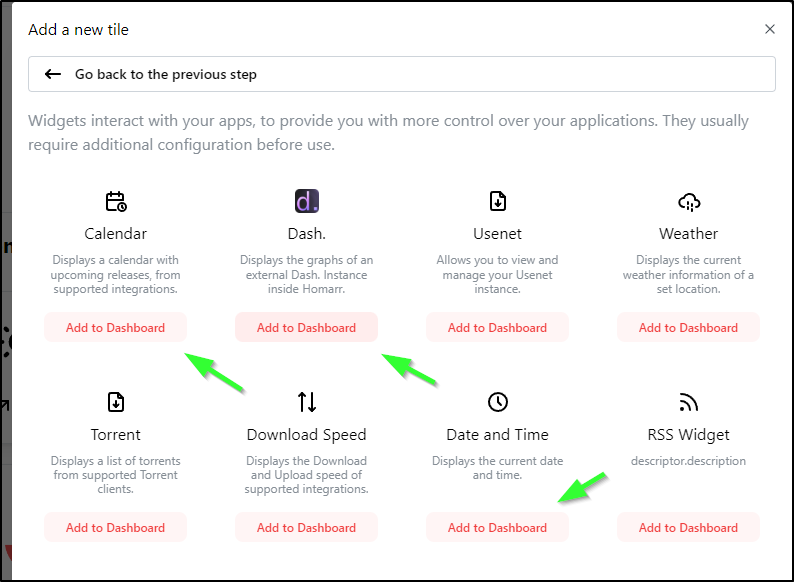

Let’s add some widgets

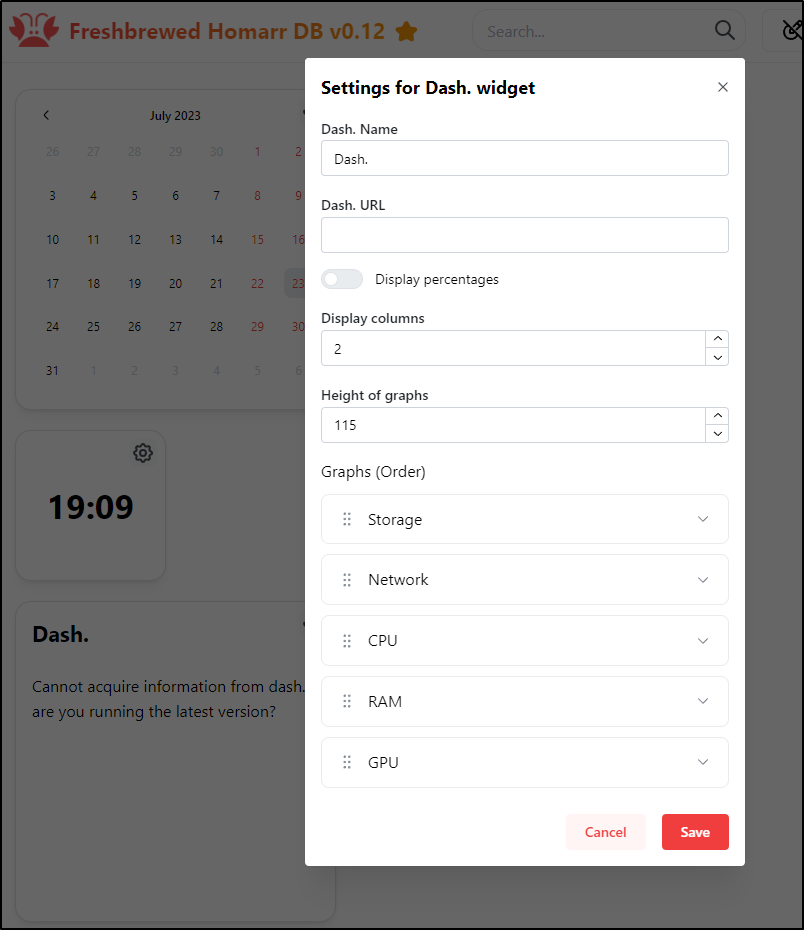

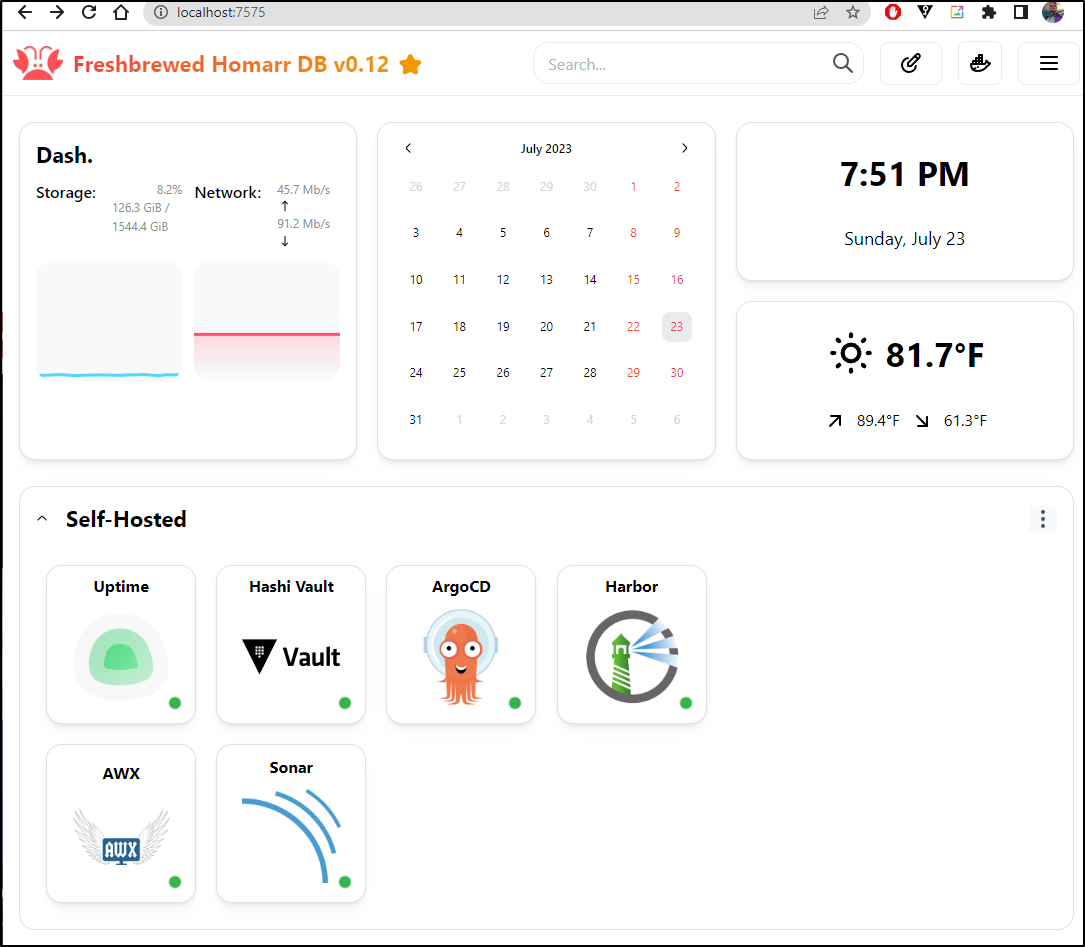

I’ll add the Calendar, Dash, and Date and Time widgets.

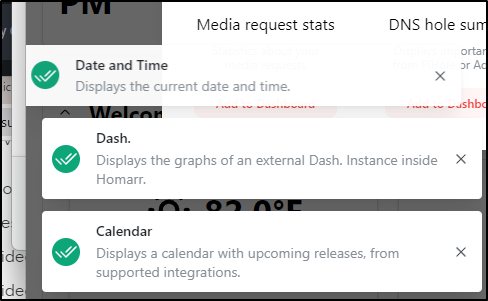

A quick little pop-up notification in the bottom left lets me know they are being installed.

The Calendar and Date and Time widgets were fine, but Dash clearly expects a running dashdot container.

We can follow its setup steps. I want to run it in detached (daemon) mode:

```shell
$ docker container run -d -p 3001:3001 -v /:/mnt/host:ro --privileged mauricenino/dashdot
Unable to find image 'mauricenino/dashdot:latest' locally
latest: Pulling from mauricenino/dashdot
f56be85fc22e: Pull complete
931b0e865bc2: Pull complete
60542df8b663: Pull complete
062e26bc2446: Pull complete
ede9886f30d6: Pull complete
8d3380cddd3d: Pull complete
129cc582bb71: Pull complete
f25eb9fcb368: Pull complete
12698d949180: Pull complete
a56ce646a635: Pull complete
eeec0c2ddc61: Pull complete
528ab4cd066b: Pull complete
Digest: sha256:8b81b6f2c49fb19df61d2bd7b42d798a97f4cf34d0b90f7f27c17070389ee0df
Status: Downloaded newer image for mauricenino/dashdot:latest
cff97b1cc6d605eccb76227573b968496954c2d6496e66630894182a748beb68
docker: Error response from daemon: driver failed programming external connectivity on endpoint bold_mendeleev (7673593cb5ce0e02d0ff200ef279e6e95f2cea329c2f54c19b99dd6b175b3bde): Bind for 0.0.0.0:3001 failed: port is already allocated.
```

Port 3001 is already taken by Uptime Kuma, so I’ll use a different host port:

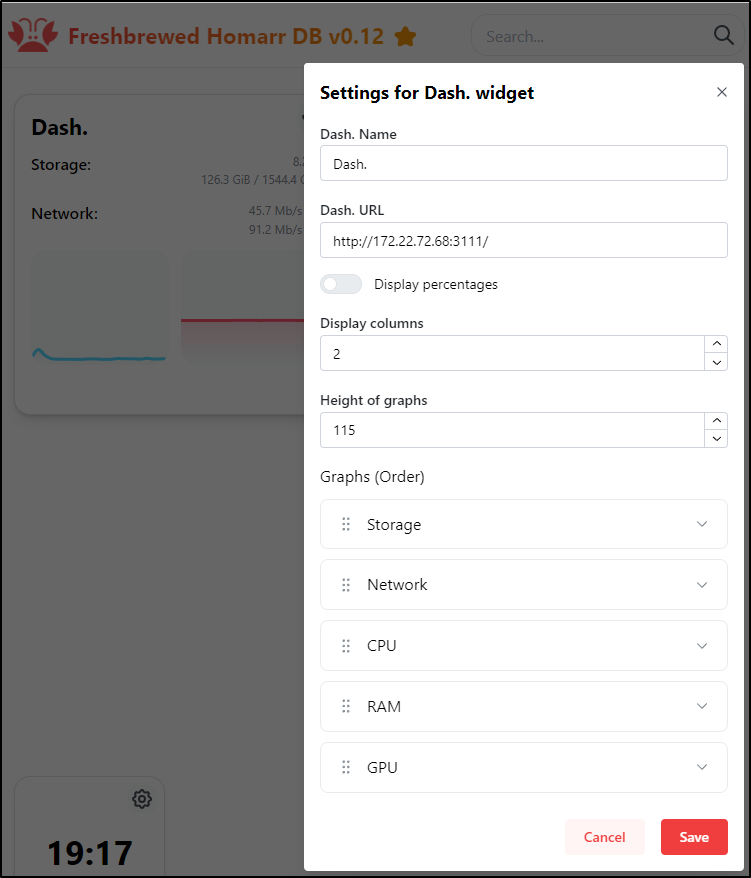

```shell
$ docker container run -d -p 3111:3001 -v /:/mnt/host:ro --privileged mauricenino/dashdot
8fa2c1da5048d4a60feed560961e2a9d490449fb1ae883b38b3c3eb4fb15ab9f
```
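Uptime Kuma was already publishing 3001, which is what caused the bind failure. To catch that before running a container, a small helper can scan the host ports your running containers publish. This is a sketch: the function names are my own, and it only sees ports published by Docker containers, not other processes bound to the same port.

```shell
# Return success (0) if any running container publishes the given host port.
# `docker ps --format '{{.Ports}}'` prints e.g. "0.0.0.0:3001->3001/tcp, :::3001->3001/tcp".
host_port_published() {
  docker ps --format '{{.Ports}}' \
    | tr ',' '\n' \
    | grep -q -- ":$1->"
}

# Print the first port, starting at the preferred one, that no running container publishes.
first_unpublished_port() {
  local port=$1
  while host_port_published "$port"; do
    port=$((port + 1))
  done
  echo "$port"
}
```

With it, the dashdot command could become `docker container run -d -p "$(first_unpublished_port 3001):3001" -v /:/mnt/host:ro --privileged mauricenino/dashdot`, which here would pick 3002 rather than the 3111 I chose by hand.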

My first instinct was to use the IP of the Dash container:

```shell
$ docker inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' epic_perlman
172.17.0.4
```

But it actually needed the IP of my WSL instance instead:

```shell
$ ifconfig | grep inet
inet 172.22.72.68 netmask 255.255.240.0 broadcast 172.22.79.255
```
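To avoid eyeballing `ifconfig` output, a small filter can pull out the first non-loopback IPv4 address. This assumes the newer net-tools format shown above (`inet 172.22.72.68 netmask ...`); older versions print `inet addr:...` and would need a different pattern. The function name is my own.

```shell
# Read `ifconfig` output on stdin and print the first IPv4 address that is not 127.x.x.x.
first_lan_ipv4() {
  awk '$1 == "inet" && $2 !~ /^127\./ { print $2; exit }'
}
```

If Docker's `docker0` bridge (or another interface) is listed first, narrowing to the WSL interface helps, e.g. `ifconfig eth0 | first_lan_ipv4`; `eth0` is an assumption about your WSL interface name.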

I then made a few tweaks to show the CPUs and widen the widget.

Apps

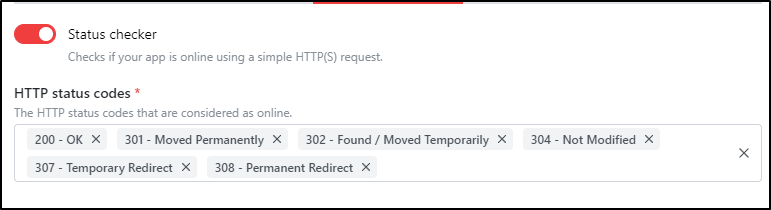

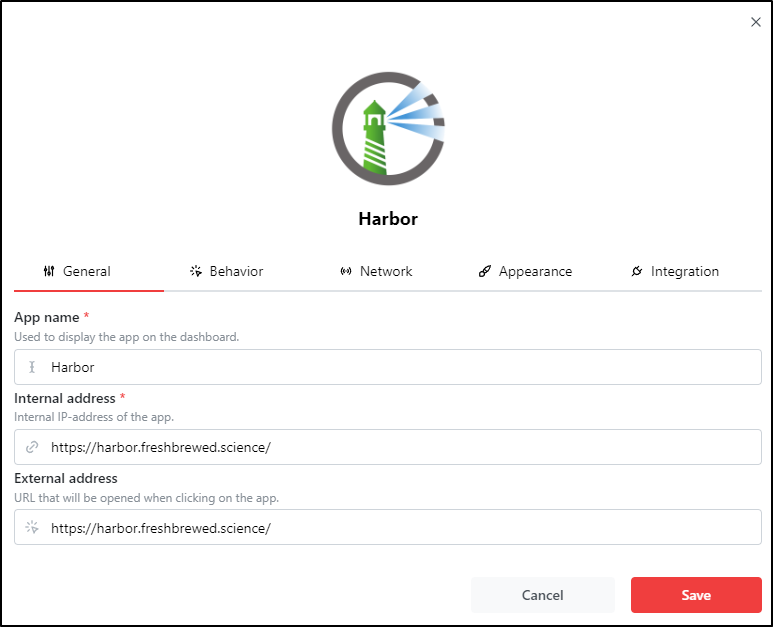

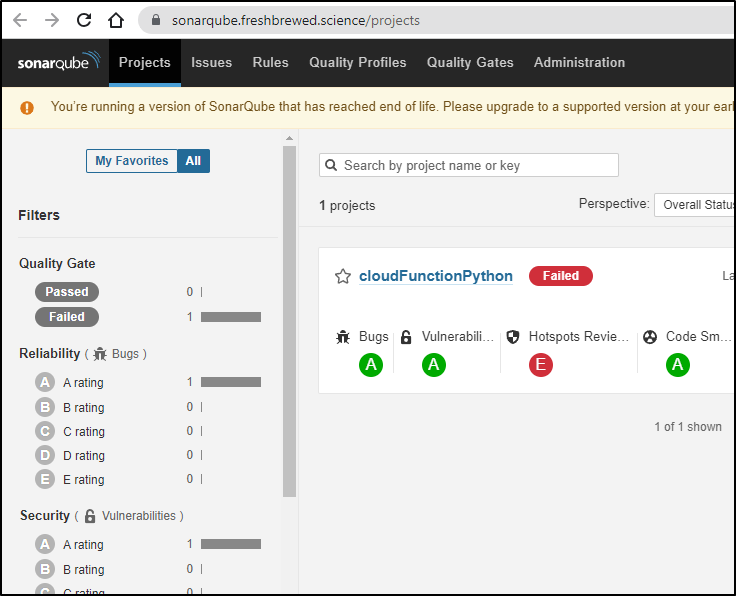

We can add an “App”, which is just a web service. The optional “Network” check verifies that the service returns a proper HTTP status code.
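To see roughly what that check looks at, here is a rough command-line stand-in using `curl`. It is not Homarr's implementation: treating any 2xx or 3xx status as "up" is my assumption, and the function names are my own.

```shell
# Assumption: any 2xx or 3xx status counts as "up"; Homarr's own rule may differ.
status_is_healthy() {
  case "$1" in
    2??|3??) return 0 ;;
    *) return 1 ;;
  esac
}

# Fetch a URL and print UP/DOWN with the status code (000 means no connection).
check_app() {
  local url=$1 code
  # -s: quiet, -o /dev/null: discard body, -w: print status, --max-time: don't hang forever
  code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url" || true)
  if status_is_healthy "$code"; then
    echo "UP $code $url"
  else
    echo "DOWN $code $url"
    return 1
  fi
}
```

For example, `check_app http://localhost:3111` should report the Dash container; a hostname like `https://harbor.example.com` would stand in for your own Harbor URL.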

Here, I’ll add my self-hosted Harbor instance.

Homarr crashed the first time I added an App, and I lost my config. I would recommend taking a quick backup before you start.
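Since the configs live in a plain host directory, a timestamped copy before each round of edits is cheap insurance. A minimal sketch: the default source is the configs directory mounted into the container above, while the `backups` destination and the function name are my own choices.

```shell
# Copy Homarr's configs directory to a timestamped folder and print where it went.
# Defaults: source is the host dir mounted at /app/data/configs; destination is hypothetical.
backup_homarr_configs() {
  local src=${1:-/home/builder/homarr/configs}
  local dest_root=${2:-/home/builder/homarr/backups}
  local dest
  dest="$dest_root/configs-$(date +%Y%m%d-%H%M%S)"
  mkdir -p "$dest_root"
  # -a preserves timestamps and permissions of default.json and friends
  cp -a "$src" "$dest"
  echo "$dest"
}
```

To roll back, I'd expect copying `default.json` from the backup into the configs directory and running `docker restart homarr` to be enough.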

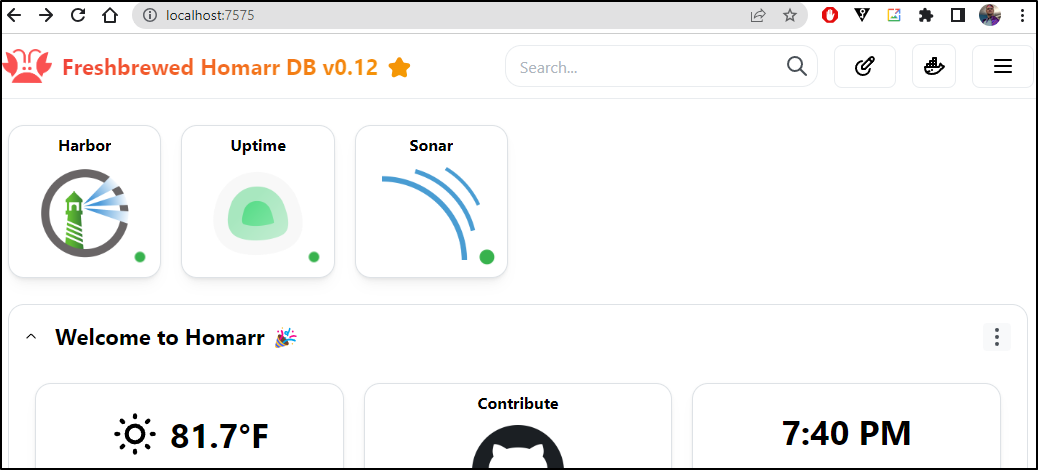

That said, I added several back and saved. Initially they showed “red” (not up), but it seems to run the checks after the config is saved, because they all flipped to green soon after.

The icons serve not only as a health check but also as a link to the app.

Now that I have it just the way I want it, I downloaded the config.

I’m not a Dark Mode fan, but I know a lot of folks are, so here is the same page in Dark Mode:

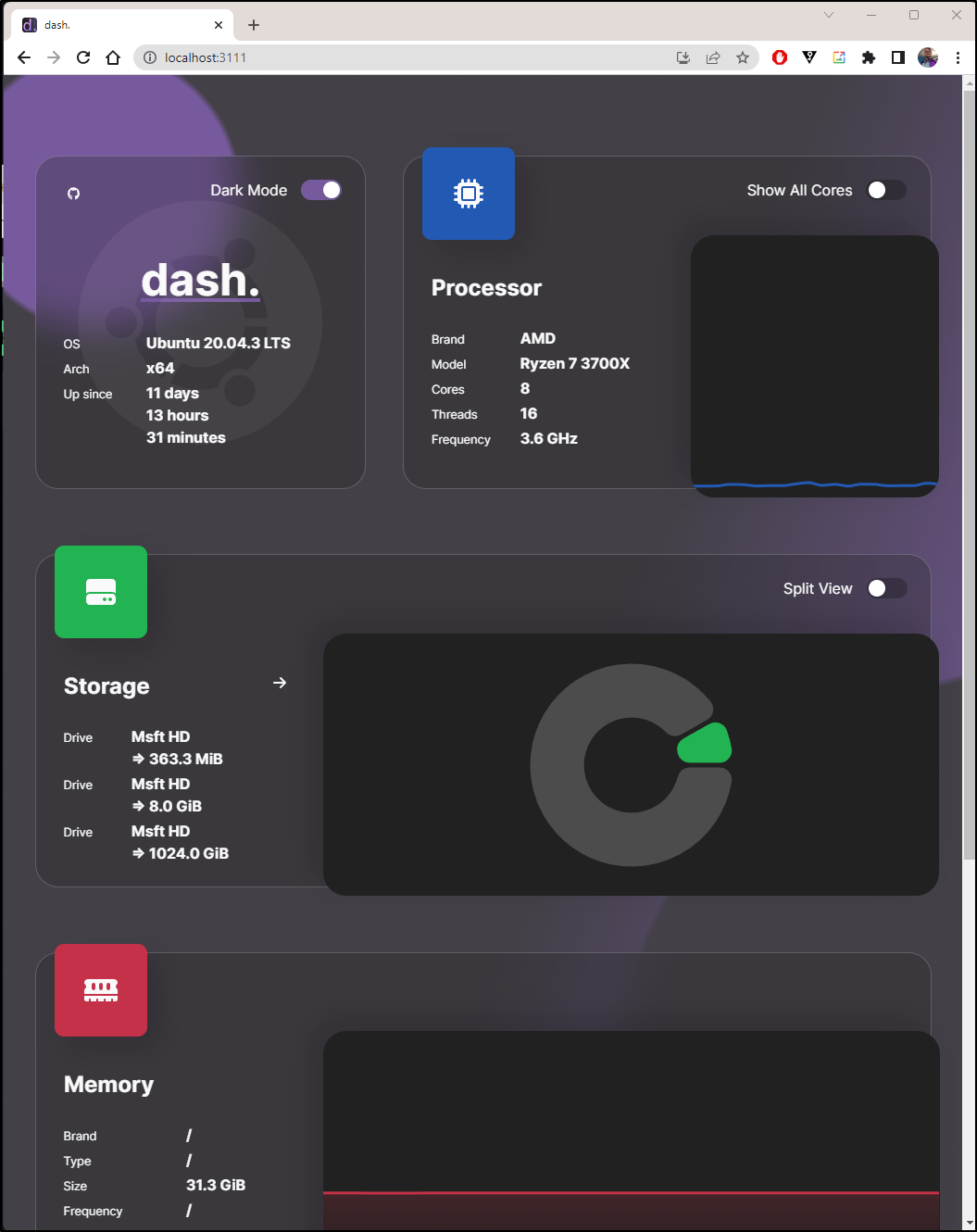

Dash

We launched Dash, and I don’t want to gloss over it.

We can view the Dash dashboard on port 3111, the host port we mapped (normally it is 3001, but I had Uptime Kuma running there).

We can explore its “light” and “dark” modes, which I think are misleadingly named: they are really muted pastels and wild pastels. I love both.

Kubernetes

There is no guide for Homarr in Kubernetes. That doesn’t mean we cannot do it. Let’s build the YAML together.

We need two PVCs for the container's data (icons and configs), then a Deployment whose container listens on port 7575:

```shell
$ cat createHomarr.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: icons-pvc
spec:
  storageClassName: local-path
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: configs-pvc
spec:
  storageClassName: local-path
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: homarr-deployment
  labels:
    app: homarr
spec:
  replicas: 1
  selector:
    matchLabels:
      app: homarr
  template:
    metadata:
      labels:
        app: homarr
    spec:
      containers:
        - name: homarr-container
          image: ghcr.io/ajnart/homarr:latest
          ports:
            - containerPort: 7575
          volumeMounts:
            - name: configs-volume
              mountPath: /app/data/configs
            - name: icons-volume
              mountPath: /app/public/icons
      volumes:
        - name: configs-volume
          persistentVolumeClaim:
            claimName: configs-pvc # Replace "configs-pvc" with the name of your PersistentVolumeClaim for configs.
        - name: icons-volume
          persistentVolumeClaim:
            claimName: icons-pvc # Replace "icons-pvc" with the name of your PersistentVolumeClaim for icons.

$ kubectl apply -f createHomarr.yaml
persistentvolumeclaim/icons-pvc created
persistentvolumeclaim/configs-pvc created
deployment.apps/homarr-deployment created
```
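The generated pod name changes every time the pod is recreated, so copying it by hand gets old. A sketch that waits for the Deployment to report Available and then looks up a pod by the `app: homarr` label from the manifest; the function name `homarr_pod` is my own, and during a rollout more than one pod can match, in which case it just returns the first.

```shell
# Wait (up to 2 minutes) for the Deployment to be Available, then print the name
# of the first pod carrying the app=homarr label.
homarr_pod() {
  kubectl wait --for=condition=available deployment/homarr-deployment --timeout=120s >/dev/null &&
    kubectl get pods -l app=homarr -o jsonpath='{.items[0].metadata.name}'
}
```

With that, `kubectl logs "$(homarr_pod)"` fetches the logs without needing the pod's hash suffix.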

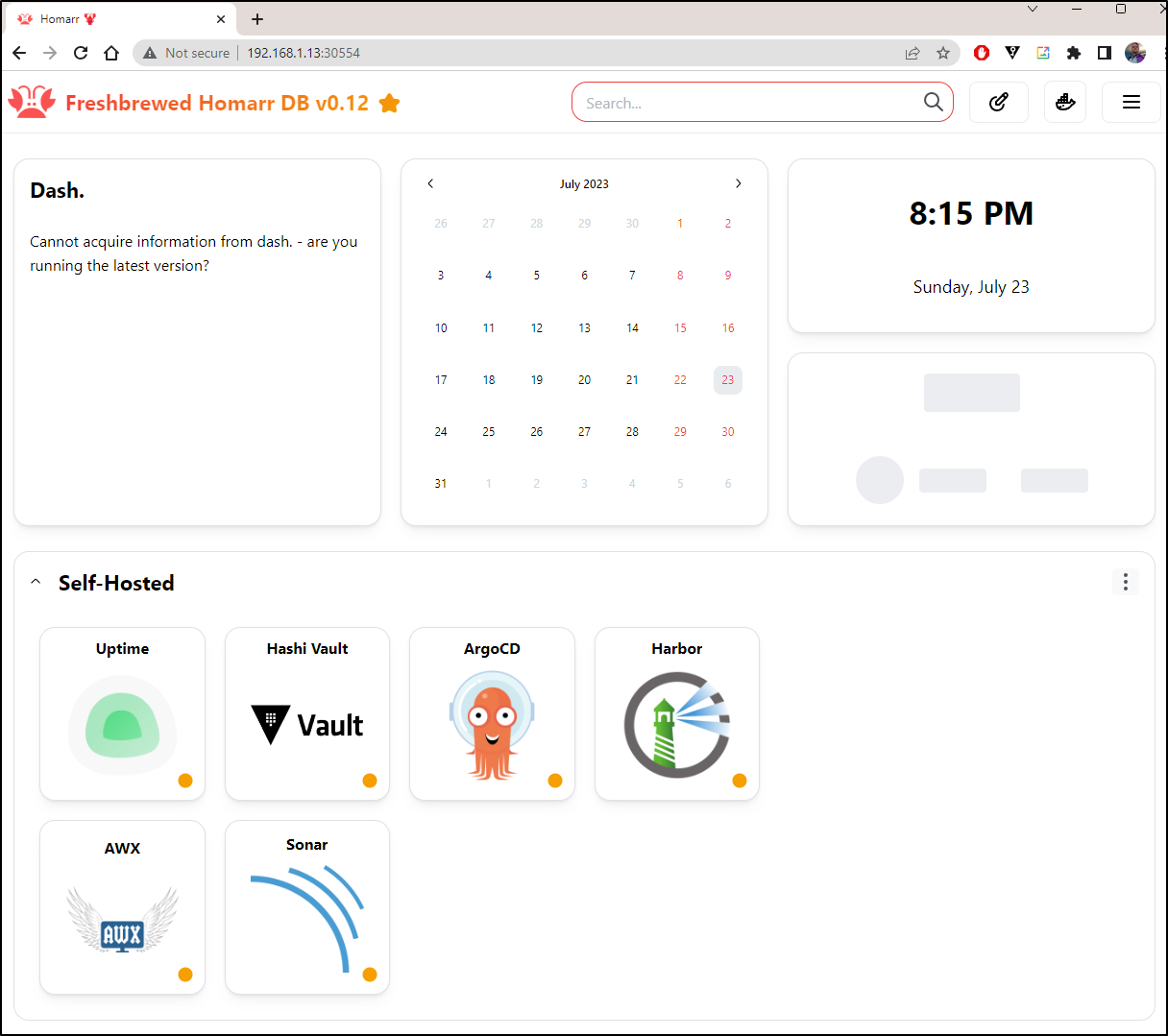

Since I have Homarr running in Docker on 7575 right now, I’ll use port 7576 in my port-forward:

```shell
$ kubectl port-forward homarr-deployment-5b6cf788d9-fj6vr 7576:7575
Forwarding from 127.0.0.1:7576 -> 7575
Forwarding from [::1]:7576 -> 7575
```
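`kubectl port-forward` also accepts a `deployment/<name>` target and picks one of its pods for you, which saves looking up the pod name. A small wrapper sketch (the function name and default port are my own); note the forward still attaches to a single pod, so after that pod is replaced you need to run it again.

```shell
# Forward a local port (default 7576) to Homarr's container port 7575 via the Deployment.
homarr_forward() {
  kubectl port-forward deployment/homarr-deployment "${1:-7576}:7575"
}
```

For example, `homarr_forward 7577` reproduces the second forward used later without copying the new pod's name.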

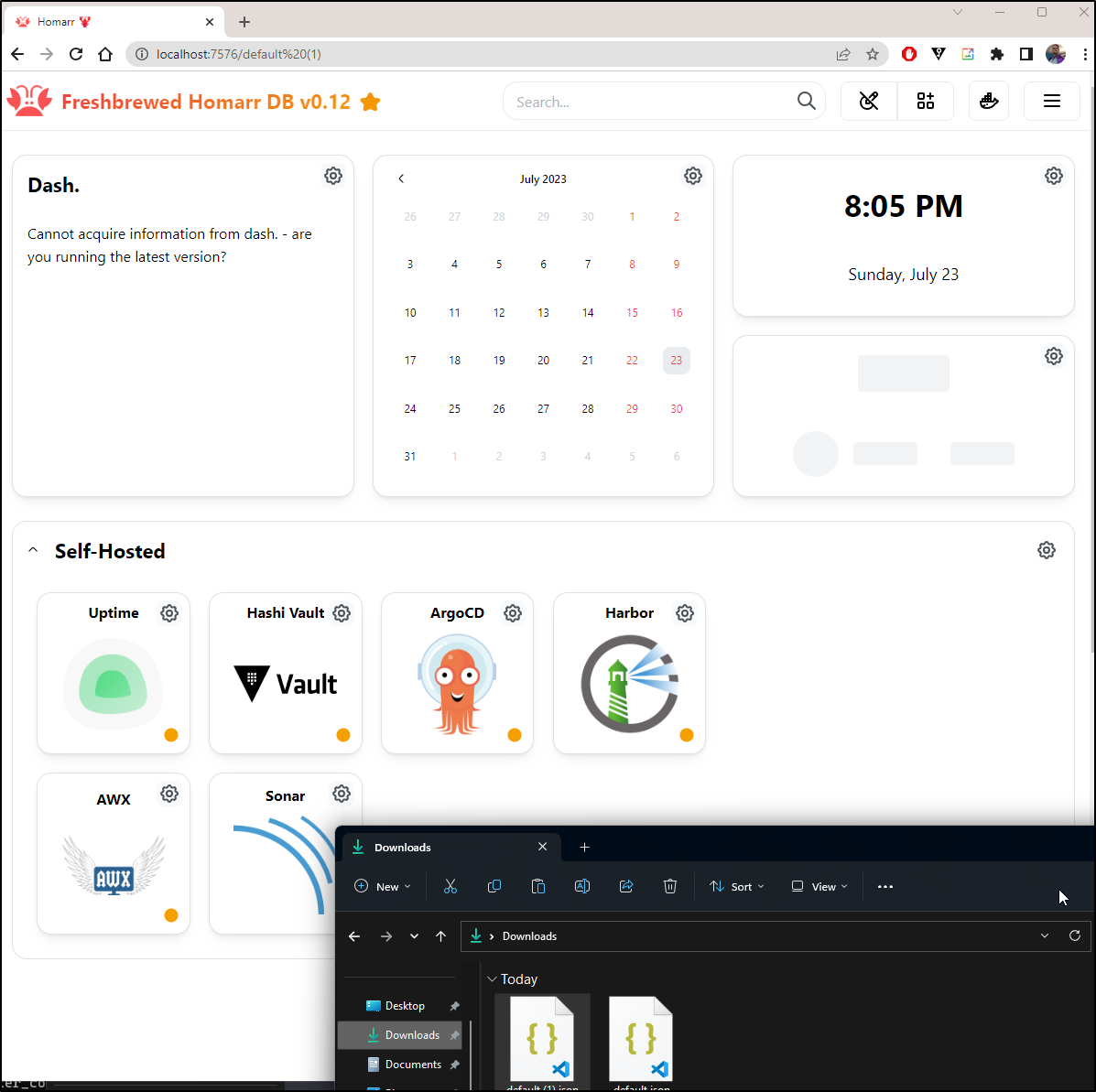

It came right up.

I dragged and dropped my config onto the settings page and could see it update.

I clicked exit and save, and it seemed to take effect.

I could also see the saves in the pod logs:

```shell
$ kubectl logs homarr-deployment-5b6cf788d9-fj6vr
Listening on port 7575 url: http://homarr-deployment-5b6cf788d9-fj6vr:7575
ℹ Requested frontend content of configuration 'default'
ℹ Requested frontend content of configuration 'default'
ℹ Saving updated configuration of 'default (1)' config.
ℹ Requested frontend content of configuration 'default (1)'
ℹ Requested frontend content of configuration 'default (1)'
ℹ Saving updated configuration of 'default (1)' config.
ℹ Requested frontend content of configuration 'default (1)'
ℹ Requested frontend content of configuration 'default (1)'
ℹ Requested frontend content of configuration 'default (1)'
ℹ Requested frontend content of configuration 'default (1)'
```

I decided to cycle the pod just to be sure the saves took:

```shell
$ kubectl delete pod homarr-deployment-5b6cf788d9-fj6vr
pod "homarr-deployment-5b6cf788d9-fj6vr" deleted
$ kubectl get pods | grep homarr
homarr-deployment-5b6cf788d9-dwb6x 1/1 Running 0 24s
$ kubectl port-forward homarr-deployment-5b6cf788d9-dwb6x 7577:7575
Forwarding from 127.0.0.1:7577 -> 7575
Forwarding from [::1]:7577 -> 7575
```

Indeed, they did.
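Deleting the pod works because the Deployment replaces it. `kubectl rollout restart` achieves the same cycle through the Deployment itself, and `kubectl rollout status` waits until the replacement is ready. A sketch with a wrapper name of my own; with the default RollingUpdate strategy the new pod may start before the old one terminates, so the two can briefly share the config volume.

```shell
# Restart Homarr's pods via the Deployment and block until the rollout finishes.
restart_homarr() {
  kubectl rollout restart deployment/homarr-deployment &&
    kubectl rollout status deployment/homarr-deployment --timeout=120s
}
```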

One might be tempted to expose Homarr with a proper TLS ingress, and you could. But then unauthenticated users could do whatever they want. Until Homarr gets a read-only mode or user auth, it would be inadvisable to do that without tight CIDR restrictions.

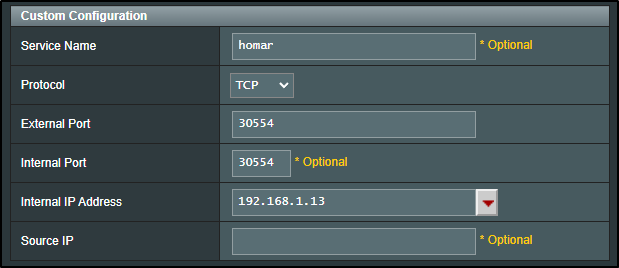

That said, we can expose it with a NodePort service:

```shell
$ kubectl expose deployment homarr-deployment --type=NodePort --name homarsvc
service/homarsvc exposed
$ kubectl get svc homarsvc
NAME       TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
homarsvc   NodePort   10.43.109.19   <none>        7575:30554/TCP   6s
```

This created the following Service:

```shell
$ kubectl get svc homarsvc -o yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2023-07-24T01:13:29Z"
  labels:
    app: homarr
  name: homarsvc
  namespace: default
  resourceVersion: "583773"
  uid: fde4f434-d687-418a-973e-6d231695d81a
spec:
  clusterIP: 10.43.109.19
  clusterIPs:
  - 10.43.109.19
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - nodePort: 30554
    port: 7575
    protocol: TCP
    targetPort: 7575
  selector:
    app: homarr
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}
```
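The same Service can also be written declaratively so it can live alongside `createHomarr.yaml`. A sketch that pins `nodePort: 30554` so the port stays the same if the Service is ever recreated (NodePorts must fall inside the cluster's node port range, 30000-32767 by default); the wrapper function is my own. Applying it over the Service that `kubectl expose` created should update it in place, though kubectl may warn about a missing last-applied-configuration annotation.

```shell
# Create or update the homarsvc NodePort Service from an inline manifest.
apply_homarr_nodeport() {
  kubectl apply -f - <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: homarsvc
  labels:
    app: homarr
spec:
  type: NodePort
  selector:
    app: homarr
  ports:
    - protocol: TCP
      port: 7575
      targetPort: 7575
      nodePort: 30554
EOF
}
```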

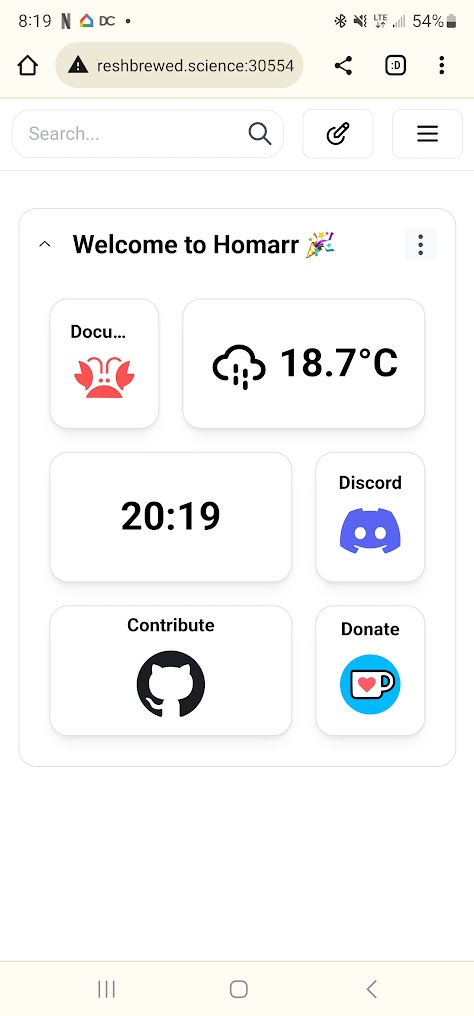

And I can now reach Homarr through any node in the cluster on port 30554.

Which, if I were a nutter butter, I could expose externally.

I tested it from my phone to show it was reachable externally (before removing the forwarding rule).

Summary

Homarr is a great new project. I like the crab icon (it reminds me of Aiven).

It has a lot of promise. The GitHub project is quite active, which is what you want to see in a good open-source project. The initial release was just over a year ago, in May.

I would love to see a proper Helm chart (perhaps I can contribute that) and some form of login and/or an edit mode requiring a password.