Published: Jul 13, 2023 by Isaac Johnson

I saw a recent announcement that Ngrok now has a native Ingress controller for Kubernetes. I already really like Ngrok as an easy-to-use standalone ingress agent that handles forwarding and dynamic DNS. A native controller would take that a step further.

Setup

Let’s start with a basic demo k3s cluster.

$ kubectl get nodes

NAME                  STATUS   ROLES                  AGE   VERSION
anna-macbookair       Ready    control-plane,master   13d   v1.26.4+k3s1
isaac-macbookpro      Ready    <none>                 13d   v1.26.4+k3s1
builder-macbookpro2   Ready    <none>                 13d   v1.26.4+k3s1

We can see that while I have a metrics server, a local-path provisioner and CoreDNS, I do not have an NGINX or Istio ingress controller.

$ kubectl get pods --all-namespaces

NAMESPACE     NAME                                      READY   STATUS    RESTARTS   AGE
kube-system   coredns-59b4f5bbd5-848mv                  1/1     Running   0          12d
kube-system   local-path-provisioner-76d776f6f9-t9glp   1/1     Running   0          12d
kube-system   metrics-server-7b67f64457-zcr22           1/1     Running   0          12d
portainer     portainer-68ff748bd8-5w9wx                1/1     Running   0          33h

I’ll add the NGrok Helm repo and update

$ helm repo add ngrok https://ngrok.github.io/kubernetes-ingress-controller

"ngrok" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "portainer" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "freshbrewed" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "kiwigrid" chart repository

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

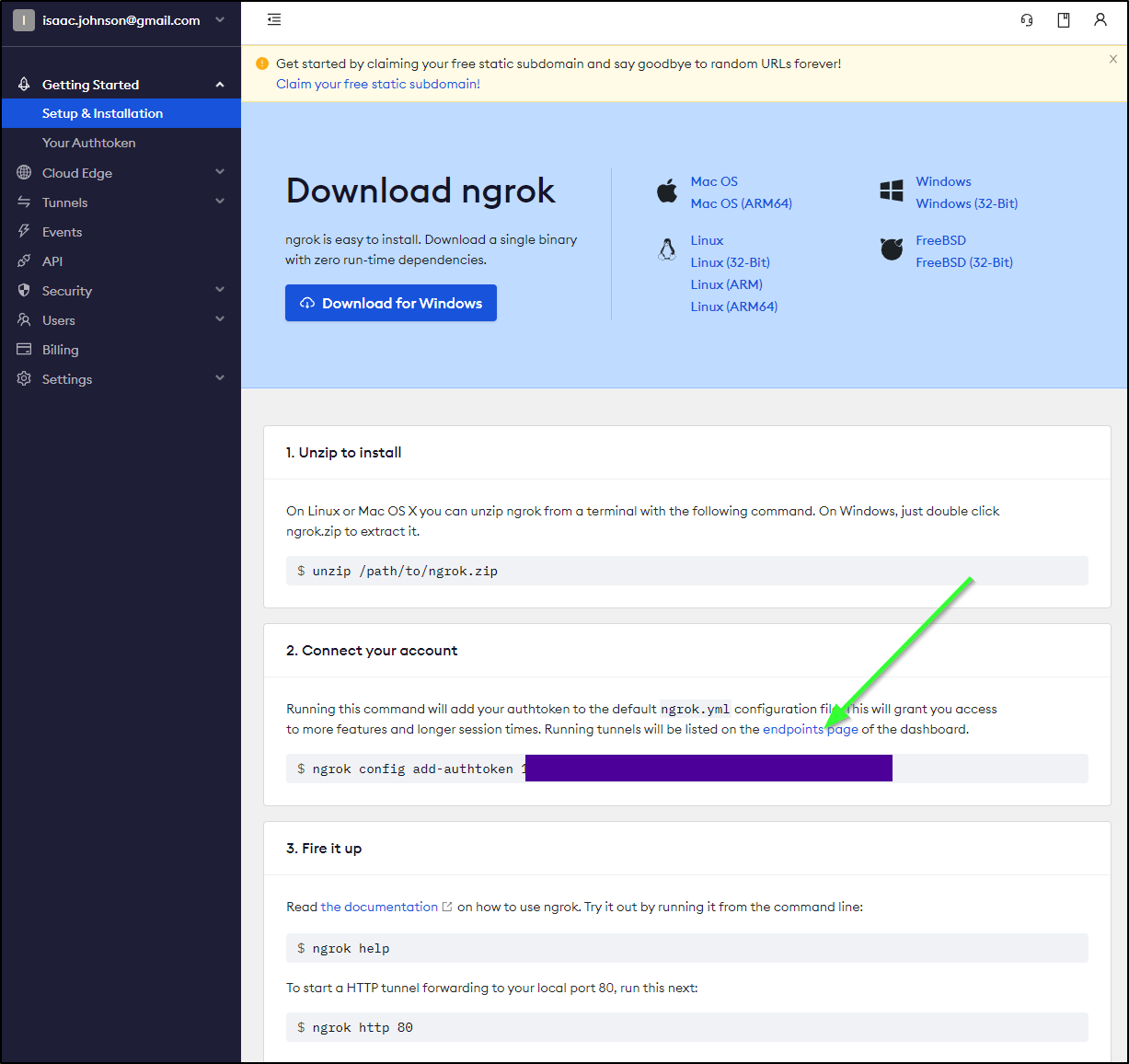

For the next step, we’ll need an Auth Token and API Key

The Auth token is often found on your main page in the NGrok Dashboard

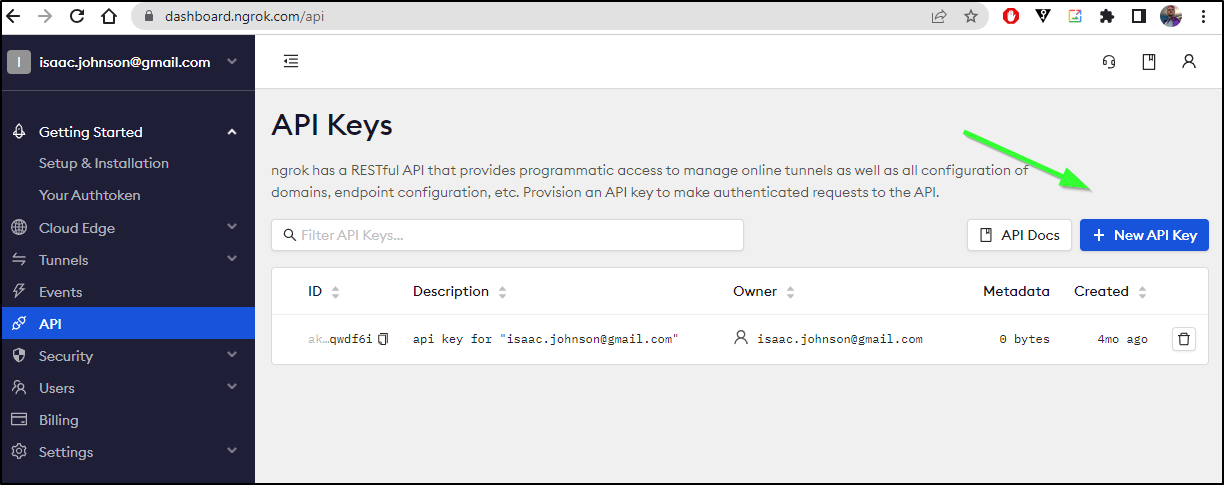

As for the API Key, we can go to the API Keys page and click the “New API Key” button to create one.

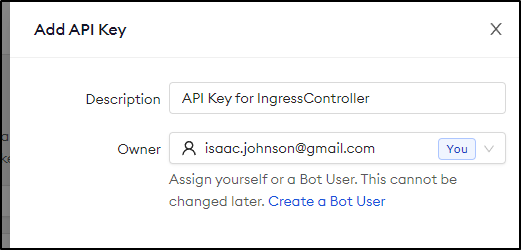

We can give it a description and optionally change ownership

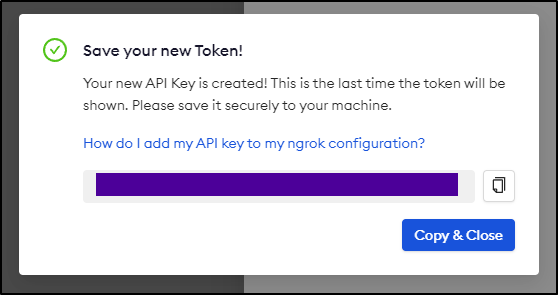

It will then show you the API key only once, so save it before moving on.
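Because the install command further down reads both credentials from the shell, it can help to export them and confirm they are non-empty first. This is a minimal sketch: the variable names match the ones used in the install command in this post, and the values are placeholders (assumptions) to be replaced with the real token and key from the dashboard.

```shell
# Placeholder values (assumptions); substitute the real ones from the ngrok dashboard.
export NGROK_AUTHTOKEN="replace-with-your-authtoken"
export NGROK_API_KEY="replace-with-your-api-key"

# ${VAR:?message} aborts with the message if VAR is unset or empty.
: "${NGROK_AUTHTOKEN:?NGROK_AUTHTOKEN is not set}"
: "${NGROK_API_KEY:?NGROK_API_KEY is not set}"
echo "both credentials are set"
```

Failing early this way avoids installing the chart with an empty `credentials.apiKey` or `credentials.authtoken` value.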

We can now use those in the Helm install command

$ helm install ngrok-ingress-controller ngrok/kubernetes-ingress-controller \
    --namespace ngrok-ingress-controller \
    --create-namespace \
    --set credentials.apiKey=$NGROK_API_KEY \
    --set credentials.authtoken=$NGROK_AUTHTOKEN

NAME: ngrok-ingress-controller

LAST DEPLOYED: Sun Jul 9 08:17:54 2023

NAMESPACE: ngrok-ingress-controller

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

================================================================================

The ngrok Ingress controller has been deployed as a Deployment type to your

cluster.

If you haven't yet, create some Ingress resources in your cluster and they will

be automatically configured on the internet using ngrok.

One example, taken from your cluster, is the Service:

"metrics-server"

You can make this accessible via Ngrok with the following manifest:

--------------------------------------------------------------------------------

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: metrics-server
  namespace: kube-system
spec:
  ingressClassName: ngrok
  rules:
  - host: metrics-server-TK0cjlAQ.ngrok.app
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: metrics-server
            port:
              number: 443

--------------------------------------------------------------------------------

Applying this manifest will make the service "metrics-server"

available on the public internet at "https://metrics-server-TK0cjlAQ.ngrok.app/".

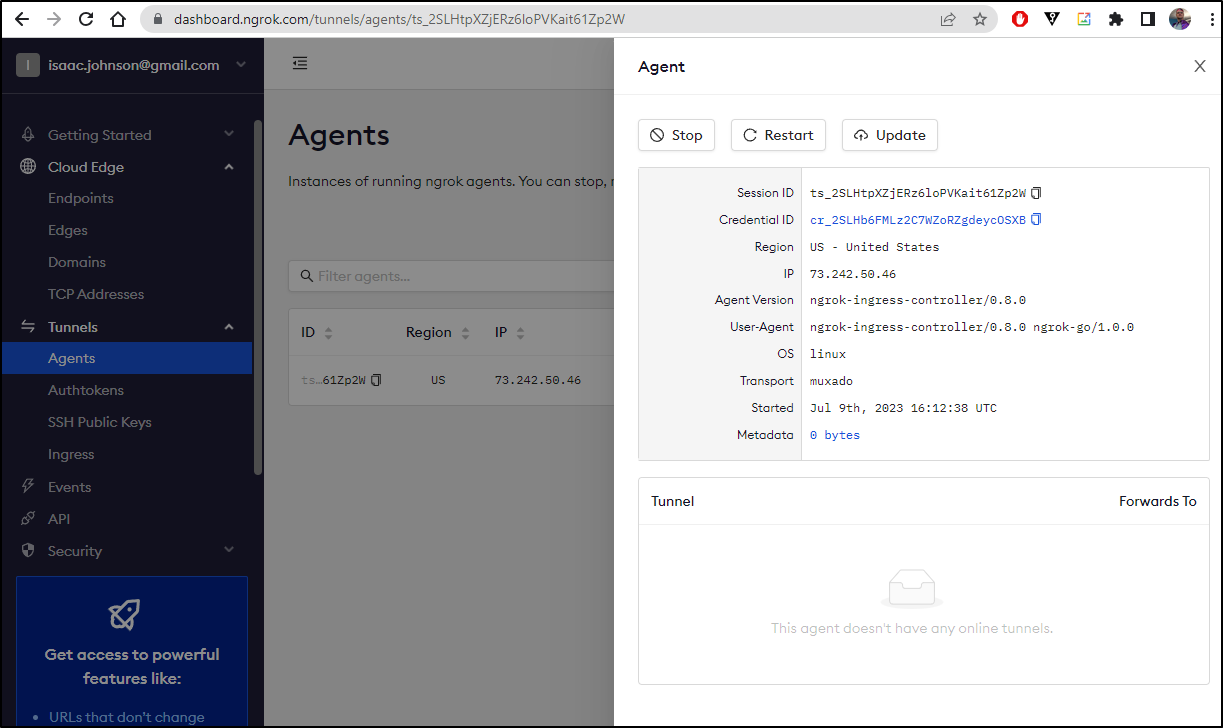

Once done, view your edges in the Dashboard https://dashboard.ngrok.com/cloud-edge/edges

Find the tunnels running in your cluster here https://dashboard.ngrok.com/tunnels/agents

If you have any questions or feedback, please join us in https://ngrok.com/slack and let us know!

Testing

Let’s now use my favourite off-the-shelf demo app, the Azure Vote App.

$ helm repo add azure-samples https://azure-samples.github.io/helm-charts/

"azure-samples" already exists with the same configuration, skipping

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "confluentinc" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "zabbix-community" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "ngrok" chart repository

...Successfully got an update from the "kuma" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "castai-helm" chart repository

...Successfully got an update from the "portainer" chart repository

...Successfully got an update from the "sonarqube" chart repository

...Successfully got an update from the "jfelten" chart repository

...Successfully got an update from the "epsagon" chart repository

...Successfully got an update from the "sumologic" chart repository

...Successfully got an update from the "kiwigrid" chart repository

...Successfully got an update from the "open-telemetry" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "elastic" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "newrelic" chart repository

...Successfully got an update from the "incubator" chart repository

...Successfully got an update from the "crossplane-stable" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "rancher-latest" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "prometheus-community" chart repository

...Successfully got an update from the "myharbor" chart repository

...Successfully got an update from the "freshbrewed" chart repository

...Successfully got an update from the "kube-state-metrics" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "opencost" chart repository

...Successfully got an update from the "akomljen-charts" chart repository

...Successfully got an update from the "rhcharts" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "uptime-kuma" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "bitnami" chart repository

Update Complete. ⎈Happy Helming!⎈

$ helm install azure-samples/azure-vote --generate-name

NAME: azure-vote-1688908760

LAST DEPLOYED: Sun Jul 9 08:19:21 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Azure Vote application has been started on your Kubernetes cluster.

Title: Azure Vote App

Vote 1 value: Cats

Vote 2 value: Dogs

The externally accessible IP address can take a minute or so to provision. Run the following command to monitor the provisioning status. Once an External IP address has been provisioned, brows to this IP address to access the Azure Vote application.

kubectl get service -l name=azure-vote-front -w

Once I see the pods are created

$ kubectl get pods

NAME                                                READY   STATUS    RESTARTS   AGE
vote-back-azure-vote-1688908760-66b87b78df-trv4z    1/1     Running   0          98s
vote-front-azure-vote-1688908760-855c6ccc95-kmtl9   1/1     Running   0          98s

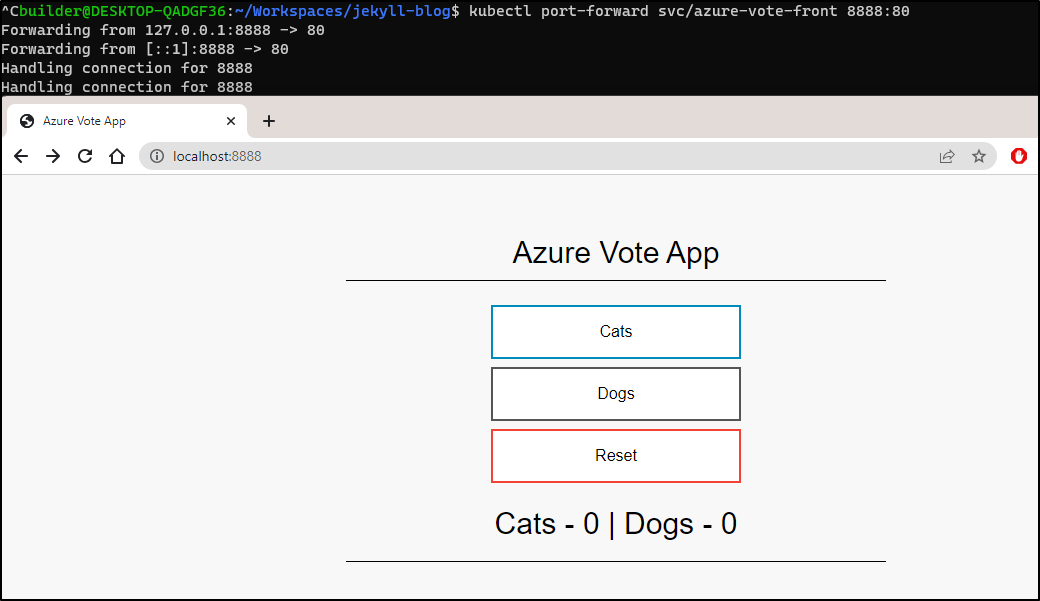

Then I’ll port-forward to test

$ kubectl port-forward svc/azure-vote-front 8888:80

Forwarding from 127.0.0.1:8888 -> 80

Forwarding from [::1]:8888 -> 80

Handling connection for 8888

Handling connection for 8888

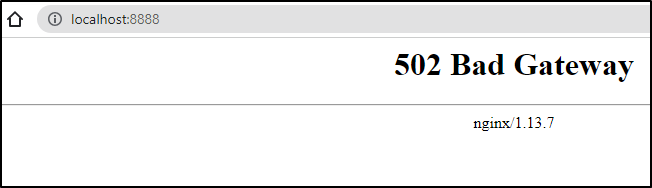

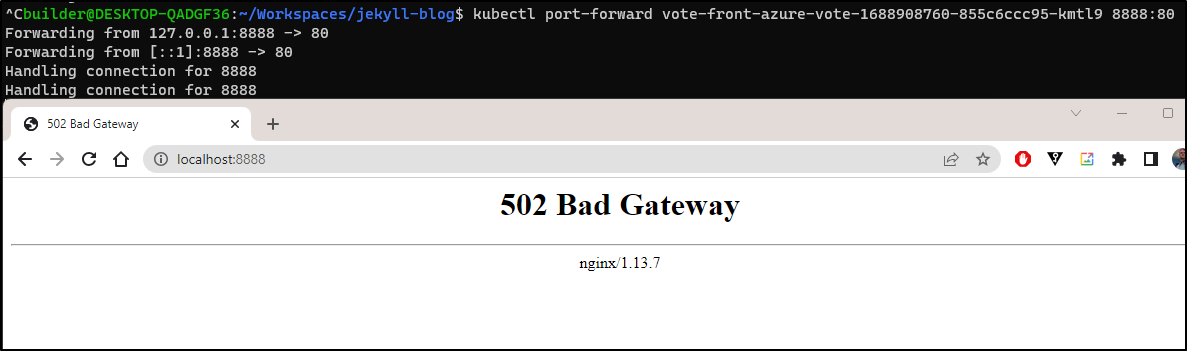

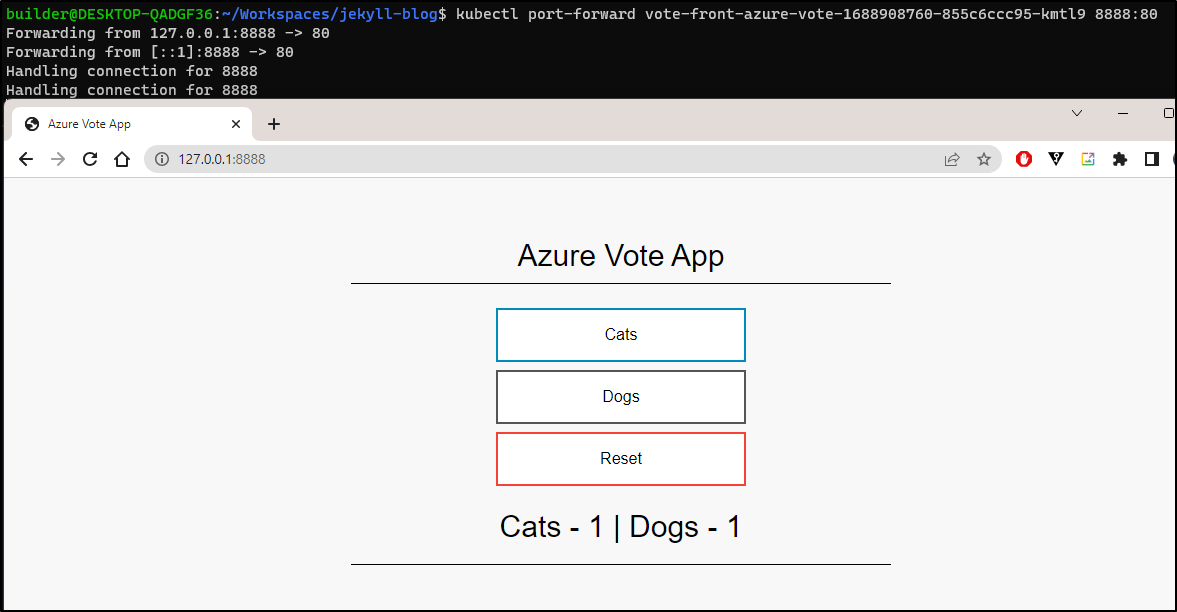

Port-forwarding to the service gave me a 502 when I tried to vote, as did port-forwarding directly to the pod. Then I remembered this had happened once before, and that using 127.0.0.1 had, for whatever reason, solved it. It did here too.

Before we set up the Ingress with NGrok, let’s check our service

$ kubectl get svc azure-vote-front -o yaml

apiVersion: v1
kind: Service
metadata:
  annotations:
    meta.helm.sh/release-name: azure-vote-1688908760
    meta.helm.sh/release-namespace: default
  creationTimestamp: "2023-07-09T13:19:24Z"
  finalizers:
  - service.kubernetes.io/load-balancer-cleanup
  labels:
    app.kubernetes.io/managed-by: Helm
    name: azure-vote-front
  name: azure-vote-front
  namespace: default
  resourceVersion: "565769"
  uid: 33b87bf0-48a9-4376-9903-1e0c35d78af2
spec:
  allocateLoadBalancerNodePorts: true
  clusterIP: 10.43.236.182
  clusterIPs:
  - 10.43.236.182
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - nodePort: 30996
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: vote-front-azure-vote-1688908760
  sessionAffinity: None
  type: LoadBalancer
status:
  loadBalancer:
    ingress:
    - ip: 192.168.1.13
    - ip: 192.168.1.159
    - ip: 192.168.1.206

The service listens on port 80, so we should be able to create an NGrok ingress with

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: azure-vote-front
spec:
  ingressClassName: ngrok
  rules:
  - host: azure-vote-front-tk0cjlaq.ngrok.app
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: azure-vote-front
            port:
              number: 80
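Since the host value changes with each attempt in this post, it may be handy to generate the manifest rather than edit it by hand. The following is only a sketch: `ngrok_ingress` is a hypothetical helper name, and it emits the same Ingress shape as the manifest above with the service name, host and port filled in from its arguments.

```shell
# Hypothetical helper: print an Ingress manifest for the ngrok ingress class.
# Usage: ngrok_ingress <service-name> <host> <port>
ngrok_ingress() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: $1
spec:
  ingressClassName: ngrok
  rules:
  - host: $2
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: $1
            port:
              number: $3
EOF
}

# Print the manifest used in this post.
ngrok_ingress azure-vote-front azure-vote-front-tk0cjlaq.ngrok.app 80
```

Redirecting the output to `ingress-ngrok.yaml` gives a file that can be applied with `kubectl apply -f`.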

I’ll create it

$ kubectl apply -f ingress-ngrok.yaml

ingress.networking.k8s.io/azure-vote-front created

$ kubectl get ingress

NAME               CLASS   HOSTS                                 ADDRESS   PORTS   AGE
azure-vote-front   ngrok   azure-vote-front-tk0cjlaq.ngrok.app             80      26s

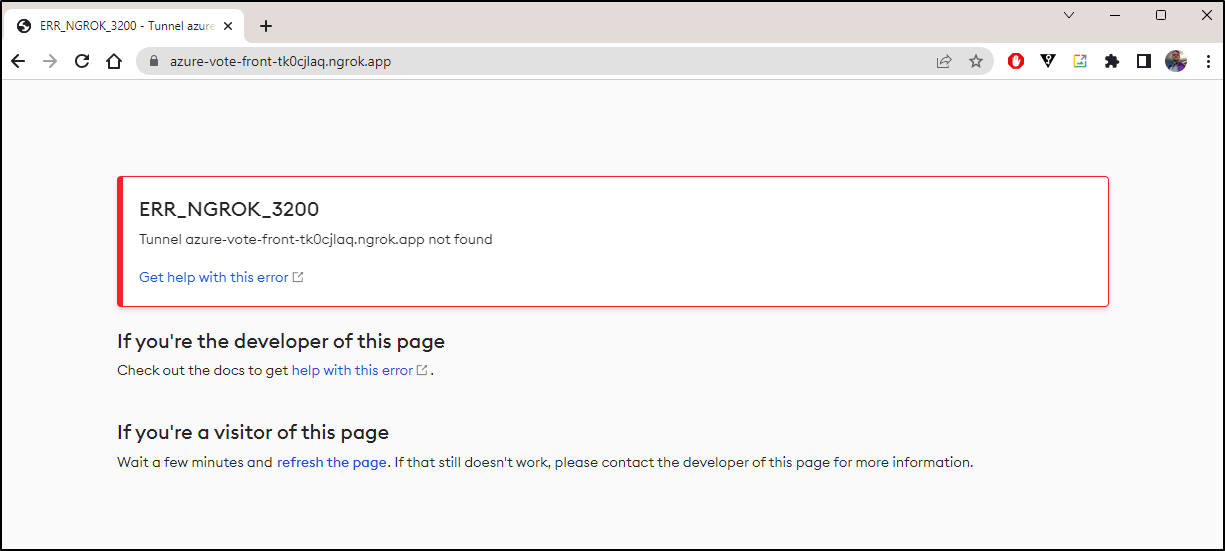

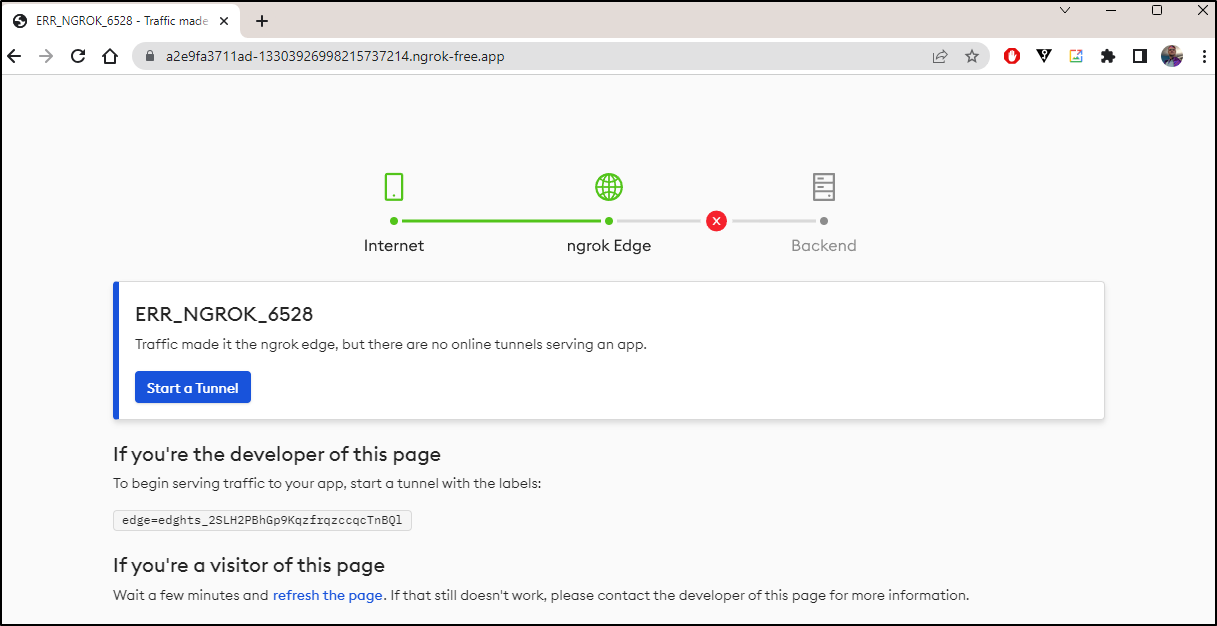

That didn’t work.

I tried a few times, even giving it plenty of time to create a tunnel.

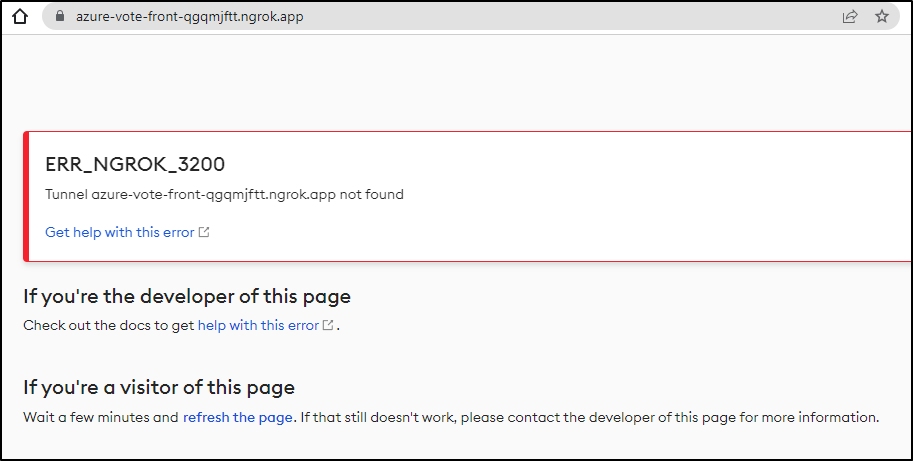

$ kubectl get ingress
NAME               CLASS   HOSTS                                 ADDRESS   PORTS   AGE
azure-vote-front   ngrok   azure-vote-front-qgqmjftt.ngrok.app             80      9s

$ kubectl get ingress
NAME               CLASS   HOSTS                                 ADDRESS   PORTS   AGE
azure-vote-front   ngrok   azure-vote-front-qgqmjftt.ngrok.app             80      95m

Looking at the Ingress controller logs is when the error became clear. It’s about money.

$ kubectl logs ngrok-ingress-controller-kubernetes-ingress-controller-mann55k2 -n ngrok-ingress-controller | head -n 40

{"level":"info","ts":"2023-07-09T13:42:24Z","logger":"setup","msg":"starting manager","version":"0.8.0","commit":"2d38bc7e6cbc1e413f6d75a7e15e781242c7674f"}

{"level":"info","ts":"2023-07-09T13:42:24Z","logger":"controller-runtime.metrics","msg":"Metrics server is starting to listen","addr":":8080"}

{"level":"info","ts":"2023-07-09T13:42:25Z","logger":"setup","msg":"no matching ingresses found","all ingresses":null,"all ngrok ingresses":null,"all ingress classes":[{"metadata":{"name":"ngrok","uid":"4e2f529f-b1dc-4c14-a8eb-8419e6957e8a","resourceVersion":"567369","generation":1,"creationTimestamp":"2023-07-09T13:42:23Z","labels":{"app.kubernetes.io/component":"controller","app.kubernetes.io/instance":"ngrok-ingress-controller","app.kubernetes.io/managed-by":"Helm","app.kubernetes.io/name":"kubernetes-ingress-controller","app.kubernetes.io/part-of":"kubernetes-ingress-controller","app.kubernetes.io/version":"0.8.0","helm.sh/chart":"kubernetes-ingress-controller-0.10.0"},"annotations":{"meta.helm.sh/release-name":"ngrok-ingress-controller","meta.helm.sh/release-namespace":"ngrok-ingress-controller"},"managedFields":[{"manager":"helm","operation":"Update","apiVersion":"networking.k8s.io/v1","time":"2023-07-09T13:42:23Z","fieldsType":"FieldsV1","fieldsV1":{"f:metadata":{"f:annotations":{".":{},"f:meta.helm.sh/release-name":{},"f:meta.helm.sh/release-namespace":{}},"f:labels":{".":{},"f:app.kubernetes.io/component":{},"f:app.kubernetes.io/instance":{},"f:app.kubernetes.io/managed-by":{},"f:app.kubernetes.io/name":{},"f:app.kubernetes.io/part-of":{},"f:app.kubernetes.io/version":{},"f:helm.sh/chart":{}}},"f:spec":{"f:controller":{}}}}]},"spec":{"controller":"k8s.ngrok.com/ingress-controller"}}],"all ngrok ingress 
classes":[{"metadata":{"name":"ngrok","uid":"4e2f529f-b1dc-4c14-a8eb-8419e6957e8a","resourceVersion":"567369","generation":1,"creationTimestamp":"2023-07-09T13:42:23Z","labels":{"app.kubernetes.io/component":"controller","app.kubernetes.io/instance":"ngrok-ingress-controller","app.kubernetes.io/managed-by":"Helm","app.kubernetes.io/name":"kubernetes-ingress-controller","app.kubernetes.io/part-of":"kubernetes-ingress-controller","app.kubernetes.io/version":"0.8.0","helm.sh/chart":"kubernetes-ingress-controller-0.10.0"},"annotations":{"meta.helm.sh/release-name":"ngrok-ingress-controller","meta.helm.sh/release-namespace":"ngrok-ingress-controller"},"managedFields":[{"manager":"helm","operation":"Update","apiVersion":"networking.k8s.io/v1","time":"2023-07-09T13:42:23Z","fieldsType":"FieldsV1","fieldsV1":{"f:metadata":{"f:annotations":{".":{},"f:meta.helm.sh/release-name":{},"f:meta.helm.sh/release-namespace":{}},"f:labels":{".":{},"f:app.kubernetes.io/component":{},"f:app.kubernetes.io/instance":{},"f:app.kubernetes.io/managed-by":{},"f:app.kubernetes.io/name":{},"f:app.kubernetes.io/part-of":{},"f:app.kubernetes.io/version":{},"f:helm.sh/chart":{}}},"f:spec":{"f:controller":{}}}}]},"spec":{"controller":"k8s.ngrok.com/ingress-controller"}}]}

{"level":"info","ts":"2023-07-09T13:42:25Z","logger":"setup","msg":"starting manager"}

{"level":"info","ts":"2023-07-09T13:42:25Z","msg":"Starting server","path":"/metrics","kind":"metrics","addr":"[::]:8080"}

{"level":"info","ts":"2023-07-09T13:42:25Z","msg":"Starting server","kind":"health probe","addr":"[::]:8081"}

{"level":"info","ts":"2023-07-09T13:42:25Z","logger":"controllers.tunnel","msg":"Starting EventSource","source":"kind source: *v1alpha1.Tunnel"}

I0709 13:42:25.165341 1 leaderelection.go:248] attempting to acquire leader lease ngrok-ingress-controller/ngrok-ingress-controller-kubernetes-ingress-controller-leader...

{"level":"info","ts":"2023-07-09T13:42:25Z","logger":"controllers.tunnel","msg":"Starting Controller"}

{"level":"info","ts":"2023-07-09T13:42:25Z","logger":"controllers.tunnel","msg":"Starting workers","worker count":1}

I0709 13:42:43.784550 1 leaderelection.go:258] successfully acquired lease ngrok-ingress-controller/ngrok-ingress-controller-kubernetes-ingress-controller-leader

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","source":"kind source: *v1.Ingress"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"tcpedge","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"TCPEdge","source":"kind source: *v1alpha1.TCPEdge"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","source":"kind source: *v1.IngressClass"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"tcpedge","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"TCPEdge","source":"kind source: *v1alpha1.IPPolicy"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting Controller","controller":"tcpedge","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"TCPEdge"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"domain","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"Domain","source":"kind source: *v1alpha1.Domain"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting Controller","controller":"domain","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"Domain"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"ippolicy","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"IPPolicy","source":"kind source: *v1alpha1.IPPolicy"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting Controller","controller":"ippolicy","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"IPPolicy"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","source":"kind source: *v1.Service"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","source":"kind source: *v1alpha1.Domain"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","source":"kind source: *v1alpha1.HTTPSEdge"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","source":"kind source: *v1alpha1.Tunnel"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","source":"kind source: *v1alpha1.NgrokModuleSet"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting Controller","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting EventSource","controller":"httpsedge","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"HTTPSEdge","source":"kind source: *v1alpha1.HTTPSEdge"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting Controller","controller":"httpsedge","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"HTTPSEdge"}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting workers","controller":"domain","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"Domain","worker count":1}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting workers","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","worker count":1}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting workers","controller":"ippolicy","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"IPPolicy","worker count":1}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting workers","controller":"httpsedge","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"HTTPSEdge","worker count":1}

{"level":"info","ts":"2023-07-09T13:42:43Z","msg":"Starting workers","controller":"tcpedge","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"TCPEdge","worker count":1}

{"level":"info","ts":"2023-07-09T13:45:26Z","logger":"cache-store-driver","msg":"syncing driver state!!"}

{"level":"error","ts":"2023-07-09T13:45:27Z","msg":"Reconciler error","controller":"domain","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"Domain","Domain":{"name":"azure-vote-front-qgqmjftt-ngrok-app","namespace":"default"},"namespace":"default","name":"azure-vote-front-qgqmjftt-ngrok-app","reconcileID":"18f09c10-5abf-4bc3-a3d2-6a1baafd0c30","error":"HTTP 400: Only Personal, Pro or Enterprise accounts can reserve domains.\nYour account can't reserve domains. \n\nUpgrade to a paid plan at: https://dashboard.ngrok.com/billing/subscription [ERR_NGROK_401]\n\nOperation ID: op_2SL00Ag4Dfrs8Q4zGjiBVzYpzb6","stacktrace":"sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:329\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}

{"level":"error","ts":"2023-07-09T13:45:27Z","msg":"Reconciler error","controller":"domain","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"Domain","Domain":{"name":"azure-vote-front-qgqmjftt-ngrok-app","namespace":"default"},"namespace":"default","name":"azure-vote-front-qgqmjftt-ngrok-app","reconcileID":"e31988db-ffe2-486f-968b-59f0a3e1acc8","error":"HTTP 400: Only Personal, Pro or Enterprise accounts can reserve domains.\nYour account can't reserve domains. \n\nUpgrade to a paid plan at: https://dashboard.ngrok.com/billing/subscription [ERR_NGROK_401]\n\nOperation ID: op_2SL006lp3JePs8tRIA1UtaoRrRc","stacktrace":"sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:329\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}

{"level":"error","ts":"2023-07-09T13:45:27Z","msg":"Reconciler error","controller":"domain","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"Domain","Domain":{"name":"azure-vote-front-qgqmjftt-ngrok-app","namespace":"default"},"namespace":"default","name":"azure-vote-front-qgqmjftt-ngrok-app","reconcileID":"d3f9914a-c1f7-4c2a-a85a-8731cb7015bf","error":"HTTP 400: Only Personal, Pro or Enterprise accounts can reserve domains.\nYour account can't reserve domains. \n\nUpgrade to a paid plan at: https://dashboard.ngrok.com/billing/subscription [ERR_NGROK_401]\n\nOperation ID: op_2SL00AJZYvoYPBhTp1hWLuuq2ri","stacktrace":"sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:329\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}
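The useful part of those long JSON lines is the `error` field. As a rough sketch, a small filter can pull out just the first sentence of each distinct error. `ngrok_errors` is a hypothetical helper name, it relies only on `grep`, `sed` and `sort`, and it assumes the one-JSON-object-per-line format shown above. The sample lines are shortened stand-ins, not real output.

```shell
# Hypothetical helper: read controller logs on stdin and print the first
# sentence (up to the first escaped newline) of each distinct error message.
ngrok_errors() {
  grep '"level":"error"' \
    | sed -n 's/.*"error":"\([^"\\]*\).*/\1/p' \
    | sort -u
}

# Shortened sample lines standing in for real controller output.
printf '%s\n' \
  '{"level":"info","msg":"Starting workers","worker count":1}' \
  '{"level":"error","msg":"Reconciler error","error":"HTTP 400: Only Personal, Pro or Enterprise accounts can reserve domains.\nYour account cannot reserve domains."}' \
  | ngrok_errors
```

Against a live cluster, the same filter could be fed from the `kubectl logs` command used above in place of the `printf` sample.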

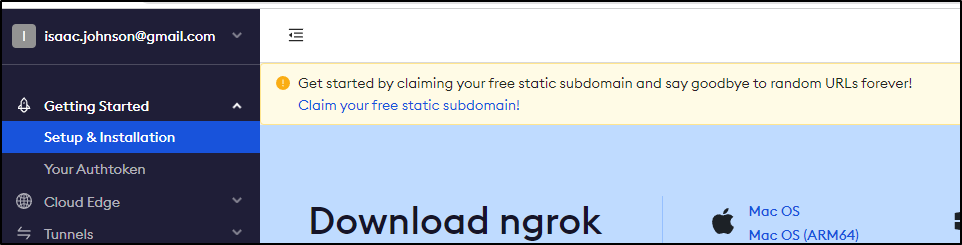

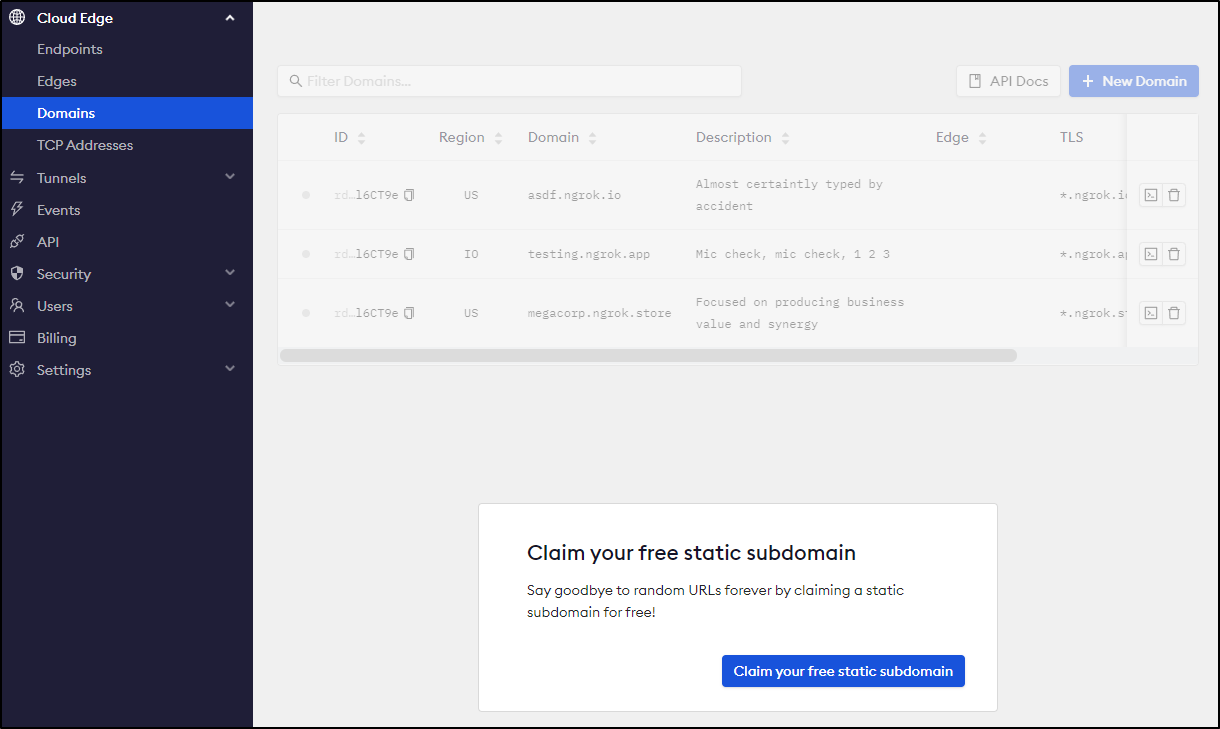

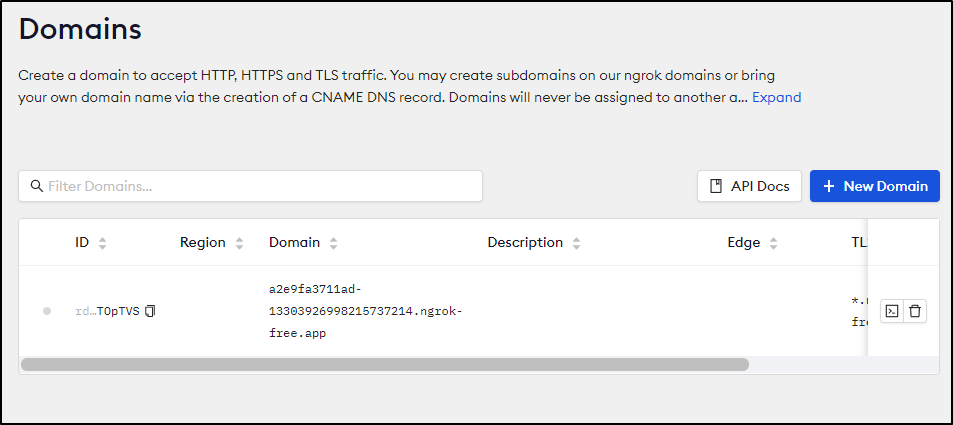

I then spotted a “claim a free subdomain” banner I had not noticed before.

That bounced me over to the domains page, where I clicked the button.

It gave me an ngrok-free.app domain.

I tried using that domain as the host in the Ingress.

$ cat ingress-ngrok.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: azure-vote-front
spec:
  ingressClassName: ngrok
  rules:
  - host: azure-vote-front.a2e9fa3711ad-13303926998215737214.ngrok-free.app
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: azure-vote-front
            port:
              number: 80

$ kubectl apply -f ingress-ngrok.yaml

ingress.networking.k8s.io/azure-vote-front created

$ kubectl get ingress

NAME               CLASS   HOSTS                                                               ADDRESS   PORTS   AGE
azure-vote-front   ngrok   azure-vote-front.a2e9fa3711ad-13303926998215737214.ngrok-free.app             80      110s

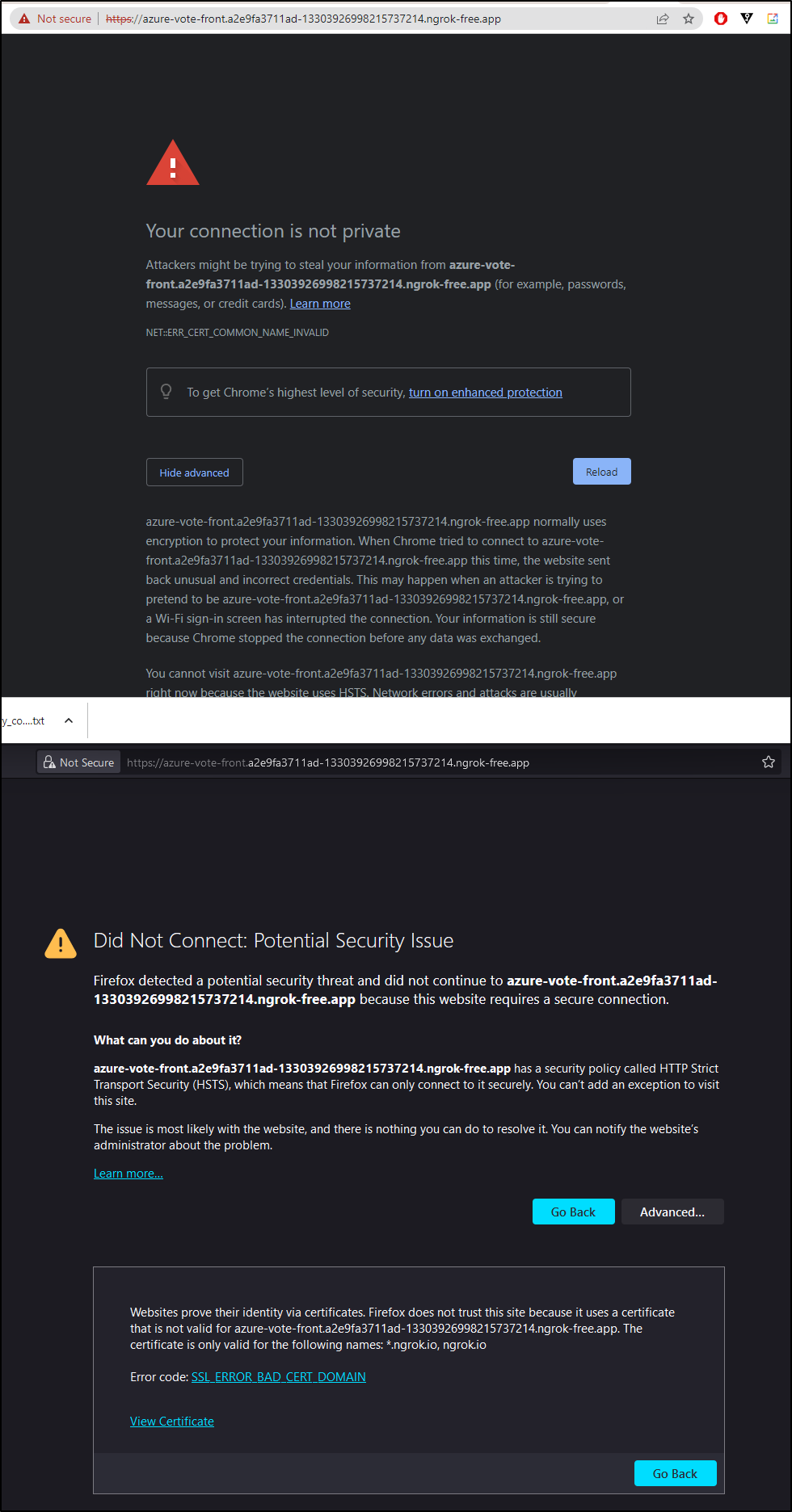

Now I have a new problem. My browsers refuse to load the page over HTTPS because the certificate being served is only valid for ngrok.io.
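To reason about that kind of mismatch, it helps to recall how certificate names are matched: a wildcard name covers exactly one extra label under its own domain and nothing under a different domain. The sketch below is a simplified version of that rule in POSIX shell. `cert_covers` is a hypothetical helper, and `*.ngrok.io` is an assumed certificate name, since all I know is that the certificate was valid for ngrok.io.

```shell
# Hypothetical helper: succeed if host $1 is covered by certificate name $2.
# Supports exact names and a single leading wildcard label ("*.example.com").
cert_covers() {
  host=$1
  pattern=$2
  case $pattern in
    '*.'*)
      suffix=${pattern#'*'}      # e.g. ".ngrok.io"
      label=${host%"$suffix"}    # host with that suffix removed
      # The suffix must match, and the wildcard stands for one non-empty label.
      [ "$label" != "$host" ] || return 1
      [ -n "$label" ] || return 1
      case $label in
        *.*) return 1 ;;
      esac
      return 0
      ;;
    *)
      [ "$host" = "$pattern" ]
      ;;
  esac
}

host="azure-vote-front.a2e9fa3711ad-13303926998215737214.ngrok-free.app"
if cert_covers "$host" '*.ngrok.io'; then
  echo "covered"
else
  echo "not covered"   # this branch runs: the domains differ
fi
```

The same rule means a host with two labels in front of the base domain, like the one used here, would not be covered by a single-label wildcard for that base domain either.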

I decided I would try again

$ kubectl delete -f ingress-ngrok.yaml

ingress.networking.k8s.io "azure-vote-front" deleted

$ helm delete ngrok-ingress-controller -n ngrok-ingress-controller

release "ngrok-ingress-controller" uninstalled

$ helm install ngrok-ingress-controller ngrok/kubernetes-ingress-controller --namespace ngrok-ingress-controller --create-namespace --set credentials.apiKey=$NGROK_API_KEY --set credentials.authtoken=$NGROK_AUTHTOKEN

NAME: ngrok-ingress-controller

LAST DEPLOYED: Sun Jul 9 10:35:36 2023

NAMESPACE: ngrok-ingress-controller

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

================================================================================

The ngrok Ingress controller has been deployed as a Deployment type to your

cluster.

If you haven't yet, create some Ingress resources in your cluster and they will

be automatically configured on the internet using ngrok.

One example, taken from your cluster, is the Service:

"metrics-server"

You can make this accessible via Ngrok with the following manifest:

--------------------------------------------------------------------------------

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: metrics-server
  namespace: kube-system
spec:
  ingressClassName: ngrok
  rules:
  - host: metrics-server-KLOewW3K.ngrok.app
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: metrics-server
            port:
              number: 443

--------------------------------------------------------------------------------

Applying this manifest will make the service "metrics-server"

available on the public internet at "https://metrics-server-KLOewW3K.ngrok.app/".

Once done, view your edges in the Dashboard https://dashboard.ngrok.com/cloud-edge/edges

Find the tunnels running in your cluster here https://dashboard.ngrok.com/tunnels/agents

If you have any questions or feedback, please join us in https://ngrok.com/slack and let us know!

Another post

I tried this post which seemed even simpler

$ helm install ngrok-ingress-controller ngrok/kubernetes-ingress-controller --set credentials.apiKey=asdfasdfasdfasdfasdfasdfsadfasdf --set credentials.authtoken=asdfasdfasdfasdfasdfasdfasdfasdf

NAME: ngrok-ingress-controller

LAST DEPLOYED: Sun Jul 9 10:59:26 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

================================================================================

The ngrok Ingress controller has been deployed as a Deployment type to your

cluster.

If you haven't yet, create some Ingress resources in your cluster and they will

be automatically configured on the internet using ngrok.

One example, taken from your cluster, is the Service:

"metrics-server"

You can make this accessible via Ngrok with the following manifest:

--------------------------------------------------------------------------------

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: metrics-server
  namespace: kube-system
spec:
  ingressClassName: ngrok
  rules:
  - host: metrics-server-3YuNSo5k.ngrok.app
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: metrics-server
            port:
              number: 443

--------------------------------------------------------------------------------

Applying this manifest will make the service "metrics-server"

available on the public internet at "https://metrics-server-3YuNSo5k.ngrok.app/".

Once done, view your edges in the Dashboard https://dashboard.ngrok.com/cloud-edge/edges

Find the tunnels running in your cluster here https://dashboard.ngrok.com/tunnels/agents

If you have any questions or feedback, please join us in https://ngrok.com/slack and let us know!

I set the ‘free’ domain as the subdomain

$ export NGROK_SUBDOMAIN="a2e9fa3711ad-13303926998215737214.ngrok-free.app"

Then ran their one-liner

$ wget https://raw.githubusercontent.com/ngrok/kubernetes-ingress-controller/main/docs/examples/hello-world/manifests.yaml -O - | envsubst | kubectl apply -f -

--2023-07-09 11:00:24-- https://raw.githubusercontent.com/ngrok/kubernetes-ingress-controller/main/docs/examples/hello-world/manifests.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.111.133, 185.199.110.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.111.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 886 [text/plain]

Saving to: ‘STDOUT’

- 100%[=============================================================================================================>] 886 --.-KB/s in 0s

2023-07-09 11:00:24 (65.2 MB/s) - written to stdout [886/886]

ingress.networking.k8s.io/game-2048 created

service/game-2048 created

deployment.apps/game-2048 created

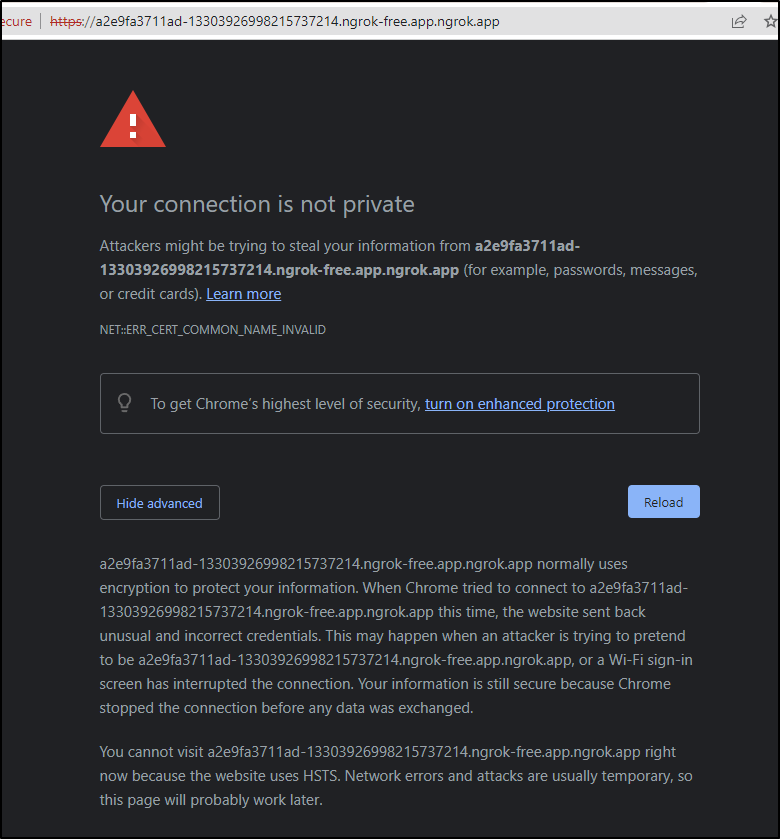

$ kubectl get ingress
NAME        CLASS   HOSTS                                                        ADDRESS   PORTS   AGE
game-2048   ngrok   a2e9fa3711ad-13303926998215737214.ngrok-free.app.ngrok.app             80      8s

This was just as broken.

And the logs from the Ingress controller suggested it had again tried to reserve a domain

{"level":"error","ts":"2023-07-09T16:00:26Z","msg":"Reconciler error","controller":"domain","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"Domain","Domain":{"name":"a2e9fa3711ad-13303926998215737214-ngrok-free-app-ngrok-app","namespace":"default"},"namespace":"default","name":"a2e9fa3711ad-13303926998215737214-ngrok-free-app-ngrok-app","reconcileID":"694e41ad-ef6c-4391-aef1-131a9a96d9cc","error":"HTTP 400: Only Personal, Pro or Enterprise accounts can reserve domains.\nYour account can't reserve domains. \n\nUpgrade to a paid plan at: https://dashboard.ngrok.com/billing/subscription [ERR_NGROK_401]\n\nOperation ID: op_2SLGPwXN0M3Keha7QL9R1KPRVZL","stacktrace":"sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:329\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}
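The HOSTS column above ends in `.ngrok-free.app.ngrok.app`, which suggests the example manifest appends `.ngrok.app` to whatever `NGROK_SUBDOMAIN` holds. That is an inference from the output, not something I checked against the file. One way to sidestep the doubled suffix, as a sketch only, is to rewrite the host line with the exact name instead of relying on `envsubst`. `set_ingress_host` is a hypothetical helper, and the sample line is an assumed shape of the manifest's host entry.

```shell
# Hypothetical filter: replace the value of every "- host:" line read on stdin
# with the exact host name given as $1.
set_ingress_host() {
  sed "s|^\([[:space:]]*- host:\).*|\1 $1|"
}

# Stand-in for the relevant line of the example manifest (assumed shape).
printf '%s\n' '  - host: $NGROK_SUBDOMAIN.ngrok.app' \
  | set_ingress_host "a2e9fa3711ad-13303926998215737214.ngrok-free.app"
```

This only corrects the host name. It does not change whether the account is allowed to use that domain, and my earlier attempt with the full ngrok-free.app host in the Azure Vote Ingress did not work either.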

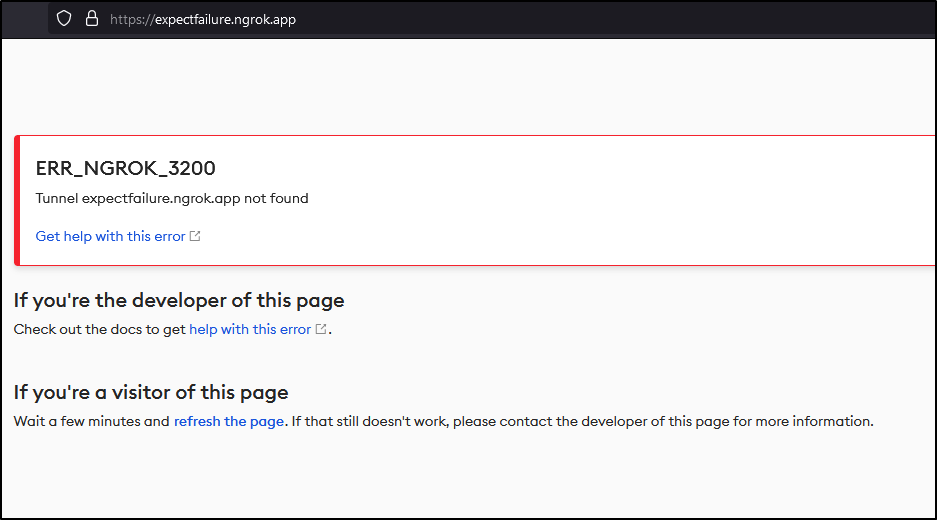

I tried a simple name

$ export NGROK_SUBDOMAIN="expectfailure"

$ wget https://raw.githubusercontent.com/ngrok/kubernetes-ingress-controller/main/docs/examples/hello-world/manifests.yaml -O - | envsubst | kubectl apply -f -

--2023-07-09 11:03:40-- https://raw.githubusercontent.com/ngrok/kubernetes-ingress-controller/main/docs/examples/hello-world/manifests.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.111.133, 185.199.110.133, 185.199.109.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.111.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 886 [text/plain]

Saving to: ‘STDOUT’

- 100%[=============================================================================================================>] 886 --.-KB/s in 0s

2023-07-09 11:03:40 (71.9 MB/s) - written to stdout [886/886]

ingress.networking.k8s.io/game-2048 configured

service/game-2048 unchanged

deployment.apps/game-2048 unchanged

Which, of course, gives me the 3200 error, as it cannot find a tunnel, and I can see why in the logs:

{"level":"error","ts":"2023-07-09T16:06:28Z","msg":"Reconciler error","controller":"domain","controllerGroup":"ingress.k8s.ngrok.com","controllerKind":"Domain","Domain":{"name":"expectfailure-ngrok-app","namespace":"default"},"namespace":"default","name":"expectfailure-ngrok-app","reconcileID":"e51ad8f4-30f4-4d5f-b9a4-71498e94e2a7","error":"HTTP 400: Only Personal, Pro or Enterprise accounts can reserve domains.\nYour account can't reserve domains. \n\nUpgrade to a paid plan at: https://dashboard.ngrok.com/billing/subscription [ERR_NGROK_401]\n\nOperation ID: op_2SLH9KtPrk09xQxYUd62w45OGIv","stacktrace":"sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:329\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}
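The controller logs are single-line JSON objects, so the actual cause is easy to lose among the stack traces. Here is a minimal Python sketch for pulling out just the useful fields. The helper name `summarize_log_line` is hypothetical, and the sample line is a shortened copy of the entry above with the stack trace and IDs dropped.

```python
import json


def summarize_log_line(line: str) -> str:
    """Condense one JSON log line to 'level target: cause'."""
    entry = json.loads(line)
    # The error text embeds newlines; the first line carries the cause.
    error_lines = entry.get("error", "").splitlines()
    cause = error_lines[0] if error_lines else entry.get("msg", "")
    controller = entry.get("controller", entry.get("logger", "?"))
    name = entry.get("name", "")
    target = f"{controller}/{name}" if name else controller
    return f"{entry.get('level', '?')} {target}: {cause}"


# Shortened copy of the log entry shown above (assumption: only these
# fields matter for a quick summary).
sample = (
    '{"level":"error","msg":"Reconciler error","controller":"domain",'
    '"name":"expectfailure-ngrok-app",'
    '"error":"HTTP 400: Only Personal, Pro or Enterprise accounts can reserve domains.\\n'
    'Your account can\'t reserve domains."}'
)

print(summarize_log_line(sample))
```

Run against the sample, this reduces the entry to the level, the controller and resource name, and the "Only Personal, Pro or Enterprise accounts can reserve domains" cause.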

Last try

So I followed the first guide yet again, though this time I used the straight YAML for the ingress sample app.

I also grabbed a new Tunnel token just to be safe.

$ cat ngrok-final-test.yaml

apiVersion: v1
kind: Service
metadata:
  name: game-2048
  namespace: ngrok-ingress-controller
spec:
  ports:
    - name: http
      port: 80
      targetPort: 80
  selector:
    app: game-2048
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: game-2048
  namespace: ngrok-ingress-controller
spec:
  replicas: 1
  selector:
    matchLabels:
      app: game-2048
  template:
    metadata:
      labels:
        app: game-2048
    spec:
      containers:
        - name: backend
          image: alexwhen/docker-2048
          ports:
            - name: http
              containerPort: 80
---
# ngrok Ingress Controller Configuration
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: game-2048-ingress
  namespace: ngrok-ingress-controller
spec:
  ingressClassName: ngrok
  rules:
    - host: a2e9fa3711ad-13303926998215737214.ngrok-free.app
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: game-2048
                port:
                  number: 80

$ kubectl apply -f ngrok-final-test.yaml

service/game-2048 created

deployment.apps/game-2048 created

ingress.networking.k8s.io/game-2048-ingress created

The tunnel page is just as broken

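And the Ingress Controller logs are full of errors. My best guess is that the repeated "could not find the requested resource (post domains.ingress.k8s.ngrok.com)" message means the API server no longer recognized the controller's Domain resource type, as that error generally points to a missing CRD.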

{"level":"error","ts":"2023-07-09T16:15:15Z","logger":"cache-store-driver","msg":"error creating domain","domain":{"metadata":{"name":"a2e9fa3711ad-13303926998215737214-ngrok-free-app","namespace":"ngrok-ingress-controller","creationTimestamp":null},"spec":{"domain":"a2e9fa3711ad-13303926998215737214.ngrok-free.app"},"status":{}},"error":"the server could not find the requested resource (post domains.ingress.k8s.ngrok.com)","stacktrace":"github.com/ngrok/kubernetes-ingress-controller/internal/store.(*Driver).Sync\n\tgithub.com/ngrok/kubernetes-ingress-controller/internal/store/driver.go:203\ngithub.com/ngrok/kubernetes-ingress-controller/internal/controllers.(*IngressReconciler).reconcileAll\n\tgithub.com/ngrok/kubernetes-ingress-controller/internal/controllers/ingress_controller.go:153\ngithub.com/ngrok/kubernetes-ingress-controller/internal/controllers.(*IngressReconciler).Reconcile\n\tgithub.com/ngrok/kubernetes-ingress-controller/internal/controllers/ingress_controller.go:131\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Reconcile\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:122\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:323\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}

{"level":"error","ts":"2023-07-09T16:15:25Z","logger":"controllers.ingress","msg":"Failed to sync ingress to store","error":"the server could not find the requested resource (post domains.ingress.k8s.ngrok.com)","stacktrace":"github.com/ngrok/kubernetes-ingress-controller/internal/controllers.(*IngressReconciler).reconcileAll\n\tgithub.com/ngrok/kubernetes-ingress-controller/internal/controllers/ingress_controller.go:155\ngithub.com/ngrok/kubernetes-ingress-controller/internal/controllers.(*IngressReconciler).Reconcile\n\tgithub.com/ngrok/kubernetes-ingress-controller/internal/controllers/ingress_controller.go:131\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Reconcile\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:122\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:323\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}

{"level":"error","ts":"2023-07-09T16:15:25Z","msg":"Reconciler error","controller":"ingress","controllerGroup":"networking.k8s.io","controllerKind":"Ingress","Ingress":{"name":"game-2048-ingress","namespace":"ngrok-ingress-controller"},"namespace":"ngrok-ingress-controller","name":"game-2048-ingress","reconcileID":"ae5fa8c0-5cdb-4cc7-ad08-80990eb5b1fa","error":"the server could not find the requested resource (post domains.ingress.k8s.ngrok.com)","stacktrace":"sigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:329\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}

{"level":"info","ts":"2023-07-09T16:15:25Z","logger":"cache-store-driver","msg":"syncing driver state!!"}

{"level":"error","ts":"2023-07-09T16:15:25Z","logger":"cache-store-driver","msg":"error creating domain","domain":{"metadata":{"name":"a2e9fa3711ad-13303926998215737214-ngrok-free-app","namespace":"ngrok-ingress-controller","creationTimestamp":null},"spec":{"domain":"a2e9fa3711ad-13303926998215737214.ngrok-free.app"},"status":{}},"error":"the server could not find the requested resource (post domains.ingress.k8s.ngrok.com)","stacktrace":"github.com/ngrok/kubernetes-ingress-controller/internal/store.(*Driver).Sync\n\tgithub.com/ngrok/kubernetes-ingress-controller/internal/store/driver.go:203\ngithub.com/ngrok/kubernetes-ingress-controller/internal/controllers.(*IngressReconciler).reconcileAll\n\tgithub.com/ngrok/kubernetes-ingress-controller/internal/controllers/ingress_controller.go:153\ngithub.com/ngrok/kubernetes-ingress-controller/internal/controllers.(*IngressReconciler).Reconcile\n\tgithub.com/ngrok/kubernetes-ingress-controller/internal/controllers/ingress_controller.go:131\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Reconcile\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:122\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:323\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:274\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).Start.func2.2\n\tsigs.k8s.io/controller-runtime@v0.14.1/pkg/internal/controller/controller.go:235"}

The ingress controller shows no tunnels

Alternative Container

There is another container, not created by Ngrok, here with some guides to follow. Let’s chase that path…

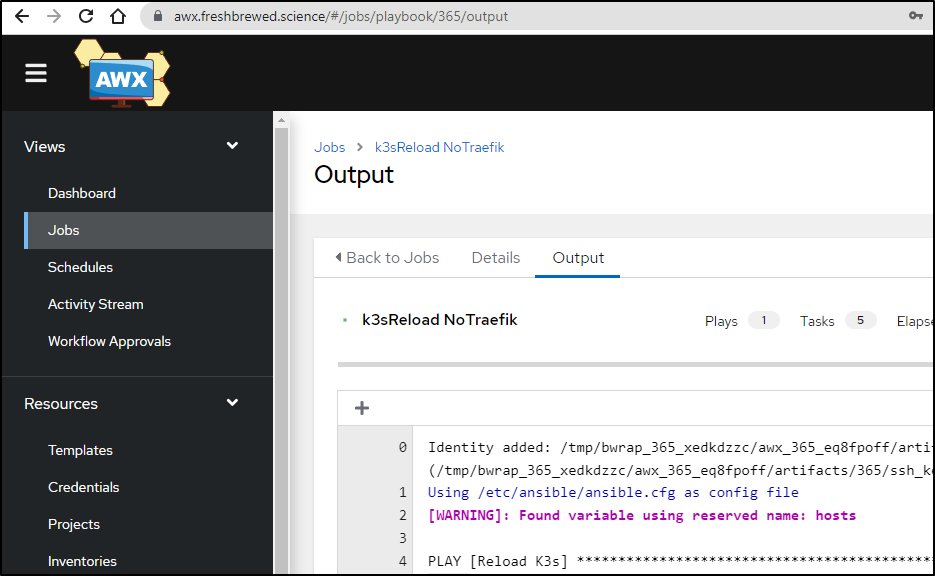

First, I’m going to reset the cluster, as we’ve done a bit too much experimenting here for me to trust its results

$ kubectl get node

NAME STATUS ROLES AGE VERSION

anna-macbookair Ready control-plane,master 2m8s v1.26.4+k3s1

isaac-macbookpro Ready <none> 87s v1.26.4+k3s1

builder-macbookpro2 Ready <none> 85s v1.26.4+k3s1

I tried chasing this guide, but he has since pulled his ‘sp-ngrok’ chart. His more recent endeavors seem to focus on Contour.

I then went to follow this Medium post, but the container crashed because it ships an outdated ngrok agent

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-78cc4c645b-7vc2n 1/1 Running 0 56s

ngrok-5df4cdcf59-8nhkh 0/1 Error 2 (20s ago) 43s

$ kubectl logs ngrok-5df4cdcf59-8nhkh

Your ngrok agent version "2" is no longer supported. Only the most recent version of the ngrok agent is supported without an account.

Update to a newer version with `ngrok update` or by downloading from https://ngrok.com/download.

Sign up for an account to avoid forced version upgrades: https://ngrok.com/signup.

ERR_NGROK_120

That in itself is not a reason to give up. I found the underlying Docker container here, pulled it down and tried a rebuild

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ docker build -t first .

[+] Building 13.9s (12/12) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.50kB 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/alpine:3.12 2.4s

=> [auth] library/alpine:pull token for registry-1.docker.io 0.0s

=> [1/6] FROM docker.io/library/alpine:3.12@sha256:c75ac27b49326926b803b9ed43bf088bc220d22556de1bc5f72d742c91398f69 0.7s

=> => resolve docker.io/library/alpine:3.12@sha256:c75ac27b49326926b803b9ed43bf088bc220d22556de1bc5f72d742c91398f69 0.0s

=> => sha256:cb64bbe7fa613666c234e1090e91427314ee18ec6420e9426cf4e7f314056813 528B / 528B 0.0s

=> => sha256:24c8ece58a1aa807c0d8ea121f91cee2efba99624d0a8aed732155fb31f28993 1.47kB / 1.47kB 0.0s

=> => sha256:1b7ca6aea1ddfe716f3694edb811ab35114db9e93f3ce38d7dab6b4d9270cb0c 2.81MB / 2.81MB 0.2s

=> => sha256:c75ac27b49326926b803b9ed43bf088bc220d22556de1bc5f72d742c91398f69 1.64kB / 1.64kB 0.0s

=> => extracting sha256:1b7ca6aea1ddfe716f3694edb811ab35114db9e93f3ce38d7dab6b4d9270cb0c 0.4s

=> [internal] load build context 0.0s

=> => transferring context: 2.64kB 0.0s

=> [2/6] RUN set -x && apk add --no-cache -t .deps ca-certificates && wget -q -O /etc/apk/keys/sgerrand.rsa.pub https://alpine-pkgs.sgerrand.com/sgerrand.rsa.pub && wget https://github.com/ 3.6s

=> [3/6] RUN set -x && apk add --no-cache curl && APKARCH="$(apk --print-arch)" && case "$APKARCH" in armhf) NGROKARCH="arm" ;; armv7) NGROKARCH="arm" ;; armel) NGRO 3.6s

=> [4/6] COPY --chown=ngrok ngrok.yml /home/ngrok/.ngrok2/ 0.0s

=> [5/6] COPY entrypoint.sh / 0.0s

=> [6/6] RUN ngrok --version 0.7s

=> exporting to image 0.2s

=> => exporting layers 0.2s

=> => writing image sha256:38d82941dc7541daf68ea0d6af4732bc979dc909a8fac5625dfbdbc099e69191 0.0s

=> => naming to docker.io/library/first

I then pushed that up to Docker Hub for myself and others

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ docker push idjohnson/ngrok

Using default tag: latest

The push refers to repository [docker.io/idjohnson/ngrok]

c88ae2eef980: Pushed

5a5b6eeb1940: Pushed

01f5ff12136b: Pushed

1614170bab25: Pushed

156aa3bae40b: Pushed

1ad27bdd166b: Mounted from library/alpine

latest: digest: sha256:86c7f3d94ba60959280fdc163c3d7039ab780a8389001c3f344c11cbd5c3ba49 size: 1571

I then edited the deployment to use my container

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 8m30s

ngrok 0/1 1 0 8m17s

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl edit deployment ngrok

deployment.apps/ngrok edited

Hmm... even after deleting the pod, it still does not like the version

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-78cc4c645b-7vc2n 1/1 Running 0 9m42s

ngrok-79f54b8567-9f9tc 0/1 ContainerCreating 0 10s

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-78cc4c645b-7vc2n 1/1 Running 0 10m

ngrok-79f54b8567-9f9tc 1/1 Running 3 (31s ago) 67s

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl logs ngrok-79f54b8567-9f9tc

Your ngrok agent version "2" is no longer supported. Only the most recent version of the ngrok agent is supported without an account.

Update to a newer version with `ngrok update` or by downloading from https://ngrok.com/download.

Sign up for an account to avoid forced version upgrades: https://ngrok.com/signup.

ERR_NGROK_120

I reworked the Dockerfile a bit so that it downloads the v3 agent tarball instead of the old v2 zip:

$ git diff Dockerfile

diff --git a/Dockerfile b/Dockerfile

index de15851..ddf75ad 100644

--- a/Dockerfile

+++ b/Dockerfile

@@ -25,9 +25,11 @@ RUN set -x \

x86) NGROKARCH="386" ;; \

x86_64) NGROKARCH="amd64" ;; \

esac \

- && curl -Lo /ngrok.zip https://bin.equinox.io/c/4VmDzA7iaHb/ngrok-stable-linux-$NGROKARCH.zip \

- && unzip -o /ngrok.zip -d /bin \

- && rm -f /ngrok.zip \

+ && curl -Lo /ngrok.tgz https://bin.equinox.io/c/bNyj1mQVY4c/ngrok-v3-stable-linux-$NGROKARCH.tgz \

+ && tar -xzf /ngrok.tgz \

+ && mv /ngrok /bin \

+ && chmod 755 /bin/ngrok \

+ && rm -f /ngrok.tgz \

# Create non-root user.

&& adduser -h /home/ngrok -D -u 6737 ngrok
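The substance of that change is the download URL: the Dockerfile maps the Alpine architecture to ngrok's naming and now fetches the v3 tarball. A small Python sketch of the same mapping follows, for illustration only. The function name `ngrok_v3_url` is hypothetical, and only the architectures legible in the build log and diff above are included.

```python
# Architecture mapping as far as it is visible in the build log and diff
# above; anything else is intentionally left unmapped in this sketch.
APK_TO_NGROK_ARCH = {
    "armhf": "arm",
    "armv7": "arm",
    "x86": "386",
    "x86_64": "amd64",
}

# URL pattern taken from the updated Dockerfile line.
V3_URL = "https://bin.equinox.io/c/bNyj1mQVY4c/ngrok-v3-stable-linux-{arch}.tgz"


def ngrok_v3_url(apk_arch: str) -> str:
    """Return the v3 tarball URL for an `apk --print-arch` value."""
    try:
        arch = APK_TO_NGROK_ARCH[apk_arch]
    except KeyError:
        raise ValueError(f"no mapping known for architecture {apk_arch!r}") from None
    return V3_URL.format(arch=arch)


print(ngrok_v3_url("x86_64"))
```

Unknown architectures raise an error rather than guessing, since the full case statement is not shown here.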

I was having some machine issues with tags, so I pushed the update as ‘ngrok2’ and then edited my deployment

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ docker push idjohnson/ngrok2

Using default tag: latest

The push refers to repository [docker.io/idjohnson/ngrok2]

66505da594fa: Pushed

5a5b6eeb1940: Mounted from idjohnson/ngrok

931d31bd850a: Pushed

0daed3bf0e40: Pushed

156aa3bae40b: Mounted from idjohnson/ngrok

1ad27bdd166b: Mounted from idjohnson/ngrok

latest: digest: sha256:f5b11bcf108765de09b5566f24f0f051de6613cb17bd82079e7874bfa98d7b4b size: 1570

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl edit deployment ngrok

deployment.apps/ngrok edited

Looks like there is more work to do. It seems the old version (v2) just created a tunnel, while the new one (v3) needs an updated config file

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-78cc4c645b-7vc2n 1/1 Running 0 30m

ngrok-5879d66d9c-d849m 0/1 CrashLoopBackOff 2 (18s ago) 47s

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl logs ngrok-5879d66d9c-d849m

NAME:

http - start an HTTP tunnel

USAGE:

ngrok http [address:port | port] [flags]

DESCRIPTION:

Starts a tunnel listening for HTTP/HTTPS traffic with a specific hostname.

The HTTP Host header on incoming public requests is inspected to

determine which tunnel it matches.

HTTPS endpoints terminate TLS traffic at the ngrok server using the

appropriate certificates. The decrypted, HTTP traffic is then forwarded

through the secure tunnel and then to your local server. If you don't want

your TLS traffic to terminate at the ngrok server, use a TLS or TCP tunnel.

TERMS OF SERVICE: https://ngrok.com/tos

EXAMPLES:

ngrok http 8080 # forward ngrok subdomain to port 80

ngrok http example.com:9000 # forward traffic to example.com:9000

ngrok http --domain=bar.ngrok.dev 80 # request subdomain name: 'bar.ngrok.dev'

ngrok http --domain=example.com 1234 # request tunnel 'example.com' (DNS CNAME)

ngrok http --basic-auth='falken:joshua' 80 # enforce basic auth on tunnel endpoint

ngrok http --host-header=example.com 80 # rewrite the Host header to 'example.com'

ngrok http file:///var/log # serve local files in /var/log

ngrok http https://localhost:8443 # forward to a local https server

OPTIONS:

--authtoken string ngrok.com authtoken identifying a user

--basic-auth strings enforce basic auth on tunnel endpoint, 'user:password'

--cidr-allow strings reject connections that do not match the given CIDRs

--cidr-deny strings reject connections that match the given CIDRs

--circuit-breaker float reject requests when 5XX responses exceed this ratio

--compression gzip compress http responses from your web service

--config strings path to config files; they are merged if multiple

--domain string host tunnel on a custom subdomain or hostname (requires DNS CNAME)

-h, --help help for http

--host-header string set Host header; if 'rewrite' use local address hostname

--inspect enable/disable http introspection (default true)

--log string path to log file, 'stdout', 'stderr' or 'false' (default "false")

--log-format string log record format: 'term', 'logfmt', 'json' (default "term")

--log-level string logging level: 'debug', 'info', 'warn', 'error', 'crit' (default "info")

--mutual-tls-cas string path to TLS certificate authority to verify client certs in mutual tls

--oauth string enforce authentication oauth provider on tunnel endpoint, e.g. 'google'

--oauth-allow-domain strings allow only oauth users with these email domains

--oauth-allow-email strings allow only oauth users with these emails

--oauth-client-id string oauth app client id, optional

--oauth-client-secret string oauth app client secret, optional

--oauth-scope strings request these oauth scopes when users authenticate

ERROR: Error reading configuration file '/home/ngrok/.ngrok2/ngrok.yml': `version` property is required.

--oidc string oidc issuer url, e.g. https://accounts.google.com

--oidc-client-id string oidc app client id

--oidc-client-secret string oidc app client secret

--oidc-scope strings request these oidc scopes when users authenticate

--proxy-proto string version of proxy proto to use with this tunnel, empty if not using

--region string ngrok server region [us, eu, au, ap, sa, jp, in] (default "us")

--request-header-add strings header key:value to add to request

--request-header-remove strings header field to remove from request if present

--response-header-add strings header key:value to add to response

--response-header-remove strings header field to remove from response if present

--scheme strings which schemes to listen on (default [https])

--verify-webhook string validate webhooks are signed by this provider, e.g. 'slack'

--verify-webhook-secret string secret used by provider to sign webhooks, if any

--websocket-tcp-converter convert ingress websocket connections to TCP upstream

ERROR:

ERROR: If you're upgrading from an older version of ngrok, you can run:

ERROR:

ERROR: ngrok config upgrade

ERROR:

ERROR: to upgrade to the new format and add the version number.

I used an ephemeral container to convert the config YAML

root@nginx-78cc4c645b-7vc2n:/# echo "web_addr: 0.0.0.0:4040" > /root/.config/ngrok/ngrok.yml

root@nginx-78cc4c645b-7vc2n:/# ./ngrok config upgrade

Upgraded config file: '/root/.config/ngrok/ngrok.yml'

Applied changes:

- Added 'version: 2'

- Added 'region: us'

- Created a backup at: /root/.config/ngrok/ngrok.yml.v1.bak

---------------

web_addr: 0.0.0.0:4040

version: "2"

region: us

root@nginx-78cc4c645b-7vc2n:/# cat /root/.config/ngrok/ngrok.yml

web_addr: 0.0.0.0:4040

version: "2"

region: us

# set locally

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ cat ngrok.yml

web_addr: 0.0.0.0:4040

version: "2"

region: us
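For a flat config like this one, the upgrade only appended two keys. As a rough sketch of that transformation (a simplified stand-in, not what `ngrok config upgrade` actually does internally), the following Python adds `version` and `region` when they are missing. The function name `upgrade_config` is hypothetical and nested YAML is not handled.

```python
def upgrade_config(text: str, region: str = "us") -> str:
    """Append version and region keys if a flat config lacks them."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    # Only look at top-level keys (lines that do not start with whitespace).
    keys = {
        ln.split(":", 1)[0].strip()
        for ln in lines
        if ":" in ln and not ln[0].isspace()
    }
    if "version" not in keys:
        lines.append('version: "2"')
    if "region" not in keys:
        lines.append(f"region: {region}")
    return "\n".join(lines) + "\n"


print(upgrade_config("web_addr: 0.0.0.0:4040\n"), end="")
```

Running it twice leaves the file unchanged, which makes it safe to apply before every image build.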

Now I can rebuild and push a tag

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ docker build -t third .

[+] Building 2.0s (12/12) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 38B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/alpine:3.12 1.4s

=> [auth] library/alpine:pull token for registry-1.docker.io 0.0s

=> [1/6] FROM docker.io/library/alpine:3.12@sha256:c75ac27b49326926b803b9ed43bf088bc220d22556de1bc5f72d742c91398 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 120B 0.0s

=> CACHED [2/6] RUN set -x && apk add --no-cache -t .deps ca-certificates && wget -q -O /etc/apk/keys/sgerrand 0.0s

=> CACHED [3/6] RUN set -x && apk add --no-cache curl && APKARCH="$(apk --print-arch)" && case "$APKARCH" in 0.0s

=> [4/6] COPY --chown=ngrok ngrok.yml /home/ngrok/.ngrok2/ 0.0s

=> [5/6] COPY entrypoint.sh / 0.0s

=> [6/6] RUN ngrok --version 0.4s

=> exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:b49f4300ab6bbe52ec35b122fc9b79883c1f58b83d506c824701cc94c9ea2b9f 0.0s

=> => naming to docker.io/library/third 0.0s

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ docker tag third idjohnson/ngrok:v3

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ docker push idjohnson/ngrok:v3

The push refers to repository [docker.io/idjohnson/ngrok]

2c75f3c862c4: Pushed

5a5b6eeb1940: Layer already exists

d67857a1d16b: Pushed

0daed3bf0e40: Mounted from idjohnson/ngrok2

156aa3bae40b: Layer already exists

1ad27bdd166b: Layer already exists

v3: digest: sha256:d303e2036d4b16f15c3eec92a16b584f171ce198a1f058dbb9c96d0f494e96ed size: 1570

Then I edit my deployment and see that this new image works

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl edit deployment ngrok

deployment.apps/ngrok edited

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-78cc4c645b-7vc2n 1/1 Running 0 73m

ngrok-9cff47d5b-6zsnl 1/1 Running 0 2m5s

I can now extract the Ngrok URL from the container

$ kubectl exec $(kubectl get pods -l=app=ngrok -o=jsonpath='{.items[0].metadata.name}') -- curl http://localhost:4040/api/tunnels | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 445 100 445 0 0 420k 0 --:--:-- --:--:-- --:--:-- 434k

{
  "tunnels": [
    {
      "name": "command_line",
      "ID": "6b91b4ce657470bdb0bd567bee4f9229",
      "uri": "/api/tunnels/command_line",
      "public_url": "https://a0da-73-242-50-46.ngrok.io",
      "proto": "https",
      "config": {
        "addr": "http://nginx-service:80",
        "inspect": true
      },
      "metrics": {
        "conns": {
          "count": 0,
          "gauge": 0,
          "rate1": 0,
          "rate5": 0,
          "rate15": 0,
          "p50": 0,
          "p90": 0,
          "p95": 0,
          "p99": 0
        },
        "http": {
          "count": 0,
          "rate1": 0,
          "rate5": 0,
          "rate15": 0,
          "p50": 0,
          "p90": 0,
          "p95": 0,
          "p99": 0
        }
      }
    }
  ],
  "uri": "/api/tunnels"
}
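Since the only field I really need from that response is `public_url`, here is a minimal Python sketch that extracts it. The function name `public_urls` is hypothetical, and the sample is a trimmed copy of the response above with the metrics removed.

```python
import json


def public_urls(tunnels_json: str) -> list:
    """Return the public URL of every tunnel in an /api/tunnels response."""
    data = json.loads(tunnels_json)
    return [tunnel["public_url"] for tunnel in data.get("tunnels", [])]


# Trimmed copy of the response shown above.
sample = json.dumps({
    "tunnels": [
        {
            "name": "command_line",
            "public_url": "https://a0da-73-242-50-46.ngrok.io",
            "proto": "https",
            "config": {"addr": "http://nginx-service:80", "inspect": True},
        }
    ],
    "uri": "/api/tunnels",
})

print(public_urls(sample))
```

The same function could read the output of the `kubectl exec ... curl` command from a pipe instead of the inline sample.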

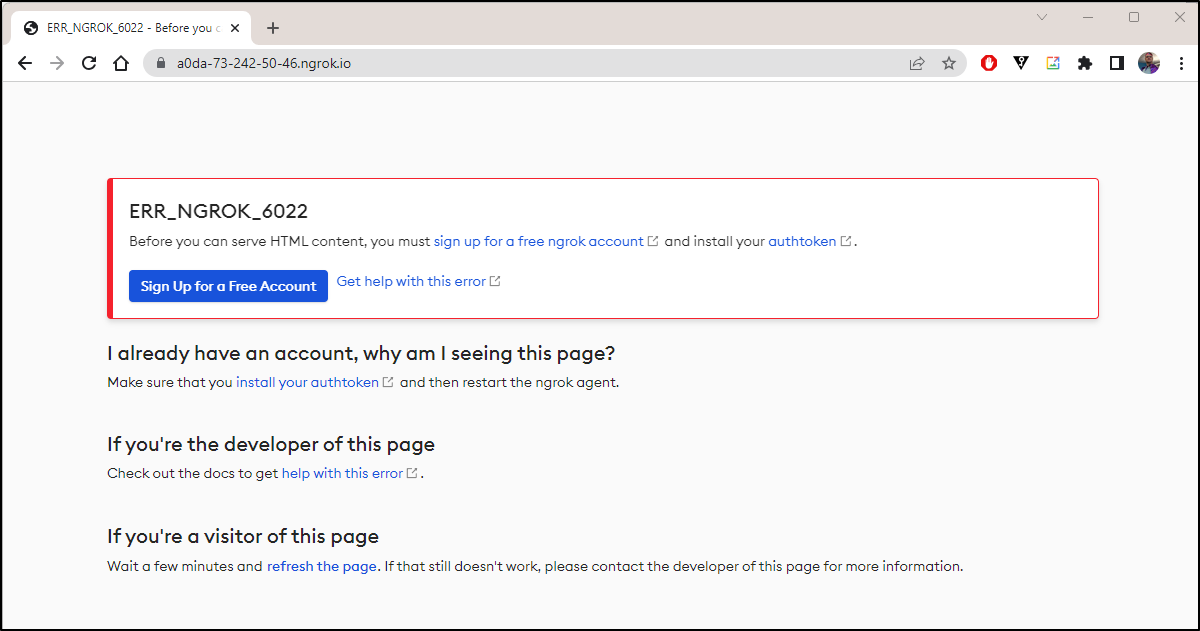

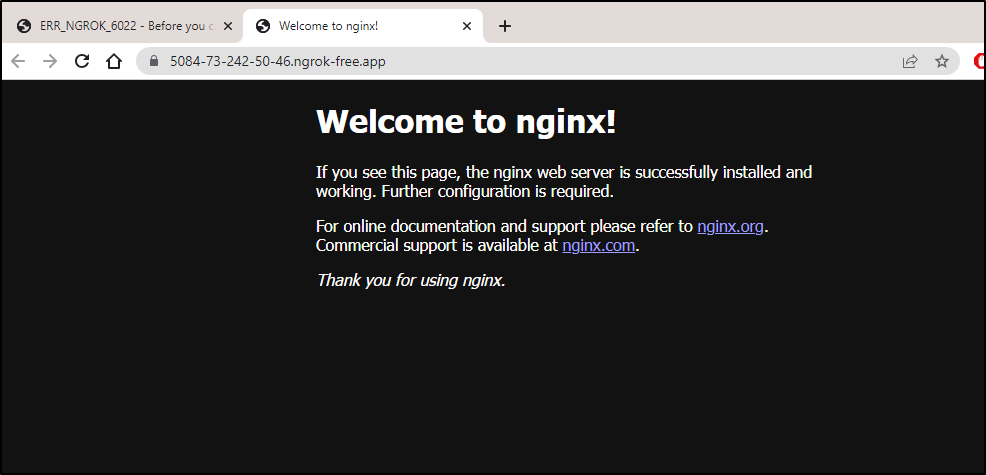

I did not record it, but I swear that for a moment I saw the Nginx page before it redirected to a “6022” signup page

I then edited my deployment one more time. I did not plan to bake my token into the image, so I passed it in as an environment variable instead

$ kubectl edit deployment ngrok

deployment.apps/ngrok edited

# change:

spec:
  containers:
  - args:
    - http
    - nginx-service
    command:
    - ngrok
    env:
    - name: NGROK_AUTHTOKEN
      value: 2asdfasfasdfasdfsadfasdfasdfasdf3
    image: idjohnson/ngrok:v3
    imagePullPolicy: Always
    name: ngrok
    ports:
    - containerPort: 4040
      protocol: TCP
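Putting the token straight into `value` keeps it readable in the deployment spec. One possible alternative, which I did not use here, is to reference a Kubernetes Secret through `valueFrom.secretKeyRef`. The Python sketch below builds such a container spec as JSON (which `kubectl` also accepts). The Secret name `ngrok-authtoken` and key `token` are assumptions, and the Secret would have to be created separately.

```python
import json


def ngrok_container(target: str,
                    secret_name: str = "ngrok-authtoken",  # hypothetical Secret name
                    secret_key: str = "token") -> dict:    # hypothetical key in that Secret
    """Build the ngrok container spec with the token read from a Secret."""
    return {
        "name": "ngrok",
        "image": "idjohnson/ngrok:v3",
        "imagePullPolicy": "Always",
        "command": ["ngrok"],
        "args": ["http", target],
        "env": [{
            "name": "NGROK_AUTHTOKEN",
            # Reference the Secret instead of embedding the token value.
            "valueFrom": {"secretKeyRef": {"name": secret_name, "key": secret_key}},
        }],
        "ports": [{"containerPort": 4040, "protocol": "TCP"}],
    }


print(json.dumps(ngrok_container("nginx-service"), indent=2))
```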

I see a new pod and new URL

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-78cc4c645b-7vc2n 1/1 Running 0 102m

ngrok-8595966d8d-bwzgz 1/1 Running 0 91s

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl exec $(kubectl get pods -l=app=ngrok -o=jsonpath='{.items[0].metadata.name}') -- curl http://localhost:4040/api/tunnels | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 451 100 451 0 0 227k 0 --:--:-- --:--:-- --:--:-- 440k

{
  "tunnels": [
    {
      "name": "command_line",
      "ID": "b7d9c452361075d87f12b3da24c3fb46",
      "uri": "/api/tunnels/command_line",
      "public_url": "https://5084-73-242-50-46.ngrok-free.app",
      "proto": "https",
      "config": {
        "addr": "http://nginx-service:80",
        "inspect": true
      },
      "metrics": {
        "conns": {
          "count": 0,
          "gauge": 0,
          "rate1": 0,
          "rate5": 0,
          "rate15": 0,
          "p50": 0,
          "p90": 0,
          "p95": 0,
          "p99": 0
        },
        "http": {
          "count": 0,
          "rate1": 0,
          "rate5": 0,
          "rate15": 0,
          "p50": 0,
          "p90": 0,
          "p95": 0,
          "p99": 0
        }
      }
    }
  ],
  "uri": "/api/tunnels"
}

And this time it works! https://5084-73-242-50-46.ngrok-free.app/

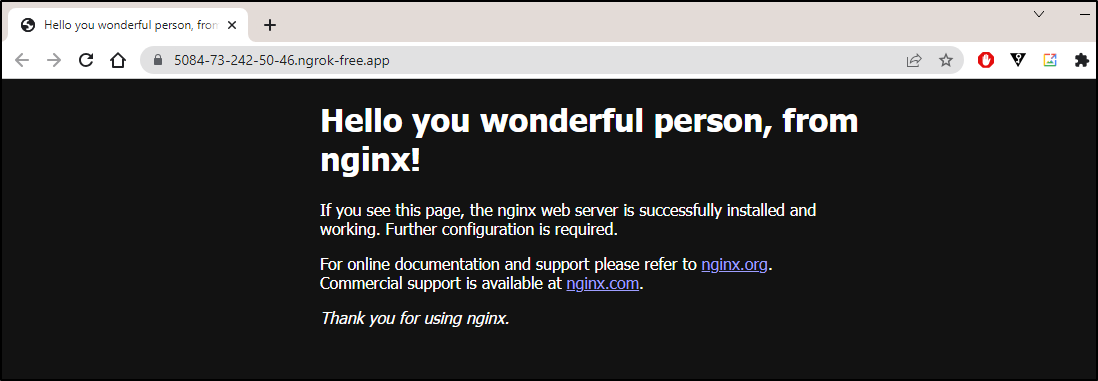

And just to double check it is working, I’ll hop into the Nginx pod and change the hello page

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl exec -it nginx-78cc4c645b-7vc2n -- /bin/bash

root@nginx-78cc4c645b-7vc2n:/# cat /usr/share/nginx/html

/index.html

cat: /usr/share/nginx/html: Is a directory

bash: /index.html: No such file or directory

root@nginx-78cc4c645b-7vc2n:/# cat /usr/share/nginx/html/index.html

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

root@nginx-78cc4c645b-7vc2n:/# sed -i 's/Welcome to/Hello you wonderful person, from/g' /usr/share/nginx/html/index.html

root@nginx-78cc4c645b-7vc2n:/#

And see it works

This works because the deployment YAML launches ngrok with a command and arguments that expose the nginx-service:

spec:
  containers:
  - args:
    - http
    - nginx-service
    command:
    - ngrok

I can see that as well if I hop into the Ngrok container

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl exec -it ngrok-8595966d8d-bwzgz -- /bin/sh

/ $ ps -ef

PID USER TIME COMMAND

1 ngrok 0:07 ngrok http nginx-service

28 ngrok 0:00 /bin/sh

36 ngrok 0:00 ps -ef

/ $

How might that work with the Azure Vote App?

Testing with Azure Vote

I’ll install as I had before

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ helm install azure-samples/azure-vote --generate-name

NAME: azure-vote-1688994153

LAST DEPLOYED: Mon Jul 10 08:02:33 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Azure Vote application has been started on your Kubernetes cluster.

Title: Azure Vote App

Vote 1 value: Cats

Vote 2 value: Dogs

The externally accessible IP address can take a minute or so to provision. Run the following command to monitor the provisioning status. Once an External IP address has been provisioned, brows to this IP address to access the Azure Vote application.

kubectl get service -l name=azure-vote-front -w

I can see the service come up

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 130m

nginx-service ClusterIP 10.43.173.42 <none> 80/TCP 119m

vote-back-azure-vote-1688994153 ClusterIP 10.43.133.213 <none> 6379/TCP 56s

azure-vote-front LoadBalancer 10.43.60.235 192.168.1.13,192.168.1.159,192.168.1.206 80:30097/TCP 56s

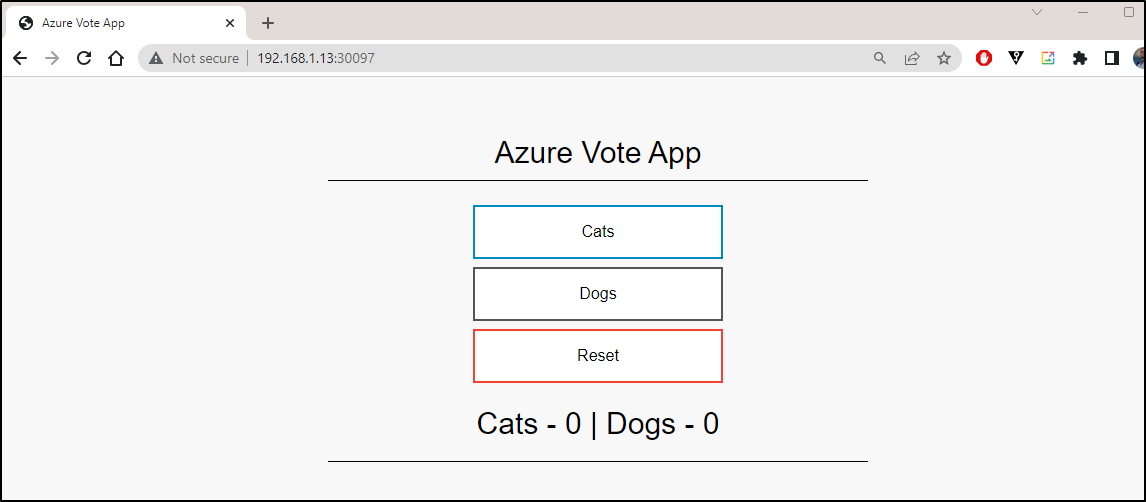

and test on the NodePort

I’ll now change my Ngrok deployment from the nginx service to this azure-vote-front one

spec:
  containers:
  - args:
    - http
    - azure-vote-front
    command:
    - ngrok
    env:
    - name: NGROK_AUTHTOKEN

This will necessarily bounce the pod

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl edit deployment ngrok

deployment.apps/ngrok edited

builder@DESKTOP-QADGF36:~/Workspaces/docker-ngrok$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-78cc4c645b-7vc2n 1/1 Running 0 121m

vote-back-azure-vote-1688994153-7b76fb69b9-xxzgc 1/1 Running 0 3m16s

vote-front-azure-vote-1688994153-6fdc76bdd9-hbhg7 1/1 Running 0 3m16s

ngrok-669dd5fdd8-lxdkg 1/1 Running 0 4s

ngrok-8595966d8d-bwzgz 1/1 Terminating 0 20m
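Editing the deployment by hand works, but the same retargeting could also be expressed as a JSON patch. A minimal Python sketch that generates one, assuming (as in the deployment shown) that ngrok is the first container and the service name is its second argument. The function name `retarget_patch` is hypothetical.

```python
import json


def retarget_patch(service: str, container_index: int = 0) -> str:
    """Build a JSON patch that swaps the service ngrok forwards to."""
    # args[0] is "http"; args[1] is the service name to expose.
    path = f"/spec/template/spec/containers/{container_index}/args/1"
    return json.dumps([{"op": "replace", "path": path, "value": service}])


print(retarget_patch("azure-vote-front"))
```

The printed string could then be handed to `kubectl patch deployment ngrok --type=json -p '<patch>'`, which bounces the pod just as the manual edit does.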

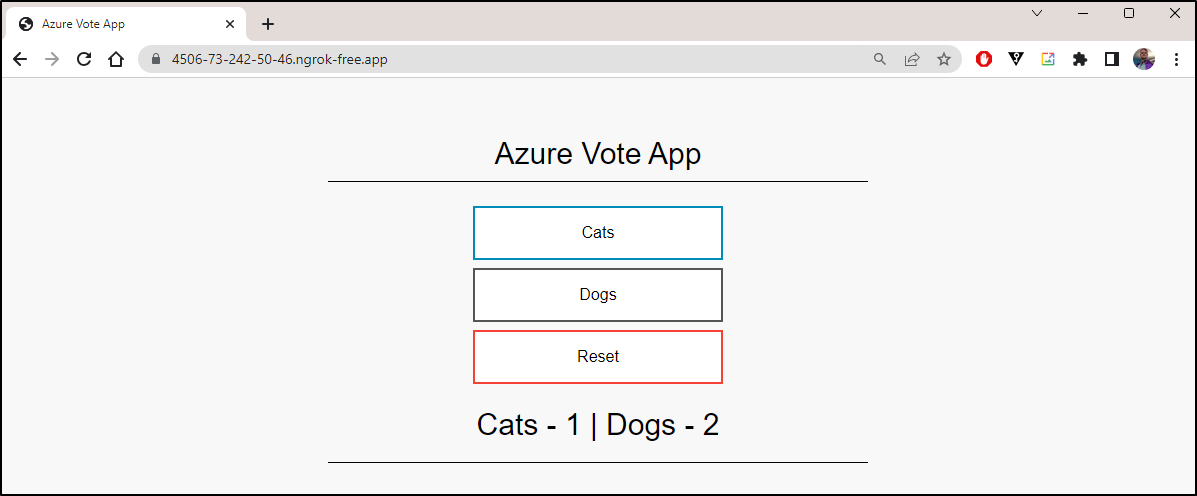

And it gives me a new dynamic DNS name

$ kubectl exec $(kubectl get pods -l=app=ngrok -o=jsonpath='{.items[0].metadata.name}') -- curl http://localhost:4040/api/tunnels | jq

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 454 100 454 0 0 308k 0 --:--:-- --:--:-- --:--:-- 443k

{
  "tunnels": [
    {
      "name": "command_line",
      "ID": "49511d21b271fbdd35dea454bf0ebb10",
      "uri": "/api/tunnels/command_line",
      "public_url": "https://4506-73-242-50-46.ngrok-free.app",
      "proto": "https",
      "config": {
        "addr": "http://azure-vote-front:80",
        "inspect": true
      },
      "metrics": {
        "conns": {
          "count": 0,
          "gauge": 0,
          "rate1": 0,
          "rate5": 0,
          "rate15": 0,
          "p50": 0,
          "p90": 0,
          "p95": 0,
          "p99": 0
        },
        "http": {
          "count": 0,
          "rate1": 0,
          "rate5": 0,
          "rate15": 0,
          "p50": 0,
          "p90": 0,
          "p95": 0,
          "p99": 0
        }
      }
    }
  ],
  "uri": "/api/tunnels"
}

Which works just dandy!

Summary

I really tried to make the official Ngrok Ingress Controller work, short of paying for it, that is. I can get the direct tunnel to work just fine (as I spoke to in March).

Updating the Container from Werner Beroux did, however, work (and yes, I did submit a PR back for their consideration).