Published: Apr 27, 2023 by Isaac Johnson

In the last post, we focused on setting up a simple SigNoz system in the test cluster and trying Slack alert channels. Today, we’ll launch SigNoz in the main on-prem cluster, configure Ingress with proper DNS and TLS, and set up alerts. For alerts, we’ll configure and test PagerDuty, as well as webhooks by way of Zapier and Make.

Setting up Production on-prem

First, I need to check my default storage class:

$ kubectl get sc

NAME                          PROVISIONER                                       RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
nfs                           cluster.local/nfs-server-provisioner-1658802767   Delete          Immediate              true                   274d
managed-nfs-storage (default) fuseim.pri/ifs                                    Delete          Immediate              true                   274d
local-path (default)          rancher.io/local-path                             Delete          WaitForFirstConsumer   false                  274d

That’s a problem: there should only be one default storage class.
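If you’d rather script this check than eyeball the table, here is a minimal Python sketch (standard library only) that reads the output of "kubectl get storageclass -o json" and lists every class marked as default. It assumes the standard is-default-class annotation plus its older beta form; the function name is hypothetical.

```python
import json

# Kubernetes honors both the GA annotation and the older beta one
DEFAULT_ANNOTATIONS = (
    "storageclass.kubernetes.io/is-default-class",
    "storageclass.beta.kubernetes.io/is-default-class",
)


def default_storage_classes(sc_list_json: str) -> list:
    """Return the names of StorageClasses annotated as the cluster default.

    sc_list_json is the text printed by: kubectl get storageclass -o json
    """
    data = json.loads(sc_list_json)
    defaults = []
    for item in data.get("items", []):
        metadata = item.get("metadata", {})
        annotations = metadata.get("annotations") or {}
        # Only the exact string "true" marks a class as the default
        if any(annotations.get(key) == "true" for key in DEFAULT_ANNOTATIONS):
            defaults.append(metadata["name"])
    return defaults
```

Piping the kubectl JSON into this and getting back more than one name is the same situation as the table above.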

Let’s remove the default flag from local-path:

$ kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'

storageclass.storage.k8s.io/local-path patched

$ kubectl get sc

NAME                          PROVISIONER                                       RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
nfs                           cluster.local/nfs-server-provisioner-1658802767   Delete          Immediate              true                   274d
managed-nfs-storage (default) fuseim.pri/ifs                                    Delete          Immediate              true                   274d
local-path                    rancher.io/local-path                             Delete          WaitForFirstConsumer   false                  274d

Next, I’ll double-check that volume expansion is enabled on the default class:

$ DEFAULT_STORAGE_CLASS=$(kubectl get storageclass -o=jsonpath='{.items[?(@.metadata.annotations.storageclass\.kubernetes\.io/is-default-class=="true")].metadata.name}')

$ echo $DEFAULT_STORAGE_CLASS

managed-nfs-storage

$ kubectl patch storageclass "$DEFAULT_STORAGE_CLASS" -p '{"allowVolumeExpansion": true}'

storageclass.storage.k8s.io/managed-nfs-storage patched (no change)
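Hand-quoting JSON inside "kubectl patch -p" is easy to get wrong. A minimal sketch, assuming Python 3 and only the standard library, that builds the same two patch bodies used above and shell-quotes them into ready-to-paste commands; the helper names are hypothetical.

```python
import json
import shlex

DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_flag_patch(is_default: bool) -> str:
    # Annotation values must be strings, so the boolean becomes "true"/"false"
    value = "true" if is_default else "false"
    return json.dumps({"metadata": {"annotations": {DEFAULT_ANNOTATION: value}}})


def expansion_patch() -> str:
    # allowVolumeExpansion is a top-level boolean field on a StorageClass
    return json.dumps({"allowVolumeExpansion": True})


def kubectl_patch_cmd(storage_class: str, patch: str) -> str:
    # shlex.quote keeps the JSON intact when pasted into a POSIX shell
    return " ".join(
        ["kubectl", "patch", "storageclass", shlex.quote(storage_class),
         "-p", shlex.quote(patch)]
    )


print(kubectl_patch_cmd("local-path", default_flag_patch(False)))
print(kubectl_patch_cmd("managed-nfs-storage", expansion_patch()))
```

Generating the body with json.dumps means a missing brace or quote cannot slip in, and shlex.quote handles the single-quote wrapping.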

Next, I’ll add the SigNoz Helm repo and update my repos:

$ helm repo add signoz https://charts.signoz.io

"signoz" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "metallb" chart repository

...Successfully got an update from the "c7n" chart repository

...Successfully got an update from the "longhorn" chart repository

...Successfully got an update from the "cribl" chart repository

...Successfully got an update from the "actions-runner-controller" chart repository

...Successfully got an update from the "adwerx" chart repository

...Successfully got an update from the "lifen-charts" chart repository

...Successfully got an update from the "novum-rgi-helm" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "ingress-nginx" chart repository

...Successfully got an update from the "signoz" chart repository

...Successfully got an update from the "rook-release" chart repository

...Successfully got an update from the "kubecost" chart repository

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "istio" chart repository

...Successfully got an update from the "rancher-stable" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "jenkins" chart repository

...Successfully got an update from the "argo-cd" chart repository

...Successfully got an update from the "gitlab" chart repository

...Successfully got an update from the "grafana" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

I’ll now install with Helm. I could set up Ingress here as well, but I’ll handle that separately:

$ helm --namespace platform install signoz-release signoz/signoz --create-namespace

coalesce.go:162: warning: skipped value for initContainers: Not a table.

NAME: signoz-release

LAST DEPLOYED: Wed Apr 26 18:11:26 2023

NAMESPACE: platform

STATUS: deployed

REVISION: 1

NOTES:

1. You have just deployed SigNoz cluster:

- frontend version: '0.18.3'

- query-service version: '0.18.3'

- alertmanager version: '0.23.0-0.2'

- otel-collector version: '0.66.7'

- otel-collector-metrics version: '0.66.7'

2. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace platform -l "app.kubernetes.io/name=signoz,app.kubernetes.io/instance=signoz-release,app.kubernetes.io/component=frontend" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:3301 to use your application"

kubectl --namespace platform port-forward $POD_NAME 3301:3301

If you have any ideas, questions, or any feedback, please share on our Github Discussions:

https://github.com/SigNoz/signoz/discussions/713

I expect it will take a few minutes on a new cluster:

$ kubectl get pods -n platform

NAME                                                        READY   STATUS              RESTARTS   AGE
signoz-release-k8s-infra-otel-agent-ljncc                   0/1     Running             0          18s
signoz-release-zookeeper-0                                  0/1     ContainerCreating   0          18s
signoz-release-clickhouse-operator-5c446459c8-hlr5t         0/2     ContainerCreating   0          18s
signoz-release-k8s-infra-otel-agent-4w76d                   0/1     Running             0          17s
signoz-release-otel-collector-metrics-6995ffdbcd-9cjwl      0/1     Init:0/1            0          18s
signoz-release-otel-collector-8446ff94db-plqgb              0/1     Init:0/1            0          18s
signoz-release-frontend-574f4c7f5-vgtbx                     0/1     Init:0/1            0          18s
signoz-release-query-service-0                              0/1     Init:0/1            0          18s
signoz-release-alertmanager-0                               0/1     Init:0/1            0          18s
signoz-release-k8s-infra-otel-deployment-577d4bc7f8-k5tlv   0/1     Running             0          18s
signoz-release-k8s-infra-otel-agent-qjp22                   0/1     Running             0          17s
signoz-release-k8s-infra-otel-agent-sgpq5                   0/1     Running             0          17s

$ kubectl get pods -n platform

NAME                                                        READY   STATUS              RESTARTS   AGE
signoz-release-k8s-infra-otel-agent-4w76d                   1/1     Running             0          4m55s
signoz-release-k8s-infra-otel-agent-ljncc                   1/1     Running             0          4m56s
signoz-release-clickhouse-operator-5c446459c8-hlr5t         2/2     Running             0          4m56s
signoz-release-k8s-infra-otel-agent-qjp22                   1/1     Running             0          4m55s
signoz-release-k8s-infra-otel-deployment-577d4bc7f8-k5tlv   1/1     Running             0          4m56s
signoz-release-zookeeper-0                                  1/1     Running             0          4m56s
signoz-release-k8s-infra-otel-agent-sgpq5                   1/1     Running             0          4m55s
chi-signoz-release-clickhouse-cluster-0-0-0                 1/1     Running             0          4m20s
signoz-release-query-service-0                              1/1     Running             0          4m56s
signoz-release-otel-collector-metrics-6995ffdbcd-9cjwl      1/1     Running             0          4m56s
signoz-release-otel-collector-8446ff94db-plqgb              1/1     Running             0          4m56s
signoz-release-frontend-574f4c7f5-vgtbx                     1/1     Running             0          4m56s
signoz-release-alertmanager-0                               1/1     Running             0          4m56s
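Rather than re-running "kubectl get pods" until everything shows ready, the Ready condition can be checked programmatically. A minimal sketch that takes the output of "kubectl get pods -n platform -o json" and returns the pods that are not ready yet; the function name is hypothetical.

```python
import json


def unready_pods(pod_list_json: str) -> list:
    """Return names of pods whose Ready condition is not "True".

    pod_list_json is the text printed by: kubectl get pods -n platform -o json
    """
    data = json.loads(pod_list_json)
    waiting = []
    for pod in data.get("items", []):
        conditions = pod.get("status", {}).get("conditions") or []
        # A pod counts as ready only when its Ready condition reports "True";
        # pods still in Init or ContainerCreating report "False" or no condition
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            waiting.append(pod["metadata"]["name"])
    return waiting
```

kubectl can also block on the same condition directly, e.g. "kubectl wait --for=condition=Ready pods --all -n platform --timeout=10m". Either way, note that pods from completed Jobs never become Ready, so they would need to be filtered out if the chart ever creates any.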

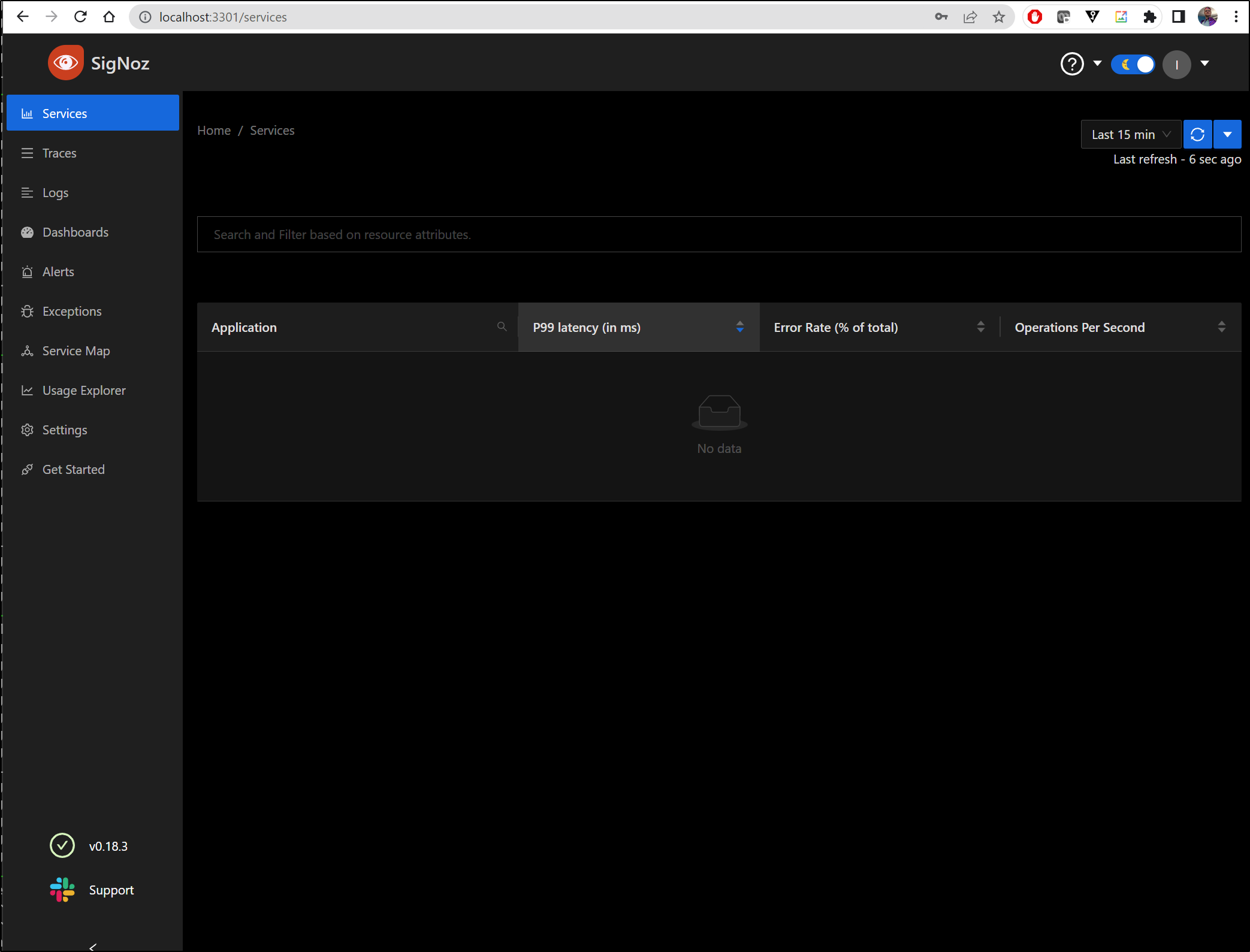

I can now port-forward to the frontend and sign in with my admin account:

$ kubectl --namespace platform port-forward svc/`kubectl get svc --namespace platform -l "app.kubernetes.io/component=frontend" -o jsonpath="{.items[0].metadata.name}"` 3301:3301

Forwarding from 127.0.0.1:3301 -> 3301

Forwarding from [::1]:3301 -> 3301

Handling connection for 3301

Handling connection for 3301

Let’s add an Ingress next. First, I’ll create an A record in Route53 for the hostname:

$ cat r53-signoz.json

{
  "Comment": "CREATE signoz fb.s A record ",
  "Changes": [
    {
      "Action": "CREATE",
      "ResourceRecordSet": {
        "Name": "signoz.freshbrewed.science",
        "Type": "A",
        "TTL": 300,
        "ResourceRecords": [
          {
            "Value": "73.242.50.46"
          }
        ]
      }
    }
  ]
}

$ aws route53 change-resource-record-sets --hosted-zone-id Z39E8QFU0F9PZP --change-batch file://r53-signoz.json

{
    "ChangeInfo": {
        "Id": "/change/C01482562FU2Y7S43TH2H",
        "Status": "PENDING",
        "SubmittedAt": "2023-04-26T23:35:48.136Z",
        "Comment": "CREATE signoz fb.s A record "
    }
}
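The change batch can also be generated instead of hand-written, which catches a mistyped IP before it reaches AWS. A minimal sketch using only the standard library; the function name is hypothetical, and it defaults to UPSERT because Route53 rejects a CREATE when the record already exists, while UPSERT creates or updates it.

```python
import ipaddress
import json

VALID_ACTIONS = {"CREATE", "UPSERT", "DELETE"}


def a_record_change_batch(name: str, ip: str, ttl: int = 300,
                          action: str = "UPSERT", comment: str = "") -> dict:
    """Build a Route53 change batch for a single A record."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    # Raises (a ValueError subclass) on typos such as "73.242.50.460"
    ipaddress.IPv4Address(ip)
    batch = {
        "Changes": [
            {
                "Action": action,
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": ip}],
                },
            }
        ]
    }
    if comment:  # Comment is optional in the Route53 API
        batch["Comment"] = comment
    return batch


if __name__ == "__main__":
    # Same record as r53-signoz.json above
    print(json.dumps(a_record_change_batch(
        "signoz.freshbrewed.science", "73.242.50.46",
        action="CREATE", comment="CREATE signoz fb.s A record"), indent=2))
```

Redirecting that output to a file gives something that can be passed to "aws route53 change-resource-record-sets --change-batch file://..." exactly as above.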

Now I’ll apply the Ingress:

$ cat signoz.ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    ingress.kubernetes.io/proxy-body-size: "0"
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.org/client-max-body-size: "0"
    nginx.org/proxy-connect-timeout: "600"
    nginx.org/proxy-read-timeout: "600"
  labels:
    app.kubernetes.io/name: signoz
  name: signoz
  namespace: platform
spec:
  rules:
  - host: signoz.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: signoz-release-frontend
            port:
              number: 3301
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - signoz.freshbrewed.science
    secretName: signoz-tls

$ kubectl apply -f signoz.ingress.yaml -n platform

ingress.networking.k8s.io/signoz created
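A common slip with this kind of manifest is a host in the rules that is missing from the tls block, in which case cert-manager will not request a certificate for it. A minimal sketch that builds the core of the Ingress above as a Python dict (only a subset of the annotations; the function names are hypothetical) and checks that every rule host is covered by TLS.

```python
import json


def signoz_ingress(host: str, namespace: str = "platform",
                   service: str = "signoz-release-frontend", port: int = 3301,
                   issuer: str = "letsencrypt-prod") -> dict:
    """Build an Ingress mirroring the core of signoz.ingress.yaml."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": "signoz",
            "namespace": namespace,
            "labels": {"app.kubernetes.io/name": "signoz"},
            "annotations": {
                # cert-manager issues a cert for spec.tls hosts into secretName
                "cert-manager.io/cluster-issuer": issuer,
                "kubernetes.io/ingress.class": "nginx",
                "nginx.ingress.kubernetes.io/ssl-redirect": "true",
            },
        },
        "spec": {
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "ImplementationSpecific",
                    "backend": {"service": {"name": service,
                                            "port": {"number": port}}},
                }]},
            }],
            "tls": [{"hosts": [host], "secretName": "signoz-tls"}],
        },
    }


def hosts_missing_tls(ingress: dict) -> set:
    """Hosts that have a routing rule but no entry in spec.tls."""
    spec = ingress["spec"]
    rule_hosts = {r["host"] for r in spec.get("rules", []) if "host" in r}
    tls_hosts = {h for t in spec.get("tls", []) for h in t.get("hosts", [])}
    return rule_hosts - tls_hosts


if __name__ == "__main__":
    # kubectl apply -f accepts JSON manifests as well as YAML
    print(json.dumps(signoz_ingress("signoz.freshbrewed.science"), indent=2))
```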

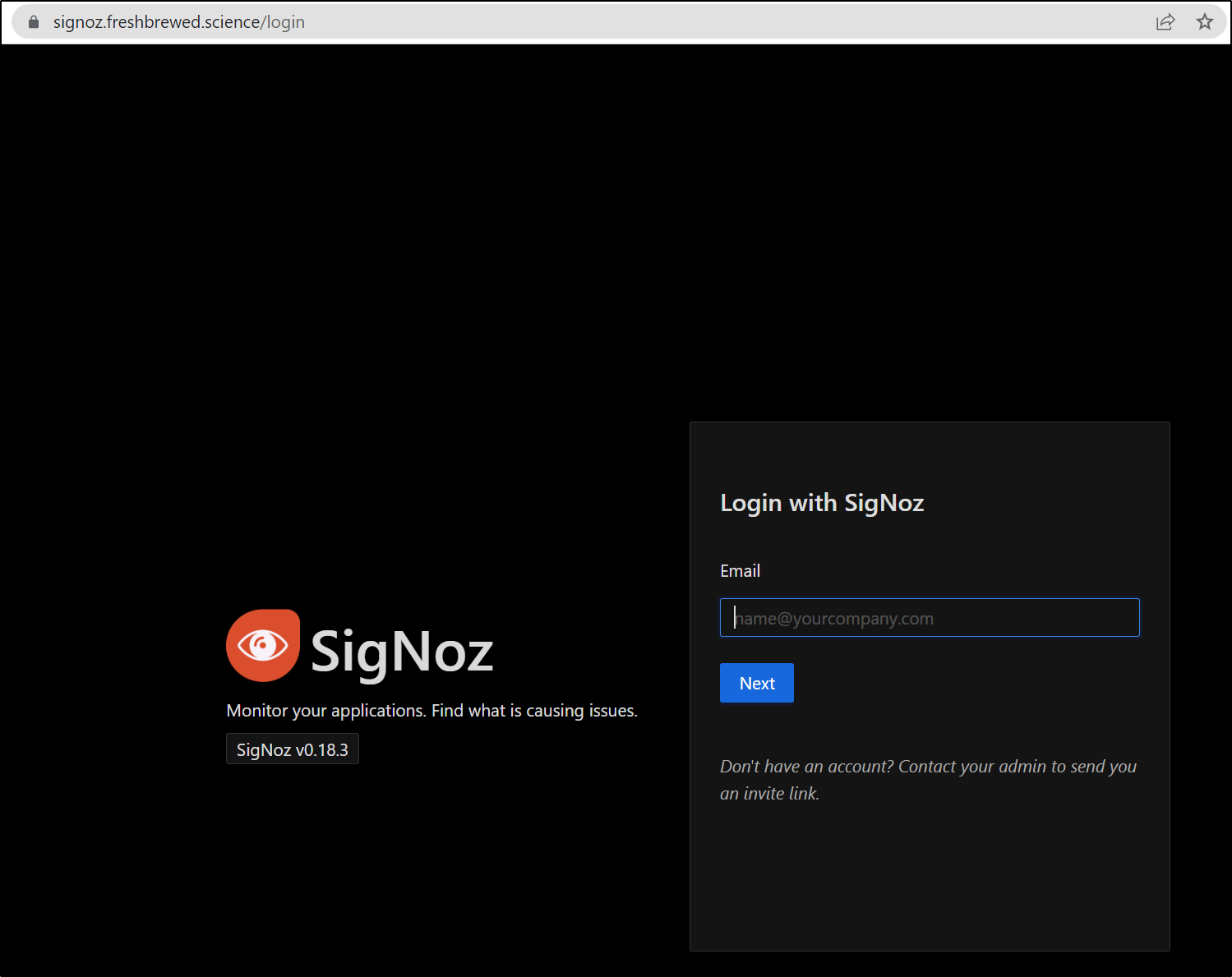

Now I can log in.

PagerDuty Alerts

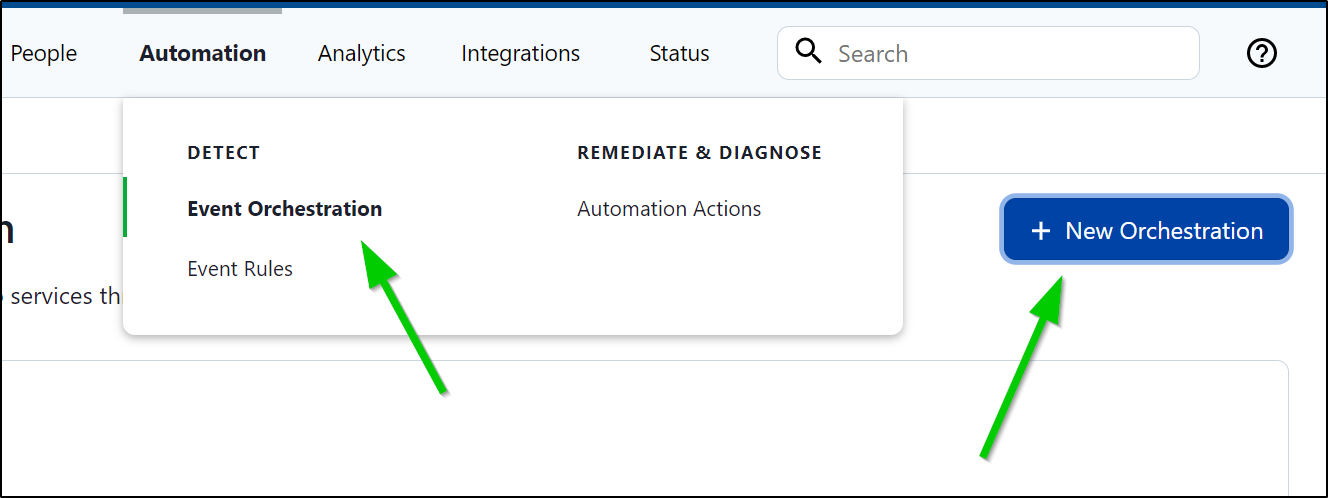

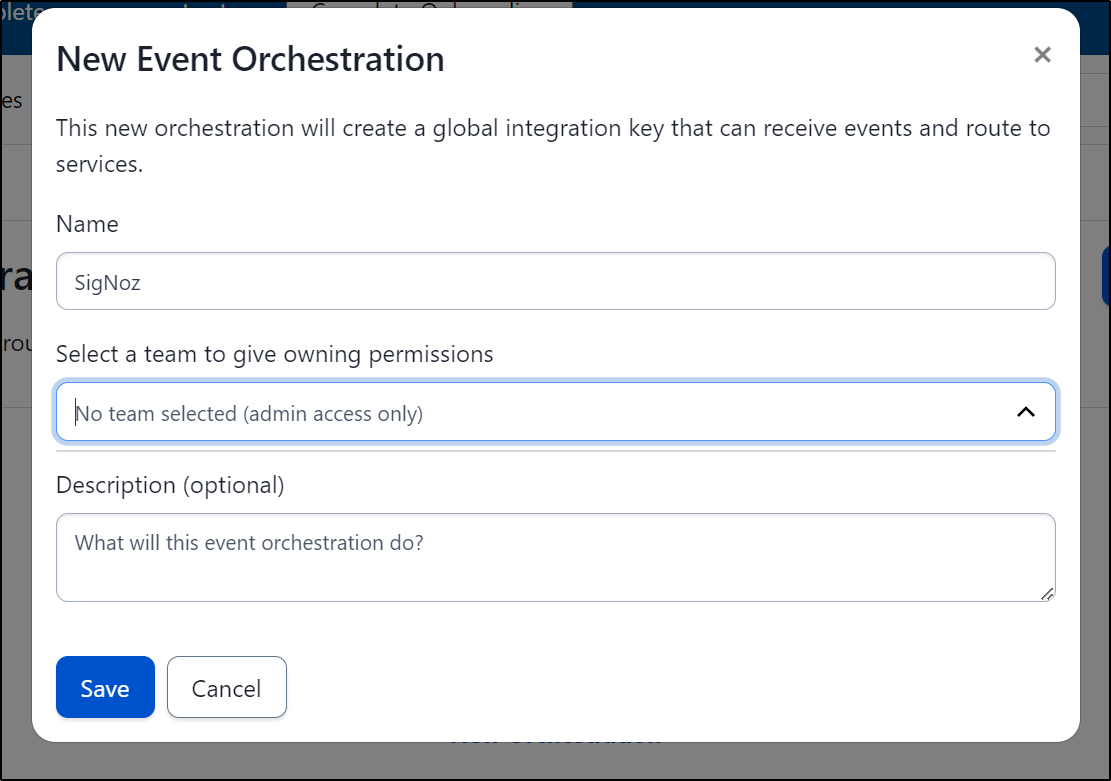

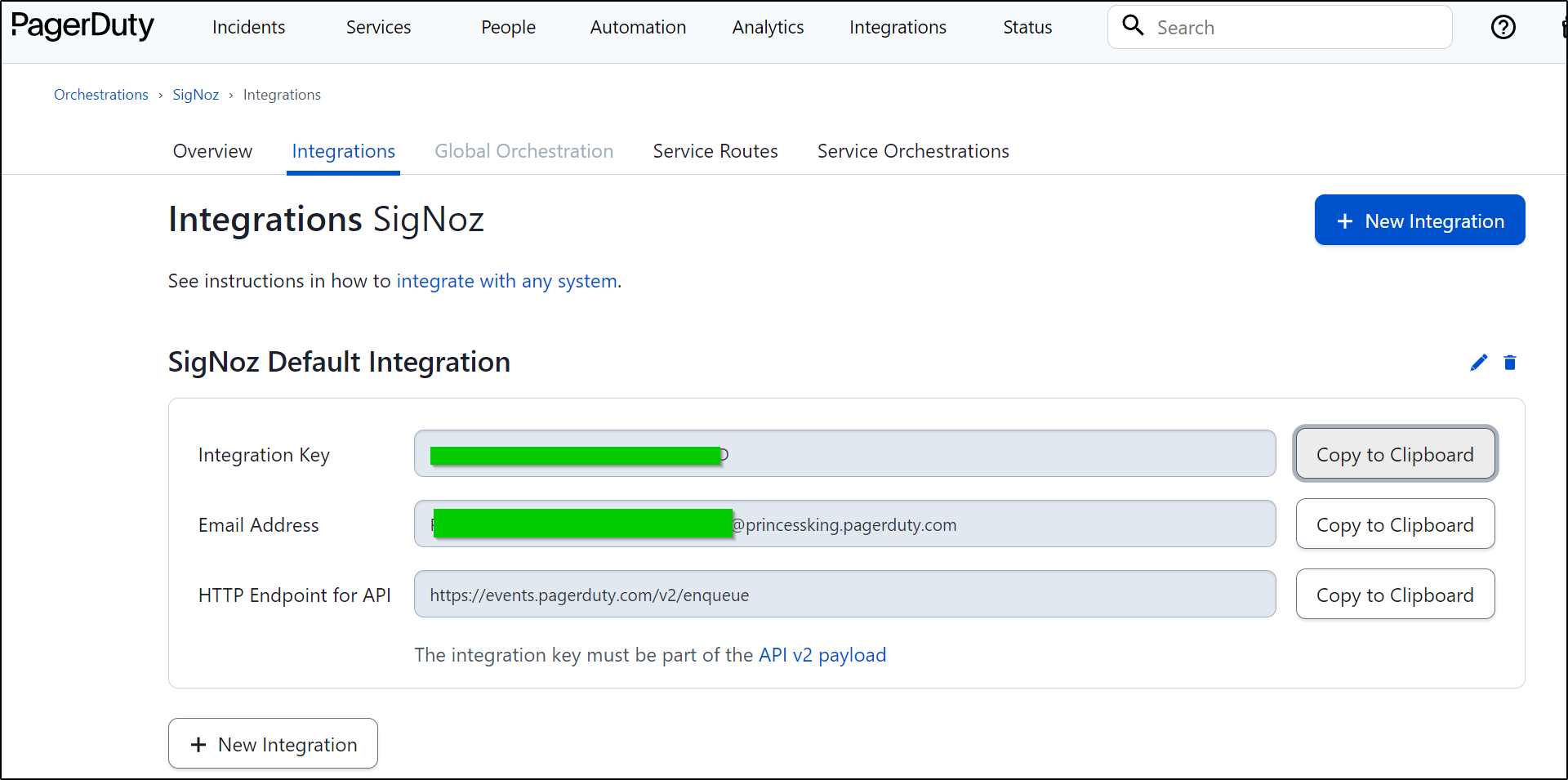

In PagerDuty, I’ll need to create a new Event Orchestration under Automation.

I’ll give the Event Orchestration a name and save it.

While we can get the Integration Key here, we still need to tie it to a service.

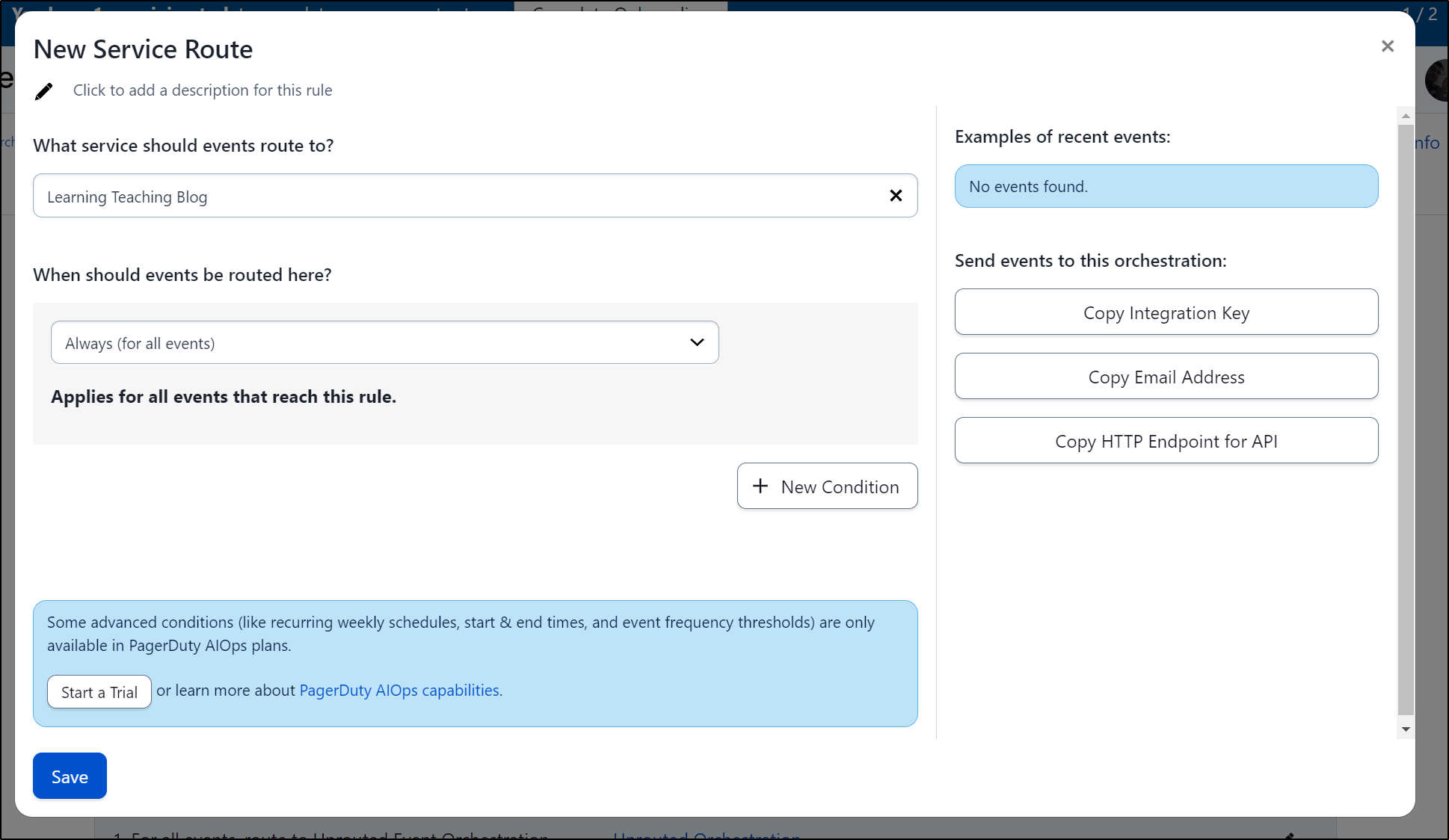

From there, I can route incoming events to a service.

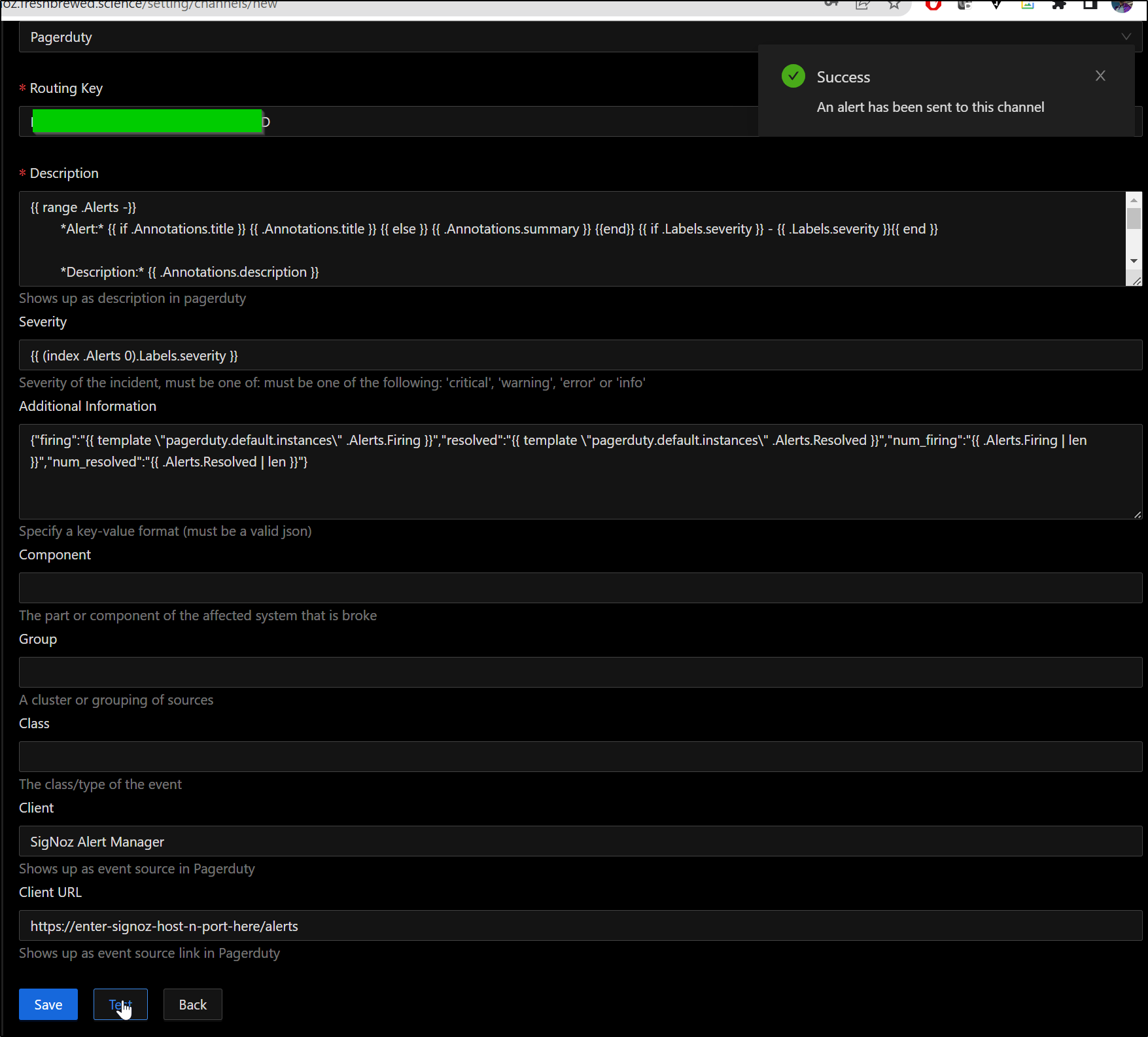

In SigNoz, I can create a new PagerDuty alert channel, put the “Integration Key” into its “Routing Key” field, and click Test.

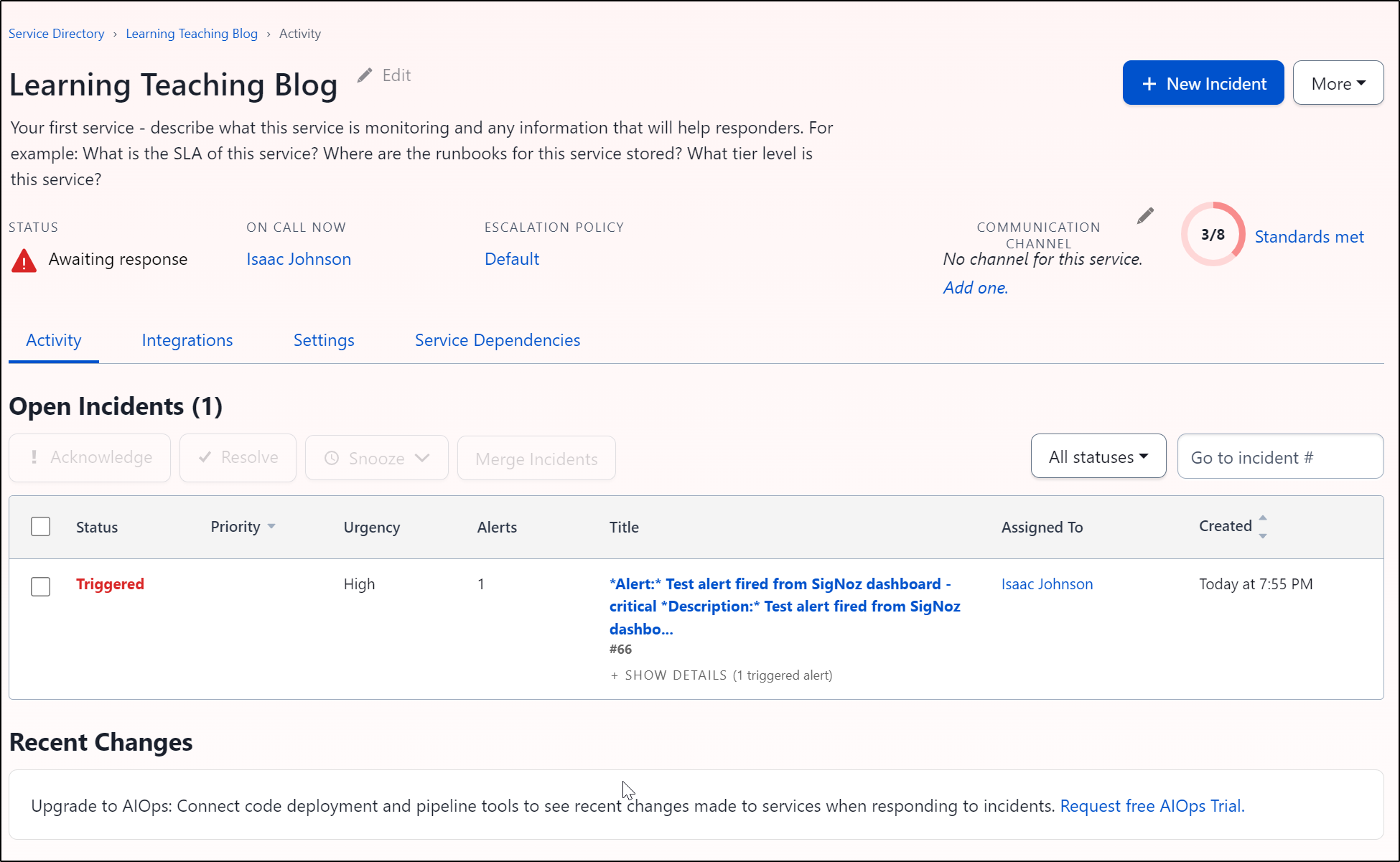

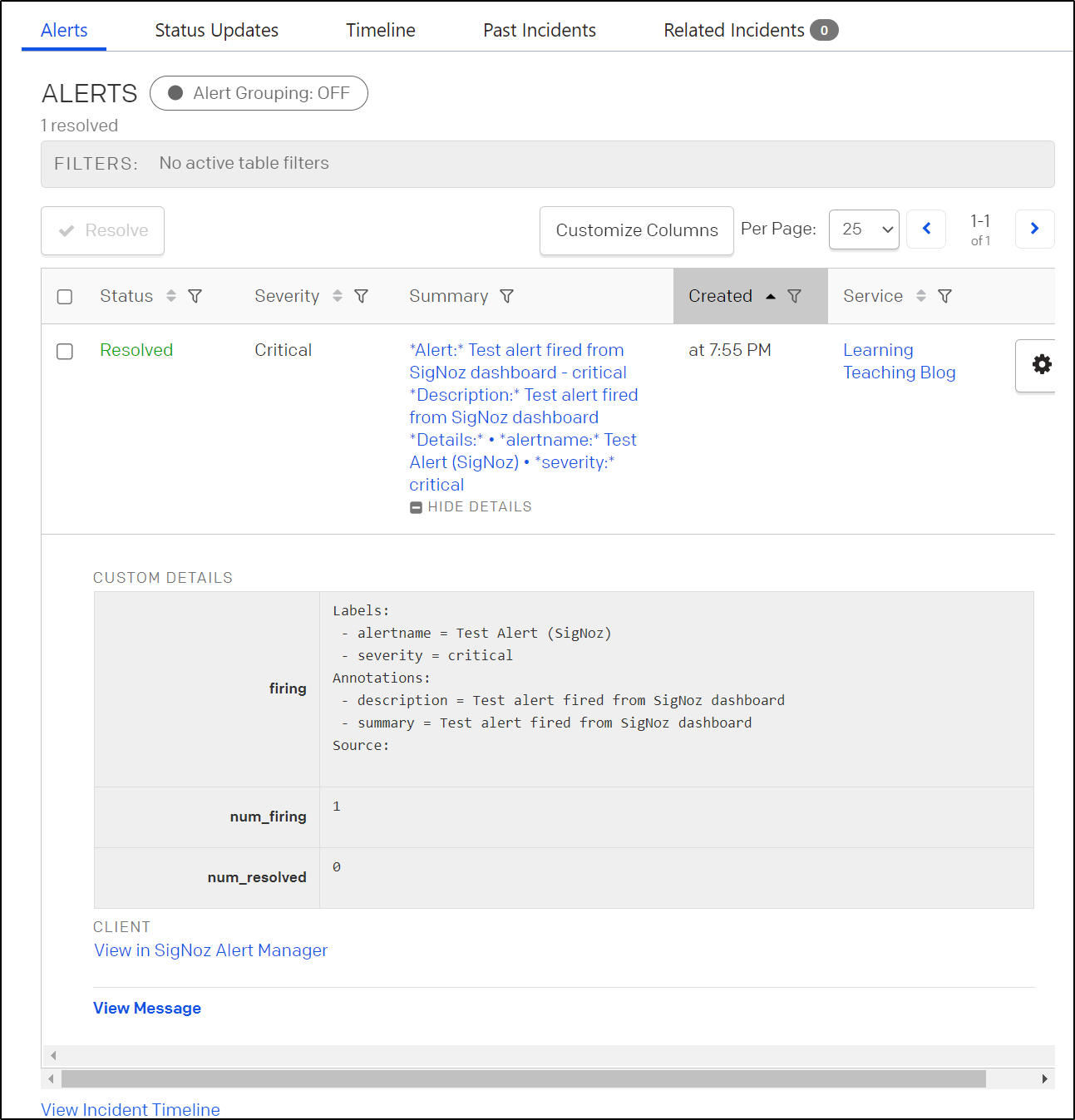

And I can see it triggered.

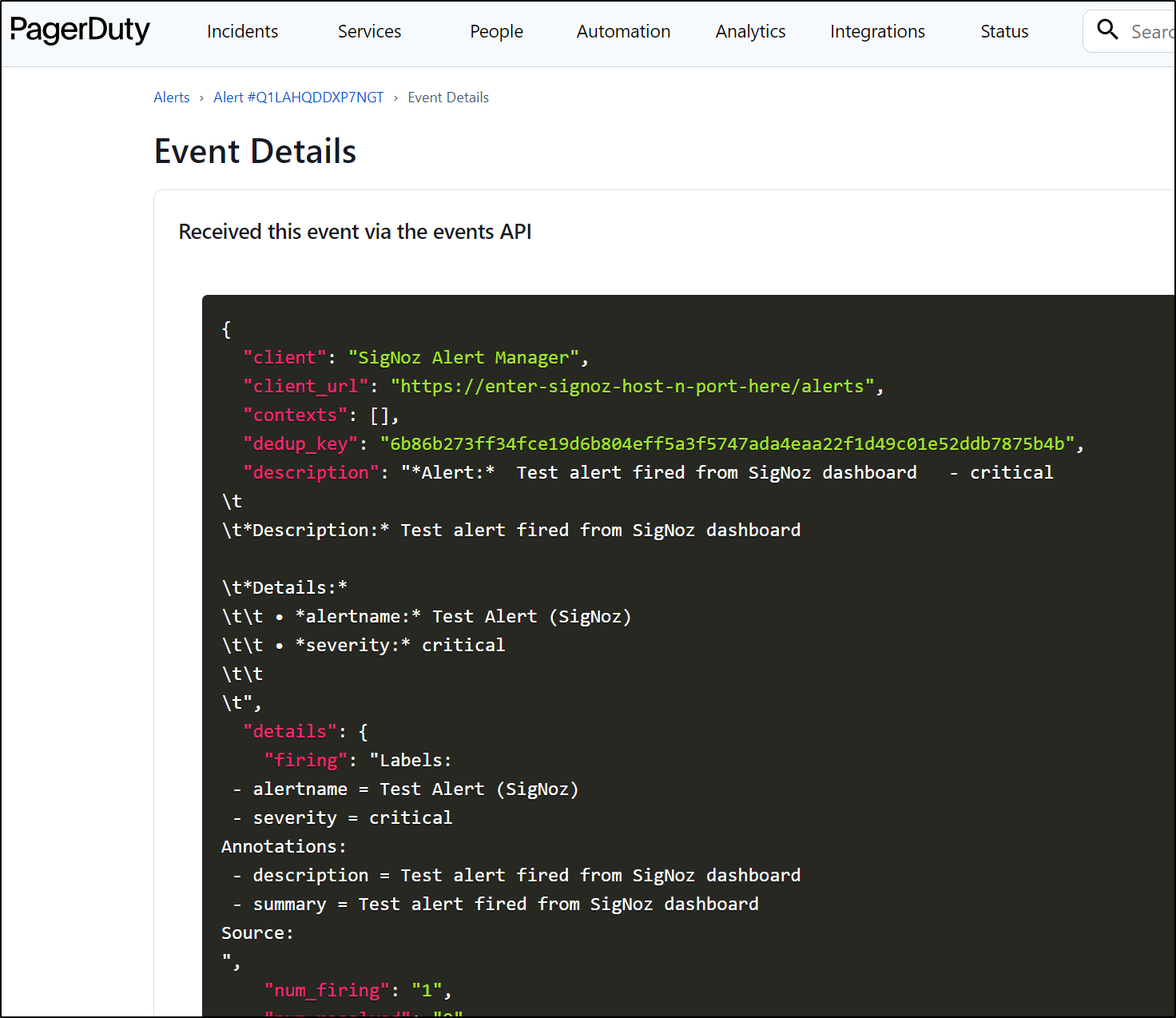

I can see details of the event in PagerDuty.

I can click “View Message” at the bottom for even more details.

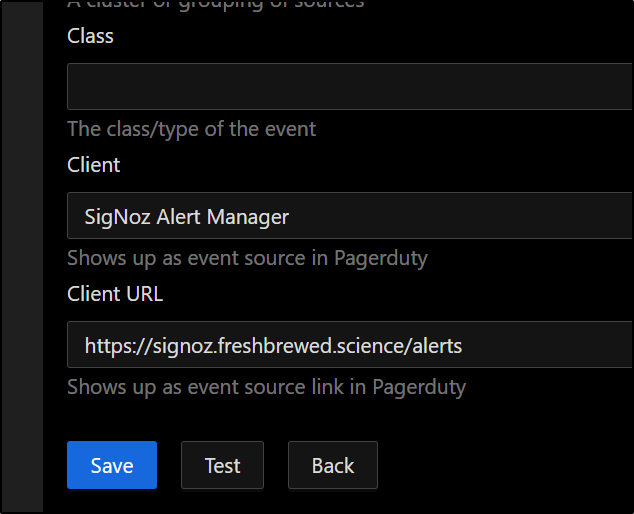

So I’ll update the Client URL to point at my SigNoz host.

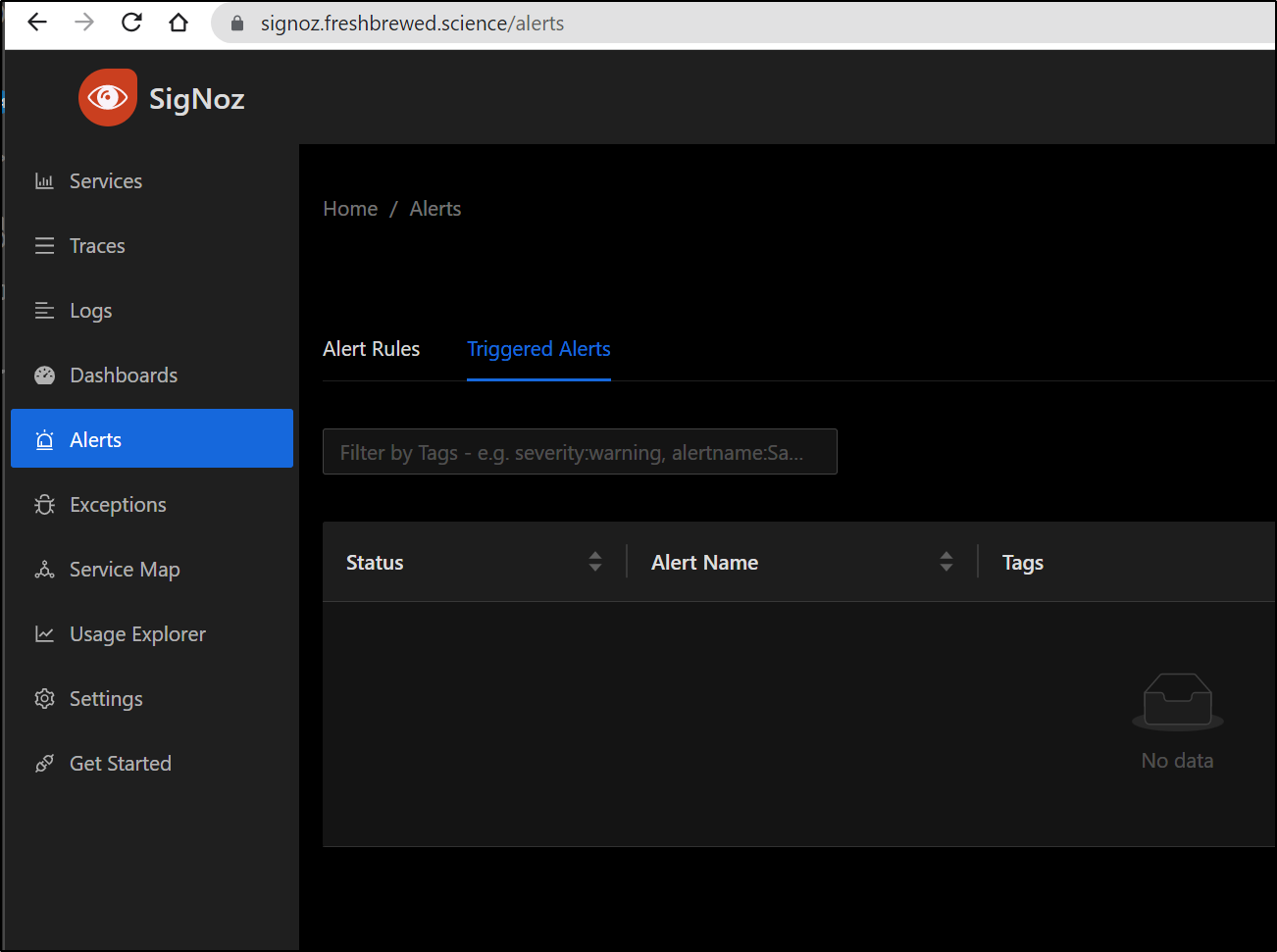

With a real triggered alert, we would see its details there.
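For reference, the Routing Key field corresponds to the routing_key that PagerDuty’s Events API v2 expects on every event, and client/client_url identify the monitoring tool that raised it. A minimal sketch of a trigger event in that format, built but not sent; the summary, source and URL values are placeholders, and the payload SigNoz actually sends may carry more fields than this.

```python
import json
import urllib.request

PD_EVENTS_URL = "https://events.pagerduty.com/v2/enqueue"
SEVERITIES = {"critical", "error", "warning", "info"}


def pagerduty_trigger(routing_key: str, summary: str, source: str,
                      severity: str = "critical", client: str = "",
                      client_url: str = "") -> dict:
    """Build an Events API v2 'trigger' event."""
    if severity not in SEVERITIES:
        raise ValueError(f"severity must be one of {sorted(SEVERITIES)}")
    event = {
        "routing_key": routing_key,  # the orchestration's Integration Key
        "event_action": "trigger",
        "payload": {"summary": summary, "source": source, "severity": severity},
    }
    # Optional: name and URL of the monitoring tool that raised the event
    if client:
        event["client"] = client
    if client_url:
        event["client_url"] = client_url
    return event


def build_request(event: dict) -> urllib.request.Request:
    """Prepare (but do not send) the POST to the Events API."""
    return urllib.request.Request(
        PD_EVENTS_URL,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the request to urllib.request.urlopen would actually send it; PagerDuty answers an accepted event with HTTP 202.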

Webhooks

One area we wanted to improve was some form of email alerting.

Today, we’ll look at two offerings with a free or cheap tier: Zapier and Make (formerly Integromat).

Zapier

We can log in or sign up for Zapier.

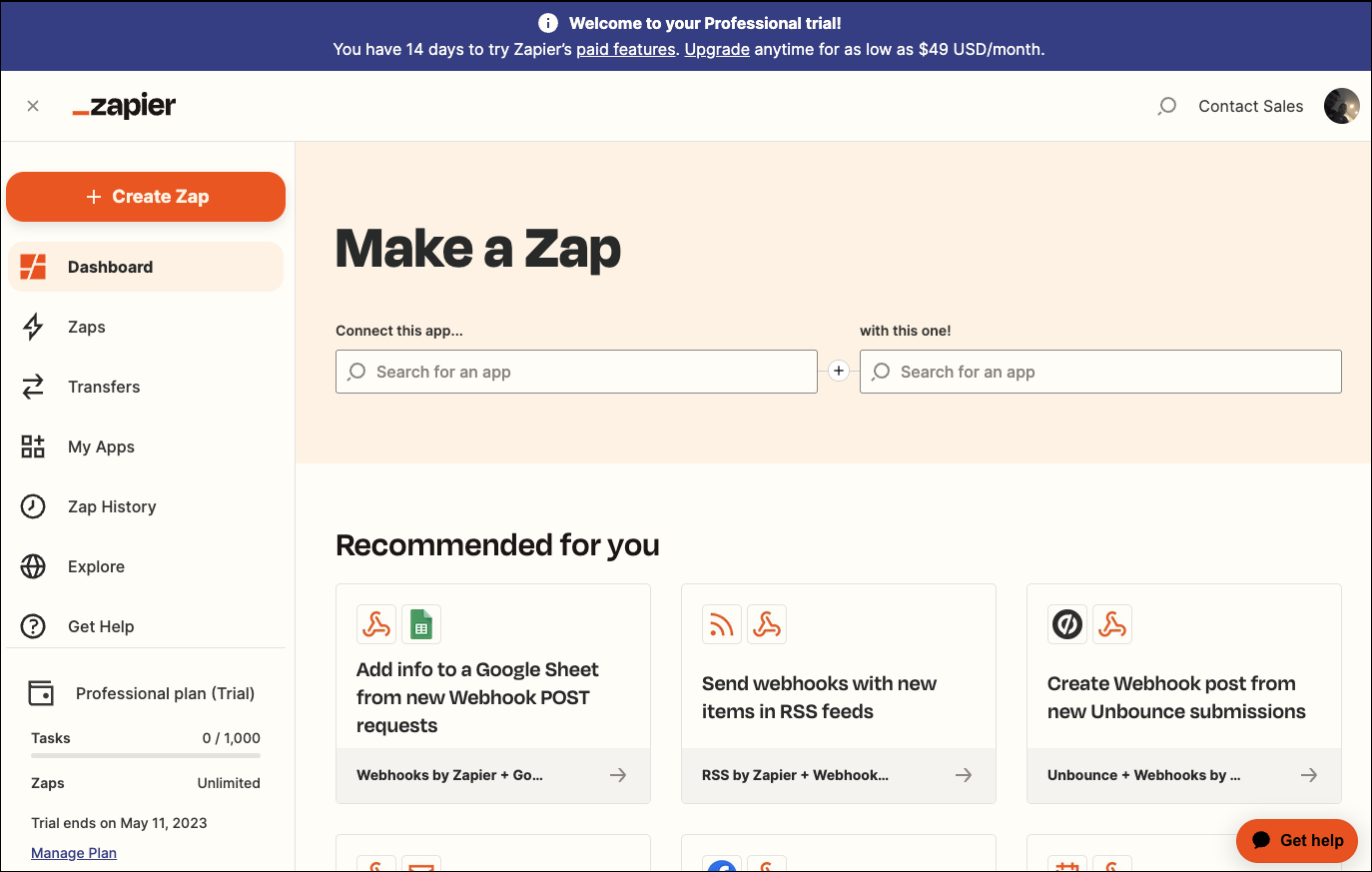

From there, I can make a new “Zap”

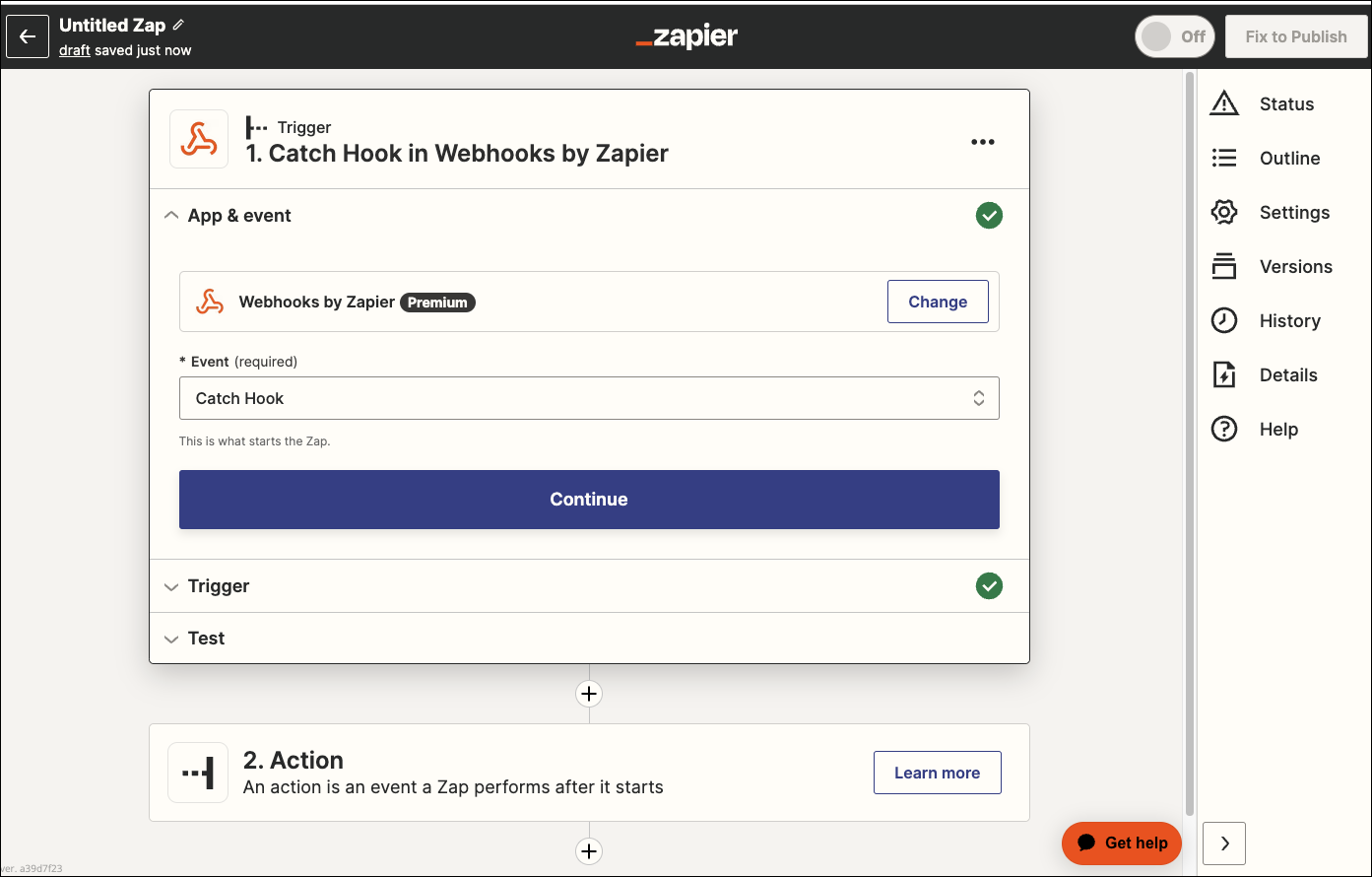

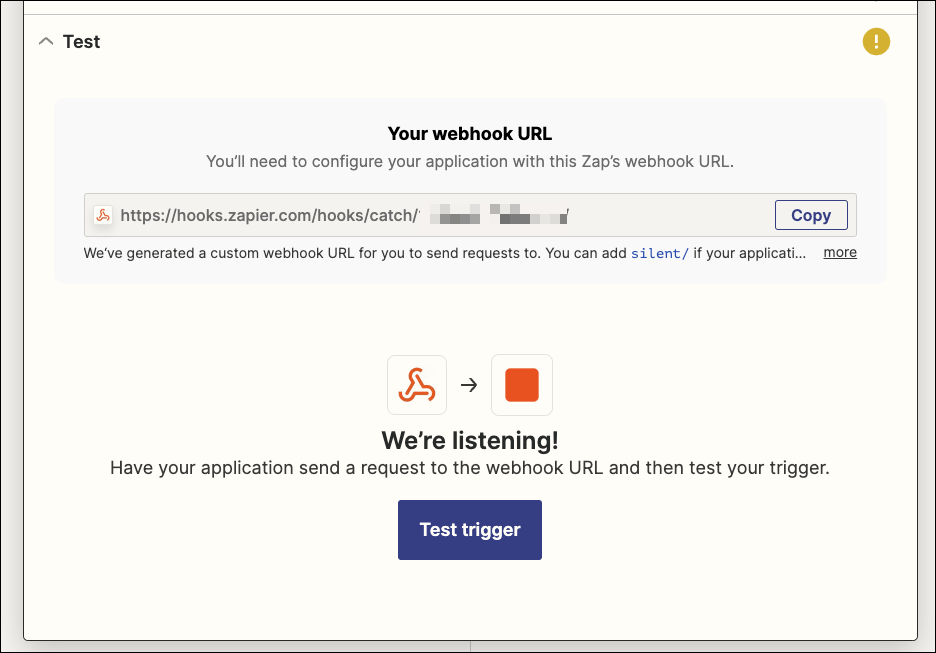

For the trigger, we can use the “Catch Hook” event from Webhooks.

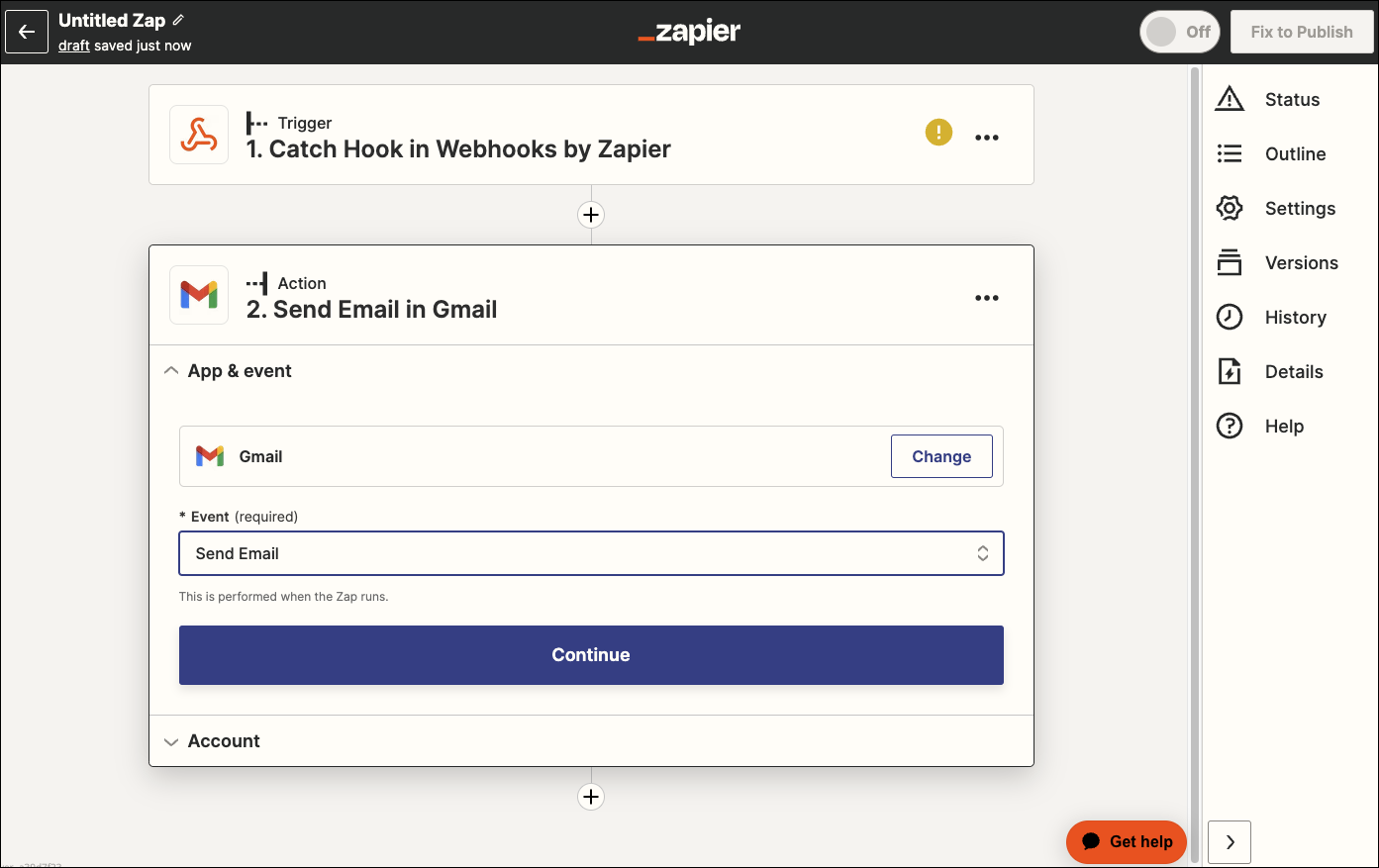

For the action, we can use an email service like Gmail.

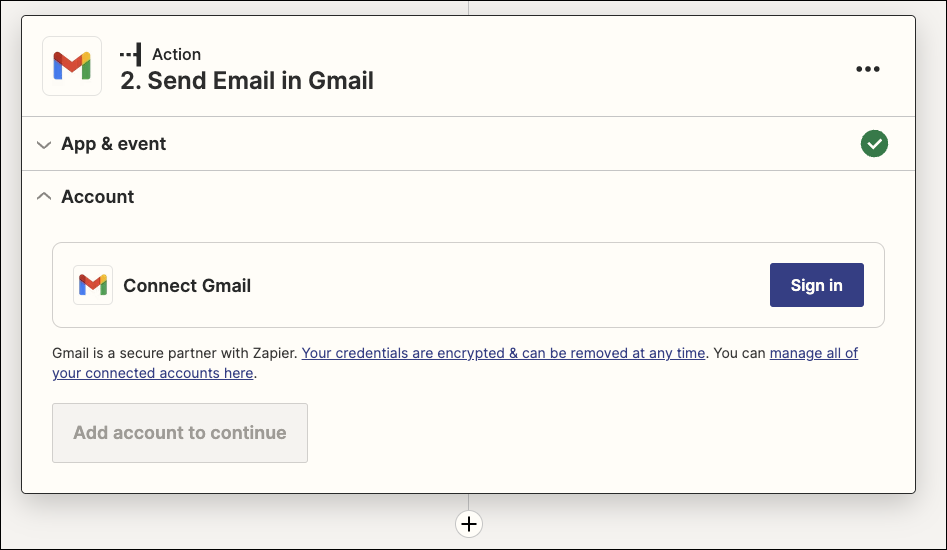

I’ll need to “Connect” it via “Sign In”

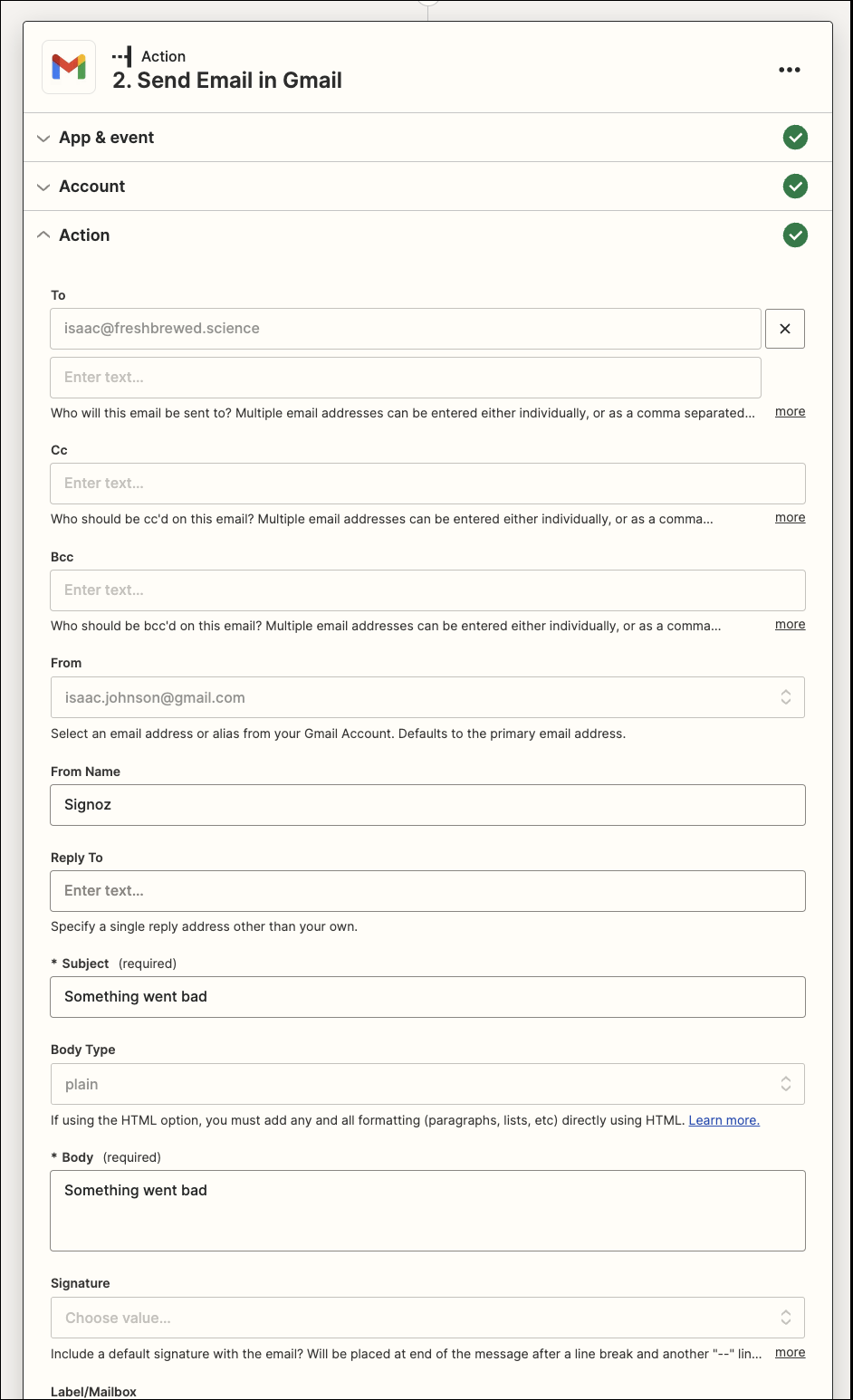

Once we authenticate and approve access, we can set many of the email fields.

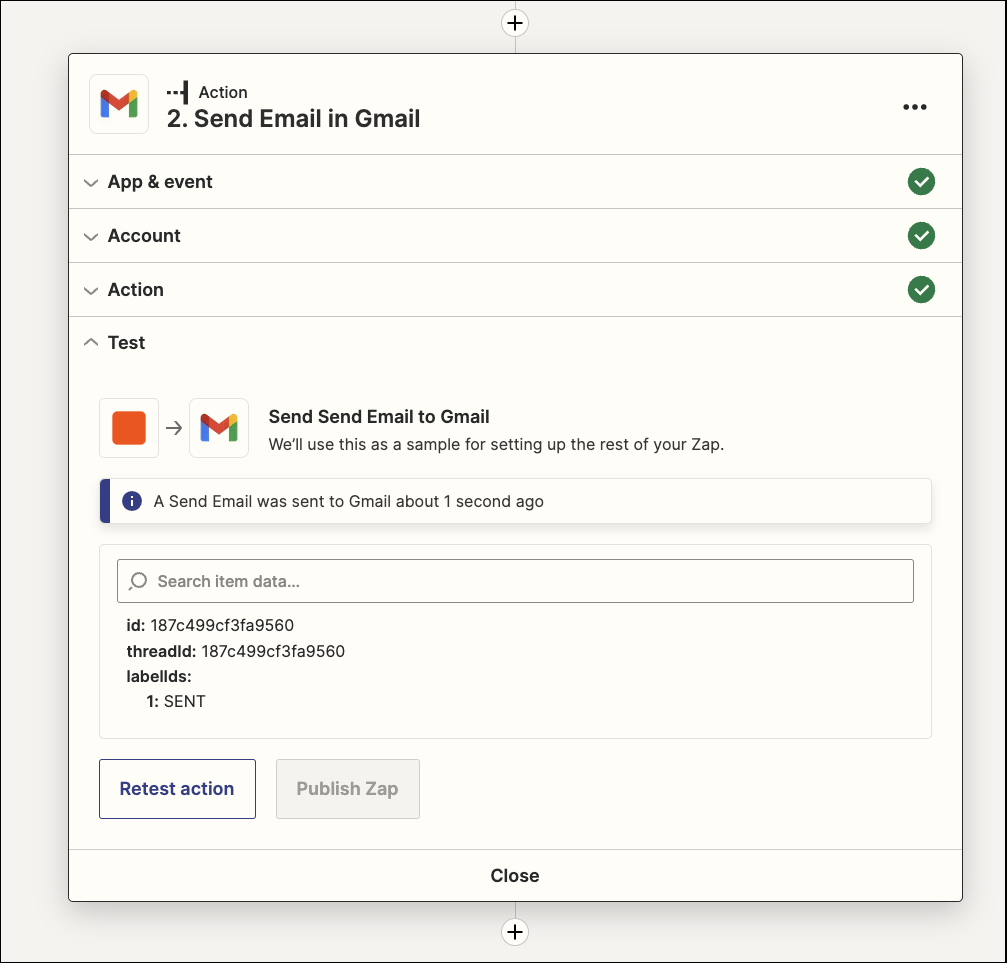

When ready, I can click “Test”

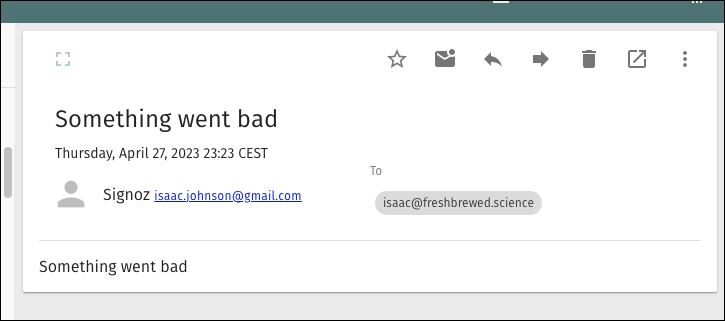

And I can see I got an email.

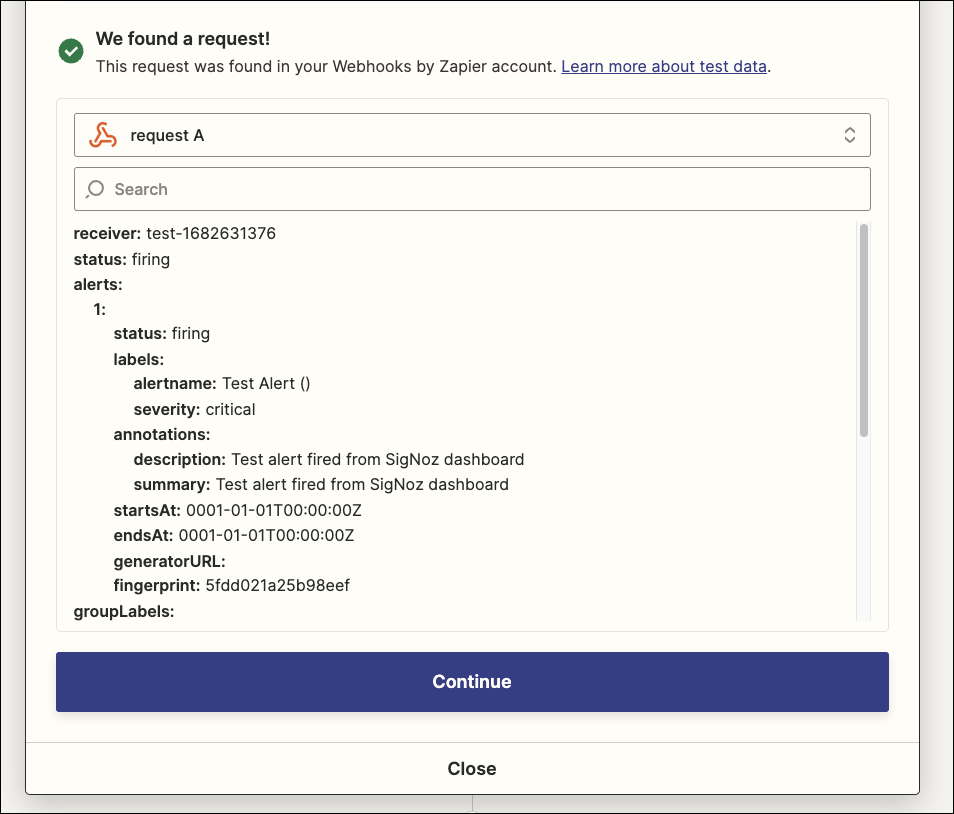

Now I can test the trigger

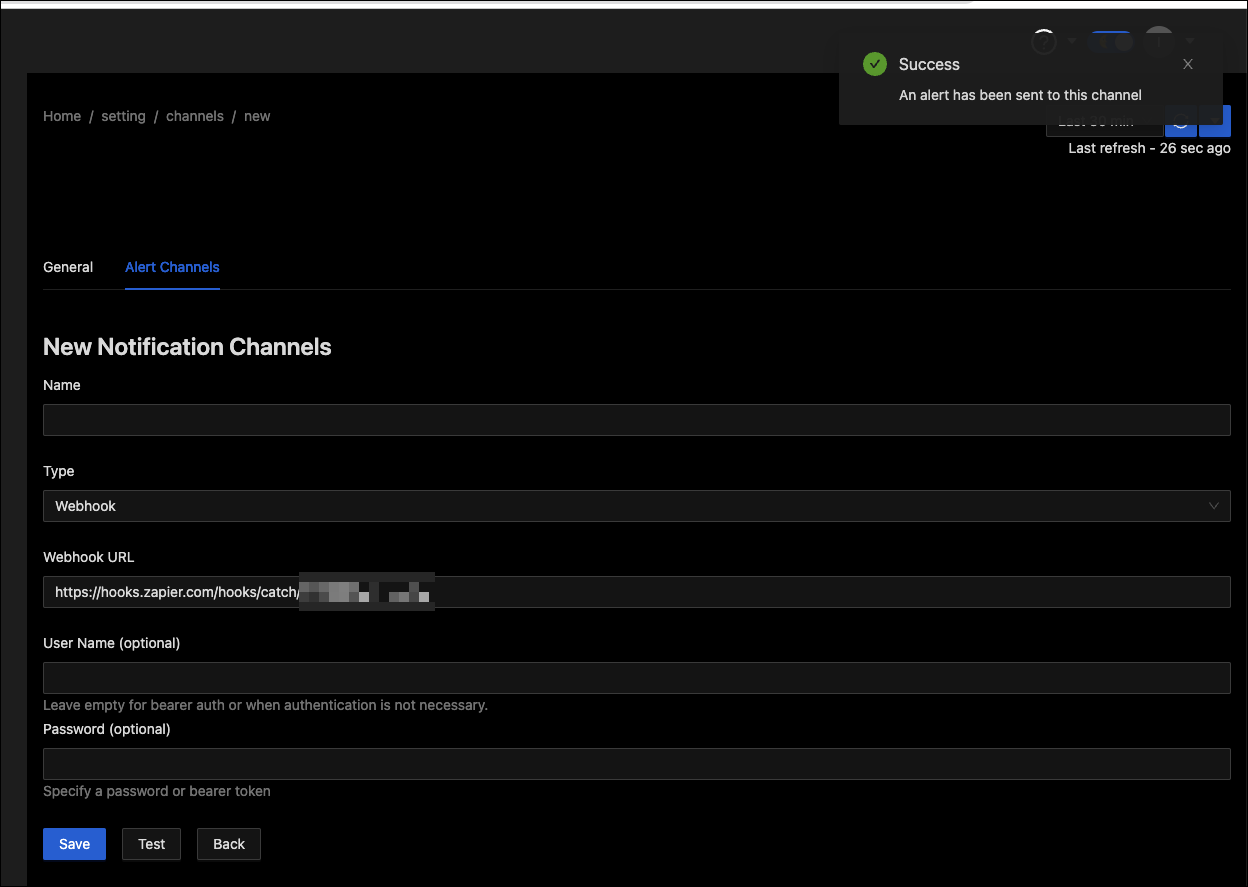

I can now add the webhook URL to a new Webhook alert channel in SigNoz and click Test.

And we can see the response.
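A Catch Hook will accept any JSON body, so it can also be exercised without SigNoz. A minimal sketch using only the standard library; HOOK_URL is a hypothetical placeholder for the URL Zapier gives you, and SAMPLE_ALERT is a simplified, assumed Alertmanager-style body, not SigNoz’s exact schema.

```python
import json
import urllib.request

# Hypothetical placeholder: paste the URL Zapier shows for your Catch Hook
HOOK_URL = "https://hooks.zapier.com/hooks/catch/XXXX/YYYY/"

# Simplified, assumed alert shape (not SigNoz's exact payload)
SAMPLE_ALERT = {
    "status": "firing",
    "alerts": [
        {
            "status": "firing",
            "labels": {"alertname": "TestAlert", "severity": "warning"},
            "annotations": {"description": "Manual test from the command line"},
        }
    ],
}


def build_hook_request(url: str, body: dict) -> urllib.request.Request:
    """Prepare a JSON POST to a webhook URL."""
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Calling send(build_hook_request(HOOK_URL, SAMPLE_ALERT)) with a real hook URL should make the parsed fields show up in Zapier for mapping into the Gmail step.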

Make (Integromat)

We can sign up at Make.

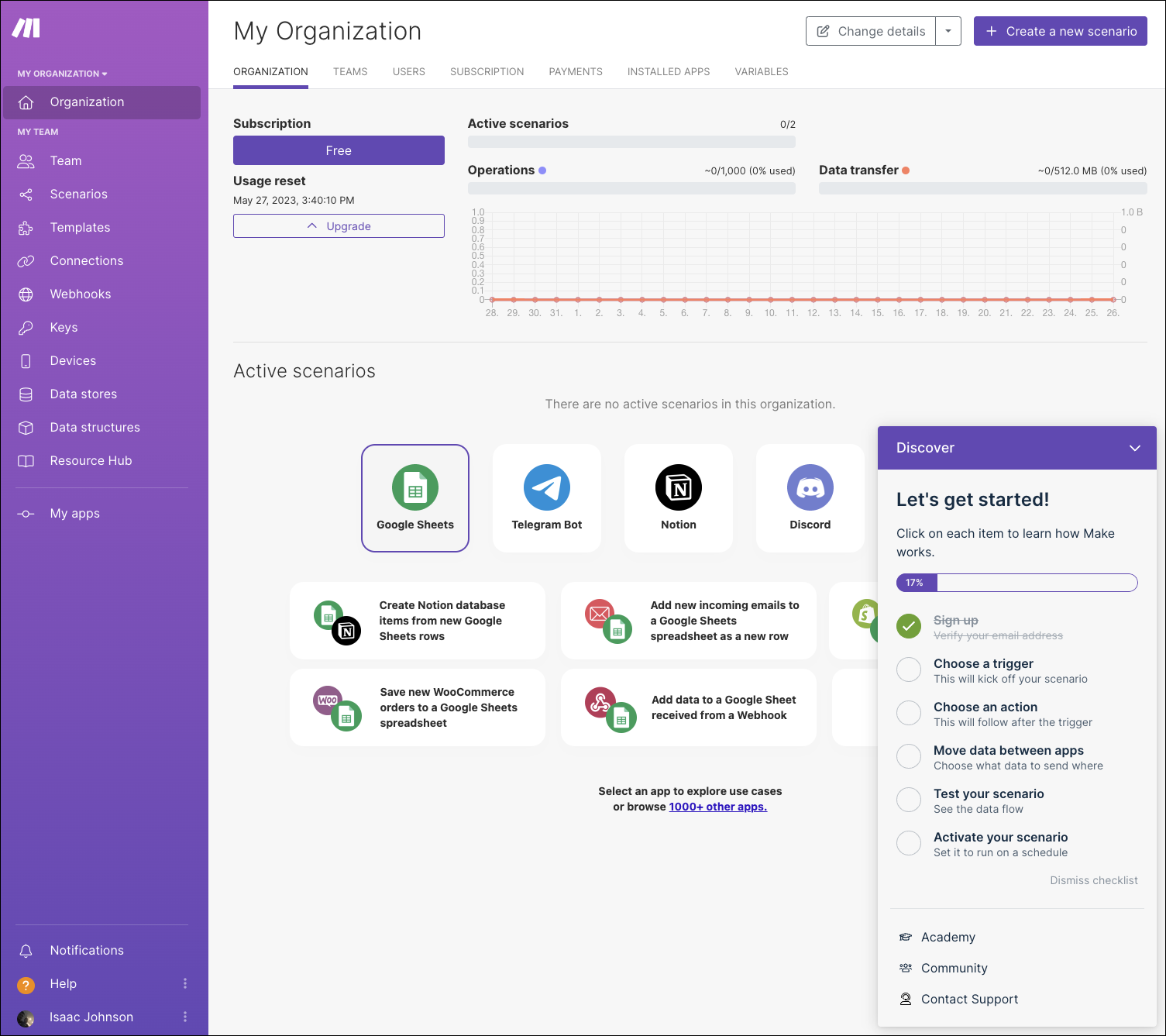

Once signed up, we are presented with a dashboard

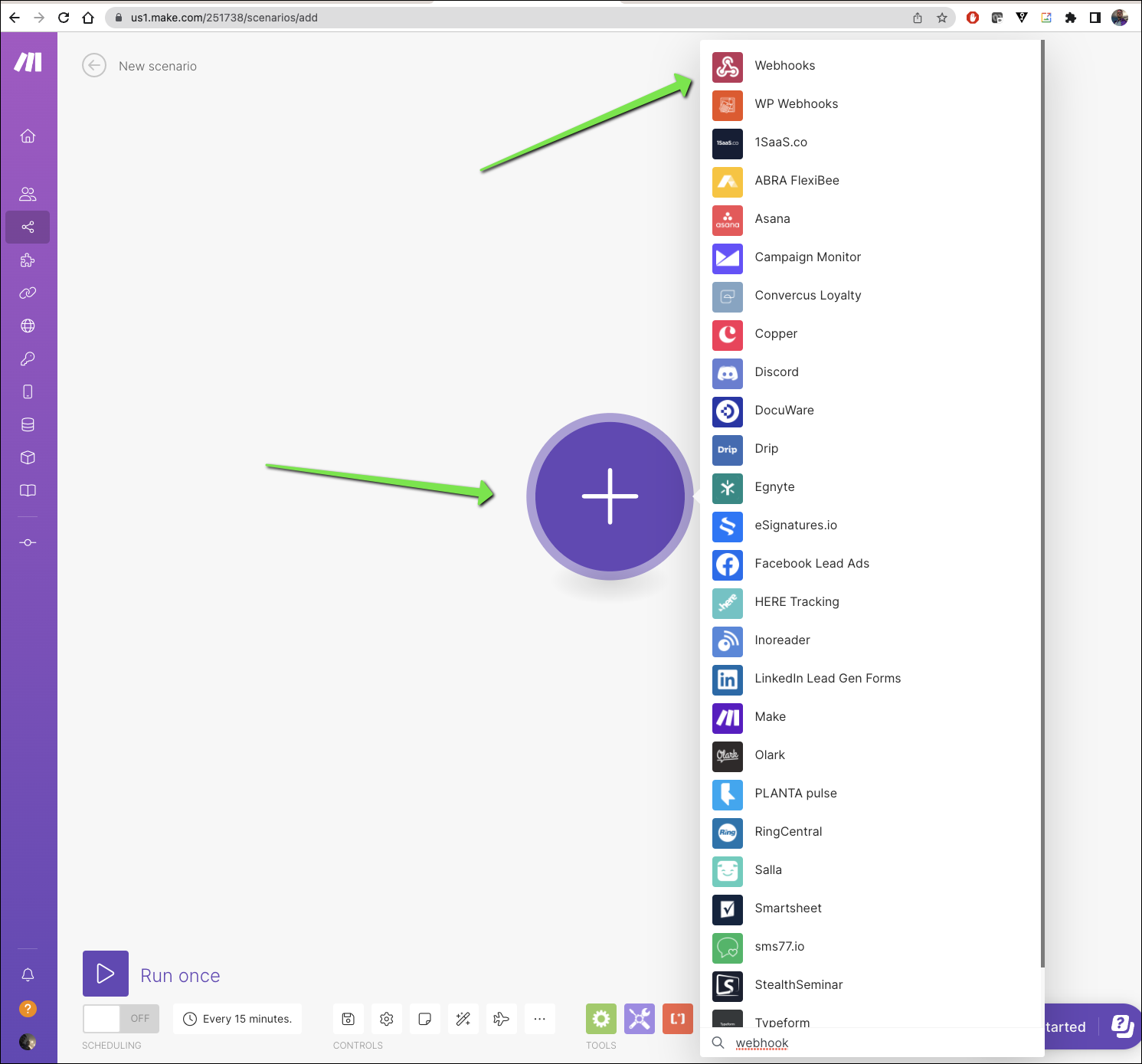

I can create a new scenario and select Webhooks.

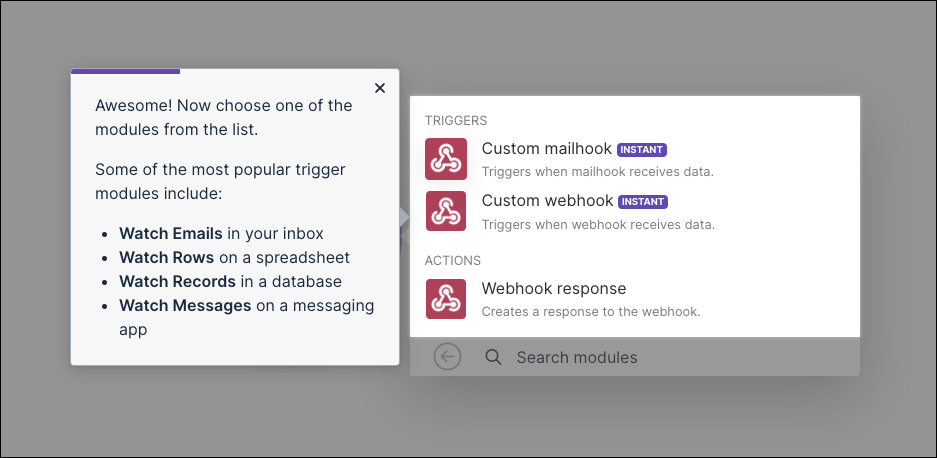

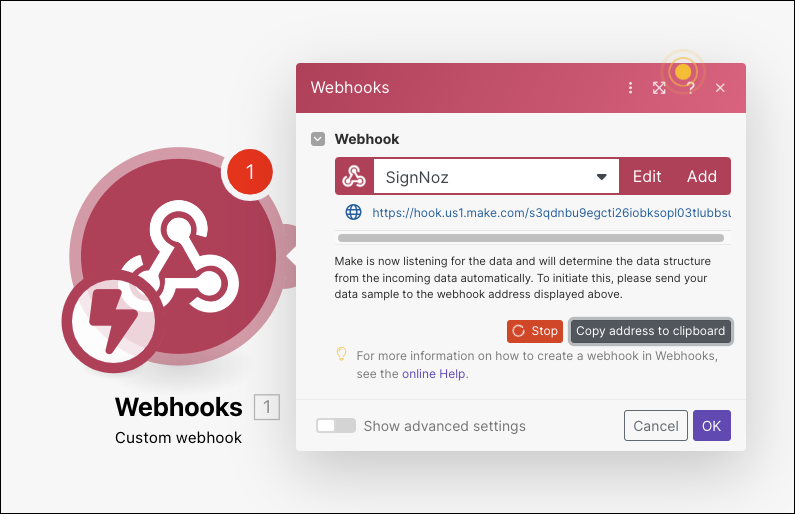

I’ll want to select a trigger of “custom webhook”

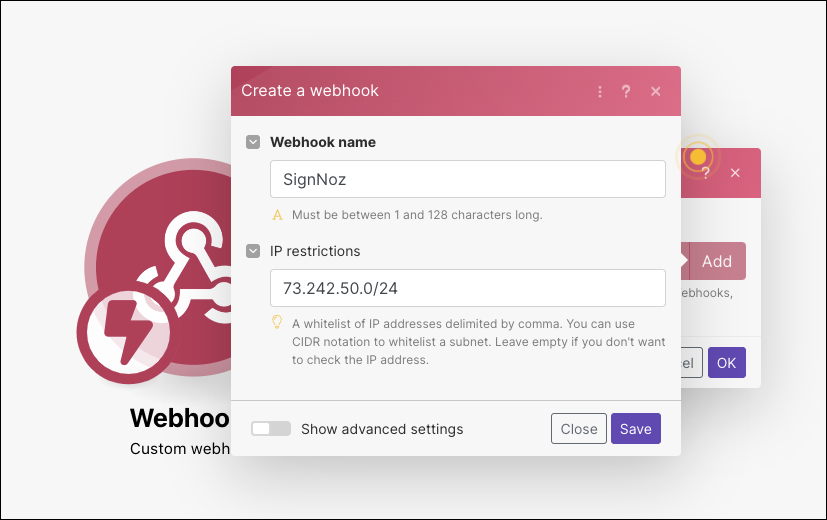

I’ll click Add and give it a name. I can optionally restrict it to an IP or a block of IPs (CIDR). Here, I’ll add my home network egress range.
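Make enforces that restriction itself, but it is worth confirming that the range you type actually covers the address your cluster’s outbound requests come from. A minimal sketch with Python’s ipaddress module; the addresses below are hypothetical RFC 5737 documentation ranges, not the ones used here.

```python
import ipaddress


def ip_allowed(client_ip: str, allowed: list) -> bool:
    """True if client_ip falls inside any of the allowed IPs/CIDR blocks."""
    addr = ipaddress.ip_address(client_ip)
    for entry in allowed:
        # strict=False tolerates host bits, e.g. "203.0.113.7/24";
        # a bare IP such as "198.51.100.1" becomes a single-address /32
        network = ipaddress.ip_network(entry, strict=False)
        if addr in network:  # IPv4 vs IPv6 mismatches simply return False
            return True
    return False
```

For example, ip_allowed("203.0.113.42", ["203.0.113.0/24"]) is True, while an address outside that block is rejected, which is what Make would do with a request from it.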

I can copy the webhook URL now

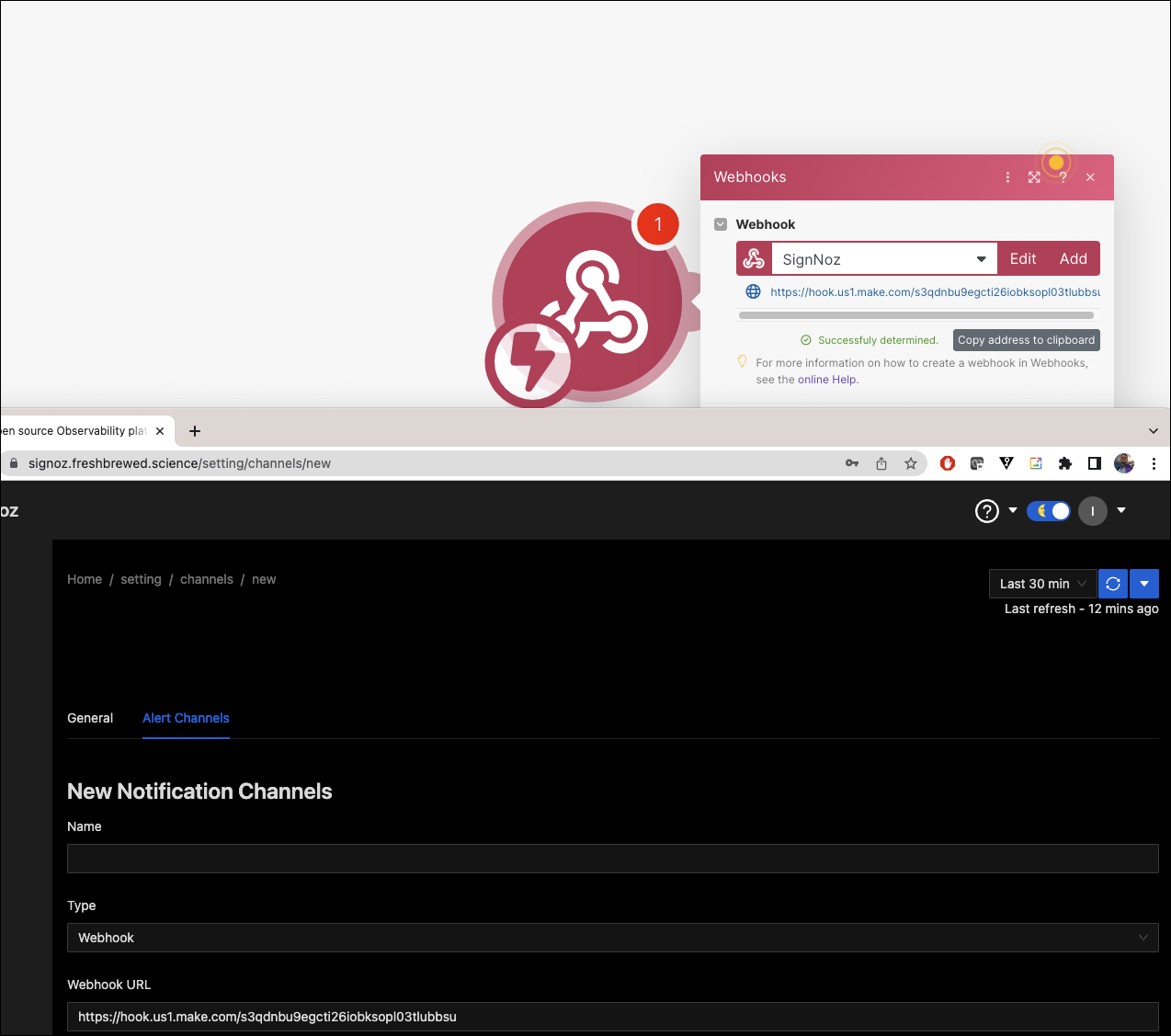

I can now add that URL to a new SigNoz webhook channel, click Test, and see the request show up in Make.

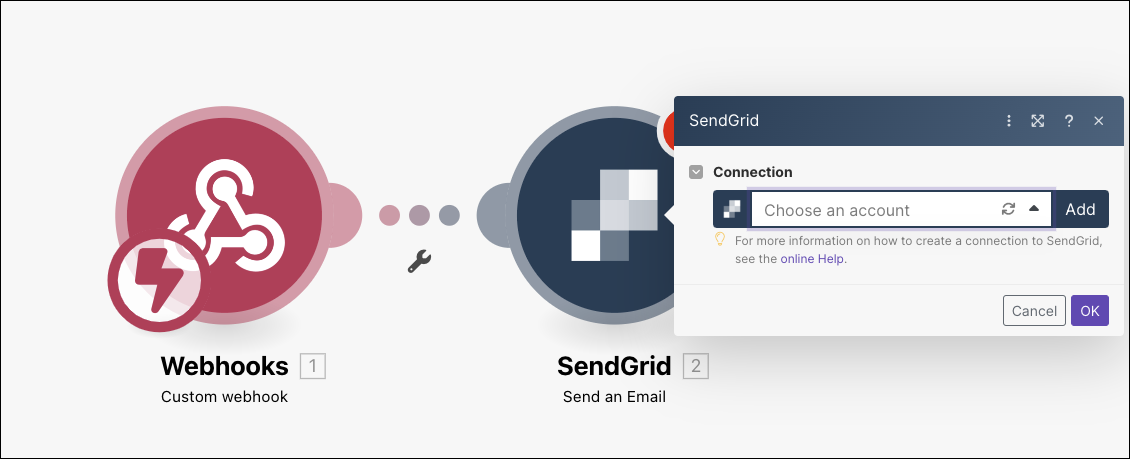

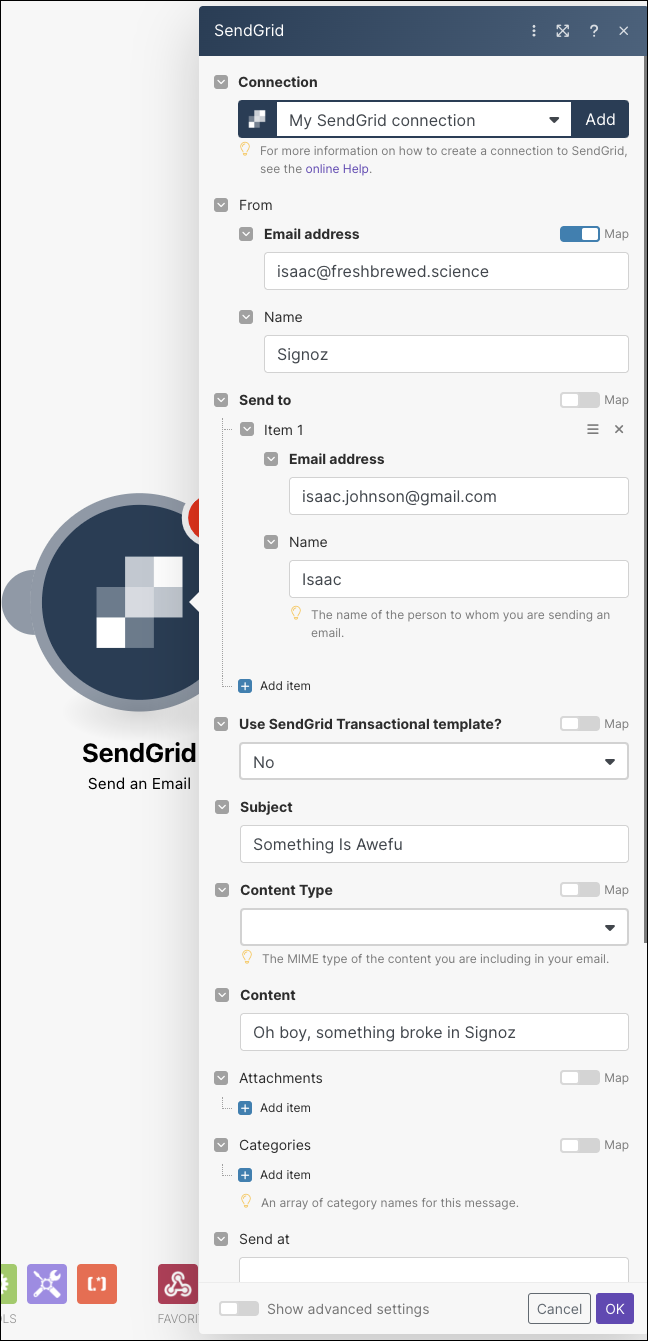
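Before wiring up any third-party service, it can help to see exactly what SigNoz sends. A minimal stand-in receiver using only Python’s standard library (it is not part of SigNoz or Make) that records every POST body it gets. For SigNoz in the cluster to reach it, you would start it with host="0.0.0.0" on a machine reachable from the cluster and point a webhook channel at that address; the names are hypothetical.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Parsed bodies of every POST received, newest last
received = []


class CaptureHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        raw = self.rfile.read(length)
        try:
            received.append(json.loads(raw))
        except ValueError:
            # Not JSON (or not UTF-8): keep it as text so nothing is lost
            received.append(raw.decode("utf-8", "replace"))
        self.send_response(200)
        self.send_header("Content-Length", "2")
        self.end_headers()
        self.wfile.write(b"ok")

    def log_message(self, fmt, *args):
        pass  # silence the default per-request logging


def start_receiver(host: str = "127.0.0.1", port: int = 0) -> HTTPServer:
    """Start the receiver in a background thread; port 0 picks a free port."""
    server = HTTPServer((host, port), CaptureHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

After clicking Test in SigNoz, printing json.dumps(received[-1], indent=2) shows the body that Zapier or Make would receive.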

I’ll now click the “+” on the right and add the SendGrid action

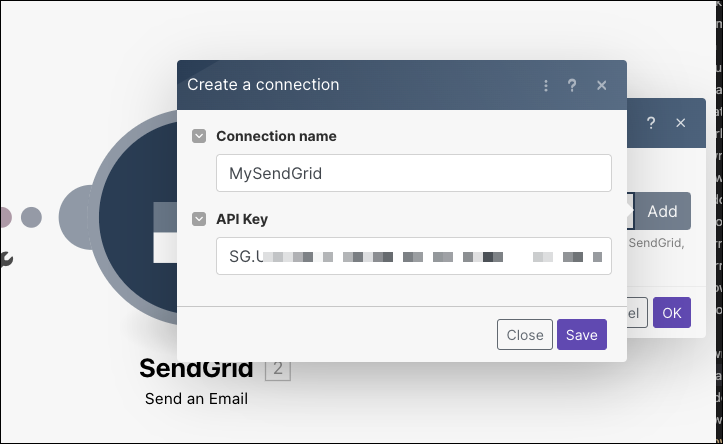

Then I’ll set my SendGrid API token.

Note: if you usually use a “Restricted Access” token, you’ll need to expand its access (set it to Full) for this to work.

We can now fill out the email details.
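Make’s SendGrid module builds the API request for you, but it can help to know roughly what those fields turn into. A minimal sketch of a SendGrid v3 Mail Send body built from one alert; it assumes an Alertmanager-style alert with labels and annotations (not confirmed SigNoz schema), and the function name and example.com addresses are hypothetical.

```python
import json

SENDGRID_URL = "https://api.sendgrid.com/v3/mail/send"


def alert_to_sendgrid(alert: dict, to_email: str, from_email: str) -> dict:
    """Map one alert (assumed labels/annotations shape) to a Mail Send body."""
    labels = alert.get("labels", {})
    annotations = alert.get("annotations", {})
    status = str(alert.get("status", "unknown")).upper()
    subject = f"[{status}] {labels.get('alertname', 'SigNoz alert')}"
    # Prefer a human-written description; fall back to the raw alert JSON
    text = (annotations.get("description") or annotations.get("summary")
            or json.dumps(alert, indent=2))
    return {
        "personalizations": [{"to": [{"email": to_email}]}],
        "from": {"email": from_email},
        "subject": subject,
        "content": [{"type": "text/plain", "value": text}],
    }
```

Sending it is a POST of that JSON to SENDGRID_URL with an "Authorization: Bearer <API key>" header; SendGrid replies 202 when the message is accepted.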

If I click Run Once and then test the Webhook in SigNoz, I can see it was received

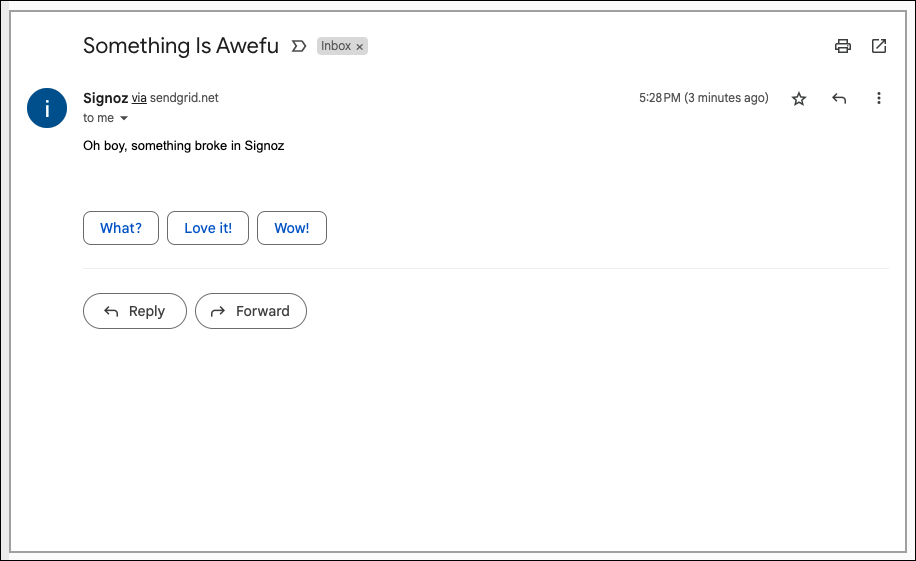

And I can see it ran and delivered an email.

Summary

In this post, we set up SigNoz on the production cluster and added a proper TLS Ingress with a domain name. We then set up a PagerDuty integration and experimented with webhooks via Zapier and Make.

There is still much more to do, but we now have a generally production-ready SigNoz. The NFS volumes are on a proper NAS, and I should be able to route OpenTelemetry traces internally (based on the Ingress, only port 443 will come in externally).

Running many systems in a hybrid or cross-cloud scenario would likely warrant the commercial hosted options. But then, that is the general idea: one can fully use the product in its open-source, self-hosted mode, but pay to add features like federated identity and a hosted PaaS endpoint.