Published: Apr 5, 2022 by Isaac Johnson

In our last post, we covered how to containerize our tests as part of a docker build. Today we will continue that idea to see how we can move the building of images off our local systems and into the cloud or a GitOps system. To start, we’ll explore this in Azure using Azure Container Registry (ACR).

Additionally, we’ll look at GitOps, this time via Flux, built into AKS as a preview feature, to automatically deploy the containers we are building and tagging in ACR.

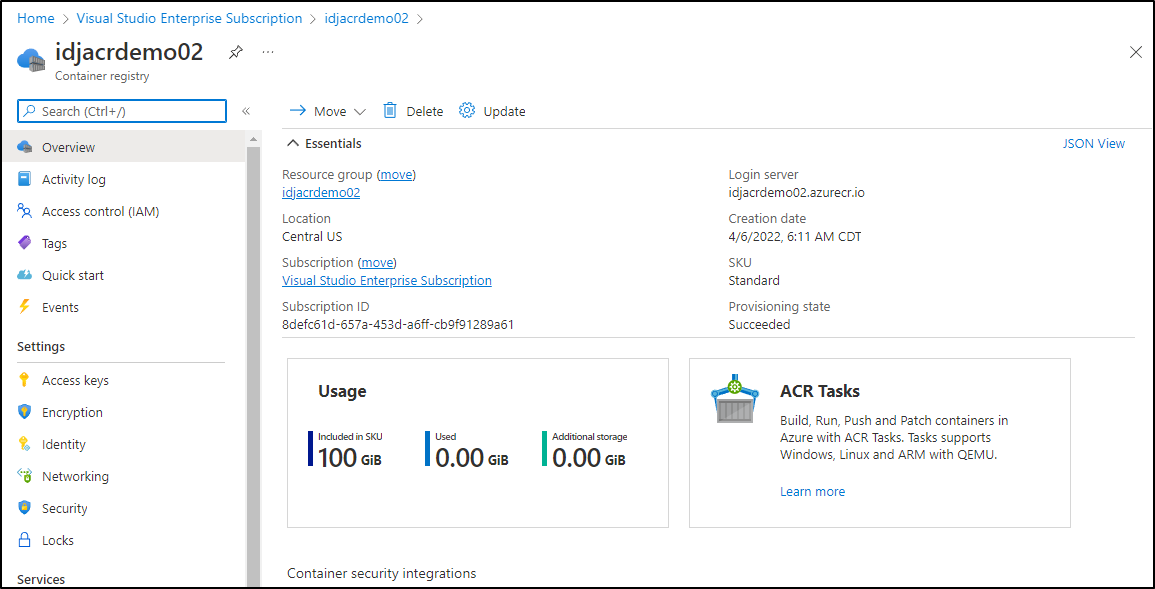

Create an ACR

Azure Container Registry (ACR) can be used for much more than just storing OCI compliant images.

First, let’s create a resource group

$ az group create --location centralus -n idjacrdemo02

{

"id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourceGroups/idjacrdemo02",

"location": "centralus",

"managedBy": null,

"name": "idjacrdemo02",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

I would like us to try a preview feature of ACR, which is logging to a Log Analytics workspace. Let’s also create a workspace

$ az monitor log-analytics workspace create -g idjacrdemo02 --workspace-name idjacrws

{

"createdDate": "Wed, 06 Apr 2022 11:12:36 GMT",

"customerId": "e2ab502d-265c-4130-a12b-8bd284342e6d",

"eTag": null,

"features": {

"clusterResourceId": null,

"disableLocalAuth": null,

"enableDataExport": null,

"enableLogAccessUsingOnlyResourcePermissions": true,

"immediatePurgeDataOn30Days": null,

"legacy": 0,

"searchVersion": 1

},

"forceCmkForQuery": null,

"id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourcegroups/idjacrdemo02/providers/microsoft.operationalinsights/workspaces/idjacrws",

"location": "centralus",

"modifiedDate": "Wed, 06 Apr 2022 11:12:37 GMT",

"name": "idjacrws",

"privateLinkScopedResources": null,

"provisioningState": "Succeeded",

"publicNetworkAccessForIngestion": "Enabled",

"publicNetworkAccessForQuery": "Enabled",

"resourceGroup": "idjacrdemo02",

"retentionInDays": 30,

"sku": {

"capacityReservationLevel": null,

"lastSkuUpdate": "Wed, 06 Apr 2022 11:12:36 GMT",

"name": "pergb2018"

},

"tags": null,

"type": "Microsoft.OperationalInsights/workspaces",

"workspaceCapping": {

"dailyQuotaGb": -1.0,

"dataIngestionStatus": "RespectQuota",

"quotaNextResetTime": "Wed, 06 Apr 2022 19:00:00 GMT"

}

}

Then we can create our ACR and point it to the workspace

$ az acr create -n idjacrdemo02 -g idjacrdemo02 --workspace idjacrws --admin-enabled true --sku Standard

Argument '--workspace' is in preview and under development. Reference and support levels: https://aka.ms/CLI_refstatus

{

"adminUserEnabled": true,

"anonymousPullEnabled": false,

"creationDate": "2022-04-06T11:11:06.021628+00:00",

"dataEndpointEnabled": false,

"dataEndpointHostNames": [],

"encryption": {

"keyVaultProperties": null,

"status": "disabled"

},

"id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourceGroups/idjacrdemo02/providers/Microsoft.ContainerRegistry/registries/idjacrdemo02",

"identity": null,

"location": "centralus",

"loginServer": "idjacrdemo02.azurecr.io",

"name": "idjacrdemo02",

"networkRuleBypassOptions": "AzureServices",

"networkRuleSet": null,

"policies": {

"exportPolicy": {

"status": "enabled"

},

"quarantinePolicy": {

"status": "disabled"

},

"retentionPolicy": {

"days": 7,

"lastUpdatedTime": "2022-04-06T11:13:47.500489+00:00",

"status": "disabled"

},

"trustPolicy": {

"status": "disabled",

"type": "Notary"

}

},

"privateEndpointConnections": [],

"provisioningState": "Succeeded",

"publicNetworkAccess": "Enabled",

"resourceGroup": "idjacrdemo02",

"sku": {

"name": "Standard",

"tier": "Standard"

},

"status": null,

"systemData": {

"createdAt": "2022-04-06T11:11:06.021628+00:00",

"createdBy": "Isaac.Johnson@mediware.com",

"createdByType": "User",

"lastModifiedAt": "2022-04-06T11:13:47.329755+00:00",

"lastModifiedBy": "Isaac.Johnson@mediware.com",

"lastModifiedByType": "User"

},

"tags": {},

"type": "Microsoft.ContainerRegistry/registries",

"zoneRedundancy": "Disabled"

}

With this made, we could just start building in ACR using the Azure CLI

If you recall our workspace from the last blog:

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ ls

Dockerfile node_modules package-lock.json package.json server.js tests

(and if you want to just catch up, the repo is public, just git clone https://github.com/idjohnson/dockerWithTests2.git)

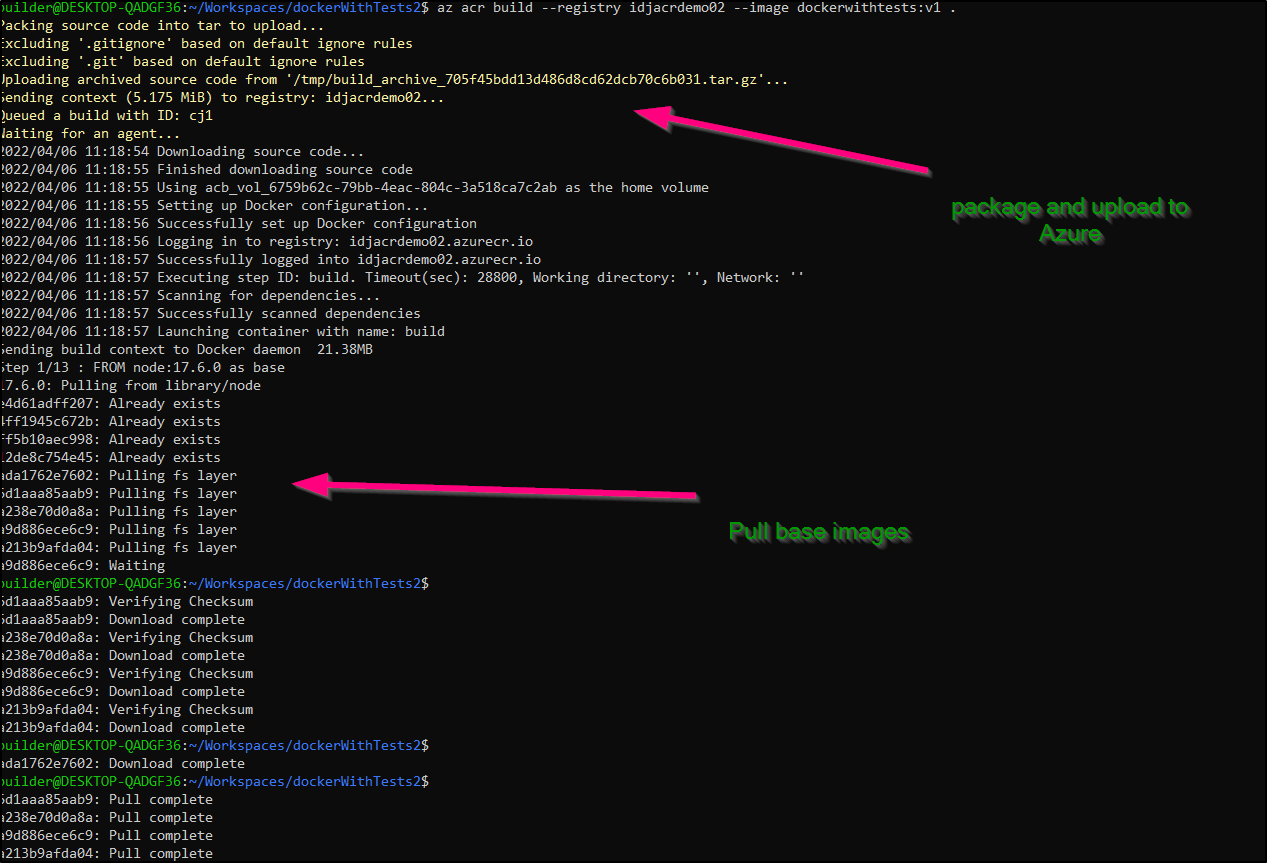

Building with Azure CLI

Just run the build command locally:

$ az acr build --registry idjacrdemo02 --image dockerwithtests:v1 .

Packing source code into tar to upload...

Excluding '.gitignore' based on default ignore rules

Excluding '.git' based on default ignore rules

Uploading archived source code from '/tmp/build_archive_705f45bdd13d486d8cd62dcb70c6b031.tar.gz'...

Sending context (5.175 MiB) to registry: idjacrdemo02...

Queued a build with ID: cj1

Waiting for an agent...

2022/04/06 11:18:54 Downloading source code...

2022/04/06 11:18:55 Finished downloading source code

2022/04/06 11:18:55 Using acb_vol_6759b62c-79bb-4eac-804c-3a518ca7c2ab as the home volume

2022/04/06 11:18:55 Setting up Docker configuration...

2022/04/06 11:18:56 Successfully set up Docker configuration

2022/04/06 11:18:56 Logging in to registry: idjacrdemo02.azurecr.io

2022/04/06 11:18:57 Successfully logged into idjacrdemo02.azurecr.io

2022/04/06 11:18:57 Executing step ID: build. Timeout(sec): 28800, Working directory: '', Network: ''

2022/04/06 11:18:57 Scanning for dependencies...

2022/04/06 11:18:57 Successfully scanned dependencies

2022/04/06 11:18:57 Launching container with name: build

Sending build context to Docker daemon 21.38MB

Step 1/13 : FROM node:17.6.0 as base

17.6.0: Pulling from library/node

e4d61adff207: Already exists

4ff1945c672b: Already exists

ff5b10aec998: Already exists

12de8c754e45: Already exists

ada1762e7602: Pulling fs layer

6d1aaa85aab9: Pulling fs layer

a238e70d0a8a: Pulling fs layer

a9d886ece6c9: Pulling fs layer

a213b9afda04: Pulling fs layer

a9d886ece6c9: Waiting

a213b9afda04: Waiting

6d1aaa85aab9: Verifying Checksum

6d1aaa85aab9: Download complete

a238e70d0a8a: Verifying Checksum

a238e70d0a8a: Download complete

a9d886ece6c9: Verifying Checksum

a9d886ece6c9: Download complete

a213b9afda04: Verifying Checksum

a213b9afda04: Download complete

ada1762e7602: Verifying Checksum

ada1762e7602: Download complete

ada1762e7602: Pull complete

6d1aaa85aab9: Pull complete

a238e70d0a8a: Pull complete

a9d886ece6c9: Pull complete

a213b9afda04: Pull complete

Digest: sha256:08e37ce0636ad9796900a180f2539f3110648e4f2c1b541bc0d4d3039e6b3251

Status: Downloaded newer image for node:17.6.0

---> 36fad710e29d

Step 2/13 : WORKDIR /code

---> Running in 234b0e7f20b9

Removing intermediate container 234b0e7f20b9

---> 9a0972c93165

Step 3/13 : COPY package.json package.json

---> b38febe7fe04

Step 4/13 : COPY package-lock.json package-lock.json

---> 1faaf1f6ab47

Step 5/13 : FROM base as test

---> 1faaf1f6ab47

Step 6/13 : RUN npm ci

---> Running in f53e7bfc49d3

added 209 packages, and audited 210 packages in 5s

27 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

npm notice

npm notice New minor version of npm available! 8.5.1 -> 8.6.0

npm notice Changelog: <https://github.com/npm/cli/releases/tag/v8.6.0>

npm notice Run `npm install -g npm@8.6.0` to update!

npm notice

Removing intermediate container f53e7bfc49d3

---> 643376414f6f

Step 7/13 : COPY . .

---> ea5694f0498e

Step 8/13 : RUN npm run test

---> Running in fc23f763b595

> nodewithtests@1.0.0 test

> mocha ./**/*.js

Array

#indexOf()

✔ should return -1 when the value is missing

1 passing (11ms)

Removing intermediate container fc23f763b595

---> a2b05fa2d1f6

Step 9/13 : FROM base as prod

---> 1faaf1f6ab47

Step 10/13 : ENV NODE_ENV=production

---> Running in 9d3689b4d014

Removing intermediate container 9d3689b4d014

---> 426ba37e640e

Step 11/13 : RUN npm ci --production

---> Running in fe574e6b69ca

added 132 packages, and audited 133 packages in 3s

8 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

npm notice

npm notice New minor version of npm available! 8.5.1 -> 8.6.0

npm notice Changelog: <https://github.com/npm/cli/releases/tag/v8.6.0>

npm notice Run `npm install -g npm@8.6.0` to update!

npm notice

Removing intermediate container fe574e6b69ca

---> 338a31360ce9

Step 12/13 : COPY . .

---> 0e4c26442e62

Step 13/13 : CMD [ "node", "server.js" ]

---> Running in 3f8a5698b1ee

Removing intermediate container 3f8a5698b1ee

---> 9ed84ef34c60

Successfully built 9ed84ef34c60

Successfully tagged idjacrdemo02.azurecr.io/dockerwithtests:v1

2022/04/06 11:19:41 Successfully executed container: build

2022/04/06 11:19:41 Executing step ID: push. Timeout(sec): 3600, Working directory: '', Network: ''

2022/04/06 11:19:41 Pushing image: idjacrdemo02.azurecr.io/dockerwithtests:v1, attempt 1

The push refers to repository [idjacrdemo02.azurecr.io/dockerwithtests]

0587f5174c9d: Preparing

00b8f07d9446: Preparing

20af7065848e: Preparing

cb8d14911f50: Preparing

3210d9f31b35: Preparing

0d26169e1f03: Preparing

ee94197d9e68: Preparing

d7650b5a3403: Preparing

070a1f23eb0a: Preparing

316e3949bffa: Preparing

e3f84a8cee1f: Preparing

48144a6f44ae: Preparing

26d5108b2cba: Preparing

89fda00479fc: Preparing

0d26169e1f03: Waiting

ee94197d9e68: Waiting

d7650b5a3403: Waiting

070a1f23eb0a: Waiting

316e3949bffa: Waiting

e3f84a8cee1f: Waiting

48144a6f44ae: Waiting

26d5108b2cba: Waiting

89fda00479fc: Waiting

cb8d14911f50: Pushed

20af7065848e: Pushed

3210d9f31b35: Pushed

0d26169e1f03: Pushed

00b8f07d9446: Pushed

0587f5174c9d: Pushed

ee94197d9e68: Pushed

070a1f23eb0a: Pushed

d7650b5a3403: Pushed

26d5108b2cba: Pushed

48144a6f44ae: Pushed

316e3949bffa: Pushed

89fda00479fc: Pushed

e3f84a8cee1f: Pushed

v1: digest: sha256:483c9370db7036f199efdb7c17c7e66c67cdfaa0e9bd8d15e17708539ce102ac size: 3259

2022/04/06 11:20:19 Successfully pushed image: idjacrdemo02.azurecr.io/dockerwithtests:v1

2022/04/06 11:20:19 Step ID: build marked as successful (elapsed time in seconds: 44.207690)

2022/04/06 11:20:19 Populating digests for step ID: build...

2022/04/06 11:20:20 Successfully populated digests for step ID: build

2022/04/06 11:20:20 Step ID: push marked as successful (elapsed time in seconds: 37.840104)

2022/04/06 11:20:20 The following dependencies were found:

2022/04/06 11:20:20

- image:

registry: idjacrdemo02.azurecr.io

repository: dockerwithtests

tag: v1

digest: sha256:483c9370db7036f199efdb7c17c7e66c67cdfaa0e9bd8d15e17708539ce102ac

runtime-dependency:

registry: registry.hub.docker.com

repository: library/node

tag: 17.6.0

digest: sha256:08e37ce0636ad9796900a180f2539f3110648e4f2c1b541bc0d4d3039e6b3251

git: {}

Run ID: cj1 was successful after 1m27s

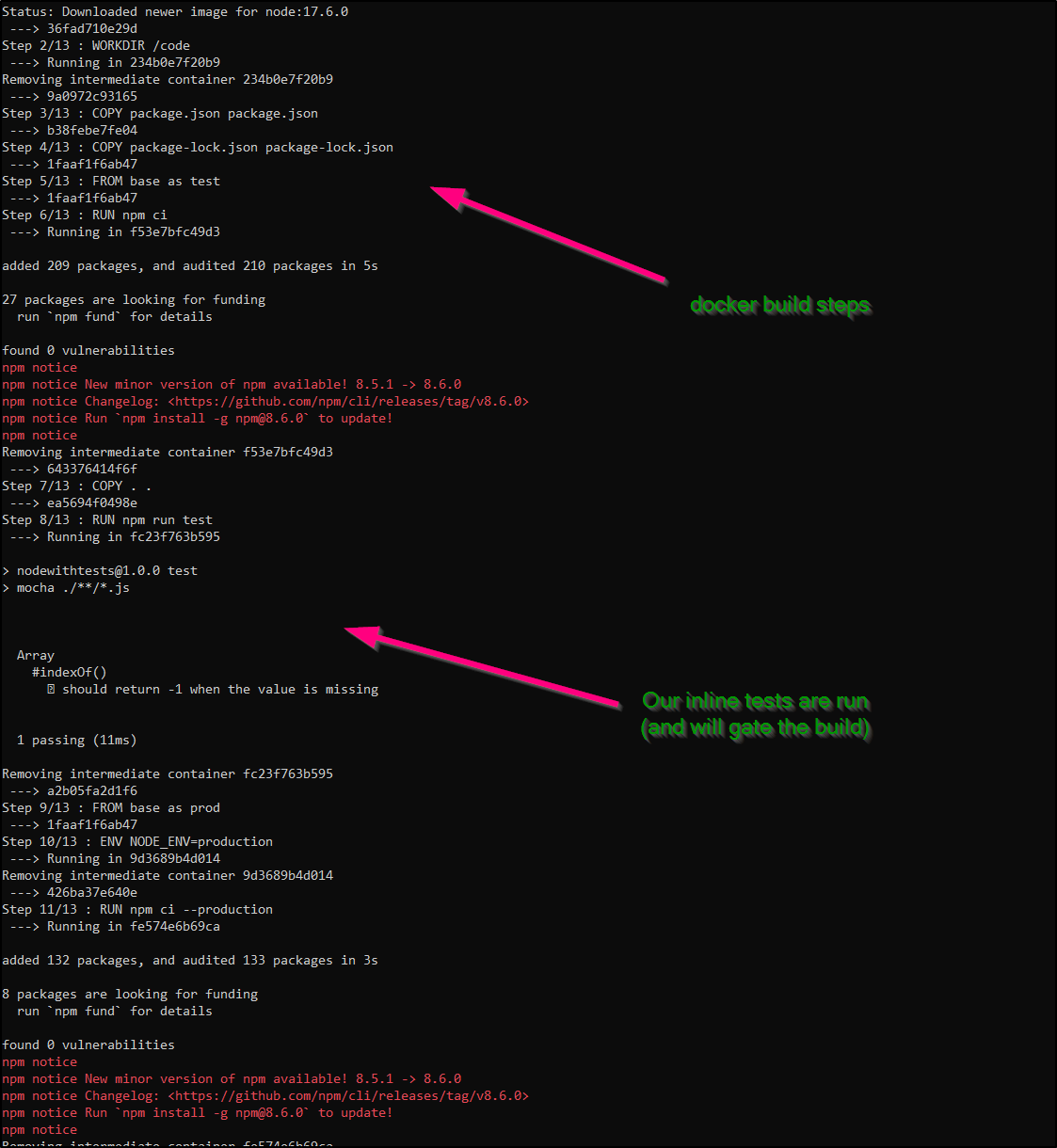

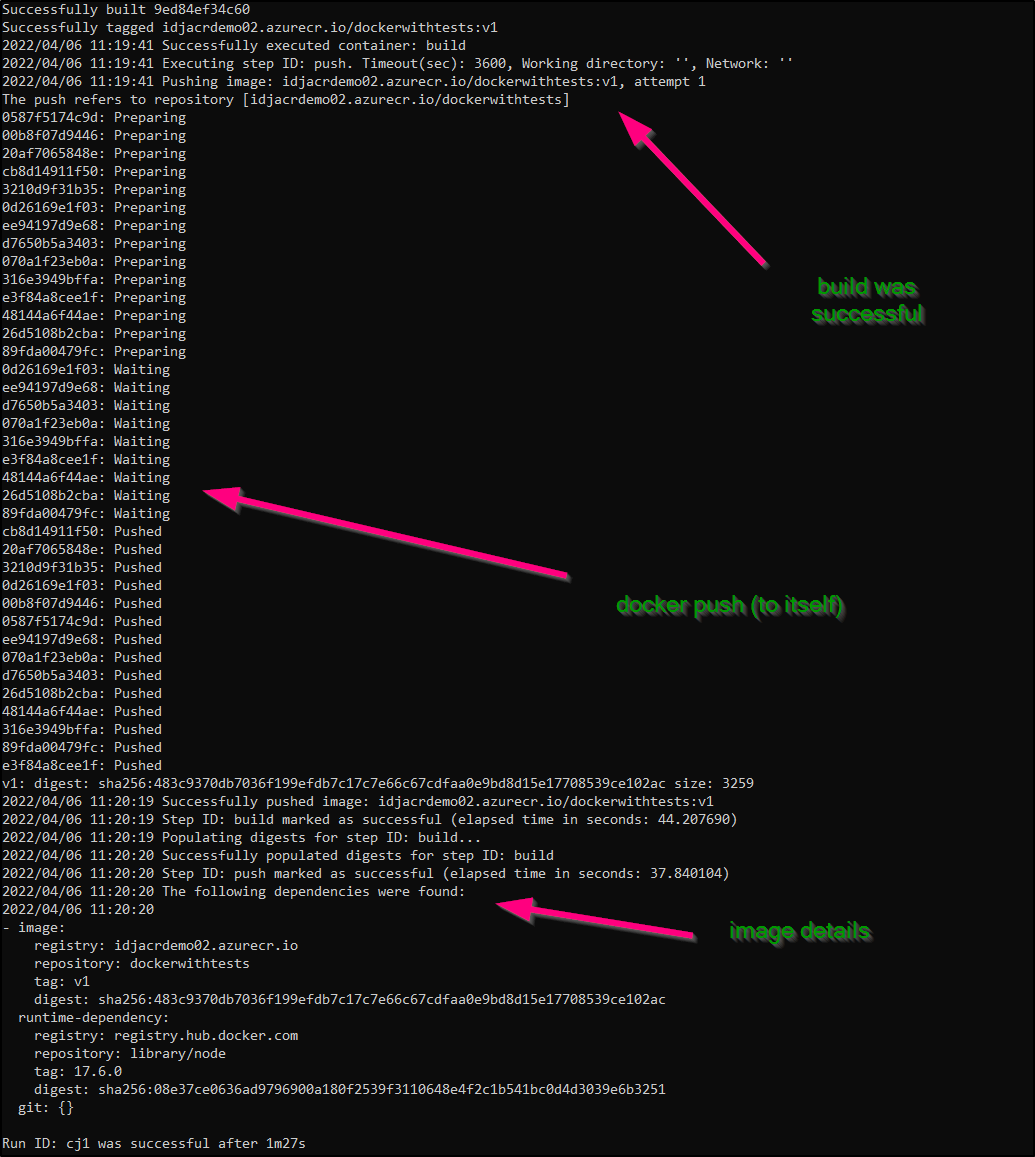

There is a bit to unpack in that output, so let’s break it down.

First, we upload our repo and ACR pulls the base images.

ACR then builds the image and runs the tests.

If successful, it pushes the container to ACR (itself) and gives us the image details.

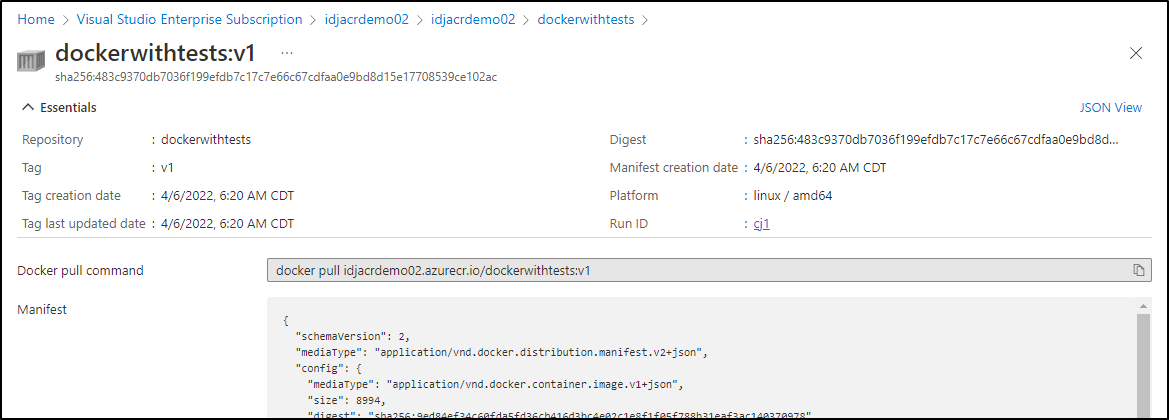

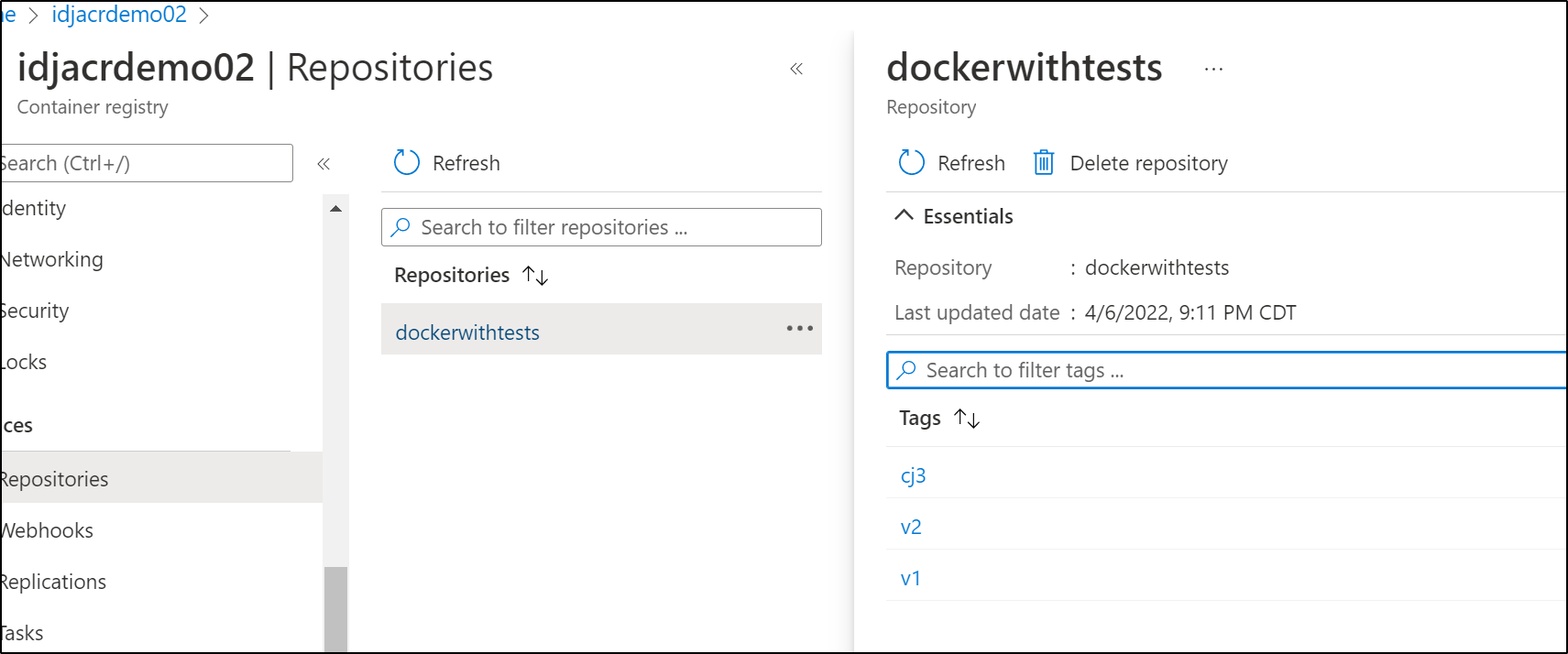

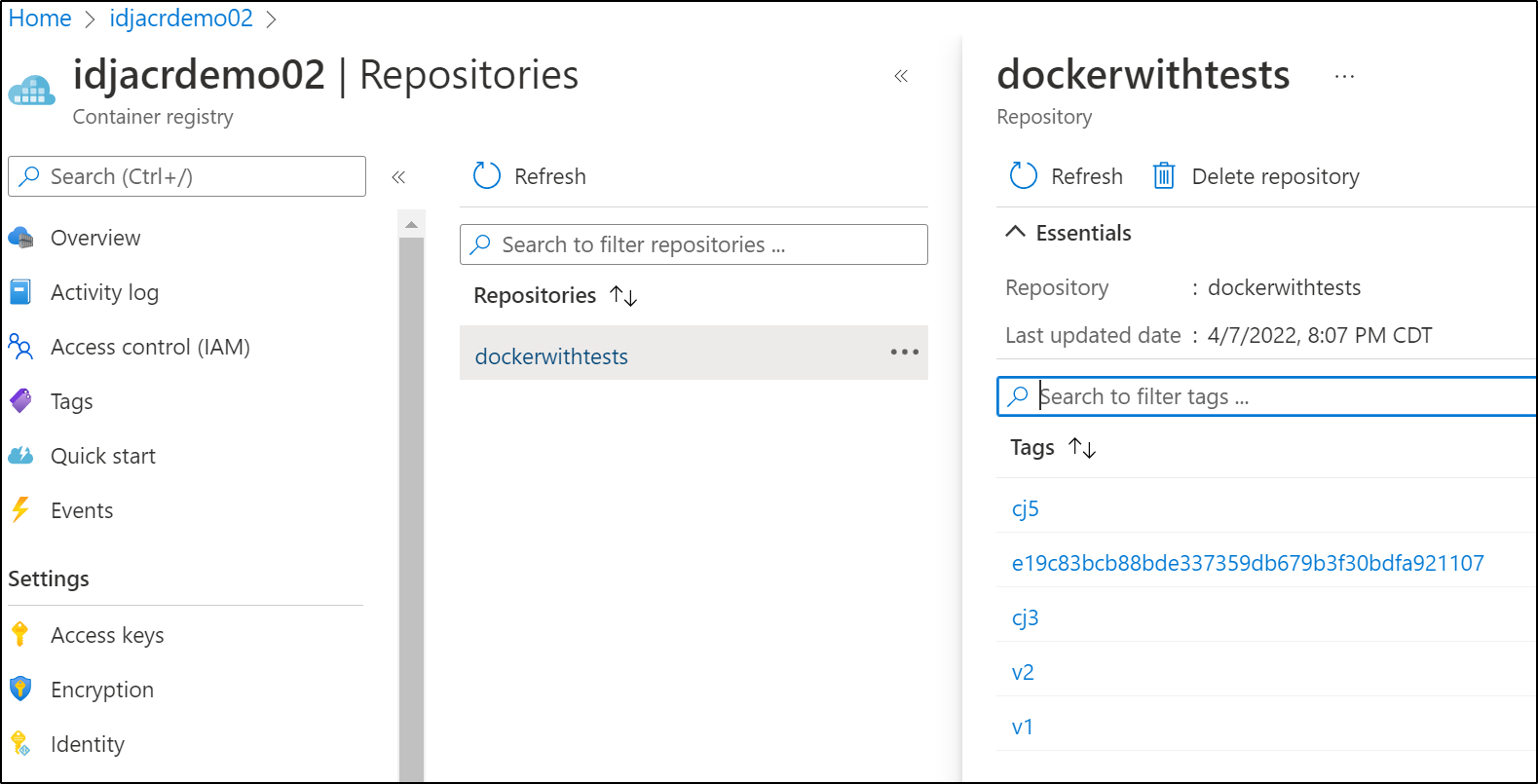

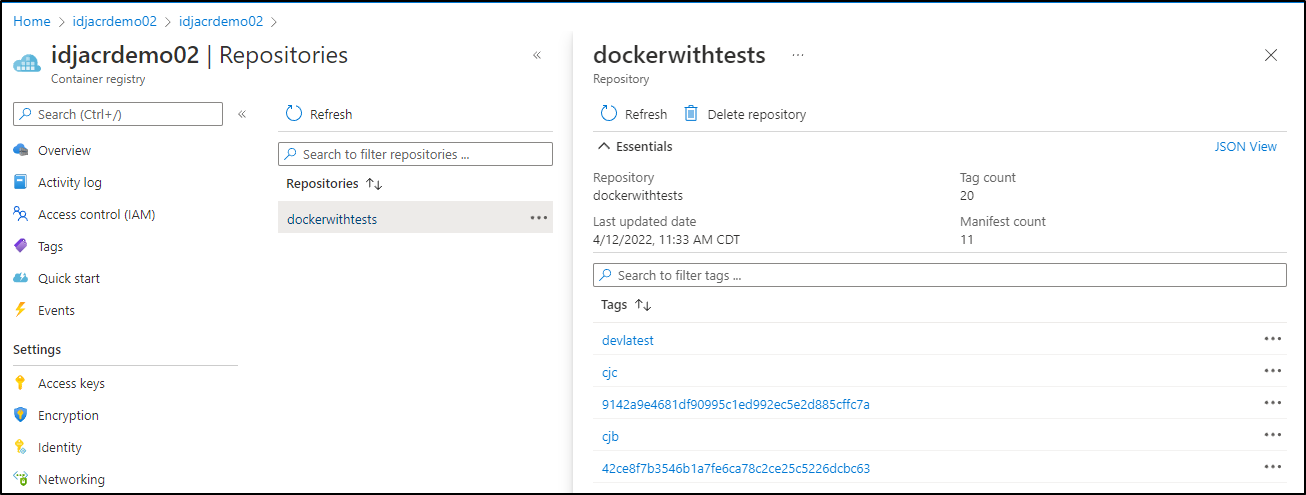

And we can see that reflected in ACR via the portal

We can also list the images using the command line

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ az acr repository show -n idjacrdemo02 --repository dockerwithtests

{

"changeableAttributes": {

"deleteEnabled": true,

"listEnabled": true,

"readEnabled": true,

"teleportEnabled": false,

"writeEnabled": true

},

"createdTime": "2022-04-06T11:20:19.1837284Z",

"imageName": "dockerwithtests",

"lastUpdateTime": "2022-04-06T11:20:19.2494238Z",

"manifestCount": 1,

"registry": "idjacrdemo02.azurecr.io",

"tagCount": 1

}

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ az acr repository show-tags -n idjacrdemo02 --repository dockerwithtests

[

"v1"

]

Hookdeck

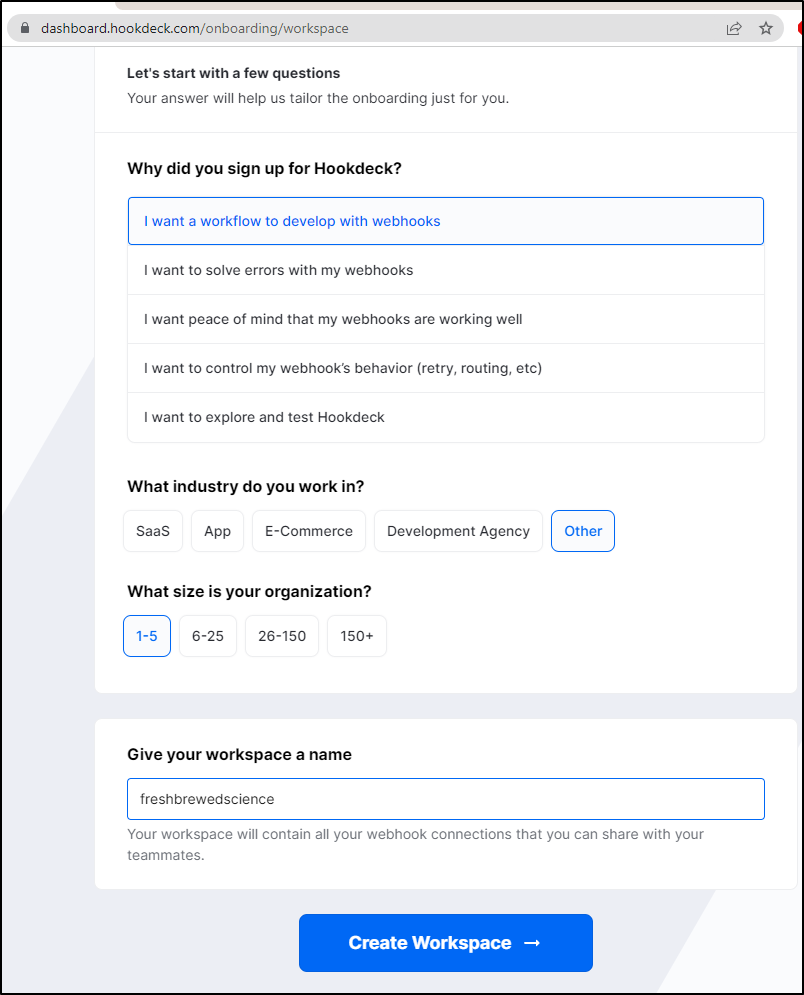

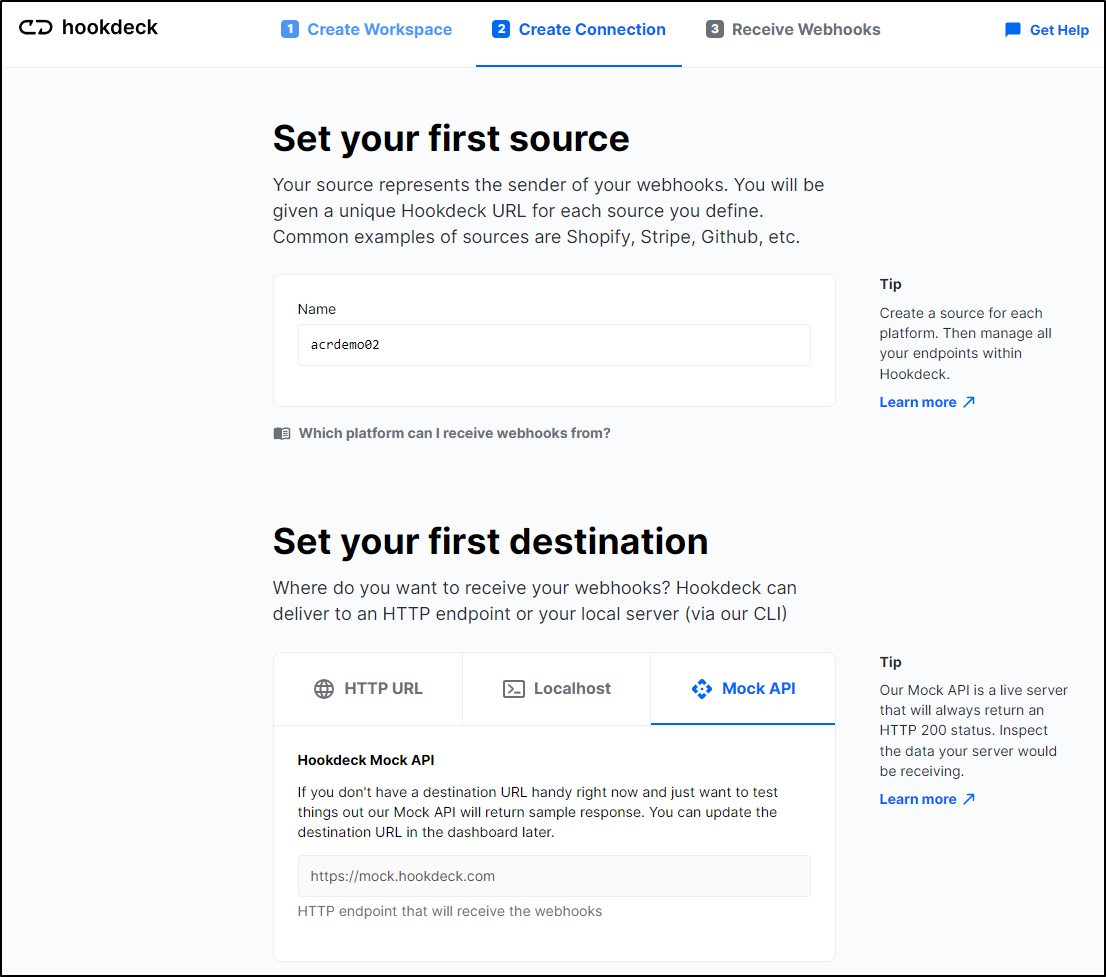

I’m going to set up Hookdeck to do some webhook testing.

We can create an account at https://dashboard.hookdeck.com/signup

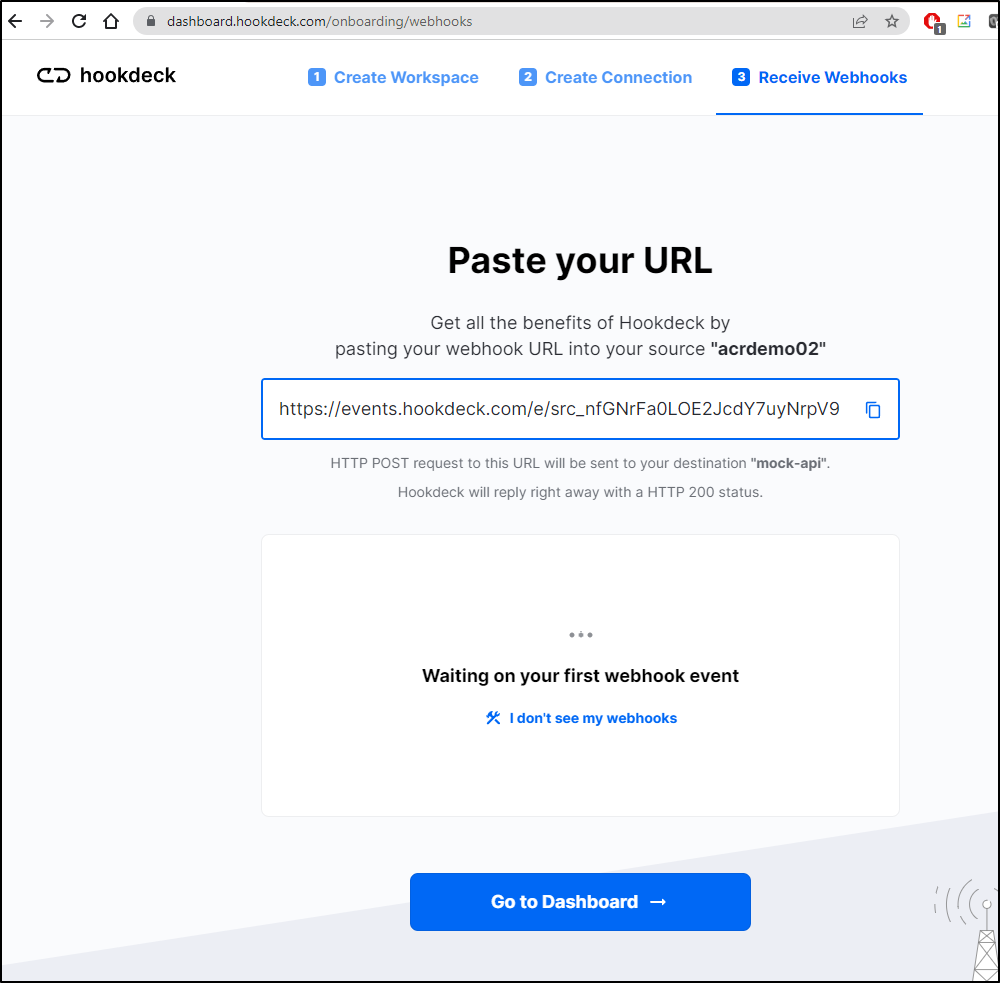

I’ll use Mock API for now and give a source name

Then it will give me the Webhook URL I can use (https://events.hookdeck.com/e/src_nfGNrFa0LOE2JcdY7uyNrpV9)

Clicking done will show the dashboard:

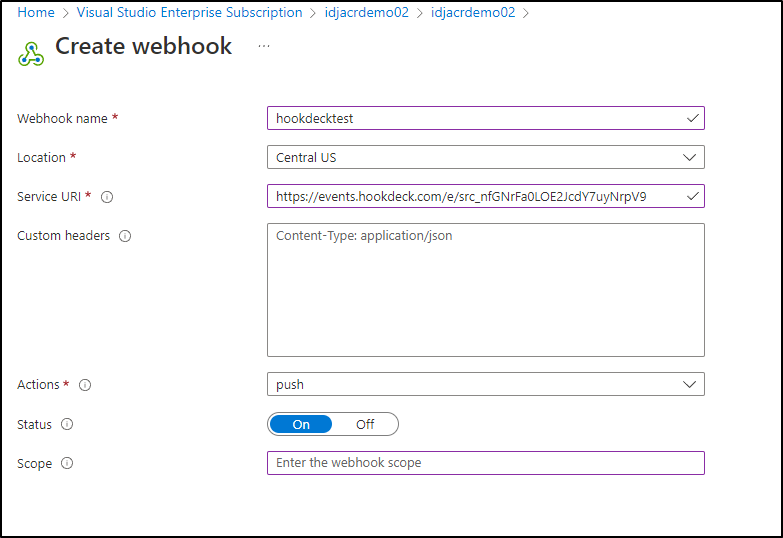

Next, I’m going to enable a webhook on a repository action on my ACR to use that endpoint. Click “+ Add” from Services/Webhooks in the portal and add the webhook

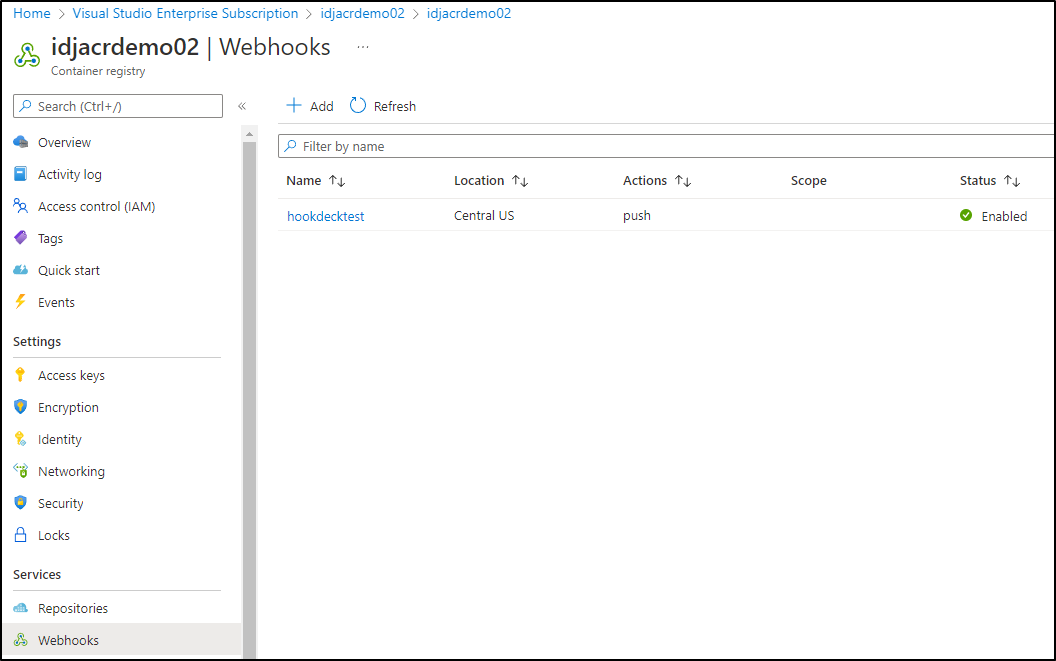

We can see it was created

Now let’s build a new tag.

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ az acr build --registry idjacrdemo02 --image dockerwithtests:v2 .

Packing source code into tar to upload...

Excluding '.gitignore' based on default ignore rules

Excluding '.git' based on default ignore rules

Uploading archived source code from '/tmp/build_archive_981202325a214146b7ef2e1eeabef0e6.tar.gz'...

Sending context (5.175 MiB) to registry: idjacrdemo02...

Queued a build with ID: cj2

Waiting for an agent...

2022/04/06 11:36:42 Downloading source code...

2022/04/06 11:36:44 Finished downloading source code

2022/04/06 11:36:44 Using acb_vol_573ed97a-c15c-44fa-b74a-30b740029a57 as the home volume

2022/04/06 11:36:44 Setting up Docker configuration...

2022/04/06 11:36:45 Successfully set up Docker configuration

2022/04/06 11:36:45 Logging in to registry: idjacrdemo02.azurecr.io

2022/04/06 11:36:46 Successfully logged into idjacrdemo02.azurecr.io

2022/04/06 11:36:46 Executing step ID: build. Timeout(sec): 28800, Working directory: '', Network: ''

2022/04/06 11:36:46 Scanning for dependencies...

2022/04/06 11:36:46 Successfully scanned dependencies

2022/04/06 11:36:46 Launching container with name: build

Sending build context to Docker daemon 21.38MB

Step 1/13 : FROM node:17.6.0 as base

17.6.0: Pulling from library/node

e4d61adff207: Already exists

4ff1945c672b: Already exists

ff5b10aec998: Already exists

12de8c754e45: Already exists

ada1762e7602: Pulling fs layer

6d1aaa85aab9: Pulling fs layer

a238e70d0a8a: Pulling fs layer

a9d886ece6c9: Pulling fs layer

a213b9afda04: Pulling fs layer

a9d886ece6c9: Waiting

a213b9afda04: Waiting

6d1aaa85aab9: Verifying Checksum

6d1aaa85aab9: Download complete

a9d886ece6c9: Verifying Checksum

a9d886ece6c9: Download complete

a238e70d0a8a: Verifying Checksum

a238e70d0a8a: Download complete

a213b9afda04: Verifying Checksum

a213b9afda04: Download complete

ada1762e7602: Verifying Checksum

ada1762e7602: Download complete

ada1762e7602: Pull complete

6d1aaa85aab9: Pull complete

a238e70d0a8a: Pull complete

a9d886ece6c9: Pull complete

a213b9afda04: Pull complete

Digest: sha256:08e37ce0636ad9796900a180f2539f3110648e4f2c1b541bc0d4d3039e6b3251

Status: Downloaded newer image for node:17.6.0

---> 36fad710e29d

Step 2/13 : WORKDIR /code

---> Running in 89beefdd8a48

Removing intermediate container 89beefdd8a48

---> f85ae95fd37b

Step 3/13 : COPY package.json package.json

---> 35880f254bc9

Step 4/13 : COPY package-lock.json package-lock.json

---> 05d6e38e0514

Step 5/13 : FROM base as test

---> 05d6e38e0514

Step 6/13 : RUN npm ci

---> Running in 6441db13d605

added 209 packages, and audited 210 packages in 5s

27 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

npm notice

npm notice New minor version of npm available! 8.5.1 -> 8.6.0

npm notice Changelog: <https://github.com/npm/cli/releases/tag/v8.6.0>

npm notice Run `npm install -g npm@8.6.0` to update!

npm notice

Removing intermediate container 6441db13d605

---> 10a2d3f802d3

Step 7/13 : COPY . .

---> 15c8ca566443

Step 8/13 : RUN npm run test

---> Running in d7b000df4feb

> nodewithtests@1.0.0 test

> mocha ./**/*.js

Array

#indexOf()

✔ should return -1 when the value is missing

1 passing (6ms)

Removing intermediate container d7b000df4feb

---> 62eb24478ec1

Step 9/13 : FROM base as prod

---> 05d6e38e0514

Step 10/13 : ENV NODE_ENV=production

---> Running in 22c48708ea0e

Removing intermediate container 22c48708ea0e

---> c8f609b723a1

Step 11/13 : RUN npm ci --production

---> Running in 3dce3590a07d

added 132 packages, and audited 133 packages in 3s

8 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

npm notice

npm notice New minor version of npm available! 8.5.1 -> 8.6.0

npm notice Changelog: <https://github.com/npm/cli/releases/tag/v8.6.0>

npm notice Run `npm install -g npm@8.6.0` to update!

npm notice

Removing intermediate container 3dce3590a07d

---> bbf4e04ac702

Step 12/13 : COPY . .

---> 3258675446a4

Step 13/13 : CMD [ "node", "server.js" ]

---> Running in e90c99367756

Removing intermediate container e90c99367756

---> b37d7953d010

Successfully built b37d7953d010

Successfully tagged idjacrdemo02.azurecr.io/dockerwithtests:v2

2022/04/06 11:37:32 Successfully executed container: build

2022/04/06 11:37:32 Executing step ID: push. Timeout(sec): 3600, Working directory: '', Network: ''

2022/04/06 11:37:32 Pushing image: idjacrdemo02.azurecr.io/dockerwithtests:v2, attempt 1

The push refers to repository [idjacrdemo02.azurecr.io/dockerwithtests]

1a8392fc1475: Preparing

df84361b92e8: Preparing

bf0572ef523b: Preparing

6defdd362e80: Preparing

feb4b72dcc5b: Preparing

0d26169e1f03: Preparing

ee94197d9e68: Preparing

d7650b5a3403: Preparing

070a1f23eb0a: Preparing

316e3949bffa: Preparing

e3f84a8cee1f: Preparing

48144a6f44ae: Preparing

26d5108b2cba: Preparing

89fda00479fc: Preparing

0d26169e1f03: Waiting

ee94197d9e68: Waiting

d7650b5a3403: Waiting

070a1f23eb0a: Waiting

316e3949bffa: Waiting

e3f84a8cee1f: Waiting

48144a6f44ae: Waiting

26d5108b2cba: Waiting

89fda00479fc: Waiting

6defdd362e80: Pushed

feb4b72dcc5b: Pushed

bf0572ef523b: Pushed

ee94197d9e68: Layer already exists

0d26169e1f03: Layer already exists

d7650b5a3403: Layer already exists

070a1f23eb0a: Layer already exists

316e3949bffa: Layer already exists

48144a6f44ae: Layer already exists

e3f84a8cee1f: Layer already exists

26d5108b2cba: Layer already exists

89fda00479fc: Layer already exists

1a8392fc1475: Pushed

df84361b92e8: Pushed

v2: digest: sha256:c814af787e44cdacefc58b3ce2cd93e97e4b54fdd6a39e29e41813f4e36e9077 size: 3259

2022/04/06 11:37:36 Successfully pushed image: idjacrdemo02.azurecr.io/dockerwithtests:v2

2022/04/06 11:37:36 Step ID: build marked as successful (elapsed time in seconds: 46.362353)

2022/04/06 11:37:36 Populating digests for step ID: build...

2022/04/06 11:37:37 Successfully populated digests for step ID: build

2022/04/06 11:37:37 Step ID: push marked as successful (elapsed time in seconds: 3.294926)

2022/04/06 11:37:37 The following dependencies were found:

2022/04/06 11:37:37

- image:

registry: idjacrdemo02.azurecr.io

repository: dockerwithtests

tag: v2

digest: sha256:c814af787e44cdacefc58b3ce2cd93e97e4b54fdd6a39e29e41813f4e36e9077

runtime-dependency:

registry: registry.hub.docker.com

repository: library/node

tag: 17.6.0

digest: sha256:08e37ce0636ad9796900a180f2539f3110648e4f2c1b541bc0d4d3039e6b3251

git: {}

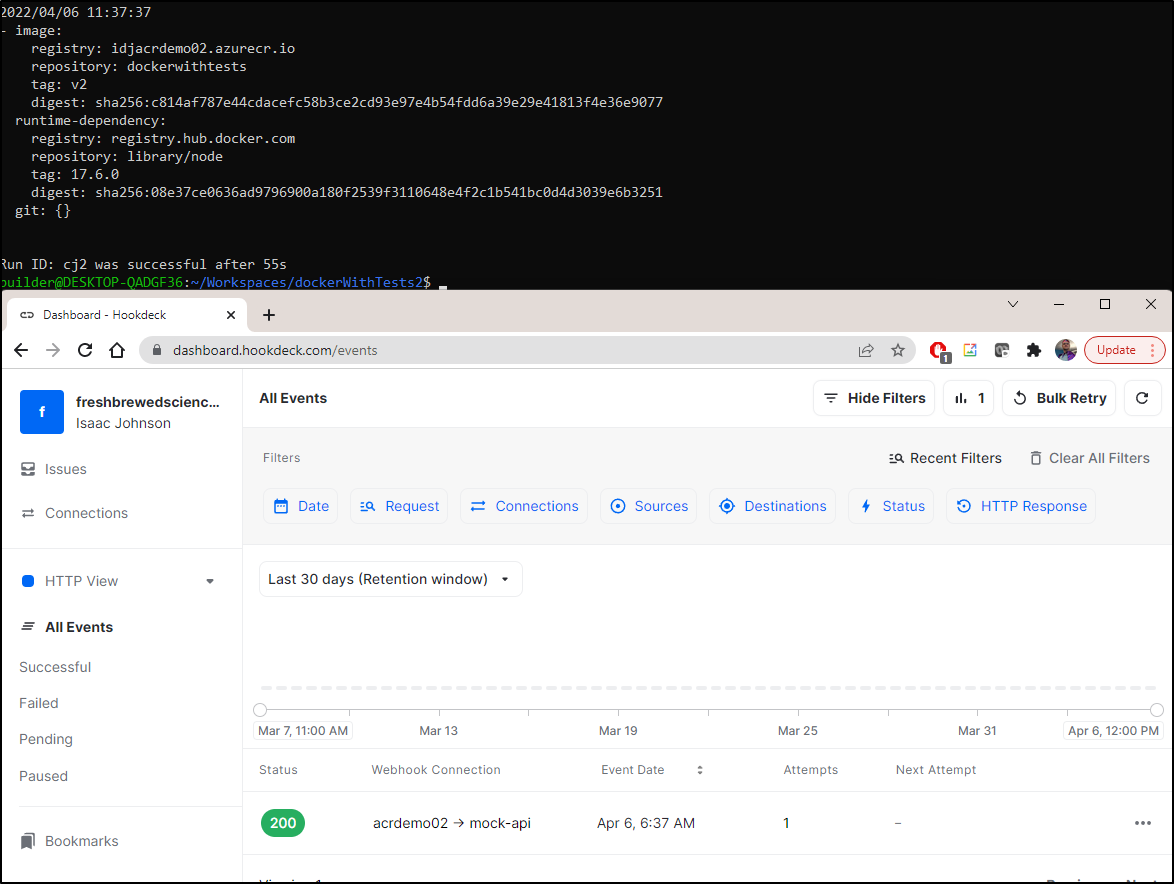

Run ID: cj2 was successful after 55s

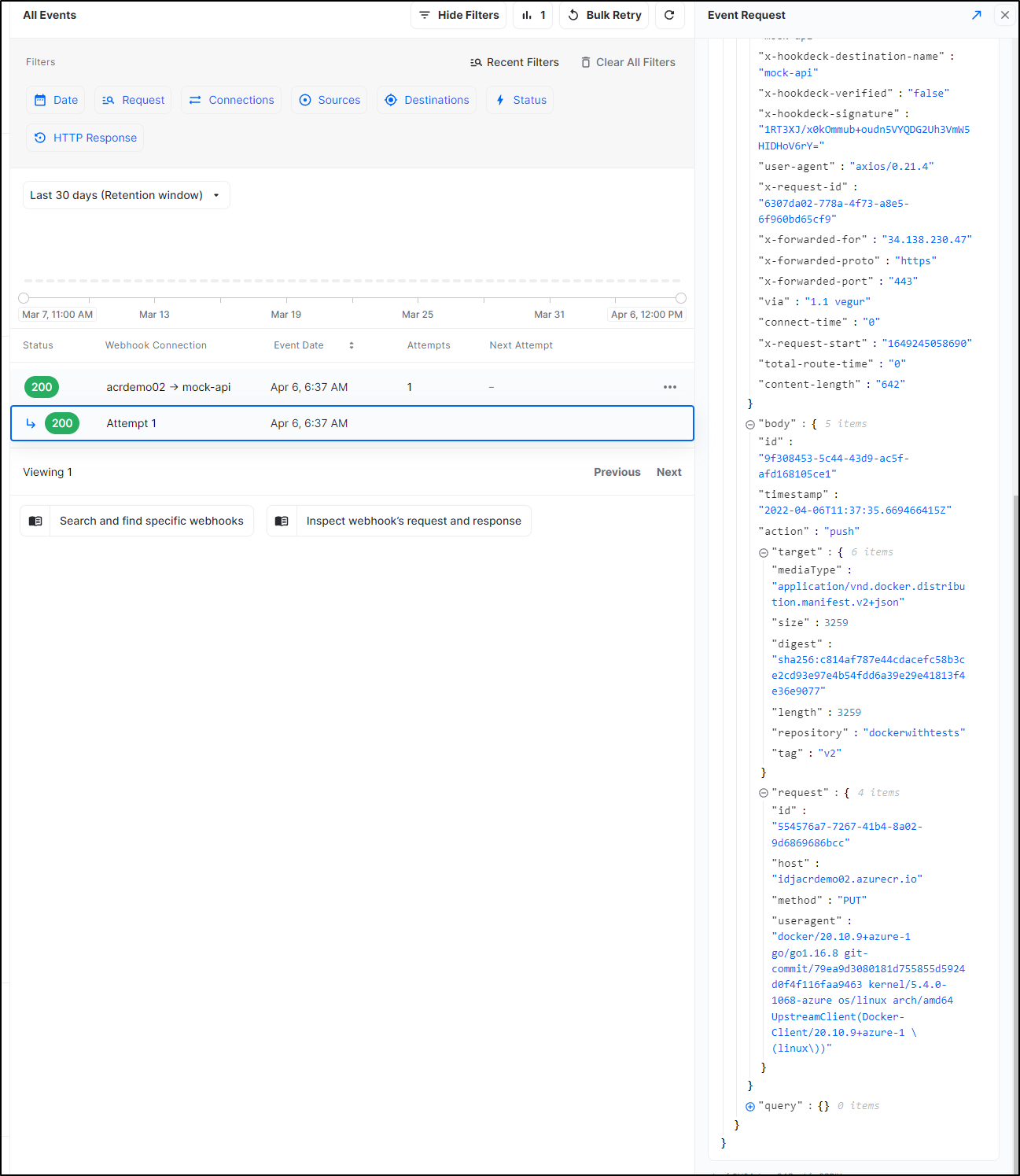

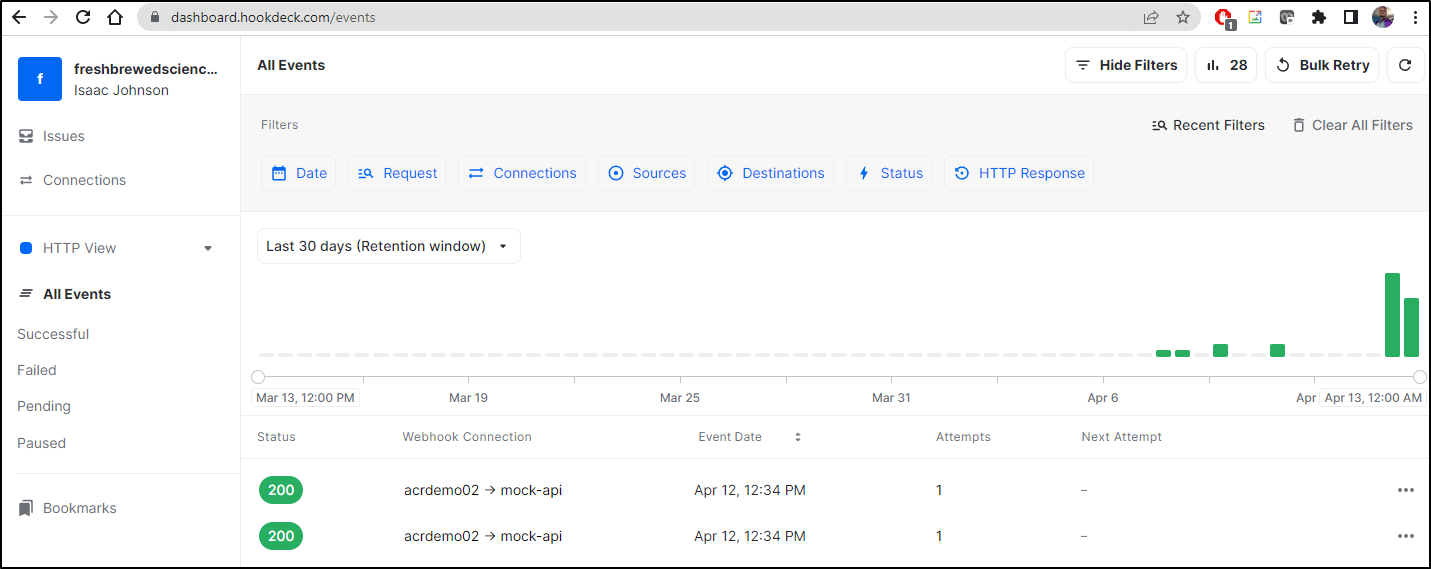

We see it triggered Hookdeck

The details tell us all we need to know:

Here is our body JSON

{

"id": "9f308453-5c44-43d9-ac5f-afd168105ce1",

"timestamp": "2022-04-06T11:37:35.669466415Z",

"action": "push",

"target": {

"mediaType": "application/vnd.docker.distribution.manifest.v2+json",

"size": 3259,

"digest": "sha256:c814af787e44cdacefc58b3ce2cd93e97e4b54fdd6a39e29e41813f4e36e9077",

"length": 3259,

"repository": "dockerwithtests",

"tag": "v2"

},

"request": {

"id": "554576a7-7267-41b4-8a02-9d6869686bcc",

"host": "idjacrdemo02.azurecr.io",

"method": "PUT",

"useragent": "docker/20.10.9+azure-1 go/go1.16.8 git-commit/79ea9d3080181d755855d5924d0f4f116faa9463 kernel/5.4.0-1068-azure os/linux arch/amd64 UpstreamClient(Docker-Client/20.10.9+azure-1 \\(linux\\))"

}

}
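As a rough illustration of how a receiver might use this body, the following Python sketch pulls the pushed image reference out of a push event. It assumes only the fields visible in the payload above (`action`, `target.repository`, `target.tag`, `request.host`); the function name `image_ref_from_push_event` is hypothetical and is not part of any Azure or Hookdeck SDK.

```python
import json
from typing import Optional


def image_ref_from_push_event(body: str) -> Optional[str]:
    """Return 'host/repository:tag' for a push event, else None.

    Hypothetical helper: it relies only on fields seen in the sample
    payload from this post, not on a documented schema guarantee.
    """
    event = json.loads(body)
    if event.get("action") != "push":
        return None  # ignore deletes and any other actions
    target = event.get("target", {})
    host = event.get("request", {}).get("host")
    repository = target.get("repository")
    tag = target.get("tag")
    if not (host and repository and tag):
        return None  # e.g. a push by digest without a tag
    return f"{host}/{repository}:{tag}"


# Trimmed copy of the body shown above
sample = json.dumps({
    "action": "push",
    "target": {"repository": "dockerwithtests", "tag": "v2"},
    "request": {"host": "idjacrdemo02.azurecr.io", "method": "PUT"},
})

print(image_ref_from_push_event(sample))
# prints idjacrdemo02.azurecr.io/dockerwithtests:v2
```

Returning `None` for anything that is not a complete, tagged push keeps the caller simple: it can skip the event without having to inspect the payload itself.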

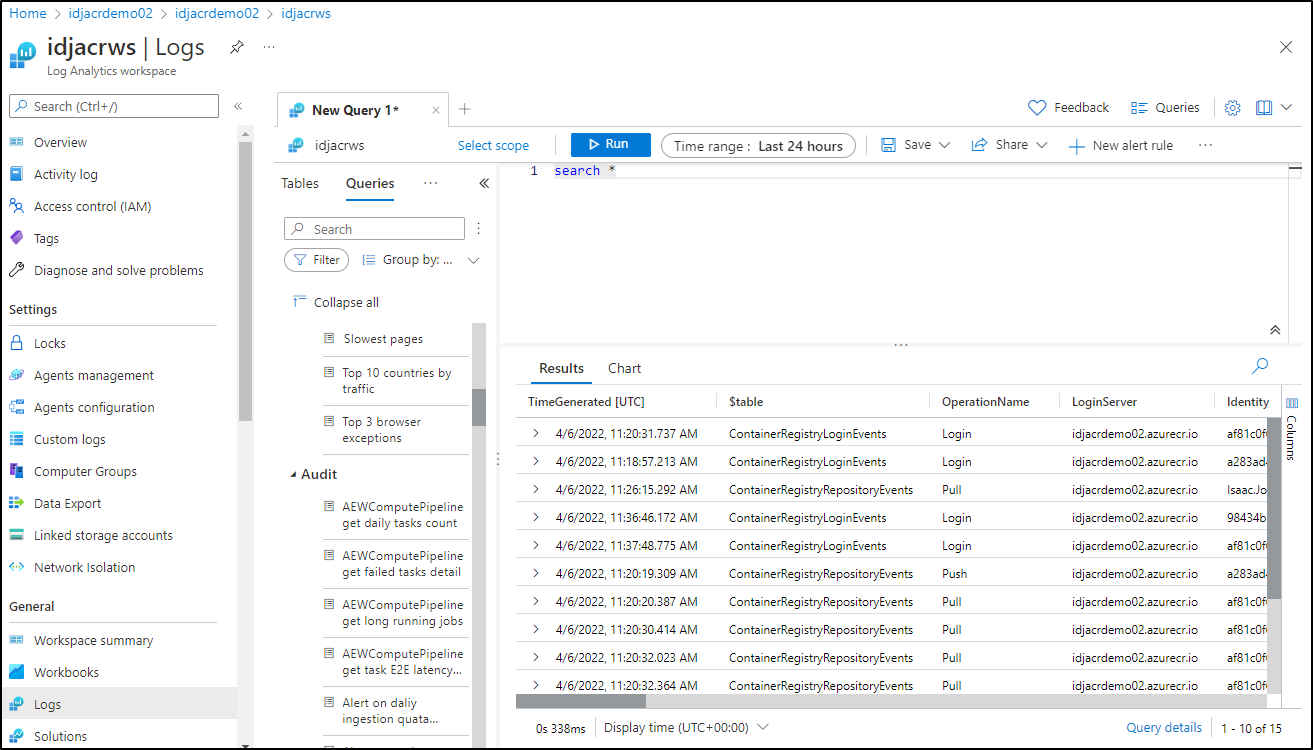

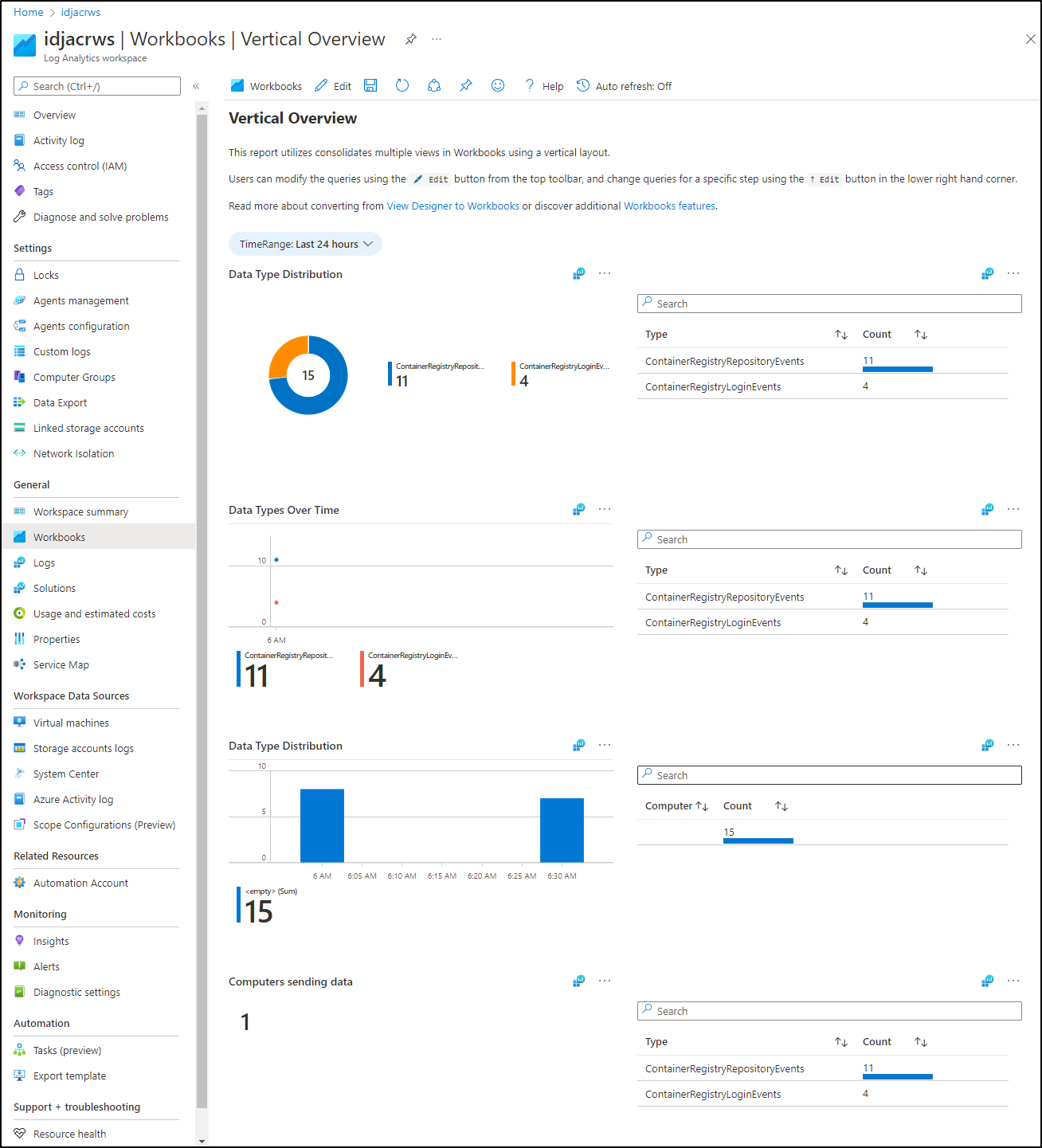

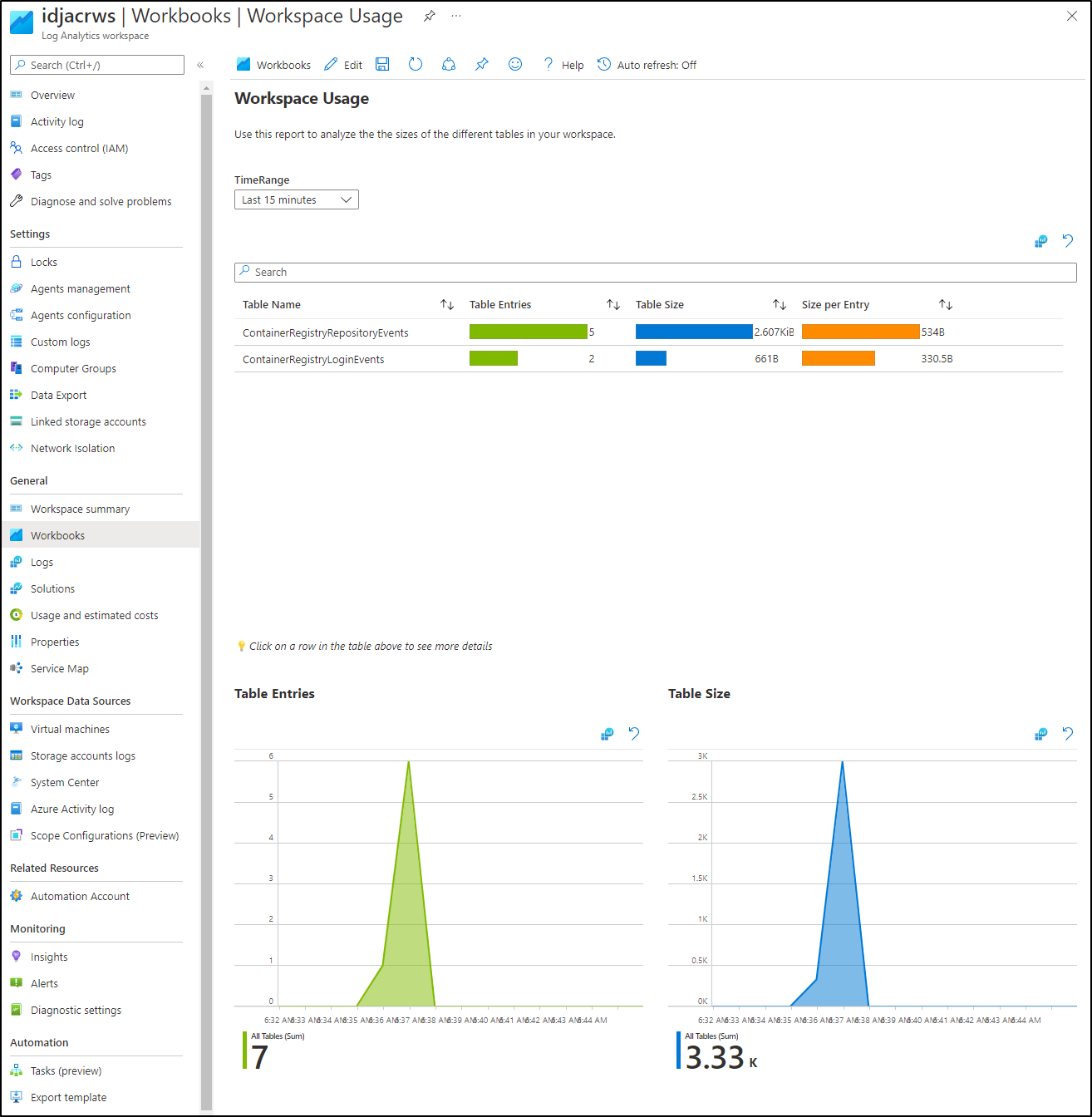

I should also point out that we are indeed getting logs and events in our Log Analytics workspace,

which we can use to get all sorts of details, such as data types over time

or workspace usage.

So now the question is: knowing that we can have ACR trigger webhooks, and knowing that we could enable GitOps in AKS or GKE just as easily, what might be a scalable pattern for automated deployments?

If our ACR is really in charge of the build and tests (CI), what performs the CD?

ACR Tasks

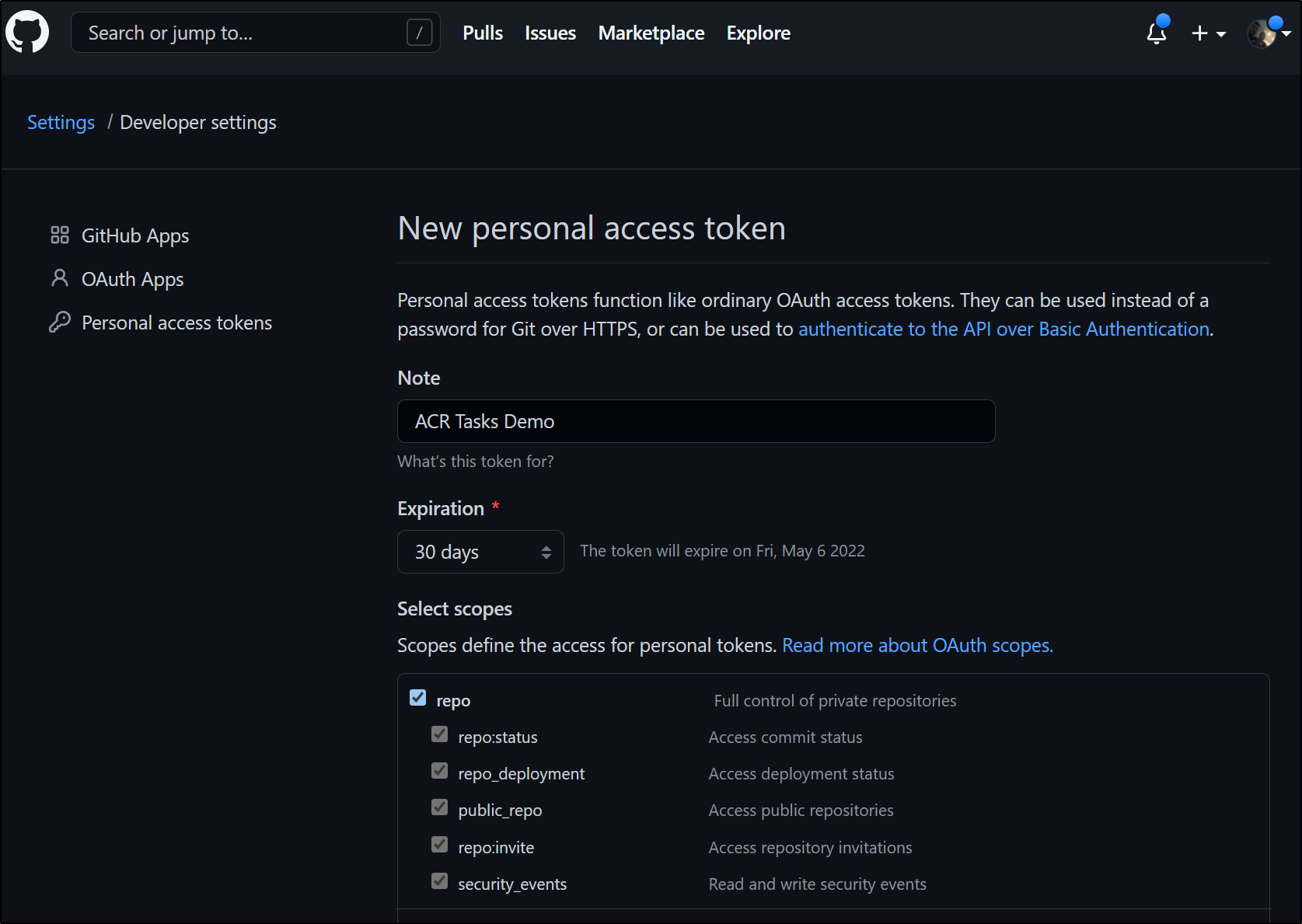

First, create a GitHub token at https://github.com/settings/tokens/new

Next we need to create our task definition file. Per the docs, we can build, tag, test, and push. However, for this simple example, we will just implement build, tag, and push.

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ vi taskmulti.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ cat taskmulti.yaml

version: v1.1.0

steps:

# Build target image

- build: -t {{.Run.Registry}}/dockerwithtests:{{.Run.ID}} -f Dockerfile .

# Push image

- push:

  - {{.Run.Registry}}/dockerwithtests:{{.Run.ID}}

Let’s set some env vars then push the task up to ACR

$ ACR_NAME=idjacrdemo02

$ GIT_USER=idjohnson

$ GIT_PAT=ghp_asdfasdfasdfasdasdfasdsdasdasdfasdfasd

Create the task (trigger)

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ az acr task create --registry $ACR_NAME --name gitopstrigger --context https://github.com/$GIT_USER/dockerWithTests2.git#main --file taskmulti.yaml --git-access-token $GIT_PAT

{

"agentConfiguration": {

"cpu": 2

},

"creationDate": "2022-04-07T02:09:23.708948+00:00",

"credentials": null,

"id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourceGroups/idjacrdemo02/providers/Microsoft.ContainerRegistry/registries/idjacrdemo02/tasks/gitopstrigger",

"identity": null,

"location": "centralus",

"name": "gitopstrigger",

"platform": {

"architecture": "amd64",

"os": "linux",

"variant": null

},

"provisioningState": "Succeeded",

"resourceGroup": "idjacrdemo02",

"status": "Enabled",

"step": {

"baseImageDependencies": null,

"contextAccessToken": null,

"contextPath": "https://github.com/idjohnson/dockerWithTests2.git#main",

"taskFilePath": "taskmulti.yaml",

"type": "FileTask",

"values": [],

"valuesFilePath": null

},

"systemData": {

"createdAt": "2022-04-07T02:09:23.5921154+00:00",

"createdBy": "Isaac.Johnson@mediware.com",

"createdByType": "User",

"lastModifiedAt": "2022-04-07T02:09:23.5921154+00:00",

"lastModifiedBy": "Isaac.Johnson@mediware.com",

"lastModifiedByType": "User"

},

"tags": null,

"timeout": 3600,

"trigger": {

"baseImageTrigger": {

"baseImageTriggerType": "Runtime",

"name": "defaultBaseimageTriggerName",

"status": "Enabled",

"updateTriggerEndpoint": null,

"updateTriggerPayloadType": "Default"

},

"sourceTriggers": [

{

"name": "defaultSourceTriggerName",

"sourceRepository": {

"branch": "main",

"repositoryUrl": "https://github.com/idjohnson/dockerWithTests2.git#main",

"sourceControlAuthProperties": null,

"sourceControlType": "Github"

},

"sourceTriggerEvents": [

"commit"

],

"status": "Enabled"

}

],

"timerTriggers": null

},

"type": "Microsoft.ContainerRegistry/registries/tasks"

}

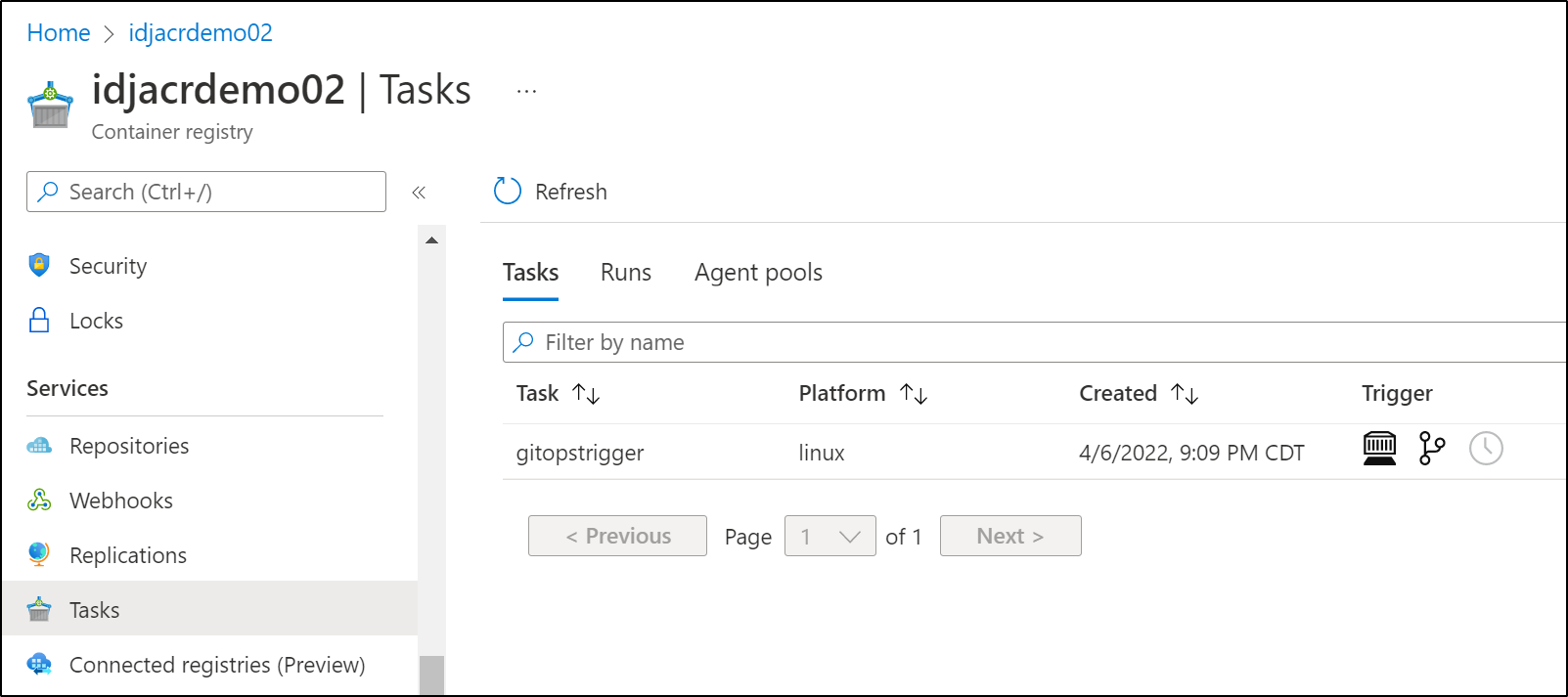

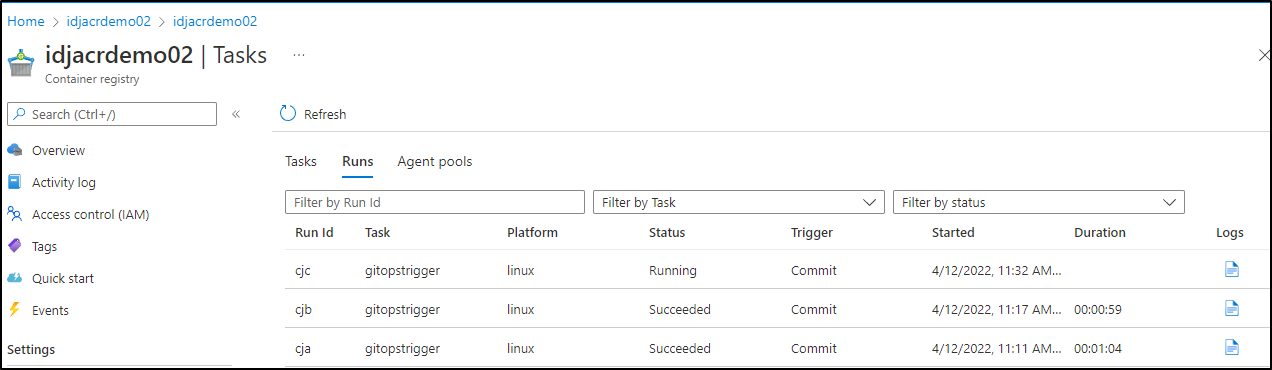

We can now see the task in our registry

To test, we add and push

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ git add taskmulti.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ git commit -m "adding ACR Task steps"

[main dfc2e88] adding ACR Task steps

1 file changed, 7 insertions(+)

create mode 100644 taskmulti.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ git push

Enumerating objects: 4, done.

Counting objects: 100% (4/4), done.

Delta compression using up to 4 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (3/3), 413 bytes | 413.00 KiB/s, done.

Total 3 (delta 1), reused 0 (delta 0)

remote: Resolving deltas: 100% (1/1), completed with 1 local object.

To https://github.com/idjohnson/dockerWithTests2.git

bf22908..dfc2e88 main -> main

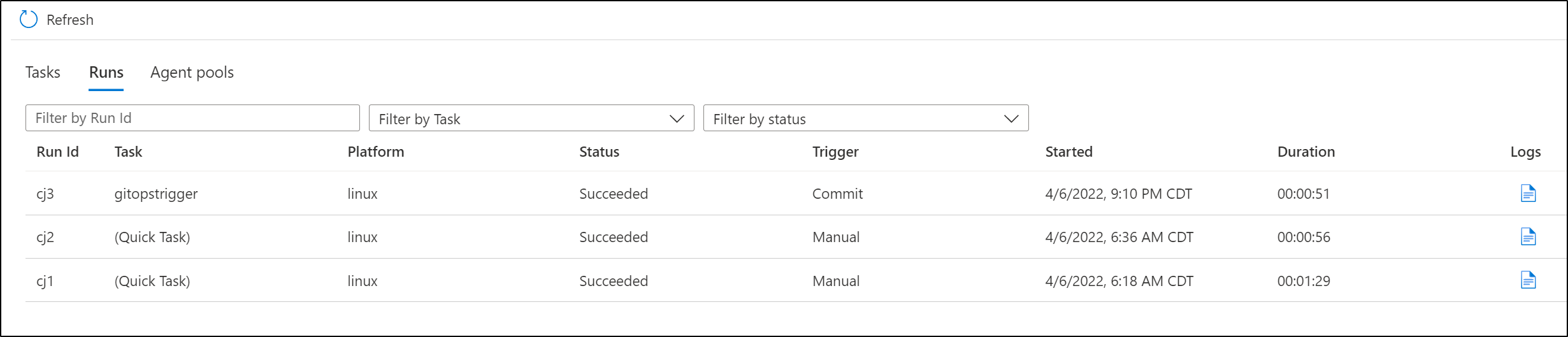

And we can see a run triggered

We can see it built and pushed the new cj3 tag

Multiple tags

Let’s also push a tag based on the commit

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ cat taskmulti.yaml

version: v1.1.0

steps:

# Build target image

- build: -t {{.Run.Registry}}/dockerwithtests:{{.Run.ID}} -t {{.Run.Registry}}/dockerwithtests:{{.Run.Commit}} -f Dockerfile .

# Push image

- push:

  - {{.Run.Registry}}/dockerwithtests:{{.Run.Commit}}

- push:

  - {{.Run.Registry}}/dockerwithtests:{{.Run.ID}}
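To make the two tags in the task file concrete, here is a small Python sketch of how the `{{.Run.*}}` placeholders expand into image references. It only illustrates the substitution and is not ACR’s actual template engine; the run ID `cj4` and the commit value `abc1234` are hypothetical stand-ins for whatever ACR supplies at run time.

```python
import re


def render(template: str, run: dict) -> str:
    """Replace each {{.Run.<Key>}} placeholder with the value from `run`.

    Illustrative only: ACR Tasks does its own template rendering; this
    just mimics the substitution for the placeholders used in this post.
    """
    def lookup(match):
        return str(run[match.group(1)])  # KeyError if the key is unknown

    return re.sub(r"\{\{\s*\.Run\.(\w+)\s*\}\}", lookup, template)


# Hypothetical run values (the ID and commit are made up for the example)
run = {
    "Registry": "idjacrdemo02.azurecr.io",
    "ID": "cj4",
    "Commit": "abc1234",
}

for tag_key in ("ID", "Commit"):
    template = "{{.Run.Registry}}/dockerwithtests:{{.Run." + tag_key + "}}"
    print(render(template, run))
# prints idjacrdemo02.azurecr.io/dockerwithtests:cj4
# prints idjacrdemo02.azurecr.io/dockerwithtests:abc1234
```

Both references point at the same built image, so the same build ends up addressable by run ID and by commit.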

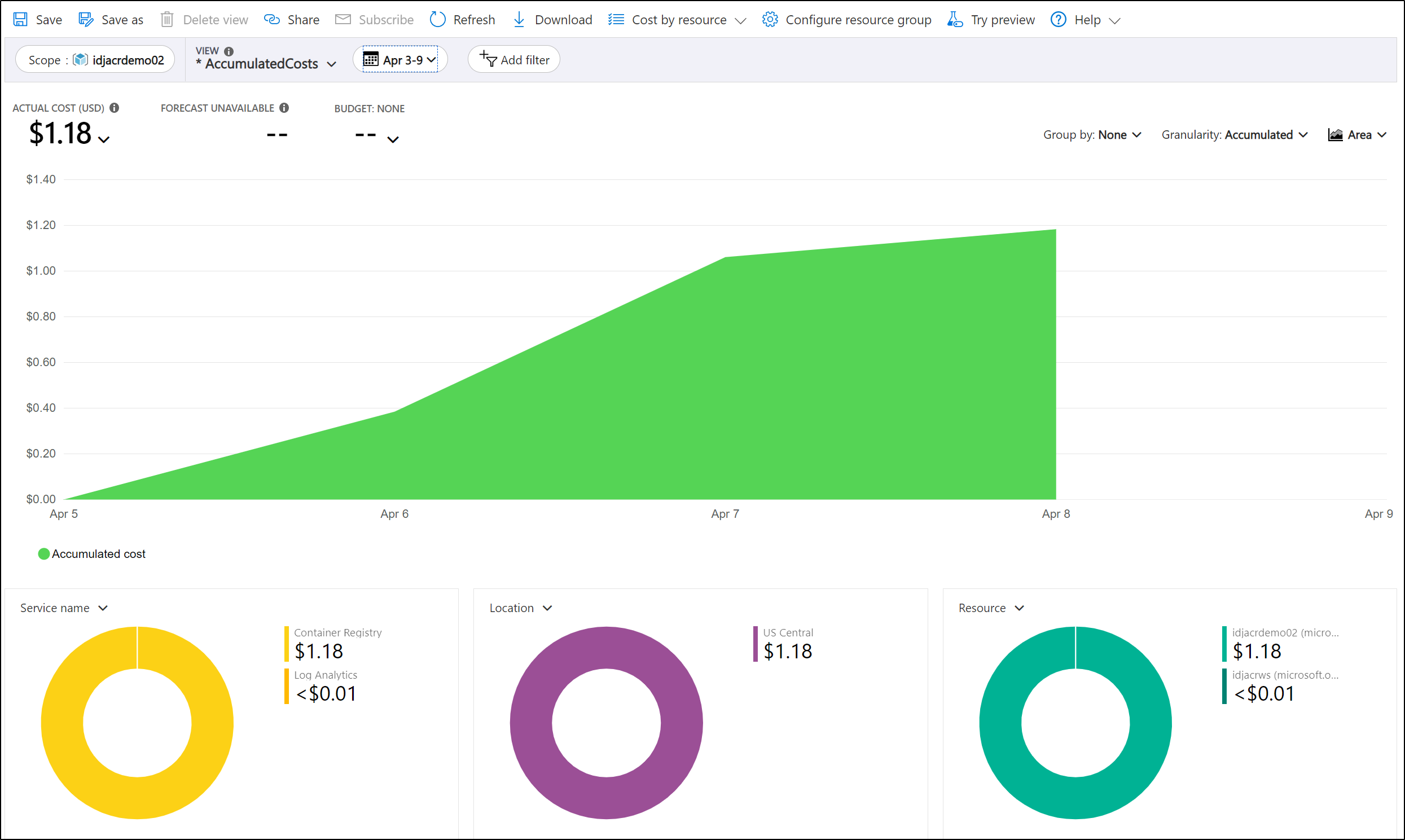

As for cost, we can see that for the last week, we’re at $1.18

GitOps with AKS

Let’s create a Kubernetes cluster we can use for GitOps.

First create (or update) a service principal

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ az ad sp create-for-rbac -n idjaks45sp.mediwareinformationsystems.onmicrosoft.com --skip-assignment --output json > my_sp.json

Changing "idjaks45sp.mediwareinformationsystems.onmicrosoft.com" to a valid URI of "http://idjaks45sp.mediwareinformationsystems.onmicrosoft.com", which is the required format used for service principal names

Found an existing application instance of "b2049cf3-082e-47e5-a141-24305989b5a5". We will patch it

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ export SP_PASS=`cat my_sp.json | jq -r .password`

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ export SP_ID=`cat my_sp.json | jq -r .appId`

Then we can make the cluster

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ az aks create --resource-group idjacrdemo02 --name idjaks46 --location centralus --node-count 2 --enable-cluster-autoscaler --min-count 2 --max-count 3 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS

- Running ..

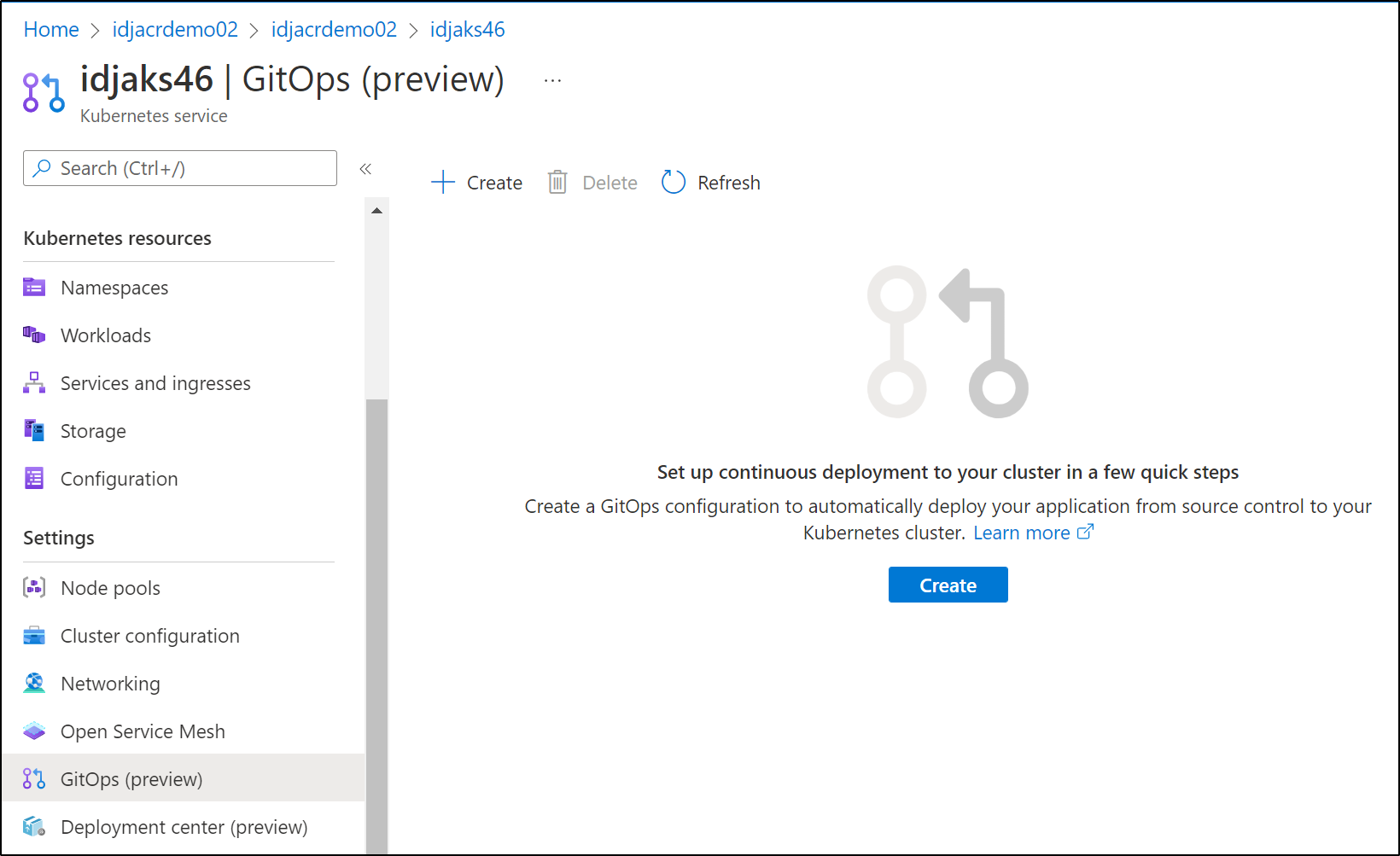

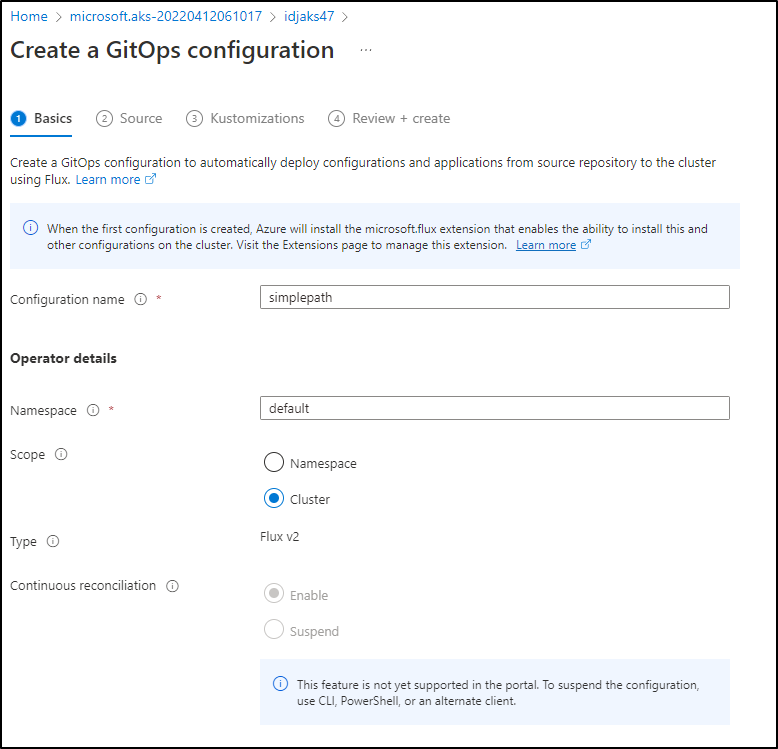

First, we can enable GitOps via the Portal

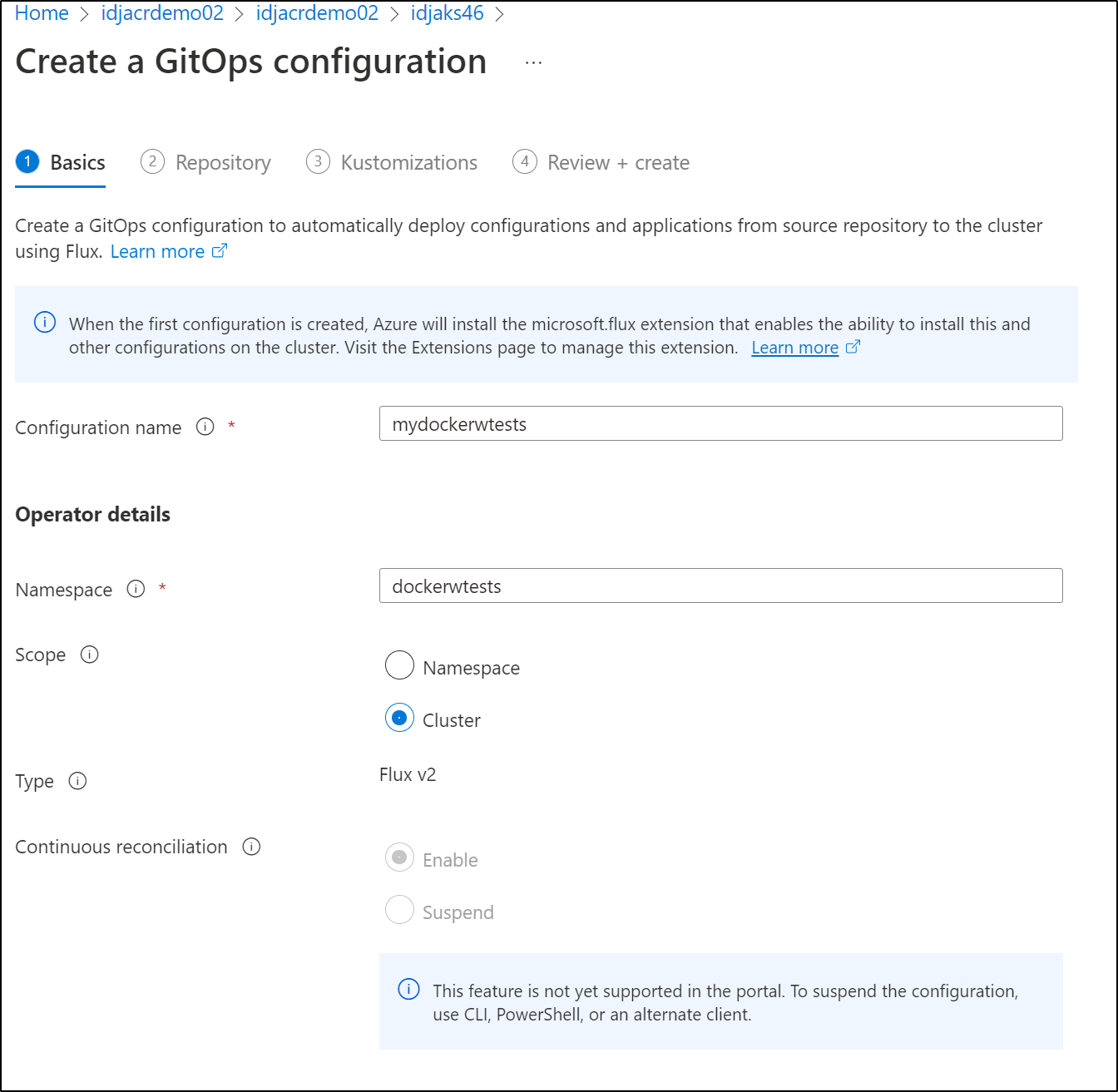

Next, define a namespace to install Flux into and select the scope of the Flux Operator

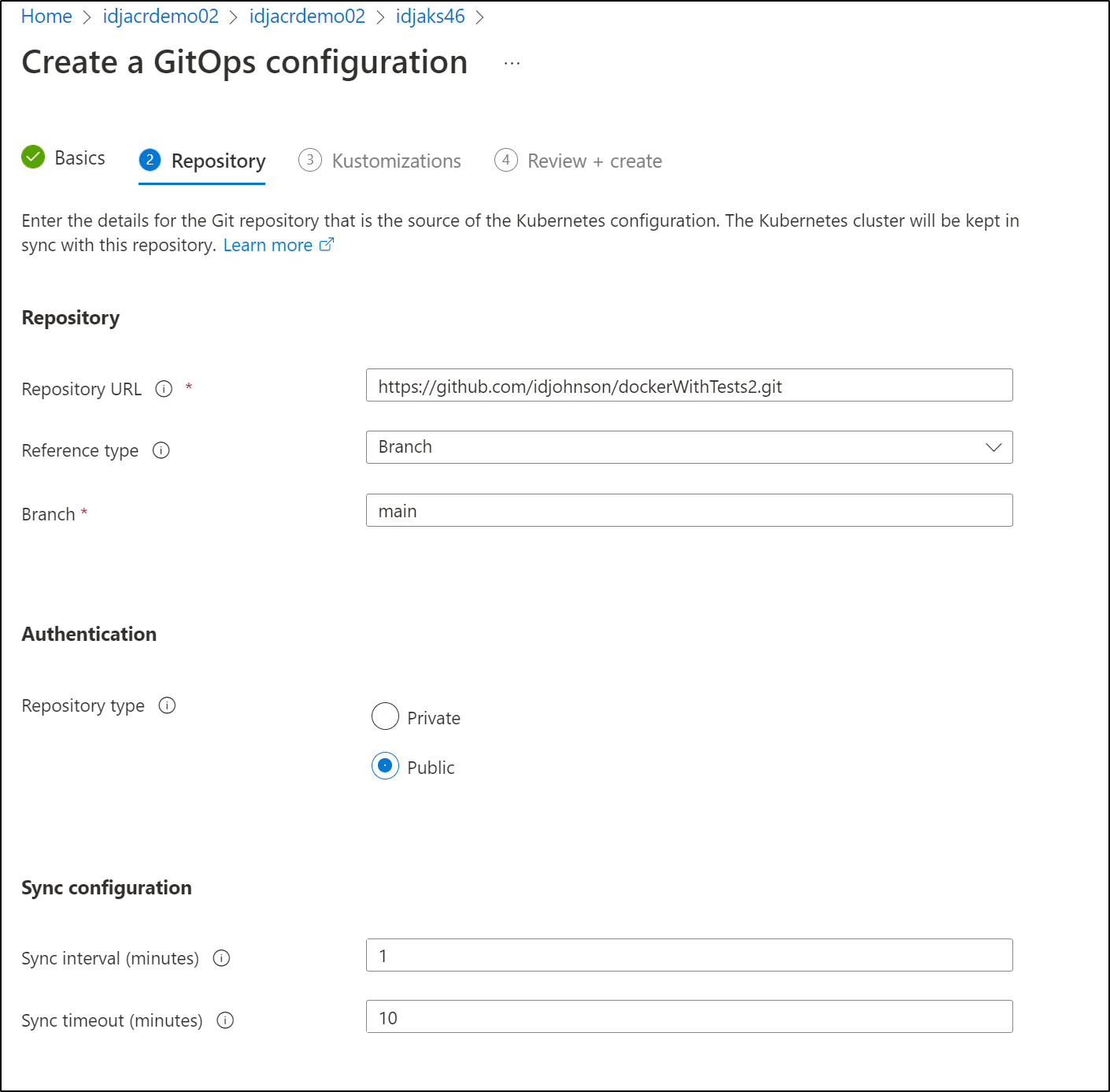

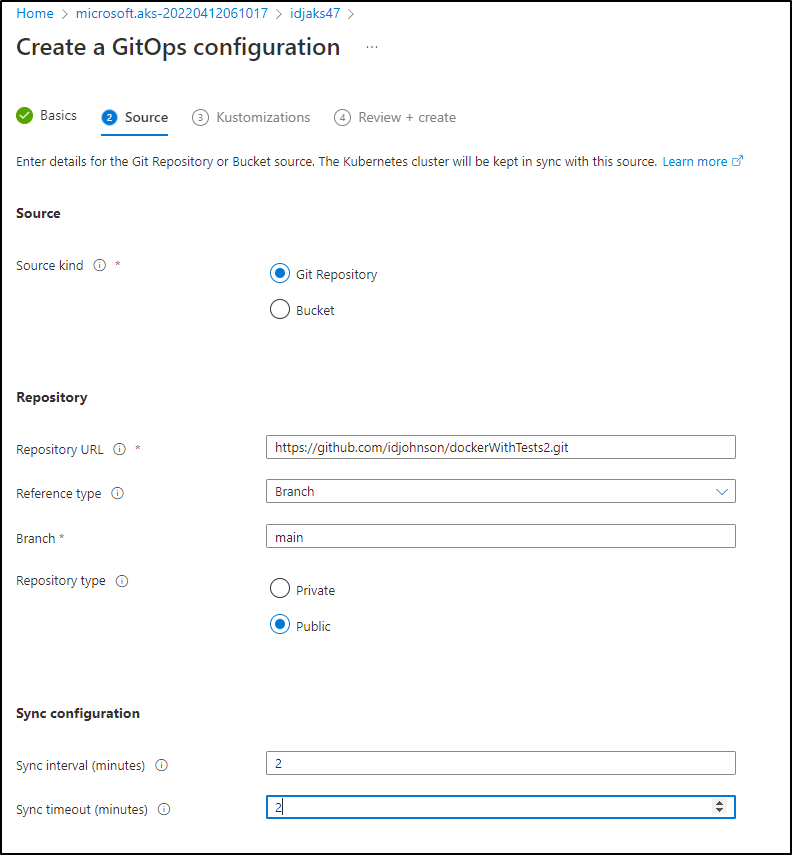

Next, we need our GitHub URL

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ git remote show origin | head -n4

* remote origin

Fetch URL: https://github.com/idjohnson/dockerWithTests2.git

Push URL: https://github.com/idjohnson/dockerWithTests2.git

HEAD branch: main

And because we are using a public repo, we do not need to add auth credentials (but would if we were using a private one)

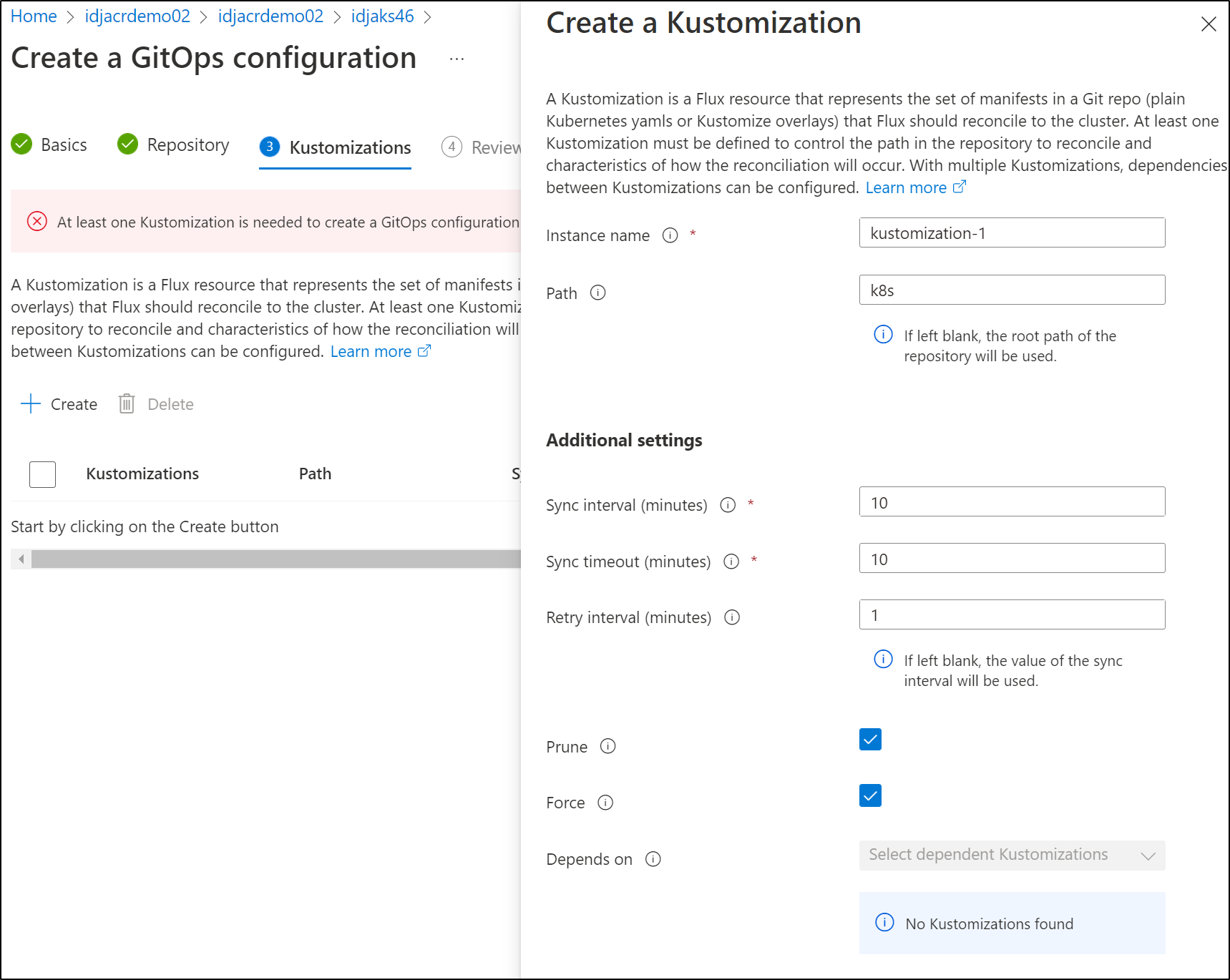

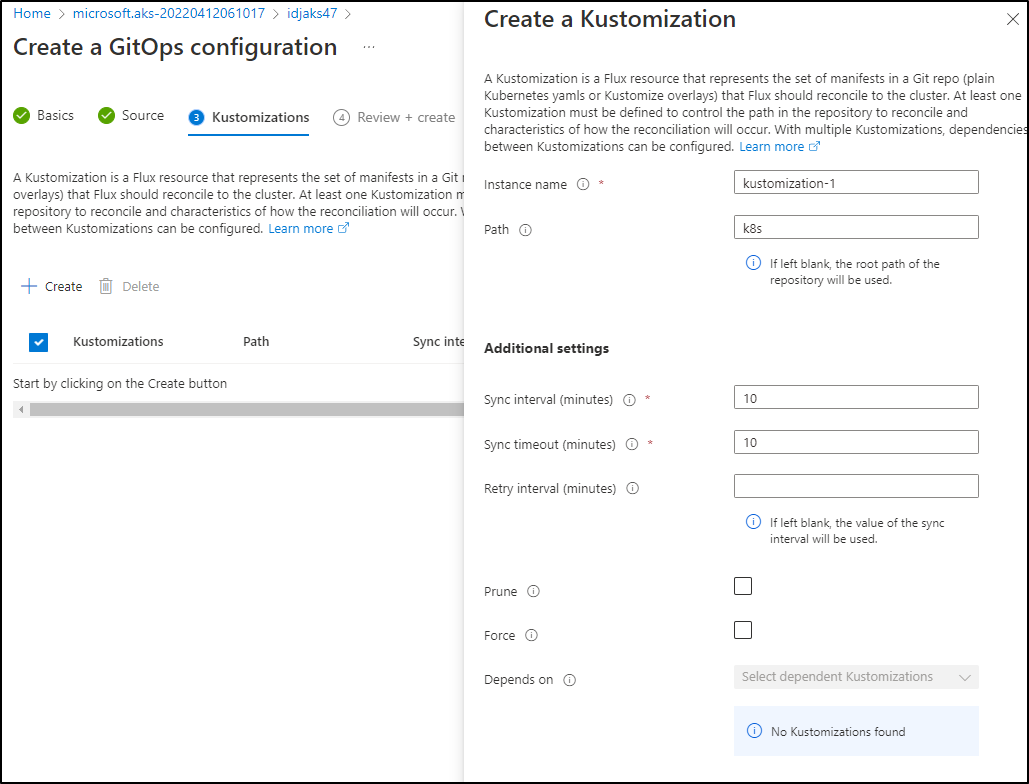

We will store our YAMLs in a “k8s” folder we will create

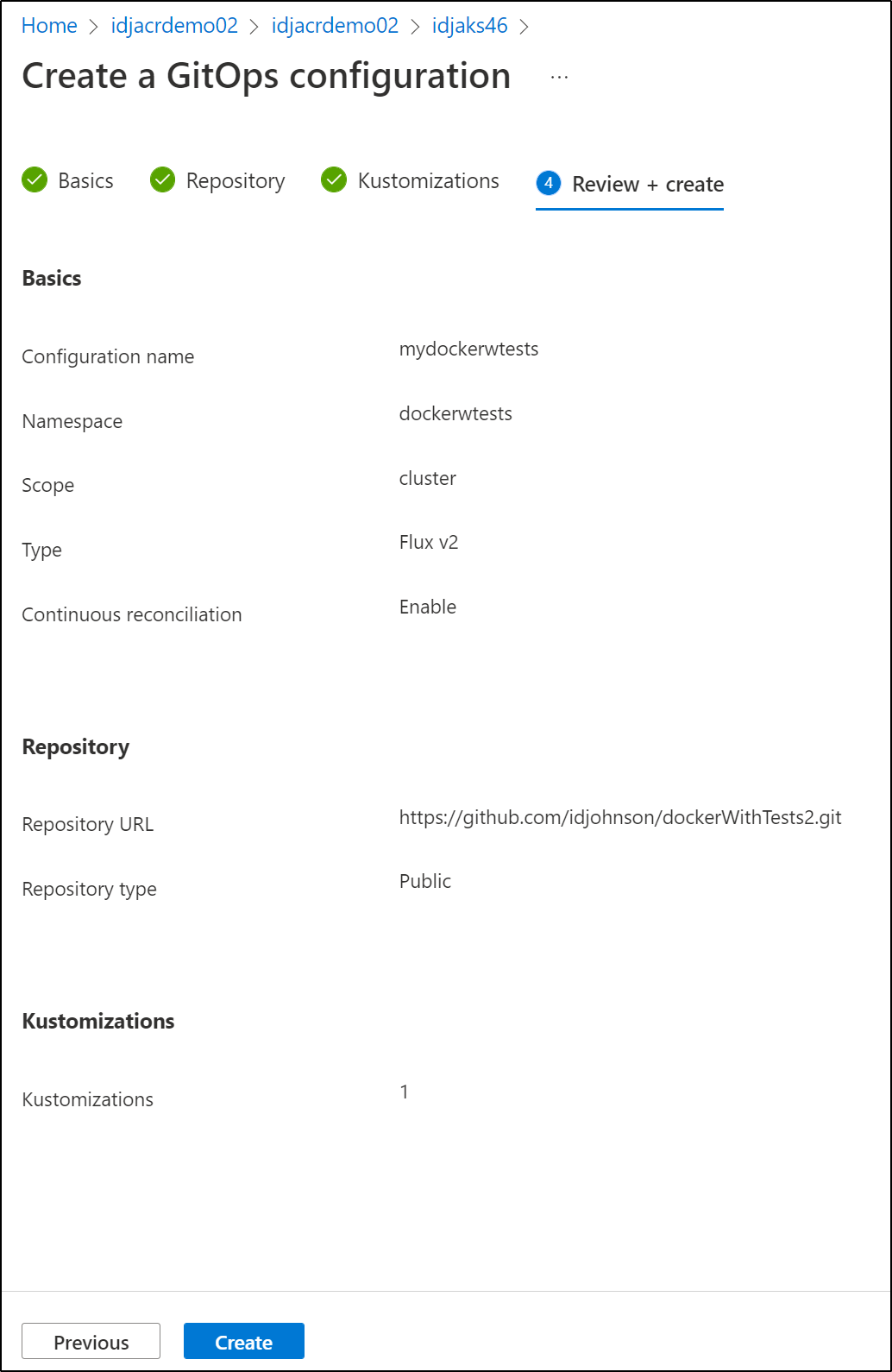

Lastly, review and create

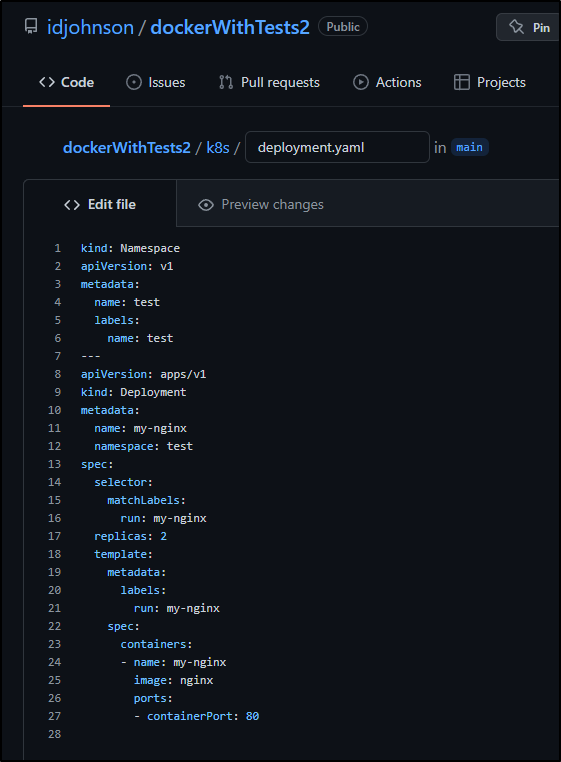

We will make a k8s folder now and create a deployment and kustomization. I’ll use a basic nginx deployment but override the image with a known one from our ACR

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ cat k8s/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: my-nginx

spec:

  selector:

    matchLabels:

      run: my-nginx

  replicas: 2

  template:

    metadata:

      labels:

        run: my-nginx

    spec:

      containers:

      - name: my-nginx

        image: nginx

        ports:

        - containerPort: 80

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ cat k8s/kustomization.yaml

resources:

- deployment.yaml

images:

- name: nginx

  newName: idjacrdemo02.azurecr.io/dockerwithtests

  newTag: cj3
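The `images` entry in the kustomization is what swaps the stock `nginx` image for our ACR build. As a minimal sketch of that behaviour (not Kustomize’s own implementation), the Python below applies the same name/newName/newTag rule to an image string; the helper names `split_image` and `apply_image_overrides` are hypothetical, and digests (`@sha256:...`) are deliberately not handled.

```python
from typing import Dict, List, Optional, Tuple


def split_image(image: str) -> Tuple[str, Optional[str]]:
    """Split 'name:tag' into (name, tag); tag is None when absent.

    Only a colon after the last '/' counts as a tag separator, so a
    registry port such as 'localhost:5000/app' is left intact.
    """
    last_segment = image.rsplit("/", 1)[-1]
    if ":" in last_segment:
        name, tag = image.rsplit(":", 1)
        return name, tag
    return image, None


def apply_image_overrides(image: str, overrides: List[Dict[str, str]]) -> str:
    """Mimic a kustomization 'images' entry for a single image string."""
    name, tag = split_image(image)
    for override in overrides:
        if override["name"] == name:
            name = override.get("newName", name)
            tag = override.get("newTag", tag)
            break
    return f"{name}:{tag}" if tag else name


# Same values as k8s/kustomization.yaml in this post
overrides = [{
    "name": "nginx",
    "newName": "idjacrdemo02.azurecr.io/dockerwithtests",
    "newTag": "cj3",
}]

print(apply_image_overrides("nginx", overrides))
# prints idjacrdemo02.azurecr.io/dockerwithtests:cj3
```

The deployment manifest can therefore keep a generic image name, and only the kustomization needs to change when a new tag should be rolled out.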

We’ll add and push

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ git add k8s/

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ git commit -m "create initial gitops folders"

[main 085a7ee] create initial gitops folders

2 files changed, 25 insertions(+)

create mode 100644 k8s/deployment.yaml

create mode 100644 k8s/kustomization.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/dockerWithTests2$ git push

Enumerating objects: 6, done.

Counting objects: 100% (6/6), done.

Delta compression using up to 4 threads

Compressing objects: 100% (5/5), done.

Writing objects: 100% (5/5), 640 bytes | 320.00 KiB/s, done.

Total 5 (delta 1), reused 0 (delta 0)

remote: Resolving deltas: 100% (1/1), completed with 1 local object.

To https://github.com/idjohnson/dockerWithTests2.git

e19c83b..085a7ee main -> main

The problem I encountered, even when I chose GitOps on default, was a series of errors setting up Flux in the AKS cluster. No usable error message was really given.

Via Portal

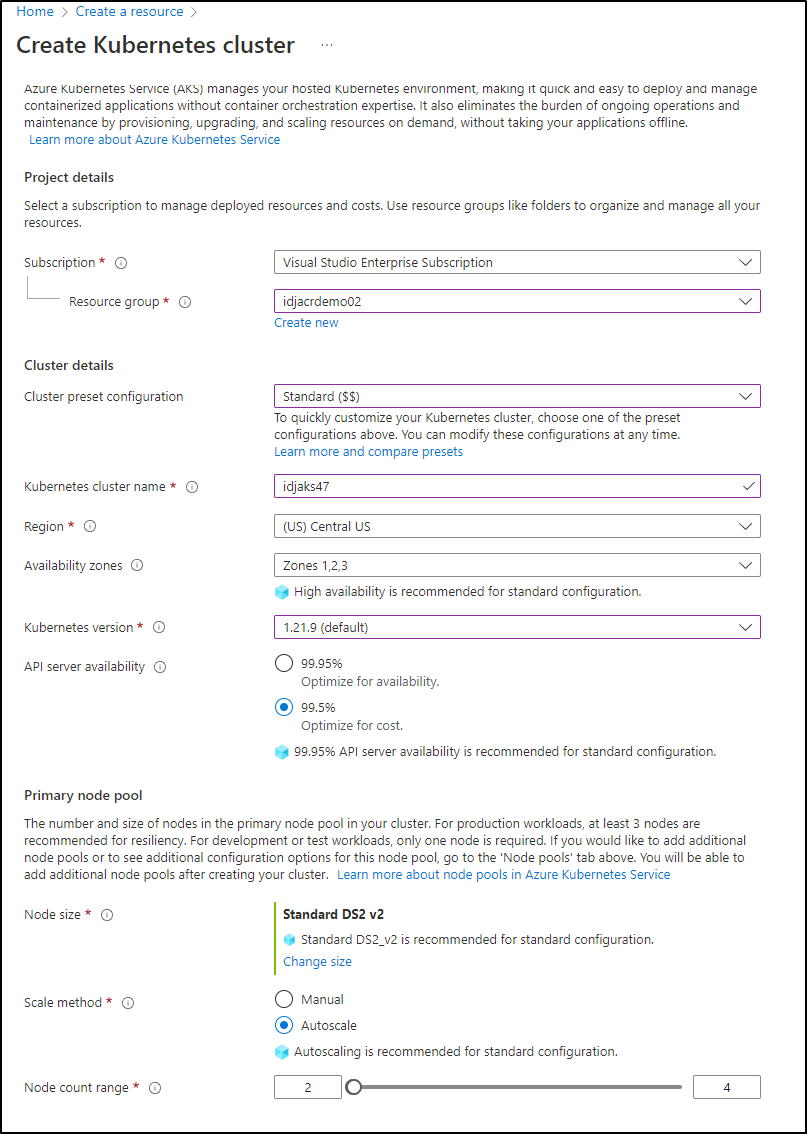

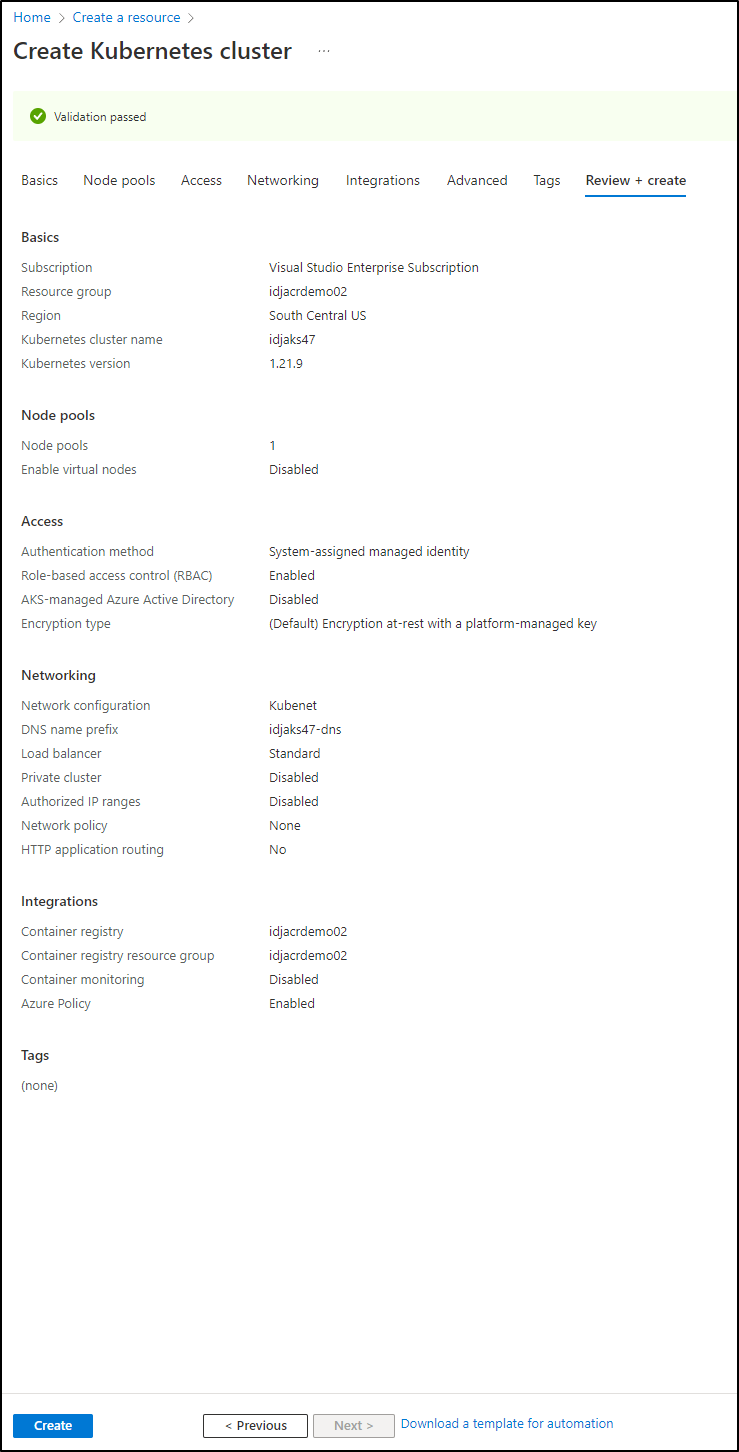

I’ll create a new AKS in the same RG. There are a few more options we can pick in the Portal such as cluster preset configuration and API server availability

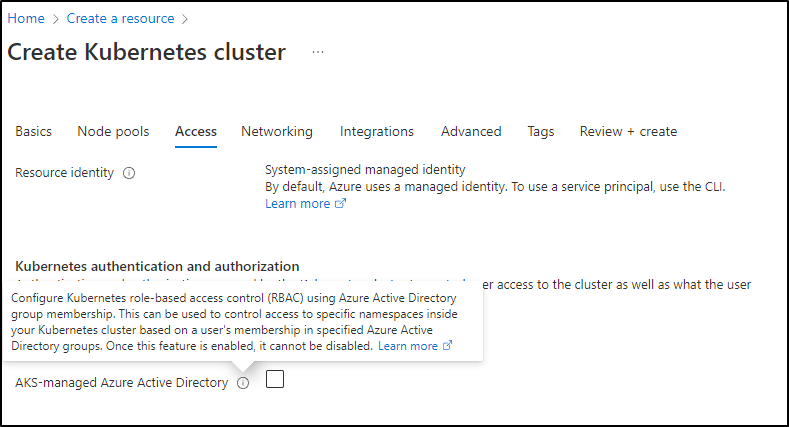

We could enable AAD-based RBAC here if we chose. Then we would manage access via the Portal or CLI, tying roles to AD groups. I bring this up because I so often see companies build out Kubernetes and then try to jury-rig AAD RBAC in later. You could save so much time by just leveraging AAD at create time.

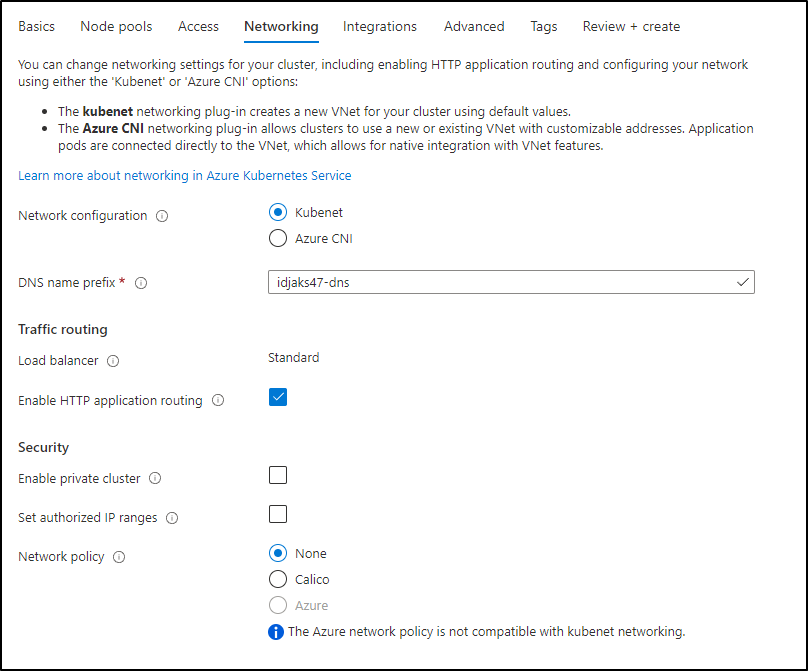

I will enable HTTP application routing as this is a dev cluster

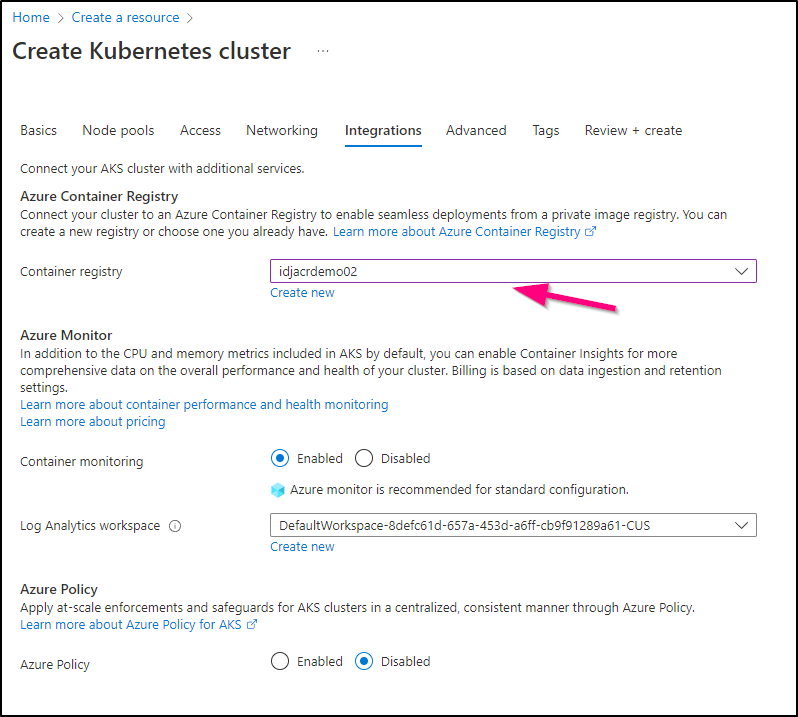

We can integrate to our ACR right now in the wizard. This means we won’t need an imagePullSecret later

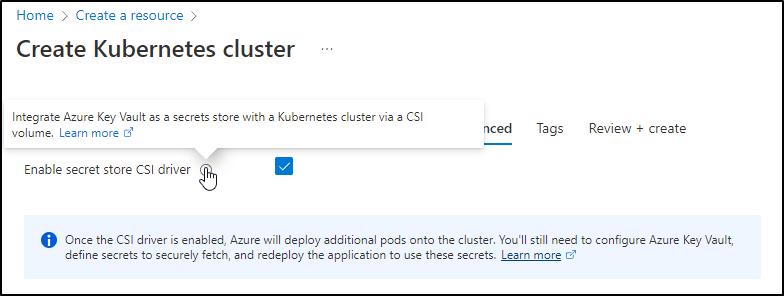

Another advanced feature is to use AKV for secrets management. This works through the Secrets Store CSI driver, which mounts Key Vault secrets into pods as volumes, rather than through injected sidecars as with Istio or Hashi Vault. You can see more in the setup instructions here

I had to tweak some settings for my subscription including region, but I finally passed validation so I could create

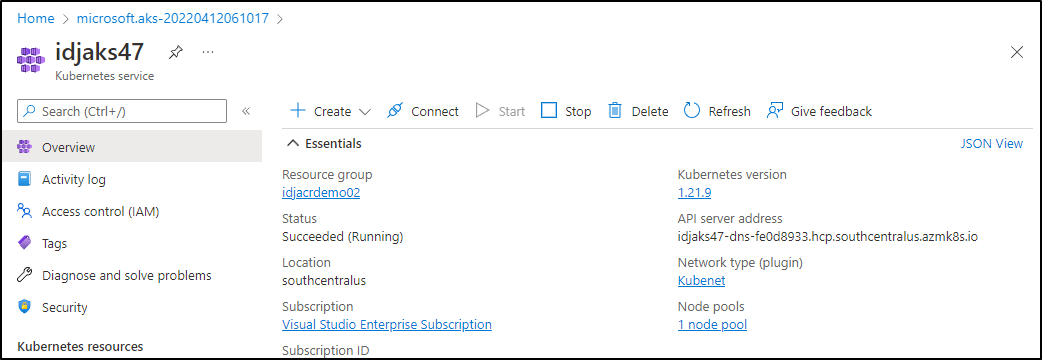

We now have our AKS

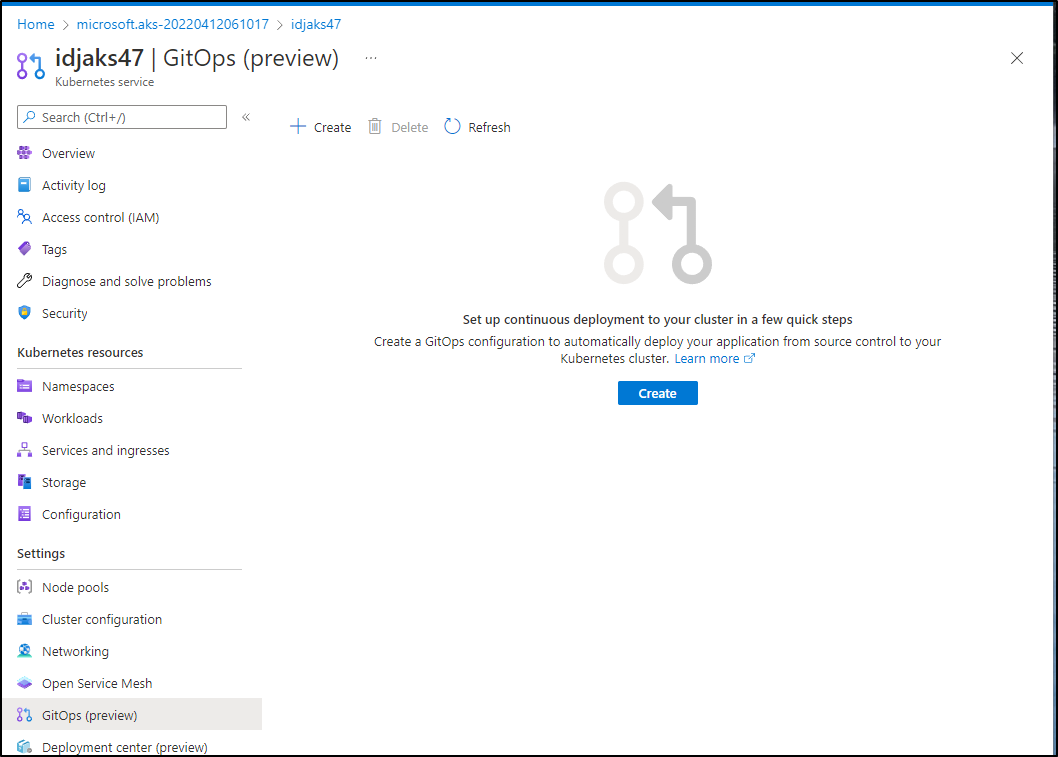

Let’s try a basic GitOps setup to the default namespace. This should be the simplest path

We’ll set the namespace to default and the scope to cluster.

Then we’ll add our GitHub URL (https://github.com/idjohnson/dockerWithTests2.git) and set the branch to main.

Lastly, we add a simple kustomization (at least one is needed)

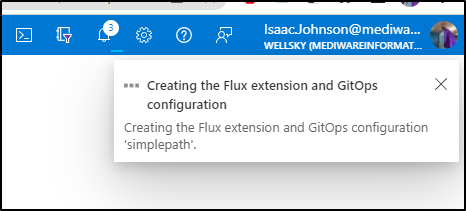

and choose to create

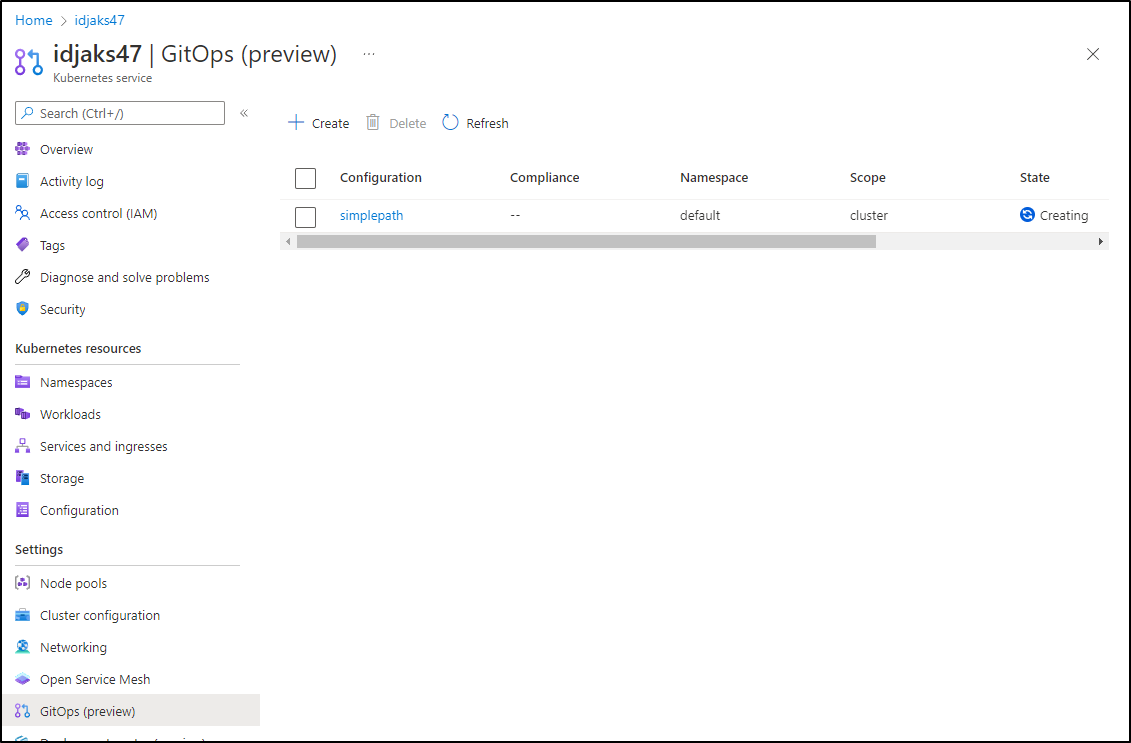

Perhaps it was due to adding Policy? I cannot be sure, but this time it seems to be on track to set up GitOps

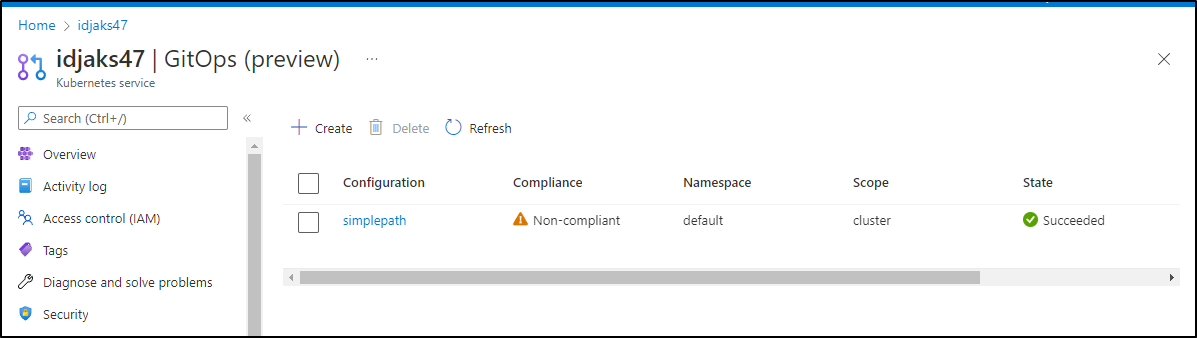

and now it succeeds

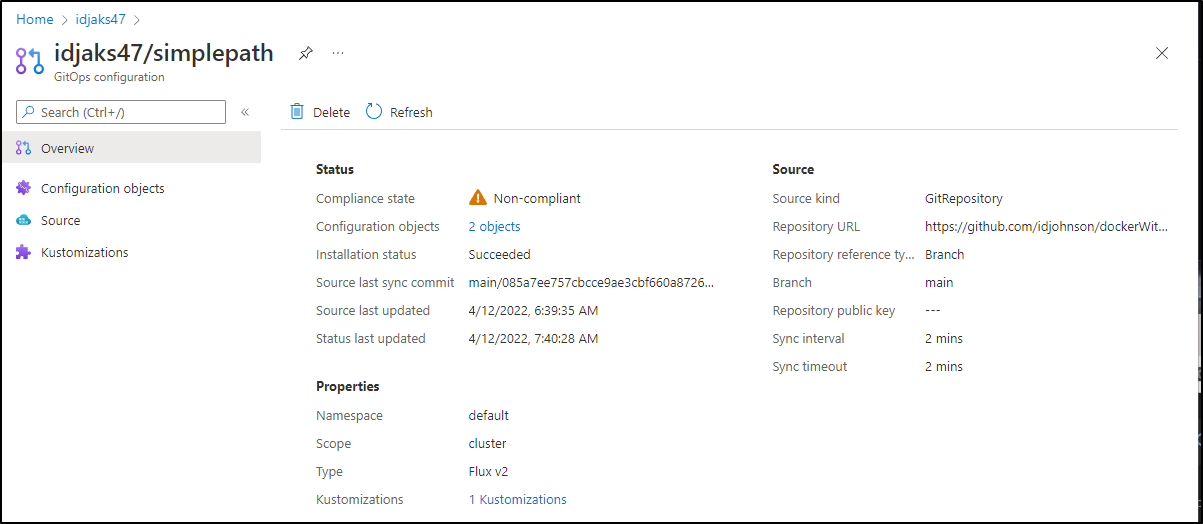

We can look at details

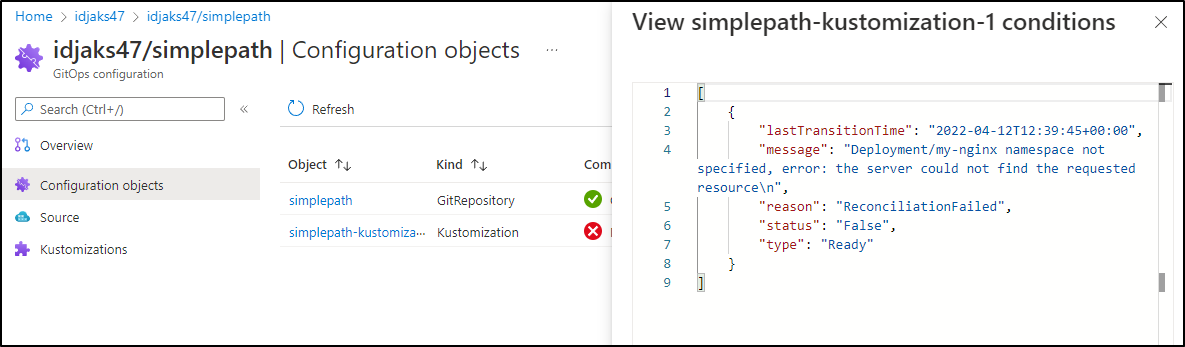

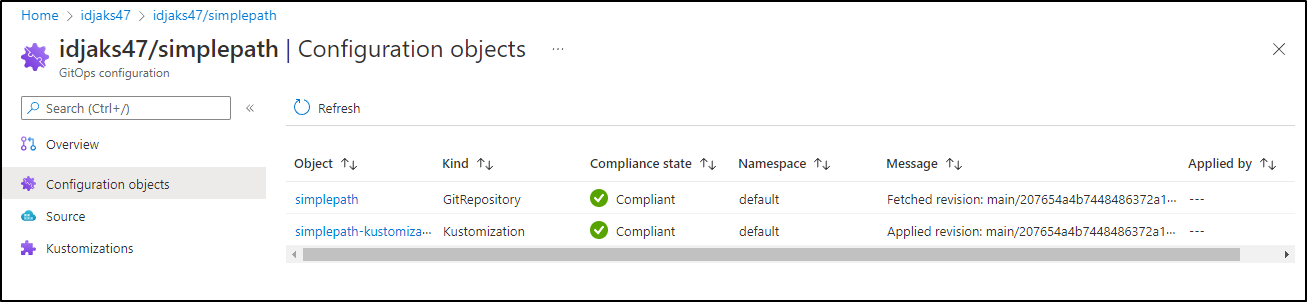

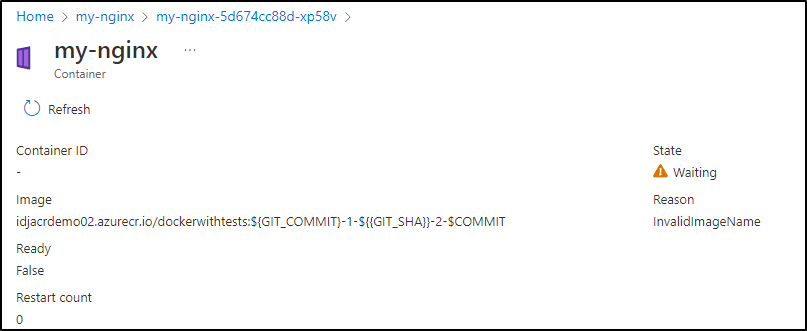

The pods did not launch, so we can check the Configuration Objects to see why

I’ll change my namespace in the yaml (and create it on the fly)

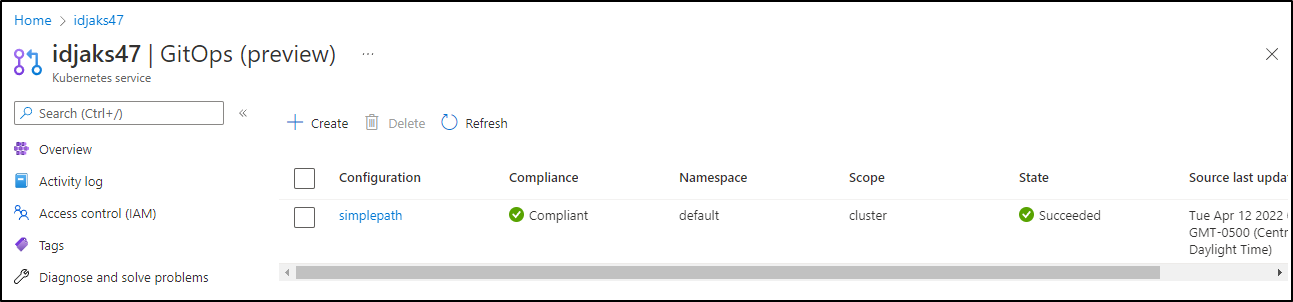

We can now see that the simplepath configuration is shown as healthy and compliant

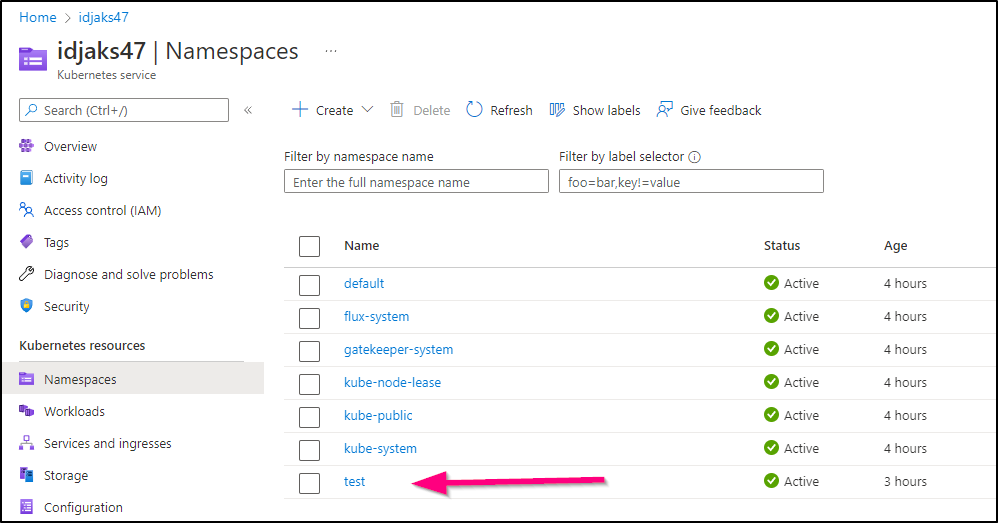

and that the new namespace was created

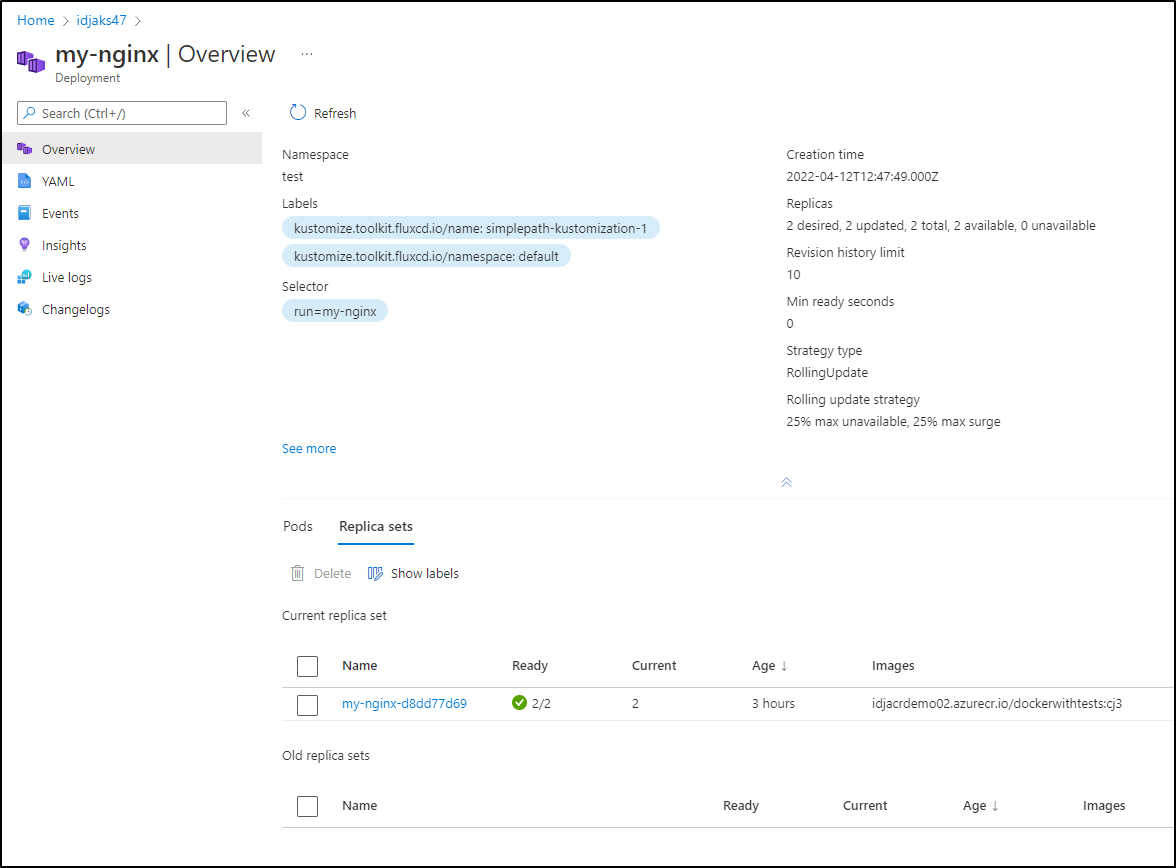

And we can see our container referenced:

apiVersion: v1

kind: Service

metadata:

  name: nginx-app-svc

  namespace: test

spec:

  type: LoadBalancer

  ports:

  - port: 80

  selector:

    run: my-nginx

Let’s create a service to ingress traffic

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git diff

diff --git a/k8s/deployment.yaml b/k8s/deployment.yaml

index e719506..6d8e201 100644

--- a/k8s/deployment.yaml

+++ b/k8s/deployment.yaml

@@ -25,3 +25,15 @@ spec:

image: nginx

ports:

- containerPort: 80

+---

+apiVersion: v1

+kind: Service

+metadata:

+ name: nginx-run-svc

+ namespace: test

+spec:

+ type: LoadBalancer

+ ports:

+ - port: 80

+ selector:

+ run: my-nginx

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ cat deployment.yaml

kind: Namespace

apiVersion: v1

metadata:

  name: test

  labels:

    name: test

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: my-nginx

  namespace: test

spec:

  selector:

    matchLabels:

      run: my-nginx

  replicas: 2

  template:

    metadata:

      labels:

        run: my-nginx

    spec:

      containers:

      - name: my-nginx

        image: nginx

        ports:

        - containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

  name: nginx-run-svc

  namespace: test

spec:

  type: LoadBalancer

  ports:

  - port: 80

  selector:

    run: my-nginx

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git add deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git commit -m "add svc with LB"

[main 816ed91] add svc with LB

1 file changed, 12 insertions(+)

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 16 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 517 bytes | 517.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/dockerWithTests2.git

b325185..816ed91 main -> main

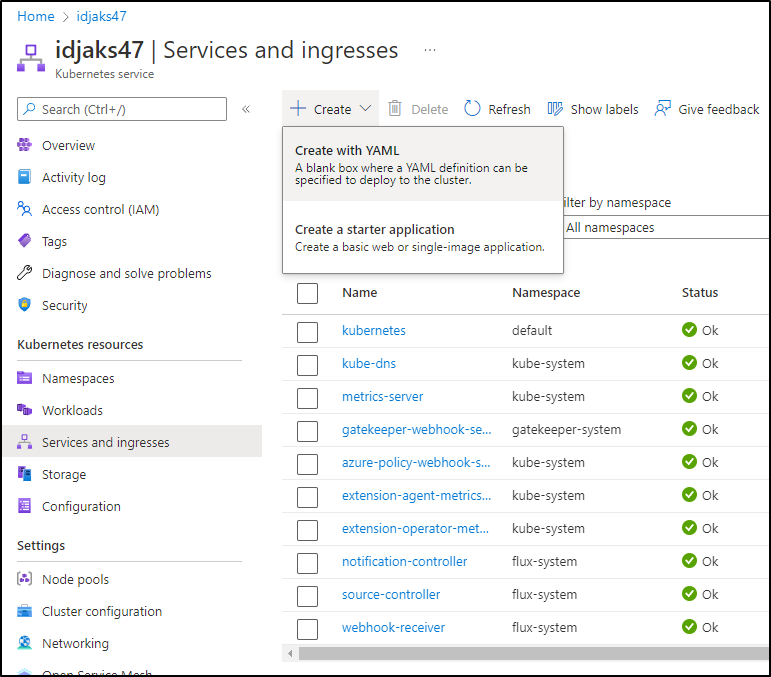

Note: we could also create it on the fly in the portal (but code is better):

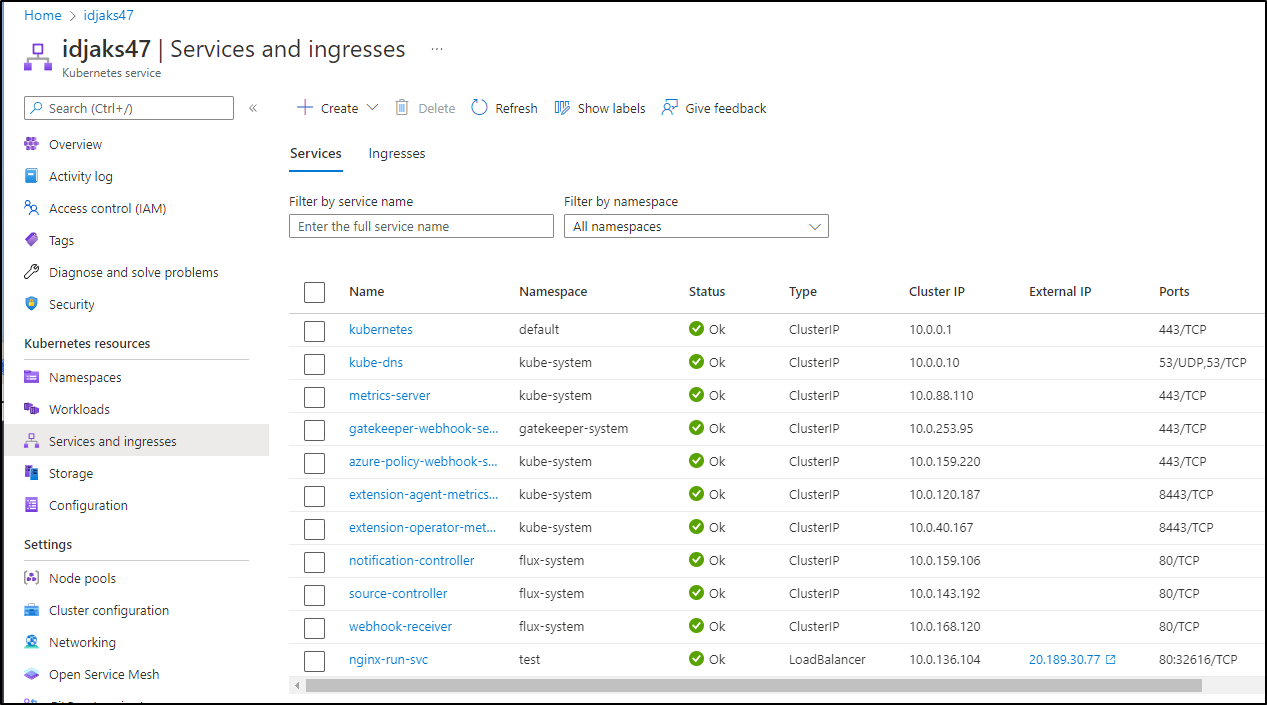

This creates a service

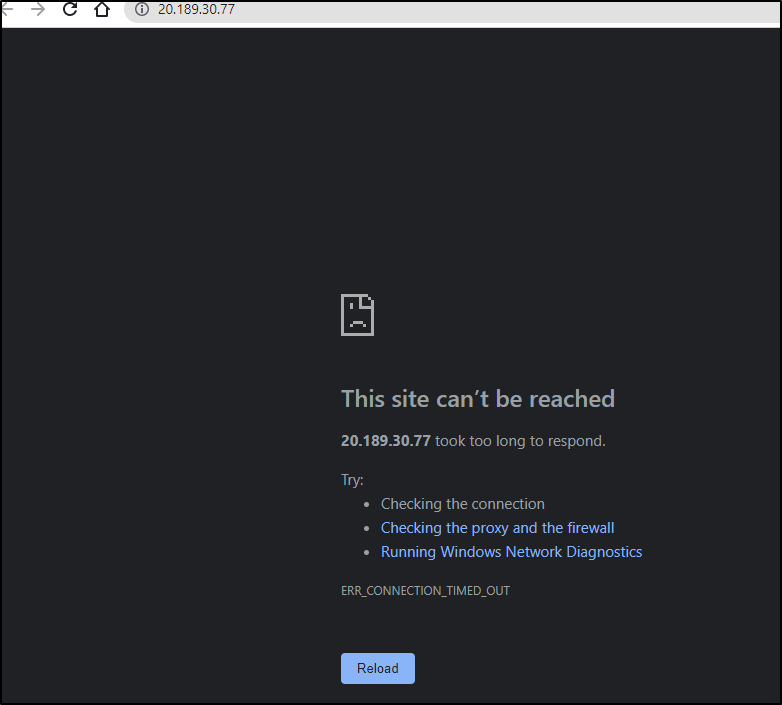

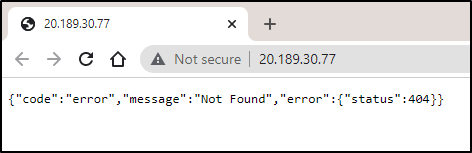

However, it doesn’t seem to load a page.

The reason is that Ronin operates on port 8000, not 80. We can correct this in code and deploy via GitOps (Flux should sync within 2m).

We simply need to change the containerPort in the deployment YAML

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git diff

diff --git a/k8s/deployment.yaml b/k8s/deployment.yaml

index 6d8e201..56b6cca 100644

--- a/k8s/deployment.yaml

+++ b/k8s/deployment.yaml

@@ -24,7 +24,7 @@ spec:

- name: my-nginx

image: nginx

ports:

- - containerPort: 80

+ - containerPort: 8000

---

apiVersion: v1

kind: Service

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git add deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git commit -m "Change containerport to 8000"

[main 207654a] Change containerport to 8000

1 file changed, 1 insertion(+), 1 deletion(-)

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 16 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 398 bytes | 398.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/dockerWithTests2.git

816ed91..207654a main -> main

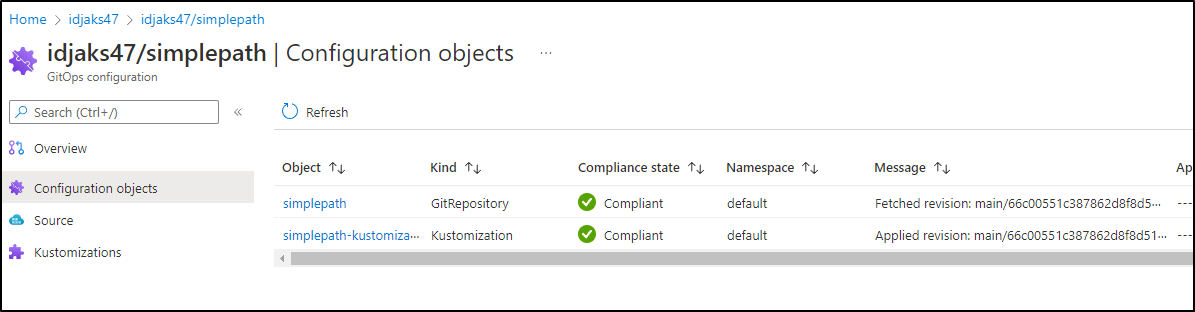

We can see when a revision is pulled in.

However, since nothing forced an update, the service did not change. Renaming it should force a new one to be created.

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ kubectl get svc -n test

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-run-svc LoadBalancer 10.0.136.104 20.189.30.77 80:32616/TCP 14m

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git diff

diff --git a/k8s/deployment.yaml b/k8s/deployment.yaml

index 56b6cca..c4ae3ec 100644

--- a/k8s/deployment.yaml

+++ b/k8s/deployment.yaml

@@ -29,7 +29,7 @@ spec:

apiVersion: v1

kind: Service

metadata:

- name: nginx-run-svc

+ name: nginx-run-svc2

namespace: test

spec:

type: LoadBalancer

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git add deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git commit -m "Update name of service to force updated"

[main 66c0055] Update name of service to force updated

1 file changed, 1 insertion(+), 1 deletion(-)

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2/k8s$ git push

Enumerating objects: 7, done.

Counting objects: 100% (7/7), done.

Delta compression using up to 16 threads

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 404 bytes | 404.00 KiB/s, done.

Total 4 (delta 2), reused 0 (delta 0)

remote: Resolving deltas: 100% (2/2), completed with 2 local objects.

To https://github.com/idjohnson/dockerWithTests2.git

207654a..66c0055 main -> main

We can see when it was applied

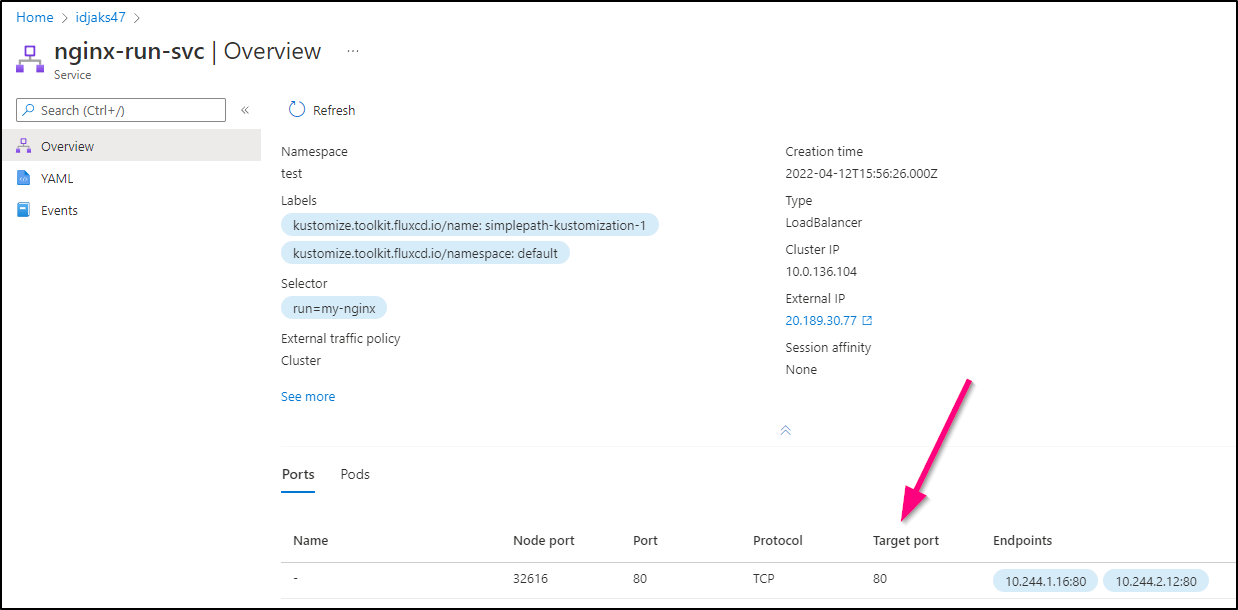

It was still not working. I double-checked my YAML and saw that the Service needed its targetPort set, since the container does not listen on the standard port 80

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ git diff

diff --git a/k8s/deployment.yaml b/k8s/deployment.yaml

index c4ae3ec..1e6da6c 100644

--- a/k8s/deployment.yaml

+++ b/k8s/deployment.yaml

@@ -29,11 +29,14 @@ spec:

apiVersion: v1

kind: Service

metadata:

- name: nginx-run-svc2

+ name: nginx-run-svc

namespace: test

spec:

type: LoadBalancer

ports:

- port: 80

+ targetPort: 8000

+ protocol: TCP

+ name: http

selector:

run: my-nginx

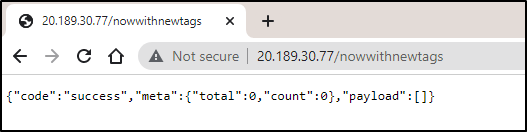

And now we see our original service’s external IP properly serve traffic (forwarding port 80 to 8000)
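For reference, the Service portion of deployment.yaml after this change should look roughly like the sketch below. It is reassembled from the diff above, so the indentation is assumed. When targetPort is omitted, Kubernetes defaults it to the value of port, which is why the earlier Service kept sending traffic to port 80 on the pods even after the containerPort was changed.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-run-svc
  namespace: test
spec:
  type: LoadBalancer
  ports:
  - name: http
    protocol: TCP
    port: 80            # port exposed on the load balancer
    targetPort: 8000    # port the container listens on; defaults to `port` when omitted
  selector:
    run: my-nginx       # must match the pod labels in the Deployment
```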

Solving the fixed tag

We have one last thing to change. Right now, we are fixed to the ‘cj3’ tag

$ cat kustomization.yaml

resources:

- deployment.yaml

images:

- name: nginx

  newName: idjacrdemo02.azurecr.io/dockerwithtests

  newTag: cj3

We can change this to:

- build and push a standard dev tag

- use that in our kustomization for the image override

- set our deployment to always pull the image (since we are re-using a tag)
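The last two items can be sketched as follows. This is an illustration under assumptions rather than the exact files from the repository: it uses the devlatest tag introduced just below, and it shows only the relevant fragment of the Deployment, with the two files separated by a document marker purely for display.

```yaml
# k8s/kustomization.yaml (sketch): point the image override at the reused tag
resources:
- deployment.yaml
images:
- name: nginx
  newName: idjacrdemo02.azurecr.io/dockerwithtests
  newTag: devlatest
---
# k8s/deployment.yaml (fragment of the Deployment's pod template only)
spec:
  template:
    spec:
      containers:
      - name: my-nginx
        image: nginx                # replaced by the kustomize override above
        imagePullPolicy: Always     # re-pull whenever a pod starts, since the tag is reused
        ports:
        - containerPort: 8000
```

Keep in mind that imagePullPolicy: Always only takes effect when a pod is created or restarted. Pushing a new image under the same tag does not by itself replace pods that are already running.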

We need to update our ACR Task to build and push another tag, this time ‘devlatest’

builder@DESKTOP-QADGF36:~/Workspaces/dockerWithTests2$ cat taskmulti.yaml

version: v1.1.0

steps:

# Build target image

- build: -t {{.Run.Registry}}/dockerwithtests:devlatest -t {{.Run.Registry}}/dockerwithtests:{{.Run.ID}} -t {{.Run.Registry}}/dockerwithtests:{{.Run.Commit}} -f Dockerfile .

# Push image

- push:

  - {{.Run.Registry}}/dockerwithtests:{{.Run.Commit}}

- push:

  - {{.Run.Registry}}/dockerwithtests:{{.Run.ID}}

- push:

  - {{.Run.Registry}}/dockerwithtests:devlatest

Pushing the change will trigger ACR to rebuild

And now we see a ‘devlatest’ tag

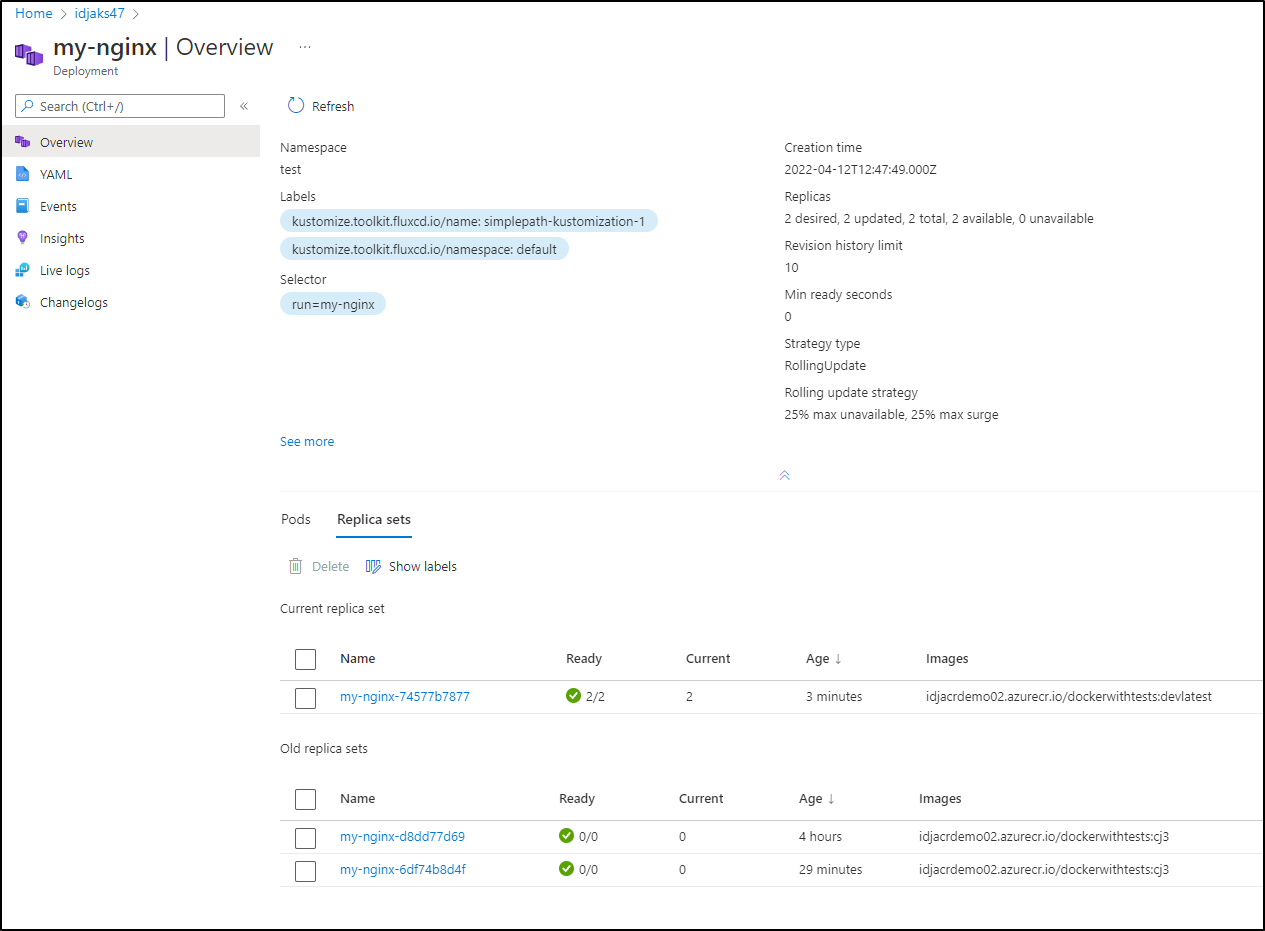

We see our ReplicaSet change over

And the LB is already serving the traffic from the new pods

Summary

We leveraged two Azure PaaS offerings, Azure Container Registry (ACR) and Azure Kubernetes Service (AKS), to work out a CICD-less GitOps workflow that automatically builds, tests, and deploys a containerized app to Kubernetes. We primarily leveraged ACR Tasks for the GitOps flow on container builds (Build and Test) and the GitOps (Flux v2) feature of AKS. It is worth noting that the GitOps feature in AKS is just a convenient wrapper around Flux v2.

We also leveraged a nice free webhook testing site, hookdeck, to verify our webhook payloads with ACR

It’s worth noting we took a minor shortcut to get a full CICD-less deployment: we used an imagePullPolicy of Always and reused a dev tag. This could create a cluster of mixed images if we use multiple deployments with the same image name. However, we did this because, at present, Flux does not have the power to natively substitute the SHA for the image inline. And since we are avoiding adding CICD tooling like GitHub, GitLab, Jenkins or Azure DevOps, we have no other way to edit the YAML inline.

However, as we tag all images with the immutable SHA, we know, from the manifest, which image matches devlatest. Additionally, we would likely have a follow-up release flow to move this app through deployment stages to production (which would likely involve regression and deeper integration testing).

The real goal here was to show that developers can get away from needing a CICD tool suite to build and deploy containerized apps. We can follow ReleaseFlow and orchestrate building, testing and deploying apps without more tooling layers.

And someday I would expect Flux to expose inline variables (though, for the record, I did check):