Published: Mar 8, 2022 by Isaac Johnson

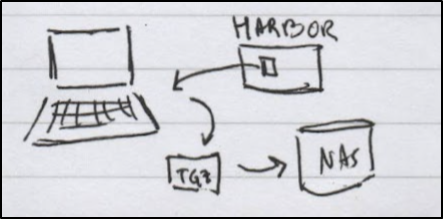

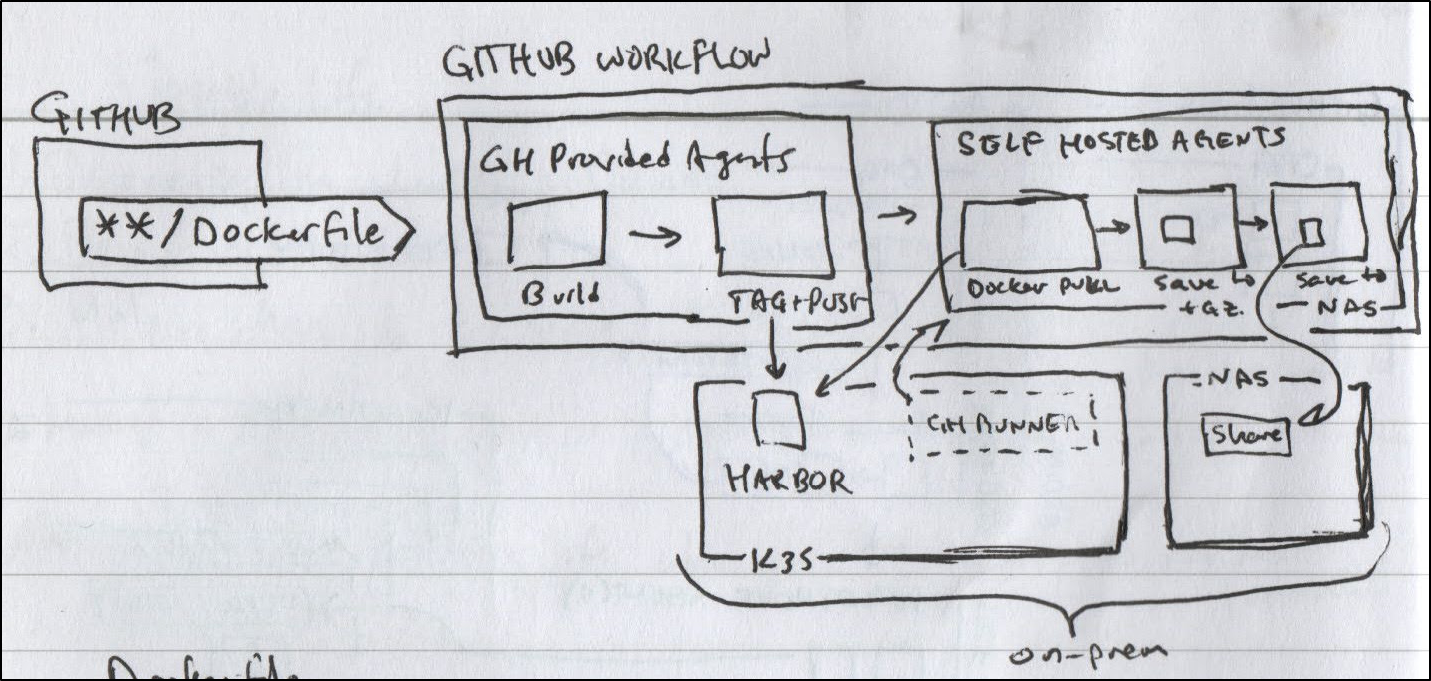

Recently my cluster took another nose dive. This really panicked me as I cannot blog (easily) without it - my private GitHub runners are hosted in my cluster and, moreover, so is the container registry (Harbor). Harbor is, to put it mildly, finicky. It’s a bit delicate and tends to fall down hard more often than I would like.

I do not fault Harbor, however. I beat the hell out of my cluster and it’s made out of commodity hardware (literally discarded laptops):

To mitigate this risk, and I think it’s a good strategy for anyone who depends on private hosting of a container registry, I decided it was high time to set up some basic replication to another cloud provider.

Current Setup

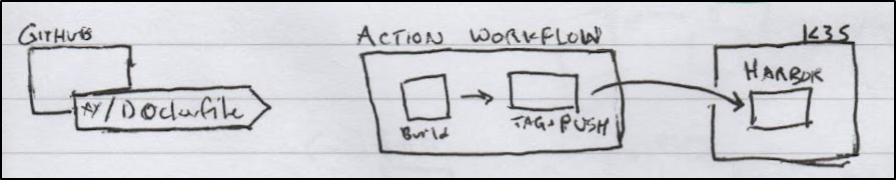

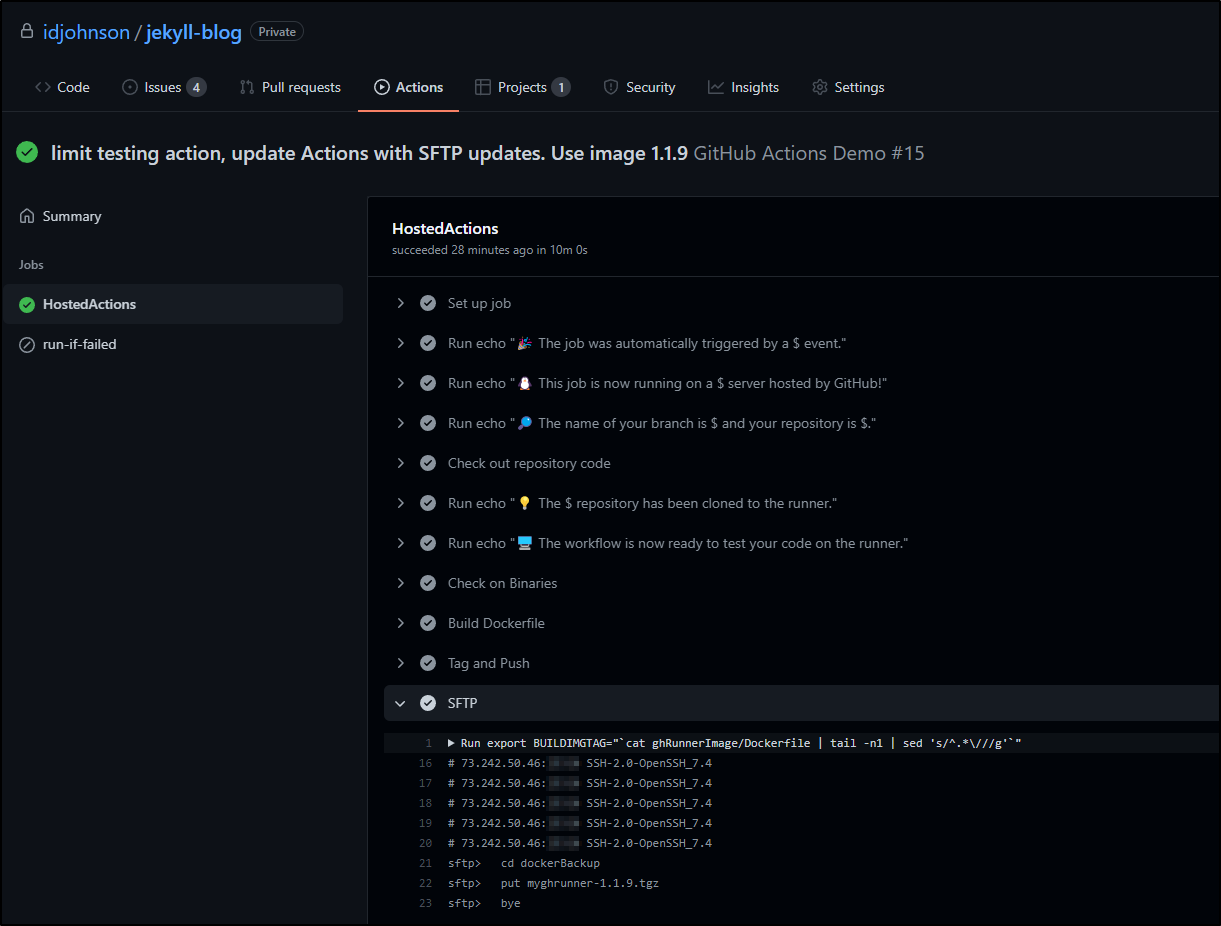

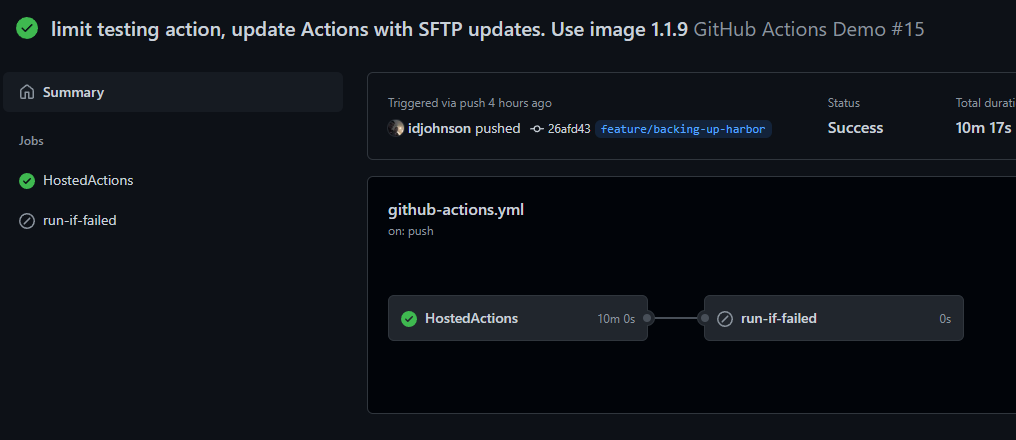

Presently, we have a GitHub Action that builds a new container image whenever a push changes a Dockerfile.

Looking at the actual Github Action flow you might notice we also have added Datadog events for tracking and notifications.

    name: GitHub Actions Demo
    on:
      push:
        paths:
          - "**/Dockerfile"
    jobs:
      HostedActions:
        runs-on: ubuntu-latest
        steps:
          - run: echo "🎉 The job was automatically triggered by a $ event."
          - run: echo "🐧 This job is now running on a $ server hosted by GitHub!"
          - run: echo "🔎 The name of your branch is $ and your repository is $."
          - name: Check out repository code
            uses: actions/checkout@v2
          - run: echo "💡 The $ repository has been cloned to the runner."
          - run: echo "🖥️ The workflow is now ready to test your code on the runner."
          - name: Check on Binaries
            run: |
              which az
              which aws
          - name: Build Dockerfile
            run: |
              export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
              docker build -t $BUILDIMGTAG ghRunnerImage
              docker images
          - name: Tag and Push
            run: |
              export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
              export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
              docker tag $BUILDIMGTAG $FINALBUILDTAG
              docker images
              echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin
              docker push $FINALBUILDTAG
            env: # Or as an environment variable
              CR_PAT: ${{ secrets.CR_PAT }}
              CR_USER: ${{ secrets.CR_USER }}
          - run: echo "🍏 This job's status is $."
          - name: Build count
            uses: masci/datadog@v1
            with:
              api-key: $DATADOG_API_KEY
              metrics: |
                - type: "count"
                  name: "dockerbuild.runs.count"
                  value: 1.0
                  host: ${{ github.repository_owner }}
                  tags:
                    - "project:${{ github.repository }}"
                    - "branch:${{ github.head_ref }}"
      run-if-failed:
        runs-on: ubuntu-latest
        needs: HostedActions
        if: always() && (needs.HostedActions.result == 'failure')
        steps:
          - name: Datadog-Fail
            uses: masci/datadog@v1
            with:
              api-key: ${{ secrets.DATADOG_API_KEY }}
              events: |
                - title: "Failed building Dockerfile"
                  text: "Branch ${{ github.head_ref }} failed to build"
                  alert_type: "error"
                  host: ${{ github.repository_owner }}
                  tags:
                    - "project:${{ github.repository }}"
          - name: Fail count
            uses: masci/datadog@v1
            with:
              api-key: ${{ secrets.DATADOG_API_KEY }}
              metrics: |
                - type: "count"
                  name: "dockerbuild.fails.count"
                  value: 1.0
                  host: ${{ github.repository_owner }}
                  tags:
                    - "project:${{ github.repository }}"
                    - "branch:${{ github.head_ref }}"

This works fine in so far as it builds the new image and pushes to my private Harbor.
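The two `sed` expressions in the workflow only make sense if the last line of the Dockerfile is a comment holding the full image reference. Here is a minimal sketch of that derivation. The sample last line is an assumption pieced together from image names used elsewhere in this post, and the helper names `build_tag` and `final_tag` are made up for illustration.

```shell
#!/bin/sh
# Assumed last line of ghRunnerImage/Dockerfile (hypothetical example value).
LAST_LINE='#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.4'

# Strip everything up to the final slash: leaves "name:tag" for the local build.
build_tag() { printf '%s\n' "$1" | sed 's/^.*\///g'; }

# Strip only the leading "#": leaves the full registry reference to push.
final_tag() { printf '%s\n' "$1" | sed 's/^#//g'; }

echo "local build tag: $(build_tag "$LAST_LINE")"
echo "push reference:  $(final_tag "$LAST_LINE")"
```

Keeping the reference in the Dockerfile means a version bump is a one-line change that also triggers the `**/Dockerfile` path filter.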

Quick Note on DD Events

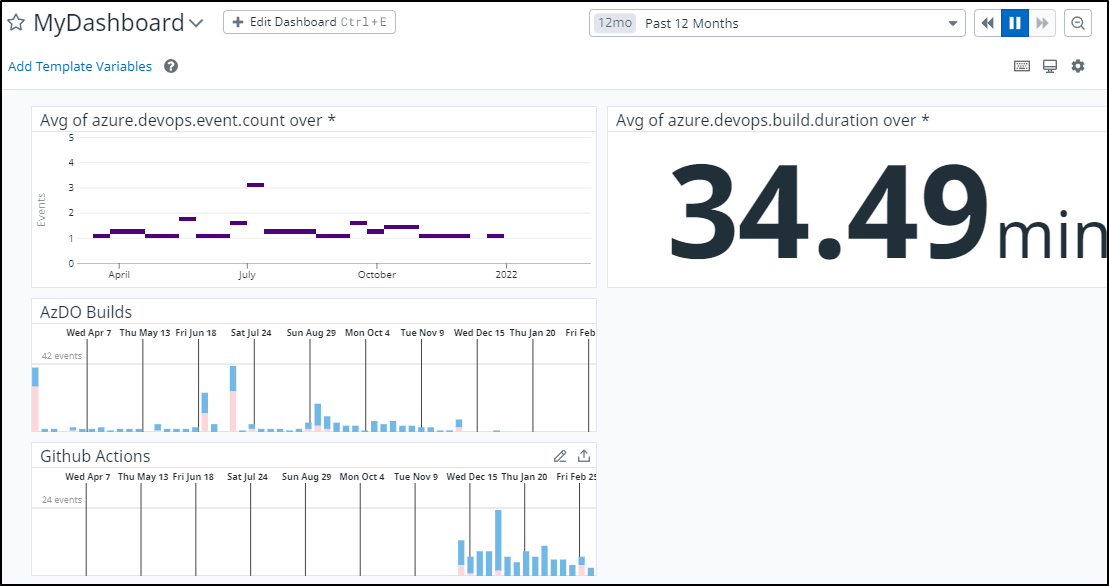

By tracking our Events in Datadog, I can see Azure DevOps stats commingled with GitHub Actions stats. Looking back 12 months, for instance, we can see when I transitioned from AzDO over to GitHub:

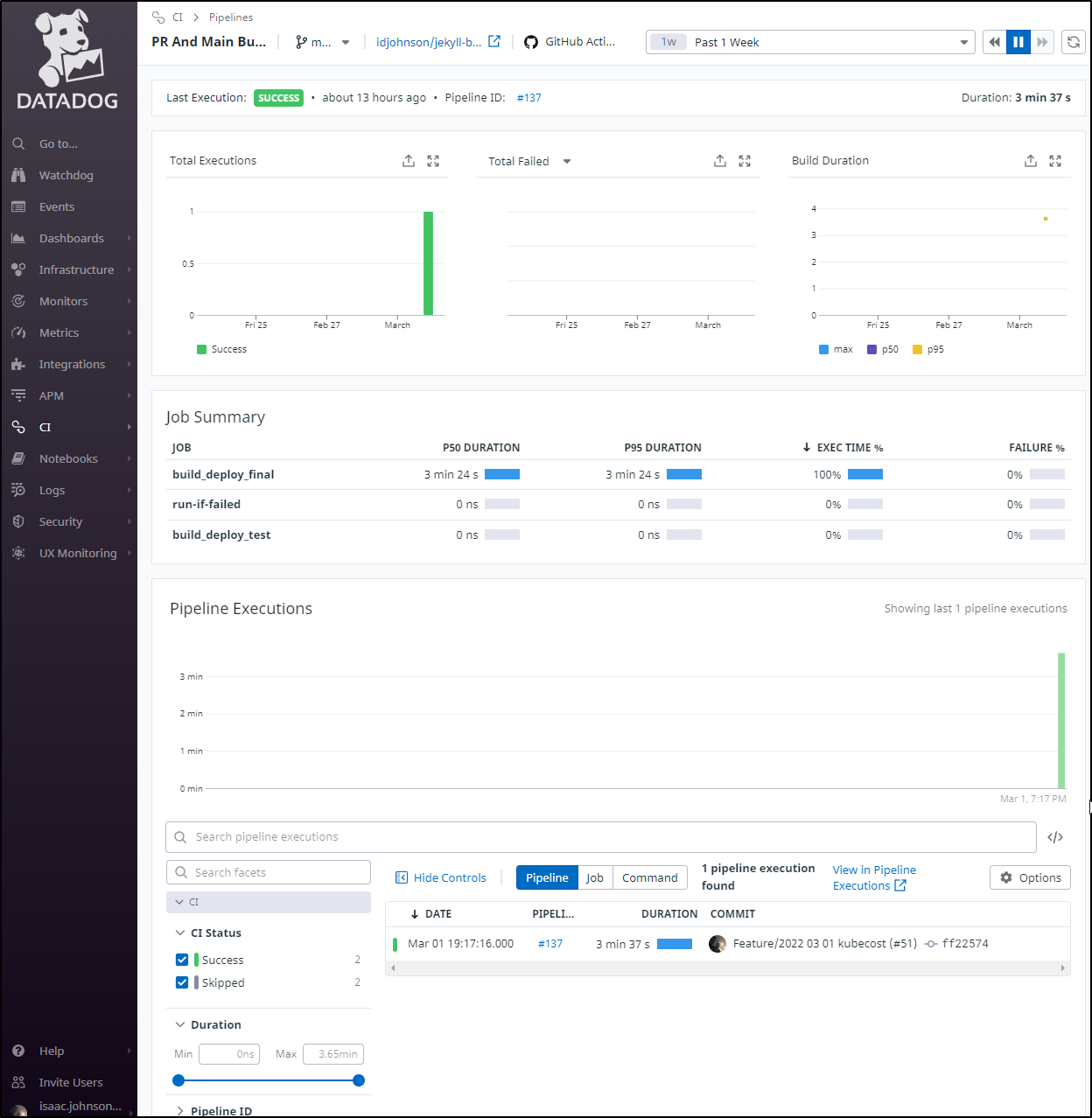

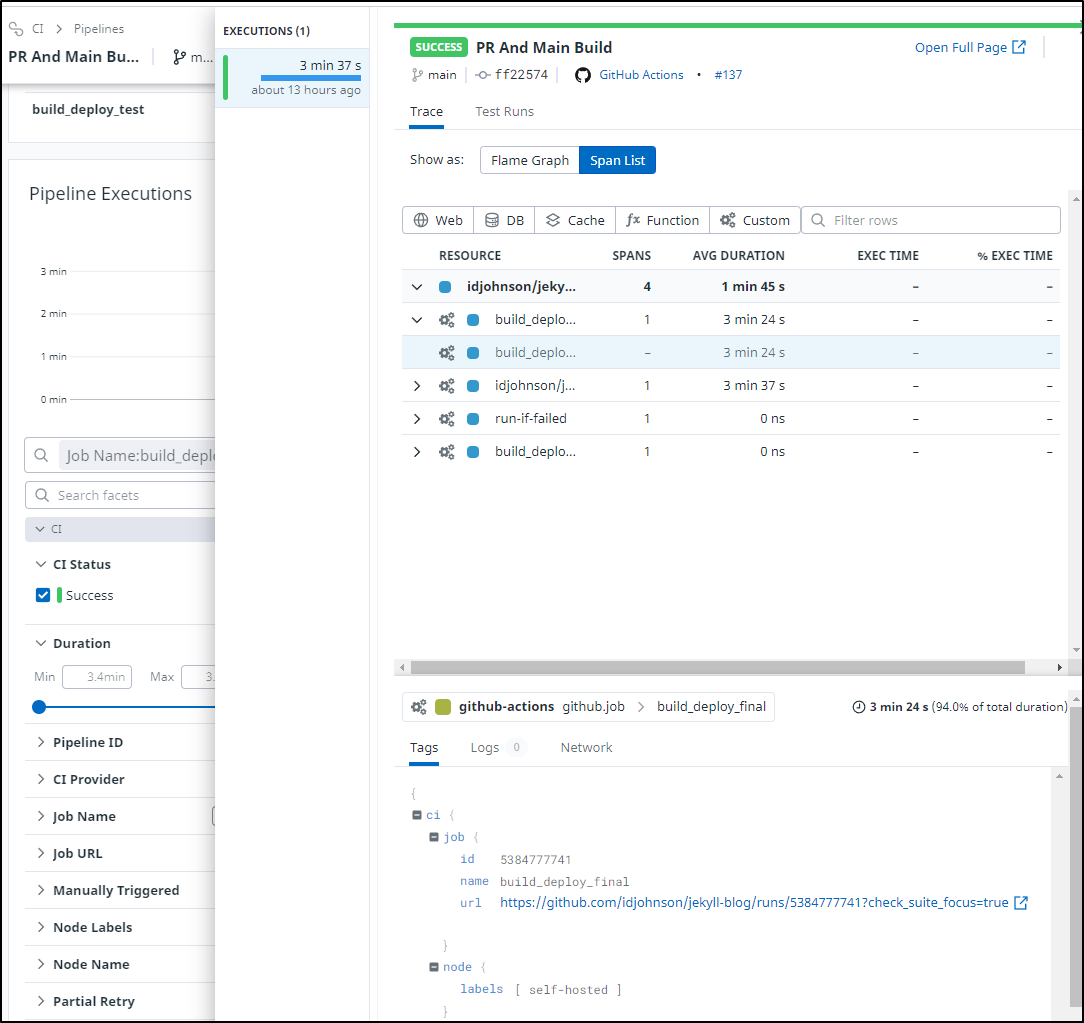

Using Datadog CI tracking, we can track our Github Action workflows with more detail than the raw events:

While CI is a feature add-on, we can basically dig into our builds like they are traces, which can be quite useful for large enterprises that have to contend with very long CI processes.

SPOF issues

Back to our topic at hand. The issue, of course, lies when the cluster is down. I have a SPOF (Single Point of Failure). This needs mitigation.

To start with, let’s see if we can push some images to Clouds that offer low-cost or free Container Registries.

Create IBM CR

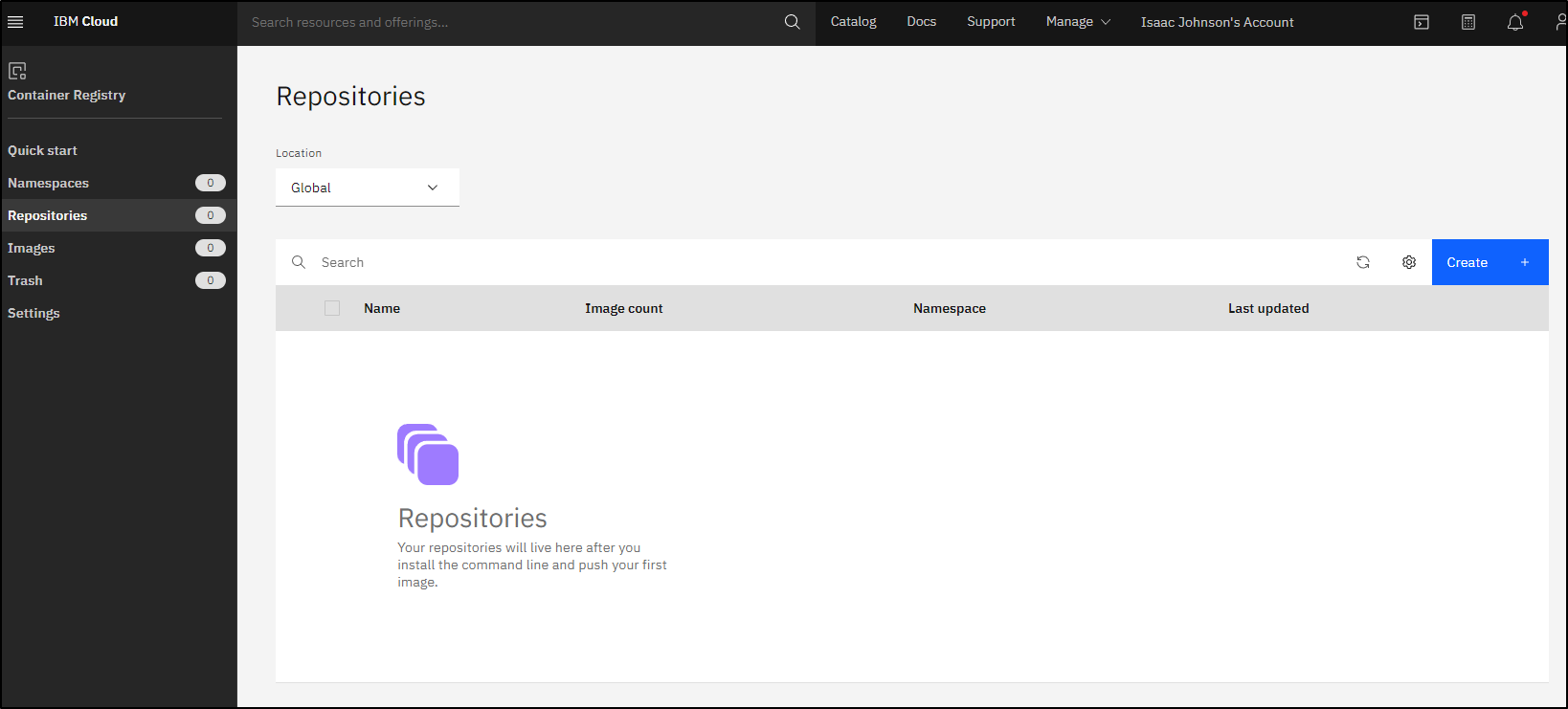

IBM Cloud may not be the biggest, but one thing it does offer is a very nice free tier Container Registry.

Login to IBM Cloud and go to Container Registry under Kubernetes:

Here we see we presently do not have any repositories synced. To sync some, we’ll need an API key. We covered the steps in our last guide but let’s do it one more time.

Install the CLI

builder@DESKTOP-QADGF36:~$ which ibmcloud

builder@DESKTOP-QADGF36:~$ curl -fsSL https://clis.cloud.ibm.com/install/linux | sh

Current platform is linux64. Downloading corresponding IBM Cloud CLI...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 12.0M 100 12.0M 0 0 25.9M 0 --:--:-- --:--:-- --:--:-- 25.9M

Download complete. Executing installer...

Bluemix_CLI/

Bluemix_CLI/bin/

Bluemix_CLI/bin/ibmcloud

Bluemix_CLI/bin/ibmcloud.sig

Bluemix_CLI/bin/NOTICE

Bluemix_CLI/bin/LICENSE

Bluemix_CLI/bin/CF_CLI_Notices.txt

Bluemix_CLI/bin/CF_CLI_SLC_Notices.txt

Bluemix_CLI/autocomplete/

Bluemix_CLI/autocomplete/bash_autocomplete

Bluemix_CLI/autocomplete/zsh_autocomplete

Bluemix_CLI/install

Bluemix_CLI/uninstall

Bluemix_CLI/install_bluemix_cli

Superuser privileges are required to run this script.

[sudo] password for builder:

Install complete.

builder@DESKTOP-QADGF36:~$ which ibmcloud

/usr/local/bin/ibmcloud

Install the CR Plugin:

$ ibmcloud plugin install container-registry -r 'IBM Cloud'

Looking up 'container-registry' from repository 'IBM Cloud'...

Plug-in 'container-registry 0.1.560' found in repository 'IBM Cloud'

Attempting to download the binary file...

10.41 MiB / 10.41 MiB [==========================================================================================================================================================================] 100.00% 0s

10915840 bytes downloaded

Installing binary...

OK

Plug-in 'container-registry 0.1.560' was successfully installed into /home/builder/.bluemix/plugins/container-registry. Use 'ibmcloud plugin show container-registry' to show its details.

Next, login:

$ ibmcloud login -a https://cloud.ibm.com

API endpoint: https://cloud.ibm.com

Email> isaac.johnson@gmail.com

Password>

Authenticating...

OK

Targeted account Isaac Johnson's Account (2189de6cf2374fcc8d59b2f019178a0b)

Select a region (or press enter to skip):

1. au-syd

2. in-che

3. jp-osa

4. jp-tok

5. kr-seo

6. eu-de

7. eu-gb

8. ca-tor

9. us-south

10. us-east

11. br-sao

Enter a number> 9

Targeted region us-south

API endpoint: https://cloud.ibm.com

Region: us-south

User: isaac.johnson@gmail.com

Account: Isaac Johnson's Account (2189de6cf2374fcc8d59b2f019178a0b)

Resource group: No resource group targeted, use 'ibmcloud target -g RESOURCE_GROUP'

CF API endpoint:

Org:

Space:

Ensure our Region is US-South

$ ibmcloud cr region-set us-south

The region is set to 'us-south', the registry is 'us.icr.io'.

OK

Next we want to add a namespace (if not already there)

$ ibmcloud cr namespace-add freshbrewedcr

No resource group is targeted. Therefore, the default resource group for the account ('Default') is targeted.

Adding namespace 'freshbrewedcr' in resource group 'Default' for account Isaac Johnson's Account in registry us.icr.io...

The requested namespace is already owned by your account.

OK

Even though I am aware I already created a “MyKey”, I’ll do it again (to rotate creds)

$ ibmcloud iam api-key-create MyKey -d "this is my API key" --file ibmAPIKeyFile

Creating API key MyKey under 2189de6cf2374fcc8d59b2f019178a0b as isaac.johnson@gmail.com...

OK

API key MyKey was created

Successfully save API key information to ibmAPIKeyFile

We can see the values (albeit obfuscated for the blog)

$ cat ibmAPIKeyFile | sed 's/[0-9]/0/g' | sed 's/apikey": ".*/apikey": "----masked-----","/g'

{

"id": "ApiKey-d0000aad-0f00-0000-b0ef-0000f00e0000",

"crn": "crn:v0:bluemix:public:iam-identity::a/0000de0cf0000fcc0d00b0f000000a0bF::apikey:ApiKey-d0000aad-0f00-0000-b0ef-0000f00e0000",

"iam_id": "IBMid-000000Q0G0",

"account_id": "0000de0cf0000fcc0d00b0f000000a0b",

"name": "MyKey",

"description": "this is my API key",

"apikey": "----masked-----","

"locked": false,

"entity_tag": "0-e0e00a00000b0cb00000d000000a0e00",

"created_at": "0000-00-00T00:00+0000",

"created_by": "IBMid-000000Q0G0",

"modified_at": "0000-00-00T00:00+0000"

}
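The masking `sed` above leaves a stray `","` after the masked value, so the printed output is no longer valid JSON. A slightly tighter variant, sketched below, replaces only the text between the quotes. The function name `mask_apikey` and the sample input line are hypothetical.

```shell
#!/bin/sh
# Mask only the value between the quotes so the surrounding JSON stays intact.
mask_apikey() {
  sed -E 's/("apikey": *")[^"]*(")/\1----masked-----\2/'
}

# Hypothetical sample line standing in for the real key file.
printf '%s\n' '  "apikey": "abc123-NOT-A-REAL-KEY",' | mask_apikey
```

In practice this would be used as `cat ibmAPIKeyFile | sed 's/[0-9]/0/g' | mask_apikey`.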

Next I want to create a Principal - an IBM Service ID:

$ ibmcloud iam service-id-create newk3s-default-id --description "New Service ID for IBM Cloud Container Registry in Kubernetes Cluster k3s namespace default"

Creating service ID newk3s-default-id bound to current account as isaac.johnson@gmail.com...

OK

Service ID newk3s-default-id is created successfully

ID ServiceId-asdfasdfasdf-asdfasdf-asdf-asdf-asdfasfasdfasdf

Name newk3s-default-id

Description New Service ID for IBM Cloud Container Registry in Kubernetes Cluster k3s namespace default

CRN crn:v1:bluemix:public:iam-identity::a/asdfasdfasdfasdfasdfasdf::serviceid:ServiceId-asdfasfd-0a60-asfd-asdf-asdfasdfasdf

Version 1-asdfasdfasdfasdfasdfasdfas

Locked false

We’ll bind that ID to a Manager role via an IAM Policy

$ ibmcloud iam service-policy-create newk3s-default-id --roles Manager

Creating policy under current account for service ID newk3s-default-id as isaac.johnson@gmail.com...

OK

Service policy is successfully created

Policy ID: a8fd6b2b-0df2-4a0b-b668-313bd1111ade

Version: 1-a626e911650f4ab06b666d42d496305c

Roles: Manager

Resources:

Service Type All resources in account

Lastly, get an API Key so we can use it

$ ibmcloud iam service-api-key-create newk3s-default-key newk3s-default-id --description 'API key for service ID newk3s-default-id is Kubernetes cluster localk3s namespace default'

Creating API key newk3s-default-key of service ID newk3s-default-id under account 2189de6cf2374fcc8d59b2f019178a0b as isaac.johnson@gmail.com...

OK

Service ID API key newk3s-default-key is created

Please preserve the API key! It cannot be retrieved after it's created.

ID ApiKey-asdfasdf-asdf-asdf-asdf-985asdfasdfb2f5e9015

Name newk3s-default-key

Description API key for service ID newk3s-default-id is Kubernetes cluster localk3s namespace default

Created At 2022-02-23T13:56+0000

API Key ******************MYNEWAPIKEY******************

Locked false

Harbor Replication to IBM Cloud

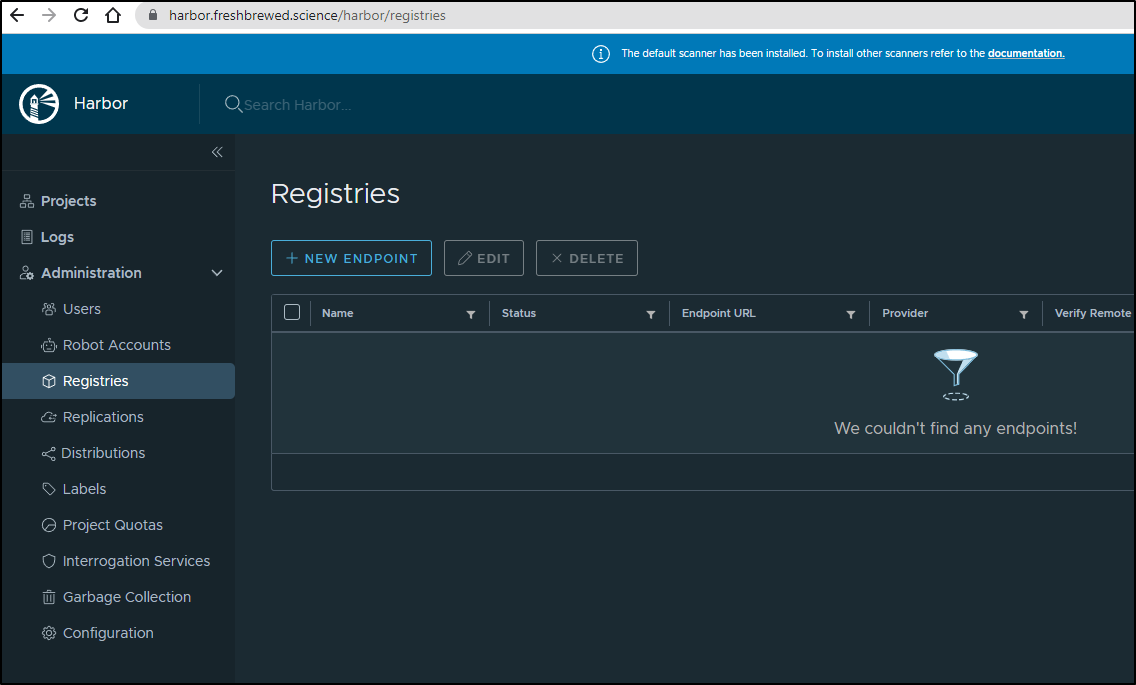

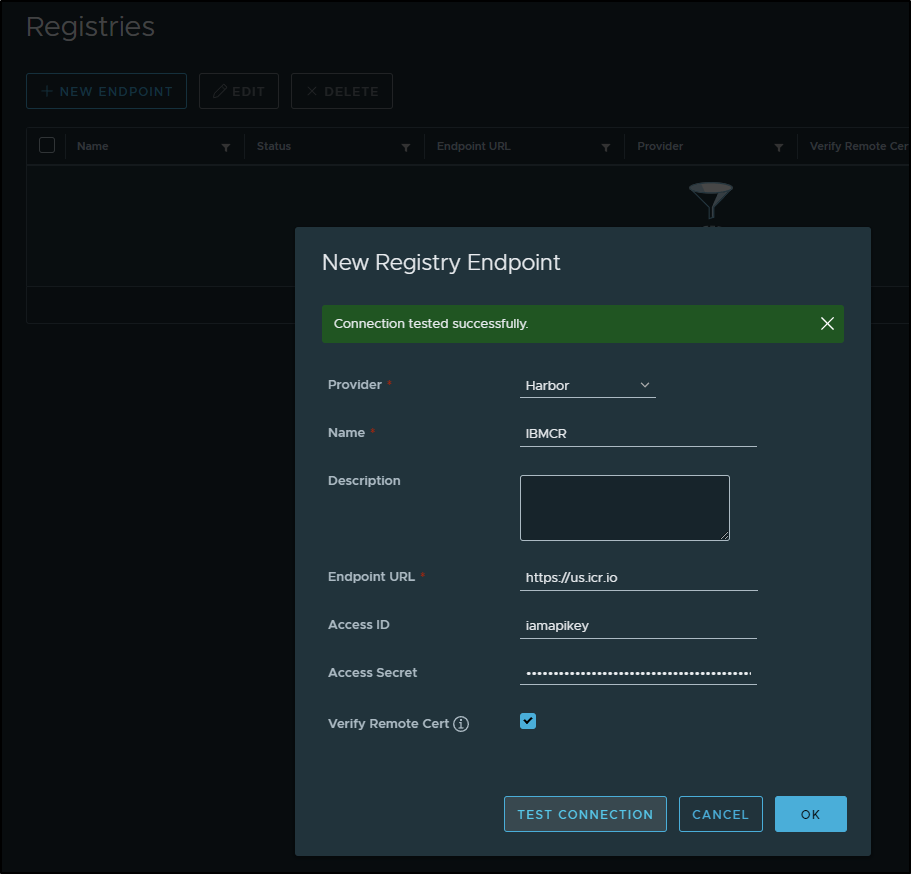

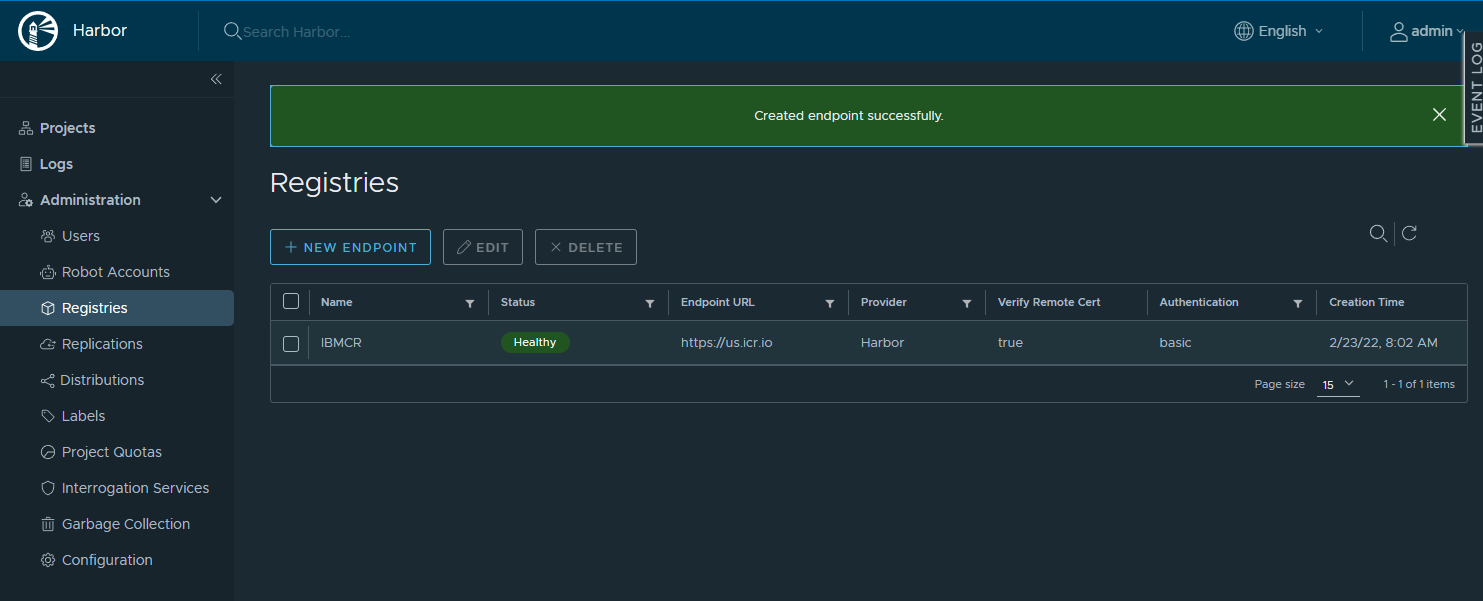

First, we need to create a new Harbor Registry Endpoint

For the endpoint URL use the IBM CR endpoint we saw above when we ensured our region was US South (us.icr.io).

The username will be iamapikey and the “Access Secret” should match the API Key we just created (******MYNEWAPIKEY******).

You can click “Test Connection” (as I did above) to see that the login works. Then save to add to endpoints

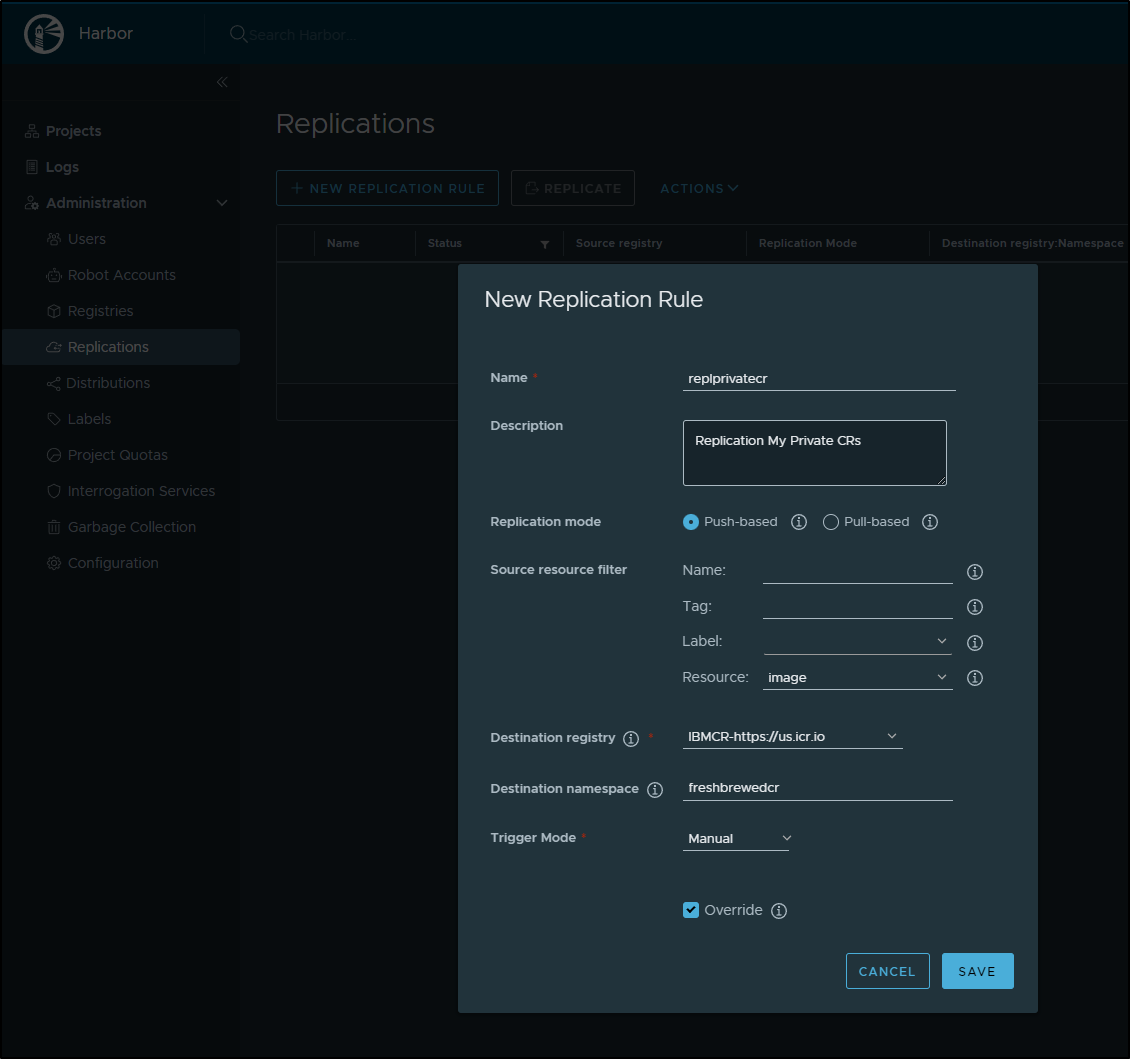

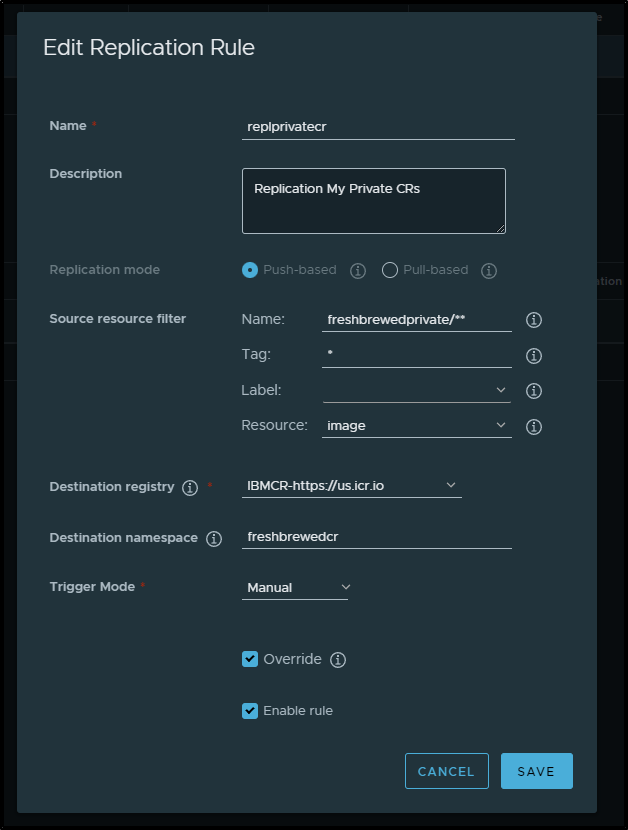

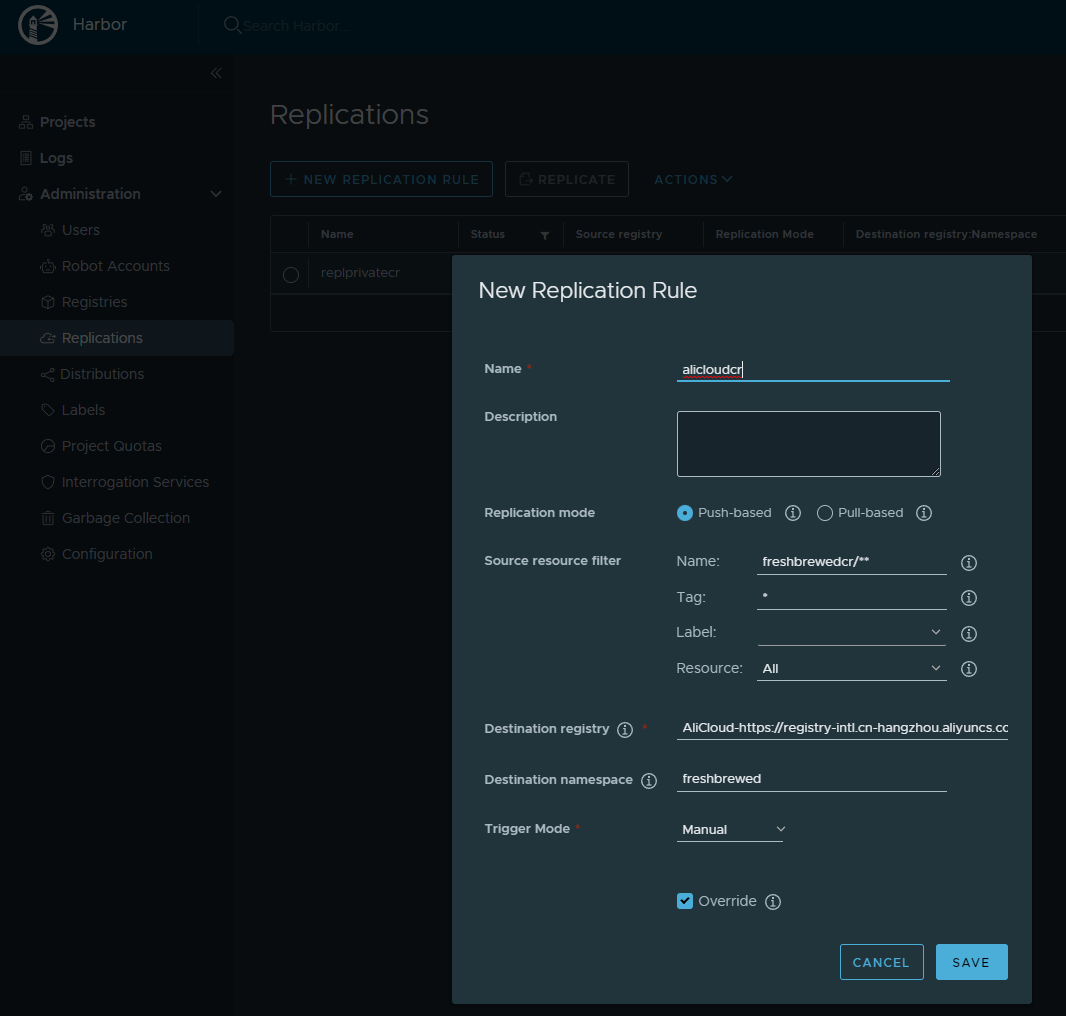

Under Administration/Replications, click “+ New Replication Rule” to create a new rule

We will use our CR and the namespace we created earlier (freshbrewedcr). In this case, I’m setting the Trigger mode to Manual (instead of Scheduled or Event Based).

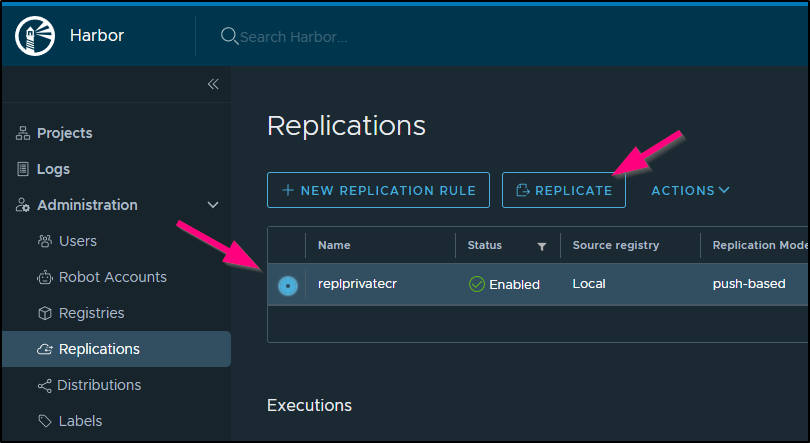

Next, let us replicate our images

It will prompt us to confirm, then start an execution

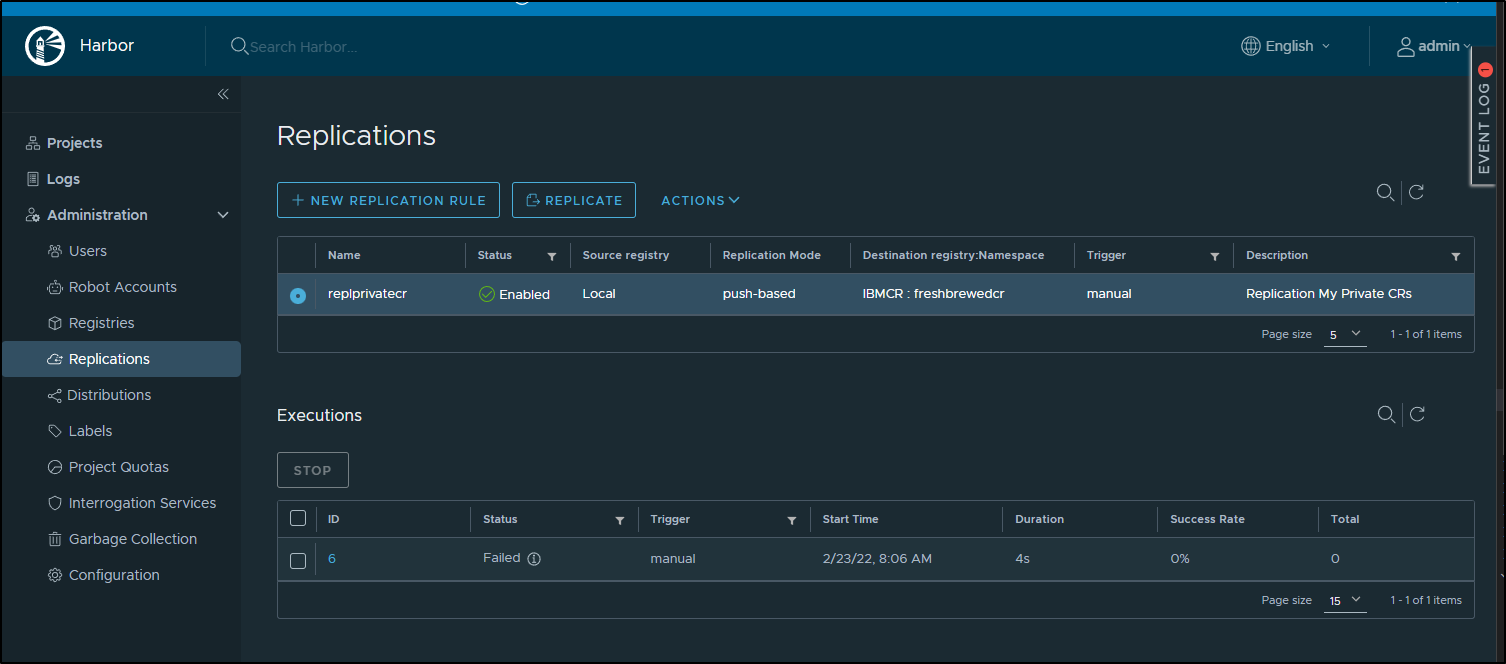

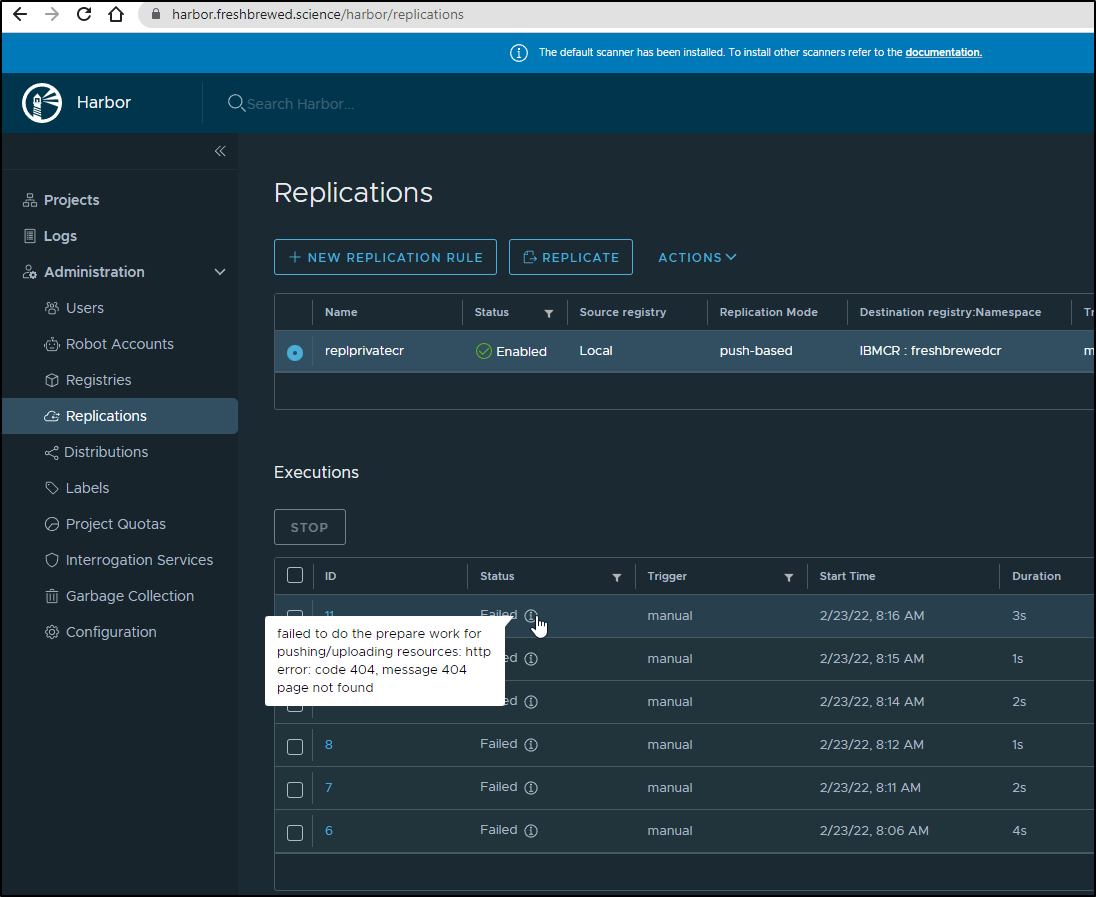

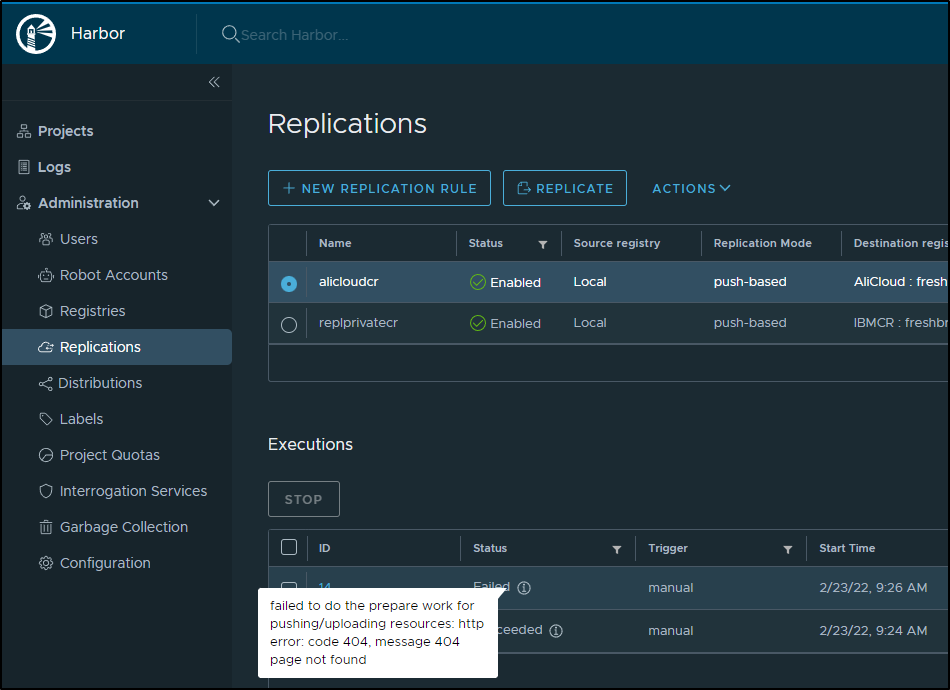

However, no format seemed to work.

I just got 404 errors

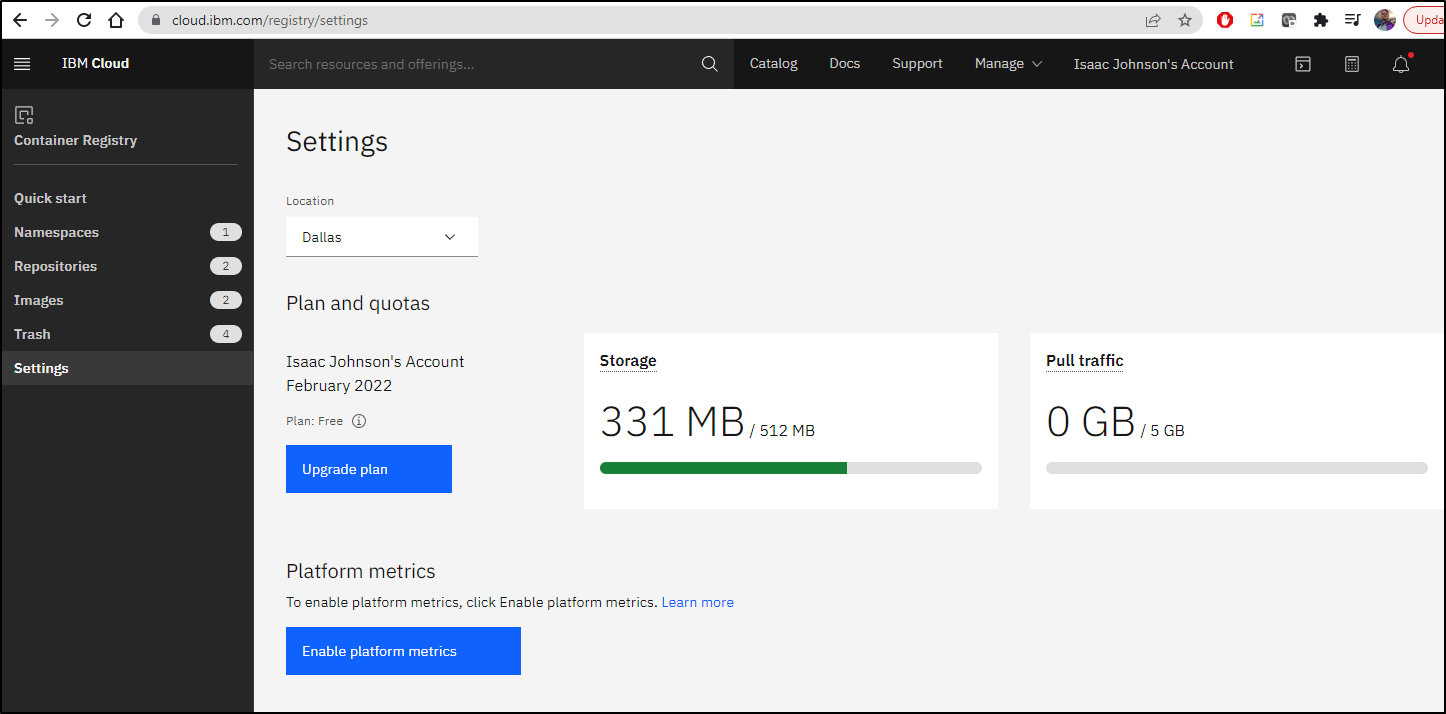

I even verified I was within space boundaries in settings:

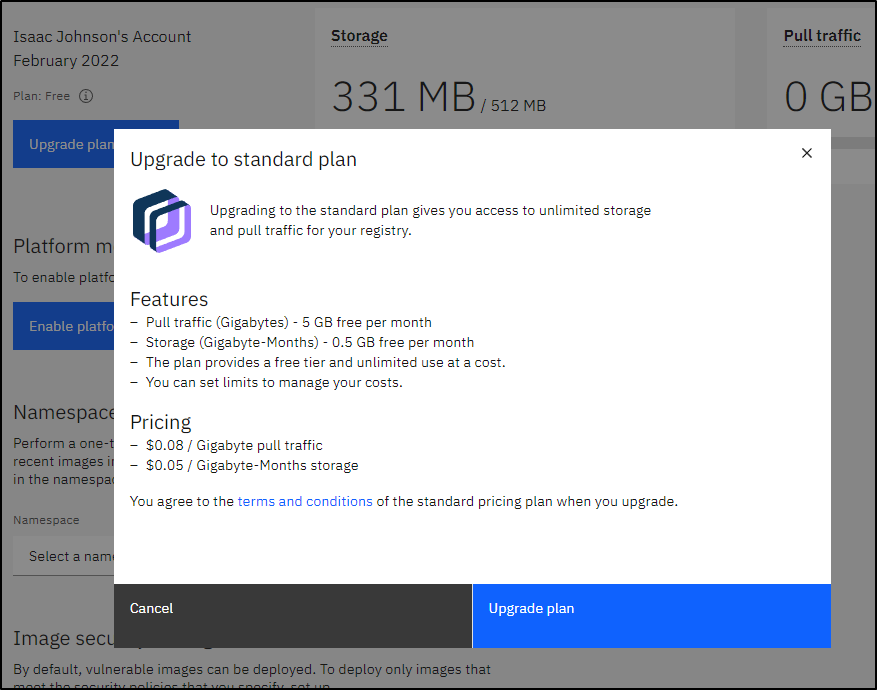

I should point out that IBM CR paid-tier pricing is pretty inexpensive:

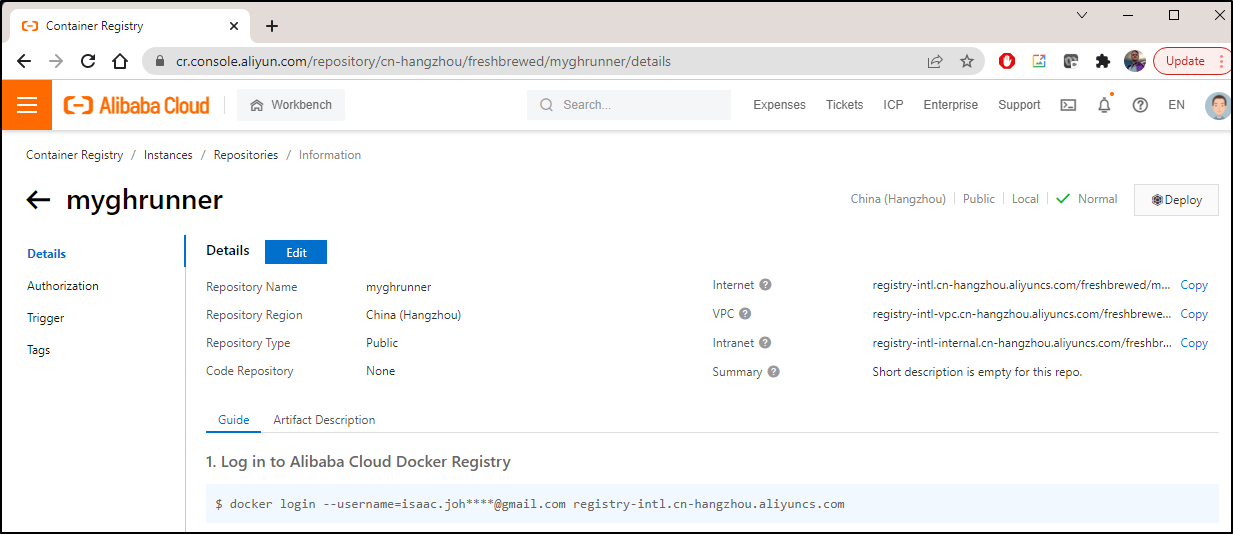

Replication to Ali Cloud

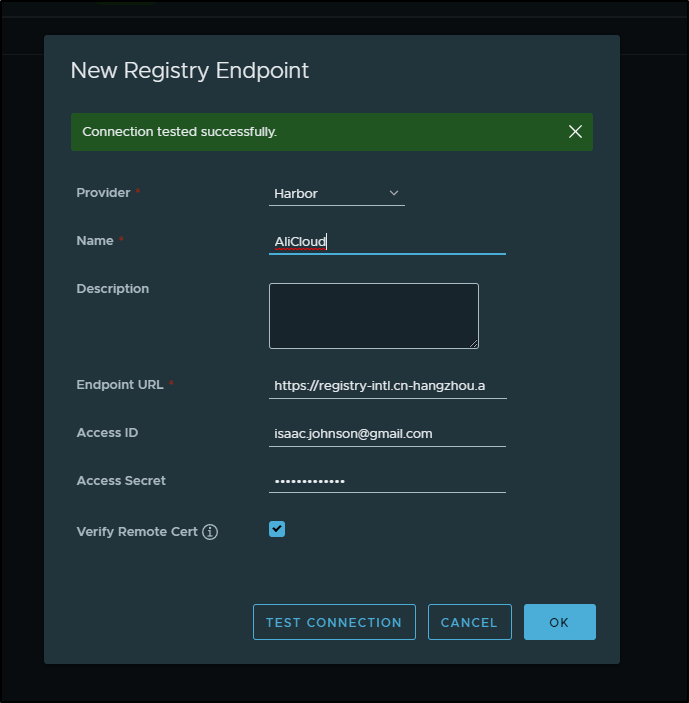

I tried the same with AliCloud

First, I created a valid endpoint

Then a replication rule (also tried “**” and only images)

And that too failed

Replication to ACR

Not willing to give up just yet, I tried one more time, this time to Azure.

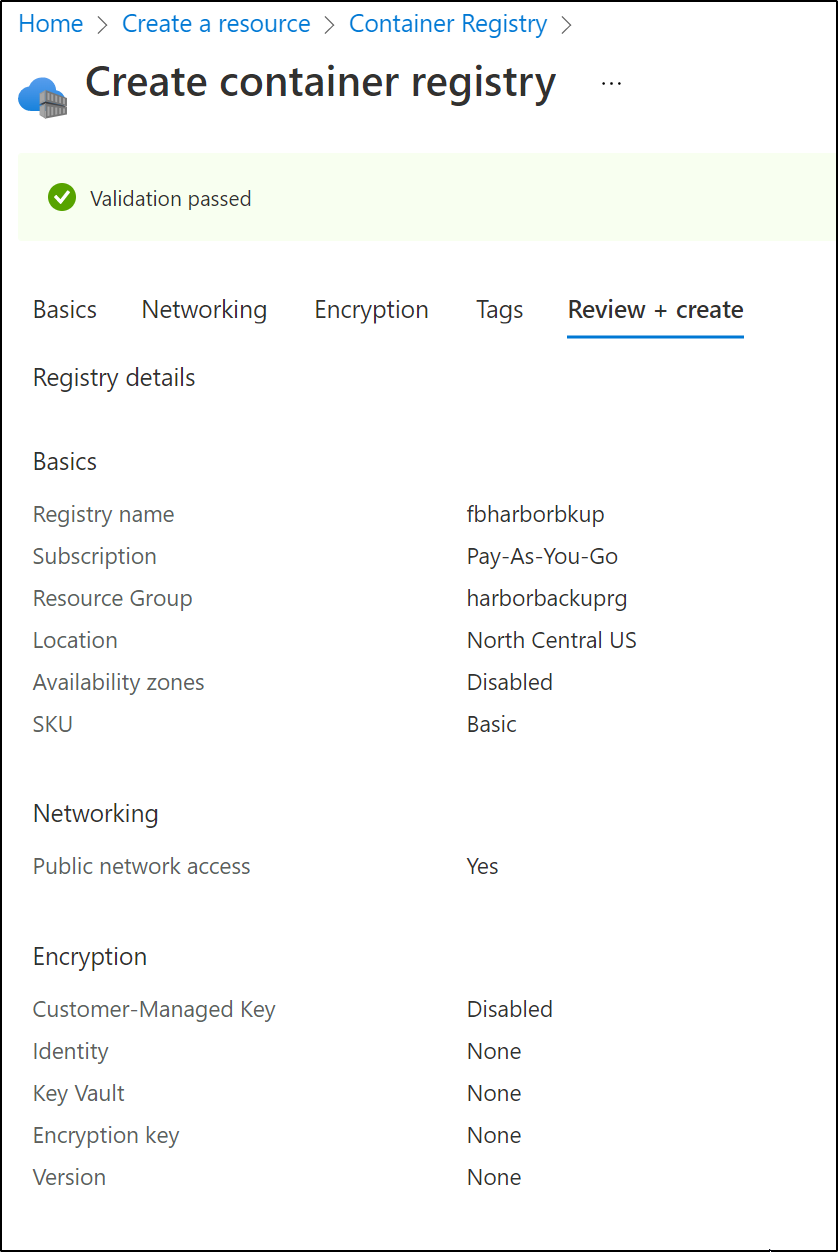

First, I created a new Container Registry from “Create a Resource”

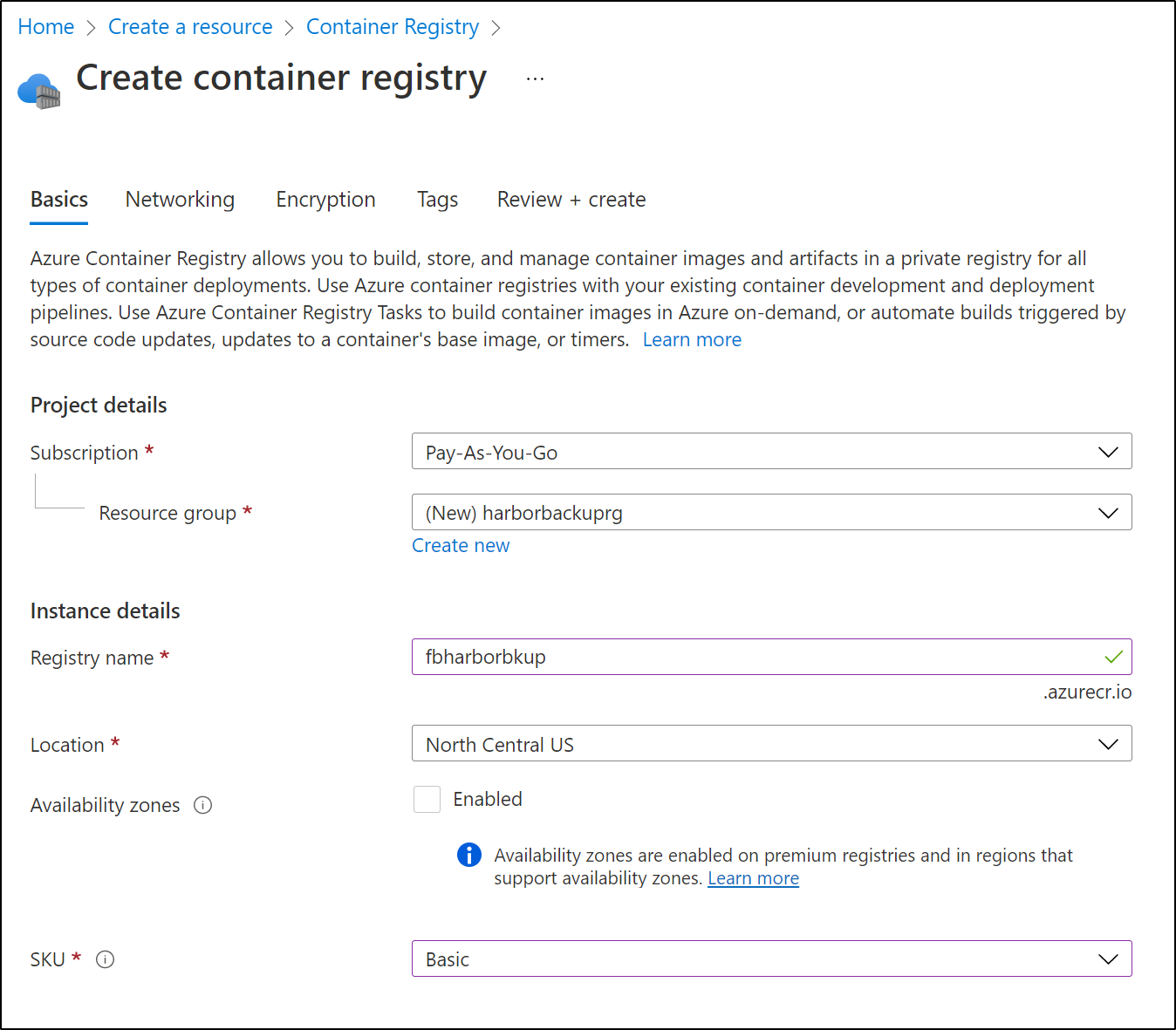

Fill in a name, location, resource group and sku

Then click create

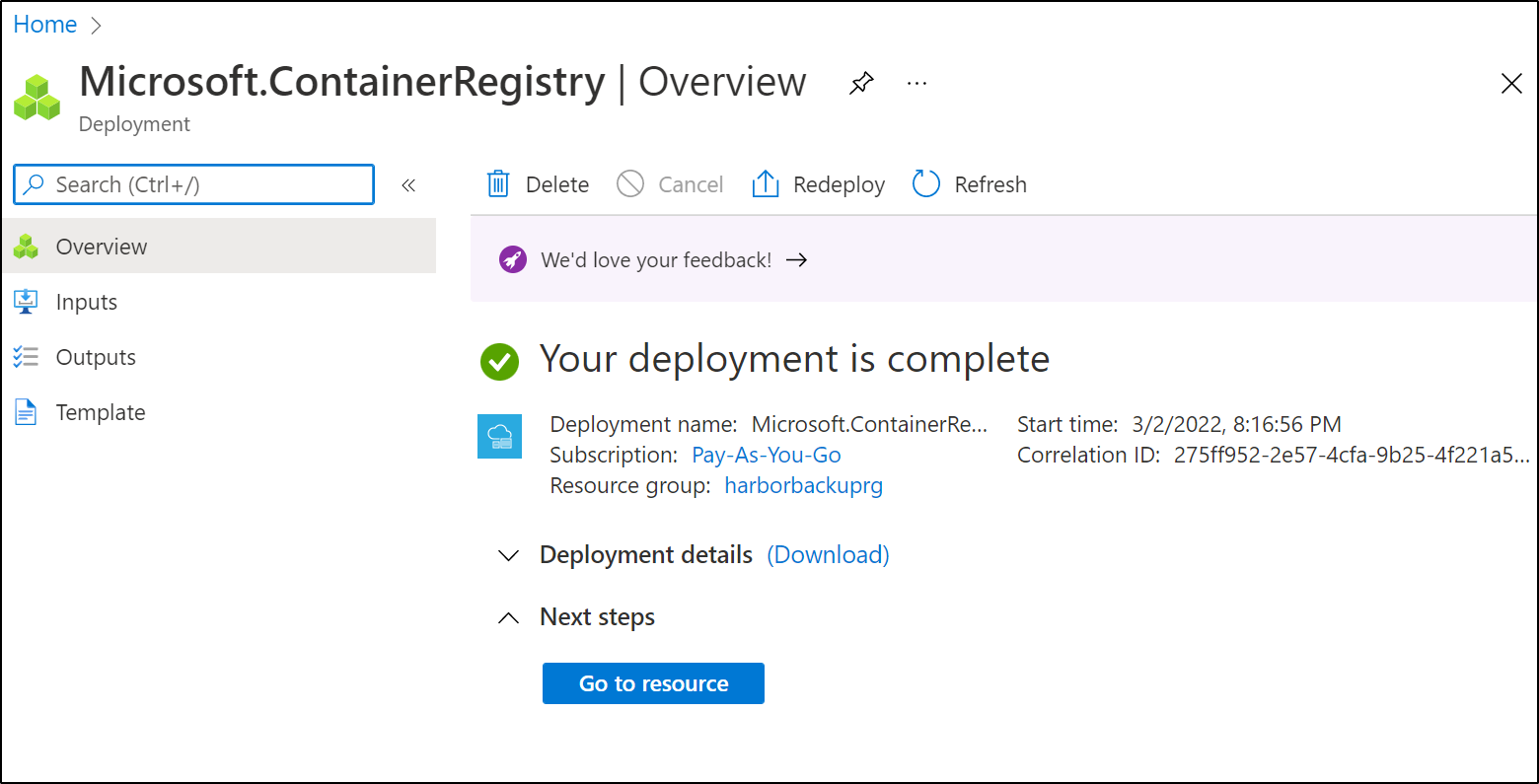

When complete

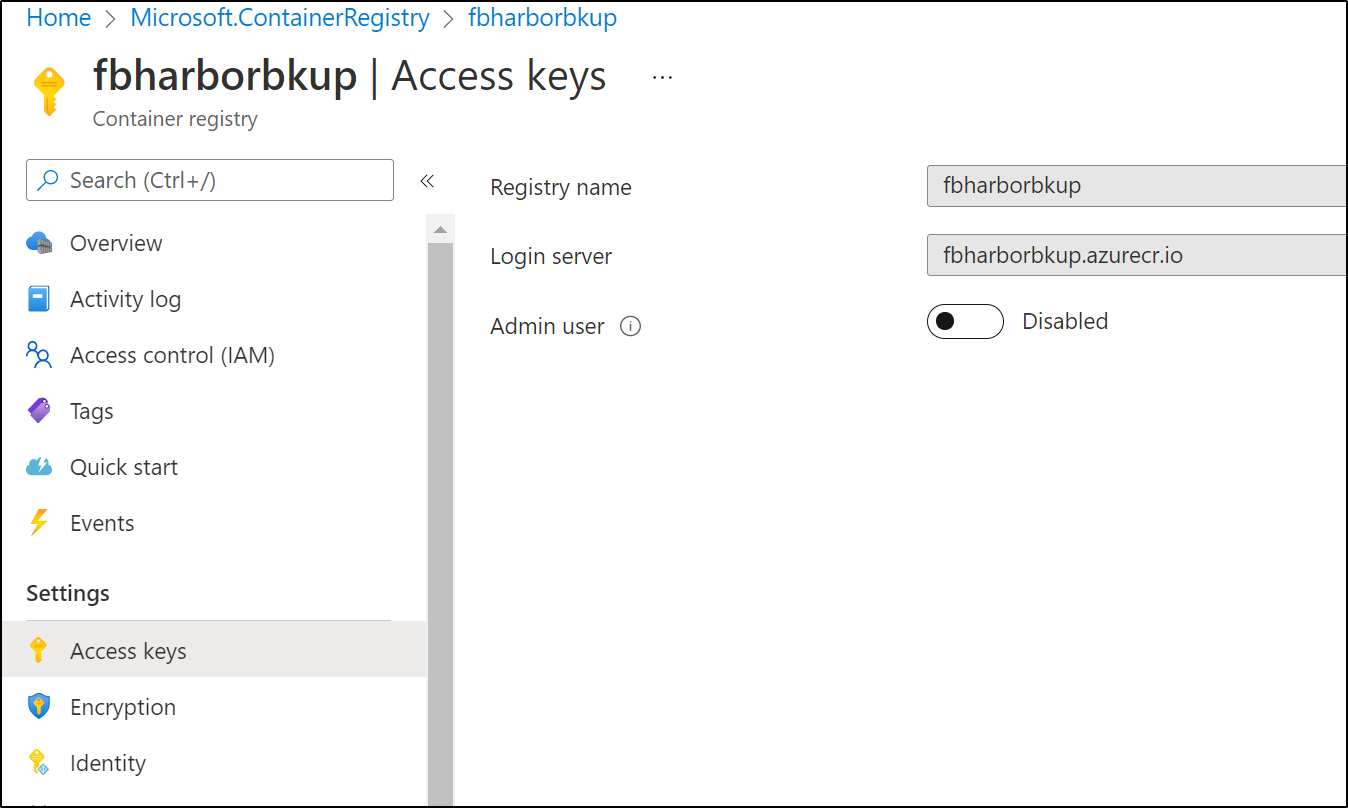

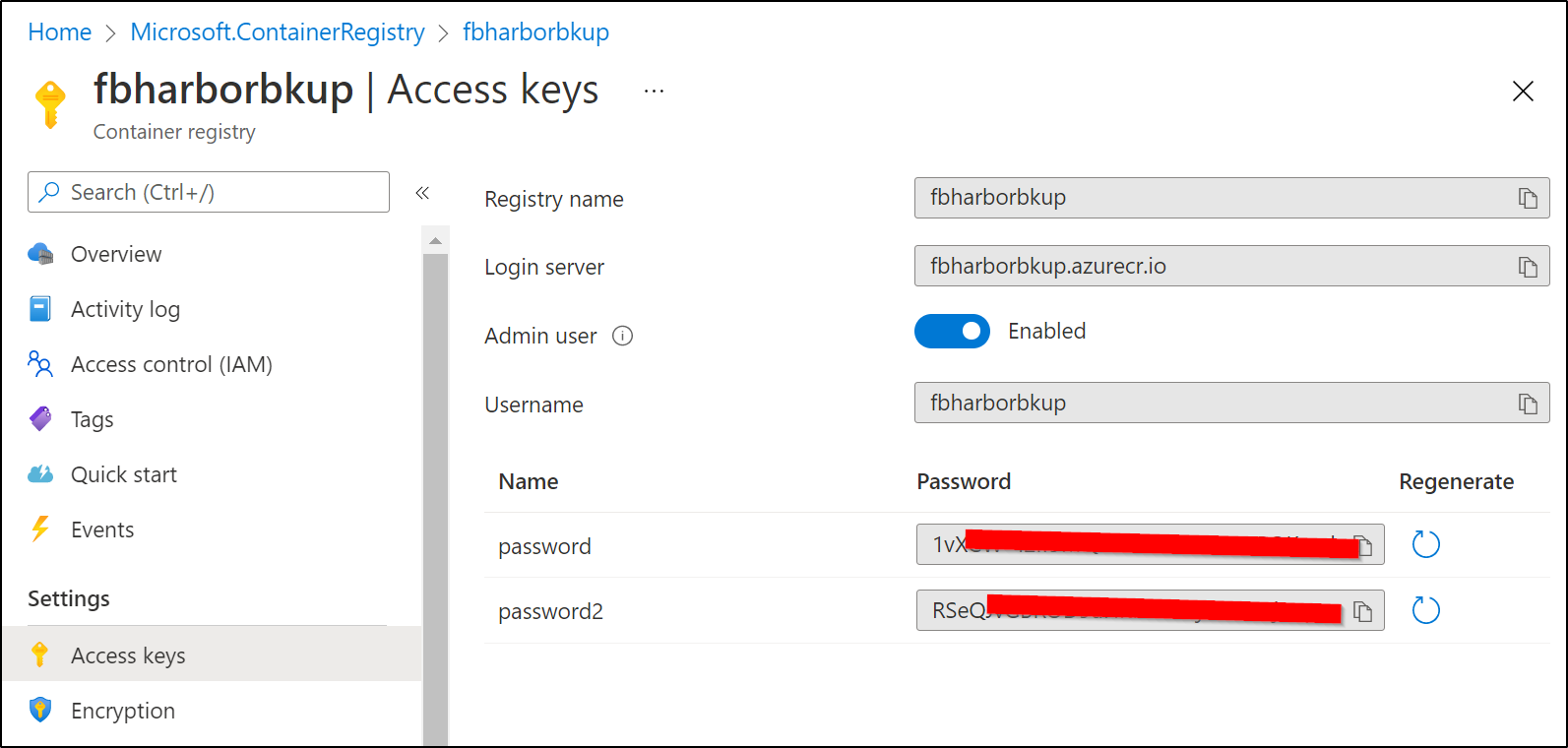

We can go to the CR and under Settings/Access keys, enable the admin user

When enabled, we will see two passwords and the username (either password works).

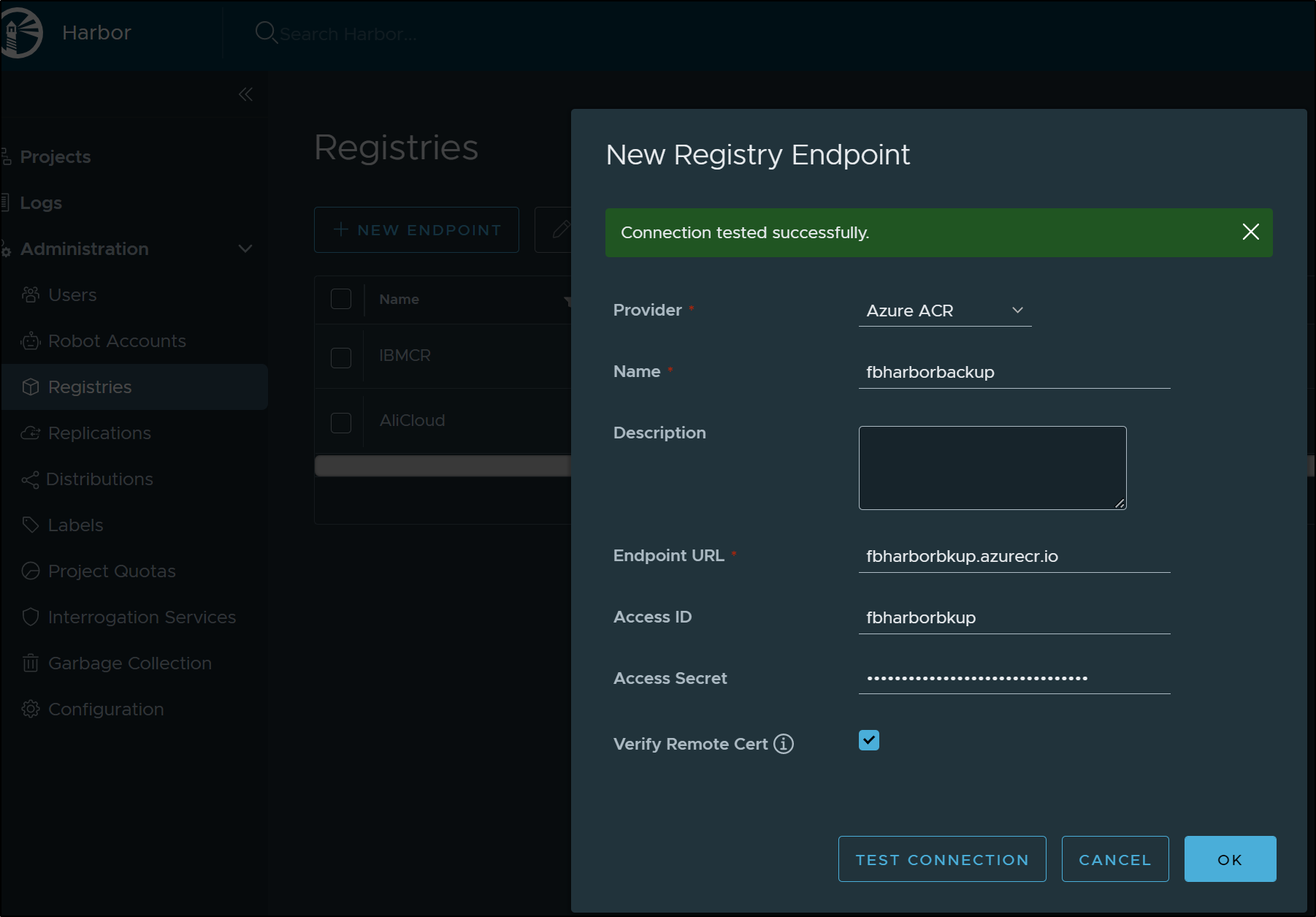

Logging into Harbor, I’ll add a new Registry Endpoint.

In my first attempt, I used “Azure ACR” for the type (seems redundant to say Azure Azure Container Registry)

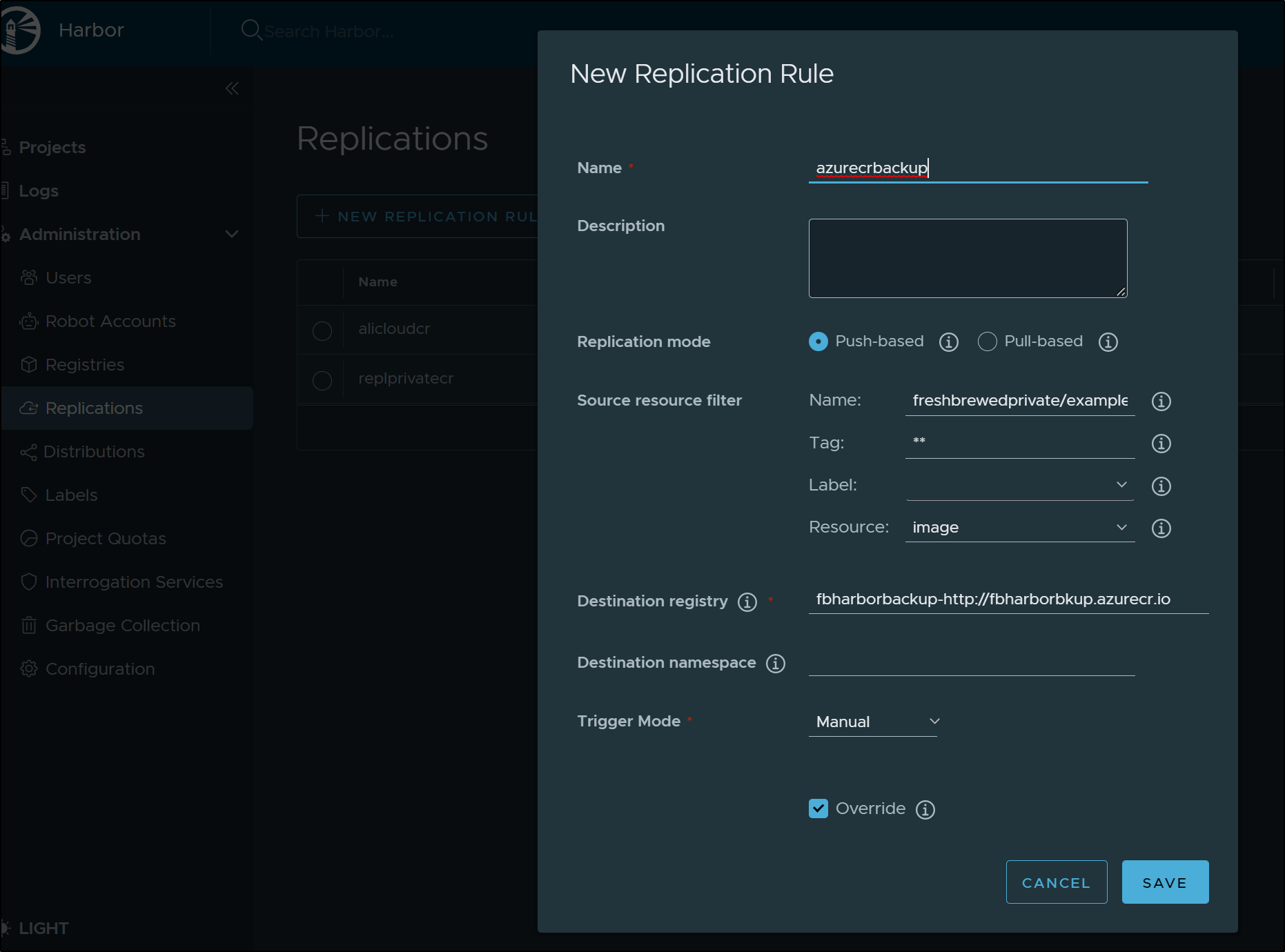

Then I created a replication rule

And invoked it

The problem was that it did not copy to the new destination. For whatever reason, it used the same registry for source and target, the target being my own Harbor registry. It has to be some sort of odd bug.

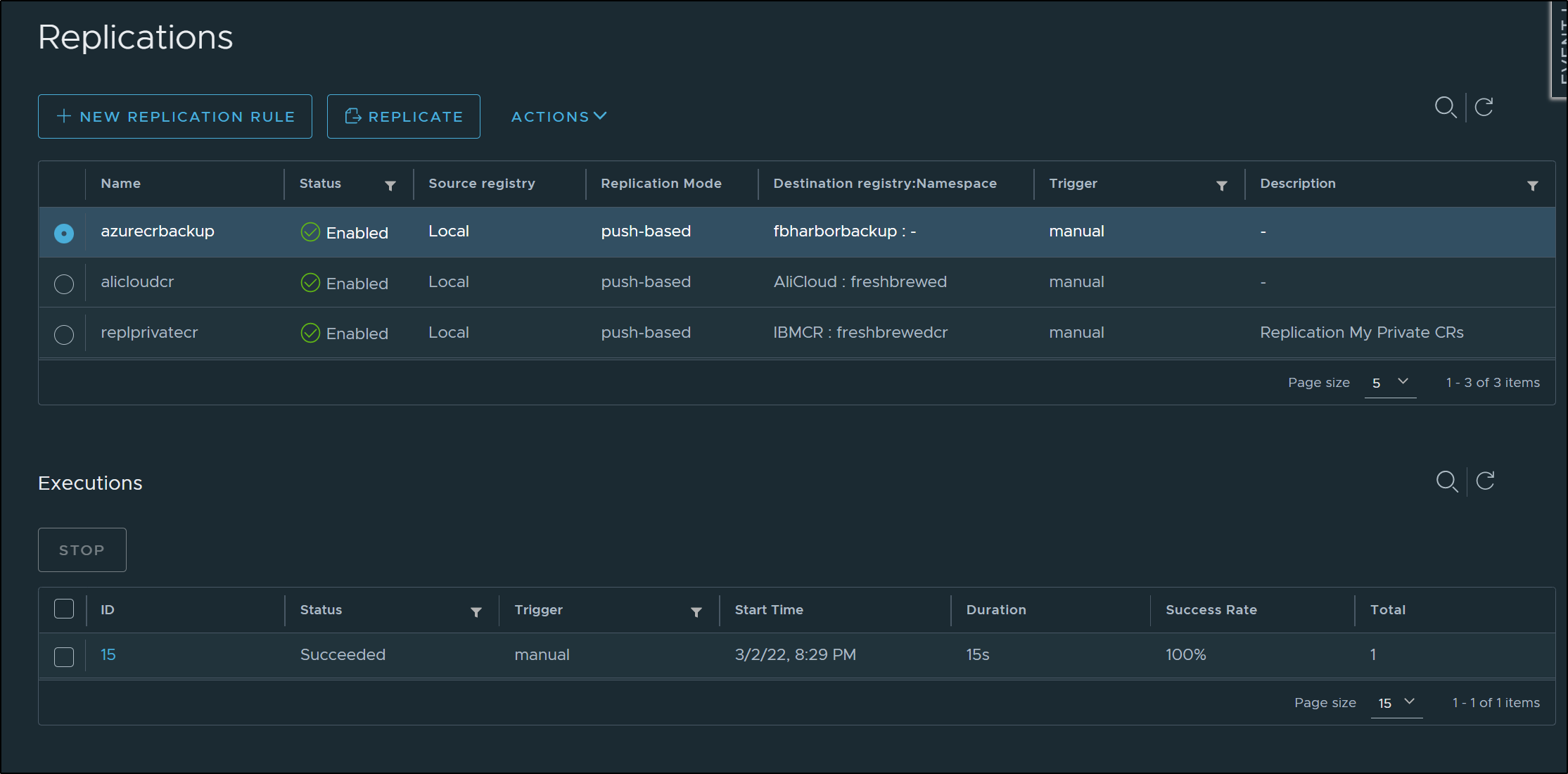

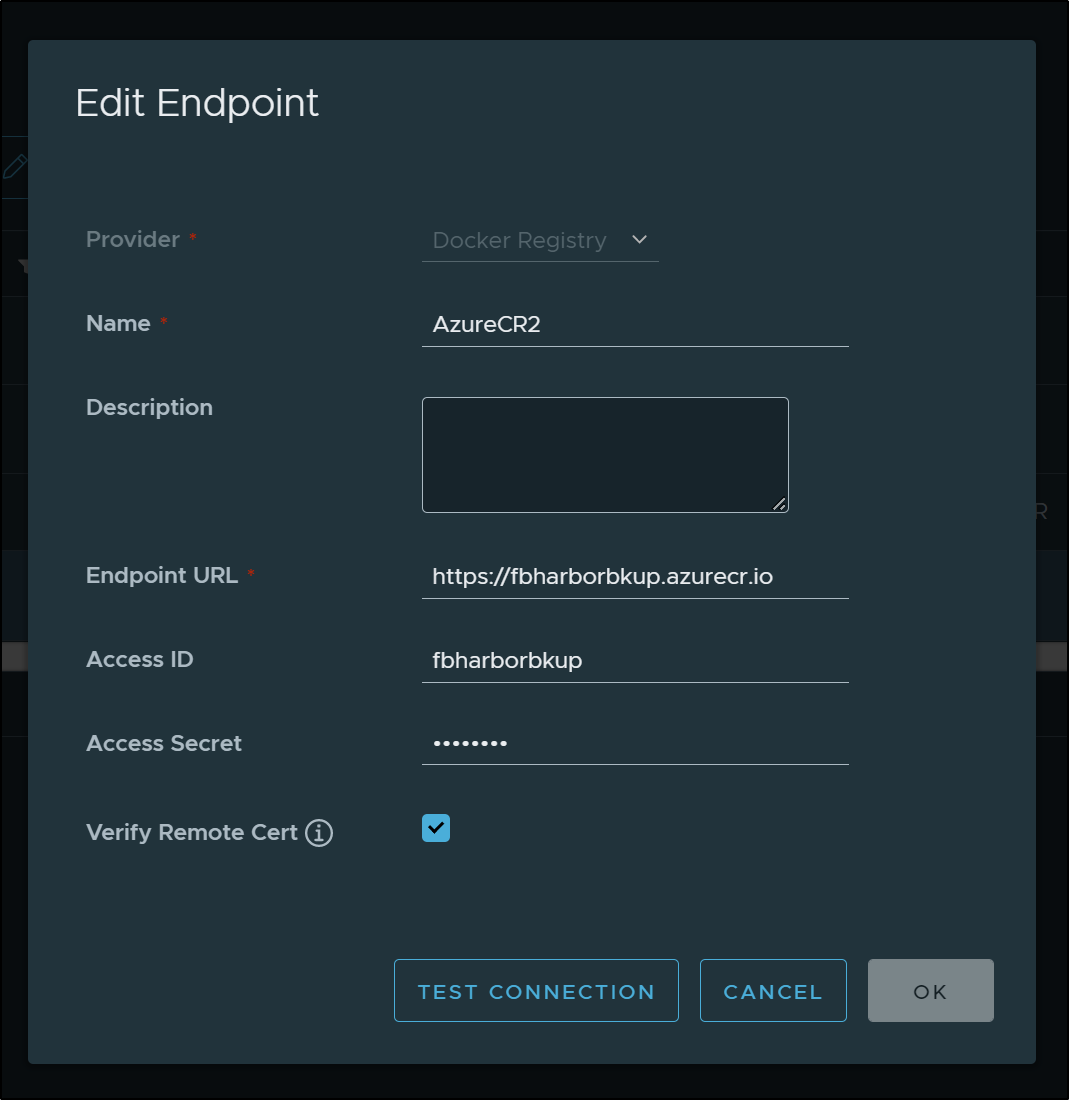

I went back and created a new Endpoint, this time using a standard “Docker Registry” provider

I just changed the destination in the replication rule to use “AzureCR2” and upon invoking manually, it worked just fine.

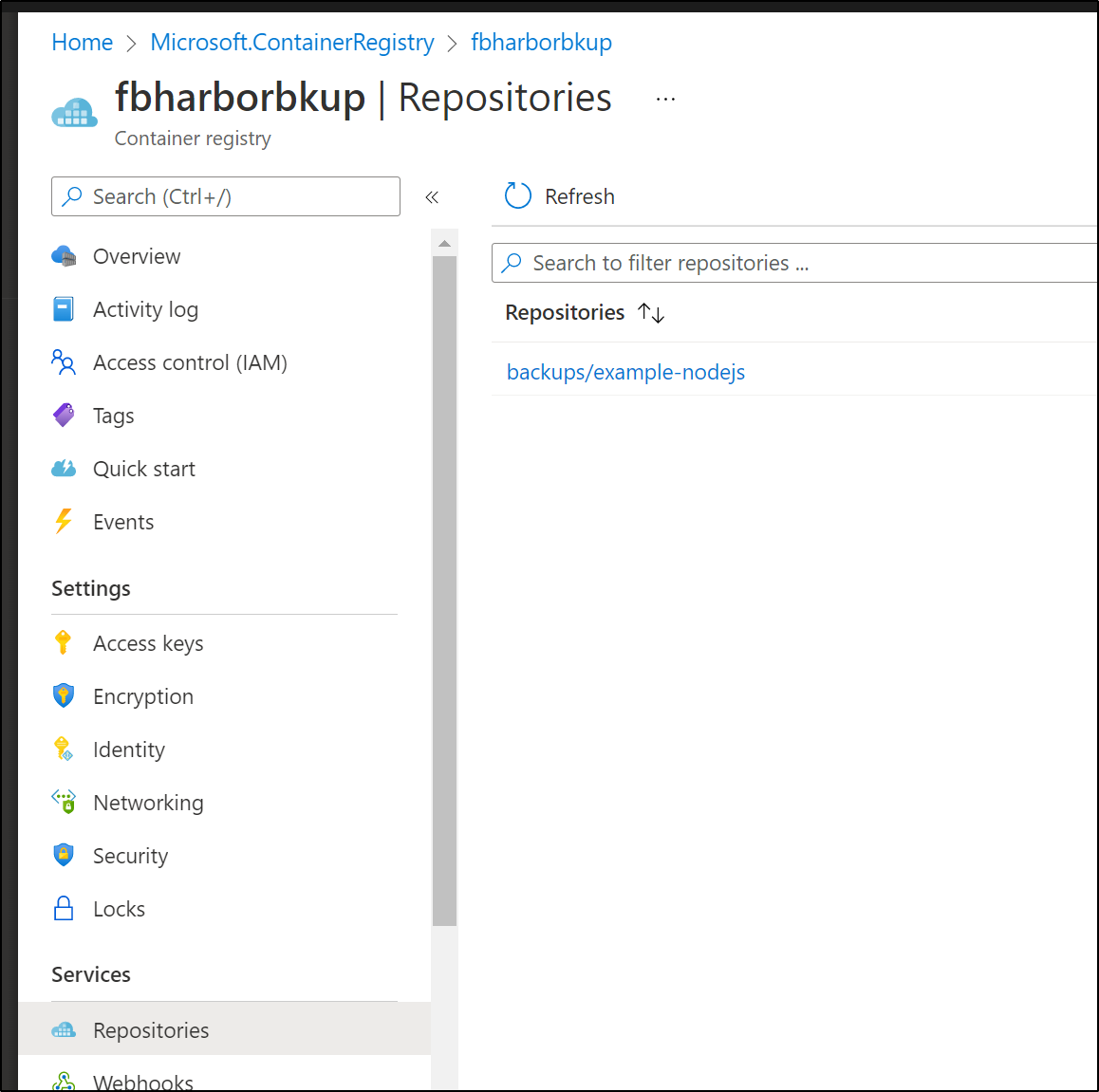

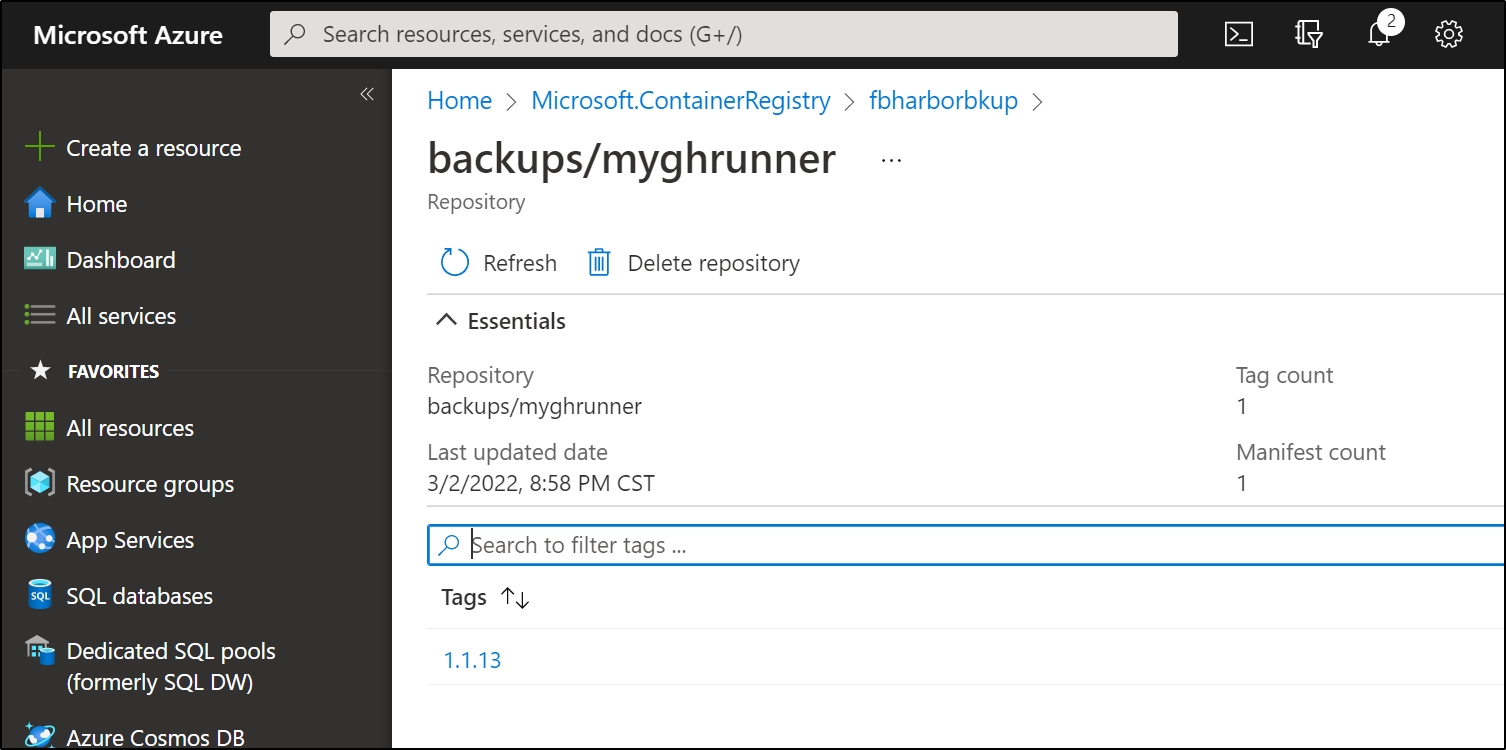

Checking ACR, i could see the sample repo was successfully synced over

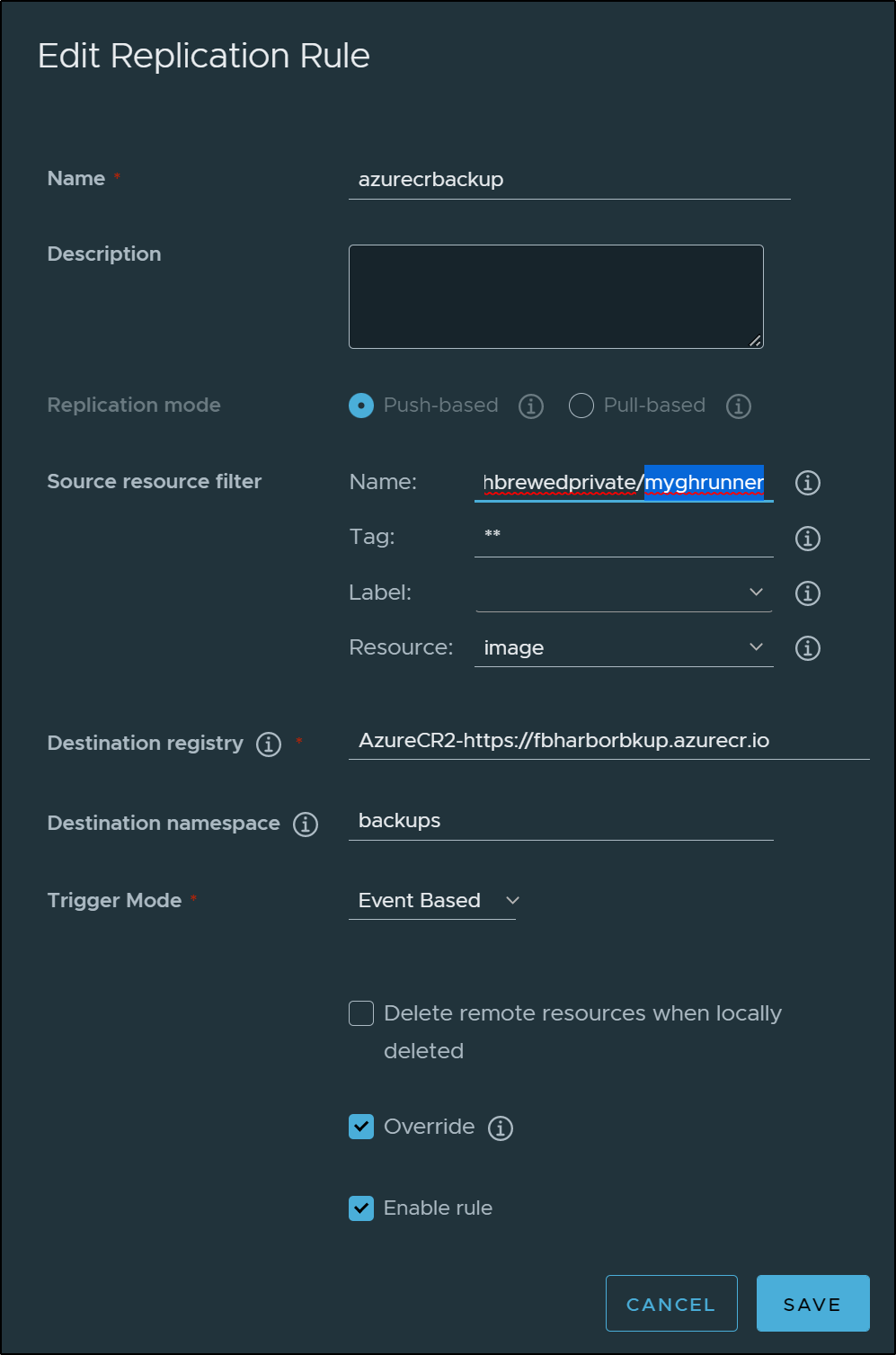

going back to the replication rule, I wanted to set it up to now just sync the ghrunner image that I’ve been building in the github actions workflow.

Besides the name (highlighted below), I also set the Trigger Mode to “Event Based” which triggers on a push (or delete).

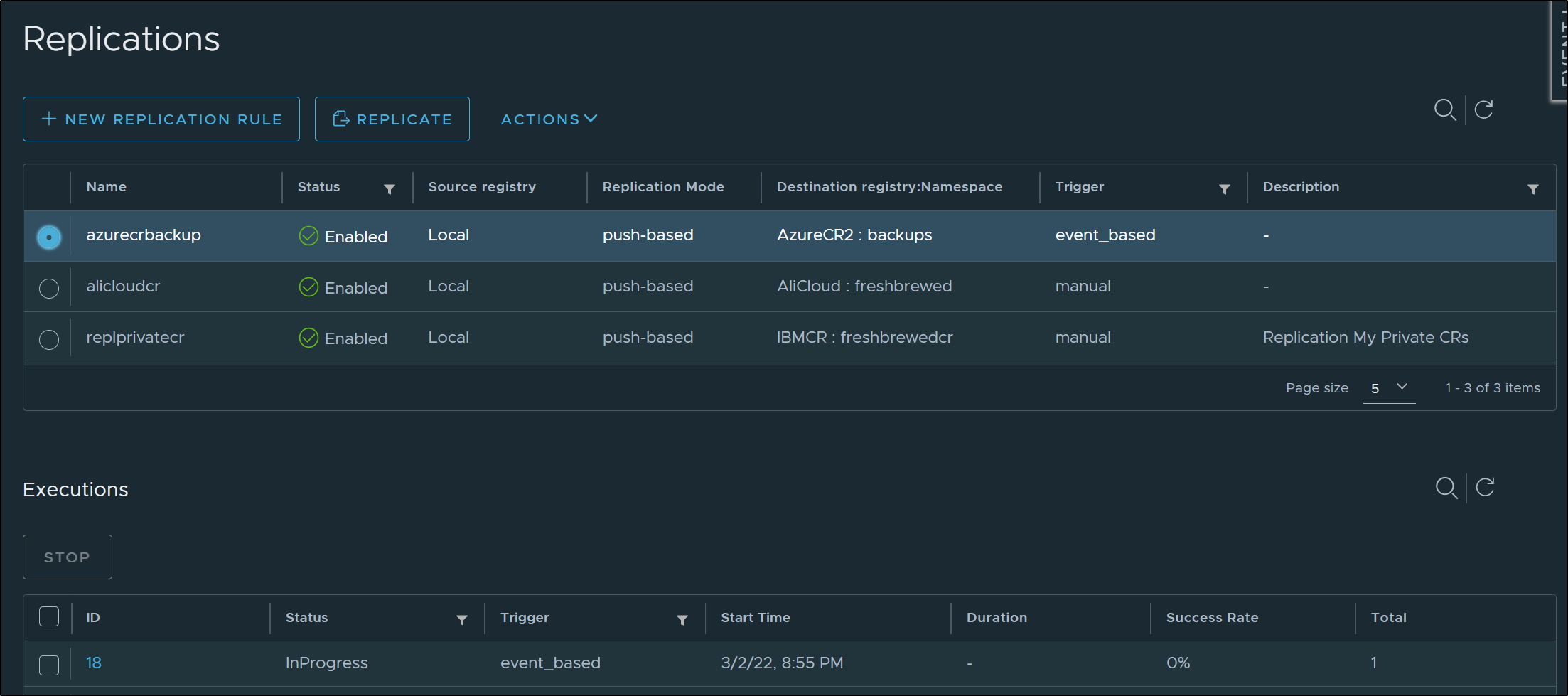

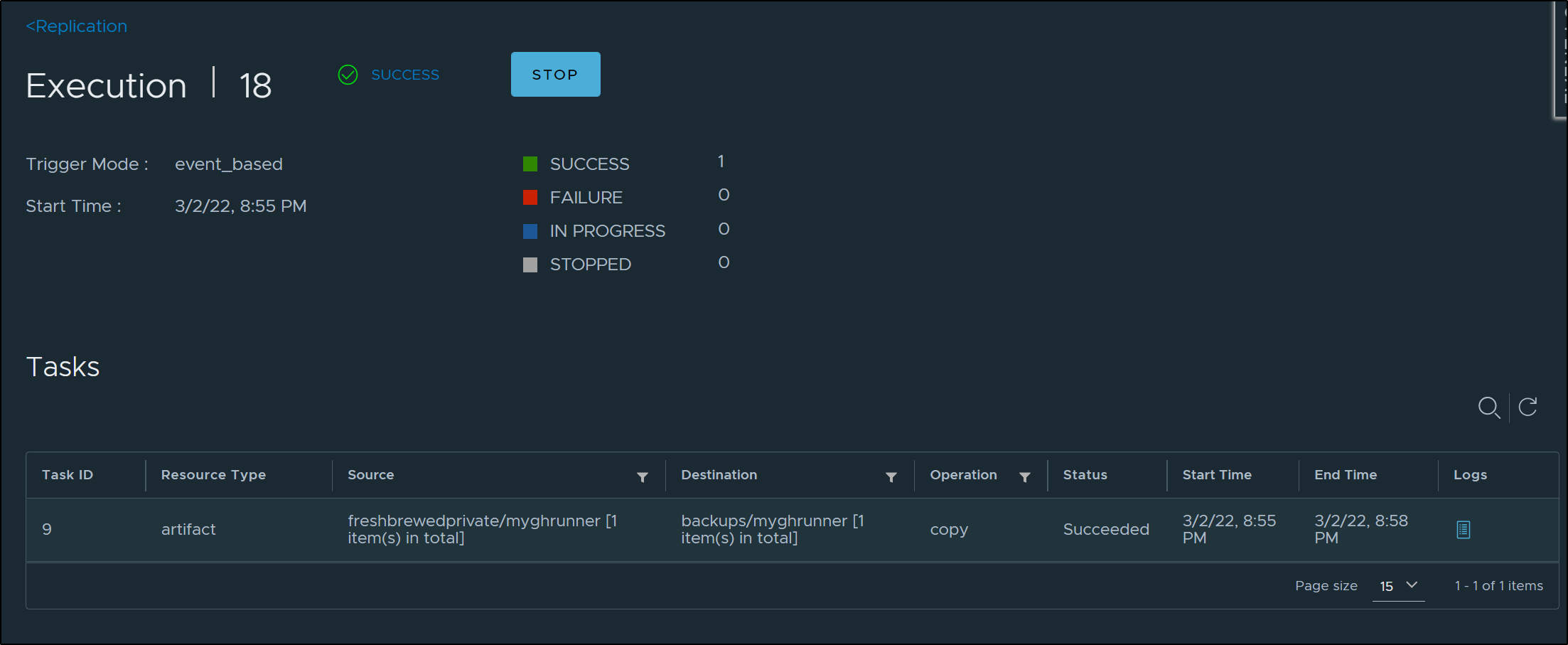

Upon pushing the image in the GitHub Actions build, I saw it start a sync to ACR in the Executions pane

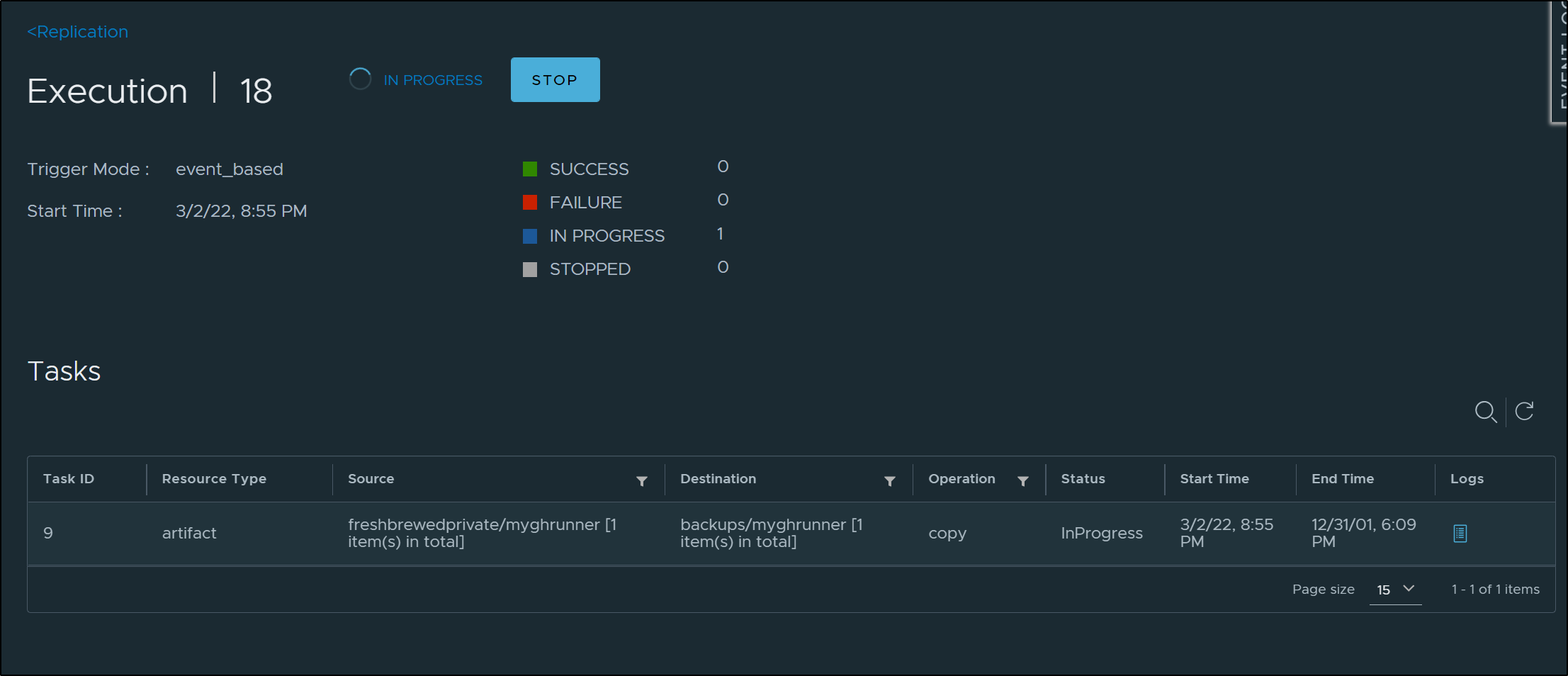

Checking the completions, I could see more details

And it completed in minutes (instead of seconds before)

Checking ACR, I can see the new image created

Including dates and layers if I check the manifest for the Container Repository

It will take some days to gather costs, but at $0.167/day for the ACR Basic SKU, provided I stay within my 10GB allotment, I might get a bill for $5/month or so. The Standard SKU will run you $20/mo in Central US (for 100GB) and just over $50/mo for Premium (with 500GB and a few more bells and whistles).

Manual Replication to IBM and Ali Cloud

If we cannot get Harbor to do it, we can do it manually

First, let’s, just for sanity, force our latest images over, even if done by hand

First, I’ll do IBM Cloud

$ ibmcloud cr login

Logging in to 'us.icr.io'...

Logged in to 'us.icr.io'.

OK

Then I’ll login to AliCloud

$ docker login --username=isaac.johnson@gmail.com registry-intl.cn-hangzhou.aliyuncs.com

Password:

Login Succeeded

Logging in with your password grants your terminal complete access to your account.

For better security, log in with a limited-privilege personal access token. Learn more at https://docs.docker.com/go/access-tokens/

And of course, to access private CR images, I have to login to harbor as well

$ docker login --username=myusername harbor.freshbrewed.science

Password:

Login Succeeded

Logging in with your password grants your terminal complete access to your account.

For better security, log in with a limited-privilege personal access token. Learn more at https://docs.docker.com/go/access-tokens/

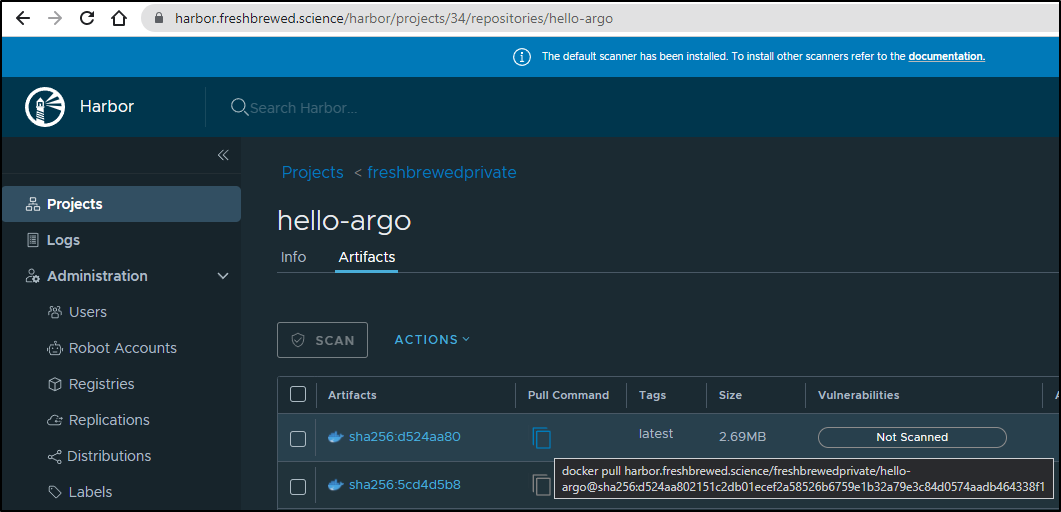

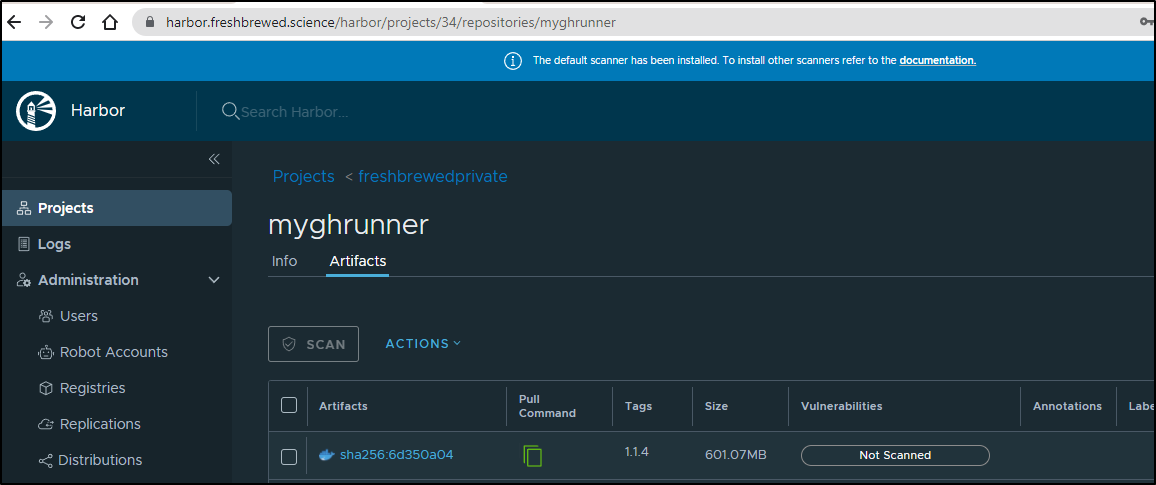

In Harbor, I’ll get the latest image from each Repository. The pull command can be simply copied to the clipboard

For each latest tag we:

Pull an image

$ docker pull harbor.freshbrewed.science/freshbrewedprivate/hello-argo@sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1

harbor.freshbrewed.science/freshbrewedprivate/hello-argo@sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1: Pulling from freshbrewedprivate/hello-argo

59bf1c3509f3: Pull complete

Digest: sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1

Status: Downloaded newer image for harbor.freshbrewed.science/freshbrewedprivate/hello-argo@sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1

harbor.freshbrewed.science/freshbrewedprivate/hello-argo@sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1

Then we can tag and push to our namespace in AliCloud:

$ docker tag harbor.freshbrewed.science/freshbrewedprivate/hello-argo@sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1 registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/hello-argo:latest

$ docker push registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/hello-argo:latest

The push refers to repository [registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/hello-argo]

8d3ac3489996: Pushing [=======================> ] 2.604MB/5.586MB

# later

$ docker push registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/hello-argo:latest

The push refers to repository [registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/hello-argo]

8d3ac3489996: Pushed

latest: digest: sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1 size: 528

IBM Cloud as well

$ docker tag harbor.freshbrewed.science/freshbrewedprivate/hello-argo@sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1 us.icr.io/freshbrewedcr/hello-argo:latest

$ docker push us.icr.io/freshbrewedcr/hello-argo:latest

The push refers to repository [us.icr.io/freshbrewedcr/hello-argo]

8d3ac3489996: Pushed

latest: digest: sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1 size: 528
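The pull, tag and push steps above repeat for every image, so they can be wrapped in a small helper. This is only a sketch: `target_ref` and `mirror_image` are hypothetical names, and by default the script just prints the commands (set `DRY_RUN=0` to actually run them, which assumes you are already logged in to both registries).

```shell
#!/bin/sh
# Map a source reference (tag or digest form) to the same repository name
# under another registry/namespace.
# $1 = source ref, $2 = target registry/namespace, $3 = tag to apply
target_ref() (
  name=$(printf '%s\n' "$1" | sed -e 's/^.*\///' -e 's/[@:].*$//')
  printf '%s/%s:%s\n' "$2" "$name" "$3"
)

# Print the pull/tag/push commands; with DRY_RUN=0 execute them instead.
mirror_image() (
  dest=$(target_ref "$1" "$2" "$3")
  for cmd in "docker pull $1" "docker tag $1 $dest" "docker push $dest"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$cmd"; else $cmd || exit 1; fi
  done
)

SRC='harbor.freshbrewed.science/freshbrewedprivate/hello-argo@sha256:d524aa802151c2db01ecef2a58526b6759e1b32a79e3c84d0574aadb464338f1'
mirror_image "$SRC" registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed latest
mirror_image "$SRC" us.icr.io/freshbrewedcr latest
```

Pulling by digest and pushing by tag, as in the manual steps, pins exactly which image content lands in the backup registry.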

That was a small image. Next is the larger (and more important) one, my GH Runner. It has a fixed tag that I use, so I should ensure I push with that. This image alone would eclipse my free storage in IBM CR, so I will just push to China.

(9:53)

$ docker pull harbor.freshbrewed.science/freshbrewedprivate/myghrunner@sha256:6d350a042d79213291ec25ec6ddf26161ba9766523776669035b630e6cfccf6a

harbor.freshbrewed.science/freshbrewedprivate/myghrunner@sha256:6d350a042d79213291ec25ec6ddf26161ba9766523776669035b630e6cfccf6a: Pulling from freshbrewedprivate/myghrunner

7b1a6ab2e44d: Already exists

55dd4dfd1d49: Pull complete

f6b94392ad06: Pull complete

65229a11bf89: Pull complete

8bb28339f4b9: Pull complete

636988ecc88a: Pull complete

96ef816f01dc: Pull complete

1390e4b4b99f: Pull complete

1797fb937420: Pull complete

2187f1c13de7: Pull complete

a48278b9a27e: Pull complete

0c663509afae: Pull complete

95d63a2e2f97: Pull complete

16af35381f27: Pull complete

f1c9dde1cd07: Pull complete

90694ba1c517: Pull complete

4a1b598016fd: Pull complete

Digest: sha256:6d350a042d79213291ec25ec6ddf26161ba9766523776669035b630e6cfccf6a

Status: Downloaded newer image for harbor.freshbrewed.science/freshbrewedprivate/myghrunner@sha256:6d350a042d79213291ec25ec6ddf26161ba9766523776669035b630e6cfccf6a

harbor.freshbrewed.science/freshbrewedprivate/myghrunner@sha256:6d350a042d79213291ec25ec6ddf26161ba9766523776669035b630e6cfccf6a

builder@DESKTOP-QADGF36:~$ docker tag harbor.freshbrewed.science/freshbrewedprivate/myghrunner@sha256:6d350a042d79213291ec25ec6ddf26161ba9766523776669035b630e6cfccf6a registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4

builder@DESKTOP-QADGF36:~$ docker push registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4

The push refers to repository [registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner]

7b04bab33dc8: Pushed

2765bcf5a4cf: Pushing [> ] 1.617MB/212.1MB

8f353eccf0c6: Pushed

0eb265869108: Pushed

d0ce75e79200: Pushed

ad981eb5b164: Pushing [> ] 3.849MB/1.227GB

c125104d22bb: Pushing [==================================================>] 3.584kB

faab2751ac3f: Waiting

f1b9ccc8c9bc: Waiting

1addd6a3aaed: Waiting

0152563ce9e4: Waiting

cde6f8325730: Waiting

29a611cadaf9: Waiting

6d22ac30d31a: Preparing

b79a45cdc0c0: Waiting

c4b3d29e5837: Waiting

9f54eef41275: Waiting

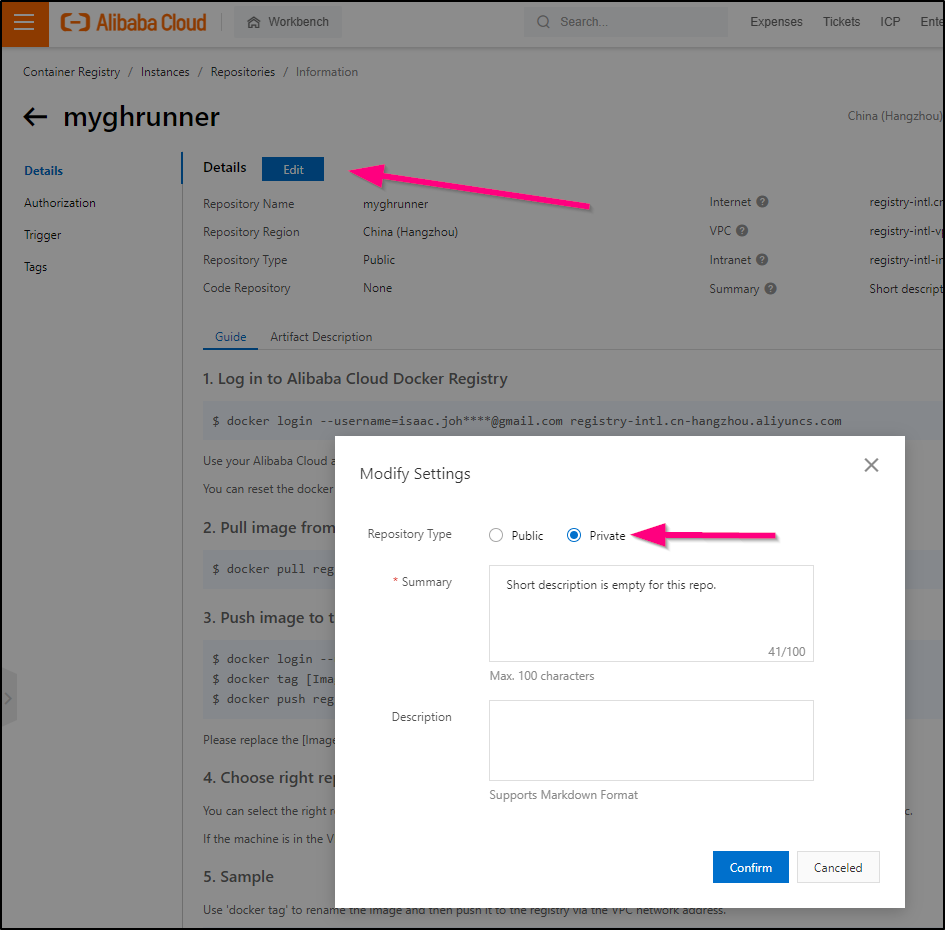

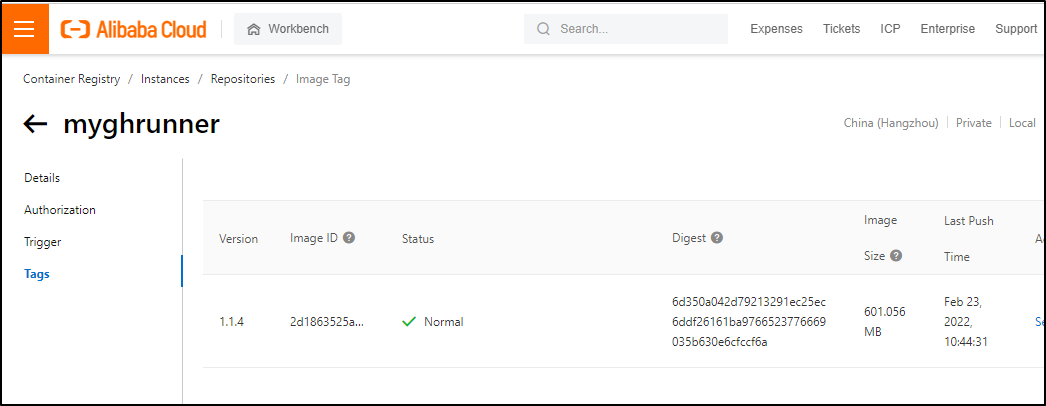

This took well over 30 minutes. However, in the end it was saved out to a Hangzhou-based CR

I can ensure that this repo is moved to private by clicking edit and changing from public to private

Disaster Recovery (DR)

So how might we use that image?

We can see the Image used is in the RunnerDeployment object in k8s:

$ kubectl get runnerdeployment my-jekyllrunner-deployment -o yaml | tail -n 14 | head -n4

image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.3

imagePullPolicy: IfNotPresent

imagePullSecrets:

- name: myharborreg

The key thing here is I’ll want to create an image pull secret then use the Ali Image

$ kubectl create secret docker-registry alicloud --docker-server=registry-intl.cn-hangzhou.aliyuncs.com --docker-username=isaac.johnson@gmail.com --docker-password=myalipassword --docker-email=isaac.johnson@gmail.com

secret/alicloud created

Now I can change my RunnerDeployment to use AliCloud:

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get runnerdeployment my-jekyllrunner-deployment -o yaml > my-jekyllrunner-deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ kubectl get runnerdeployment my-jekyllrunner-deployment -o yaml > my-jekyllrunner-deployment.yaml.bak

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ vi my-jekyllrunner-deployment.yaml

builder@DESKTOP-QADGF36:~/Workspaces/jekyll-blog$ diff my-jekyllrunner-deployment.yaml.bak my-jekyllrunner-deployment.yaml

39c39

< image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.3

---

> image: registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4

42c42

< - name: myharborreg

---

> - name: alicloud

If there is any question on tags, in AliCloud, one looks in the Tags section at the bottom to see the tag names
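The same two-line change from the diff can also be scripted rather than made in `vi`, which is handy in an emergency. This is a minimal sketch: `switch_to_alicloud` is a hypothetical helper, and it assumes the manifest contains the exact `image:` prefix and secret name shown in the diff.

```shell
#!/bin/sh
# Rewrite the runner image and pull secret in an exported RunnerDeployment.
# Reads YAML on stdin and writes the edited YAML to stdout.
switch_to_alicloud() {
  sed \
    -e 's#image: harbor\.freshbrewed\.science/freshbrewedprivate/myghrunner:.*#image: registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4#' \
    -e 's#- name: myharborreg#- name: alicloud#'
}

# Sample fragment copied from the kubectl output earlier in this section.
switch_to_alicloud <<'EOF'
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.3
      imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: myharborreg
EOF
```

Against the real file, it would read `switch_to_alicloud < my-jekyllrunner-deployment.yaml.bak > my-jekyllrunner-deployment.yaml`, leaving the `.bak` copy as the way back to Harbor.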

Now just apply

$ kubectl apply -f my-jekyllrunner-deployment.yaml

runnerdeployment.actions.summerwind.dev/my-jekyllrunner-deployment configured

And now we can see it pulling the image:

$ kubectl get pods | tail -n 5

my-jekyllrunner-deployment-phkdv-hs97z 2/2 Running 0 3m20s

my-jekyllrunner-deployment-phkdv-96v49 2/2 Running 0 3m20s

my-jekyllrunner-deployment-z25qh-2xcrb 0/2 ContainerCreating 0 51s

my-jekyllrunner-deployment-z25qh-twjm6 0/2 ContainerCreating 0 51s

my-jekyllrunner-deployment-z25qh-qd8sq 0/2 ContainerCreating 0 51s

$ kubectl describe pod my-jekyllrunner-deployment-z25qh-2xcrb | tail -n5

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 45s default-scheduler Successfully assigned default/my-jekyllrunner-deployment-z25qh-2xcrb to isaac-macbookpro

Normal Pulling 43s kubelet Pulling image "registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4"

And a while later, we see the pods do come up:

$ kubectl get pods | tail -n 5

my-jekyllrunner-deployment-z25qh-2xcrb 2/2 Running 1 15h

my-jekyllrunner-deployment-z25qh-qd8sq 2/2 Running 1 15h

my-jekyllrunner-deployment-z25qh-twjm6 2/2 Running 1 15h

harbor-registry-harbor-database-0 1/1 Running 5 (15h ago) 2d14h

my-runner-deployment-njn2k-szd5p 2/2 Running 0 12h

And verifying it is using the new image

$ kubectl describe pod my-jekyllrunner-deployment-z25qh-2xcrb | grep Image:

Image: registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4

Image: docker:dind

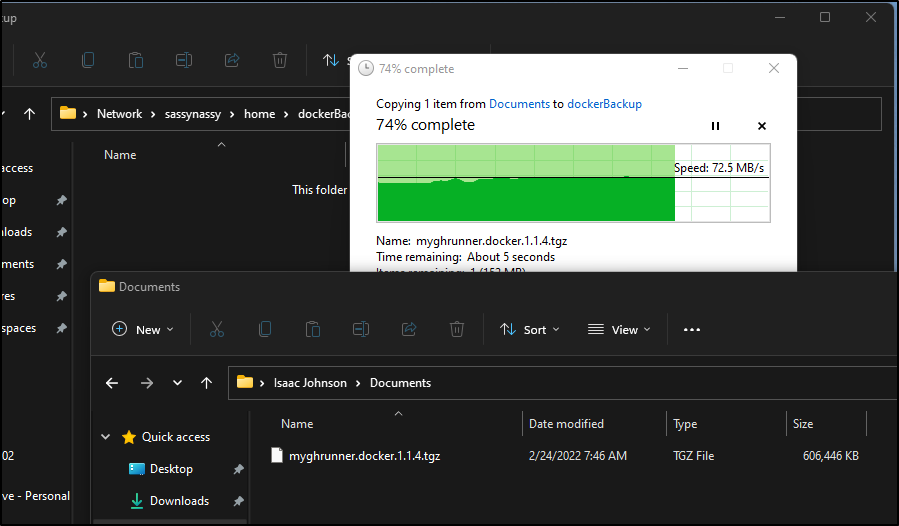

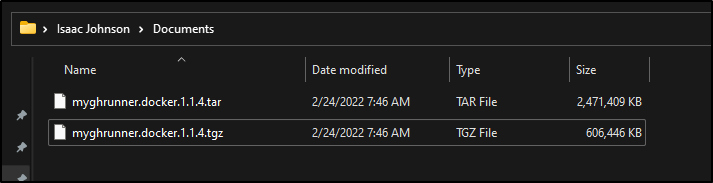

Saving to File storage

There is another way to save out files for transport and archive - that is to dump them to disk.

For instance, if I have an image I pulled, I can easily tgz it and save to external file storage.

For example, first I’ll list the ghrunner images (and if need be, do a “docker pull <image>”)

$ docker images | grep ghrunner

registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner 1.1.4 2d1863525ab8 2 months ago 2.44GB

harbor.freshbrewed.science/freshbrewedprivate/myghrunner <none> 2d1863525ab8 2 months ago 2.44GB

harbor.freshbrewed.science/freshbrewedprivate/myghrunner 1.1.3 585b0d6e69a0 2 months ago 2.47GB

harbor.freshbrewed.science/freshbrewedprivate/myghrunner 1.1.1 670715c8c9a2 2 months ago 2.26GB

harbor.freshbrewed.science/freshbrewedprivate/myghrunner 1.1.2 670715c8c9a2 2 months ago 2.26GB

harbor.freshbrewed.science/freshbrewedprivate/myghrunner 1.1.0 16fb58a29b01 2 months ago 2.21GB

Using docker save we can dump a local container image to a tarball. To save space, we’ll gzip it en route.

$ docker save registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4 | gzip > /mnt/c/Users/isaac/Documents/myghrunner.docker.1.1.4.tgz

$ ls -lh /mnt/c/Users/isaac/Documents/myghrunner.docker.1.1.4.tgz

-rwxrwxrwx 1 builder builder 593M Feb 24 07:46 /mnt/c/Users/isaac/Documents/myghrunner.docker.1.1.4.tgz

Then I can just save it to the storage of my choice, be it OneDrive, Box, Google Drive, etc.

When it comes time to load it back, we can just bring the tgz down then load and push to a new CR:

$ docker tag registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4 mynewharbor.freshbrewed.science/freshbrewedprivate/ghrunner:1.1.4 && docker push mynewharbor.freshbrewed.science/freshbrewedprivate/ghrunner:1.1.4
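The tag and push above assume the image is already in the local Docker cache. On a fresh machine, the archive has to go through `docker load` first, which reads gzipped tarballs directly. A sketch of the full round trip follows. `restore_image` is a hypothetical helper that only prints the commands unless `DRY_RUN=0` is set, and the archive is assumed to be in the current directory.

```shell
#!/bin/sh
# $1 = path to the gzipped tarball
# $2 = image reference stored inside it (the name passed to docker save)
# $3 = new reference to push
restore_image() (
  for cmd in "docker load -i $1" "docker tag $2 $3" "docker push $3"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$cmd"; else $cmd || exit 1; fi
  done
)

restore_image myghrunner.docker.1.1.4.tgz \
  registry-intl.cn-hangzhou.aliyuncs.com/freshbrewed/myghrunner:1.1.4 \
  mynewharbor.freshbrewed.science/freshbrewedprivate/ghrunner:1.1.4
```

Because `docker save` was given a name and tag, `docker load` restores the image under that same reference, which is why the tag step can refer to it by name.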

For some size context: my Docker install reports the full ghrunner image as 2.44GB. The gzipped tarball is 592MB and the uncompressed tarball is 2.35GB.

Why file storage might be preferred

If we are to assume that these images are only needed in an emergency situation, it makes far more sense to just back them up to a file store.

For one, consider costs. In Azure, as mentioned earlier, the Basic, Standard and Premium SKUs include 10, 100 and 500GB of storage for $5/mo, $20/mo and $50/mo respectively. However, if we used standard GPv2 Hot LRS Block Blob storage in Central US, we would pay US$2.08/mo today for 100GB of storage, $20.80 for 1TB and $41.60 for 2TB. Using storage lifecycle policies, one could really pack on the savings by moving to archive storage.

If just saving for rainy days, one can get 10TB of Archive storage for US$10/mo as of this writing:

And if curious, retrieving, say, 50GB of that Archive storage would cost $1, so it’s not egregious to fetch an image in an emergency situation.
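The arithmetic behind those figures is easy to reproduce. The per-GB rates below are simply backed out of the prices quoted above (for example $2.08 / 100GB), treating 1TB as 1000GB, so treat them as rough illustrations rather than a current price sheet. The `cost` helper is a made-up name.

```shell
#!/bin/sh
# Multiply a quantity by a unit price and print it with two decimals.
cost() { awk -v qty="$1" -v price="$2" 'BEGIN { printf "%.2f\n", qty * price }'; }

printf 'ACR Basic, 30 days at 0.167/day:   %s\n' "$(cost 30 0.167)"
printf 'Hot blob, 100 GB at 0.0208/GB:     %s\n' "$(cost 100 0.0208)"
printf 'Hot blob, 1000 GB at 0.0208/GB:    %s\n' "$(cost 1000 0.0208)"
printf 'Archive, 10000 GB at 0.001/GB:     %s\n' "$(cost 10000 0.001)"
printf 'Archive retrieval, 50 GB at 0.02:  %s\n' "$(cost 50 0.02)"
```

At roughly 0.6GB per gzipped runner image, even dozens of archived versions stay well under a dollar a month on either blob tier.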

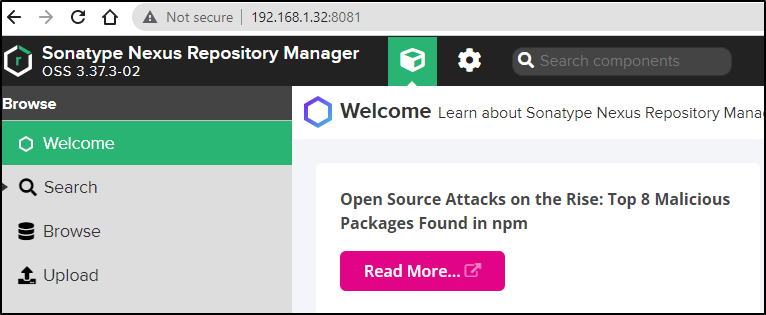

Other ways to store images

One could download and install the OSS versions of Nexus or the Community edition of Artifactory.

I did set up a local Nexus to try:

However, the underlying issue is that both Nexus and JFrog are large Java apps that take a fair number of steps to set up with TLS. Beyond that, if I used self-signed certs, I might have challenges getting my local Docker client to accept them. Docker, nowadays, really hates using HTTP endpoints. There are supposedly ways to set up the client to use insecure registries, but then you have to deal with Kubernetes using them.

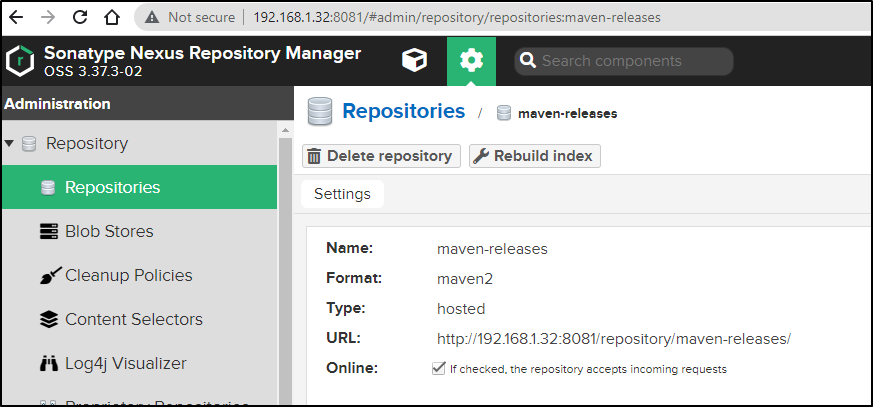

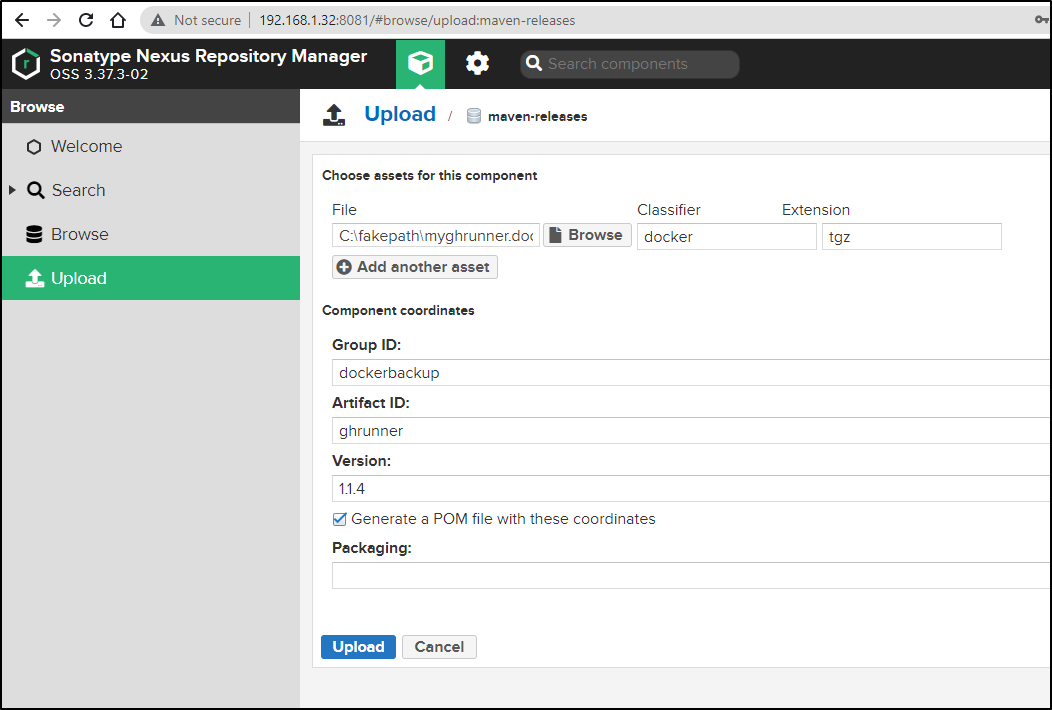

That said, we can set up Nexus OSS and it will have a default Maven releases endpoint for artifacts:

Since I’m in-network, I can just use the WebUI to upload the TGZ’ed docker image

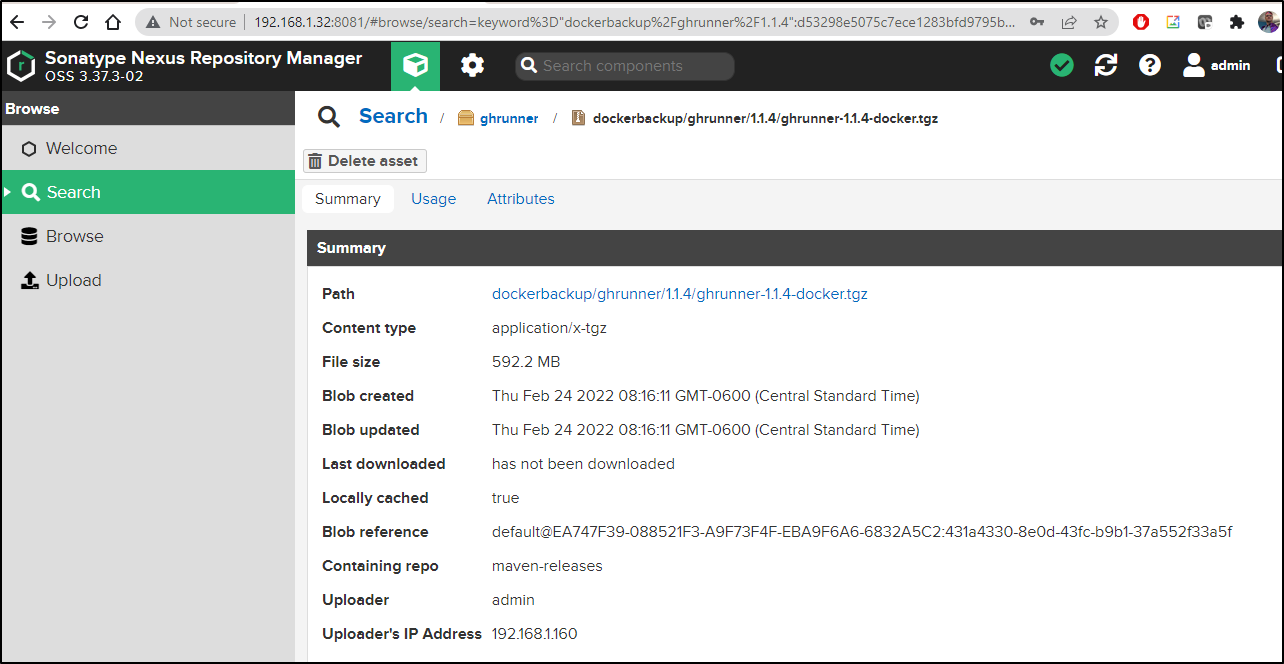

after which I see it listed:

and could download with:

$ wget http://192.168.1.32:8081/repository/maven-releases/dockerbackup/ghrunner/1.1.4/ghrunner-1.1.4-docker.tgz

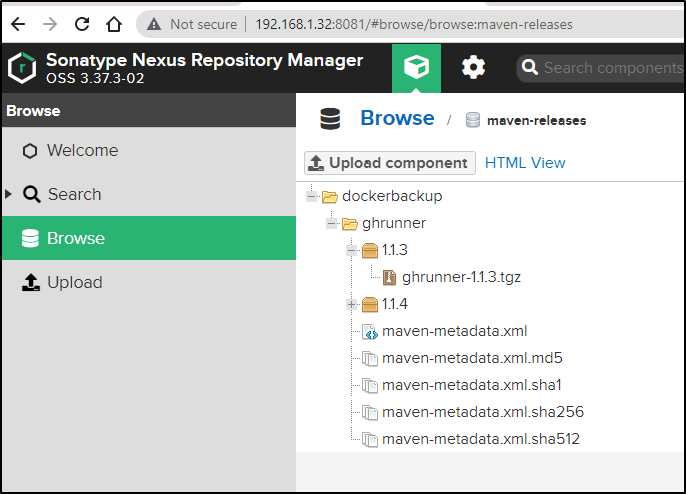

I can also use curl to upload a file:

$ docker save myrunner:1.1.3 | gzip > /mnt/c/Users/isaac/Documents/myghrunner.docker.1.1.3.tgz

$ curl -u admin:MYPASSWORD --upload-file /mnt/c/Users/isaac/Documents/myghrunner.docker.1.1.3.tgz http://192.168.1.32:8081/repository/maven-releases/dockerbackup/ghrunner/1.1.3/ghrunner-1.1.3.tgz

and we can see it is there now
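Both URLs above follow the Maven layout of group/artifact/version/file, so the path can be generated instead of typed by hand. The following is a sketch only. `nexus_url` is a hypothetical helper, and the optional fifth argument mirrors the `-docker` suffix seen in the WebUI upload.

```shell
#!/bin/sh
# Build a maven-releases path: group/artifact/version/artifact-version[-classifier].tgz
# $1 = base URL, $2 = group, $3 = artifact, $4 = version, $5 = optional classifier
nexus_url() (
  base=$1 group=$2 artifact=$3 version=$4 classifier=${5:-}
  file="$artifact-$version${classifier:+-$classifier}.tgz"
  printf '%s/repository/maven-releases/%s/%s/%s/%s\n' \
    "$base" "$group" "$artifact" "$version" "$file"
)

nexus_url http://192.168.1.32:8081 dockerbackup ghrunner 1.1.4 docker
nexus_url http://192.168.1.32:8081 dockerbackup ghrunner 1.1.3
```

The result can be passed straight to the upload, for example `curl -u admin:MYPASSWORD --upload-file "$FILE" "$(nexus_url http://192.168.1.32:8081 dockerbackup ghrunner 1.1.3)"`.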

Build to NAS

The following is particular to a Synology NAS. I’ve had magnificent luck with mine and highly recommend them.

(I get nothing for saying that, I just really like their products. I’ve had a DS216play with WD Red WD60EFRX drives for 6 years now that has had zero issues. Only downside is Plex transcoding is limited on that model )

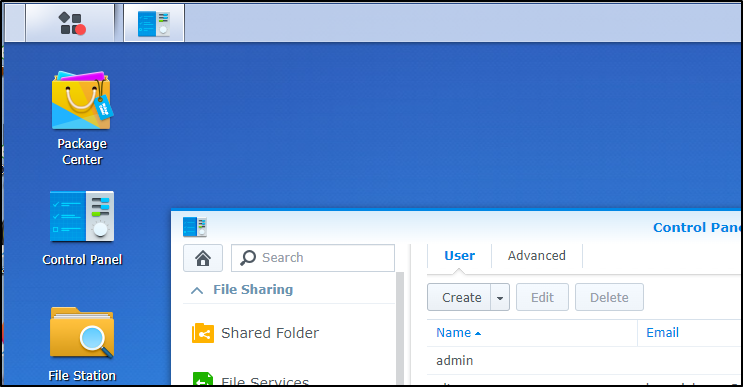

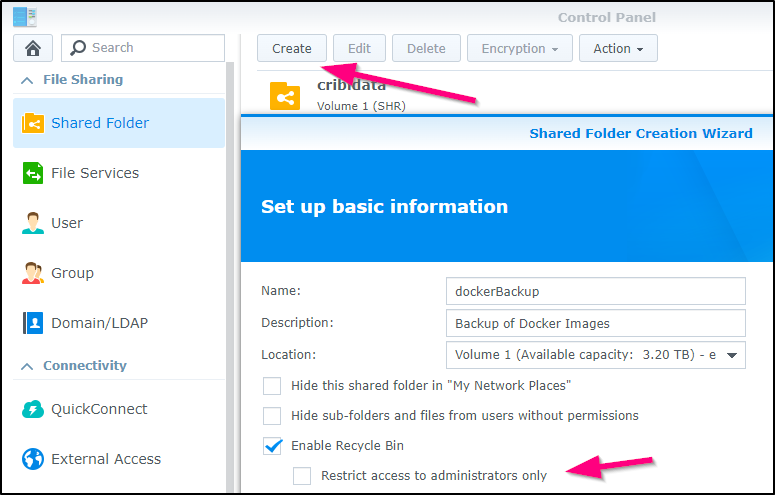

Setting up User, Share and SFTP in Synology DiskStation

First, I’ll create a new user in the NAS just for this purpose

And disable the ability to change password

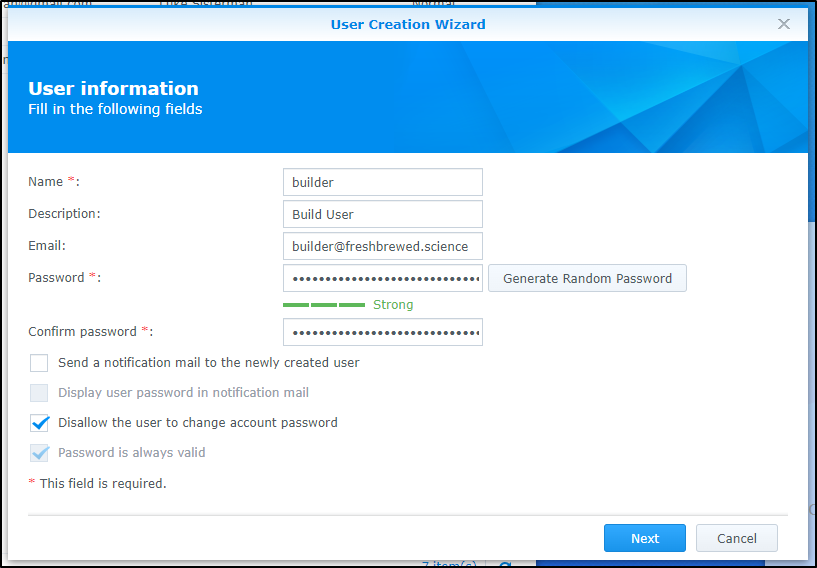

In the user add wizard, I’ll skip adding to any shares, but I will enable FTP usage

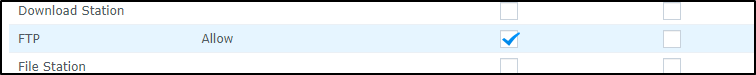

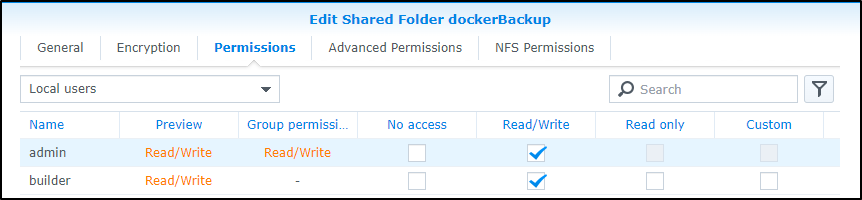

Next, I’ll create a new shared folder and make sure it is not restricted to only admins (it is by default)

Upon create, I can add my builder users for R/W access

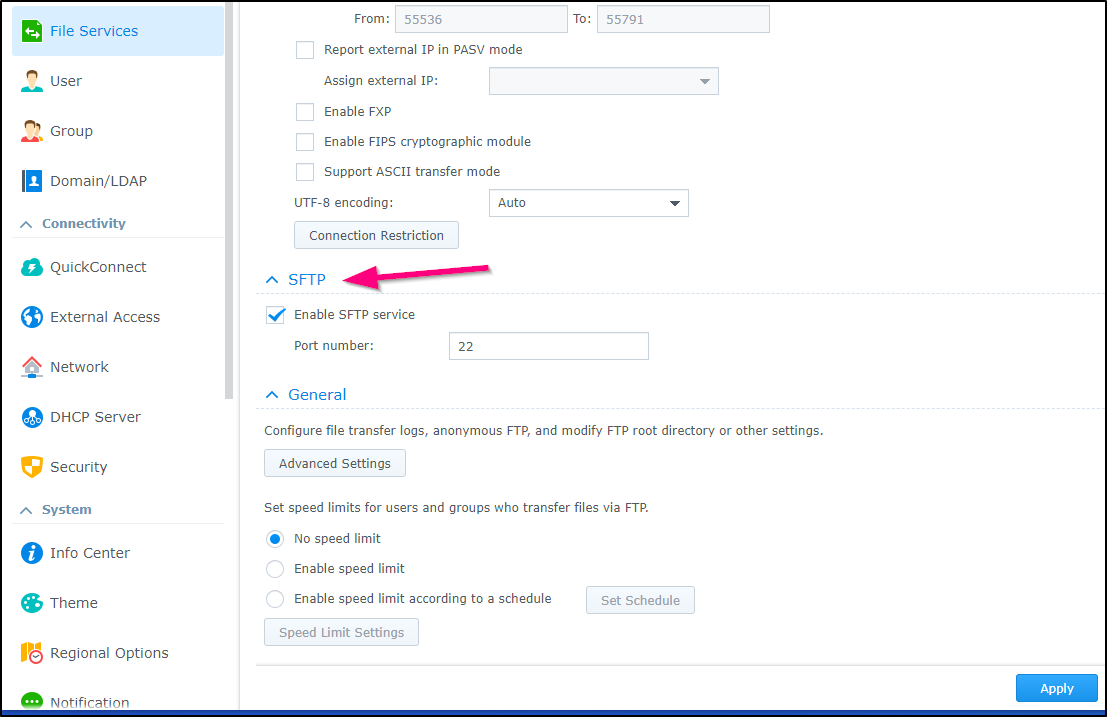

Lastly, under File Services, enable SFTP (if not already) and click apply

We can validate our local ghRunner can reach it by exec’ing into the pod and trying to upload a file

$ kubectl exec -it my-jekyllrunner-deployment-z25qh-2xcrb -- /bin/sh

Defaulted container "runner" out of: runner, docker

$ cd /tmp

$ touch asdf

$ sftp builder@sassynassy

builder@sassynassy's password:

Connected to sassynassy.

sftp> cd dockerBackup

sftp> put asdf

Uploading asdf to /dockerBackup/asdf

asdf 100% 0 0.0KB/s 00:00

sftp> exit

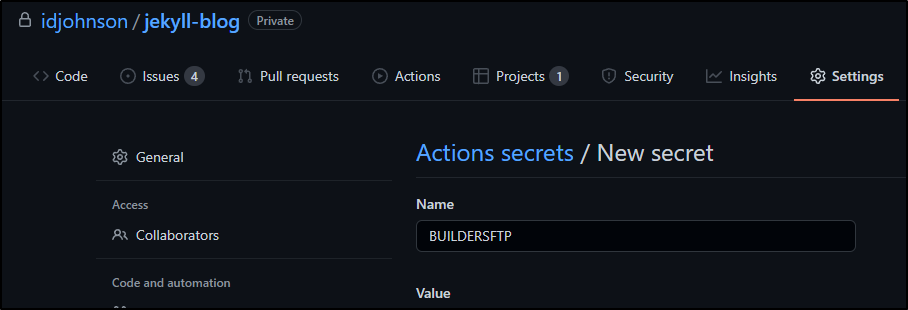

I’ll need to save the password to GH Secrets so I can use it in the pipeline later

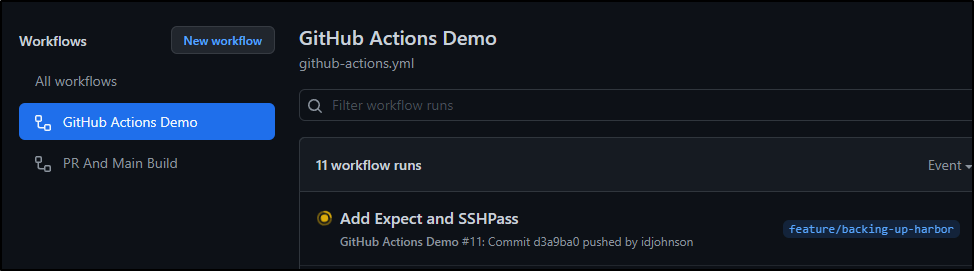

I also added a block in the Dockerfile to add expect and sshpass

    # Install Expect and SSHPass
    RUN sudo apt update -y \
      && umask 0002 \
      && sudo apt install -y sshpass expect

which spurred a fresh image build. This, in the end, is what we will modify to back up to a NAS on build

Using that updated image, we can leverage sshpass to upload the built container:

Assuming my externally exposed SFTP is 73.242.50.55 on port 12345 (it isn’t, fyi)

    - name: SFTP
      run: |
        export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
        export FILEOUT="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g' | sed 's/:/-/g'`".tgz
        docker save $BUILDIMGTAG | gzip > $FILEOUT
        mkdir ~/.ssh || true
        ssh-keyscan -p 12345 73.242.50.55 >> ~/.ssh/known_hosts
        sshpass -e sftp -P 12345 -oBatchMode=no -b - builder@73.242.50.55 << !
        cd dockerBackup
        put $FILEOUT
        bye
        !
      env: # Or as an environment variable
        SSHPASS: $

Here we can see it actually ran and uploaded from the ephemeral, GitHub-provided Actions runner:

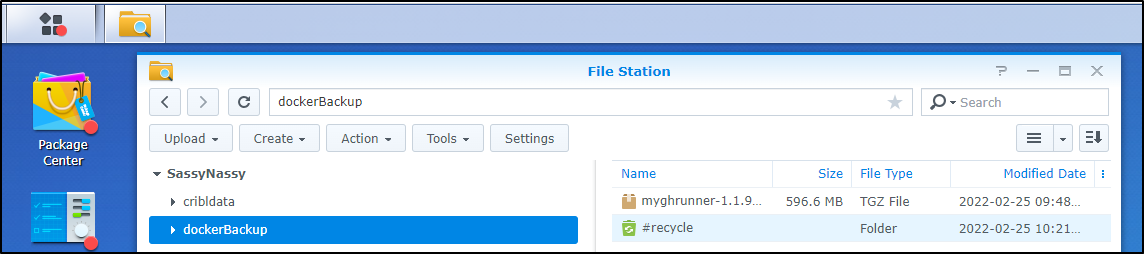

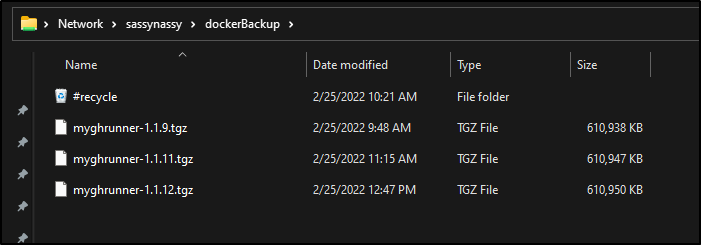

And we can see it is backed up

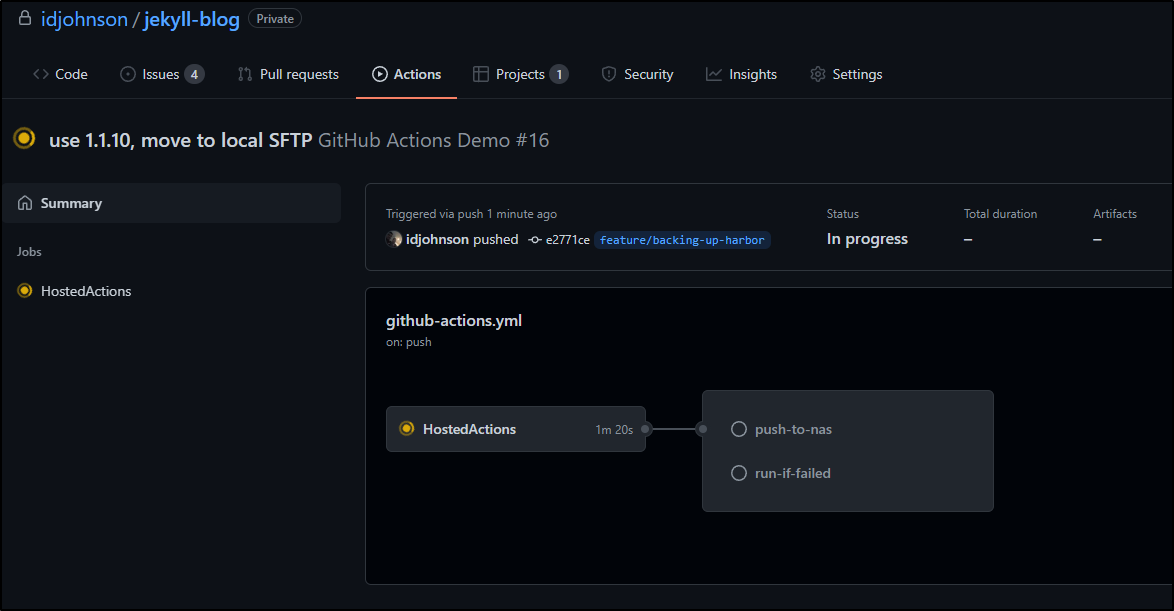
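For reference, the file name and the batch commands that the SFTP step sends can be reproduced locally without touching the NAS. This is a sketch: `archive_name` and `sftp_batch` are hypothetical helpers, and the sample Dockerfile line is an assumption based on the image names used in this post.

```shell
#!/bin/sh
# "…/myghrunner:1.1.4" -> "myghrunner-1.1.4.tgz" (same sed chain as the workflow step)
archive_name() {
  printf '%s.tgz\n' "$(printf '%s\n' "$1" | sed 's/^.*\///g' | sed 's/:/-/g')"
}

# Emit the batch commands that the workflow feeds to sftp on stdin.
# $1 = remote folder, $2 = local file to upload
sftp_batch() { printf 'cd %s\nput %s\nbye\n' "$1" "$2"; }

FILEOUT=$(archive_name '#harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.4')
sftp_batch dockerBackup "$FILEOUT"
```

Swapping the colon for a dash keeps the archive name safe on file systems and shares that do not accept `:` in file names.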

And if we wanted to trust the last runner to push the new runner, we could use the local IP and move to a follow-up stage:

    name: GitHub Actions Demo
    on:
      push:
        paths:
          - "**/Dockerfile"
    jobs:
      HostedActions:
        runs-on: ubuntu-latest
        steps:
          - run: echo "🎉 The job was automatically triggered by a $ event."
          - run: echo "🐧 This job is now running on a $ server hosted by GitHub!"
          - run: echo "🔎 The name of your branch is $ and your repository is $."
          - name: Check out repository code
            uses: actions/checkout@v2
          - run: echo "💡 The $ repository has been cloned to the runner."
          - run: echo "🖥️ The workflow is now ready to test your code on the runner."
          - name: Check on Binaries
            run: |
              which az
              which aws
          - name: Build Dockerfile
            run: |
              export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
              docker build -t $BUILDIMGTAG ghRunnerImage
              docker images
          - name: Tag and Push
            run: |
              export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
              export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
              docker tag $BUILDIMGTAG $FINALBUILDTAG
              docker images
              echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin
              docker push $FINALBUILDTAG
            env: # Or as an environment variable
              CR_PAT: $
              CR_USER: $
          - name: Build count
            uses: masci/datadog@v1
            with:
              api-key: $
              metrics: |
                - type: "count"
                  name: "dockerbuild.runs.count"
                  value: 1.0
                  host: $
                  tags:
                    - "project:$"
                    - "branch:$"
          - run: echo "🍏 This job's status is $."
      push-to-nas:
        runs-on: self-hosted
        needs: HostedActions
        steps:
          - name: Check out repository code
            uses: actions/checkout@v2
          - name: Pull From Tag
            run: |
              export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
              export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
              docker images
              echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin
              docker pull $FINALBUILDTAG
            env: # Or as an environment variable
              CR_PAT: $
              CR_USER: $
          - name: SFTP Locally
            run: |
              export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
              export FILEOUT="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g' | sed 's/:/-/g'`".tgz
              export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
              docker save $FINALBUILDTAG | gzip > $FILEOUT
              mkdir ~/.ssh || true
              ssh-keyscan sassynassy >> ~/.ssh/known_hosts
              sshpass -e sftp -oBatchMode=no -b - builder@sassynassy << !
              cd dockerBackup
              put $FILEOUT
              bye
              !
            env: # Or as an environment variable
              SSHPASS: $
      run-if-failed:
        runs-on: ubuntu-latest
        # Both jobs must be listed here; the needs context only exposes direct dependencies,
        # so needs.push-to-nas.result would otherwise be empty.
        needs: [HostedActions, push-to-nas]
        if: always() && (needs.HostedActions.result == 'failure' || needs.push-to-nas.result == 'failure')
        steps:
          - name: Datadog-Fail
            uses: masci/datadog@v1
            with:
              api-key: $
              events: |
                - title: "Failed building Dockerfile"
                  text: "Branch $ failed to build"
                  alert_type: "error"
                  host: $
                  tags:
                    - "project:$"
          - name: Fail count
            uses: masci/datadog@v1
            with:
              api-key: $
              metrics: |
                - type: "count"
                  name: "dockerbuild.fails.count"
                  value: 1.0
                  host: $
                  tags:
                    - "project:$"
                    - "branch:$"

The new block is the push-to-nas job:

  push-to-nas:
    runs-on: self-hosted
    needs: HostedActions
    steps:
      - name: Check out repository code
        uses: actions/checkout@v2
      - name: Pull From Tag
        run: |
          export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
          export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
          docker images
          echo $CR_PAT | docker login harbor.freshbrewed.science -u $CR_USER --password-stdin
          docker pull $FINALBUILDTAG
        env: # Or as an environment variable
          CR_PAT: $
          CR_USER: $
      - name: SFTP Locally
        run: |
          export BUILDIMGTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g'`"
          export FILEOUT="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^.*\///g' | sed 's/:/-/g'`".tgz
          export FINALBUILDTAG="`cat ghRunnerImage/Dockerfile | tail -n1 | sed 's/^#//g'`"
          docker save $FINALBUILDTAG | gzip > $FILEOUT
          mkdir ~/.ssh || true
          ssh-keyscan sassynassy >> ~/.ssh/known_hosts
          sshpass -e sftp -oBatchMode=no -b - builder@sassynassy << !
          cd dockerBackup
          put $FILEOUT
          bye
          !
        env: # Or as an environment variable
          SSHPASS: $

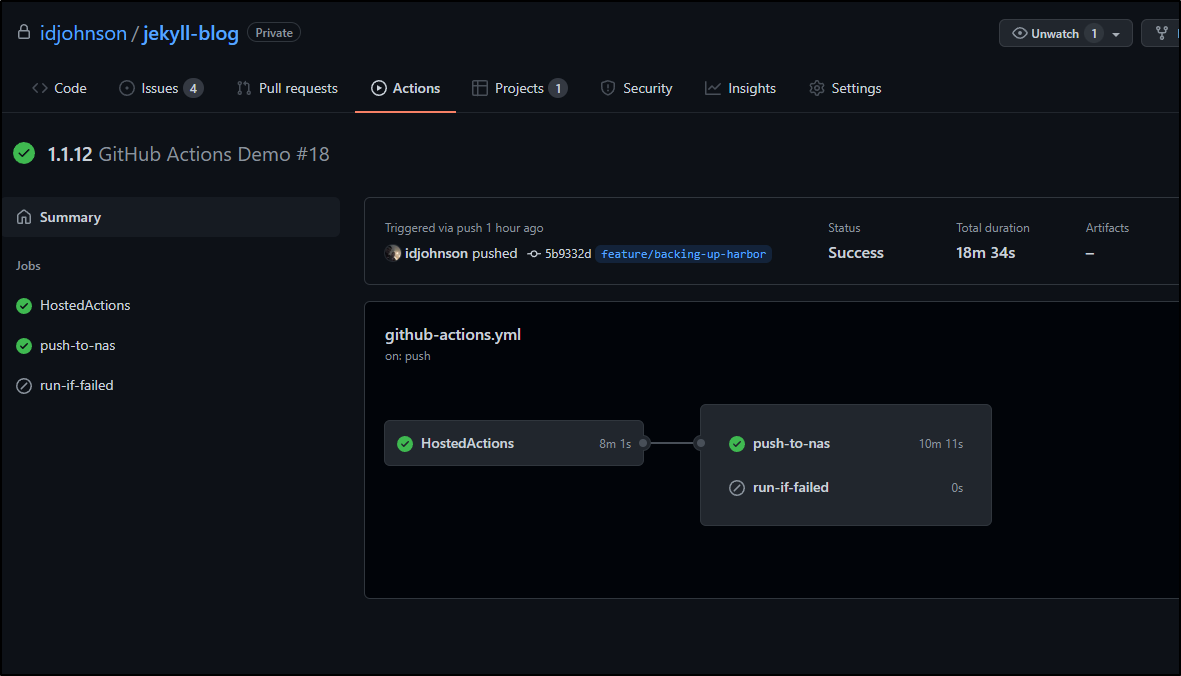

We can see it run.

This clearly takes longer, as we have to wait for an on-prem agent to be invoked, pull the large image and then push it to the local NAS: 18 minutes instead of 11.

However, this is a lot more secure, as we do not need to expose SFTP on the public internet for the GitHub-provided ephemeral agents to use.

And because I now save the images to a local NAS, I can pull them down later and load them into any CR I desire.

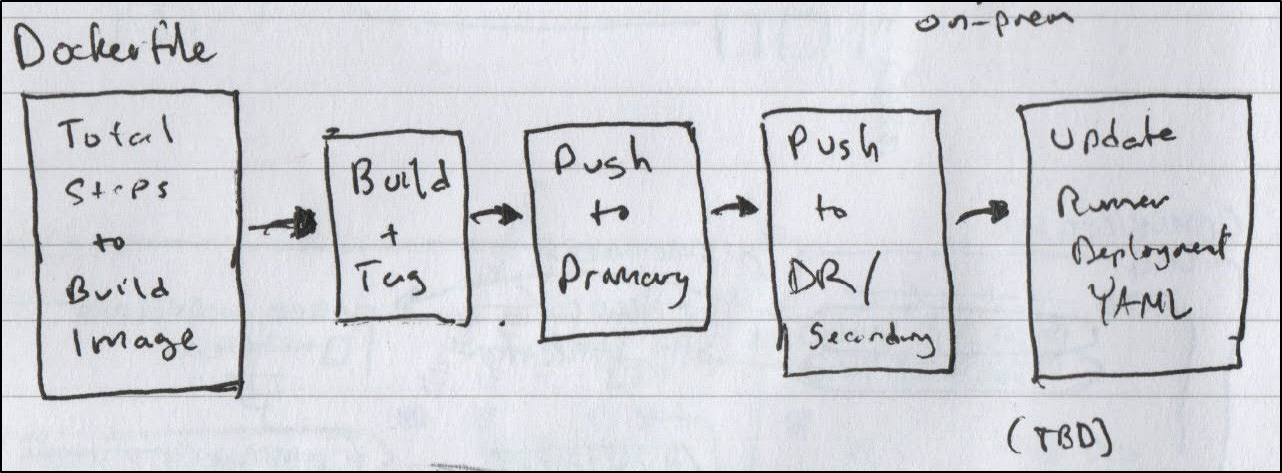
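Loading one of those saved archives into a different registry could be sketched as follows. This is only an outline under stated assumptions: the archive name, the `1.2.3` tag, the target registry `myregistry.example.com/backup` and the `DRY_RUN` switch are all hypothetical. `docker load` accepts the gzipped tarball directly, and the image comes back with whatever tag it was saved under (the full Harbor reference in the push-to-nas job).

```shell
#!/bin/sh
set -eu

# Placeholder values for illustration only.
ARCHIVE="myghrunner-1.2.3.tgz"
SOURCE_TAG="harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.2.3"
TARGET_REGISTRY="myregistry.example.com/backup"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Keep the image name and tag, swap the registry prefix.
TARGET_TAG="$TARGET_REGISTRY/${SOURCE_TAG##*/}"

# Print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

run docker load -i "$ARCHIVE"              # restores the image under SOURCE_TAG
run docker tag "$SOURCE_TAG" "$TARGET_TAG" # retag for the fallback registry
run docker push "$TARGET_TAG"              # assumes docker login was already done
```

Running it as-is only prints the three docker commands. Setting `DRY_RUN=0` executes them, which requires the archive to be present and a prior `docker login` to the target registry.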

Summary

We covered setting up CRs in IBM Cloud, Ali Cloud and Azure, and then tried Harbor replication. With ACR we successfully triggered a post-build replication of our ghrunner image. For IBM and Ali Cloud, we showed manual replication and how to use them in a DR situation.

We worked through saving container images as files and backing them up to a NAS manually. Then we automated the process by both pushing the built image to a private CR and saving the tgz of the image to a remote SFTP site (also on-prem).

Lastly, we finalized the process (albeit with a slight performance hit) by moving the packaging and transmission of the backup image to the same on-prem Github runner (of which we are building a new image).

Our goal would be to ultimately have a turn-key build that would not only build but also update the runner itself (which is what is running).

In order to really be successful on that, however, we would need to create proper tests with a phased rollout (lest we take down the runner deployment and have to manually back it out to fix).

One approach would be to have two deployments. The “staging” runners would use the candidate image in a staging RunnerDeployment.

$ kubectl get runnerdeployment my-jekyllrunner-deployment -o yaml
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: staging-deployment
  namespace: default
spec:
  replicas: 1
  selector: null
  template:
    metadata: {}
    spec:
      dockerEnabled: true
      dockerdContainerResources: {}
      env:
      - name: AWS_DEFAULT_REGION
        value: us-east-1
      - name: AWS_ACCESS_KEY_ID
        valueFrom:
          secretKeyRef:
            key: USER_NAME
            name: awsjekyll
      - name: AWS_SECRET_ACCESS_KEY
        valueFrom:
          secretKeyRef:
            key: PASSWORD
            name: awsjekyll
      - name: DATADOG_API_KEY
        valueFrom:
          secretKeyRef:
            key: DDAPIKEY
            name: ddjekyll
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:$NEWIMAGE
      imagePullPolicy: Always
      imagePullSecrets:
      - name: myharborreg
      labels:
      - staging-deployment
      repository: idjohnson/jekyll-blog
      resources: {}

Then, in our validation flow before promotion, we would use the custom label in our testing stage:

jobs:
  validation:
    runs-on: staging-deployment
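The `$NEWIMAGE` placeholder in the staging RunnerDeployment has to be filled in before the manifest is applied. One way to do that (an assumption, not something the current workflow does) is a `sed` substitution ahead of `kubectl apply`. The `1.2.3` tag and the two-line stand-in template below are hypothetical:

```shell
#!/bin/sh
set -eu

NEWIMAGE="1.2.3"   # hypothetical candidate tag

# Stand-in for the staging RunnerDeployment template; only the image line matters here.
template="$(mktemp)"
cat > "$template" <<'EOF'
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:$NEWIMAGE
      imagePullPolicy: Always
EOF

# Replace the literal text $NEWIMAGE with the candidate tag.
# [$] keeps sed from treating the dollar sign as an end-of-line anchor.
rendered="$(sed 's/[$]NEWIMAGE/'"$NEWIMAGE"'/g' "$template")"
printf '%s\n' "$rendered"

rm -f "$template"

# With the full manifest as the template and cluster access available,
# the rendered output could be applied with:
#   printf '%s\n' "$rendered" | kubectl apply -f -
```

A plain substitution keeps the template readable as ordinary YAML. Tools such as `envsubst` or Helm would work as well, but they bring their own dependencies onto the runner.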

I should also point out that the SummerWind style RunnerDeployment does have a field “dockerRegistryMirror” that, provided you use container registry repositories with public endpoints, would allow a fallback endpoint. It is really intended to work around docker.io rate-limiting, but would likely be sufficient for DR on a private registry.

# Optional Docker registry mirror
# Docker Hub has an aggressive rate-limit configuration for free plans.
# To avoid disruptions in your CI/CD pipelines, you might want to setup an external or on-premises Docker registry mirror.
# More information:
# - https://docs.docker.com/docker-hub/download-rate-limit/
# - https://cloud.google.com/container-registry/docs/pulling-cached-images
dockerRegistryMirror: https://mirror.gcr.io/

But that is a topic for a follow-up blog, coming soon, that will cover next steps in testing and rollout as well as further cost mitigation strategies.