Published: Feb 1, 2022 by Isaac Johnson

Anthos is Google’s offering for Hybrid Cloud management leveraging Service Mesh (managed Istio), GKE and Cloud Run (managed Knative). Releases of Anthos go back to June of 2019, and one can reference the support matrix to see how releases relate to versions of supported services.

Anthos is somewhat similar to Azure Arc in that it lets you extend GCP to the Hybrid-Cloud and On-Prem. Google’s own summary of Anthos:

Anthos lets you build and manage modern applications on Google Cloud, existing on-premises environments, or public cloud environments. Built on open source technologies pioneered by Google—including Kubernetes, Istio, and Knative—Anthos enables consistency between on-premises and cloud environments. Anthos helps accelerate application development and strategically enables your business with transformational technologies such as service mesh, containers, and microservices.

To see how this works, we will connect an on-prem cluster into Anthos (GKE). We’ll explore some of the features of Anthos (Service Mesh, Mapping, Backups) and compare Anthos to Arc. We’ll also onboard an AKS cluster (alongside a GKE cluster already in Anthos). Lastly, we will check out what additional things we can do with Anthos as well as touch on costs.

Getting Started

We’ll start by following this GCP quickstart with a few notes and modifications.

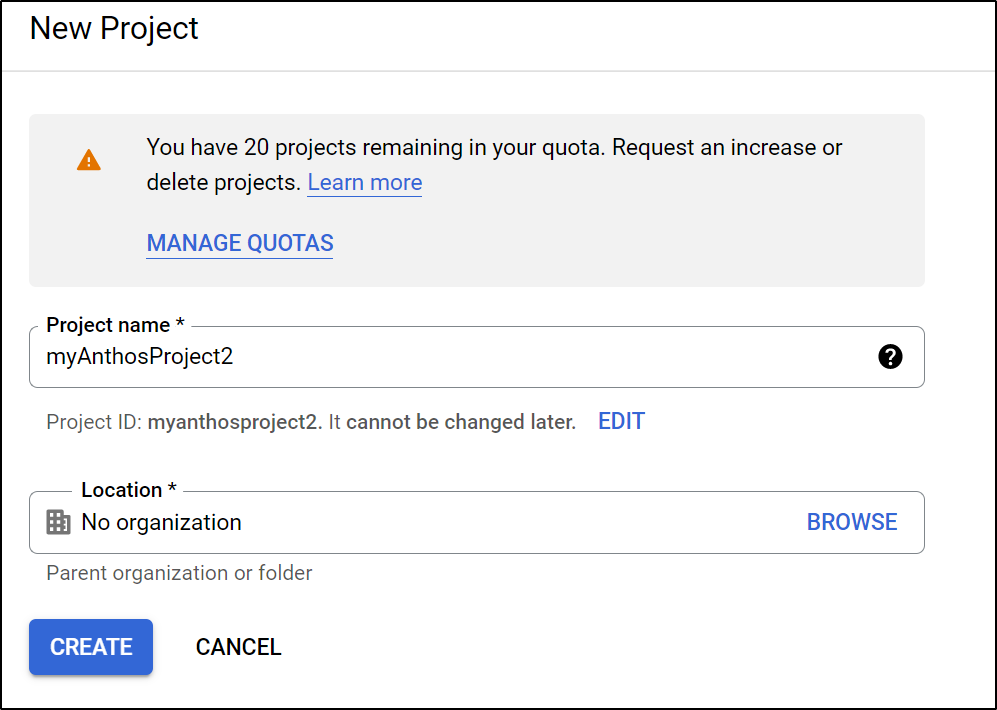

First, we need a GCP project to use. We can create this in the Cloud Console UI or via the gcloud CLI.

$ gcloud projects create --name anthosTestProject --enable-cloud-apis

No project id provided.

Use [anthostestproject-338921] as project id (Y/n)? y

Create in progress for [https://cloudresourcemanager.googleapis.com/v1/projects/anthostestproject-338921].

Waiting for [operations/cp.7026130181910601516] to finish...done.

Enabling service [cloudapis.googleapis.com] on project [anthostestproject-338921]...

Operation "operations/acf.p2-81597672192-4f79b60e-8101-4a5e-bcfe-b2e91f14ae95" finished successfully.

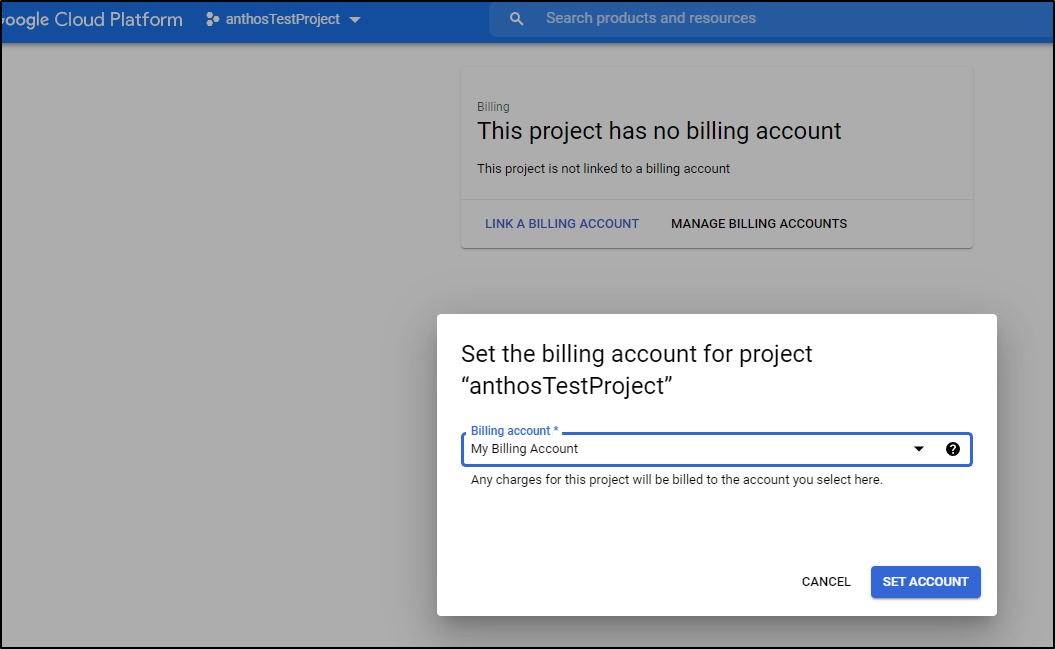

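I will admit I tried to keep it to the CLI, but the CLI would not accept my billing account. (The --billing-project flag expects a project ID to charge API quota against, not a billing account name, hence the error.)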

$ gcloud projects create --name anthosTestProject2 --enable-cloud-apis --billing-project "My Billing Account"

No project id provided.

Use [anthostestproject2-338921] as project id (Y/n)? y

ERROR: (gcloud.projects.create) INVALID_ARGUMENT: Project 'project:My Billing Account' not found or deleted.
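For reference, here is a minimal sketch of how the billing link might be done from the CLI instead. It assumes the `gcloud beta billing` command group is installed and that billing account IDs follow the three-groups-of-six pattern; the function names and the `DRY_RUN` switch are hypothetical helpers, not part of gcloud.

```shell
# Sketch: link a billing account to a project from the CLI.
# Assumption: IDs look like three dash-separated groups of six characters.
is_billing_account_id() {
  printf '%s\n' "$1" | grep -Eq '^[0-9A-Z]{6}-[0-9A-Z]{6}-[0-9A-Z]{6}$'
}

link_billing() {
  # $1 = project ID, $2 = billing account ID (not its display name)
  if ! is_billing_account_id "$2"; then
    echo "not a billing account ID: $2" >&2
    return 1
  fi
  # With DRY_RUN set, print the command instead of running it
  ${DRY_RUN:+echo} gcloud beta billing projects link "$1" --billing-account="$2"
}

# Look up the real ID first with: gcloud beta billing accounts list
# Usage: link_billing anthostestproject-338921 <ACCOUNT_ID>
```

Passing a display name such as "My Billing Account" is rejected before gcloud is ever called, which is roughly the mistake made above.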

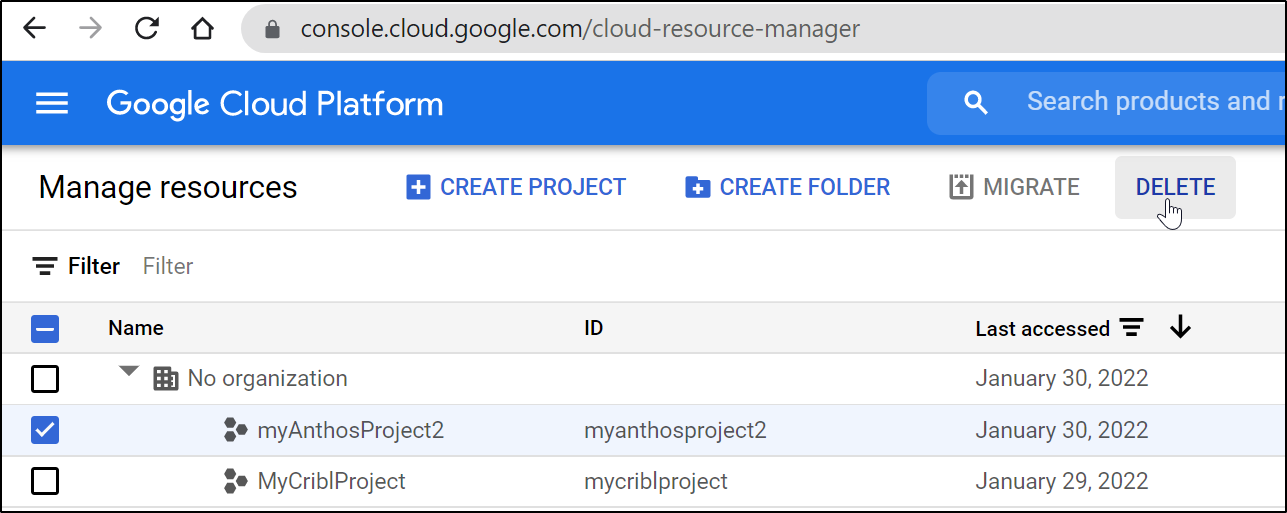

That said, I could do it via the UI after the fact:

Next we want to set the default compute zone. In fact, just listing the zones will allow us to enable the Compute API at the same time:

$ gcloud compute zones list --filter="name~'us'"

API [compute.googleapis.com] not enabled on project [81597672192]. Would you like to enable and retry (this will take a few minutes)? (y/N)? y

Enabling service [compute.googleapis.com] on project [81597672192]...

Operation "operations/acf.p2-81597672192-76fe9aba-5c73-4765-8fd4-a426b0e39db4" finished successfully.

NAME REGION STATUS NEXT_MAINTENANCE TURNDOWN_DATE

us-east1-b us-east1 UP

us-east1-c us-east1 UP

us-east1-d us-east1 UP

us-east4-c us-east4 UP

us-east4-b us-east4 UP

us-east4-a us-east4 UP

us-central1-c us-central1 UP

us-central1-a us-central1 UP

us-central1-f us-central1 UP

us-central1-b us-central1 UP

us-west1-b us-west1 UP

us-west1-c us-west1 UP

us-west1-a us-west1 UP

australia-southeast1-b australia-southeast1 UP

australia-southeast1-c australia-southeast1 UP

australia-southeast1-a australia-southeast1 UP

australia-southeast2-a australia-southeast2 UP

australia-southeast2-b australia-southeast2 UP

australia-southeast2-c australia-southeast2 UP

us-west2-a us-west2 UP

us-west2-b us-west2 UP

us-west2-c us-west2 UP

us-west3-a us-west3 UP

us-west3-b us-west3 UP

us-west3-c us-west3 UP

us-west4-a us-west4 UP

us-west4-b us-west4 UP

us-west4-c us-west4 UP

Then set a zone near you:

$ gcloud config set compute/zone us-central1-f

Updated property [compute/zone].

And enable the Service Management API:

$ gcloud services enable servicemanagement.googleapis.com

We will want to check our project next. However, the script from Google (https://github.com/GoogleCloudPlatform/anthos-sample-deployment/releases/latest/download/asd-prereq-checker.sh) uses bash-only syntax without asking for bash as its interpreter, so you’ll get an error (at least in WSL) if you run it directly:

$ wget https://github.com/GoogleCloudPlatform/anthos-sample-deployment/releases/latest/download/asd-prereq-checker.sh

$ chmod u+x ./asd-prereq-checker.sh

$ ./asd-prereq-checker.sh

./asd-prereq-checker.sh: 13: [[: not found

./asd-prereq-checker.sh: 73: function: not found

./asd-prereq-checker.sh: 79: [[: not found

./asd-prereq-checker.sh: 79: ROLE

roles/owner: not found

./asd-prereq-checker.sh: 79: ROLE

roles/owner: not found

Just fix the interpreter and run it:

$ sed -i 's/bin\/sh/bin\/bash/g' asd-prereq-checker.sh

$ ./asd-prereq-checker.sh

Checking project anthostestproject-338921, region us-central1, zone us-central1-f

PASS: User has permission to create service account with the required IAM policies.

PASS: Org Policy will allow this deployment.

PASS: Service Management API is enabled.

WARNING: The following filter keys were not present in any resource : name

PASS: Anthos Sample Deployment does not already exist.

PASS: Project ID is valid.

PASS: Project has sufficient quota to support this deployment.
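The substitution used above rewrites every `bin/sh` it finds in the file. A narrower variant, sketched below, only touches the first line. The `fix_shebang` name and the throwaway demo file are hypothetical, and it assumes the script's first line starts with `#!/bin/sh`.

```shell
# Sketch: rewrite only the interpreter line of a script from sh to bash.
fix_shebang() {
  # "1s" limits the substitution to line 1; "|" as delimiter avoids escaping slashes.
  # -i.bak keeps a backup and works with both GNU and BSD sed.
  sed -i.bak '1s|^#!/bin/sh|#!/bin/bash|' "$1"
}

# Demo on a throwaway file rather than the real checker script
demo=$(mktemp)
printf '#!/bin/sh\necho "/bin/sh stays untouched here"\n' > "$demo"
fix_shebang "$demo"
head -n 1 "$demo"   # prints: #!/bin/bash
```

Running `bash ./asd-prereq-checker.sh` would also sidestep the problem without editing the file, since an explicit interpreter ignores the first line.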

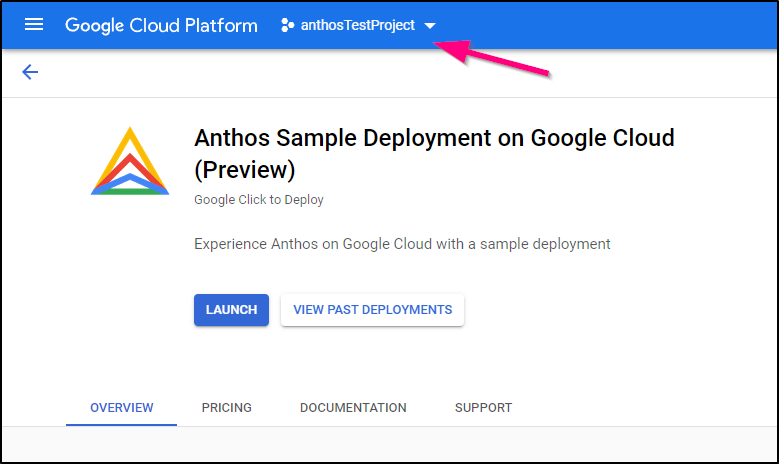

Our next step is to launch the Anthos Sample Deployment.

(note: make sure you select the right project first as GCP console tends to default to the last project you viewed)

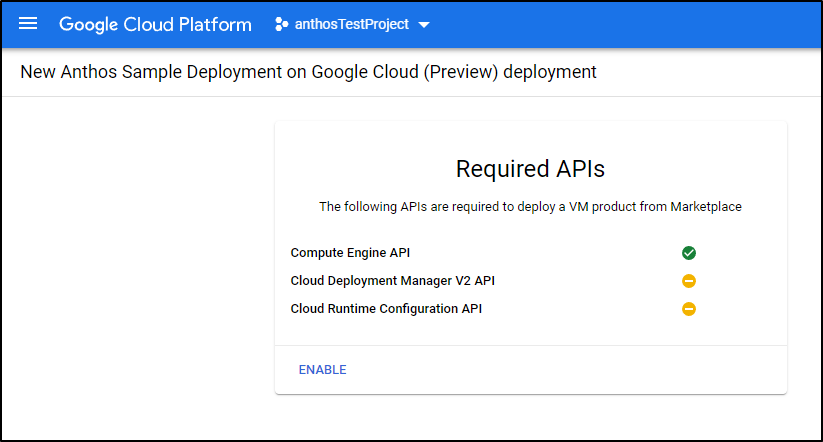

Enable any additional APIs you might have missed:

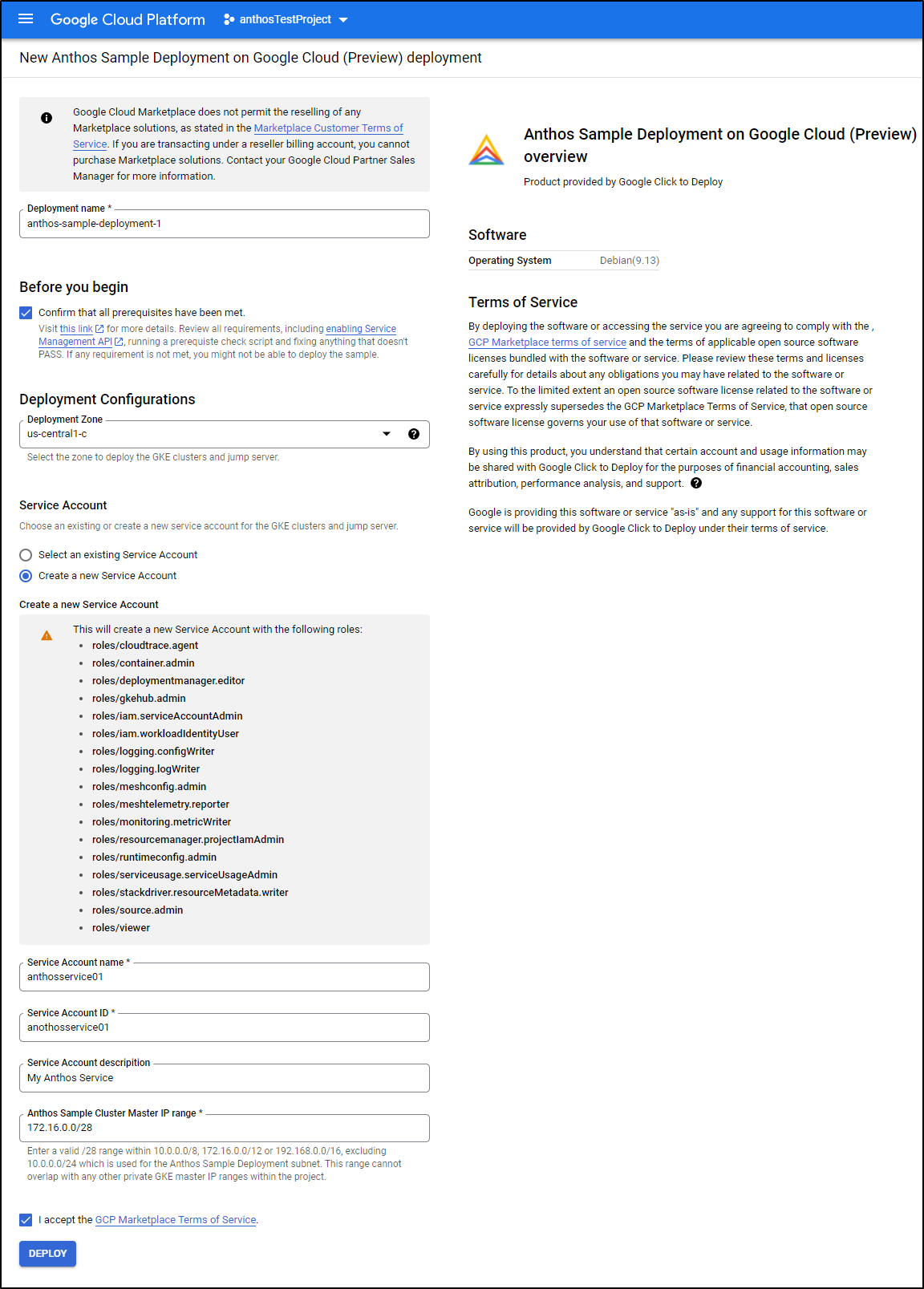

I chose to create a new Service Account for this (just to make cleanup easier later). Click Deploy to deploy.

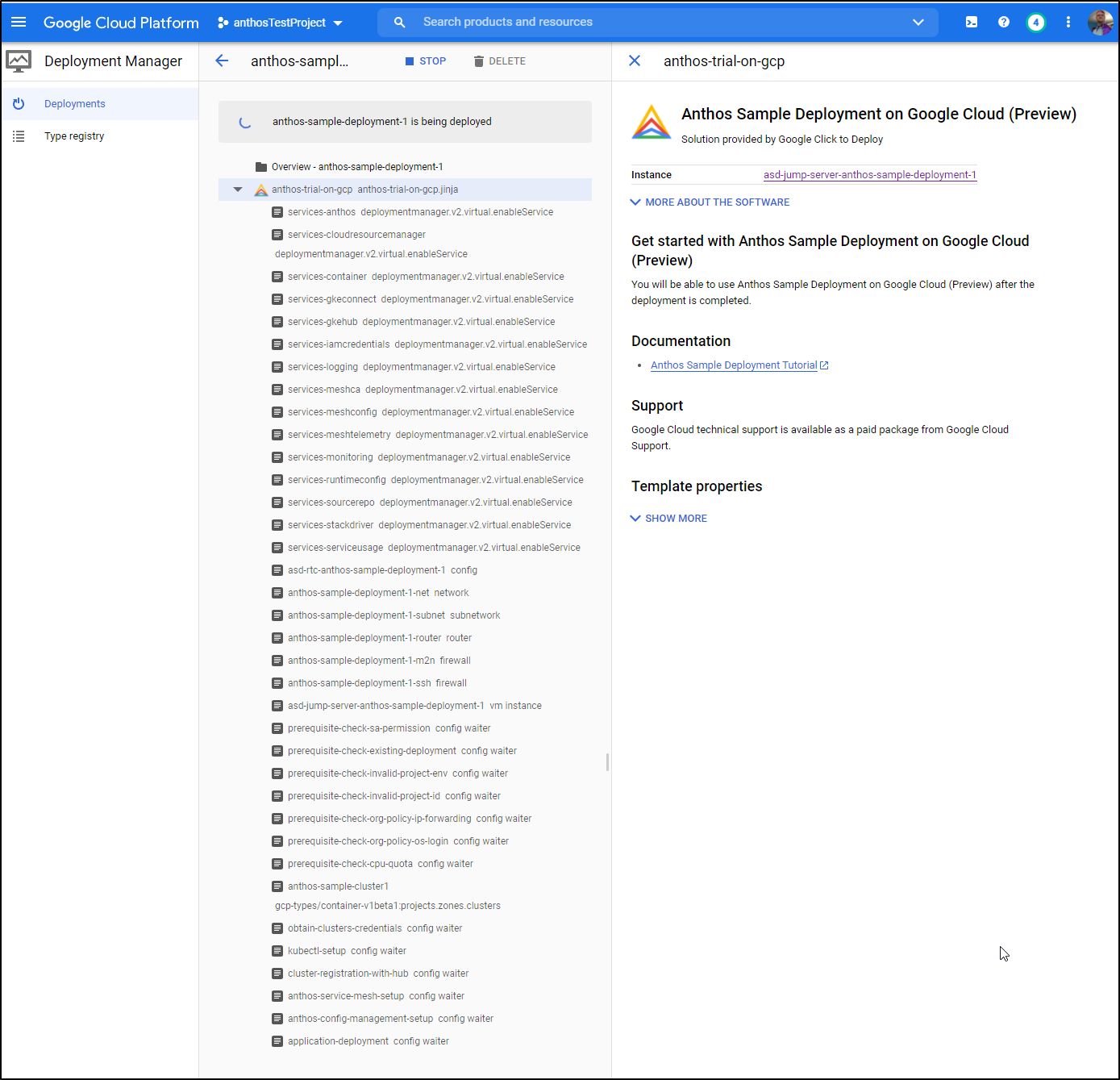

We will now see Anthos start the deployment process which might take some time (around 15 minutes).

When it completed, I got an email:

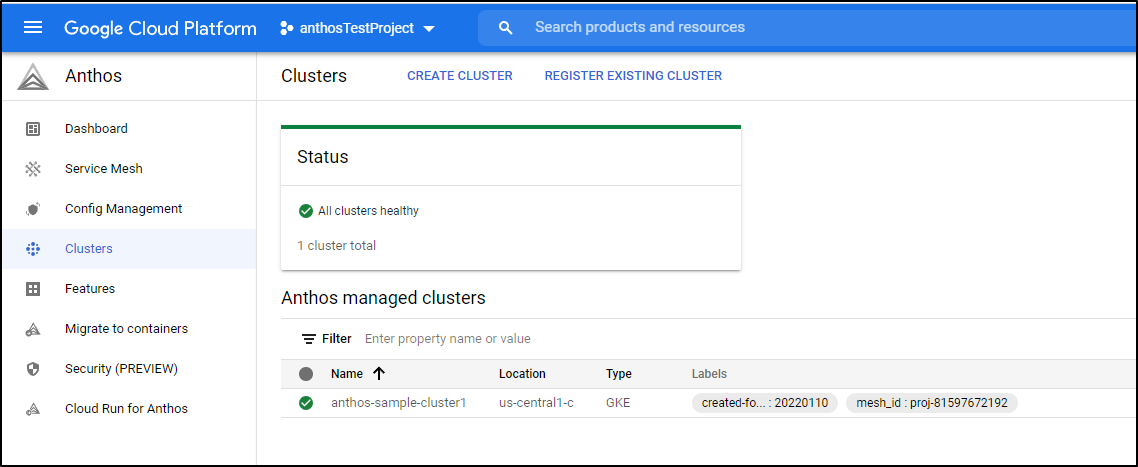

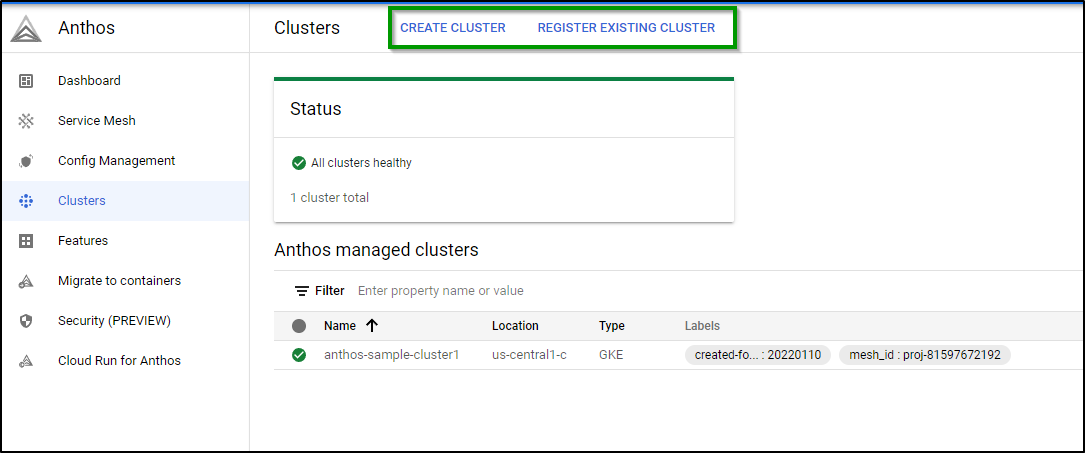

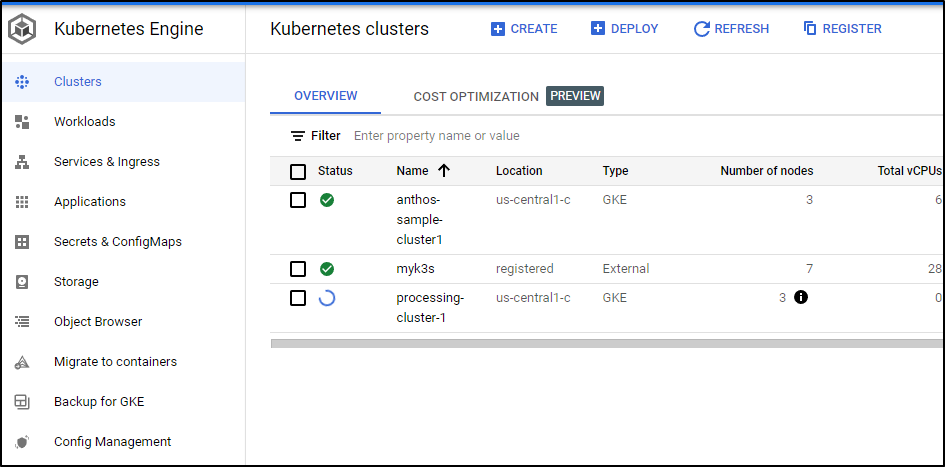

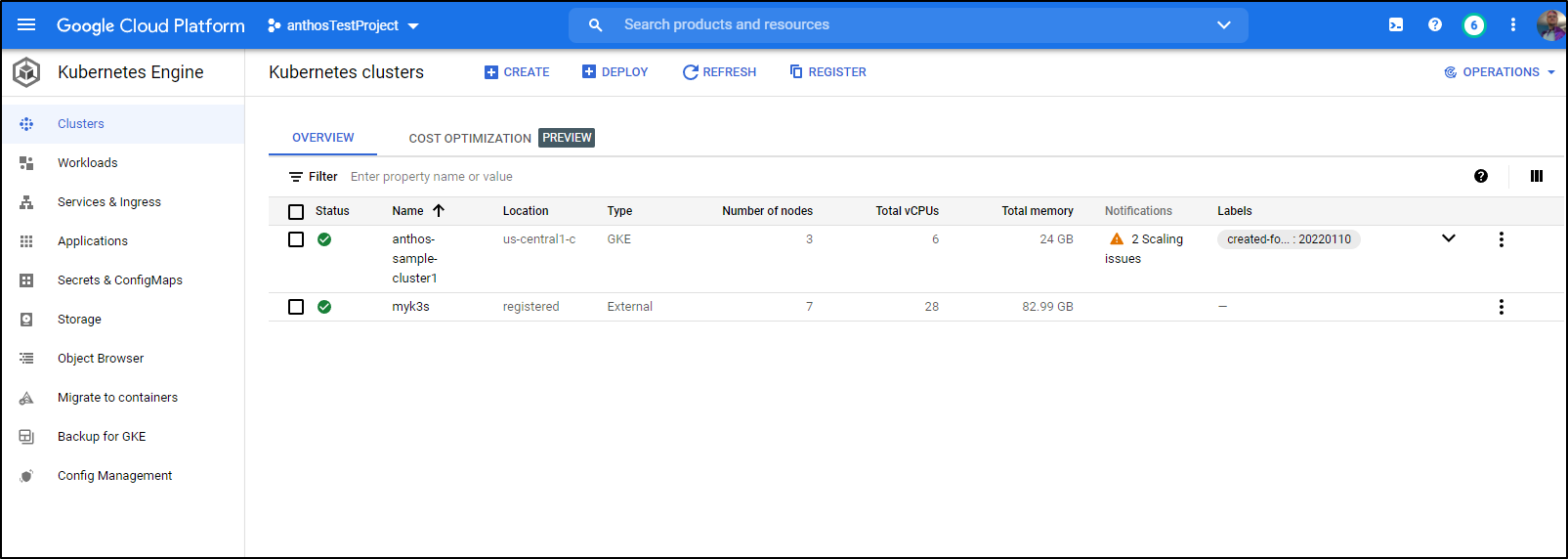

Under Anthos Clusters we can now see a GKE cluster:

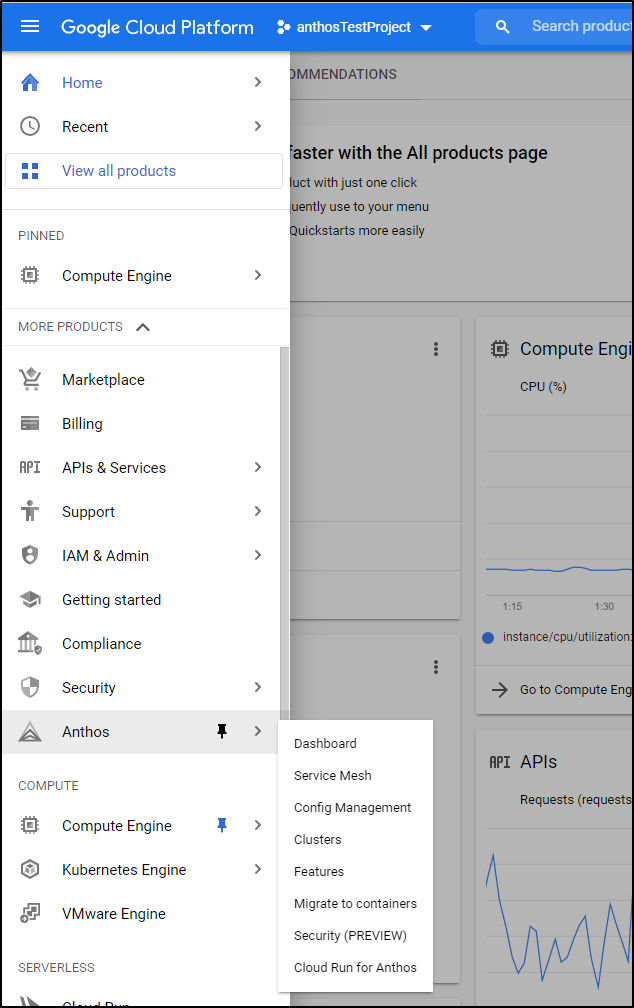

(Note: you can also find all the Anthos settings under a new top level API menu in GCP Console called “Anthos”)

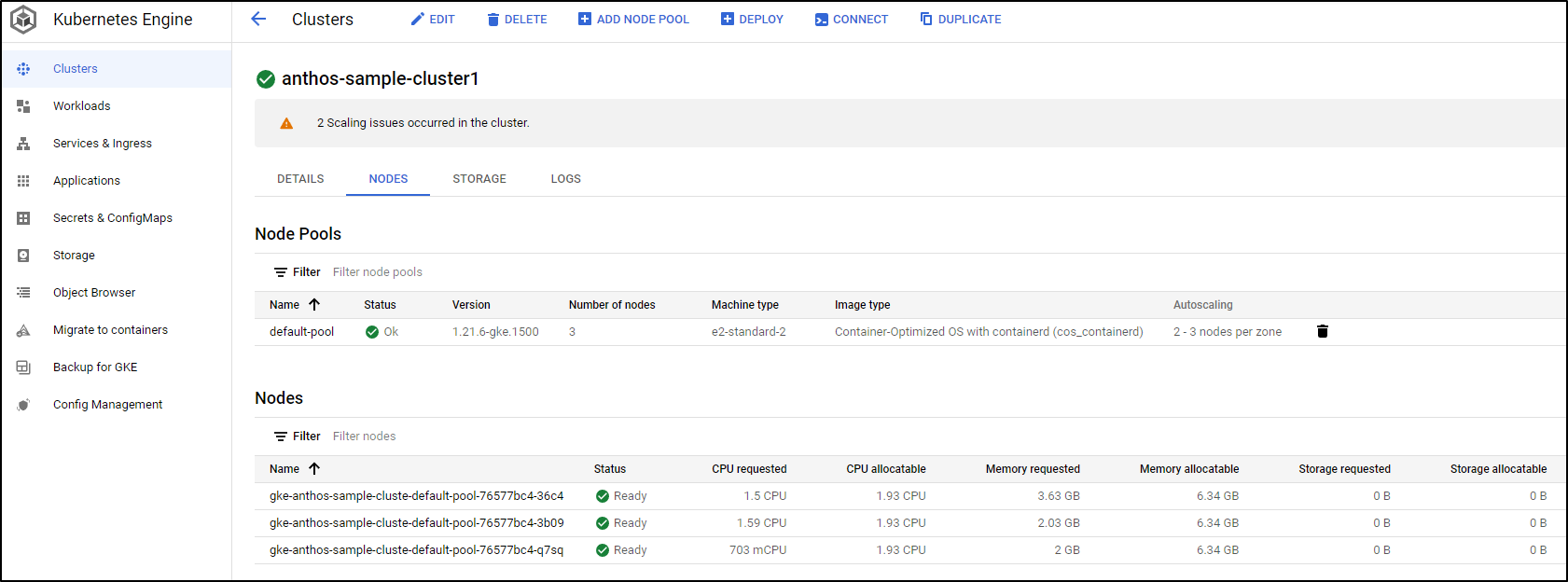

We can also dig up details of the cluster in GKE (such as Node details)

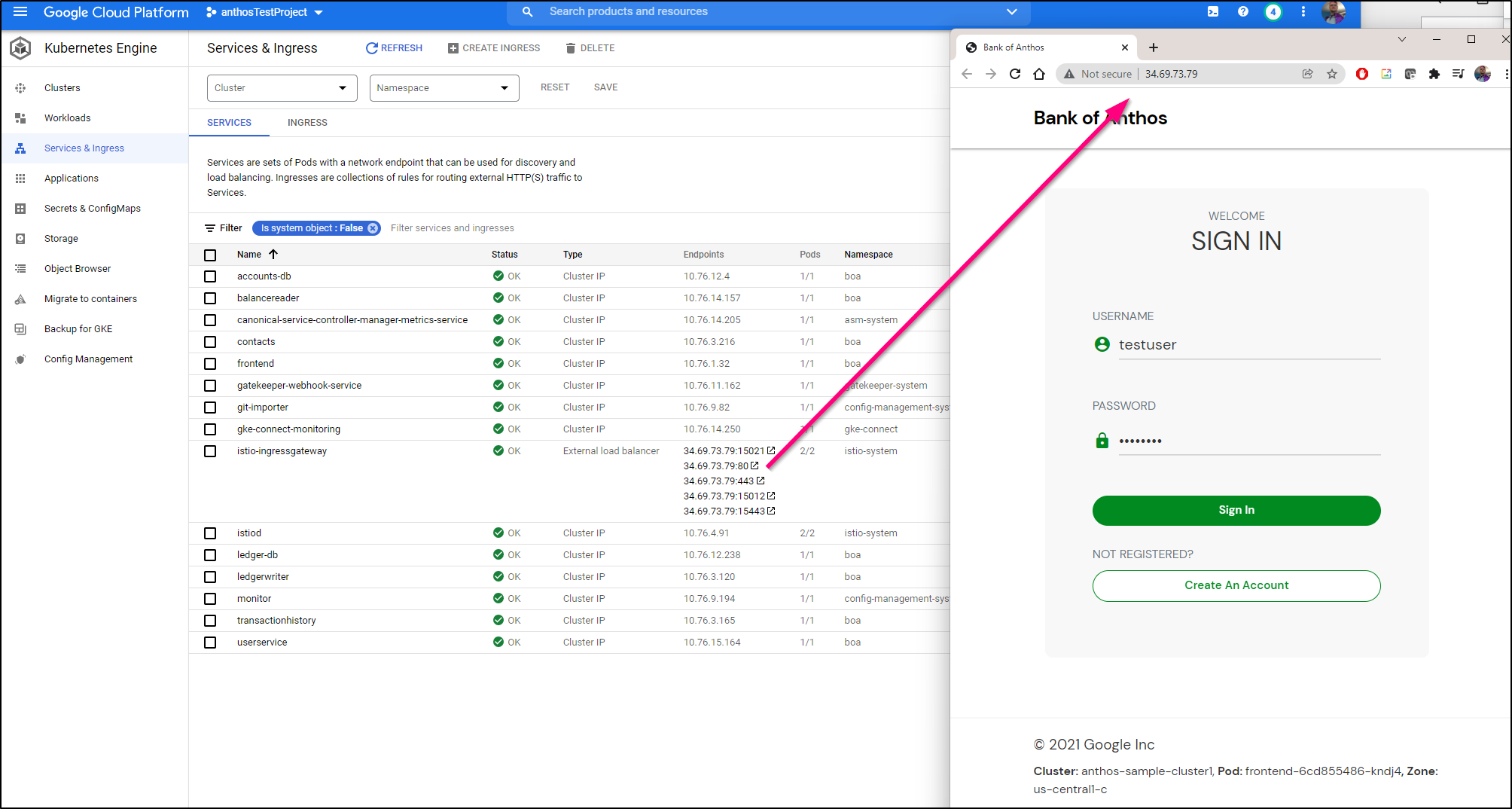

We can look at Workloads to see Pods and Ingresses to see our Ingresses. Looking there we can find the front end to this “bank” app sample:

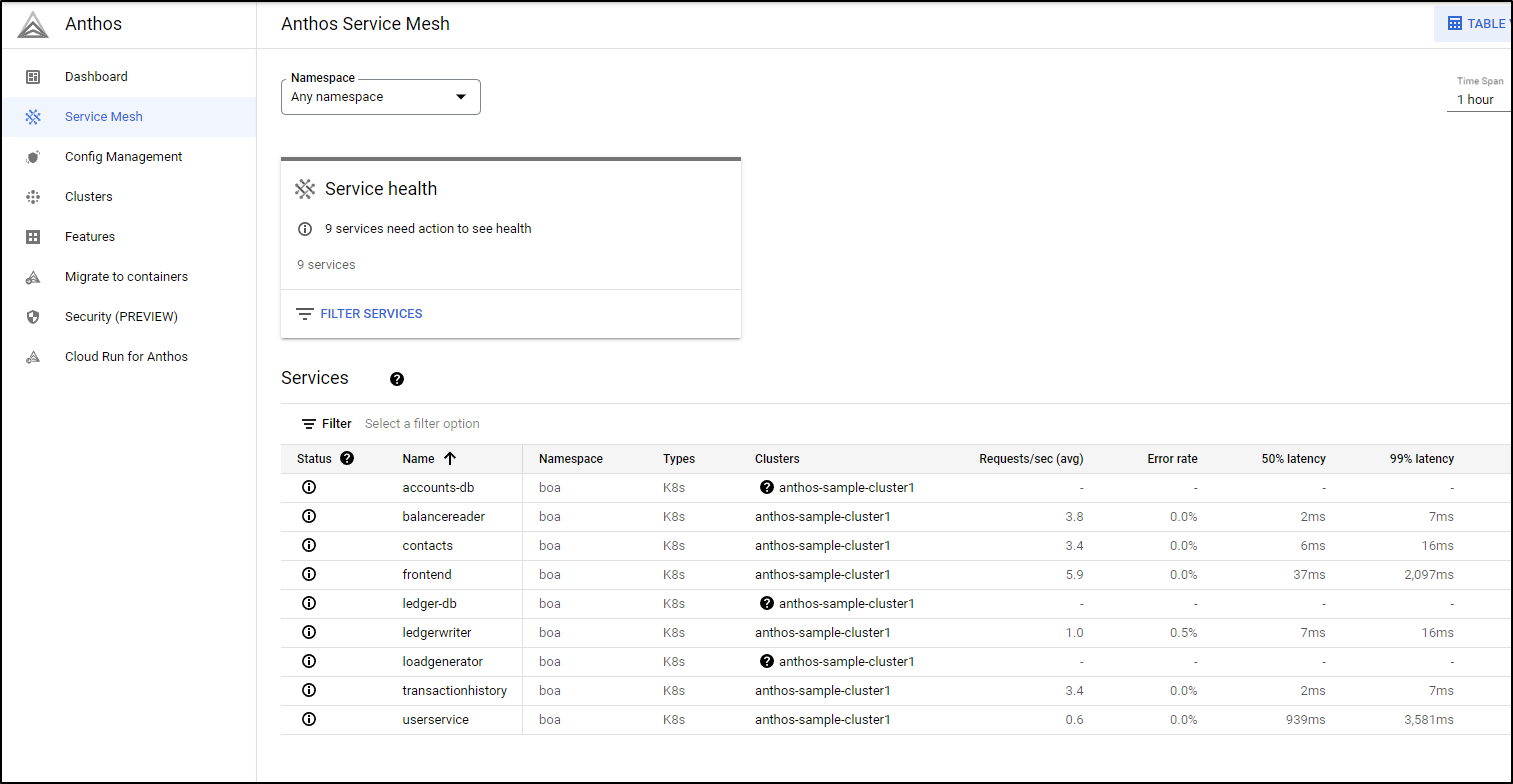

Because we are leveraging the Istio mesh, we can go to the Service Mesh page to see details of our services. The frontend entry there maps to the web service we checked out above.

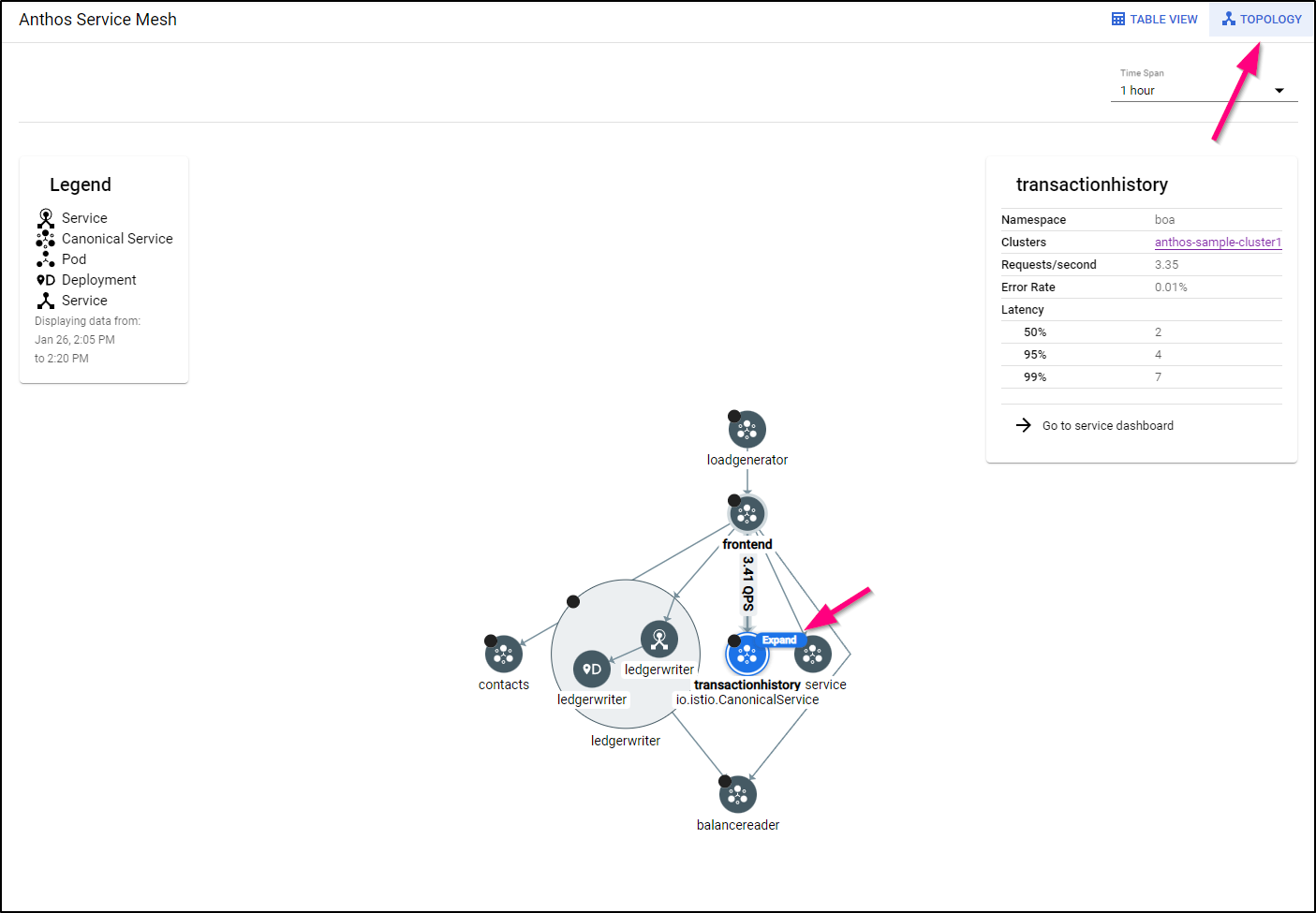

Besides the Table View, there is a great Topology view that shows a map of our services with the ability to expand any top-level service.

SLOs and SLIs

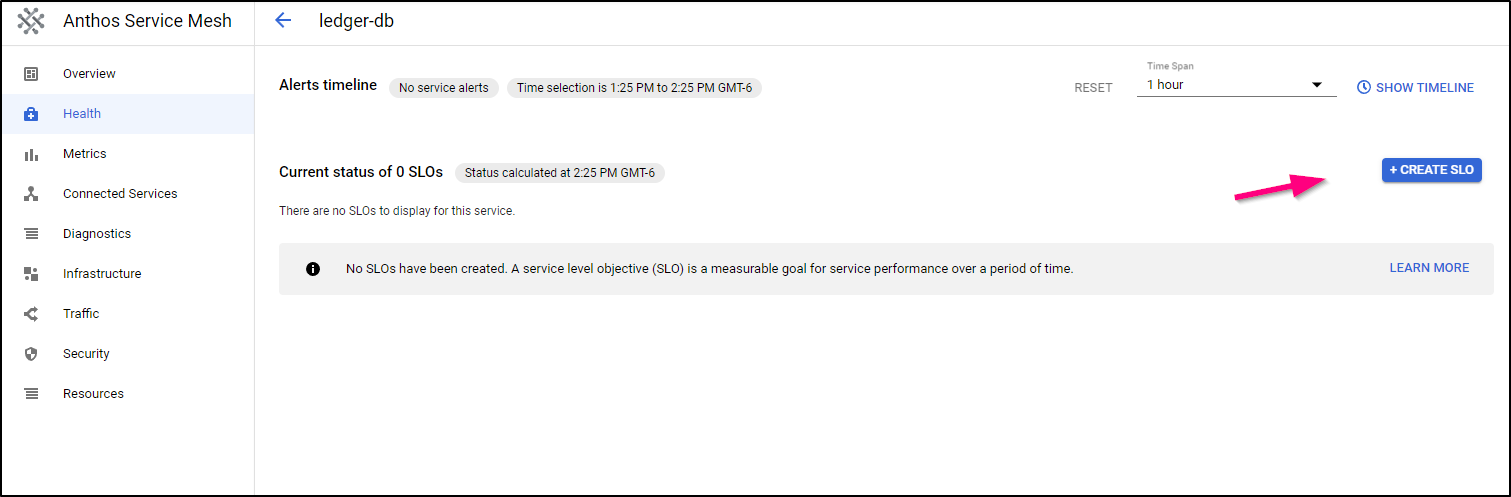

One of the handy parts of Anthos Service Mesh is that we can use metrics to generate Service Level Indicators and Objectives.

To do this, select a service and choose “Create SLO”

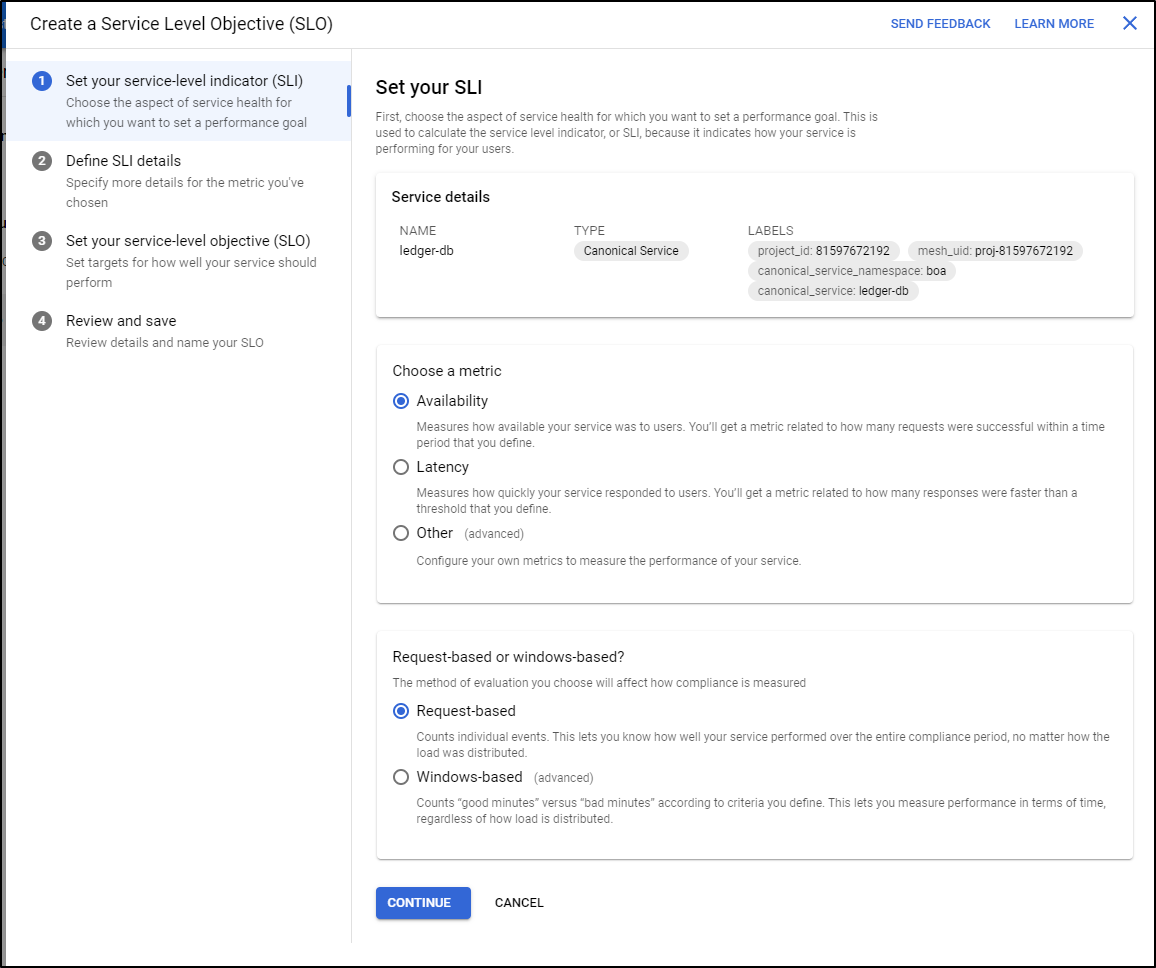

Then select the type of SLI

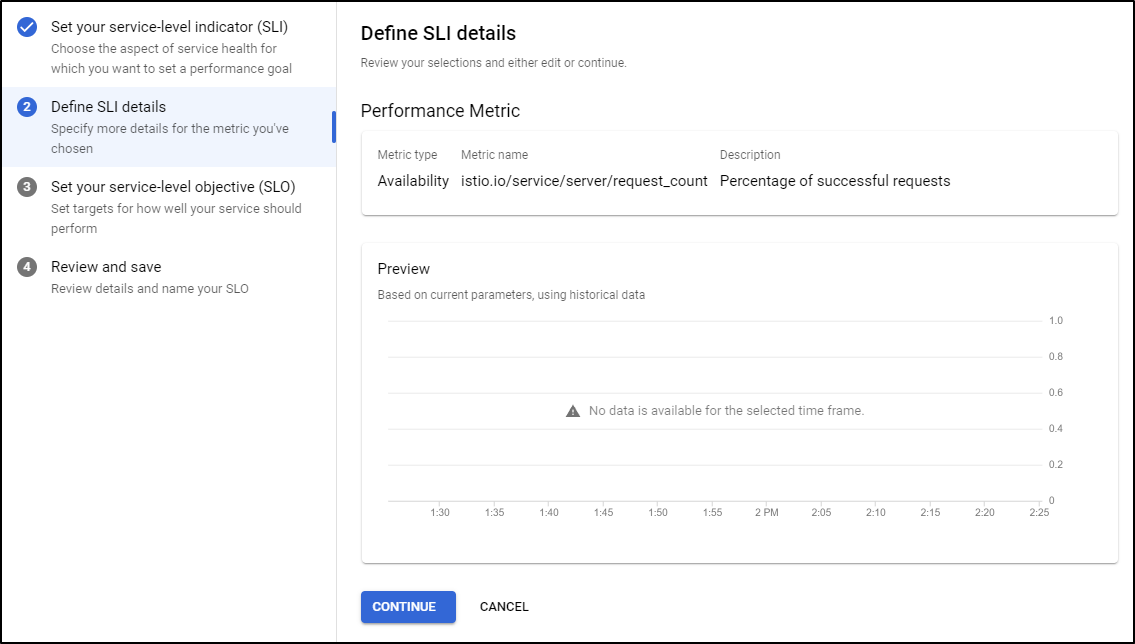

Set any details on SLI (if applicable)

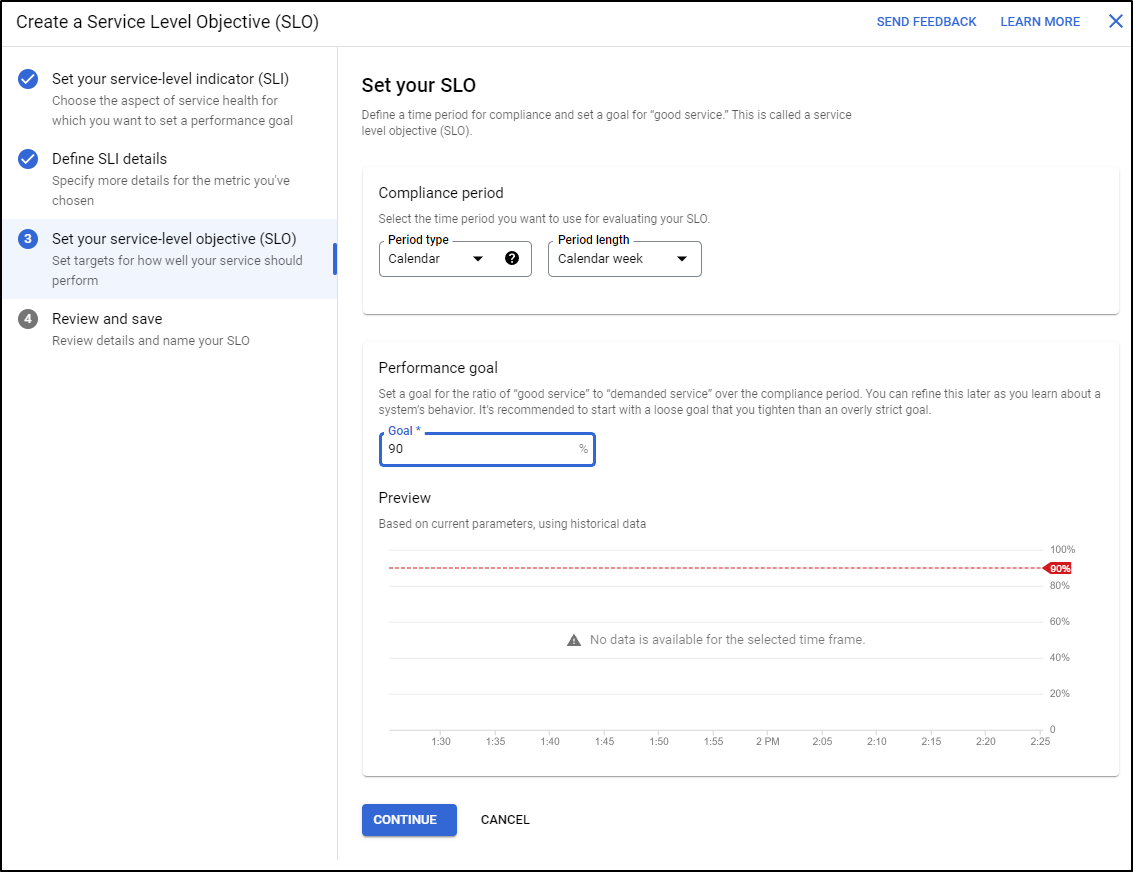

Now set your SLO (Objective). We’ll want a specific target (such as a percentage) and a compliance period (such as a week).

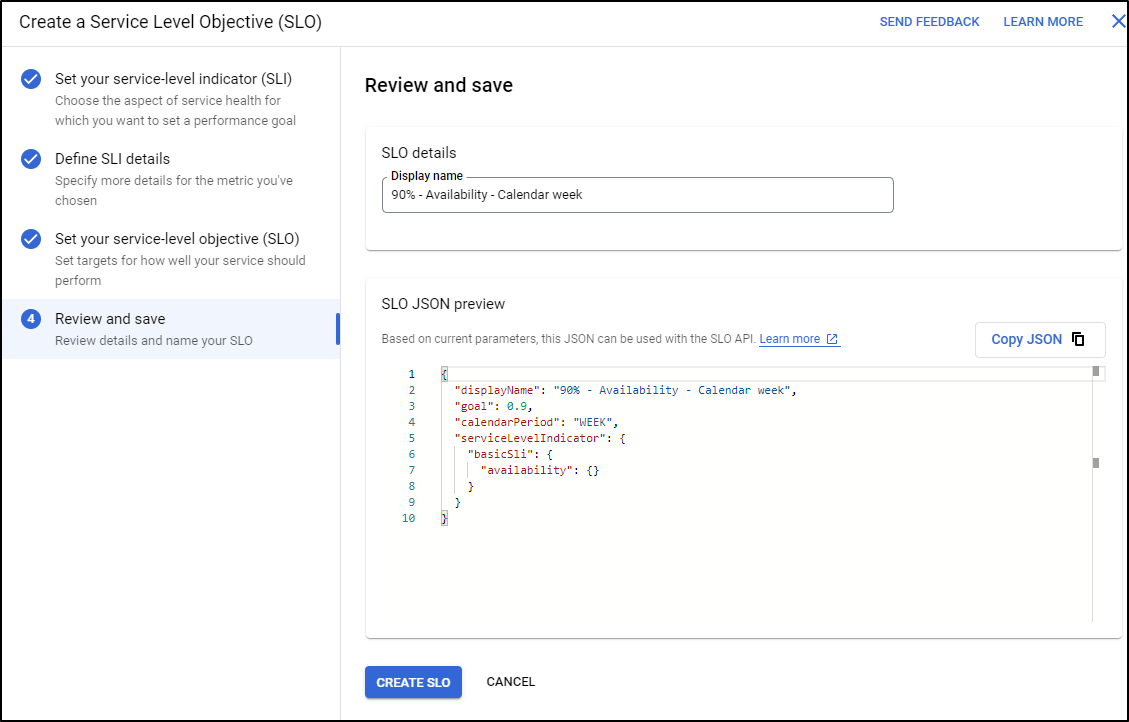

Then we can see the JSON and save it

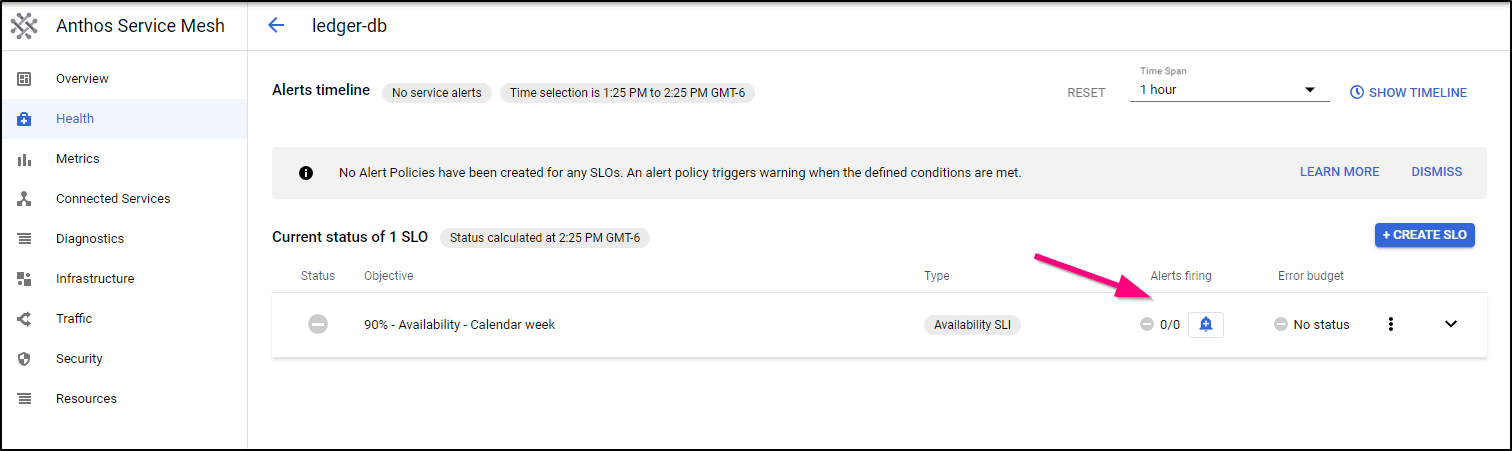

Now I can stop here and just use the Health Dashboard to see SLOs. However, we likely want to create an alert. Click the “+” Alert icon

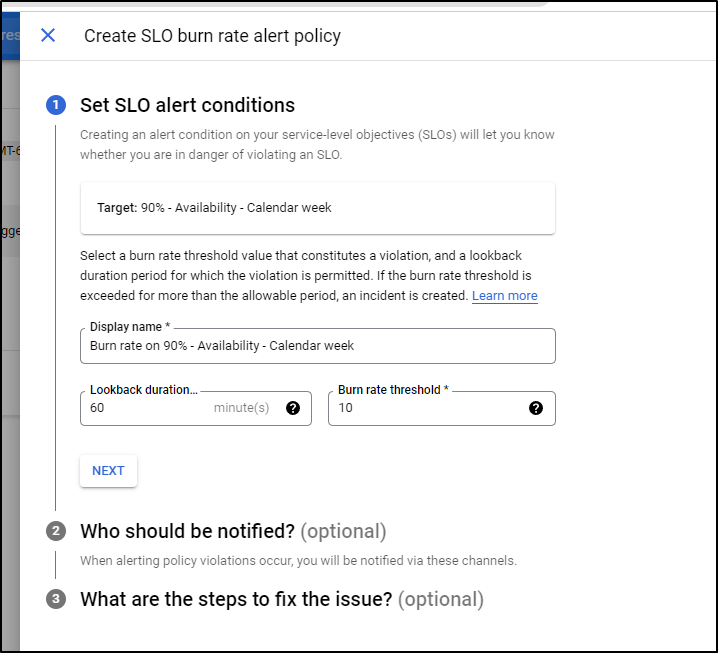

This brings up the alerting menu

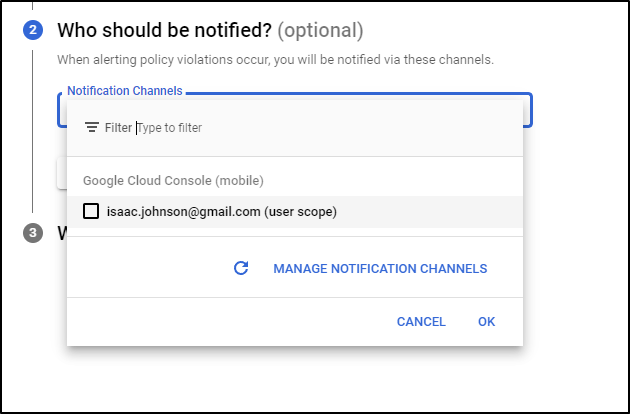

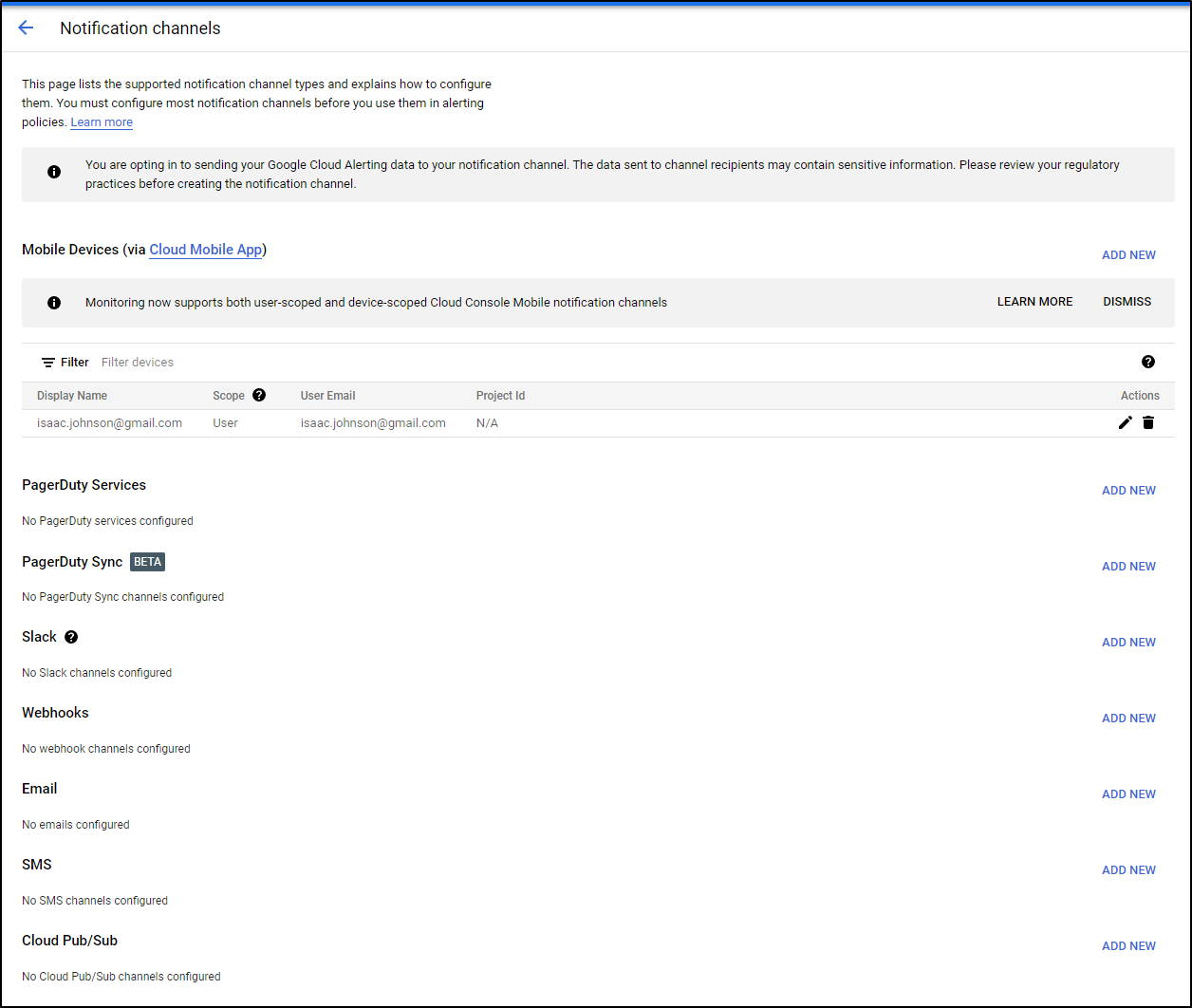

Then choose who should be notified:

Notifications, by default, include email, but we can do all sorts of fun stuff including Slack, PagerDuty or even webhooks (which could be anything, such as Rundeck).

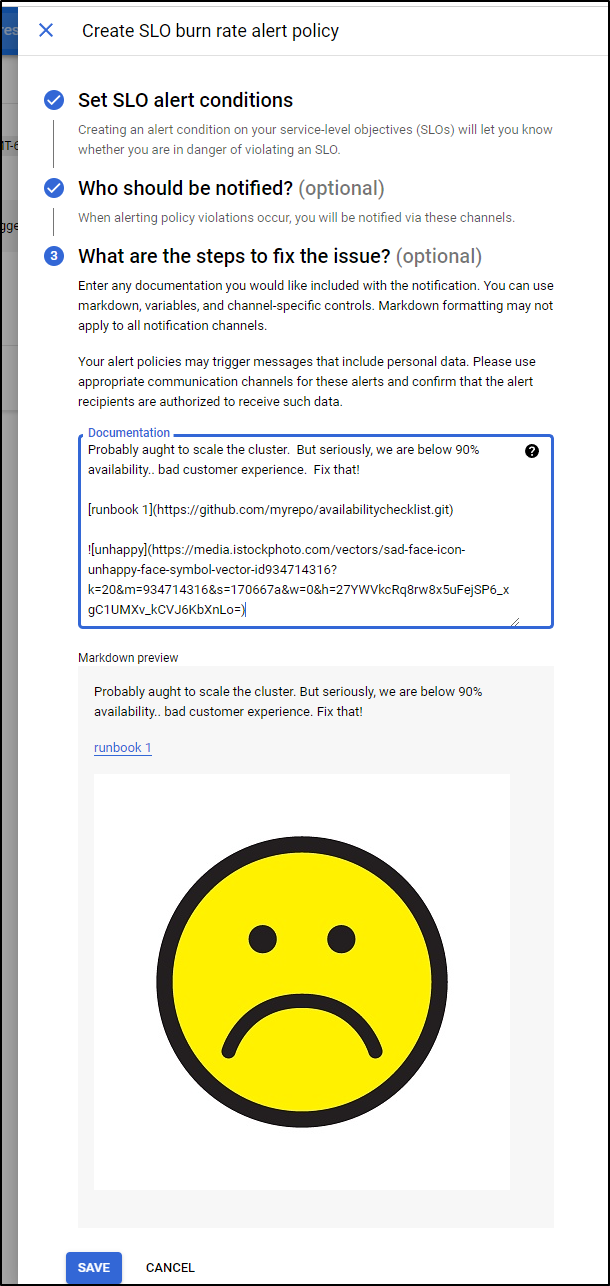

Our alerts can include some markdown including links and images

Adding a Cluster to Anthos

We can use bmctl to create a cluster from scratch (akin to kubeadm). This integrates with AWS, vSphere and bare metal. Use “Create Cluster” to create a brand new cluster this way.

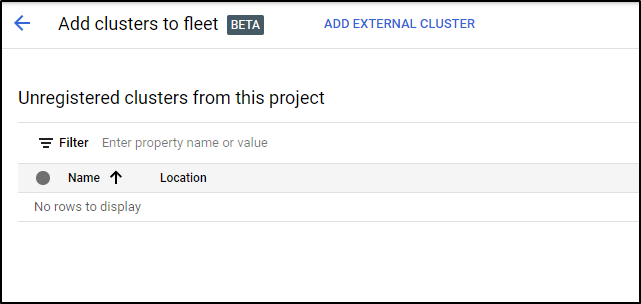

Otherwise, if you want to add an on-prem or ‘other cloud’ cluster already running, use “Register Existing Cluster” to add a cluster to a fleet

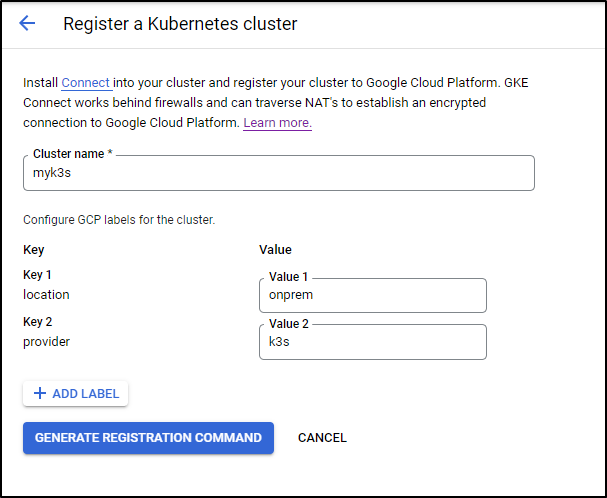

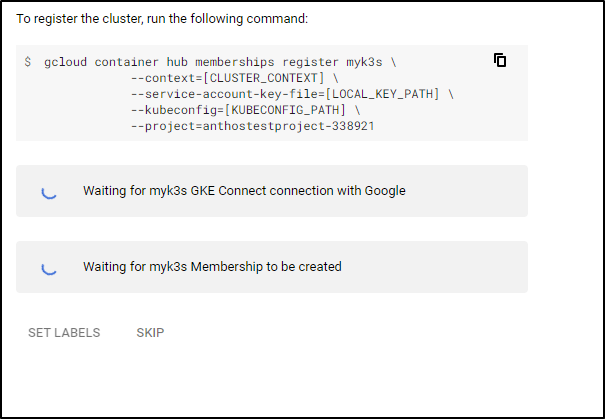

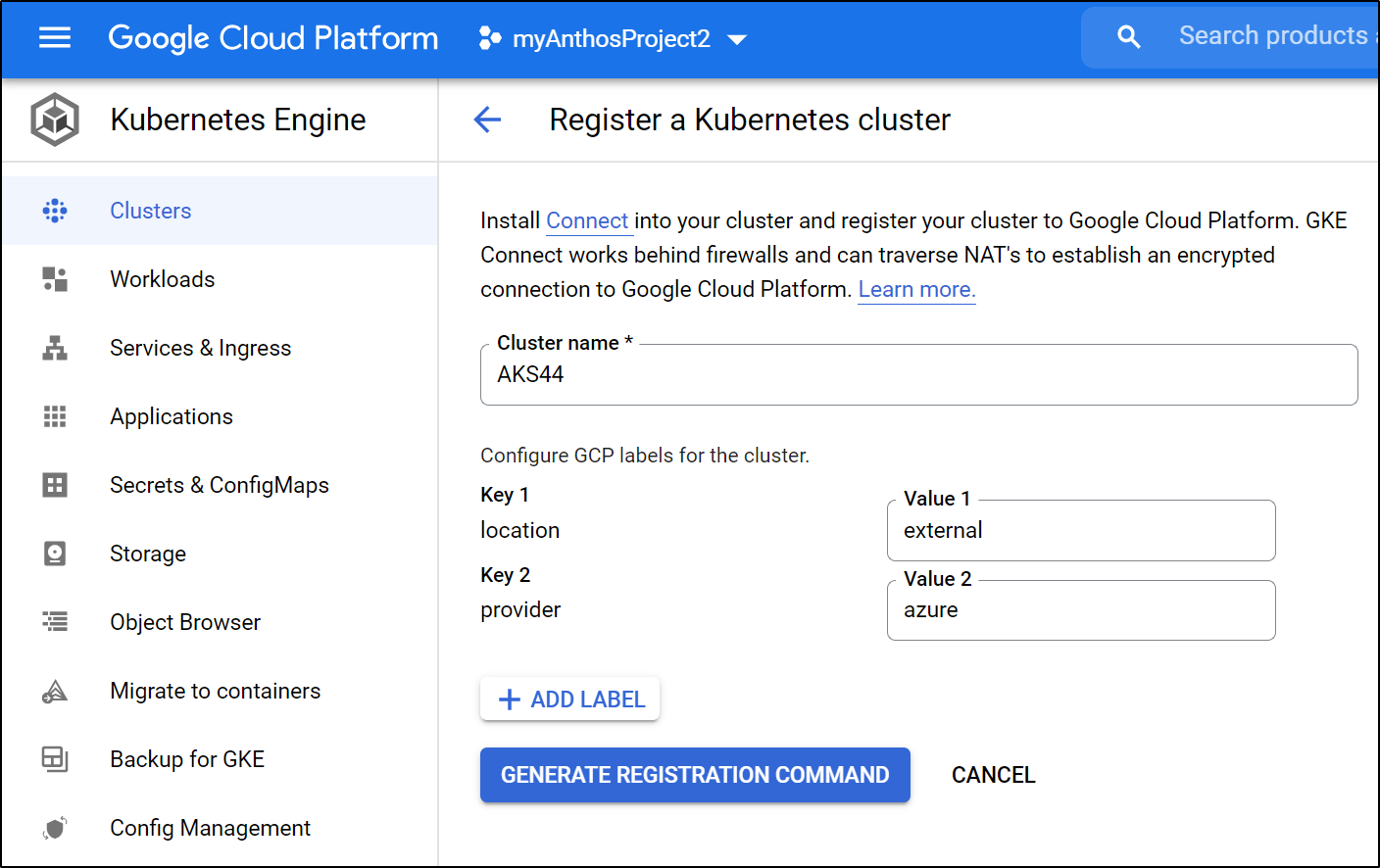

Click “Add External Cluster”. From there we can set any applicable labels, then click the “Generate Registration Command” button.

For this we need an SA that can access the APIs:

$ GKE_PROJECT_ID=anthostestproject-338921

$ FLEET_HOST_PROJECT_ID=anthostestproject-338921

$ FLEET_HOST_PROJECT_NUMBER=$(gcloud projects describe "${FLEET_HOST_PROJECT_ID}" --format "value(projectNumber)")

# adding a policy

$ gcloud projects add-iam-policy-binding "${FLEET_HOST_PROJECT_ID}" --member "serviceAccount:service-${FLEET_HOST_PROJECT_NUMBER}@anthostestproject-338921.iam.gserviceaccount.com" --role roles/gkehub.serviceAgent

Updated IAM policy for project [anthostestproject-338921].

bindings:

- members:

- serviceAccount:service-81597672192@gcp-sa-anthosconfigmanagement.iam.gserviceaccount.com

...snip....

$ gcloud projects add-iam-policy-binding "${GKE_PROJECT_ID}" --member "serviceAccount:service-${FLEET_HOST_PROJECT_NUMBER}@anthostestproject-338921.iam.gserviceaccount.com" --role roles/gkehub.serviceAgent

Updated IAM policy for project [anthostestproject-338921].

bindings:

- members:

- serviceAccount:service-81597672192@gcp-sa-anthosconfigmanagement.iam.gserviceaccount.com

role: roles/anthosconfigmanagement.serviceAgent

...snip...

Then we can list our service accounts and download the key:

$ gcloud iam service-accounts list

DISPLAY NAME EMAIL DISABLED

anthosservice01 anothosservice01@anthostestproject-338921.iam.gserviceaccount.com False

service-81597672192@anthostestproject-338921.iam.gserviceaccount.com False

Compute Engine default service account 81597672192-compute@developer.gserviceaccount.com False

$ gcloud iam service-accounts keys create ./mykeys.json --iam-account="service-81597672192@anthostestproject-338921.iam.gserviceaccount.com"

created key [089d8a600365b7d60f0fbbfa7c55c602792ab01e] of type [json] as [./mykeys.json] for [service-81597672192@anthostestproject-338921.iam.gserviceaccount.com]

So we need the current context and kubeconfig file:

$ cat ~/.kube/config | grep ^current-context

current-context: default
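As an alternative to grepping the kubeconfig, `kubectl config current-context` prints the active context directly. The sketch below uses it to assemble the registration command for review before running it. The `register_cmd` helper is hypothetical, and it assumes `KUBECONFIG` (if set) points at a single file rather than a colon-separated list.

```shell
# Sketch: build the registration command from the active kubectl context.
register_cmd() {
  # $1 = membership name, $2 = service account key file, $3 = project ID
  ctx=$(kubectl config current-context)
  kubeconfig="${KUBECONFIG:-$HOME/.kube/config}"
  # Only print the command so it can be inspected first
  echo gcloud container hub memberships register "$1" \
    --context="$ctx" \
    --service-account-key-file="$2" \
    --kubeconfig="$kubeconfig" \
    --project="$3"
}

# Usage: register_cmd myk3s ./mykeys.json anthostestproject-338921
```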

So our command will be:

gcloud container hub memberships register myk3s \

--context=default \

--service-account-key-file=./mykeys.json \

--kubeconfig=/home/builder/.kube/config \

--project=anthostestproject-338921

invoked:

$ gcloud container hub memberships register myk3s \

> --context=default \

> --service-account-key-file=./mykeys.json \

> --kubeconfig=/home/builder/.kube/config \

> --project=anthostestproject-338921

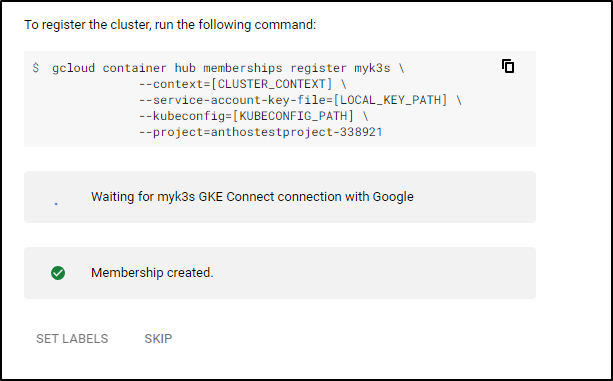

Waiting for membership to be created...done.

Created a new membership [projects/anthostestproject-338921/locations/global/memberships/myk3s] for the cluster [myk3s]

Generating the Connect Agent manifest...

Deploying the Connect Agent on cluster [myk3s] in namespace [gke-connect]...

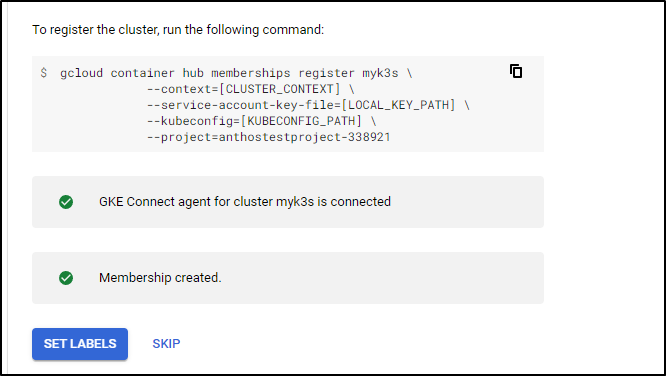

Deployed the Connect Agent on cluster [myk3s] in namespace [gke-connect].

Finished registering the cluster [myk3s] with the Hub.

I did not see it connect and the logs showed a permission issue:

$ kubectl logs -n gke-connect gke-connect-agent-20220107-01-00-66d584578c-p6pk6 | tail -n20

2022/01/26 21:21:12.607956 tunnel.go:324: serve: opening egress stream...

2022/01/26 21:21:12.607930 dialer.go:225: Dial successful, current connections: 1

2022/01/26 21:21:12.608065 tunnel.go:332: serve: registering project_number="81597672192", connection_id="myk3s" connection_class="DEFAULT" agent_version="20220107-01-00" ...

2022/01/26 21:21:12.730161 tunnel.go:381: serve: recv error: rpc error: code = PermissionDenied desc = The caller does not have permission

2022/01/26 21:21:12.730268 dialer.go:277: dialer: dial: connection to gkeconnect.googleapis.com:443 failed after 216.846961ms: serve: receive request failed: rpc error: code = PermissionDenied desc = The caller does not have permission

2022/01/26 21:21:12.760536 dialer.go:207: dialer: connection done: serve: receive request failed: rpc error: code = PermissionDenied desc = The caller does not have permission

2022/01/26 21:21:12.760595 dialer.go:295: dialer: backoff: 47.586854673s

E0126 21:21:16.193136 1 reflector.go:131] third_party/golang/kubeclient/tools/cache/reflector.go:99: Failed to list *unstructured.Unstructured: the server could not find the requested resource

E0126 21:21:55.113358 1 reflector.go:131] third_party/golang/kubeclient/tools/cache/reflector.go:99: Failed to list *unstructured.Unstructured: the server could not find the requested resource

2022/01/26 21:22:00.348598 dialer.go:239: dialer: dial interval was 47.835224048s

2022/01/26 21:22:00.348686 dialer.go:183: dialer: waiting for next event, outstanding connections=0

2022/01/26 21:22:00.348746 dialer.go:264: dialer: dial: connecting to gkeconnect.googleapis.com:443...

2022/01/26 21:22:00.440461 dialer.go:275: dialer: dial: connected to gkeconnect.googleapis.com:443

2022/01/26 21:22:00.440699 tunnel.go:324: serve: opening egress stream...

2022/01/26 21:22:00.440532 dialer.go:225: Dial successful, current connections: 1

2022/01/26 21:22:00.440916 tunnel.go:332: serve: registering project_number="81597672192", connection_id="myk3s" connection_class="DEFAULT" agent_version="20220107-01-00" ...

2022/01/26 21:22:00.624441 tunnel.go:381: serve: recv error: rpc error: code = PermissionDenied desc = The caller does not have permission

2022/01/26 21:22:00.624523 dialer.go:277: dialer: dial: connection to gkeconnect.googleapis.com:443 failed after 275.753082ms: serve: receive request failed: rpc error: code = PermissionDenied desc = The caller does not have permission

2022/01/26 21:22:00.643350 dialer.go:207: dialer: connection done: serve: receive request failed: rpc error: code = PermissionDenied desc = The caller does not have permission

2022/01/26 21:22:00.643409 dialer.go:295: dialer: backoff: 42.394959714s

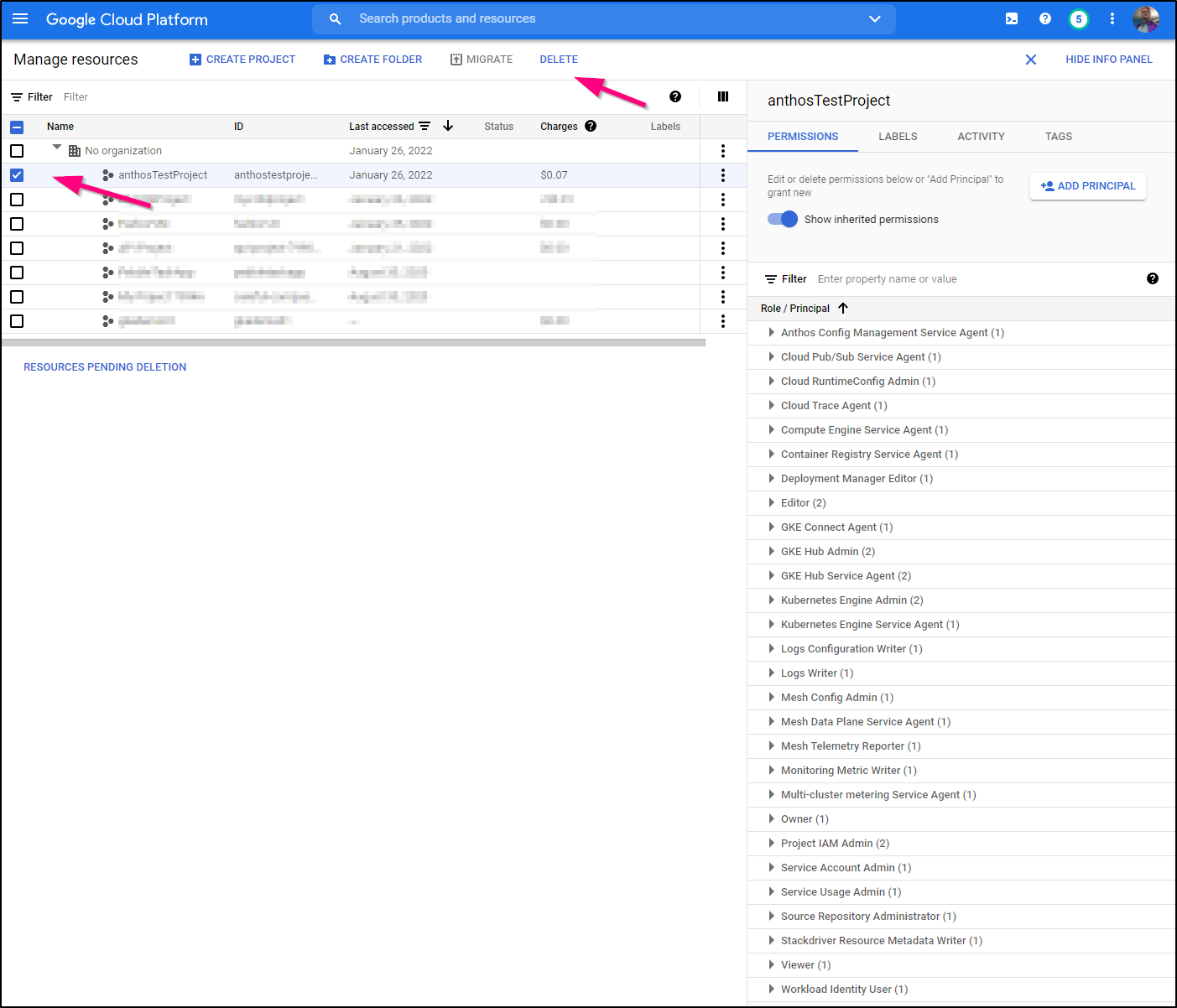

Let’s double check our IAM perms:

$ gcloud services enable --project=${FLEET_HOST_PROJECT_ID} container.googleapis.com gkeconnect.googleapis.com gkehub.googleapis.com cloudresourcemanager.googleapis.com iam.googleapis.com

Operation "operations/acat.p2-81597672192-74970c93-bd49-4e12-ac50-97f01d42e836" finished successfully.

$ gcloud projects add-iam-policy-binding ${FLEET_HOST_PROJECT_ID} --member user:isaac.johnson@gmail.com --role=roles/gkehub.admin --role=roles/iam.serviceAccountAdmin --role=roles/iam.serviceAccountKeyAdmin --role=roles/resourcemanager.projectIamAdmin

Updated IAM policy for project [anthostestproject-338921].

bindings:

- members:

- serviceAccount:service-81597672192@gcp-sa-anthosconfigmanagement.iam.gserviceaccount.com

role: roles/anthosconfigmanagement.serviceAgent

...snip....
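One caveat on the command above: as far as I can tell, `add-iam-policy-binding` takes a single `--role`, and repeating the flag most likely keeps only the last value, which might explain bindings that appear to be missing. A hedged sketch of binding one role per call follows. The `bind_roles` helper and the `DRY_RUN` switch are hypothetical, not gcloud features.

```shell
# Sketch: grant several roles to one member, one gcloud call per role.
bind_roles() {
  # $1 = project ID, $2 = member (e.g. user:someone@example.com), rest = roles
  project="$1"; member="$2"; shift 2
  for role in "$@"; do
    # With DRY_RUN set, print each command instead of running it
    ${DRY_RUN:+echo} gcloud projects add-iam-policy-binding "$project" \
      --member="$member" --role="$role"
  done
}

# Usage:
# bind_roles "${FLEET_HOST_PROJECT_ID}" user:someone@example.com \
#   roles/gkehub.admin roles/iam.serviceAccountAdmin \
#   roles/iam.serviceAccountKeyAdmin roles/resourcemanager.projectIamAdmin
```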

My policies showed missing users, so I corrected that:

$ gcloud projects get-iam-policy ${FLEET_HOST_PROJECT_ID} | grep gkehub

role: roles/gkehub.admin

- serviceAccount:service-81597672192@gcp-sa-gkehub.iam.gserviceaccount.com

role: roles/gkehub.serviceAgent

$ gcloud beta services identity create --service=gkehub.googleapis.com --project=${FLEET_HOST_PROJECT_ID}

Service identity created: service-81597672192@gcp-sa-gkehub.iam.gserviceaccount.com

$ gcloud projects add-iam-policy-binding "${FLEET_HOST_PROJECT_ID}" \

> --member "serviceAccount:service-${FLEET_HOST_PROJECT_NUMBER}@gcp-sa-gkehub.iam.gserviceaccount.com" \

> --role roles/gkehub.serviceAgent

$ gcloud projects add-iam-policy-binding "${GKE_PROJECT_ID}" \

--member "serviceAccount:service-${FLEET_HOST_PROJECT_NUMBER}@gcp-sa-gkehub.iam.gserviceaccount.com" \

--role roles/gkehub.serviceAgent
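The key detail in these bindings is that the GKE Hub service agent lives under `gcp-sa-gkehub`, not under the project's own `iam.gserviceaccount.com` domain used in the first attempt. A tiny helper (hypothetical name) makes the address harder to mistype:

```shell
# Sketch: build the IAM member string for the GKE Hub service agent.
gkehub_service_agent() {
  # $1 = numeric project number (not the project ID)
  printf 'serviceAccount:service-%s@gcp-sa-gkehub.iam.gserviceaccount.com\n' "$1"
}

gkehub_service_agent 81597672192
# prints: serviceAccount:service-81597672192@gcp-sa-gkehub.iam.gserviceaccount.com
```

The result can be passed straight to `--member` in the binding commands.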

$ gcloud projects add-iam-policy-binding ${FLEET_HOST_PROJECT_ID} \

> --member="serviceAccount:service-${FLEET_HOST_PROJECT_NUMBER}@${FLEET_HOST_PROJECT_ID}.iam.gserviceaccount.com" --role="roles/gkehub.admin"

Updated IAM policy for project [anthostestproject-338921].

bindings:

- members:

- serviceAccount:service-81597672192@gcp-sa-anthosconfigmanagement.iam.gserviceaccount.com

role: roles/anthosconfigmanagement.serviceAgent

..snip...

$ gcloud projects add-iam-policy-binding ${FLEET_HOST_PROJECT_ID} --member="serviceAccount:service-${FLEET_HOST_PROJECT_NUMBER}@${FLEET_HOST_PROJECT_ID}.iam.gserviceaccount.com" --role="roles/container.admin"

$ gcloud projects add-iam-policy-binding ${FLEET_HOST_PROJECT_ID} --member="serviceAccount:service-${FLEET_HOST_PROJECT_NUMBER}@${FLEET_HOST_PROJECT_ID}.iam.gserviceaccount.com" --role="roles/gkehub.connect"

That seemed to work:

Now we can see new errors, but at least the agent is running

$ kubectl logs gke-connect-agent-20220107-01-00-66d584578c-vwwdt -n gke-connect | tail -n10

2022/01/26 21:35:37.891957 public_key_authenticator.go:97: authenticated principal is cloud-client-api-gke@system.gserviceaccount.com

2022/01/26 21:35:37.892249 nonstreaming.go:72: GET "https://kubernetes.default.svc.cluster.local/api/v1/nodes?resourceVersion=0"

2022/01/26 21:35:37.894324 nonstreaming.go:126: Response status "403 Forbidden" for "https://kubernetes.default.svc.cluster.local/api/v1/pods?resourceVersion=0"

2022/01/26 21:35:37.895053 nonstreaming.go:126: Response status "403 Forbidden" for "https://kubernetes.default.svc.cluster.local/api/v1/nodes?resourceVersion=0"

2022/01/26 21:35:48.015436 public_key_authenticator.go:97: authenticated principal is cloud-client-api-gke@system.gserviceaccount.com

2022/01/26 21:35:48.015840 nonstreaming.go:72: GET "https://kubernetes.default.svc.cluster.local/api/v1/nodes?resourceVersion=0"

2022/01/26 21:35:48.016955 public_key_authenticator.go:97: authenticated principal is cloud-client-api-gke@system.gserviceaccount.com

2022/01/26 21:35:48.017275 nonstreaming.go:72: GET "https://kubernetes.default.svc.cluster.local/api/v1/pods?resourceVersion=0"

2022/01/26 21:35:48.019491 nonstreaming.go:126: Response status "403 Forbidden" for "https://kubernetes.default.svc.cluster.local/api/v1/pods?resourceVersion=0"

2022/01/26 21:35:48.019549 nonstreaming.go:126: Response status "403 Forbidden" for "https://kubernetes.default.svc.cluster.local/api/v1/nodes?resourceVersion=0"

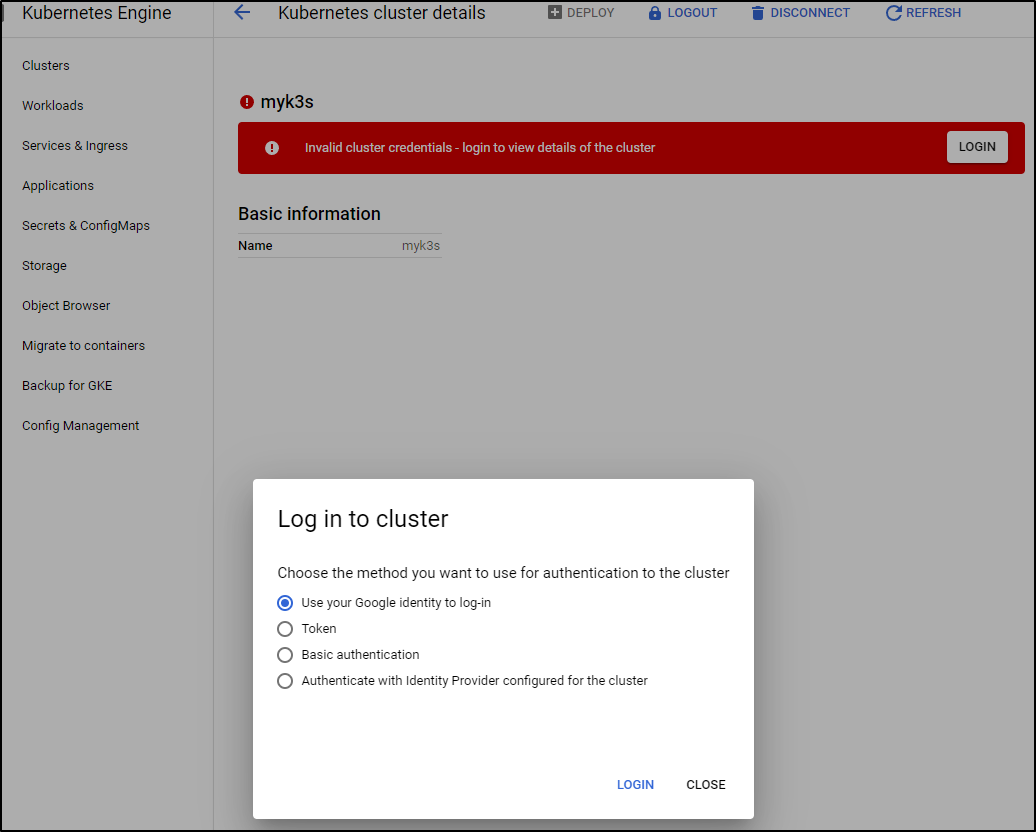

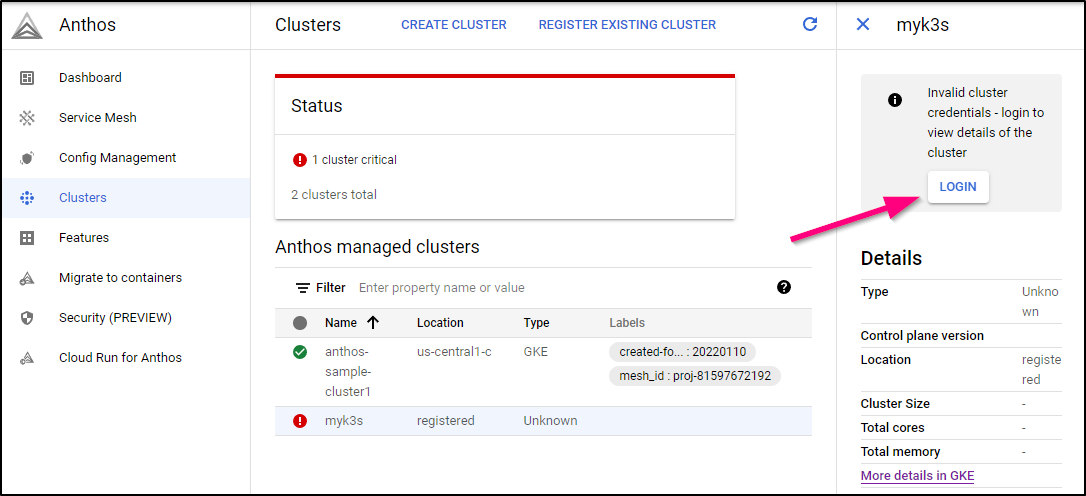

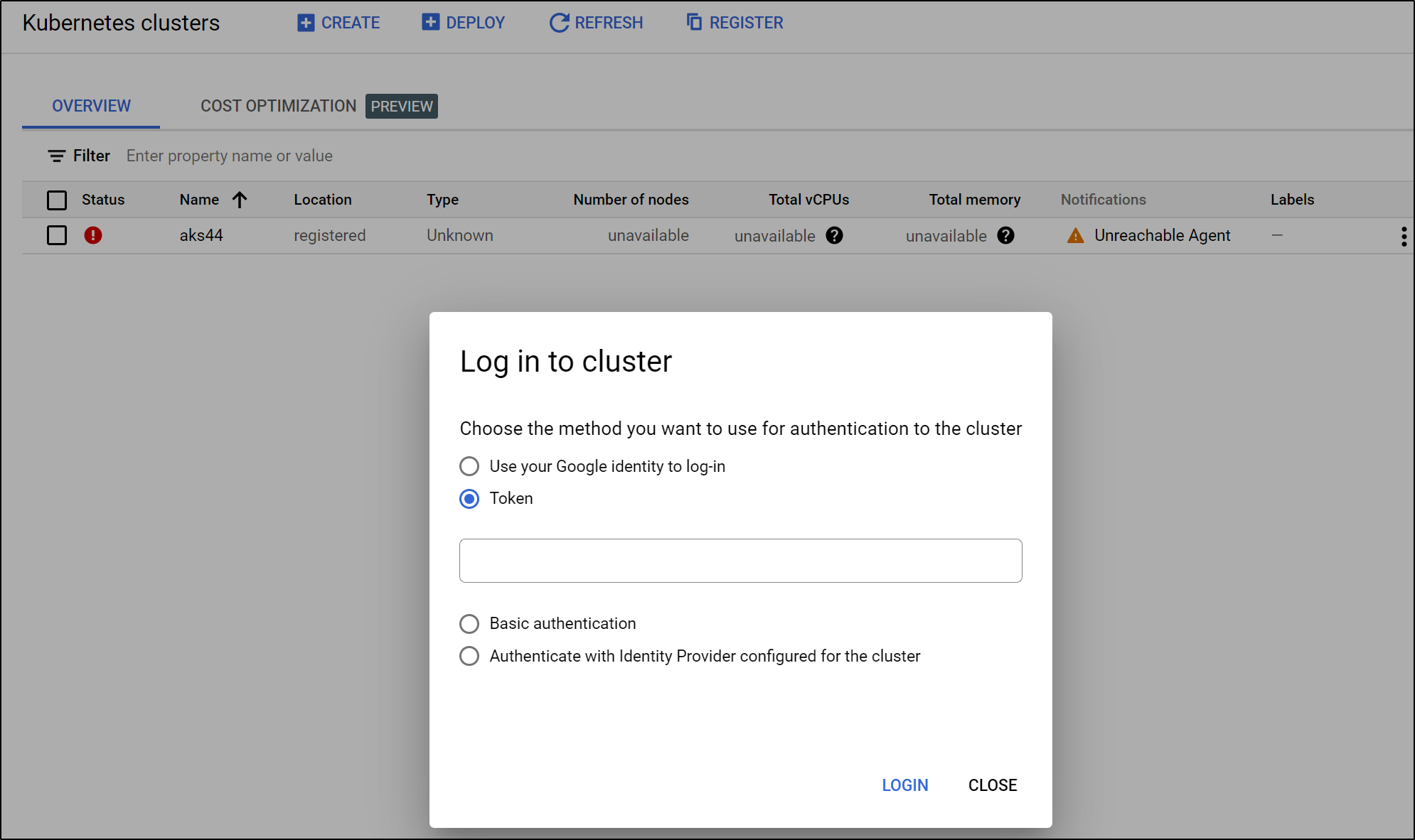

This required me to go to Anthos and log in with an admin user token.

You may see the login page as such:

I did a quick admin user create (same steps you would use for the Kubernetes dashboard):

$ cat adminaccount.yaml

---

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user

  namespace: kube-system

$ kubectl apply -f adminaccount.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

$ kubectl describe secret -n kube-system $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-v9tk7

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 28745a62-9149-4f59-be98-d182420f7087

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUasSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdSUasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasddfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdPv5g

ca.crt: 570 bytes
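If you only want the token itself, a sketch like the following pulls it out without the grep and awk step. It assumes a cluster old enough to auto-create a token secret for the service account (as this one does); the `sa_token` name is hypothetical.

```shell
# Sketch: print the bearer token of a service account's auto-created secret.
sa_token() {
  # $1 = namespace, $2 = service account name
  secret=$(kubectl -n "$1" get serviceaccount "$2" -o jsonpath='{.secrets[0].name}')
  # Secret data is base64-encoded, so decode it before use
  kubectl -n "$1" get secret "$secret" -o jsonpath='{.data.token}' | base64 --decode
}

# Usage: sa_token kube-system admin-user
```

On Kubernetes 1.24 and later, where these secrets are no longer created automatically, `kubectl -n kube-system create token admin-user` is the closer equivalent.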

Then use that in the clusters page (e.g. https://console.cloud.google.com/anthos/clusters?project=anthostestproject-338921)

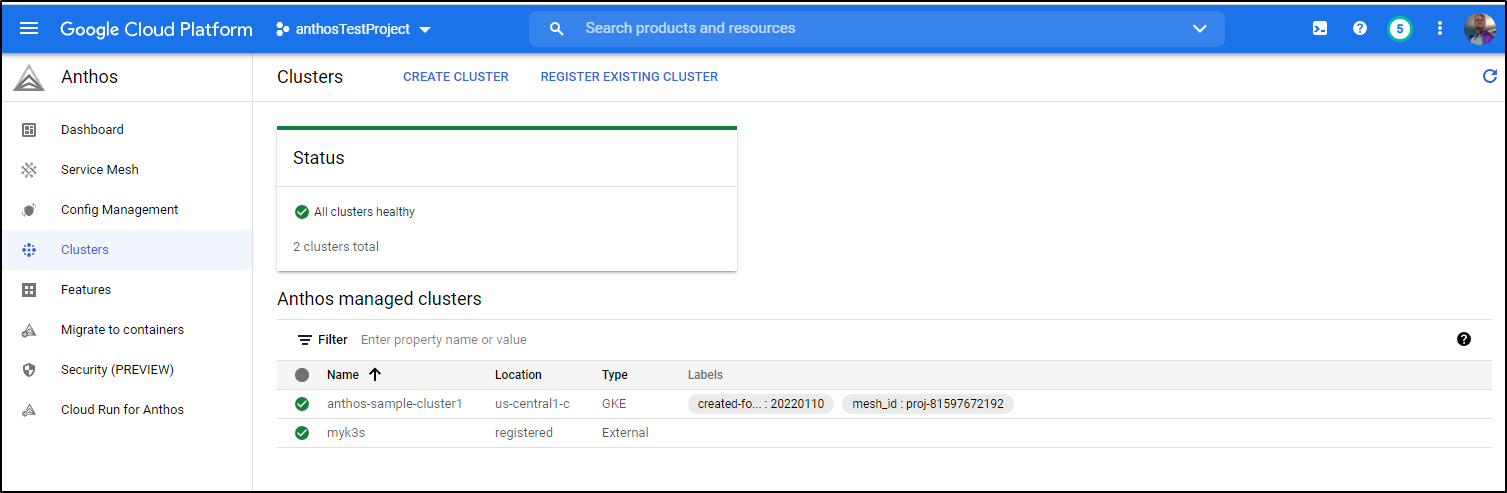

Once logged in with the token, you can see the cluster is green (instead of red / critical):

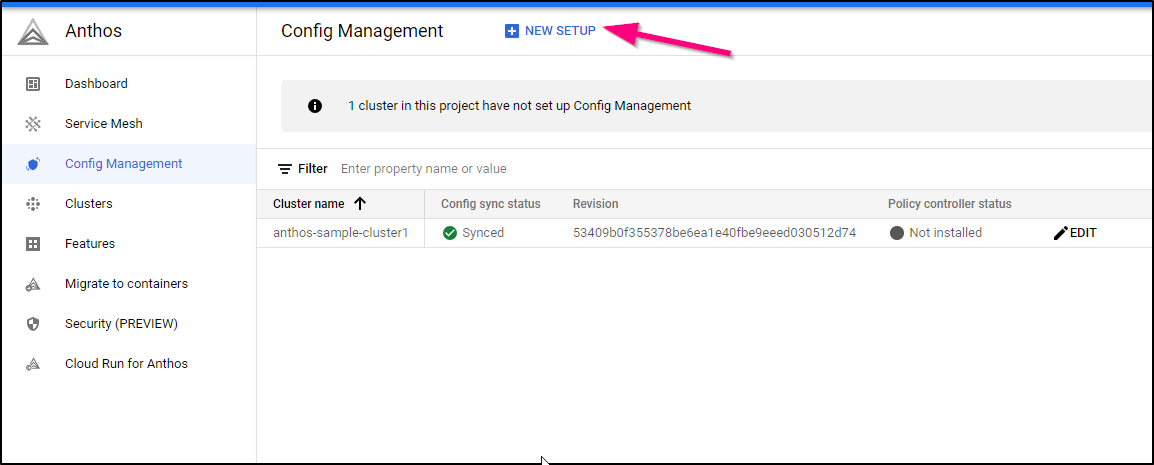

Config Management

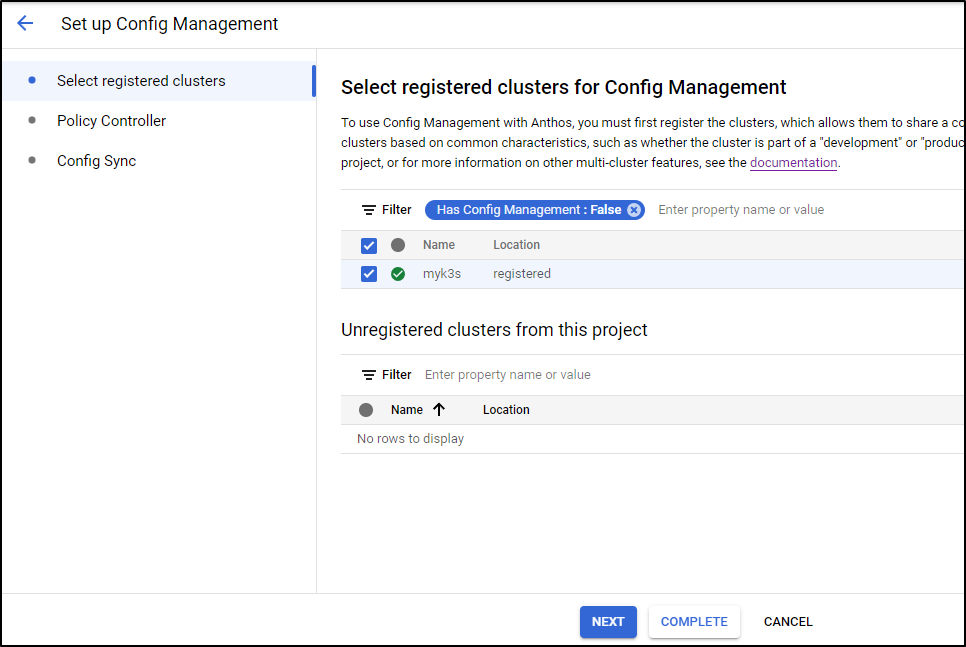

Next, in order to manage things, we need to set up Config Management. We can click “New Setup” under the Config Management area of Anthos.

We check our cluster and click Next.

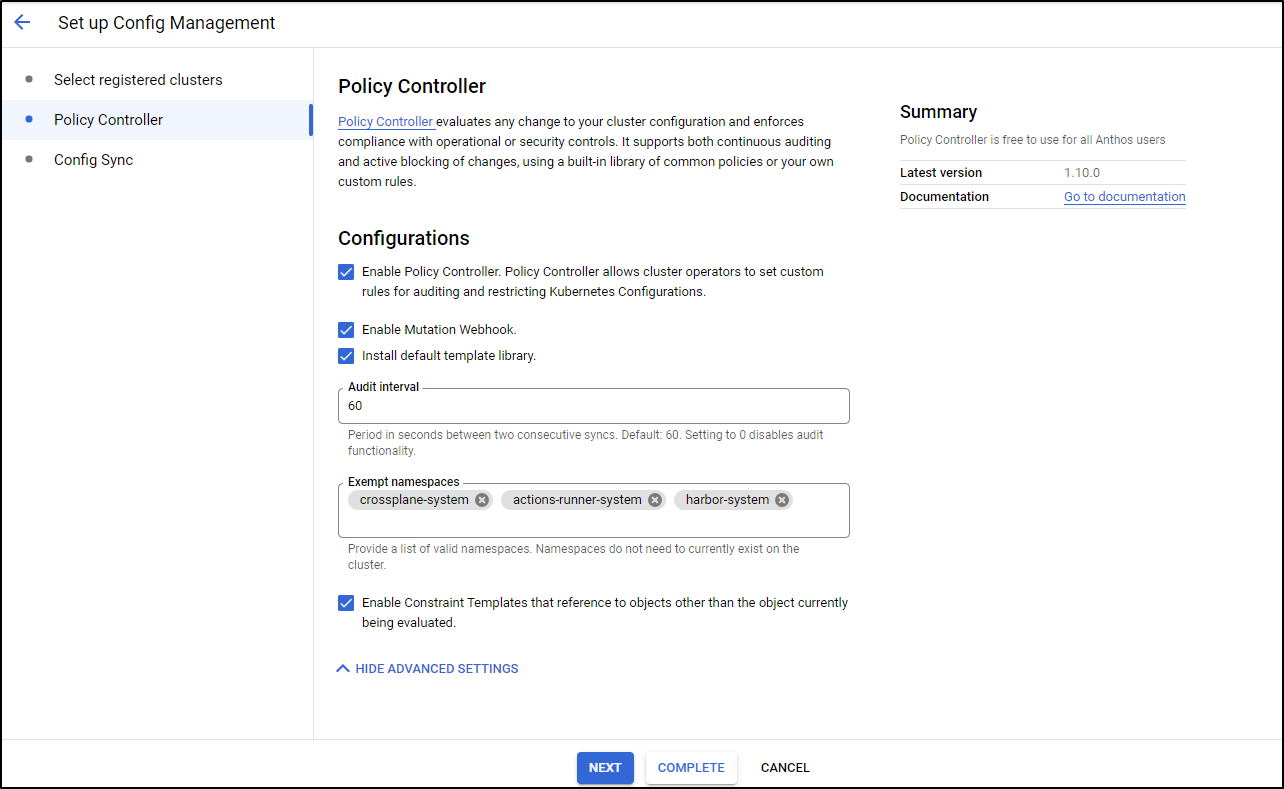

Next, we can opt out of managing namespaces we do not wish Anthos to check.

Then press “complete” to finish and sync.

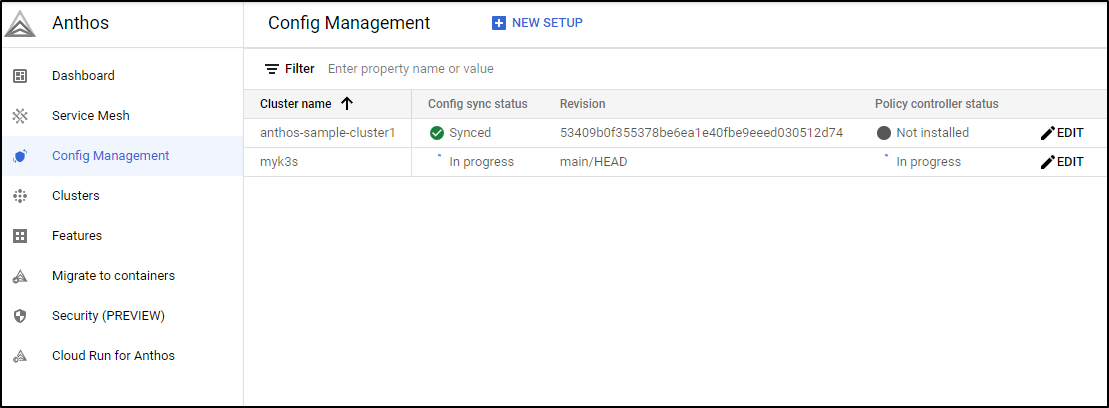

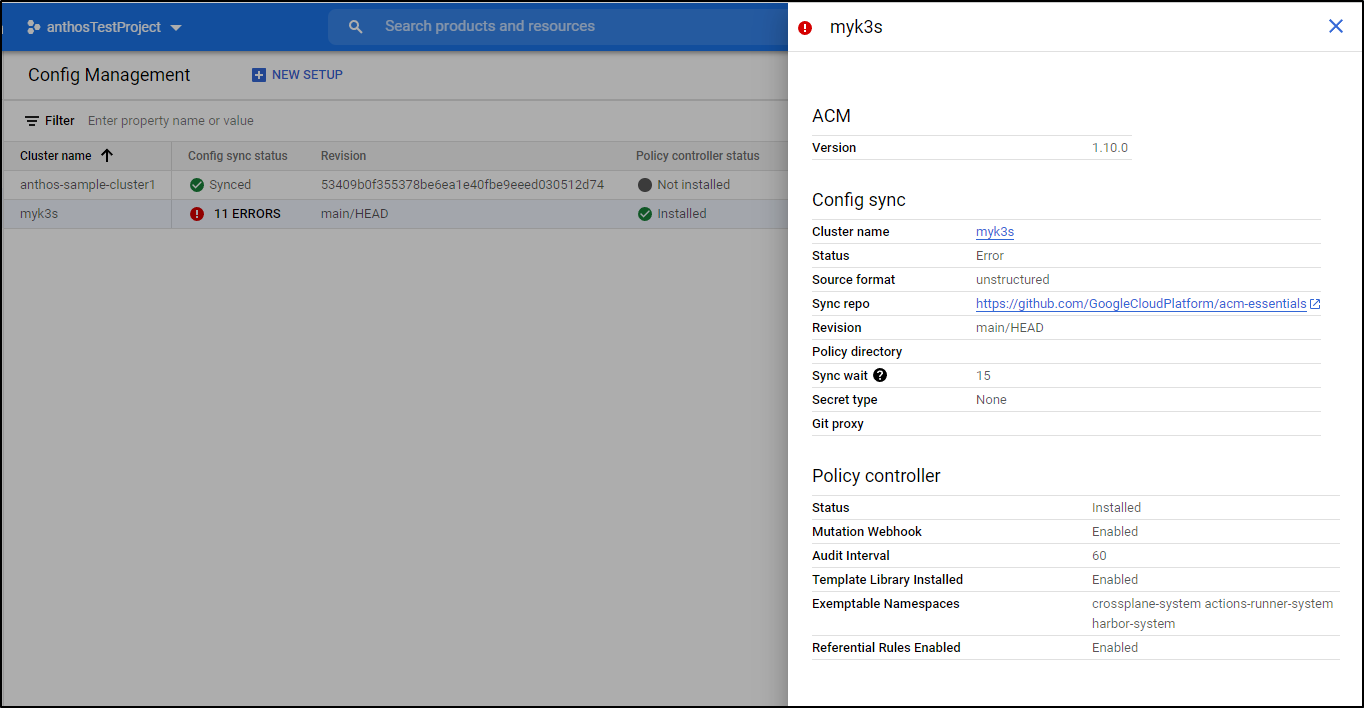

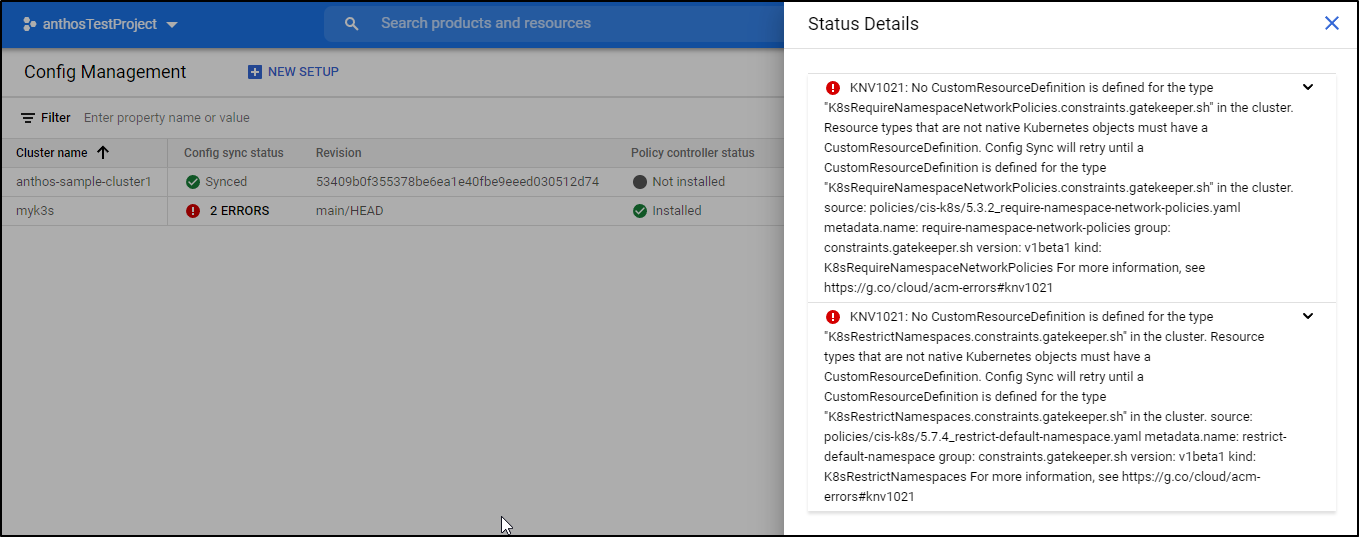

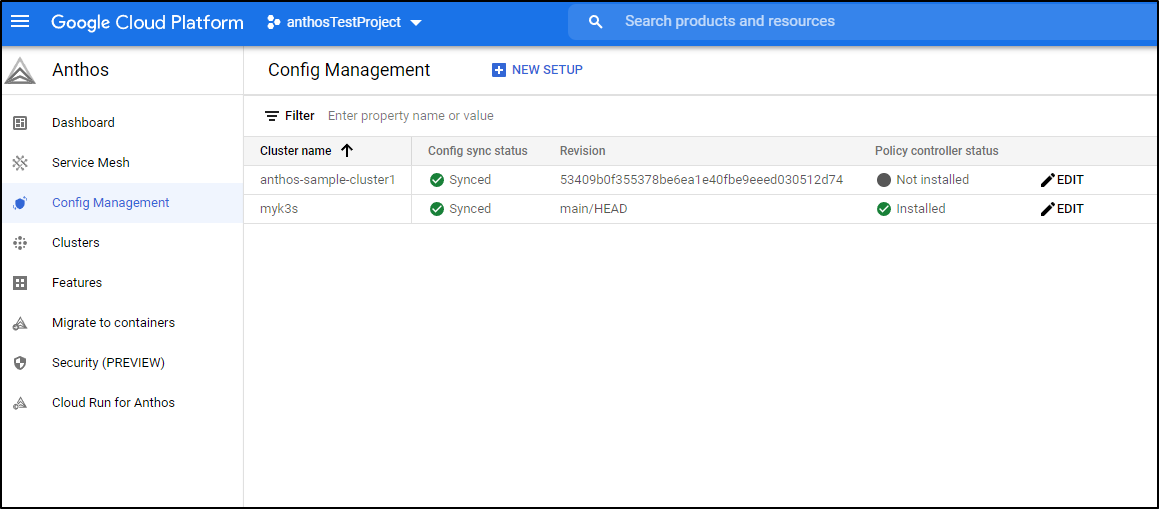

When done, we can see status and any errors

By default, this will compare to this ACM repo: https://github.com/GoogleCloudPlatform/acm-essentials . You can see the policies here.

Some errors will get fixed, but others may require intervention

However, once Gatekeeper was deployed, the new install started to break things:

cert-manager cert-manager-cainjector-6d59c8d4f7-5lm5x 0/1 CrashLoopBackOff 54 90d

default dapr-operator-8457cdc644-ldbns 0/1 CrashLoopBackOff 30 54d

kube-system local-path-provisioner-5ff76fc89d-92dfg 0/1 Error 32 90d

resource-group-system resource-group-controller-manager-55c5f48d7c-2ksdh 3/3 Running 4 7m53s

actions-runner-system actions-runner-controller-5588c9c84d-45g52 1/2 CrashLoopBackOff 33 57d

I liked the green check, but I did not like things falling down… I will say eventually everything came back okay, but it was touch-and-go for a while.

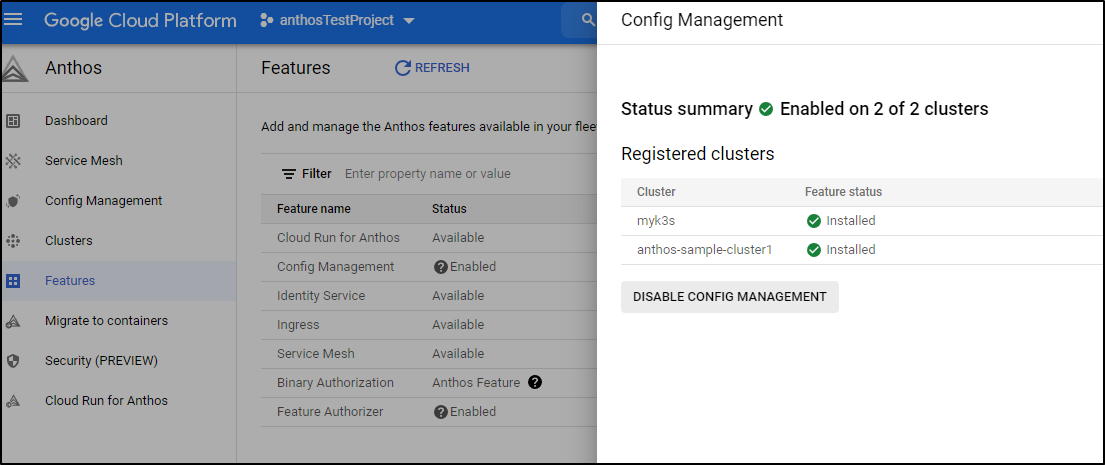

The image above shows one thing with which I take issue. Let’s say I want to use a different ACM repo, or I decide I want to get rid of Gatekeeper. There is no “off” or “disable”.

Instead you have to follow these steps: https://cloud.google.com/anthos-config-management/docs/how-to/uninstalling-anthos-config-management

In short, you can either disable it for ALL clusters from the Anthos features page, or remove the Config Management operator from an individual cluster:

$ kubectl get configmanagement

I0126 16:32:06.844432 5245 request.go:665] Waited for 1.131105687s due to client-side throttling, not priority and fairness, request: GET:https://192.168.1.77:6443/apis/constraints.gatekeeper.sh/v1alpha1?timeout=32s

NAME AGE

config-management 20m

We’ll pack all the steps into one command to remove this thing from my cluster:

$ kubectl delete configmanagement --all && kubectl delete ns config-management-system && kubectl delete ns config-management-monitoring && kubectl delete crd configmanagements.configmanagement.gke.io && kubectl -n kube-system delete all -l k8s-app=config-management-operator

configmanagement.configmanagement.gke.io "config-management" deleted

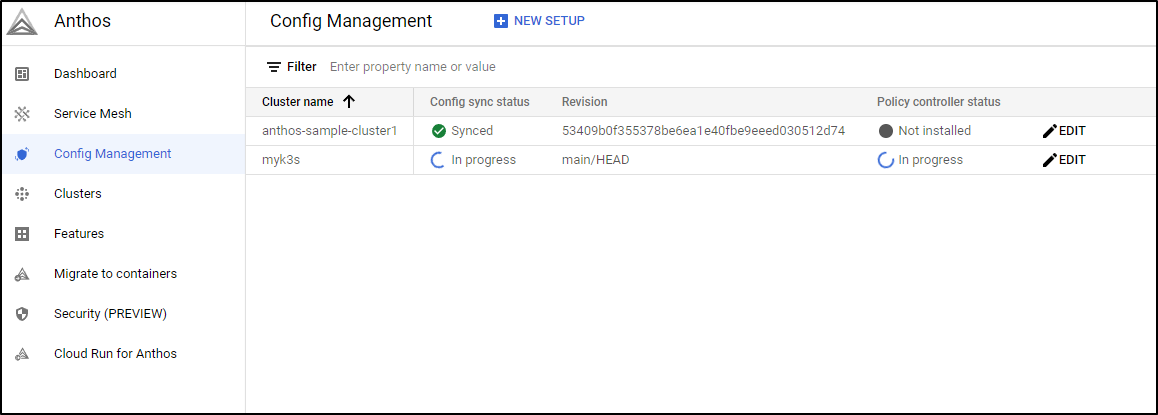

You’ll see the sync and status icons switch to In Progress as you do that

Cleanup

If you added on-prem/other cloud clusters, you’ll want to remove them from Config Management (if you haven’t already). See the prior section on that.

Next, I’ll remove any users I created for Anthos use:

$ cat adminaccount.yaml

---

apiVersion: v1

kind: ServiceAccount

metadata:

  name: admin-user

  namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: admin-user

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: cluster-admin

subjects:

- kind: ServiceAccount

  name: admin-user

  namespace: kube-system

$ kubectl delete -f adminaccount.yaml

serviceaccount "admin-user" deleted

clusterrolebinding.rbac.authorization.k8s.io "admin-user" deleted

Next, the simplest approach to removing Anthos is to just delete the project that contained it: https://console.cloud.google.com/cloud-resource-manager

Cost

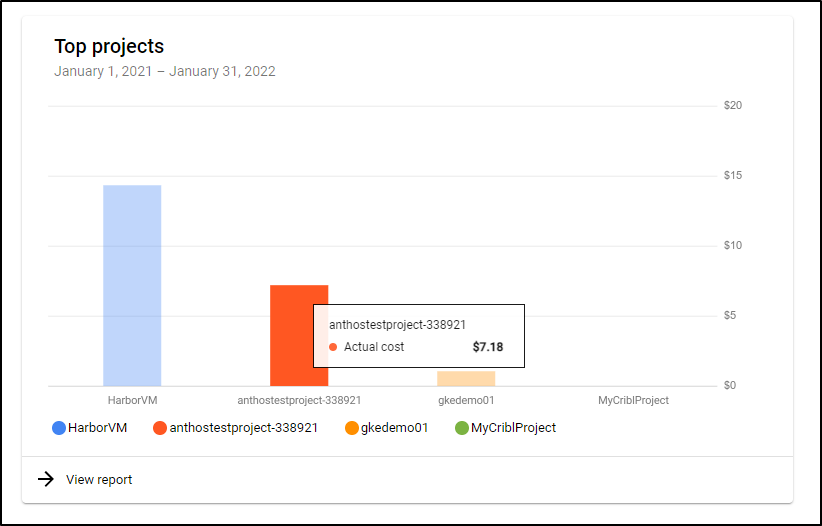

I had the project up for a full day experimenting and the costs at first appeared to be about US$0.07.

The next day I checked and the bill showed $7.18 for the 12 or so hours I was experimenting.

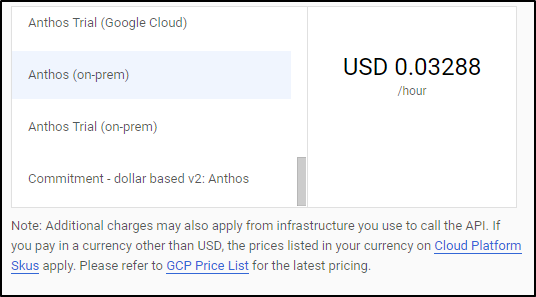

We can see from Anthos pricing that after our trial, we’ll pay US$0.03288/hour ($24.46/mo) for an on-prem cluster. Other clouds will be US$0.01096/hour ($8.15/mo).

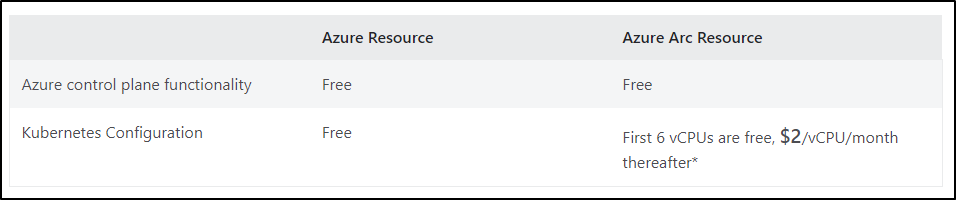

If we wish to compare to an equivalent service, Azure Arc for K8s is free for the control plane and $2/vCPU a month (after the first 6) for configuration management (for my K3s, that would make it $44/month).
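The monthly figures quoted here can be reproduced with a little arithmetic. The sketch below assumes a 744-hour (31-day) month, which is what makes the hourly Anthos rates line up with the monthly ones. The function names are hypothetical, and the 28 vCPU figure is only inferred from the $44 total (22 billable vCPUs plus the 6 free ones), not stated anywhere. To my knowledge the Anthos rates are quoted per vCPU, so treat the result as a per-unit price.

```shell
# Sketch: reproduce the monthly prices from the hourly rates.
monthly_cost() {
  # $1 = hourly rate in USD, $2 = hours in the month (default 744 = 31 days)
  awk -v rate="$1" -v hours="${2:-744}" 'BEGIN { printf "%.2f\n", rate * hours }'
}

arc_monthly_cost() {
  # $1 = total vCPUs; the first 6 are free, each further vCPU is $2 a month
  awk -v n="$1" 'BEGIN { b = n - 6; if (b < 0) b = 0; printf "%d\n", 2 * b }'
}

monthly_cost 0.03288   # on-prem rate      -> 24.46
monthly_cost 0.01096   # other-cloud rate  -> 8.15
arc_monthly_cost 28    # inferred vCPUs    -> 44
```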

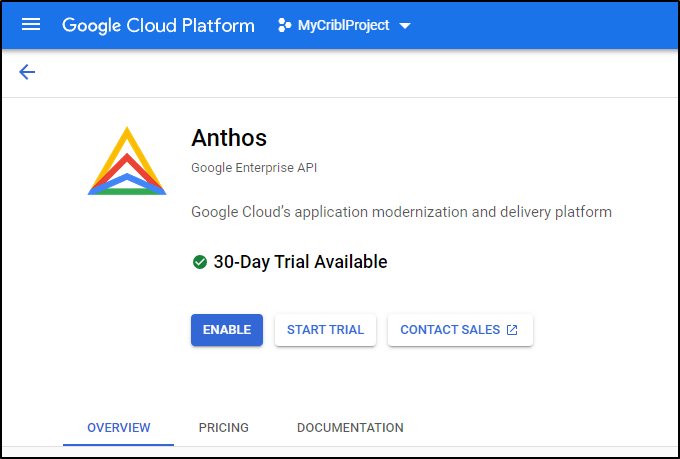

Later in this writeup (AKS), you will see me enable “Start Trial”, under which I ran Anthos for several hours. In that case, the cost was $0.10. So indeed, opting in to the “Trial” of Anthos has an effect on billing.

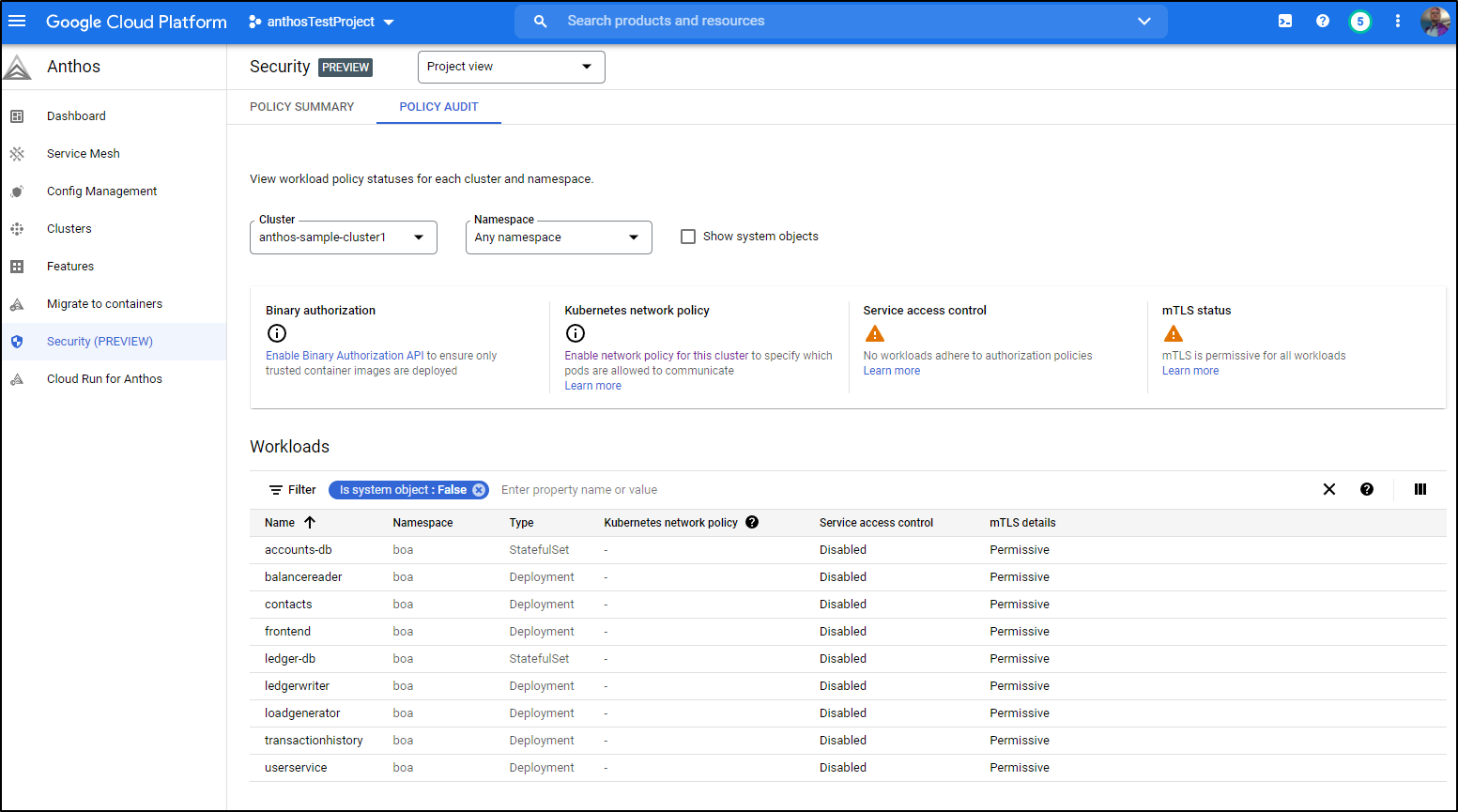

Security

There is also a Security page in preview that lists security issues with your clusters, though presently it just shows status:

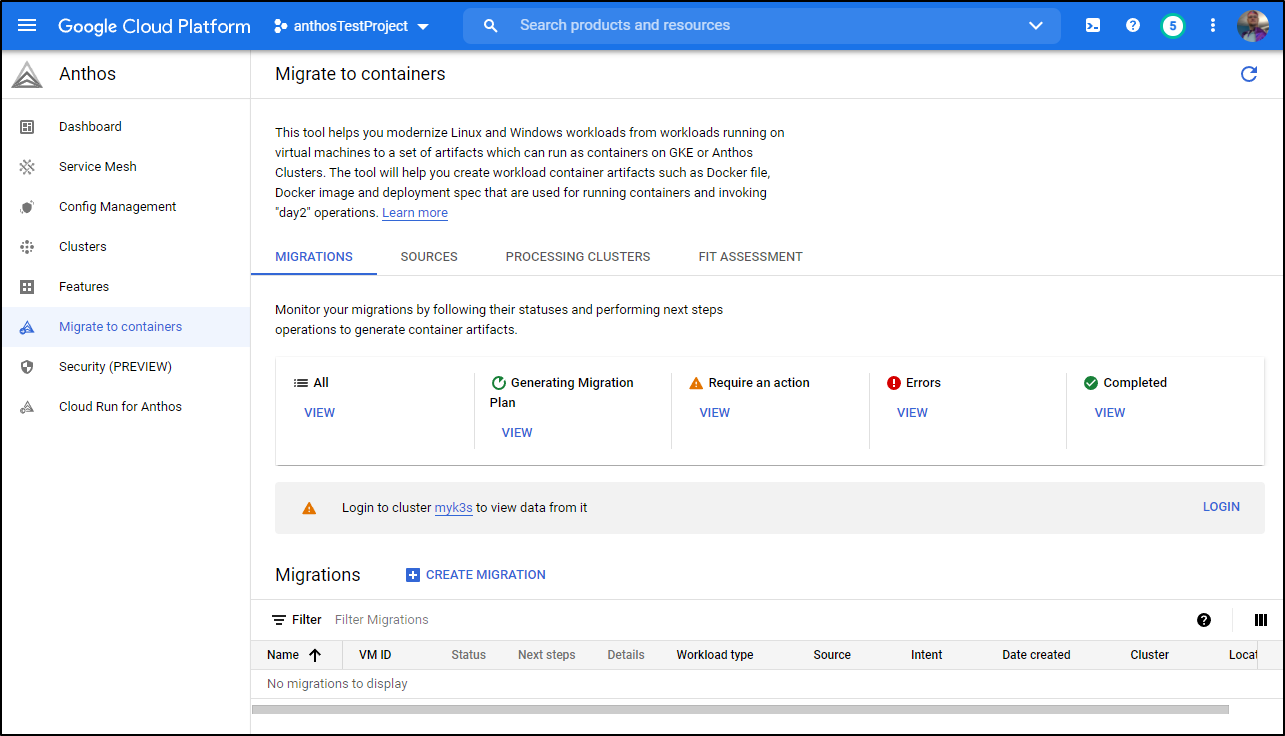

Migrate to Containers

Let’s say you want to use Anthos to migrate Linux or Windows workloads to GKE containers. We can use the “Migrate to Containers” system to do this:

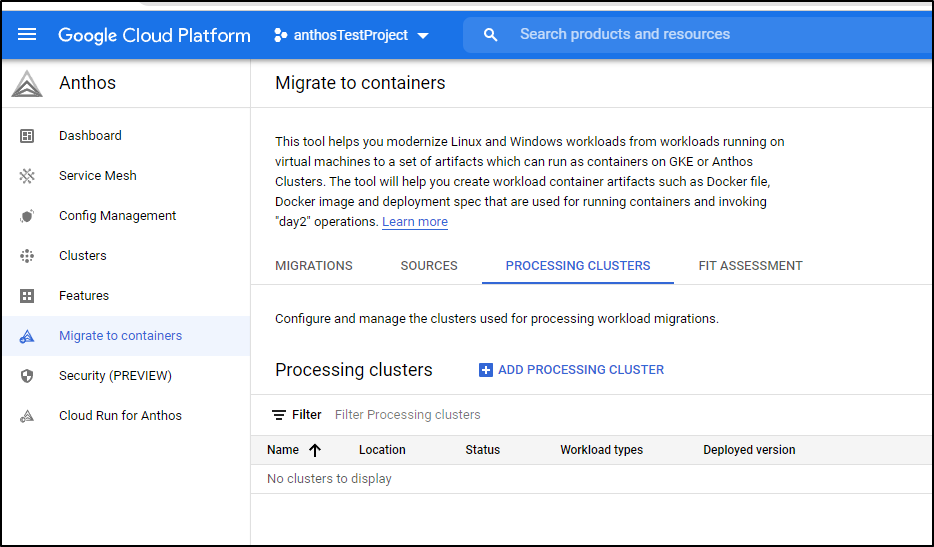

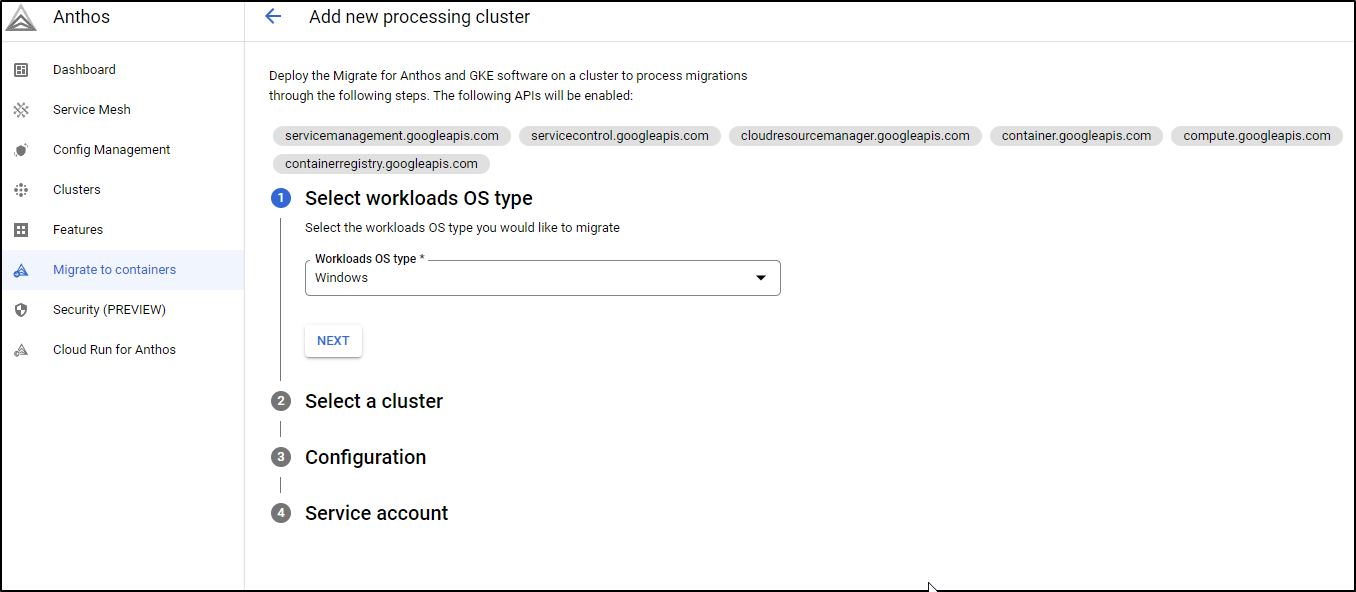

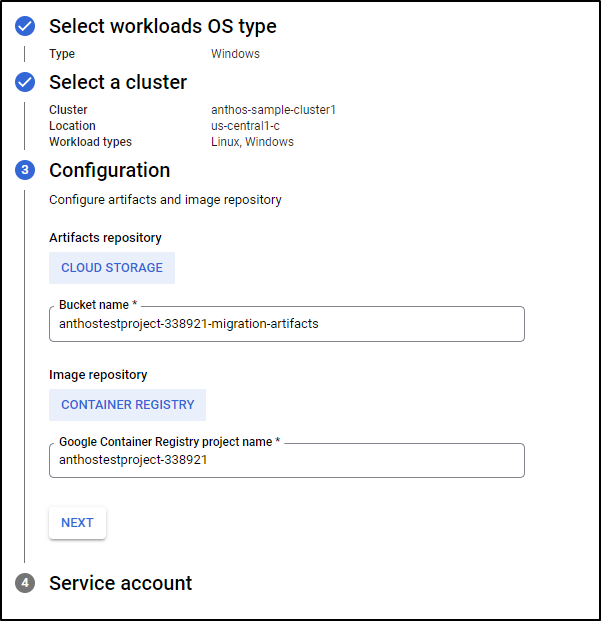

To begin, we need a cluster to act as the “processing” cluster. If we intend to handle Windows workloads, it will need to have at least one Windows node pool.

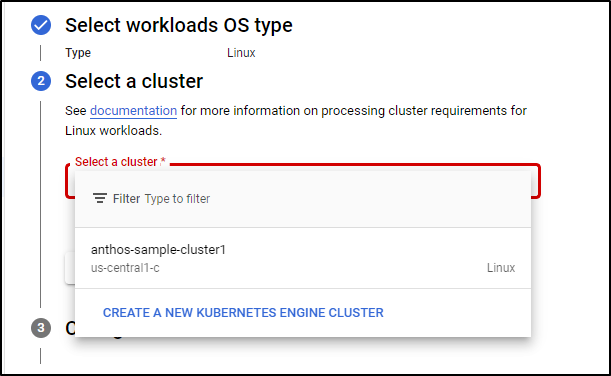

We can create one from the Processing Clusters menu:

Choose the OS type:

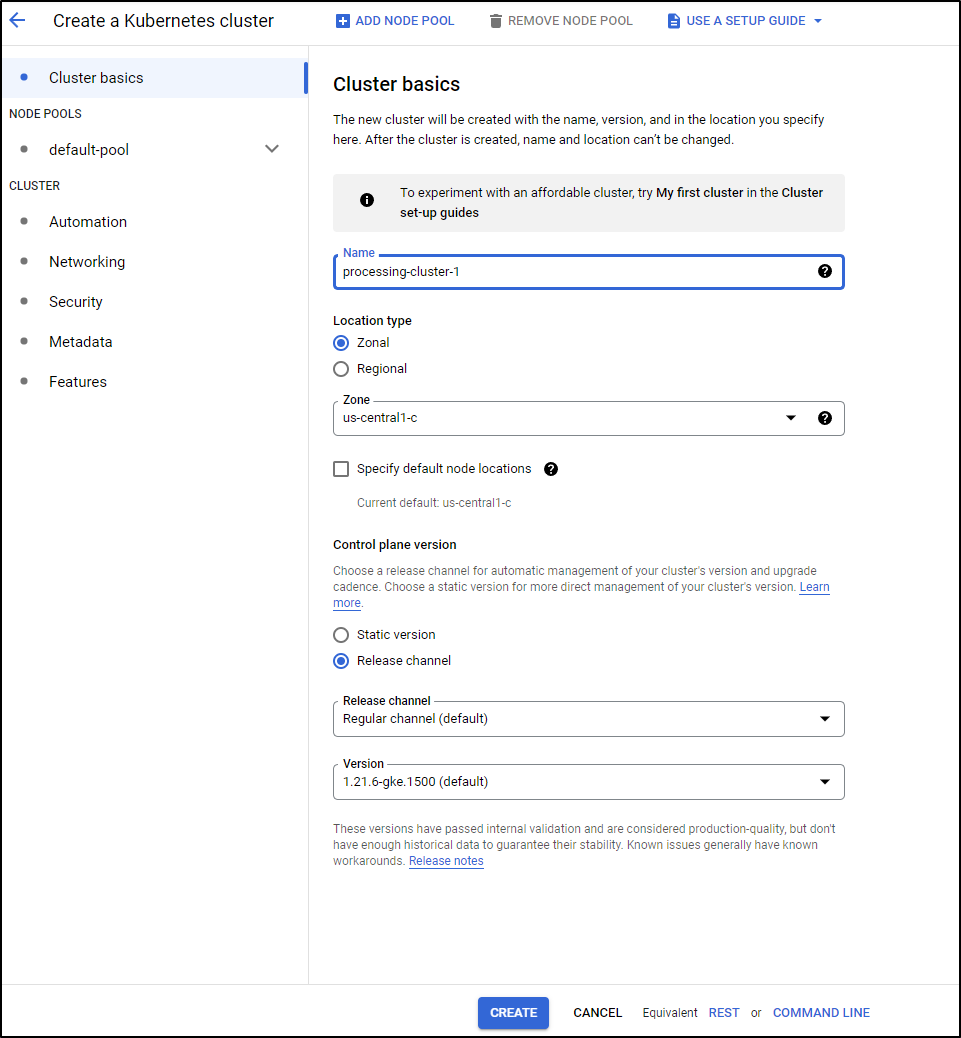

In my case, it popped me out to a K8s create page to create the control plane, where I needed to also add a Windows node pool.

There I created a processing-cluster-1

If we are doing Linux, we could use the cluster we already made in Anthos

I was pretty much blocked here. It seems I needed to add a “cloud-access” scope to the node pool, but that option isn’t in the UI where the docs say it should be.

You can, however, create the node pool with the needed scopes from the command line:

$ gcloud container node-pools create pool-2 --cluster anthos-sample-cluster1 --zone us-central1-c --num-nodes 1 --scopes https://www.googleapis.com/auth/devstorage.read_write,https://www.googleapis.com/auth/cloud-platform --image-type WINDOWS_LTSC

Creating node pool pool-2...done.

Created [https://container.googleapis.com/v1/projects/anthostestproject-338921/zones/us-central1-c/clusters/anthos-sample-cluster1/nodePools/pool-2].

NAME MACHINE_TYPE DISK_SIZE_GB NODE_VERSION

pool-2 e2-medium 100 1.21.6-gke.1500

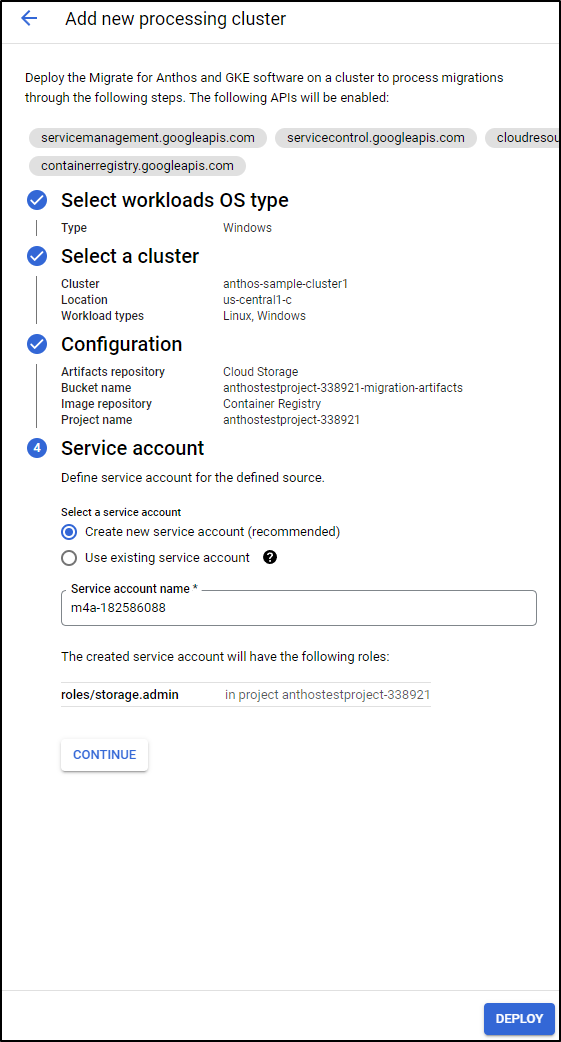

I could then use Windows pools and select the cluster. Next, set your Container Registry (CR) and Cloud Storage locations.

Lastly, pick a service account and click Deploy.

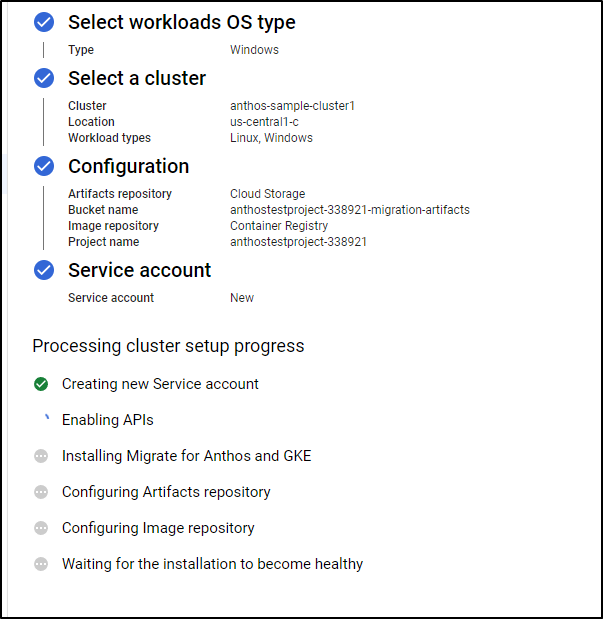

It will start the process of creating the processing cluster

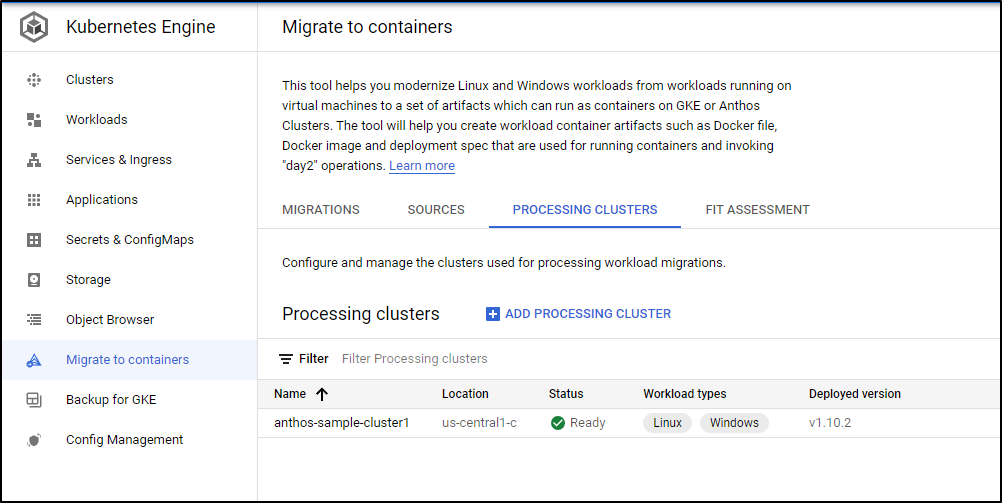

When done, we will see it in the processing clusters area

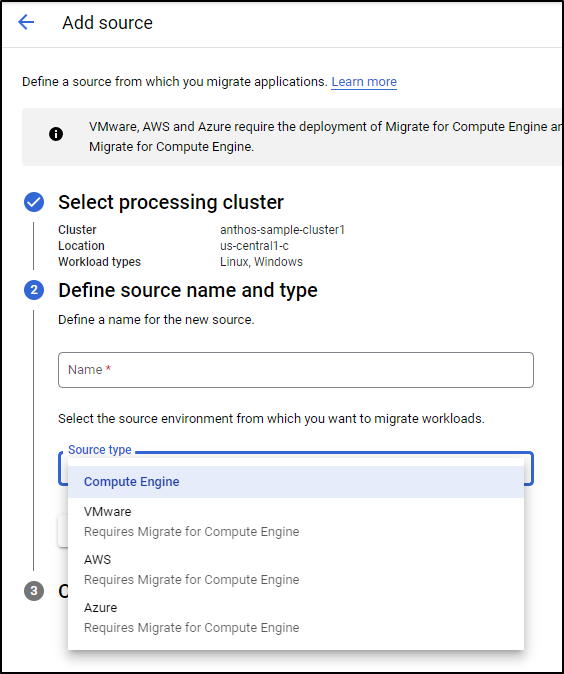

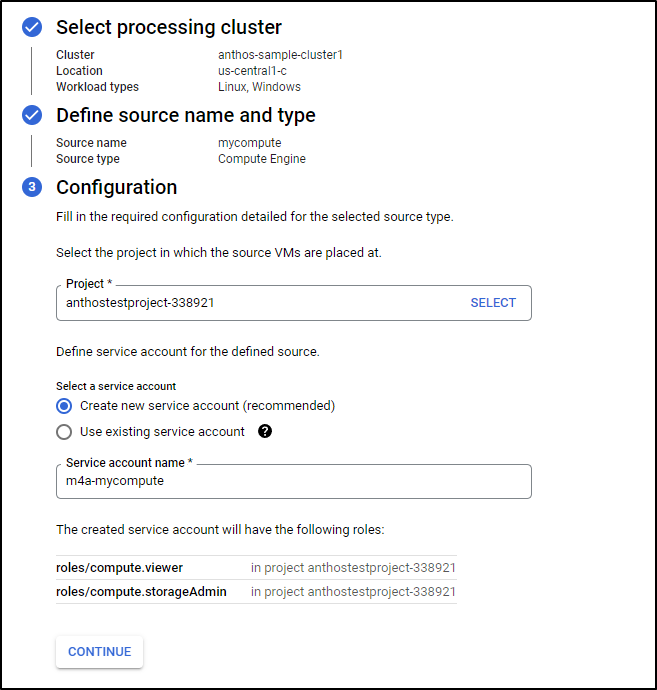

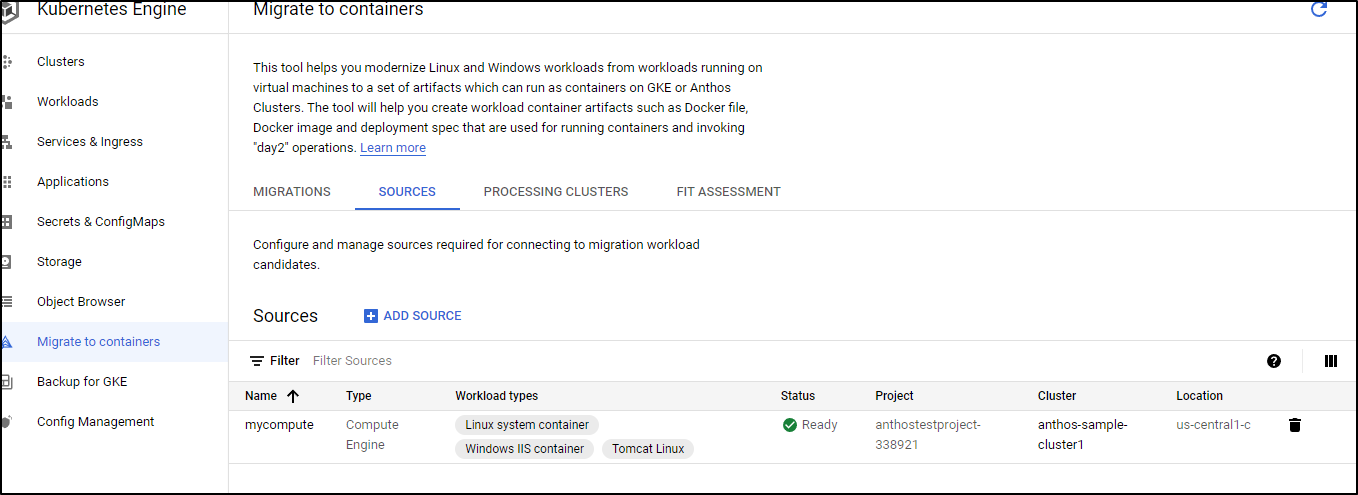

Now we can choose a source: Compute Engine, VMware, Azure or AWS. The latter 3 require a compute engine to be stood up in their Infra.

If we choose Compute Engine, we can create a VM in our project for the purpose of Migration

Click continue

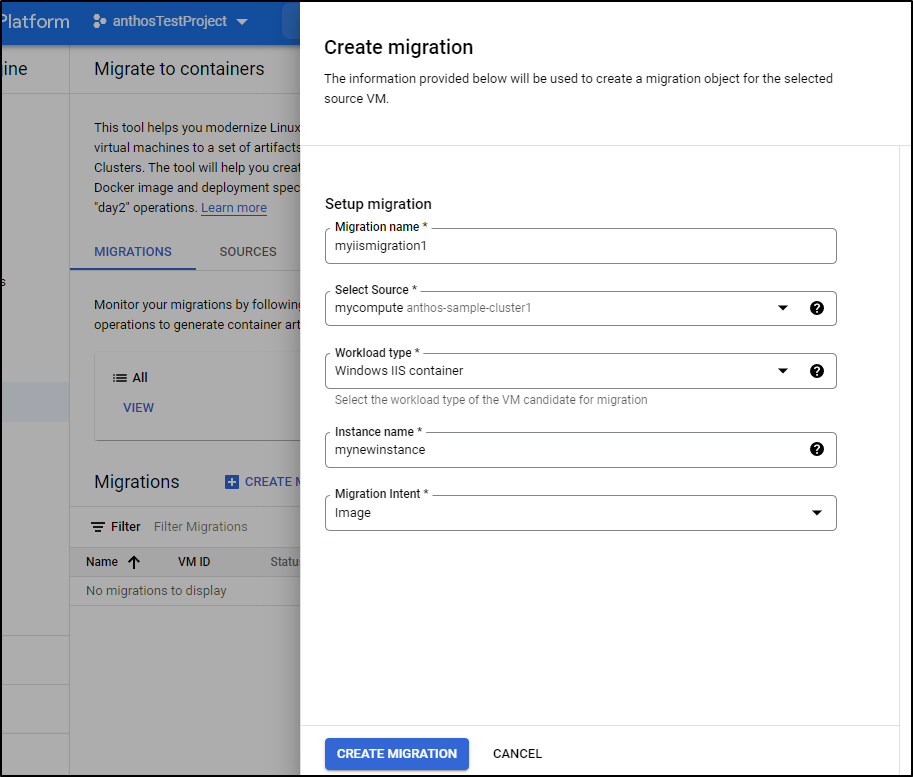

Lastly, we can create a migration - in this case it would take a Windows IIS Container and turn it into an Image.

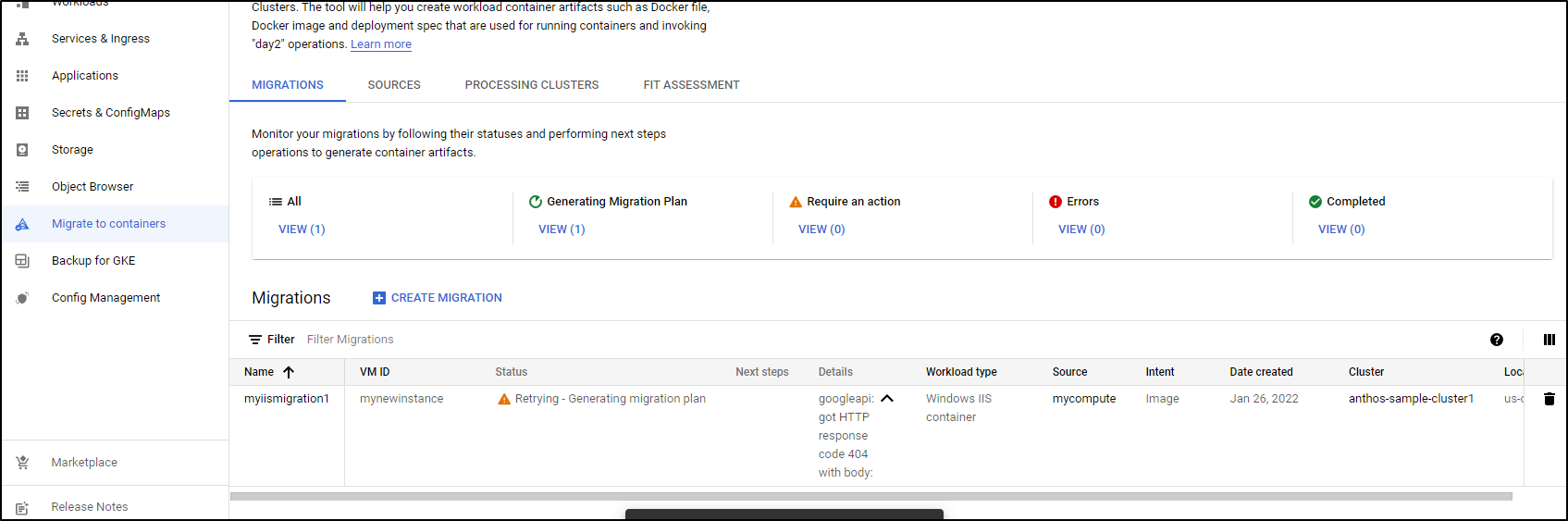

Without a proper IIS Container, we of course will see errors, such as this 404 error

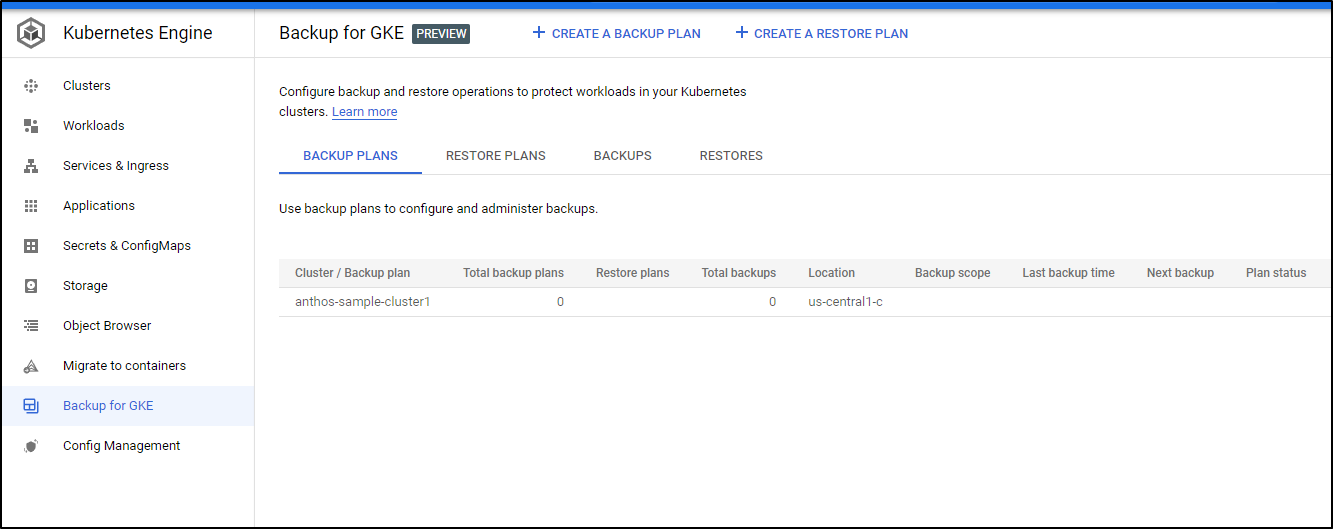

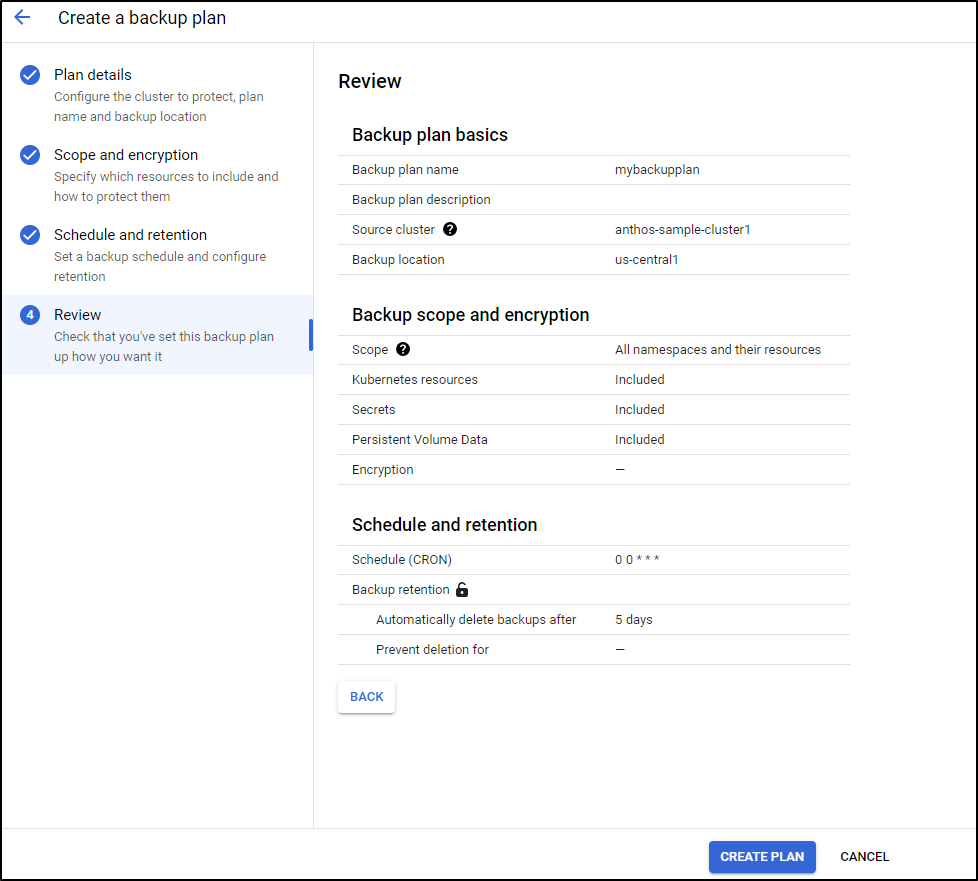

# Kubernetes Backups

You can enable Backups of GKE via the Backup plan.

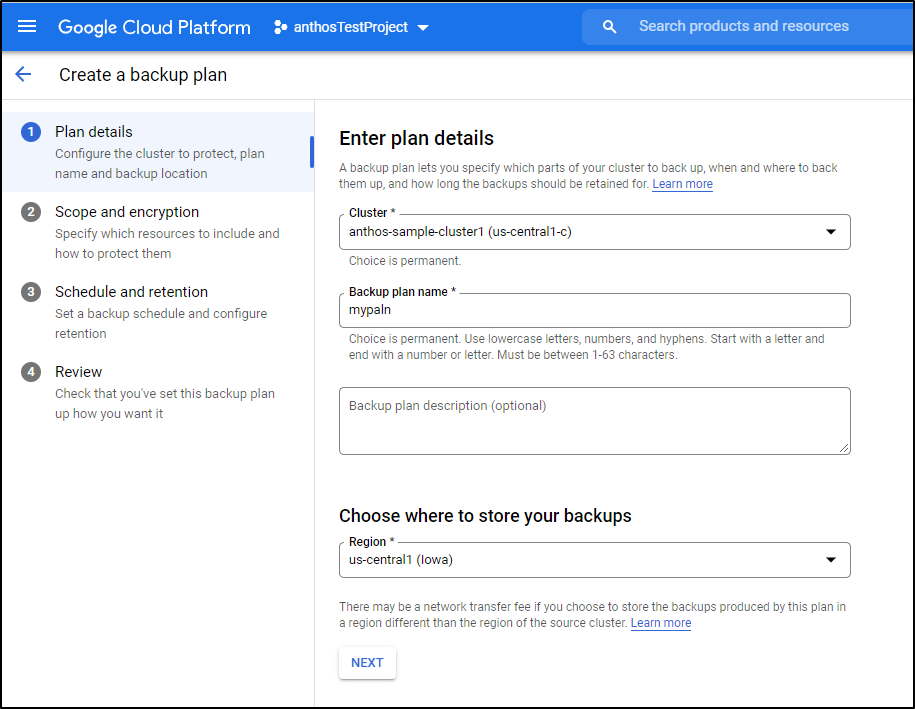

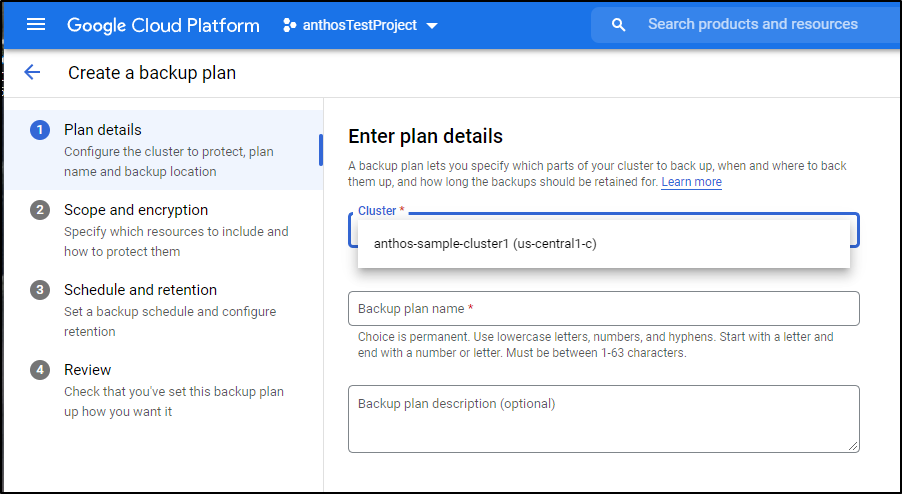

First enable the Backup for GKE API

You can then create a Backup plan either under Anthos or GKE menus

Create the plan

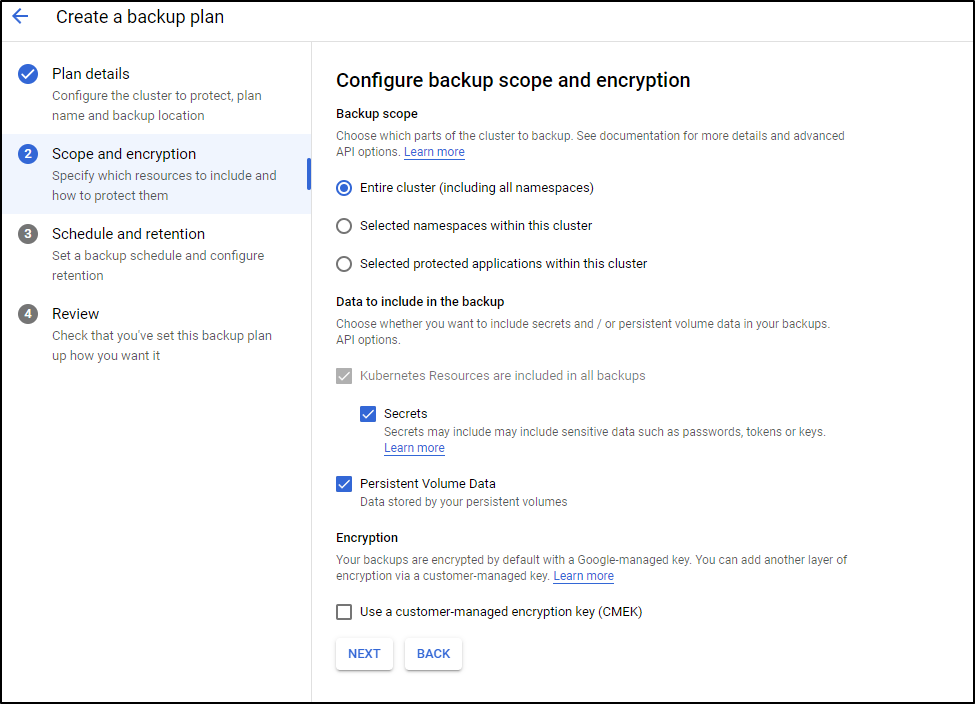

Set what it is you wish to back up:

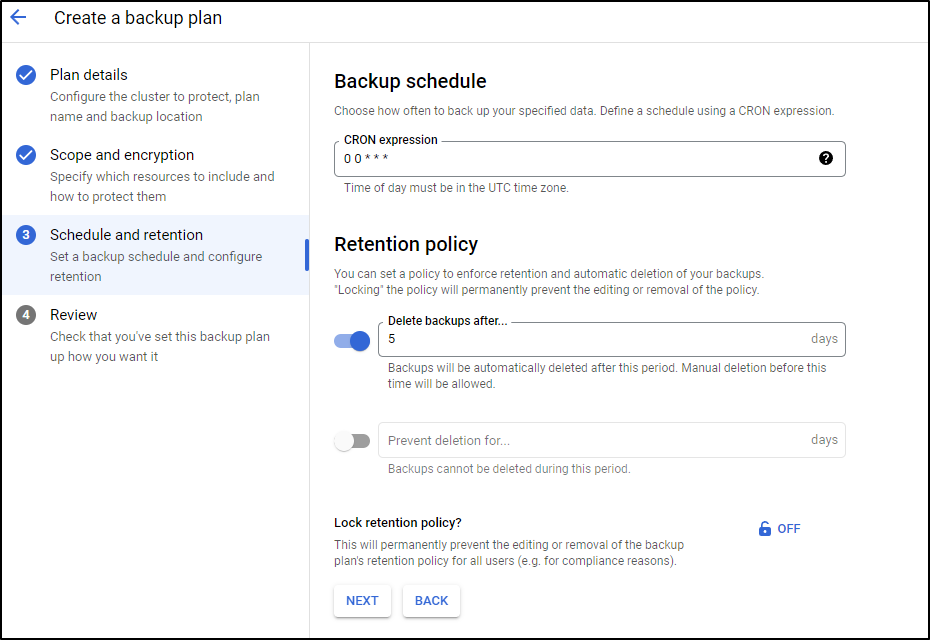

Next, set the schedule and retention.

Lastly, click Create Plan to create the plan

Sadly, while I can see my Anthos cluster in GKE, it was not offered as an option for backups in GKE Backups.

AKS and Anthos

I wanted to try this again but this time with AKS.

First, I created a fresh project

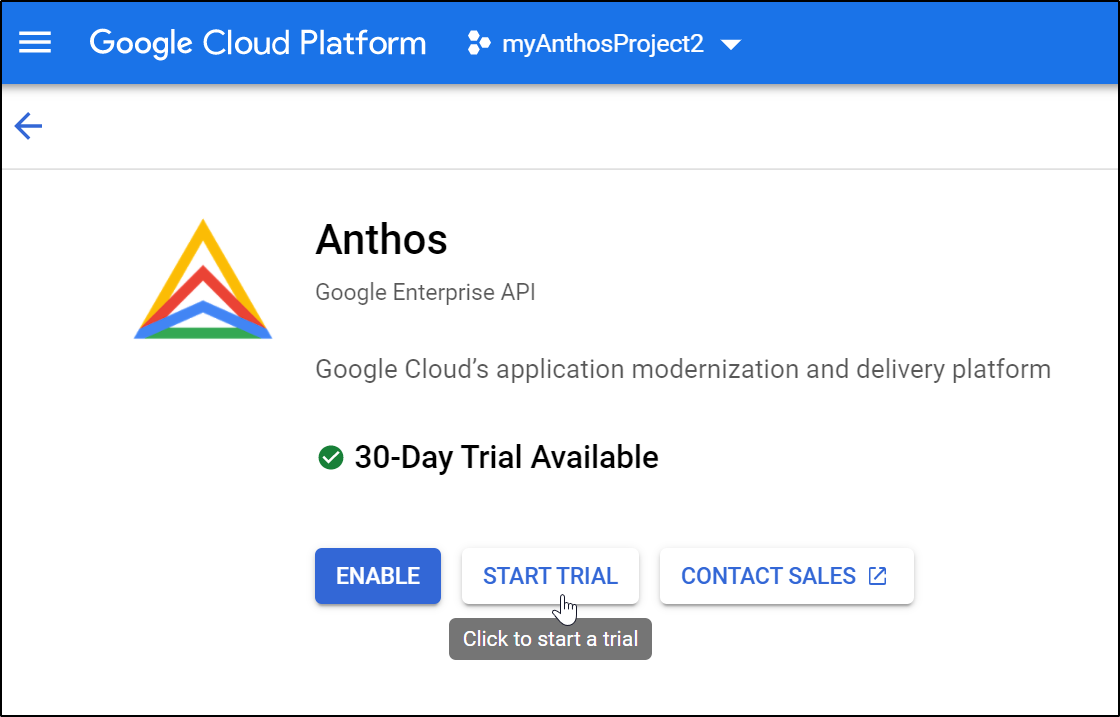

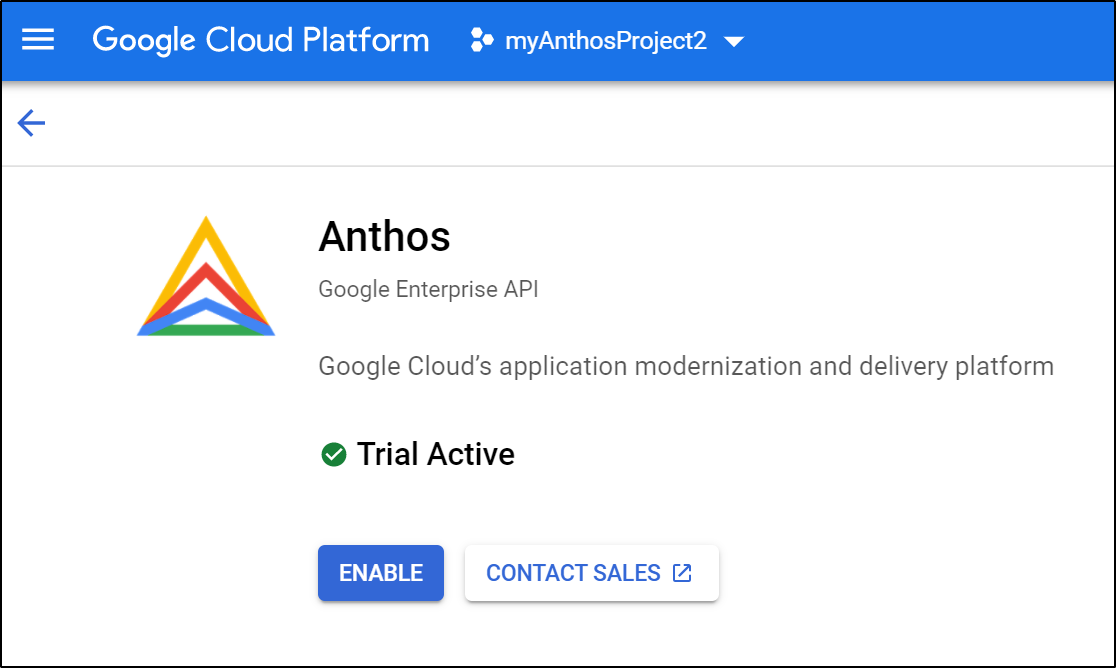

This time I enabled “Start Trial”, which may reduce my costs (at least for 30d).

Now it indicates a ‘free trial’ is in play when I click enable

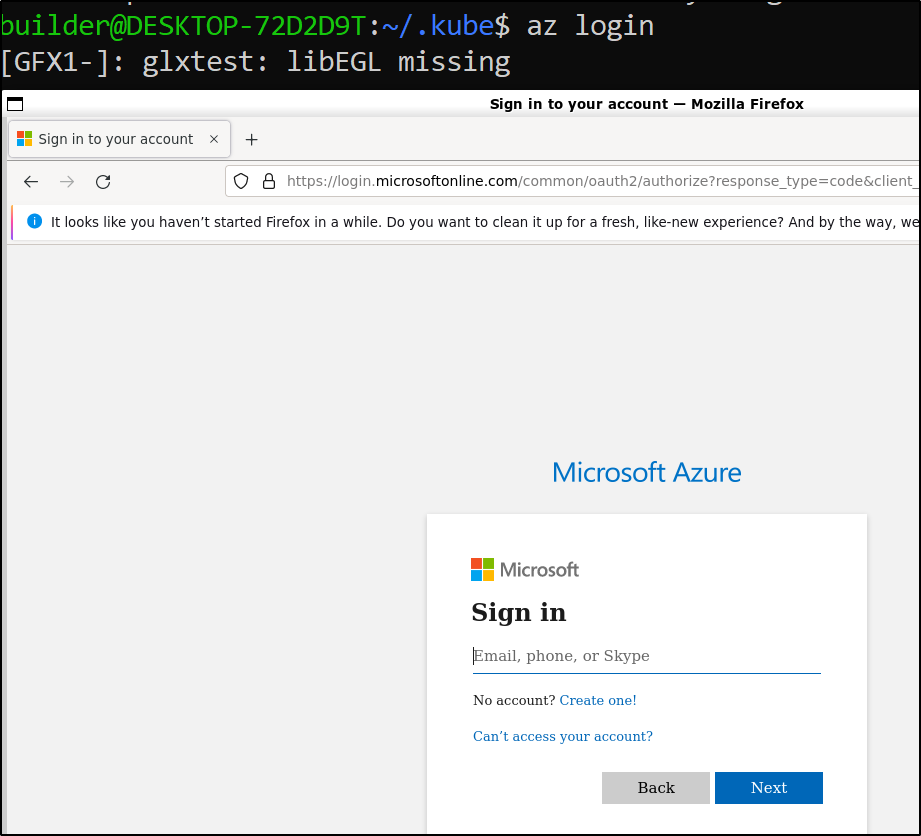

This is just a fun side note: in Windows 11 you can upgrade your WSL and it will send X requests to Windows. This basically means you get graphical Linux apps. I installed Firefox to play with, but the result was that when I run az login to refresh my creds, it launches the Linux Firefox instead of forwarding the request to the default browser in Windows.

Creating a small AKS cluster

$ az ad sp create-for-rbac -n idjaks44sp.tpk.pw --skip-assignment --output json > my_sp.json

$ export SP_PASS=`cat my_sp.json | jq -r .password`

$ export SP_ID=`cat my_sp.json | jq -r .appId`

$ az group create --name idjaks44rg --location centralus

{

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjaks44rg",

"location": "centralus",

"managedBy": null,

"name": "idjaks44rg",

"properties": {

"provisioningState": "Succeeded"

},

"tags": null,

"type": "Microsoft.Resources/resourceGroups"

}

$ az aks create --resource-group idjaks44rg --name idjaks44 --location centralus --node-count 2 --enable-cluster-autoscaler --min-count 2 --max-count 3 --generate-ssh-keys --network-plugin azure --network-policy azure --service-principal $SP_ID --client-secret $SP_PASS

$ az aks get-credentials -n idjaks44 -g idjaks44rg --admin

Merged "idjaks44-admin" as current context in /home/builder/.kube/config

$ cat ~/.kube/config | grep current

current-context: idjaks44-admin

Next, in Anthos we’ll go to add an External Cluster.

This will generate a semi-useful command like this:

gcloud container hub memberships register AKS44 \

--context=[CLUSTER_CONTEXT] \

--service-account-key-file=[LOCAL_KEY_PATH] \

--kubeconfig=[KUBECONFIG_PATH] \

--project=myanthosproject2

all the IAM fun now…

$ gcloud auth login

$ gcloud projects list | grep anthos

myanthosproject2 myAnthosProject2 511842454269

$ gcloud config set project myanthosproject2

Updated property [core/project].

$ gcloud services enable servicemanagement.googleapis.com

$ gcloud iam service-accounts list --project myanthosproject2

DISPLAY NAME EMAIL DISABLED

Compute Engine default service account 511842454269-compute@developer.gserviceaccount.com False

$ gcloud projects add-iam-policy-binding myanthosproject2 --member "serviceAccount:511842454269-compute@developer.gserviceaccount.com" --role roles/gkehub.serviceAgent

$ gcloud iam service-accounts keys create ./mykeys.json --iam-account="511842454269-compute@developer.gserviceaccount.com"

created key [f6384f2c940c72bd3935c8960c78ad0e06ca6608] of type [json] as [./mykeys.json] for [511842454269-compute@developer.gserviceaccount.com]

Now hook them together

$ gcloud container hub memberships register aks44 --context idjaks44-admin --kubeconfig=/home/builder/.kube/config --service-account-key-file=/home/builder/Workspaces/jekyll-blog/mykeys.json

Waiting for membership to be created...done.

Created a new membership [projects/myanthosproject2/locations/global/memberships/aks44] for the cluster [aks44]

Generating the Connect Agent manifest...

Deploying the Connect Agent on cluster [aks44] in namespace [gke-connect]...

Deployed the Connect Agent on cluster [aks44] in namespace [gke-connect].

Finished registering the cluster [aks44] with the Fleet.

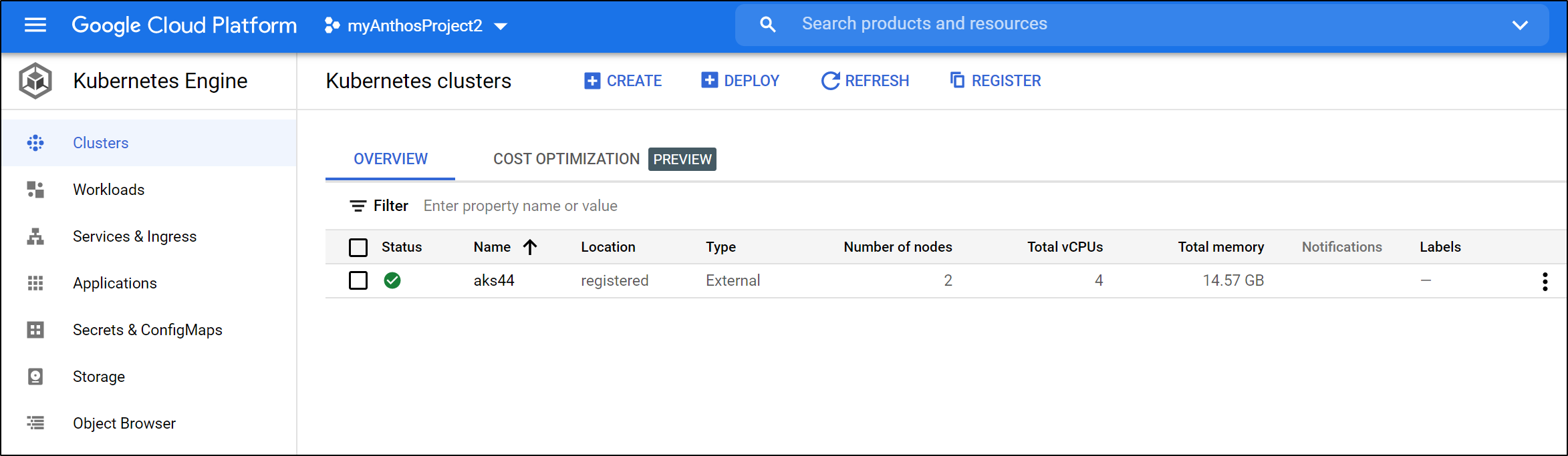

It’s connected now, but needs a login token

I’ll need an admin token:

$ kubectl apply -f adminaccount.yaml

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

$ kubectl describe secret -n kube-system $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') | grep ^token

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjU5dGZEemxYU2lfWjB0WlNNQnFhTmRUT1kydTBCOXZ4MTZiZThQTF90YVEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWo2MjhuIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5NTg5ZTRkNi1iOWRhLTQyOWMtOWNlZC1kNWI3YmE0NTRkM2UiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.ZCGT4MfSAMFdWsbLcqEJhmhbYLMSkWXRx9WC_fbrY3GLmHDSB6739Tolc6Mx62iMvWpKfPPkXXWZIKO9OFFkobaMUP8nsXsS9RPlf4DxW_JJ7AjaImyxZnShfuwrO4syc9jcH0Y-PoJaYLgXVEEjJZXlFTUkvZ6ObEU8J21FqwB7D1cGYY1x2YJBQrcQ7EVtSchupLcDmtEOG8-GKwJut9a_pVVdXQldoI_uvY41FdbklrMTwONBVJwbm9M4zWqTMYcfVO5XpOC8RI9SsUJ0UKab_YI6pIA4eGNfj33oFZXjC1xcqoAakwVP9exAx0LHUi0ZtTgJikRo0S9ipkBDhhgJ4puq80eZY5EiI230LnxfMNX-FLvqTQSrUtfy6B6IIzWyhMhEezdxI4WVrLDvJhn1Mskg_RTZnehFNltckpCnTofCOdMZqtb-iNjXlPO4_L9KEX5BsWdINOuQmChd0g3pdYC6C1EDeyHlMNwPURYZhyXENVcDcaQsVAdcu28zc66vTpViB4eee_GcrwjFn6oZBJh4CFcbKkeRFqg3Q6uR2n3BmnsMG5d6pXzrS9EXIUXHEPBTSt32wRAHeGZtG_rpfpKFHbhPa-8TAgWzzCCRxwllLFPmZk6kOa8J35rRvoKacBA-ZrkISblFi3xRu61KAXxQ7VHPWWujII9PY2I
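The adminaccount.yaml applied above is not reproduced in this section. A minimal sketch of what it could contain, based only on the two objects kubectl reported (a ServiceAccount and a ClusterRoleBinding, both named admin-user) and the kube-system namespace visible in the token lookup. Binding to the built-in cluster-admin ClusterRole is my assumption, not something the output confirms:

```shell
#!/usr/bin/env bash
# Sketch: write an adminaccount.yaml that creates the two objects seen above.
# Assumption: the binding targets the built-in cluster-admin ClusterRole.
cat > adminaccount.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF

# Afterwards: kubectl apply -f adminaccount.yaml
```

Since cluster-admin grants full control of the cluster, a narrower role would be a safer choice if read-only access in the console is all that is needed.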

Then update the IAM bindings:

$ gcloud projects add-iam-policy-binding myanthosproject2 --member "serviceAccount:511842454269-compute@developer.gserviceaccount.com" --role=roles/gkehub.admin --role=roles/iam.serviceAccountAdmin --role=roles/iam.serviceAccountKeyAdmin --role=roles/resourcemanager.projectIamAdmin

Updated IAM policy for project [myanthosproject2].

bindings:

- members:

$ gcloud projects add-iam-policy-binding myanthosproject2 --member "serviceAccount:511842454269-compute@developer.gserviceaccount.com" --role "roles/gkehub.connect"
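One of the commands above passes several --role flags in a single call. My understanding is that gcloud treats --role as a single-valued flag, so it is possible only one of those roles was actually bound; treat that as an assumption and check the resulting policy. A rough sketch of granting the same roles one call at a time follows. The bind_roles function and the DRY_RUN switch are names I made up for illustration:

```shell
#!/usr/bin/env bash
# Sketch: grant several roles to one service account, one binding per call.
# PROJECT, MEMBER and the role names are the values used in this post.
PROJECT="myanthosproject2"
MEMBER="serviceAccount:511842454269-compute@developer.gserviceaccount.com"
ROLES="roles/gkehub.admin roles/iam.serviceAccountAdmin roles/iam.serviceAccountKeyAdmin roles/resourcemanager.projectIamAdmin roles/gkehub.connect"

# bind_roles is a hypothetical helper. With DRY_RUN=1 (the default here) it
# only prints the commands; set DRY_RUN=0 to really call gcloud.
bind_roles() {
  for role in $ROLES; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo gcloud projects add-iam-policy-binding "$PROJECT" --member "$MEMBER" --role "$role"
    else
      gcloud projects add-iam-policy-binding "$PROJECT" --member "$MEMBER" --role "$role"
    fi
  done
}

bind_roles
```

Printing first makes it easy to review exactly which roles are about to be handed out before anything changes in the project.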

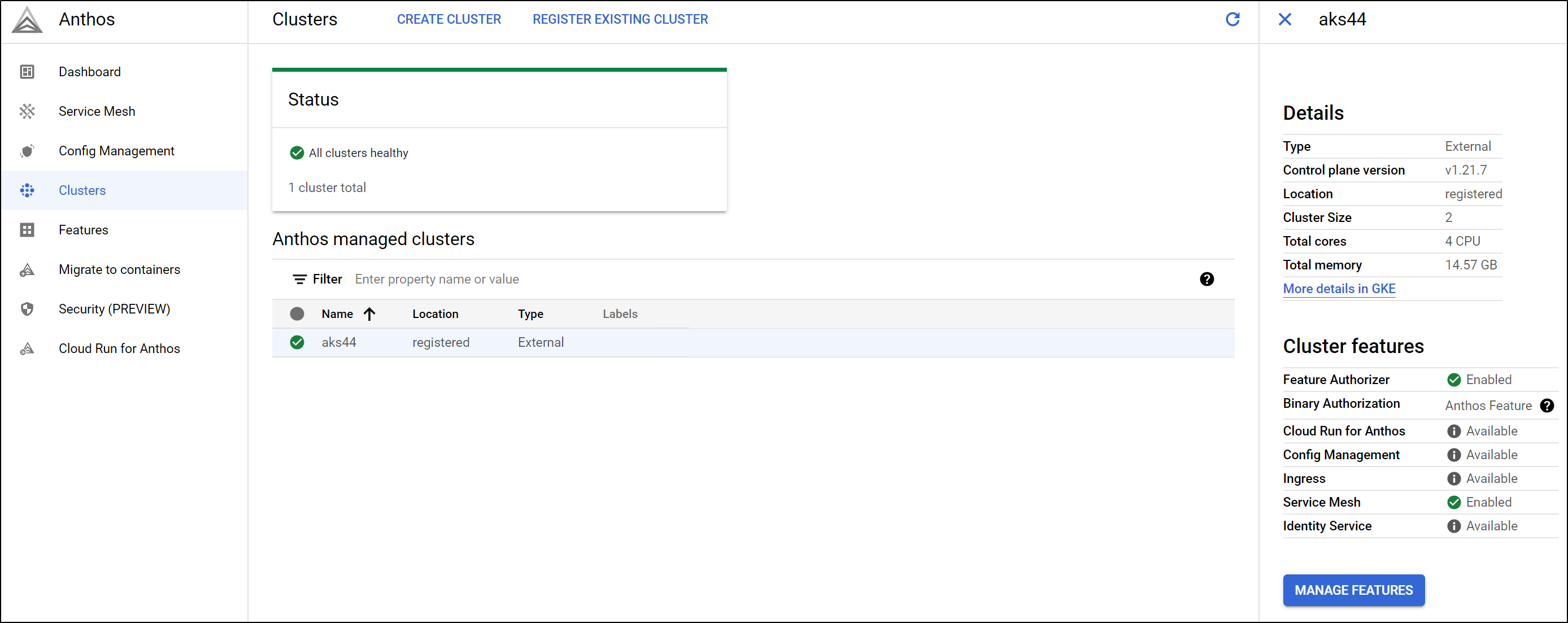

Now we see green checks for “aks44”

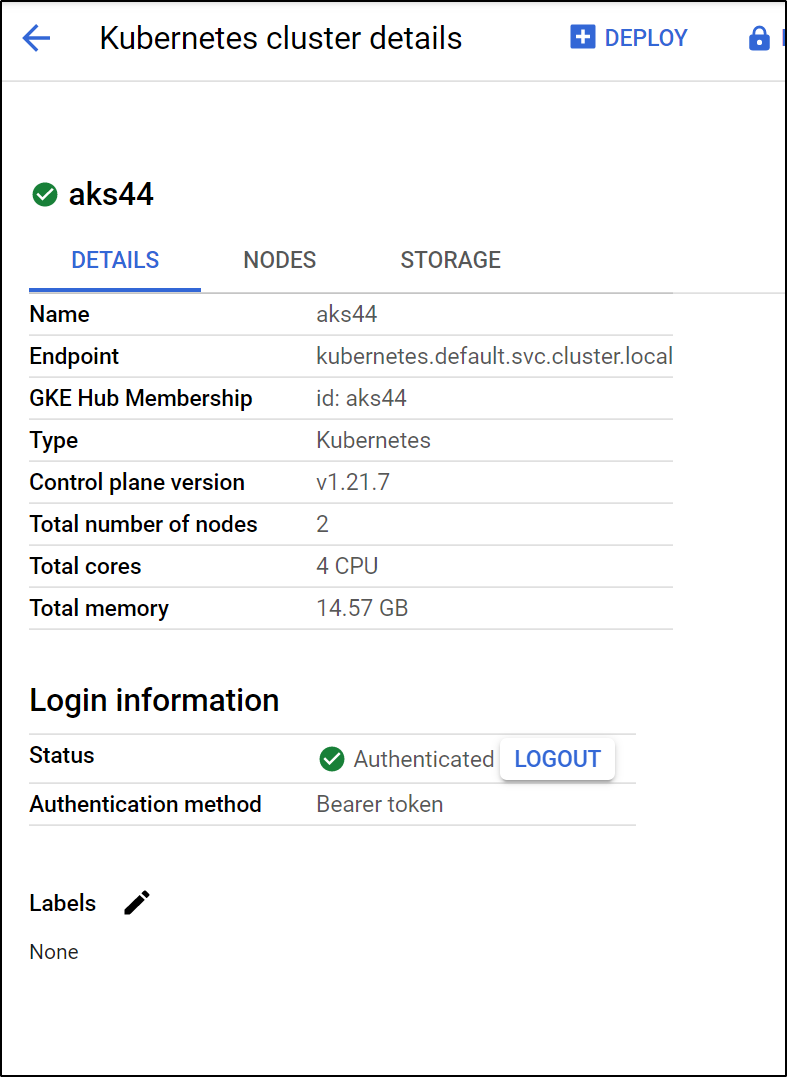

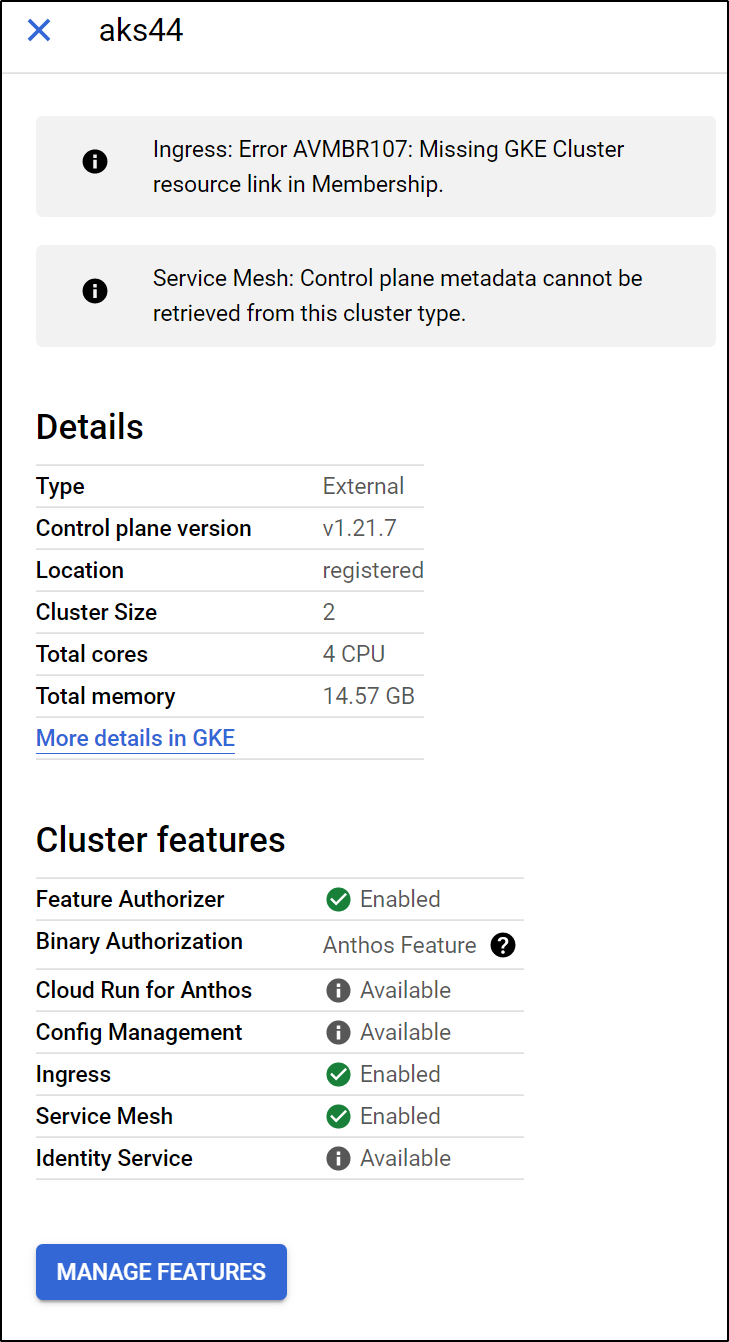

We can see the details of the Cluster

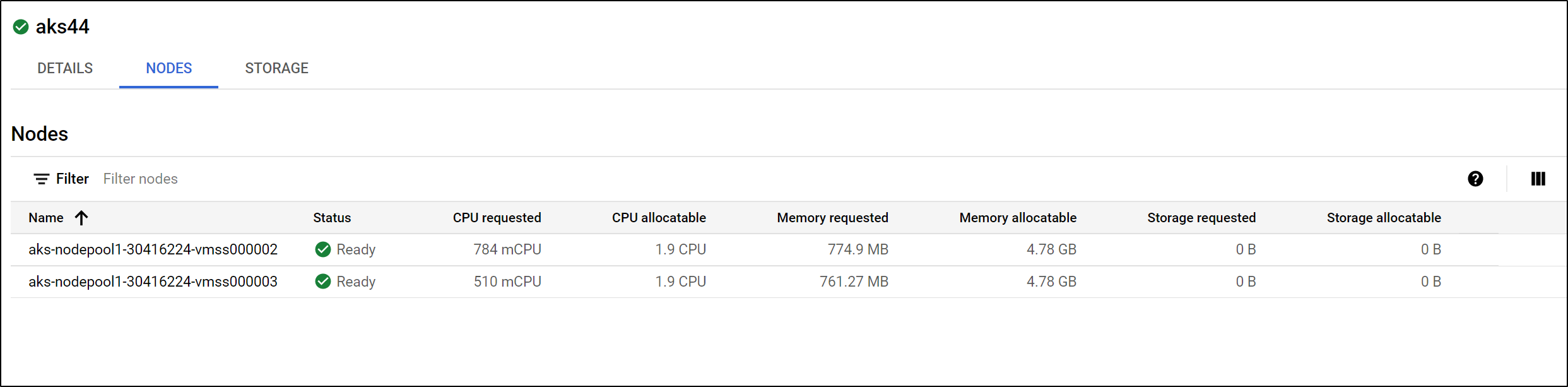

as well as the Node details:

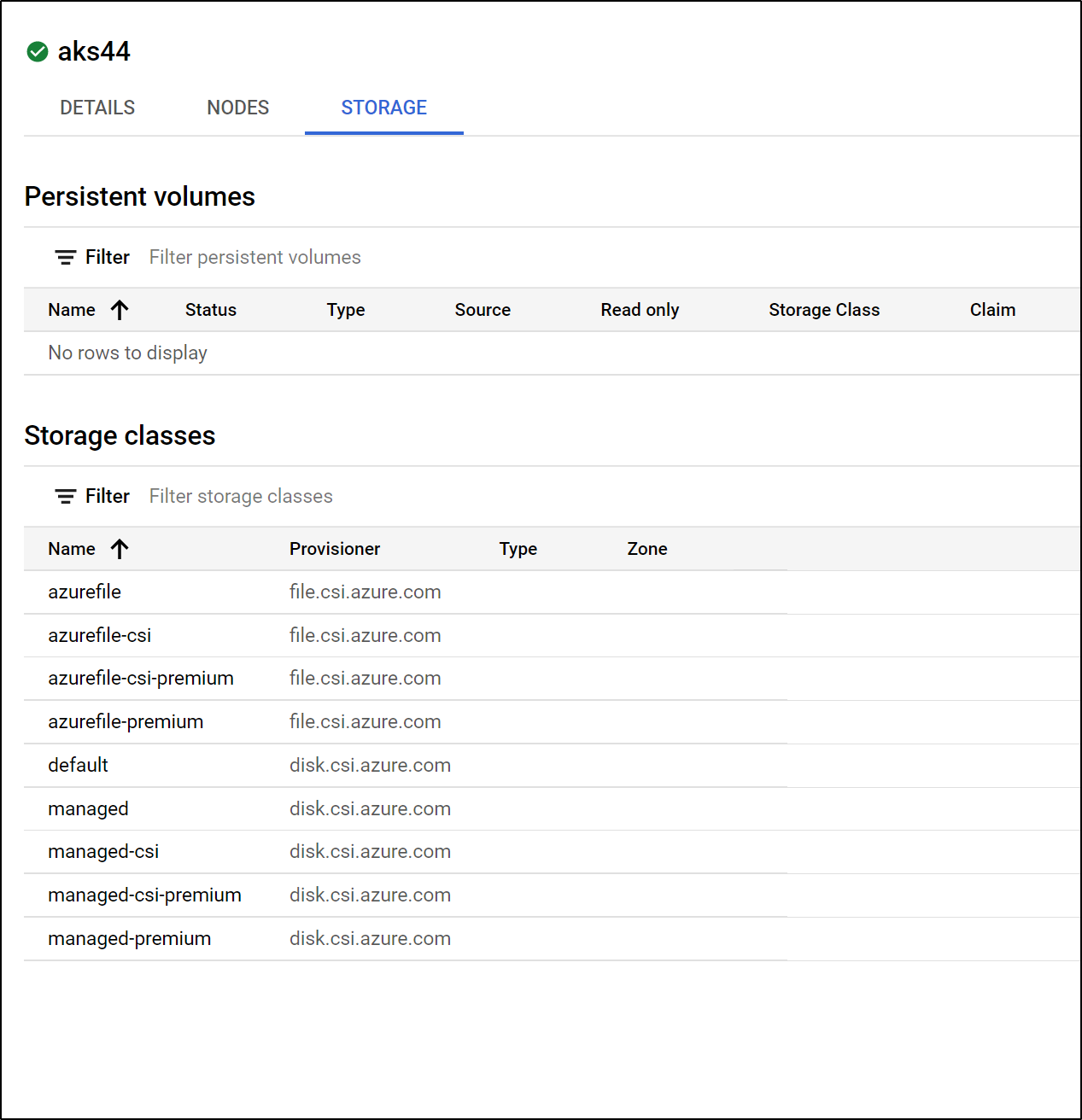

Storage Classes and PVCs:

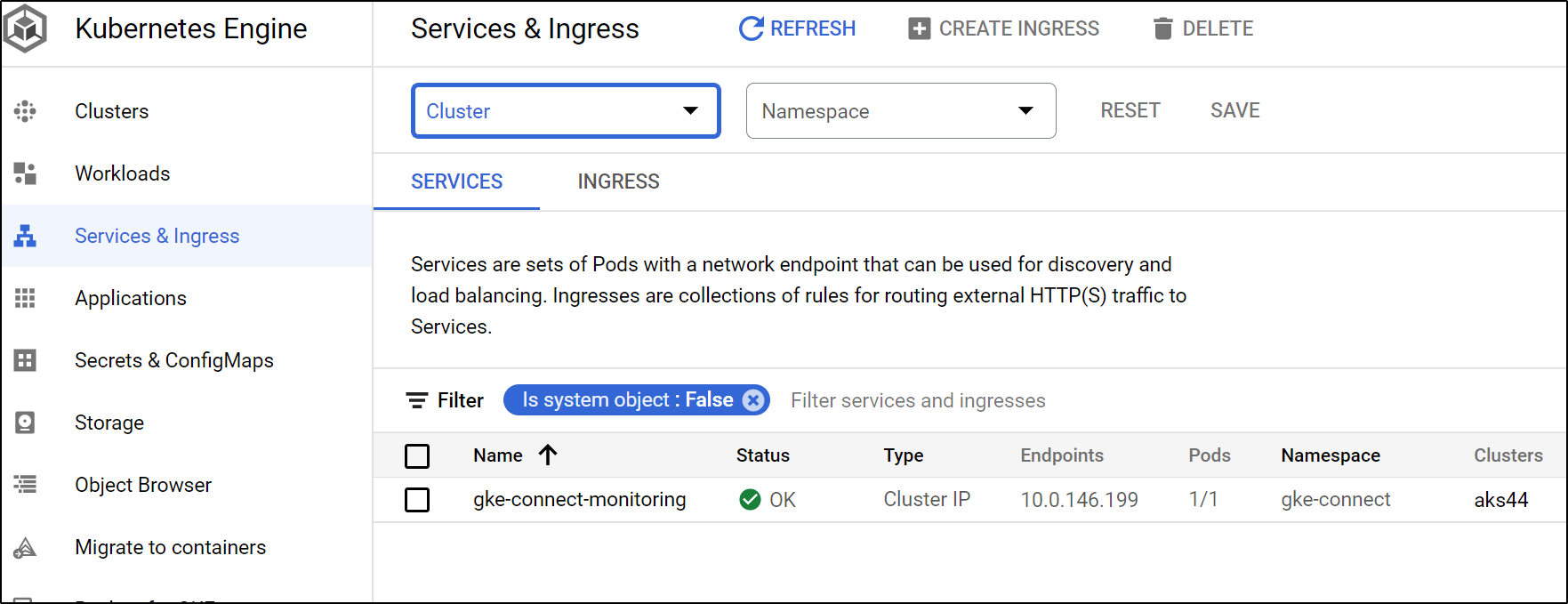

Under Services, we can see that, at this point, the only service is the GKE connector itself.

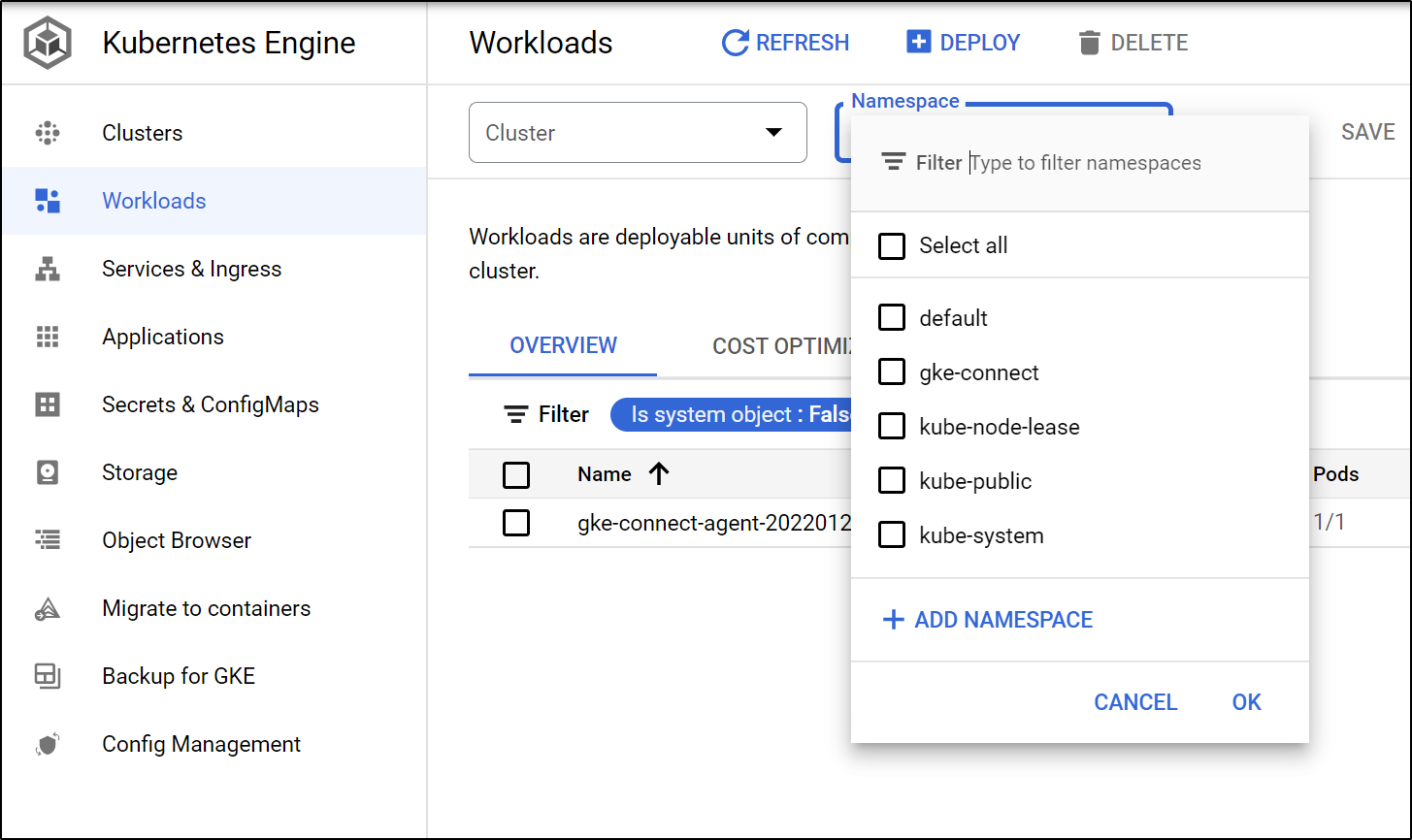

We can also see deployed workloads and optionally limit by namespace

New Deployment (Workloads)

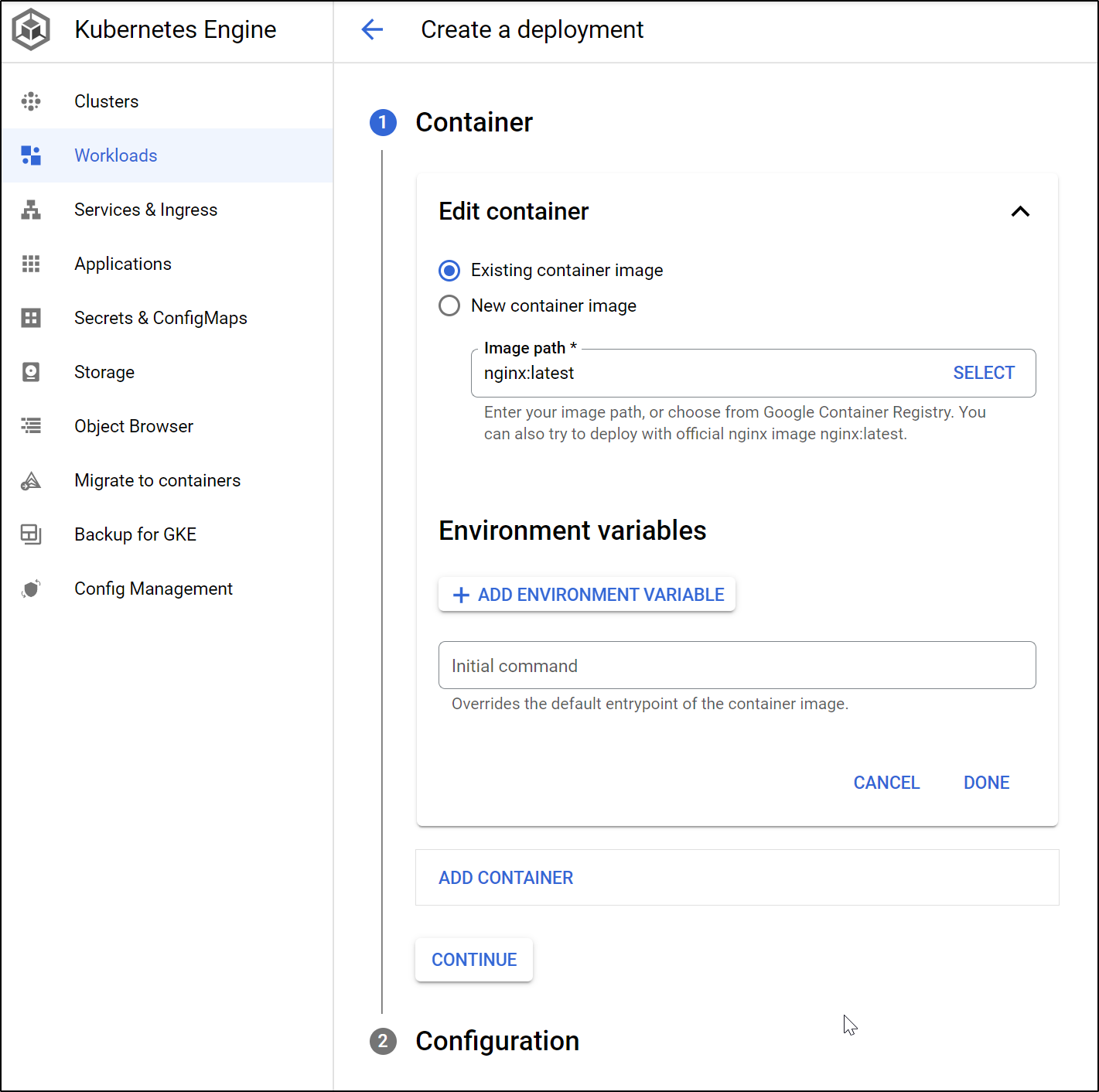

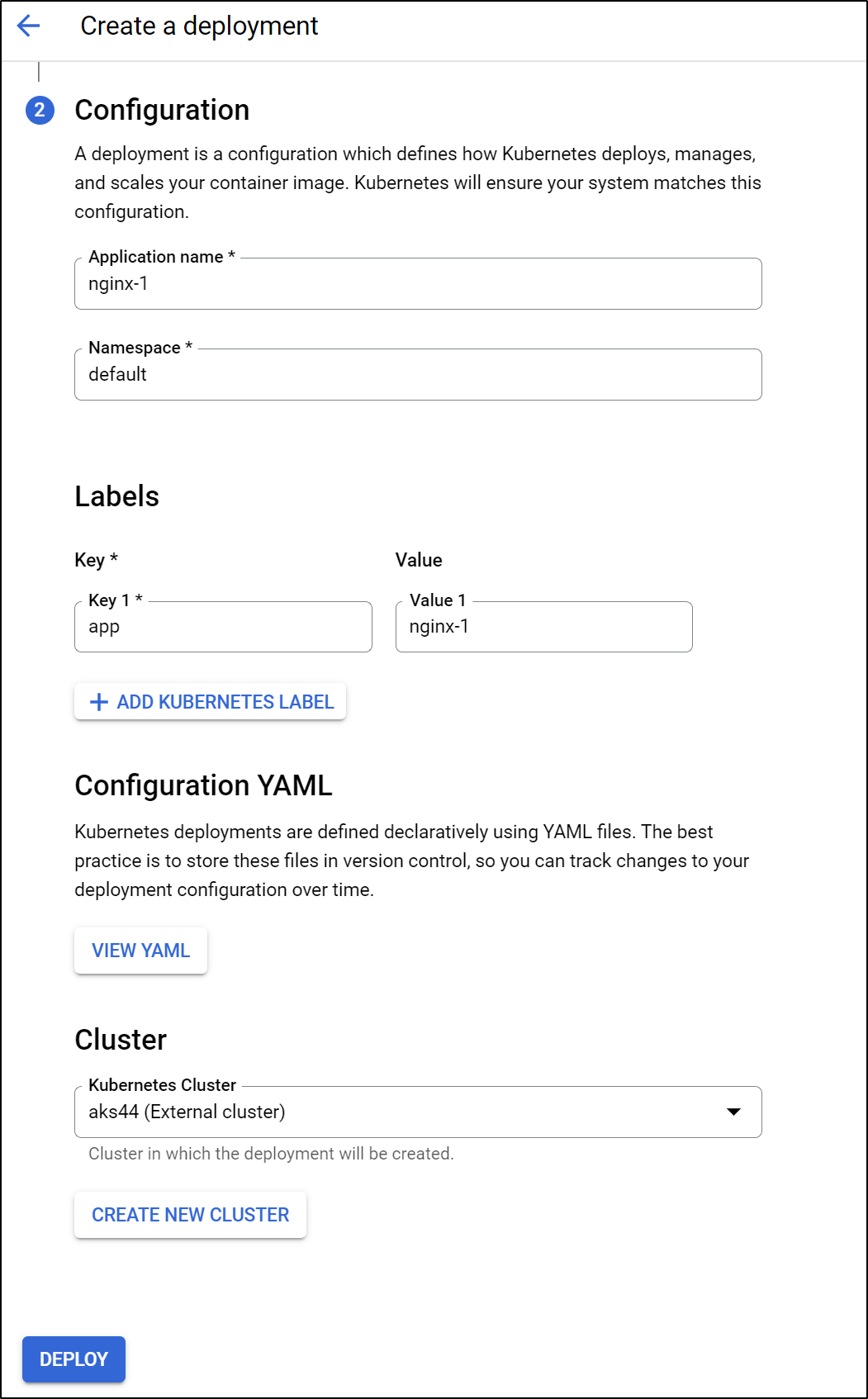

We can create a new deployment from the workloads screen

Then set all the other details

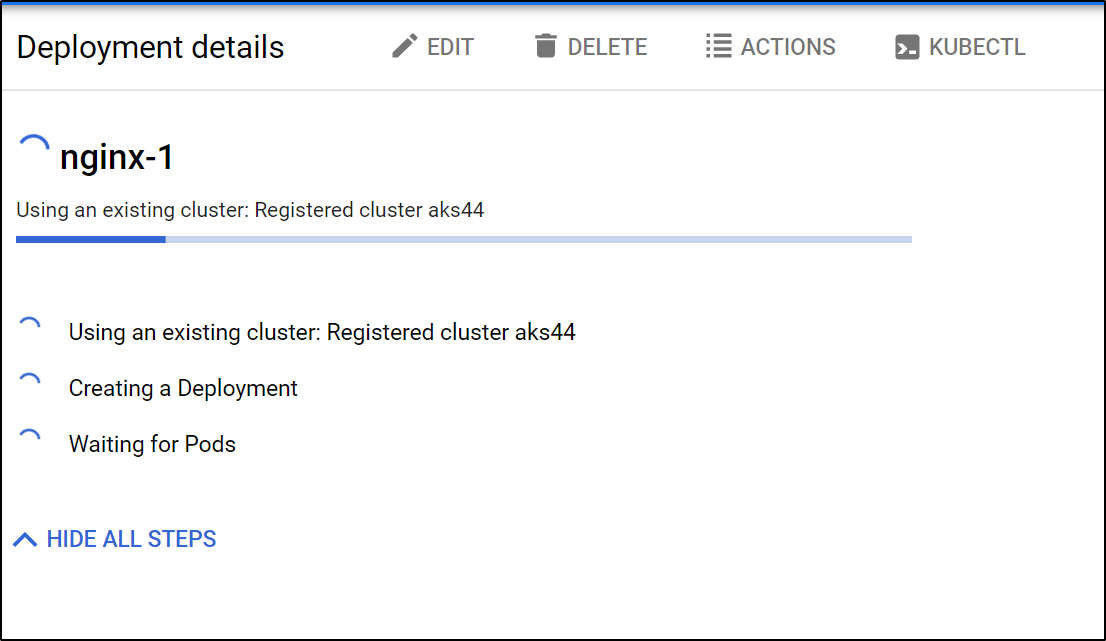

Click deploy to start deploying

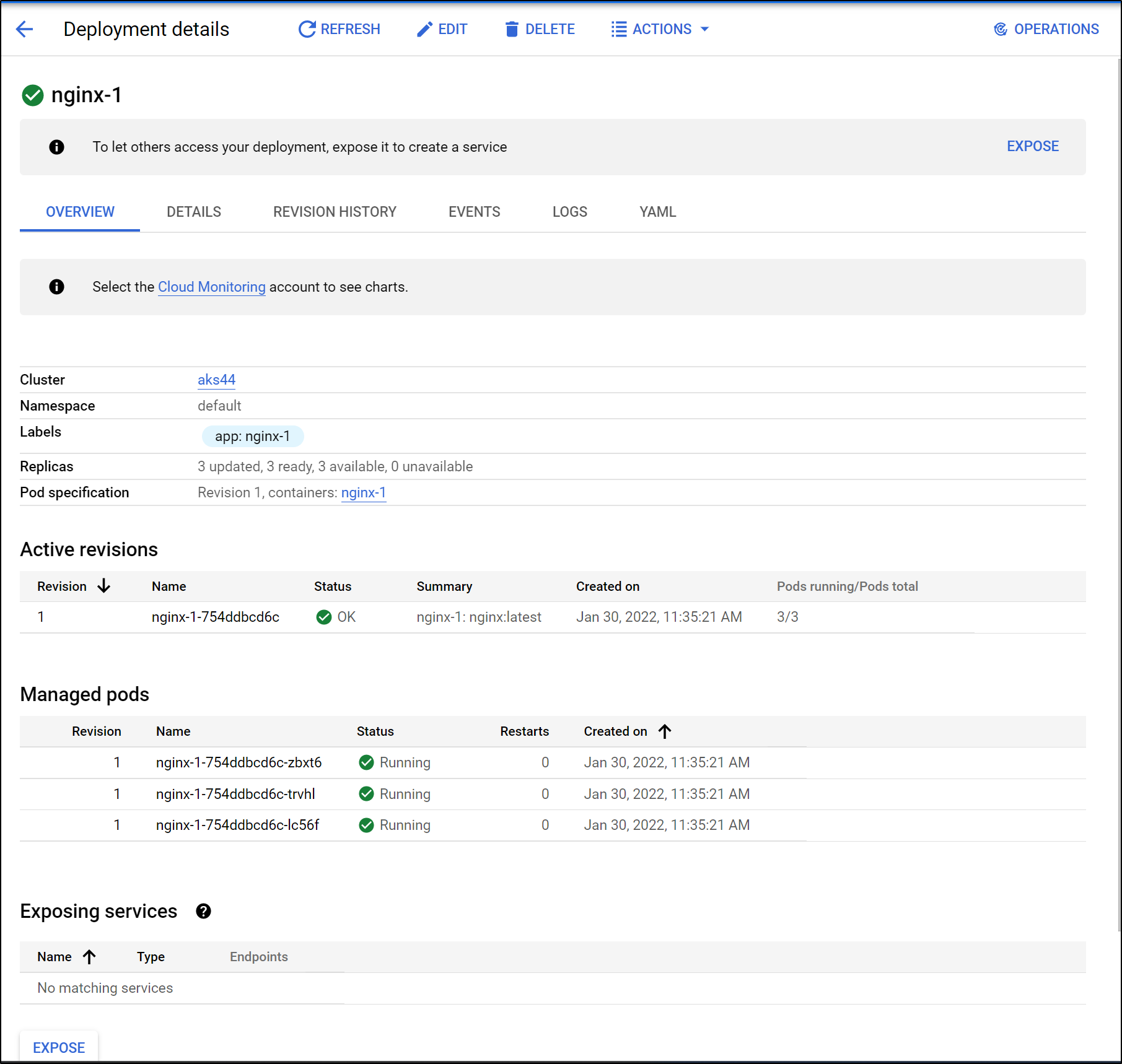

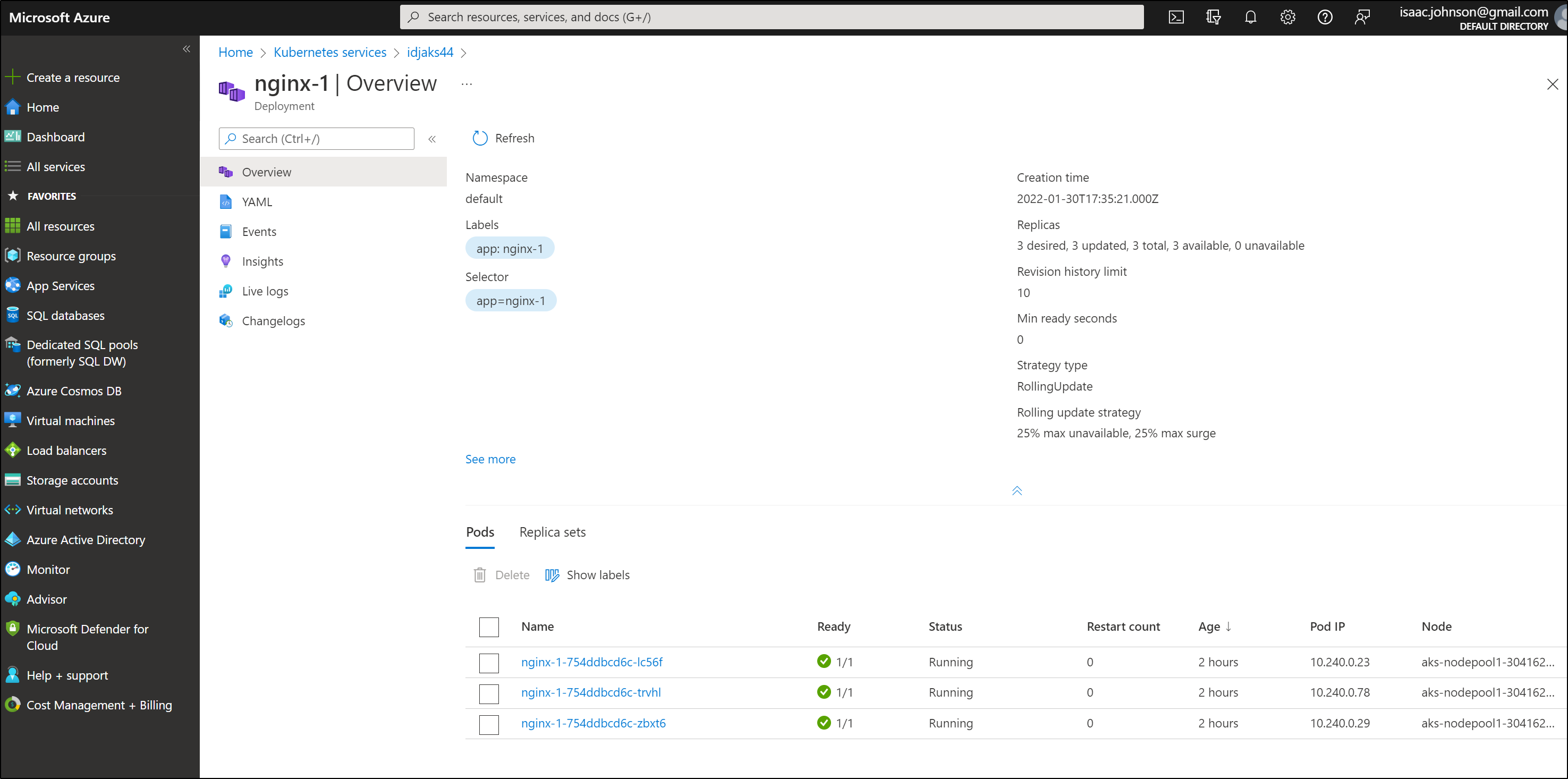

Once deployed we can see the details.

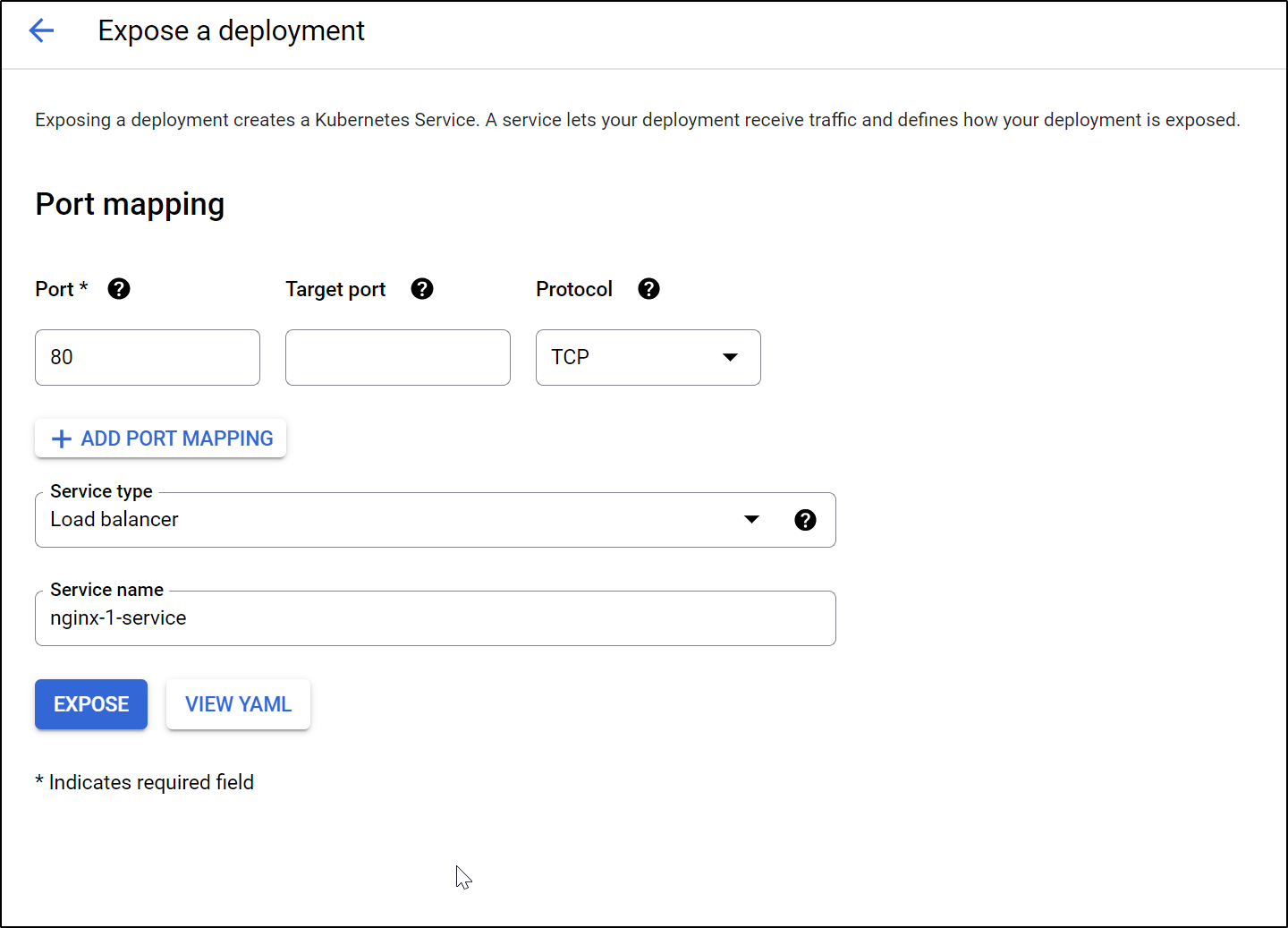

We can then indicate we wish to expose the service with an external loadbalancer

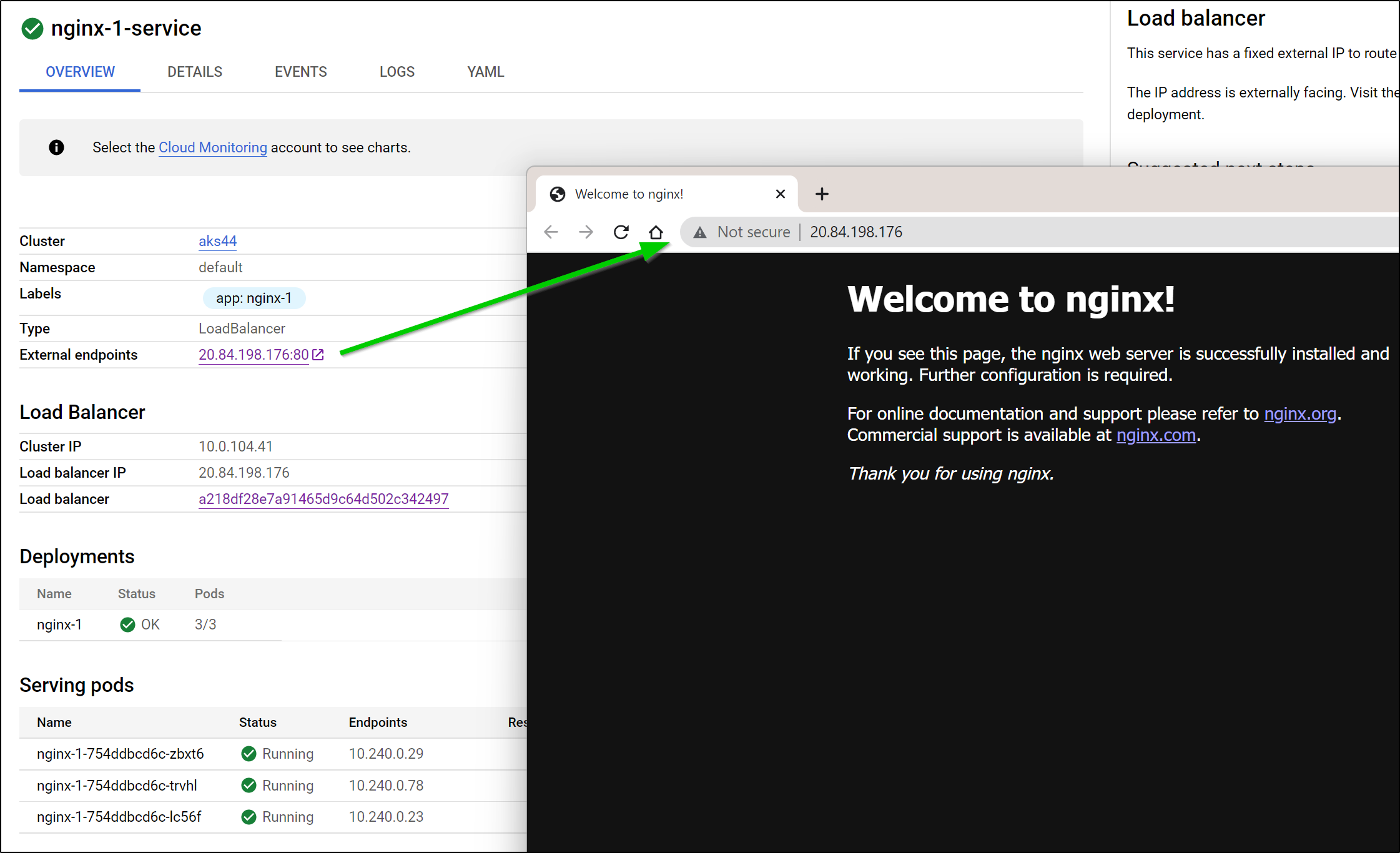

This worked and we can test the public ingress to our basic Nginx deployment.

Enabling Service Mesh

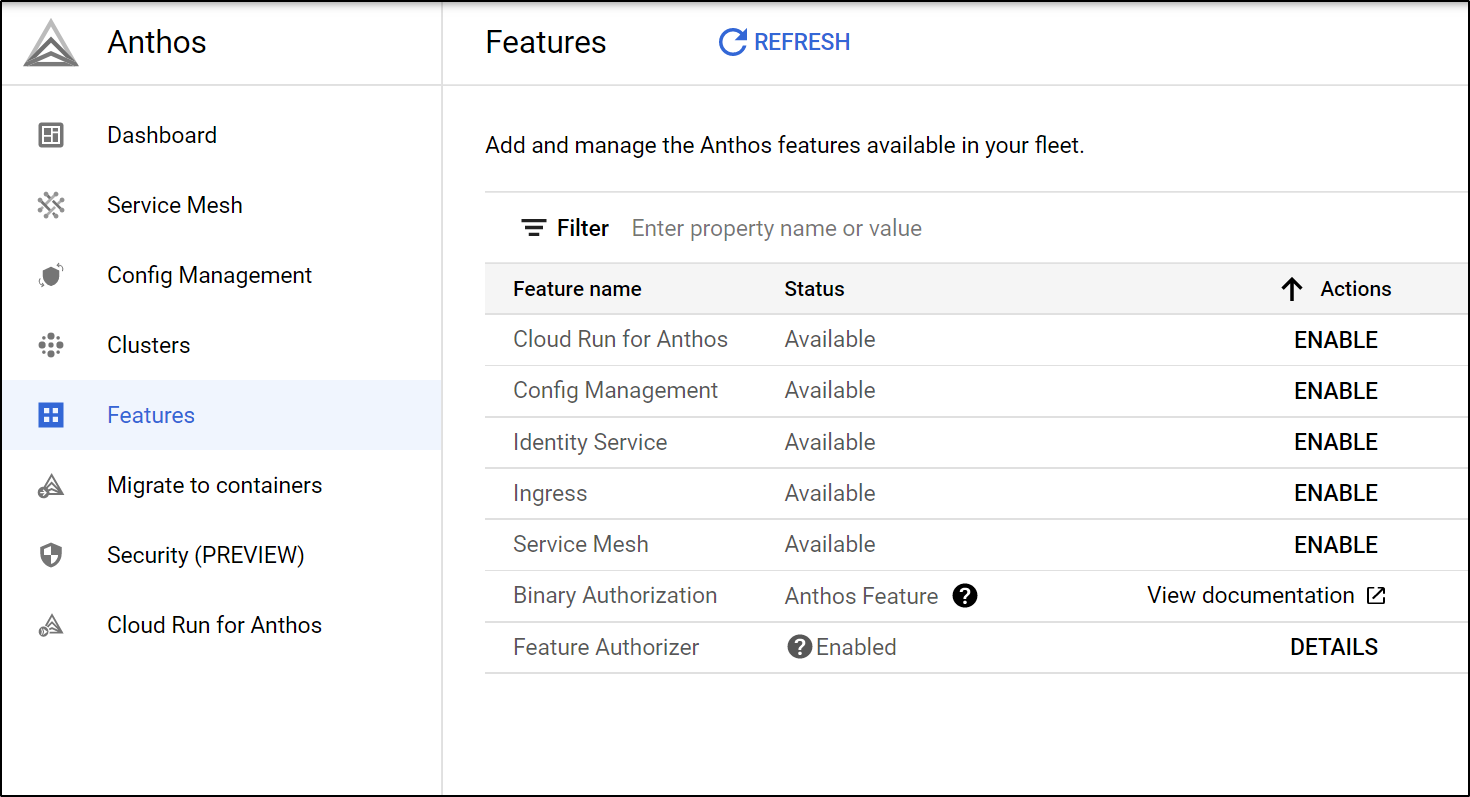

We can enable service mesh (based on Istio) from the Features menu.

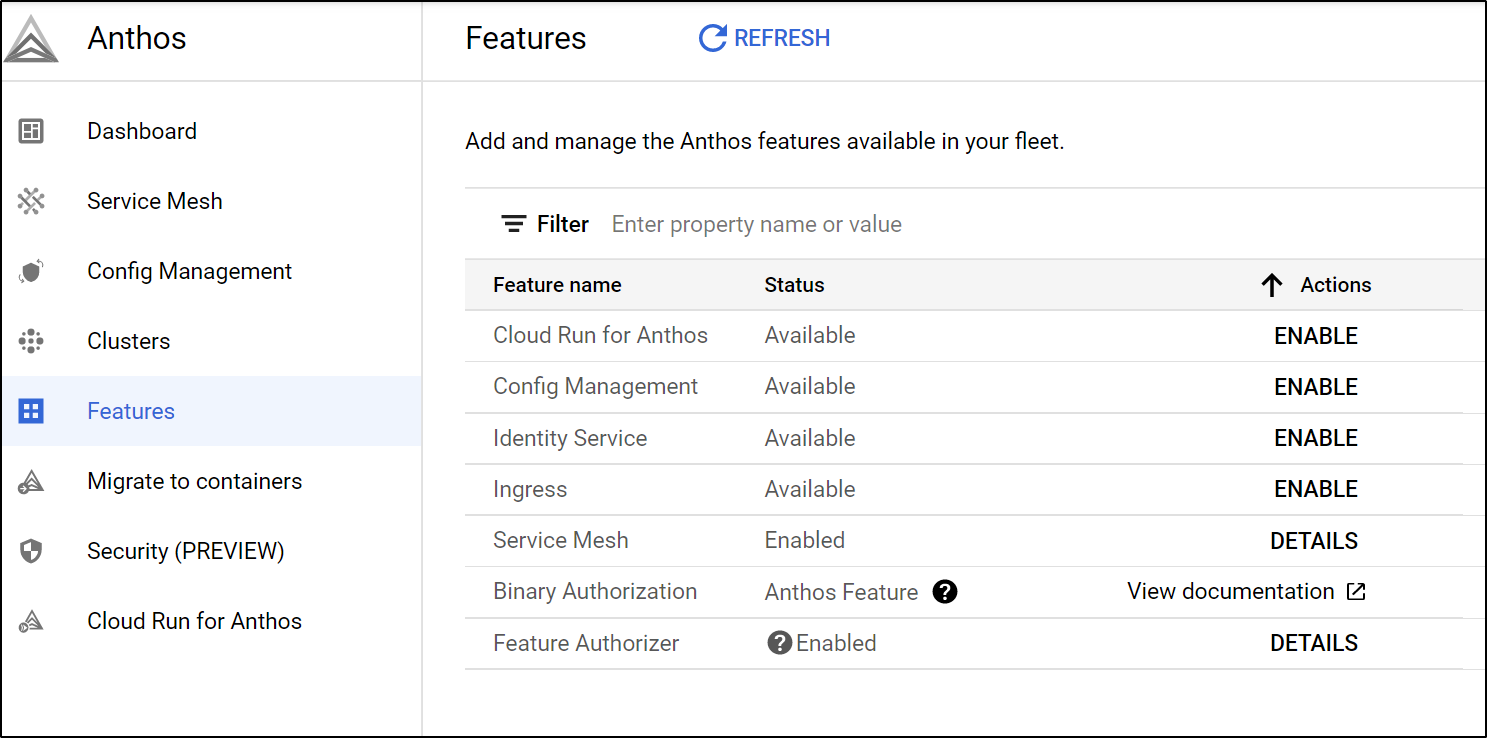

Once we click enable, we can see it enabled

and if we look up Cluster details, we can see Service Mesh is now enabled

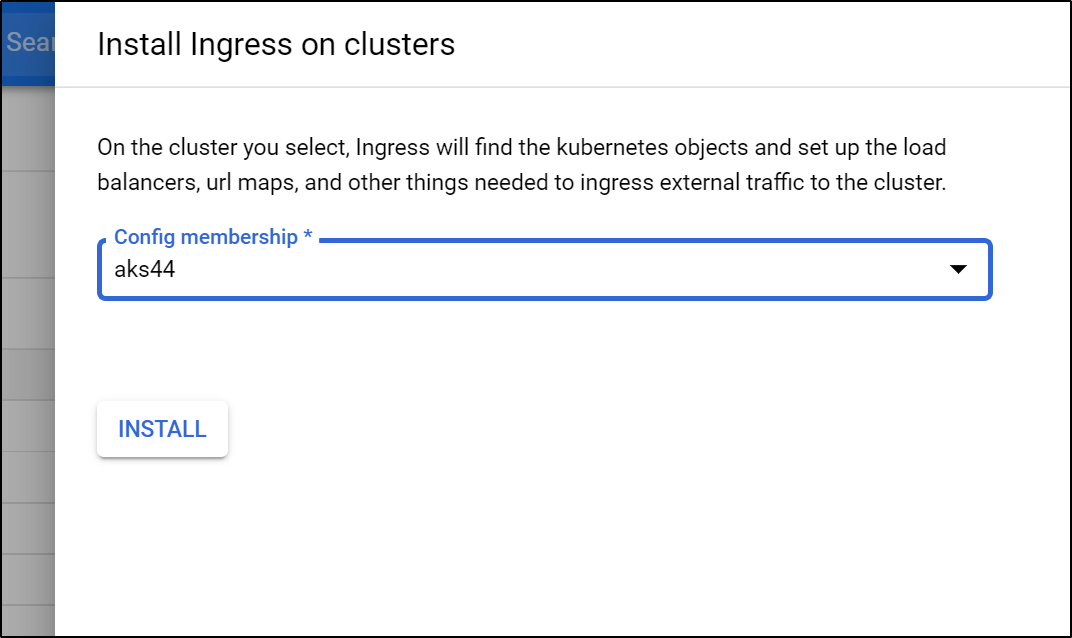

We can also install Google’s Ingress to the cluster (note: I did not find this to do anything)

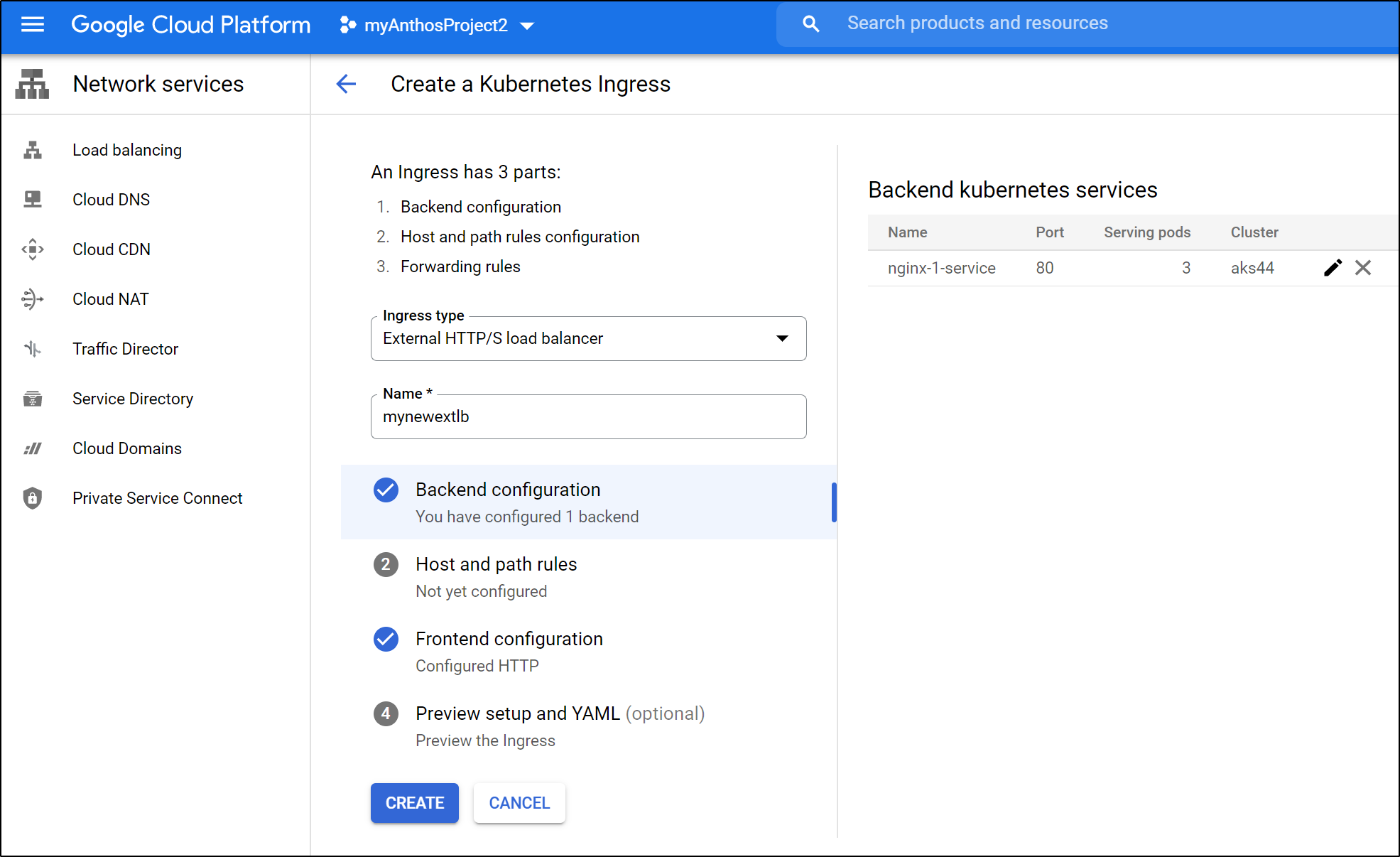

Under the GKE area, we can create a new External LB:

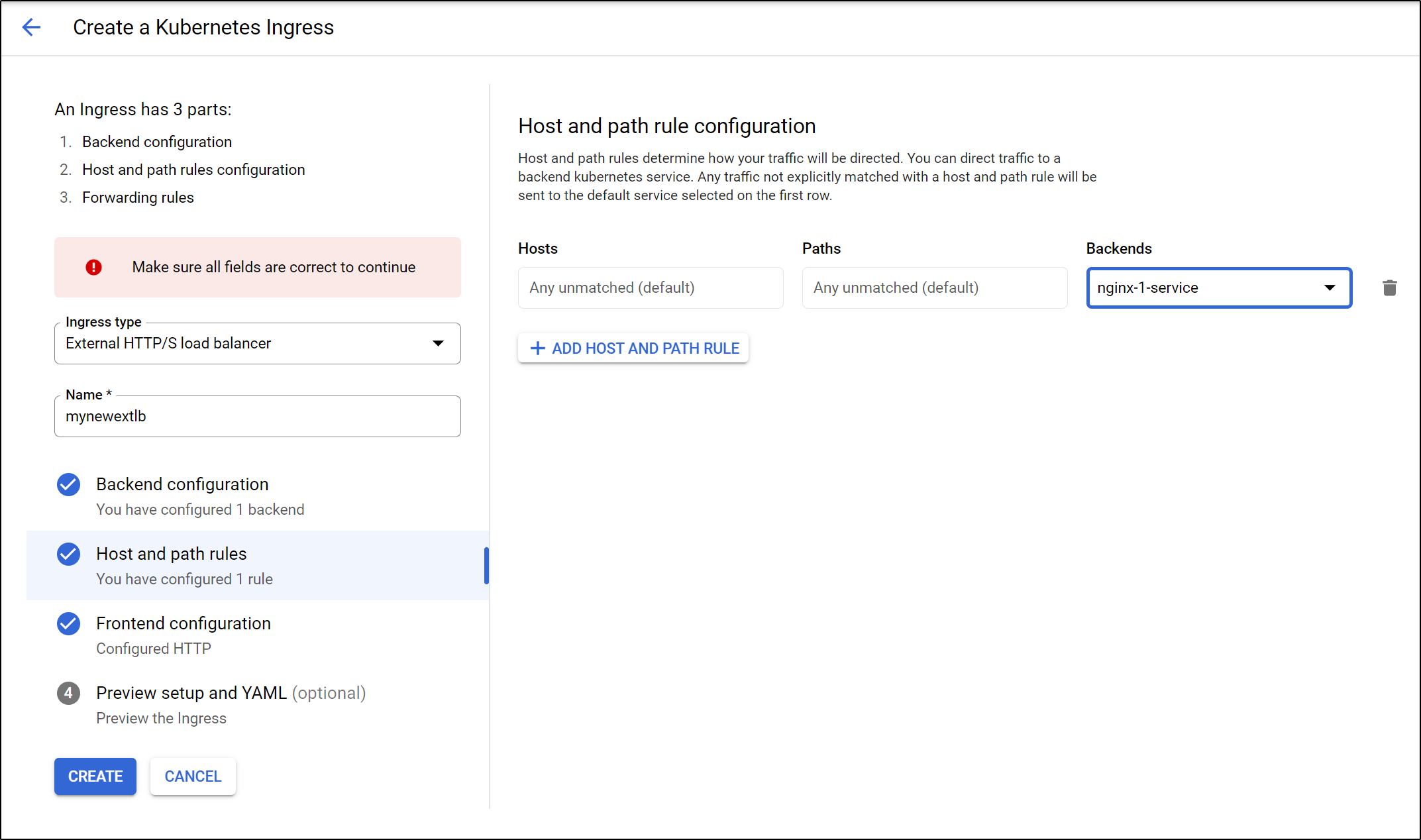

Set your backends (in case you want different paths to route to different services)

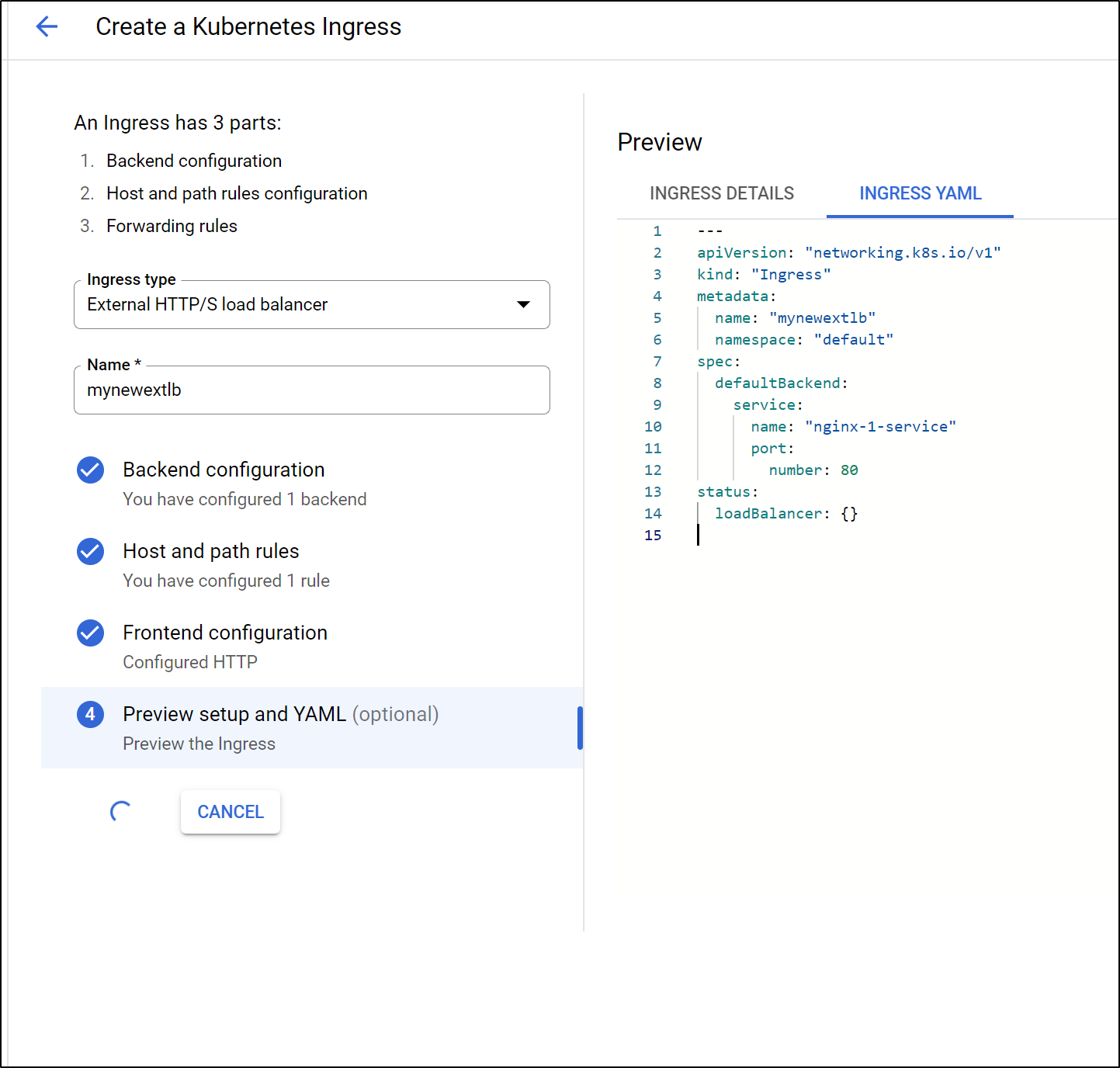

Then choose “create” to create the Ingress.

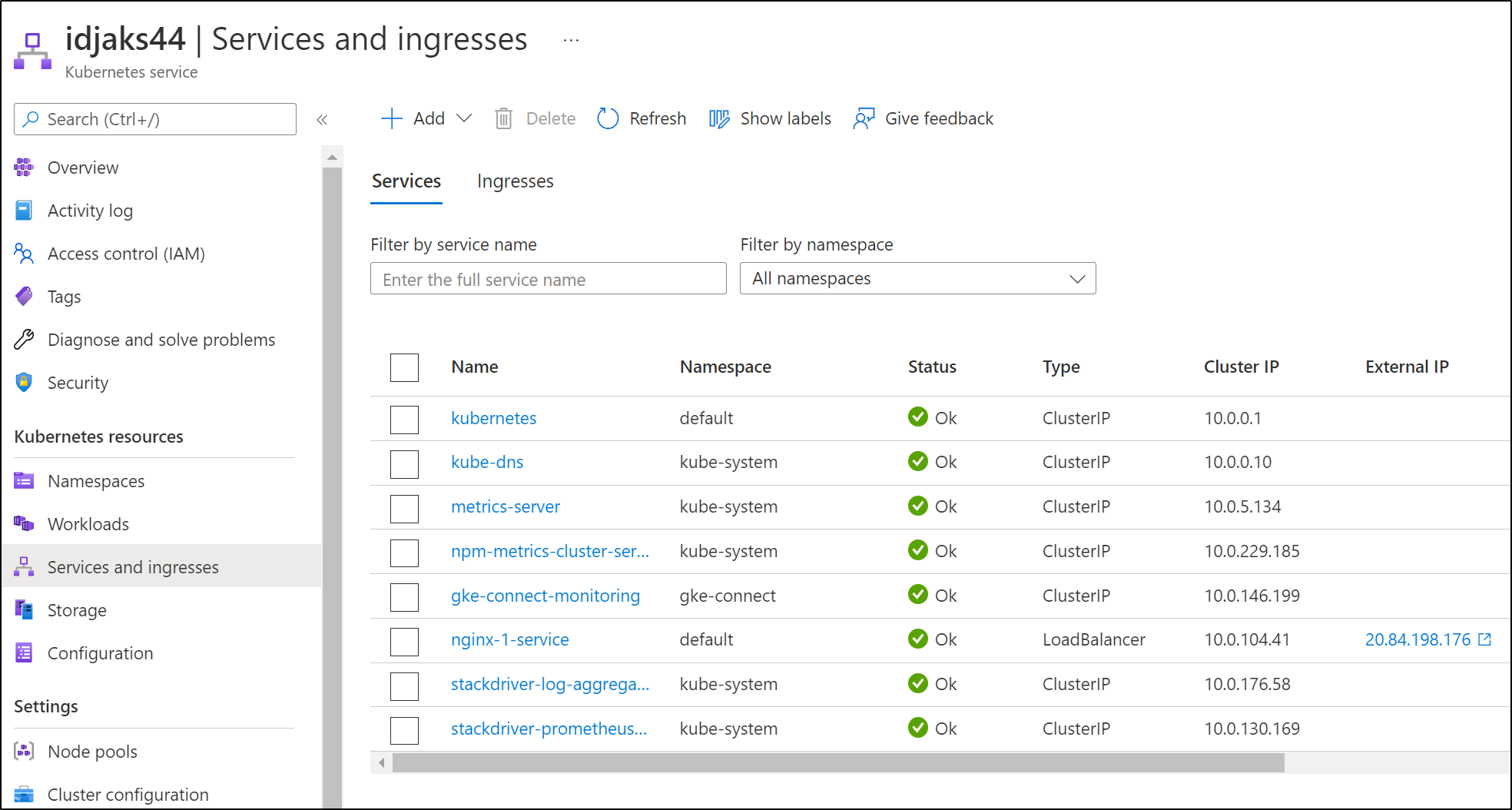

From a Kubernetes standpoint, we won’t see a proper Ingress object. And the only Service with external traffic is the Nginx service which we had already exposed:

$ kubectl get ingress --all-namespaces

No resources found

builder@DESKTOP-72D2D9T:~$ kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 143m

default nginx-1-service LoadBalancer 10.0.104.41 20.84.198.176 80:31959/TCP 63m

gke-connect gke-connect-monitoring ClusterIP 10.0.146.199 <none> 8080/TCP 111m

kube-system kube-dns ClusterIP 10.0.0.10 <none> 53/UDP,53/TCP 143m

kube-system metrics-server ClusterIP 10.0.5.134 <none> 443/TCP 143m

kube-system npm-metrics-cluster-service ClusterIP 10.0.229.185 <none> 9000/TCP 143m

However, the “create ingress” page never returned. I fetched the YAML and applied it. However, as there is no ingress controller satisfying ingress definitions, nothing picked it up:

$ kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

mynewextlb <none> * 80 60s

I do see some errors in the details pane on the cluster details in Anthos now:

Logging

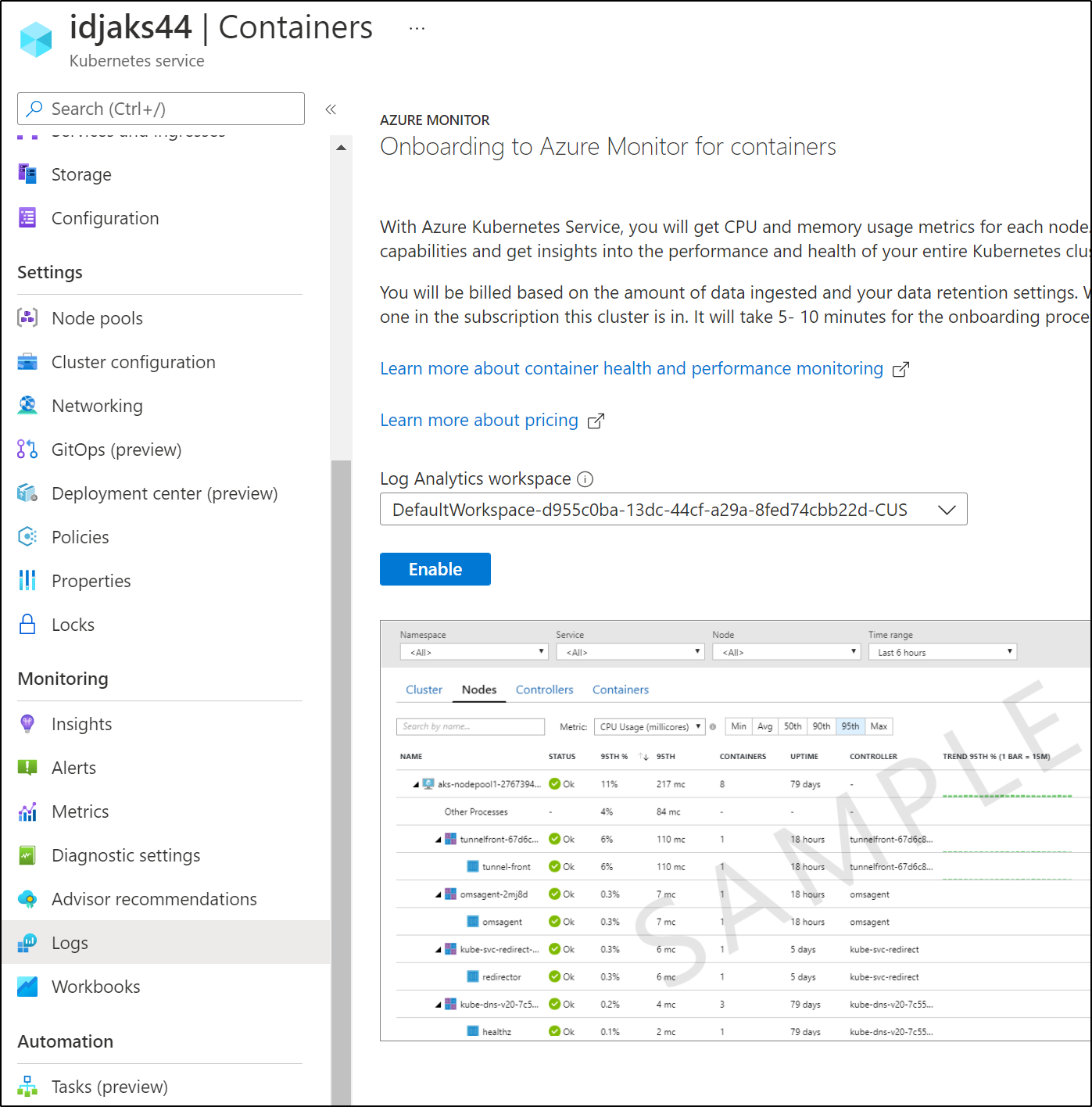

In order to enable logging into GCP, we will need to add Stackdriver to our AKS cluster directly. The option to “enable” in GKE is only for actual GCP-hosted GKE clusters.

First we need to add some roles for logs and metrics to our service account:

$ gcloud projects add-iam-policy-binding myanthosproject2 --member "serviceAccount:511842454269-compute@developer.gserviceaccount.com" --role "roles/logging.logWriter"

$ gcloud projects add-iam-policy-binding myanthosproject2 --member "serviceAccount:511842454269-compute@developer.gserviceaccount.com" --role "roles/monitoring.metricWriter"

$ kubectl create secret generic google-cloud-credentials -n kube-system --from-file mykeys.json

secret/google-cloud-credentials created

# look up "location" by checking the membership value of our cluster (as you see below, it is "global")

$ gcloud container hub memberships describe aks44 | grep name

name: projects/myanthosproject2/locations/global/memberships/aks44
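The location can also be pulled out of that membership name mechanically instead of by eye. A small sketch, assuming the name always follows the projects/<project>/locations/<location>/memberships/<name> layout shown above; membership_location is a helper name I made up:

```shell
#!/usr/bin/env bash
# Sketch: extract the location segment from a membership resource name.
# Assumed layout: projects/<project>/locations/<location>/memberships/<name>
membership_location() {
  # Field 4 of the slash-separated name is the location
  printf '%s\n' "$1" | cut -d/ -f4
}

membership_location "projects/myanthosproject2/locations/global/memberships/aks44"
# prints: global
```

The input could come straight from gcloud, for example by adding --format='value(name)' to the describe command, rather than being pasted in by hand.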

Next, we need to clone the Anthos-samples repo

$ git clone https://github.com/GoogleCloudPlatform/anthos-samples

Cloning into 'anthos-samples'...

remote: Enumerating objects: 1220, done.

remote: Counting objects: 100% (231/231), done.

remote: Compressing objects: 100% (107/107), done.

remote: Total 1220 (delta 175), reused 129 (delta 124), pack-reused 989

Receiving objects: 100% (1220/1220), 1.40 MiB | 2.69 MiB/s, done.

Resolving deltas: 100% (700/700), done.

# next, open it up and set values for our project, cluster and location

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/logging$ vi aggregator.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/logging$ sed -i 's/\[PROJECT_ID\]/myanthosproject2/g' aggregator.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/logging$ sed -i 's/\[CLUSTER_NAME\]/aks44/g' aggregator.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/logging$ sed -i 's/\[CLUSTER_LOCATION\]/global/g' aggregator.yaml
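The three substitutions above can be folded into a single sed pass. A sketch using the same values; fill_placeholders is a hypothetical helper, and the demo file contents are invented purely to show the effect (the real aggregator.yaml has its own structure):

```shell
#!/usr/bin/env bash
# Sketch: replace all three placeholders in one in-place sed call.
# Uses GNU sed's -i, as in the commands in this post.
fill_placeholders() {
  # $1 = file to edit in place
  sed -i \
    -e 's/\[PROJECT_ID\]/myanthosproject2/g' \
    -e 's/\[CLUSTER_NAME\]/aks44/g' \
    -e 's/\[CLUSTER_LOCATION\]/global/g' \
    "$1"
}

# Demo on a throwaway file; these keys are made up for illustration only
demo="$(mktemp)"
printf 'project: [PROJECT_ID]\ncluster: [CLUSTER_NAME]\nlocation: [CLUSTER_LOCATION]\n' > "$demo"
fill_placeholders "$demo"
cat "$demo"
```

The same function could be pointed at aggregator.yaml here and at prometheus.yaml later. On macOS/BSD sed, -i expects a backup suffix argument, so the call would need adjusting there.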

Edit aggregator.yaml to set our storage class and the name of our keys file:

spec:

# storageClassName: standard #GCP

# storageClassName: gp2 #AWS EKS

storageClassName: default #Azure AKS

# also, since I didn't save my creds as "credentials.json", I changed the name to match my JSON file

env:

- name: GOOGLE_APPLICATION_CREDENTIALS

value: /google-cloud-credentials/mykeys.json

Now apply

$ kubectl apply -f aggregator.yaml

serviceaccount/stackdriver-log-aggregator created

service/stackdriver-log-aggregator-in-forward created

networkpolicy.networking.k8s.io/stackdriver-log-aggregator-in-forward created

networkpolicy.networking.k8s.io/stackdriver-log-aggregator-prometheus-scrape created

statefulset.apps/stackdriver-log-aggregator created

configmap/stackdriver-log-aggregator-input-config created

configmap/stackdriver-log-aggregator-output-config created

$ kubectl apply -f forwarder.yaml

serviceaccount/stackdriver-log-forwarder created

clusterrole.rbac.authorization.k8s.io/stackdriver-user:stackdriver-log-forwarder created

clusterrolebinding.rbac.authorization.k8s.io/stackdriver-user:stackdriver-log-forwarder created

daemonset.apps/stackdriver-log-forwarder created

configmap/stackdriver-log-forwarder-config created

When done, we should see the aggregator running

$ kubectl get pods -n kube-system | grep stackdriver-log

stackdriver-log-aggregator-0 1/1 Running 0 5m16s

stackdriver-log-aggregator-1 1/1 Running 0 3m55s

stackdriver-log-forwarder-rzfvq 1/1 Running 0 5m9s

stackdriver-log-forwarder-w7ngx 1/1 Running 0 5m9s

$ kubectl logs stackdriver-log-aggregator-0 -n kube-system | tail -n10

2022-01-30 19:14:48 +0000 [info]: #5 fluentd worker is now running worker=5

2022-01-30 19:14:48 +0000 [info]: #9 fluentd worker is now running worker=9

2022-01-30 19:14:48 +0000 [info]: #7 listening port port=8989 bind="0.0.0.0"

2022-01-30 19:14:48 +0000 [info]: #7 fluentd worker is now running worker=7

2022-01-30 19:14:54 +0000 [info]: #8 [google_cloud] Successfully sent gRPC to Stackdriver Logging API.

2022-01-30 19:14:54 +0000 [info]: #7 [google_cloud] Successfully sent gRPC to Stackdriver Logging API.

2022-01-30 19:14:56 +0000 [info]: #4 [google_cloud] Successfully sent gRPC to Stackdriver Logging API.

2022-01-30 19:14:57 +0000 [info]: #3 [google_cloud] Successfully sent gRPC to Stackdriver Logging API.

2022-01-30 19:14:57 +0000 [info]: #5 [google_cloud] Successfully sent gRPC to Stackdriver Logging API.

2022-01-30 19:14:58 +0000 [info]: #0 [google_cloud] Successfully sent gRPC to Stackdriver Logging API.

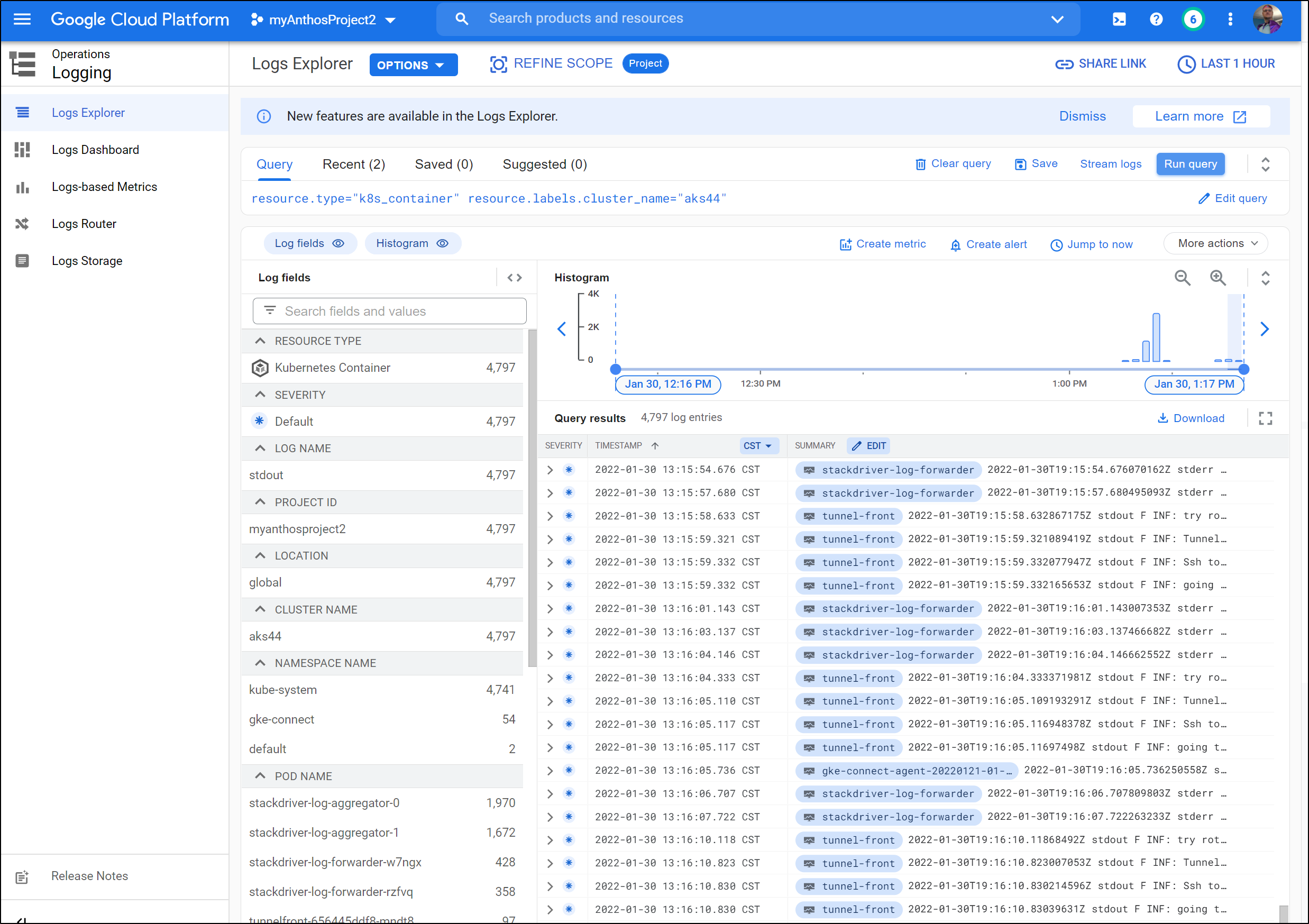

We can now see our logs in Log Explorer with resource.type="k8s_container" resource.labels.cluster_name="aks44"

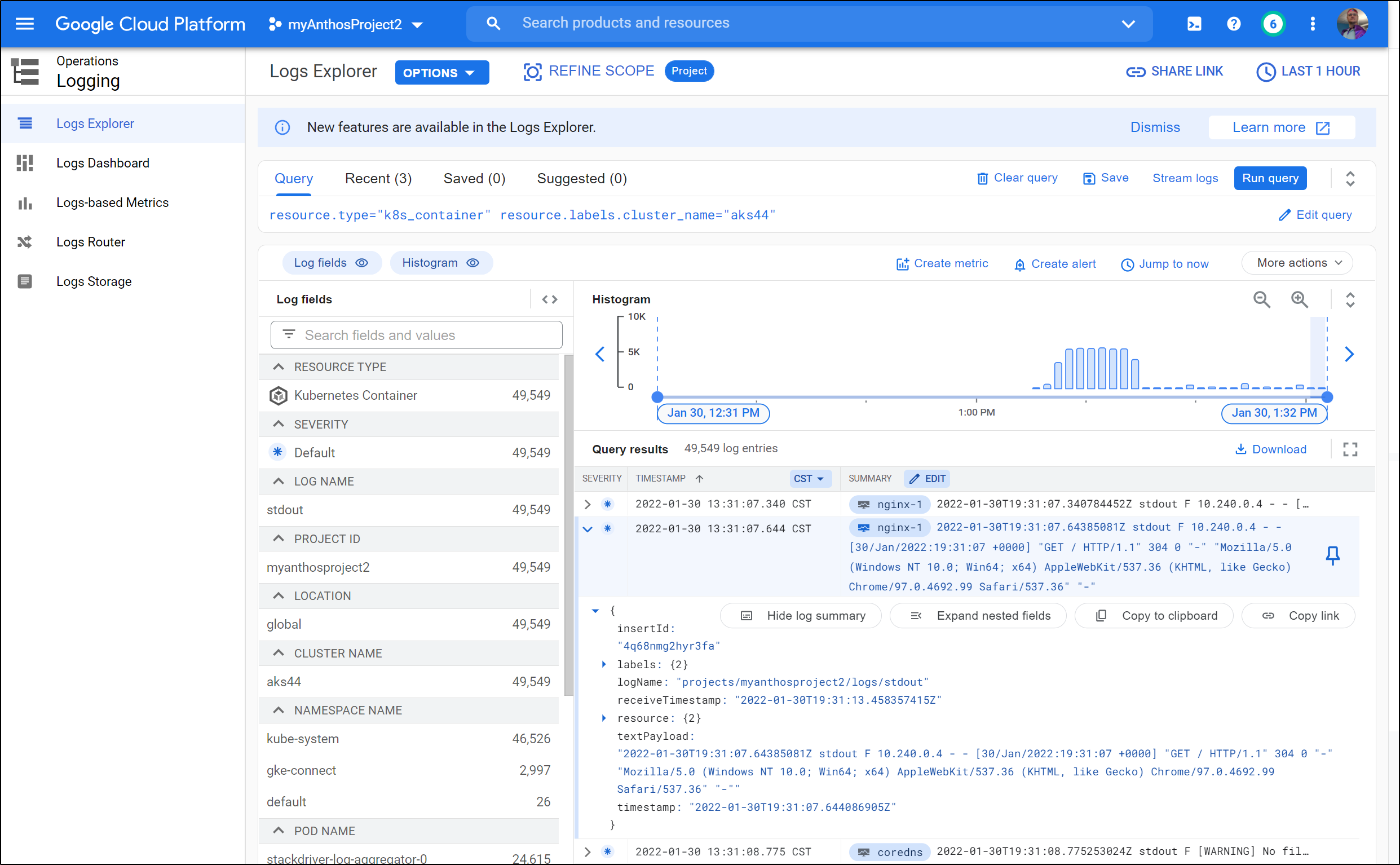

And after hitting the Nginx service, we can see results in the Logs

Monitoring

We tackled logging, now let’s capture metrics from our Anthos connected cluster.

We will use that same anthos-samples repo to install a metrics collector (Prometheus).

Like before, we need to replace the keywords in the YAML file unique to our project and cluster.

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/logging$ cd ../monitoring/

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/monitoring$ vi prometheus.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/monitoring$ sed -i 's/\[CLUSTER_LOCATION\]/global/g' prometheus.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/monitoring$ sed -i 's/\[CLUSTER_NAME\]/aks44/g' prometheus.yaml

builder@DESKTOP-72D2D9T:~/Workspaces/anthos-samples/attached-logging-monitoring/monitoring$ sed -i 's/\[PROJECT_ID\]/myanthosproject2/g' prometheus.yaml

# set Azure storage class

$ sed -i 's/# storageClassName: default/storageClassName: default/g' prometheus.yaml

# like before change the name of our keys file

$ find . -type f -exec sed -i 's/google-cloud-credentials\/credentials.json/google-cloud-credentials\/mykeys.json/g' {} \;

Now we can apply the files

$ kubectl apply -f server-configmap.yaml

configmap/stackdriver-prometheus-sidecar-config

$ kubectl apply -f sidecar-configmap.yaml

$ kubectl apply -f prometheus.yaml

Get some logs to see if it is set up correctly:

$ kubectl logs stackdriver-prometheus-k8s-0 -n kube-system stackdriver-prometheus-sidecar

level=info ts=2022-01-30T19:26:03.165Z caller=main.go:293 msg="Starting Stackdriver Prometheus sidecar" version="(version=0.8.0, branch=master, revision=6540ca8d032a413513940f329ca2aaf14c267a53)"

level=info ts=2022-01-30T19:26:03.165Z caller=main.go:294 build_context="(go=go1.12, user=kbuilder@kokoro-gcp-ubuntu-prod-724580205, date=20200731-04:21:42)"

level=info ts=2022-01-30T19:26:03.165Z caller=main.go:295 host_details="(Linux 5.4.0-1067-azure #70~18.04.1-Ubuntu SMP Thu Jan 13 19:46:01 UTC 2022 x86_64 stackdriver-prometheus-k8s-0 (none))"

level=info ts=2022-01-30T19:26:03.165Z caller=main.go:296 fd_limits="(soft=1048576, hard=1048576)"

level=info ts=2022-01-30T19:26:03.169Z caller=main.go:598 msg="Web server started"

level=info ts=2022-01-30T19:26:03.170Z caller=main.go:579 msg="Stackdriver client started"

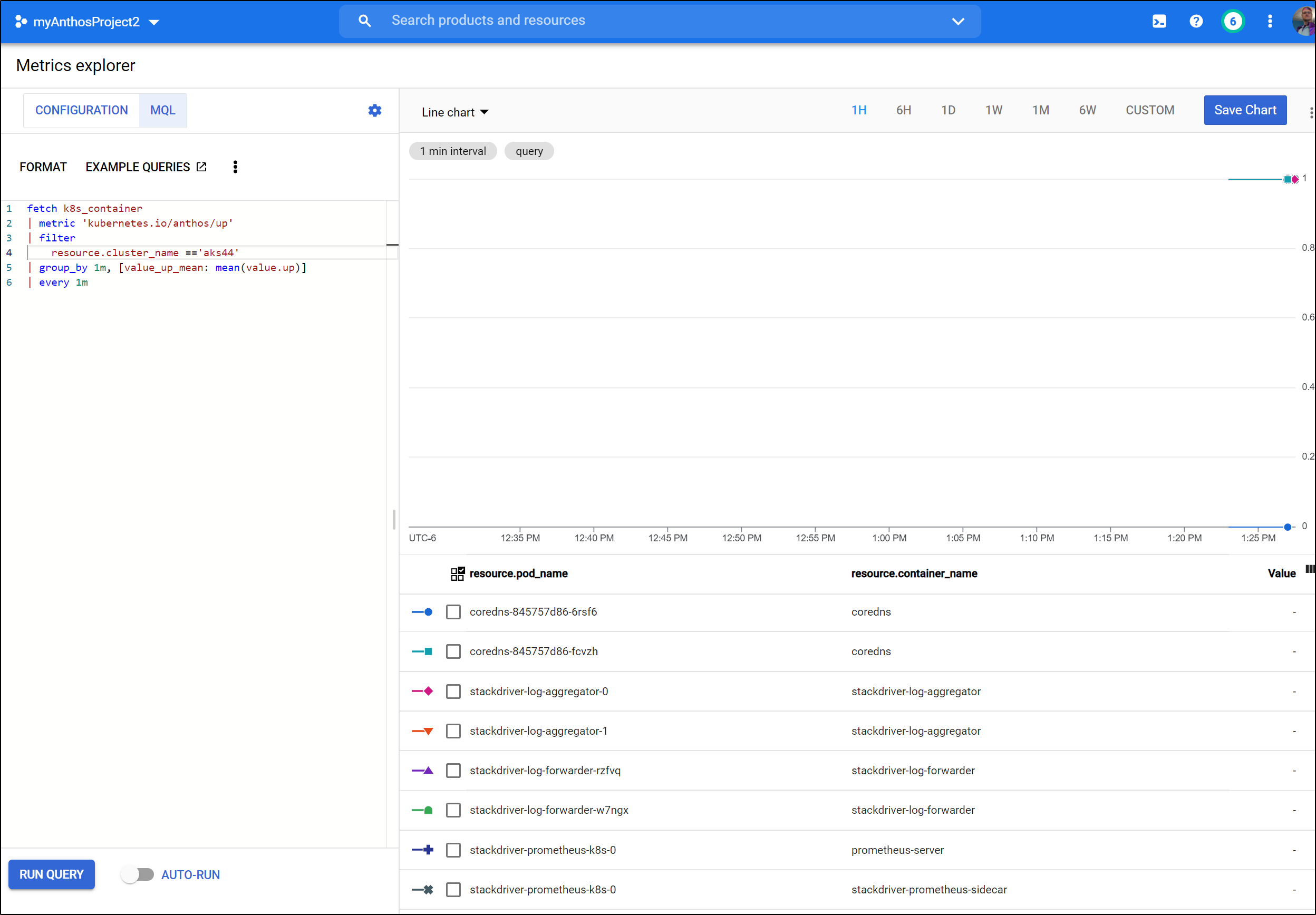

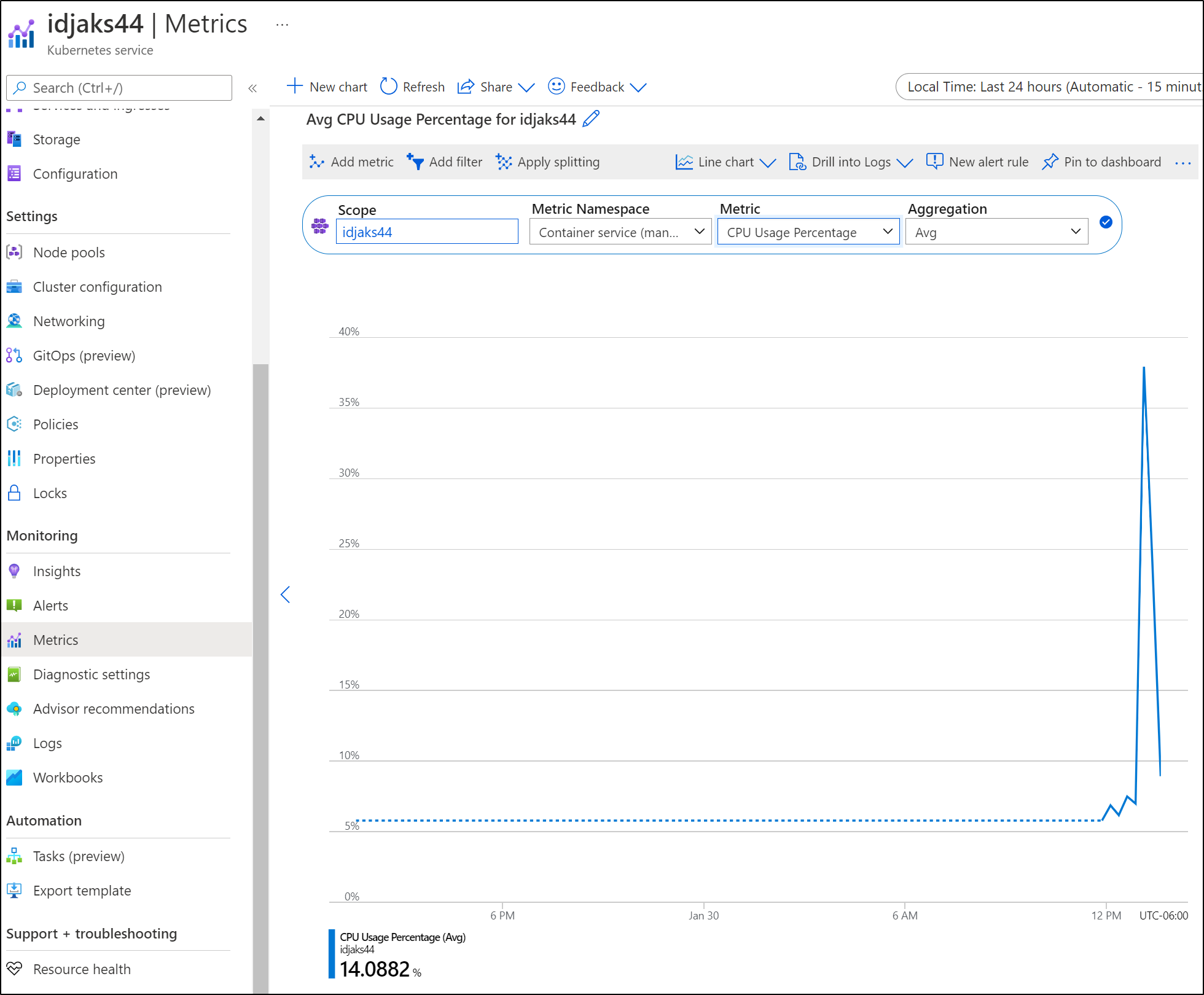

Now we can see results under Metrics Explorer

fetch k8s_container

| metric 'kubernetes.io/anthos/up'

| filter

resource.project_id == 'myanthosproject2'

&& (resource.cluster_name =='aks44')

| group_by 1m, [value_up_mean: mean(value.up)]

| every 1m

Azure

We can still see most of the same information in Azure, such as workloads

Ingress, including external LBs

and if we enable it, Logs and Metrics

Some metrics, however, we get OOTB with Azure, such as basic Cluster Health ones:

Cleanup

To stop incurring costs, we’ll delete the Google project in the Resource Manager: https://console.cloud.google.com/cloud-resource-manager

We can delete the AKS cluster and RG from Azure to remove all the Azure things we made:

$ az aks list -o table

Name Location ResourceGroup KubernetesVersion ProvisioningState Fqdn

-------- ---------- --------------- ------------------- ------------------- -----------------------------------------------------------

idjaks44 centralus idjaks44rg 1.21.7 Succeeded idjaks44-idjaks44rg-d955c0-31fe89bd.hcp.centralus.azmk8s.io

$ az aks delete -n idjaks44 -g idjaks44rg

Are you sure you want to perform this operation? (y/n): y

- Running ..

Later, I realized I kept getting rate limiting notices on my on-prem cluster (Waited for ... due to client-side throttling, not priority and fairness ... gatekeeper). This was because my on-prem cluster still had OPA Gatekeeper installed. If you do not want this, you can uninstall it with:

$ kubectl delete -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.5/deploy/gatekeeper.yaml

The rate limiting was also likely left behind by an admission controller. Basically, you need to disable it via the kube-apiserver flags (however, in K3s, I do not have this):

kube-apiserver --disable-admission-plugins=PodNodeSelector,AlwaysDeny ...

I found more things left around as well, such as RepoSync and RootSync objects.

$ kubectl get rootsyncs root-sync -n config-management-system -o yaml | head -n50

apiVersion: configsync.gke.io/v1beta1

kind: RootSync

metadata:

annotations:

configmanagement.gke.io/managed-by-hub: "true"

configmanagement.gke.io/update-time: "1643237183"

creationTimestamp: "2022-01-26T22:46:25Z"

generation: 1

name: root-sync

namespace: config-management-system

resourceVersion: "181343772"

uid: ba21d9c3-41d5-482f-88e5-2b8a50779314

spec:

git:

...

I removed the whole namespace:

$ kubectl delete ns config-management-system

namespace "config-management-system" deleted

Also there were PSPs left:

$ kubectl get podsecuritypolicy

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

NAME PRIV CAPS SELINUX RUNASUSER FSGROUP SUPGROUP READONLYROOTFS VOLUMES

gkeconnect-psp false RunAsAny MustRunAsNonRoot MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

acm-psp false RunAsAny MustRunAsNonRoot MustRunAs MustRunAs false configMap,downwardAPI,emptyDir,persistentVolumeClaim,projected,secret

$ kubectl delete podsecuritypolicy gkeconnect-psp

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy "gkeconnect-psp" deleted

$ kubectl delete podsecuritypolicy acm-psp

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy "acm-psp" deleted

I’m sure I will continue to find more GKE things left in my on-prem cluster.

Summary

We set up Anthos with a sample deployment and explored several of its features. These included the Service Mesh (based on Istio) and configuring GKE clusters as created in Anthos. We examined Backup Policies and touched on the “Migrate to Containers” flow. We onboarded an on-prem cluster and set up Policy Management (then showed how to remove it). Lastly, we touched on pricing.

We also did it all again and onboarded an Azure Kubernetes Service cluster to see how it compared. We were able to install an Nginx deployment through GCP and expose its service externally. While it took a different git repo and a bunch of manual steps, we were able to set up Logging and Metrics collection to GCP from our AKS cluster.

Anthos is most similar to Azure Arc. In looking at Policy Management, for instance, both fundamentally create gatekeepers that implement Open Policy Agent (OPA) standards.

Unlike Arc, Anthos includes Cloud Run for serverless and unlike Anthos, Arc includes GitOps for git based CD to Kubernetes. Anthos Config Management may also apply configurations, but this was not evident in the system (would need to test further).

A lot of features of “Anthos” are just features of GKE exposed in another menu. Many documentation steps of how to enable things did not line up to the command line and I generally found the process quirky and unpolished.

I will own that I personally find Google’s IAM system very cumbersome and unwieldy. Unlike AWS IAM, where we can just set a nice fat JSON policy and apply it, or impersonate an elevated role, in GCP we have this mix of identities tied to services that need specific APIs enabled, and that at times are applied in different places. All of this is often hidden in the UI and requires many runs of the gcloud CLI tool. Compare that to Azure, where AAD identity management is quite clear with roles and identities and, on rare occasions (e.g. AKV), access policies.

Anthos can be enabled with just an API enablement on a given project (we used a quickstart):

The trial covers you up to US$800/30d, and you will be billed for anything beyond that. Be aware that if you “go nuts”, you could get a big fat bill. I enabled it and it cost me just under US$8, so it was not free (to me).

I did like the Policy Management via Anthos/GKE. I thought that offered some good stuff. My cluster is ‘delicate’ so it was not perfect. I wish I could set the policy agent to only monitor specific namespaces (compared to all namespaces with exclusions). I also wish I could configure it after the fact. That seems to be a missed opportunity.

The ability to create SLOs with Alerts is handy and not something I’ve seen elsewhere. In my industry (SRE), tracking SLIs to ensure we achieve SLOs is a big deal. Being able to expose that and alert it early is a very handy feature. That alone would get me to add my non-GKE clusters to Anthos.

The costs, however, spook me. I’m cost averse, and when one moment I see 7 cents and the next morning it’s 7 bucks, I pull the plug. It’s fine to charge money - this I am good with - but I do not like hockey stick billing graphs and so understanding (and planning for) the costs would be key, at least for me, to Anthos adoption.

More Links:

- Anthos Technical Overview: https://cloud.google.com/anthos/docs/concepts/overview#try

- Explore Anthos (Sample App): https://cloud.google.com/anthos/docs/tutorials/explore-anthos

- GCP Quick start: Anthos Sample Deployment