Published: Oct 27, 2021 by Isaac Johnson

I’m a sucker for swag. I’ll admit I have a vast collection of tees from various conferences, and trade show halls full of silly branded gadgets are something I’ve really missed over the last couple of years of quarantine.

So when a sales rep from Lightstep offered me a tee or a gift card for listening to a pitch, I was game. I worked through their product, as we’ll see below, and had some nice email exchanges with their technical staff as I tried to get it to work with Dapr.

History

Lightstep was founded in 2015 by Benjamin Cronin (Operations), Ben Sigelman (CEO) and Daniel Spoonhower (“Spoons”: Chief Architect). The latter two came out of Google. Benjamin Cronin had been at a few startups previously, including the iOS social app Matter. Lightstep is a relatively small Bay Area startup with about 100-250 employees. [source: Crunchbase, Pagan Research]

In a blog post announcing Lightstep in 2017, Ben Sigelman talked about analyzing Lyft data and how Lightstep grew out of Dapper, Google’s distributed tracing tool, which came out of their research department in 2010.

Financials

After five funding rounds, the last a $41m Series C in December 2018, they had brought in a total of $70 million. Then in 2021 they were acquired by ServiceNow (May-Aug 2021), a deal finalized just a few months ago. Prior to the acquisition they were valued at around $100-500m. As of 2019 they were tracking $5-10m in revenue a year.

I want to add that when asked for comment, Ben Sigelman (CEO) informed me that while he couldn’t get into specifics, these numbers are well below the actuals. I had gotten them from public summary data in Crunchbase and Pagan Research so I’ll believe Mr. Sigelman on this. I had mentioned them mostly to give some relative size (as this is not a financial blog).

Setup

First, let’s sign up for a developer account:

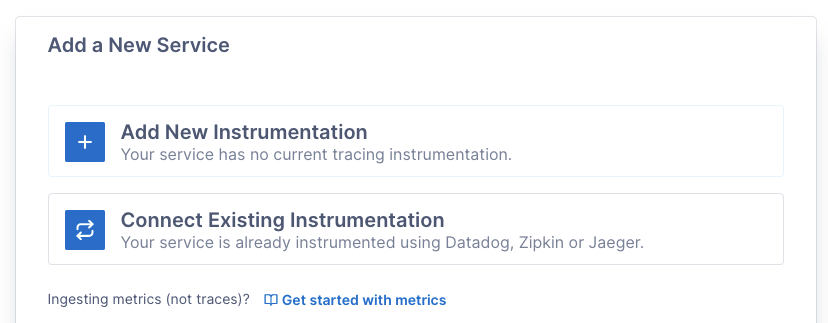

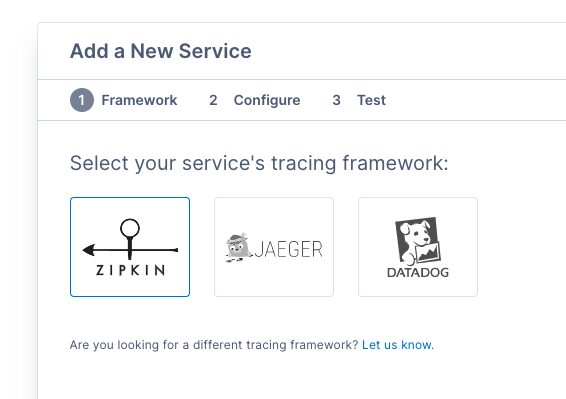

Next, we can set up a connection with the wizard:

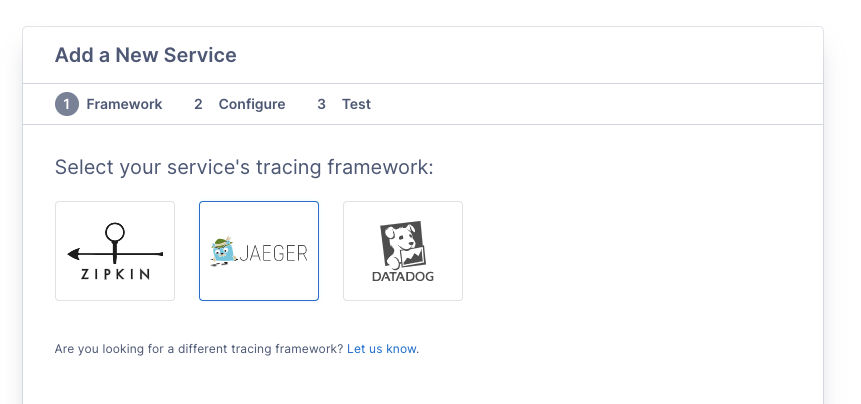

We have OTel now, so we can use either the Zipkin or the Jaeger backend for that. We’ll add Jaeger.

Make sure to expose only the ports you use in your deployment scenario!

docker run --rm \
  -p6831:6831/udp \
  -p6832:6832/udp \
  -p5778:5778/tcp \
  -p5775:5775/udp \
  jaegertracing/jaeger-agent:1.21 \
  --reporter.grpc.host-port=
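The command above publishes every port the Jaeger agent listens on. As a sketch of exposing only what you actually use, here is a hypothetical JavaScript helper that assembles the same docker arguments from a chosen subset. The port descriptions follow the Jaeger agent documentation as I understand it, and the collector address is left as a placeholder since it isn't shown above.

```javascript
// Hypothetical helper: build `docker run` arguments for the Jaeger agent,
// publishing only the ports your tracing clients actually use.
const JAEGER_AGENT_PORTS = {
  compactThrift: "6831:6831/udp", // jaeger.thrift over the compact Thrift protocol
  binaryThrift: "6832:6832/udp",  // jaeger.thrift over the binary Thrift protocol
  configs: "5778:5778/tcp",       // serves sampling configuration to clients
  zipkinCompact: "5775:5775/udp", // legacy zipkin.thrift over compact Thrift (deprecated)
};

function buildJaegerAgentArgs(wanted, collectorHostPort) {
  const args = ["run", "--rm"];
  for (const name of wanted) {
    const mapping = JAEGER_AGENT_PORTS[name];
    if (!mapping) throw new Error(`unknown port name: ${name}`);
    args.push(`-p${mapping}`);
  }
  args.push("jaegertracing/jaeger-agent:1.21");
  args.push(`--reporter.grpc.host-port=${collectorHostPort}`);
  return args;
}

// Usage: only the compact Thrift port, collector address still to be filled in.
const args = buildJaegerAgentArgs(["compactThrift"], "<collector-host:port>");
console.log(["docker", ...args].join(" "));
```

Keeping the port table in one place makes it obvious which listeners you are opening, and an unknown name fails loudly instead of silently publishing nothing.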

Getting started: Fresh code

Let’s use the Dapr React form quickstart to test Lightstep.

Install and switch to Node 10 with nvm:

$ nvm install 10.24.1

Downloading and installing node v10.24.1...

Downloading https://nodejs.org/dist/v10.24.1/node-v10.24.1-darwin-x64.tar.xz...

################################################################################################################################################################################################################################## 100.0%

Computing checksum with shasum -a 256

Checksums matched!

Now using node v10.24.1 (npm v6.14.12)

$ nvm use 10.24.1

Now using node v10.24.1 (npm v6.14.12)

Since I had just installed nvm with Homebrew (brew install nvm), I also needed to set some bash variables:

$ export NVM_DIR="$HOME/.nvm"

$ [ -s "/usr/local/opt/nvm/nvm.sh" ] && . "/usr/local/opt/nvm/nvm.sh"

$ [ -s "/usr/local/opt/nvm/etc/bash_completion.d/nvm" ] && . "/usr/local/opt/nvm/etc/bash_completion.d/nvm"

Install the current dependencies:

$ npm install

npm WARN read-shrinkwrap This version of npm is compatible with lockfileVersion@1, but package-lock.json was generated for lockfileVersion@2. I'll try to do my best with it!

updated 311 packages and audited 311 packages in 7.616s

8 packages are looking for funding

run `npm fund` for details

found 3 moderate severity vulnerabilities

run `npm audit fix` to fix them, or `npm audit` for details

$ npm run build

> react-form@1.0.0 build /Users/johnisa/Workspaces/quickstarts/pub-sub/react-form

> npm run buildclient && npm install

> react-form@1.0.0 buildclient /Users/johnisa/Workspaces/quickstarts/pub-sub/react-form

> cd client && npm install && npm run build

npm WARN read-shrinkwrap This version of npm is compatible with lockfileVersion@1, but package-lock.json was generated for lockfileVersion@2. I'll try to do my best with it!

> fsevents@1.2.9 install /Users/johnisa/Workspaces/quickstarts/pub-sub/react-form/client/node_modules/fsevents

> node install

node-pre-gyp WARN Using request for node-pre-gyp https download

[fsevents] Success: "/Users/johnisa/Workspaces/quickstarts/pub-sub/react-form/client/node_modules/fsevents/lib/binding/Release/node-v64-darwin-x64/fse.node" is installed via remote

> core-js@2.6.9 postinstall /Users/johnisa/Workspaces/quickstarts/pub-sub/react-form/client/node_modules/babel-runtime/node_modules/core-js

> node scripts/postinstall || echo "ignore"

Thank you for using core-js ( https://github.com/zloirock/core-js ) for polyfilling JavaScript standard library!

The project needs your help! Please consider supporting of core-js on Open Collective or Patreon:

> https://opencollective.com/core-js

> https://www.patreon.com/zloirock

Also, the author of core-js ( https://github.com/zloirock ) is looking for a good job -)

> core-js@3.1.4 postinstall /Users/johnisa/Workspaces/quickstarts/pub-sub/react-form/client/node_modules/core-js

> node scripts/postinstall || echo "ignore"

Thank you for using core-js ( https://github.com/zloirock/core-js ) for polyfilling JavaScript standard library!

The project needs your help! Please consider supporting of core-js on Open Collective or Patreon:

> https://opencollective.com/core-js

> https://www.patreon.com/zloirock

Also, the author of core-js ( https://github.com/zloirock ) is looking for a good job -)

added 68 packages from 33 contributors, updated 1385 packages and audited 1453 packages in 28.862s

found 387 vulnerabilities (150 moderate, 234 high, 3 critical)

run `npm audit fix` to fix them, or `npm audit` for details

> client@0.1.0 build /Users/johnisa/Workspaces/quickstarts/pub-sub/react-form/client

> react-scripts build

Creating an optimized production build...

Browserslist: caniuse-lite is outdated. Please run next command `yarn upgrade`

Compiled with warnings.

./src/Nav.js

Line 11: The href attribute requires a valid value to be accessible. Provide a valid, navigable address as the href value. If you cannot provide a valid href, but still need the element to resemble a link, use a button and change it with appropriate styles. Learn more: https://github.com/evcohen/eslint-plugin-jsx-a11y/blob/master/docs/rules/anchor-is-valid.md jsx-a11y/anchor-is-valid

Search for the keywords to learn more about each warning.

To ignore, add // eslint-disable-next-line to the line before.

File sizes after gzip:

38.96 KB build/static/js/2.6c6c5cfd.chunk.js

1.09 KB build/static/js/main.897b49e4.chunk.js

772 B build/static/js/runtime~main.ea92a0de.js

518 B build/static/css/main.45a95b41.chunk.css

The project was built assuming it is hosted at the server root.

You can control this with the homepage field in your package.json.

For example, add this to build it for GitHub Pages:

"homepage" : "http://myname.github.io/myapp",

The build folder is ready to be deployed.

You may serve it with a static server:

yarn global add serve

serve -s build

Find out more about deployment here:

https://bit.ly/CRA-deploy

audited 311 packages in 1.513s

8 packages are looking for funding

run `npm fund` for details

found 3 moderate severity vulnerabilities

run `npm audit fix` to fix them, or `npm audit` for details

Install the Lightstep OpenTelemetry launcher package:

$ npm install lightstep-opentelemetry-launcher-node --save

npm WARN deprecated @types/bson@4.2.0: This is a stub types definition. bson provides its own type definitions, so you do not need this installed.

npm WARN deprecated @types/graphql@14.5.0: This is a stub types definition. graphql provides its own type definitions, so you do not need this installed.

npm WARN deprecated @opentelemetry/node@0.24.0: Package renamed to @opentelemetry/sdk-trace-node

npm WARN deprecated @opentelemetry/metrics@0.24.0: Package renamed to @opentelemetry/sdk-metrics-base

npm WARN deprecated @opentelemetry/tracing@0.24.0: Package renamed to @opentelemetry/sdk-trace-base

added 129 packages, and audited 312 packages in 8s

8 packages are looking for funding

run `npm fund` for details

7 moderate severity vulnerabilities

To address issues that do not require attention, run:

npm audit fix

To address all issues (including breaking changes), run:

npm audit fix --force

Run `npm audit` for details.

We will wrap the invocation in index.js with Lightstep:

$ git diff react-form/client/src/index.js

diff --git a/pub-sub/react-form/client/src/index.js b/pub-sub/react-form/client/src/index.js
index 62059bd..fe3e4fe 100644
--- a/pub-sub/react-form/client/src/index.js
+++ b/pub-sub/react-form/client/src/index.js
@@ -9,9 +9,23 @@ import './index.css';
 import App from './App';
 import * as serviceWorker from './serviceWorker';
-ReactDOM.render(<App />, document.getElementById('root'));
+const { lightstep, opentelemetry } =
+  require("lightstep-opentelemetry-launcher-node");
+
+// Set access token. If you prefer, set the LS_ACCESS_TOKEN environment variable instead, and remove accessToken here.
+const accessToken = 'Tm90IG15IHJlYWwga2V5LCBidXQgZ2xhZCB5b3UgY2hlY2tlZAo=';
-// If you want your app to work offline and load faster, you can change
-// unregister() to register() below. Note this comes with some pitfalls.
-// Learn more about service workers: https://bit.ly/CRA-PWA
-serviceWorker.unregister();
+const sdk = lightstep.configureOpenTelemetry({
+  accessToken,
+  serviceName: 'react-form',
+});
+
+sdk.start().then(() => {
+  ReactDOM.render(<App />, document.getElementById('root'));
+
+  // If you want your app to work offline and load faster, you can change
+  // unregister() to register() below. Note this comes with some pitfalls.
+  // Learn more about service workers: https://bit.ly/CRA-PWA
+  serviceWorker.unregister();
+
+});
\ No newline at end of file

I tried updating the form:

// ------------------------------------------------------------
// Copyright (c) Microsoft Corporation.
// Licensed under the MIT License.
// ------------------------------------------------------------

// import declarations must sit at the top level of the module (they are
// hoisted, and the imported modules are evaluated before the rest of this
// file), so they cannot go inside the sdk.start() callback.
import React from 'react';
import ReactDOM from 'react-dom';
import './index.css';
import App from './App';
import * as serviceWorker from './serviceWorker';

const { lightstep, opentelemetry } =
  require("lightstep-opentelemetry-launcher-node");

// Set access token. If you prefer, set the LS_ACCESS_TOKEN environment variable instead, and remove accessToken here.
const accessToken = 'Tm90IG15IHJlYWwga2V5LCBidXQgZ2xhZCB5b3UgY2hlY2tlZAo=';

const sdk = lightstep.configureOpenTelemetry({
  accessToken,
  serviceName: 'react-form',
});

sdk.start().then(() => {
  ReactDOM.render(<App />, document.getElementById('root'));

  // If you want your app to work offline and load faster, you can change
  // unregister() to register() below. Note this comes with some pitfalls.
  // Learn more about service workers: https://bit.ly/CRA-PWA
  serviceWorker.unregister();
});

But even then, no data showed up in Lightstep.

I’m going to assume this is because the Dapr React app is pretty old (Node 8 based). Despite my efforts, it just wasn’t going to work.

Fresh Express JS

I moved onto just creating a fresh Node 14 Express app.

$ nvm install 14.18.1

Downloading and installing node v14.18.1...

Downloading https://nodejs.org/dist/v14.18.1/node-v14.18.1-darwin-x64.tar.xz...

################################################################################################################################################################################################################################## 100.0%

Computing checksum with shasum -a 256

Checksums matched!

Now using node v14.18.1 (npm v6.14.15)

Do an npm init in the folder:

$ npm init

This utility will walk you through creating a package.json file.

It only covers the most common items, and tries to guess sensible defaults.

See `npm help init` for definitive documentation on these fields

and exactly what they do.

Use `npm install <pkg>` afterwards to install a package and

save it as a dependency in the package.json file.

Press ^C at any time to quit.

package name: (lightstep)

version: (1.0.0)

description: testing lighstep with express

entry point: (index.js)

test command:

git repository:

keywords: express, lightstep

author: Isaac Johnson

license: (ISC) MIT

About to write to /Users/johnisa/Workspaces/lightstep/package.json:

{
  "name": "lightstep",
  "version": "1.0.0",
  "description": "testing lighstep with express",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [
    "express",
    "lightstep"
  ],
  "author": "Isaac Johnson",
  "license": "MIT"
}

Is this OK? (yes) yes

Then install a version of Express that works with Node 14:

$ npm install --save express@4.17.1

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN lightstep@1.0.0 No repository field.

+ express@4.17.1

added 50 packages from 37 contributors and audited 50 packages in 2.076s

found 0 vulnerabilities

Add the OTel launcher for Lightstep:

$ npm install lightstep-opentelemetry-launcher-node --save

npm WARN deprecated @types/graphql@14.5.0: This is a stub types definition. graphql provides its own type definitions, so you do not need this installed.

npm WARN deprecated @opentelemetry/node@0.24.0: Package renamed to @opentelemetry/sdk-trace-node

npm WARN deprecated @types/bson@4.2.0: This is a stub types definition. bson provides its own type definitions, so you do not need this installed.

npm WARN deprecated @opentelemetry/tracing@0.24.0: Package renamed to @opentelemetry/sdk-trace-base

npm WARN deprecated @opentelemetry/metrics@0.24.0: Package renamed to @opentelemetry/sdk-metrics-base

npm WARN lightstep@1.0.0 No repository field.

+ lightstep-opentelemetry-launcher-node@0.24.0

added 130 packages from 212 contributors and audited 180 packages in 12.296s

6 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

I added a “run” script (I probably could have named it better) to package.json, which in the end looked like this:

$ cat package.json

{
  "name": "lightstep",
  "version": "1.0.0",
  "description": "testing lighstep with express",
  "main": "index.js",
  "scripts": {
    "run": "node index.js",
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [
    "express",
    "lightstep"
  ],
  "author": "Isaac Johnson",
  "license": "MIT",
  "dependencies": {
    "express": "^4.17.1",
    "lightstep-opentelemetry-launcher-node": "^0.24.0"
  }
}

And my hello-world Express index.js:

$ cat index.js

const { lightstep, opentelemetry } =
  require("lightstep-opentelemetry-launcher-node");

// Set access token. If you prefer, set the LS_ACCESS_TOKEN environment variable instead, and remove accessToken here.
const accessToken = 'Tm90IG15IHJlYWwga2V5LCBidXQgZ2xhZCB5b3UgY2hlY2tlZAo=';

const sdk = lightstep.configureOpenTelemetry({
  accessToken,
  serviceName: 'react-form',
});

sdk.start().then(() => {
  // Require Express only after the SDK has started, so the OpenTelemetry
  // instrumentation is in place before the module is loaded.
  const express = require('express')
  const app = express()
  const port = 3000

  app.get('/', (req, res) => {
    res.send('Hello World!')
  })

  app.listen(port, () => {
    console.log(`Example app listening at http://localhost:${port}`)
  })
});
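The comment in index.js notes that the launcher can take the token from the LS_ACCESS_TOKEN environment variable instead of a hardcoded string. A minimal sketch of building the configureOpenTelemetry options from the environment, so the token stays out of source control; loadLightstepOptions and the "express-hello" service name are hypothetical (index.js above still reports as react-form, left over from the React attempt).

```javascript
// Minimal sketch: build the options passed to lightstep.configureOpenTelemetry()
// from the environment instead of hardcoding the access token in index.js.
// loadLightstepOptions is a hypothetical helper, not part of the launcher package.
function loadLightstepOptions(env, serviceName) {
  const accessToken = env.LS_ACCESS_TOKEN;
  if (!accessToken) {
    // Fail fast instead of discovering later that nothing shows up in Lightstep.
    throw new Error("LS_ACCESS_TOKEN is not set");
  }
  return { accessToken, serviceName };
}

// Usage in index.js (commented out so this sketch runs without the launcher installed):
// const { lightstep } = require("lightstep-opentelemetry-launcher-node");
// const sdk = lightstep.configureOpenTelemetry(
//   loadLightstepOptions(process.env, "express-hello")
// );
// sdk.start().then(() => { /* require('express') and start the app here */ });

const opts = loadLightstepOptions({ LS_ACCESS_TOKEN: "example-token" }, "express-hello");
console.log(opts.serviceName); // express-hello
```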

Then we can run:

$ npm run run

> lightstep@1.0.0 run /Users/johnisa/Workspaces/lightstep

> node index.js

Example app listening at http://localhost:3000

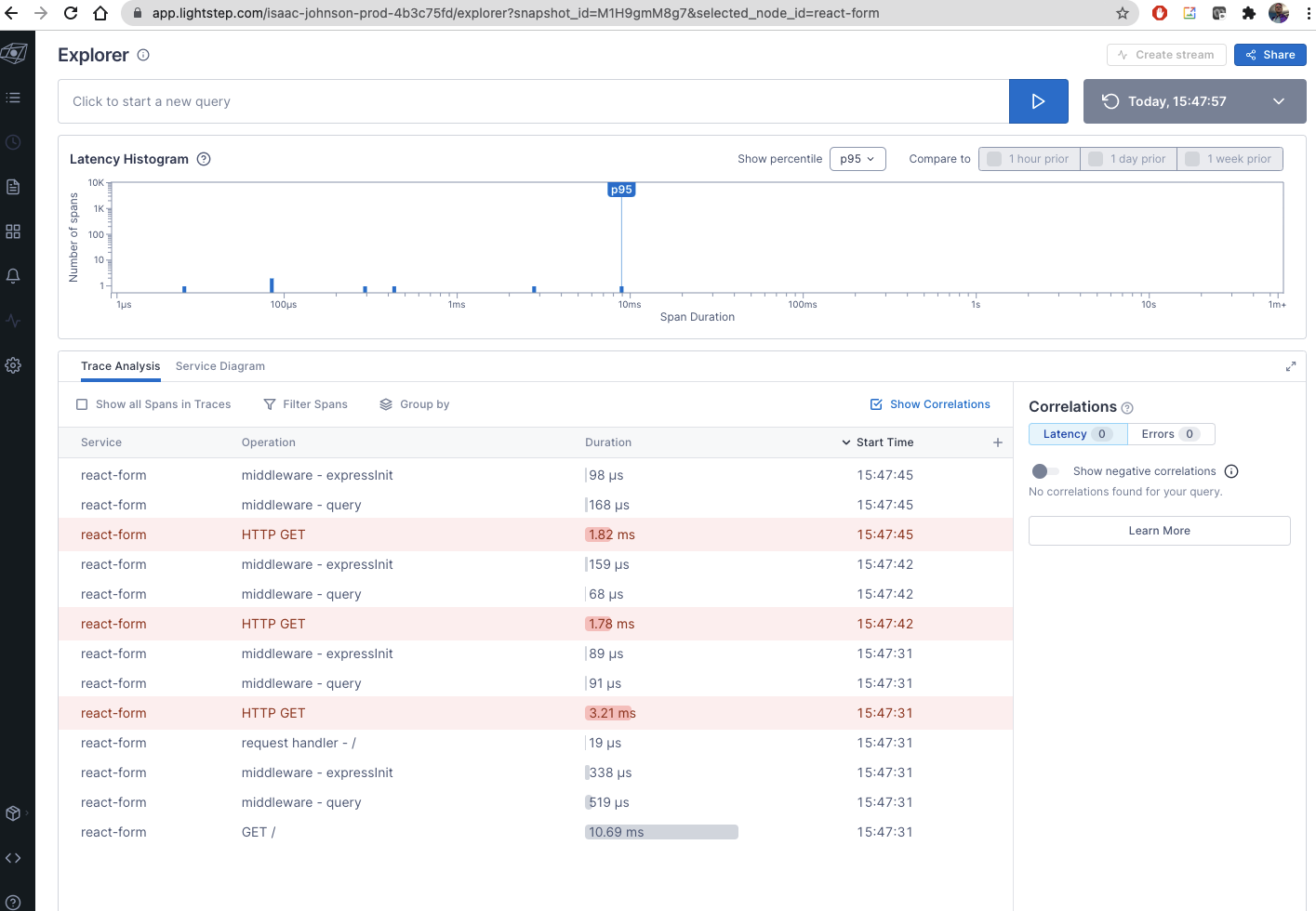

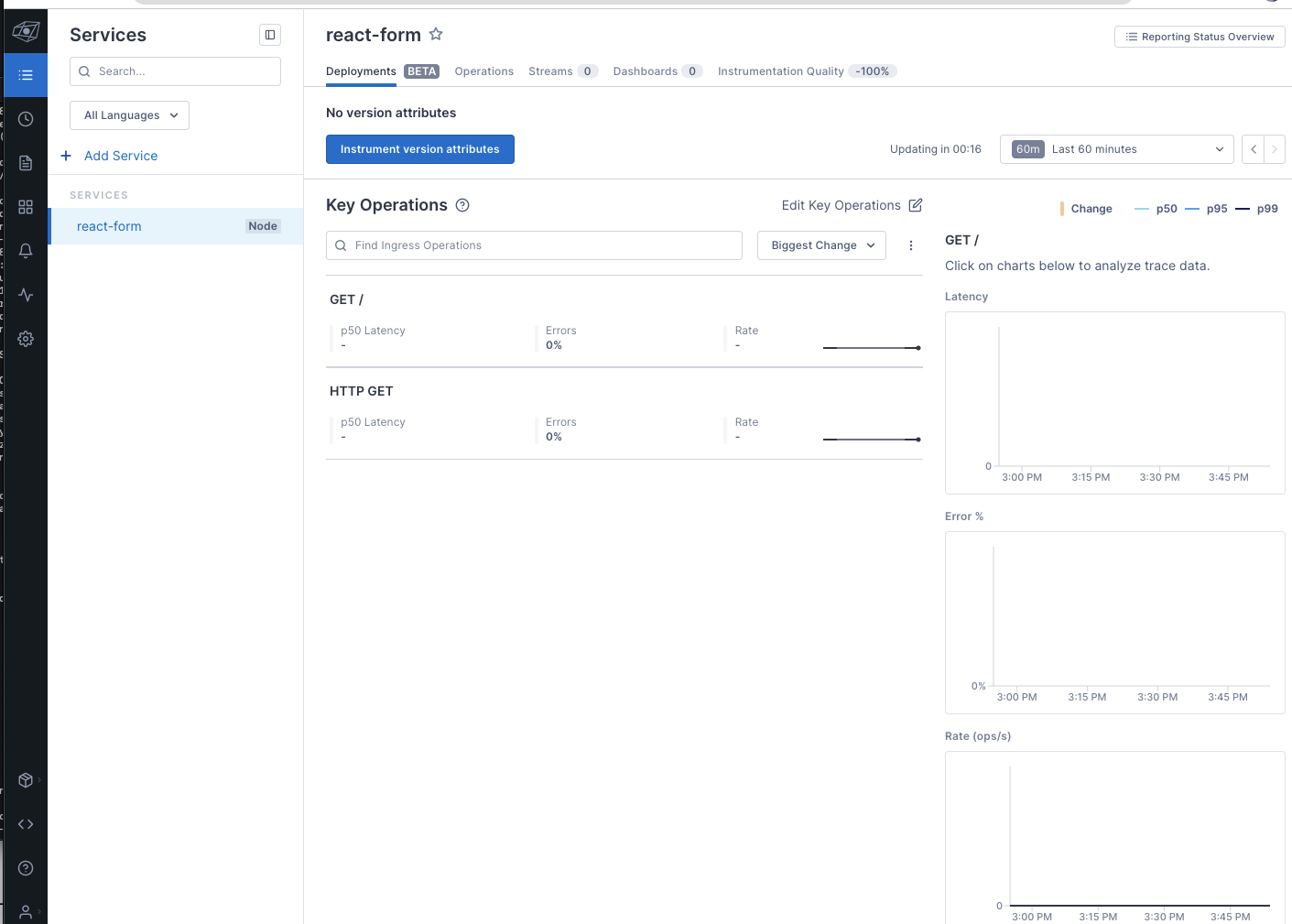

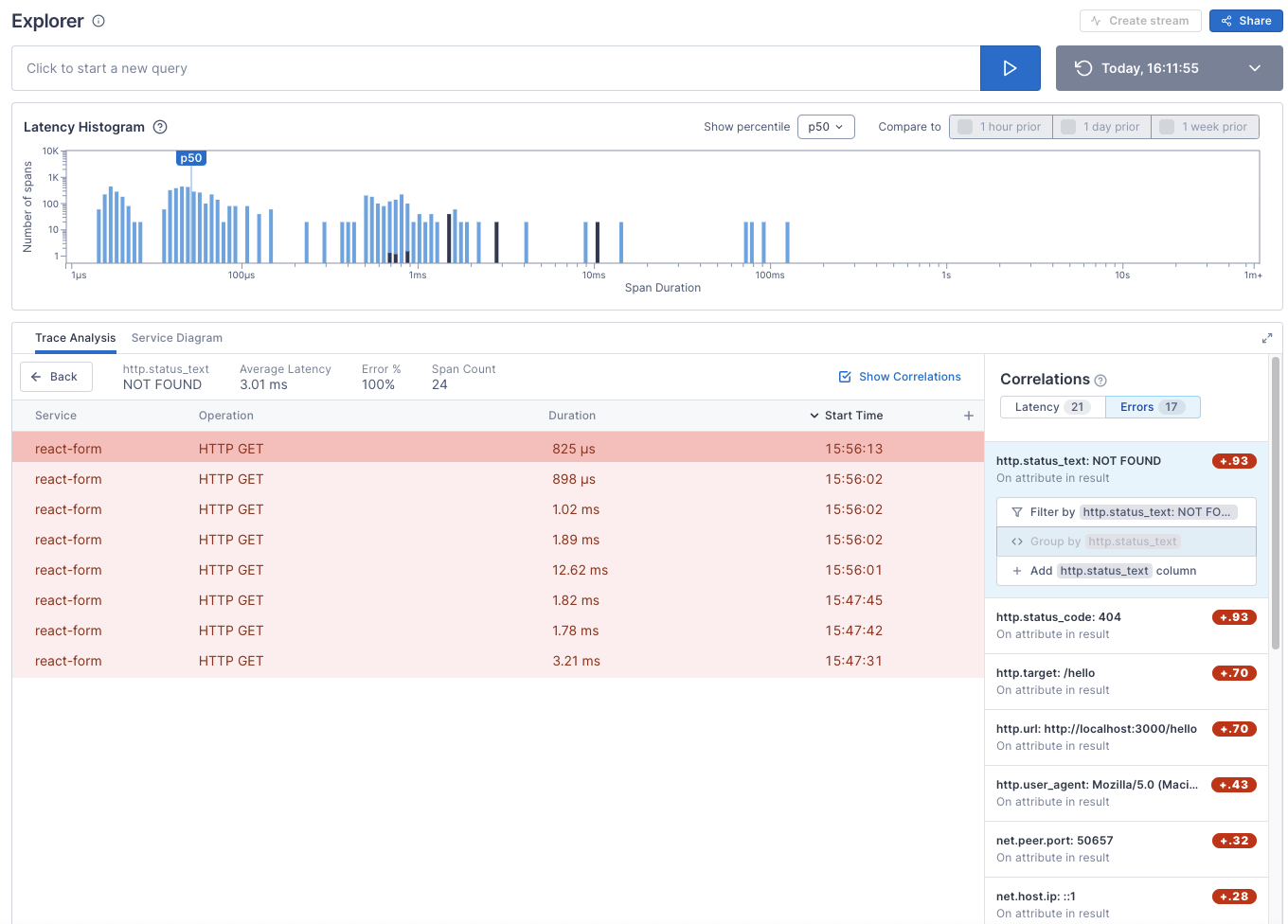

Once I hit the page a few times, I could finally see some data in Lightstep:

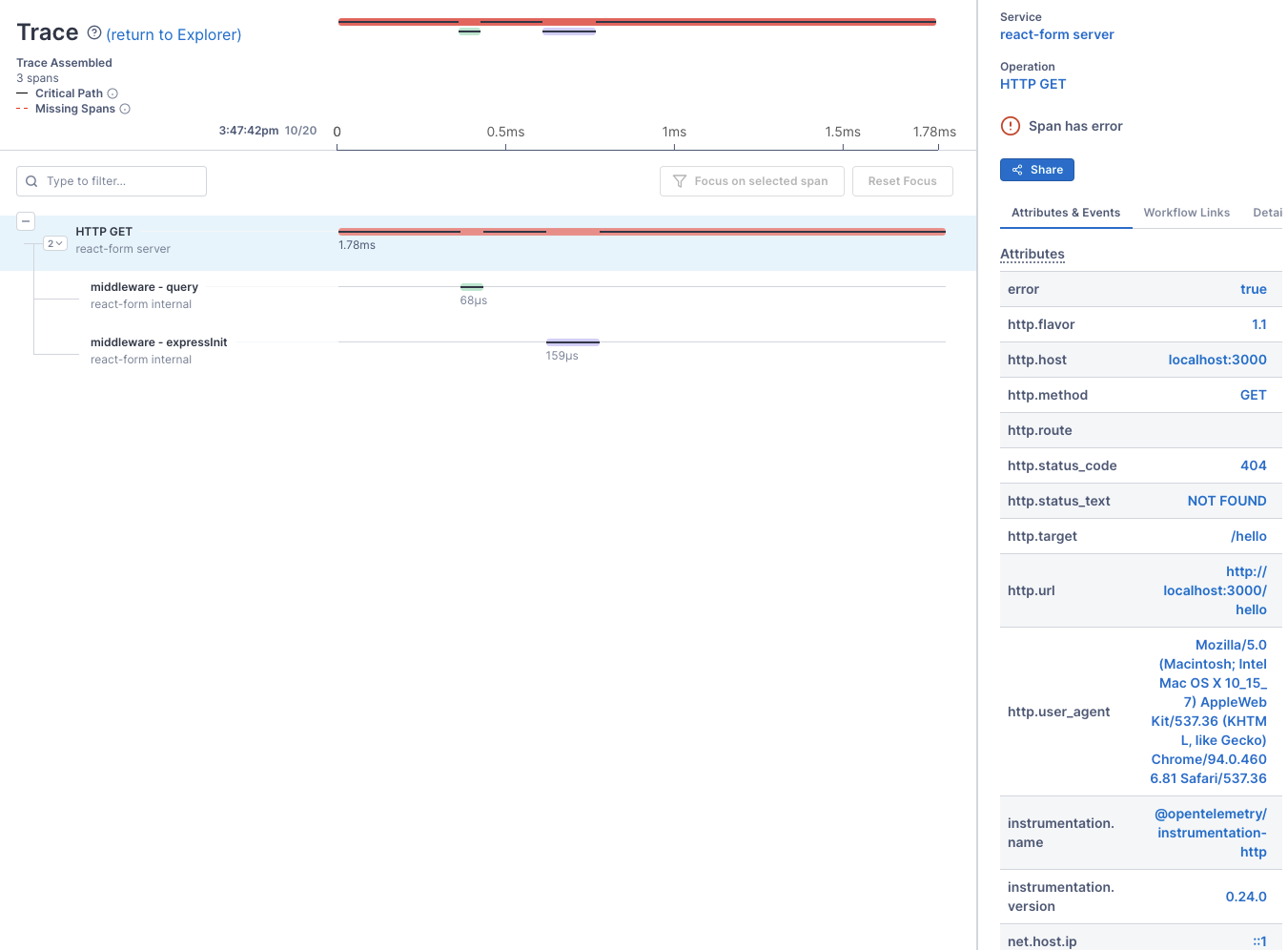

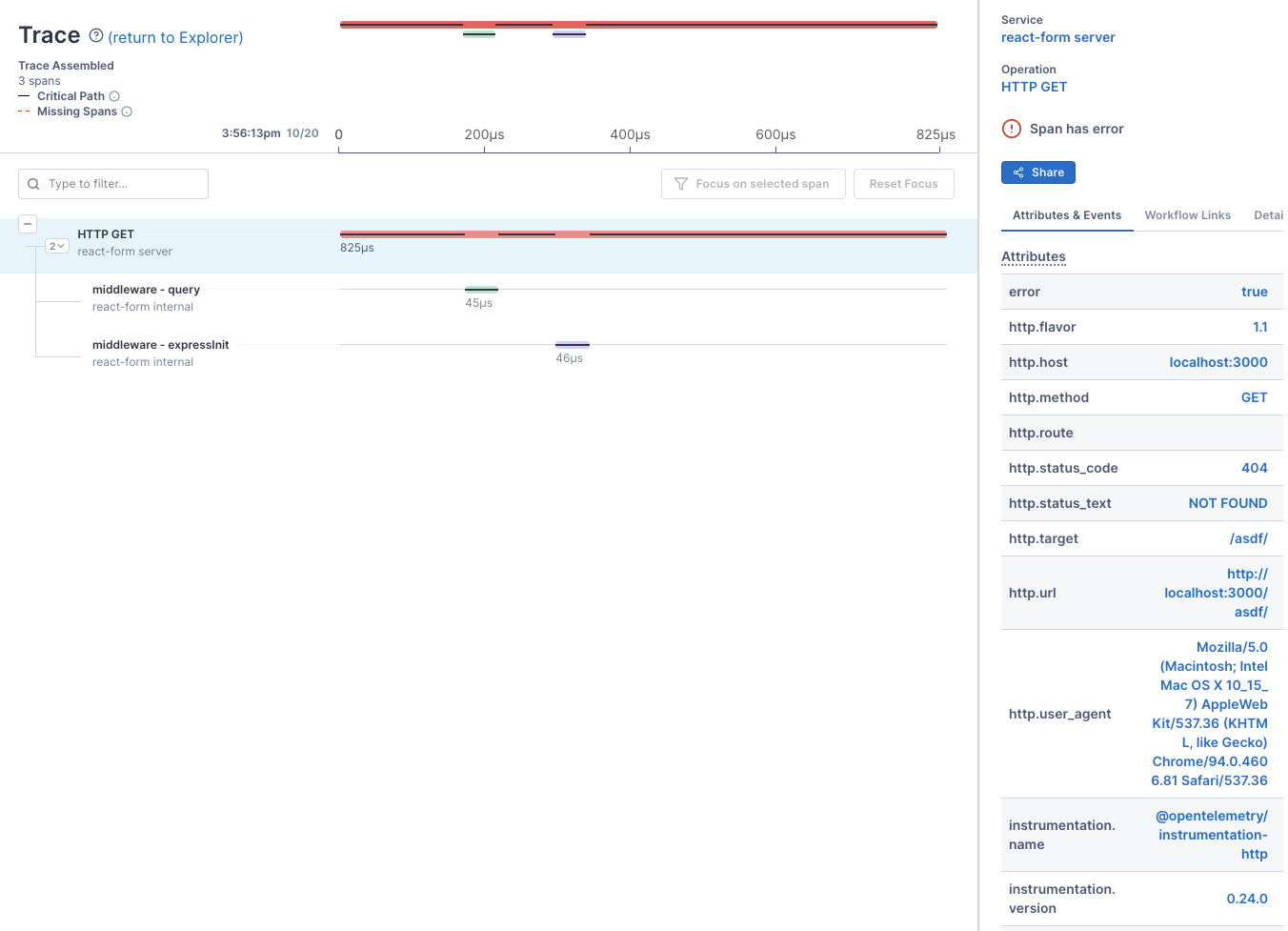
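Hitting the page by hand works, but a tiny script makes it easier to generate a steady trickle of traces, or to hit a missing route like /asdf on purpose. A minimal sketch using only Node's built-in http module; hitEndpoint is a hypothetical helper, not part of Lightstep or Express.

```javascript
const http = require("http");

// Send `times` GET requests to `url`, one after another rather than all at
// once, and resolve with the list of HTTP status codes.
function hitEndpoint(url, times) {
  const getOnce = () =>
    new Promise((resolve, reject) => {
      http
        .get(url, (res) => {
          res.resume(); // discard the body so the socket is freed
          res.on("end", () => resolve(res.statusCode));
        })
        .on("error", reject);
    });

  return (async () => {
    const codes = [];
    for (let i = 0; i < times; i++) {
      codes.push(await getOnce());
    }
    return codes;
  })();
}

// Usage, with `npm run run` active in another terminal:
// hitEndpoint("http://localhost:3000/", 20).then((codes) => console.log(codes));
// hitEndpoint("http://localhost:3000/asdf", 5).then((codes) => console.log(codes));
```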

We can click a specific trace to get details:

If we leave the time window at one hour, we don’t see much:

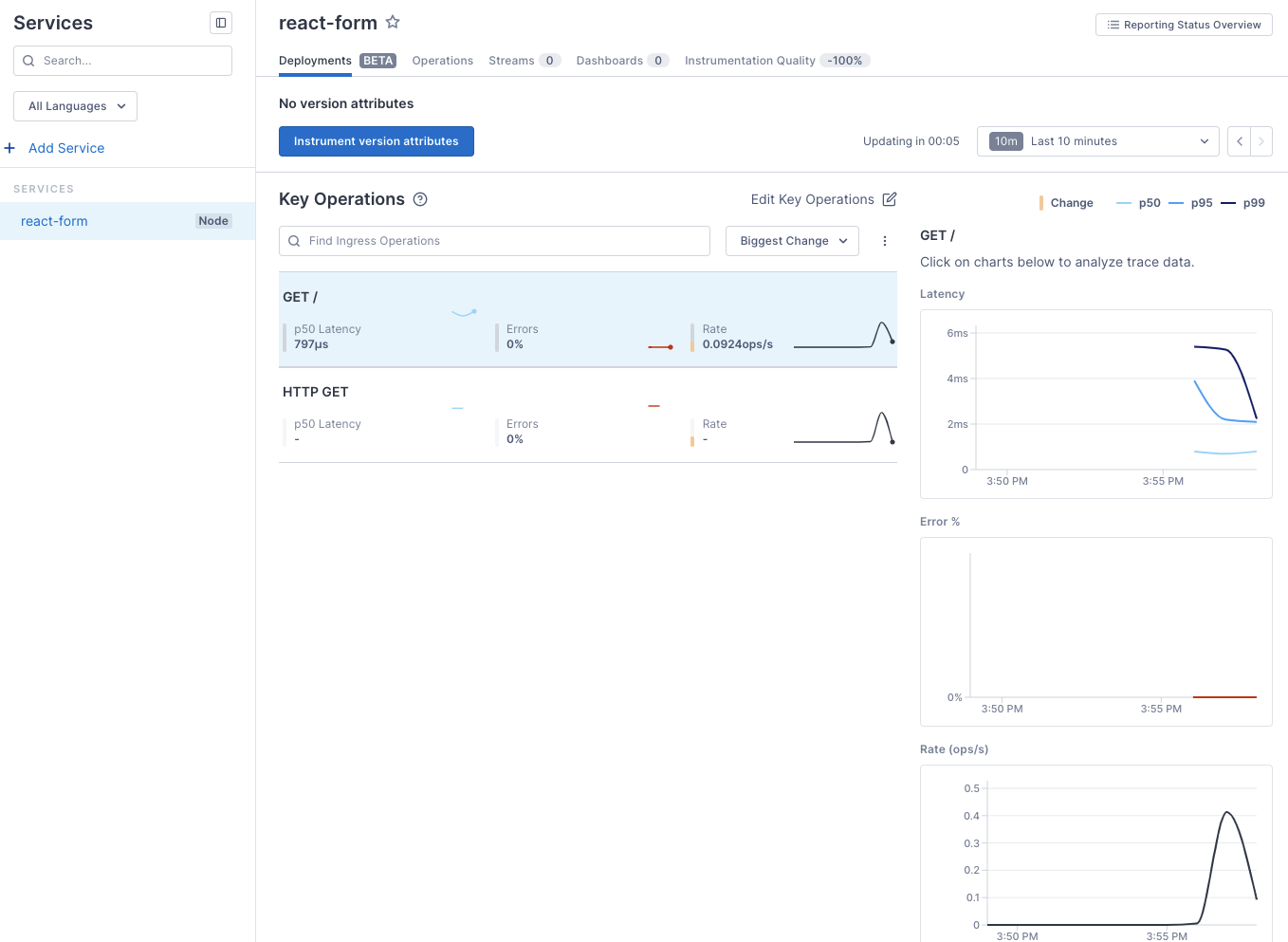

But narrowing the window to 15 minutes and hitting the endpoint a bit more, we see more information.

Next, let’s add a call to a weather API. First, we add the request package:

$ npm install request --save

npm WARN deprecated request@2.88.2: request has been deprecated, see https://github.com/request/request/issues/3142

npm WARN deprecated har-validator@5.1.5: this library is no longer supported

npm WARN deprecated uuid@3.4.0: Please upgrade to version 7 or higher. Older versions may use Math.random() in certain circumstances, which is known to be problematic. See https://v8.dev/blog/math-random for details.

npm WARN lightstep@1.0.0 No repository field.

+ request@2.88.2

added 42 packages from 50 contributors and audited 222 packages in 3.622s

7 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

We need an API key from OpenWeatherMap: https://home.openweathermap.org/api_keys

Update index.js:

const { lightstep, opentelemetry } =
  require("lightstep-opentelemetry-launcher-node");

// Set access token. If you prefer, set the LS_ACCESS_TOKEN environment variable instead, and remove accessToken here.
const accessToken = 'Tm90IG15IHJlYWwga2V5LCBidXQgZ2xhZCB5b3UgY2hlY2tlZAo=';

const sdk = lightstep.configureOpenTelemetry({
  accessToken,
  serviceName: 'react-form',
});

sdk.start().then(() => {
  const express = require('express')
  const request = require('request');
  const app = express()
  const port = 3000

  app.get('/', (req, res) => {
    let apiKey = 'cmFpbiByYWluIGdvIGF3YXkK';
    let city = 'minneapolis';
    let url = `http://api.openweathermap.org/data/2.5/weather?q=${city}&appid=${apiKey}`

    // Fire off the weather call; the response below is sent without waiting for it.
    request(url, function (err, response, body) {
      if (err) {
        console.log('error:', err);
      } else {
        console.log('body:', body);
      }
    });

    res.send('Hello World!')
  })

  app.listen(port, () => {
    console.log(`Example app listening at http://localhost:${port}`)
  })
});
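The weather URL above is built by plain string interpolation, so the city name isn't URL-encoded; that works for minneapolis, but a city with spaces or punctuation may not survive the trip. A sketch that builds the same OpenWeatherMap URL with Node's built-in URL and URLSearchParams; weatherUrl is a hypothetical helper, and the optional units parameter is an OpenWeatherMap query option the code above doesn't use.

```javascript
// Build the OpenWeatherMap current-weather URL with proper query-string encoding.
// `units` is optional: omit it to get Kelvin (the API default), or pass
// "metric" (Celsius) or "imperial" (Fahrenheit).
function weatherUrl(city, apiKey, units) {
  const url = new URL("http://api.openweathermap.org/data/2.5/weather");
  url.searchParams.set("q", city);
  url.searchParams.set("appid", apiKey);
  if (units) url.searchParams.set("units", units);
  return url.toString();
}

console.log(weatherUrl("St. Paul", "YOUR_API_KEY", "metric"));
// http://api.openweathermap.org/data/2.5/weather?q=St.+Paul&appid=YOUR_API_KEY&units=metric
```

Inside the route handler, the template string could then be replaced by weatherUrl(city, apiKey).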

Run for a bit:

$ npm run run

> lightstep@1.0.0 run /Users/johnisa/Workspaces/lightstep

> node index.js

Example app listening at http://localhost:3000

body: {"coord":{"lon":-93.2638,"lat":44.98},"weather":[{"id":804,"main":"Clouds","description":"overcast clouds","icon":"04d"}],"base":"stations","main":{"temp":284.9,"feels_like":284.53,"temp_min":282.77,"temp_max":286.82,"pressure":1010,"humidity":92},"visibility":10000,"wind":{"speed":0.45,"deg":103,"gust":2.68},"clouds":{"all":90},"dt":1634764151,"sys":{"type":2,"id":2008025,"country":"US","sunrise":1634733328,"sunset":1634771986},"timezone":-18000,"id":5037649,"name":"Minneapolis","cod":200}

body: {"coord":{"lon":-93.2638,"lat":44.98},"weather":[{"id":701,"main":"Mist","description":"mist","icon":"50d"}],"base":"stations","main":{"temp":284.89,"feels_like":284.55,"temp_min":282.62,"temp_max":286.82,"pressure":1010,"humidity":93},"visibility":6437,"wind":{"speed":0.89,"deg":44,"gust":4.02},"clouds":{"all":90},"dt":1634763739,"sys":{"type":2,"id":2008025,"country":"US","sunrise":1634733328,"sunset":1634771986},"timezone":-18000,"id":5037649,"name":"Minneapolis","cod":200}

body: {"coord":{"lon":-93.2638,"lat":44.98},"weather":[{"id":804,"main":"Clouds","description":"overcast clouds","icon":"04d"}],"base":"stations","main":{"temp":284.91,"feels_like":284.57,"temp_min":282.77,"temp_max":286.82,"pressure":1010,"humidity":93},"visibility":10000,"wind":{"speed":0.89,"deg":44,"gust":4.02},"clouds":{"all":90},"dt":1634763842,"sys":{"type":2,"id":2008025,"country":"US","sunrise":1634733328,"sunset":1634771986},"timezone":-18000,"id":5037649,"name":"Minneapolis","cod":200}

body: {"coord":{"lon":-93.2638,"lat":44.98},"weather":[{"id":804,"main":"Clouds","description":"overcast clouds","icon":"04d"}],"base":"stations","main":{"temp":284.91,"feels_like":284.57,"temp_min":282.77,"temp_max":286.82,"pressure":1010,"humidity":93},"visibility":10000,"wind":{"speed":0.89,"deg":44,"gust":4.02},"clouds":{"all":90},"dt":1634763842,"sys":{"type":2,"id":2008025,"country":"US","sunrise":1634733328,"sunset":1634771986},"timezone":-18000,"id":5037649,"name":"Minneapolis","cod":200}

body: {"coord":{"lon":-93.2638,"lat":44.98},"weather":[{"id":804,"main":"Clouds","description":"overcast clouds","icon":"04d"}],"base":"stations","main":{"temp":284.9,"feels_like":284.53,"temp_min":282.77,"temp_max":286.82,"pressure":1010,"humidity":92},"visibility":10000,"wind":{"speed":0.45,"deg":103,"gust":2.68},"clouds":{"all":90},"dt":1634764151,"sys":{"type":2,"id":2008025,"country":"US","sunrise":1634733328,"sunset":1634771986},"timezone":-18000,"id":5037649,"name":"Minneapolis","cod":200}

body: {"coord":{"lon":-93.2638,"lat":44.98},"weather":[{"id":701,"main":"Mist","description":"mist","icon":"50d"}],"base":"stations","main":{"temp":284.89,"feels_like":284.55,"temp_min":282.62,"temp_max":286.82,"pressure":1010,"humidity":93},"visibility":6437,"wind":{"speed":0.89,"deg":44,"gust":4.02},"clouds":{"all":90},"dt":1634763739,"sys":{"type":2,"id":2008025,"country":"US","sunrise":1634733328,"sunset":1634771986},"timezone":-18000,"id":5037649,"name":"Minneapolis","cod":200}
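The temp values in these bodies (around 284.9) are in Kelvin, OpenWeatherMap's default unit. A small sketch that pulls the city, conditions, and temperature (converted to Celsius and Fahrenheit) out of one logged body; summarizeWeather is a hypothetical helper, and the sample is a trimmed copy of the first body above.

```javascript
// Summarize one OpenWeatherMap current-weather response body.
// Temperatures arrive in Kelvin unless a `units` query parameter was sent.
function summarizeWeather(body) {
  const data = JSON.parse(body);
  const tempC = data.main.temp - 273.15; // Kelvin -> Celsius
  const tempF = (tempC * 9) / 5 + 32;    // Celsius -> Fahrenheit
  return {
    city: data.name,
    conditions: data.weather.map((w) => w.description).join(", "),
    tempC: Number(tempC.toFixed(1)),
    tempF: Number(tempF.toFixed(1)),
  };
}

// Trimmed from the first logged body above.
const sample =
  '{"weather":[{"id":804,"main":"Clouds","description":"overcast clouds"}],' +
  '"main":{"temp":284.9,"humidity":92},"name":"Minneapolis"}';
console.log(summarizeWeather(sample));
```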

We can see that long query reflected in the traces:

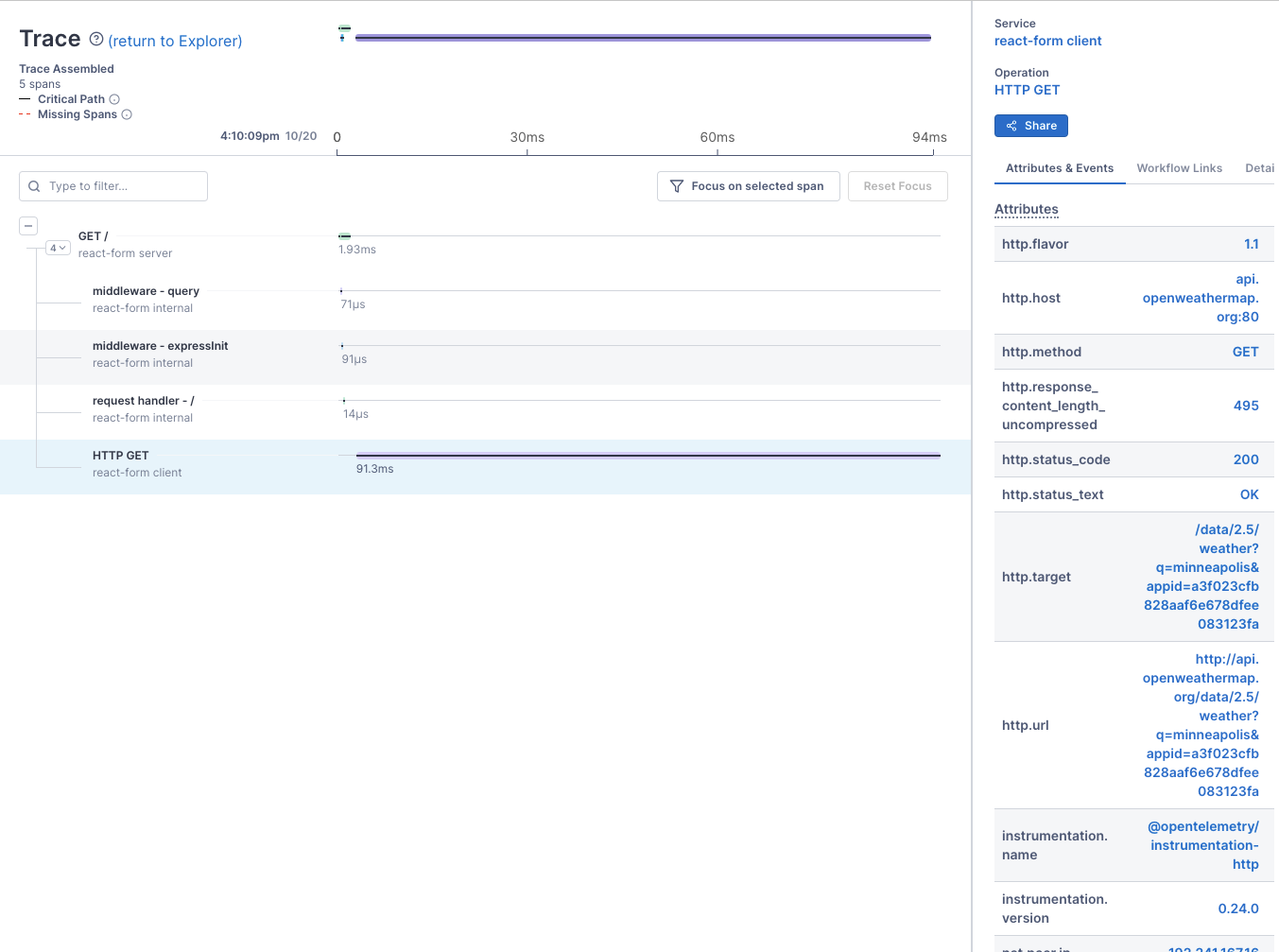

Looking for errors

We can search for errors, such as by HTTP status code.

Clicking on one of them shows the trace that got us there,

and we can see the endpoint that produced that error (/asdf):

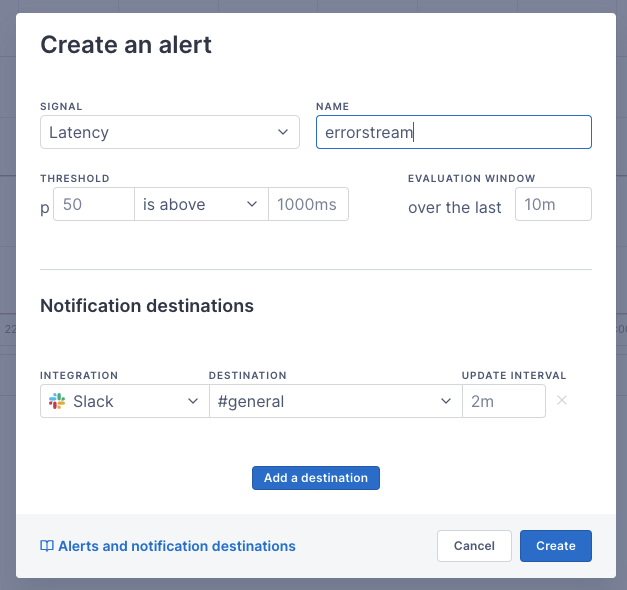

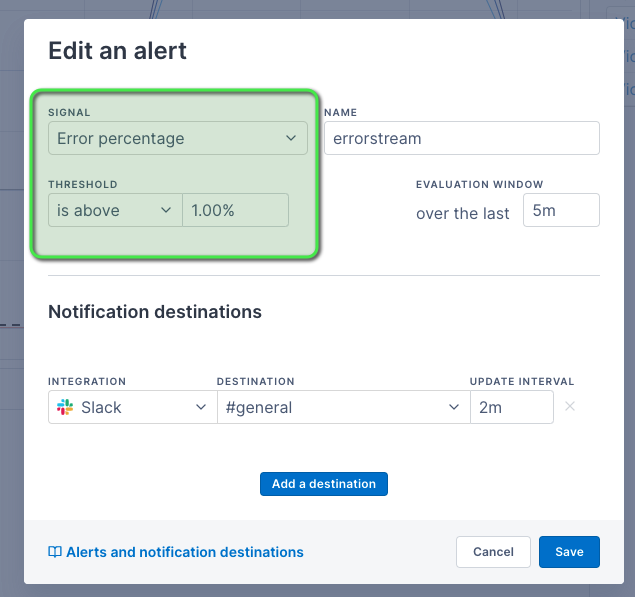

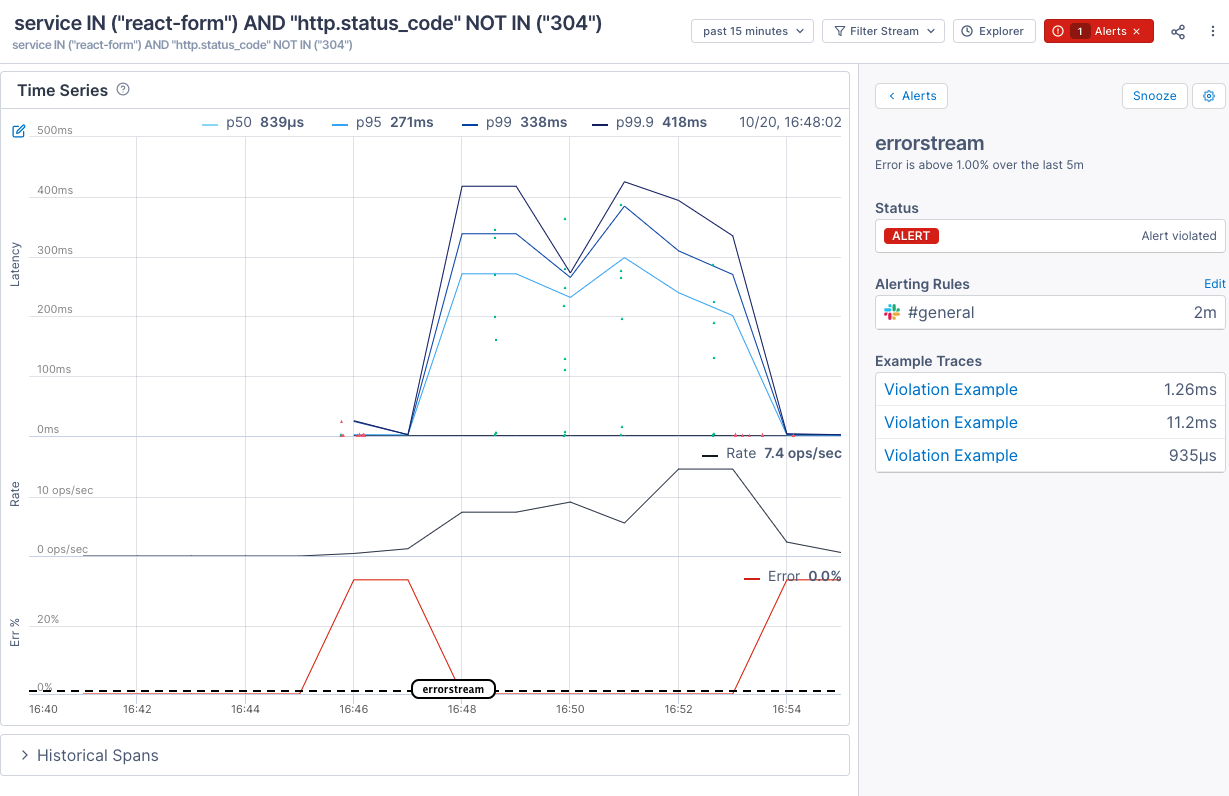

Streams

We can create a Stream that watches for status codes outside a range.

From there, we can create an alert from the stream.

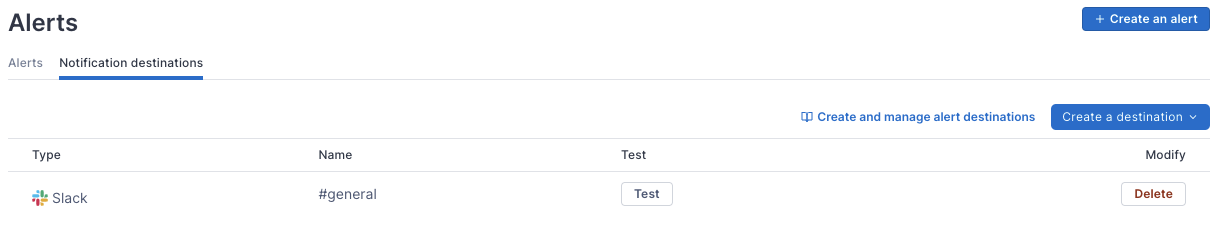
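Conceptually, a stream like "status codes outside 200 to 399" is a predicate over the http.status_code tag on each span. The sketch below models that in plain JavaScript over hypothetical span objects; it is not Lightstep's query syntax or data model, and the 200 to 399 range is just an example.

```javascript
// Returns a predicate that matches spans whose http.status_code tag falls
// outside [lo, hi]. Spans without a numeric status code never match.
function outsideRange(lo, hi) {
  return (span) => {
    const code = Number(span.tags["http.status_code"]);
    return Number.isFinite(code) && (code < lo || code > hi);
  };
}

// Hypothetical span records, loosely modeled on the Express app above.
const spans = [
  { operation: "GET /", tags: { "http.status_code": 200 } },
  { operation: "GET /asdf", tags: { "http.status_code": 404 } },
  { operation: "middleware", tags: {} }, // no status code: never matches
];

const matches = spans.filter(outsideRange(200, 399)).map((s) => s.operation);
console.log(matches); // [ 'GET /asdf' ]
```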

I’ll take a quick moment to add a notification endpoint for Slack:

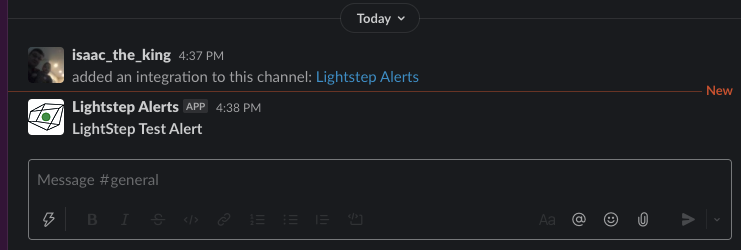

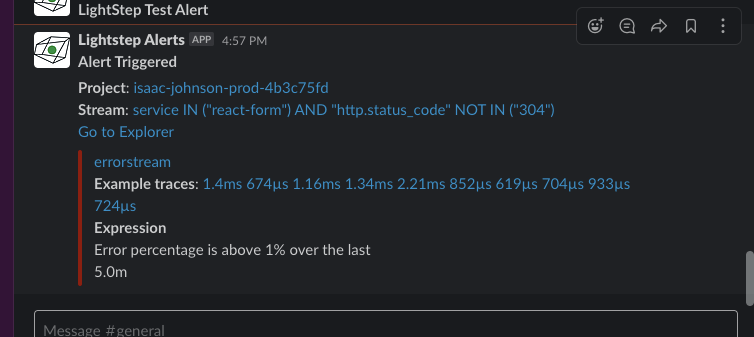

We can do a quick test,

and we can see the result.

Then you can create the alert there,

and we can see the results once it’s created.

You can supposedly create an alert on a metric. The docs show some examples, but I was not able to select http.status_code.

We can watch and see alerts. However, I did not see alerts based on latency actually show up in my Slack.

I could, however, query on an alert and get results.

And lastly, we now see the error in Slack.

Presently, the notification targets can be Slack, PagerDuty, BigPanda, or a generic webhook (which would cover most other things).

After a while, we can see the alerts clear:

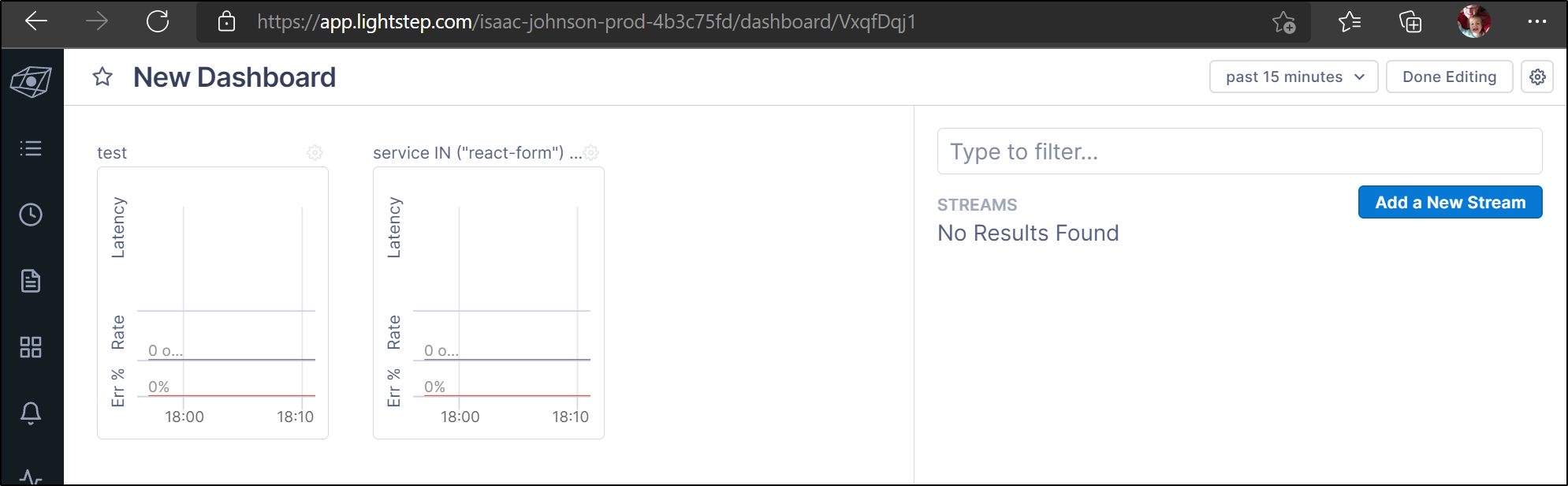
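For anything the Slack, PagerDuty, and BigPanda integrations don't cover, the generic webhook target needs something listening on the other end. A minimal receiver sketch using Node's built-in http module; it assumes only that the alert arrives as a JSON POST body and does not rely on any particular Lightstep payload fields. createWebhookReceiver and port 8080 are hypothetical.

```javascript
const http = require("http");

// Minimal generic-webhook receiver. Assumption: the alert arrives as a JSON
// POST body; no particular Lightstep payload fields are relied on.
function createWebhookReceiver(onAlert) {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.statusCode = 405; // only POST is accepted
      res.end();
      return;
    }
    let raw = "";
    req.setEncoding("utf8");
    req.on("data", (chunk) => {
      raw += chunk;
    });
    req.on("end", () => {
      let payload;
      try {
        payload = JSON.parse(raw);
      } catch (e) {
        res.statusCode = 400; // body was not valid JSON
        res.end();
        return;
      }
      onAlert(payload);
      res.statusCode = 200;
      res.end();
    });
  });
}

// Usage: log every alert that arrives on port 8080.
// createWebhookReceiver((alert) => console.log("alert:", alert)).listen(8080);
```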

Dashboards

We can create a dashboard; however, that just means a single graph per stream.

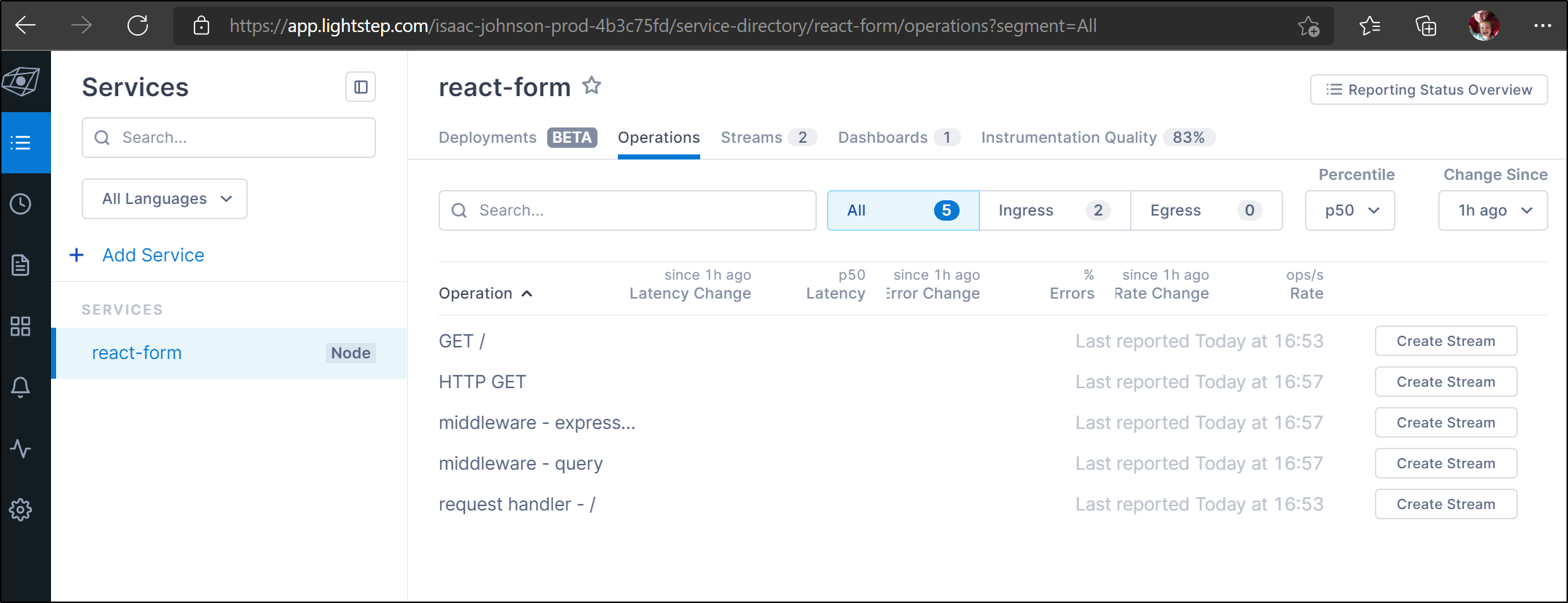

Services

We can see the services and create streams from them:

Satellites

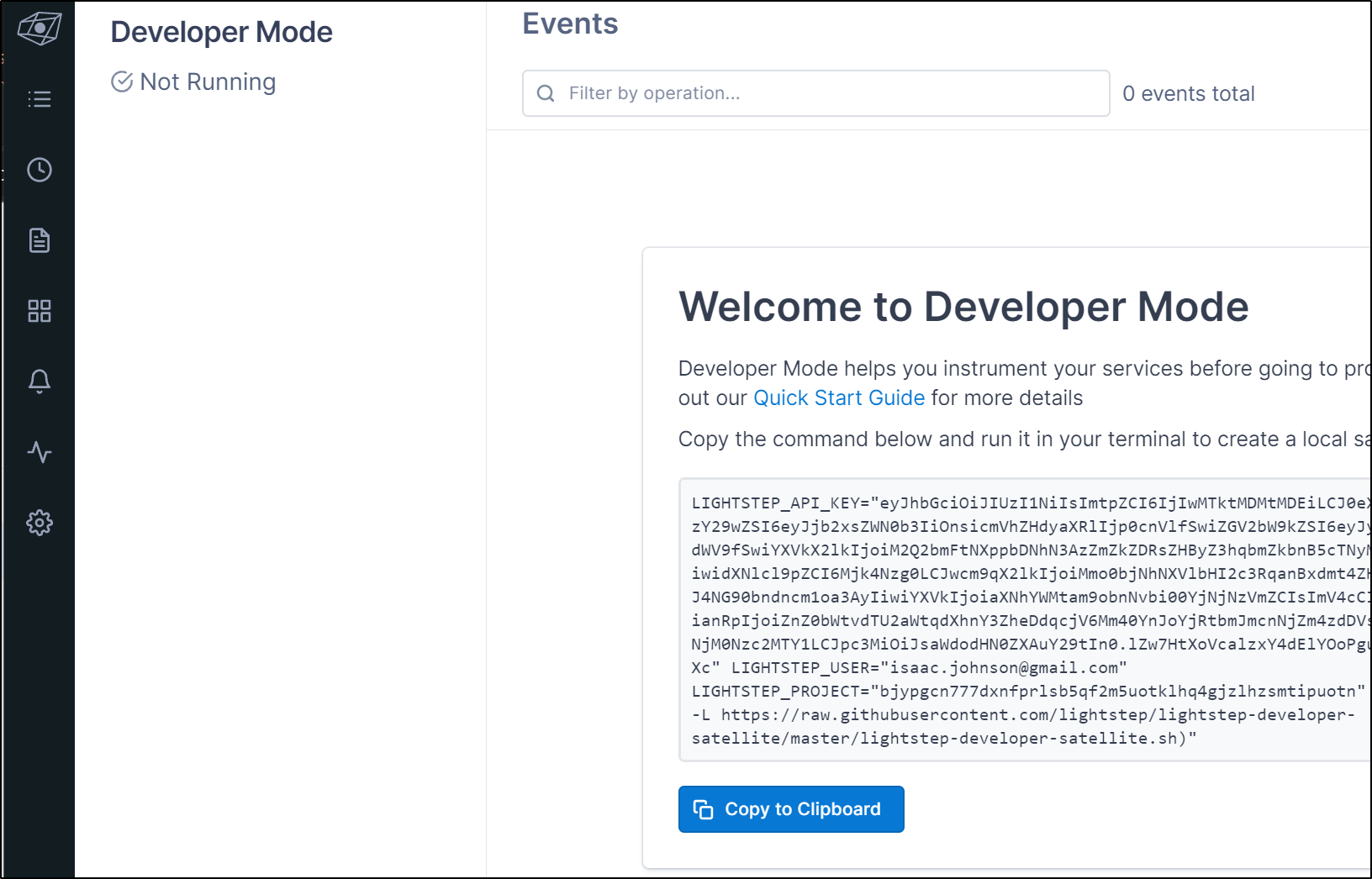

One of the unique features of Lightstep is “Satellite Mode”. By launching a Lightstep Satellite, a developer can instrument her code without needing to deploy it into a managed cluster.

In Developer Mode, we see the invocation steps:

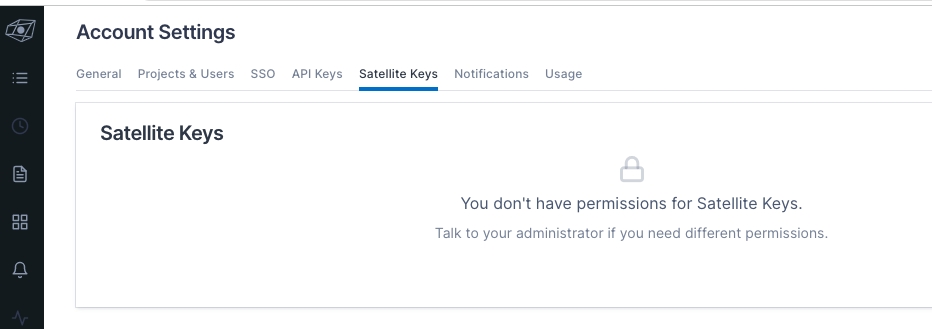

I could only see that in “Developer Mode”. In both a trial of the Teams plan and the Community plan, the Satellite Keys area in my settings was locked. But again, I could see it in this Developer Mode screen.

$ LIGHTSTEP_API_KEY="eyJhasdfasdfasdfasdfasdfasdfasdfasdfE" LIGHTSTEP_USER="isaac.johnson@gmail.com" LIGHTSTEP_PROJECT="bjypgcn777dxnfprlsb5qf2m5uotklhq4gjzlhzsmtipuotn" bash -c "$(curl -L https://raw.githubusercontent.com/lightstep/lightstep-developer-satellite/master/lightstep-developer-satellite.sh)"

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 6436 100 6436 0 0 26377 0 --:--:-- --:--:-- --:--:-- 26377

latest: Pulling from lightstep/developer-satellite

34667c7e4631: Pull complete

d18d76a881a4: Pull complete

119c7358fbfc: Pull complete

2aaf13f3eff0: Pull complete

96ce2a6ea64e: Pull complete

a4afab25e2ea: Pull complete

21995bcfa9b5: Pull complete

Digest: sha256:a7ca76ad250ca4954f4b05b3f3018b9cb78ab0bf17128c1dfb1cdcb080ea678e

Status: Downloaded newer image for lightstep/developer-satellite:latest

docker.io/lightstep/developer-satellite:latest

Starting the LightStep Developer Satellite on port 8360.

To access this satellite from inside a Docker container, configure the

Tracer's "collector_host" to 172.17.0.1.

This process will be restarted by the Docker daemon automatically, including

when this machine reboots. To stop this process, run:

bash -c "$(curl -L https://raw.githubusercontent.com/lightstep/lightstep-developer-satellite/master/stop-developer-satellite.sh)"

30aasdfasdfasdfasdfasdfasdfasdf60f

I tried to change the code to use the ls-trace module:

/*
const { lightstep, opentelemetry } =
  require("lightstep-opentelemetry-launcher-node");

// Set access token. If you prefer, set the LS_ACCESS_TOKEN environment variable instead, and remove accessToken here.
const accessToken = 'AbasdfasfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfasdfsadfasdfasdfasdfT8';

const sdk = lightstep.configureOpenTelemetry({
  accessToken,
  serviceName: 'react-form',
});

sdk.start().then(() => {
*/

const tracer = require('ls-trace').init({
  experimental: {
    b3: true
  }
})

But I saw no data.

I tried both values for the agent URL environment variable (alongside the global tags):

$ export DD_TRACE_GLOBAL_TAGS="lightstep.service_name:expressjs,lightstep.access_token:AbBasdfasdfasfdasdfasdfasdfasdfasdfasdfsafT8"
$ export DD_TRACE_AGENT_URL=http://localhost:8360
$ export DD_TRACE_AGENT_URL=https://172.17.0.1:8360
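Both variables follow simple formats: DD_TRACE_GLOBAL_TAGS is a comma-separated list of key:value pairs, and DD_TRACE_AGENT_URL points at the satellite. A hypothetical helper that assembles them, picking 172.17.0.1 (the address the satellite script suggests for code running inside a Docker container) or localhost otherwise. The plain-http scheme and the /.dockerenv check are my assumptions, not taken from the ls-trace docs.

```javascript
const fs = require("fs");

// Hypothetical helper: assemble the DD_TRACE_* variables used above for ls-trace.
// Assumptions: the satellite speaks plain http on port 8360, and /.dockerenv
// existing means "running inside a Docker container" (a common heuristic).
function lsTraceEnv({ serviceName, accessToken, inDocker = fs.existsSync("/.dockerenv") }) {
  // The satellite script suggests 172.17.0.1 from inside a container.
  const host = inDocker ? "172.17.0.1" : "localhost";
  const tags = {
    "lightstep.service_name": serviceName,
    "lightstep.access_token": accessToken,
  };
  return {
    DD_TRACE_AGENT_URL: `http://${host}:8360`,
    // Same comma-separated key:value format as the export above.
    DD_TRACE_GLOBAL_TAGS: Object.entries(tags)
      .map(([key, value]) => `${key}:${value}`)
      .join(","),
  };
}

// Usage: print shell exports for the current environment.
const env = lsTraceEnv({ serviceName: "expressjs", accessToken: "<your access token>" });
for (const [name, value] of Object.entries(env)) {
  console.log(`export ${name}="${value}"`);
}
```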

The docker logs on the container (satellite) seemed fine:

[selftest.go:31 I1021 13:05:46.719389] Satellite Self-Test Results...

API Key authorized for:

1 Organizations [isaac-johnson-4b3c75fd]

1 Projects [bjypgcn777dxnfprlsb5qf2m5uotklhq4gjzlhzsmtipuotn]

1 Access Tokens [FJrBbKtT...]

0 Disabled Access Tokens []

Tests:

Connected to api-grpc.lightstep.com:8043: success

Require unique port numbers: success

Self-Test Status: SUCCESS

[exported.go:150 I1021 13:05:46.720486] Log continues at <forwarding_logger>

And docker ps suggested it was monitoring the right port:

$ docker ps | head -n2

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

30ab361daa2b lightstep/developer-satellite:latest "/bin/sh -c 'if [ -z…" 17 minutes ago Up 17 minutes 80/tcp, 443/tcp, 0.0.0.0:8360-8361->8360-8361/tcp lightstep_developer_satellite

I checked the docs a few times but couldn’t see what I was doing wrong.

I also checked the Satellite Keys area (under account settings for Dev and Prod), and it was empty.

AKS

Since all of this was done on a fresh Mac or in my older existing Kubernetes cluster, let’s do a fresh run in AKS:

$ az account set --subscription "Visual Studio Enterprise Subscription" && az group create -n mydemoaksrg --location centralus && az aks create -n basicclusteridj -g mydemoaksrg && az aks get-credentials -n basicclusteridj -g mydemoaksrg --admin && dapr init -k

{

"id": "/subscriptions/

...

Making the jump to hyperspace...

ℹ️ Note: To install Dapr using Helm, see here: https://docs.dapr.io/getting-started/install-dapr-kubernetes/#install-with-helm-advanced

✅ Deploying the Dapr control plane to your cluster...

✅ Success! Dapr has been installed to namespace dapr-system. To verify, run `dapr status -k' in your terminal. To get started, go here: https://aka.ms/dapr-getting-started

Now let’s check the Dapr status:

$ dapr status -k

NAME NAMESPACE HEALTHY STATUS REPLICAS VERSION AGE CREATED

dapr-dashboard dapr-system False Waiting (ContainerCreating) 1 0.8.0 11s 2021-10-22 06:57.50

dapr-sentry dapr-system True Running 1 1.4.3 11s 2021-10-22 06:57.50

dapr-sidecar-injector dapr-system False Running 1 1.4.3 11s 2021-10-22 06:57.50

dapr-operator dapr-system True Running 1 1.4.3 11s 2021-10-22 06:57.50

dapr-placement-server dapr-system False Running 1 1.4.3 11s 2021-10-22 06:57.50

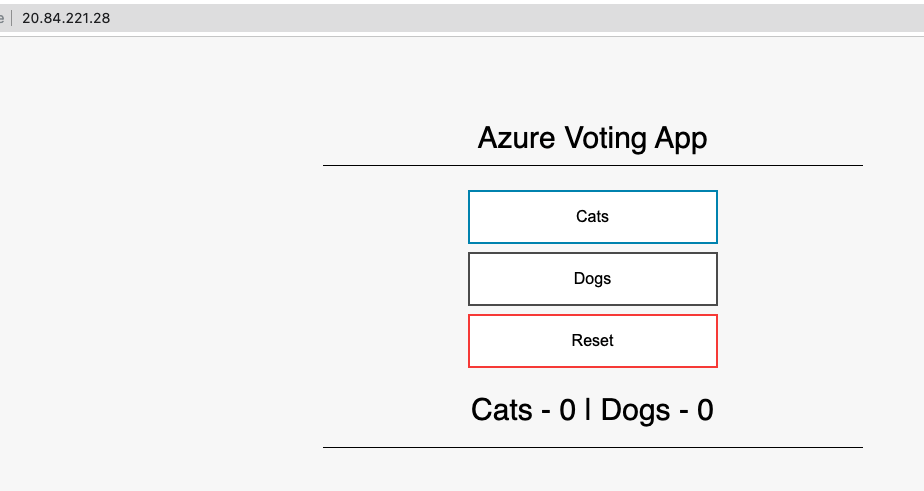
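Right after dapr init -k, several control-plane components report HEALTHY False while their containers start. A small sketch that parses the dapr status -k table and lists the components that aren't healthy yet, which you could rerun until it returns an empty list. unhealthyDaprComponents is a hypothetical helper; it relies only on the first three columns, since STATUS can contain spaces.

```javascript
// Parse the text table printed by `dapr status -k` and return the components
// whose HEALTHY column is not "True", as "namespace/name" strings.
// Only the first three columns are used, because STATUS can contain spaces
// (for example "Waiting (ContainerCreating)").
function unhealthyDaprComponents(statusOutput) {
  return statusOutput
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line !== "" && !line.startsWith("NAME "))
    .map((line) => line.split(/\s+/))
    .filter(([, , healthy]) => healthy !== "True")
    .map(([name, namespace]) => `${namespace}/${name}`);
}

// Usage (needs the dapr CLI and a kubeconfig for the cluster):
// const { execSync } = require("child_process");
// console.log(unhealthyDaprComponents(execSync("dapr status -k").toString()));
```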

Let’s grab the Azure Vote app. We can follow the instructions here

$ git clone https://github.com/Azure-Samples/azure-voting-app-redis.git

Cloning into 'azure-voting-app-redis'...

remote: Enumerating objects: 174, done.

remote: Total 174 (delta 0), reused 0 (delta 0), pack-reused 174

Receiving objects: 100% (174/174), 37.21 KiB | 604.00 KiB/s, done.

Resolving deltas: 100% (78/78), done.

$ ls

azure-voting-app-redis

$ cd azure-voting-app-redis/

$ ls

LICENSE README.md azure-vote azure-vote-all-in-one-redis.yaml docker-compose.yaml jenkins-tutorial

$ cd azure-vote

And before I forget, we need an Azure Container Registry (ACR) to host the container image:

$ az acr create -g mydemoaksrg -n idjbasicacr1 --sku Basic

Resource provider 'Microsoft.ContainerRegistry' used by this operation is not registered. We are registering for you.

Registration succeeded.

{

"adminUserEnabled": false,

"anonymousPullEnabled": false,

"creationDate": "2021-10-22T12:03:51.507625+00:00",

...

"zoneRedundancy": "Disabled"

}

$ az aks update -n basicclusteridj -g mydemoaksrg --attach-acr idjbasicacr1

AAD role propagation done[############################################] 100.0000%{

"aadProfile": null,

"addonProfiles": null,

"agentPoolProfiles": [

We will build and push the vote app:

$ az acr build --image azure-vote-front:v1 --registry idjbasicacr1 --file Dockerfile .

Packing source code into tar to upload...

Uploading archived source code from '/var/folders/xd/5bjmj9_s1hl16_b9k0fnv525nmnf6k/T/build_archive_9be058fc5ae74f19af6d45203334bcb3.tar.gz'...

Sending context (3.133 KiB) to registry: idjbasicacr1...

Queued a build with ID: cj1

Waiting for an agent...

2021/10/22 12:15:48 Downloading source code...

2021/10/22 12:15:49 Finished downloading source code

2021/10/22 12:15:50 Using acb_vol_91c3e103-6e4c-483a-80d6-c79420fff9b7 as the home volume

2021/10/22 12:15:50 Setting up Docker configuration...

2021/10/22 12:15:50 Successfully set up Docker configuration

2021/10/22 12:15:50 Logging in to registry: idjbasicacr1.azurecr.io

2021/10/22 12:15:51 Successfully logged into idjbasicacr1.azurecr.io

2021/10/22 12:15:51 Executing step ID: build. Timeout(sec): 28800, Working directory: '', Network: ''

2021/10/22 12:15:51 Scanning for dependencies...

2021/10/22 12:15:52 Successfully scanned dependencies

2021/10/22 12:15:52 Launching container with name: build

Sending build context to Docker daemon 17.41kB

Step 1/3 : FROM tiangolo/uwsgi-nginx-flask:python3.6

python3.6: Pulling from tiangolo/uwsgi-nginx-flask

5e7b6b7bd506: Pulling fs layer

fd67d668d691: Pulling fs layer

1ae016bc2687: Pulling fs layer

0b0af05a4d86: Pulling fs layer

ca4689f0588c: Pulling fs layer

981ebf72add4: Pulling fs layer

94ed2ee96337: Pulling fs layer

e49e07f12c65: Pulling fs layer

0f08bac7320e: Pulling fs layer

81a0d7f51d3d: Pulling fs layer

45b3ee0e1290: Pulling fs layer

ce9e70713770: Pulling fs layer

e882f9f0a345: Pulling fs layer

cd67f5e18994: Pulling fs layer

fac5e97674c4: Pulling fs layer

8e8b6cae656c: Pulling fs layer

d6aedd13aa3a: Pulling fs layer

8af53450c113: Pulling fs layer

f4085b389d61: Pulling fs layer

bd0175ca87a3: Pulling fs layer

8409f1b445a6: Pulling fs layer

3e06db044205: Pulling fs layer

bc4fdf3baacd: Pulling fs layer

a34149461b9f: Pulling fs layer

52b8d7e75039: Pulling fs layer

829e42bb45c7: Pulling fs layer

b6d7f7b867d3: Pulling fs layer

3a20ff797468: Pulling fs layer

aab9399febeb: Pulling fs layer

ad39310f24a7: Pulling fs layer

8e8b6cae656c: Waiting

d6aedd13aa3a: Waiting

8af53450c113: Waiting

f4085b389d61: Waiting

bd0175ca87a3: Waiting

8409f1b445a6: Waiting

0b0af05a4d86: Waiting

ca4689f0588c: Waiting

981ebf72add4: Waiting

94ed2ee96337: Waiting

3e06db044205: Waiting

bc4fdf3baacd: Waiting

e49e07f12c65: Waiting

0f08bac7320e: Waiting

a34149461b9f: Waiting

81a0d7f51d3d: Waiting

52b8d7e75039: Waiting

45b3ee0e1290: Waiting

829e42bb45c7: Waiting

b6d7f7b867d3: Waiting

ce9e70713770: Waiting

e882f9f0a345: Waiting

3a20ff797468: Waiting

cd67f5e18994: Waiting

aab9399febeb: Waiting

ad39310f24a7: Waiting

fac5e97674c4: Waiting

1ae016bc2687: Verifying Checksum

1ae016bc2687: Download complete

5e7b6b7bd506: Verifying Checksum

5e7b6b7bd506: Download complete

fd67d668d691: Verifying Checksum

fd67d668d691: Download complete

981ebf72add4: Verifying Checksum

981ebf72add4: Download complete

94ed2ee96337: Verifying Checksum

94ed2ee96337: Download complete

0b0af05a4d86: Verifying Checksum

0b0af05a4d86: Download complete

e49e07f12c65: Verifying Checksum

e49e07f12c65: Download complete

0f08bac7320e: Verifying Checksum

0f08bac7320e: Download complete

81a0d7f51d3d: Verifying Checksum

81a0d7f51d3d: Download complete

45b3ee0e1290: Verifying Checksum

45b3ee0e1290: Download complete

ce9e70713770: Verifying Checksum

ce9e70713770: Download complete

e882f9f0a345: Verifying Checksum

e882f9f0a345: Download complete

fac5e97674c4: Verifying Checksum

fac5e97674c4: Download complete

cd67f5e18994: Verifying Checksum

cd67f5e18994: Download complete

d6aedd13aa3a: Verifying Checksum

d6aedd13aa3a: Download complete

8e8b6cae656c: Verifying Checksum

8e8b6cae656c: Download complete

8af53450c113: Verifying Checksum

8af53450c113: Download complete

5e7b6b7bd506: Pull complete

f4085b389d61: Verifying Checksum

f4085b389d61: Download complete

ca4689f0588c: Verifying Checksum

ca4689f0588c: Download complete

fd67d668d691: Pull complete

1ae016bc2687: Pull complete

0b0af05a4d86: Pull complete

bd0175ca87a3: Download complete

3e06db044205: Verifying Checksum

3e06db044205: Download complete

8409f1b445a6: Verifying Checksum

8409f1b445a6: Download complete

bc4fdf3baacd: Verifying Checksum

bc4fdf3baacd: Download complete

52b8d7e75039: Verifying Checksum

52b8d7e75039: Download complete

a34149461b9f: Verifying Checksum

a34149461b9f: Download complete

3a20ff797468: Verifying Checksum

3a20ff797468: Download complete

aab9399febeb: Download complete

829e42bb45c7: Verifying Checksum

829e42bb45c7: Download complete

b6d7f7b867d3: Verifying Checksum

b6d7f7b867d3: Download complete

ad39310f24a7: Verifying Checksum

ad39310f24a7: Download complete

ca4689f0588c: Pull complete

981ebf72add4: Pull complete

94ed2ee96337: Pull complete

e49e07f12c65: Pull complete

0f08bac7320e: Pull complete

81a0d7f51d3d: Pull complete

45b3ee0e1290: Pull complete

ce9e70713770: Pull complete

e882f9f0a345: Pull complete

cd67f5e18994: Pull complete

fac5e97674c4: Pull complete

8e8b6cae656c: Pull complete

d6aedd13aa3a: Pull complete

8af53450c113: Pull complete

f4085b389d61: Pull complete

bd0175ca87a3: Pull complete

8409f1b445a6: Pull complete

3e06db044205: Pull complete

bc4fdf3baacd: Pull complete

a34149461b9f: Pull complete

52b8d7e75039: Pull complete

829e42bb45c7: Pull complete

b6d7f7b867d3: Pull complete

3a20ff797468: Pull complete

aab9399febeb: Pull complete

ad39310f24a7: Pull complete

Digest: sha256:0c084c39521a68413f2944c88e0a1d7db2098704abd1126827f223f150365597

Status: Downloaded newer image for tiangolo/uwsgi-nginx-flask:python3.6

---> 79d81e4ae7e1

Step 2/3 : RUN pip install redis

---> Running in 671376ec133d

Collecting redis

Downloading redis-3.5.3-py2.py3-none-any.whl (72 kB)

Installing collected packages: redis

Successfully installed redis-3.5.3

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

WARNING: You are using pip version 21.2.4; however, version 21.3 is available.

You should consider upgrading via the '/usr/local/bin/python -m pip install --upgrade pip' command.

Removing intermediate container 671376ec133d

---> d2153724387a

Step 3/3 : ADD /azure-vote /app

---> 82f26f3276d4

Successfully built 82f26f3276d4

Successfully tagged idjbasicacr1.azurecr.io/azure-vote-front:v1

2021/10/22 12:16:17 Successfully executed container: build

2021/10/22 12:16:17 Executing step ID: push. Timeout(sec): 3600, Working directory: '', Network: ''

2021/10/22 12:16:17 Pushing image: idjbasicacr1.azurecr.io/azure-vote-front:v1, attempt 1

The push refers to repository [idjbasicacr1.azurecr.io/azure-vote-front]

157c8a54a768: Preparing

ece889b0ff34: Preparing

eee4aae87b93: Preparing

903a083e9fdb: Preparing

adcbf7752a87: Preparing

ca630d436bdb: Preparing

3361490d031f: Preparing

3cfc8ff8eeb8: Preparing

1e8842a02618: Preparing

28927a234b22: Preparing

b487965c4c44: Preparing

c692b4bc31fc: Preparing

658f50d62f86: Preparing

070df8195765: Preparing

5bb18de488ea: Preparing

39ac71ea6081: Preparing

0c67045d7e68: Preparing

4d40a05326d7: Preparing

fe2564966123: Preparing

3c11b624ca8a: Preparing

9730b4c39d3f: Preparing

325747df28e5: Preparing

849dbbba87b9: Preparing

94f11a4547de: Preparing

9b7497bb0968: Preparing

fb214f2e7a76: Preparing

58ce68cb1417: Preparing

6664df8b907f: Preparing

193c69a58521: Preparing

65bd1a7ee0f5: Preparing

e637b6ee6754: Preparing

22f8b5520ced: Preparing

ca630d436bdb: Waiting

3361490d031f: Waiting

3cfc8ff8eeb8: Waiting

1e8842a02618: Waiting

9730b4c39d3f: Waiting

325747df28e5: Waiting

28927a234b22: Waiting

b487965c4c44: Waiting

849dbbba87b9: Waiting

c692b4bc31fc: Waiting

658f50d62f86: Waiting

94f11a4547de: Waiting

9b7497bb0968: Waiting

070df8195765: Waiting

fb214f2e7a76: Waiting

5bb18de488ea: Waiting

39ac71ea6081: Waiting

58ce68cb1417: Waiting

0c67045d7e68: Waiting

4d40a05326d7: Waiting

6664df8b907f: Waiting

fe2564966123: Waiting

193c69a58521: Waiting

3c11b624ca8a: Waiting

65bd1a7ee0f5: Waiting

e637b6ee6754: Waiting

ece889b0ff34: Pushed

157c8a54a768: Pushed

eee4aae87b93: Pushed

adcbf7752a87: Pushed

903a083e9fdb: Pushed

ca630d436bdb: Pushed

3cfc8ff8eeb8: Pushed

3361490d031f: Pushed

1e8842a02618: Pushed

28927a234b22: Pushed

b487965c4c44: Pushed

c692b4bc31fc: Pushed

658f50d62f86: Pushed

070df8195765: Pushed

5bb18de488ea: Pushed

39ac71ea6081: Pushed

4d40a05326d7: Pushed

0c67045d7e68: Pushed

fe2564966123: Pushed

9730b4c39d3f: Pushed

3c11b624ca8a: Pushed

849dbbba87b9: Pushed

325747df28e5: Pushed

94f11a4547de: Pushed

9b7497bb0968: Pushed

58ce68cb1417: Pushed

fb214f2e7a76: Pushed

65bd1a7ee0f5: Pushed

e637b6ee6754: Pushed

193c69a58521: Pushed

22f8b5520ced: Pushed

6664df8b907f: Pushed

v1: digest: sha256:036063010c6d2aada283363c9ec5175b26c5e2c4d68c649dc23f8c86c38c3b75 size: 7003

2021/10/22 12:17:05 Successfully pushed image: idjbasicacr1.azurecr.io/azure-vote-front:v1

2021/10/22 12:17:05 Step ID: build marked as successful (elapsed time in seconds: 26.242763)

2021/10/22 12:17:05 Populating digests for step ID: build...

2021/10/22 12:17:06 Successfully populated digests for step ID: build

2021/10/22 12:17:06 Step ID: push marked as successful (elapsed time in seconds: 47.430511)

2021/10/22 12:17:06 The following dependencies were found:

2021/10/22 12:17:06

- image:

registry: idjbasicacr1.azurecr.io

repository: azure-vote-front

tag: v1

digest: sha256:036063010c6d2aada283363c9ec5175b26c5e2c4d68c649dc23f8c86c38c3b75

runtime-dependency:

registry: registry.hub.docker.com

repository: tiangolo/uwsgi-nginx-flask

tag: python3.6

digest: sha256:0c084c39521a68413f2944c88e0a1d7db2098704abd1126827f223f150365597

git: {}

Run ID: cj1 was successful after 1m23s

Make a quick Helm chart and add a dependency block for Redis:

$ cat azure-vote-front/Chart.yaml

```yaml
apiVersion: v2
name: azure-vote-front
description: A Helm chart for Kubernetes
dependencies:
  - name: redis
    version: 14.7.1
    repository: https://charts.bitnami.com/bitnami
# A chart can be either an 'application' or a 'library' chart.
#
# Application charts are a collection of templates that can be packaged into versioned archives
# to be deployed.
#
# Library charts provide useful utilities or functions for the chart developer. They're included as
# a dependency of application charts to inject those utilities and functions into the rendering
# pipeline. Library charts do not define any templates and therefore cannot be deployed.
type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.1.0
# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "1.16.0"
```

Update the chart dependencies:

$ helm dependency update azure-vote-front

Getting updates for unmanaged Helm repositories...

...Successfully got an update from the "https://charts.bitnami.com/bitnami" chart repository

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "datadog" chart repository

Update Complete. ⎈Happy Helming!⎈

Saving 1 charts

Downloading redis from repo https://charts.bitnami.com/bitnami

Deleting outdated charts

Then set up values.yaml:

$ cat values.yaml

```yaml
replicaCount: 1
backendName: azure-vote-backend-master
redis:
  image:
    registry: mcr.microsoft.com
    repository: oss/bitnami/redis
    tag: 6.0.8
  fullnameOverride: azure-vote-backend
  auth:
    enabled: false
image:
  repository: idjbasicacr1.azurecr.io/azure-vote-front
  pullPolicy: IfNotPresent
  tag: "v1"
service:
  type: LoadBalancer
  port: 80
```
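Values nested under the `redis:` key are passed to the Bitnami subchart. That is how `fullnameOverride: azure-vote-backend` leads to services such as `azure-vote-backend-master`, the name `backendName` points at (you can see those services in the `kubectl get svc` output further down). As a rough mental model only, here is a Python sketch of that scoping: the parent's `redis` section is deep-merged over the subchart's defaults. The helper names and the "defaults" dict are hypothetical. Helm's real value coalescing has more rules, such as globals and null deletion.

```python
from copy import deepcopy


def deep_merge(base: dict, override: dict) -> dict:
    """Return a new dict where keys in `override` win, recursing into nested dicts."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def subchart_values(parent_values: dict, dependency: str, subchart_defaults: dict) -> dict:
    """What a dependency sees: its own defaults overlaid with parent_values[dependency]."""
    return deep_merge(subchart_defaults, parent_values.get(dependency, {}))


# Hypothetical subset of the redis chart defaults, for illustration only.
redis_defaults = {
    "architecture": "replication",
    "image": {"registry": "docker.io", "repository": "bitnami/redis", "tag": "6.2"},
    "auth": {"enabled": True},
}

# The relevant part of values.yaml above, as a dict.
parent = {
    "backendName": "azure-vote-backend-master",
    "redis": {
        "image": {"registry": "mcr.microsoft.com", "repository": "oss/bitnami/redis", "tag": "6.0.8"},
        "fullnameOverride": "azure-vote-backend",
        "auth": {"enabled": False},
    },
}

effective = subchart_values(parent, "redis", redis_defaults)
print(effective["image"]["registry"], effective["auth"]["enabled"], effective["architecture"])
# mcr.microsoft.com False replication
print(effective["fullnameOverride"] + "-master" == parent["backendName"])  # True
```

The design point is that the parent chart never needs to know the subchart's templates. It only overrides values under the dependency's name.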

Update deployment.yaml in templates to add an env block:

```yaml
env:
  - name: REDIS
    value: {{ .Values.backendName }}
```

It should look like this (the env block goes right after imagePullPolicy):

$ cat azure-vote-front/templates/deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "azure-vote-front.fullname" . }}
  labels:
    {{- include "azure-vote-front.labels" . | nindent 4 }}
spec:
  {{- if not .Values.autoscaling.enabled }}
  replicas: {{ .Values.replicaCount }}
  {{- end }}
  selector:
    matchLabels:
      {{- include "azure-vote-front.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      {{- with .Values.podAnnotations }}
      annotations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      labels:
        {{- include "azure-vote-front.selectorLabels" . | nindent 8 }}
    spec:
      {{- with .Values.imagePullSecrets }}
      imagePullSecrets:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      serviceAccountName: {{ include "azure-vote-front.serviceAccountName" . }}
      securityContext:
        {{- toYaml .Values.podSecurityContext | nindent 8 }}
      containers:
        - name: {{ .Chart.Name }}
          securityContext:
            {{- toYaml .Values.securityContext | nindent 12 }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          env:
            - name: REDIS
              value: {{ .Values.backendName }}
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /
              port: http
          resources:
            {{- toYaml .Values.resources | nindent 12 }}
      {{- with .Values.nodeSelector }}
      nodeSelector:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.affinity }}
      affinity:
        {{- toYaml . | nindent 8 }}
      {{- end }}
      {{- with .Values.tolerations }}
      tolerations:
        {{- toYaml . | nindent 8 }}
      {{- end }}
```
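The env block is the only wiring between the front end and Redis. Kubernetes injects `REDIS=azure-vote-backend-master` into the container, and the app reads it when it starts. Below is a hypothetical Python illustration of that lookup, not the azure-vote source. It also shows why the value must be the Redis *Service* name: cluster DNS resolves it. This assumes the default `cluster.local` domain, the same one the Zipkin address in appconfig uses below.

```python
import os
from typing import Mapping, Optional


def redis_host(env: Optional[Mapping[str, str]] = None, default: str = "localhost") -> str:
    """Resolve the Redis host from the container environment (os.environ unless a mapping is given)."""
    env = os.environ if env is None else env
    host = env.get("REDIS", "").strip()
    return host or default


def redis_fqdn(service: str, namespace: str = "default") -> str:
    """Fully qualified in-cluster DNS name for a Service (assumes the cluster.local domain)."""
    return f"{service}.{namespace}.svc.cluster.local"


# What the Deployment template injects, using backendName from values.yaml:
pod_env = {"REDIS": "azure-vote-backend-master"}
print(redis_host(pod_env))              # azure-vote-backend-master
print(redis_fqdn(redis_host(pod_env)))  # azure-vote-backend-master.default.svc.cluster.local
```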

Helm install:

$ helm install -f values.yaml azure-vote-front ./azure-vote-front/

NAME: azure-vote-front

LAST DEPLOYED: Fri Oct 22 07:40:28 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get --namespace default svc -w azure-vote-front'

export SERVICE_IP=$(kubectl get svc --namespace default azure-vote-front --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")

echo http://$SERVICE_IP:80
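The Go template in those NOTES takes the first entry of `status.loadBalancer.ingress` on the Service and prints its value, which is usually an `ip` and on some clouds a `hostname`. If you would rather script this than eyeball `kubectl get svc -w`, here is a small Python sketch that does the same against `kubectl get svc <name> -o json` output. The function name is my own, and it returns `None` while the external IP is still pending.

```python
import json
from typing import Optional


def service_url(svc_json: str, port: int = 80) -> Optional[str]:
    """Return http://<ip-or-hostname>:<port> for a LoadBalancer Service, or None while pending."""
    svc = json.loads(svc_json)
    ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        return None  # EXTERNAL-IP still shows <pending>
    first = ingress[0]
    address = first.get("ip") or first.get("hostname")
    return f"http://{address}:{port}" if address else None


# Shape of `kubectl get svc azure-vote-front2 -o json`, trimmed to the relevant part.
sample = json.dumps({"status": {"loadBalancer": {"ingress": [{"ip": "20.84.221.28"}]}}})
print(service_url(sample))  # http://20.84.221.28:80
```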

I will note that in testing, re-installing the chart did not recreate the LoadBalancer. If this happens to you, just override the fullname:

$ helm install -f values.yaml azure-vote-front --set fullnameOverride=azure-vote-front2 ./azure-vote-front/

NAME: azure-vote-front

LAST DEPLOYED: Fri Oct 22 07:44:54 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get --namespace default svc -w azure-vote-front2'

export SERVICE_IP=$(kubectl get svc --namespace default azure-vote-front2 --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")

echo http://$SERVICE_IP:80

```
$ kubectl get svc
NAME                          TYPE           CLUSTER-IP    EXTERNAL-IP    PORT(S)        AGE
azure-vote-backend-headless   ClusterIP      None          <none>         6379/TCP       49s
azure-vote-backend-master     ClusterIP      10.0.235.85   <none>         6379/TCP       49s
azure-vote-backend-replicas   ClusterIP      10.0.17.88    <none>         6379/TCP       49s
azure-vote-front2             LoadBalancer   10.0.61.182   20.84.221.28   80:30682/TCP   49s
kubernetes                    ClusterIP      10.0.0.1      <none>         443/TCP        58m
```
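The Service name comes from the chart's `fullname` helper. Below is a Python port of the default helper that `helm create` scaffolds into `templates/_helpers.tpl`, assuming this chart still uses the stock helper. `fullnameOverride` wins outright. Otherwise the release name is used as-is when it already contains the chart name, and `<release>-<chart>` is used when it does not. The result is truncated to 63 characters, the DNS label limit, and one trailing `-` is trimmed. Passing a new override therefore gives a fresh Service name rather than reusing the old one.

```python
def trunc_trim(name: str, limit: int = 63) -> str:
    """Equivalent of Helm's `trunc 63 | trimSuffix "-"` (trimSuffix drops a single trailing dash)."""
    name = name[:limit]
    return name[:-1] if name.endswith("-") else name


def helm_fullname(release: str, chart: str, name_override: str = "", fullname_override: str = "") -> str:
    """Python port of the default `<chart>.fullname` helper generated by `helm create`."""
    if fullname_override:
        return trunc_trim(fullname_override)
    name = name_override or chart
    if name in release:  # sprig: contains $name .Release.Name
        return trunc_trim(release)
    return trunc_trim(f"{release}-{name}")


print(helm_fullname("azure-vote-front", "azure-vote-front"))  # azure-vote-front
print(helm_fullname("azure-vote-front", "azure-vote-front", fullname_override="azure-vote-front2"))  # azure-vote-front2
print(helm_fullname("demo", "azure-vote-front"))  # demo-azure-vote-front
```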

We can pull a good example from the Dapr quickstarts:

$ git clone https://github.com/dapr/quickstarts.git

$ cd quickstarts/observability

# verify zipkin settings

$ cat deploy/appconfig.yaml

apiVersion: dapr.io/v1alpha1

kind: Configuration

metadata:

name: appconfig

spec:

tracing:

samplingRate: "1"

zipkin:

endpointAddress: "http://zipkin.default.svc.cluster.local:9411/api/v2/spans"
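With this configuration, the Dapr sidecars report spans in Zipkin's v2 format by POSTing a JSON array to the `/api/v2/spans` endpoint. To make that payload concrete, here is a minimal Python sketch that builds two related spans with the standard library. Field names follow the Zipkin v2 API. The span names, service names and durations are made-up examples. The sketch only builds the body and does not send it.

```python
import json
import secrets
import time
from typing import Optional


def zipkin_span(name: str, service: str, duration_us: int,
                trace_id: Optional[str] = None, parent_id: Optional[str] = None,
                kind: str = "SERVER") -> dict:
    """Build one span in Zipkin v2 JSON shape (timestamp and duration are in microseconds)."""
    span = {
        "traceId": trace_id or secrets.token_hex(16),  # 128-bit trace id, 32 lowercase hex chars
        "id": secrets.token_hex(8),                     # 64-bit span id, 16 hex chars
        "name": name,
        "kind": kind,
        "timestamp": int(time.time() * 1_000_000),
        "duration": duration_us,
        "localEndpoint": {"serviceName": service},
    }
    if parent_id:
        span["parentId"] = parent_id  # links the child to its caller within the same trace
    return span


root = zipkin_span("calculate", "calculator-front-end", 12_000)
child = zipkin_span("add", "addapp", 3_000, trace_id=root["traceId"], parent_id=root["id"])
body = json.dumps([root, child])  # the receiver expects a JSON array of spans
print(json.dumps(child, indent=2))
```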

Now let’s add Zipkin via OTel:

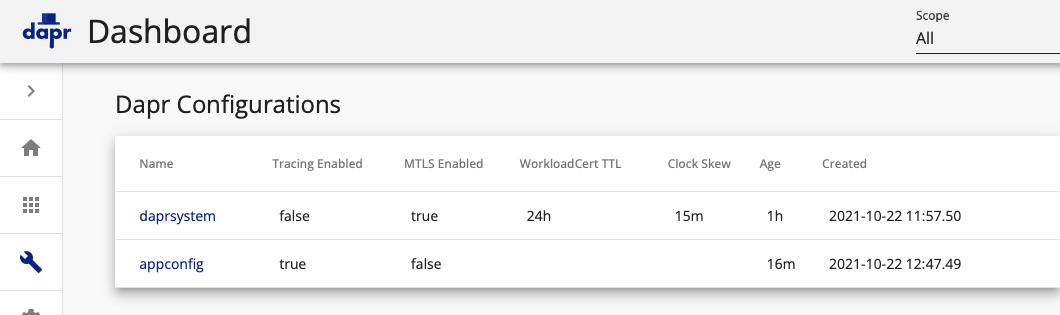

$ kubectl apply -f ./deploy/appconfig.yaml

configuration.dapr.io/appconfig created

```
$ dapr configurations --kubernetes
NAME       TRACING-ENABLED  METRICS-ENABLED  AGE  CREATED
appconfig  true             true             7s   2021-10-22 07:47.49
```
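`samplingRate: "1"` asks Dapr to record every trace, and `"0"` turns tracing off. Rates in between are typically implemented as trace-ID ratio sampling, so every service makes the same keep/drop decision for a given trace without coordinating. The sketch below follows the approach of OpenTelemetry's `TraceIdRatioBased` sampler: it compares the low 64 bits of the trace ID against `rate * 2^64`. I am assuming Dapr behaves equivalently, not quoting its code.

```python
TRACE_ID_LIMIT = (1 << 64) - 1


def bound_for_rate(rate: float) -> int:
    """Threshold: traces whose low 64 bits fall below it are sampled."""
    return round(rate * (TRACE_ID_LIMIT + 1))


def should_sample(trace_id_hex: str, rate: float) -> bool:
    """Deterministic ratio sampling: same trace id and rate give the same decision everywhere."""
    if rate <= 0:
        return False
    low_bits = int(trace_id_hex, 16) & TRACE_ID_LIMIT
    return low_bits < bound_for_rate(rate)


# 64 evenly spaced trace ids across the 64-bit space.
trace_ids = [f"{i:032x}" for i in range(0, 1 << 64, 1 << 58)]
print(sum(should_sample(t, 1.0) for t in trace_ids))   # 64
print(sum(should_sample(t, 0.25) for t in trace_ids))  # 16
```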

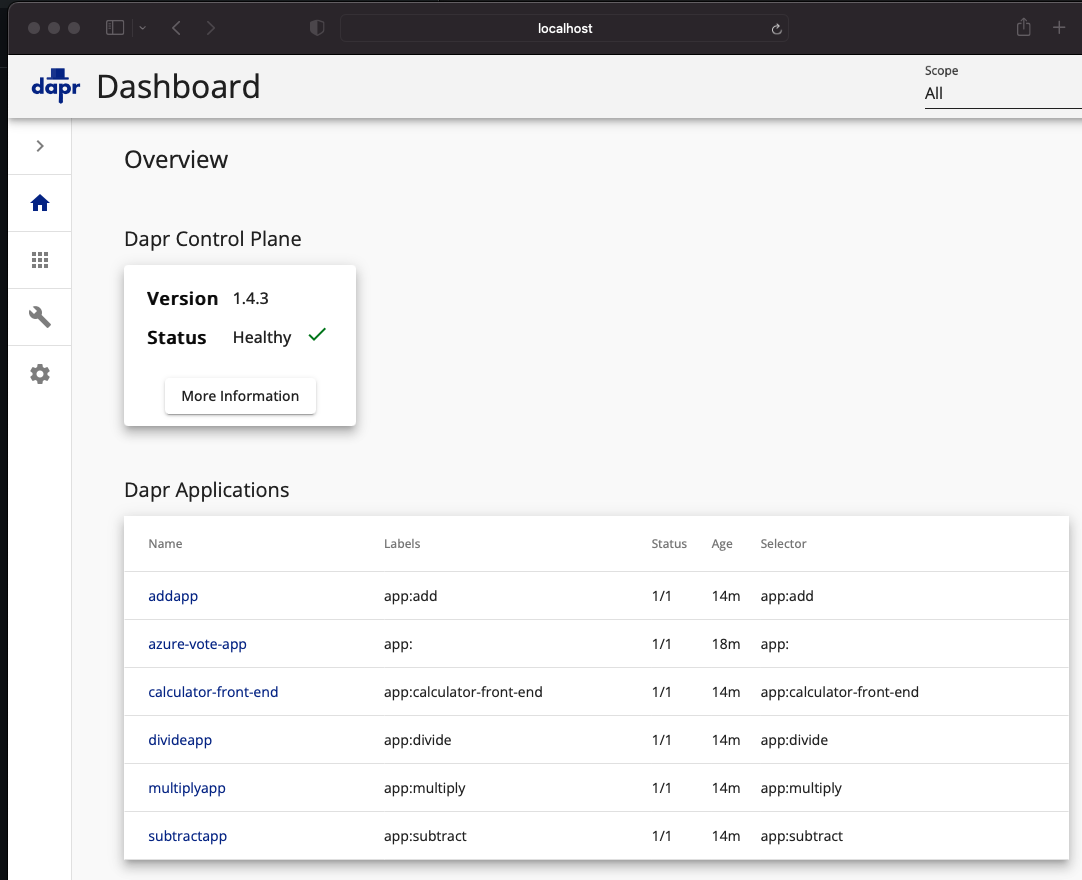

Deploy the distributed calculator app:

$ kubectl apply -f ../distributed-calculator/deploy/

configuration.dapr.io/appconfig unchanged

deployment.apps/subtractapp created

deployment.apps/addapp created

deployment.apps/divideapp created

deployment.apps/multiplyapp created

service/calculator-front-end created

deployment.apps/calculator-front-end created

component.dapr.io/statestore created

What applies appconfig to a deployment is its Dapr annotation, dapr.io/config:

```
$ cat ../distributed-calculator/deploy/react-calculator.yaml | head -n40 | tail -n10
        app: calculator-front-end
      annotations:
        dapr.io/enabled: "true"
        dapr.io/app-id: "calculator-front-end"
        dapr.io/app-port: "8080"
        dapr.io/config: "appconfig"
    spec:
      containers:
      - name: calculator-front-end
        image: dapriosamples/distributed-calculator-react-calculator:latest
```

We can now go back and annotate our Azure Vote app so Dapr starts tracing it, just by adding annotations to the end of our values file:

```yaml
replicaCount: 1
backendName: azure-vote-backend-master
redis:
  image:
    registry: mcr.microsoft.com
    repository: oss/bitnami/redis
    tag: 6.0.8
  fullnameOverride: azure-vote-backend
  auth:
    enabled: false
image:
  repository: idjbasicacr1.azurecr.io/azure-vote-front
  pullPolicy: IfNotPresent
  tag: "v1"
service:
  type: LoadBalancer
  port: 80
podAnnotations:
  dapr.io/config: "appconfig"
  dapr.io/app-id: "azure-vote-app"
  dapr.io/enabled: "true"
  dapr.io/app-port: "80"
```
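Helm copies `podAnnotations` verbatim into the pod template through the `{{- with .Values.podAnnotations }}` block in deployment.yaml, and the Dapr sidecar injector acts on those annotations. Kubernetes annotation values are strings, which is why the values file quotes `"true"` and `"80"`. As a quick pre-flight check, here is a hypothetical Python helper that inspects an annotation map for what this walkthrough relies on: injection enabled, an app id, the `appconfig` configuration, and a numeric app port. I made this check up for local sanity checking; it is not part of Dapr.

```python
def dapr_annotation_problems(annotations: dict, config_name: str = "appconfig") -> list:
    """Return human-readable problems; an empty list means the annotations look usable."""
    problems = []
    if annotations.get("dapr.io/enabled") != "true":
        problems.append('dapr.io/enabled must be the string "true"')
    if not annotations.get("dapr.io/app-id"):
        problems.append("dapr.io/app-id is missing")
    if annotations.get("dapr.io/config") != config_name:
        problems.append(f"dapr.io/config should be {config_name!r} to pick up its tracing settings")
    port = annotations.get("dapr.io/app-port")
    if port is not None and not str(port).isdigit():
        problems.append("dapr.io/app-port must be a port number")
    return problems


azure_vote = {
    "dapr.io/config": "appconfig",
    "dapr.io/app-id": "azure-vote-app",
    "dapr.io/enabled": "true",
    "dapr.io/app-port": "80",
}
print(dapr_annotation_problems(azure_vote))  # []
print(dapr_annotation_problems({"dapr.io/enabled": True}))  # three problems: bool, no app-id, no config
```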

$ helm upgrade -f values.yaml azure-vote-front --set fullnameOverride=azure-vote-front2 ./azure-vote-front/

Release "azure-vote-front" has been upgraded. Happy Helming!

NAME: azure-vote-front

LAST DEPLOYED: Fri Oct 22 07:55:28 2021

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get --namespace default svc -w azure-vote-front2'

export SERVICE_IP=$(kubectl get svc --namespace default azure-vote-front2 --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")

echo http://$SERVICE_IP:80

Verifying the new pod is annotated:

```
$ kubectl get pods azure-vote-front2-c8444f89-nm5qb -o yaml | head -n5
apiVersion: v1
kind: Pod
metadata:
  annotations:
    dapr.io/config: appconfig
```

And we see the sidecar was injected (the pod now has 2 containers):

```
$ kubectl get pods | grep azure-vote
azure-vote-backend-master-0          1/1   Running   0   16m
azure-vote-backend-replicas-0        1/1   Running   0   16m
azure-vote-backend-replicas-1        1/1   Running   0   15m
azure-vote-backend-replicas-2        1/1   Running   0   15m
azure-vote-front2-547d498848-bs9tc   2/2   Running   0   40s
```
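The READY column is the quickest tell. A Dapr-enabled pod runs the app container plus `daprd`, so it should report `2/2`. Here is a tiny hypothetical Python filter over `kubectl get pods` text output. It assumes the default column layout (NAME READY STATUS ...) and that app pods are otherwise single-container.

```python
def pods_with_sidecar(kubectl_output: str) -> list:
    """Names of pods whose READY column shows two or more containers, all of them ready."""
    names = []
    for line in kubectl_output.strip().splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[0] == "NAME" or "/" not in fields[1]:
            continue  # skip the header and anything that is not a pod row
        ready, total = (int(n) for n in fields[1].split("/"))
        if total >= 2 and ready == total:
            names.append(fields[0])
    return names


sample = """
azure-vote-backend-master-0 1/1 Running 0 16m
azure-vote-backend-replicas-0 1/1 Running 0 16m
azure-vote-front2-547d498848-bs9tc 2/2 Running 0 40s
"""
print(pods_with_sidecar(sample))  # ['azure-vote-front2-547d498848-bs9tc']
```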

Another way to confirm that apps are tracked by Dapr and have tracing enabled is the Dapr dashboard:

$ dapr dashboard -k

ℹ️ Dapr dashboard found in namespace: dapr-system

ℹ️ Dapr dashboard available at: http://localhost:8080

And we can see appconfig has tracing enabled:

This last part is presently “spaghetti against the wall,” as I try every exporter I can think of to get data to Lightstep:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-collector-conf
  labels:
    app: opentelemetry
    component: otel-collector-conf
data:
  otel-collector-config: |
    receivers:
      zipkin:
        endpoint: 0.0.0.0:9411
    extensions:
      health_check:
      pprof:
        endpoint: :1888
      zpages:
        endpoint: :55679
    exporters:
      logging:
        loglevel: debug
      # Depending on where you want to export your trace, use the
      # correct OpenTelemetry trace exporter here.
      #
      # Refer to
      # https://github.com/open-telemetry/opentelemetry-collector/tree/main/exporter
      # and
      # https://github.com/open-telemetry/opentelemetry-collector-contrib/tree/main/exporter
      # for full lists of trace exporters that you can use, and how to
      # configure them.
      zipkin:
        endpoint: "http://ingest.lightstep.com/api/v2/spans?lightstep.service_name=dapr&lightstep.access_token=Lr1WiHsuG2ezVkW5j%2F3SAtwaoQe3hE9GpZzVlwXF2zLJaT8FniZPPNkFHXaz26yfP9EGrNXMAlEPT149ItvL4YFVO0%2Fasdfasdfasdfasdf"
      otlphttp:
        endpoint: https://ingest.lightstep.com:443
      datadog/api:
        service: "dapr,lightstep.access_token:Lr1WiHsuG2ezVkW5j/3SAtwaoQe3hE9GpZzVlwXF2zLJaT8FniZPPNkFHXaz26yfP9EGrNXMAlEPT149ItvL4YFVO0/asdfasdfasdfasdf"
        env: "lightstep.access_token:Lr1WiHsuG2ezVkW5j/3SAtwaoQe3hE9GpZzVlwXF2zLJaT8FniZPPNkFHXaz26yfP9EGrNXMAlEPT149ItvL4YFVO0/asdfasdfasdfasdf"
        tags:
          - "lightstep.service_name:dapr,lightstep.access_token:Lr1WiHsuG2ezVkW5j/3SAtwaoQe3hE9GpZzVlwXF2zLJaT8FniZPPNkFHXaz26yfP9EGrNXMAlEPT149ItvL4YFVO0/asdfasdfasdfasdf"
        api:
          key: "Lr1WiHsuG2ezVkW5j/3SAtwaoQe3hE9GpZzVlwXF2zLJaT8FniZPPNkFHXaz26yfP9EGrNXMAlEPT149ItvL4YFVO0/asdfasdfasdfasdf"
          site: ingest.lightstep.com
    service:
      extensions: [pprof, zpages, health_check]
      pipelines:
        traces:
          receivers: [zipkin]
          # List your exporter here.
          exporters: [datadog/api,otlphttp,zipkin,logging]
          # datadog/api maybe?
---
apiVersion: v1
kind: Service
metadata:
  name: otel-collector
  labels:
    app: opencesus
    component: otel-collector
spec:
  ports:
  - name: zipkin # Default endpoint for Zipkin receiver.
    port: 9411
    protocol: TCP
    targetPort: 9411
  selector:
    component: otel-collector
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: otel-collector
  labels:
    app: opentelemetry
    component: otel-collector
spec:
  replicas: 1 # scale out based on your usage
  selector:
    matchLabels:
      app: opentelemetry
  template:
    metadata:
      labels:
        app: opentelemetry
        component: otel-collector
    spec:
      containers:
      - name: otel-collector
        image: otel/opentelemetry-collector-contrib-dev:latest
        command:
          - "/otelcontribcol"
          - "--config=/conf/otel-collector-config.yaml"
        resources:
          limits:
            cpu: 1
            memory: 2Gi
          requests:
            cpu: 200m
            memory: 400Mi
        ports:
          - containerPort: 9411 # Default endpoint for Zipkin receiver.
        volumeMounts:
          - name: otel-collector-config-vol
            mountPath: /conf
        livenessProbe:
          httpGet:
            path: /
            port: 13133
        readinessProbe:
          httpGet:
            path: /
            port: 13133
      volumes:
        - configMap:
            name: otel-collector-conf
            items:
              - key: otel-collector-config
                path: otel-collector-config.yaml
          name: otel-collector-config-vol
```
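In the collector config, every name listed under `service.pipelines` has to match a component declared in the `receivers`, `processors` or `exporters` sections. A `type/name` entry like `datadog/api` is a named instance of the `datadog` exporter. As far as I know, components that are declared but unused are simply not started. Here is a hypothetical Python check over the config expressed as a dict. Parsing the YAML would need a third-party library, so I left that out, and the tokens are elided.

```python
def pipeline_errors(config: dict) -> list:
    """References in service.pipelines that point at components that are not declared."""
    errors = []
    for pipeline, spec in config.get("service", {}).get("pipelines", {}).items():
        for section in ("receivers", "processors", "exporters"):
            declared = config.get(section) or {}
            for name in spec.get(section, []):
                if name not in declared:
                    errors.append(f"{pipeline}: {section[:-1]} '{name}' is not declared")
    return errors


def unused_exporters(config: dict) -> list:
    """Declared exporters that no pipeline uses."""
    pipelines = config.get("service", {}).get("pipelines", {})
    used = {name for spec in pipelines.values() for name in spec.get("exporters", [])}
    return sorted(set(config.get("exporters") or {}) - used)


# The ConfigMap above as a dict (tokens elided).
collector = {
    "receivers": {"zipkin": {"endpoint": "0.0.0.0:9411"}},
    "exporters": {
        "logging": {"loglevel": "debug"},
        "zipkin": {"endpoint": "..."},
        "otlphttp": {"endpoint": "https://ingest.lightstep.com:443"},
        "datadog/api": {"api": {"key": "..."}},
    },
    "service": {"pipelines": {"traces": {
        "receivers": ["zipkin"],
        "exporters": ["datadog/api", "otlphttp", "zipkin", "logging"],
    }}},
}
print(pipeline_errors(collector))   # []
print(unused_exporters(collector))  # []
```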

The pod kept restarting while validating the Datadog API key, so I’ll drop that block and try again:

$ kubectl describe pod otel-collector-67f645b9b7-vnq68 | tail -n10

Normal Scheduled 2m54s default-scheduler Successfully assigned default/otel-collector-67f645b9b7-vnq68 to aks-nodepool1-22955716-vmss000000

Normal Pulled 2m51s kubelet Successfully pulled image "otel/opentelemetry-collector-contrib-dev:latest" in 3.398318778s

Normal Pulled 2m25s kubelet Successfully pulled image "otel/opentelemetry-collector-contrib-dev:latest" in 471.59831ms

Warning Unhealthy 2m1s (x5 over 2m41s) kubelet Readiness probe failed: Get "http://10.244.1.16:13133/": dial tcp 10.244.1.16:13133: connect: connection refused

Normal Pulling 115s (x3 over 2m54s) kubelet Pulling image "otel/opentelemetry-collector-contrib-dev:latest"

Warning Unhealthy 115s (x6 over 2m45s) kubelet Liveness probe failed: Get "http://10.244.1.16:13133/": dial tcp 10.244.1.16:13133: connect: connection refused

Normal Killing 115s (x2 over 2m25s) kubelet Container otel-collector failed liveness probe, will be restarted

Normal Created 114s (x3 over 2m49s) kubelet Created container otel-collector

Normal Started 114s (x3 over 2m49s) kubelet Started container otel-collector

Normal Pulled 114s kubelet Successfully pulled image "otel/opentelemetry-collector-contrib-dev:latest" in 516.85306ms

$ kubectl get pods | grep otel

otel-collector-67f645b9b7-vnq68 0/1 CrashLoopBackOff 4 2m39s

I removed the Datadog block and tried again:

$ !596

kubectl apply -f otel-deployment.yaml

configmap/otel-collector-conf configured

service/otel-collector unchanged

deployment.apps/otel-collector configured

$ kubectl get pods | grep otel

otel-collector-67f645b9b7-7xwgs 1/1 Running 0 83s

But there were still no new entries in dev or prod…

I think the issue is with the TLS settings here.

Next, I tried Kompose to convert the docker-compose file here:

$ kompose convert

WARN Unsupported depends_on key - ignoring

WARN Service "jaeger-emitter" won't be created because 'ports' is not specified

WARN Service "metrics-load-generator" won't be created because 'ports' is not specified

WARN Volume mount on the host "../main.go" isn't supported - ignoring path on the host

WARN Volume mount on the host "./otel-agent-config.yaml" isn't supported - ignoring path on the host

WARN Volume mount on the host "./otel-collector-config.yaml" isn't supported - ignoring path on the host

WARN Volume mount on the host "./prometheus.yaml" isn't supported - ignoring path on the host

WARN Service "zipkin-emitter" won't be created because 'ports' is not specified

INFO Kubernetes file "jaeger-all-in-one-service.yaml" created

INFO Kubernetes file "otel-agent-service.yaml" created

INFO Kubernetes file "otel-collector-service.yaml" created

INFO Kubernetes file "prometheus-service.yaml" created

INFO Kubernetes file "zipkin-all-in-one-service.yaml" created

INFO Kubernetes file "jaeger-all-in-one-deployment.yaml" created

INFO Kubernetes file "jaeger-emitter-deployment.yaml" created

INFO Kubernetes file "metrics-load-generator-deployment.yaml" created

INFO Kubernetes file "metrics-load-generator-claim0-persistentvolumeclaim.yaml" created

INFO Kubernetes file "otel-agent-deployment.yaml" created

INFO Kubernetes file "otel-agent-claim0-persistentvolumeclaim.yaml" created

INFO Kubernetes file "otel-collector-deployment.yaml" created

INFO Kubernetes file "otel-collector-claim0-persistentvolumeclaim.yaml" created

INFO Kubernetes file "prometheus-deployment.yaml" created

INFO Kubernetes file "prometheus-claim0-persistentvolumeclaim.yaml" created

INFO Kubernetes file "zipkin-all-in-one-deployment.yaml" created

INFO Kubernetes file "zipkin-emitter-deployment.yaml" created

AKS Try 2

$ az account set --subscription "Visual Studio Enterprise Subscription" && az group create -n mydemoaksrg --location centralus && az aks create -n basicclusteridj -g mydemoaksrg && az aks get-credentials -n basicclusteridj -g mydemoaksrg --admin && dapr init -k

{

"id": "/subscriptions/d4c094eb-

... snip ...

}

A different object named basicclusteridj already exists in your kubeconfig file.

Overwrite? (y/n): y

A different object named basicclusteridj-admin already exists in your kubeconfig file.

Overwrite? (y/n): y

Merged "basicclusteridj-admin" as current context in /Users/johnisa/.kube/config

⌛ Making the jump to hyperspace...

ℹ️ Note: To install Dapr using Helm, see here: https://docs.dapr.io/getting-started/install-dapr-kubernetes/#install-with-helm-advanced

✅ Deploying the Dapr control plane to your cluster...

✅ Success! Dapr has been installed to namespace dapr-system. To verify, run `dapr status -k' in your terminal. To get started, go here: https://aka.ms/dapr-getting-started

Apply the Dapr Quickstarts

$ kubectl apply -f observability/deploy/

configuration.dapr.io/appconfig created

deployment.apps/multiplyapp created

deployment.apps/zipkin created

service/zipkin created

$ kubectl apply -f distributed-calculator/deploy/

configuration.dapr.io/appconfig unchanged

deployment.apps/subtractapp created

deployment.apps/addapp created

deployment.apps/divideapp created

deployment.apps/multiplyapp configured

service/calculator-front-end created

deployment.apps/calculator-front-end created

component.dapr.io/statestore created

Check on the services:

```
$ kubectl get svc
NAME                        TYPE           CLUSTER-IP     EXTERNAL-IP      PORT(S)                               AGE
addapp-dapr                 ClusterIP      None           <none>           80/TCP,50001/TCP,50002/TCP,9090/TCP   2m50s
calculator-front-end        LoadBalancer   10.0.106.178   52.158.214.175   80:30835/TCP                          2m50s
calculator-front-end-dapr   ClusterIP      None           <none>           80/TCP,50001/TCP,50002/TCP,9090/TCP   2m50s
divideapp-dapr              ClusterIP      None           <none>           80/TCP,50001/TCP,50002/TCP,9090/TCP   2m50s
kubernetes                  ClusterIP      10.0.0.1       <none>           443/TCP                               8m45s
multiplyapp-dapr            ClusterIP      None           <none>           80/TCP,50001/TCP,50002/TCP,9090/TCP   3m5s
subtractapp-dapr            ClusterIP      None           <none>           80/TCP,50001/TCP,50002/TCP,9090/TCP   2m50s
zipkin                      ClusterIP      10.0.130.97    <none>           9411/TCP                              3m5s
```

I’d rather not port-forward to Zipkin all the time, so let’s expose it. In production, we would not expose this without some form of auth:

```
$ kubectl expose deployment zipkin --type=LoadBalancer --name=zipkinext
service/zipkinext exposed

$ kubectl get svc zipkinext
NAME        TYPE           CLUSTER-IP    EXTERNAL-IP      PORT(S)          AGE
zipkinext   LoadBalancer   10.0.21.209   52.158.212.254   9411:31112/TCP   58s
```

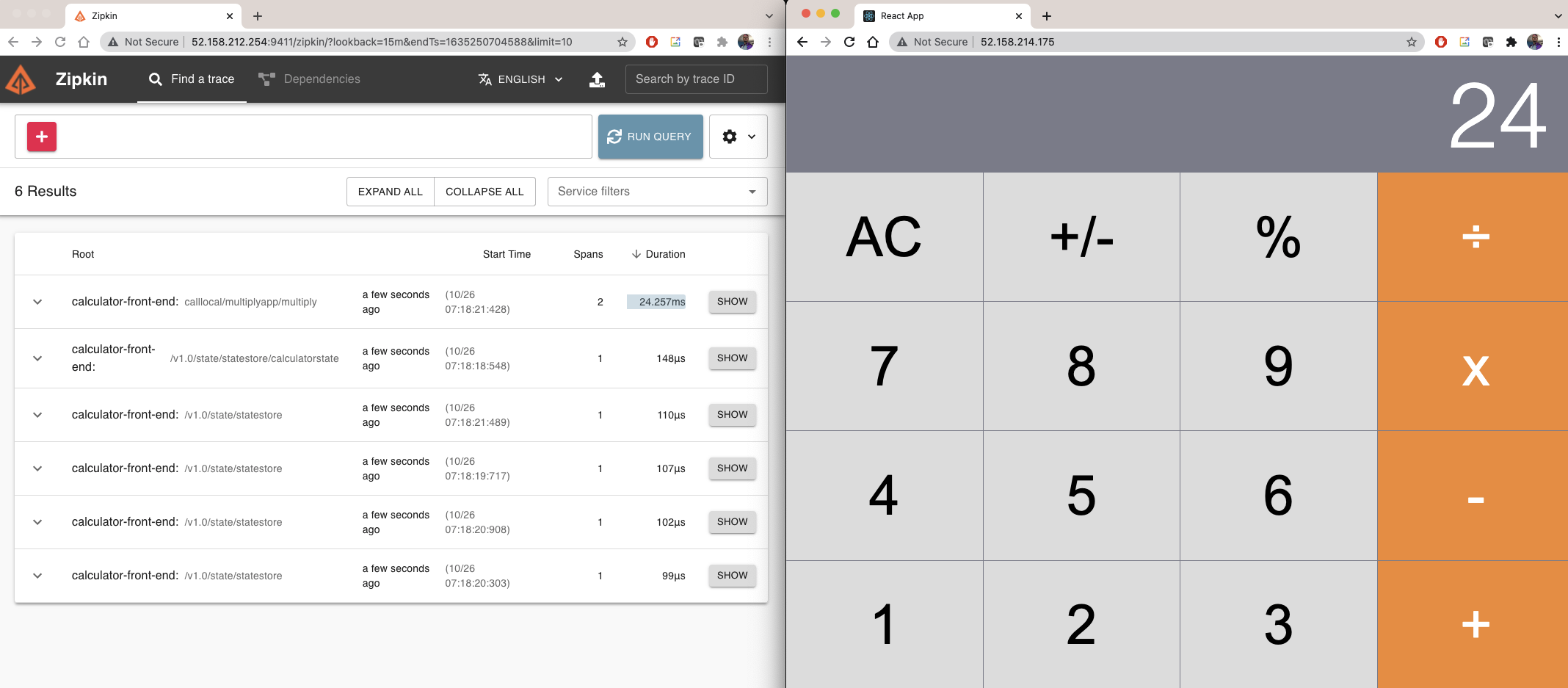

And we can immediately see trace data from the calculator app in standard, off-the-shelf Zipkin:

Now I’m going to try every permutation I can think of to pass the Lightstep token:

$ cat exporter.yaml

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: zipkinext1
spec:
  type: exporters.zipkin
  version: v1
  metadata:
  - name: enabled
    value: "true"
  - name: exporterAddress
    value: "https://ingest.lightstep.com:443/api/v2/spans?lightstep.access_token=AbBJcpF7hbXr83hqOQ4j5Je3LhfvaA41sG/BGKnb/Dlg/Bnip2jmqYSPNJbuZsY/7DLHxOUqeezjcO7SZEXtDHu1z0Z+hLEtQ/Xoasdf"
---
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: zipkinext2
spec:
  type: exporters.zipkin
  version: v1
  metadata:
  - name: enabled
    value: "true"
  - name: exporterAddress
    value: "https://ingest.lightstep.com:443/api/v2/spans?lightstep.access_token=AbBJcpF7hbXr83hqOQ4j5Je3LhfvaA41sG%2FBGKnb%2FDlg%2FBnip2jmqYSPNJbuZsY%2F7DLHxOUqeezjcO7SZEXtDHu1z0Z%2BhLEtQ%2FXoasdf"
---
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: zipkinext3
spec:
  type: exporters.zipkin
  version: v1
  metadata:
  - name: enabled
    value: "true"
  - name: exporterAddress
    value: "https://ingest.lightstep.com:443?lightstep.access_token=AbBJcpF7hbXr83hqOQ4j5Je3LhfvaA41sG/BGKnb/Dlg/Bnip2jmqYSPNJbuZsY/7DLHxOUqeezjcO7SZEXtDHu1z0Z+hLEtQ/Xoasdf"
---
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: zipkinext4
spec:
  type: exporters.zipkin
  version: v1
  metadata:
  - name: enabled
    value: "true"
  - name: exporterAddress
    value: "https://ingest.lightstep.com:443?lightstep.access_token=AbBJcpF7hbXr83hqOQ4j5Je3LhfvaA41sG%2FBGKnb%2FDlg%2FBnip2jmqYSPNJbuZsY%2F7DLHxOUqeezjcO7SZEXtDHu1z0Z%2BhLEtQ%2FXoasdf"
```
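Here is why the encoded and unencoded variants behave differently. The token contains `/` and `+`. In a URL query string, a literal `+` is commonly decoded as a space, so the raw form can arrive at the server as a different token, while `%2B` survives intact. Python's standard library shows the difference below, using a dummy token. The reverse caveat applies to HTTP headers such as a `lightstep-access-token` header: header values are not percent-decoded in general, so a header should carry the raw token. This is a general URL-handling sketch. I am not claiming to know how Lightstep's ingest endpoint actually parses its parameters.

```python
from urllib.parse import parse_qs, quote, urlencode, urlsplit

token = "Ab/Cd+Ef=="  # dummy token with the same awkward characters as a real one
base = "https://ingest.lightstep.com:443/api/v2/spans"

# Raw token pasted into the query string: '+' comes back as a space after decoding.
raw_url = f"{base}?lightstep.access_token={token}"
decoded_raw = parse_qs(urlsplit(raw_url).query)["lightstep.access_token"][0]
print(decoded_raw)  # Ab/Cd Ef==

# Percent-encode everything (safe="") so '/', '+' and '=' survive the round trip.
encoded = quote(token, safe="")
print(encoded)  # Ab%2FCd%2BEf%3D%3D
enc_url = f"{base}?lightstep.access_token={encoded}"
print(parse_qs(urlsplit(enc_url).query)["lightstep.access_token"][0] == token)  # True

# urlencode builds the same query string in one step.
print(urlencode({"lightstep.access_token": token}))
```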

Applying:

$ kubectl apply -f exporter.yaml

component.dapr.io/zipkinext1 created

component.dapr.io/zipkinext2 created

component.dapr.io/zipkinext3 created

component.dapr.io/zipkinext4 created

Rotate the pods to force the sidecars to pick up the change:

```
$ kubectl get pods
NAME                                    READY   STATUS    RESTARTS   AGE
addapp-86cfcb8969-dfkwf                 2/2     Running   1          22m
calculator-front-end-5bb947dfcf-cdk8p   2/2     Running   1          22m
divideapp-6b94b477f5-6f7ld              2/2     Running   1          22m
multiplyapp-545c4bc54d-v644w            2/2     Running   1          22m
subtractapp-5c6c6bc4fc-lck42            2/2     Running   1          22m
zipkin-789f9f965f-z6jpq                 1/1     Running   0          22m
```

$ kubectl delete pod addapp-86cfcb8969-dfkwf && kubectl delete pod calculator-front-end-5bb947dfcf-cdk8p && kubectl delete pod divideapp-6b94b477f5-6f7ld && kubectl delete pod multiplyapp-545c4bc54d-v644w && kubectl delete pod subtractapp-5c6c6bc4fc-lck42

pod "addapp-86cfcb8969-dfkwf" deleted

pod "calculator-front-end-5bb947dfcf-cdk8p" deleted

pod "divideapp-6b94b477f5-6f7ld" deleted

pod "multiplyapp-545c4bc54d-v644w" deleted

pod "subtractapp-5c6c6bc4fc-lck42" deleted

I then used the calculator app, but no services showed up.

I also tried applying all four variants to appconfig.yaml to see if that would work:

$ kubectl get configuration appconfig -o yaml > appconfig.yaml

$ vi appconfig.yaml

$ kubectl apply -f appconfig.yaml

configuration.dapr.io/appconfig configured

$ kubectl delete --all pods --namespace=default

pod "addapp-86cfcb8969-nk42g" deleted

pod "calculator-front-end-5bb947dfcf-dggw8" deleted

pod "divideapp-6b94b477f5-9rrxm" deleted

pod "multiplyapp-545c4bc54d-57p4g" deleted

pod "subtractapp-5c6c6bc4fc-254xm" deleted

pod "zipkin-789f9f965f-745xm" deleted

I would get errors in the daprd sidecar:

2021/10/26 12:45:48 failed the request with status code 400

2021/10/26 12:45:50 failed the request with status code 400

So again, I did my damnedest to make this work.

Comparing to Datadog

As I could not get Dapr to send data to Lightstep via OTel, and every example sent to me thus far has been running on-prem or exposing Kubernetes through an ingress (which seems rather nuts), I wanted to sanity-check things by just adding Datadog to this fresh AKS setup:

I changed the Deployment’s container spec back to contrib (image: otel/opentelemetry-collector-contrib-dev:latest), set the command to the contrib binary (“/otelcontribcol”) and lastly added a Datadog exporter with my API key.

From the YAML:

```yaml
data:
  otel-collector-config: |
    receivers:
      zipkin:
        endpoint: 0.0.0.0:9411
    processors:
      batch:
      memory_limiter:
        ballast_size_mib: 700
        limit_mib: 1500
        spike_limit_mib: 100
        check_interval: 5s
    extensions:
      health_check:
      pprof:
        endpoint: :1888
      zpages:
        endpoint: :55679
    exporters:
      logging:
        loglevel: debug
      # Depending on where you want to export your trace, use the
      # correct OpenTelemetry trace exporter here.
      #
      # Refer to
      # https://github.com/open-telemetry/opentelemetry-collector/tree/main/exporter
      # and
      # https://github.com/open-telemetry/opentelemetry-collector-contrib/tree/main/exporter
      # for full lists of trace exporters that you can use, and how to
      # configure them.
      zipkin:
        endpoint: "ingest.lightstep.com:443"
      otlp:
        endpoint: "ingest.lightstep.com:443"
        headers:
          "lightstep-access-token": "Lr1WiHsuG2ezVkW5j%2F3SAtwaoQe3hE9GpZzVlwXF2zLJaT8FniZPPNkFHXaz26yfP9EGrNXMAlEPT149ItvL4YFVO0%asdfasdfasdf"
      datadog:
        api:
          key: "f******************************4"
    service:
      extensions: [pprof, zpages, health_check]
      pipelines:
        traces:
          receivers: [zipkin]
          processors: [memory_limiter, batch]
          # List your exporter here.
          exporters: [otlp,logging,datadog]
          # datadog/api maybe?
---
apiVersion: v1
kind: Service
metadata:
  name: otel-collector
  labels:
    app: opencesus
    component: otel-collector
spec:
  ports:
  - name: zipkin # Default endpoint for Zipkin receiver.
    port: 9411
    protocol: TCP
    targetPort: 9411
  selector:
    component: otel-collector
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: otel-collector
  labels:
    app: opentelemetry
    component: otel-collector
spec:
  replicas: 1 # scale out based on your usage
  selector:
    matchLabels:
      app: opentelemetry
  template:
    metadata:
      labels:
        app: opentelemetry
        component: otel-collector
    spec:
      containers:
      - name: otel-collector
        image: otel/opentelemetry-collector-contrib-dev:latest # otel/opentelemetry-collector-dev:latest # otel/opentelemetry-collector:latest #
        command:
          - "/otelcontribcol" # "/otelcol"
          - "--config=/conf/otel-collector-config.yaml"
```
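The `memory_limiter` numbers need to fit inside the pod's resource limits. Per the processor's documentation as I understand it, `limit_mib` is the hard limit and `limit_mib - spike_limit_mib` is the soft limit at which it starts refusing data. The container limit must sit above the hard limit. Here is that arithmetic as a quick Python sketch. It assumes the same 2Gi memory limit as the earlier otel-collector Deployment, since this excerpt stops before `resources`. The helper name and the "fits" rule are my own.

```python
def memory_limiter_summary(limit_mib: int, spike_limit_mib: int, container_limit_mib: int) -> dict:
    """Hard/soft limits of the memory_limiter and the headroom left under the container limit."""
    soft = limit_mib - spike_limit_mib
    return {
        "hard_limit_mib": limit_mib,
        "soft_limit_mib": soft,
        "headroom_mib": container_limit_mib - limit_mib,
        "fits": 0 < soft < limit_mib < container_limit_mib,
    }


# limit_mib and spike_limit_mib from the config above; 2Gi = 2048 MiB.
print(memory_limiter_summary(limit_mib=1500, spike_limit_mib=100, container_limit_mib=2 * 1024))
# {'hard_limit_mib': 1500, 'soft_limit_mib': 1400, 'headroom_mib': 548, 'fits': True}
```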

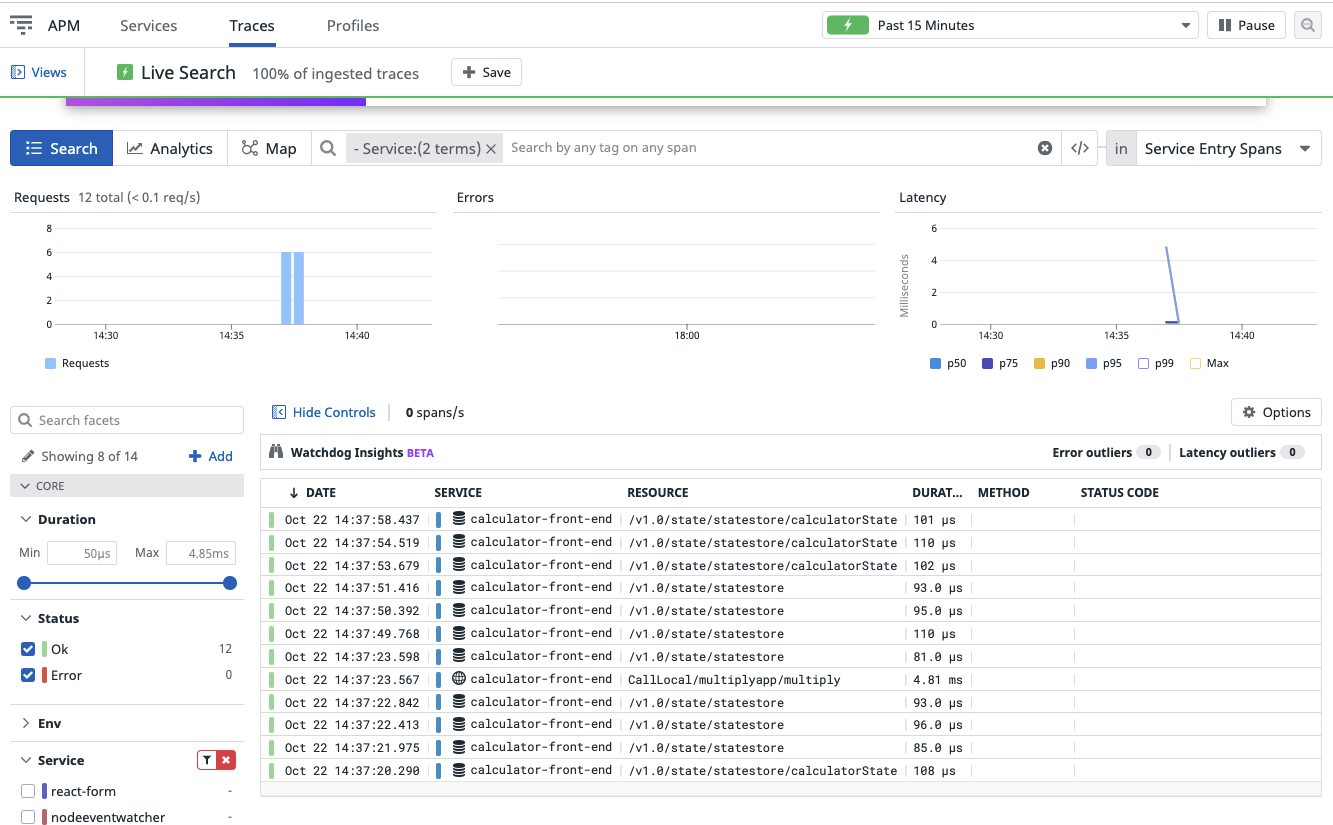

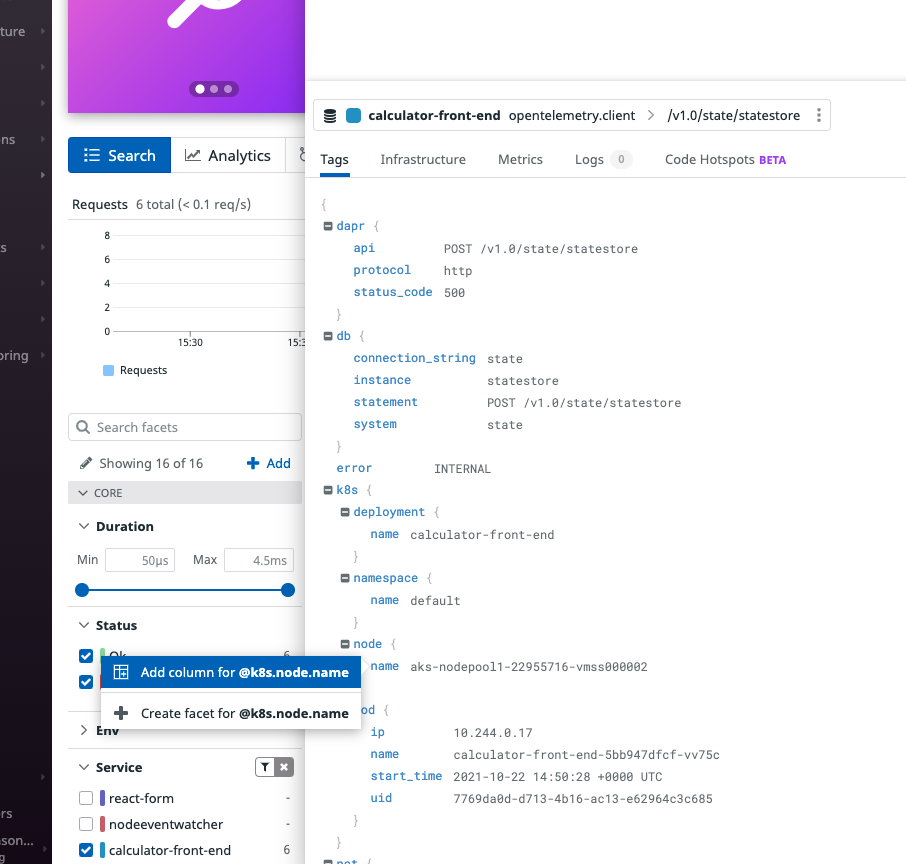

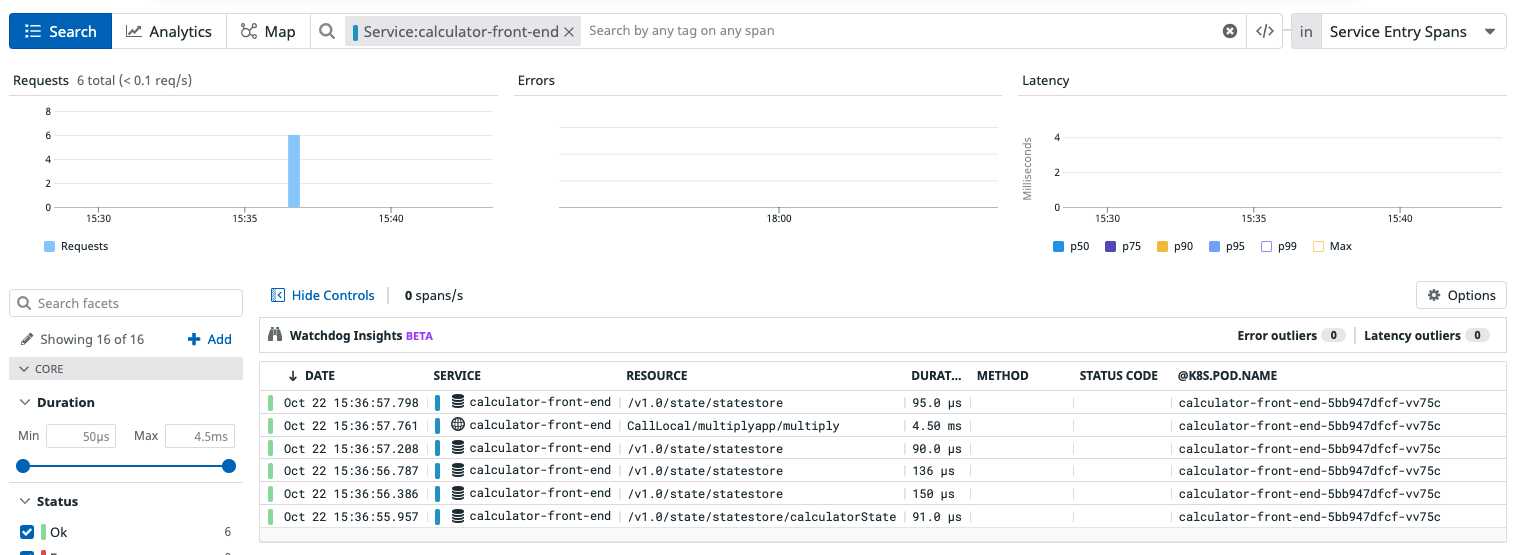

Almost immediately after clicking around the Calculator App, I got traces:

Let’s amp it up. I’m going to add the k8sattributes processor and redeploy:

```yaml
receivers:
  zipkin:
    endpoint: 0.0.0.0:9411
processors:
  batch:
  k8sattributes:
  memory_limiter:
    ballast_size_mib: 700
    limit_mib: 1500
    spike_limit_mib: 100
    check_interval: 5s
extensions:
  health_check:
  pprof:
    endpoint: :1888
  zpages:
    endpoint: :55679
exporters:
  logging:
    loglevel: debug
  # Depending on where you want to export your trace, use the
  # correct OpenTelemetry trace exporter here.
  #
  # Refer to
  # https://github.com/open-telemetry/opentelemetry-collector/tree/main/exporter
  # and
  # https://github.com/open-telemetry/opentelemetry-collector-contrib/tree/main/exporter
  # for full lists of trace exporters that you can use, and how to
  # configure them.
  zipkin:
    endpoint: "ingest.lightstep.com:443"
  otlp:
    endpoint: "ingest.lightstep.com:443"
    headers:
      "lightstep-access-token": "Lr1WiHsuG2ezVkW5j%2F3SAtwaoQe3hE9GpZzVlwXF2zLJaT8FniZPPNkFHXaz26yfP9EGrNXMAlEPT149ItvL4YFVO0%asdfasdfasdf"
  datadog:
    env: development
    service: mydaprservice
    api:
      key: "f******************4"
service:
  extensions: [pprof, zpages, health_check]
  pipelines:
    traces:
      receivers: [zipkin]
      processors: [memory_limiter, k8sattributes, batch]
      # List your exporter here.
      exporters: [otlp,logging,datadog]
```
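The bare k8sattributes entry above relies entirely on the processor’s defaults. A hedged sketch of spelling out what it attaches, assuming a recent contrib build: the option names and attribute keys below come from the processor’s current README and have changed across versions, so treat them as assumptions against the 2021 dev image used here. I left out deployment-level attributes on purpose, since newer versions need extra RBAC (replicasets) to resolve them:

```yaml
processors:
  k8sattributes:
    # Authenticate to the API server with the pod's service account,
    # which is why the collector needs permission to list/watch pods.
    auth_type: "serviceAccount"
    # false: the processor itself looks up pod metadata (no upstream agent).
    passthrough: false
    extract:
      # Attribute keys as named in recent contrib releases (assumption).
      metadata:
        - k8s.namespace.name
        - k8s.pod.name
        - k8s.pod.uid
        - k8s.node.name
```

Being explicit mostly helps when comparing what each backend (Datadog, Lightstep) shows against what the collector was actually asked to attach.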

I noticed an error in the logs; it seems we need to adjust some RBAC rules:

```
E1022 19:59:05.443238 1 reflector.go:138] k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.Pod: failed to list *v1.Pod: pods is forbidden: User "system:serviceaccount:default:default" cannot list resource "pods" in API group "" at the cluster scope: RBAC: clusterrole.rbac.authorization.k8s.io "service-reader" not found
E1022 19:59:40.563543 1 reflector.go:138] k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.Pod: failed to list *v1.Pod: pods is forbidden: User "system:serviceaccount:default:default" cannot list resource "pods" in API group "" at the cluster scope: RBAC: clusterrole.rbac.authorization.k8s.io "service-reader" not found
```

I’m going to create a ClusterRole that lets the k8sattributes processor read pods and services:

```
$ cat readerrole.yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: default # has no effect: ClusterRoles are cluster-scoped
  name: servicepod-reader
rules:
- apiGroups: [""] # "" indicates the core API group
  resources: ["services"]
  verbs: ["get", "watch", "list"]
- apiGroups: [""] # "" indicates the core API group
  resources: ["pods"]
  verbs: ["get", "watch", "list"]

$ kubectl apply -f readerrole.yaml
clusterrole.rbac.authorization.k8s.io/servicepod-reader created
```

Then bind it to the default service account that the collector runs as:

```
$ kubectl create clusterrolebinding servicepod-reader-pod --clusterrole=servicepod-reader --serviceaccount=default:default
clusterrolebinding.rbac.authorization.k8s.io/servicepod-reader-pod created
```
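Binding the role to default:default works, but every pod in the default namespace shares that account and would inherit the read access. A sketch of a declarative alternative, assuming a dedicated service account; the names otel-collector and servicepod-reader-otel-collector are my own, and the collector Deployment would need serviceAccountName set to match:

```yaml
# Dedicated identity for the collector (hypothetical name).
apiVersion: v1
kind: ServiceAccount
metadata:
  name: otel-collector
  namespace: default
---
# Declarative counterpart of `kubectl create clusterrolebinding`,
# granting the servicepod-reader ClusterRole to that account only.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: servicepod-reader-otel-collector
subjects:
  - kind: ServiceAccount
    name: otel-collector
    namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: servicepod-reader
# The Deployment's pod template then needs:
#   spec:
#     serviceAccountName: otel-collector
```

Keeping it in a file also means it can be applied alongside the rest of the collector manifests instead of being a one-off command.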

Lastly, rotate the collector pod so it picks up the new permissions:

```
$ kubectl delete pod `kubectl get pods -l component=otel-collector -o json | grep name | head -n1 | sed 's/",//' | sed 's/^.*"//' | tr -d '\n'`
pod "otel-collector-67f645b9b7-9kbrx" deleted
```

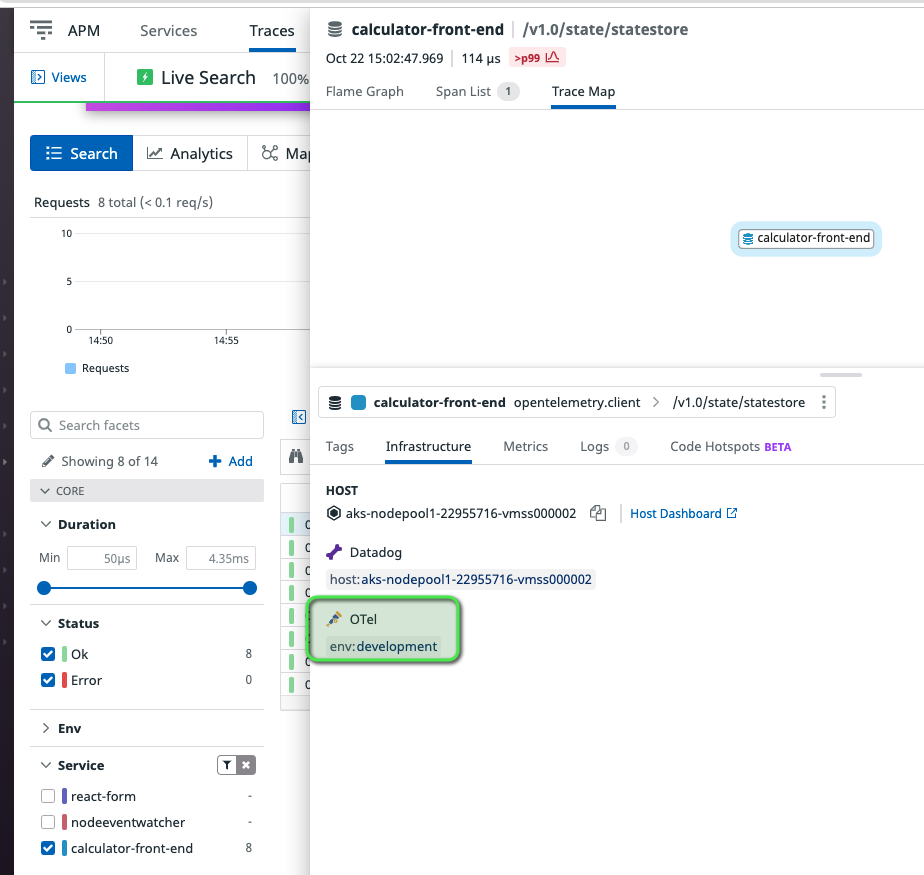

And we can see the tag added:

Next, adding in host metrics (the hostmetrics receiver) along with a metrics pipeline:

```yaml
data:
  otel-collector-config: |
    receivers:
      zipkin:
        endpoint: 0.0.0.0:9411
      hostmetrics:
        scrapers:
          cpu:
          disk:
          memory:
          network:
    processors:
      batch:
      k8sattributes:
      memory_limiter:
        ballast_size_mib: 700
        limit_mib: 1500
        spike_limit_mib: 100
        check_interval: 5s
    extensions:
      health_check:
      pprof:
        endpoint: :1888
      zpages:
        endpoint: :55679
    exporters:
      logging:
        loglevel: debug
      datadog:
        env: development
        service: mydaprservice
        api:
          key: "f************************************4"
    service:
      extensions: [pprof, zpages, health_check]
      pipelines:
        traces:
          receivers: [zipkin]
          processors: [memory_limiter, k8sattributes, batch]
          exporters: [logging,datadog]
        metrics:
          receivers: [hostmetrics]
          processors: [batch]
          exporters: [datadog]
---
```
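The hostmetrics receiver above runs with its default scrape interval and four scrapers. A hedged sketch of tuning it, assuming the collection_interval option and the filesystem and load scrapers are available in your build (they are in current contrib releases; I have not checked the 2021 dev image). The 30s value is an arbitrary example:

```yaml
receivers:
  hostmetrics:
    # How often every scraper runs; 30s is an example value, not a recommendation.
    collection_interval: 30s
    scrapers:
      cpu:
      disk:
      filesystem:
      load:
      memory:
      network:
```

A shorter interval gives finer graphs in the Metrics Explorer at the cost of more time series sent to Datadog.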

This then lets me see the Kubernetes details in the trace:

And clicking “Add a Column” lets me see that info inline with the trace:

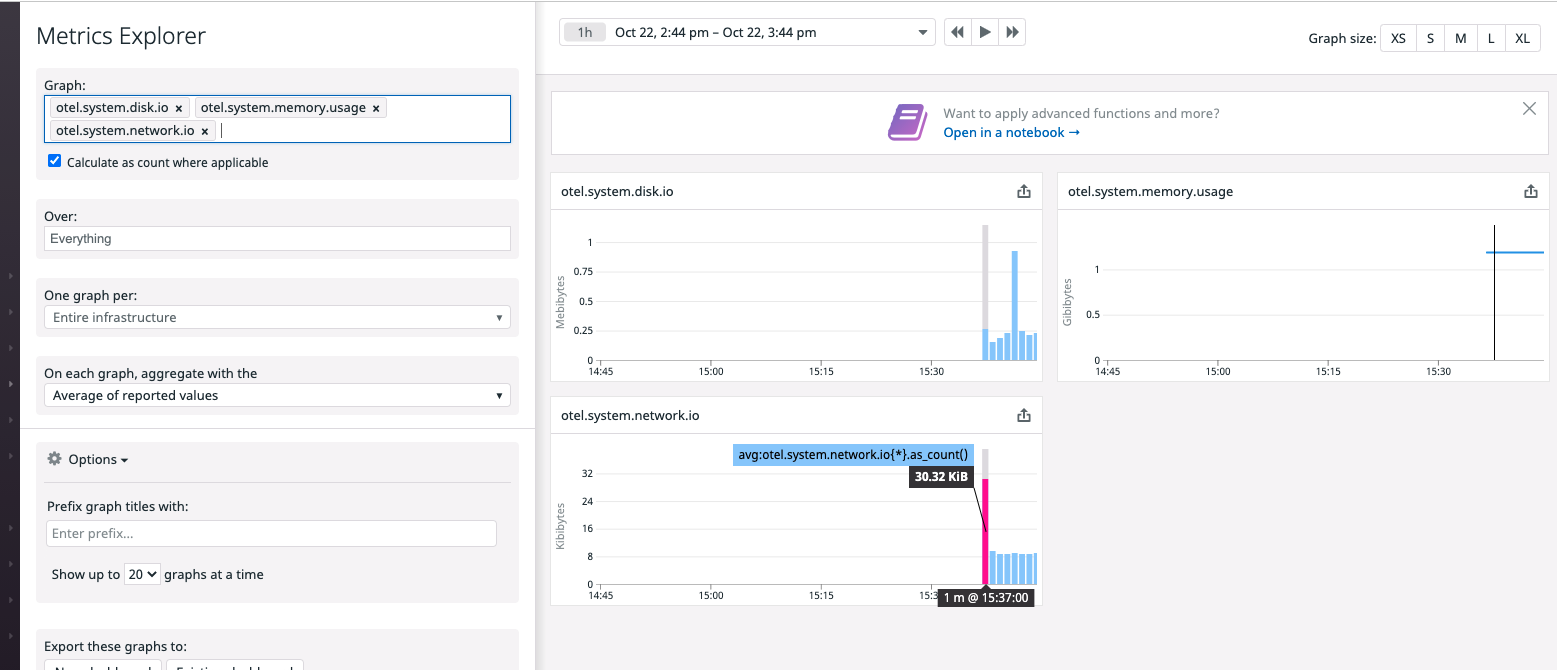

Lastly, I can use the Metrics Explorer in Datadog to view the hostmetrics scraper data:

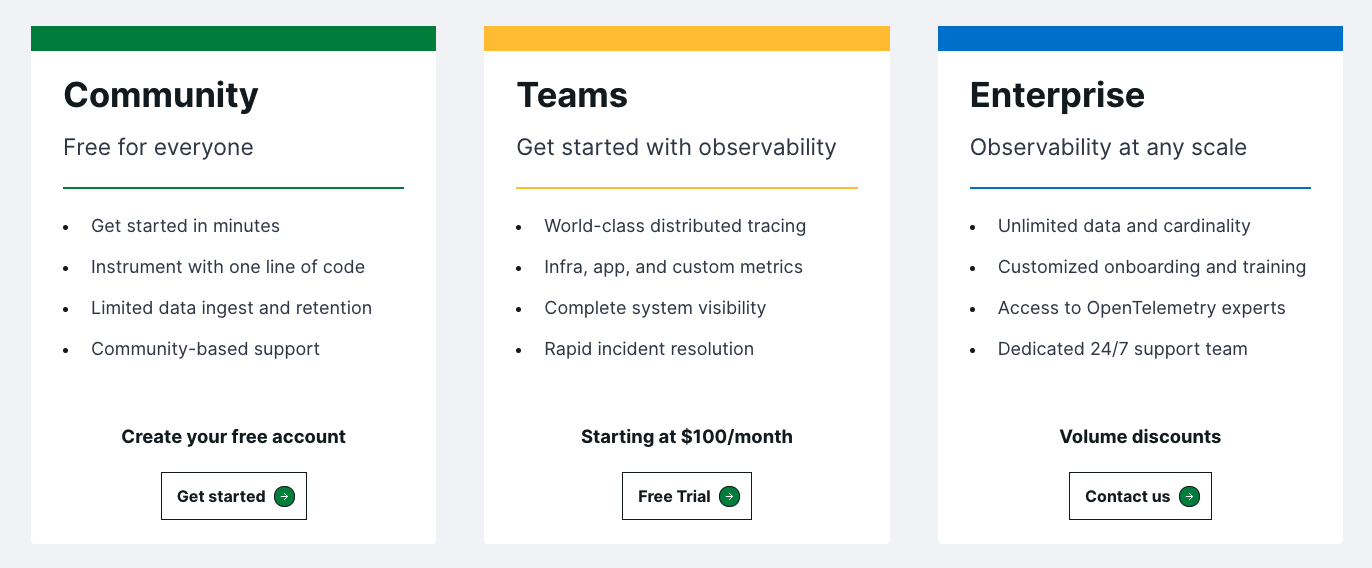

Pricing

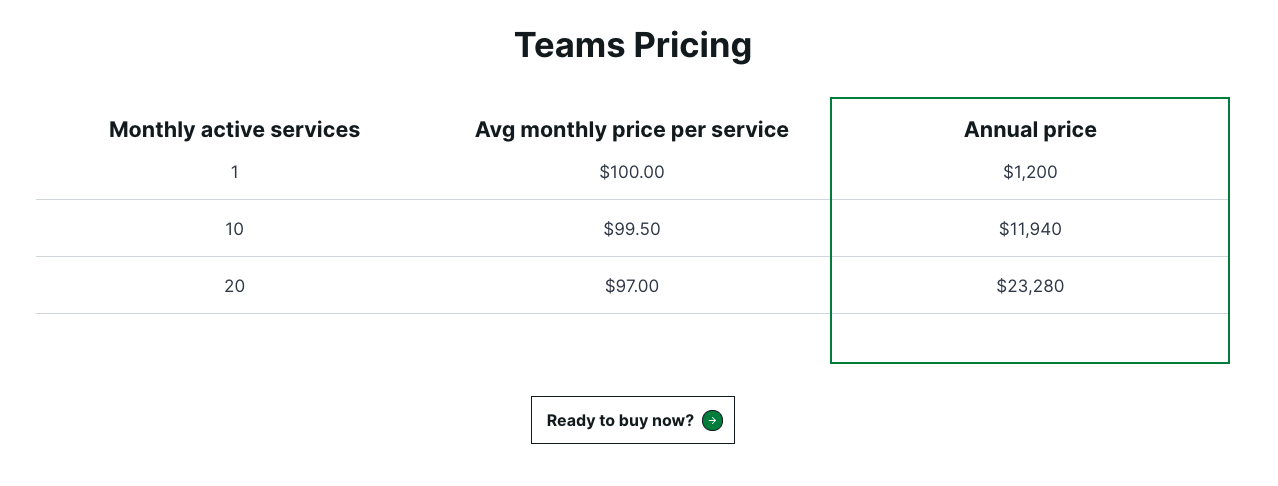

They have three options: a free Community edition (which I’ve been using here), a Teams edition that essentially costs $100/service/month, and an Enterprise edition, which I would guess is their “let’s make a deal” option.

Teams Details (at time of writing):

I should point out that the $100 or so per month per service gives you 1 TB of span data, 10k time series, 20 key operations and 10 streams for that service.

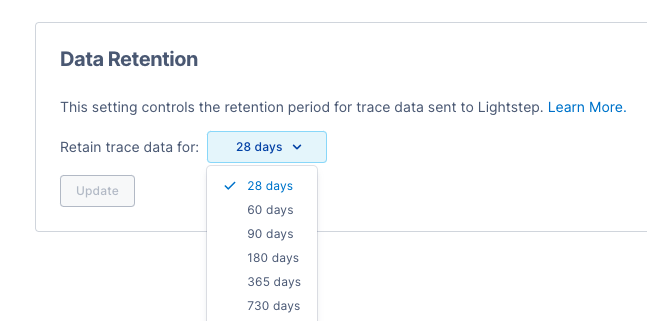

Also, the default retention is 28 days, but you can increase it (I did not see a cost note on that, so presumably it just counts against your per-service TB quota):

By comparison, Datadog (DD) uses a per-host model of US$31-$40 per host for all the APM traces from that host. DD also prices serverless functions at $5/month.

Summary

I was interested in hearing more about Lightstep after being pinged a few times by a sales rep. He mentioned that I had expressed interest at the last KubeCon, which I had forgotten. I recalled little of their product, and at first I confused Dapper and Dapr, as they are homophones (Dapper being the Google project that inspired Zipkin, the latter being the Distributed Application Runtime that Microsoft now shepherds).

I really like the tracing abilities. It has all these clever transforms of the trace data, which I could imagine being useful in a more complicated setup.

What I didn’t like was that every example sent to me, every one that came up in search, and those in their docs all detailed direct instrumentation. I really do not want my instrumentation implementation compiled into my code; zero-touch instrumentation is an important detail for me.

For my own work, I tend to use Dapr via OTel to trace service-to-service calls without having to modify my code. More importantly, in most real-world situations, we don’t want separate binaries, because then our production and test environments differ (the ‘debug’ container vs the ‘prod’ container).

This is both for cleanliness and to avoid vendor lock-in. If my code is pre-compiled to transmit data to a specific vendor on a specific key, then I have to manage a tracing key as well as open ports. If my data is sensitive, then I have to examine everything that is sent to make sure GDPR and FDA rules are not violated.

Moreover, I felt that the tool lacked a key piece: a collection agent. Perhaps I’m just old school, but tools like New Relic, Sumo Logic and Datadog all have ‘contrib’ exporters in OpenTelemetry, whereas Lightstep wanted me to try to shoehorn the Datadog settings into redirecting data to them. This felt a bit dirty to me (relying on a competitor’s agent), and I can only imagine that in time the Datadog agent would be updated in a way that breaks trace data transmission.

The last example I was sent involved opening an ingress to expose trace data on a public endpoint, from which Lightstep would then consume it. I also don’t care for opening a public ingress just to export a stream of trace data.

In the end, given the lack of Dapr-via-OTel support (or at least my inability to solve it myself), I decided I didn’t want to fight any further. I tried on multiple days and clusters; I gave it a real shot.

Perhaps developers more experienced than I am can make Lightstep work for their needs. Personally, I see it as a very pretty SaaS “Zipkin+”, and if I have to work that hard to collect traces, I would likely be just fine hosting my own Zipkin instance.

My opinion is that Lightstep is an APM play using trace data. They talked to me about ‘log data’ attached to traces, but I could not replicate that (though I saw them demo it, so presumably it can be done).

Ben Sigelman let me know they are much more than that now; he sees it as a “fully-fledged primary observability tool”, with many companies using them just for that. However, I saw only Jaeger and Zipkin formats, and the problem I see is that the Zipkin format doesn’t natively send log data, and the data they showed me was more about attributes (metadata) than log data.