Published: Mar 19, 2021 by Isaac Johnson

I was chatting with some folks from Mirantis about Lens and some of their offerings and they highly encouraged me to try out K0s (“K Zeros”). This week I took some time to dig into it. My goal was mostly trying to see how it compared to Rancher’s K3s.

Setup

Let’s start with the Pi and go from there. # spoiler: this didn’t go too well.

We first download the binary:

$ curl -sSLf https://get.k0s.sh | sudo sh

Downloading k0s from URL: https://github.com/k0sproject/k0s/releases/download/v0.11.0/k0s-v0.11.0-arm64

Verify the binary is installed:

$ k0s --help

k0s - The zero friction Kubernetes - https://k0sproject.io

This software is built and distributed by Mirantis, Inc., and is subject to EULA https://k0sproject.io/licenses/eula

Usage:

k0s [command]

Available Commands:

api Run the controller api

completion Generate completion script

controller Run controller

default-config Output the default k0s configuration yaml to stdout

docs Generate Markdown docs for the k0s binary

etcd Manage etcd cluster

help Help about any command

install Helper command for setting up k0s on a brand-new system. Must be run as root (or with sudo)

kubeconfig Create a kubeconfig file for a specified user

kubectl kubectl controls the Kubernetes cluster manager

reset Helper command for uninstalling k0s. Must be run as root (or with sudo)

status Helper command for get general information about k0s

token Manage join tokens

validate Helper command for validating the config file

version Print the k0s version

worker Run worker

Flags:

-c, --config string config file (default: ./k0s.yaml)

--data-dir string Data Directory for k0s (default: /var/lib/k0s). DO NOT CHANGE for an existing setup, things will break!

-d, --debug Debug logging (default: false)

--debugListenOn string Http listenOn for debug pprof handler (default ":6060")

-h, --help help for k0s

Use "k0s [command] --help" for more information about a command.

The thing to remember is that there is no uninstall command; “reset” serves that purpose.
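As a rough sketch of what an "uninstall" could look like, the helper below stops the service and then calls reset. The unit name k0scontroller.service is assumed to match the one started later in this post, and the `run` and `k0s_uninstall` names are purely illustrative. By default it only prints what it would do.

```bash
# Sketch only: stop the k0s service, then wipe its state with "k0s reset".
# Assumption: the systemd unit is called k0scontroller.service.
# With DRY_RUN=1 (the default) commands are printed instead of executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "would run: $*"
  else
    "$@"
  fi
}

k0s_uninstall() {
  run sudo systemctl stop k0scontroller.service
  run sudo k0s reset
}

# Usage:
#   k0s_uninstall               # dry run, prints the two commands
#   DRY_RUN=0 k0s_uninstall     # actually stop and reset
```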

Installing k0s on Pi

Installing is a two-step process (https://docs.k0sproject.io/latest/k0s-single-node/). First we generate a config that drives it. This is very similar to kubespray or kubeadm.

ubuntu@ubuntu:~/k0s$ k0s default-config > k0s.yaml

ubuntu@ubuntu:~/k0s$ cat k0s.yaml

apiVersion: k0s.k0sproject.io/v1beta1

images:

konnectivity:

image: us.gcr.io/k8s-artifacts-prod/kas-network-proxy/proxy-agent

version: v0.0.13

metricsserver:

image: gcr.io/k8s-staging-metrics-server/metrics-server

version: v0.3.7

kubeproxy:

image: k8s.gcr.io/kube-proxy

version: v1.20.4

coredns:

image: docker.io/coredns/coredns

version: 1.7.0

calico:

cni:

image: calico/cni

version: v3.16.2

flexvolume:

image: calico/pod2daemon-flexvol

version: v3.16.2

node:

image: calico/node

version: v3.16.2

kubecontrollers:

image: calico/kube-controllers

version: v3.16.2

installConfig:

users:

etcdUser: etcd

kineUser: kube-apiserver

konnectivityUser: konnectivity-server

kubeAPIserverUser: kube-apiserver

kubeSchedulerUser: kube-scheduler

kind: Cluster

metadata:

name: k0s

spec:

api:

address: 192.168.1.208

sans:

- 192.168.1.208

- 172.17.0.1

storage:

type: etcd

etcd:

peerAddress: 192.168.1.208

network:

podCIDR: 10.244.0.0/16

serviceCIDR: 10.96.0.0/12

provider: calico

calico:

mode: vxlan

vxlanPort: 4789

vxlanVNI: 4096

mtu: 1450

wireguard: false

flexVolumeDriverPath: /usr/libexec/k0s/kubelet-plugins/volume/exec/nodeagent~uds

withWindowsNodes: false

overlay: Always

podSecurityPolicy:

defaultPolicy: 00-k0s-privileged

telemetry:

interval: 10m0s

enabled: true

Now install and start the service

ubuntu@ubuntu:~/k0s$ sudo k0s install controller -c ~/k0s/k0s.yaml --enable-worker

INFO[2021-03-16 18:14:08] creating user: etcd

INFO[2021-03-16 18:14:08] creating user: kube-apiserver

INFO[2021-03-16 18:14:09] creating user: konnectivity-server

INFO[2021-03-16 18:14:09] creating user: kube-scheduler

INFO[2021-03-16 18:14:09] Installing k0s service

ubuntu@ubuntu:~/k0s$ sudo systemctl start k0scontroller.service

Accessing it (or rather, trying to)

Trying to access it proved a bit challenging. I couldn’t directly use the built-in kubectl, even after opening up read access to the PKI directory, because it rejected the API server’s certificate:

ubuntu@ubuntu:~/k0s$ sudo chmod a+rx /var/lib/k0s/

ubuntu@ubuntu:~/k0s$ sudo chmod a+rx /var/lib/k0s/pki

ubuntu@ubuntu:~/k0s$ sudo chmod a+r /var/lib/k0s/pki/*.*

ubuntu@ubuntu:~/k0s$ k0s kubectl get nodes

Unable to connect to the server: x509: certificate signed by unknown authority

Then I tried a snap install of kubectl, using a copy of the admin kubeconfig:

ubuntu@ubuntu:~/k0s$ cp /var/lib/k0s/pki/admin.conf ~/.kube/config

ubuntu@ubuntu:~/k0s$ sudo snap install kubectl --classic

kubectl 1.20.4 from Canonical✓ installed

ubuntu@ubuntu:~/k0s$ kubectl get nodes --insecure-skip-tls-verify

error: You must be logged in to the server (Unauthorized)

I actually tried a lot of hand-rigged paths, making new configs, etc. Nothing would get past either the certificate signature issue or the login issue.
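One diagnostic I could have tried, sketched here under assumptions: check whether the CA embedded in the kubeconfig is the same CA the running cluster uses. The helper name `kubeconfig_ca_pem` is made up, it only handles a single-cluster kubeconfig like the ones in this post, and the /var/lib/k0s/pki/ca.crt path in the usage note is an assumption about where k0s keeps its CA.

```bash
# Sketch: print the CA certificate embedded in a kubeconfig as PEM.
# Only the first certificate-authority-data entry is considered.
kubeconfig_ca_pem() {
  awk '$1 == "certificate-authority-data:" { print $2; exit }' "$1" | base64 --decode
}

# Usage (the ca.crt path is an assumption):
#   kubeconfig_ca_pem ~/.kube/config > /tmp/kubeconfig-ca.pem
#   sudo diff /tmp/kubeconfig-ca.pem /var/lib/k0s/pki/ca.crt && echo "same CA"
```

If the two differ, the kubeconfig likely came from an earlier install and would explain both the x509 and the Unauthorized errors, though I did not verify that on the Pi.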

Trying on WSL

First we download as before

builder@DESKTOP-JBA79RT:~/k0s$ curl -sSLf https://get.k0s.sh | sudo sh

[sudo] password for builder:

Downloading k0s from URL: https://github.com/k0sproject/k0s/releases/download/v0.11.1/k0s-v0.11.1-amd64

Then let’s make a config

builder@DESKTOP-JBA79RT:~/k0s$ k0s default-config > k0s.yaml

builder@DESKTOP-JBA79RT:~/k0s$ cat k0s.yaml

apiVersion: k0s.k0sproject.io/v1beta1

images:

konnectivity:

image: us.gcr.io/k8s-artifacts-prod/kas-network-proxy/proxy-agent

version: v0.0.13

metricsserver:

image: gcr.io/k8s-staging-metrics-server/metrics-server

version: v0.3.7

kubeproxy:

image: k8s.gcr.io/kube-proxy

version: v1.20.4

coredns:

image: docker.io/coredns/coredns

version: 1.7.0

calico:

cni:

image: calico/cni

version: v3.16.2

flexvolume:

image: calico/pod2daemon-flexvol

version: v3.16.2

node:

image: calico/node

version: v3.16.2

kubecontrollers:

image: calico/kube-controllers

version: v3.16.2

installConfig:

users:

etcdUser: etcd

kineUser: kube-apiserver

konnectivityUser: konnectivity-server

kubeAPIserverUser: kube-apiserver

kubeSchedulerUser: kube-scheduler

kind: Cluster

metadata:

name: k0s

spec:

api:

address: 172.25.248.143

sans:

- 172.25.248.143

storage:

type: etcd

etcd:

peerAddress: 172.25.248.143

network:

podCIDR: 10.244.0.0/16

serviceCIDR: 10.96.0.0/12

provider: calico

calico:

mode: vxlan

vxlanPort: 4789

vxlanVNI: 4096

mtu: 1450

wireguard: false

flexVolumeDriverPath: /usr/libexec/k0s/kubelet-plugins/volume/exec/nodeagent~uds

withWindowsNodes: false

overlay: Always

podSecurityPolicy:

defaultPolicy: 00-k0s-privileged

telemetry:

interval: 10m0s

enabled: true

And while we can install, this WSL instance isn’t booted with systemd, so we cannot start it as a service:

builder@DESKTOP-JBA79RT:~/k0s$ sudo k0s install controller -c ~/k0s/k0s.yaml --enable-worker

INFO[2021-03-19 08:02:54] creating user: etcd

INFO[2021-03-19 08:02:54] creating user: kube-apiserver

INFO[2021-03-19 08:02:54] creating user: konnectivity-server

INFO[2021-03-19 08:02:54] creating user: kube-scheduler

INFO[2021-03-19 08:02:54] Installing k0s service

builder@DESKTOP-JBA79RT:~/k0s$ sudo systemctl start k0scontroller.service

System has not been booted with systemd as init system (PID 1). Can't operate.

However, we can just start the service manually…

builder@DESKTOP-JBA79RT:~/k0s$ sudo /usr/bin/k0s controller --config=/home/builder/k0s/k0s.yaml --enable-worker=true

INFO[2021-03-19 08:09:26] using public address: 172.25.248.143

INFO[2021-03-19 08:09:26] using sans: [172.25.248.143]

INFO[2021-03-19 08:09:26] DNS address: 10.96.0.10

INFO[2021-03-19 08:09:26] Using storage backend etcd

INFO[2021-03-19 08:09:26] initializing Certificates

…

Note: this is a chatty service so expect a lot of log scroll…

In a fresh bash shell

$ sudo cat /var/lib/k0s/pki/admin.conf > ~/.kube/config

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

desktop-jba79rt Ready <none> 57s v1.20.4-k0s1
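Since the controller runs in the foreground here, the admin kubeconfig only appears some time after startup. A small polling helper, sketched below, avoids copying an empty file. `wait_for_file` is a hypothetical name, and depending on directory permissions the check may need to run as root.

```bash
# Sketch: wait until a file exists and is non-empty, or give up after N seconds.
wait_for_file() {
  path=$1
  timeout=${2:-60}
  elapsed=0
  while [ ! -s "$path" ]; do
    [ "$elapsed" -ge "$timeout" ] && return 1
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 0
}

# Usage, in the second shell:
#   wait_for_file /var/lib/k0s/pki/admin.conf 120 \
#     && sudo cat /var/lib/k0s/pki/admin.conf > ~/.kube/config
```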

Trying on a mac

My first inclination was to follow the same steps as on Linux. But the install script really only covers Linux on arm and x64, and on macOS it could not even write to /usr/bin:

$ curl -sSLf https://get.k0s.sh | sudo sh

Password:

Downloading k0s from URL: https://github.com/k0sproject/k0s/releases/download/v0.11.1/k0s-v0.11.1-amd64

sh: line 39: /usr/bin/k0s: Operation not permitted

However, we can try with the k0sctl binary. From releases (https://github.com/k0sproject/k0sctl/releases) we can download the latest k0sctl for Mac: https://github.com/k0sproject/k0sctl/releases/download/v0.5.0/k0sctl-darwin-x64

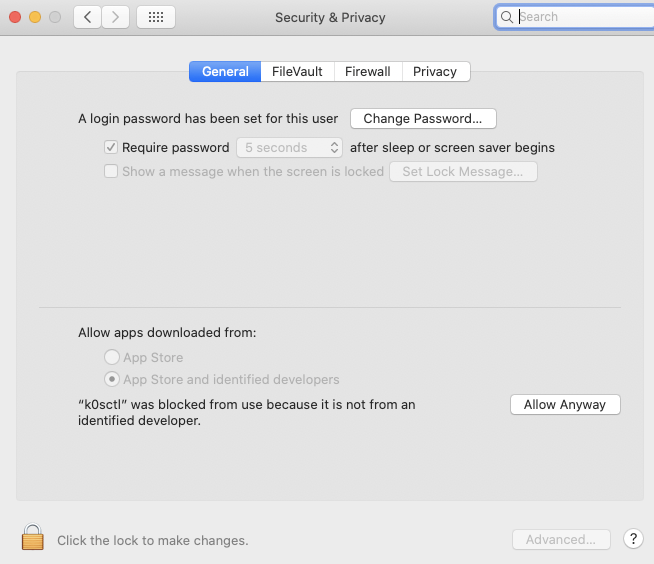

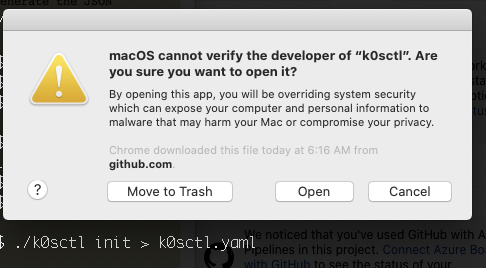

As an unsigned binary, we have to fight macOS (because they know better than me what I want to run on my computer…grrr).

You can go to Security & Privacy to allow it. Of course, you used to be able to set “always allow”, but they disabled it (because they know better than me and want to force me to use their walled garden app store).

Once you click “Allow Anyway”, you are given the privilege of clicking “Open” in the next window.

We can now see the YAML file it created

$ cat k0sctl.yaml

apiVersion: k0sctl.k0sproject.io/v1beta1
kind: Cluster
metadata:
  name: k0s-cluster
spec:
  hosts:
  - ssh:
      address: 10.0.0.1
      user: root
      port: 22
      keyPath: /Users/isaacj/.ssh/id_rsa
    role: controller
  - ssh:
      address: 10.0.0.2
      user: root
      port: 22
      keyPath: /Users/isaacj/.ssh/id_rsa
    role: worker
  k0s:
    version: 0.11.1

I thought perhaps it would create some local VMs or something, but clearly this is more like kubespray: it plans to SSH in as root and update the nodes:

$ ./k0sctl apply -d --config k0sctl.yaml

⠀⣿⣿⡇⠀⠀⢀⣴⣾⣿⠟⠁⢸⣿⣿⣿⣿⣿⣿⣿⡿⠛⠁⠀⢸⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠀█████████ █████████ ███

⠀⣿⣿⡇⣠⣶⣿⡿⠋⠀⠀⠀⢸⣿⡇⠀⠀⠀⣠⠀⠀⢀⣠⡆⢸⣿⣿⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀███ ███ ███

⠀⣿⣿⣿⣿⣟⠋⠀⠀⠀⠀⠀⢸⣿⡇⠀⢰⣾⣿⠀⠀⣿⣿⡇⢸⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠀███ ███ ███

⠀⣿⣿⡏⠻⣿⣷⣤⡀⠀⠀⠀⠸⠛⠁⠀⠸⠋⠁⠀⠀⣿⣿⡇⠈⠉⠉⠉⠉⠉⠉⠉⠉⢹⣿⣿⠀███ ███ ███

⠀⣿⣿⡇⠀⠀⠙⢿⣿⣦⣀⠀⠀⠀⣠⣶⣶⣶⣶⣶⣶⣿⣿⡇⢰⣶⣶⣶⣶⣶⣶⣶⣶⣾⣿⣿⠀█████████ ███ ██████████

k0sctl v0.5.0 Copyright 2021, k0sctl authors.

Anonymized telemetry of usage will be sent to the authors.

By continuing to use k0sctl you agree to these terms:

https://k0sproject.io/licenses/eula

DEBU[0000] Preparing phase 'Connect to hosts'

INFO[0000] ==> Running phase: Connect to hosts

ERRO[0075] [ssh] 10.0.0.2:22: attempt 1 of 60.. failed to connect: dial tcp 10.0.0.2:22: connect: operation timed out

ERRO[0075] [ssh] 10.0.0.1:22: attempt 1 of 60.. failed to connect: dial tcp 10.0.0.1:22: connect: operation timed out

ERRO[0080] [ssh] 10.0.0.1:22: attempt 2 of 60.. failed to connect: dial tcp 10.0.0.1:22: connect: connection refused
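Rather than waiting through 60 retries, a quick pre-flight check can show whether the hosts in the config are reachable at all. This is a minimal sketch: the function names are invented, it assumes port 22 for every host, and it needs `nc` on the path.

```bash
# Sketch: print every "address:" value found in a k0sctl config, one per line.
k0sctl_hosts() {
  awk '$1 == "address:" { print $2 }' "$1"
}

# Report which of those hosts accept TCP connections on port 22.
check_ssh_reachable() {
  for host in $(k0sctl_hosts "$1"); do
    if nc -z -w 3 "$host" 22 >/dev/null 2>&1; then
      echo "$host reachable"
    else
      echo "$host unreachable"
    fi
  done
}

# Usage:
#   check_ssh_reachable k0sctl.yaml
```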

Docker version

Since I’m looking for a fast solution, let’s move on to the dockerized version and see how well that works.

docker run -d --name k0s --hostname k0s --privileged -v /var/lib/k0s -p 6443:6443 docker.io/k0sproject/k0s:latest

Unable to find image 'k0sproject/k0s:latest' locally

latest: Pulling from k0sproject/k0s

f84cab65f19f: Pull complete

62ee4735a41a: Pull complete

86e9485af690: Pull complete

4fe5e4ec65da: Pull complete

30d8cc0db847: Pull complete

Digest: sha256:5e915f27453e1561fb848ad3a5b8d76dd7efd926c3ba97a291c5c33542150619

Status: Downloaded newer image for k0sproject/k0s:latest

387ade843d505caafdfc6f1234c9b1c1da6317407c961d79ea17bd5c667bb042

We can see this ran

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

387ade843d50 k0sproject/k0s:latest "/sbin/tini -- /bin/…" 37 seconds ago Up 35 seconds 0.0.0.0:6443->6443/tcp k0s

Let’s grab that kubeconfig:

$ docker exec k0s cat /var/lib/k0s/pki/admin.conf

apiVersion: v1

clusters:

- cluster:

server: https://localhost:6443

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURBRENDQWVpZ0F3SUJBZ0lVTXpFRDFsTWk5K21jLzYzWCtINmpVUVk5V1Jrd0RRWUpLb1pJaHZjTkFRRUwKQlFBd0dERVdNQlFHQTFVRUF4TU5hM1ZpWlhKdVpYUmxjeTFqWVRBZUZ3MHlNVEF6TVRreE1USTBNREJhRncwegpNVEF6TVRjeE1USTBNREJhTUJneEZqQVVCZ05WQkFNVERXdDFZbVZ5Ym1WMFpYTXRZMkV3Z2dFaU1BMEdDU3FHClNJYjNEUUVCQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUURocUxTZWdTYU5TY1hJa2JHOEE1eWlCbDhwWjV1M2RtZHkKTHA3R2JMZlRvRVFYazUvRHk2bi9xVXZMQThMcFZKV05xVU1tSDV4MFFMaWZXSmt4am5XNXVWNUFhVkdrdDB2VgpDaVVzazY0bHNJY2xpem5xY09oNFRVb3VQVUZZNVQrQUp4aDV2eUxSTjJVV3B6TkpqZDkvVG4xZ0RJWStSczdkCnZ2TVdBbkt2dkNFUmkxQmxtTStYZWM3WTVmTkJiTzZ0NWZtTGJpMm5UQUFUMHZNdHRYUFNhaUd2K0pqN2ZWbjAKZm1JRTNkanY0VkNTY3pnWU10Tmt1T3dDMzJteHZBSEhVYXNFVzBWbDdZemJ2K1FSaTd6aWNXckhLeGdsaWlGcwo3dy80L3NrSGoySHZkYlRMc2k5RzRKTkJld1UvQ1dlVVNaTFo2ait2eU9peWdnRmdSTkZaQWdNQkFBR2pRakJBCk1BNEdBMVVkRHdFQi93UUVBd0lCQmpBUEJnTlZIUk1CQWY4RUJUQURBUUgvTUIwR0ExVWREZ1FXQkJRS2J3U2wKbFFjUlJHYjZxMmI4NUswZFZaTHByVEFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBakY1MitEOEQrQkpodXFDcQo1dVV4N2RTMFZpcFZzQWtaWmxTZFNmWGc5aUdOVjBzQ0NJbXdBMWdBS2Q0RE5qdjRnTElhSTgwU2hXcnFoY0c5CjhFaElEbW85UzBEWjdmbisySGNMTUIxTnNWMmRNTW5jVE5oSC9SUmRhZWZ3aFE4NnZYdnRvcVlhRURWRm1lUkIKaWorYWdTL0gyTkU1SmsvaEloV2QycDArYTdsbUVKdm9oajQ0Mi9yQnpJcXVNSEdWSVNjaVhvRzNqUUhCUWdzdApKRE56amxZc3V0REpTcXY1N3RTeHkxaFpCTXF1SU5seWQyak5ibEZmaUNCRlp3NUJrV2hSS01MVVl6YTFJYXVsCnpxcHl2N0s3RjhONHA4S2t6VHdjNHMzZmRBNU9jcGJRQUpmdXl1RmhVRXREYk9DMi9HVHpYTncrZEcyN2ZralcKL2xxT3lnPT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

name: local

contexts:

- context:

cluster: local

namespace: default

user: user

name: Default

current-context: Default

kind: Config

preferences: {}

users:

- name: user

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURXVENDQWtHZ0F3SUJBZ0lVRU9KaE83dG5wa21wYWJqSUFrbFI0TW5wb2o0d0RRWUpLb1pJaHZjTkFRRUwKQlFBd0dERVdNQlFHQTFVRUF4TU5hM1ZpWlhKdVpYUmxjeTFqWVRBZUZ3MHlNVEF6TVRreE1USTBNREJhRncweQpNakF6TVRreE1USTBNREJhTURReEZ6QVZCZ05WQkFvVERuTjVjM1JsYlRwdFlYTjBaWEp6TVJrd0Z3WURWUVFECkV4QnJkV0psY201bGRHVnpMV0ZrYldsdU1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0MKQVFFQTcveENGampVeXZ2VmNIT1JCbFI0cDNsSk1NbWV4NXhNMVVoeU1qYmVjbjhPSm16aS9jNXNIRFZkdi9lRApUaWdVMGFIR3FSeXE2RWdmQmdsWHZPOHJZR3VlUE5mUkp4TU5FcFFhMWp2MnVtY0grRVowMmxKbFJEMDU0RE9KCmdBRzJIM1ZsTmthTFFtVGNNRnorRUNVaTJyeHNmdmJwUkU4WHhDcS9vWXJnZzNvSVozQTZSN21laUdGV1NlbjIKRnZ0WC82Zkd2T3MvaGZ1ekIvamsyV1RkMGthNkJNc241OExLM1BWeDZoc05yL3hVZWtTbkRQdXpjVDVtUE0yUQowdWdoMGZNd0NKVDBtalpwVSszSkpOd3FiblNFZzVCWXhSaEhoYXdqTVZRbmUzaERrK013ZGtxMlRvNWt5RFF5CmRycUl4MFlaMjJBdmVpQ2xEbjZPOUpWWDRRSURBUUFCbzM4d2ZUQU9CZ05WSFE4QkFmOEVCQU1DQmFBd0hRWUQKVlIwbEJCWXdGQVlJS3dZQkJRVUhBd0VHQ0NzR0FRVUZCd01DTUF3R0ExVWRFd0VCL3dRQ01BQXdIUVlEVlIwTwpCQllFRkJDMEpSOE4yY2pvbXp0Uk0zRExZTU04TzBYUk1COEdBMVVkSXdRWU1CYUFGQXB2QktXVkJ4RkVadnFyClp2emtyUjFWa3VtdE1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQWxCaFRhMVR4M3VKbnNIY0FEdXVSeFNFNm0KYVkxdlp1K0hBTHo5dFlnTW53eUt5aGZKQnRLS3VtUE43SXlrR21zRDFSNDEydXRsVTQ2QWdqL1BLdVFoYUpHbApacHpoRDcvenI3M29GZ29nQWlSYTd3bW1iYVRxZmh4OHpxR3hqWGtGRnZhRjZRQVZDVzhYVlkzUU8rN1FxSDBXCmtvNyt6Rjl5UG5LdWQvQWZnZHlWUTRheWI5NE9pQ2lmdGNOcTZZU1hWb1lmbHBibXo3NTBKbEV2T2QvUUt6WEoKbHNrQjM3eFNZZytHUlpJWVdtdDRnOWtXZzlQRHMwM0dQVnlEcWJPV1RzU3l0Y3o5MzliTmhCWURXSzFsbTA3RgpReXFVaGdOOUt4L3hYc0NhNmpHMjg2cXBQVGl5UlRUampPa01pUE1mVk9lcFRINXFESGNnU0d0UTBHOVkKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBNy94Q0ZqalV5dnZWY0hPUkJsUjRwM2xKTU1tZXg1eE0xVWh5TWpiZWNuOE9KbXppCi9jNXNIRFZkdi9lRFRpZ1UwYUhHcVJ5cTZFZ2ZCZ2xYdk84cllHdWVQTmZSSnhNTkVwUWExanYydW1jSCtFWjAKMmxKbFJEMDU0RE9KZ0FHMkgzVmxOa2FMUW1UY01GeitFQ1VpMnJ4c2Z2YnBSRThYeENxL29ZcmdnM29JWjNBNgpSN21laUdGV1NlbjJGdnRYLzZmR3ZPcy9oZnV6Qi9qazJXVGQwa2E2Qk1zbjU4TEszUFZ4NmhzTnIveFVla1NuCkRQdXpjVDVtUE0yUTB1Z2gwZk13Q0pUMG1qWnBVKzNKSk53cWJuU0VnNUJZeFJoSGhhd2pNVlFuZTNoRGsrTXcKZGtxMlRvNWt5RFF5ZHJxSXgwWVoyMkF2ZWlDbERuNk85SlZYNFFJREFRQUJBb0lCQUFrcVlqL0ZCZU9Hd0wwZAp2TkU0RXErYXd6L1l3M2F1TW9VRzBrMjJxTUpJdGZxanQvdW5rWnQyTWxGdG01YzFrcTM2OXNKb3RPSlFOdGNkCkxqdXFDc2NROVNjdnV1NDZIUXF6bTE3SlJZQVRCVXZuTFBsVm9rWjc3Q1R5OHZKdm5rd1BTTUZNNm02cnVYa2oKWkJTcnhtd0NQRWFSR1pHaFQ3QVBUZWhXaklLWTZKLzZWWnU3M2VZQVJDQk9JS3ZPY3k4VmRzV2NlMmQvMHcrVQpFYkk5c0k2bmpiTTZmdzA1Y1IxN3FUcUxCWnhWeEdQWTVWa3kzWUdSQ3RsaUZNRGZaeGlYM3AvTThUVzdLalJZCmtGeXNEeklyT0JJUDJlQVVjOGFQa1daK2ExdVJxcTdpNllpQmlTUnVnT081WnBCblViVEdxcHZhWVRtVkdRNzAKZ2ZNaSswRUNnWUVBK3dqbkRYTDAveDNHdG1sWm9qV1krY0E2TDFwa2xkdmhlV2kvSFNwUERxZ01HdFphTTJGVwo4OFRyWGpZVDgveW9SR2MwTmU0eVBqdEFKdXJac0xJckMzVzRuNkwvOUJCZXJncHQ5VjEvU2N3b1lJTklBYllFCkwzWTltQnpHKzhTNGtJaCtQL29odUU4V2pRTkxjTzh3VC9hNlBLeEJJVXdpbmp6NmtPQW5RR2tDZ1lFQTlMdG8KWXUzSEEwQ25TenhIME1TSG1SclpUZGIrYlliL2VUSjkzbHVXWkhSZVlmQ0xIQWhkZkpZUlhqNFRqLyt3Mnp5UApQdVIza0Y5Yi9qcHZuZldQeUI1YXdsZURHajVTNFVneGcwTXRieTlseWFsYTI5SmxBcFNwK0dpS0hoeTNmYzNECk1qVGRwbG1DSTljVnVuNEtRd2hhQS84TldudDhSZ1Uxd3BjLzdMa0NnWUVBMTJkMU0ySWYvY1RrSE9QYktNZ0sKNHN0aVlmMlRiOC9EOHJUQndObnNDbXlDTG9rZHp4YklVTlg1RE5ja1dlakR6aVlzYzlaWFFIVUJBQ1BtOWFwOApLeEl4Z0xHU0pTL2l2ajV1eWVzWGJSQ0UyUVB0UnFLVGh0SlQyZkZmZ092MVh2ZndOUitCemEvM2JycVVBbTBMCnJLSE9mbjlrUjVrWDMyWDlyMURYL1hrQ2dZRUF2SFR3Zm5ZQ01iUUEzOFBNdmF3SmkxSU1rbytEbjQ0OGZ2VHQKem1RUzNNcHJ2OW0vRmRndlBYaEdhWjg5Nno2SHoxdkVKemRDQnpBWHBCOFZ3cnJOZk5vN0k3ckdIMWhzOUVSbQp0R0R2eE5YbitUSHI0S2tVMWJicmFIb0FHZzRkRXNoM2p0cjg4Rk92RHJCYmNDQU5BTytXZWN0WFdoMExadFF1ClFPbTk5U2tDZ1lCMVZMOFFiSXJMVUhKRzh
OUnAvV0tvbnI5NnJPRm85Tk80YTBqdFYyT1dVVjUyTk1SeUJUMUcKWjZTMU01SUp2M1o2eHZIalMvVWlHenpseTFUQm93K0ZtbHk4Q2dJWHBCUEZYek5Fb1hEYzQzNWt4NHAybWJhQQpoRWhWVEtyZlcvdTlNUXFhaXUvbGtPSWljZHdpWktoV3BvSXltaUN1SE9jUEp6MXZsaERTWVE9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

We can pipe that to our kubeconfig to view the nodes:

$ docker exec k0s cat /var/lib/k0s/pki/admin.conf > ~/.kube/config

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k0s Ready <none> 2m8s v1.20.4-k0s1
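Piping straight into ~/.kube/config overwrites whatever cluster was configured there before. A gentler variant, sketched here with a hypothetical `save_kubeconfig` helper and an illustrative file name, writes to a path of your choosing and keeps a backup of any existing file.

```bash
# Sketch: write stdin to a kubeconfig path, keeping a .bak copy of any existing file.
save_kubeconfig() {
  dest=$1
  mkdir -p "$(dirname "$dest")"
  if [ -f "$dest" ]; then
    cp "$dest" "$dest.bak"
  fi
  cat > "$dest"
  chmod 600 "$dest"
}

# Usage (the file name is illustrative):
#   docker exec k0s cat /var/lib/k0s/pki/admin.conf | save_kubeconfig "$HOME/.kube/k0s-docker-config"
#   KUBECONFIG="$HOME/.kube/k0s-docker-config" kubectl get nodes
```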

Next let’s add a worker

isaacj-macbook:k0s isaacj$ docker exec -t -i k0s k0s token create --role=worker

H4sIAAAAAAAC/2yVzW7jOhKF93kKv0D31Y+Ve21gFi2bjCJHzJAiixJ3tKgbWdRfJMWRNZh3H8TdDcwAsytWHXyHIIg6D3q4QDlOl77bb67uQ9F8THM5TvuHb5tf9f5hs9lspnK8luN+U83zMO3/+MP90/vu/vnd+e7tH7db/64pynG+/H0p9Fx+0x9z1Y+X+fbN6FnvNy+pM7+k7oEJE/NLeGQQi1SomDpYsPvMmQ/WjVPBQobIkUoYlIP9VMShchrg2YDZEU9c2uDkufXLmq/yMD+TdgABNgA3Ho3DmBTDy9kdYh2pmlvMmPg80QaHxjGIgSG0wRHlGJjAWy6CKnHVIJvqZGDIRbvUJcfvEliohPKTqCGA8CMHNpbIFSkPCUNxxbris3wbfs/q/55xEXclUu8U4KCcQFKLCSCWmSNWZ1BB3roywUPOM6YSC77yDNbCDRNkjsLH0aEhcV4TJAQcKGCgAjmpiJFx8F2bOvj69SZCsL4QC1fS8FwEPHerWHtx9IqQW8rmcD5Wn7IGN/HYrCJ74tEPn3lxohp2ZYBzvYori6x3vuwWyFRCeZUUWJ3ACRYQ7pweYZtgnGipsrSdt7oNMpKBJAJXYM1ojqEHb8NRA6x6zZ1zROLcW4ayDZbc2fUEMzj7QEEoRYCOVAxbfQSvFEvK6xjADx+5Hd7V0V7habsoh8UyndNiNfbQKY+DCc929owlWLR2oe0y83TOlUx8yaHlNr7wVTlEqpk/xUPSBoIKLJJIEROxXGBSaWG8kzO8E0/Jc/3jpFo3ZpxY3eUOWMJzf+ikcB3ezi73zTFZ47mMVJiiCvKMYFhDeT4aVbaxd3Jxqrl51JJkha1eyiczadk8FZ+9b25/OS8+GdOn/pZGyuYNSwrPBmxlJ27jxrjiSl3TABDNce/py+yVYjeU0nSsNSm3SlNpCLU4ZN7AtI3Dg3VDgkyYAFgWGUwvO18ICI3THGg7hALFHW/Us7Dugcp8y0QsqGChEG9XLp4/mYMWkAwpF2fUxiz1Yl94n6czxrVo4iiv1ZK0z1sipk+FleZReAOEX2lrxkJWoxZFYNbw6w+uzOKQOyT88tc2d5PLjF4RHakdepPhY0F71wBsicd4gtVQYLVSOWvZLlxh0sqnItDCvEIdrtQh8TkzYSJNmHrUYSh4NzXrOGqqlL99Cq/Kig73uVMEh7rCGjXoLP8KxBoiWZv2fJlu6RNJuHheeEdk0jLCZVADCvr0sEtFyyolla8x2pJO5aZj1wKaigkmWes2wj6ftOzHXBr+4rzduBVu2k5XjZoePHorjj/GnJvpLOBk2l2va+okl92NdkNcZEBSZGQKpNZQXdlK3oWoDkKa1fwYTgwFj7pdVOGDw9DAiyx3ic94GdlF4+HAM+ymIphKSW/aBpczUq0W5MCawSciHsGr0tRxE4DmMec4zjOYDt2wFFHjEWfyWV29kujHNvXmR4hMTaJkVS0LidjVxVPMqBhakzUuaytgGUO53R2Tyy6CaMh5V4wKFTfiqVE3xenFWxbuN90/uXP6tZsxt/SNOpACap5SQUJA4r6XX2j/j4fNptNtud9YZ3oo+m4ul/lnSvysf6bE78i4q74aH9P99HEum3L+du77eZpHPfwv7WMcy27+9pt0b9pLZ/abQ9/9fXl7GMby73Isu6Kc9pt//fvhi3o3/wX5P/i78f0Kc2/Lbr9Zx2nbPn73bD+U29LV3Vju7G5++E8AAAD//9nwda4CBwAA

# save token to env var

isaacj-macbook:k0s isaacj$ export token=`docker exec -t -i k0s k0s token create --role=worker`
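One caveat with capturing the token this way: `docker exec -t` allocates a TTY, and TTY output usually ends lines with a carriage return, which would then ride along inside `$token`. It did not bite me here, but a defensive sketch (with a made-up `clean_token` helper) would strip it:

```bash
# Sketch: strip carriage returns and newlines from a join token read on stdin.
clean_token() {
  tr -d '\r\n'
}

# Usage (no -t/-i, so no TTY is allocated in the first place):
#   token=$(docker exec k0s k0s token create --role=worker | clean_token)
```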

Add worker1

docker run -d --name k0s-worker1 --hostname k0s-worker1 --privileged -v /var/lib/k0s docker.io/k0sproject/k0s:latest k0s worker $token

And in a moment we can see it come up…

$ docker run -d --name k0s-worker1 --hostname k0s-worker1 --privileged -v /var/lib/k0s docker.io/k0sproject/k0s:latest k0s worker $token

e98d00411e5258a053d9cfbd107e05f66dda0cd190545da2b263800201392315

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k0s Ready <none> 4m57s v1.20.4-k0s1

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k0s Ready <none> 5m11s v1.20.4-k0s1

k0s-worker1 NotReady <none> 12s v1.20.4-k0s1
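Instead of re-running `kubectl get nodes` by hand, a small filter can list just the nodes that are not Ready yet. This is a sketch with an invented function name; it treats any STATUS other than exactly "Ready" (including "Ready,SchedulingDisabled") as not ready.

```bash
# Sketch: read `kubectl get nodes` output on stdin and print nodes whose STATUS is not Ready.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes | not_ready_nodes
# Or block until the worker is Ready:
#   kubectl wait --for=condition=Ready node/k0s-worker1 --timeout=120s
```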

This worked; however, the k0s documentation notes some network limitations when running it in Docker containers:

Known limitations

No custom Docker networks

Currently, we cannot run k0s nodes if the containers are configured to use custom networks e.g. with --net my-net. This is caused by the fact that Docker sets up a custom DNS service within the network and that messes up CoreDNS. We know that there are some workarounds possible, but they are bit hackish. And on the other hand, running k0s cluster(s) in bridge network should not cause issues.

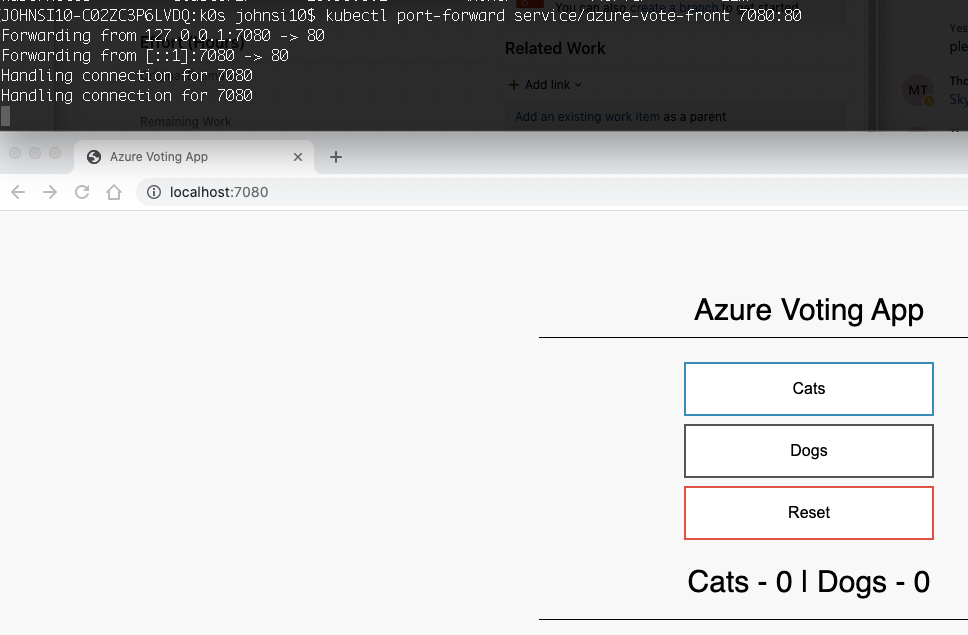

Testing it

One of my favourite quick test runs is to use the Azure Vote app: https://github.com/Azure-Samples/azure-voting-app-redis/blob/master/azure-vote-all-in-one-redis.yaml

$ cat azure-vote-app.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: azure-vote-back

spec:

replicas: 1

selector:

matchLabels:

app: azure-vote-back

template:

metadata:

labels:

app: azure-vote-back

spec:

nodeSelector:

"beta.kubernetes.io/os": linux

containers:

- name: azure-vote-back

image: mcr.microsoft.com/oss/bitnami/redis:6.0.8

env:

- name: ALLOW_EMPTY_PASSWORD

value: "yes"

ports:

- containerPort: 6379

name: redis

---

apiVersion: v1

kind: Service

metadata:

name: azure-vote-back

spec:

ports:

- port: 6379

selector:

app: azure-vote-back

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: azure-vote-front

spec:

replicas: 1

selector:

matchLabels:

app: azure-vote-front

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

minReadySeconds: 5

template:

metadata:

labels:

app: azure-vote-front

spec:

nodeSelector:

"beta.kubernetes.io/os": linux

containers:

- name: azure-vote-front

image: mcr.microsoft.com/azuredocs/azure-vote-front:v1

ports:

- containerPort: 80

resources:

requests:

cpu: 250m

limits:

cpu: 500m

env:

- name: REDIS

value: "azure-vote-back"

---

apiVersion: v1

kind: Service

metadata:

name: azure-vote-front

spec:

type: LoadBalancer

ports:

- port: 80

selector:

app: azure-vote-front

Install it

$ kubectl apply -f ./azure-vote-app.yaml

deployment.apps/azure-vote-back created

service/azure-vote-back created

deployment.apps/azure-vote-front created

service/azure-vote-front created

$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

azure-vote-back 0/1 1 0 5s

azure-vote-front 0/1 1 0 5s

$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

azure-vote-back 1/1 1 1 21s

azure-vote-front 0/1 1 0 21s

The first try at pulling the image failed. I am going to assume it’s mostly due to my overburdened MacBook and not the software…

Warning Failed 44s kubelet Error: ImagePullBackOff

Normal Pulling 33s (x2 over 2m4s) kubelet Pulling image "mcr.microsoft.com/azuredocs/azure-vote-front:v1"

Normal Pulled 2s kubelet Successfully pulled image "mcr.microsoft.com/azuredocs/azure-vote-front:v1" in 30.1524056s

We can see it’s running now:

$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

azure-vote-back 1/1 1 1 2m49s

azure-vote-front 1/1 1 1 2m49s

$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

azure-vote-back ClusterIP 10.109.184.1 <none> 6379/TCP 3m9s

azure-vote-front LoadBalancer 10.107.123.105 <pending> 80:30368/TCP 3m9s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 12m
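The `<pending>` external IP is expected, since nothing in this setup hands out LoadBalancer addresses. As a sketch (the function name is invented), the filter below finds such services so they can be reached with a port-forward instead; local port 8080 is an arbitrary choice.

```bash
# Sketch: read `kubectl get svc` output on stdin and print LoadBalancer
# services whose EXTERNAL-IP is still <pending>.
pending_lb_services() {
  awk '$2 == "LoadBalancer" && $4 == "<pending>" { print $1 }'
}

# Usage:
#   kubectl get svc | pending_lb_services
#   kubectl port-forward svc/azure-vote-front 8080:80   # then browse http://localhost:8080
```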

And we can see it’s running:

Via K8s

Let’s get our local cluster:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

isaac-macbookpro Ready <none> 82d v1.19.5+k3s2

isaac-macbookair Ready master 82d v1.19.5+k3s2

Can we deploy k0s into k8s?

Let’s create a quick YAML for the master k0s:

$ cat k0s-master.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: k0s-master

spec:

replicas: 1

selector:

matchLabels:

app: k0s-master

template:

metadata:

labels:

app: k0s-master

spec:

nodeSelector:

"beta.kubernetes.io/os": linux

volumes:

- name: k0smount

containers:

- name: k0s-master

image: docker.io/k0sproject/k0s:latest

volumeMounts:

- name: k0smount

mountPath: /var/lib/k0s

ports:

- containerPort: 6443

name: kubernetes

securityContext:

allowPrivilegeEscalation: true

---

apiVersion: v1

kind: Service

metadata:

name: k0s-master

spec:

ports:

- port: 6443

selector:

app: k0s-master

And we can apply:

$ kubectl apply -f ./k0s-master.yaml

deployment.apps/k0s-master created

service/k0s-master created

The first time through, it was mad about the volume mount perms.

$ kubectl logs k0s-master-6985645f66-v9tpz

time="2021-03-19 12:08:24" level=info msg="no config file given, using defaults"

Error: directory "/var/lib/k0s" exist, but the permission is 0777. The expected permission is 755

Usage:

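This can be fixed with a bit of an init container hack: mount the volume at /var/lib instead, and have a busybox init container create /var/lib/k0s with 755 permissions.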

$ cat k0s-master.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: k0s-master
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k0s-master
  template:
    metadata:
      labels:
        app: k0s-master
    spec:
      nodeSelector:
        "beta.kubernetes.io/os": linux
      volumes:
      - name: k0smount
      containers:
      - name: k0s-master
        image: docker.io/k0sproject/k0s:latest
        volumeMounts:
        - name: k0smount
          mountPath: /var/lib
        ports:
        - containerPort: 6443
          name: kubernetes
        securityContext:
          allowPrivilegeEscalation: true
      initContainers:
      - name: volume-mount-hack
        image: busybox
        command: ["sh", "-c", "mkdir /var/lib/k0s && chmod 755 /var/lib/k0s"]
        volumeMounts:
        - name: k0smount
          mountPath: /var/lib
---
apiVersion: v1
kind: Service
metadata:
  name: k0s-master
spec:
  ports:
  - port: 6443
  selector:
    app: k0s-master

Now when applied, we see a running container:

$ kubectl get pods -l app=k0s-master

NAME READY STATUS RESTARTS AGE

k0s-master-b959fd5c4-vj662 1/1 Running 0 82s

$ kubectl get svc k0s-master

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

k0s-master ClusterIP 10.43.111.145 <none> 6443/TCP 2m15s

Now, let’s keep the insanity going and dump the kubeconfig into a local file:

kubectl exec k0s-master-b959fd5c4-vj662 -- cat /var/lib/k0s/pki/admin.conf > ~/.kube/k0s-in-k8s-config

We can now port forward to the master node:

$ kubectl port-forward k0s-master-b959fd5c4-vj662 9443:6443

Forwarding from 127.0.0.1:9443 -> 6443

Forwarding from [::1]:9443 -> 6443

And in another shell, I’ll sed the config to use the port-forward (9443) and check on nodes and deployments:

$ sed -i '' 's/:6443/:9443/' ~/.kube/k0s-in-k8s-config

$ kubectl get nodes --kubeconfig=/Users/myuser/.kube/k0s-in-k8s-config

No resources found

$ kubectl get deployments --kubeconfig=/Users/myuser/.kube/k0s-in-k8s-config

No resources found in default namespace.

$ kubectl get ns --kubeconfig=/Users/myuser/.kube/k0s-in-k8s-config

NAME STATUS AGE

default Active 11m

kube-node-lease Active 11m

kube-public Active 11m

kube-system Active 11m
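Note that `sed -i ''` is the BSD/macOS spelling; GNU sed on Linux wants `-i` with no separate argument. A portable sketch that avoids `-i` altogether is below. `rewrite_server_port` is a hypothetical helper, and it only touches a `server:` line that ends in the old port.

```bash
# Sketch: replace ":OLD" with ":NEW" at the end of the "server:" line of a
# kubeconfig, using a temp file instead of the non-portable `sed -i`.
rewrite_server_port() {
  file=$1 old=$2 new=$3
  tmp=$(mktemp)
  sed "/server:/ s/:$old\$/:$new/" "$file" > "$tmp" && mv "$tmp" "$file"
}

# Usage:
#   rewrite_server_port ~/.kube/k0s-in-k8s-config 6443 9443
```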

If we wanted to continue, we could create a token from the master and use that in a deployment of a worker node:

$ kubectl exec k0s-master-b959fd5c4-vj662 -- k0s token create --role=worker

H4sIAAAAAAAC/2xV246jPNa9z1PkBao/G5L6vor0XxSJHQKBxMYH8B1gOiQ2hyLk+GvefVTpbmlGmrvtvZbWsizvvSZ5fxTVcD527WJ6hZPSXs5jNZwXk7fp73oxmU6n03M1XKthMa3HsT8v/voLgh8z5wf44bqL99nMfXHKahiPP49lPlZv+WWsu+E4Pt50PuaL6TYB4zaBS8p1wI7eioqAJ1wFBGBOXxgYlwYGCaceRfGKSNErgN2EB54CViSuenCjarmKDWvqmAvs6NZ7j5C+hI7dMYZmGlAqeb8tYB/kvjoxgynlt5BY7GmgERU6Jhb7hGFBOZ4xPq8jqHpp61CLPuPN/VQx/CUF9RRXbuTbWCD8zgQdKgS/aGpjioKatuWtOvR/sNN/YowHbYXUFxFiqcBcEoNjgWiqV1gVQs2zBsoI9xlLqYqMcJWjcc6hFyG94i72lzYOslOMOBdLIrAgHIGEB0gD/OImAF+/34TzyC2a2GhUp4Xp32l6cHbr0okh3RMzg9Tae4VxImXA8tMhzBuNCqRC6pS3THyCJI0vlU9bdorXGft8FEhRbQ9PkdKs8vmDOkGUrCGLnnqVG3Qt/F7mDkR5E4QE2nYn70J89tfc38wSHB91SvdS4D4E+sEaMadQOyXcPEMgEubjkJyUVVa5GTAzZUQdoc2zhDojQKQE3NvcapQAvC9sNiwbsY+OoyNWNBLrD3eX9h1f82d5qq+56NchsCe67nuejDUHCnJfRTr1tkUD86ztM7qO/cygGbeWlSleM0B5LEiomvqqjQgKi7sKqy/iklu5yp7S2Cw3+rQFwsuxavh6TKNn0HBfJxXHULeByWUwYzDYF9iToTMPcwRnBYhuu89uxtNskK2+ZhLz0FFP2dIxt9284AKUEq4qecdc3jeU6z0z3aCWY11AdBOgxiH44MTFFyJ1TAz2qNPT3ATe0kAvRtqLhDDU15gcP1zOhaeBXZKm9zgKWmbVhhu4JDKbUR5wwqnH+eHK+OZGAboLSZGCOCUm4NKMrj6ewxDcccTqPFodbtz3Op5SoLh1k1QBivCONHooZT3kvJzrp/f9B5/UYI+B2Pv2Z6voRpw63vGPZcLsRoEOklvvZ7J7aITvNK3fibl7W0ekAkDLHx/HrevNYiBc7nQPurKXZB3UlRG7GNA7YdEjR2oQT/rkqTUhrFksx20Me7E8jqu8tUnCAl4JdQ2dmGSG+uTxj1u0lkqpW2rmAeHwRK3wRVr3pRFRBOKVgIdB+F6kpIh3vLsKV8yTk71E7BAmWFx5U7scqU2Vbp7stBm4DWDR4oCy+L0y8aZq6lX0tCvNFdnC+pYsP3ZsfX9PcLwnbr8pntirUM24f7jlAj856W6ygVGFaF58zykXX1rGTiG1KfxPyHGQUN9CKuy7bOicuSJKEG5LP4M7XIu8EfuCx+tMiozxeSN93KhGs6W1u2R9Bju/t6z5x5ErvU/QeE3MbEh4EGk5txyWM56ieXI6uLqNbxTUD4pqv1iPc51in5h7XlrblMZ2ie9dQicKicCGJCPdMxD+3s2YGXIgQCQC2XXCY08g/trLW9L932Q6bfOmWkwNOE/Krh2r+/grJX7Vv1LiT2S8WN+Ny/l1uhSVrca3ouvG8zjk/X+rXYahase3P0qvpjm2ejFddu3P42HSD9XPaqjasjovpv//r8m36sv8t8j/kH8Zv64wdqZqF1Nz/Buc9I+P+uv+9/Ewg83jeOt+NpN/BwAA//85gYNTAgcAAA==

Then we define a worker YAML using that token:

$ cat k0s-worker.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: k0s-worker1

spec:

replicas: 1

selector:

matchLabels:

app: k0s-worker1

template:

metadata:

labels:

app: k0s-worker1

spec:

nodeSelector:

"beta.kubernetes.io/os": linux

volumes:

- name: k0smount

containers:

- name: k0s-worker1

image: docker.io/k0sproject/k0s:latest

command: ["k0s", "worker", "H4sIAAAAAAAC/2xV246jPNa9z1PkBao/G5L6vor0XxSJHQKBxMYH8B1gOiQ2hyLk+GvefVTpbmlGmrvtvZbWsizvvSZ5fxTVcD527WJ6hZPSXs5jNZwXk7fp73oxmU6n03M1XKthMa3HsT8v/voLgh8z5wf44bqL99nMfXHKahiPP49lPlZv+WWsu+E4Pt50PuaL6TYB4zaBS8p1wI7eioqAJ1wFBGBOXxgYlwYGCaceRfGKSNErgN2EB54CViSuenCjarmKDWvqmAvs6NZ7j5C+hI7dMYZmGlAqeb8tYB/kvjoxgynlt5BY7GmgERU6Jhb7hGFBOZ4xPq8jqHpp61CLPuPN/VQx/CUF9RRXbuTbWCD8zgQdKgS/aGpjioKatuWtOvR/sNN/YowHbYXUFxFiqcBcEoNjgWiqV1gVQs2zBsoI9xlLqYqMcJWjcc6hFyG94i72lzYOslOMOBdLIrAgHIGEB0gD/OImAF+/34TzyC2a2GhUp4Xp32l6cHbr0okh3RMzg9Tae4VxImXA8tMhzBuNCqRC6pS3THyCJI0vlU9bdorXGft8FEhRbQ9PkdKs8vmDOkGUrCGLnnqVG3Qt/F7mDkR5E4QE2nYn70J89tfc38wSHB91SvdS4D4E+sEaMadQOyXcPEMgEubjkJyUVVa5GTAzZUQdoc2zhDojQKQE3NvcapQAvC9sNiwbsY+OoyNWNBLrD3eX9h1f82d5qq+56NchsCe67nuejDUHCnJfRTr1tkUD86ztM7qO/cygGbeWlSleM0B5LEiomvqqjQgKi7sKqy/iklu5yp7S2Cw3+rQFwsuxavh6TKNn0HBfJxXHULeByWUwYzDYF9iToTMPcwRnBYhuu89uxtNskK2+ZhLz0FFP2dIxt9284AKUEq4qecdc3jeU6z0z3aCWY11AdBOgxiH44MTFFyJ1TAz2qNPT3ATe0kAvRtqLhDDU15gcP1zOhaeBXZKm9zgKWmbVhhu4JDKbUR5wwqnH+eHK+OZGAboLSZGCOCUm4NKMrj6ewxDcccTqPFodbtz3Op5SoLh1k1QBivCONHooZT3kvJzrp/f9B5/UYI+B2Pv2Z6voRpw63vGPZcLsRoEOklvvZ7J7aITvNK3fibl7W0ekAkDLHx/HrevNYiBc7nQPurKXZB3UlRG7GNA7YdEjR2oQT/rkqTUhrFksx20Me7E8jqu8tUnCAl4JdQ2dmGSG+uTxj1u0lkqpW2rmAeHwRK3wRVr3pRFRBOKVgIdB+F6kpIh3vLsKV8yTk71E7BAmWFx5U7scqU2Vbp7stBm4DWDR4oCy+L0y8aZq6lX0tCvNFdnC+pYsP3ZsfX9PcLwnbr8pntirUM24f7jlAj856W6ygVGFaF58zykXX1rGTiG1KfxPyHGQUN9CKuy7bOicuSJKEG5LP4M7XIu8EfuCx+tMiozxeSN93KhGs6W1u2R9Bju/t6z5x5ErvU/QeE3MbEh4EGk5txyWM56ieXI6uLqNbxTUD4pqv1iPc51in5h7XlrblMZ2ie9dQicKicCGJCPdMxD+3s2YGXIgQCQC2XXCY08g/trLW9L932Q6bfOmWkwNOE/Krh2r+/grJX7Vv1LiT2S8WN+Ny/l1uhSVrca3ouvG8zjk/X+rXYahase3P0qvpjm2ejFddu3P42HSD9XPaqjasjovpv//r8m36sv8t8j/kH8Zv64wdqZqF1Nz/Buc9I+P+uv+9/Ewg83jeOt+NpN/BwAA//85gYNTAgcAAA=="]

volumeMounts:

- name: k0smount

mountPath: /var/lib

ports:

- containerPort: 6443

name: kubernetes

securityContext:

allowPrivilegeEscalation: true

initContainers:

- name: volume-mount-hack

image: busybox

command: ["sh", "-c", "mkdir /var/lib/k0s && chmod 755 /var/lib/k0s"]

volumeMounts:

- name: k0smount

mountPath: /var/lib

Then apply it

$ kubectl apply -f k0s-worker.yaml

deployment.apps/k0s-worker1 created

And we see both running now:

$ kubectl get pods | tail -n2

k0s-master-b959fd5c4-vj662 1/1 Running 0 17m

k0s-worker1-7bb8444844-2z8hv 1/1 Running 0 48s

While it launched, I don’t see the nodes show up:

$ export KUBECONFIG=/Users/isaacj/.kube/k0s-in-k8s-config

$ kubectl get nodes

No resources found

But I am seeing a responding control plane, even though its system pods are stuck in Pending with no nodes to schedule onto:

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-5f6546844f-6s676 0/1 Pending 0 17m

kube-system coredns-5c98d7d4d8-h9clg 0/1 Pending 0 17m

kube-system metrics-server-6fbcd86f7b-q7hhk 0/1 Pending 0 17m

It does seem kubenet is having troubles. Other than that, the pod logs looked mostly fine, apart from the kubelet exiting with status 255 and respawning:

$ kubectl logs k0s-worker1-7bb8444844-2z8hv | tail -n5

time="2021-03-19 12:37:50" level=info msg="\t/go/src/github.com/kubernetes/kubernetes/_output/local/go/src/k8s.io/kubernetes/vendor/k8s.io/client-go/tools/cache/shared_informer.go:368" component=kubelet

time="2021-03-19 12:37:50" level=info msg="created by k8s.io/kubernetes/vendor/k8s.io/client-go/informers.(*sharedInformerFactory).Start" component=kubelet

time="2021-03-19 12:37:50" level=info msg="\t/go/src/github.com/kubernetes/kubernetes/_output/local/go/src/k8s.io/kubernetes/vendor/k8s.io/client-go/informers/factory.go:134 +0x191" component=kubelet

time="2021-03-19 12:37:50" level=warning msg="exit status 255" component=kubelet

time="2021-03-19 12:37:50" level=info msg="respawning in 5s" component=kubelet
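The last five lines only show the tail of what looks like a Go stack trace, followed by the kubelet exiting with status 255 and k0s respawning it. The actual cause is usually further up the log. A minimal sketch for pulling just the interesting lines out of the worker log, assuming the level= fields seen above (kubelet_failures is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# kubelet_failures is a hypothetical filter; it reads log text on stdin.
# It keeps lines logged at warning level or above, plus any line that
# mentions a Go panic, and drops the routine level=info chatter.
kubelet_failures() {
  # "|| true" keeps the function from failing when nothing matches
  grep -E 'level=(warning|error|fatal)|panic:' || true
}
```

For example: kubectl logs k0s-worker1-7bb8444844-2z8hv | kubelet_failures | head -n 20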

I did try adding the --enable-worker flag to the k0s-master command, so the controller would also run a worker, and tried again:

containers:

- name: k0s-master

image: docker.io/k0sproject/k0s:latest

command: ["k0s", "controller", "--enable-worker"]

Then I grabbed a fresh kubeconfig:

isaacj-macbook:k0s isaacj$ kubectl exec k0s-master-76d596b48f-68x8m -- cat /var/lib/k0s/pki/admin.conf > ~/.kube/k0s-in-k8s-config

isaacj-macbook:k0s isaacj$ sed -i '' 's/:6443/:9443/' ~/.kube/k0s-in-k8s-config

isaacj-macbook:k0s isaacj$ kubectl port-forward k0s-master-76d596b48f-68x8m 9443:6443

Forwarding from 127.0.0.1:9443 -> 6443

Forwarding from [::1]:9443 -> 6443

Handling connection for 9443

Handling connection for 9443

Handling connection for 9443

Handling connection for 9443
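The kubeconfig copied out of the pod points at port 6443, so it has to be rewritten to match the local end of the port-forward. The sed -i '' form above is the BSD/macOS spelling; GNU sed on Linux expects -i with no separate argument. A sketch that sidesteps the difference by writing to a temp file, assuming bash and mktemp (rewrite_kubeconfig_port is a hypothetical helper):

```shell
#!/usr/bin/env bash
# rewrite_kubeconfig_port FILE FROM TO
# Hypothetical helper: replaces ":FROM" with ":TO" on server: lines only,
# without relying on sed -i (whose syntax differs between BSD and GNU sed).
rewrite_kubeconfig_port() {
  local file="$1" from="$2" to="$3" tmp
  tmp="$(mktemp)" || return 1
  # Write the edited copy to a temp file, then swap it into place
  sed "/server:/s/:${from}/:${to}/" "$file" > "$tmp" && mv "$tmp" "$file"
}
```

For example: rewrite_kubeconfig_port ~/.kube/k0s-in-k8s-config 6443 9443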

Listing namespaces and nodes showed I was connecting, but there were still no active nodes:

$ kubectl get ns

NAME STATUS AGE

default Active 2m28s

kube-node-lease Active 2m30s

kube-public Active 2m30s

kube-system Active 2m30s

$ kubectl get nodes

No resources found

I had one more nutty idea. Would this work in AKS, where I have Azure CNI instead of kubenet?

This cluster is properly namespaced, so I’ll need to do this in my own namespace:

isaacj-macbook:k0s isaacj$ kubectl get pods -n isaacj

NAME READY STATUS RESTARTS AGE

vote-back-azure-vote-1605545802-6dbb7bbd9-mblhs 1/1 Running 0 35h

vote-front-azure-vote-1605545802-6db7445477-82jct 1/1 Running 0 35h

isaacj-macbook:k0s isaacj$ kubectl apply -f k0s-master.yaml -n isaacj

deployment.apps/k0s-master created

service/k0s-master created

And we see it running:

$ kubectl get pods -n isaacj

NAME READY STATUS RESTARTS AGE

k0s-master-7667c9976f-kch9q 1/1 Running 0 57s

vote-back-azure-vote-1605545802-6dbb7bbd9-mblhs 1/1 Running 0 35h

vote-front-azure-vote-1605545802-6db7445477-82jct 1/1 Running 0 35h

We can snag the kubeconfig:

$ kubectl exec k0s-master-7667c9976f-kch9q -n isaacj -- cat /var/lib/k0s/pki/admin.conf > ~/.kube/k0s-in-k8s-config-aks

$ sed -i '' 's/:6443/:9443/' ~/.kube/k0s-in-k8s-config-aks

$ kubectl port-forward k0s-master-7667c9976f-kch9q -n isaacj 9443:6443

Forwarding from 127.0.0.1:9443 -> 6443

Forwarding from [::1]:9443 -> 6443

But still no go:

$ export KUBECONFIG=/Users/isaacj/.kube/k0s-in-k8s-config-aks

$ kubectl get nodes

No resources found

$ kubectl get pods

No resources found in default namespace.

$ kubectl get ns

NAME STATUS AGE

default Active 2m43s

kube-node-lease Active 2m45s

kube-public Active 2m45s

kube-system Active 2m45s

I’ll clean this up before logging out:

$ kubectl delete -f k0s-master.yaml -n isaacj

deployment.apps "k0s-master" deleted

service "k0s-master" deleted

Summary

K0s is a nice offering that I would see as similar to Rancher Kubernetes Engine (RKE) or other on-prem cluster offerings. It isn’t quite as simple as k3s yet. I would argue, however, that it was easier to set up than RKE.

I’m not sure if it was the particular Pi I had, but it was disappointing that the simple shell script installer just wouldn’t fly on Ubuntu on my Pi. However, running it manually on my Ubuntu WSL was just fine, and the controller also came up in my x86 k8s cluster, although no worker nodes ever registered there.

It’s likely a Kubernetes offering I’ll follow and try again in the future, but for now, I’m keeping k3s on my home clusters.